Investigation of Cybersecurity Bottlenecks of AI Agents in Industrial Automation

Abstract

1. Introduction

2. Background and Related Work

2.1. Background

2.1.1. Agentic AI in Industrial Automation

2.1.2. Threats and Vulnerabilities in Agentic AI

2.1.3. Cybersecurity Methods for Agentic AI

2.1.4. Challenges and Limitations

2.1.5. Emerging Trends in AI Security

2.2. Related Work

- Insufficient empirical validation in real industrial environments [9].

- Lack of integrated solutions combining anomaly detection, prevention, and response [1].

- Minimal exploration of adversarial resilience in ML-based detection systems [2].

- Limited application of security standards in Agentic AI operational models [59].

- Neglect of human-in-the-loop security practices in most technical models [20].

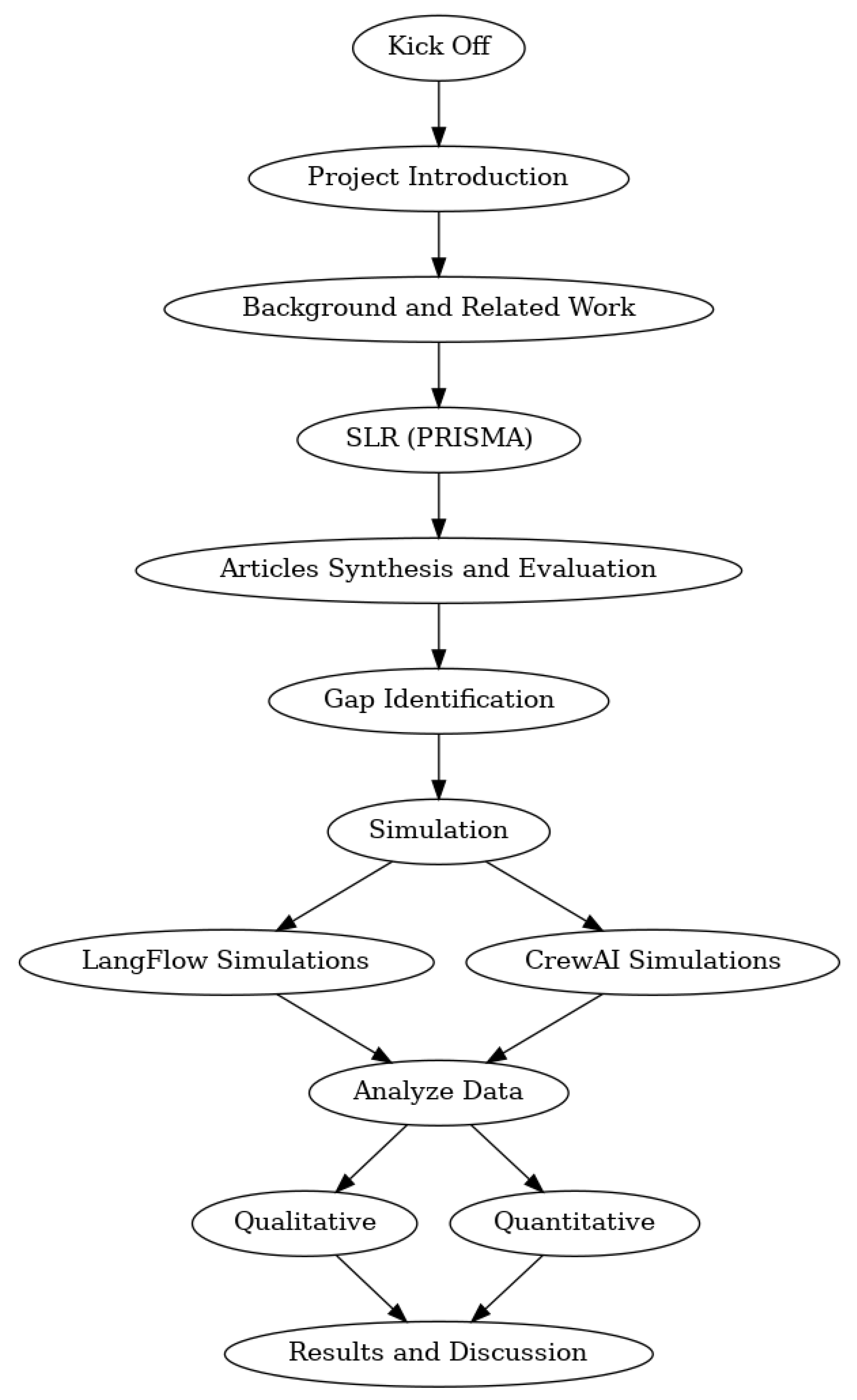

3. Research Methodology

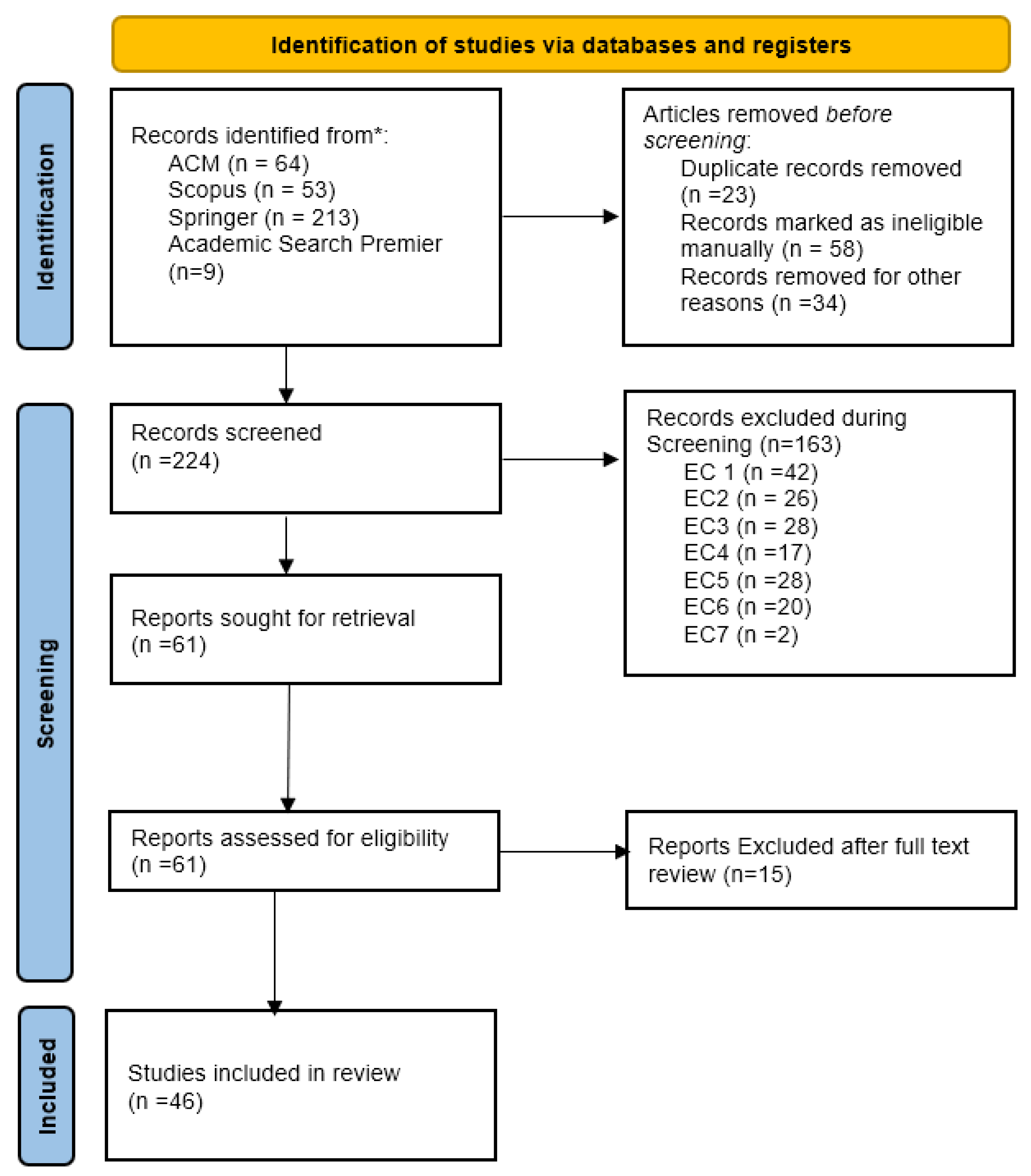

3.1. Systematic Literature Review

3.1.1. Identification of Studies

3.1.2. Articles Screening

3.1.3. Data Extraction

- Metadata: Basic details such as title, authors, year of publication, venue, and source.

- Security bottlenecks: Identified risks, threats, and vulnerabilities of Agentic AI in industrial automation.

- Mitigation Strategies: Approaches proposed or evaluated by researchers to address identified bottlenecks.

- Results and Conclusions: Key findings, recommendations, and best practices.

3.2. Experimental Setup

3.2.1. CrewAI Framework

3.2.2. LangFlow Framework

3.2.3. Large Language Model Integration

- Summarize the environmental conditions.

- Assess whether the system is operating normally or abnormally.

- Take action automatically.

3.2.4. Simulated Attacks

3.2.5. Evaluation Metrics

3.3. Research Questions

- RQ1: What are the cybersecurity bottlenecks (threats, risks, and vulnerabilities) of Agentic AI in industrial automation?

- RQ2: What simulations can be conducted to demonstrate attacks on Agentic AI in industrial automation, with a focus on adversarial attacks?

- RQ3: What are the mechanisms that can be used to detect attacks on Agentic AI systems?

- RQ4: What are the recommendations that can be used to secure Agentic AI systems against attacks and threats?

3.4. Research Design

3.5. Data Collection Method

3.6. Data Analysis Method

4. Systematic Review and Simulations

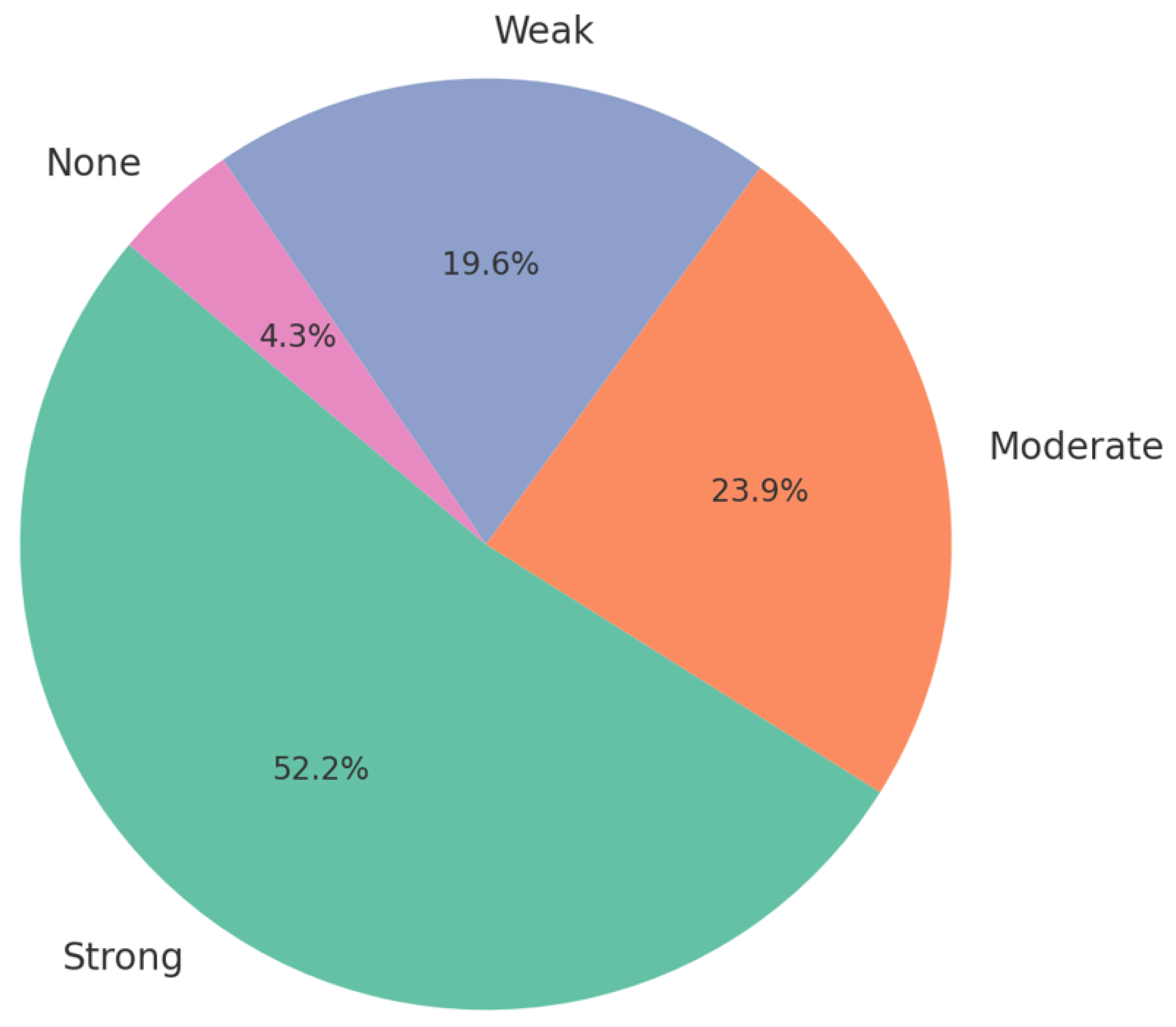

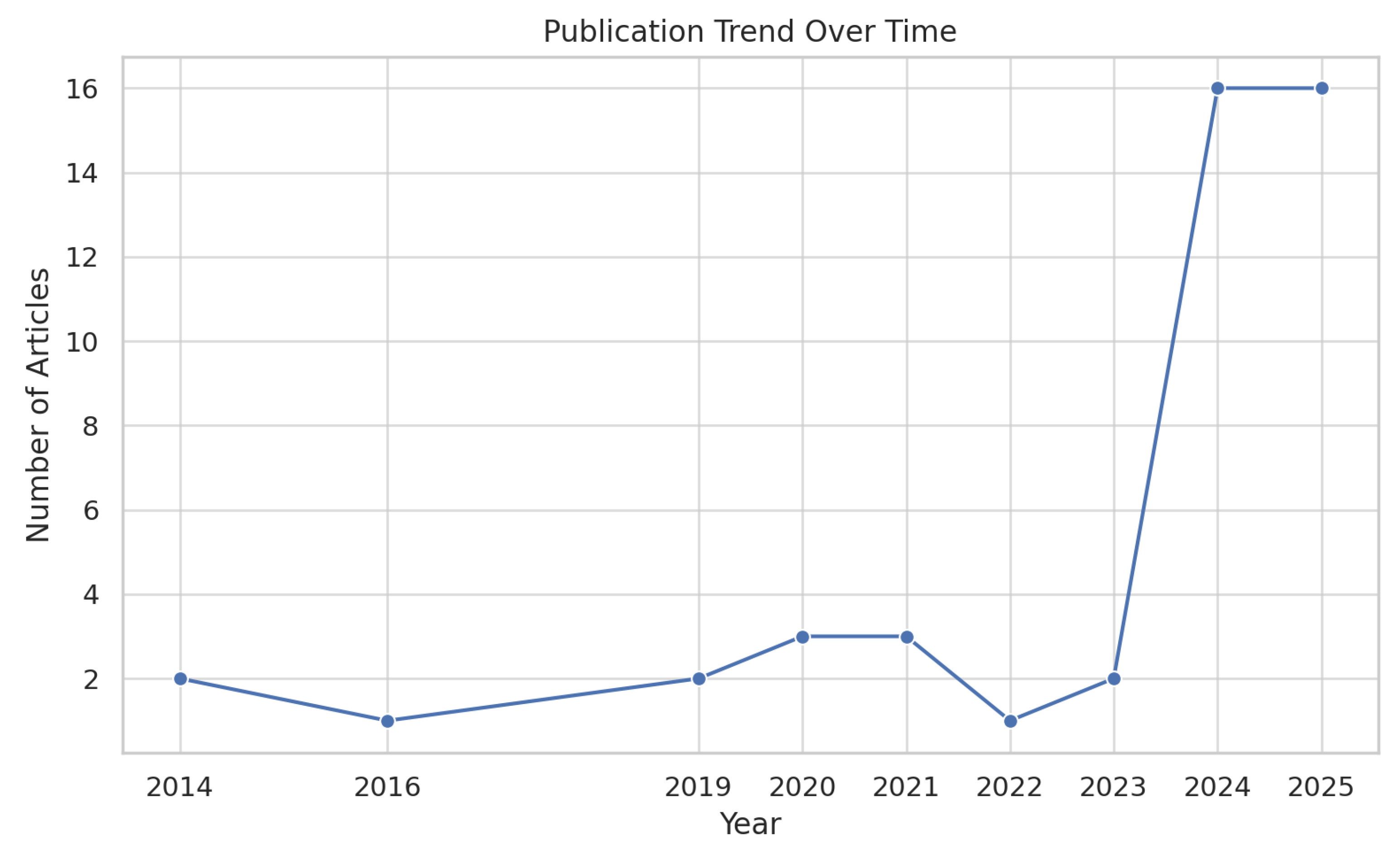

4.1. Literature Review Findings

4.2. Threat Modeling Application

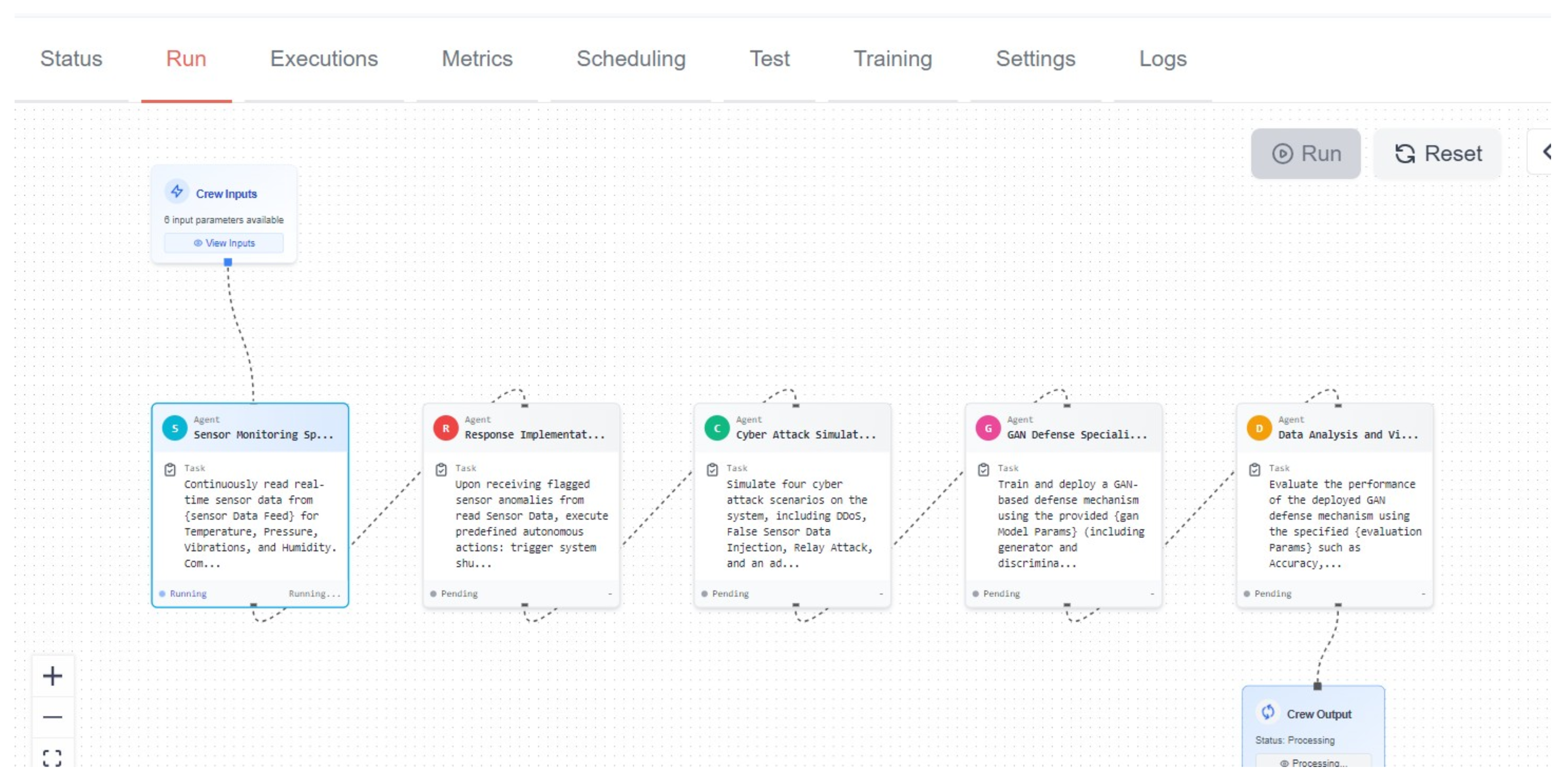

4.2.1. CrewAI Design and Implementation

- Sensor Monitoring Agent. This agent processed sensor data from the input nodes in real time. The simulated sensors measured temperature, vibration, humidity, and pressure. Baseline readings were configured in the input node and sent to the sensor monitoring node, establishing the operational baseline for normal system behavior, as shown in Figure 7.

- Response Implementation Node. This node interpreted the sensor data processed by the sensor monitoring agent and took action whenever a reading exceeded its predefined baseline threshold. It could make autonomous decisions for the safety of the simulated factory, as shown in Figure 8.

- Attack Simulation Node. This node simulated attacks on the system, as shown in Figure 9.

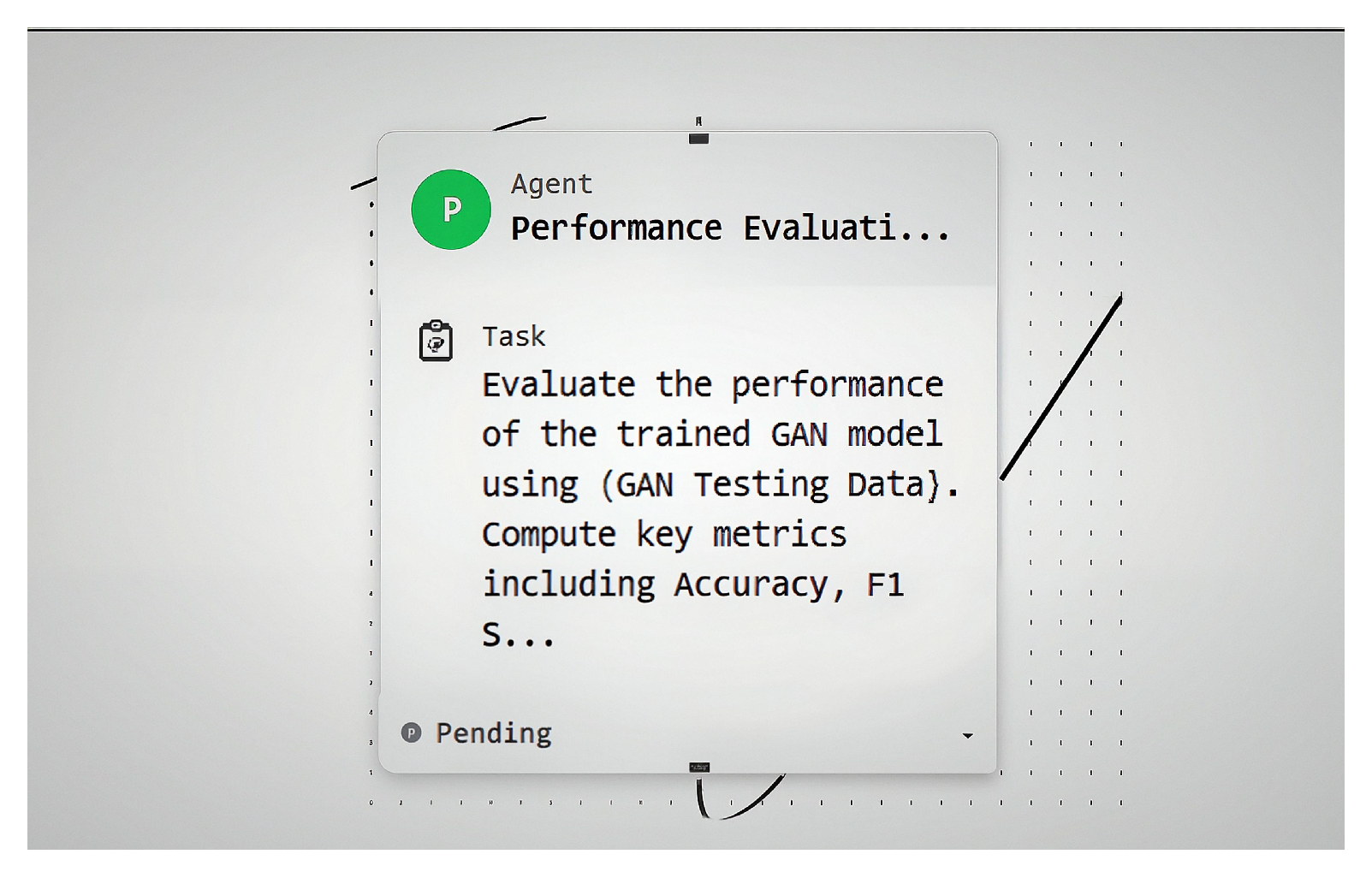

- GAN Defense Node. This node performed independent assessments and detected security breaches using a GAN-based detection algorithm. Data received from the response implementation node served as the baseline against which data from the attack simulation node was measured. In this way, the historical data was used as a discriminator to identify adversarial inputs generated by the attack simulation node, as shown in Figure 10.

- Data Analysis Node. This node analyzed all data collected throughout the simulation and reported how the systems performed before, during, and after the attacks, and how well the GAN defended the systems against the simulated attacks, as shown in Figure 11.
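
The monitoring, response, and attack simulation roles listed above can be illustrated with a minimal Python sketch. It is not the authors' CrewAI implementation: the baseline values, tolerances, function names, and the exact-match replay check are all hypothetical, and the replay check is a simple stand-in for illustration, not the GAN-based detector.

```python
import random

# Hypothetical baseline readings and tolerances (illustrative values only).
BASELINE = {"temperature": 70.0, "vibration": 0.5, "humidity": 45.0, "pressure": 101.0}
TOLERANCE = {"temperature": 5.0, "vibration": 0.2, "humidity": 10.0, "pressure": 3.0}


def read_sensors(rng):
    """Simulate one normal reading: baseline plus small bounded noise."""
    return {k: v + rng.uniform(-0.2, 0.2) * TOLERANCE[k] for k, v in BASELINE.items()}


def false_data_injection(reading, sensor, offset):
    """Attack simulation: return a copy with one sensor value shifted by offset."""
    tampered = dict(reading)
    tampered[sensor] += offset
    return tampered


def out_of_range(reading):
    """Sensor monitoring: list sensors that deviate from baseline beyond tolerance."""
    return [k for k, v in reading.items() if abs(v - BASELINE[k]) > TOLERANCE[k]]


def respond(reading):
    """Response step: pick an action based on the monitoring result."""
    violations = out_of_range(reading)
    if violations:
        return "shutdown: " + ", ".join(sorted(violations))
    return "continue"


def is_replay(history, reading):
    """Stand-in detector (not a GAN): flag a reading identical to an earlier one."""
    return reading in history


rng = random.Random(0)
normal = read_sensors(rng)
attacked = false_data_injection(normal, "temperature", 25.0)
print(respond(normal))
print(respond(attacked))
print(is_replay([normal], dict(normal)))
```

A threshold check of this kind only catches injected values that leave the tolerance band; an adversarial input crafted to stay inside the band would pass, which is the gap a learned detector is meant to address.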

4.2.2. LangFlow Design and Implementation

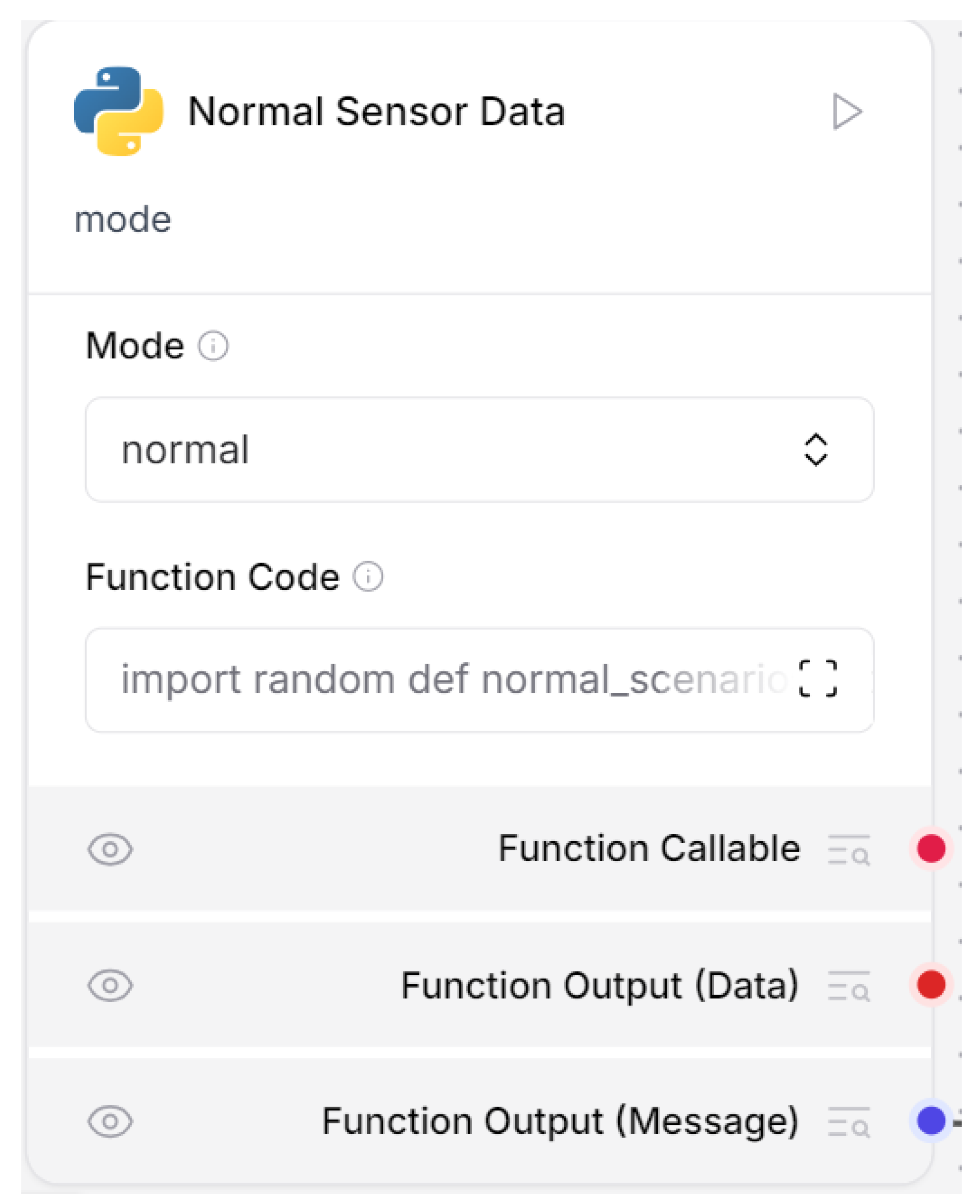

- Sensor Input Node. This node handled real-time input of sensor data. The simulated sensors measured temperature, vibration, humidity, and pressure. Baseline readings were configured in the input node and sent to the sensor monitoring node, establishing the operational baseline for normal system behavior. The data was then sent to the prompt node. Figure 13 shows the input node.

- Prompt Node. This node took the received data and sent it to the Large Language Model for interpretation and action. Figure 14 shows the prompt node.

- LLM Node. This was the decision node, which made autonomous decisions based on the data received from the prompt node. Figure 15 shows the LLM node.

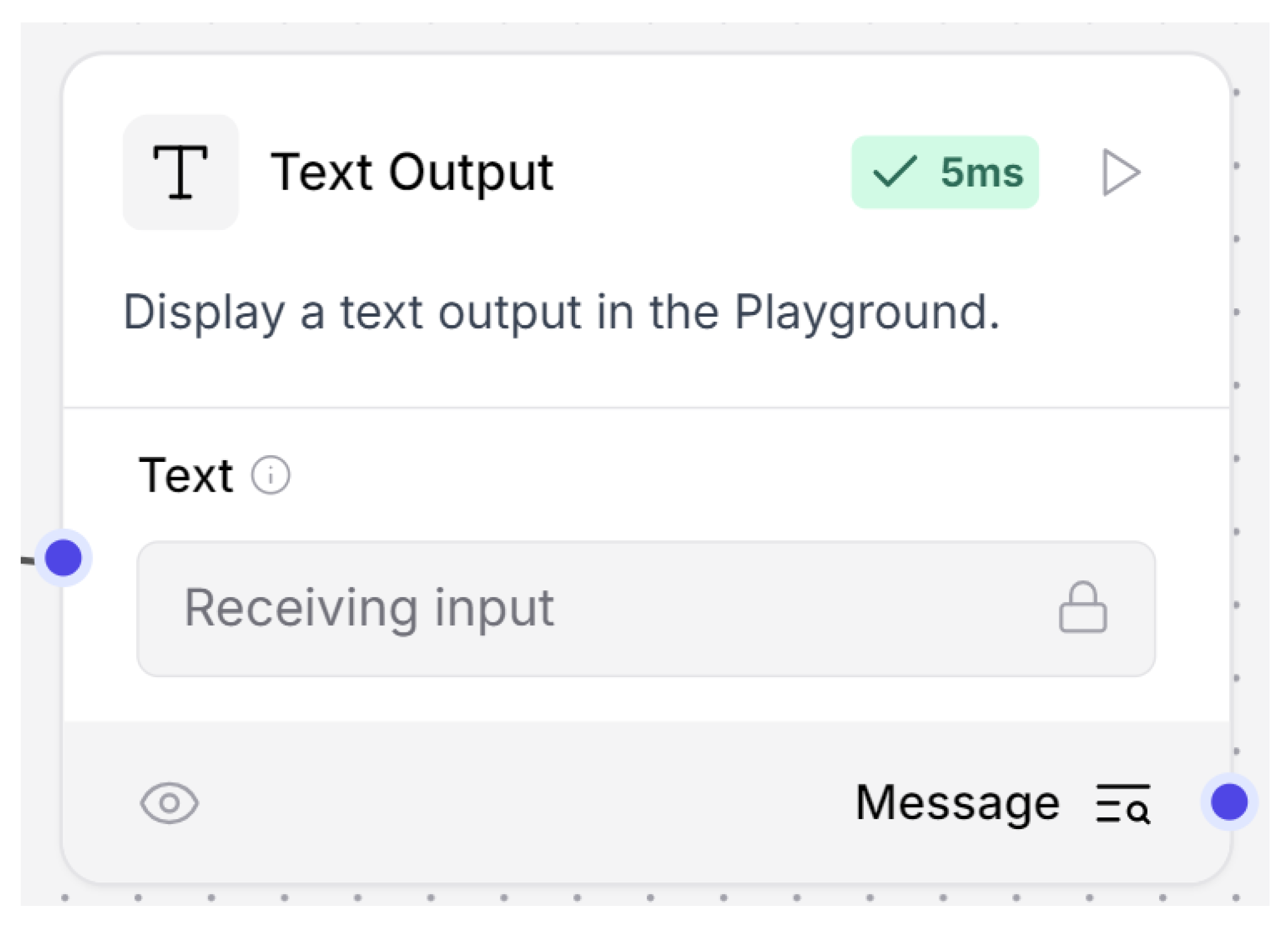

- Output Node. This node displayed the decision made by the LLM. Figure 16 shows the output node.
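
The four-node flow described above (input, prompt, LLM, output) can be sketched in plain Python as a chain of functions. This is an assumption-laden illustration rather than the LangFlow configuration used in the study: the sensor values, prompt wording, and the `stub_llm` placeholder are hypothetical, and no real model or LangFlow API is called.

```python
from typing import Callable, Dict


def sensor_input_node() -> Dict[str, float]:
    """Sensor input node: return hypothetical readings (illustrative values)."""
    return {"temperature": 71.2, "vibration": 0.48, "humidity": 44.0, "pressure": 100.6}


def prompt_node(readings: Dict[str, float]) -> str:
    """Prompt node: turn the readings into instruction text for the model."""
    lines = [f"- {name}: {value}" for name, value in sorted(readings.items())]
    return (
        "You monitor a simulated factory.\n"
        "Sensor readings:\n" + "\n".join(lines) + "\n"
        "Summarize the conditions, state NORMAL or ABNORMAL, and name an action."
    )


def stub_llm(prompt: str) -> str:
    """Placeholder for a real model call; always returns a fixed decision."""
    return "NORMAL: conditions within baseline; action: continue operation."


def output_node(decision: str) -> str:
    """Output node: format the decision for display."""
    return f"Decision: {decision}"


def run_flow(llm: Callable[[str], str]) -> str:
    """Chain the four nodes in the order described in the list above."""
    return output_node(llm(prompt_node(sensor_input_node())))


print(run_flow(stub_llm))
```

Passing the model in as a callable makes the point that everything the LLM decides is driven by the prompt text, so tampered sensor values or injected instructions reach the decision step unfiltered unless a check is placed between the nodes.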

5. Results

5.1. Systematic Literature Review Results Analysis

5.2. Cyber Security Bottlenecks of Agentic AI

5.2.1. Prompt Injection and Adversarial Attacks

5.2.2. Unpredictability of Agentic AI Systems Behavior

5.2.3. Denial of Service and Jamming Attacks

5.2.4. Increased Attack Surface and Data Privacy Concerns

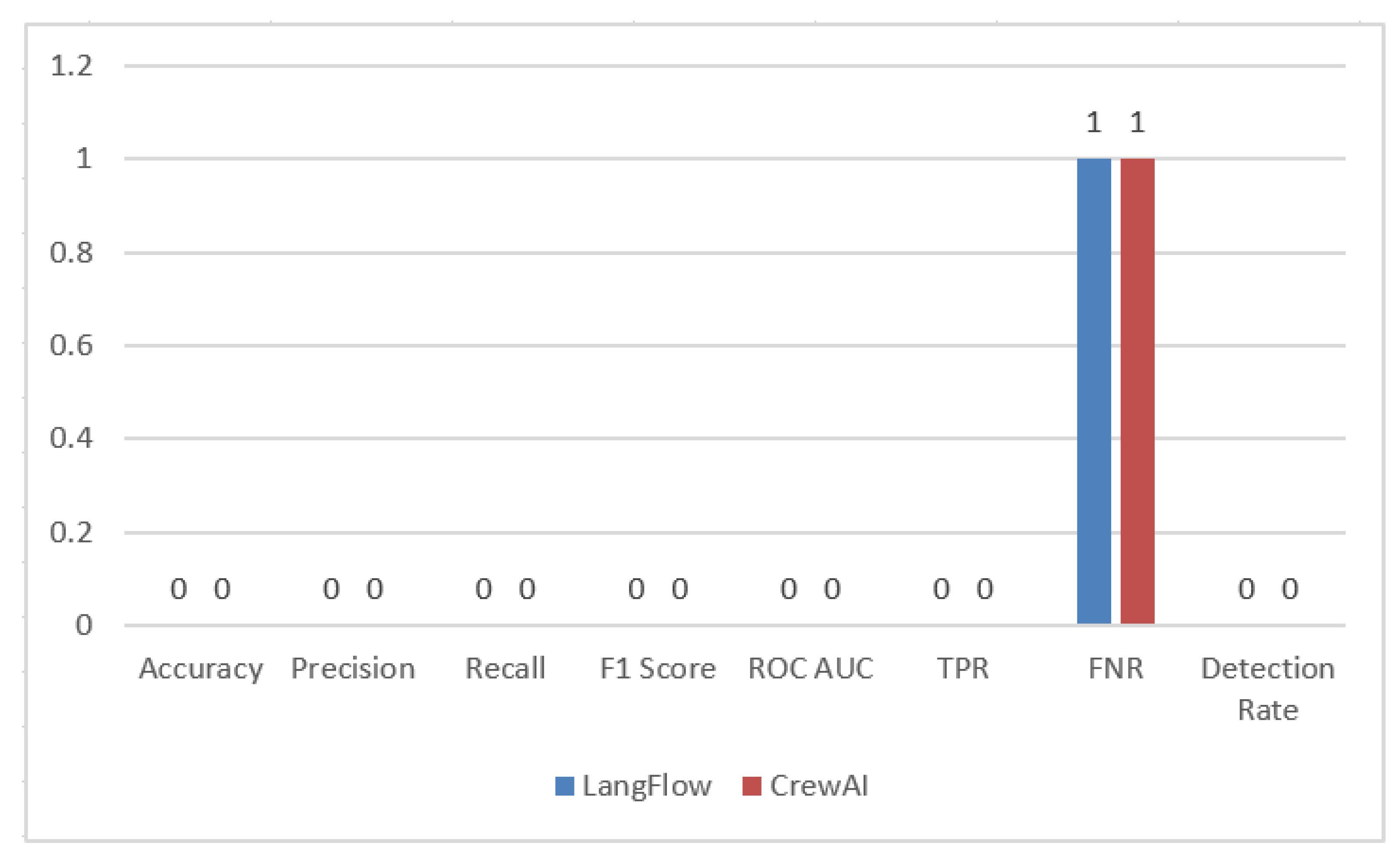

5.3. Simulation Results

5.3.1. Pre Attack/Baseline Results

5.3.2. Attack Simulation Without Any Defense Mechanism

5.3.3. Attack Simulation with GAN in Place

6. Discussions

6.1. Limitations of GAN-Based Detection Systems

6.2. Augmenting Detection with LLMs: Not Defense

6.3. CrewAI vs. Langflow: Framework-Level Observation

6.4. Demonstrated Security Implications in Industrial CPS

6.5. Proposed Mitigation Strategies

Toward Secure, Transparent Agentic AI

6.6. Limitations, Gaps and Future Direction

6.7. Summary of Key Risks and Mitigation Findings

7. Conclusions

7.1. Future Work and Research

7.2. Critical Discussion

7.3. Generalization of the Results

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Pandey, R.K.; Das, T.K. Anomaly detection in cyber-physical systems using actuator state transition model. Int. J. Inf. Technol. 2025, 17, 1509–1521. [Google Scholar] [CrossRef]

- Bousetouane, F. Agentic Systems: A Guide to Transforming Industries with Vertical AI Agents. arXiv 2025, arXiv:2501.00881. [Google Scholar] [CrossRef]

- Suresh, P. Agentic AI: Redefining Autonomy for Complex Goal-Driven Systems. ResearchGate, 2025. Available online: https://doi.org/10.13140/RG.2.2.24115.75047 (accessed on 23 May 2025).

- Ismail, I.; Kurnia, R.; Brata, Z.A.; Nelistiani, G.A.; Heo, S.; Kim, H.; Kim, H. Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic AI. Information 2025, 16, 365. [Google Scholar] [CrossRef]

- Shao, X.; Xie, L.; Li, C.; Wang, Z. A Study on Networked Industrial Robots in Smart Manufacturing: Vulnerabilities, Data Integrity Attacks and Countermeasures. J. Intell. Robot. Syst. 2023, 109, 60. [Google Scholar] [CrossRef]

- Ajiga, D.; Folorunsho, S. The role of software automation in improving industrial operations and efficiency. Int. J. Eng. Res. Appl. 2024, 7, 22–35. [Google Scholar] [CrossRef]

- Ocaka, A.; Briain, D.O.; Davy, S.; Barrett, K. Cybersecurity Threats, Vulnerabilities, Mitigation Measures in Industrial Control and Automation Systems: A Technical Review. In Proceedings of the 2022 Cyber Research Conference-Ireland (Cyber-RCI 2022), Galway, Ireland, 25 April 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Bendjelloul, A.; Gaham, M.; Bouzouia, B.; Moufid, M.; Mihoubi, B. Multi Agent Systems Based CPPS—An Industry 4.0 Test Case. In Proceedings of the Lecture Notes in Networks and Systems (LNNS), Amsterdam, The Netherlands, 1–2 September 2022; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2022; Volume 413, pp. 187–196. [Google Scholar] [CrossRef]

- Orabi, M.; Tran, K.P.; Egger, P.; Thomassey, S. Anomaly detection in smart manufacturing: An Adaptive Adversarial Transformer-based model. J. Manuf. Syst. 2024, 77, 591–611. [Google Scholar] [CrossRef]

- Castro, J.P. Agentic AI and the Cybersecurity Compass: Optimizing Cyber Defense. ResearchGate. 2024. Available online: https://www.researchgate.net/publication/388079969 (accessed on 16 May 2025).

- Elía, I.; Pagola, M. Anomaly detection in Smart-manufacturing era: A review. Eng. Appl. Artif. Intell. 2024, 139, 109578. [Google Scholar] [CrossRef]

- Bui, M.T.; Boffa, M.; Valentim, R.V.; Navarro, J.M.; Chen, F.; Bao, X.; Houidi, Z.B.; Rossi, D. A Systematic Comparison of Large Language Models Performance for Intrusion Detection. Proc. ACM Netw. 2024, 2, 1–23. [Google Scholar] [CrossRef]

- Khan, R.; Sarkar, S.; Mahata, S.K.; Jose, E. Security Threats in Agentic AI System. arXiv 2024, arXiv:2410.14728. [Google Scholar]

- Dhameliya, N. Revolutionizing PLC Systems with AI: A New Era of Industrial Automation. Am. Digit. J. Comput. Digit. Technol. 2023, 1, 33–48. [Google Scholar]

- Yigit, Y.; Ferrag, M.A.; Sarker, I.H.; Maglaras, L.A.; Chrysoulas, C.; Moradpoor, N.; Janicke, H. Critical Infrastructure Protection: Generative AI, Challenges, and Opportunities. arXiv 2024, arXiv:2405.04874. [Google Scholar] [CrossRef]

- Parmar, A.; Gnanadhas, J.; Mini, T.T.; Abhilash, G.; Biswal, A.C. Multi-agent approach for anomaly detection in automation networks. In Proceedings of the International Conference on Circuits, Communication, Control and Computing, Bangalore, India, 21–22 November 2014; pp. 225–230. [Google Scholar] [CrossRef]

- Berti, A.; Maatallah, M.; Jessen, U.; Sroka, M.; Ghannouchi, S.A. Re-Thinking Process Mining in the AI-Based Agents Era. arXiv 2024, arXiv:2408.07720. [Google Scholar]

- Biswas, D. Stateful Monitoring and Responsible Deployment of AI Agents. In Proceedings of the 17th International Conference on Agents and Artificial Intelligence—Volume 1: ICAART. INSTICC, Porto, Portugal, 23–25 February 2025; SciTePress: Setúbal, Portugal, 2025; pp. 393–399. [Google Scholar] [CrossRef]

- Sunny, S.M.A.; Liu, X.F.; Shahriar, M.R. Development of machine tool communication method and its edge middleware for cyber-physical manufacturing systems. Int. J. Comput. Integr. Manuf. 2023, 36, 1009–1030. [Google Scholar] [CrossRef]

- Hentout, A.; Aouache, M.; Maoudj, A.; Akli, I. Human–robot interaction in industrial collaborative robotics: A literature review of the decade 2008–2017. Adv. Robot. 2019, 33, 764–799. [Google Scholar] [CrossRef]

- Shrestha, L.; Balogun, H.; Khan, S. AI-Driven Phishing: Techniques, Threats, and Defence Strategies. In Cybersecurity and Human Capabilities Through Symbiotic Artificial Intelligence; Jahankhani, H., Issac, B., Eds.; Springer: Cham, Switzerland, 2025; pp. 121–143. [Google Scholar]

- Mazhar, T.; Irfan, H.M.; Khan, S.; Haq, I.; Ullah, I.; Iqbal, M.; Hamam, H. Analysis of Cyber Security Attacks and Its Solutions for the Smart grid Using Machine Learning and Blockchain Methods. Future Internet 2023, 15, 83. [Google Scholar] [CrossRef]

- Sharma, P.; Dash, B. Impact of Big Data Analytics and ChatGPT on Cybersecurity. In Proceedings of the 2023 4th International Conference on Computing and Communication Systems (I3CS), Shillong, India, 16–18 March 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Al-Jaroodi, J.; Mohamed, N.; Jawhar, I. A Service-Oriented Middleware Framework for Manufacturing Industry 4.0. ACM SIGBED Rev. 2018, 15, 29–36. [Google Scholar] [CrossRef]

- Yaseen, A. Reducing Industrial Risk with AI and Automation. Int. J. Intell. Autom. Comput. 2021, 4, 60–80. Available online: https://link.springer.com/journal/11633 (accessed on 23 May 2025).

- Leitão, P.; Karnouskos, S.; Ribeiro, L.; Lee, J.; Strasser, T.; Colombo, A.W. Smart Agents in Industrial Cyber-Physical Systems. Proc. IEEE 2016, 104, 1086–1101. [Google Scholar] [CrossRef]

- Li, Y.; Wang, J. Multi-Objective Dynamic Scheduling Model of Flexible Job Shop Based on NSGA-II Algorithm and Scroll Window Technology. In Advances in Swarm Intelligence; Tan, Y., Shi, Y., Niu, B., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12145, pp. 435–444. [Google Scholar] [CrossRef]

- Slimane, J.B.; Alshammari, A. Securing the Industrial Backbone: Cybersecurity Threats, Vulnerabilities, and Mitigation Strategies in Control and Automation Systems. J. Electr. Syst. 2024, 20. [Google Scholar] [CrossRef]

- Varadarajan, M.N.; Viji, C.; Rajkumar, N.; Mohanraj, A. Integration of AI and IoT for Smart Home Automation. SSRG Int. J. Electron. Commun. Eng. 2024, 11, 37–43. [Google Scholar] [CrossRef]

- Khan, A.H.; Lucas, M. AI-Powered Automation: Revolutionizing Industrial Processes and Enhancing Operational Efficiency. Rev. Intel. Artif. Med. 2024, 15, 1151–1175. [Google Scholar]

- Amoo, O.O.; Sodiya, E.O.; Atagoga, A. AI-driven warehouse automation: A comprehensive review of systems. GSC Adv. Res. Rev. 2024, 18, 272–282. [Google Scholar] [CrossRef]

- Yigit, Y.; Ferrag, M.A.; Sarker, I.H.; Maglaras, L. Generative AI and LLMs for Critical Infrastructure Protection: Evaluation Benchmarks, Agentic AI, Challenges, and Opportunities. Sensors 2025, 25, 1666. [Google Scholar] [CrossRef]

- Deng, Z.; Guo, Y.; Han, C.; Ma, W.; Xiong, J.; Wen, S.; Xiang, Y. AI Agents Under Threat: A Survey of Key Security Challenges and Future Pathways. ACM Comput. Surv. 2024, 1, 1–35. [Google Scholar] [CrossRef]

- Edris, E.K.K. Utilisation of Artificial Intelligence and Cyber Security Capabilities: The Symbiotic Relationship for Enhanced Security and Applicability. Electronics 2025, 14, 2057. [Google Scholar] [CrossRef]

- Lebed, S.V.; Namiot, D.E.; Zubareva, E.V.; Khenkin, P.V.; Vorobeva, A.A.; Svichkar, D.A. Large Language Models in Cyberattacks. Dokl. Math. 2024, 110, S510–S520. [Google Scholar] [CrossRef]

- Sotiropoulos, J.; Del Rosario, R.F.; Kokuykin, E.; Oakley, H.; Habler, I.; Underkoffler, K.; Huang, K.; Steffensen, P.; Aralimatti, R.; Bitton, R.; et al. OWASP Top 10 for LLM Apps & Gen AI Agentic Security Initiative: Agentic AI—Threats and Mitigations; Version 1.0.1; OWASP: Wakefield, MA, USA, 2025; Available online: https://hal.science/hal-04985337v1 (accessed on 23 September 2025).

- Ahmed, U.; Lin, J.C.W.; Srivastava, G. Exploring the Potential of Cyber Manufacturing System in the Digital Age. ACM Trans. Internet Technol. 2023, 23, 1–38. [Google Scholar] [CrossRef]

- Folorunso, A.; Adewumi, T.O.; Okonkwo, R. Impact of AI on cybersecurity and security compliance. Glob. J. Eng. Technol. Adv. 2024, 21, 167–184. [Google Scholar] [CrossRef]

- Makhija, N.; Konatam, S.; Acharya, S.; Najana, M. GenAI Current Use Cases and Future Challenges in Advanced Manufacturing. Int. J. Glob. Innov. Solut. (IJGIS) 2024. [Google Scholar] [CrossRef]

- Mishra, A.; Gupta, N.; Gupta, B.B. Defense mechanisms against DDoS attack based on entropy in SDN-cloud using POX controller. Telecommun. Syst. 2021, 77, 47–62. [Google Scholar] [CrossRef]

- Zhang, C.; Costa-Perez, X.; Patras, P. Tiki-Taka: Attacking and Defending Deep Learning-based Intrusion Detection Systems. In Proceedings of the CCSW 2020—2020 ACM SIGSAC Conference on Cloud Computing Security Workshop, Virtual Event, USA, 9 November 2020; Association for Computing Machinery, Inc.: New York, NY, USA, 2020; pp. 27–39. [Google Scholar] [CrossRef]

- Admass, W.; Yayeh, Y.; Diro, A.A. Cyber Security: State of the Art, Challenges and Future Directions. Cyber Secur. Appl. 2023, 2, 100031. [Google Scholar] [CrossRef]

- Pulikottil, T.; Estrada-Jimenez, L.A.; Rehman, H.U.; Mo, F.; Nikghadam-Hojjati, S.; Barata, J. Agent-based manufacturing—Review and expert evaluation. Int. J. Adv. Manuf. Technol. 2023, 127, 2151–2180. [Google Scholar] [CrossRef]

- Siraparapu, S.R.; Azad, S.M. A Framework for Integrating Diverse Data Types for Live Streaming in Industrial Automation. IEEE Access 2024, 12, 111694–111708. [Google Scholar] [CrossRef]

- Acharya, D.B.; Kuppan, K.; B, D. Agentic AI: Autonomous Intelligence for Complex Goals—A Comprehensive Survey. IEEE Access 2024, 13, 18912–18936. [Google Scholar] [CrossRef]

- Shostack, A. Threat Modeling: Designing for Security; John Wiley & Sons: Hoboken, NJ, USA, 2014. [Google Scholar]

- Rashid, S.M.Z.U.; Montasir, I.; Haq, A.; Ahmmed, M.T.; Alam, M.M. Securing Agentic AI: Threats, Risks and Mitigation. Preprint, January 2025. Available online: https://www.researchgate.net/publication/388493552 (accessed on 20 May 2025). [CrossRef]

- Mishra, S. Exploring the Impact of AI-Based Cyber Security Financial Sector Management. Appl. Sci. 2023, 13, 5875. [Google Scholar] [CrossRef]

- Zhang, T.; Wang, G.; Xue, C.; Wang, J.; Nixon, M.; Han, S. Time-Sensitive Networking (TSN) for Industrial Automation: Current Advances and Future Directions. ACM Comput. Surv. 2024, 57, 1–38. [Google Scholar] [CrossRef]

- Das, B.C.; Amini, M.H.; Wu, Y. Security and privacy challenges of large language models: A survey. ACM Comput. Surv. 2025, 57, 1–39. [Google Scholar] [CrossRef]

- Radanliev, P.; Roure, D.D.; Nicolescu, R.; Huth, M.; Santos, O. Digital twins: Artificial intelligence and the IoT cyber-physical systems in Industry 4.0. Int. J. Intell. Robot. Appl. 2022, 6, 171–185. [Google Scholar] [CrossRef]

- Salikutluk, V.; Schöpper, J.; Herbert, F.; Scheuermann, K.; Frodl, E.; Balfanz, D.; Jäkel, F.; Koert, D. An Evaluation of Situational Autonomy for Human-AI Collaboration in a Shared Workspace Setting. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ’24), Honolulu, HI, USA, 11–16 May 2024. [Google Scholar] [CrossRef]

- Zhang, G.; Gao, W.; Li, Y.; Guo, X.; Hu, P.; Zhu, J. Detection of False Data Injection Attacks in a Smart Grid Based on WLS and an Adaptive Interpolation Extended Kalman Filter. Energies 2023, 16, 7203. [Google Scholar] [CrossRef]

- Subramanian, J.; Sinha, A.; Seraj, R.; Mahajan, A. Approximate Information State for Approximate Planning and Reinforcement Learning in Partially Observed Systems. J. Mach. Learn. Res. 2022, 23, 1–83. [Google Scholar]

- Lebed, S.; Namiot, D.; Zubareva, E.; Khenkin, P.; Vorobeva, A.; Svichkar, D. Large Language Models in Cyberattacks. In Proceedings of the Doklady Mathematics; Springer: Berlin/Heidelberg, Germany, 2024; Volume 110, pp. S510–S520. [Google Scholar]

- Hamdi, A.; Müller, M.; Ghanem, B. SADA: Semantic adversarial diagnostic attacks for autonomous applications. Proc. AAAI Conf. Artif. Intell. 2020, 34, 10901–10908. [Google Scholar] [CrossRef]

- Mubarak, S.; Habaebi, M.H.; Islam, M.R.; Rahman, F.D.A.; Tahir, M. Anomaly Detection in ICS Datasets with Machine Learning Algorithms. Comput. Syst. Sci. Eng. 2021, 37, 34–46. [Google Scholar] [CrossRef]

- Zainuddin, A.A.; Amir Hussin, A.A.; Hassan, M.K.A.; Handayani, D.O.D.; Ahmad Puzi, A.; Zakaria, N.A.; Rosdi, N.N.H.; Ahmadzamani, N.Z.A. International Grand Invention, Innovation and Design EXPO (IGIIDeation) 2025; Technical Report; KICT Publishing: Kuala Lumpur, Malaysia, 2025. [Google Scholar]

- Sarsam, S.M. Cybersecurity Challenges in Autonomous Vehicles: Threats, Vulnerabilities, and Mitigation Strategies. SHIFRA 2023, 2023, 34–42. [Google Scholar] [CrossRef]

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; PRISMA-P Group. Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) 2015 Statement. Syst. Rev. 2015, 4, 1. [Google Scholar] [CrossRef]

- Duan, Z.; Wang, J. Exploration of LLM Multi-Agent Application Implementation Based on LangGraph+CrewAI. arXiv 2024, arXiv:2411.18241. [Google Scholar]

- Venkadesh, P.; Divya, S.; Kumar, K.S. Unlocking AI Creativity: A Multi-Agent Approach with CrewAI. J. Trends Comput. Sci. Smart Technol. 2024, 6, 338–356. [Google Scholar] [CrossRef]

- Jeong, C. Beyond Text: Implementing Multimodal Large Language Model-Powered Multi-Agent Systems Using a No-Code Platform. arXiv 2025, arXiv:2501.00750. [Google Scholar]

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Davis, J.; Goadrich, M. The Relationship Between Precision-Recall and ROC Curves. In Proceedings of the 23rd International Conference on Machine Learning (ICML), Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240. [Google Scholar]

- Saito, T.; Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 2015, 10, e0118432. [Google Scholar] [CrossRef]

- Fawcett, T. An Introduction to ROC Analysis. Pattern Recognit. Lett. 2006, 27, 861–874. [Google Scholar] [CrossRef]

- Bradley, A.P. The Use of the Area Under the ROC Curve in the Evaluation of Machine Learning Algorithms. Pattern Recognit. 1997, 30, 1145–1159. [Google Scholar] [CrossRef]

- Khan, S.I.; Kaur, C.; Ansari, M.S.A.; Muda, I.; Borda, R.F.C.; Bala, B.K. Implementation of cloud based IoT technology in manufacturing industry for smart control of manufacturing process. Int. J. Interact. Des. Manuf. 2025, 19, 773–785. [Google Scholar] [CrossRef]

- Conlon, N.; Ahmed, N.R.; Szafir, D. A Survey of Algorithmic Methods for Competency Self-Assessments in Human-Autonomy Teaming. ACM Comput. Surv. 2024, 56, 1–31. [Google Scholar] [CrossRef]

- Zhu, J.P.; Cai, P.; Xu, K.; Li, L.; Sun, Y.; Zhou, S.; Su, H.; Tang, L.; Liu, Q. AutoTQA: Towards Autonomous Tabular Question Answering through Multi-Agent Large Language Models. Proc. VLDB Endow. 2024, 17, 3920–3933. [Google Scholar] [CrossRef]

- Hong, Y.; Chen, G.; Bushnell, L. Distributed observers design for leader-following control of multi-agent networks. Automatica 2008, 44, 846–850. [Google Scholar] [CrossRef]

- Akyol, E.; Rose, K.; Basar, T. On Optimal Jamming Over an Additive Noise Channel. arXiv 2013, arXiv:1303.3049. [Google Scholar] [CrossRef]

- Ishii, H.; Wang, Y.; Feng, S. An overview on multi-agent consensus under adversarial attacks. Annu. Rev. Control 2022, 53, 252–272. [Google Scholar] [CrossRef]

- Falade, P.V. Decoding the Threat Landscape: ChatGPT, FraudGPT, and WormGPT in Social Engineering Attacks. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2023, 185–198. [Google Scholar] [CrossRef]

- Sritriratanarak, W.; Garcia, P. Cyber Physical Games: Rational Multi-Agent Decision-Making in Temporally Non-Deterministic Environments. ACM Trans. Cyber-Phys. Syst. 2025, 9, 17. [Google Scholar] [CrossRef]

- Bairaktaris, J.A.; Johannssen, A.; Tran, K.P. Security Strategies for AI Systems in Industry 4.0. Qual. Reliab. Eng. Int. 2024, 41, 897–915. [Google Scholar] [CrossRef]

- Lu, J.; Sibai, H.; Fabry, E. Adversarial Examples that Fool Detectors. arXiv 2017, arXiv:1712.02494. [Google Scholar] [CrossRef]

- Idaho National Laboratory (INL). Autonomous System Inference, Trojan, and Adversarial Reprogramming Attack and Defense (Final); Idaho National Laboratory (INL): Idaho Falls, ID, USA, 2023. [CrossRef]

- Casella, A.; Wang, W. Performant LLM Agentic Framework for Conversational AI. In Proceedings of the 2025 1st International Conference on Artificial Intelligence and Computing, Kuala Lumpur, Malaysia, 14–16 February 2025. [Google Scholar]

- Ahmed, I.; Syed, M.A.; Maaruf, M.; Khalid, M. Distributed computing in multi-agent systems: A survey of decentralized machine learning approaches. Computing 2025, 107, 2. [Google Scholar] [CrossRef]

- Chien, C.H.; Trappey, A.J. Human-AI cooperative generative adversarial network (GAN) for quality predictions of small-batch product series. Adv. Eng. Inform. 2025, 65, 103327. [Google Scholar] [CrossRef]

- Sivakumar, S. Agentic AI in Predictive AIOps Enhancing IT Autonomy and Performance. Int. J. Sci. Res. Manag. (IJSRM) 2024, 12, 1631–1638. [Google Scholar] [CrossRef]

- Severson, T.A.; Croteau, B.; Rodríguez-Seda, E.J.; Kiriakidis, K.; Robucci, R.; Patel, C. A resilient framework for sensor-based attacks on cyber–physical systems using trust-based consensus and self-triggered control. Control Eng. Pract. 2020, 101, 104509. [Google Scholar] [CrossRef]

- Lin, C.C.; Tsai, C.T.; Liu, Y.L.; Chang, T.T.; Chang, Y.S. Security and Privacy in 5G-IIoT Smart Factories: Novel Approaches, Trends, and Challenges. Mob. Netw. Appl. 2023, 28, 1043–1058. [Google Scholar] [CrossRef]

- Aryankia, K.; Selmic, R.R. Neuro-Adaptive Formation Control of Nonlinear Multi-Agent Systems with Communication Delays. J. Intell. Robot. Syst. Theory Appl. 2023, 109, 92. [Google Scholar] [CrossRef]

- Marali, M.; Sudarsan, S.D.; Gogioneni, A. Cyber security threats in industrial control systems and protection. In Proceedings of the 2019 International Conference on Advances in Computing and Communication Engineering (ICACCE), Sathyamangalam, India, 4–6 April 2019; pp. 1–7. [Google Scholar] [CrossRef]

- Rai, R.; Tiwari, M.K.; Ivanov, D.; Dolgui, A. Machine learning in manufacturing and industry 4.0 applications. Int. J. Prod. Res. 2021, 59, 4773–4778. [Google Scholar] [CrossRef]

- Upadhyay, D.; Sampalli, S. SCADA (Supervisory Control and Data Acquisition) systems: Vulnerability assessment and security recommendations. Comput. Secur. 2020, 89, 101666. [Google Scholar] [CrossRef]

- Jia, J.; Yu, R.; Du, Z.; Chen, J.; Wang, Q.; Wang, X. Distributed localization for IoT with multi-agent reinforcement learning. Neural Comput. Appl. 2022, 34, 7227–7240. [Google Scholar] [CrossRef]

- Bayrak, B.; Giger, F.; Meurisch, C. Insightful Assistant: AI compatible Operation Graph Representations for Enhancing Industrial Conversational Agents. arXiv 2020, arXiv:2007.12929. [Google Scholar] [CrossRef]

- Miyachi, T.; Yamada, T. Current issues and challenges on cyber security for industrial automation and control systems. In Proceedings of the 2014 SICE Annual Conference (SICE), Tokyo, Japan, 13–15 December 2014; pp. 821–826. [Google Scholar] [CrossRef]

- Liu, M.; Zhang, L.; Chen, J.; Chen, W.A.; Yang, Z.; Lo, L.J.; Wen, J.; O’Neill, Z. Large language models for building energy applications: Opportunities and challenges. Build. Simul. 2025, 18, 225–234. [Google Scholar] [CrossRef]

- Zhu, Q.; Başar, T. Robust and Resilient Control Design for Cyber-Physical Systems with an Application to Power Systems. In Proceedings of the 50th IEEE Conference on Decision and Control and European Control Conference (CDC-ECC), Orlando, FL, USA, 12–15 December 2011; pp. 4066–4071. [Google Scholar] [CrossRef]

- Ullah, F.; Naeem, H.; Jabbar, S.; Khalid, S.; Latif, M.A.; Al-Turjman, F.; Mostarda, L. Cyber security threats detection in internet of things using deep learning approach. IEEE Access 2019, 7, 124379–124389. [Google Scholar] [CrossRef]

- Kim, S.J.; Cho, D.E.; Yeo, S.S. Secure Model against APT in m-Connected SCADA Network. Int. J. Distrib. Sens. Netw. 2014, 10, 594652. [Google Scholar] [CrossRef]

- Saßnick, O.; Rosenstatter, T.; Schäfer, C.; Huber, S. STRIDE-based Methodologies for Threat Modeling of Industrial Control Systems: A Review. In Proceedings of the 2024 IEEE 7th International Conference on Industrial Cyber-Physical Systems (ICPS), St. Louis, MO, USA, 12–15 May 2024; pp. 1–8. [Google Scholar] [CrossRef]

- Khan, R.; McLaughlin, K.; Laverty, D.; Sezer, S. STRIDE-based threat modeling for cyber-physical systems. In Proceedings of the 2017 IEEE PES Innovative Smart Grid Technologies Conference Europe (ISGT-Europe), Torino, Italy, 26–29 September 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Benaddi, H.; Jouhari, M.; Ibrahimi, K.; Ben Othman, J.; Amhoud, E.M. Anomaly Detection in Industrial IoT Using Distributional Reinforcement Learning and Generative Adversarial Networks. Sensors 2022, 22, 8085. [Google Scholar] [CrossRef] [PubMed]

- TBD, A. Anomaly-Based Intrusion Detection Using GAN for Industrial Control Systems. In Proceedings of the 2022 10th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 13–14 October 2022. [Google Scholar]

- Chen, Z.; Li, Z.; Huang, J.; Long, H. An Effective Method for Anomaly Detection in Industrial Internet of Things Using XGBoost and LSTM. Sci. Rep. 2024, 14, 23969. [Google Scholar] [CrossRef] [PubMed]

| Database | Search Query String | Number of Articles Retrieved |
|---|---|---|
| ACM Digital Library | (“Security” OR “Cybersecurti*” OR “IT Security*” OR “Internet Security*”) AND (“Bottleneck*” OR “Threat*” OR “Risk*” OR “Vulnerabilit*” OR “Challenge*” OR “Problem*”) AND (“Agentic AI” OR “LLM” OR “Multi-Agent*” OR “AI Agent*”) AND (“Industrial Automation” OR “Smart Factor*” OR “Smart Manufactur*” OR “Robot*”) | 64 |
| Scopus | TITLE-ABS-KEY ((“Bottleneck*” OR “Threat*” OR “Risk*” OR “Vulnerabilit*” OR “Challenge*” OR “Problem*”)) AND (“Agentic AI” OR “LLM” OR “Multi-Agent*” OR “AI Agent*”) AND (“Industrial Automation” OR “Smart Factor*” OR “Smart Manufactur*” OR “Robot*”) AND (“Security” OR “Cybersecur*” OR “IT Security*” OR “Internet Security*”) | 53 |
| SpringerLink | (“Security” OR “Cybersecurti*” OR “IT Security*” OR “Internet Security*”) AND (“Agentic AI” OR “LLM” OR “Multi-Agent*” OR “AI Agent*”) AND (“Bottleneck*” OR “Threat*” OR “Risk*” OR “Vulnerabilit*” OR “Challenge*” OR “Problem*”) AND (“Industrial Automation” OR “Smart Factor*” OR “Smart Manufactur*” OR “Robot*”) | 213 |
| Academic Search Premier | (“Security” OR “Cybersecurti*” OR “IT Security*” OR “Internet Security*”) AND (“Bottleneck*” OR “Threat*” OR “Risk*” OR “Vulnerabilit*” OR “Challenge*” OR “Problem*”) AND (“Agentic AI” OR “LLM” OR “Multi-Agent*” OR “AI Agent*”) AND (“Industrial Automation” OR “Smart Factor*” OR “Smart Manufactur*” OR “Robot*”) | 9 |

| Inclusion Criteria | Explanation |
|---|---|
| IC1 | Studies focusing on agentic AI systems (e.g., autonomous agents, decision-making AI, multi-agent AI, self-learning systems, or LLM-enabled agents) in industrial automation. Example: a paper on multi-agent coordination in smart factories. |
| IC2 | Studies addressing cybersecurity challenges, threats, or vulnerabilities in the context of AI-driven or agent-based industrial systems. Example: adversarial attacks on CPS-enabled manufacturing. |
| IC3 | Empirical studies, simulation-based works, or case studies with a clear cybersecurity component in industrial environments. Example: GAN-based anomaly detection in an IoT-enabled production line. |
| IC4 | Published in peer-reviewed journals, conference proceedings, or other high-quality academic sources. |
| IC5 | Published between 2015 and 2025, ensuring a focus on contemporary developments in Agentic AI and industrial cybersecurity. |
| IC6 | Written in English. |
| IC7 | Papers including technical contributions (e.g., architectures, frameworks, threat models, attack vectors, countermeasures). Example: STRIDE-based threat modeling for multi-agent systems. |

| Exclusion Criteria | Explanation |
|---|---|
| EC1 | Studies limited to general-purpose AI. Example: LLM chatbot studies in education. |
| EC2 | Papers related to AI in non-industrial sectors (e.g., finance) that do not address automation. |
| EC3 | Papers not addressing cybersecurity aspects, even if they focus on industrial AI (e.g., works limited to efficiency optimization without security evaluation). |
| EC4 | Non-peer-reviewed works (e.g., blog posts, opinion pieces, white papers, grey literature). |
| EC5 | Articles published before 2015. |
| EC6 | Papers with only the abstract available or without full-text access. |
| EC7 | Papers not written in English. |

| Term | Definition |
|---|---|
| True Positive (TP) | Attack data correctly detected as an attack |
| False Positive (FP) | Normal data incorrectly flagged as an attack |
| True Negative (TN) | Normal data correctly ignored |
| False Negative (FN) | Attack data incorrectly classified as normal |

| Metric | Definition | Formula |

|---|---|---|

| Accuracy | Proportion of correctly classified sensor reports (attack or normal) out of all events in both framework simulations | $\frac{TP + TN}{TP + TN + FP + FN}$ |

| Precision | Proportion of flagged events that were actual attacks | $\frac{TP}{TP + FP}$ |

| Recall (TPR) | Proportion of simulated attacks (DDoS, FDI, Replay, Adversarial) that were correctly detected | $\frac{TP}{TP + FN}$ |

| F1 Score | Harmonic mean of Precision and Recall, balancing the two | $\frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$ |

| FPR | Proportion of normal data incorrectly flagged as attacks | $\frac{FP}{FP + TN}$ |

| FNR | Proportion of real attacks (DDoS, FDI, Replay, Adversarial) missed by the system | $\frac{FN}{FN + TP}$ |

| Detection Rate | Proportion of attacks (DDoS, FDI, Replay, Adversarial) that were successfully detected | $\frac{TP}{TP + FN}$ |

| AUC-ROC | Performance curve plotting TPR vs. FPR | Plotted graphically |
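
The ratio-based metrics above can be computed directly from the four confusion-matrix counts. The following is a minimal Python sketch, not the authors' evaluation code: the `ConfusionCounts` class, the `detection_metrics` helper, and the example counts are all hypothetical and chosen only for illustration. AUC-ROC is omitted because it requires per-event scores rather than counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # attacks correctly detected
    fp: int  # normal data incorrectly flagged as an attack
    tn: int  # normal data correctly ignored
    fn: int  # attacks incorrectly flagged as normal


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def detection_metrics(c: ConfusionCounts) -> dict:
    """Compute the count-based metrics from the table above."""
    precision = safe_div(c.tp, c.tp + c.fp)
    recall = safe_div(c.tp, c.tp + c.fn)  # also the detection rate
    return {
        "accuracy": safe_div(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "fpr": safe_div(c.fp, c.fp + c.tn),
        "fnr": safe_div(c.fn, c.fn + c.tp),
    }


# Hypothetical counts for illustration only (not reported in the paper).
example = ConfusionCounts(tp=2, fp=0, tn=2, fn=2)
metrics = detection_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Guarding the divisions matters in small simulations, where a run with no flagged events or no attacks would otherwise raise a division-by-zero error.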

| Category | Bottlenecks Identified | Explanation |

|---|---|---|

| Data related | False Data Injection, Replay Attacks, Poor sensor integrity | Compromise of input data directly affects the reliability of Agentic AI, leading to false or misleading decisions [5] |

| Model related | Adversarial Attacks, Model overfitting, Misclassification | Weaknesses in ML/LLM reasoning and generalization expose vulnerabilities in autonomous decision-making [55,69] |

| Communication related | Latency, Distributed Denial of Service (DDoS), Insecure communication | Bottlenecks in inter-agent coordination and network resilience that can block or delay safe responses [37] |

| System level | Decision drift, Unintended actions, LLM exploitation | Failures in coordinating autonomous systems and ensuring safe operations across industrial environments [34,39] |

| Agent/Node | Role in Simulation | Real-World Mapping |

|---|---|---|

| Sensor Monitoring Agent | Processes sensor inputs (temperature, vibration, humidity, pressure) and sets baseline for normal operation | Industrial IoT sensors and monitoring devices that continuously track machine/environmental conditions |

| Response Implementation Node | Interprets sensor data, triggers safety actions when thresholds are exceeded | PLCs (Programmable Logic Controllers) or automated control systems in factories that shut down or adjust processes |

| Attack Simulation Node | Injects malicious inputs (e.g., FDI, DDoS, replay) to test resilience | Cyber adversaries or penetration testing tools that mimic real-world cyberattacks |

| GAN Defence Node | Uses GAN-based detection to identify anomalies and adversarial inputs | Intrusion detection systems (IDS), anomaly detection AI, or ML-based cybersecurity defense tools [5] |

| Data Analysis Node | Analyzes simulation outcomes before, during, and after attacks | Security operation center (SOC) tools and forensic systems for monitoring and post-incident analysis [39,70] |

| Input Node | Provides baseline sensor values to the system | Real-world sensor feeds providing operational thresholds |

| Output Node | Displays final system decisions and responses | Dashboard/control panels for operators or automated alerting systems |
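
To make the division of roles concrete, the sketch below shows one way the baseline check and the response trigger could fit together in plain Python. It is an illustration under stated assumptions, not the CrewAI or LangFlow implementation used in the study: the `BASELINE` ranges, the field names, and the action labels are hypothetical values introduced here.

```python
from dataclasses import dataclass, field

# Hypothetical normal operating ranges (illustrative values only).
BASELINE = {
    "temperature_c": (10.0, 80.0),
    "vibration_mm_s": (0.0, 7.0),
    "humidity_pct": (20.0, 70.0),
    "pressure_bar": (1.0, 6.0),
}


@dataclass
class Assessment:
    status: str                      # "normal" or "abnormal"
    action: str                      # response the control layer should take
    violations: list = field(default_factory=list)


def assess_reading(reading: dict) -> Assessment:
    """Compare one sensor reading against the baseline ranges."""
    violations = []
    for name, (low, high) in BASELINE.items():
        value = reading.get(name)
        if value is None:
            # A missing channel is treated as abnormal rather than ignored.
            violations.append(f"{name}: missing")
        elif not (low <= value <= high):
            violations.append(f"{name}={value} outside [{low}, {high}]")
    if violations:
        return Assessment("abnormal", "trigger_safety_shutdown", violations)
    return Assessment("normal", "continue_operation")


normal = assess_reading(
    {"temperature_c": 45.0, "vibration_mm_s": 2.1,
     "humidity_pct": 40.0, "pressure_bar": 3.2}
)
overheated = assess_reading(
    {"temperature_c": 120.0, "vibration_mm_s": 2.1,
     "humidity_pct": 40.0, "pressure_bar": 3.2}
)
print(normal.status, normal.action)
print(overheated.status, overheated.violations)
```

A fixed-range check like this is exactly what a false data injection attack targets: readings that stay inside the ranges pass unchallenged, which is why the table pairs the monitoring agent with a separate anomaly detection node.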

| Framework | Accuracy | Precision | Recall | F1 Score | AUC-ROC | Detection Rate | FNR | FPR |

|---|---|---|---|---|---|---|---|---|

| CrewAI | 0.67 | 1.00 | 0.50 | 0.67 | 0.95 | 0.50 | 0.50 | 0.00 |

| LangFlow | 0.60 | 1.00 | 0.43 | 0.60 | 0.88 | 0.43 | 0.57 | 0.00 |
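
As a consistency check on the reported values, the F1 scores and false-negative values can be recomputed from the precision and recall columns alone. The worked arithmetic below uses only the rounded figures in the table, so small rounding differences are expected. With a precision of 1.00 the F1 score simplifies to $2R/(1+R)$, and the false-negative value is the complement of recall.

```latex
\begin{align*}
F_1 &= \frac{2PR}{P + R}, \qquad \mathrm{FNR} = 1 - R \\[4pt]
\text{CrewAI:}\quad F_1 &= \frac{2(1.00)(0.50)}{1.00 + 0.50} = \frac{1.00}{1.50} \approx 0.67,
  & \mathrm{FNR} &= 1 - 0.50 = 0.50 \\[4pt]
\text{LangFlow:}\quad F_1 &= \frac{2(1.00)(0.43)}{1.00 + 0.43} = \frac{0.86}{1.43} \approx 0.60,
  & \mathrm{FNR} &= 1 - 0.43 = 0.57
\end{align*}
```

Both rows agree with the table. The pattern they show is a conservative detector: no normal data was flagged as an attack, but roughly half of the simulated attacks went undetected.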

| Category | Threat Description | Impacts | Mitigation Strategy |

|---|---|---|---|

| Spoofing | False or fake sensor data was sent to the LLM agents | The system was fooled into trusting the wrong source and made wrong, harmful decisions | Input signature validation and enforcement of source verification in agent prompts [55] |

| Tampering | Sensor readings were altered through adversarial attacks | The system was fooled into making wrong, harmful decisions based on fake input readings | Implement anomaly detection systems; e.g., GAN-based anomaly detection flagged inconsistent sensor input patterns [13,89] |

| Repudiation | Agentic actions not logged or traceable (e.g., false detection unrecorded) | Difficult to audit or trace attacker behavior | Use structured, timestamped logging (e.g., in CrewAI), including agent ID and decisions [16] |

| Info Disclosure | Unauthorized exposure of sensitive system or operational data | Exposes vulnerabilities to attackers or unauthorized users | Implement end-to-end encryption to secure communication between AI agents operating across distributed architectures [18] |

| DoS | LangFlow and CrewAI nodes were flooded with malicious requests | The system crashed or became unresponsive | Fine-tune GAN pre-filters at input channels and implement rate limiting [28] |

| Privilege Escalation | Malicious user manipulates task prompts or gains unauthorized control | Unauthorized actions executed at a higher privilege level | Enforce role-based task restrictions; validate task flow in CrewAI using logic rules [47] |
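
Two of the mitigations above, input signature validation (Spoofing) and structured, timestamped logging (Repudiation), can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not the mechanism used in the study: the shared key, the freshness window, the reading fields, and the helper names are all hypothetical.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

SHARED_KEY = b"demo-key-change-me"  # hypothetical; use a managed secret in practice
MAX_AGE_SECONDS = 30.0              # hypothetical freshness window


def sign_reading(reading: dict, key: bytes = SHARED_KEY) -> str:
    """Return an HMAC-SHA256 signature over a canonical JSON encoding."""
    payload = json.dumps(reading, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_reading(reading: dict, signature: str,
                   now: Optional[float] = None,
                   key: bytes = SHARED_KEY) -> bool:
    """Accept a reading only if its signature matches and it is fresh."""
    now = time.time() if now is None else now
    expected = sign_reading(reading, key)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, signature):
        return False
    age = now - float(reading.get("timestamp", 0.0))
    return 0.0 <= age <= MAX_AGE_SECONDS


def audit_entry(agent_id: str, decision: str, accepted: bool, now: float) -> str:
    """Build one structured, timestamped log line as JSON."""
    return json.dumps(
        {"ts": now, "agent_id": agent_id,
         "decision": decision, "input_accepted": accepted},
        sort_keys=True,
    )


reading = {"sensor_id": "temp-01", "value": 72.5, "timestamp": 1000.0}
signature = sign_reading(reading)
tampered = dict(reading, value=20.0)

ok = verify_reading(reading, signature, now=1010.0)
print(audit_entry("sensor_monitoring_agent", "accept_reading", ok, 1010.0))
```

The timestamp check only narrows the window in which a captured message can be replayed. Rejecting replays inside that window would additionally require a per-message nonce or sequence number tracked by the receiver.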

| Theme/Challenge | Risks & Threats Identified | Proposed Mitigation Strategies |

|---|---|---|

| Data Integrity | False Data Injection (FDI), Data Poisoning | GAN-based anomaly detection, Secure data pipelines, Validation layers |

| Availability | Distributed Denial of Service (DDoS), Replay Attacks | Redundancy mechanisms, Rate limiting, Adaptive anomaly detection |

| Confidentiality | Adversarial Attacks, Model Inversion, Prompt Injection | Adversarial training, Model hardening, Secure communication |

| Trust & Reliability | Unintended decision drift, Misclassification, LLM exploitation | STRIDE threat modeling, Human-in-the-loop oversight, Continuous monitoring |
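
Rate limiting, listed above as an availability mitigation, is commonly implemented with a token bucket. The sketch below is one generic way to do it and is not taken from the study: the `TokenBucket` class, its parameters, and the injected clock are hypothetical names introduced for illustration.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then `rate_per_sec` on average."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock              # injectable so behavior can be tested
        self.tokens = float(capacity)   # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        """Return True if the request may proceed, consuming one token."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Simulated clock so the example is deterministic.
fake_time = [0.0]
bucket = TokenBucket(rate_per_sec=1.0, capacity=3, clock=lambda: fake_time[0])

burst = [bucket.allow() for _ in range(5)]  # five requests at the same instant
fake_time[0] = 2.0                          # two seconds later
later = [bucket.allow() for _ in range(3)]
print(burst, later)
```

Placing a limiter like this in front of each agent's input channel bounds the work a flood of requests can force, at the cost of also dropping legitimate traffic during bursts, so the capacity has to be sized against normal sensor reporting rates.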

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shrestha, S.; Banda, C.; Mishra, A.K.; Djebbar, F.; Puthal, D. Investigation of Cybersecurity Bottlenecks of AI Agents in Industrial Automation. Computers 2025, 14, 456. https://doi.org/10.3390/computers14110456