1. Introduction

Reinforcement learning (RL) has become a powerful approach in machine learning in which an agent learns optimal decision-making strategies through trial-and-error interaction with its environment [1]. The capacity of RL to act in complex, dynamic environments has led to applications across science, finance, and computer games [2,3]. In gaming, RL enables game-playing agents to learn directly from experience: rather than being hardcoded, an agent learns from its successes and improves its decisions after its failures [4]. Fast-paced action games are particularly challenging because they involve real-time combat, in which the agent must balance short-term reactions with long-term strategy [5]. By building on RL concepts, game-playing agents can be trained autonomously, improving their playing skills and opening up new ways of designing dynamic and unpredictable game experiences [6,7].

Various RL algorithms have been tested on different types of games, ranging from classic platform games [8] to more complex ones such as first-person shooter (FPS) games [9,10,11,12,13,14]. Among the many techniques proposed, two have made significant contributions: the Intrinsic Curiosity Module (ICM) and the Asynchronous Advantage Actor–Critic (A3C) algorithm. Combined, they aim to promote the agent's exploration of uncertain environments with the help of intrinsic rewards [15]. Unlike common RL methods that rely only on extrinsic rewards from the environment, ICM addresses one of the biggest challenges in RL, namely exploration in environments with sparse rewards, by supplementing the external reward with an internal, curiosity-driven reward [16]. It does so through a self-supervised mechanism in which a neural network predicts the consequences of the agent's actions. In situations of higher uncertainty or novelty, the agent's prediction error increases, yielding a larger intrinsic reward that encourages the agent to learn more about the environment [17].

The Asynchronous Advantage Actor–Critic (A3C) algorithm is a popular deep reinforcement learning algorithm that trains agents in parallel to improve sample efficiency and speed up learning [18]. In A3C, multiple agents independently explore copies of a game environment, collecting their own experiences and asynchronously updating a shared neural network policy [9]. These algorithms have not yet been applied extensively to action games. Hence, in the present work, we apply ICM and A3C to analyze how efficiently they explore action games.

1.1. Paper Contribution

The purpose of this work is to improve the performance and adaptability of game-playing agents in action games by applying the Intrinsic Curiosity Module (ICM) together with the Asynchronous Advantage Actor–Critic (A3C) algorithm. Conventional reinforcement learning (RL) schemes have drawbacks such as slow convergence and poor sample efficiency, especially when exploring high-dimensional action spaces. Our objective is to investigate how introducing intrinsic curiosity as an additional reward signal can improve autonomous exploration and address these challenges. The study has two main objectives: (1) evaluate the effectiveness of ICM in encouraging agents to explore and learn in action games, promoting a deeper understanding of the game environment and facilitating the discovery of optimal strategies; and (2) assess the impact of integrating the A3C algorithm within the RL framework, examining its potential to speed up learning and enhance adaptability in complex games with high-dimensional action spaces.

1.2. Practical Application and Relevance

The main motivation is the growing need for smart, adaptive algorithms in gaming technology. The suggested approach is intended to improve the gaming experience: equipping non-player characters (NPCs) with more advanced decision-making, so that they behave less predictably and adapt to player actions, can create varied and dynamic gameplay scenarios. The work is also relevant beyond gaming, for example to autonomous systems, robotics, and other real-time decision-making environments where flexible reinforcement learning strategies apply. By linking our findings to such real-world applications, this study aims to contribute to artificial intelligence and interactive systems research, and to support progress in any field that depends on intelligent, adaptable agents and AI-driven adaptive learning systems.

2. Related Work

2.1. Reinforcement Learning in Action Games

Advances in gaming technology are increasingly recognized for their contributions to cognitive development and user experience. Research highlights the potential of games to improve cognitive performance and engagement [19,20,21,22,23,24]. These findings underline the importance of exploring sophisticated reinforcement learning applications in action games, with the aim of developing more adaptive and capable game-playing agents. The literature on reinforcement learning in action games, intrinsic curiosity, and the A3C algorithm has grown substantially in recent years, and various studies have applied reinforcement learning techniques to train intelligent agents capable of competitive gameplay in action games.

Researchers have investigated various algorithms and architectures, including Deep Q-Networks (DQNs), policy gradients, and actor–critic methods, to address the challenges posed by high-dimensional state and action spaces in games such as Street Fighter [25,26,27,28,29]. Yoon and Kim [30] successfully applied the DQN algorithm to AI competitions involving visual fighting games. Their experimental findings indicate that DQN shows significant promise in real-time fighting games, even with the action set reduced to 11 actions.

Pinto and Coutinho [31] developed a novel approach that integrates hierarchical reinforcement learning (HRL) and Monte Carlo tree search (MCTS) to train fighting-game artificial intelligence agents (FTGAIs). The resulting agent achieved success rates on par with those of a champion. Takano et al. [32] successfully applied DQN on the FightingICE platform, demonstrating that a double-agent strategy is significantly superior to a single agent, with a 30% higher success rate [33,34]. They also integrated curiosity-driven intrinsic rewards into reinforcement learning for fighting games, outperforming an actor–critic model [32]. However, according to Inoue et al., AI agents may not reliably defeat unfamiliar opponents, which necessitates continuous training through competitions [35].

In 2.5D fighting games, the characters' visuals exhibit a certain level of fuzziness because of variations in depth and height. These games also require precise sequences of continuous actions, which makes network design challenging. To address this, Li et al. devised a network called A3C+, which extends the Asynchronous Advantage Actor–Critic (A3C) algorithm for the game Little Fighter 2 (LF2). This network uses game-related information features and a recurrent layer to analyze fighting skill sets, reaching a 99.3% winning rate [36]. Ishii et al. studied a specialized version of Monte Carlo tree search (MCTS) called Puppet-Master on the FightingICE platform [37]. This allowed them to control all characters in the game, with each character moving according to its unique features. The study aimed to evaluate whether the proposed method is both intelligent and entertaining for the public [37].

Using the Fighters Arena platform, Bezerra et al. developed an agent called FALCON, which combines learning, cognition, and navigation techniques with ARAM. This agent achieved a 90% winning rate against in-game AI opponents that followed a fixed action pattern [38]. Kim et al. used the FightingICE platform to develop an AI agent based on self-play and MCTS. Their research examined various reinforcement learning setups, including different rewards and opponent compositions, and introduced novel performance measures. In their experiments, the agent outperformed the others, achieving a 94.4% winning rate [39].

Oh et al. conducted experiments using the game Blade and Soul and developed two data-skipping techniques: inaction and continuous movement. After being trained in one-on-one fights, the agent was tested against five professional players. It achieved a 62% winning rate and developed expertise in three distinct fighting styles [40].

2.2. Curiosity-Driven Exploration

Curiosity-driven exploration is a well-studied topic in reinforcement learning, and several approaches have been proposed that provide intrinsic rewards based on novelty or information gain [41]. For instance, Schmidhuber et al. [42,43] and others [44] used surprise and compression progress as intrinsic rewards. Kearns et al. [45] and Brafman et al. [46] presented exploration algorithms whose complexity is polynomial in the number of parameters of the state space. Other researchers, such as Klyubin et al. [47] and Mohamed and Rezende [48], used empowerment, which measures how much information an agent's actions carry about its future states, as an intrinsic reward. Stadie et al. [49] used prediction error in the feature space of an auto-encoder to identify states that are interesting to explore.

Osband et al. [50,51] use multiple bootstrapped value functions, in the spirit of Thompson sampling, to make exploration more effective. Different methods for quantifying information gain during exploration have been suggested by Mobin et al. [52], Still and Precup [53], and Storck et al. [54]. Houthooft et al. [55] also proposed an exploration policy that maximizes information gain about the agent's knowledge of the environment's dynamics. Besides ICM, other curiosity-driven approaches exist in the literature. One notable method is Random Network Distillation (RND), in which novelty is measured as the error of a trained network in predicting the output of a fixed, randomly initialized neural network. This yields a simple yet powerful intrinsic reward that guides the agent toward hard-to-explore states [56]. Because RND estimates novelty from randomly initialized features, it requires less computation and hyperparameter tuning, which makes it well suited to visually rich, high-dimensional environments [57]. Information gain-based methods, in turn, encourage agents to reduce their uncertainty about the environment [58]. The main idea is that the agent seeks not only extrinsic rewards but also experiences that yield new and useful information [59]. These methods usually promote exploration by rewarding the discovery of knowledge that explains the environment's dynamics. In practice, Bayesian inference or other probabilistic models estimate the reduction in uncertainty, which serves as the intrinsic reward. Such methods support exploration in highly unpredictable environments but incur higher computational costs because of the required uncertainty estimation and probabilistic modeling [60].
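To make the RND idea above concrete, the following is a minimal sketch in plain Python. It assumes linear target and predictor networks, a small embedding size, and plain stochastic gradient descent; the class name `RNDBonus` and all parameter values are hypothetical and chosen only for illustration, not taken from [56] or from our implementation.

```python
import random


class RNDBonus:
    """Minimal Random Network Distillation sketch (hypothetical names).

    A fixed, randomly initialized linear "target" map embeds each
    observation; a trainable linear "predictor" learns to imitate it.
    The squared prediction error is the novelty bonus: it is large for
    rarely seen observations and shrinks as they are revisited.
    """

    def __init__(self, obs_dim, emb_dim=4, lr=0.05, seed=0):
        rng = random.Random(seed)
        # Target weights are sampled once and never updated.
        self.target = [[rng.gauss(0, 1) for _ in range(obs_dim)] for _ in range(emb_dim)]
        # Predictor weights start at zero and are trained by SGD.
        self.pred = [[0.0] * obs_dim for _ in range(emb_dim)]
        self.lr = lr

    @staticmethod
    def _apply(weights, obs):
        return [sum(w * x for w, x in zip(row, obs)) for row in weights]

    def bonus(self, obs, train=True):
        target = self._apply(self.target, obs)
        pred = self._apply(self.pred, obs)
        err = [p - t for p, t in zip(pred, target)]
        value = sum(e * e for e in err)
        if train:
            # One SGD step on 0.5 * ||pred - target||^2 w.r.t. predictor weights.
            for row, e in zip(self.pred, err):
                for j, x in enumerate(obs):
                    row[j] -= self.lr * e * x
        return value


rnd = RNDBonus(obs_dim=3)
familiar = [1.0, 0.0, 0.5]
first = rnd.bonus(familiar)
for _ in range(200):
    last = rnd.bonus(familiar)
novel = rnd.bonus([0.0, 1.0, -1.0], train=False)
print(first > last, novel > last)
```

Because the predictor is trained only on the familiar observation, its error there collapses while a different observation still produces a sizeable bonus, which is the novelty signal RND relies on.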

In summary, curiosity-driven exploration in reinforcement learning encompasses a range of methods that encourage agents to visit new states and try new actions, allowing them to learn better and respond more effectively to changing environments. These methods use different measures of novelty and information gain as intrinsic reward signals to promote effective exploration.

3. Methods

3.1. Curiosity-Driven Exploration via ICM and A3C

The ICM learns in a self-supervised manner, aiming to predict the outcome of the agent's actions in its environment. As the agent interacts with the game environment, its state $s_t$ and its action $a_t$ at time $t$ are stored, as shown in Figure 1. These state–action tuples are then fed to the ICM for self-supervised learning. The ICM has two main components: an inverse dynamics model and a forward dynamics model. The inverse dynamics model is a deep neural network that takes as input the feature encodings $\phi(s_t)$ and $\phi(s_{t+1})$ of the current state $s_t$ and the next state $s_{t+1}$ and predicts the action $a_t$ that caused the transition between them. It is the inverse part of the ICM because it infers the action $a_t$ from the observed change of state from $s_t$ to $s_{t+1}$. The inverse dynamics model is given by:

$$\hat{a}_t = g\big(\phi(s_t), \phi(s_{t+1}); \theta_I\big)$$

where $\hat{a}_t$ is the predicted estimate of $a_t$, $g$ is the learned function called the inverse dynamics model, and $\theta_I$ denotes its neural network parameters. The forward dynamics model is another neural network that takes $\phi(s_t)$ and $a_t$ as inputs and predicts the feature encoding of the next state, $\phi(s_{t+1})$. It is the forward part of the ICM because it learns to predict the outcomes of the agent's actions. The forward dynamics model is given by:

$$\hat{\phi}(s_{t+1}) = f\big(\phi(s_t), a_t; \theta_F\big)$$

where $f$ is the learned function called the forward dynamics model, and $\hat{\phi}(s_{t+1})$ and $\theta_F$ denote the predicted estimate of $\phi(s_{t+1})$ and the neural network parameters, respectively.

The ICM's prediction errors serve as the intrinsic reward $r_t^i$ for the agent's curiosity-driven exploration, as shown in Figure 2. Following Pathak et al. [8], the intrinsic reward is the forward model's prediction error in feature space, scaled by a factor $\eta > 0$, while the inverse model's loss trains the feature encoder $\phi$:

$$r_t^i = \frac{\eta}{2}\left\lVert \hat{\phi}(s_{t+1}) - \phi(s_{t+1}) \right\rVert_2^2$$

Higher prediction errors indicate greater novelty and uncertainty in the agent's experiences, leading to higher intrinsic rewards. These intrinsic rewards are combined with the external reward $r_t^e$ (e.g., game scores) to form the total reward signal that guides the learning process:

$$r_t = r_t^i + r_t^e$$
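The intrinsic and combined rewards described above can be sketched in plain Python as follows. This is a minimal illustration that assumes the feature vectors for the next state (actual and predicted) have already been produced by the encoder and forward model; the helper names are hypothetical, η = 0.5 is the intrinsic reward scaling listed in Table 1, and the cross-entropy inverse loss follows the usual ICM formulation for discrete actions rather than a detail stated in this paper.

```python
import math


def intrinsic_reward(phi_next_pred, phi_next, eta=0.5):
    """Curiosity bonus r^i_t = (eta / 2) * ||phi_hat(s_{t+1}) - phi(s_{t+1})||^2."""
    sq_err = sum((p - q) ** 2 for p, q in zip(phi_next_pred, phi_next))
    return 0.5 * eta * sq_err


def inverse_loss(action_logits, action_taken):
    """Cross-entropy between the inverse model's predicted action
    distribution (softmax over logits) and the action actually taken."""
    m = max(action_logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in action_logits))
    return log_z - action_logits[action_taken]


def total_reward(r_ext, r_int):
    """Combined signal r_t = r^i_t + r^e_t used to train the policy."""
    return r_int + r_ext


# Toy numbers: the forward model misses phi(s_{t+1}) by (0.2, -0.4).
r_i = intrinsic_reward([1.0, 0.0], [0.8, 0.4], eta=0.5)
print(r_i)                     # approximately 0.05
print(total_reward(0.0, r_i))  # sparse game: no extrinsic reward on this step
```

With sparse game rewards such as the +1 per defeated opponent used here, most steps have $r_t^e = 0$, so the curiosity term is often the only learning signal the policy receives.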

We used the ICM to construct the agent and the A3C policy gradient to train the agent's policy. The agent's policy is a deep neural network $\pi(s_t; \Phi_P)$ with parameters $\Phi_P$. Given the state $s_t$, the agent samples an action $a_t \sim \pi(s_t; \Phi_P)$ at time $t$ from this policy. $\Phi_P$ is optimized to maximize the expected sum of rewards:

$$\max_{\Phi_P} \; \mathbb{E}_{\pi(s_t; \Phi_P)}\Big[\sum_t r_t\Big]$$

A3C maintains a policy $\pi(a_t \mid s_t; \theta)$ and a value function estimate $V(s_t; \theta_v)$. Like the n-step Q-learning variant described in [18], the actor–critic operates in the forward view, using the same mix of n-step returns to update both the policy and the value function. Updates occur after every $t_{max}$ actions or upon reaching a terminal state. The policy is updated along the direction

$$\nabla_{\theta'} \log \pi(a_t \mid s_t; \theta')\, A(s_t, a_t; \theta, \theta_v)$$

where $\theta'$ denotes the worker's thread-specific copy of the policy parameters and $A(s_t, a_t; \theta, \theta_v)$ is an estimate of the advantage function, defined as

$$A(s_t, a_t; \theta, \theta_v) = \sum_{i=0}^{k-1} \gamma^i r_{t+i} + \gamma^k V(s_{t+k}; \theta_v) - V(s_t; \theta_v)$$

Here, $k$ varies by state and is capped by $t_{max}$. By incorporating the Intrinsic Curiosity Module into the A3C algorithm, we aim to combine the benefits of curiosity-driven exploration with those of efficient distributed training. The ICM encourages agents to actively seek out novel experiences in action games, enabling them to discover optimal strategies and potentially avoid getting stuck in local optima. A3C's asynchronous, parallel architecture accelerates learning and improves the agents' ability to explore the large action spaces of games such as Mortal Kombat, Jackie Chan, and Street Fighter. The combination of these techniques is expected to yield game-playing agents with improved performance, better adaptability to different in-game scenarios, and a higher degree of mastery in action games, contributing to the advancement of reinforcement learning in the gaming domain.
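The n-step advantage defined above can be computed for a whole rollout in a single backward pass. The sketch below is plain Python with hypothetical function names; it assumes the critic's values $V(s_{t+i})$ are already available and handles a terminal state by passing a bootstrap value of 0. The first entry is the k-step advantage for $s_t$, and later entries use progressively shorter horizons, which is why $k$ varies by state.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Forward-view n-step advantages used by A3C.

    rewards[i] and values[i] = V(s_{t+i}) cover one rollout of at most
    t_max steps; bootstrap_value is V(s_{t+k}) for the state after the
    rollout (use 0.0 if that state is terminal).
    """
    advantages = [0.0] * len(rewards)
    ret = bootstrap_value
    # Walk backwards so each return accumulates the discounted tail:
    # R_i = r_i + gamma * R_{i+1}, starting from R_k = V(s_{t+k}).
    for i in reversed(range(len(rewards))):
        ret = rewards[i] + gamma * ret
        advantages[i] = ret - values[i]
    return advantages


def policy_gradient_terms(log_probs, advantages):
    """Per-step objective log pi(a_t|s_t) * A_t; ascending its sum (with the
    advantages held constant) follows the A3C policy update direction."""
    return [lp * adv for lp, adv in zip(log_probs, advantages)]


advs = n_step_advantages([0.0, 0.0, 1.0], [0.5, 0.5, 0.5], bootstrap_value=0.0, gamma=0.9)
print(advs)  # approximately [0.31, 0.4, 0.5]
```

In a full implementation the same advantages also drive the critic, whose loss is typically the squared advantage.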

3.2. ICM and A3C for Exploration in Action Games

To add curiosity-based exploration to the A3C framework for game-playing agents, we follow the method laid out by Pathak et al. [8]. An Intrinsic Curiosity Module is incorporated into the A3C algorithm and provides the agent with a bonus reward based on the novelty and ambiguity of its experience. This bonus encourages the agent to venture into unexplored regions, making it more adaptable and broadening what it learns.

The Intrinsic Curiosity Module follows the principle of self-supervised learning: a neural network predicts the outcome of the agent's actions within the environment. As the agent plays the game, the current states and actions are recorded, and a learned dynamics model predicts the next state. The intrinsic reward for curiosity-driven exploration is computed from the difference between the actual next state and the predicted next state. A higher error indicates greater novelty and uncertainty, which drives the agent to proactively explore and learn in such situations. These intrinsic rewards are added to external rewards such as game scores to form the aggregate reward that drives the agent's learning process.

Proximal Policy Optimization (PPO) was used to improve stability and efficiency during training. PPO provides stable updates by preventing abrupt policy changes, keeping each new policy close to the previous one. This gave better control over training in complex games, including the games used in our experiments. By combining curiosity-based exploration within the A3C framework with PPO policy updates, our hybrid method aims to maximize the agent's capacity to explore the environment efficiently. Intrinsic rewards, together with PPO policy updates, encourage the agent to seek new and varied experiences, leading to the discovery of new strategies and better coverage of the action space. The asynchronous nature of A3C, combined with PPO, allows several agents to explore the game environment separately and share what they learn efficiently. This, in turn, improves the performance and adaptability of game-playing agents in action games.

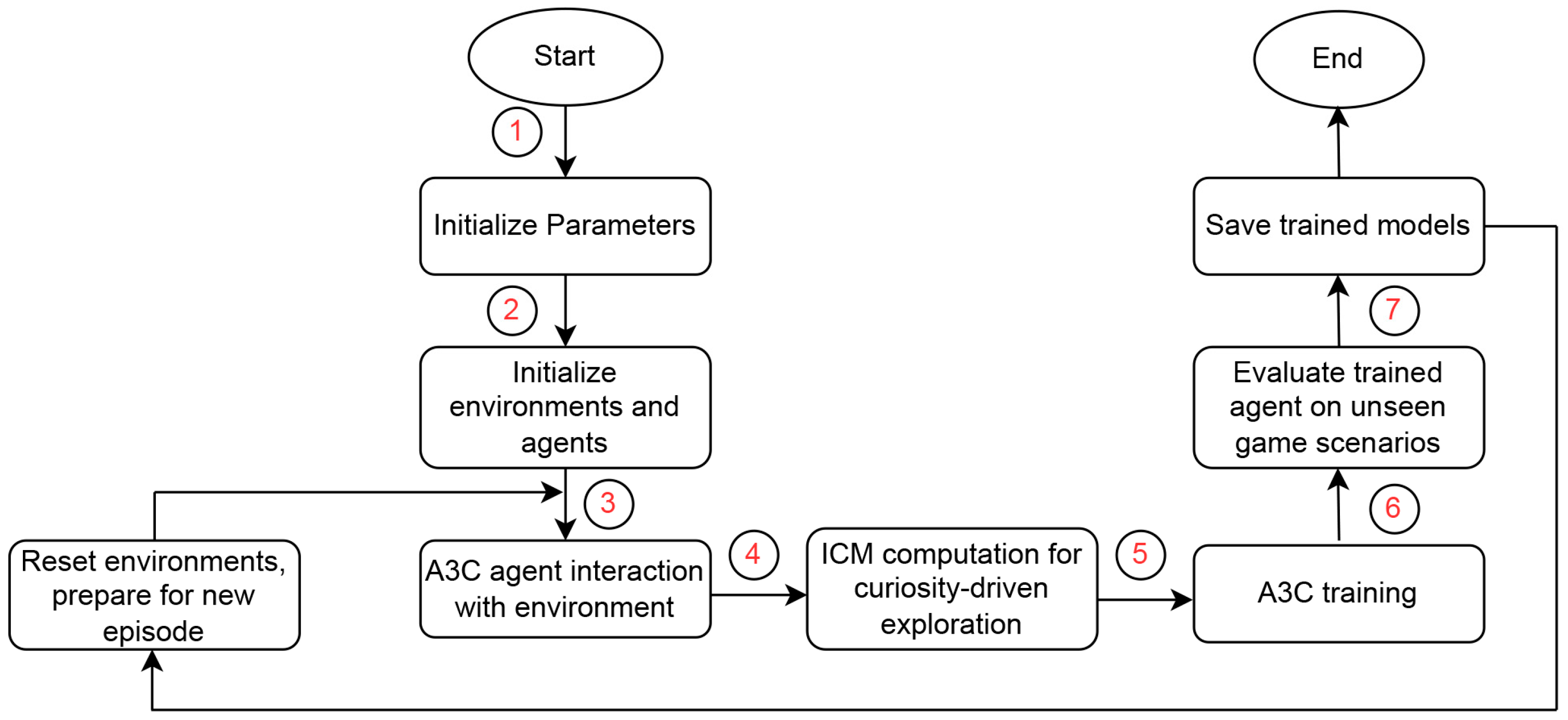

Figure 3 shows the flowchart of the proposed ICM + A3C approach for curiosity-driven exploration in action games, and Algorithm 1 gives the corresponding procedure:

Algorithm 1: ICM + A3C Algorithm for Curiosity-Driven Exploration in Action Games

1. Initialize parameters:
2. Initialize neural network weights for both the ICM and A3C modules
3. Initialize environments and agents:
4. Initialize the game environments (e.g., Mortal Kombat, Jackie Chan, Street Fighter)
5. Initialize game-playing agents based on the A3C architecture
6. Training loop:
7. for episode in total_episodes do
8. Reset the environments and prepare for a new episode
9. Receive the initial game screen as the initial state ‘s’
10. Reset the internal states of the A3C agents
11. while episode not finished do
   a. Choose action ‘a’ from the A3C policy based on state ‘s’
   b. Perform action ‘a’; observe reward ‘r’ and new state ‘s_prime’
   c. Store the experience tuple (s, a, r, s_prime) in the agent’s memory
   d. Compute the prediction errors of the inverse dynamics model and the forward dynamics model
   e. Calculate intrinsic rewards ‘ri’ based on the prediction errors
   f. Calculate the total reward ‘rt’ as a combination of intrinsic and external rewards
   g. Update the A3C policy and value function based on the experiences in memory
   h. Perform asynchronous updates using gradients from multiple agents
   i. Update state: ‘s’ = ‘s_prime’
12. end while
13. end for
14. Evaluation:
15. Evaluate the trained ‘ICM + A3C’ agents on unseen game scenarios
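The asynchronous update step of Algorithm 1 (step h) can be illustrated with a toy sketch using Python threads. It assumes a shared parameter vector guarded by a lock and replaces the real A3C + ICM loss with a hypothetical quadratic loss, so it shows only the update pattern (sync a local copy, compute a gradient on possibly stale weights, push it to the shared weights), not our TensorFlow implementation. The original A3C applies updates lock-free; the lock here is a simplification.

```python
import threading


class SharedParams:
    """Global parameter vector shared by all workers (toy stand-in)."""

    def __init__(self, values):
        self.values = list(values)
        self.lock = threading.Lock()

    def snapshot(self):
        with self.lock:
            return list(self.values)

    def apply_gradients(self, grads, lr):
        with self.lock:
            for i, g in enumerate(grads):
                self.values[i] -= lr * g


def worker(shared, goal, steps, lr):
    """Hypothetical worker loop: sync a local copy of the shared weights,
    compute a gradient on that (possibly stale) copy, and push it back.
    The toy loss 0.5 * ||theta - goal||^2 stands in for the A3C + ICM loss."""
    for _ in range(steps):
        local = shared.snapshot()
        grads = [x - g for x, g in zip(local, goal)]
        shared.apply_gradients(grads, lr)


shared = SharedParams([0.0, 0.0])
goal = [1.0, -2.0]
# Six workers, matching the worker count used in the experiments.
workers = [threading.Thread(target=worker, args=(shared, goal, 200, 0.05))
           for _ in range(6)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(shared.values)  # close to [1.0, -2.0]
```

Because CPython threads share one interpreter lock, this sketch demonstrates the asynchronous update logic rather than real parallel speed-up; practical A3C implementations typically use separate processes or GPU-backed workers.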

3.3. ICM and A3C for Enhanced Exploration in Games

In our investigation, the synergy between the Intrinsic Curiosity Module (ICM) and the Asynchronous Advantage Actor–Critic (A3C) algorithm is realized by integrating curiosity-driven exploration into the A3C framework. The ICM, founded on self-supervised learning principles, introduces an additional reward based on the novelty and uncertainty of the agent's experiences. This intrinsic reward, combined with the external rewards from the game environment, forms a single reward signal that guides the learning process. The integration aims to combine the advantages of curiosity-driven exploration with efficient, distributed training, fostering adaptability to diverse in-game scenarios and improving agents' performance in action games.

Furthermore, the A3C algorithm is notable for its asynchronous, parallel architecture, which speeds up learning and enables agents to navigate the large action spaces often found in real-time games. Integrating ICM with A3C encourages exploration of uncertain or unfamiliar states. Agents trained with the combined approach can learn faster and adapt more effectively, achieving greater mastery of action games in challenging environments.

3.4. Impact of Curiosity-Driven Exploration on Learning Process

Measuring the effectiveness of curiosity-driven exploration includes measuring the agent's overall performance. The key aspect is to evaluate how well the ICM supports the learning process of game-playing agents. For a comprehensive picture, we introduce additional metrics covering learning stability, adaptability, and exploration diversity. The environmental hyperparameters in Table 1 and the PPO agent's hyperparameters in Table 2 were carefully selected to obtain better results. Each hyperparameter and the reason for its selection are explained in Section 4.4.

3.4.1. Intrinsic Curiosity Metrics

Analyzing the contribution of curiosity-based exploration requires specific metrics that evaluate the Intrinsic Curiosity Module (ICM). These metrics are intended to give a balanced view of how curiosity shapes the overall learning of game-playing agents. First, the rate of novel experiences ($RNE_t$) in Equation (1) quantifies how frequently the agent encounters previously unseen situations, describing the exploratory tendency of its behavior; it indicates the degree to which curiosity encourages agents to engage with diverse and new states. Second, the Frequency of Adaptation to New Situations (FANS) in Equation (2) captures the agent's ability to adapt and survive in new situations, reflecting the resilience of what it has learned. Third, the Exploration Efficiency in Learning New Tactics (EELNT) in Equation (3) measures how effectively agents identify new tactics and incorporate them into their decision-making.

3.4.2. Learning Process Dynamics

Our research investigates the learning process dynamics under curiosity-based exploration in a holistic way. We aim to trace how learning improves over time, detect improvement patterns, and identify inflection points at which learning improves or stabilizes. Beyond overall performance, we examine the convergence rate and stability of learning, as well as how curiosity-based exploration affects the agent's coverage of the environment and its final performance.

3.4.3. Comparative Analyses

Our study uses comparative analysis to compare the performance of agents trained with curiosity-driven exploration against agents trained with traditional reinforcement learning approaches. We use a t-test to identify statistically significant differences in learning efficiency, adaptability, and performance. The comparison results are presented in Table 3. This comparison adds rigor to our evaluation and provides a sound basis for determining the specific contribution of curiosity-driven exploration relative to classical methods.
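The text does not state which t-test variant was used. As a hedged illustration, the sketch below computes Welch's unequal-variance t statistic and its Welch–Satterthwaite degrees of freedom with the Python standard library; the function name and the score lists are hypothetical, made-up numbers rather than our experimental data.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Welch's two-sample t statistic and Welch–Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Squared standard errors of each mean (sample variance / sample size).
    se_a = statistics.variance(sample_a) / n_a
    se_b = statistics.variance(sample_b) / n_b
    t = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    dof = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, dof


# Made-up per-seed scores for a curiosity agent and a no-curiosity baseline.
curious = [0.8, 0.9, 0.85, 0.95]
baseline = [0.4, 0.5, 0.45, 0.55]
print(welch_t(curious, baseline))  # t is about 8.76 with about 6 degrees of freedom
```

If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic together with a p-value.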

4. Experiments

For the experiments, we used a machine with an Intel i7-12700H CPU, 16 GB RAM, and an NVIDIA GeForce RTX 3050 GPU (4 GB GDDR6, CUDA 11.8), and trained our agents using six workers. This provided the computational capacity needed to train deep reinforcement learning models efficiently. The software stack is based on Python 3.8.5, with widely used libraries such as TensorFlow implementing the RL algorithms. The MAME Toolkit library provides the interface to the Multiple Arcade Machine Emulator (MAME), allowing the curiosity-based A3C algorithm to interact with the games and record game screens directly. The experiments were run on Ubuntu 20.04 to ensure a stable and reliable software setup.

4.1. Environments

We use action games that are well known in RL research. These games were chosen because they are popular, have large action spaces, and offer varied combat mechanics, making them an ideal setup for analyzing the performance of our proposed modules. Mortal Kombat has an action space of 8 actions, while Jackie Chan and Street Fighter each have 10. The agent receives a reward of +1 when it defeats the opponent and reaches the next level; no other reward, penalty, or reward adjustment is given at any time.

4.2. State Preprocessing

To simplify computation and focus on meaningful visual features, the game screens were preprocessed using the MAME Toolkit library. The screens were rescaled to an appropriate resolution and converted to grayscale to remove distractions due to color. The preprocessed screens served as inputs to the RL models, allowing the agents to perceive meaningful game states.

4.3. Training Setup

In the preprocessing stage, our aim was to reduce computational requirements. We converted the raw input, RGB game frames with three channels, to single-channel grayscale and rescaled the frames to 64 × 64 pixels. To capture temporal information such as motion, four successive frames were stacked to form the state representation $s_t$. Following the asynchronous training protocol of A3C, all agents were trained with six workers, using PPO to optimize the parameters of all modules. These state representations preserve the essence of the original games while integrating smoothly with the curiosity-based A3C algorithm. They allowed our agents to navigate the games effectively, observe the game states, and act on both the intrinsic curiosity-based rewards and the extrinsic game scores. The resulting setup provides a dynamic and challenging environment in which agents can learn and improve their action-game skills, which in turn makes it possible to assess curiosity-based exploration and the A3C algorithm in action-gaming scenarios.
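The preprocessing pipeline described above (grayscale, 64 × 64 rescaling, four-frame stacking) can be sketched in plain Python as follows. The paper performs these steps with the MAME Toolkit; this sketch only illustrates the logic, with hypothetical helper names. The ITU-R BT.601 luma weights and nearest-neighbour resizing are our assumptions, since the text does not state which conversion or interpolation is used, and a real pipeline would normally use an image library rather than nested lists.

```python
from collections import deque


def to_grayscale(frame):
    """RGB frame (H x W list of (r, g, b)) -> H x W luminance values in [0, 1].
    Uses ITU-R BT.601 luma weights (an assumption, not stated in the paper)."""
    return [[(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 for r, g, b in row]
            for row in frame]


def resize_nearest(image, out_h=64, out_w=64):
    """Nearest-neighbour rescale of a 2-D list to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [[image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


class FrameStack:
    """Keeps the last k preprocessed frames as the state s_t (k = 4 here)."""

    def __init__(self, k=4):
        self.frames = deque(maxlen=k)

    def reset(self, frame):
        # At episode start, repeat the first frame k times to fill the stack
        # (the same list object is reused, which is fine since it is never mutated).
        processed = resize_nearest(to_grayscale(frame))
        for _ in range(self.frames.maxlen):
            self.frames.append(processed)
        return list(self.frames)

    def step(self, frame):
        # The oldest frame drops out automatically because of maxlen.
        self.frames.append(resize_nearest(to_grayscale(frame)))
        return list(self.frames)


stack = FrameStack()
raw = [[(255, 255, 255)] * 160 for _ in range(120)]  # toy 120 x 160 white frame
state = stack.reset(raw)
print(len(state), len(state[0]), len(state[0][0]))  # 4 64 64
```

Repeating the first frame at reset is one common convention for filling the stack; zero-padding is another.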

4.4. Intrinsic Curiosity Module + Asynchronous Advantage Actor Critic (ICM + A3C)

For both the A3C and ICM algorithms, the input states are the preprocessed game screens captured from the respective game environments. These screens are fed to the ICM for curiosity-driven exploration and to A3C for policy optimization. A3C + ICM uses several neural networks. The input game frames $s_t$ first pass through four convolutional layers, each using the exponential linear unit (ELU) activation function [61]. The output of the last convolutional layer is fed to a Long Short-Term Memory (LSTM) network [62]. The LSTM output is passed to two separate fully connected networks, one for the actor and one for the critic, which predict the action and the value function, respectively. The ICM component consists of a forward model and an inverse model.
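As a quick sanity check on the encoder described above, the sketch below computes the tensor shape after each convolutional layer for a 64 × 64 × 4 stacked input. The filter count (32), 3 × 3 kernels, stride 2, and "same" padding are assumptions borrowed from the commonly used ICM configuration; the paper does not list these values, and the function names are hypothetical.

```python
import math


def conv_out(size, stride=2):
    """Spatial size after a 'same'-padded convolution: ceil(size / stride)."""
    return math.ceil(size / stride)


def encoder_shapes(size=64, channels=4, layers=4, filters=32, stride=2):
    """(height, width, channels) after each of the assumed conv layers."""
    shapes = [(size, size, channels)]
    for _ in range(layers):
        size = conv_out(size, stride)
        shapes.append((size, size, filters))
    return shapes


shapes = encoder_shapes()
flat = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
print(shapes)  # [(64, 64, 4), (32, 32, 32), (16, 16, 32), (8, 8, 32), (4, 4, 32)]
print(flat)    # 512 features per frame stack under these assumptions
```

Under these assumptions, each state is flattened to a 512-dimensional vector before entering the LSTM (and, in the ICM, the inverse and forward heads).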

In the initial stage, the inverse model processes input game frames

st and

st+1 through four convolutional layers with ELU functions converting them to feature vectors

t and

t + 1. These vectors then predict the agent’s probable action in fully connected layers. Meanwhile, the forward model uses the feature vector

t and action

at to predict the next game frame’s feature vector,

t + 1. PPO, a deep reinforcement learning (DRL) algorithm, has been adopted as the primary approach for reinforcement learning by OpenAI [

63,

64,

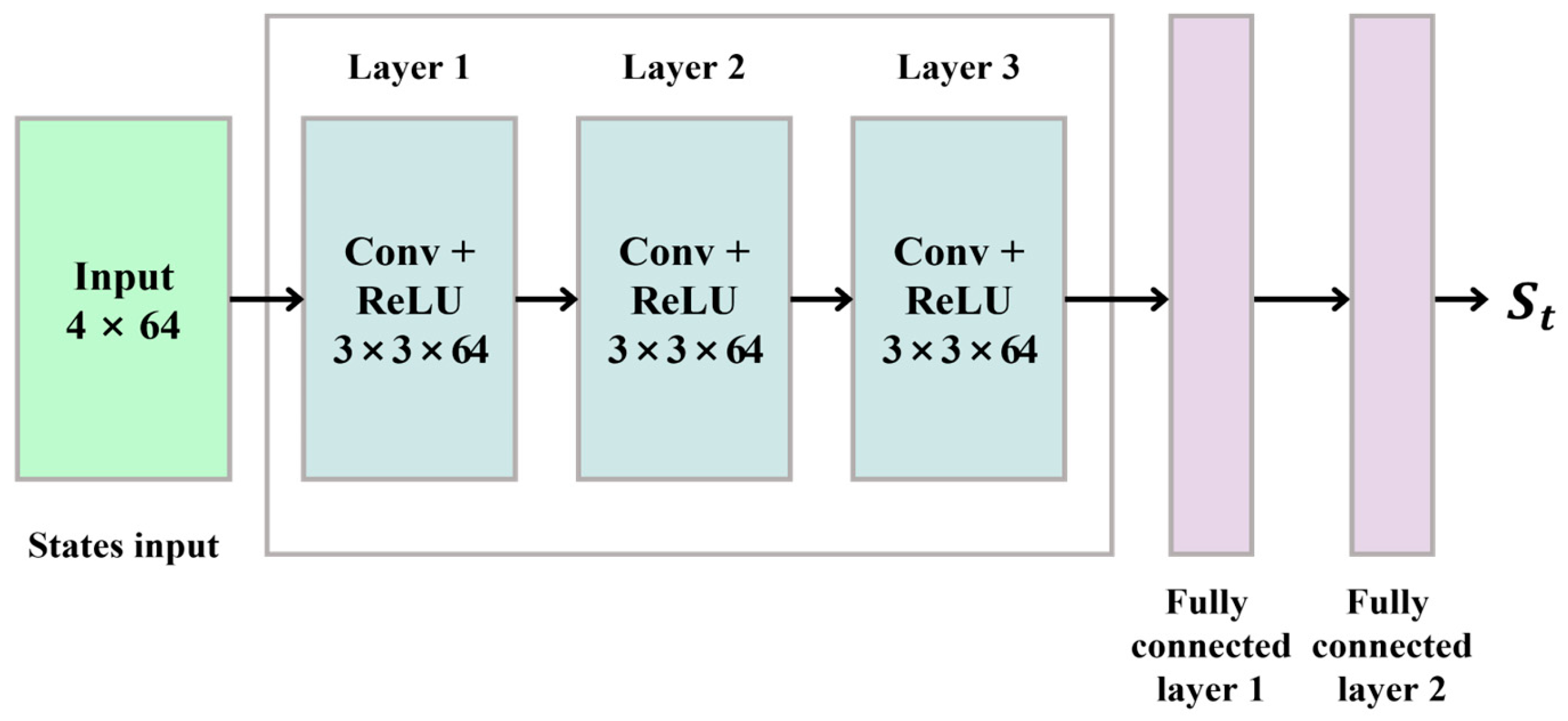

65]. The fundamental concept of PPO revolves around preventing excessive policy updates. PPO enforces constraints on the probability ratio within a range of −1 to +1. Our network architecture is designed with three convolutional layers that take input in the form of game frames (

st), with a rectified linear unit (ReLU) as the activation function for each convolutional layer. The output of the final convolutional layer is then directed into two distinct, fully connected layers. These layers play a role in predicting both the value function and the action probability distribution. The action probability distribution represents the ratio of action probabilities between the new and old policies, both based on the same state.
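The clipping rule described above can be written as a per-sample objective. The sketch below is a minimal plain-Python illustration with a hypothetical function name; it uses ε = 0.1 from Table 1 and computes the ratio from log-probabilities, as PPO implementations commonly do. The loss actually minimized is the negative mean of this objective over a batch.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, eps=0.1):
    """Per-sample PPO surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A),
    where r = pi_new(a|s) / pi_old(a|s) is computed from log-probabilities."""
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped * advantage)


# The new policy doubles the probability of an action with positive advantage:
# the gain is capped at (1 + eps) * A instead of 2 * A.
print(ppo_clipped_objective(math.log(0.4), math.log(0.2), advantage=1.0))  # approximately 1.1
```

Taking the minimum of the clipped and unclipped terms means the objective never rewards moving the ratio outside $[1-\epsilon, 1+\epsilon]$, while still penalizing changes that make a bad action more likely.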

The environmental hyperparameters are summarized in Table 1. The learning rate of the PPO model was set to 0.0001, the same as that of the target model $\Phi_T$ and the learned model $\Phi_L$. The optimizer and weight initialization were chosen following common practice in RL studies, as suggested in [63,64,65]. The discount factors were set to $\gamma_{ext} = 0.998$ for the external reward and $\gamma_{intr} = 0.99$ for the intrinsic reward; these values were tuned empirically to balance long-term return against the contribution of the intrinsic reward. We found intrinsic reward scaling to be important: the best results were achieved with $\eta = 0.5$, selected after trying several scaling values, including 0.1, 0.25, 0.5, and 0.75. The learning rate for all models was set to 0.0001 with the Adam optimizer. The rollout length (128), clipping parameter, and number of optimization epochs (4) are consistent with baseline implementations of PPO and provide stable gradients without excessive variance. The entropy coefficient was chosen to balance exploration and exploitation, and the clipping parameter follows OpenAI's PPO reference implementation. The advantage coefficients were chosen after a preliminary sensitivity analysis. The PPO agent's hyperparameters are summarized in Table 2.

Table 1.

Agent’s hyperparameters.


| Hyperparameter | Value | Hyperparameter | Value |
|---|---|---|---|
| PPO model learning rate | 0.0001 | Rollout length | 128 |
| Target model ΦT learning rate | 0.0001 | Learned model ΦL learning rate | 0.0001 |
| Number of optimization epochs | 4 | Entropy coefficient | 0.001 |
| Discount factor γext | 0.998 | Epsilon clipping | 0.1 |
| Discount factor γintr | 0.99 | Gradient norm clipping | 0.5 |
| Advantages ext coefficient | 2.0 | Advantages intr coefficient | 1.0 |
| GAE λ coefficient | 0.95 | Optimizer | Adam |
| Intrinsic reward scaling | 0.5 | Weight initialization | Orthogonal |
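Table 1 lists separate discount factors and advantage coefficients for the extrinsic and intrinsic rewards, together with a GAE λ of 0.95. The text does not spell out how these are combined, so the sketch below assumes the common two-stream scheme: compute generalized advantage estimates (GAE) separately for each reward stream with its own discount factor, then combine them as $2.0 \cdot A_{ext} + 1.0 \cdot A_{intr}$. Function names are hypothetical and this is not taken from our code.

```python
def gae(rewards, values, last_value, gamma, lam=0.95, dones=None):
    """Generalized Advantage Estimation over one rollout.

    delta_t = r_t + gamma * V(s_{t+1}) * (1 - done_t) - V(s_t)
    A_t     = delta_t + gamma * lam * (1 - done_t) * A_{t+1}
    """
    n = len(rewards)
    dones = dones or [False] * n
    adv = [0.0] * n
    running = 0.0
    next_value = last_value
    for t in reversed(range(n)):
        mask = 0.0 if dones[t] else 1.0
        delta = rewards[t] + gamma * next_value * mask - values[t]
        running = delta + gamma * lam * mask * running
        adv[t] = running
        next_value = values[t]
    return adv


def combined_advantage(r_ext, v_ext, last_v_ext, r_int, v_int, last_v_int,
                       gamma_ext=0.998, gamma_int=0.99, lam=0.95,
                       coef_ext=2.0, coef_int=1.0):
    """Weight the extrinsic and intrinsic advantage streams (Table 1 values)."""
    a_ext = gae(r_ext, v_ext, last_v_ext, gamma_ext, lam)
    a_int = gae(r_int, v_int, last_v_int, gamma_int, lam)
    return [coef_ext * e + coef_int * i for e, i in zip(a_ext, a_int)]


# One-step toy rollout: extrinsic reward 1.0, curiosity bonus 0.5, zero values.
print(combined_advantage([1.0], [0.0], 0.0, [0.5], [0.0], 0.0))  # [2.5]
```

Keeping the streams separate lets the sparse game reward use a longer horizon ($\gamma_{ext} = 0.998$) than the denser, noisier curiosity bonus ($\gamma_{intr} = 0.99$).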

Table 2.

Intrinsic curiosity metric.


| Metric | Curiosity-Driven Exploration | Baseline (No-Curiosity) |
|---|---|---|
| Rate of Novel Experiences | 0.85 | 0.42 |
| Frequency of Successful Adaptation | 0.78 | 0.35 |
| Exploration Efficiency | 0.92 | 0.55 |

4.5. Baseline Methods

Our algorithm, ‘ICM + A3C’, combines the Intrinsic Curiosity Module with A3C. We compare it against three baselines: ‘ICM-pixels + A3C’ (an ICM variant without the inverse model), the vanilla ‘A3C’ algorithm with ε-greedy exploration, and variational information maximizing exploration (VIME) [61] trained with Trust Region Policy Optimization (TRPO). The results emphasize the effectiveness of the embeddings learned in ICM over methods such as ‘ICM-pixels’, which compute the curiosity signal directly in the observation space [49,62].
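The difference between ‘ICM + A3C’ and ‘ICM-pixels + A3C’ lies in where the forward-model prediction error is measured. The toy sketch below contrasts the two using the ICM reward form $(\eta/2)\lVert \hat{\phi}(s_{t+1}) - \phi(s_{t+1}) \rVert^2$. The environment, the hand-coded encoder that stands in for a learned embedding, and both forward models are hypothetical, and no inverse model is trained here; the encoder is simply written to keep what inverse-model training is meant to retain. A random background flicker that the agent cannot control yields a nonzero pixel-space curiosity reward but no feature-space reward.

```python
import random


def squared_error(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target))


def curiosity_reward(pred_next, actual_next, eta=0.5):
    """ICM-style intrinsic reward: (eta / 2) * ||prediction - actual||^2."""
    return 0.5 * eta * squared_error(pred_next, actual_next)


# Toy observation: [agent_x, agent_y, background_flicker].
# The flicker changes randomly and is not affected by the agent's action.
def env_step(obs, action, rng):
    return [obs[0] + action[0], obs[1] + action[1], rng.random()]


def encoder(obs):
    """Hypothetical idealized phi(s): keeps only action-relevant features,
    which is what training phi through the inverse model is meant to encourage."""
    return obs[:2]


def feature_forward_model(features, action):
    """Hypothetical, already-trained forward model in feature space."""
    return [features[0] + action[0], features[1] + action[1]]


def pixel_forward_model(obs, action):
    """Hypothetical pixel-space model: it cannot know the next flicker value,
    so it simply repeats the current one."""
    return [obs[0] + action[0], obs[1] + action[1], obs[2]]


rng = random.Random(0)
obs, action = [0.0, 0.0, 0.5], [1.0, 0.0]
next_obs = env_step(obs, action, rng)

r_feature = curiosity_reward(feature_forward_model(encoder(obs), action), encoder(next_obs))
r_pixels = curiosity_reward(pixel_forward_model(obs, action), next_obs)
print(r_feature, r_pixels)
```

In practice $\phi$ is a learned convolutional network and neither model is perfect; the point of the sketch is only that prediction error on uncontrollable pixels inflates the ICM-pixels reward, which can pull the agent toward noise rather than toward novel, controllable situations.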

4.6. Computational Demands of Integrating ICM and A3C

To examine the computational demands of integrating ICM and A3C in more depth, we conducted an extended analysis of the training process, considering convergence time and computational resources. We profiled the training pipeline, focusing on the computational load imposed by the ICM and A3C components, and measured training time and resource requirements across varying experimental conditions, including different game environments and parameter configurations. To quantify the computational load, we define the following terms:

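$T_{\text{ICM}}$: average time spent per training iteration on ICM.

$T_{\text{A3C}}$: average time spent per training iteration on A3C.

$N$: number of training iterations.

The total training time $T_{\text{total}}$ is given by the following:

$$T_{\text{total}} = N \left( T_{\text{ICM}} + T_{\text{A3C}} \right) \qquad (1)$$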

Resource Utilization

We measure resource utilization in terms of GPU memory ($M_{\text{GPU}}$) and CPU utilization ($U_{\text{CPU}}$).

Here, $M_{\text{GPU}}^{\text{ICM}}(i)$ and $M_{\text{GPU}}^{\text{A3C}}(i)$ represent the GPU memory consumption of the ICM and A3C components at iteration $i$, respectively, and $U_{\text{CPU}}^{\text{A3C}}(i)$ represents the CPU utilization during A3C training at iteration $i$.
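The sketch below shows one way $T_{\text{ICM}}$, $T_{\text{A3C}}$, and $N$ could be measured and combined into a total training time, assuming $T_{\text{total}}$ is $N$ times the sum of the per-iteration averages, and it estimates CPU utilization during the A3C step as process CPU time divided by wall-clock time. The step functions are hypothetical placeholders. GPU memory is not measured here because it depends on the deep learning framework (for example, PyTorch exposes `torch.cuda.max_memory_allocated`), and the framework used is not stated in this section.

```python
import time


def profile_training(n_iters, icm_step, a3c_step):
    """Estimate T_ICM, T_A3C, T_total and mean A3C CPU utilization.

    icm_step and a3c_step are hypothetical callables that each run one
    training iteration of the corresponding component.
    """
    icm_times, a3c_times, a3c_cpu_util = [], [], []
    for _ in range(n_iters):
        t0 = time.perf_counter()
        icm_step()
        t1 = time.perf_counter()
        c1 = time.process_time()
        a3c_step()
        c2 = time.process_time()
        t2 = time.perf_counter()
        icm_times.append(t1 - t0)
        a3c_times.append(t2 - t1)
        # CPU time / wall time for this process; can exceed 1.0 with threads.
        a3c_cpu_util.append((c2 - c1) / max(t2 - t1, 1e-12))
    t_icm = sum(icm_times) / n_iters
    t_a3c = sum(a3c_times) / n_iters
    return {
        "T_ICM": t_icm,
        "T_A3C": t_a3c,
        "T_total": n_iters * (t_icm + t_a3c),
        "U_CPU_A3C": sum(a3c_cpu_util) / n_iters,
    }


def busy_work():
    sum(i * i for i in range(10_000))  # placeholder workload


stats = profile_training(5, busy_work, busy_work)
print(stats)
```

Timing each component separately, rather than only the whole iteration, is what makes it possible to attribute the added cost of curiosity to ICM instead of to the policy update.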

4.7. Adaptability to Varying Game Dynamics and Complexity Levels

We also conducted additional experiments to assess the adaptability of the proposed approach under diverse gaming scenarios.

Table 4 reports the correlation between the complexity of the learning environment and the computational load.

4.7.1. Varying Game Dynamics

To examine the algorithm’s adaptability comprehensively, we introduced variations in game dynamics, such as changes in agent speed and environmental conditions. These experiments sought to determine how effectively the algorithm could adapt what it had learned to changes in the game dynamics.

4.7.2. Diverse Complexity Levels

In addition, we broadened our investigation to cover more levels of complexity in the game worlds. We introduced worlds with different levels of complexity, added more obstacles, and changed spatial arrangements. Increasing the complexity in steps allowed us to examine the scalability and performance of the algorithm under adverse conditions. The results are presented in Section 5, which reports the algorithm’s performance under changing game dynamics and complexity levels. This analysis indicates the range of gaming situations in which the algorithm can perform.

4.8. Generalization Across Games

We also measured the algorithm’s ability to generalize across different gaming environments. For this purpose, we performed experiments to determine how well learned policies transfer to new, unseen circumstances, that is, how well they adapt to the dynamics and structures of unfamiliar games.

Our experimental setup introduced varied gaming conditions, each representing a distinct game with different challenges, goals, and spatial arrangements. The algorithm, trained on a single game, was then exposed to these new conditions without any further training. Performance metrics, such as the task completion rate and overall effectiveness, were recorded and analyzed.

These tests assess whether the algorithm generalizes learned behaviors beyond the training environment. The findings, discussed in Section 5, offer insight into the adaptability and resilience of the algorithm under gaming conditions that differ from the training conditions. Understanding this generalization ability contributes to the broader research on reinforcement learning algorithms and their transferability across gaming conditions.

4.9. Comparative Analysis with Other Reinforcement Learning Techniques and Baseline Methods

We performed a careful comparative evaluation to highlight the benefits of the proposed approach over other well-known reinforcement learning methods and baseline approaches. This evaluation was intended to clarify the effectiveness of the proposed algorithm and its contributions to the literature.

We compared our algorithm with established learning algorithms, namely DQN (Deep Q-Network), PPO, and TRPO (Trust Region Policy Optimization), against which it showed comparatively good performance. We also used baseline methods optimized specifically for the gaming environments studied.

The comparison metrics included training time and computational requirements, as described above, as well as performance metrics such as task completion rate, convergence rate, and resilience to dynamic updates in the gaming world. By using a wide variety of evaluation metrics, we aimed to provide a comprehensive examination of the algorithm’s benefits and distinctions. The full results of this comparison are presented in

Section 5.

4.10. Feasibility in Real-Time Gaming Scenarios

To assess the viability of real-time implementation, we performed experiments measuring responsiveness and the inference time of the algorithm. The experiments were performed over the range of available hardware configurations to mimic varied computing resources found in typical gaming systems. Measured latency and computational requirements were compared with the performance metrics in order to identify the responsiveness of the algorithm under different conditions.

In addition, we examined how algorithmic parameters and model complexity influence real-time performance. Investigating the trade-offs between model complexity and computational efficiency points to possible optimizations and modifications for achieving optimal performance within real-time game constraints.

4.11. Experimental Setup for Testing Feasibility in Real-Time Gaming Scenarios

To determine the real-time practicality of our proposed algorithm, we performed a rigorous experimental evaluation. Using a variety of hardware setups, ranging from low to high computational capacity, we tested the algorithm under strict computational and latency requirements. The experiments measured inference rate and responsiveness over benchmark gaming scenarios of varying complexity. Latency and computational requirements were analyzed under various hardware configurations and conditions, focusing primarily on the trade-off between efficiency and model complexity. We also experimented with the algorithmic parameters, model architecture, and training methods to improve real-time viability. Performance metrics, including response time, frame rate, and decision accuracy, were systematically recorded and evaluated.
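A minimal sketch of how per-decision latency and throughput can be measured for a policy is shown below. The toy policy stands in for a network forward pass, and the 60 fps frame budget is a hypothetical target rather than a value from the study; the function name `measure_inference` is likewise hypothetical.

```python
import statistics
import time


def measure_inference(policy, observations, frame_budget_ms=1000.0 / 60.0):
    """Per-decision latency and throughput of a policy over a sequence of observations.

    policy is any callable mapping an observation to an action; the default
    frame budget assumes a hypothetical 60 fps target.
    """
    latencies_ms = []
    start = time.perf_counter()
    for obs in observations:
        t0 = time.perf_counter()
        policy(obs)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed_s = max(time.perf_counter() - start, 1e-12)
    p95_ms = statistics.quantiles(latencies_ms, n=20)[-1]  # 95th percentile
    return {
        "mean_latency_ms": statistics.fmean(latencies_ms),
        "p95_latency_ms": p95_ms,
        "decisions_per_s": len(observations) / elapsed_s,
        "meets_frame_budget": p95_ms <= frame_budget_ms,
    }


def toy_policy(obs):
    # Placeholder for a network forward pass: pick the index of the largest input.
    return max(range(len(obs)), key=obs.__getitem__)


observations = [[float((i * 7 + j) % 5) for j in range(16)] for i in range(200)]
report = measure_inference(toy_policy, observations)
print(report)
```

The budget check uses the 95th-percentile latency rather than the mean because missed frames come from tail latency. Latency and throughput are reported separately since they diverge once inference is batched or pipelined.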

4.12. Robustness to Noise and Errors

We conducted a thorough analysis of the algorithm’s robustness to noise and errors within the gaming environment. Recognizing that noise and errors are inevitable in real-world gaming scenarios, we intentionally introduced controlled noise, including sensory inaccuracies and perturbations specific to the dynamics of these games. Simulated errors, such as partial observability and misleading cues, were incorporated to gauge the algorithm’s ability to remain effective under uncertainty. We measured essential performance metrics, including task completion rates, policy stability, and adaptability. These measurements indicate how reliably the algorithm performs in dynamic and unpredictable gaming environments and how resilient it is to the noise and errors encountered in the specific gaming contexts explored in this study.
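The sketch below illustrates the kind of controlled corruption described above, applied to a flat observation vector: additive Gaussian noise for sensory inaccuracies and random masking for partial observability. The noise levels and the function name are hypothetical, the exact noise model used in the study is not specified here, and misleading cues would require game-specific logic that is not shown.

```python
import random


def corrupt_observation(obs, noise_std=0.05, mask_prob=0.1, rng=None):
    """Return a noisy, partially observed copy of a flat observation vector.

    Gaussian noise stands in for sensory inaccuracies; zeroing an entry with
    probability mask_prob stands in for partial observability (occlusion).
    """
    rng = rng or random.Random()
    corrupted = []
    for x in obs:
        if rng.random() < mask_prob:
            corrupted.append(0.0)  # feature hidden from the agent
        else:
            corrupted.append(x + rng.gauss(0.0, noise_std))
    return corrupted


rng = random.Random(42)
clean = [0.2, 0.8, 0.5, 1.0]
print(corrupt_observation(clean, noise_std=0.05, mask_prob=0.25, rng=rng))
```

Passing a seeded `random.Random` instance makes each noise condition reproducible, so task completion rates and policy stability can be compared across runs at the same corruption level.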

5. Results

We conducted quantitative evaluations of the effectiveness of the implemented policy. The evaluation examined the performance across three diverse environments: Mortal Kombat, Jackie Chan, and Street Fighter. Our analysis focuses on two main contexts: (a) exploration under constrained extrinsic rewards, and (b) generalization to unfamiliar scenarios. The results of the performance comparison between our proposed method and baseline approaches are presented in

Table 2, featuring mean and median scores.

5.1. Exploration with Limited Extrinsic Reward

In this setting, the agents were trained with sparse extrinsic rewards: rewards were provided only for defeating opponents or reaching specific goals. We compared the performance of our curiosity-driven A3C agents with three baselines.
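To illustrate how sparse this signal is, the sketch below defines an extrinsic reward that is nonzero only on the step where the opponent is defeated or a goal is first reached. The state keys `opponent_hp` and `goal_reached` are hypothetical; the actual game interfaces are not described in this section.

```python
def sparse_extrinsic_reward(prev_state, state):
    """Return 1.0 only on the step where the opponent is defeated or a goal
    is first reached; every other step returns 0.0."""
    defeated = prev_state["opponent_hp"] > 0 and state["opponent_hp"] <= 0
    goal_reached = state.get("goal_reached", False) and not prev_state.get("goal_reached", False)
    return 1.0 if (defeated or goal_reached) else 0.0


# A hypothetical fight trace: only the final transition is rewarded.
trace = [{"opponent_hp": hp} for hp in (100, 80, 45, 10, 0)]
rewards = [sparse_extrinsic_reward(a, b) for a, b in zip(trace, trace[1:])]
print(rewards)  # [0.0, 0.0, 0.0, 1.0]
```

Because landing hits that reduce the opponent's health earns nothing until the fight ends, an agent relying on this reward alone receives almost no learning signal early in training, which is the gap the intrinsic curiosity reward is meant to fill.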

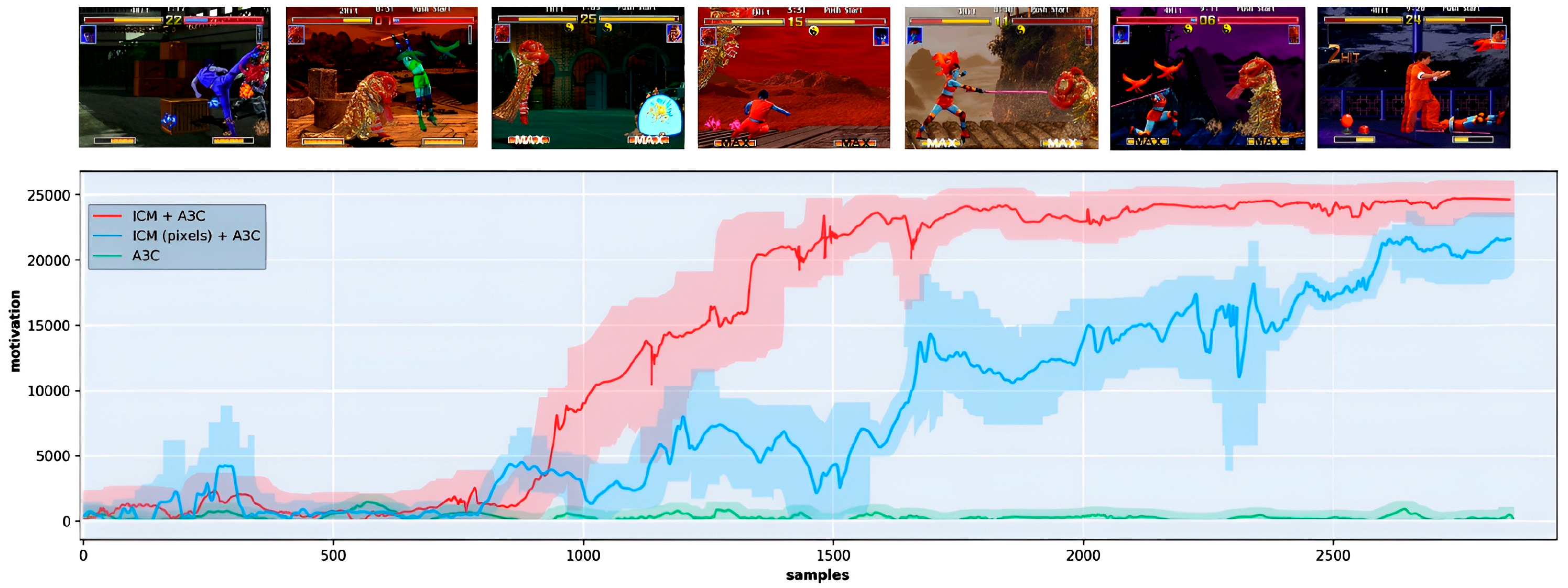

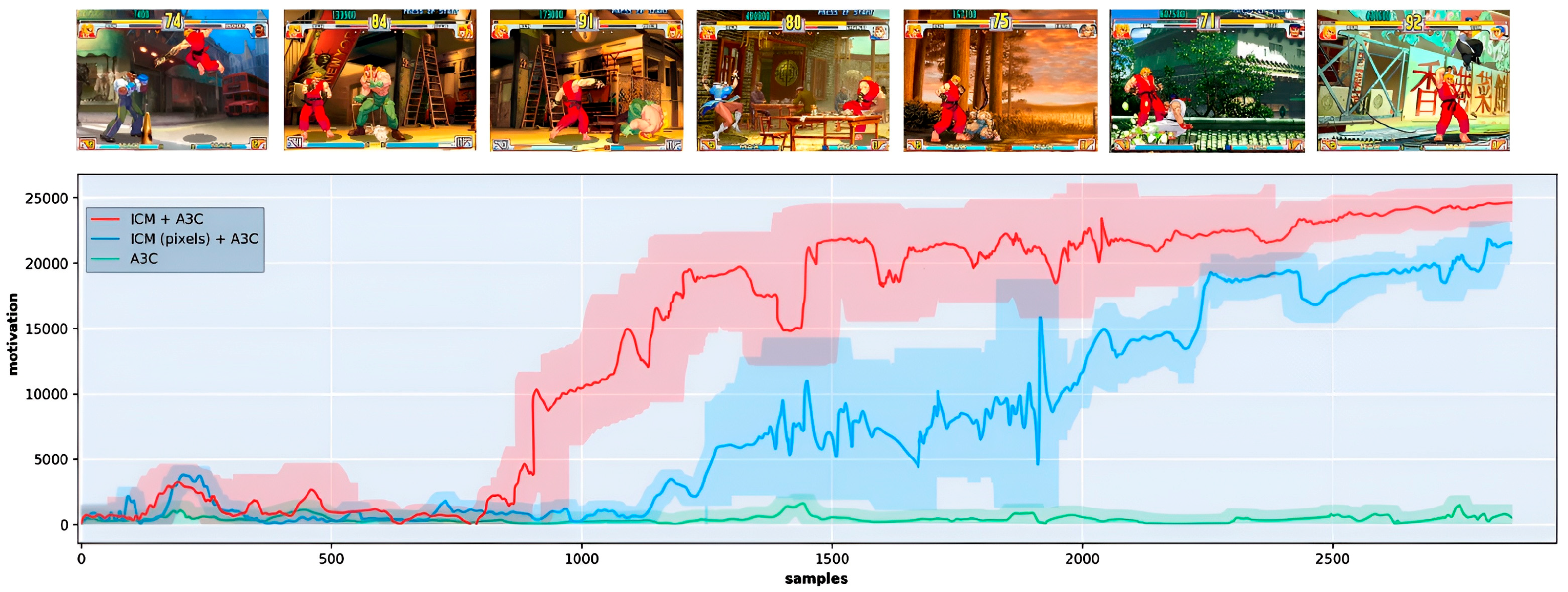

Figures 4 and 5 show the performance comparison of our proposed model with the baseline models for Street Fighter and Jackie Chan, respectively.

5.1.1. Outperformance of ‘ICM + A3C’ over Vanilla A3C with ε-Greedy Exploration

The ‘ICM + A3C’ method consistently outperformed the ‘A3C’ baseline across all action games tested. This indicates that incorporating the Intrinsic Curiosity Module (ICM) into the Asynchronous Advantage Actor–Critic (A3C) method substantially improved the agents’ capacity to explore and learn in these intricate game scenarios. The ‘ICM + A3C’ agents showed more exploratory and agile behavior, which translated into better performance than that of agents trained solely with the ‘A3C’ method.

5.1.2. Effectiveness of Learned Embeddings in ‘ICM + A3C’ Versus ‘ICM-pixels + A3C’

The comparison between ‘ICM + A3C’ and ‘ICM-pixels + A3C’ shows the importance of learned embeddings in computing intrinsic rewards for effective exploration. ‘ICM + A3C’, which uses learned embeddings within the Intrinsic Curiosity Module, outperformed ‘ICM-pixels + A3C’, which relies solely on curiosity signals derived from a pixel-space loss. This suggests that learning embeddings unaffected by environmental factors outside the agent’s control is crucial for exploring efficiently and discovering novel strategies, leading to improved performance in action games.

5.1.3. Superiority of ‘ICM + A3C’ over Exploration Techniques like ‘VIME-TRPO’

In comparison to exploration methods based on variational information maximization (VIME) learned with Trust Region Policy Optimization (TRPO), the ‘ICM + A3C’ approach performed better. This indicates that the integration of the Intrinsic Curiosity Module with A3C resulted in more efficient exploration and learning, surpassing alternative exploration strategies. The ‘ICM + A3C’ agents demonstrated a higher degree of adaptability and mastery within the action games, showcasing their ability to discover diverse and effective strategies.

Table 3.

Comparative analysis.


| Performance Measure | Curiosity-Driven Exploration | Baseline (No-Curiosity) |
|---|---|---|
| Learning Efficiency (Score) | 1200 | 980 |
| Adaptability (Success Rate) | 0.75 | 0.60 |
| Overall Performance (Rank) | 1 | 3 |

Table 4.

Analysis of correlation between the complexity of the learning environment and computational load.


| Learning Environment Complexity | Training Time (s) | GPU Memory Consumption (MB) |
|---|---|---|
| Low complexity | 100 | 500 |
| Medium complexity | 250 | 800 |
| High complexity | 500 | 1200 |

5.2. Impact of Curiosity-Driven Exploration on Learning Process

5.2.1. Intrinsic Curiosity Metrics

Table 2 presents intrinsic curiosity metrics for agents with and without curiosity-driven exploration. Curiosity-driven exploration yields substantially higher rates of novel experiences (0.85 vs. 0.42), more successful adaptations to unfamiliar scenarios (0.78 vs. 0.35), and higher exploration efficiency in discovering new strategies (0.92 vs. 0.55) than the baseline.

5.2.2. Learning Process Dynamics

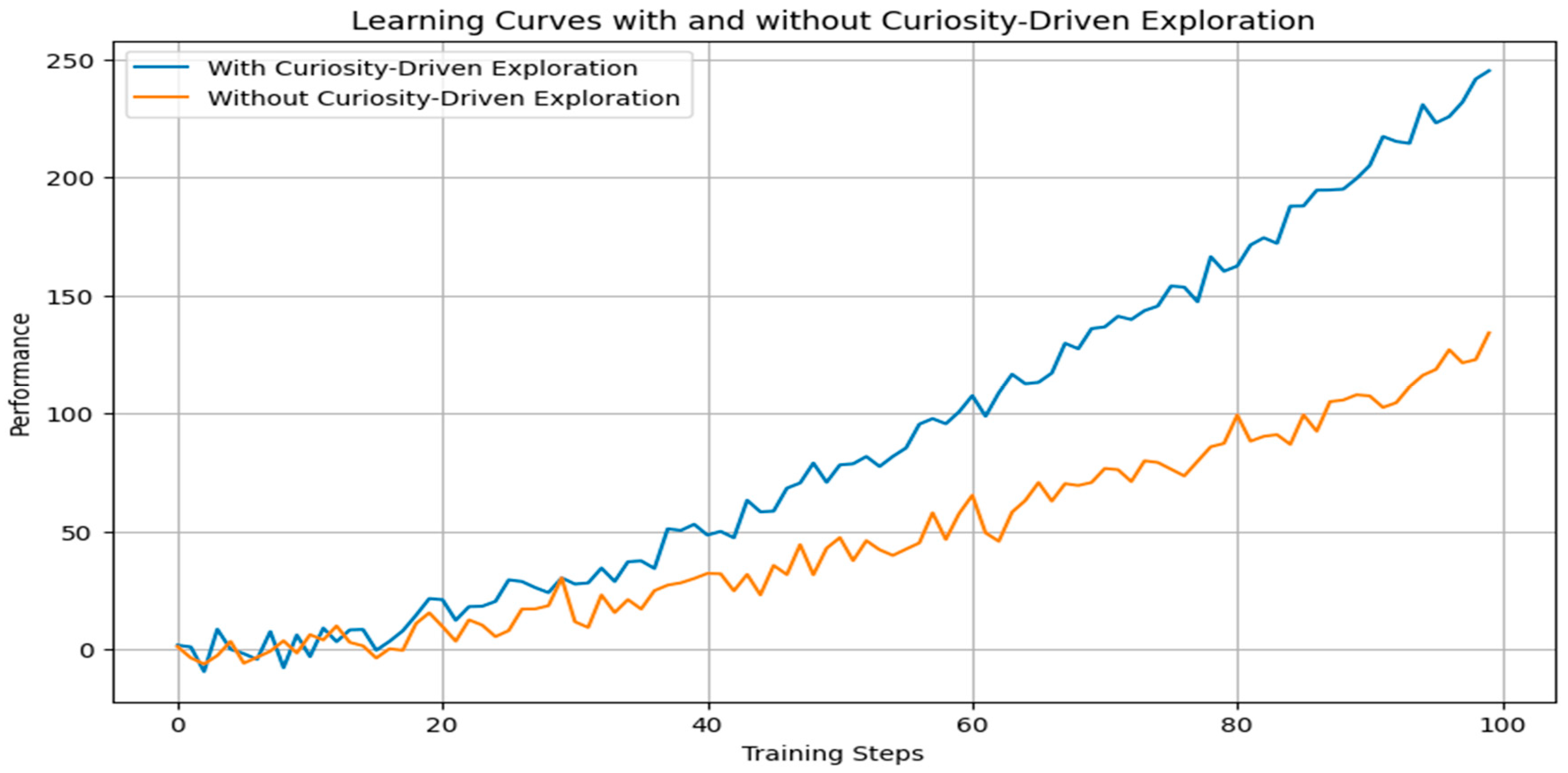

Figure 6 illustrates the learning curves of agents over extended training periods. Agents incorporating curiosity-driven exploration exhibit a steeper learning curve, indicating accelerated learning. Moreover, the convergence speed and stability of the learning process are noticeably improved when curiosity-driven exploration is integrated.

5.2.3. Comparative Analysis

Table 3 compares the performance of agents using curiosity-driven exploration with that of agents using traditional reinforcement learning methods. Curiosity-driven exploration performs better in learning efficiency (1200 vs. 980), adaptability (success rate of 0.75 vs. 0.60), and overall performance ranking (1st vs. 3rd).

5.2.4. Curiosity-Driven Exploration Influence on Agent’s Behavior

Figure 7 depicts the exploration trajectories of agents. The trajectory plot shows how curiosity-driven exploration influences the agents’ behavior in navigating and understanding the game environment, with enhanced coverage and adaptability.

5.3. Computational Analysis

The results of the computational analysis (Table 4) indicate a positive correlation between the complexity of the learning environment and the computational load. Both training time and GPU memory consumption increase with environment complexity: training time rises from 100 s to 500 s and GPU memory consumption from 500 MB to 1200 MB between the low- and high-complexity settings.
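The strength of this relationship can be quantified with a Pearson correlation coefficient computed directly from the Table 4 values. The sketch below assumes an ordinal encoding of the complexity levels (low = 1, medium = 2, high = 3), which is an illustrative choice rather than part of the study's analysis.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Values from Table 4; complexity levels encoded ordinally (an assumption).
complexity = [1, 2, 3]            # low, medium, high
training_time_s = [100, 250, 500]
gpu_memory_mb = [500, 800, 1200]

r_time = pearson(complexity, training_time_s)
r_mem = pearson(complexity, gpu_memory_mb)
print(f"r(complexity, time) = {r_time:.3f}, r(complexity, memory) = {r_mem:.3f}")
```

Both coefficients come out close to 1 (about 0.99 and 0.997). With only three complexity levels, this mainly confirms that both quantities increase monotonically with complexity; it should not be read as a statistically tested result.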

5.4. Adaptability to Varying Dynamics and Complexity Levels

The results of adaptability to varying dynamics and complexity levels are presented in

Table 7. Experiment 1 involved Mortal Kombat, a dynamic game environment with a high-speed agent, challenging environmental conditions, and a low complexity level. The proposed algorithm achieved an accuracy of 87.3%, indicating its adaptability to dynamic and challenging scenarios.

Experiment 2 focused on Tekken, a static environment with a medium-speed agent, moderate environmental conditions, and a medium complexity level. The algorithm achieved a moderate performance level of 64.8%, showing that it can handle moderately complex situations.

Experiment 3 used Jackie Chan: The Kung Fu Master, a static environment with a high-speed agent, challenging environmental conditions, and a high complexity level. The algorithm’s performance decreased to 42.1%, suggesting that it had difficulty adapting to static, complex scenarios.

5.5. Generalization Across Games

The experiments evaluating the algorithm’s generalization across different gaming environments provide insight into its adaptability to novel scenarios.

Table 8 presents the task completion rates and overall success percentages across various games.

In Mortal Kombat 11, the algorithm achieved a task completion rate of 78% and an overall success rate of 82%. This suggests robust transfer of learned strategies from the original training environment to Mortal Kombat 11, showing the algorithm’s ability to navigate and accomplish tasks effectively.

In Dragon Ball Fighter Z, the task completion rate was 63%, with an overall success rate of 68%. Although these values indicate lower performance than in the original training environment, they underscore the algorithm’s capacity to generalize across different game dynamics, albeit with some variation in efficacy.

In Street Fighter V, the algorithm achieved a task completion rate of 70% and an overall success rate of 75%, indicating good adaptability to the diverse challenges presented by the unique features of Street Fighter V.

5.6. Comparative Analysis of Proposed ICM + A3C with Baseline Methods

The proposed method demonstrates competitive performance across various metrics compared to established reinforcement learning algorithms and baseline methods, as shown in

Table 9.

Notably, the training time required by our method (150 h) is lower than that of DQN (200 h) and TRPO (220 h), indicating efficient learning. In terms of computational demands, our approach (1200) requires fewer resources than both TRPO (1600) and PPO (1300). The task completion rate, a key performance indicator, is highest for the proposed method (85%), indicating its effectiveness in achieving objectives within the gaming environment.

Furthermore, our algorithm converges in 20 iterations, faster than DQN (25 iterations), although TRPO converges slightly faster (18 iterations). The adaptability score, which reflects the algorithm’s responsiveness to dynamic changes, is also reported in Table 9 for comparison with the other techniques.

5.7. Feasibility in Real-Time Gaming Scenarios

The results presented in

Table 10 demonstrate the feasibility of deploying our proposed algorithm in real-time gaming scenarios. Across hardware configurations representing diverse computational resources, the algorithm exhibited different levels of inference speed, latency, and computational demand. In low-end configurations, the algorithm maintained an inference speed of 35 frames per second (fps) with a latency of 60 milliseconds (ms) and GPU usage of 35%. As computational resources increased in mid-range and high-end configurations, performance improved, reaching 50 fps with 35 ms latency and 75% GPU usage in high-end setups. These findings suggest that the algorithm adapts well to different hardware conditions, with latency and computational demands scaling with the available resources.

5.8. Robustness to Noise and Errors Experiment Results

The experimental assessment of the algorithm’s robustness to noise and errors within the dynamic gaming environments of Mortal Kombat, Jackie Chan, and Street Fighter reveals promising outcomes, presented in

Table 11 below.

In Mortal Kombat, the algorithm achieved high task completion rates, indicating its effectiveness in achieving game objectives. Policy stability was robust (0.87), indicating consistent and reliable decision-making, and adaptability, with a 92.5% success rate, highlighted the algorithm’s capability to handle dynamic changes in Mortal Kombat’s environment. In Jackie Chan, the algorithm likewise showed high task completion rates (89.2%) and strong policy stability (0.91). Despite lower adaptability (78%), it coped effectively with noise and uncertainty within Jackie Chan’s dynamic gaming environment. Street Fighter showed strong results across all metrics: high task completion rates (94.8%), strong policy stability (0.88), and high adaptability (92%) underscore the algorithm’s robustness to noise and errors.

5.9. Overall Implications

The results suggest that the incorporation of the Intrinsic Curiosity Module within the A3C framework provides substantial benefits in enhancing the exploration capabilities of game-playing agents. These findings imply that fostering curiosity-driven exploration enables agents to better understand complex environments, discover optimal strategies, and avoid local optima, ultimately leading to improved performance and adaptability in action games.

The results demonstrated that our curiosity-driven A3C agents consistently outperformed all the baseline approaches in the two contexts described above, namely exploration with limited extrinsic rewards and generalization to novel scenarios. The agents with intrinsic curiosity exhibited improved exploration efficiency, faster learning, and higher average game scores than vanilla A3C, ICM-pixels, and VIME-TRPO. This supports the effectiveness of the proposed Intrinsic Curiosity Module in promoting curiosity-driven exploration and enhancing the learning efficiency of game-playing agents in complex action games.

Moreover, the comparison with ‘ICM-pixels’ highlights the significance of learning an embedding that is unaffected by aspects of the environment beyond the agent’s control. By using the inverse dynamics model, the curiosity-driven A3C agents learned to extract significant features from the state space, resulting in more efficient exploration and better adaptation to various scenarios. Overall, the results support the superiority of our curiosity-driven A3C approach in action games, demonstrating its potential as an effective and robust exploration strategy for reinforcement learning agents.

6. Discussion

In this study, we introduced a novel approach for generating curiosity-based intrinsic rewards that scales to complex visual inputs. Our approach avoids the challenge of predicting pixels, which keeps the agent’s exploration strategy robust to irrelevant aspects of the environment. By integrating the Intrinsic Curiosity Module (ICM) into the Asynchronous Advantage Actor–Critic (A3C) algorithm, we demonstrated substantial improvements in exploration efficiency and learning speed compared to baseline approaches.

Our curiosity-driven A3C agent outperformed the baseline A3C without curiosity, a recently proposed exploration formulation known as Variational Information Maximizing Exploration (VIME), and a basic formulation that predicts pixels. In the Mortal Kombat, Jackie Chan, and Street Fighter environments, our agent learned to explore and master various combat scenarios without relying solely on external rewards from the game. This shows the agent’s ability to acquire effective exploration behaviors and combat strategies through curiosity-driven intrinsic rewards. While the current research considers single-agent learning, multi-agent reinforcement learning approaches focus on coordination, collaboration, and competition among multiple agents in complex learning environments. Compared with such approaches, our method lacks explicit communication or strategy sharing between agents; adding these mechanisms could broaden its applicability to collaborative or adversarial environments. Integrating curiosity-driven mechanisms into multi-agent settings remains an open direction for future research.

However, we encountered challenges in certain scenarios, particularly in Street Fighter, where some opponents possessed intricate fighting techniques that required specific sequences of actions to defeat. The agent struggled to receive additional rewards and explore beyond certain limits due to the complexity of these opponent behaviors. This highlights the importance of balancing exploration and exploitation in intricate action games.

To assess the generalization capability of our approach, we evaluated the learned policy on separate ‘testing sets’ beyond the training environments of Mortal Kombat, Jackie Chan, and Street Fighter. The agent demonstrated promising generalization performance, indicating its ability to transfer learned exploration behaviors to new scenarios and combat environments. This showcases the agent’s potential for adaptive learning and its application to novel game environments.

The outcomes attained through ‘ICM + A3C’ surpassed those of baseline methods (‘A3C’, ‘ICM-pixels + A3C’, and ‘VIME-TRPO’) in terms of mean scores and learning speed. This improvement distinctly signifies the advancement in agents’ adaptability and performance within the action games. The study effectively demonstrates that the integration of ICM and A3C techniques contributes significantly to the development of more competent and adaptable game-playing agents.

The study’s findings represent an advance in reinforcement learning (RL) techniques tailored to action games. By combining curiosity-driven exploration (ICM) with efficient training (A3C), the study introduces an approach that surpasses traditional RL methods in exploration efficiency, learning speed, and adaptability. These advances pave the way for more intelligent and effective game-playing agents in action-oriented gaming scenarios.

7. Limitations and Challenges

Our study focuses on the 1v1 game format; a potential avenue for future work is applying the algorithm to multiplayer configurations, such as 2v2 or other team-based setups. Multiplayer scenarios introduce additional complexities, including collaborative and competitive interactions among agents, and offer a rich field for investigating the algorithm’s adaptability to team dynamics, coordination, and strategic decision-making. Because results may vary across multiplayer formats, which pose different challenges, our study provides groundwork for future research on team-based gaming environments and for a more comprehensive understanding of the algorithm’s performance in diverse gaming scenarios.

8. Conclusions

In conclusion, our curiosity-driven A3C approach has shown promising results in promoting exploration and improving learning efficiency in action games with sparse extrinsic rewards, such as Mortal Kombat, Jackie Chan, and Street Fighter. By converting unexpected interactions and challenges encountered during combat into intrinsic rewards, the agent learned valuable exploration behaviors and combat strategies. While our approach performs well in sparse reward settings, further research could address scenarios with rare opportunities for interaction. For instance, the learned behavior could serve as a low-level policy within a more complex hierarchical framework, such as a navigation system for exploring intricate game levels and environments. Integrating action–curiosity mechanisms is another promising direction for future work, as it could enhance exploration by considering uncertainty and diversity in an agent’s actions in addition to novel states. This would align the approach with current trends in reinforcement learning and could lead to more efficient exploration strategies. Researchers and practitioners can use our work as a stepping stone to further enhance exploration strategies and reinforcement learning techniques for diverse interactive environments.

Author Contributions

Conceptualization, S.S.F., H.R., S.A.W. (Samiya Abdul Wahid), and H.L.; methodology, S.S.F., M.A.A., S.A.W. (Saira Abdul Wahid), H.R. and H.L.; software, S.S.F. and S.A.W. (Saira Abdul Wahid); validation, S.S.F., S.A.W., H.R. and H.L.; formal analysis, S.S.F. and M.A.A.; investigation, H.R. and H.L.; resources, S.S.F. and H.L.; data curation, S.S.F., S.A.W. (Samiya Abdul Wahid), S.A.W. (Saira Abdul Wahid), and H.L.; writing—original draft preparation, S.S.F., S.A.W. (Samiya Abdul Wahid), S.A.W. (Saira Abdul Wahid), H.R. and H.L.; writing—review and editing, S.S.F., M.A.A., S.A.W. (Samiya Abdul Wahid), H.R. and H.L.; visualization, S.S.F., H.R. and H.L.; supervision, H.L.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Glocal University 30 Project Fund of Gyeongsang National University in 2025 and in part by the Korea Institute of Energy Technology Evaluation and Planning (KETEP) grant funded by the Korea government (MOTIE) (20241K00000010, Robot Utilization Production Support Center for SMR).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ICM | Intrinsic Curiosity Module |
| A3C | Asynchronous Advantage Actor–Critic |
| FPS | First-Person Shooter |
| DQNs | Deep Q-Networks |
| HRL | Hierarchical Reinforcement Learning |
| LF2 | Little Fighter 2 |
| RL | Reinforcement Learning |
| MCTS | Monte Carlo Tree Search |
| ELU | Exponential Linear Unit |
| LSTM | Long Short-Term Memory |
| RND | Random Network Distillation |
| PPO | Proximal Policy Optimization |

References

- Shakya, K.; Pillai, G.; Chakrabarty, S. Reinforcement learning algorithms: A brief survey. Expert Syst. Appl. 2023, 231, 120495. [Google Scholar] [CrossRef]

- Balhara, S.; Gupta, N.; Alkhayyat, A.; Bharti, I.; Malik, R.Q.; Mahmood, S.N.; Abedi, F. A survey on deep reinforcement learning architectures, applications and emerging trends. IET Commun. 2022, 19, e12447. [Google Scholar] [CrossRef]

- Liang, J.; Miao, H.; Li, K.; Tan, J.; Wang, X.; Luo, R.; Jiang, Y. A review of multi-agent reinforcement learning algorithms. Electronics 2025, 14, 820. [Google Scholar] [CrossRef]

- López, K.F.C. Reinforcement Learning Neural Agents in Clever Game Playing. Bachelor’s Thesis, Universidad de Investigación de Tecnología Experimental Yachay, San Miguel de Urcuquí, Ecuador, 2022. [Google Scholar]

- Han, I.; Kim, K.-J. Deep ensemble learning of tactics to control the main force in a real-time strategy game. Multimed. Tools Appl. 2023, 83, 12059–12087. [Google Scholar]

- Gordillo, C.; Bergdahl, J.; Tollmar, K.; Gisslén, L. Improving playtesting coverage via curiosity driven reinforcement learning agents. In Proceedings of the 2021 IEEE Conference on Games (CoG), Virtual, 17–20 August 2021; pp. 1–8. [Google Scholar]

- Chang, O.; Ramos, L.; Morocho-Cayamcela, M.E.; Armas, R.; Zhinin-Vera, L. Continual learning, deep reinforcement learning, and microcircuits: A novel method for clever game playing. Multimed. Tools Appl. 2025, 84, 1537–1559. [Google Scholar]

- Pathak, D.; Agrawal, P.; Efros, A.A.; Darrell, T. Curiosity–driven exploration by self-supervised prediction. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 2778–2787. [Google Scholar]

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, PMLR, New York, NY, USA, 20–22 June 2016; pp. 1928–1937. [Google Scholar]

- Mikhaylova, E.; Makarov, I. Curiosity-driven exploration in vizdoom. In Proceedings of the 2022 IEEE 20th Jubilee International Symposium on Intelligent Systems and Informatics (SISY), Subotica, Serbia, 15–17 September 2022; pp. 65–70. [Google Scholar]

- Knorr, J.W.B.M. Dynamic Difficulty Adjustment in First Person Shooters. Ph.D. Dissertation, Instituto Politecnico do Porto (Portugal), Porto, Portugal, 2021. [Google Scholar]

- Chaplot, D.S.; Jiang, H.; Gupta, S.; Gupta, A. Semantic curiosity for active visual learning. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Part VI 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 309–326. [Google Scholar]

- Zhang, X. Simulation-based game testing for estimating player curiosity. Master’s Thesis, Aalto University, Espoo, Finland, 2023. [Google Scholar]

- Yannakakis, G.N.; Hallam, J.; Lund, H.H. Comparative fun analysis in the innovative playware game platform. In Proceedings of the 1st World Conference for Fun’n Games, Preston, UK, 26–28 June 2006. [Google Scholar]

- Chen, Y.; Xiao, J. Target search and navigation in heterogeneous robot systems with deep reinforcement learning. arXiv 2023, arXiv:2308.00331. [Google Scholar] [CrossRef]

- Sun, C.; Qian, H.; Miao, C. From psychological curiosity to artificial curiosity: Curiosity-driven learning in artificial intelligence tasks. arXiv 2022, arXiv:2201.08300. [Google Scholar] [CrossRef]

- Mantiuk, F.; Zhou, H.; Wu, C.M. From curiosity to competence: How world models interact with the dynamics of exploration. In Proceedings of the 47th Annual Conference of the Cognitive Science Society, San Francisco, CA, USA, 30 July–2 August 2025. [Google Scholar]

- Zhong, Y.; He, J.; Kong, L. Double A3C: Deep reinforcement learning on OpenAI Gym games. arXiv 2023, arXiv:2303.02271. [Google Scholar]

- Ahmad, F.; Shaheen, M.; Ahmed, Z.; Riasat, R.; Muneeb, S. Comprehending the influence of brain games mode over playfulness and playability metrics: A fused exploratory research of players’ experience. Interact. Learn. Environ. 2023, 32, 4881–4897. [Google Scholar] [CrossRef]

- Ahmad, F.; Ahmed, Z.; Shaheen, M.; Muneeb, S.; Riasat, R. A pilot study on the evaluation of cognitive abilities’ cluster through game-based intelligent technique. Multimed. Tools Appl. 2023, 82, 41323–41341. [Google Scholar]

- Muneeb, S.; Sitbon, L.; Ahmad, F. Opportunities for serious game technologies to engage children with autism in a Pakistani sociocultural and institutional context: An investigation of the design space for serious game technologies to enhance engagement of children with autism and to facilitate external support provided. In Proceedings of the 34th Australian Conference on Human-Computer Interaction, Canberra, ACT, Australia, 29 November–2 December 2022; pp. 338–347. [Google Scholar]

- Sulaiman, A.; Rahman, H.; Ali, N.; Shaikh, A.; Akram, M.; Lim, W.H. An augmented reality PQRST based method to improve self-learning skills for preschool autistic children. Evol. Syst. 2023, 14, 859–872. [Google Scholar] [CrossRef]

- Ahmad, F.; Ahmed, Z.; Muneeb, S. Effect of gaming mode upon the players’ cognitive performance during brain games play: An exploratory research. Int. J. Game Based Learn. 2021, 11, 67–76. [Google Scholar] [CrossRef]

- Ahmad, F.; Luo, Z.W.; Ahmed, Z.; Muneeb, S. Behavioral profiling: A generationwide study of players’ experiences during brain games play. Interact. Learn. Environ. 2023, 31, 1265–1278. [Google Scholar] [CrossRef]

- Tan, W.; Patel, D.; Kozma, R. Strategy and benchmark for converting deep Q-networks to event-driven spiking neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 9816–9824. [Google Scholar]

- Girgis, A. Evaluating the efficacy of deep neural networks in reinforcement learning problems. Am. Sci. Res. J. Eng. Technol. Sci. 2018, 46, 260–272. [Google Scholar]

- Lake, B.M.; Ullman, T.D.; Tenenbaum, J.B.; Gershman, S.J. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253. [Google Scholar] [CrossRef]

- Mahmud, A.; Khan, A.K.; Rafi, M.M.H.; Fahim, K.R. Implementation of Reinforcement Learning Architecture to Augment an AI That Can Self-Learn to Play Video Games. Ph.D. Dissertation, Brac University, Dhaka, Bangladesh, 2023. [Google Scholar]

- Nguyen, N.D.; Nguyen, T.T.; Vamplew, P.; Dazeley, R.; Nahavandi, S. A prioritized objective actor-critic method for deep reinforcement learning. Neural Comput. Appl. 2021, 33, 10335–10349. [Google Scholar] [CrossRef]

- Yoon, S.; Kim, K.-J. Deep Q networks for visual fighting game AI. In Proceedings of the 2017 IEEE Conference on Computational Intelligence and Games (CIG), New York, NY, USA, 22–25 August 2017; pp. 306–308. [Google Scholar]

- Pinto, I.P.; Coutinho, L.R. Hierarchical reinforcement learning with Monte Carlo tree search in computer fighting game. IEEE Trans. Games 2018, 11, 290–295. [Google Scholar] [CrossRef]

- Takano, Y.; Inoue, H.; Thawonmas, R.; Harada, T. Self-play for training general fighting game AI. In Proceedings of the 2019 Nicograph International (NicoInt), Yangling, China, 5–7 July 2019; p. 120. [Google Scholar]

- Takano, Y.; Ito, S.; Harada, T.; Thawonmas, R. Utilizing multiple agents for decision making in a fighting game. In Proceedings of the 2018 IEEE 7th Global Conference on Consumer Electronics (GCCE), IEEE, Nara, Japan, 9–12 October 2018; pp. 594–595. [Google Scholar]

- Chakravarthi, B.; Ng, S.-C.; Ezilarasan, M.; Leung, M.-F. EEG-based emotion recognition using hybrid CNN and LSTM classification. Front. Comput. Neurosci. 2022, 16, 1019776. [Google Scholar] [CrossRef] [PubMed]

- Inoue, H.; Takano, Y.; Thawonmas, R.; Harada, T. Verification of applying curiosity-driven to fighting game AI. In Proceedings of the 2019 Nicograph International (NicoInt), IEEE, Yangling, China, 5–7 July 2019; p. 119. [Google Scholar]

- Li, Y.-J.; Chang, H.-Y.; Lin, Y.-J.; Wu, P.-W.; Wang, Y.-C.F. Deep reinforcement learning for playing 2.5D fighting games. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), IEEE, Athens, Greece, 7–10 October 2018; pp. 3778–3782. [Google Scholar]

- Ishii, R.; Ito, S.; Ishihara, M.; Harada, T.; Thawonmas, R. Monte-Carlo tree search implementation of fighting game AIs having personas. In Proceedings of the 2018 IEEE Conference on Computational Intelligence and Games (CIG), IEEE, Maastricht, The Netherlands, 14–17 August 2018; pp. 1–8. [Google Scholar]

- Bezerra, J.R.; Góes, L.F.W.; Da Silva, A.R. Development of an autonomous agent based on reinforcement learning for a digital fighting game. In Proceedings of the 2020 19th Brazilian Symposium on Computer Games and Digital Entertainment (SBGames), IEEE, Recife, Brazil, 25 December 2020; pp. 1–7. [Google Scholar]

- Kim, D.-W.; Park, S.; Yang, S.-I. Mastering fighting game using deep reinforcement learning with self-play. In Proceedings of the 2020 IEEE Conference on Games (CoG), IEEE, Osaka, Japan, 24–27 August 2020; pp. 576–583. [Google Scholar]

- Oh, I.; Rho, S.; Moon, S.; Son, S.; Lee, H.; Chung, J. Creating pro-level AI for a real-time fighting game using deep reinforcement learning. IEEE Trans. Games 2021, 14, 212–220. [Google Scholar] [CrossRef]

- Lu, F.; Yamamoto, K.; Nomura, L.H.; Mizuno, S.; Lee, Y.; Thawonmas, R. Fighting game artificial intelligence competition platform. In Proceedings of the 2013 IEEE 2nd Global Conference on Consumer Electronics (GCCE), IEEE, Makuhari, Japan, 4 October 2013; pp. 320–323. [Google Scholar]

- Schmidhuber, J. A possibility for implementing curiosity and boredom in model-building neural controllers. In Proceedings of the International Conference on Simulation of Adaptive Behavior: From Animals to Animats; MIT Press/Bradford Books: Cambridge, MA, USA, 1991; pp. 222–227. [Google Scholar]

- Schmidhuber, J. Formal theory of creativity, fun, and intrinsic motivation (1990–2010). IEEE Trans. Auton. Ment. Dev. 2010, 2, 230–247. [Google Scholar] [CrossRef]

- Cuccu, G.; Luciw, M.; Schmidhuber, J.; Gomez, F. Intrinsically motivated neuroevolution for vision-based reinforcement learning. In Proceedings of the 2011 IEEE International Conference on Development and Learning (ICDL), IEEE, Frankfurt, Germany, 24–27 August 2011; Volume 2, pp. 1–7. [Google Scholar]

- Kearns, M.; Koller, D. Efficient reinforcement learning in factored MDPs. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 31 July–6 August 1999; Volume 16, pp. 740–747. [Google Scholar]

- Brafman, R.I.; Tennenholtz, M. R-max: A general polynomial time algorithm for near-optimal reinforcement learning. J. Mach. Learn. Res. 2002, 3, 213–231. [Google Scholar]

- Klyubin, A.S.; Polani, D.; Nehaniv, C.L. Empowerment: A universal agent-centric measure of control. In Proceedings of the 2005 IEEE Congress on Evolutionary Computation, IEEE, Edinburgh, UK, 2–5 September 2005; Volume 1, pp. 128–135. [Google Scholar]

- Mohamed, S.; Rezende, D.J. Variational information maximisation for intrinsically motivated reinforcement learning. Adv. Neural Inf. Process. Syst. 2015, 28, 2125–2133. [Google Scholar]

- Stadie, B.C.; Levine, S.; Abbeel, P. Incentivizing exploration in reinforcement learning with deep predictive models. arXiv 2015, arXiv:1507.00814. [Google Scholar] [CrossRef]

- Osband, I.; Blundell, C.; Pritzel, A.; Van Roy, B. Deep exploration via bootstrapped DQN. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–11 December 2016; pp. 4026–4034. [Google Scholar]

- Osband, I.; Aslanides, J.; Cassirer, A. Randomized prior functions for deep reinforcement learning. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 2–8 December 2018; Volume 31, pp. 8626–8638. [Google Scholar]

- Mobin, S.A.; Arnemann, J.A.; Sommer, F. Information-based learning by agents in unbounded state spaces. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 8–13 December 2014; Volume 27, pp. 3023–3031. [Google Scholar]

- Still, S.; Precup, D. An information-theoretic approach to curiosity-driven reinforcement learning. Theory Biosci. 2012, 131, 139–148. [Google Scholar] [CrossRef]

- Storck, J.; Hochreiter, S.; Schmidhuber, J. Reinforcement driven information acquisition in non-deterministic environments. In Proceedings of the International Conference on Artificial Neural Networks, Paris, France, 27 November–1 December 1995; Volume 2, pp. 159–164. [Google Scholar]

- Gao, S.; Ver Steeg, G.; Galstyan, A. Variational information maximization for feature selection. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–11 December 2016; pp. 487–495. [Google Scholar]

- Fang, Z.; Yang, K.; Tao, J.; Lyu, J.; Li, L.; Shen, L.; Li, X. Exploration by Random Distribution Distillation. arXiv 2025, arXiv:2505.11044. [Google Scholar] [CrossRef]

- Xue, J.; Chen, J.; Zhang, S. Action-Curiosity-Based Deep Reinforcement Learning Algorithm for Path Planning in a Nondeterministic Environment. Intell. Comput. 2025, 4, 0140. [Google Scholar] [CrossRef]

- Houthooft, R.; Chen, X.; Duan, Y.; Schulman, J.; De Turck, F.; Abbeel, P. VIME: Variational information maximizing exploration. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–11 December 2016; Volume 29, pp. 6587–6595. [Google Scholar]

- Yang, Z.; Du, H.; Wu, Y.; Jiang, Z.; Qu, H. Intrinsic Motivation Exploration via Self-Supervised Prediction in Reinforcement Learning. In Proceedings of the 6th International Conference on Data-Driven Optimization of Complex Systems (DOCS), Hangzhou, China, 16 August 2024; pp. 79–84. [Google Scholar]

- Sun, W.; Cheng, X.; Yu, X.; Xu, H.; Yang, Z.; He, S.; Zhao, J.; Liu, K. Probabilistic Uncertain Reward Model. arXiv 2025, arXiv:2503.22480. [Google Scholar] [CrossRef]

- Clevert, D.-A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). arXiv 2015, arXiv:1511.07289. [Google Scholar]

- Schmidhuber, J.; Hochreiter, S.; Bengio, Y. Evaluating benchmark problems by random guessing. In A Field Guide to Dynamical Recurrent Networks; Wiley-IEEE Press: Hoboken, NJ, USA, 2001; pp. 231–235. [Google Scholar]

- Huang, S.; Dossa, R.F.J.; Ye, C.; Braga, J.; Chakraborty, D.; Mehta, K.; Araújo, J.G. CleanRL: High-quality single-file implementations of deep reinforcement learning algorithms. J. Mach. Learn. Res. 2022, 23, 12585–12602. [Google Scholar]

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347. [Google Scholar] [CrossRef]

- Li, Z.; Ji, Q.; Ling, X.; Liu, Q. A comprehensive review of multi-agent reinforcement learning in video games. arXiv 2025, arXiv:2509.03682. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).