Network Data Flow Collection Methods for Cybersecurity: A Systematic Literature Review

Abstract

1. Introduction

- RQ1: Which technologies and architectures currently support flow collection for cybersecurity?

- RQ2: How do different flow-collection technologies compare in terms of data acquisition methods, advantages, limitations, and applicability to cybersecurity scenarios?

- RQ3: Which performance metrics are reported, and how consistent are they?

- RQ4: Which open challenges (scalability, privacy, regulatory compliance, benchmark availability) persist?

2. Materials and Methods

2.1. Review Protocol

2.2. Identification of Studies

2.2.1. Databases and Search Strategy

2.2.2. Additional Filters

- Publications from 1 January 2019 to 31 July 2025, reflecting the latest advances and current trends in data flow collection technologies in the field of cybersecurity.

- Peer-reviewed articles only, to ensure the scientific and methodological quality of the included studies.

- Studies in English, the predominant language in scientific literature.

2.2.3. Criteria for Inclusion

- Peer-reviewed publications (articles or conference papers) published between January 2019 and July 2025.

- Studies that address the use of specific network flow collection technologies, such as NetFlow, sFlow, IPFIX, or equivalent, in the context of cybersecurity.

- English language and full text available.

- Studies that propose, implement, or evaluate data collection methods applied to cybersecurity (intrusion detection, incident response, traffic monitoring, etc.).

- The study must contribute to or discuss the data collection layer, not merely the subsequent processing or analysis stages.

2.2.4. Criteria for Exclusion

- Duplicate documents.

- Editorials, tutorials, and summaries.

- Studies outside the scope of cybersecurity or that do not address network flow collection.

- Articles without a relevant technical approach, i.e., that do not present methods applicable to data collection.

- Papers that do not deal with specific technologies, such as NetFlow, sFlow, IPFIX, or equivalent.

- Article unavailable: Studies whose full text could not be obtained.
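The inclusion and exclusion criteria above can be read as a single screening predicate. The following is a minimal sketch of that reading, not the tooling used in the review: the `Record` fields, the `screen` function, and the title-based duplicate check are hypothetical names and simplifications introduced only for illustration.

```python
from dataclasses import dataclass, replace
from datetime import date

# Review window taken from the filters stated above.
WINDOW_START = date(2019, 1, 1)
WINDOW_END = date(2025, 7, 31)

# Document types named in the exclusion criteria.
EXCLUDED_KINDS = {"editorial", "tutorial", "summary"}


@dataclass(frozen=True)
class Record:
    """Hypothetical metadata for one retrieved publication."""
    title: str
    published: date
    language: str                      # ISO 639-1 code, e.g. "en"
    peer_reviewed: bool
    full_text_available: bool
    kind: str                          # e.g. "article", "conference", "editorial"
    addresses_flow_collection: bool    # NetFlow, sFlow, IPFIX, or equivalent
    cybersecurity_context: bool
    contributes_to_collection_layer: bool


def screen(record, seen_titles):
    """Return (included, reason) for one record, updating seen_titles."""
    key = record.title.casefold().strip()
    if key in seen_titles:             # assumption: duplicates share a title
        return False, "duplicate"
    seen_titles.add(key)
    if record.kind in EXCLUDED_KINDS:
        return False, "editorial, tutorial, or summary"
    if not WINDOW_START <= record.published <= WINDOW_END:
        return False, "outside review window"
    if not record.peer_reviewed:
        return False, "not peer-reviewed"
    if record.language.lower() != "en":
        return False, "not in English"
    if not record.full_text_available:
        return False, "full text unavailable"
    if not (record.cybersecurity_context and record.addresses_flow_collection):
        return False, "out of scope"
    if not record.contributes_to_collection_layer:
        return False, "does not address the collection layer"
    return True, "included"


# Illustrative record (invented values, not a study from the review).
example = Record(
    title="An Example Flow Probe Study",
    published=date(2021, 5, 1),
    language="en",
    peer_reviewed=True,
    full_text_available=True,
    kind="article",
    addresses_flow_collection=True,
    cybersecurity_context=True,
    contributes_to_collection_layer=True,
)
```

Calling `screen(example, set())` returns the inclusion decision together with the first failing criterion, which keeps an exclusion reason available for each rejected record.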

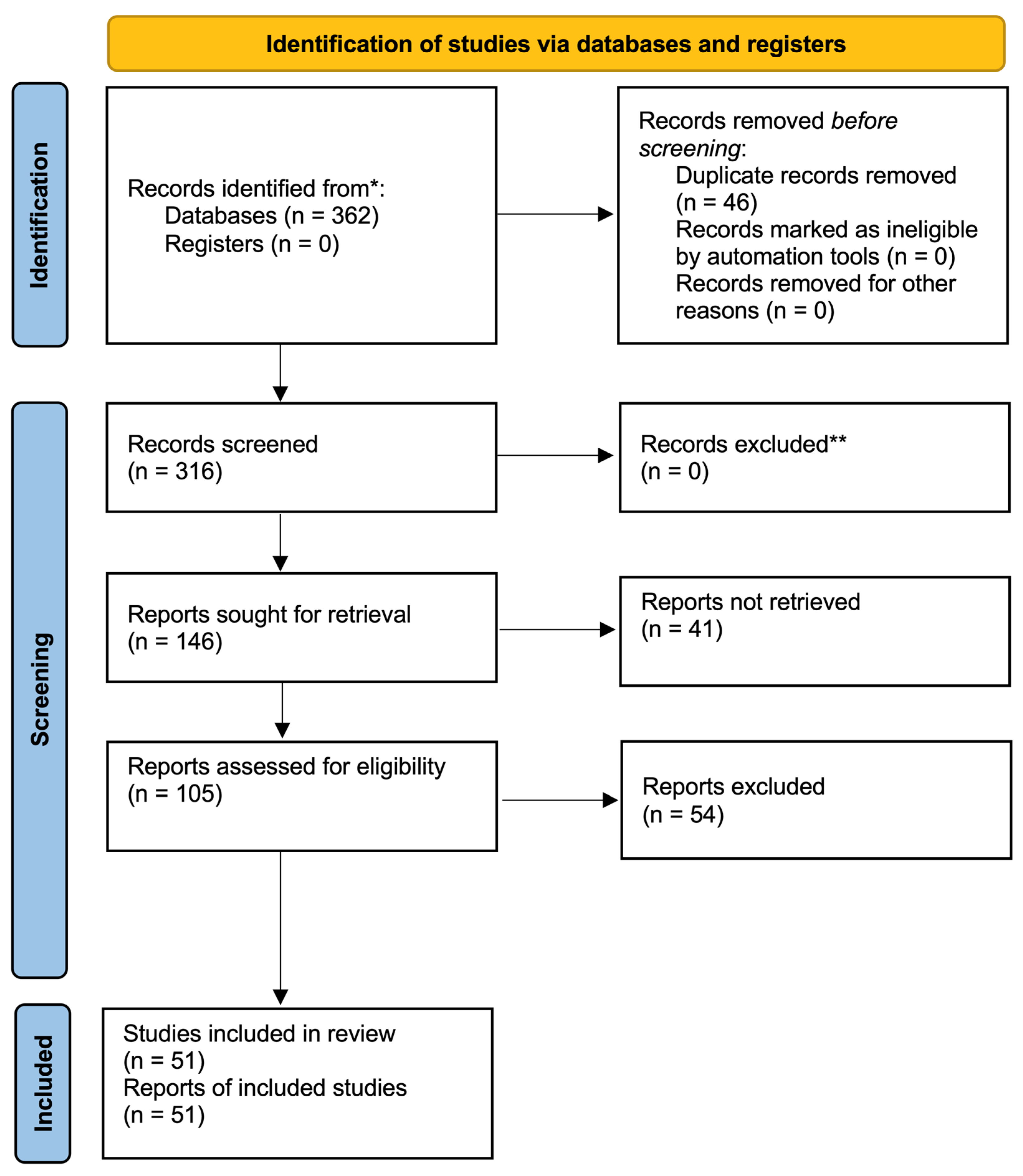

2.3. Study Selection Process

2.3.1. Initial Screening

- Preliminary analysis: The title, abstract, introduction, graphs, tables, images, and conclusion of each study were analyzed to identify potentially relevant work.

- Elimination of irrelevant studies: Studies that clearly failed the inclusion criteria or met any exclusion criterion were excluded at this stage.

- Selection for full reading: Studies that showed partial or total relevance in any of the elements assessed were selected for detailed analysis.

2.3.2. Full Text Evaluation

- Detailed analysis: Each selected study was fully reviewed to confirm its relevance and alignment with the objectives of the review, considering content, methods, and results presented.

- Collaborative process: All stages were carried out independently by two researchers.

2.4. Data Analysis

2.4.1. Data Extraction

- Bibliographic information: We recorded the authors, title, year of publication, and source of each study to contextualize the origin and credibility of the work.

- Methodologies: We mapped the techniques and tools used to collect network flow data.

- Applications: We analyzed the context in which the methods were applied.

- Results: We evaluated the effectiveness of the proposed methods and their practical and theoretical limitations.

2.4.2. Tabulation and Comparison Process

2.4.3. Iterative Review of Studies

2.4.4. Tools Used

- Zotero [23]: Used to manage bibliographic references and store the analyzed articles.

- Microsoft Excel: We structured and categorized the extracted data in Excel, allowing for comparative analysis and the visualization of trends.

- StArt [24]: Tool used to support the conduct of the systematic literature review, with functionalities for selecting, excluding, categorizing, and analyzing articles.

2.4.5. Categorization and Synthesis

2.5. Quality Assessment and Risk of Bias

- Clarity of context;

- Transparency of metrics;

- Validation process;

- Reproducibility;

- Declaration of conflict of interest.

- 0: criterion fully met;

- 1: partially met;

- 2: not met.

- Low risk of bias: total score from 0 to 2;

- Moderate risk of bias: total score from 3 to 5;

- High risk of bias: total score equal to or greater than 6.
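The scoring scheme above (five criteria, each scored 0, 1, or 2, summed and mapped to a risk band) can be sketched as follows. This is an illustrative reading of the stated thresholds; the identifier names are hypothetical, and the maximum total of 10 is inferred from five criteria at two points each.

```python
# The five criteria listed above; the identifiers are illustrative names.
CRITERIA = (
    "clarity_of_context",
    "transparency_of_metrics",
    "validation_process",
    "reproducibility",
    "conflict_of_interest_declaration",
)


def risk_of_bias(scores):
    """Map per-criterion scores (0, 1, or 2) to (total, risk band)."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    for name in CRITERIA:
        if scores[name] not in (0, 1, 2):
            raise ValueError(f"{name} must be scored 0, 1, or 2")

    total = sum(scores[name] for name in CRITERIA)
    if total <= 2:
        band = "low"
    elif total <= 5:
        band = "moderate"
    else:
        band = "high"       # 6 or more, up to the inferred maximum of 10
    return total, band
```

For example, a study that fully meets three criteria and partially meets the other two totals 2 points and falls in the low-risk band.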

2.6. Characterization of the Selected Studies

3. Results

3.1. General Characteristics of the Studies

3.2. Main Results

3.2.1. Study Objectives

3.2.2. Data Collection Methods (RQ1: Which Technologies and Architectures Currently Support Flow Collection for Cybersecurity?)

3.2.3. Data Extraction Processes and Metrics (RQ3: Which Performance Metrics Are Reported, and How Consistent Are They?)

3.2.4. Comparison of Collection Technologies and Use in Cybersecurity (RQ2: How Do Different Flow-Collection Technologies Compare in Terms of Data Acquisition Methods, Advantages, Limitations, and Applicability to Cybersecurity Scenarios?)

3.2.5. Challenges and Practical Implementations (RQ4: Which Open Challenges (Scalability, Privacy, Regulatory Compliance, Benchmark Availability) Persist?)

3.3. Methodological Quality of the Studies

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CC BY | Creative Commons Attribution |
| DNS | Domain Name System |
| DDoS | Distributed Denial-of-Service |
| DPI | Deep Packet Inspection |
| F1 | F1 Score |
| FPGA | Field-Programmable Gate Array |
| GDPR | General Data Protection Regulation |
| ICS | Industrial Control System |
| IDS | Intrusion Detection System |
| INT | In-band Network Telemetry |
| LTE | Long-Term Evolution |
| NFV | Network Functions Virtualisation |
| NUMA | Non-Uniform Memory Access |
| OSF | Open Science Framework |
| P4 | Programming Protocol-Independent Packet Processors |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RQ | Research Question |
| SDN | Software-Defined Networking |
| SRAM | Static Random-Access Memory |
| SSH | Secure Shell |

References

- Abramov, A.G. Collection, Analysis and Interactive Visualization of NetFlow Data: Experience with Big Data on the Base of the National Research Computer Network of Russia. Lobachevskii J. Math. 2020, 41, 2525–2534.

- Bhattacharjee, R.; Rajesh, R.; Prasanna Kumar, K.R.; MV, V.P.; Athithan, G.; Sahadevan, A.V. Scalable Flow Probe Architecture for 100 Gbps+ Rates on Commodity Hardware: Design Considerations and Approach. J. Parallel Distrib. Comput. 2021, 155, 87–100.

- Borylo, P.; Davoli, G.; Rzepka, M.; Lason, A.; Cerroni, W. Unified and Standalone Monitoring Module for NFV/SDN Infrastructures. J. Netw. Comput. Appl. 2021, 175, 102934.

- Čeleda, P.; Velan, P.; Kral, B.; Kozak, O. Enabling SSH Protocol Visibility in Flow Monitoring. In Proceedings of the 2019 IFIP/IEEE International Symposium on Integrated Network Management (IM2019): Experience Sessions, Washington, DC, USA, 8–12 April 2019; pp. 569–574.

- Wrona, J.; Žádník, M. Low Overhead Distributed IP Flow Records Collection and Analysis. In Proceedings of the 2019 IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Washington, DC, USA, 8–12 April 2019; pp. 557–562.

- Campazas-Vega, A.; Crespo-Martínez, I.S.; Guerrero-Higueras, Á.M.; Álvarez-Aparicio, C.; Matellán, V.; Fernández-Llamas, C. Analyzing the Influence of the Sampling Rate in the Detection of Malicious Traffic on Flow Data. Comput. Netw. 2023, 235, 109951.

- Velan, P.; Jirsik, T. On the Impact of Flow Monitoring Configuration. In Proceedings of the 2020 IEEE/IFIP Network Operations and Management Symposium (NOMS 2020), Budapest, Hungary, 20–24 April 2020; pp. 1–7.

- Fejrskov, M.; Pedersen, J.M.; Vasilomanolakis, E. Cyber-Security Research by ISPs: A NetFlow and DNS Anonymization Policy. In Proceedings of the 2020 International Conference on Cyber Security and Protection of Digital Services (Cyber Security), Dublin, Ireland, 15–19 June 2020; pp. 1–8.

- Sateesan, A.; Vliegen, J.; Scherrer, S.; Hsiao, H.-C.; Perrig, A.; Mentens, N. Speed Records in Network Flow Measurement on FPGA. In Proceedings of the 2021 31st International Conference on Field-Programmable Logic and Applications (FPL), Dresden, Germany, 30 August–3 September 2021; pp. 219–224.

- Gao, J.; Zhou, F.; Dong, M.; Feng, L.; Ota, K.; Li, Z.; Fan, J. Intelligent Telemetry: P4-Driven Network Telemetry and Service Flow Intelligent Aviation Platform. In Network and Parallel Computing; Chen, X., Min, G., Guo, D., Xie, X., Pu, L., Eds.; Springer Nature: Singapore, 2025; pp. 348–359.

- Li, L.; Kun, K.; Pei, S.; Wen, J.; Liang, W.; Xie, G. CS-Sketch: Compressive Sensing Enhanced Sketch for Full Traffic Measurement. IEEE Trans. Netw. Sci. Eng. 2024, 11, 2338–2352.

- Lin, K.C.-J.; Lai, W.-L. MC-Sketch: Enabling Heterogeneous Network Monitoring Resolutions with Multi-Class Sketch. In Proceedings of the IEEE INFOCOM 2022 - IEEE Conference on Computer Communications, London, UK, 2–5 May 2022; pp. 220–229.

- Kim, S.; Yoon, S.; Lim, H. Deep Reinforcement Learning-Based Traffic Sampling for Multiple Traffic Analyzers on Software-Defined Networks. IEEE Access 2021, 9, 47815–47827.

- Jafarian, T.; Ghaffari, A.; Seyfollahi, A.; Arasteh, B. Detecting and Mitigating Security Anomalies in Software-Defined Networking (SDN) Using Gradient-Boosted Trees and Floodlight Controller Characteristics. Comput. Stand. Interfaces 2025, 91, 103871.

- Matoušek, P.; Ryšavý, O.; Grégr, M.; Havlena, V. Flow Based Monitoring of ICS Communication in the Smart Grid. J. Inf. Secur. Appl. 2020, 54, 102535.

- Medeiros, D.S.V.; Cunha Neto, H.N.; Lopez, M.A.; Magalhães, L.C.S.; Fernandes, N.C.; Vieira, A.B.; Silva, E.F.; Mattos, D.M.F. A Survey on Data Analysis on Large-Scale Wireless Networks: Online Stream Processing, Trends, and Challenges. J. Internet Serv. Appl. 2020, 11, 6.

- ACM Digital Library. Available online: https://dl.acm.org/ (accessed on 3 January 2025).

- IEEE Xplore. Available online: https://ieeexplore.ieee.org/Xplore/home.jsp (accessed on 3 January 2025).

- ScienceDirect.Com|Science, Health and Medical Journals, Full Text Articles and Books. Available online: https://www.sciencedirect.com/ (accessed on 3 January 2025).

- Computer Science: Books and Journals|Springer|Springer—International Publisher. Available online: https://www.springer.com/br/computer-science (accessed on 3 January 2025).

- Smart Search—Web of Science Core Collection. Available online: https://www.webofscience.com/wos/woscc/smart-search (accessed on 6 August 2025).

- Scopus—Homepage. Available online: https://www.scopus.com/pages/home?display=basic#basic (accessed on 6 August 2025).

- Zotero|Your Personal Research Assistant. Available online: https://www.zotero.org/ (accessed on 3 January 2025).

- StArt. Available online: https://www.lapes.ufscar.br/resources/tools-1/start-1 (accessed on 21 June 2025).

- Keele, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report, ver. 2.3 EBSE Technical Report; EBSE: Durham, UK, 2007.

- Jirsik, T.; Čeleda, P. Cyber Situation Awareness via IP Flow Monitoring. In Proceedings of the IEEE/IFIP Network Operations and Management Symposium (NOMS 2020), Budapest, Hungary, 20–24 April 2020; pp. 1–6.

- Watkins, J.; Tummala, M.; McEachen, J. A Machine Learning Approach to Network Security Classification Utilizing NetFlow Data. In Proceedings of the 15th International Conference on Signal Processing and Communication Systems (ICSPCS), Sydney, Australia, 13–15 December 2021.

- Koumar, J.; Hynek, K.; Pešek, J.; Čejka, T. NetTiSA: Extended IP Flow with Time-Series Features for Universal Bandwidth-Constrained High-Speed Network Traffic Classification. Comput. Netw. 2024, 240, 110147.

- Campazas-Vega, A.; Crespo-Martínez, I.S.; Guerrero-Higueras, Á.M.; Fernández-Llamas, C. Flow-Data Gathering Using NetFlow Sensors for Fitting Malicious-Traffic Detection Models. Sensors 2020, 20, 7294.

- Niknami, N.; Srinivasan, A.; Wu, J. Cyber-AnDe: Cybersecurity Framework with Adaptive Distributed Sampling for Anomaly Detection on SDNs. IEEE Trans. Inf. Forensics Secur. 2024, 19, 9245–9257.

- Velan, P.; Čeleda, P. Application-Aware Flow Monitoring. In Proceedings of the 2019 IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Washington, DC, USA, 8–12 April 2019; pp. 701–706.

- Husák, M.; Sadlek, L.; Spacek, S.; Laštovička, M.; Javorník, M.; Komárková, J. CRUSOE: A Toolset for Cyber Situational Awareness and Decision Support in Incident Handling. Comput. Secur. 2022, 115, 102609.

- Yu, T.; Yue, R. Detecting Abnormal Interactions among Intranet Groups Based on Netflow Data. IOP Conf. Ser. Earth Environ. Sci. 2020, 428, 012039.

- Abramov, A.G. Enhancement of Services for Working with Big Data with an Emphasis on Intelligent Analysis and Visualization of Network Traffic Exchange in the National Research Computer Network. Lobachevskii J. Math. 2024, 45, 5764–5776.

- Abramov, A.G.; Porkhachev, V.A.; Yastrebov, Y.V. Methods and High-Performance Tools for Collecting, Analysis and Visualization of Data Exchange with a Focus on Research and Education Telecommunications Networks. Lobachevskii J. Math. 2023, 44, 4930–4938.

- Aquino, A.N.S.; Villanueva, A.R. Network Anomaly Detection Using NetFlow and Network Automation. In Proceedings of the 2023 11th International Symposium on Digital Forensics and Security (ISDFS), Chattanooga, TN, USA, 11–12 May 2023.

- Danesh, H.; Karimi, M.B.; Arasteh, B. CMShark: A NetFlow and Machine-Learning Based Crypto-Jacking Intrusion-Detection Method. Intell. Decis. Technol. 2024, 18, 2255–2273.

- Dias, L.; Valente, S.; Correia, M. Go with the Flow: Clustering Dynamically-Defined NetFlow Features for Network Intrusion Detection with DynIDS. In Proceedings of the 2020 IEEE 19th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 24–27 November 2020.

- Fejrskov, M.; Pedersen, J.M.; Vasilomanolakis, E. Detecting DNS Hijacking by Using NetFlow Data. In Proceedings of the 2022 IEEE Conference on Communications and Network Security (CNS), Austin, TX, USA, 3–5 October 2022; pp. 273–280.

- Hsupeng, B.; Lee, K.-W.; Wei, T.-E.; Wang, S.-H. Explainable Malware Detection Using Predefined Network Flow. In Proceedings of the 2022 24th International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 13–16 February 2022; pp. 27–33.

- Husák, M.; Laštovička, M.; Tovarňák, D. System for Continuous Collection of Contextual Information for Network Security Management and Incident Handling. In Proceedings of the 16th International Conference on Availability, Reliability and Security, Vienna, Austria, 17–20 August 2021.

- Janati Idrissi, M.; Alami, H.; El Mahdaouy, A.; Bouayad, A.; Yartaoui, Z.; Berrada, I. Flow Timeout Matters: Investigating the Impact of Active and Idle Timeouts on the Performance of Machine Learning Models in Detecting Security Threats. Future Gener. Comput. Syst. 2025, 166, 107641.

- Jare, S.; Abraham, J. Creating an Experimental Setup in Mininet for Traffic Flow Collection During DDoS Attack. In Proceedings of the 2024 8th International Conference on Computing, Communication, Control and Automation (ICCUBEA), Pune, India, 23–24 August 2024; pp. 1–6.

- Kamamura, S.; Hayashi, Y.; Fujiwara, T. Spatial Anomaly Detection Using Fast xFlow Proxy for Nation-Wide IP Network. IEICE Trans. Commun. 2024, E107.B, 728–738.

- Komisarek, M.; Pawlicki, M.; Kozik, R.; Hołubowicz, W.; Choraś, M. How to Effectively Collect and Process Network Data for Intrusion Detection? Entropy 2021, 23, 1532.

- Liu, X.; Tang, Z.; Yang, B. Predicting Network Attacks with CNN by Constructing Images from NetFlow Data. In Proceedings of the 2019 IEEE 5th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing, (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), Washington, DC, USA, 27–29 May 2019; pp. 61–66.

- Moreno-Sancho, A.A.; Pastor, A.; Martinez-Casanueva, I.D.; González-Sánchez, D.; Triana, L.B. A Data Infrastructure for Heterogeneous Telemetry Adaptation: Application to Netflow-Based Cryptojacking Detection. Ann. Telecommun. 2024, 79, 241–256.

- Ndonda, G.K.; Sadre, R. Network Trace Generation for Flow-Based IDS Evaluation in Control and Automation Systems. Int. J. Crit. Infrastruct. Prot. 2020, 31, 100385.

- Pesek, J.; Plny, R.; Koumar, J.; Jeřábek, K.; Čejka, T. Augmenting Monitoring Infrastructure For Dynamic Software-Defined Networks. In Proceedings of the 2023 8th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 20–23 June 2023; pp. 1–4.

- Erlacher, F.; Dressler, F. On High-Speed Flow-Based Intrusion Detection Using Snort-Compatible Signatures. IEEE Trans. Dependable Secur. Comput. 2022, 19, 495–506.

- Leal, R.; Santos, L.; Vieira, L.; Gonçalves, R.; Rabadão, C. MQTT Flow Signatures for the Internet of Things. In Proceedings of the 2019 14th Iberian Conference on Information Systems and Technologies (CISTI), Coimbra, Portugal, 19–22 June 2019.

- Tovarňák, D.; Racek, M.; Velan, P. Cloud Native Data Platform for Network Telemetry and Analytics. In Proceedings of the 2021 17th International Conference on Network and Service Management (CNSM), Izmir, Turkey, 25–29 October 2021; pp. 394–396.

- Ujjan, R.M.A.; Pervez, Z.; Dahal, K.; Bashir, A.K.; Mumtaz, R.; González, J. Towards sFlow and Adaptive Polling Sampling for Deep Learning Based DDoS Detection in SDN. Future Gener. Comput. Syst. 2020, 111, 763–779.

- Zhang, H.; Huang, H.; Sun, Y.-E.; Wang, Z. MIME: Fast and Accurate Flow Information Compression for Multi-Spread Estimation. In Proceedings of the 2023 IEEE 31st International Conference on Network Protocols (ICNP), Reykjavik, Iceland, 10–13 October 2023.

- Gao, G.; Qian, Z.; Huang, H.; Du, Y. An Adaptive Counter-Splicing-Based Sketch for Efficient Per-Flow Size Measurement. In Proceedings of the 2023 IEEE/ACM 31st International Symposium on Quality of Service (IWQoS), Orlando, FL, USA, 19–21 June 2023.

- Kim, S.; Jung, C.; Jang, R.; Mohaisen, D.; Nyang, D. A Robust Counting Sketch for Data Plane Intrusion Detection. In Proceedings of the 30th Annual Network and Distributed System Security Symposium (NDSS 2023), San Diego, CA, USA, 27 February–3 March 2023; The Internet Society: Reston, VA, USA, 2023.

- Lu, J.; Zhang, Z.; Chen, H. A Two-Layer Sketch for Entropy Estimation in the Data Plane. In Proceedings of the 2022 7th International Conference on Cloud Computing and Big Data Analytics (ICCCBDA), Chengdu, China, 28–30 April 2022; pp. 123–126.

- Sadrhaghighi, S.; Dolati, M.; Ghaderi, M.; Khonsari, A. Monitoring OpenFlow Virtual Networks via Coordinated Switch-Based Traffic Mirroring. IEEE Trans. Netw. Serv. Manag. 2022, 19, 2219–2237.

- Fu, Y.; Chen, H.; Zheng, Q.; Yan, Z.; Kantola, R.; Jing, X.; Cao, J.; Li, H. An Adaptive Security Data Collection and Composition Recognition Method for Security Measurement over LTE/LTE-a Networks. J. Netw. Comput. Appl. 2020, 155, 102549.

- Coutinho, A.C.; Araújo, L.V. de. MICRA: A Modular Intelligent Cybersecurity Response Architecture with Machine Learning Integration. J. Cybersecur. Priv. 2025, 5, 60.

| Database | Time Coverage | Last Search | Records Retrieved |
|---|---|---|---|
| ACM Digital Library | 2019-01-01 to 2025-07-31 | 2025-08-01 | 36 |
| IEEE Xplore | 2019-01-01 to 2025-07-31 | 2025-08-01 | 9 |
| ScienceDirect | 2019-01-01 to 2025-07-31 | 2025-08-01 | 75 |
| SpringerLink | 2019-01-01 to 2025-07-31 | 2025-08-01 | 18 |
| Web of Science | 2019-01-01 to 2025-07-31 | 2025-08-01 | 6 |
| Scopus | 2019-01-01 to 2025-07-31 | 2025-08-01 | 218 |
| Total | | | 362 |
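As a quick arithmetic check on the table above, the per-database counts can be summed and expressed as shares of the total. This is only a sketch over the figures reported in the table; the variable names are illustrative.

```python
# Records retrieved per database, as reported in the table above.
records_retrieved = {
    "ACM Digital Library": 36,
    "IEEE Xplore": 9,
    "ScienceDirect": 75,
    "SpringerLink": 18,
    "Web of Science": 6,
    "Scopus": 218,
}

# The sum reproduces the reported total of 362 records.
total = sum(records_retrieved.values())

# Share of all retrieved records contributed by each database, in percent.
shares = {name: round(100 * count / total, 1)
          for name, count in records_retrieved.items()}

# Database contributing the most records.
largest_source = max(records_retrieved, key=records_retrieved.get)
```

These are counts before duplicate removal, so overlapping records across databases are still included in the total.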

| Technology Category | Share of Papers (%) | Paper IDs |
|---|---|---|
| NetFlow (v5/v9, xFlow derivatives) | 62.7 | [1,2,4,6,7,8,13,14,16,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49] |
| IPFIX/IPFIX-based probes | 45.1 | [2,4,5,6,8,15,16,27,28,30,33,35,36,37,39,40,41,46,47,49,50,51,52] |
| sFlow exporters/adaptive polling | 9.8 | [3,13,16,28,53] |
| INT/P4 sketches/OpenFlow mirroring | 17.6 | [10,11,12,54,55,56,57,58,59] |
| Other (LTE adaptive collectors, FPGA meters, trace generators, MIME sketch, etc.) | 3.9 | [9,60] |

| Article | Metric | How the Metric Works | Analysis of Result |
|---|---|---|---|
| Velan & Čeleda (2019) [4] | Packet Processing Rate (pps) | Tracks rate of packet inspection and flow construction including application-layer parsing, measured in packets per second | Application-aware monitoring with optimization techniques sustained ~1 Mpps on a single core versus ~0.2 Mpps baseline, indicating a 5× performance gain while enriching flows with application metadata |
| Wrona & Žádník (2019) [5] | Query Latency (s) | Records end-to-end latency of interactive queries on a distributed flow-record platform versus a Hadoop/MapReduce baseline | Distributed system yielded query latencies <1 s compared to 20–35 s on a 6-node MapReduce cluster, demonstrating >20× speed-up for typical aggregation and Top-N queries with minimal overhead |
| Campazas-Vega et al. (2023) [6] | Sampling Rate | The proportion of packets selected for flow generation (e.g., 1 in every 250, 500, or 1000 packets is sampled). | As sampling becomes sparser, malicious flow details are lost. Detection remains high up to a 1/250 rate; at 1/500 some models (LR, LSVC) fall below 64% accuracy, while at 1/1000 only KNN, MLP, and RF maintain above 90% accuracy. |
| Sateesan et al. (2021) [9] | Throughput (Mpps) | Measures millions of packets per second processed by the A-CM sketch on FPGA; counted as number of flow updates per second under maximum line-rate traffic | A-CM sketch achieved up to 454 Mpps throughput with 5 clock-cycle update latency, outperforming prior FPGA-based sketches by 20–30% in both throughput and memory efficiency |
| Li et al. (2024) [11] | Flow reconstruction accuracy | Compresses flow counts in the switch and reconstructs them at the controller | Recovers nearly all flows with under 10% error using only 24 KB of memory |
| Lin & Lai (2022) [12] | Average error by priority class | Divides flows into priority classes and applies separate sketches to reduce collisions | Reduces error for high-priority flows by up to 57% and achieves up to 3× higher throughput than generic sketches |
| Matoušek et al. (2020) [15] | Flow delivery latency | Measures time delay between flow generation at the device and its arrival at the NetFlow collector | Average latency under 5 ms with low jitter, meeting industrial control requirements |
| Niknami et al. (2024) [30] | Adaptive sampling rate | Dynamically adjusts the fraction of packets sampled per flow based on detection feedback | Detects 90% of anomalies using roughly half the packets required by static sampling, cutting overhead |
| Fejrskov et al. (2022) [39] | Sampling rate 1:1024 | Only 1 out of every 1024 flows is recorded in the NetFlow logs; the remaining flows are discarded before feature extraction. | This aggressive sampling reduces storage requirements and supports privacy and scalability, but may omit low-volume or short-lived flows. |
| Janati-Idrissi et al. (2025) [42] | Number of flows expired per timeout setting | NetFlow/IPFIX records are collected under different active and idle timeout values. The metric counts how many flow records expire before reporting under each setting. | Flow count varied by up to 15% depending on timeout configuration, showing that timeout parameters directly affect data completeness. |
| Komisarek et al. (2021) [45] | Sampling rate vs. volume of data collected | Various packet sampling schemes (e.g., 1:10, 1:100) are applied before NetFlow export. The metric measures the percentage reduction in captured packet volume. | A 1:100 sampling rate reduced data volume by up to 90% while retaining at least 80% of detection capability, illustrating the trade-off between collection overhead and detection efficacy. |
| Ndonda & Sadre (2020) [48] | Traffic pattern fidelity | Generates synthetic traffic following observed packet-size and inter-packet time distributions | Simulated traffic matches over 98% of real trace characteristics across multiple scenarios |
| Ujjan et al. (2020) [53] | True Positive Rate (TPR) vs. False Positive Rate (FPR) | Compares sFlow packet sampling (1:1256) against adaptive polling by measuring ratio of correctly detected malicious flows (TPR) and misclassified benign flows (FPR) | sFlow achieved TPR ≈ 95% with FPR ≈ 4%, while adaptive polling reached TPR ≈ 92% with FPR ≈ 5%. sFlow sampling therefore provided the better trade-off between overhead and detection quality |
| Lu et al. (2022) [57] | Entropy estimation quality | Tracks packet-size distribution over time windows to estimate entropy | Estimates are within 5% of the exact value while using minimal memory |
| Lu et al. (2022) [57] | Average entropy error | Filters out rare packets in the first layer, then computes entropy in the second layer | Maintains average error below 7% under high-volume conditions, demonstrating dataplane efficiency |
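Several metrics in the table above depend on two exporter settings: the 1-in-N packet sampling ratio and the active and idle timeouts that decide when a flow record is exported. The sketch below is a simplified, hypothetical flow cache that shows how both settings change the records produced; it is not the implementation of any surveyed tool, the class and parameter names are assumptions, and sampling is deterministic (every N-th packet) for reproducibility.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    first_seen: float
    last_seen: float
    packets: int = 0
    octets: int = 0


class FlowCache:
    """Toy flow cache with 1-in-N sampling and idle/active timeouts."""

    def __init__(self, sample_every=1, idle_timeout=15.0, active_timeout=60.0):
        self.sample_every = sample_every      # N in 1-in-N packet sampling
        self.idle_timeout = idle_timeout      # seconds without packets
        self.active_timeout = active_timeout  # maximum record lifetime
        self.flows = {}                       # flow key -> Flow
        self.exported = []                    # (key, Flow) records
        self._seen = 0                        # packets observed so far

    def expire(self, now):
        """Export flows whose idle or active timeout has elapsed."""
        for key, flow in list(self.flows.items()):
            idle = now - flow.last_seen >= self.idle_timeout
            active = now - flow.first_seen >= self.active_timeout
            if idle or active:
                self.exported.append((key, flow))
                del self.flows[key]

    def observe(self, timestamp, key, size):
        """Process one packet identified by its 5-tuple key."""
        self.expire(timestamp)
        self._seen += 1
        if self._seen % self.sample_every != 0:
            return                            # packet not sampled
        flow = self.flows.get(key)
        if flow is None:
            flow = self.flows[key] = Flow(first_seen=timestamp, last_seen=timestamp)
        flow.last_seen = timestamp
        flow.packets += 1
        flow.octets += size

    def flush(self):
        """Export everything still in the cache."""
        self.exported.extend(self.flows.items())
        self.flows.clear()


def collect(packets, **settings):
    """Run packets (timestamp, key, size) through a cache; return records."""
    cache = FlowCache(**settings)
    for timestamp, key, size in packets:
        cache.observe(timestamp, key, size)
    cache.flush()
    return cache.exported


# One conversation with a 9-second gap between its second and third packet.
KEY = ("10.0.0.1", "10.0.0.2", 51000, 443, "tcp")
PACKETS = [(0.0, KEY, 100), (1.0, KEY, 100), (10.0, KEY, 100), (11.0, KEY, 100)]
```

With `idle_timeout=5.0` the gap splits the conversation into two records, while `idle_timeout=30.0` yields one; setting `sample_every=2` halves the packets counted in the record. This is the kind of configuration sensitivity the timeout and sampling rows describe.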

| Technology | Main Characteristics (According to the Literature) | Limitations (According to the Literature) | Suggested Use (According to the Literature) | Types of Attacks Reported in the Studies |

|---|---|---|---|---|

| NetFlow (v5/v9) | Aggregates packets into 5-tuple flows (source/destination IP, ports, protocol), exporting statistics such as bytes, packets, duration, and TCP flags; supports NetFlow v5 (fixed), v9 (template-based), and IPFIX (IETF-standard with extensible templates for additional metrics like DNS/HTTP/TLS); low overhead on routers and switches (ASIC-compatible); allows statistical sampling (1:N) to reduce cost; broad interoperability and native vendor support; integration with modern pipelines (Logstash, ClickHouse, Grafana) and automation frameworks (e.g., Ansible). | Does not capture payload, only header metadata; sensitive to encrypted traffic; heavily dependent on sampling configuration (may miss short flows and introduces a trade-off between precision and performance); large data volumes can saturate collectors and require Big-Data infrastructure; large template sets increase extraction latency; legacy versions (v5) are inflexible; complex tuning needed for clustering and dynamic feature definition; traditional tools (e.g., nfdump) become bottlenecks under high-volume collection. | Traffic monitoring in backbones, NRENs, ISPs, campus networks, and datacenters; network performance analysis, traffic engineering, and capacity planning; real-time detection of DDoS, port scans, brute-force attacks, botnets, and volumetric anomalies; forensic and historical analysis; protocol and policy auditing (e.g., SSH, DNS); identification of “heavy hitters” and “top talkers” for prioritization and troubleshooting; integration with IDS/IPS for alert correlation; scenarios where DPI is infeasible but high-volume visibility is essential. | Cryptomining/Cryptojacking Denial of Service (DoS)/Distributed DoS (DDoS) Port scanning/Network reconnaissance Brute-force authentication attacks Web application attacks Malware & exploits Botnet-related activity DNS-based attacks Network protocol abuse Fuzzers (automated vulnerability discovery). Web crawling/malicious scanning. 
Multihop (malicious routing/relay). WarezClient/WarezMaster (pirated software distribution). Recorded live user interaction (human-driven intrusion). Generic intrusion/generic attacks. Analysis (malicious analysis tools). IoT malware and targeted IoT attacks. |

| IPFIX | IETF-standardized, extensible flow export protocol (RFC 5101/7011) evolved from NetFlow; template-based design allows customizable fields (L2–L7, DNS, SSH, TLS, HTTP), user-defined data types, and bidirectional aggregation; widely supported in commercial routers/switches; enables multi-layer monitoring, distributed telemetry, and real-time integration with big-data pipelines (e.g., Elasticsearch, Kafka, IPFIXcol2). | Higher processing and memory overhead at collectors; dependency on template synchronization between exporters/collectors; packet loss of templates may delay decoding; non-encrypted messages raise privacy concerns; visibility limited to headers (no payload); accuracy reduced under aggressive sampling; interoperability varies across vendors; NAT/dynamic addressing complicates correlation; requires tuning of timeouts and template management. | Large-scale traffic monitoring in ISPs, NRENs, and corporate networks; anomaly and attack detection (DoS/DDoS, SSH brute force, DNS hijacking, cryptojacking); capacity planning and SLA monitoring; forensic analysis and long-term data retention; telemetry in SDN and IoT/ICS environments; privacy-compliant monitoring in regulated contexts (e.g., ePrivacy in the EU); situations where DPI is infeasible but enriched flow metadata is needed. | Cryptojacking/Cryptomining Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) Port scanning/network probing SSH brute-force attacks Botnet command-and-control communication Worm propagation Intrusion attempts/unauthorized network infiltration Web application attacks DNS-based attacks IoT malware and IoT-targeted attacks Traffic obfuscation and tunneling |

| sFlow | Industry-standard packet sampling protocol (1-in-N header sampling) that exports UDP datagrams containing flow statistics and interface counters; lightweight on CPU/memory; widely supported across vendors and interoperable with SDN/OpenFlow; scalable for high-speed and heterogeneous environments (cloud, data centers, IoT). | Sampling reduces granularity and may miss short/low-rate flows or rare anomalies; precision highly sensitive to sampling rate (too high overloads collectors, too low loses detail); no payload visibility; limited view of intra-switch traffic. | High-speed networks where full DPI is infeasible; large-scale traffic monitoring in ISPs, SDN/NFV, campus and data centers; capacity planning and anomaly detection (e.g., DDoS); real-time statistical analysis and SLA monitoring in resource-constrained switches. | Denial of Service (DoS)/Distributed Denial of Service (DDoS) Brute-force authentication attacks Botnet-related activity Cryptomining/Cryptojacking DNS-based attacks IoT malware and IoT-targeted attacks Evasion/traffic obfuscation Generic intrusion detection (Intrusion attempts) |

| INT/P4 (In-band Network Telemetry) | In-band telemetry (INT) integrated with P4 forwarding, embedding hop-level metadata (timestamps, queue states, IDs) into packets; supports postcard model and on-demand activation; hierarchical multilayer structure with adaptive sampling to reduce controller load. | Requires P4-enabled switches (not legacy hardware); adds per-hop processing overhead; complex configuration of on-demand policies and metadata; centralized collection/aggregation needed; still mostly at prototype stage with limited large-scale deployments. | High-performance SDN environments with P4 adoption; critical applications needing fine-grained telemetry (e.g., aviation, data centers); scenarios benefiting from on-demand monitoring to cut overhead; integration with OpenFlow/Floodlight for fast anomaly detection and rerouting. | Distributed Denial of Service (DDoS) attacks General network anomalies Port scanning/network probing |

| Sketches (CS, MC, Filter, MIME) | Compact counter-based data structures (Count-Min, Elastic, MV, UnivMon, CS, Filter, MC, A-CM, etc.) with O(1) per-packet updates; support heavy-hitter, heavy-changer, entropy, and superspreader detection; adaptable via multi-layer designs, bidirectional counters, TCAM offload, or FPGA acceleration to reach hundreds of Gbps. | Accuracy drops for small (“mice”) flows due to hash collisions; trade-off between memory size and error; some designs require specialized hardware (TCAM, FPGA, programmable switches); increased complexity in multi-layer or reconstruction schemes; added controller overhead in full-flow recovery. | High-speed networks (100 Gbps+ backbones, ISPs, datacenters) requiring inline, low-latency flow measurement; detection of heavy hitters, abrupt traffic shifts, superspreaders, and entropy anomalies; suitable for SDN/programmable switches or FPGA-based deployments where precise telemetry and scalable flow monitoring are critical. | Heavy-hitter detection (volumetric flows/hosts, e.g., volumetric DDoS); Heavy-change detection (sudden shifts in traffic distributions); Superspreader detection (anomalous flows indicative of port scanning or DDoS); Denial of Service (DoS/DDoS); Botnet; Cryptomining/Cryptojacking; Malicious DNS (DNS malware/hijacking); Network Intrusion (generic intrusion); IoT Malware; Tor traffic; VPN traffic |

| FPGA Probes | Hardware-based packet and flow capture directly on FPGA NICs with on-chip caching and parallel pipelines, enabling line-rate processing from 1 Gbps (legacy NetFPGA) to 200+ Gbps in modern platforms; supports pre-filtering, sampling, summarization, and even DPI offload to reduce host CPU load. | High cost of specialized FPGA hardware; limited on-chip memory that can bottleneck under high flow counts; complex development and maintenance (HDL, firmware updates, vendor toolchains); less flexible than software-only collectors. | High-speed backbone or datacenter links (≥10 Gbps) requiring inline flow monitoring and very low latency; detection of elephant flows and volumetric attacks; environments where CPU offload is critical (e.g., inline IDS/NIDS, DPI acceleration, ISP/Tier-1 operators). | Generic intrusion/generic attacks. |
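
To make the sketch row of the comparison concrete, the following is a minimal, illustrative Count-Min sketch in Python. It is not taken from any of the reviewed studies: the class name `CountMinSketch`, the `width` and `depth` values, the BLAKE2b-based hashing, and the flow-key strings are all assumptions chosen for readability. It shows the two properties noted in the table: each packet triggers a fixed number of counter updates, and hash collisions can only inflate an estimate, which is why relative error is largest for small ("mice") flows.

```python
import hashlib


class CountMinSketch:
    """Illustrative Count-Min sketch (hypothetical helper, not from the reviewed papers)."""

    def __init__(self, width=1024, depth=4):
        self.width = width    # counters per row (assumed value)
        self.depth = depth    # number of independent hash rows (assumed value)
        self.rows = [[0] * width for _ in range(depth)]

    def _index(self, row, key):
        # One salted hash per row; the row number acts as the salt.
        digest = hashlib.blake2b(
            key.encode("utf-8"),
            digest_size=8,
            salt=row.to_bytes(8, "little"),
        ).digest()
        return int.from_bytes(digest, "little") % self.width

    def update(self, key, count=1):
        # A fixed number of counter increments per packet (one per row).
        for row in range(self.depth):
            self.rows[row][self._index(row, key)] += count

    def estimate(self, key):
        # Collisions only add to counters, so the minimum over rows
        # is an upper bound on the true count.
        return min(
            self.rows[row][self._index(row, key)] for row in range(self.depth)
        )


# Synthetic traffic: one "elephant" flow and 200 single-packet "mice" flows.
sketch = CountMinSketch()
elephant = "10.0.0.1->10.0.0.2:443"
for _ in range(1000):
    sketch.update(elephant)
for i in range(200):
    sketch.update(f"192.168.1.{i}->10.0.0.2:53")

# Flag flows whose estimated packet count exceeds an assumed threshold.
threshold = 500
candidates = [elephant, "192.168.1.7->10.0.0.2:53"]
heavy_hitters = [k for k in candidates if sketch.estimate(k) >= threshold]
print(heavy_hitters)
```

Increasing `width` lowers the collision probability at the cost of memory, which is the memory-versus-error trade-off listed in the limitations column.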

| Risk Classification | Number of Studies | Percentage (%) |
|---|---|---|
| Low | 17 | 33.3 |
| Moderate | 34 | 66.7 |
| High | 0 | 0.0 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Coutinho, A.C.; Araújo, L.V.d. Network Data Flow Collection Methods for Cybersecurity: A Systematic Literature Review. Computers 2025, 14, 407. https://doi.org/10.3390/computers14100407
