Abstract

Sign Language Recognition (SLR) benefits the deaf community because it supports communication, education, and socialization. In this study, the results of using a modified Convolutional Neural Network (CNN) technique to develop a model for real-time Kurdish sign recognition are presented. Recognizing the Kurdish alphabet is the primary focus of this investigation. Using a variety of activation functions over several iterations, the model was trained and then used to make predictions on the KuSL2023 dataset. The dataset contains a total of 71,400 pictures, drawn from two separate sources, representing the 34 signs of the Kurdish alphabet. A large collection of real user images is used to evaluate the accuracy of the suggested strategy. A novel Kurdish Sign Language (KuSL) model for classification is presented in this research. Furthermore, the hand region must be identified in a picture with a complex backdrop, including lighting, ambience, and image color changes of varying intensities. By using a genuine public dataset and real-time, person-independent classification while maintaining high classification accuracy, the proposed technique improves on previous research on KuSL detection. The collected findings demonstrate that the performance of the proposed system offers improvements, with an average training accuracy of 99.05% for both classification and prediction models. Compared to earlier research on KuSL, these outcomes indicate very strong performance.

1. Introduction

As a primary form of communication among people, sign language is used globally. Sign language is also a repository of reactions and body language, with a corresponding hand gesture for each letter of the alphabet. Sign languages, in comparison with languages that use speech, exhibit significant regional variation within each nation [1].

A helpful and efficient aid for the deaf population was previously developed with the use of current technology [2]. Furthermore, there should not always be a need for a human translator to facilitate communication between deaf and hearing people. For thousands of years, the deaf community has relied on sign language to communicate. Among the many deaf cultures around the globe, SL has progressed into a full and complicated language [3]. The difficulties of everyday living can be exacerbated by a handicap, but technological and computerized advancements have made things much simpler [4].

The global extent of hearing loss underscores the importance of improving sign language recognition. The World Health Organization (WHO) reports that more than 430 million individuals need rehabilitation due to their severe hearing loss. By 2050, experts predict that this number will rise to more than 700 million. In low- and middle-income nations, where healthcare is scarce and assistance is minimal, this statistic corresponds to roughly 1 in 10 people facing communication challenges [5].

Deaf or hard-of-hearing individuals often struggle to interact in real-life situations and face challenges in accessing information. The EU-funded SignON project aims to create a mobile app that can translate between European sign and verbal languages to address these issues [6]. SignDict holds that communication is the key to fostering an open society. To enhance the integration of deaf communities, it established a living dictionary and encourages everyone to participate in developing a sign language dictionary, with no technical knowledge required. Notably, both the SignDict and SignON projects are European [7].

The most widely used sign languages, including American Sign Language (ASL) and British Sign Language (BSL), employ manual alphabets. Both alphabets are significant, although they are not the same. The key distinction between ASL and BSL is that BSL provides detailed descriptions of the letter form, orientation, and placement in the hand, whereas ASL provides only shape and orientation [8]. There is no officially recognized or recorded version of Kurdish Sign Language (KuSL) in the nations populated by Kurds. In an effort to standardize and promote KuSL among the deaf community and others who are interested, Kurdish SL has been developed as a standard for Kurdish-script sign language based on ASL. This work, which relies on machine-learning methods, yielded a KuSL specification.

As a consequence of encouraging findings in real applications, the Convolutional Neural Network (CNN) method has recently acquired traction in the field of image categorization. As a result, many cutting-edge CNN-based sign language interpreters have been developed [9,10]. CNNs are helpful in classifying hand forms in SLR, but they cannot segment hands without further training or tools [11]. CNN’s superior ability to learn features from raw data input makes it the best machine-learning model for image identification. As a type of deep neural network, CNN was first developed for processing data in a single dimension [12]. Because of its high flexibility in parameter adjustment, growing effectiveness, and minimal difficulty, 2D CNN has achieved widespread use in a large range of fields, from image classification to face recognition and natural language processing [13].

Within the present context of gesture recognition, conventional algorithms such as the neural network approach and the hidden Markov model have significant limitations, including their considerable computational complexity and extended training times. In order to address these difficulties, the authors in [14] presented the Support Vector Machine (SVM) algorithm as an innovative method for gesture identification. The SVM algorithm is suggested as an appropriate alternative to current approaches to reduce the computational load and accelerate the relevant training procedures. With the goal of reducing the influence of external factors, including both human and environmental variables, on dynamic gestures of the same category, and improving the accuracy and reliability of the algorithm’s recognition, it is crucial to properly process the initial pattern of the gesture to be recognized, which is obtained through a camera or video file.

A categorization of KuSL using the ASL alphabet and the ArSL2018 dataset is proposed in this article as a potential benchmark for the Kurdish Sign Language Recognition Standard (KuSLRS). The Kurdish alphabet is based on Arabic script; hence, a 2D-CNN architecture to categorize the Kurdish letters is proposed.

1.1. Objectives

This study aims to bridge the communication gap between the hearing world and the deaf Kurdish community by developing a real-time, person-independent KuSL recognition system. Our model surpasses existing projects such as SignOn and SignDict, which focus on European languages and offline recognition, by achieving 98.80% real-time accuracy in translating handshapes into Kurdish-Arabic script letters. This accuracy can be maintained even under challenging lighting and hand poses thanks to our robust system design. Furthermore, we present the first comprehensive and publicly available KuSL dataset, which will facilitate further research and development. Importantly, real-time language translation empowers deaf individuals by providing access to written information and communication, thereby fostering greater independence and social participation. Our groundbreaking approach is evaluated in this study through field trials and user feedback, ensuring its effectiveness in bridging the communication gap and positively impacting the Kurdish deaf community.

1.2. Related Work

Most studies in the field used one of three methods: computer vision, smart gloves with sensors, or hybrid systems that integrate the two. In SLR, facial expressions are crucial, but the initial algorithm does not take them into account. In contrast to computer-vision systems, technologies based on gloves can capture the complete gesture, including the user’s movement [15,16].

Numerous studies on variant SL, such as ASL [17,18,19,20,21,22,23,24,25], BSL [26], Arabic SL [1,27,28], Turkish SL [29,30], Persian SL [31,32,33,34], Indian SL [35,36], and others, have been carried out in recent years. To the best of our knowledge, the only accessible studies focused on KuSL and consisted of 12 classes [37], 10 classes [38], and 84 classes [39].

The recognition of Kurdish signs in KuSL was improved with the help of the suggested model. There are now 71,400 samples across 34 alphabet signs for Kurdish letters due to the consolidation of the ASL and ArSL2018 databases. The three primary challenges in SLR are the large number of classes, models, and machine-learning techniques.

It is important to distinguish between static and dynamic gestures. Finger spelling can be used to illustrate both. The architecture determines the data collection method, feature extraction, and data categorization, whether the system is working with static signs expressed in a single picture or dynamic signs shown in a series of images. A global feature representation [39] works well for static signs since they are not time series.

Addressing static sign language translation, a PhotoBiological Filter Classifier (PhBFC) was introduced for enhanced accuracy. Utilizing a contrast enhancement algorithm inspired by retinal photoreceptor cells, effective communication was supported between agents and operators and within hearing-impaired communities. Accuracy was maintained through integration into diverse devices and applications, with V3 achieving 91.1% accuracy compared to V1’s 63.4%, while an average of 55.5 Frames Per Second (FPS) was sustained across models. The use of photoreceptor cells improved accuracy without affecting processing time [40].

Wavelet transforms and Neural Network (NN) models were used in the Persian Sign Language (PSL) system to decipher static motions representing the letters of the Persian alphabet, as outlined in [31]. Digital cameras were used to take the necessary images, which were then cropped, scaled, and transformed into grayscale in preparation for use with the specified alphabets; 94.06% accuracy was achieved in the categorization process. Identification on a training dataset of 51 dynamic signals was implemented by utilizing LM recordings of hand motions and Kinect recordings of the signer’s face. The research achieved 96.05% and 94.27% accuracy for one- and two-handed motions, respectively, by using a number of different categorization methods [41]. As indicated in [21,23], some studies used a hybrid approach, employing both static and dynamic sign recognition.

Several methods exist for gathering data, such as utilizing a digital camera, Kinect sensor, continuous video content, or already publicly accessible datasets. A Kinect sensor was suggested for use in a two-step Hand Posture Recognition (HPR) process for SLR. In the first step, a powerful technique for locating and following hands was demonstrated. In the second step, Deep Neural Networks (DNNs) were employed to learn features automatically from effort-invariant hand gesture photos, and the resulting identification accuracy was 98.12% [18].

To identify the 32 letters of the PSL alphabet, a novel hand gesture recognition technique was proposed. This method relies on the Bayes rule, a single Gaussian model, and the YCbCr color space. In this method, hand locations are identified even in complex environments with uneven illumination. The technology was tested using 480 USB webcam images of PSL postures. The total accuracy rating was 95.62% [33]. Furthermore, a technique was presented for identifying Kurdish sign language via the use of dynamic hand movements. This system makes use of two feature collections built from models of human hands. A feature-selection procedure was constructed, and then a classifier based on an Artificial Neural Network (ANN) was used to increase productivity. The results show that the success rate for identifying hand motions was quite high at 98% [38].

Furthermore, video data were processed via a Restricted Boltzmann Machine (RBM) to decipher hand-sign languages. After the original input image was segmented into five smaller photos, the convolutional neural network was trained to detect the hand movements. The Center for Vision at the University of Surrey compiled a dataset on handwriting recognition [22] using data from Massey University, New York University, and the ASL Fingerspelling Dataset (2012).

A hand-shape recognition system was introduced using Manus Prime X data gloves for nonverbal communication in VR. This system encompasses data acquisition, preprocessing, and classification, with analysis exploring the impact of outlier detection, feature selection, and artificial data augmentation. Up to 93.28% accuracy for 56 hand shapes and up to 95.55% for a reduced set of 27 were achieved, demonstrating the system’s effectiveness [42].

Researchers in this area continue to improve techniques for reading fingerspelling, recognizing gestures, and interpreting emotions. However, progress is being made every day in alphabetic recognition, which serves as the foundation for systems that recognize words and translate sentences. Twenty-four images representing the American Sign Language alphabet were used to train a Deep Neural Network (DNN) model, which was subsequently fine-tuned using 5200 pictures of computer-generated signs. A bidirectional LSTM neural network was then used to improve the system, achieving 98.07% efficiency in training using a vocabulary of 370 words. For observing live camera feeds, the SLR response to the current picture is summarized in three lines of text: a phrase produced by the gesture sequence to word converter, the resolved sentence received from the Word Spelling Correction (WSC) module, and the image itself [9]. These are all GUI elements used in the existing American Sign Language Alphabet Translator (ASLAT).

Using a wearable inertial motion capture system, a dataset of 300 commonly used American Sign Language sentences was collected from three volunteers. Deep-learning models achieved 99.07% word-level and 97.34% sentence-level accuracy in recognition. The translation network, based on an encoder–decoder model with global attention, yielded a 16.63% word-error rate. This method shows potential in recognizing diverse sign language sentences with reliable inertial data [43].

The possibility of using a fingerspelled version of the British Sign Language (BSL) alphabet for automatic word recognition was explored. Both hands were used for recognition when using BSL. This is a challenging task in comparison to learning other sign languages. The research achieved 98.9% accuracy in word recognition [26] using a collection of 1000 webcam images.

Scale Invariant Feature Transform (SIFT), Speeded-Up Robust Features (SURF), and a novel grid-based gesture descriptor have been suggested for the detection of hand movements and applied to Kurdish Sign Language (KuSL). The new method outperformed the other two algorithms in identifying Kurdish hand signals, with a recognition accuracy of 67% compared to 42% for the first two algorithms. Although there are only 36 letters in the Kurdish-Arabic alphabet, variances in imaging conditions such as location, illumination, and occlusion make it challenging to construct an efficient Kurdish sign language identification system.

While existing projects such as SignOn (focused on European Sign Languages) and SignDict (investigating lexicon translation) achieved noteworthy accuracy (95.2% and 97.8%, respectively) [7,44], their applicability to Kurdish Sign Language (KuSL) and real-time communication settings is limited. Our model addresses these gaps by specifically targeting KuSL, a previously understudied language, and prioritizing real-time performance with exceptional 99.05% training accuracy. This focus on real-world accessibility and language specificity offers unique advantages for the Kurdish deaf community, surpassing the capabilities of prior research in its potential to bridge the communication gap.

1.3. Research Contributions

The proposed approach uses convolutional neural networks to identify KuSL, with the model instantly converting real-time images into Kurdish letters.

The main contributions of the present study are as follows:

- The suggested method is the first to accurately read Kurdish script from static hand-shape signs.

- We create a novel, fully labeled dataset for KuSL. This hand-shape identification dataset, derived from the ASL alphabet and ArSL2018 datasets, will be made freely accessible to the scientific community.

- In this method, one-handed shapes alone are sufficient for alphabet identification; motion signals are not required.

- This method provides a CNN-based real-time KuSL recognition system with high accuracy for several user types.

2. Materials and Methods

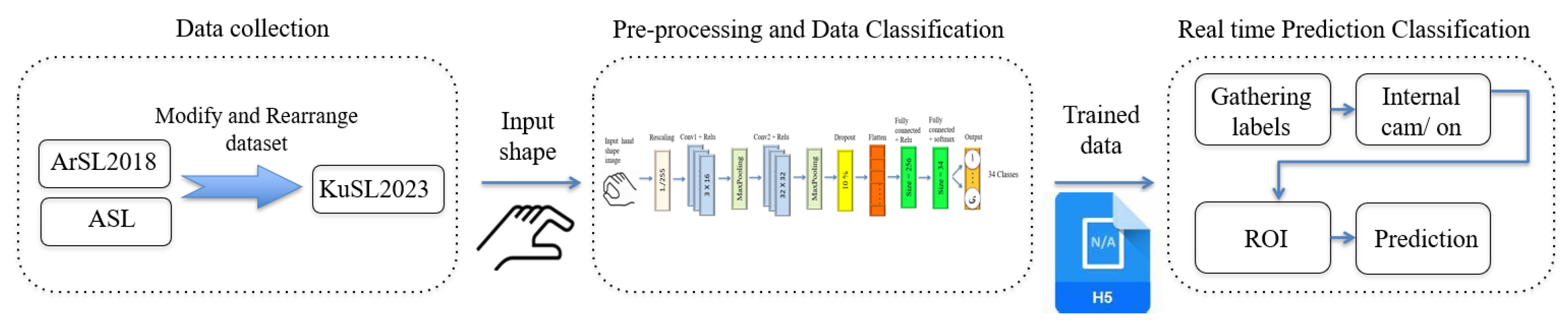

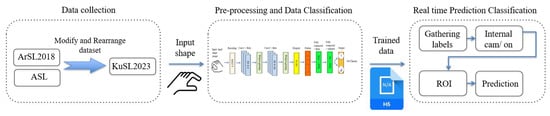

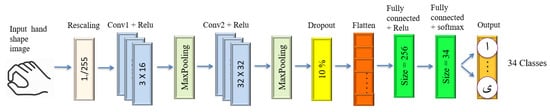

The proposed method is a Kurdish Sign Language (KuSL) identification system trained on a labeled KuSL dataset through convolutional neural networks. The proposed model architecture is shown in Figure 1 and relies upon various stages of the process. The method first assesses real-time predictions for Kurdish-script SL based on visual data and then analyzes the accuracy of those predictions.

Figure 1.

Block diagram of the whole Kurdish Sign Language (KuSL) recognition system.

The overall approach of the model involves a multi-step process encompassing dataset preparation, model creation, training, evaluation, and visualization. Utilizing TensorFlow and Keras, the script initializes datasets for training, validation, and testing from directories containing images of sign language gestures. The model architecture, constructed using the Sequential API, comprises layers for rescaling, convolution, max-pooling, flattening, and dense connections, culminating in a softmax output layer. The compilation incorporates categorical cross-entropy loss and metrics such as accuracy, precision, and recall.
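
The softmax output layer and the categorical cross-entropy loss mentioned above can be illustrated with a small, dependency-free sketch. This is only an illustration of the two formulas; the four-class scores are hypothetical (the model in this study has 34 output classes), and the function names are not taken from the authors' script.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(probs, true_index, eps=1e-12):
    """Loss for one sample whose one-hot label is set at true_index."""
    return -math.log(max(probs[true_index], eps))


# Hypothetical scores for a 4-class toy problem (assumption, not paper data).
logits = [2.0, 0.5, -1.0, 0.1]
probs = softmax(logits)

# The predicted class is the index with the highest probability.
predicted = max(range(len(probs)), key=probs.__getitem__)
loss = categorical_cross_entropy(probs, true_index=0)
```

The loss shrinks toward zero as the probability assigned to the true class approaches 1, which is the quantity the optimizer drives down during training.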

Feature extraction is achieved through convolutional layers, which capture relevant patterns from the input images. The dataset is pre-processed by resizing images into a standardized 64 × 64-pixel format, thus ensuring uniformity. During training, the model’s performance is assessed on the validation dataset, and key metrics are recorded, including training time and throughput.

Post-training, the model undergoes evaluation on a separate test dataset, and various performance metrics, including precision, recall, F1 score, and accuracy, are calculated. Visualizations such as the confusion matrices, ROC curves, and accuracy/loss curves provide insights into the model’s performance dynamics throughout training. The approach demonstrates a systematic integration of data preparation, feature extraction, model creation, training, evaluation, and result visualization.
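
As a minimal sketch of how the metrics listed above relate to a confusion matrix, the following code computes accuracy together with macro-averaged precision, recall, and F1 score. The averaging scheme is an assumption (the text does not state whether macro or weighted averaging was used), and the three-class labels are toy values rather than results from this study.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def macro_metrics(cm):
    """Accuracy plus macro-averaged precision, recall, and F1 (assumed averaging)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy labels for three classes (assumption, not paper data).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
metrics = macro_metrics(cm)
```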

In the proposed model, preprocessing rescales the images to normalize pixel values; convolutional layers then extract features, a dropout layer mitigates overfitting, and dense layers perform classification. The model is compiled with categorical cross-entropy loss, the Adam optimizer, and key metrics. Data loading is performed with ‘tf.keras.utils.image_dataset_from_directory’, which also splits the dataset into training and validation subsets. These steps collectively contribute to effective training and evaluation.

The QuillBot AI tool was also used during the preparation of the proposed manuscript. The contribution of this tool was solely in academic paraphrasing and rearranging certain paragraphs to improve clarity. QuillBot was not used to generate new content or ideas; it was employed only as a stylistic tool to refine the phrasing and sentence structure of the existing text.

2.1. KuSL2023 Dataset

Data collection is a vital aspect of developing a machine-learning approach. Without a dataset, it is impossible to perform machine learning. The KuSL2023 database was created by merging and improving the ASL alphabet dataset and the ArSL2018 dataset, both of which are provided to the public for free. The ArSL2018 dataset has 54,049 images and was assembled in Al Khobar, Saudi Arabia, by forty contributors of various ages. These illustrations represent the 32 letters of the Arabic alphabet. To eliminate noise, center the picture, and resolve other imperfections, split processing methods may be employed to clean up pictures of varying sizes and variances [28]. The raw pixel values from the photos and their matching class labels are both included in the ASL alphabet dataset. In total, there are 78,000 RGB photos in the collection. Downsizing all photos from 647 × 511 × 3 to 227 × 227 × 3 pixels is advised [20].

The KuSL2023 dataset, consisting of 71,400 collected pictures, uses the ASL alphabet and ArSL2018 datasets to derive 34 sign classes. Since the Kurdish-Arabic script alphabet is based on the Arabic alphabet, the 23 most commonly used letters were compiled using the ArSL2018 dataset. As Table 1 shows, the remaining 11 signs of the Kurdish alphabet were derived from the ASL alphabet collection.

Table 1.

The collection resource known as KuSL2023.

Researchers in the area of machine learning can utilize the KuSL2023 dataset to better understand how to design assistive technologies for people with impairments. The KuSL2023 is a comprehensive database of annotated pictures representing Kurdish sign language and can be searched and accessed in a variety of ways.

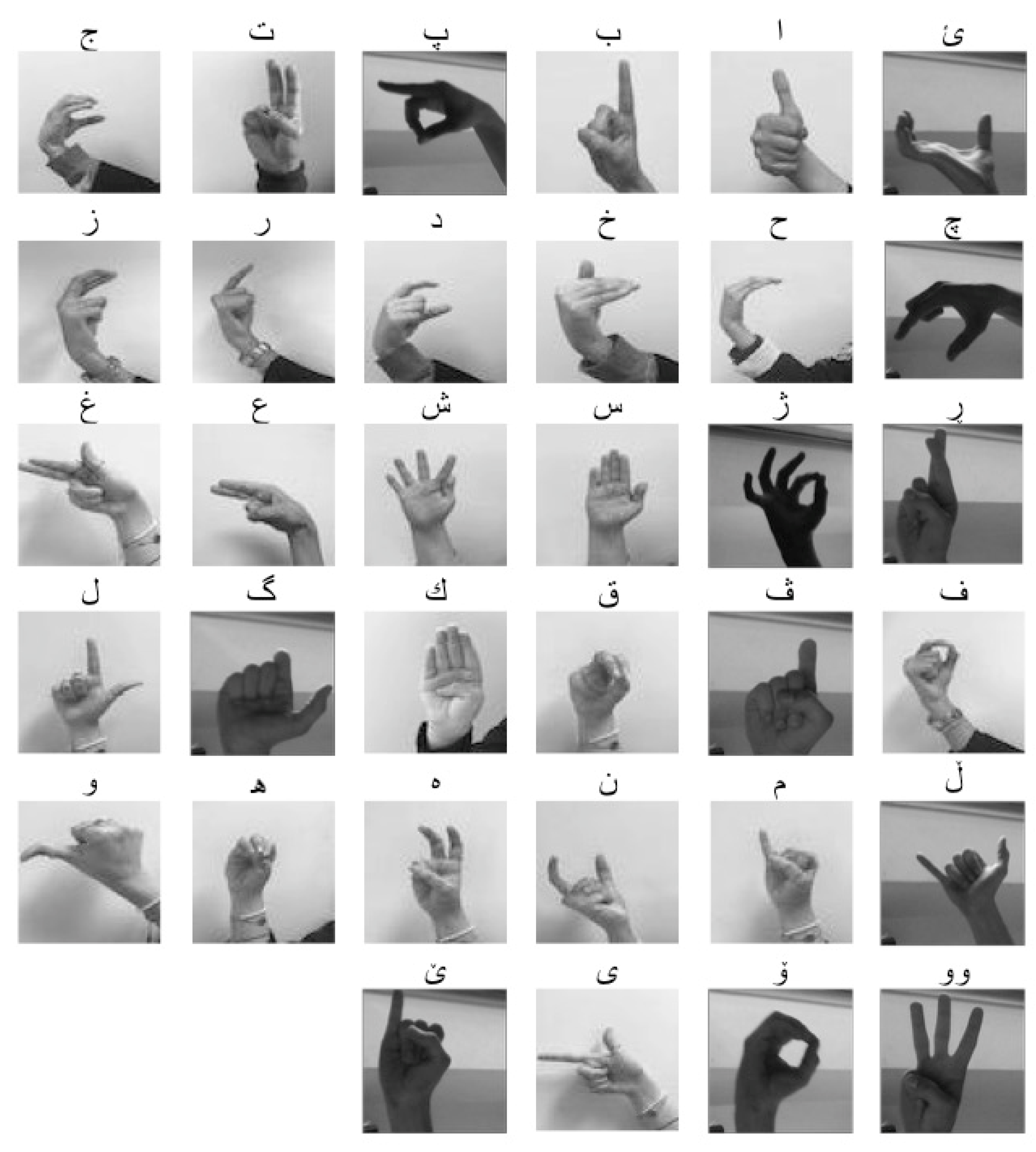

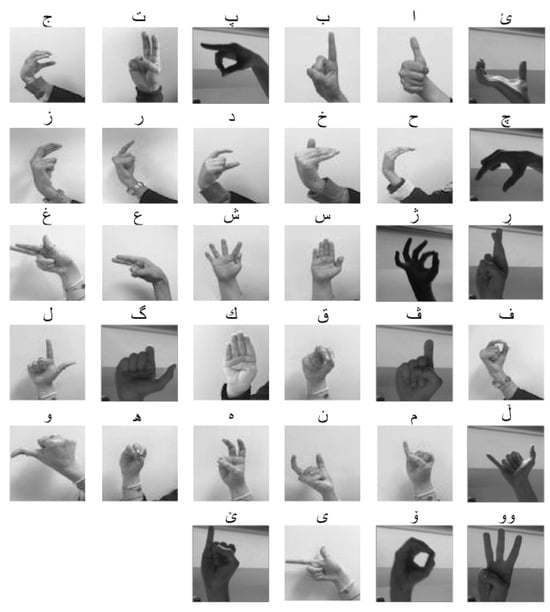

Hand gestures in general are not the same as Sign Language (SL). Like any other language, SL must be taught to new speakers, whereas gestures are unique to each person and may be employed at will while communicating. As a result, SL education is a challenge for the general public. Recent advances in computer vision and machine learning, however, have yielded viable alternatives. Using hand shape recognition, SL can be transformed into text and voice [16]. In this way, as indicated in Figure 2, every letter in the proposed dataset is symbolized by a specific hand gesture.

Figure 2.

Graphic representation of the KuSL alphabet’s hand form.

2.2. CNN

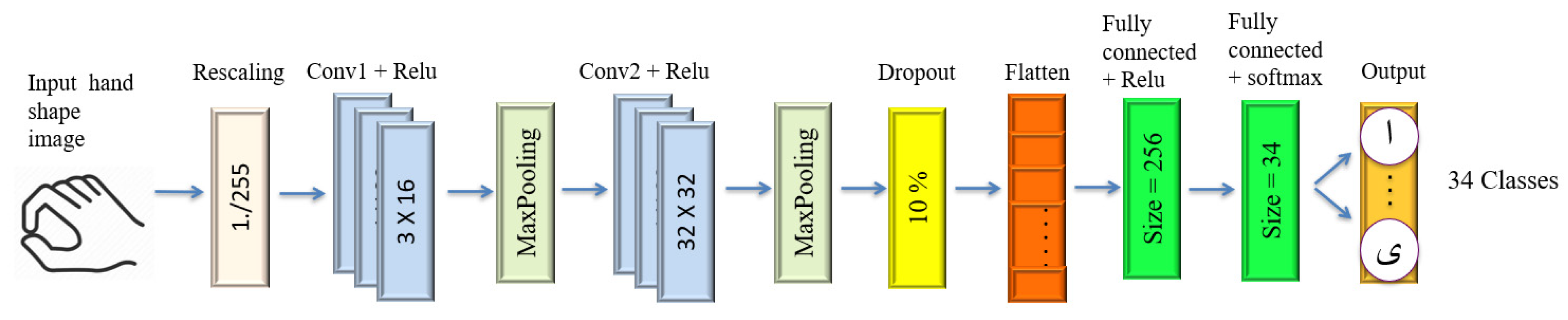

In order to develop the classifier, a CNN was chosen as the main model due to its superior ability to learn and handle the complexity of the KuSL alphabet recognition problem. Compared to more conventional machine-learning methods, deep learning excels because a CNN learns to extract features by itself and does not need hand-crafted feature extraction. Additionally, in conventional system recognition, a classifier structure is created after image-processing via CNN. High-performance categorization with few processing stages is now possible [19]. The majority of research has indicated that CNNs perform well in practical settings despite their simple architecture and low number of neurons and hidden layers.

There are a total of ten layers in the proposed CNN architecture: a rescaling pre-processing layer, two convolution layers, two MaxPooling layers, one dropout layer with a 10% dropout rate, a Flatten layer, two fully connected layers, and an output layer. Figure 3 shows the overall structure of the suggested CNN model.

Figure 3.

The suggested architecture for CNN.

The parameters of the model were fine-tuned to achieve a high level of SLR efficacy. The rescaling layer adjusts the picture inputs by mapping their pixel values into a new range, thus making the model more effective and saving time throughout the training process [45]. The suggested CNN model’s detailed parameters are presented in Table 2.

Table 2.

Construction of the proposed CNN system’s layers.
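
The ten-layer stack described in this section can be sketched with the Keras Sequential API as follows. This is a sketch under stated assumptions, not the authors' script: the filter counts (32, 64), kernel size (3), dense widths (128, 64), and three-channel input are hypothetical placeholders, since the exact values belong to Table 2. The layer order, the 64 × 64 input size, the 10% dropout rate, the 34-class softmax output, the Adam optimizer, and the categorical cross-entropy loss follow the text.

```python
import tensorflow as tf
from tensorflow.keras import layers

NUM_CLASSES = 34       # Kurdish alphabet signs in KuSL2023
IMAGE_SIZE = (64, 64)  # input resolution stated in the text


def build_model():
    """Build and compile a ten-layer CNN; layer sizes are assumed values."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=IMAGE_SIZE + (3,)),        # assumed RGB input
        layers.Rescaling(1.0 / 255),                    # 1: map pixels to [0, 1]
        layers.Conv2D(32, 3, activation="relu"),        # 2: assumed 32 filters
        layers.MaxPooling2D(),                          # 3
        layers.Conv2D(64, 3, activation="relu"),        # 4: assumed 64 filters
        layers.MaxPooling2D(),                          # 5
        layers.Dropout(0.1),                            # 6: 10% dropout rate
        layers.Flatten(),                               # 7
        layers.Dense(128, activation="relu"),           # 8: assumed width
        layers.Dense(64, activation="relu"),            # 9: assumed width
        layers.Dense(NUM_CLASSES, activation="softmax"),  # 10: output layer
    ])
    model.compile(
        optimizer="adam",  # default learning rate
        loss="categorical_crossentropy",
        metrics=[
            "accuracy",
            tf.keras.metrics.Precision(),
            tf.keras.metrics.Recall(),
        ],
    )
    return model


model = build_model()
```

With training and validation datasets loaded as one-hot labeled batches of 64 images, training for the reported 18 epochs would be a call such as `model.fit(train_ds, validation_data=val_ds, epochs=18)`, where `train_ds` and `val_ds` are hypothetical names.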

3. Results and Discussion

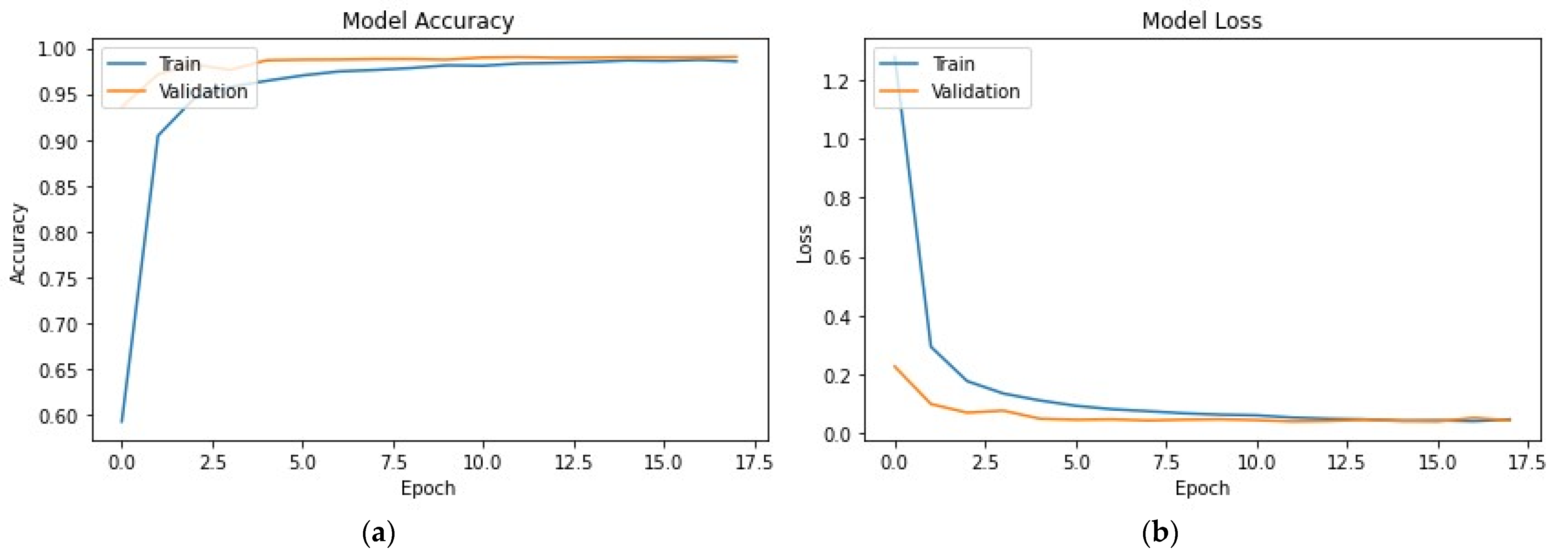

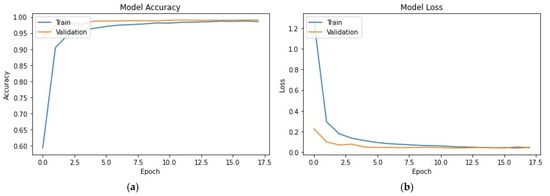

The KuSL2023 collection was utilized in this study to train a CNN model to recognize and categorize the Kurdish-Arabic script alphabet. As shown in Figure 4a,b, the evaluation was carried out by tracking the model’s accuracy and loss during training and validation. While a thorough description of the test results is provided in Section 3.2, certain test results are described in Section 3.1.

Figure 4.

(a) Accuracy over training epochs depicted as line plots. (b) Loss over training epochs depicted as line plots.

The model was trained using the Adam optimizer, with a default learning rate. The batch size was set to 64, and the training process spanned 18 epochs. These choices were made based on empirical observations and iterative experimentation to achieve optimal performance in Sign Language Recognition (SLR).

The validation and training losses both proceeded in the same direction for 18 epochs under the suggested approach, and the validation loss decreased during training. The nearly identical shapes of all the curves after seven epochs indicate that the approach converged. According to the data, the proposed method achieves a good fit, meaning that the results neither overfit nor underfit. Table 3 evaluates the system’s real-time accuracy in the selection of random characters, while Table 4 compares the accuracy of the proposed system to that of a number of machine-learning techniques used to recognize SL from various datasets.

Table 3.

The real-time precision of the characters.

Table 4.

Evaluating the suggested model in comparison to existing SL classifiers.

3.1. Test Result

A total of 71,400 images from the KuSL2023 dataset were processed using the suggested technique. The first step involved adapting and designing the dataset based on two existing public datasets (as described in Section 2.1). The workstation used to build the model had the following specifications: a Core i9 3.3 GHz CPU with 20 cores, 16 GB of RAM, and a 64-bit Windows 10 Pro operating system.

This paper introduces a highly reliable machine-learning model for the recognition of sign language, with outstanding performance across a range of assessment measures. The model demonstrated a remarkable accuracy of 99.05%, with a narrow confidence interval of (98.81%, 99.26%) at a 95% confidence level, providing strong evidence for the dependability of our results. The model’s efficacy is highlighted by the precision, recall, and F1-score measures, which have values of 99.06%, 99.05%, and 99.05%, respectively. Notably, the training procedure was finished in 1 h, 40 min, and 13.49 s, resulting in a throughput of 10.70 samples per second, indicating the efficiency of our proposed technique. Taken together, these findings emphasize the model’s strength, applicability to many situations, and effectiveness, establishing this model as a potential solution in the field of sign language recognition.
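
To show how a 95% confidence interval of this width can arise, the sketch below computes a Wilson score interval for a proportion. Two things are assumptions here: the interval method (the text does not name the one used) and the evaluation set size of 7,140 images, taken as 10% of the 71,400-image dataset. Under those assumptions the result lands close to the reported range, though not necessarily on the same digits.

```python
import math


def wilson_interval(p_hat, n, z=1.96):
    """Wilson score interval for a proportion p_hat observed on n samples."""
    denom = 1.0 + z * z / n
    center = (p_hat + z * z / (2.0 * n)) / denom
    half_width = z * math.sqrt(
        p_hat * (1.0 - p_hat) / n + z * z / (4.0 * n * n)
    ) / denom
    return center - half_width, center + half_width


# Reported accuracy, with an assumed test-set size of 7,140 images.
low, high = wilson_interval(0.9905, 7140)
```

The interval is narrow mainly because the assumed test set is large; with a few hundred images the same accuracy would carry a much wider interval.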

In addition, the suggested model was used on a dataset including various layouts and characteristics. With such fine-tuned parameters, the suggested model was able to reliably recognize signs. The presented CNN architecture is the first to detect one-handed Kurdish alphabet static hand-shape signals, and the real-time predictions resulted in an acceptable outcome, as shown in Table 3.

Ten random Kurdish letters were captured under a variety of settings, and their recognition accuracy is shown in Table 3. Real-time predictions were made using the trained classification model saved in HDF5 (Hierarchical Data Format) form (file.h5), whose architecture is described in Section 2.2. The model was trained for 18 epochs using 90% of the dataset and then tested with the remaining 10%. A validation loss lower than the training loss can indicate that the data were not adequately partitioned, so the two losses were compared as a check. The obtained results show that the distributions of the training and validation sets were quite similar.
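
The sizes implied by the 90%/10% split can be worked out with a few lines of arithmetic. The dataset size, class count, and batch size come from the text; the per-class figure assumes the classes are perfectly balanced, which is not stated in the text.

```python
import math

TOTAL_IMAGES = 71_400  # KuSL2023 size
NUM_CLASSES = 34       # Kurdish alphabet signs
BATCH_SIZE = 64        # batch size used for training

# Integer arithmetic avoids floating-point rounding in the 90/10 split.
train_images = TOTAL_IMAGES * 9 // 10
test_images = TOTAL_IMAGES - train_images

# Number of batches needed to see every training image once per epoch.
steps_per_epoch = math.ceil(train_images / BATCH_SIZE)

# Only valid if every class has the same number of images (assumption).
images_per_class = TOTAL_IMAGES // NUM_CLASSES
```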

This section addresses many aspects that may impact system performance, including the dimension of the Region Of Interest (ROI), the distance between the camera and the subject, the orientation of the palm, obstacles posed by the background, and the prevailing lighting conditions. The practical usability of the suggested approach is highlighted by examining its capacity to operate in real-time situations and its performance on a wide-ranging public dataset.

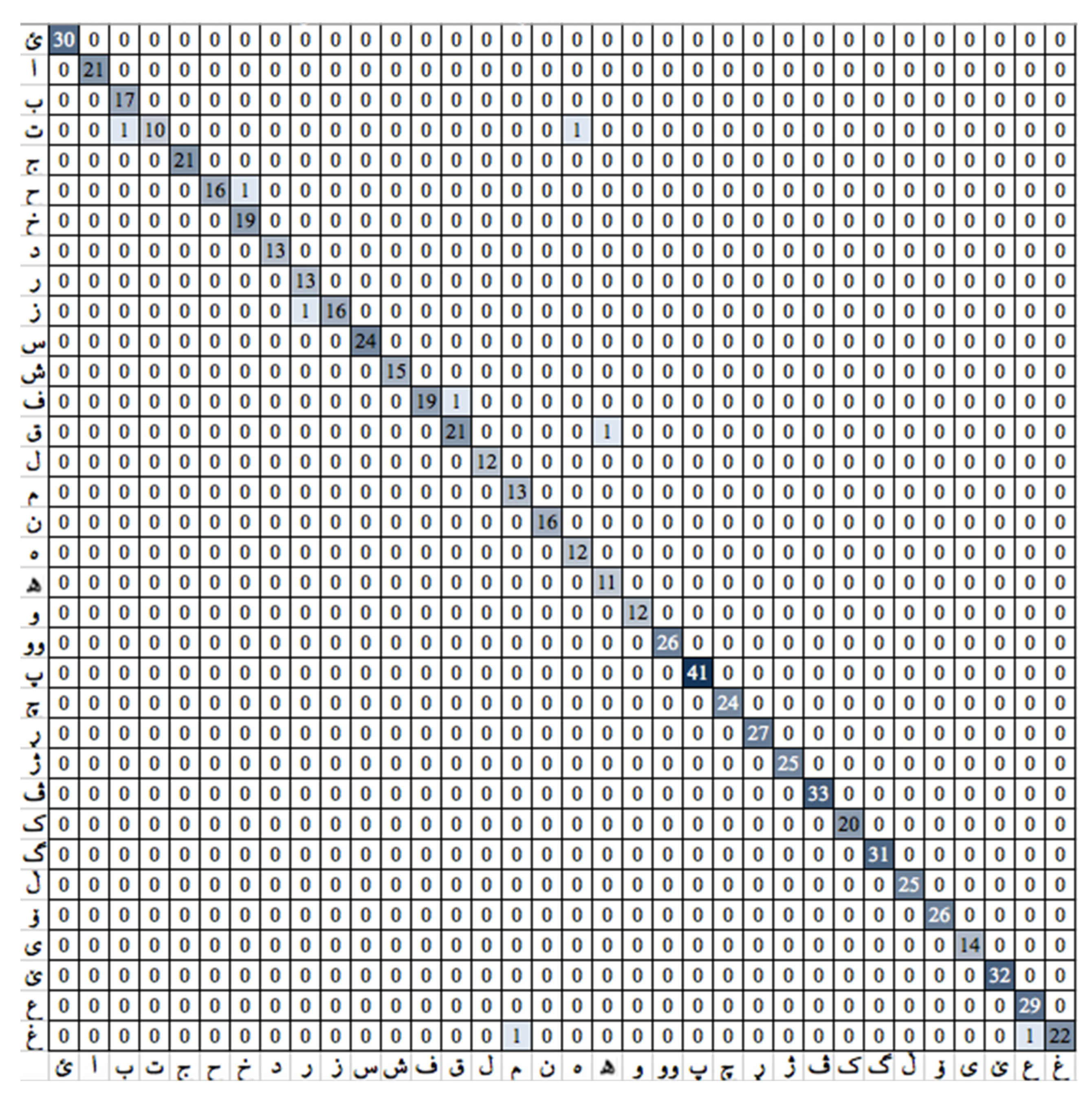

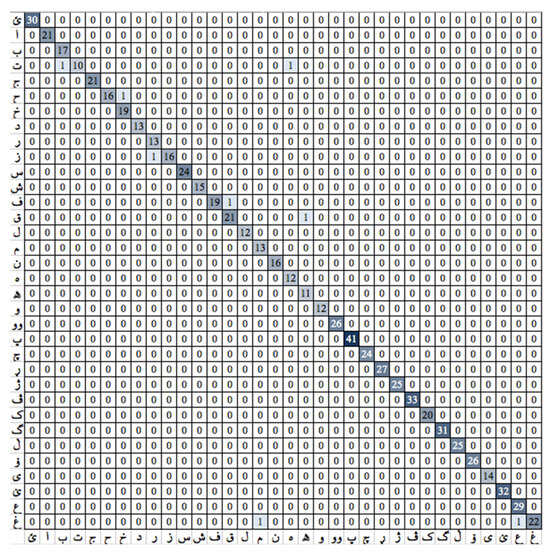

Figure 5 shows confusion matrices for 34 classes to help evaluate the effectiveness of the suggested CNN approach.

Figure 5.

Confusion matrices for the suggested CNN model.

Figure 5 illustrates a notable improvement in precision. The suggested model achieved near-perfect real-time recognition of Kurdish signals from the dataset.

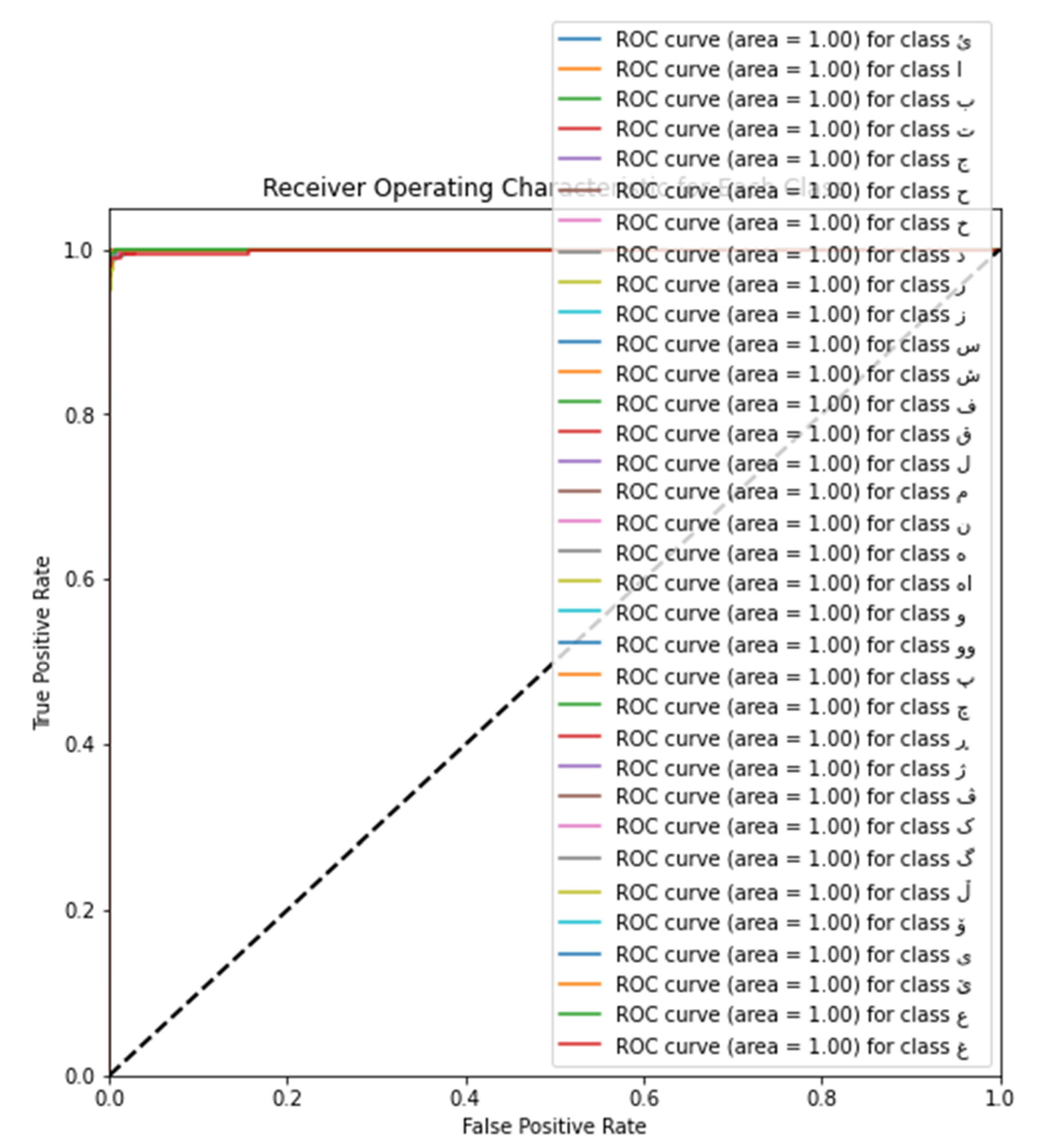

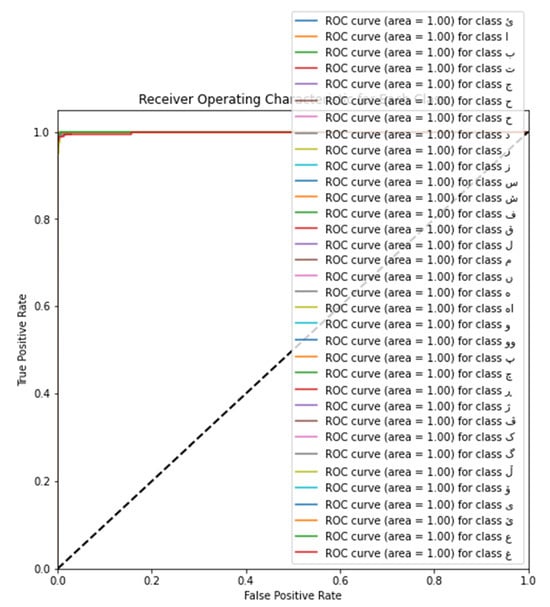

The multiclass classification model for Kurdish sign language demonstrated exceptional performance, surpassing expectations with a perfect Area Under the Curve (AUC) of 1 and an overall accuracy of 1. This impressive achievement, demonstrating impeccable differentiation among all categories, emphasizes the model’s unique capacity to apply and overcome the challenges of the proposed approach. Although we recognize the possibility of overfitting, we are certain that this remarkable accomplishment represents a substantial improvement in multiclass classification, which clears the way for future progress in the SLR field. Additionally, Figure 6 displays the Receiver Operating Characteristic (ROC) curve for 34 classes, which was used to assess the efficacy of the proposed Convolutional Neural Network (CNN) method.

Figure 6.

Receiver Operating Characteristic (ROC) curve for the proposed Convolutional Neural Network (CNN) model.
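
For a multiclass model, ROC curves are commonly drawn one class at a time, treating each class as positive and all others as negative. The sketch below computes the corresponding one-vs-rest AUC values from predicted probabilities. The one-vs-rest scheme is an assumption about how the 34 curves were obtained, and the probabilities are toy values for three classes, not outputs of the proposed model.

```python
def binary_auc(scores, labels):
    """AUC as the chance that a random positive outranks a random negative.

    Ties count as half a win. Quadratic in the sample count, so this is
    meant for illustration rather than for large test sets.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def one_vs_rest_auc(prob_rows, y_true, num_classes):
    """Return one AUC per class, treating that class as the positive one."""
    aucs = []
    for k in range(num_classes):
        scores = [row[k] for row in prob_rows]
        labels = [1 if t == k else 0 for t in y_true]
        aucs.append(binary_auc(scores, labels))
    return aucs


# Toy softmax outputs for four samples and three classes (assumption).
prob_rows = [
    [0.80, 0.10, 0.10],
    [0.20, 0.70, 0.10],
    [0.10, 0.20, 0.70],
    [0.10, 0.15, 0.75],
]
y_true = [0, 1, 2, 1]
aucs = one_vs_rest_auc(prob_rows, y_true, num_classes=3)
```

An AUC of 1 for a class means that every positive sample of that class received a higher score than every negative sample, which is a statement about ranking and does not by itself imply 100% top-1 accuracy.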

3.2. Discussion

The accuracy of the findings was the focus of several recent studies that analyzed sign language recognition. The purpose of this research is to evaluate the suggested model’s performance on 34 sets of Kurdish script letter signs at an acceptable precision level of 99.91%. Table 4 shows that the suggested approach outperforms the methods in other recent publications in the field in terms of recognition accuracy.

As shown in Table 4, the modified CNN model outperforms existing categorization machine-learning approaches when it comes to identifying Kurdish sign language. The SIFT, SURF, and GRIDDING approaches [37] had the lowest recognition accuracies of all models tested (42.00%, 42.00%, and 67.00%, respectively). Moreover, the ANN model [38] yielded 10 classes and 98.00% accuracy, while the ResNet (CNN) model [30] yielded 5 classes and 78.49% accuracy, placing it in last place in terms of classes. On the other hand, the RNN model [39] successfully classified Kurdish body sign language into 84 classes. This model was trained using data collected via Kinect with a 97.40% accuracy rate.

Table 4 also covers three groups of American Sign Language (ASL) classifiers. First, the RBM-CNN model [22] uses the ASL Fingerspelling and ASL Fingerspelling A datasets (excluding J and Z) to distinguish 24 classes. The CNN and SVM models [23] also performed well when tasked with classifying the Massey dataset. Both models perform almost as well in terms of accuracy. The second group, the DBN [21] and SAE-PCANet [24] models, accurately identifies twenty-six letters across two databases: one collected via Kinect with 99.00% accuracy for the identified user and 77.00% for an unidentified user, and another trained on the ASL dataset with an accuracy of 99.00%. Third, some papers used CNN models [19,22] that classified 36 language signs with 98.50% and 99.30% accuracy, respectively, using the Massey dataset to categorize letters and numbers. Compared to earlier CNN models [19,20,22,23,30], as well as MLP-NN [31,33], which are based on the PSL, the closest comparable alphabet with the Kurdish alphabet, the suggested CNN model achieved 99.05% accuracy, representing the top result.

In addition, differences in system design and in the number of convolution layers make it difficult to compare the efficacy of the various methods. PCANet [2] is the closest model to the suggested approach in terms of classification precision, with a rate of 99.5%. This model was trained on Kinect-collected data for ArSL with 28 classes. Notably, the proposed model obtained greater efficiency in sign language recognition than all of the other approaches shown in Table 4.

4. Conclusions and Future Work

The suggested structure was used to develop a CNN architecture for recognizing Kurdish sign letters, a fundamental example of how the deaf population conceptualizes language. The most difficult part of this research was making the decision to use Kurdish sign language to aid deaf people in social situations. The model accurately separated 34 distinct classes of Kurdish characters written in Arabic script.

Furthermore, there are a variety of factors that might impact system performance when dealing with real-time SL detection. Some of these factors include (i) determining the Region Of Interest (ROI) dimension for hand shape recognition, (ii) the distance between the hand shape and the digital camera, (iii) palm orientation, (iv) the inability to correctly detect the background of the image, and (v) the lighting conditions. This study offers a fresh perspective on the KuSL model of categorization. The system has to identify the hand area despite the image’s complicated backdrop, which may include a wide range of colors and lighting conditions.
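Factor (i), the ROI dimension, can be illustrated with a small helper that keeps a square region of interest inside the camera frame. This is a minimal sketch under stated assumptions, not the procedure used in this study: the function name `clamp_roi`, the 640 × 480 frame, and the 200-pixel ROI are hypothetical values chosen only for illustration.

```python
def clamp_roi(frame_width, frame_height, center_x, center_y, roi_size):
    """Return (left, top, right, bottom) of a square ROI kept inside the frame.

    All names and sizes are illustrative assumptions, not values from the paper.
    """
    if roi_size <= 0:
        raise ValueError("roi_size must be positive")
    # The ROI cannot be larger than the frame itself.
    size = min(roi_size, frame_width, frame_height)
    half = size // 2
    # Shift the box so that it stays within the frame borders.
    left = min(max(center_x - half, 0), frame_width - size)
    top = min(max(center_y - half, 0), frame_height - size)
    return left, top, left + size, top + size


# Hypothetical 640 x 480 frame with the hand roughly at the frame centre.
print(clamp_roi(640, 480, 320, 240, 200))
```

A fixed-size box such as this one is sensitive to factor (ii): when the hand moves closer to or farther from the camera, it may no longer fit the box, so the ROI size may have to be tuned for the expected working distance.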

The current method is a significant advancement over prior research in the field of KuSL recognition due to its use of real-time classification, excellent classification efficiency, and evaluation on a real public dataset.

Our research delivers a reliable and efficient CNN model for Kurdish sign language recognition, achieving accuracy exceeding 99% with robust performance across diverse datasets and real-time settings. The model’s strengths lie in its adaptability to various conditions, its real-time detection of one-handed Kurdish alphabet signs, and its clear differentiation among all categories. This approach supports accessible communication between hearing and deaf communities in the Kurdish context. It also opens the way for further advances in multi-class sign language recognition through vocabulary expansion and technology integration, and through addressing potential limitations such as overfitting. Overall, this research is a step toward reducing communication barriers and promoting inclusivity through machine learning.

We recognize the difficulties associated with tasks such as optimizing a model for real-time execution and managing intricate contexts. Further studies will prioritize the resolution of these constraints and investigate the incorporation of other technologies, thus broadening the platform’s vocabulary. We firmly believe that this online platform is a substantial advancement in enabling communication and promoting a more inclusive society for the Kurdish deaf population.

Author Contributions

K.M.H.R., supervision, conceptualization, data curation, and original draft preparation; A.A.M., data curation, validation, and writing—reviewing; A.O.A., methodology, visualization, investigation, and writing—reviewing and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used, presented, and visualized in Figure 2 and Table 1 are available for download at https://data.mendeley.com/v1/datasets/publish-confirmation/6gfrvzfh69/1 (accessed on 15 January 2024).

Acknowledgments

We acknowledge the utilization of the QuillBot AI tool in the development of this work. However, its impact was restricted to enhancing the style by employing academic paraphrasing and reorganizing some paragraphs to increase clarity. The primary substance and innovative concepts articulated in this piece, as demonstrated by the comprehensive bibliography included, are completely our own.

Conflicts of Interest

The authors declare that they have no competing interests that could influence the research design, data collection, analysis, interpretation, or presentation of the findings. No funding sources or financial relationships influenced the work presented in this paper. The authors confirm that this research was conducted in an objective and unbiased manner.

References

- El-Bendary, N.; Zawbaa, H.M.; Daoud, M.S.; Hassanien, A.E.; Nakamatsu, K. ArSLAT: Arabic Sign Language Alphabets Translator. In Proceedings of the 2010 International Conference on Computer Information Systems and Industrial Management Applications (CISIM), Krakow, Poland, 8–10 October 2010; pp. 590–595.

- Maiorana-Basas, M.; Pagliaro, C.M. Technology use among adults who are deaf and hard of hearing: A national survey. J. Deaf Stud. Deaf Educ. 2014, 19, 400–410.

- Rawf, K.M.H.; Mohammed, A.A.; Abdulrahman, A.O.; Abdalla, P.A.; Ghafor, K.J. A Comparative Study Using 2D CNN and Transfer Learning to Detect and Classify Arabic-Script-Based Sign Language. Acta Inform. Malays. 2023, 7, 8–14.

- Rawf, K.M.H.; Abdulrahman, A.O. Microcontroller-based Kurdish understandable and readable digital smart clock. Sci. J. Univ. Zakho 2022, 10, 1–4.

- Deafness and Hearing Loss. 27 February 2023. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed on 19 January 2024).

- CORDIS. SignON—Sign Language Translation Mobile Application and Open Communications Framework. 20 November 2020. Available online: https://cordis.europa.eu/project/id/101017255 (accessed on 15 December 2023).

- SignDict. What Is SignDict? 1 March 2017. Available online: https://signdict.org/about?locale=en (accessed on 15 December 2023).

- Cormier, K.; Schembri, A.C.; Tyrone, M.E. One hand or two?: Nativisation of fingerspelling in ASL and BANZSL. Sign Lang. Linguist. 2008, 11, 3–44.

- Tao, W.; Leu, M.C.; Yin, Z. American Sign Language alphabet recognition using Convolutional Neural Networks with multiview augmentation and inference fusion. Eng. Appl. Artif. Intell. 2018, 76, 202–213.

- Ye, Y.; Tian, Y.; Huenerfauth, M.; Liu, J. Recognizing American sign language gestures from within continuous videos. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2064–2073.

- Rivera-Acosta, M.; Ruiz-Varela, J.M.; Ortega-Cisneros, S.; Rivera, J.; Parra-Michel, R.; Mejia-Alvarez, P. Spelling Correction Real-Time American Sign Language Alphabet Translation System Based on YOLO Network and LSTM. Electronics 2021, 10, 1035.

- Ghafoor, K.J.; Rawf, K.M.H.; Abdulrahman, A.O.; Taher, S.H. Kurdish Dialect Recognition using 1D CNN. ARO Sci. J. Koya Univ. 2021, 9, 10–14.

- Lin, C.-J.; Jeng, S.-Y.; Chen, M.-K. Using 2D CNN with Taguchi parametric optimization for lung cancer recognition from CT Images. Appl. Sci. 2020, 10, 2591.

- Mo, T.; Sun, P. Research on key issues of gesture recognition for artificial intelligence. Soft Comput. 2019, 24, 5795–5803.

- Ahmed, M.A.; Zaidan, B.B.; Zaidan, A.A.; Salih, M.M.; bin Lakulu, M.M. A Review on Systems-Based Sensory Gloves for Sign Language Recognition State of the Art between 2007 and 2017. Sensors 2018, 18, 2208.

- Amin, M.S.; Rizvi, S.T.H.; Hossain, M.M. A Comparative Review on Applications of Different Sensors for Sign Language Recognition. J. Imaging 2022, 8, 98.

- Aly, W.; Aly, S.; Almotairi, S. User-Independent American Sign Language Alphabet Recognition Based on Depth Image and PCANet Features. IEEE Access 2019, 7, 123138–123150.

- Tang, A.; Lu, K.; Wang, Y.; Huang, J.; Li, H. A real-time hand posture recognition system using Deep Neural Networks. ACM Trans. Intell. Syst. Technol. 2015, 6, 1–23.

- Taskiran, M.; Killioglu, M.; Kahraman, N. A real-time system for recognition of American sign language by using deep learning. In Proceedings of the 2018 41st IEEE International Conference on Telecommunications and Signal Processing (TSP), Athens, Greece, 4–6 July 2018; pp. 1–5.

- MCayamcela, E.M.; Lim, W. Fine-tuning a pre-trained Convolutional Neural Network Model to translate American Sign Language in Real-time. In Proceedings of the 2019 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 18–21 February 2019; pp. 100–104.

- Rioux-Maldague, L.; Giguère, P. Sign Language Fingerspelling Classification from Depth and Color Images Using a Deep Belief Network. In Proceedings of the 2014 Canadian Conference on Computer and Robot Vision, Montreal, QC, Canada, 6–9 May 2014; pp. 92–97.

- Rastgoo, R.; Kiani, K.; Escalera, S. Multi-modal Deep hand sign language recognition in still images using restricted Boltzmann machine. Entropy 2018, 20, 809.

- Nguyen, H.B.D.; Do, H.N. Deep Learning for American Sign Language Fingerspelling Recognition System. In Proceedings of the 2019 26th International Conference on Telecommunications (ICT), Hanoi, Vietnam, 8–10 April 2019; pp. 314–318.

- Li, S.-Z.; Yu, B.; Wu, W.; Su, S.-Z.; Ji, R.-R. Feature learning based on SAE–PCA Network for human gesture recognition in RGBD images. Neurocomputing 2015, 151, 565–573.

- Mazinan, A.H.; Hassanian, J. A Hybrid Object Tracking for Hand Gesture (HOTHG) Approach based on MS-MD and its Application. J. Inf. Syst. Telecommun. (JIST) 2015, 3, 1–10.

- Liwicki, S.; Everingham, M. Automatic recognition of fingerspelled words in British Sign Language. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Miami, FL, USA, 20–25 June 2009; pp. 50–57.

- Aly, S.; Osman, B.; Aly, W.; Saber, M. Arabic sign language fingerspelling recognition from depth and intensity images. In Proceedings of the 2016 12th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 28–29 December 2016; pp. 99–104.

- Latif, G.; Mohammad, N.; Alghazo, J.; AlKhalaf, R.; AlKhalaf, R. ARASL: Arabic alphabets sign language dataset. Data Brief 2019, 23, 103777.

- Karacı, A.; Akyol, K.; Turut, M.U. Real-time Turkish sign language recognition using Cascade Voting Approach with handcrafted features. Appl. Comput. Syst. 2021, 26, 12–21.

- Aktaş, M.; Gökberk, B.; Akarun, L. Recognizing Non-Manual Signs in Turkish Sign Language. In Proceedings of the 2019 Ninth International Conference on Image Processing Theory, Tools and Applications (IPTA), Istanbul, Turkey, 6–9 November 2019; pp. 1–6.

- Karami, A.; Zanj, B.; Sarkaleh, A.K. Persian sign language (PSL) recognition using wavelet transform and neural networks. Expert Syst. Appl. 2011, 38, 2661–2667.

- Khomami, S.A.; Shamekhi, S. Persian sign language recognition using IMU and surface EMG sensors. Measurement 2021, 168, 108471.

- Jalilian, B.; Chalechale, A. Persian Sign Language Recognition Using Radial Distance and Fourier Transform. Int. J. Image Graph. Signal Process. 2014, 6, 40–46.

- Ebrahimi, M.; Komeleh, H.E. Rough Sets Theory with Deep Learning for Tracking in Natural Interaction with Deaf. J. Inf. Syst. Telecommun. (JIST) 2022, 10, 39.

- Mariappan, H.M.; Gomathi, V. Real-Time Recognition of Indian Sign Language. In Proceedings of the 2019 International Conference on Computational Intelligence in Data Science (ICCIDS), Chennai, India, 21–23 February 2019; pp. 1–6.

- Rajam, P.S.; Balakrishnan, G. Real time Indian Sign Language Recognition System to aid deaf-dumb people. In Proceedings of the 2011 IEEE 13th International Conference on Communication Technology, Jinan, China, 25–28 September 2011; pp. 737–742.

- Hashim, A.D.; Alizadeh, F. Kurdish Sign Language Recognition System. UKH J. Sci. Eng. 2018, 2, 1–6.

- Mahmood, M.R.; Abdulazeez, A.M.; Orman, Z. Dynamic Hand Gesture Recognition System for Kurdish Sign Language Using Two Lines of Features. In Proceedings of the 2018 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 9–11 October 2018; pp. 42–47.

- Mirza, S.F.; Al-Talabani, A.K. Efficient kinect sensor-based Kurdish Sign Language Recognition Using Echo System Network. ARO Sci. J. Koya Univ. 2021, 9, 1–9.

- Urrea, C.; Kern, J.; Navarrete, R. Bioinspired Photoreceptors with Neural Network for Recognition and Classification of Sign Language Gesture. Sensors 2023, 23, 9646.

- Kumar, P.; Roy, P.P.; Dogra, D.P. Independent Bayesian classifier combination based sign language recognition using facial expression. Inf. Sci. 2018, 428, 30–48.

- Achenbach, P.; Laux, S.; Purdack, D.; Müller, P.N.; Göbel, S. Give Me a Sign: Using Data Gloves for Static Hand-Shape Recognition. Sensors 2023, 23, 9847.

- Gu, Y.; Oku, H.; Todoh, M. American Sign Language Recognition and Translation Using Perception Neuron Wearable Inertial Motion Capture System. Sensors 2024, 24, 453.

- SignON Project. SignON Project—Sign Language Translation Mobile Application. 5 July 2021. Available online: https://signon-project.eu/?fbclid=IwAR1xrlxMDPrAdI0gVVJmk1nUtC3f5Otu-pe3NOQEpUbXYV-BWOL7ca4W-Ts (accessed on 15 January 2024).

- Chollet, F.; Omernick, M. Keras Documentation: Working with Preprocessing Layers. Keras. 23 April 2021. Available online: https://keras.io/guides/preprocessing_layers/?fbclid=IwAR3KwRwy6PpRs115ZsnD7GphNZqkhq1svwFSGnUfffDxwdloZ_Na7uiICnk (accessed on 21 February 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).