Abstract

The widespread use of technology has made communication technology an indispensable part of daily life. However, the present cloud infrastructure is insufficient to meet the industry’s growing demands, and multi-access edge computing (MEC) has emerged as a solution by providing real-time computation closer to the data source. Effective management of MEC is essential for providing high-quality services, and proactive self-healing is a promising approach that anticipates and executes remedial operations before faults occur. This paper aims to identify, evaluate, and synthesize studies related to proactive self-healing approaches in MEC environments. The authors conducted a systematic literature review (SLR) using four well-known digital libraries (IEEE Xplore, Web of Science, ProQuest, and Scopus) and one academic search engine (Google Scholar). The review retrieved 920 papers, and 116 primary studies were selected for in-depth analysis. The SLR results are categorized into edge resource management methods and self-healing methods and approaches in MEC. The paper highlights the challenges and open issues in MEC, such as offloading task decisions, resource allocation, and security issues, such as infrastructure and cyber attacks. Finally, the paper suggests future work based on the SLR findings.

1. Introduction

Mobile edge computing (MEC) is a distributed computing architecture that aims to bring computation and storage resources closer to the end users or devices at the network edge. With MEC, computing resources are made available at the edge of the network, typically within the radio access network (RAN), which reduces latency, improves network efficiency, and enhances the user experience.

MEC technology enables the development of new applications and services that require real-time data processing, such as augmented reality, autonomous driving, and smart cities. MEC also provides a more secure and cost-effective way to deploy applications and services by reducing the reliance on centralized cloud infrastructures.

Moreover, MEC allows for the creation of new business models by enabling service providers to offer value-added services that leverage local context information, such as location, proximity, and environmental data. MEC is expected to play a critical role in supporting the deployment of 5G networks, which will require new network architectures to enable low-latency, high-bandwidth, and high-reliability services.

MEC provides a low latency and high bandwidth environment, making it ideal for supporting a wide range of applications and technologies that require real-time data processing [1]. These include emerging technologies, such as IoT and 5G, as well as low-latency applications, such as connected vehicles, video analytics, smart cities, augmented reality, and bloackchain technologies [2]. Due to its ability to support these technologies and applications, MEC has become a highly significant and exciting topic in the field of computing and telecommunications.

Mobile edge computing is a complex and heterogeneous system, making it vulnerable to various faults and errors [3]. To address this, an effective network management approach must be implemented that can proactively handle faults before they occur. One such approach is self-healing, which enables a system to automatically detect and diagnose faults and take the necessary corrective actions to ensure system continuity and reliability.

To address the challenges in MEC, researchers have proposed various self-healing techniques. For instance, Nikmanesh et al. [4] utilized the discrete time Markov process to develop a decision-making analytical framework that can determine the optimal compensatory action in a failure scenario. Similarly, Porch et al. [5] proposed the use of a High-Order Recurrent Neural Network to detect performance degradation. In this approach, a series of user-reported reference signal received power (RSRP) readings are continuously collected and used as input data for the proposed model. The model is then trained to detect when performance degradation exceeds a certain threshold.

Due to the increasing complexity of the IT infrastructure, maintenance costs have been rising, as well as data growth, and the number of internet users has increased astronomically. Consequently, many researchers have focused on discovering answers through self-healing systems.

The term self-healing (SH) is closely associated with autonomous computing (AC), which was introduced at IBM’s event in 2001. The event aimed to develop a system that could manage itself without human intervention to address the management issues arising from the growing computer systems and networks.

AC involves a system’s capacity to control itself independently through various enabling technologies [6]. It is modelled after the human nervous system, which can regulate and control the body system. The goal of autonomous computing is to build self-running systems that can perform complex operations while hiding the system’s sophistication from the user [7].

IBM developed AC in 2001 to create a distributed system on a large scale that is void of human intervention. The four essential features of AC are self-configuration, self-optimization, self-healing, and self-protection. The self-configuration feature focuses on configuring new system elements automatically during system deployments, upgrades, or after a system change without human involvement. Self-optimization allows the system to adapt automatically to increase efficiency and output during operation. Self-healing provides a system with the ability to automatically resume its activities when any malfunction happens. Self-protection enables a system to decide how to protect itself against malicious attacks [8].

AC has four key features, the first of which is self-configuration. This feature enables system elements to be configured automatically without human intervention during system deployment, upgrades, or after a system change has occurred. It encompasses the installation and configuration of new components, as well as the removal of damaged components and their automatic reconfiguration. Integrating large systems can be a challenging task, but self-configuration aims to ensure that an autonomic system can automatically configure itself based on high-level policies. This feature allows for a new component to integrate seamlessly into an existing system, with the rest of the system automatically adjusting to accommodate it.

The second important feature of AC is self-optimization, which enables the system to automatically adapt and improve efficiency and output during operation. By eliminating the need for manual configuration, this feature enhances the deployment process. Additionally, it performs regular system performance checks to ensure that key performance indicators (KPIs) remain within a reasonable threshold. In a complex system, optimizing certain parameters is crucial for achieving optimal performance, but improper adjustments can have unexpected consequences on the entire system. Self-optimization allows an autonomous system to continually seek ways to optimize its performance automatically.

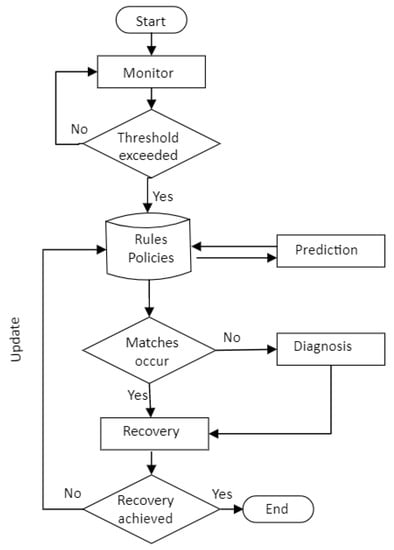

The third feature of AC is self-healing, which equips a system with the ability to automatically resume its activities in the case of a malfunction. This feature involves a three-step process of detecting failures, diagnosing, and performing remedial operations. The primary objective of incorporating self-healing functionality is to enhance the stability and maintainability of the system. To achieve this, the self-healing process continuously monitors the system for faults and raises an alarm when necessary. Once an issue is detected, the system conducts a diagnosis to investigate the root cause of the problem. Then, appropriate remedial action is taken to address the issue and resume normal system activities. Overall, the self-healing feature of AC ensures that the system operates effectively and efficiently without human intervention.

Self-healing can be categorized into two approaches: proactive and reactive. The proactive approach anticipates the potential failures and takes action to prevent them from occurring, while the reactive approach responds to actual failures and takes action to restore the system to its optimal state. Proactive self-healing involves continuous monitoring of the system to detect potential issues and takes corrective measures to prevent them from developing into major problems. On the other hand, reactive self-healing responds to actual failures, detects the root cause of the problem, and applies the necessary remedial action to restore the system to its optimal functional state. Both proactive and reactive self-healing are crucial to enhancing system stability and reducing downtime.

Self-protection, the fourth feature, empowers a system to proactively protect itself against malicious attacks. It utilizes two key strategies to achieve this goal. The first strategy is to safeguard the entire system against potential attacks, and the second strategy is to anticipate problems based on reports and take necessary actions to avoid them. By using self-protection, the system can detect and mitigate the activities of foreign elements as they occur, preventing them from causing damage to the entire system. This feature offers high-level protection by identifying unusual activities and triggering the appropriate defensive actions. The self-protection process includes critical activities such as system monitoring, analysis, detection, and the mitigation of threats.

IBM proposes a reference model, called the MAPE-K (monitor, analyze, plan, execute, knowledge) loop, to implement autonomic control. This model consists of two main elements, the managed elements and the autonomic manager. The managed elements include sensors and effectors that interact with the external environment by gathering information and executing actions. On the other hand, the autonomic manager is responsible for controlling the system.

To achieve system control, the autonomic manager continuously monitors the entire system using information collected by the sensors. The monitoring component of the MAPE-K loop is responsible for gathering relevant environmental properties. Subsequently, the data is thoroughly analyzed to derive useful knowledge. Based on this knowledge, the planning component considers creating several modifications to the system. Finally, the execute component performs the execution of the appropriate modification to improve the system’s overall performance.

In summary, the MAPE-K loop provides a structured approach for realizing autonomic control. By following this loop, the autonomic manager can monitor the system, analyze its performance, plan for improvements, and execute the necessary changes automatically. This approach ensures that the system remains stable, efficient, and responsive to its environment [6].

Efforts to mitigate failures have been ongoing, even before the emergence of self-healing systems. Traditional fault tolerance mechanisms, such as hardware and software redundancy methods have focused on failure detection and recovery. However, this approach requires human intervention, such as the use of experts to solve the problem, and often involves heavy hardware overhead. One of the key drawbacks of this approach is the time it takes to detect and rectify faults.

In addition, it is widely recognized that an expert’s knowledge and experience in resolving a specific issue may not be documented or available for someone else to use, in case the same issue arises again. Moreover, recruiting and dispatching specialists to the failure site can be quite costly.

Given the limitations of traditional fault tolerance mechanisms, there is a growing need for a method that would enable systems to manage failures without human involvement. This is where autonomous systems come in, providing the capability for systems to function without human intervention.

High availability is crucial for business-critical IT systems that support operations that cannot tolerate downtime. When such systems experience downtime, the organisation faces not only financial losses, but also damage to its reputation. In certain domains, such as medical systems, high availability can mean the difference between life and death.

The use of an autonomous system to achieve high availability is critical because it can detect and resolve issues automatically. A system that can identify problems and self-heal without human intervention is more likely to achieve high availability. With self-healing, the system can recover automatically from failures, thus reducing downtime and ensuring that it remains available for users. Additionally, the ability of an autonomous system to monitor itself and detect problems before they become critical also contributes to high availability [9].

To achieve continuous availability, it is essential to have a system that can identify and address faults in real-time. An autonomous system with self-healing capabilities can provide this real-time response without human intervention. This feature ensures that the system continues to perform, even when one or more of its components fail. In addition to self-healing, self-optimization and self-protection features enhance the system’s resilience by ensuring that the system remains in optimal condition and protected from external attacks. An autonomic control loop, such as the MAPE-K loop, can provide the necessary feedback and control required to keep the system in optimal condition. Therefore, the integration of autonomous capability can provide the necessary components for achieving high availability of an IT infrastructure system [10].

Indeed, achieving high availability is critical for the smooth operation of an enterprise. Downtime can lead to significant financial losses, impact customer satisfaction and reputation, and ultimately hinder business success. Therefore, it is essential to have a reliable and resilient IT infrastructure that can operate continuously without interruptions. However, ensuring high availability is a complex and challenging task, requiring the efficient detection, diagnosis, and remediation of failures. Autonomous systems can help achieve high availability by providing a self-healing mechanism that automatically detects and resolves faults, thus reducing downtime and improving the overall reliability of the system.

In this paper we focus on self-healing approaches in mobile edge computing, and we answer the following questions:

- How are SH approaches utilized in MEC?

- What design, theories, and algorithms could be used to support SH in MEC?

- What evaluation methods could be employed?

The rest of this paper is organized as follows. Section 1 provides introduction to MEC, while Section 2 describes the background. In Section 3 we present the conducted systematic literature review. Section 4 presents MEC challenges and use cases. Section 5 discusses autonomous approaches in MEC networks and related technologies. While in Section 6 we perform a review of self-healing approaches in MEC.

2. Background

The paradigm of mobile edge computing has recently garnered significant attention from both industry and academia, offering a promising solution to address the increasing performance demands of future applications. In this section, MEC is examined in detail, including related concepts and technologies, as well as the MEC architecture and network management strategies.

2.1. Mobile Edge Computing

Multi-access edge computing (MEC), previously known as mobile edge computing, is an architecture concept introduced by the European Telecommunications Standards Institute (ETSI) that offers cloud computing capabilities and IT services at the network’s edge, be it a cellular network or any other network [11]. MEC combines information technology with telecommunications, providing application developers and content providers with access to the radio access network, which may not have been feasible before. The radio access network is a part of a mobile telecommunications system and implements radio access technology. By processing and storing content closer to cellular customers, MEC offers faster response times, improved application performance, and minimized network congestion.

MEC Related Concepts and Technologies

The MCE paradigm has gained significant interest in both industry and academia as a means of bringing computation, storage, and networking closer to the source of data generation. MEC is one implementation of the edge computing paradigm, which also includes fog computing and cloudlet. Fog computing is a decentralized computing architecture that deploys fog nodes across the network to perform computational tasks [12]. Similar to edge computing, fog computing delivers low latency, reduces network congestion, and brings computation and storage capabilities closer to end users. Fog computing and edge computing are similar in that they both offer these core functions, and the terms are sometimes used interchangeably. The primary difference between fog and edge computing is how data is handled or where the data processing occurs.

Fog computing processes data on fog nodes positioned in a local area network level, which may be a few network hops from the end user, while edge computing processes data on edge devices, such as smartphones, laptops, desktop computers, and tablets. In contrast, a cloudlet is a cluster of computers that is well-connected to the internet, providing computation and storage close to mobile devices [13]. Cloudlets appear similar to a “data center in a box” and can offer real-time interactive responses with low latency due to their proximity to the end user.

2.2. MEC Architecture

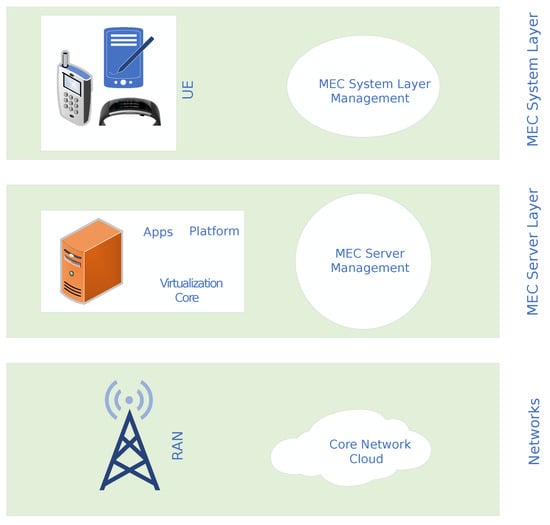

The MEC architecture allows for the deployment of services, applications, and content closer to the end user, which results in a low-latency and high-bandwidth environment. As shown in Figure 1, the MEC architecture comprises three distinct layers: the system layer, the host layer or server layer, and the network layer. The system layer includes a system-level management component that is positioned at the highest level of the entire system, providing a comprehensive view of the system. The host layer consists of the host component and the host layer management component, which are responsible for managing the services and applications deployed on the MEC server.

Figure 1.

MEC architecture.

The host component of the MEC architecture includes the virtualization infrastructure, the mobile edge (ME) platform, and the ME applications. These components work together to enable the deployment of services, applications, and content closer to the end user, resulting in a low latency and high bandwidth environment. The network layer, which consists of the cellular network infrastructure, local networks, and external networks, plays a critical role in supporting the MEC architecture. Additionally, the network layer implements a radio access network (RAN), which is an essential part of a mobile telecommunication system. The RAN functions by transmitting signals to and from user equipment through the backhaul to the core network, facilitating the efficient transfer of data.

2.3. Network Management

As communication and computer networks continue to expand in size and complexity, managing these intricate infrastructures has become increasingly challenging. Effective network management is crucial for ensuring excellent quality of service (QoS) and monitoring, securing, and controlling access to the entire network.

Network operators must contend with a variety of issues to manage, maintain, and safeguard their network infrastructure. The problem of network management has received significant attention, and numerous solutions have been proposed. However, the difficulties of modifying the underlying infrastructure have made previous attempts to ease network administration a temporary fix. The closed proprietary and tightly integrated nature of network equipment limits the flexibility of the underlying infrastructure, thus constraining creativity and development.

Complexity of Network Management

The effective management of mobile network infrastructure and services in today’s environment requires innovative approaches to address the added complexity brought about by virtualization and softwarization, while still reducing operating costs [14]. Network operators are challenged with executing complex policies and operations using limited device configuration commands. Configurations remain a critical aspect of network management, where high-level policies controlling the network depend on low-level configuration. However, low-level network rules are ill-equipped to handle rapid network changes and the demand for more functionality and complex regulations from operators continues to increase.

To address these challenges, the Zero Touch network and Service Management Industry Specification Group (ZSM ISG) was established by ETSI in 2017 to provide a framework for managing the complexities of network management across multiple domains. The ZSM ISG framework aims to automate all operational procedures and activities, including planning and design, delivery, deployment, provisioning, monitoring, and optimization. By providing a comprehensive framework for network management, the ZSM ISG seeks to enable autonomous management and overcome the difficulties associated with managing complex network infrastructure and services.

To achieve fully autonomous networks, artificial intelligence (AI), machine learning (ML), and big data analytics are crucial components. Recently, these methods have been used to intelligently perform various networking activities, such as administration, maintenance, and protection. For example, Fadlullah et al. [15] demonstrate the impressive results obtained from utilizing a deep-learning routing technique over a traditional routing strategy in a wireless mesh backbone network. Similarly, Kim et al. [16] utilize a graph neural network to address the issue of managing virtual networks and machines, which have increased in complexity. These studies showcase the potential of AI and ML in enhancing network management and support the notion that automation through these methods will be instrumental in realizing fully autonomous networks.

MEC, which integrates telecom, cloud computing, and IT services to bring computation, networking, and storage closer to the data source, has the potential to revolutionize the way subscribers access cloud services through the radio access network (RAN). However, due to its complexity and heterogeneity, MEC is susceptible to several faults, making a proactive self-healing system essential. Therefore, this systematic literature review aims to critically analyze the existing approaches, methods, and practices for achieving such a system in MEC, identify central issues and gaps in the current body of knowledge, and propose solutions.

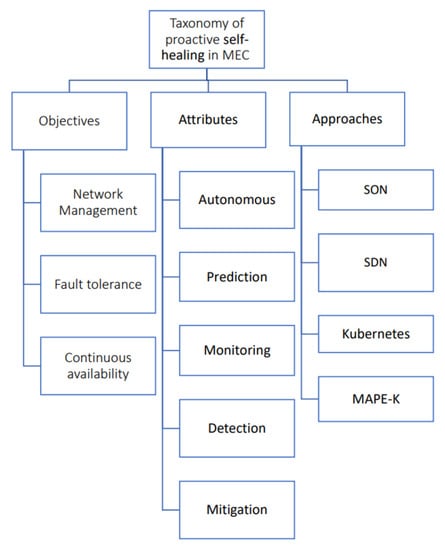

To achieve this goal, the review presents a taxonomy of the topic, in terms of objectives, attributes, and approaches (as depicted in Figure 2). This taxonomy helps in understanding the different approaches used in the literature, identifying existing gaps, and proposing solutions. The review provides a foundation for the research by providing insight into previous studies and placing the research within the context of previous works on the subject.

Figure 2.

Taxonomy of proactive self-healing in MEC.

The review also describes the process followed in conducting the study, which involved formulating research questions, identifying keywords, and searching digital libraries, followed by evaluating the documents based on quality criteria and stating clear rules for inclusion/exclusion of the literature. The review synthesizes and provides a new viewpoint on the topic, identifies connections between theories and concepts, and discovers relevant factors pertinent to the topic. Overall, this review helps to advance the knowledge on self-healing systems in MEC and provides a basis for future research in this area.

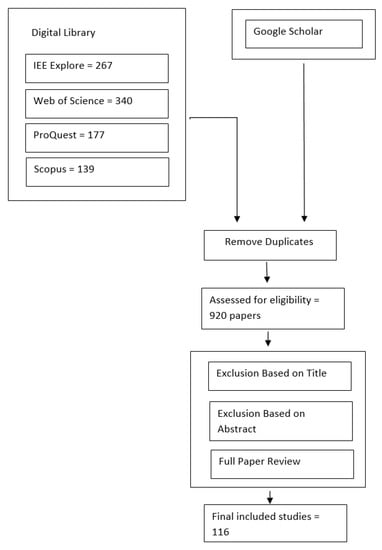

Figure 3 illustrates the preferred reporting items for systematic reviews and meta-analyses (PRISMA) flowchart that outlines the systematic literature review process that has been used in this paper. This diagram serves as a visual representation of the various steps that were undertaken to ensure that our review was comprehensive, transparent, and reproducible. The process began with a digital library search using a pre-defined search string that yielded a large number of articles related to our topic. The articles were screened in several stages to eliminate duplicates, irrelevant studies, and studies that did not meet our inclusion criteria. The remaining articles were then subjected to a more thorough analysis, with the selected primary studies being used as the basis for our review. Our quality criteria were used to evaluate the studies and identify relevant information, which was then synthesized to answer our research questions. Through this process, we aimed to identify gaps in the existing literature and propose solutions for future research.

Figure 3.

Systematic Literature Review (SLR) process.

3. Research Design

A well-designed research method and procedure are essential for conducting a comprehensive and rigorous SLR. In this section, we will provide details on the research design for our study.

Method: We conducted a systematic literature review of self-healing approaches in mobile edge computing. The method used in this review was guided by the PRISMA statement [17]. The review process involved searching for relevant literature, screening and selecting articles based on predefined inclusion and exclusion criteria, assessing the quality of the studies, and extracting relevant data.

Review Procedure: The review procedure consisted of the following steps:

- Planning Stage: In this stage, we defined the scope of the review, formulated the research questions, and established the search strategy, inclusion and exclusion criteria, and quality assessment criteria.

- Identification Stage: We conducted a systematic search of digital libraries, including IEEE Xplore, ACM Digital Library, ScienceDirect, and Springer Link, to identify relevant literature.

- Screening Stage: We screened the identified literature based on the inclusion and exclusion criteria, which were established in the planning stage. In this stage, we removed duplicates and reviewed titles and abstracts to identify articles that met the inclusion criteria.

- Eligibility Stage: In this stage, we assessed the full text of articles that passed the screening stage to ensure they met the inclusion criteria. We used a quality assessment tool to evaluate the quality of the selected studies.

- Data Extraction Stage: We extracted relevant data from the selected studies and organized the data in a tabular format for further analysis.

- Synthesis Stage: In this stage, we analyzed the extracted data and synthesized the findings to answer our research questions.

Selection Criteria: The inclusion criteria for the study were as follows:

- The study must focus on self-healing approaches in mobile edge computing.

- The study must be published in the English language and peer-reviewed.

- The study must be published between 2015 and 2022.

The exclusion criteria were as follows:

- Articles that do not focus on self-healing approaches in mobile edge computing.

- Articles that are not published in the English language or are not peer-reviewed.

- Articles that are published before 2015.

Adhering to the PRISMA statement and following a rigorous review procedure can help ensure the quality and validity of a systematic literature review. By applying specific inclusion and exclusion criteria, we can minimize the risk of bias and ensure that the studies included in our review are relevant and of high quality. This, in turn, can improve the reliability, replicability, and transparency of our review.

3.1. Research Methods

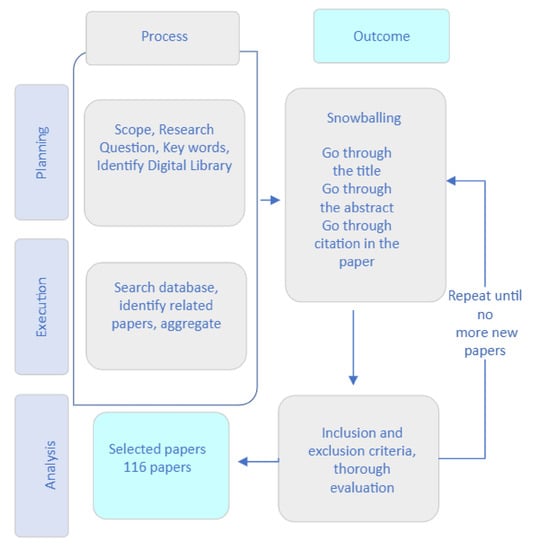

The SLR was conducted using the snowball sampling concept (see Figure 4). The idea, often referred to as a chain sampling or network sampling, starts with one or more study subjects. Following that, it continues based on referrals from the obtained papers at the initial stage out of the searching criteria. The cycle repeats until the desired sample is obtained or a saturation point is achieved [18].

Figure 4.

SLR-based snowballing procedure.

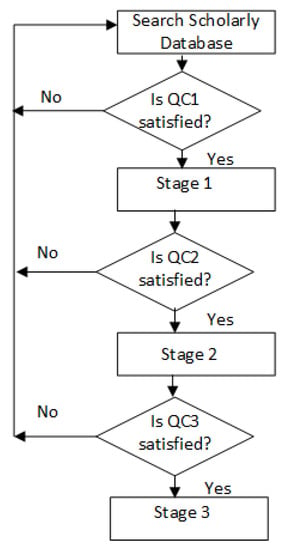

To contribute to the existing body of knowledge on self-healing approaches in mobile edge computing, we conducted a systematic mapping of the literature using the flowchart in Figure 5. This process allowed us to identify and highlight the different approaches used to address our research questions.

Figure 5.

Quality criteria flowchart.

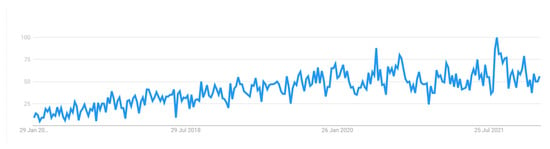

To ensure the relevance and comprehensiveness of our search, we carefully constructed our search string, taking into account the popularity and relevance of the keywords. We used Google Trends to measure the public interest in our keywords and to ensure that our search string was not limited in scope. This helped us to find a wide range of relevant literature to include in our review.

Figure 6 shows the popularity of the term “edge computing” over time, as measured by search interest. The numbers on the graph represent a score out of 100, with higher values indicating greater popularity. Over the ten-year period shown, the popularity of edge computing experienced an upward trend, with a low point in January 2012 and a peak in July 2021.

Figure 6.

Edge Computing popularity between 2012–2022.

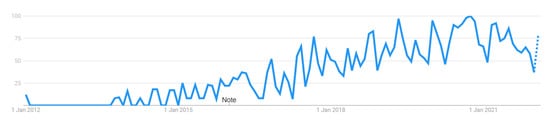

In Figure 7, we explore the popularity of the term “multi-access edge computing” (MEC), which is a newer name for mobile edge computing. The graph shows that MEC did not exist as a term before 2016, so search interest was low until that time. However, since then, the popularity of MEC has steadily increased, with 2021 marking its highest point yet.

Figure 7.

Multi-Access Edge Computing popularity between 2012–2022.

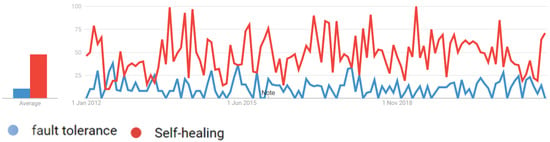

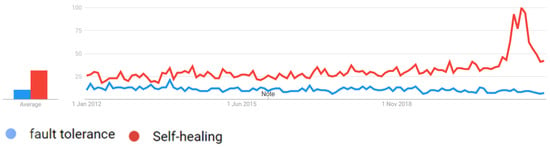

Figure 8 depicts the popularity of the terms “fault tolerance” and “self-healing” in the UK region. The graph reveals that the popularity of self-healing reached its highest value twice, once in 2013 and again in 2018, while the popularity of fault tolerance remained consistently lower, below 50.

Figure 8.

Fault Tolerance and SH popularity in UK.

Finally, Figure 9 compares the search interest in fault tolerance and self-healing around the world. The graph shows that both terms had similar levels of popularity, with neither exceeding a score of 50, until self-healing experienced a surge in popularity around 2021.

Figure 9.

Fault Tolerance and SH popularity around the World.

We utilized several digital libraries to gather relevant materials related to our topic, as outlined in Table 1. The digital libraries we used include the IEEE Xplore Digital Library, Web of Science, ProQuest, and Scopus. IEEE Xplore is a comprehensive database that includes papers in the fields of engineering, computer science, and information technology. The Web of Science database records materials in the areas of social science, arts, and humanities. ProQuest, on the other hand, provides publications on medicine, surgery, and nursing sciences. Lastly, Scopus covers a wide range of subjects, including technology, natural sciences, information technologies, social sciences, and medicine, among others.

Table 1.

Search conducted in various digital library.

Table 1 lists the preliminary paper search conducted in four digital libraries.

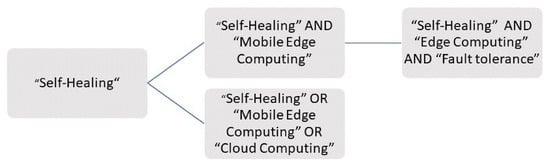

To achieve our set goal in searching databases, we utilized the search string shown in Figure 10.

Figure 10.

Search String.

Specifying “mobile edge computing” OR cloud computing OR edge computing was crucial, in order to minimize irrelevant application areas, such as SH for concrete materials, polymers, or SH in health care.

Inclusion and Exclusion Criteria: At this stage, we eliminate the studies that are not thematically relevant to the scope of this paper. The selection criteria we utilized include three stages.

We thoroughly examined the papers using a process that conforms with the following quality criteria (QC), as highlighted in Figure 5.

- QC1.

- Is there an up-to-date study of the current knowledge?

- QC2.

- Does the proposed study take a self-healing approach into cognizance or utilize any autonomic approach for network management?

- QC3.

- Is there a comprehensive analysis of evaluations—are they explained in detail, are the study’s objectives met?

Abstract Inclusion Check: In this stage, we reviewed the titles and abstracts of all the papers obtained from our search. We thoroughly scrutinized the abstracts and excluded some papers based on their content. For instance, if the abstract contained relevant keywords, we kept the paper for further processing. However, we did not evaluate the quality of the papers at this stage. The result of this stage helped us to distinguish between relevant and non-relevant papers.

After defining our scope, research questions, and keywords, we identified the digital libraries we would be using in our search.

Next, we conducted a search of the digital libraries using our search string. We then used QC1 to analyze the articles obtained. By reviewing the titles and abstracts, we identified articles related to our scope, and this was stage 1.

To achieve stage 2, we further analyzed the articles from stage 1 using QC2. We examined the content of these papers to ensure their relevance. At the end of this stage, we obtained thoroughly checked, relevant papers.

In stage 3, we further analyzed the papers from stage 2 using QC3. We looked at the methods and approaches used in the papers, the comprehensiveness of their analysis and evaluations, and whether the paper’s objectives were met. The result of this stage led us to our primary papers.

Table 2, Table 3, Table 4 and Table 5 are examples of the search conducted in four digital libraries. Showing the search string, the document type and source, as well as the year of publication.

Table 2.

Examples of search conducted in IEEXplore digital library.

Table 3.

Examples of search conducted in Web of Science digital library.

Table 4.

Examples of search conducted in ProQuest digital library.

Table 5.

Examples of search conducted in Scopus digital library.

3.1.1. Results and Analysis

In this section, the outcome of the systematic review study are arranged in line with the research questions. As shown in Figure 11b, out of 116 articles investigated in this study, 34% came from conferences, while 66% were published in journals. Our search include papers from 2018–2021.

Figure 11.

Publication outlet (a) year-wise percentage, (b) total percentage.

3.1.2. Discussion

This paper discusses the primary papers in two broad categories: management methods of edge resources and methods and approaches utilized in MEC for self-healing purposes.

Mobile Edge Computing Resource Management Techniques

The mobile edge computing environment has been the subject of a significant amount of literature, with a focus on resource management to optimize the quality of service (QoS). As the MEC environment interacts with highly non-stationary devices, task offloading to the MEC platforms has received much attention. To ensure that the QoS remains the same, the MEC architecture consists of several edge nodes. Hsieh et al. [19] addressed the challenge of optimizing task assignments in a system where diverse edge nodes process tasks together. The authors formulated the task assignment problem as a dynamic and random Markov decision process (MDP) and used online reinforcement learning to improve task processing execution, resulting in better performance than the commonly used standard technique. However, the impact of load balancing among the diverse edge nodes was not considered.

Similarly, Wu et al. [20] focused on end users’ mobility by predicting the expected routes to be taken by mobile users using a deep learning algorithm. They then utilized an online mechanism that is dependent on QoS to resolve the offloading issue [21]. The authors claimed that their approach has a reduced offloading failure rate and a faster response time, compared to typical approaches. However, the prediction of users’ mobility in real-life situations can be challenging, due to the complicated traffic network [22].

A considerable amount of the literature has been devoted to resource management in the MEC environment to optimize QoS. Given the interaction of the MEC environment with highly non-stationary devices, task offloading to MEC platforms has been the focus of much attention. The MEC architecture includes multiple edge nodes, and as mobile devices move, the QoS must remain consistent. Hsieh et al. [19] investigated the challenge of optimizing task assignments in a system where diverse edge nodes process tasks together. The authors framed the task assignment problem as a MDP and considered the problem as both dynamic and random. By using online reinforcement learning to improve task processing execution, they achieved a result better than the commonly used standard technique. However, their approach fails to consider the impact of load balancing among diverse edge nodes.

The network infrastructure is another crucial edge resource that significantly impacts QoS. Kan et al. [23] considered the use of both radio and computation resources to increase the performance of the MEC server. They approached resource allocation and offloading from the standpoint of cost minimization and claimed that their proposed solution significantly improved QoS by employing a heuristic approach to tackle the problem. However, their research was limited in scope and did not assess the impact of several base stations, as is the case in practice.

Zhang and Debroy [24] focused on the detrimental impact of wireless fluctuations on task execution time when real-time applications offload to the edge server. The authors offered a task offloading algorithm that can adapt to improve and balance energy consumption and total task completion time, claiming that their method provides better results. However, their paper lacks detailed information on the devices and parameters used, and there is no vivid comparison of their performance results with existing work.

Furthermore, Qiao et al. [25] focused on reducing energy usage when transmitting tasks to edge servers from a mobile device. The authors presented an energy-efficient algorithm that proved to be beneficial in lowering energy consumption. However, their research did not consider delays while offloading different tasks.

This category focuses on primary papers that use various methods and approaches in the literature for self-healing purposes. Our investigation shows that self-healing is an essential approach for next-generation network management solutions. Self-healing is required to manage both physical and virtual network infrastructures and services.

Some self-healing techniques gather information from MEC networks and perform diagnoses using various algorithms. For instance, Zoha et al. [26] presented a machine learning technique that gathers key performance indicators (KPIs) using minimize drive testing (MDT) characteristics. In an LTE network, the technique can automatically detect sleeping cells, providing a far better detection strategy.

In the context of self-organizing networks, Zoha et al. [27] addressed the detection and compensating approach for cell outages. The suggested detection component employed an approach based on minimum drive testing (MDT) functionality to gather data from mobile devices. Meanwhile, the second part of the approach, a compensating component, leveraged fuzzy-based reinforcement learning algorithms to deliver a suitable solution to users in the vicinity of the damaged cell. Specifically, the compensating component of their approach dynamically adjusts the antenna tilting and transmission power of neighboring cells to provide the best possible coverage to the affected area. The authors claimed that their findings demonstrate that the suggested framework is capable of autonomously detecting cell outages and compensating for them in a satisfactory way. The proposed approach can improve the network availability and performance by reducing service interruption and minimizing the negative impact on user experience.

Other research works have employed various algorithms for self-healing techniques, such as diagnosis. Khanafer et al. [28] used the Bayesian network for diagnosis in a UMTS network, while Ktari and Lavaux [29] proposed a Bayesian network model for system reliability and fault diagnosis. ADC [30] proposed a quadratic probing optimization algorithm for network node failure, and Khatib [31] utilized fuzzy logic as a supervised method for root cause analysis.

However, to effectively study the impact of faults on performance metrics, a diagnosis method requires a historical database of tagged fault instances. Typically, specialists do not focus on preserving the origin of the problem or the measures they took during troubleshooting activities. When there is an insufficient record of resolved instances, unsupervised techniques become the most suitable approach.

In an SDN environment, Vilchez et al. [32] proposed a self-healing approach for fault diagnosis based on Bayesian networks. However, the technique is reactive and does not prevent future occurrences of the same mistake. Thorat et al. [33] focused on switch-level link recovery and proposed a rapid recovery (RR) mechanism to quickly recover links. The method reduces backup flow rules for all the failing link’s interrupted flows to a single flow rule.

4. Review of MEC Challenges and Use Cases

Mobile edge computing (MEC) has emerged as a promising solution to address some of the limitations associated with cloud computing. By facilitating the storage and computation of data closer to the source where it is generated, MEC has the potential to improve the performance of computing systems. However, despite its benefits, MEC faces several challenges that must be addressed to ensure its effectiveness.

One of the most critical challenges facing MEC is task offloading and resource allocation. The performance of the MEC system heavily relies on effective decision making regarding the offloading of tasks and allocation of resources. Given the large amount of data collected and generated by mobile devices within the MEC network, there is a need to determine the necessary information that must be sent to the edge server. In this regard, it is crucial to partition data into tasks before they can be offloaded to the server.

Furthermore, the limited resources available at the edge make it imperative to allocate them efficiently. To this end, proper resource allocation is crucial for ensuring the effectiveness of the MEC system.

A substantial body of literature has explored the challenges of task offloading and resource allocation in MEC. Notably, Zhong et al. [34] have contributed to this field by proposing a smart and effective task allocation system for large-scale MEC. The authors’ model accounts for the MEC system’s demand for minimal latency and reduced energy usage, which are crucial factors for ensuring high-performance MEC systems.

To address the task assignment problem, the authors proposed a nonlinear integer programming approach. This approach is based on a combination of Newton, linear search techniques, and conjugate gradients algorithms. By integrating these algorithms, the authors were able to achieve convergence, reduce complexity, and enhance scalability. This solution is particularly promising for addressing the resource constraints of MEC systems, while maintaining optimal performance.

Tong et al. [35] conducted research on task offloading in a MEC system with multiple servers and users. Their aim was to minimize server delay by finding the most suitable task offloading scheme for each computation task. To achieve this goal, the authors proposed two sub-optimal techniques that consider the harmony between computation power and assigned tasks.

In another study, Shan et al. [36] addressed the resource distribution problem in mobile edge computing. The authors proposed a sophisticated model that uses deep reinforcement learning to allocate resources effectively. The research showed that their approach led to reduced energy usage, lower latency, and a more balanced distribution of resources in the system.

Yang and Poellabauer [37] conducted a study on the handling of multiple task and service requests in mobile edge computing systems with limited computing resources. In their research, they proposed a technique for prioritizing computational tasks based on specific features and a reinforcement learning approach to solve the scheduling problem. To verify the feasibility and effectiveness of the approach, the authors conducted a simulation experiment on a MEC testbed. The study’s main focus was to address the limitation of computing resources in MEC systems and how to optimally handle multiple tasks and service requests.

Shakarami et al. [38] proposed an autonomous computation offloading framework to tackle computational task offloading issues in MEC environments. To address the challenge of offloading decision making, the authors combined various techniques. The resulting framework yielded high accuracy in predicting energy usage, latency, and offloading, which are crucial metrics for task offloading decisions.

Naouri et al. [39] proposed a three-layer framework for addressing the task offloading challenge in MEC. The framework consists of three layers: the cloud layer, the cloudlet layer, and the device layer. To offload jobs that demand high processing power, the cloudlet layer and cloud layer are utilized. For operations with moderate computational requirements, but high communication costs, the device layer is used. By utilizing this approach, the transmission of large data to the cloud is avoided, and delays in processing data are significantly reduced.

To address offloading problems in large and diverse MEC networks with several cluster service nodes and various mobile task dependencies, Lu et al. [40] proposed a deep reinforcement learning (DRL) solution. The authors used iFogSim and Google Cluster Trace to simulate the task offloading challenge. By comparing their algorithm with the previous ones, the authors claimed that their new algorithm exhibited enhanced performance, in terms of latency, energy usage, load balancing, and average execution time.

Several studies have explored the use of caching as a potential solution to the challenge of resource management in mobile edge computing [41]. In particular, Safavat et al. [42] argued that a well-designed content caching strategy can play a critical role in reducing delays and improving the overall quality of experience. The authors also emphasize the importance of the content selection process, which involves both the caching and updating of content, as well as the expected duration for which the cached content remains relevant.

Similarly, Yang et al. [43] proposed an approach to efficiently allocate communication and computation resources through caching. The authors employ a long-short-term memory (LSTM) network to predict important tasks and formulate an optimization problem that involves task offloading, resource distribution, and caching choices. By leveraging the benefits of caching, the proposed approach can effectively reduce delays and improve overall system performance.

Li and Zhang’s study [44] focused on the impact of latency on the quality of service in a mobile edge computing environment. The authors proposed a method for offloading tasks from mobile devices to edge and remote cloud servers, with the goal of minimizing the delay experienced during data transmission. The proposed method uses a combination of service caching and task offloading, resulting in reduced system delay and power consumption.

Similarly, Yu et al. [45] proposed an offloading scheme that integrates caching to reduce the delay experienced by mobile devices during task execution. The authors made the computational results available to multiple users to prevent repetition and improve efficiency.

Security is a significant challenge in mobile edge computing, which includes infrastructure security, cyber security, and other related issues. The infrastructure of mobile edge computing could be the target of malicious attacks, which could aim to manipulate data from different sources, in order to corrupt the outcome of the edge server’s computation [46]. These attacks could include privacy attacks, data poisoning, and data evasion. The author advocates a multi-layer security approach, where the edge node can quickly and securely confirm that its peers before tasks are uploaded to address these issues.

Zhao et al. [47] discussed poisoning attacks that could be intentionally generated by malicious users to compromise the statistical results on the MEC server. The authors proposed a privacy-preserving scheme against poisoning (P3) to address this problem.

The vast amount of data gathered from various applications by MEC poses a new security threat, particularly during data access. To protect user data, access control is crucial, as emphasized by Hou et al. [48]. Meanwhile, Xiao et al. [49] addressed the need for a secure offloading strategy through a reinforcement learning algorithm. In addition, they suggested an authentication and cooperative caching strategy as a solution to various attacks aimed at mobile edge caching.

5. Autonomous Approaches in MEC Networks and Related Technologies

The concept of autonomic computing is a relatively new paradigm, and research has been conducted to propose new architectures, frameworks, and development processes. In this section, we will examine the application of autonomous principles in managing mobile networks and related technologies. Additionally, we will review some existing architectures used to achieve autonomous functionalities.

5.1. Mobile Network Management with Autonomous Approaches

The heterogeneous nature of mobile edge computing has presented challenges in current network management approaches [50]. The complexity and diversity of the infrastructure and services provided make management increasingly difficult. As a result, there is a growing need for a network management approach that can consistently deliver high-quality services.

Traditionally, mobile networks rely on human experts to manually correct network faults. This reactive approach is inefficient and can negatively impact the quality of the experience. To address this, there has been a push for autonomous network management approaches that can automatically detect and remediate faults without human intervention.

The critical function of an autonomous system is its ability to operate without human intervention. An autonomous system (AS) is capable of performing computations on its own with little or no human involvement, as it is an evolving concept that is applicable in various fields, including maritime, space exploration, military, computing, manufacturing, robotics, and navigation [51].

An autonomous system (AS) is characterized by its hardware and software capabilities, which work together to identify a problem and execute a solution. It should also be able to gather information from external sources, process it, and make decisions accordingly, as it is a concept applicable in various fields, such as cloud computing, edge computing, and network management, which employ different approaches to achieve autonomous functionalities.

In computing technologies, such as cloud and edge computing, several methods are used to implement autonomous functionalities. Autonomous computing is one such approach. In network management, a self-organizing network (SON) is an approach that can be utilized to achieve autonomous functionalities.

5.2. Autonomous Approaches in MEC and Related Technologies (IoT and Bigdata)

Mobile edge computing is a critical enabler of IoT and big data technologies. By bringing computation and storage closer to the data sources, MEC accelerates the process of IoT data sharing and analytics. The autonomous decision-making principle is vital to enhancing the management of these technologies and services. It provides for real-time management without human intervention, making it essential for the Internet of Things (IoT), 5G, big data, mobile networks, and other related technologies.

IoT consists of various sensor devices, and the autonomic decision-making concept is essential for the installation and management of these devices. Manual consideration is ineffective, due to the large number of devices involved, making a dynamic and intelligent management technique necessary.

The autonomic control loop, consisting of autonomic managers and managed resources, can be introduced to the IoT component structure as long as the hierarchy remains. The components above serve as the autonomic manager, and the components below serve as managed resources. This approach can be applied to the components individually or collectively.

Autonomic decision making is critical for the effective management of IoT and big data technologies, which heavily rely on mobile edge computing to bring computation and storage closer to the data source. The real-time management provided by autonomic decision making without human intervention is particularly important in device management, access management, and identity management. By introducing the autonomic decision-making concept, the system can control and manage its functions automatically, resulting in greater effectiveness in all components.

Research initiatives have explored the fusion of IoT and autonomous control systems to create a responsive environment capable of adapting to changes. Lei et al. [52] proposed a system that utilizes sensors to gather data, servers to make decisions based on data analysis, and actuators to carry out those decisions. To achieve autonomy, the authors use reinforcement learning and deep reinforcement learning in the decision-making process. However, utilizing deep reinforcement learning for decision making can be affected by delayed control and incomplete perception.

To achieve autonomous functionalities in IoT, Wei et al. [53] proposed the use of broad reinforcement learning (BRL) to reduce traffic jams in smart cities. The proposed approach by Wei et al. highlighted the growing interest in using autonomous decision making in IoT management. By leveraging BRL, their solution aimed to reduce traffic congestion in smart cities by controlling traffic lights. The system’s reinforcement learning algorithm learns the optimal timing and sequence of traffic lights based on real-time traffic data.

This approach demonstrates the potential of autonomous decision making in not only managing devices, but also in optimizing complex systems. With the increasing prevalence of IoT devices in smart cities, there is a growing need for autonomous management to ensure the efficient operation of these systems. Autonomous decision-making approaches, such as BRL, can be used in various applications, such as energy management, waste management, and public safety.

However, as with any autonomous system, there are also potential risks and challenges that need to be considered. The algorithm used in Wei et al.’s approach relies hvily on real-time traffic dataea. This raises questions about data privacy and security. In addition, there is a risk of the system being manipulated by malicious actors, which could lead to traffic accidents or other safety concerns.

To ensure the safe and effective implementation of autonomous decision making in IoT management, it is essential to address these potential risks and challenges. This includes developing robust security measures, ensuring data privacy, and implementing fail-safe mechanisms to prevent system failures. As research in this area continues to evolve, it will be crucial to strike a balance between the potential benefits and potential risks associated with autonomous decision making in IoT management.

Tahir et al. [54] have also proposed the use of autonomic computing for managing the IoT ecosystem with minimal human intervention. They describe the use of the MAPE (monitor-analyze-plan-execute) control loop for managing IoT components. The MAPE control loop assigns autonomic managers and managed resources to these components, enabling them to perform self-management and self-optimization without human intervention. This approach is particularly useful in scenarios where the number of devices and sensors is too large for manual management, and the system needs to adapt to changing conditions in real-time.

Big data is a field where autonomous functionalities are increasingly being applied to achieve greater efficiency in various tasks, including data security and privacy. One way in which autonomous decision making can improve security is through the automatic detection and prevention of intrusion. Traditional intrusion detection methods rely on sets of rules to analyze the source and behavior of an intrusion and apply preventive action, which can be slow and inefficient. This approach can result in a time gap between the detection and response, which can be crucial in preventing intrusions.

To overcome these limitations, there is a need to allow the system to protect itself without human intervention. This is where autonomous decision making can be applied to enhance the process of data security and privacy. By using machine learning algorithms to analyze data in real-time, an autonomous system can automatically detect potential intrusions and take preventive action, thereby reducing the response time and improving the overall effectiveness of the system. This approach is more efficient and reliable than traditional intrusion detection methods, making it a valuable tool for data security and privacy in the field of big data.

A number of studies have explored the use of autonomous functionalities in big data applications. One such study is that of Vieira et al. [55], which investigates the potential of integrating autonomic computing and big data techniques to build a system capable of processing large volumes of data from system operations to identify anomalies and formulate countermeasures in the event of an attack. The researchers propose a framework that uses machine learning algorithms to analyze data in real-time and make automatic decisions to protect the system. The framework is designed to be adaptive, so it can learn from new data and adjust its response accordingly. By automating the process of detecting and responding to threats, this framework provides a more effective way to secure big data systems, compared to traditional methods that rely on human intervention.

The autonomy intrusion detection system (AIRS) is a proposed method that is designed based on the MAPE-K model. One of its unique features is its ability to identify trends in massive log databases, which enables it to quickly detect and respond to cyber attacks. This is achieved by comparing signatures to suspicious actions, thus reducing the time lag between intrusion detection and response. The system’s efficient response time makes it an effective tool for improving data security and preventing cyber attacks.

Kassimi et al. [56] also explored the use of autonomous systems for intrusion detection, specifically the discovery of anomaly data. Their system is designed to gather information about network traffic through a mobile agent, which then stores and analyzes the data. Using Hadoop infrastructure, the large amount of data collected is organized and analyzed by multi-agent systems to provide a self-healing intrusion detection system. This autonomous approach to intrusion detection allows for the efficient and effective identification of security threats, without relying on human intervention, thus reducing response time and improving the overall security of the system.

In a related research effort, Mokhtari et al. [57] developed an autonomous proactive task dropping mechanism that utilizes probabilistic analysis to achieve autonomous functionality in a distributed computing system. The main aim is to maintain the system’s performance when faced with adverse situations. The authors employed a mathematical model to identify the most optimal task dropping decision that will enhance the system’s stability, followed by a task dropping heuristic to achieve stability within a reasonable time frame. By adopting this approach, the system can continue to operate without human intervention and quickly adapt to changes in the environment, ensuring that it remains stable and performs optimally.

5.3. Review of Autonomous Architecture and Framework for MEC

The concept of autonomy enables a system to coordinate itself without external intervention, which can be particularly useful in a MEC environment. In this subsection, we will introduce several autonomous architectures and frameworks found in the literature that can be applied in MEC environments.

Biologically inspired frameworks and architectures—the development of autonomic computing has been influenced by the human nervous system. The human nervous system has the ability to control and regulate the body’s systems, and this ability has been replicated in various autonomic computing approaches. These approaches focus on an entity that is responsible for controlling the systems, similar to how the brain controls the human body. By replicating the functions of the nervous system, autonomic computing can achieve self-regulation and self-optimization without external intervention.

There are various architectures and frameworks in the literature that can be useful in the context of MEC environments. Two of the relevant ones are biologically inspired frameworks and large-scale distributed system architecture.

Biologically inspired frameworks and architectures aim to replicate the human nervous system, which has the ability to regulate and control body systems without external intervention. In the context of AC, the approach is to create an entity responsible for controlling the system, similar to how the brain controls the human body.

Large-scale distributed system architecture includes applications such as Ocean store SMARTS from IBM and Auto Admin from Microsoft, which have been developed to manage distributed systems and databases automatically. These applications are designed to achieve the goals of AC, such as self-management and self-healing.

Another architecture that has been widely used to achieve autonomous behavior is the agent-based architecture. In this type of architecture, agents interact with each other to create a system that can oversee itself automatically. The key aspect of this architecture is the agent, which is an autonomous entity that can function without the need for a central control system.

The component-based architecture places emphasis on autonomic components and their interactions, with the goal of achieving a set objective. These components carry out monitoring, analysis, planning, and execution, which are the core functionalities of autonomous computing. By breaking down the system into smaller, more manageable components, this architecture enables a more efficient and effective approach to achieving autonomy. The autonomic components interact with each other, exchanging information and coordinating their actions to achieve the overall objective.

Technique-focused architecture is an AC architecture that leverages AI and control theory to optimize system performance. Its primary objective is to generate actions, acquire feedback, manage actions by retrying or re-planning, and generate alternative approaches to achieve specified goals. The architecture comprises features that enable learning from the environment, taking action, receiving feedback, planning, and re-planning, thus achieving autonomous behavior in a system.

On the other hand, service-oriented architecture focuses on the interaction of services to achieve autonomous functionalities. Services are loosely coupled and operate independently, offering interoperability and scalability. This architecture enables the creation of self-managed systems through the dynamic configuration of services, allowing for fault tolerance and self-healing capabilities.

5.4. Discussion

The process of automatically identifying faults is an essential aspect of a self-healing solution. However, there is limited research on self-healing in the mobile network domain. This can be attributed to several factors. Firstly, it is challenging to determine the most prevalent faults and their warning signals in mobile telecommunication networks, due to their complex nature. Moreover, experts who are responsible for identifying and solving faults often do not document them. Secondly, obtaining historical data from live networks is difficult. As a result, most self-healing solutions in existing studies are based on modeling faults in simulators. However, testing such solutions on a live network can slow down the system, which is counter-productive, making it challenging to evaluate their performance.

6. Review of Self-Healing Approaches in MEC

Self-healing capabilities integrated into network management systems hold significant potential for achieving crucial goals, such as maintaining QoS, while simultaneously reducing capital investment, operational expenses, and maintenance costs. Self-healing systems offer a methodology for identifying, categorizing, and mitigating faults. In this section, we will explore the various implementations and strategies utilized in achieving self-healing capabilities.

6.1. Various SH Implementation in MEC

MEC is a hybrid of various components that combines IT services with telecommunications. At its core, MEC involves deploying MEC servers within mobile networks. These servers act as virtualization infrastructure that hosts MEC services, software-defined networking, and network function virtualization. This provides a flexible environment where service providers can deploy their software across MEC systems from multiple vendors, creating a more open and interoperable ecosystem.

6.1.1. Network Functions Virtualization (NFV)

In a network, nodes serve as connection points and are responsible for receiving, generating, storing, and transferring data along network routes [7]. These nodes can recognize, process, and forward transmissions to other network nodes. Network nodes can also perform functions such as load balancing, firewalls, intrusion detection systems (IDS), and intrusion prevention systems (IPS). However, these network functions have traditionally required specialized hardware, which can be expensive. To address this, the concept of network functions virtualization (NFV) has emerged, which turns these network functions into software applications that can run on a virtual machine, eliminating the hardware cost constraint. NFV provides a flexible, agile, and scalable solution for networks [58].

6.1.2. Software Defined Networking (SDN)

The traditional approach to transferring and managing data in a network relies on the hardware and software integrated into devices such as routers and switches. However, SDN technology separates the software responsible for directing data flow and network management from the networking device that actually moves the data [59]. This allows for greater flexibility and control over network behavior, as well as easier management of network traffic and security policies.

SDN is a network architecture that aims to replace proprietary hardware-based equipment and services with common, software-driven methods [60]. This approach provides several benefits, including increased security, improved network visibility and control, and the ability to quickly adapt to changing enterprise requirements. With SDN, the software that controls how data travels through the network is separated from the underlying hardware responsible for transferring the data [59]. This coordination enables organizations to manage their entire network more intelligently, including in multi-location and multi-connectivity technology environments. By utilizing a unified network management software, businesses can improve flexibility and better position themselves to adapt to future needs.

The MEC platform is built on the principles of distributed systems, which consist of multiple autonomous components that work together to appear as a single system to users [61]. However, since distributed systems are prone to multiple points of failure, self-healing capabilities are necessary for the system to recover from failures on its own. Therefore, various approaches are used to achieve self-healing capabilities in MEC systems.

MEC environments can experience different types of failures, which can be broadly categorized as network infrastructure failure and MEC system failure. Network infrastructure failure may result from hardware failures or unstable connections within the mobile network. MEC system failure can be caused by a range of issues, such as hardware breakdown, software breakdown, or an overloaded MEC resource.

The MEC framework comprises the MEC host, which includes the MEC platform, MEC applications, and virtualization infrastructure. The MEC host serves as the server where MEC applications run together with the virtualization infrastructure. As this component plays a crucial role, achieving self-healing capability is imperative to ensure its uninterrupted operation.

For a business application to maintain a positive reputation in the market, it must run continuously without interruptions due to technical errors, feature updates, or natural disasters. Achieving a continuous application process can be challenging in today’s complex, multi-layered infrastructure. This is where self-healing technology comes in, enabling applications to function seamlessly, even in the face of such challenges.

6.1.3. Self Organizing Network (SON) Approach

To achieve self-healing in the Network infrastructure component of MEC, it’s essential to have autonomous functionality, and one approach that can be useful is the self-organizing network (SON) paradigm. A SON is a highly effective approach to enhance network management and meet the demand for high-quality services.

In order to achieve self-healing in the network component of a MEC system, the network must be both programmable and autonomous, as noted by Santos [62]. One effective paradigm for achieving this is the self-organizing network (SON), which is a technology designed to enhance the management and optimization of mobile radio access networks. By offering automated processes for network design, installation, management, and healing, SON has the potential to reduce the need for human involvement, as Ramiro has pointed out [63].

The management and operation of modern radio access networks (RANs) can be extremely complex, partly due to the heterogeneity of the deployed technologies and their lack of interoperability. In the radio access domain, the SON represents a promising approach to addressing these challenges, as they help to eliminate human error, reduce repetitive automation, and streamline tedious processes. By leveraging autonomic mechanisms, which can be either software or hardware-based, SON has the potential to significantly improve responsiveness and computational capacity, outpacing the capabilities of human experts and highlighting the need for self-management in RANs.

SON comprise a variety of processes that enable configuration, optimization, and healing in a self-regulating manner within a mobile network. By lowering capital expenditure (CAPEX) and operating expenditure (OPEX), SON has become a critical necessity in the operation of next-generation mobile networks.

SON functionalities have simplified the maintenance of communication networks that would otherwise be difficult and time-consuming. The programmability, flexibility, and dynamic nature of SON features have significantly enhanced the network’s operation by reducing human error and allowed for greater automation.

As previously mentioned, the SON function is traditionally divided into three categories: self-configuration, self-optimization, and self-healing. Self-healing (SH) was created as a feature of SON in compliance with the 3GPP standard to address three aspects: cell outage, self-recovery of network element (NE) software, and self-healing of board problems. Initially, the industry’s focus was on cell outage management, which is addressed by two primary components of SH: cell outage detection (COD) and compensation.

COD is responsible for automatically identifying cell outages, including sleeping cells and degrading cells. The compensation component provides an effective strategy for rectifying the identified flaws. The introduction of SH functionalities has significantly simplified the manual maintenance of communication networks, which would otherwise be difficult and time-consuming. By enabling the automatic identification and remediation of faults, SH features make the network more flexible, dynamic, and programmable, while reducing capital and operational expenditures in the operation of next-generation mobile networks.

The goal of cell outage management is to maintain network performance by continuously monitoring cells for outages and automatically adjusting network settings to restore optimal performance. In the compensation component, the algorithm adjusts the cell transmit power, antenna tilt, and azimuth to compensate for the outage [64].

In the self-healing process, the monitoring module detects a failure or a network device that triggers an alarm. Further analysis is performed to identify the root cause of the defect, using information from previous experience, measurements, or testing results. Once the defect is identified, the compensation module initiates the necessary repairs autonomously.

Considerable research has been dedicated to addressing the COD component of self-healing in a SON environment. One proposed solution to detecting cell outages is the use of hidden Markov models. This system automatically collects information about the current status of base stations (BS) and uses that information to predict when an outage may occur. While the method has been proven to predict BS conditions with 80% accuracy and a cell outage detection rate of 95%, it does not account for the influence of time or the historical sequence of cell states, which may provide useful insights for identifying erroneous cell profiles [65].

In the study by Yu et al. [66], a two-step architecture was proposed for cell outage detection, which includes data collection and data analysis phases. The first step focused on aggregating data from different sources, while the second step analyzed the data received in the first phase. The authors employed a modified LOF (Local Outlier Factor) detection technique to identify outages in various cell types and evaluate their approach using a use case. However, the study was limited in scope, as it did not consider resource allocation, load balancing, or the diagnosis and compensation components necessary for self-healing.

In contrast, Palacios et al. [67] have explored the use of statistical models to diagnose network problems, conduct recovery operations, and quickly compensate for the issue with the help of surrounding cells.

Mobile network operators have increasingly deployed more sophisticated networks, leading to the challenge of maintaining and managing these complex networks. To address this challenge, SON have become critical for automating network operations. However, current SON solutions may not be fully utilizing the massive amounts of network data available. Leveraging this data can enable the development of a proactive system that learns, predicts, and adapts dynamically to changing network requirements and volatile conditions.

The integration of SDN and NFV with SON can deliver an intelligent network. By separating the control plane from the data plane, SDN allows for more flexible network control and management, while NFV virtualizes hardware such as switches and routers. SON then acts as the “brain” of the network, reorganizing the network as needed to optimize performance. These technologies enhance SON’s ability to deal with dynamic networks by making the network programmable and adaptable to changing conditions.

6.1.4. Kubernetes Approach

Kubernetes is a self-healing solution that can be leveraged on an MEC platform to deploy and maintain uninterrupted services and applications in the face of technical glitches, feature updates, or natural disasters.

Released in 2015, Kubernetes is now one of the most widely used orchestration systems. It offers a unified platform for deploying applications and abstracts the underlying infrastructure, enabling developers to deploy applications without the need for support from system administrators. Meanwhile, system administrators can monitor and manage the availability of the supporting infrastructure. The primary objective of Kubernetes is to simplify deployments, saving time and effort [68]. To achieve this, Kubernetes provides a wide range of functionalities, including:

- Automatic bin packing: To maximize usage, the automatic bin packing feature of Kubernetes arranges containers based on resource demands and constraints.

- Horizontal scaling: This feature adjusts the number of executions and resource based on various requirements. It ensures the workloads are shared without affecting the existing ones currently running.

- Secret management: Kubernetes dissociates the management of application configuration information and sensitive data from the container image.

- Self-healing: Kubernetes has a unique self-healing feature that may restart failed containers, replace containers, and destroy containers that do not react to a user-defined health check [69]. Additionally, Kubernetes employs its self-healing functionality in a failed node by replacing and redistributing the failed containers to other active nodes.