Exploring the Potential of Distributed Computing Continuum Systems

Abstract

1. Introduction

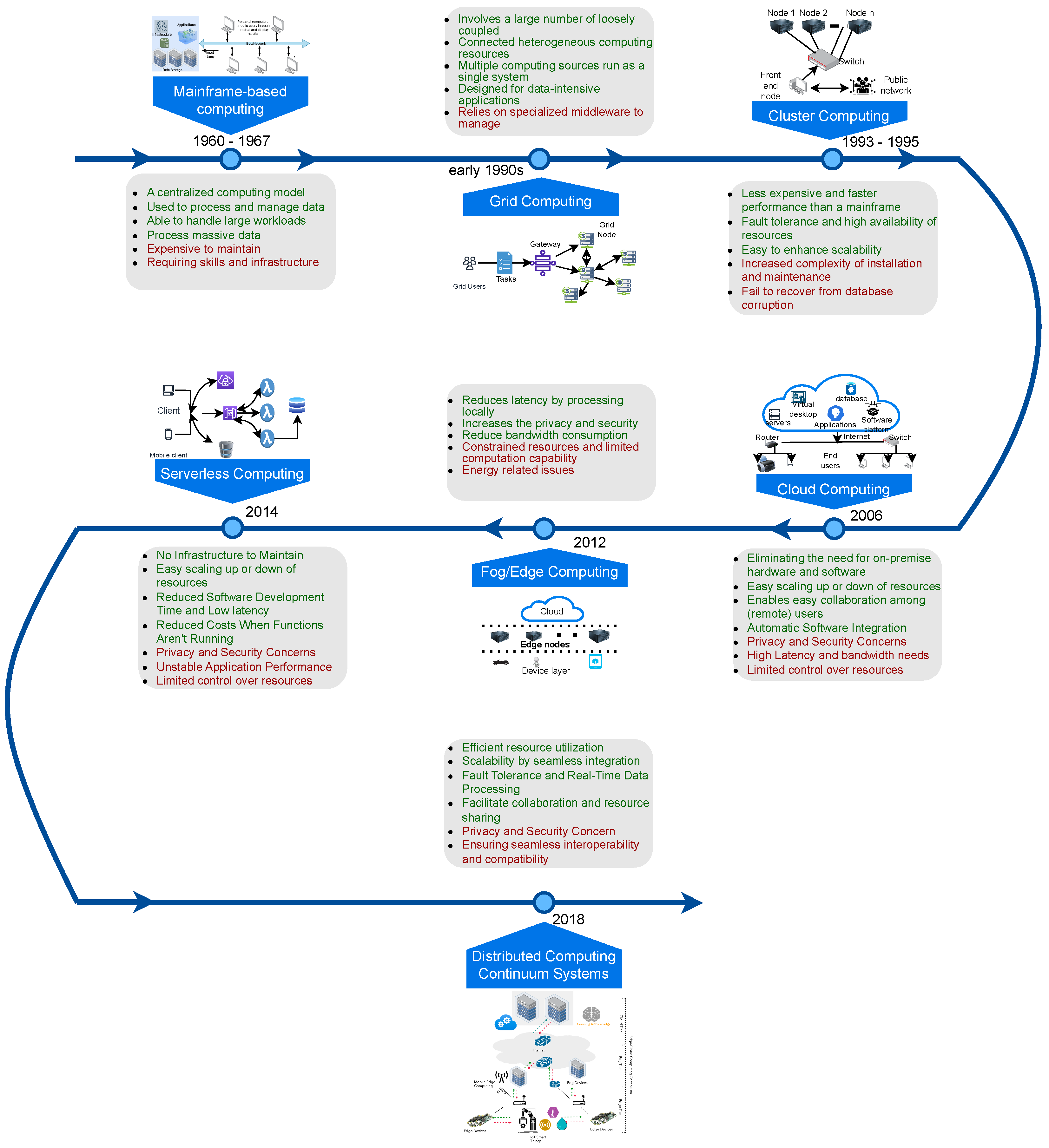

- First, we analyze the evolution of computing paradigms from the 1960s to current computing trends and discuss the benefits and limitations of each paradigm.

- Next, we discuss the potential of DCCSs built on various computing devices, along with the advantages and limitations of those devices. In addition, we analyze the overall benefits and limitations of DCCSs.

- Furthermore, we provide various applications and real-time example scenarios in which such computing paradigms are highly needed, and we highlight how these use cases benefit from DCCSs.

- Finally, we discuss several open research challenges and possible solutions for future enhancements that would make DCCSs more efficient.

2. Evolution of Distributed Computing Continuum

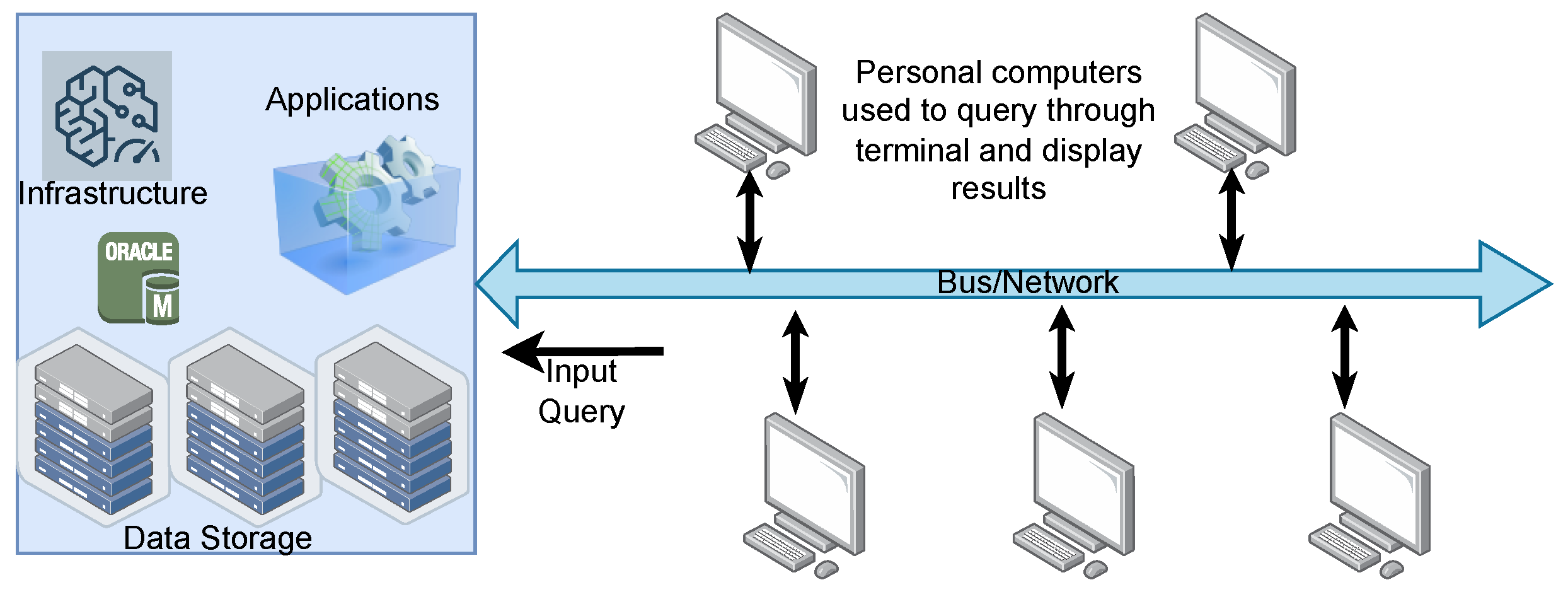

2.1. Mainframe-Based Computing

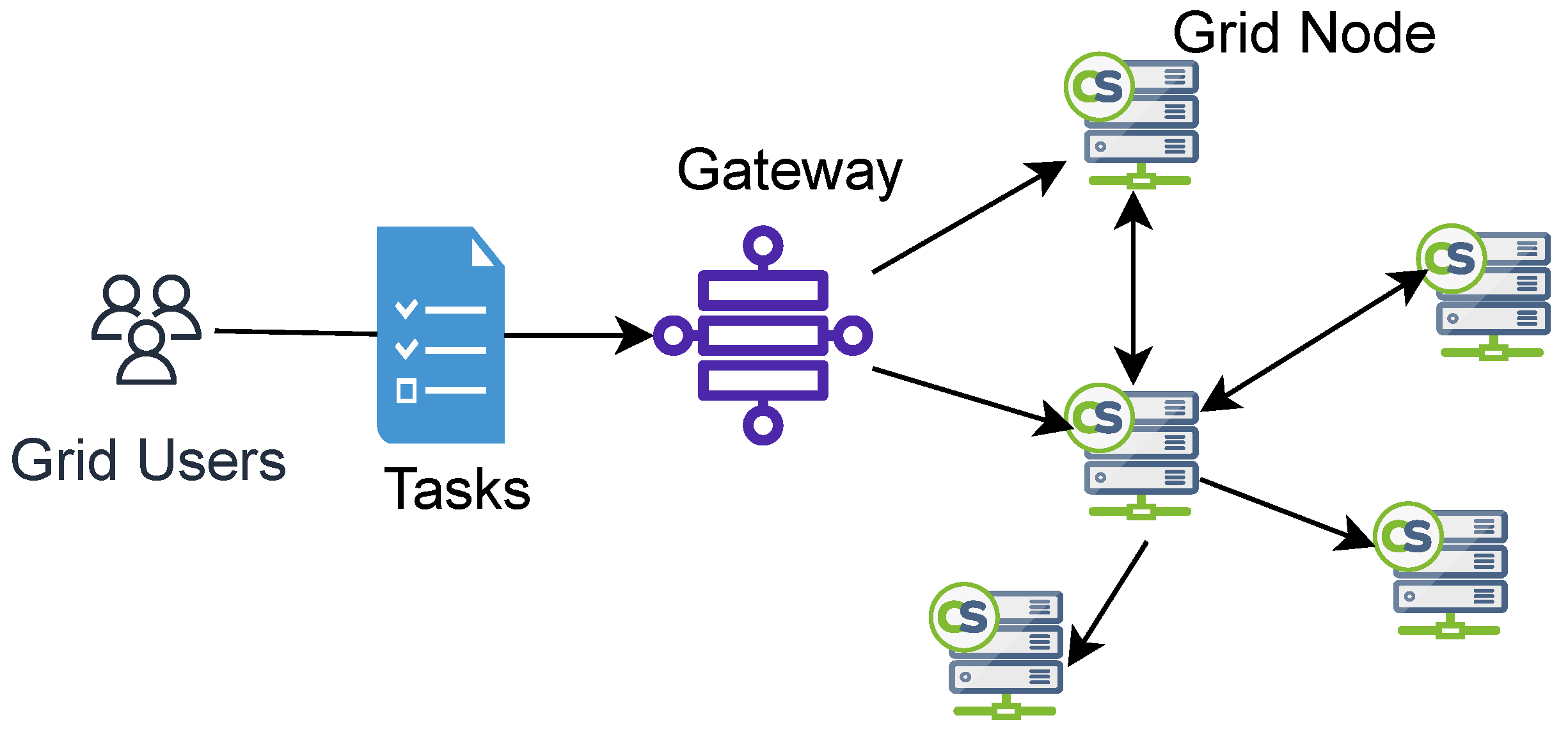

2.2. Grid Computing

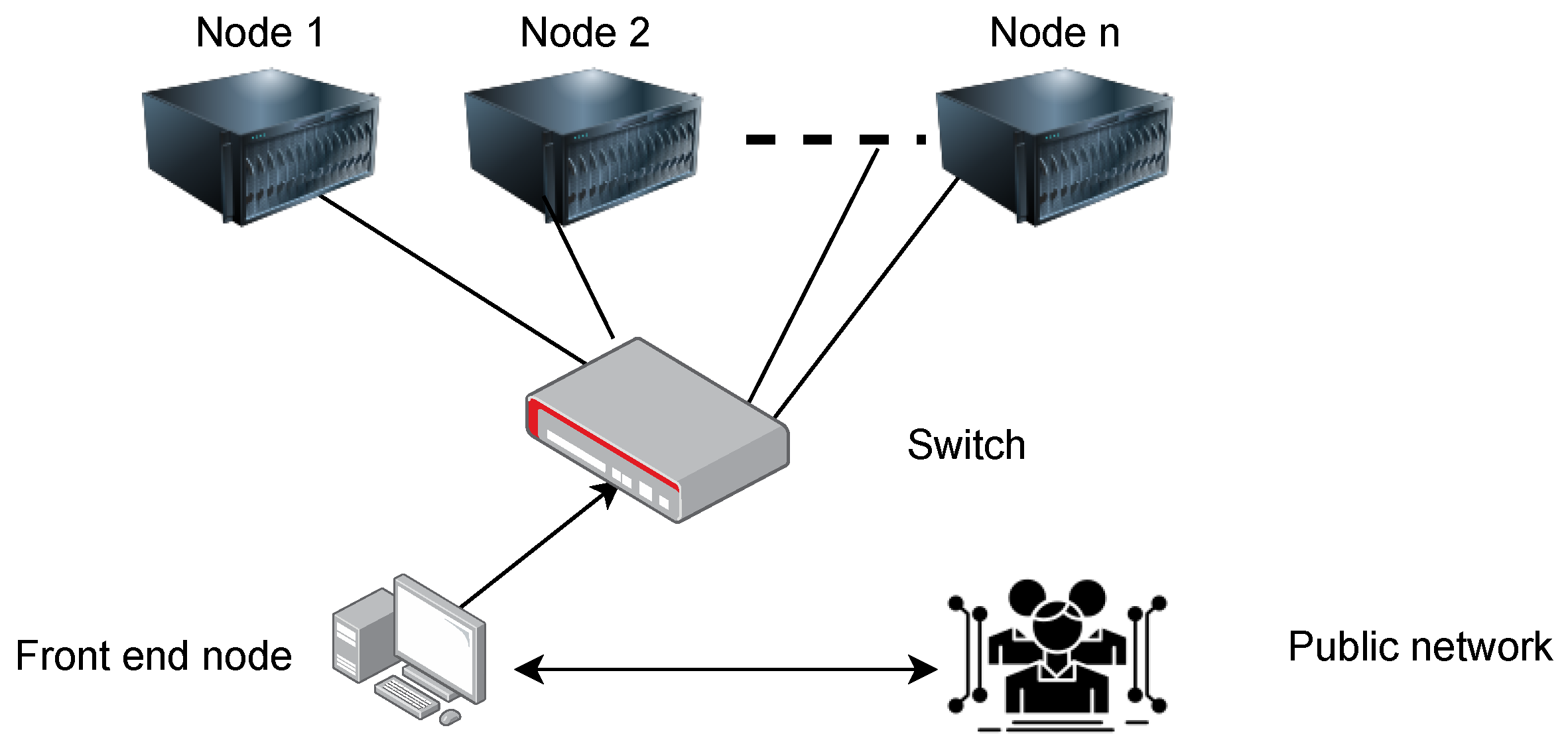

2.3. Cluster Computing

2.4. Cloud Computing

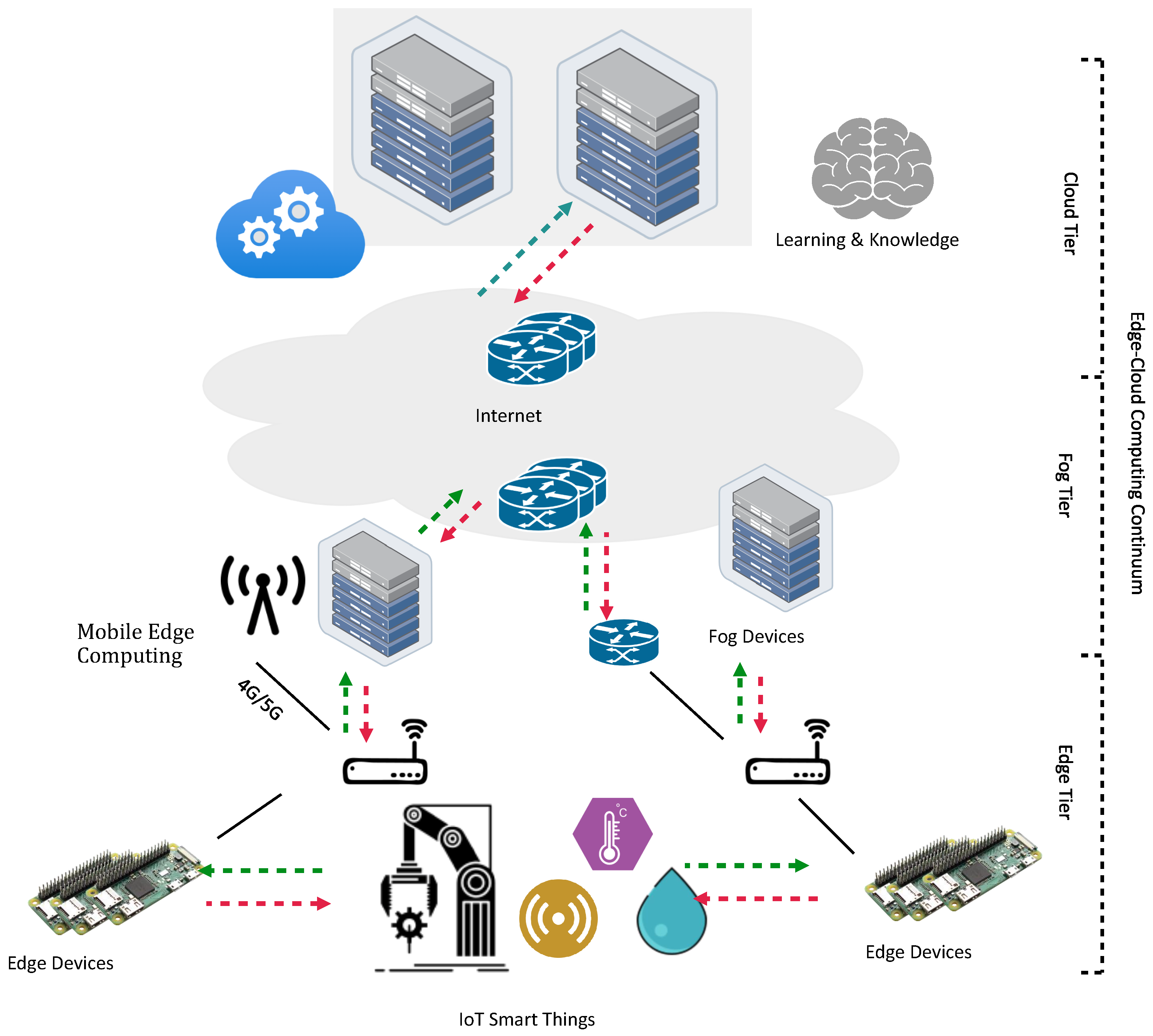

2.5. Fog and Edge Computing

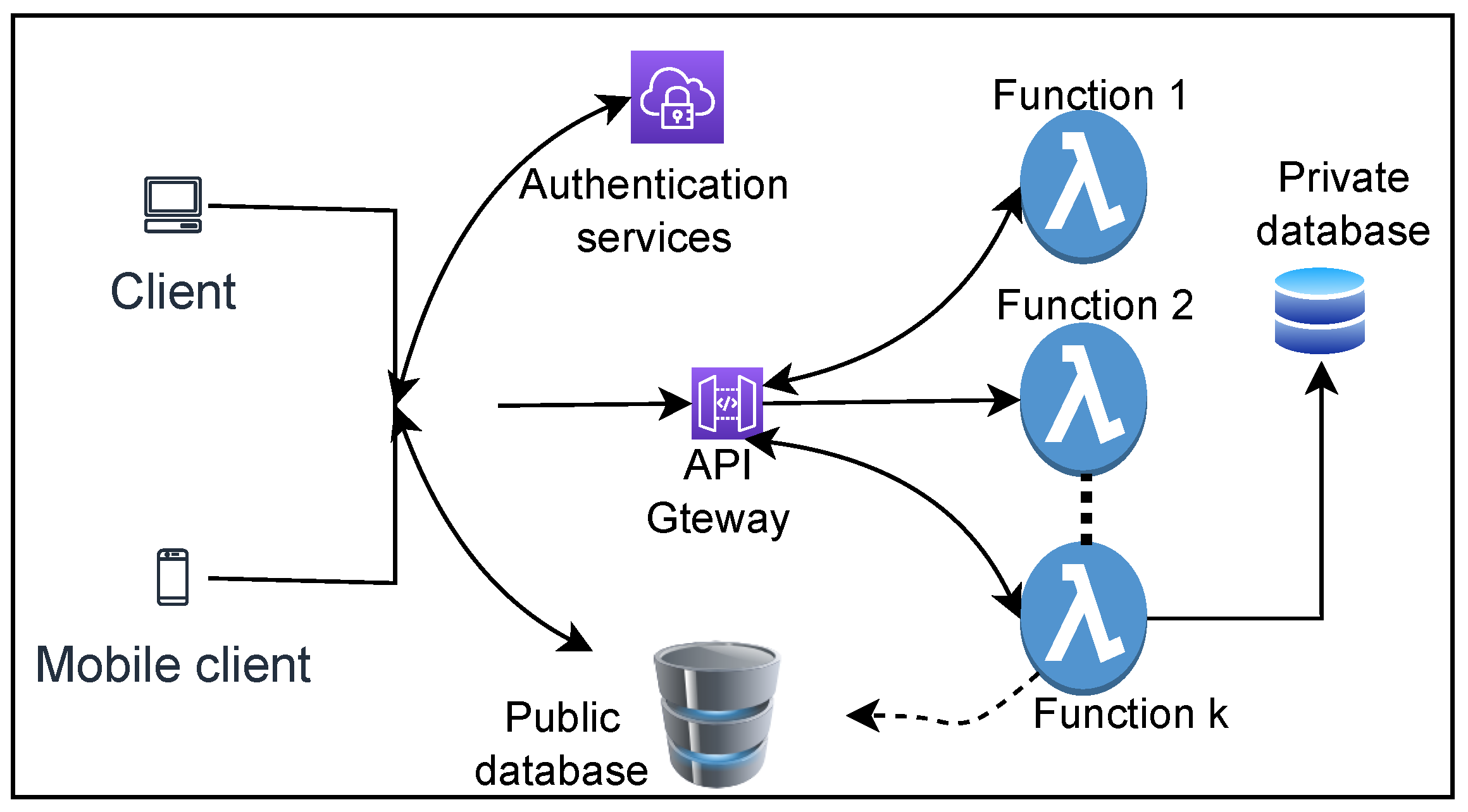

2.6. Serverless Computing

2.7. Distributed Computing Continuum Systems

- Cloud Computing vs. DCCSs: In cloud computing, users access virtualized servers, storage, and applications hosted by a cloud provider over the Internet [44]. DCCSs incorporate a wider array of resources, including edge devices, IoT sensors, mobile devices, and cloud servers, and distribute computation across them, from localized processing to centralized cloud analytics, as needed. They dynamically assign tasks to the most appropriate resource based on factors such as proximity, processing capability, and data sensitivity, minimizing latency and maximizing resource utilization. Cloud infrastructure, in contrast, provisions resources according to predetermined configurations and subscription plans. Users can adjust capacity to their needs, although relying on a single provider's scalable offering can lead to vendor lock-in. DCCS functionality, by comparison, can evolve with the available resources and demand, enabling flexibility and effective resource utilization. For example, data can be processed on edge devices instead of cloud servers when a task requires an immediate response or involves data that are too sensitive or latency-critical to be processed centrally.

- Edge Computing vs. DCCSs: In edge computing, data are processed near their source, reducing latency and conserving bandwidth. Low-power devices, such as sensors and gateways, are usually treated as edge servers [45]. DCCSs integrate not only edge devices but also cloud servers and various other computing resources, which allows them to dynamically allocate tasks across available devices, optimizing resource utilization, enhancing responsiveness, and enabling real-time processing. By harnessing an extensive array of resources, DCCSs can perform adaptive and efficient computation beyond the capabilities of individual devices. DCCSs also support fault tolerance across the continuum, which edge computing alone does not: when a device fails, computation is not interrupted, because the task is moved to another edge server or to a cloud server.

- Serverless Computing vs. DCCSs: In serverless computing, resources are automatically scaled based on demand, and users are billed only for what they use. In DCCSs, tasks are routinely distributed according to proximity, capacity, and urgency. In contrast to serverless computing, DCCSs primarily focus on resource efficiency, integrating a wide range of devices, and enabling real-time processing across the continuum (edge-to-cloud).
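The comparisons above describe DCCSs as assigning each task to the most appropriate resource based on proximity, processing capability, and data sensitivity. The following is a minimal Python sketch of such a placement rule, not a description of any particular DCCS implementation. The `Resource` and `Task` fields, the `place` function, and all numeric values are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Resource:
    name: str
    latency_ms: float  # assumed round-trip time from the data source (proximity)
    free_cpu: float    # assumed spare compute capacity, arbitrary units
    trusted: bool      # assumed flag: may this resource handle sensitive data?


@dataclass(frozen=True)
class Task:
    cpu_demand: float
    max_latency_ms: float
    sensitive: bool


def place(task: Task, resources: Sequence[Resource]) -> Optional[Resource]:
    """Return the closest resource that satisfies all task constraints, or None."""
    feasible = [
        r for r in resources
        if r.free_cpu >= task.cpu_demand            # processing capability
        and r.latency_ms <= task.max_latency_ms     # proximity / latency bound
        and (r.trusted or not task.sensitive)       # data sensitivity
    ]
    if not feasible:
        return None
    # Prefer the lowest latency; break ties by the largest spare capacity.
    return min(feasible, key=lambda r: (r.latency_ms, -r.free_cpu))


# Hypothetical three-tier continuum used only for this example.
CONTINUUM = [
    Resource("sensor-gateway", latency_ms=2.0, free_cpu=1.0, trusted=True),
    Resource("edge-server", latency_ms=8.0, free_cpu=16.0, trusted=True),
    Resource("cloud", latency_ms=80.0, free_cpu=1000.0, trusted=False),
]
```

With these assumed values, a small sensitive task stays on the gateway, a heavier one moves to the edge server, and a large non-sensitive batch job goes to the cloud. A sensitive job that only the cloud could run has no feasible placement under this simple rule.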

3. Potential of Distributed Computing Continuum

3.1. Classes of Computing Devices Used in DCCSs

3.1.1. Embedded Computers

3.1.2. Internet of Things

3.1.3. Mobile Devices

3.1.4. Desktop Computers

3.1.5. Servers and Supercomputers

3.2. Benefits

- Optimize bandwidth: In DCCSs, computation tasks are intelligently distributed between edge devices and centralized cloud resources. This distribution minimizes the need to continuously transfer high-bandwidth data, since only essential data (when local device resources are insufficient) or insights are transmitted to the cloud. DCCSs prioritize local processing at the edge, reducing bandwidth demands and improving response times compared with cloud computing, which often sends data back and forth between devices and remote servers. Additionally, they reduce the need for extensive data transfers by utilizing localized caching and processing. DCCSs are particularly well suited to scenarios with limited or unreliable connectivity because their dynamic approach conserves bandwidth and accelerates decision making.

- Scalability: DCCSs achieve scalability by dynamically distributing computation tasks across diverse resources. Consider, for example, a smart city that uses DCCSs for traffic management. During regular traffic hours, the system may use edge devices and local servers to process real-time traffic data. If the system detects an increase in traffic (such as during the morning or evening rush hours) or an unexpected surge, additional resources (such as the cloud) can be integrated to handle the increased load without compromising performance. Scalability is especially advantageous when workloads fluctuate or demand spikes suddenly, since DCCSs effectively utilize available resources without overwhelming any one component. This architectural agility allows DCCSs to accommodate the growing computational needs of modern applications and services.

- Low latency: DCCSs achieve low latency because they process tasks close to the data source. Cloud-based models, by contrast, require sending data to a remote cloud server for processing, which introduces network latency that can significantly delay the response. Low latency is extremely important in smart city applications, where traffic management depends on efficient real-time responses. Consider the scenario of an accident that causes traffic congestion on a busy road. Sensors deployed across the roadway can detect or predict this congestion and immediately notify nearby edge servers. With their processing capabilities, these edge servers can analyze the information instantly and make decisions in a timely manner. For instance, the decision-making system can adjust traffic signals in real time, reroute traffic away from a congested route, or instantly dispatch emergency services. The localized processing of DCCSs minimizes latency by allowing immediate analysis on local computing devices (such as edge servers), so that appropriate action is taken quickly.

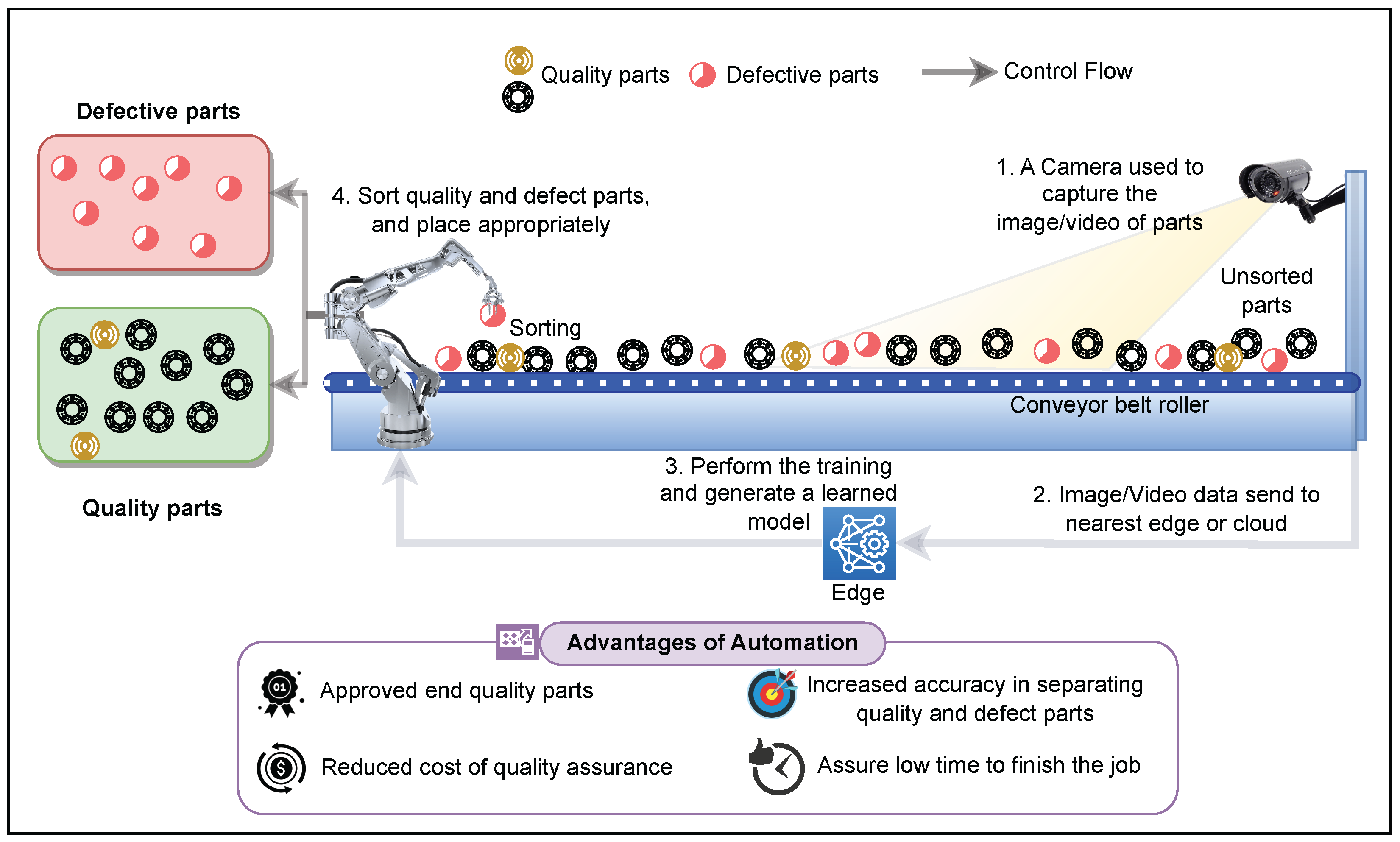

- Optimized resource utilization and load balancing: DCCSs ensure optimal resource utilization through efficient and dynamic resource allocation across the continuum. For example, consider a manufacturing facility that uses DCCSs to control quality in real time during production. A variety of sensors or cameras are integrated into the production line to capture product parameters, which need to be further analyzed. Depending on resource availability or computation intensiveness, DCCSs can dynamically allocate these data to edge nodes or the cloud. Basic data preprocessing and initial analysis can be carried out at the edge, whereas complex analyses (such as image or video analytics or AI/ML tasks) can be transferred to the cloud. Additionally, DCCSs can federate tasks among edge servers depending on computational needs and resource availability, which minimizes bandwidth usage and latency even further.

- Resilience, flexibility, and reliability: By distributing tasks across a diverse set of resources, DCCSs provide resilience, flexibility, and reliability, because the system can keep running even if one part of it is compromised. Consider the case of a disaster (such as a hurricane) that requires an emergency management system for a smart city. A network of sensors is deployed throughout the city to read weather conditions, water levels, and structural integrity. Data from these sensors are transmitted to local servers or the nearest edge server for initial analysis, which helps identify potential hazards. If these servers fail to respond due to power outages, disaster damage, or connection issues, the DCCS can immediately transfer the workload to another working edge server. If there are no active edge servers or local servers in the city, the data can be sent to the cloud. When DCCSs are used, the emergency management system becomes more resilient, flexible, and reliable, allowing disasters to be handled effectively even under adverse conditions.
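The failover behavior described in the resilience scenario (try the nearest edge server, then another edge server, then the cloud) can be sketched as an ordered fallback chain. This is a simplified illustration under stated assumptions: the `run_with_failover` helper, the handler functions, and the use of `ConnectionError` to signal an unreachable server are all hypothetical and not taken from the source.

```python
from typing import Callable, Sequence, Tuple

Handler = Callable[[str], str]


def run_with_failover(task: str, targets: Sequence[Tuple[str, Handler]]) -> Tuple[str, str]:
    """Try each target in order; return (target_name, result) for the first success."""
    errors = []
    for name, handler in targets:
        try:
            return name, handler(task)
        except ConnectionError as exc:
            # Assumed failure signal: the target is unreachable (outage, damage, link loss).
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all targets failed: " + "; ".join(errors))


def offline(_task: str) -> str:
    """Hypothetical handler standing in for a server that cannot be reached."""
    raise ConnectionError("power outage")


def analyze(task: str) -> str:
    """Hypothetical handler standing in for a working server."""
    return f"analyzed {task}"


# Ordered from nearest to farthest: two edge servers, then the cloud.
targets = [("edge-a", offline), ("edge-b", offline), ("cloud", analyze)]
```

Calling `run_with_failover("water-level", targets)` skips both unreachable edge servers and returns the result from the cloud. A real system would also need health checks, timeouts, and state transfer, which this sketch omits.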

3.3. Challenges

- Interoperability: DCCSs are multi-proprietary, meaning that the infrastructure resources and their associated middleware layers belong to different organizations. One can imagine an application running some services in-house, some services with high computational needs in the cloud, some latency-sensitive services in fog nodes next to the networking stations, and some other services at the edge to enhance responsiveness and reduce overall bandwidth requirements. Each set of nodes might be owned by a different organization and hence has different semantics. The application (which is built on all these services) therefore needs to cope with very different devices that, on top of that, have different owners with, perhaps, different priorities when designing their systems.

- Complexity of Governance: Currently, Internet-based systems are governed through the application logic and only residually at the infrastructure level by cloud orchestrators, which can basically run more copies of an existing job or schedule new jobs. These orchestrators are also typically centralized entities, which clearly do not fit the requirements of DCCSs. Another interesting aspect of current Internet-based systems is their use of service-level objectives (SLOs) to set minimal performance indicators. Unfortunately, current SLOs are only low-level metrics (such as CPU usage) or time-related metrics (such as end-to-end response time). Using SLOs for DCCSs seems appropriate. However, we identify two key aspects that need to be improved:

  1. They would need to cover all aspects/components of the system so that the governance strategies are aligned regardless of what is being controlled.

  2. Their granularity would need to be adequate to perform surgical interventions. Simply put, if the SLO is on end-to-end response time and it is violated, discovering which specific service/device/component/aspect is producing the delay can be an overwhelming task that cannot comply with time-constraint requirements.

- Data synchronization: In DCCSs, data are constantly generated, updated, moved, and accessed across a wide range of distributed devices, and consistency must be ensured across the continuum through proper synchronization mechanisms [86]. Maintaining data integrity, coherence, and consistency becomes increasingly difficult as data are processed and modified at different locations and speeds. End-to-end delays, network issues, and varying computational speeds (due to resource availability or constraints) can lead to inconsistencies or conflicts between data versions. Furthermore, data synchronization across hybrid setups involving diverse computational resources (cloud, edge, constrained IoT, or sensor nodes) presents additional challenges due to varying processing capabilities and connectivity limitations [87]. DCCSs therefore require sophisticated synchronization mechanisms to ensure that all components can access up-to-date and accurate data.

- Sustainability and energy efficiency: In terms of sustainability, there are two key aspects to consider:

  1. The vast number of computing devices and connections;

  2. Their energy sources.

Regarding the first consideration, the computational infrastructure will keep growing in the coming years. However, it is important to understand the need to reuse existing infrastructure in order to limit the addition of new resources. Unfortunately, this complicates previous topics such as governance and interoperability, as dedicated resources are always easier to incorporate into the system than older ones with, perhaps, a different initial purpose.

The second sustainability consideration relates to the energy sources that are used in computing systems. It is clear that AI-based systems require large amounts of energy. Hence, being able to harvest this energy from renewable sources is of great interest. Unfortunately, solutions that can do that also require control over the energy grid, which is usually not available.

Energy efficiency relates to sustainability through the idea of using the minimum energy required for any job. This translates to choosing the right algorithm/service/device/platform for each case, which requires solving very complex multi-variate optimization problems.

Additionally, energy efficiency is key for energy-constrained devices, that is, all devices that are not permanently connected to the energy infrastructure. Their usage must be compatible with their charging/discharging cycles so that they are always available when needed.

- Privacy and security: In DCCSs, privacy and security are inherent problems because of their complex structure, which spans edge devices, cloud platforms, and data transmissions. The majority of privacy and security issues arise from sharing data, communicating over networks, and sharing resources across the continuum. Maintaining consistent security measures becomes more difficult due to dynamic scaling and resource sharing. Ensuring the privacy and security of data, resources, and communication across the continuum requires encryption, access controls, monitoring, and compliance.
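The energy-efficiency challenge above amounts to choosing, for each job, the device that finishes in time with the least energy. The sketch below shows a deliberately simplified, single-job version of that choice in Python. It is an illustration under assumptions, not the multi-variate optimization the text refers to: the `Device` fields, the `transfer_j` cost of shipping input data to a device, and every number are hypothetical, and transfer time is ignored.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Device:
    name: str
    ops_per_s: float   # assumed sustained throughput
    watts: float       # assumed average power draw while computing
    transfer_j: float  # assumed energy to ship the job's input to this device


def pick_min_energy(
    job_ops: float, deadline_s: float, devices: Sequence[Device]
) -> Optional[Tuple[Device, float]]:
    """Return (device, joules) with the lowest total energy that meets the deadline."""
    best: Optional[Tuple[Device, float]] = None
    for d in devices:
        runtime_s = job_ops / d.ops_per_s
        if runtime_s > deadline_s:
            continue  # too slow for this job
        energy_j = runtime_s * d.watts + d.transfer_j
        if best is None or energy_j < best[1]:
            best = (d, energy_j)
    return best


# Hypothetical devices spanning the continuum.
DEVICES = [
    Device("microcontroller", ops_per_s=1e6, watts=0.1, transfer_j=0.0),
    Device("edge-server", ops_per_s=1e9, watts=50.0, transfer_j=0.5),
    Device("cloud-gpu", ops_per_s=1e11, watts=400.0, transfer_j=5.0),
]
```

Under these assumed figures, a small job with a relaxed deadline stays on the microcontroller, the same job with a tight deadline moves to the edge server, and a large job is cheapest in the cloud despite the transfer cost. Real deployments would also have to account for battery state, renewable availability, and concurrent jobs.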

4. Applications

4.1. Industry Automation

4.2. Transportation Systems

4.3. Mobile Robots

4.4. Smart Cities

4.5. Healthcare

5. Scope for Further Research

5.1. Learning Models for DCCS

5.2. Need for Intelligent Protocols

5.3. Use of Causality

5.4. Continuous Diagnostics and Mitigation

5.5. Data Fragmentation and Clustered Edge Intelligence

5.6. Energy-Efficiency and Sustainability

5.7. Controlling Data Gravity and Data Friction

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AGVs | Automated Guided Vehicles |
| AMQP | Advanced Message Queuing Protocol |
| API | Application Program Interface |
| AR | Augmented Reality |
| ATM | Automated Teller Machine |
| AUVs | Autonomous Underwater Vehicles |
| CAGR | Compound Annual Growth Rate |
| CDM | Continuous Diagnostics and Mitigation |
| CDN | Content Delivery Network |
| CEI | Clustered Edge Intelligence |
| CoAP | Constrained Application Protocol |
| CPU | Central Processing Unit |
| CT | Computerized Tomography |
| DCCS | Distributed Computing Continuum System |
| DDS | Data Distribution Service |
| DRL | Deep Reinforcement Learning |
| FEP | Free Energy Principle |
| FDG | Federated Domain Generalization |
| FL | Federated Learning |
| GAN | Generative Adversarial Networks |
| GKR | Graphical Knowledge Representation |
| ICU | Intensive Care Unit |
| IoT | Internet of Things |
| LLM | Large Language Models |
| ML | Machine Learning |
| MQTT | Message Queuing Telemetry Transport |
| QoS | Quality of Service |
| SAR | Search-and-Rescue Robots |
| SLO | Service Level Objective |
| UGVs | Unmanned Ground Vehicles |
| VR | Virtual Reality |
| WAN | Wide-Area Networks |
| WMR | Wearable Mobile Robots |
| ZTA | Zero-Trust Architecture |
| ZTP | Zero-Touch Provisioning |

References

- De Donno, M.; Tange, K.; Dragoni, N. Foundations and evolution of modern computing paradigms: Cloud, iot, edge, and fog. IEEE Access 2019, 7, 150936–150948.

- Yuan, J.; Xiao, H.; Shen, Z.; Zhang, T.; Jin, J. ELECT: Energy-efficient intelligent edge–cloud collaboration for remote IoT services. Future Gener. Comput. Syst. 2023, 147, 179–194.

- Alsamhi, S.H.; Shvetsov, A.V.; Kumar, S.; Hassan, J.; Alhartomi, M.A.; Shvetsova, S.V.; Sahal, R.; Hawbani, A. Computing in the sky: A survey on intelligent ubiquitous computing for uav-assisted 6g networks and industry 4.0/5.0. Drones 2022, 6, 177.

- Ometov, A.; Molua, O.L.; Komarov, M.; Nurmi, J. A survey of security in cloud, edge, and fog computing. Sensors 2022, 22, 927.

- Ren, J.; Zhang, D.; He, S.; Zhang, Y.; Li, T. A survey on end-edge-cloud orchestrated network computing paradigms: Transparent computing, mobile edge computing, fog computing, and cloudlet. ACM Comput. Surv. 2019, 52, 1–36.

- Zhang, Y.; Ren, J.; Liu, J.; Xu, C.; Guo, H.; Liu, Y. A survey on emerging computing paradigms for big data. Chin. J. Electron. 2017, 26, 1–12.

- Angel, N.A.; Ravindran, D.; Vincent, P.D.R.; Srinivasan, K.; Hu, Y.C. Recent advances in evolving computing paradigms: Cloud, edge, and fog technologies. Sensors 2021, 22, 196.

- Lyytinen, K.; Yoo, Y. Ubiquitous computing. Commun. ACM 2002, 45, 63–96.

- Dustdar, S.; Pujol, V.C.; Donta, P.K. On distributed computing continuum systems. IEEE Trans. Knowl. Data Eng. 2022, 35, 4092–4105.

- Casamayor Pujol, V.; Morichetta, A.; Murturi, I.; Kumar Donta, P.; Dustdar, S. Fundamental research challenges for distributed computing continuum systems. Information 2023, 14, 198.

- Donta, P.K.; Dustdar, S. The promising role of representation learning for distributed computing continuum systems. In Proceedings of the 2022 IEEE International Conference on Service-Oriented System Engineering (SOSE), Newark, CA, USA, 15–18 August 2022; pp. 126–132.

- Orive, A.; Agirre, A.; Truong, H.L.; Sarachaga, I.; Marcos, M. Quality of Service Aware Orchestration for Cloud–Edge Continuum Applications. Sensors 2022, 22, 1755.

- Filho, C.P.; Marques, E., Jr.; Chang, V.; Dos Santos, L.; Bernardini, F.; Pires, P.F.; Ochi, L.; Delicato, F.C. A systematic literature review on distributed machine learning in edge computing. Sensors 2022, 22, 2665.

- Iansiti, M.; Clark, K.B. Integration and dynamic capability: Evidence from product development in automobiles and mainframe computers. Ind. Corp. Chang. 1994, 3, 557–605.

- Greenstein, S.M. Lock-in and the costs of switching mainframe computer vendors: What do buyers see? Ind. Corp. Chang. 1997, 6, 247–273.

- Schwiegelshohn, U.; Badia, R.M.; Bubak, M.; Danelutto, M.; Dustdar, S.; Gagliardi, F.; Geiger, A.; Hluchy, L.; Kranzlmüller, D.; Laure, E.; et al. Perspectives on grid computing. Future Gener. Comput. Syst. 2010, 26, 1104–1115.

- Casanova, H. Distributed computing research issues in grid computing. ACM SIGAct News 2002, 33, 50–70.

- Yu, J.; Buyya, R. A taxonomy of workflow management systems for grid computing. J. Grid Comput. 2005, 3, 171–200.

- Yeo, C.S.; Buyya, R. A taxonomy of market-based resource management systems for utility-driven cluster computing. Softw. Pract. Exp. 2006, 36, 1381–1419.

- Baker, M.; Buyya, R. Cluster computing: The commodity supercomputer. Softw. Pract. Exp. 1999, 29, 551–576.

- Barak, A.; La’adan, O. The MOSIX multicomputer operating system for high performance cluster computing. Future Gener. Comput. Syst. 1998, 13, 361–372.

- Chiang, M.; Zhang, T. Fog and IoT: An overview of research opportunities. IEEE Internet Things J. 2016, 3, 854–864.

- Bonomi, F.; Milito, R.; Natarajan, P.; Zhu, J. Fog computing: A platform for internet of things and analytics. In Big Data and Internet of Things: A Roadmap for Smart Environments; Springer: Berlin/Heidelberg, Germany, 2014; pp. 169–186.

- Buyya, R.; Srirama, S.N. Fog and Edge Computing: Principles and Paradigms; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Yi, S.; Hao, Z.; Qin, Z.; Li, Q. Fog computing: Platform and applications. In Proceedings of the 2015 Third IEEE workshop on hot topics in web systems and technologies (HotWeb), Washington, DC, USA, 12–13 November 2015; pp. 73–78.

- Avasalcai, C.; Murturi, I.; Dustdar, S. Edge and fog: A survey, use cases, and future challenges. In Fog Computing: Theory and Practice; John Wiley & Sons: Hoboken, NJ, USA, 2020; pp. 43–65.

- Luo, Q.; Hu, S.; Li, C.; Li, G.; Shi, W. Resource scheduling in edge computing: A survey. IEEE Commun. Surv. Tutor. 2021, 23, 2131–2165.

- Martin Wisniewski, L.; Bec, J.M.; Boguszewski, G.; Gamatié, A. Hardware Solutions for Low-Power Smart Edge Computing. J. Low Power Electron. Appl. 2022, 12, 61.

- Sulieman, N.A.; Ricciardi Celsi, L.; Li, W.; Zomaya, A.; Villari, M. Edge-oriented computing: A survey on research and use cases. Energies 2022, 15, 452.

- Shi, W.; Dustdar, S. The promise of edge computing. Computer 2016, 49, 78–81.

- Li, C.; Xue, Y.; Wang, J.; Zhang, W.; Li, T. Edge-oriented computing paradigms: A survey on architecture design and system management. ACM Comput. Surv. 2018, 51, 1–34.

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330.

- Dustdar, S.; Murturi, I. Towards distributed edge-based systems. In Proceedings of the 2020 IEEE Second International Conference on Cognitive Machine Intelligence (CogMI), Atlanta, GA, USA, 28–31 October 2020; pp. 1–9.

- Taibi, D.; Spillner, J.; Wawruch, K. Serverless computing-where are we now, and where are we heading? IEEE Softw. 2020, 38, 25–31.

- Shafiei, H.; Khonsari, A.; Mousavi, P. Serverless computing: A survey of opportunities, challenges, and applications. ACM Comput. Surv. 2022, 54, 1–32.

- Wen, J.; Chen, Z.; Jin, X.; Liu, X. Rise of the planet of serverless computing: A systematic review. ACM Trans. Softw. Eng. Methodol. 2023, 32, 1–61.

- Poojara, S.R.; Dehury, C.K.; Jakovits, P.; Srirama, S.N. Serverless data pipeline approaches for IoT data in fog and cloud computing. Future Gener. Comput. Syst. 2022, 130, 91–105.

- Poojara, S.; Dehury, C.K.; Jakovits, P.; Srirama, S.N. Serverless Data Pipelines for IoT Data Analytics: A Cloud Vendors Perspective and Solutions. In Predictive Analytics in Cloud, Fog, and Edge Computing: Perspectives and Practices of Blockchain, IoT, and 5G; Springer: Berlin/Heidelberg, Germany, 2022; pp. 107–132.

- Li, Z.; Guo, L.; Cheng, J.; Chen, Q.; He, B.; Guo, M. The serverless computing survey: A technical primer for design architecture. ACM Comput. Surv. 2022, 54, 1–34.

- Naranjo, D.M.; Risco, S.; de Alfonso, C.; Pérez, A.; Blanquer, I.; Moltó, G. Accelerated serverless computing based on GPU virtualization. J. Parallel Distrib. Comput. 2020, 139, 32–42.

- Castro, P.; Ishakian, V.; Muthusamy, V.; Slominski, A. The rise of serverless computing. Commun. ACM 2019, 62, 44–54.

- Donta, P.K.; Sedlak, B.; Casamayor Pujol, V.; Dustdar, S. Governance and sustainability of distributed continuum systems: A big data approach. J. Big Data 2023, 10, 53.

- Beckman, P.; Dongarra, J.; Ferrier, N.; Fox, G.; Moore, T.; Reed, D.; Beck, M. Harnessing the computing continuum for programming our world. In Fog Computing: Theory and Practice; John Wiley & Sons: Hoboken, NJ, USA, 2020; pp. 215–230.

- Ketu, S.; Mishra, P.K. Cloud, fog and mist computing in IoT: An indication of emerging opportunities. IETE Tech. Rev. 2022, 39, 713–724.

- Masip-Bruin, X.; Marín-Tordera, E.; Sánchez-López, S.; Garcia, J.; Jukan, A.; Juan Ferrer, A.; Queralt, A.; Salis, A.; Bartoli, A.; Cankar, M.; et al. Managing the cloud continuum: Lessons learnt from a real fog-to-cloud deployment. Sensors 2021, 21, 2974.

- Pujol, V.C.; Donta, P.K.; Morichetta, A.; Murturi, I.; Dustdar, S. Edge Intelligence—Research Opportunities for Distributed Computing Continuum Systems. IEEE Internet Comput. 2023, 27, 53–74.

- Huang, J.; Li, R.; An, J.; Ntalasha, D.; Yang, F.; Li, K. Energy-efficient resource utilization for heterogeneous embedded computing systems. IEEE Trans. Comput. 2017, 66, 1518–1531.

- Leveson, N.G. Software safety in embedded computer systems. Commun. ACM 1991, 34, 34–46.

- Das, A.; Kumar, A.; Veeravalli, B. Energy-aware task mapping and scheduling for reliable embedded computing systems. ACM Trans. Embed. Comput. Syst. 2014, 13, 1–27.

- Rodrigues, J.J.; Segundo, D.B.D.R.; Junqueira, H.A.; Sabino, M.H.; Prince, R.M.; Al-Muhtadi, J.; De Albuquerque, V.H.C. Enabling technologies for the internet of health things. IEEE Access 2018, 6, 13129–13141.

- Nahavandi, D.; Alizadehsani, R.; Khosravi, A.; Acharya, U.R. Application of artificial intelligence in wearable devices: Opportunities and challenges. Comput. Methods Programs Biomed. 2022, 213, 106541.

- Scilingo, E.P.; Valenza, G. Recent advances on wearable electronics and embedded computing systems for biomedical applications. Electronics 2017, 6, 12.

- Iqbal, S.M.; Mahgoub, I.; Du, E.; Leavitt, M.A.; Asghar, W. Advances in healthcare wearable devices. NPJ Flex. Electron. 2021, 5, 9.

- Portilla, L.; Loganathan, K.; Faber, H.; Eid, A.; Hester, J.G.; Tentzeris, M.M.; Fattori, M.; Cantatore, E.; Jiang, C.; Nathan, A.; et al. Wirelessly powered large-area electronics for the Internet of Things. Nat. Electron. 2023, 6, 10–17.

- Ali, I.; Ahmedy, I.; Gani, A.; Munir, M.U.; Anisi, M.H. Data collection in studies on Internet of things (IoT), wireless sensor networks (WSNs), and sensor cloud (SC): Similarities and differences. IEEE Access 2022, 10, 33909–33931.

- Hussain, S.; Ullah, S.S.; Ali, I. An efficient content source verification scheme for multi-receiver in NDN-based Internet of Things. Clust. Comput. 2022, 25, 1749–1764.

- Bayılmış, C.; Ebleme, M.A.; Çavuşoğlu, Ü.; Kücük, K.; Sevin, A. A survey on communication protocols and performance evaluations for Internet of Things. Digit. Commun. Netw. 2022, 8, 1094–1104.

- Dizdarević, J.; Carpio, F.; Jukan, A.; Masip-Bruin, X. A survey of communication protocols for internet of things and related challenges of fog and cloud computing integration. ACM Comput. Surv. 2019, 51, 1–29.

- Khanna, A.; Kaur, S. Evolution of Internet of Things (IoT) and its significant impact in the field of Precision Agriculture. Comput. Electron. Agric. 2019, 157, 218–231.

- Alavikia, Z.; Shabro, M. A comprehensive layered approach for implementing internet of things-enabled smart grid: A survey. Digit. Commun. Netw. 2022, 8, 388–410.

- Goudarzi, A.; Ghayoor, F.; Waseem, M.; Fahad, S.; Traore, I. A Survey on IoT-Enabled Smart Grids: Emerging, Applications, Challenges, and Outlook. Energies 2022, 15, 6984.

- Aazhang, B.; Ahokangas, P.; Alves, H.; Alouini, M.S.; Beek, J.; Benn, H.; Bennis, M.; Belfiore, J.; Strinati, E.; Chen, F.; et al. Key drivers and research challenges for 6G ubiquitous wireless intelligence (white paper). Univ. Oulu 2019, 1–36.

- Lovén, L.; Leppänen, T.; Peltonen, E.; Partala, J.; Harjula, E.; Porambage, P.; Ylianttila, M.; Riekki, J. EdgeAI: A vision for distributed, edge-native artificial intelligence in future 6G networks. In Proceedings of the 6G Wireless Summit, Levi, Finland, 24–26 March 2019.

- Peltonen, E.; Bennis, M.; Capobianco, M.; Debbah, M.; Ding, A.; Gil-Castiñeira, F.; Jurmu, M.; Karvonen, T.; Kelanti, M.; Kliks, A.; et al. 6G white paper on edge intelligence. arXiv 2020, arXiv:2004.14850.

- López, O.A.; Rosabal, O.M.; Ruiz-Guirola, D.; Raghuwanshi, P.; Mikhaylov, K.; Lovén, L.; Iyer, S. Energy-Sustainable IoT Connectivity: Vision, Technological Enablers, Challenges, and Future Directions. arXiv 2023, arXiv:2306.02444.

- Rashid, A.T.; Elder, L. Mobile phones and development: An analysis of IDRC-supported projects. Electron. J. Inf. Syst. Dev. Ctries. 2009, 36, 1–16. [Google Scholar] [CrossRef]

- Duncombe, R. Researching impact of mobile phones for development: Concepts, methods and lessons for practice. Inf. Technol. Dev. 2011, 17, 268–288. [Google Scholar] [CrossRef]

- Hennessy, J.L.; Patterson, D.A. Computer Architecture: A Quantitative Approach; Elsevier: Amsterdam, The Netherlands, 2011. [Google Scholar]

- Wang, X.; Li, J.; Ning, Z.; Song, Q.; Guo, L.; Guo, S.; Obaidat, M.S. Wireless powered mobile edge computing networks: A survey. ACM Comput. Surv. 2023, 55, 1–37.

- Yang, L.; Jiang, H.; Shi, J.; Xue, X.; Ren, P.; Feng, Y.; Chen, J. Achieving Cooperative Mobile-Edge Computing Using Helper Scheduling. IEEE Trans. Commun. 2023, 7, 3419–3436.

- Yadav, A.M.; Sharma, S. Cooperative task scheduling secured with blockchain in sustainable mobile edge computing. Sustain. Comput. Inform. Syst. 2023, 37, 100843.

- Feng, C.; Han, P.; Zhang, X.; Yang, B.; Liu, Y.; Guo, L. Computation offloading in mobile edge computing networks: A survey. J. Netw. Comput. Appl. 2022, 202, 103366.

- Peng, H.; Davidson, S.; Shi, R.; Song, S.L.; Taylor, M. Chiplet Cloud: Building AI Supercomputers for Serving Large Generative Language Models. arXiv 2023, arXiv:2307.02666.

- Xu, M.; Du, H.; Niyato, D.; Kang, J.; Xiong, Z.; Mao, S.; Han, Z.; Jamalipour, A.; Kim, D.I.; Leung, V.; et al. Unleashing the power of edge-cloud generative AI in mobile networks: A survey of AIGC services. arXiv 2023, arXiv:2303.16129.

- Dhar, S.; Guo, J.; Liu, J.; Tripathi, S.; Kurup, U.; Shah, M. A survey of on-device machine learning: An algorithms and learning theory perspective. ACM Trans. Internet Things 2021, 2, 1–49.

- Saravanan, K.; Kouzani, A.Z. Advancements in On-Device Deep Neural Networks. Information 2023, 14, 470.

- Xu, M.; Song, C.; Tian, Y.; Agrawal, N.; Granqvist, F.; van Dalen, R.; Zhang, X.; Argueta, A.; Han, S.; Deng, Y.; et al. Training Large-Vocabulary Neural Language Models by Private Federated Learning for Resource-Constrained Devices. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Wang, B.; Zhang, Y.J.; Cao, Y.; Li, B.; McMahan, H.B.; Oh, S.; Xu, Z.; Zaheer, M. Can Public Large Language Models Help Private Cross-device Federated Learning? arXiv 2023, arXiv:2305.12132.

- Park, H.; Kim, S. Overviewing AI-Dedicated Hardware for On-Device AI in Smartphones. In Artificial Intelligence and Hardware Accelerators; Springer: Berlin/Heidelberg, Germany, 2023; pp. 127–150.

- Yi, R.; Guo, L.; Wei, S.; Zhou, A.; Wang, S.; Xu, M. EdgeMoE: Fast On-Device Inference of MoE-based Large Language Models. arXiv 2023, arXiv:2308.14352.

- Adepu, S.; Adler, R.F. A comparison of performance and preference on mobile devices vs. desktop computers. In Proceedings of the 2016 IEEE 7th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 20–22 October 2016; pp. 1–7.

- Straker, L.; Jones, K.J.; Miller, J. A comparison of the postures assumed when using laptop computers and desktop computers. Appl. Ergon. 1997, 28, 263–268.

- Oyanagi, Y. Future of supercomputing. J. Comput. Appl. Math. 2002, 149, 147–153.

- Suarez, E.; Eicker, N.; Lippert, T. Modular supercomputing architecture: From idea to production. In Contemporary High Performance Computing; CRC Press: Boca Raton, FL, USA, 2019; pp. 223–255.

- Oral, S.; Vazhkudai, S.S.; Wang, F.; Zimmer, C.; Brumgard, C.; Hanley, J.; Markomanolis, G.; Miller, R.; Leverman, D.; Atchley, S.; et al. End-to-end I/O portfolio for the Summit supercomputing ecosystem. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, Denver, CO, USA, 17–19 November 2019; pp. 1–14.

- Wang, T.; Zhou, J.; Liu, A.; Bhuiyan, M.Z.A.; Wang, G.; Jia, W. Fog-based computing and storage offloading for data synchronization in IoT. IEEE Internet Things J. 2018, 6, 4272–4282.

- Rosendo, D.; Costan, A.; Valduriez, P.; Antoniu, G. Distributed intelligence on the Edge-to-Cloud Continuum: A systematic literature review. J. Parallel Distrib. Comput. 2022, 166, 71–94.

- Waheed, A.; Shah, M.A.; Mohsin, S.M.; Khan, A.; Maple, C.; Aslam, S.; Shamshirband, S. A comprehensive review of computing paradigms, enabling computation offloading and task execution in vehicular networks. IEEE Access 2022, 10, 3580–3600.

- Cheng, Y.L.; Lim, M.H.; Hui, K.H. Impact of internet of things paradigm towards energy consumption prediction: A systematic literature review. Sustain. Cities Soc. 2022, 78, 103624.

- Hashim Mohammed, B.; Sallehuddin, H.; Safie, N.; Husairi, A.; Abu Bakar, N.A.; Yahya, F.; Ali, I.; AbdelGhany Mohamed, S. Building Information Modeling and Internet of Things Integration in the Construction Industry: A Scoping Study. Adv. Civ. Eng. 2022, 2022, 7886497.

- Alekseeva, D.; Ometov, A.; Arponen, O.; Lohan, E.S. The future of computing paradigms for medical and emergency applications. Comput. Sci. Rev. 2022, 45, 100494.

- Ravi, B.; Varghese, B.; Murturi, I.; Donta, P.K.; Dustdar, S.; Dehury, C.K.; Srirama, S.N. Stochastic Modeling for Intelligent Software-Defined Vehicular Networks: A Survey. Computers 2023, 12, 162.

- Zhu, F.; Lv, Y.; Chen, Y.; Wang, X.; Xiong, G.; Wang, F.Y. Parallel transportation systems: Toward IoT-enabled smart urban traffic control and management. IEEE Trans. Intell. Transp. Syst. 2019, 21, 4063–4071.

- Chen, C.; Liu, B.; Wan, S.; Qiao, P.; Pei, Q. An edge traffic flow detection scheme based on deep learning in an intelligent transportation system. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1840–1852.

- Deveci, M.; Gokasar, I.; Pamucar, D.; Zaidan, A.A.; Wen, X.; Gupta, B.B. Evaluation of Cooperative Intelligent Transportation System scenarios for resilience in transportation using type-2 neutrosophic fuzzy VIKOR. Transp. Res. Part A Policy Pract. 2023, 172, 103666.

- Boukerche, A.; Wang, J. Machine learning-based traffic prediction models for intelligent transportation systems. Comput. Netw. 2020, 181, 107530.

- Shahverdy, M.; Fathy, M.; Berangi, R.; Sabokrou, M. Driver behavior detection and classification using deep convolutional neural networks. Expert Syst. Appl. 2020, 149, 113240.

- Pujol, V.C.; Dustdar, S. Fog Robotics–Understanding the Research Challenges. IEEE Internet Comput. 2021, 25, 10–17.

- Wang, G.; Wang, W.; Ding, P.; Liu, Y.; Wang, H.; Fan, Z.; Bai, H.; Hongbiao, Z.; Du, Z. Development of a search and rescue robot system for the underground building environment. J. Field Robot. 2023, 40, 655–683.

- Kim, T.H.; Bae, S.H.; Han, C.H.; Hahn, B. The Design of a Low-Cost Sensing and Control Architecture for a Search and Rescue Assistant Robot. Machines 2023, 11, 329.

- Militano, L.; Arteaga, A.; Toffetti, G.; Mitton, N. The Cloud-to-Edge-to-IoT Continuum as an Enabler for Search and Rescue Operations. Future Internet 2023, 15, 55.

- Jácome, M.Y.; Alvear Villaroel, F.; Figueroa Olmedo, J. Ground Robot for Search and Rescue Management. In Proceedings of the International Conference on Applied Technologies, Virtual, 23–25 November 2022; pp. 399–411.

- Feng, S.; Shi, H.; Huang, L.; Shen, S.; Yu, S.; Peng, H.; Wu, C. Unknown hostile environment-oriented autonomous WSN deployment using a mobile robot. J. Netw. Comput. Appl. 2021, 182, 103053.

- Mouradian, C.; Yangui, S.; Glitho, R.H. Robots as-a-service in cloud computing: Search and rescue in large-scale disasters case study. In Proceedings of the 2018 15th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 12–15 January 2018; pp. 1–7.

- Lyu, M.; Zhao, Y.; Huang, C.; Huang, H. Unmanned Aerial Vehicles for Search and Rescue: A Survey. Remote Sens. 2023, 15, 3266.

- Badidi, E.; Mahrez, Z.; Sabir, E. Fog computing for smart cities’ big data management and analytics: A review. Future Internet 2020, 12, 190.

- Liu, Y.; Yang, C.; Jiang, L.; Xie, S.; Zhang, Y. Intelligent edge computing for IoT-based energy management in smart cities. IEEE Netw. 2019, 33, 111–117.

- Zhang, C. Design and application of fog computing and Internet of Things service platform for smart city. Future Gener. Comput. Syst. 2020, 112, 630–640.

- Lv, Z.; Chen, D.; Lou, R.; Wang, Q. Intelligent edge computing based on machine learning for smart city. Future Gener. Comput. Syst. 2021, 115, 90–99.

- Kashef, M.; Visvizi, A.; Troisi, O. Smart city as a smart service system: Human–computer interaction and smart city surveillance systems. Comput. Hum. Behav. 2021, 124, 106923.

- Silva, B.N.; Khan, M.; Han, K. Towards sustainable smart cities: A review of trends, architectures, components, and open challenges in smart cities. Sustain. Cities Soc. 2018, 38, 697–713.

- Marques, P.; Manfroi, D.; Deitos, E.; Cegoni, J.; Castilhos, R.; Rochol, J.; Pignaton, E.; Kunst, R. An IoT-based smart cities infrastructure architecture applied to a waste management scenario. Ad Hoc Netw. 2019, 87, 200–208.

- Pardini, K.; Rodrigues, J.J.; Diallo, O.; Das, A.K.; de Albuquerque, V.H.C.; Kozlov, S.A. A smart waste management solution geared towards citizens. Sensors 2020, 20, 2380.

- Pardini, K.; Rodrigues, J.J.; Kozlov, S.A.; Kumar, N.; Furtado, V. IoT-based solid waste management solutions: A survey. J. Sens. Actuator Netw. 2019, 8, 5.

- Sharma, M.; Joshi, S.; Kannan, D.; Govindan, K.; Singh, R.; Purohit, H. Internet of Things (IoT) adoption barriers of smart cities’ waste management: An Indian context. J. Clean. Prod. 2020, 270, 122047.

- Aazam, M.; St-Hilaire, M.; Lung, C.H.; Lambadaris, I. Cloud-based smart waste management for smart cities. In Proceedings of the 2016 IEEE 21st International Workshop on Computer Aided Modelling and Design of Communication Links and Networks (CAMAD), Toronto, ON, Canada, 23–25 October 2016; pp. 188–193.

- Sallang, N.C.A.; Islam, M.T.; Islam, M.S.; Arshad, H. A CNN-Based Smart Waste Management System Using TensorFlow Lite and LoRa-GPS Shield in Internet of Things Environment. IEEE Access 2021, 9, 153560–153574.

- Wang, C.; Qin, J.; Qu, C.; Ran, X.; Liu, C.; Chen, B. A smart municipal waste management system based on deep-learning and Internet of Things. Waste Manag. 2021, 135, 20–29.

- Dang, L.M.; Piran, M.J.; Han, D.; Min, K.; Moon, H. A survey on internet of things and cloud computing for healthcare. Electronics 2019, 8, 768.

- Alam, A.; Qazi, S.; Iqbal, N.; Raza, K. Fog, edge and pervasive computing in intelligent internet of things driven applications in healthcare: Challenges, limitations and future use. In Fog, Edge, and Pervasive Computing in Intelligent IoT Driven Applications; John Wiley & Sons: Hoboken, NJ, USA, 2020; pp. 1–26.

- Mutlag, A.A.; Abd Ghani, M.K.; Arunkumar, N.a.; Mohammed, M.A.; Mohd, O. Enabling technologies for fog computing in healthcare IoT systems. Future Gener. Comput. Syst. 2019, 90, 62–78.

- Poncette, A.S.; Mosch, L.; Spies, C.; Schmieding, M.; Schiefenhövel, F.; Krampe, H.; Balzer, F. Improvements in patient monitoring in the intensive care unit: Survey study. J. Med. Internet Res. 2020, 22, e19091.

- Naik, B.N.; Gupta, R.; Singh, A.; Soni, S.L.; Puri, G. Real-time smart patient monitoring and assessment amid COVID-19 pandemic–An alternative approach to remote monitoring. J. Med. Syst. 2020, 44, 131.

- Davoudi, A.; Malhotra, K.R.; Shickel, B.; Siegel, S.; Williams, S.; Ruppert, M.; Bihorac, E.; Ozrazgat-Baslanti, T.; Tighe, P.J.; Bihorac, A.; et al. Intelligent ICU for autonomous patient monitoring using pervasive sensing and deep learning. Sci. Rep. 2019, 9, 8020.

- Khan, M.A.; Din, I.U.; Kim, B.S.; Almogren, A. Visualization of Remote Patient Monitoring System Based on Internet of Medical Things. Sustainability 2023, 15, 8120.

- Habib, C.; Makhoul, A.; Darazi, R.; Couturier, R. Health risk assessment and decision-making for patient monitoring and decision-support using wireless body sensor networks. Inf. Fusion 2019, 47, 10–22.

- Rehm, G.B.; Woo, S.H.; Chen, X.L.; Kuhn, B.T.; Cortes-Puch, I.; Anderson, N.R.; Adams, J.Y.; Chuah, C.N. Leveraging IoTs and machine learning for patient diagnosis and ventilation management in the intensive care unit. IEEE Pervasive Comput. 2020, 19, 68–78.

- Balaji, T.; Annavarapu, C.S.R.; Bablani, A. Machine learning algorithms for social media analysis: A survey. Comput. Sci. Rev. 2021, 40, 100395.

- Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci. 2021, 2, 160.

- Samie, F.; Bauer, L.; Henkel, J. From cloud down to things: An overview of machine learning in internet of things. IEEE Internet Things J. 2019, 6, 4921–4934.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Polikar, R. Ensemble learning. In Ensemble Machine Learning: Methods and Applications; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1–34.

- van de Ven, G.M.; Tuytelaars, T.; Tolias, A.S. Three types of incremental learning. Nat. Mach. Intell. 2022, 4, 1185–1197.

- Yamauchi, K.; Yamaguchi, N.; Ishii, N. Incremental learning methods with retrieving of interfered patterns. IEEE Trans. Neural Netw. 1999, 10, 1351–1365.

- Li, Y.; Wang, X.; Zeng, R.; Donta, P.K.; Murturi, I.; Huang, M.; Dustdar, S. Federated Domain Generalization: A Survey. arXiv 2023, arXiv:2306.01334.

- Donta, P.K.; Srirama, S.N.; Amgoth, T.; Annavarapu, C.S.R. Survey on recent advances in IoT application layer protocols and machine learning scope for research directions. Digit. Commun. Netw. 2022, 8, 727–744.

- Donta, P.K.; Dustdar, S. Towards Intelligent Data Protocols for the Edge. In Proceedings of the 2023 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 2–8 July 2023; pp. 372–380.

- Bajrami, X.; Gashi, B.; Murturi, I. Face recognition performance using linear discriminant analysis and deep neural networks. Int. J. Appl. Pattern Recognit. 2018, 5, 240–250.

- Cox, D.R. Causality: Some statistical aspects. J. R. Stat. Soc. Ser. A 1992, 155, 291–301.

- Chen, P.; Qi, Y.; Hou, D. CauseInfer: Automated end-to-end performance diagnosis with hierarchical causality graph in cloud environment. IEEE Trans. Serv. Comput. 2016, 12, 214–230.

- Liu, Q.; Wang, C.; Wang, Q. Bayesian Uncertainty Inferencing for Fault Diagnosis of Intelligent Instruments in IoT Systems. Appl. Sci. 2023, 13, 5380.

- Al Ridhawi, I.; Aloqaily, M.; Karray, F.; Guizani, M.; Debbah, M. Realizing the tactile internet through intelligent zero touch networks. IEEE Netw. 2022.

- Cheikhrouhou, S.; Maamar, Z.; Mars, R.; Kallel, S. A time interval-based approach for business process fragmentation over cloud and edge resources. Serv. Oriented Comput. Appl. 2022, 16, 263–278.

- Murturi, I.; Dustdar, S. Decent: A decentralized configurator for controlling elasticity in dynamic edge networks. ACM Trans. Internet Technol. 2022, 22, 1–21.

- Dehury, C.K.; Donta, P.K.; Dustdar, S.; Srirama, S.N. CCEI-IoT: Clustered and Cohesive Edge Intelligence in Internet of Things. In Proceedings of the 2022 IEEE International Conference on Edge Computing and Communications (EDGE), Barcelona, Spain, 11–15 July 2022; pp. 33–40.

- Sedlak, B.; Pujol, V.C.; Donta, P.K.; Dustdar, S. Controlling Data Gravity and Data Friction: From Metrics to Multidimensional Elasticity Strategies. In Proceedings of the 2023 IEEE International Conference on Software Services Engineering (SSE), Chicago, IL, USA, 2–8 July 2023; pp. 43–49.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Donta, P.K.; Murturi, I.; Casamayor Pujol, V.; Sedlak, B.; Dustdar, S. Exploring the Potential of Distributed Computing Continuum Systems. Computers 2023, 12, 198. https://doi.org/10.3390/computers12100198