An Augmented Reality CBIR System Based on Multimedia Knowledge Graph and Deep Learning Techniques in Cultural Heritage

Abstract

1. Introduction

2. Related Works

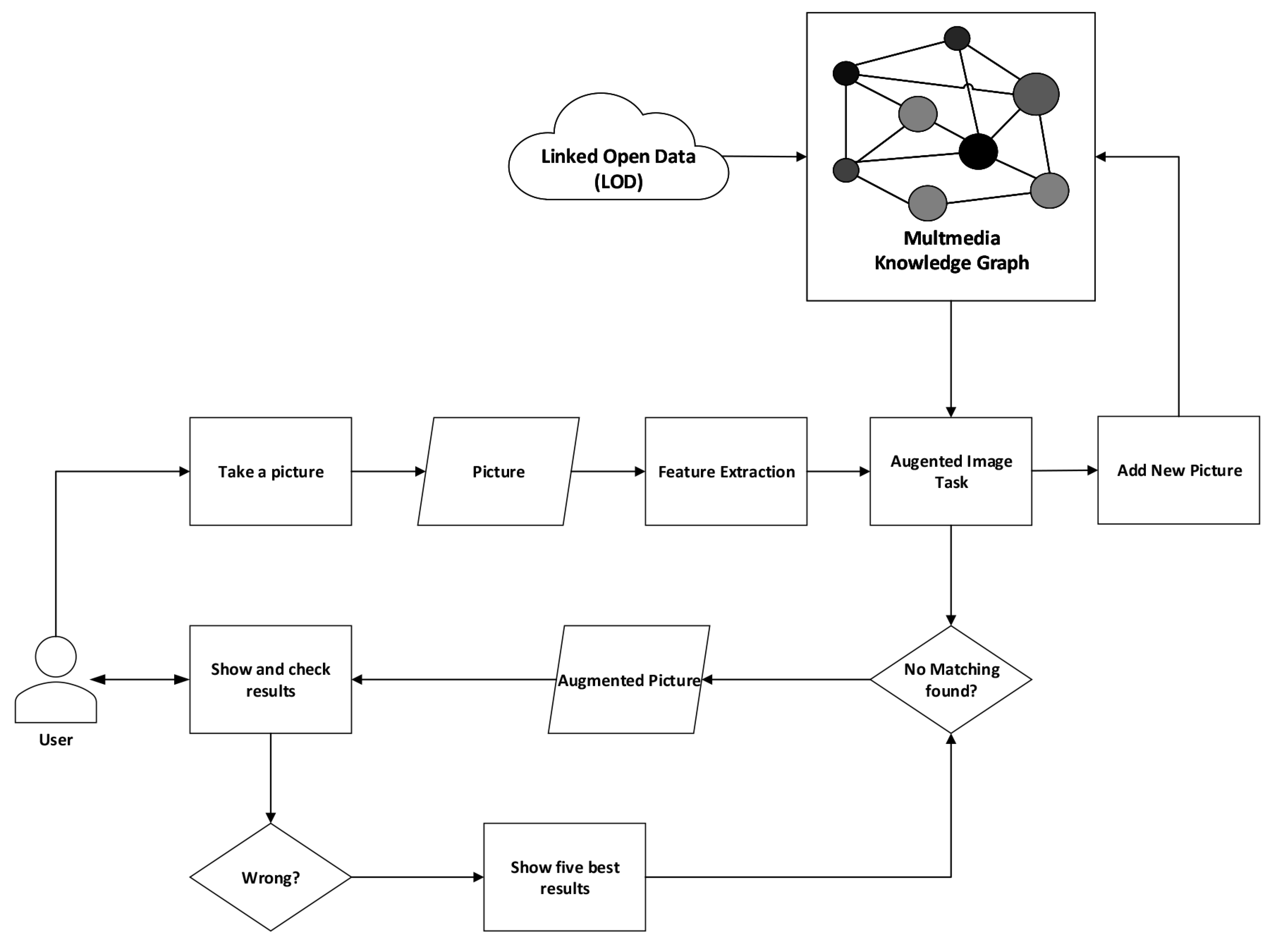

3. The Proposed System

- Image Loader: It loads images and preprocesses them as required by the feature extractor block, according to the feature extraction technique in use.

- Feature Extractor: It takes a preprocessed image as input and outputs a feature vector.

- Feature Comparator: It compares the feature vector computed by the feature extractor with the features stored in the multimedia knowledge graph (MKG) and outputs the information used to augment the image.

- Augmenter Image: It augments the image using the information obtained from the feature comparator.

- Result Checker: It collects user feedback and updates the results using Linked Open Data (LOD) and the MKG.

- Focused Crawler: It works offline, populating the multimedia knowledge graph and then updating and improving its content.
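To make the data flow between the Feature Comparator and the Augmenter Image blocks concrete, here is a minimal sketch under stated assumptions: the MKG is replaced by a hypothetical in-memory list of `KGEntry` records, and similarity is measured with cosine similarity. This section does not specify the similarity measure or the storage layer, so `KGEntry`, `compare_features`, and `augment` are illustrative names only, not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KGEntry:
    """Hypothetical stand-in for one multimedia knowledge graph node."""
    label: str             # name of the artwork or monument
    features: List[float]  # feature vector stored for one of its images
    info: str              # text used to augment the query image


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def compare_features(query: List[float], entries: List[KGEntry],
                     k: int = 5) -> List[Tuple[float, KGEntry]]:
    """Feature Comparator (assumed): rank stored entries by similarity, keep top k."""
    scored = [(cosine_similarity(query, e.features), e) for e in entries]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def augment(image_name: str, matches: List[Tuple[float, KGEntry]]) -> str:
    """Augmenter Image (assumed): attach the best match's info, here as plain text."""
    if not matches:
        return f"{image_name}: no match found"
    score, best = matches[0]
    return f"{image_name}: {best.label} ({score:.2f}) - {best.info}"


if __name__ == "__main__":
    # Toy entries; the vectors are made up for illustration.
    kg = [
        KGEntry("Colosseum", [0.9, 0.1, 0.0], "Amphitheatre in Rome."),
        KGEntry("Pantheon", [0.1, 0.9, 0.2], "Temple in Rome."),
    ]
    print(augment("photo.jpg", compare_features([0.8, 0.2, 0.1], kg, k=1)))
```

In a real deployment the query vector would come from the Feature Extractor and the stored vectors from the MKG; a linear scan like this is only practical for small collections, and an approximate nearest-neighbour index would be the usual replacement.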

- VGG16

- Residual Network

- Inception

- MobileNet

- ORB
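The results table distinguishes `_avg` and `_max` variants of each CNN. One plausible reading, which is an assumption since this section does not define the suffixes, is that the last convolutional feature map (height × width × channels) is reduced to a fixed-length vector by global average or global max pooling; Keras, for example, exposes this choice through the `pooling='avg'` / `pooling='max'` argument of its `applications` models when `include_top=False`. ORB, by contrast, yields binary descriptors that are usually compared with the Hamming distance. The sketch below shows both reductions in plain Python on toy data; the function names are illustrative and the networks themselves are not reproduced.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [row][col][channel], i.e. H x W x C


def global_average_pool(feature_map: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions."""
    positions = [cell for row in feature_map for cell in row]
    channels = len(positions[0])
    return [sum(p[c] for p in positions) / len(positions) for c in range(channels)]


def global_max_pool(feature_map: FeatureMap) -> List[float]:
    """Take the maximum of each channel over all spatial positions."""
    positions = [cell for row in feature_map for cell in row]
    channels = len(positions[0])
    return [max(p[c] for p in positions) for c in range(channels)]


def hamming_distance(d1: bytes, d2: bytes) -> int:
    """Count differing bits between two binary descriptors (ORB's are 32 bytes)."""
    if len(d1) != len(d2):
        raise ValueError("descriptors must have the same length")
    return sum(bin(a ^ b).count("1") for a, b in zip(d1, d2))


if __name__ == "__main__":
    fmap = [[[1.0, 4.0], [3.0, 0.0]],
            [[5.0, 2.0], [-1.0, 6.0]]]   # 2 x 2 spatial grid, 2 channels
    print(global_average_pool(fmap))      # [2.0, 3.0]
    print(global_max_pool(fmap))          # [5.0, 6.0]
    print(hamming_distance(bytes([0b1010, 0xFF]), bytes([0b0101, 0xFF])))  # 4
```

Average pooling summarizes how strongly each learned pattern is present across the whole image, while max pooling keeps only its strongest local response; which works better is an empirical question, which is why the table reports both.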

Augment Image Process

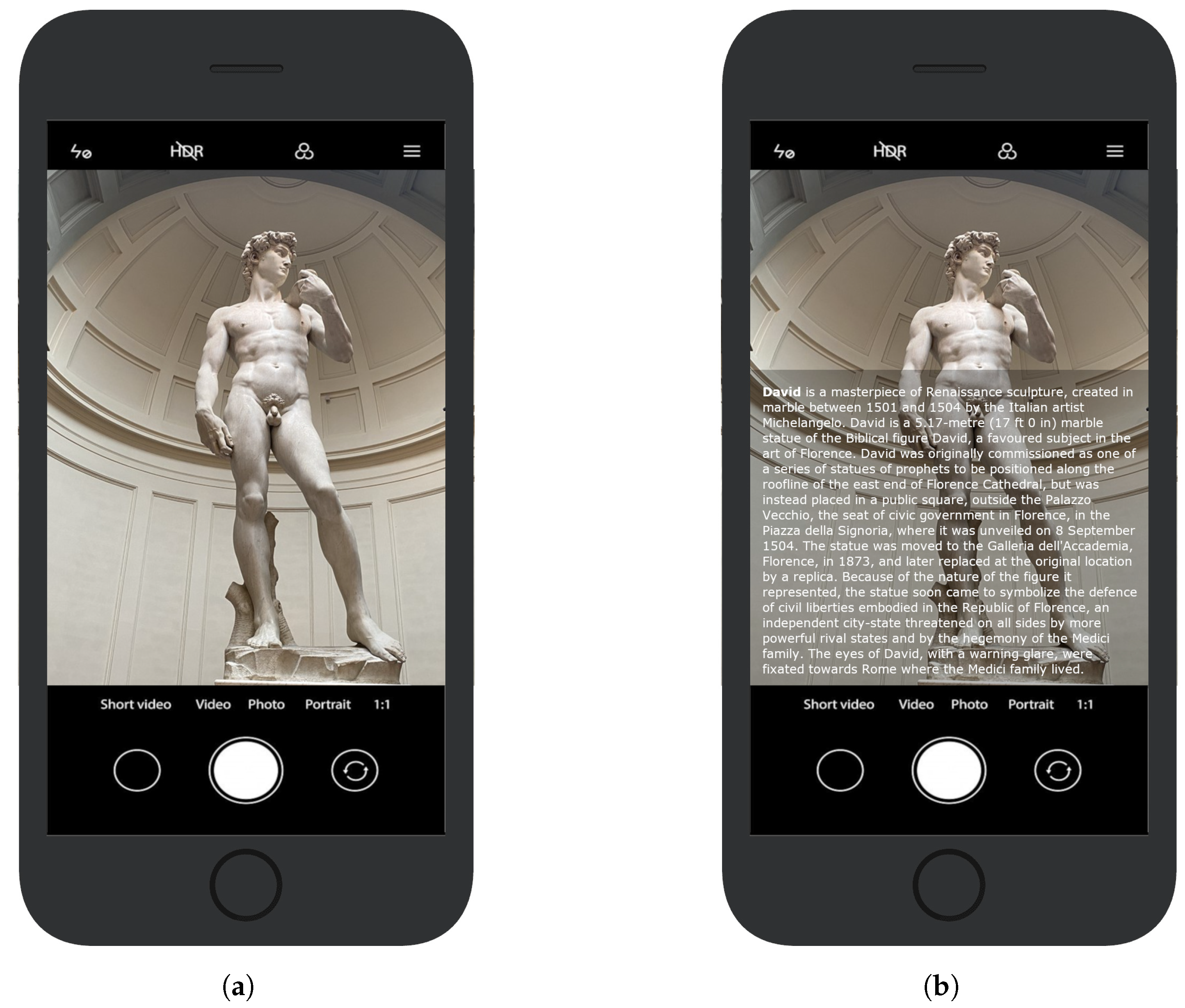

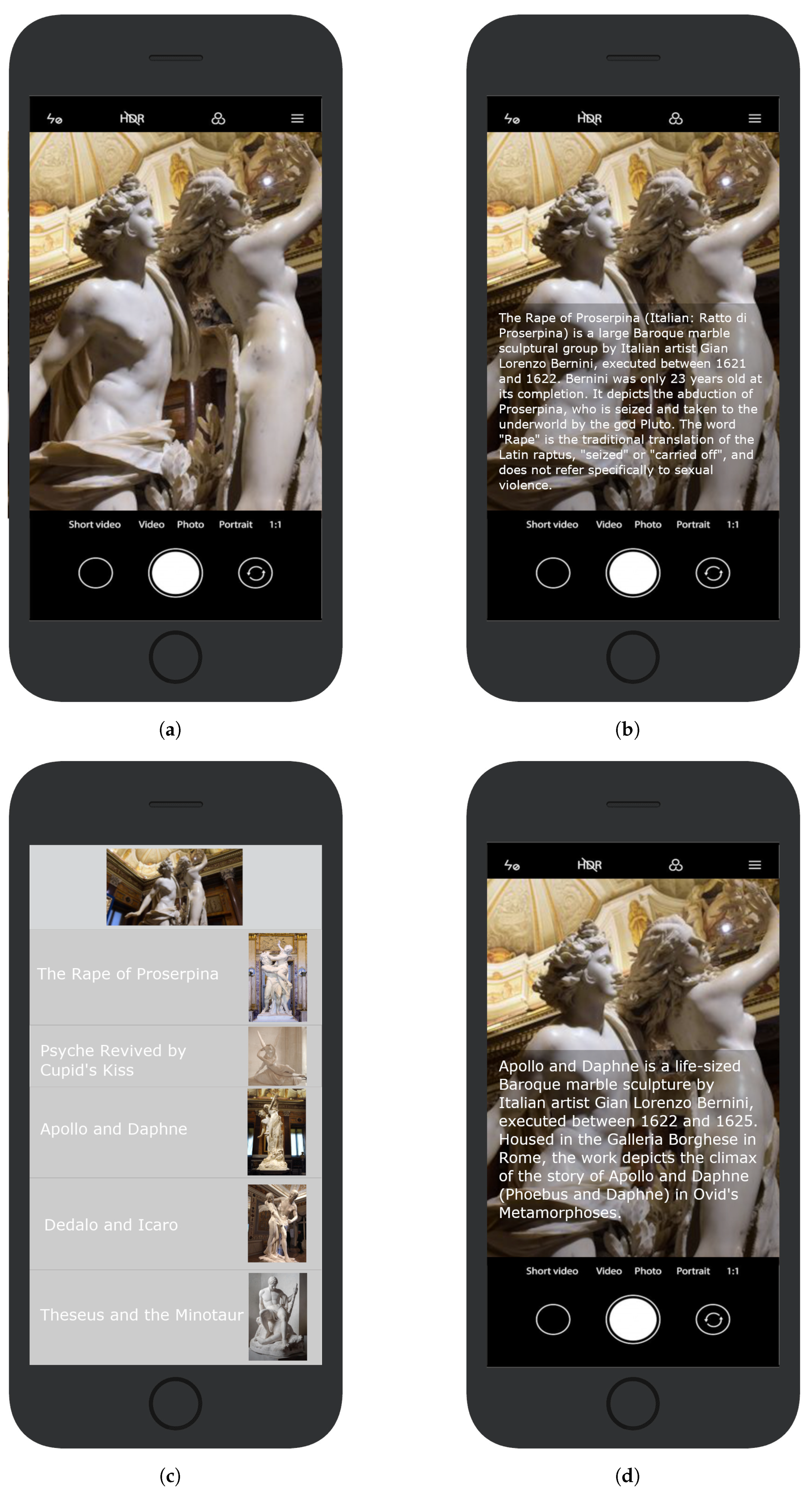

4. Use Case

4.1. Use Case 1

4.2. Use Case 2

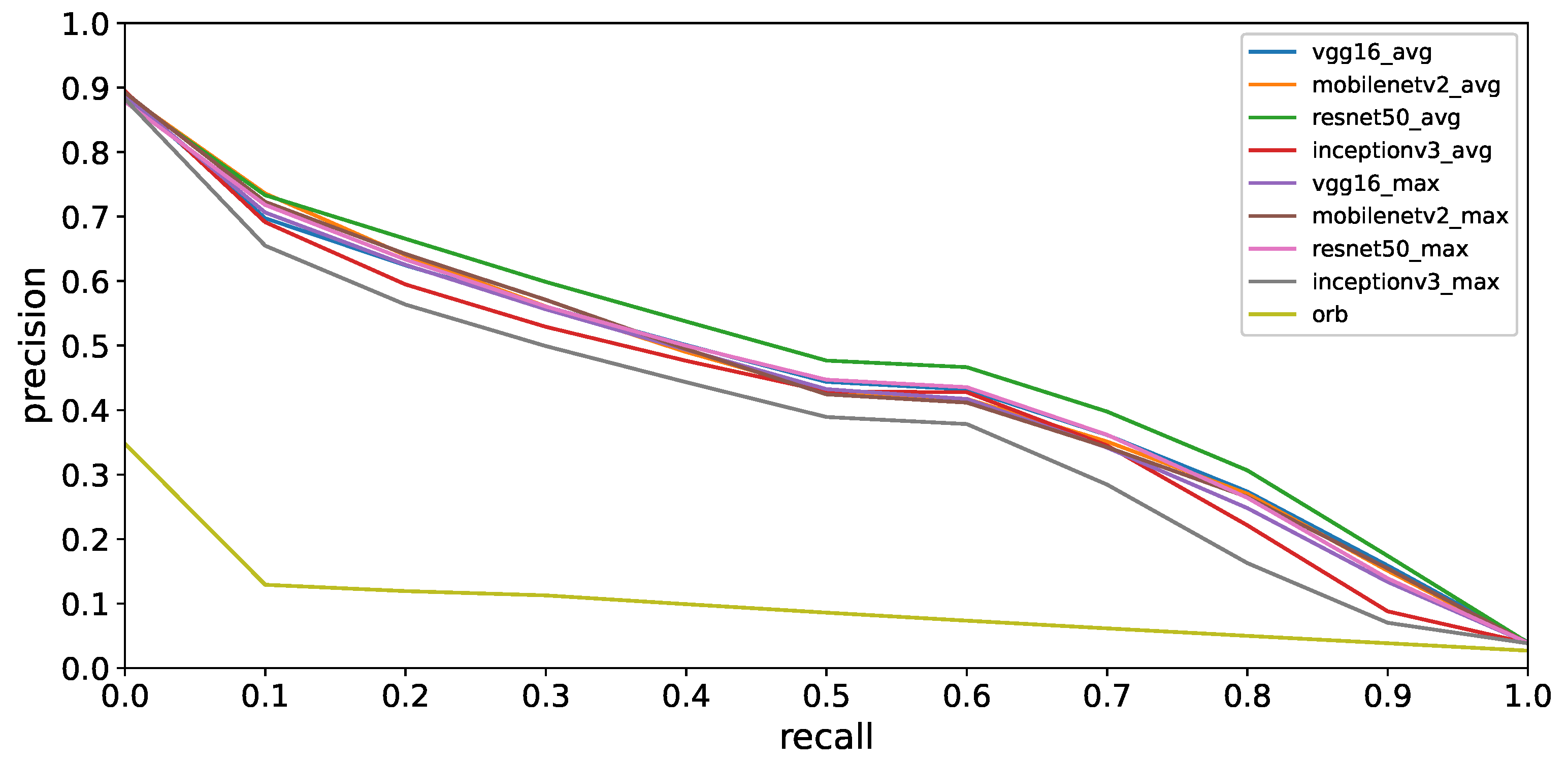

5. Experimental Results

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


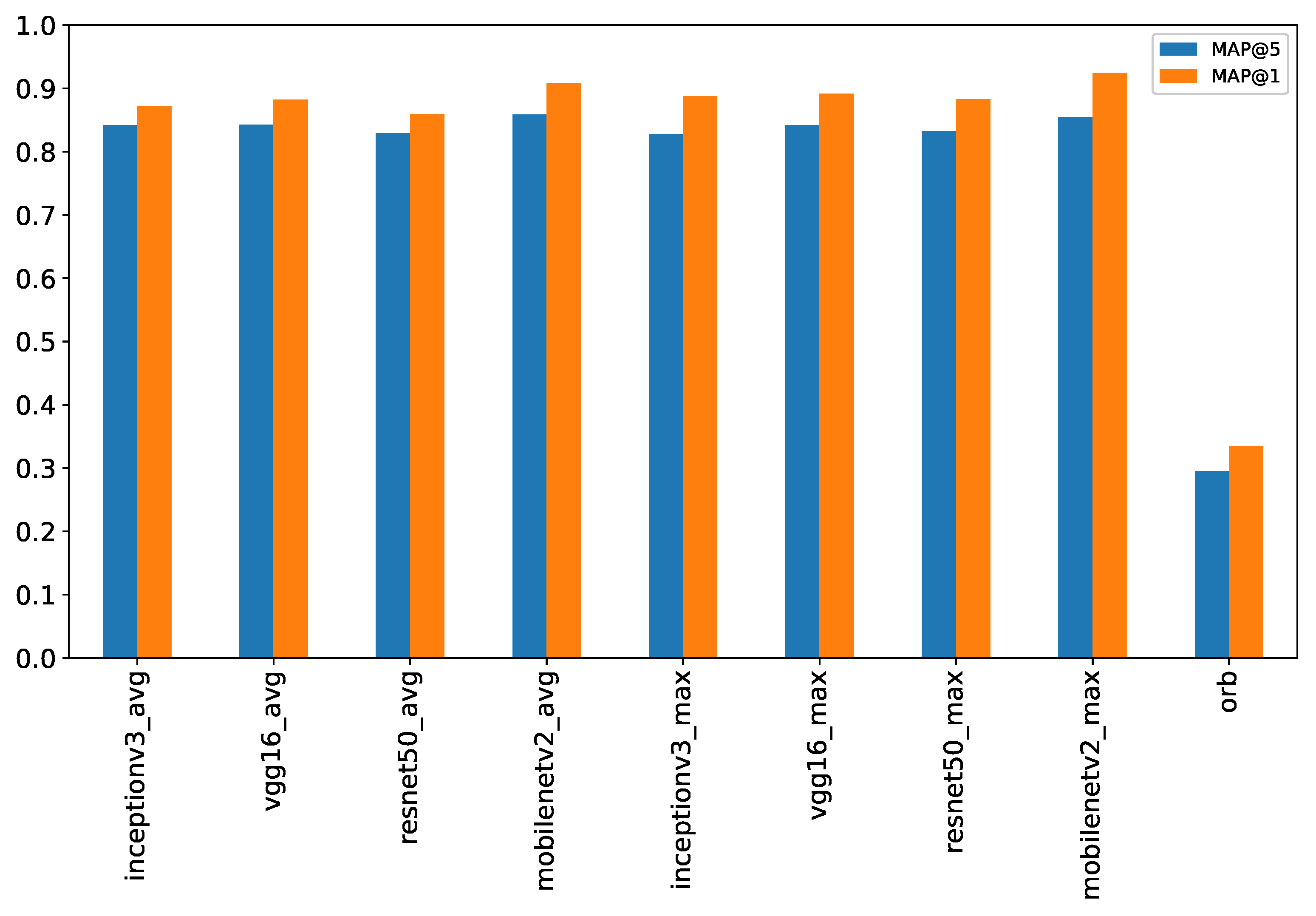

| Model | MAP@5 | MAP@1 |
|---|---|---|
| inceptionv3_avg | 0.8416 | 0.8716 |
| vgg16_avg | 0.8424 | 0.8824 |
| resnet50_avg | 0.8290 | 0.8590 |
| mobilenetv2_avg | 0.8586 | 0.9086 |
| inceptionv3_max | 0.8278 | 0.8878 |
| vgg16_max | 0.8417 | 0.8917 |
| resnet50_max | 0.8327 | 0.8827 |
| mobilenetv2_max | 0.8547 | 0.9247 |
| orb | 0.2949 | 0.3349 |
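The table reports mean average precision at cut-offs 1 and 5. Definitions of AP@k vary across papers and this section does not state which one was used, so the sketch below assumes one common variant: for each query, AP@k sums precision@i over the ranks i ≤ k that hold a relevant result and divides by min(k, number of relevant items); MAP@k is the mean of AP@k over all queries. The function names are illustrative.

```python
from typing import List, Sequence, Set, Tuple


def average_precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """AP@k for one query (normalized by min(k, |relevant|), an assumed convention).

    ranked: retrieved item ids, best first.
    relevant: ids considered correct for this query.
    """
    if not relevant:
        return 0.0
    hits = 0
    score = 0.0
    for i, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            score += hits / i  # precision at rank i
    return score / min(k, len(relevant))


def mean_average_precision_at_k(runs: List[Tuple[Sequence[str], Set[str]]], k: int) -> float:
    """MAP@k: mean of AP@k over (ranked, relevant) pairs, one pair per query."""
    if not runs:
        return 0.0
    return sum(average_precision_at_k(r, rel, k) for r, rel in runs) / len(runs)


if __name__ == "__main__":
    runs = [
        (["a", "b", "c"], {"a"}),  # correct item at rank 1
        (["x", "a", "y"], {"a"}),  # correct item at rank 2
    ]
    print(mean_average_precision_at_k(runs, 1))  # 0.5
    print(mean_average_precision_at_k(runs, 3))  # 0.75
```

With a single relevant item per query, MAP@1 reduces to top-1 accuracy, while MAP@k for larger k also rewards queries whose correct match appears slightly lower in the ranking.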

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rinaldi, A.M.; Russo, C.; Tommasino, C. An Augmented Reality CBIR System Based on Multimedia Knowledge Graph and Deep Learning Techniques in Cultural Heritage. Computers 2022, 11, 172. https://doi.org/10.3390/computers11120172