A Systematic Investigation of Models for Color Image Processing in Wound Size Estimation

Abstract

1. Introduction

2. Methods

2.1. Research Questions

2.2. Inclusion Criteria

2.3. Search Strategy

2.4. Extraction of Study Characteristics

3. Results

4. Discussion

5. Conclusions

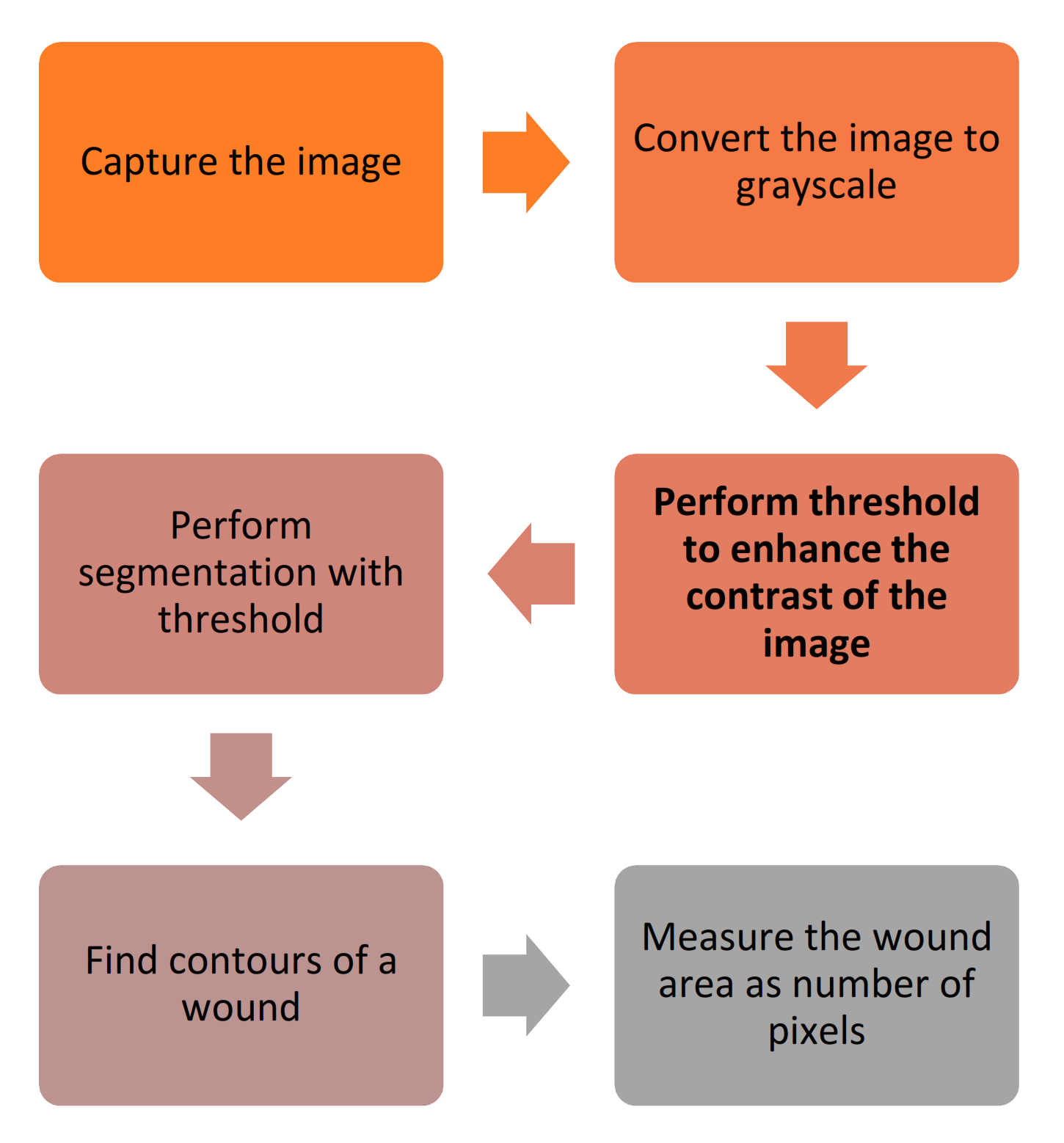

- (RQ1) Which techniques can be applied in a mobile application to measure a wound area? A mobile application can capture pictures in many different situations, including of wounds. Such applications commonly apply preprocessing, segmentation, thresholding, and other methods to measure the wound area;

- (RQ2) What are the most significant features for defining a method that automatically calculates a wound's size? The most notable features are the number of pixels that belong to the wound and the points along each wound's contour. Image processing and artificial intelligence techniques may be powerful tools for measuring wound size;

- (RQ3) What benefits can this kind of study bring to the medical sector? The main benefits are the accurate measurement of a wound's evolution during treatment and the adaptation of medication as the wound changes. This is especially important for patients with diabetes.
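The pipeline described in RQ1 (segmentation with a threshold) and the pixel-count feature in RQ2 can be sketched in a few lines. The following is a minimal, illustrative example in plain Python, assuming an 8-bit grayscale image stored as a list of rows. It picks a global threshold with Otsu's method, which is one common choice and not necessarily the one used by the reviewed studies, and reports the segmented area in pixels. The function names and the assumption that the wound is darker than the surrounding skin are hypothetical.

```python
from typing import List

# Hypothetical representation: 8-bit grayscale image as a list of rows (0..255).
GrayImage = List[List[int]]


def otsu_threshold(gray: GrayImage) -> int:
    """Return the threshold t that maximizes Otsu's between-class variance.

    Pixels with value <= t form one class and pixels > t the other.
    """
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of the intensities of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so t is not a valid split
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2.
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def wound_area_pixels(gray: GrayImage, wound_is_dark: bool = True) -> int:
    """Segment with the Otsu threshold and count the pixels in the wound class."""
    t = otsu_threshold(gray)
    area = 0
    for row in gray:
        for v in row:
            if (v <= t) if wound_is_dark else (v > t):
                area += 1
    return area
```

For a 4×4 image whose central 2×2 block has intensity 40 and whose remaining pixels have intensity 200, `otsu_threshold` returns 40 and `wound_area_pixels` returns 4. A global threshold is simple and fast enough for a phone, but it is sensitive to uneven lighting, which is one reason many studies add preprocessing before this step.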

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Camilo, M.E. Disease-Related Malnutrition: An Evidence-Based Approach to Treatment. Clin. Nutr. 2003, 22, 585.

2. Landi, F.; Onder, G.; Russo, A.; Bernabei, R. Pressure Ulcer and Mortality in Frail Elderly People Living in Community. Arch. Gerontol. Geriatr. 2007, 44, 217–223.

3. Beeckman, D.; Schoonhoven, L.; Fletcher, J.; Furtado, K.; Gunningberg, L.; Heyman, H.; Lindholm, C.; Paquay, L.; Verdú, J.; Defloor, T. EPUAP Classification System for Pressure Ulcers: European Reliability Study. J. Adv. Nurs. 2007, 60, 682–691.

4. Edsberg, L.E. Pressure Ulcer Tissue Histology: An Appraisal of Current Knowledge. Ostomy Wound Manag. 2007, 53, 40–49.

5. Pires, I.M.; Marques, G.; Garcia, N.M.; Flórez-Revuelta, F.; Ponciano, V.; Oniani, S. A Research on the Classification and Applicability of the Mobile Health Applications. JPM 2020, 10, 11.

6. Pires, I.M.; Garcia, N.M.; Pombo, N.; Flórez-Revuelta, F.; Rodríguez, N.D. Validation Techniques for Sensor Data in Mobile Health Applications. J. Sens. 2016, 2016.

7. Sim, I. Mobile Devices and Health. N. Engl. J. Med. 2019, 381, 956–968.

8. Lumsden, C.J.; Byrne-Davis, L.M.T.; Mooney, J.S.; Sandars, J. Using Mobile Devices for Teaching and Learning in Clinical Medicine. Arch. Dis. Child Educ. Pract. 2015, 100, 244–251.

9. Albrecht, U.-V.; von Jan, U.; Kuebler, J.; Zoeller, C.; Lacher, M.; Muensterer, O.J.; Ettinger, M.; Klintschar, M.; Hagemeier, L. Google Glass for Documentation of Medical Findings: Evaluation in Forensic Medicine. J. Med. Internet Res. 2014, 16, e53.

10. Kilgus, T.; Heim, E.; Haase, S.; Prüfer, S.; Müller, M.; Seitel, A.; Fangerau, M.; Wiebe, T.; Iszatt, J.; Schlemmer, H.-P.; et al. Mobile Markerless Augmented Reality and Its Application in Forensic Medicine. Int. J. Comput. Assist. Radiol. Surg. 2015, 10, 573–586.

11. CDC. Disability and Health Data System (DHDS). Available online: https://www.cdc.gov/ncbddd/disabilityandhealth/dhds/index.html (accessed on 5 August 2020).

12. Yin, H.; Jha, N.K. A Health Decision Support System for Disease Diagnosis Based on Wearable Medical Sensors and Machine Learning Ensembles. IEEE Trans. Multi-Scale Comp. Syst. 2017, 3, 228–241.

13. Mehmood, N.; Hariz, A.; Templeton, S.; Voelcker, N.H. A Flexible and Low Power Telemetric Sensing and Monitoring System for Chronic Wound Diagnostics. Biomed. Eng. Online 2015, 14, 17.

14. Pal, A.; Goswami, D.; Cuellar, H.E.; Castro, B.; Kuang, S.; Martinez, R.V. Early Detection and Monitoring of Chronic Wounds Using Low-Cost, Omniphobic Paper-Based Smart Bandages. Biosens. Bioelectron. 2018, 117, 696–705.

15. Moore, K.; McCallion, R.; Searle, R.J.; Stacey, M.C.; Harding, K.G. Prediction and Monitoring the Therapeutic Response of Chronic Dermal Wounds. Int. Wound J. 2006, 3, 89–98.

16. Karkanis, S.A.; Iakovidis, D.K.; Maroulis, D.E.; Karras, D.A.; Tzivras, M. Computer-Aided Tumor Detection in Endoscopic Video Using Color Wavelet Features. IEEE Trans. Inform. Technol. Biomed. 2003, 7, 141–152.

17. Jones, T.D.; Plassmann, P. An Active Contour Model for Measuring the Area of Leg Ulcers. IEEE Trans. Med. Imaging 2000, 19, 1202–1210.

18. Veredas, F.; Mesa, H.; Morente, L. Binary Tissue Classification on Wound Images With Neural Networks and Bayesian Classifiers. IEEE Trans. Med. Imaging 2010, 29, 410–427.

19. Cula, O.G.; Dana, K.J.; Murphy, F.P.; Rao, B.K. Skin Texture Modeling. Int. J. Comput. Vis. 2005, 62, 97–119.

20. Neidrauer, M.; Papazoglou, E.S. Optical Non-invasive Characterization of Chronic Wounds. In Bioengineering Research of Chronic Wounds; Gefen, A., Ed.; Studies in Mechanobiology, Tissue Engineering and Biomaterials; Springer: Berlin/Heidelberg, Germany, 2009; Volume 1, pp. 381–404. ISBN 978-3-642-00533-6.

21. Barone, S.; Paoli, A.; Razionale, A.V. Assessment of Chronic Wounds by Three-Dimensional Optical Imaging Based on Integrating Geometrical, Chromatic, and Thermal Data. Proc. Inst. Mech. Eng. 2011, 225, 181–193.

22. Hettiarachchi, N.D.J.; Mahindaratne, R.B.H.; Mendis, G.D.C.; Nanayakkara, H.T.; Nanayakkara, N.D. Mobile Based Wound Measurement. In Proceedings of the 2013 IEEE Point-of-Care Healthcare Technologies (PHT), Bangalore, India, 16–18 January 2013; pp. 298–301.

23. Pires, I.M.; Garcia, N.M. Wound Area Assessment Using Mobile Application. BIODEVICES 2015, 1, 271–282.

24. Gupta, A. Real Time Wound Segmentation/Management Using Image Processing on Handheld Devices. JCM 2017, 17, 321–329.

25. Sirazitdinova, E.; Deserno, T.M. System Design for 3D Wound Imaging Using Low-Cost Mobile Devices; Cook, T.S., Zhang, J., Eds.; SPIE: Orlando, FL, USA, 2017; p. 1013810.

26. Deserno, T.M.; Kamath, S.; Sirazitdinova, E. Machine Learning for Mobile Wound Assessment. In Medical Imaging 2018: Imaging Informatics for Healthcare, Research, and Applications; Zhang, J., Chen, P.-H., Eds.; SPIE: Houston, TX, USA, 2018; p. 42.

27. Petrovska, B.; Zdravevski, E.; Lameski, P.; Corizzo, R.; Štajduhar, I.; Lerga, J. Deep Learning for Feature Extraction in Remote Sensing: A Case-Study of Aerial Scene Classification. Sensors 2020, 20, 3906.

28. Petrovska, B.; Atanasova-Pacemska, T.; Corizzo, R.; Mignone, P.; Lameski, P.; Zdravevski, E. Aerial Scene Classification through Fine-Tuning with Adaptive Learning Rates and Label Smoothing. Appl. Sci. 2020, 10, 5792.

29. Casal-Guisande, M.; Comesaña-Campos, A.; Cerqueiro-Pequeño, J.; Bouza-Rodríguez, J.-B. Design and Development of a Methodology Based on Expert Systems, Applied to the Treatment of Pressure Ulcers. Diagnostics 2020, 10, 614.

30. Zahia, S.; Garcia-Zapirain, B.; Elmaghraby, A. Integrating 3D Model Representation for an Accurate Non-Invasive Assessment of Pressure Injuries with Deep Learning. Sensors 2020, 20, 2933.

31. COCO: Common Objects in Context. Available online: https://cocodataset.org/#home (accessed on 5 March 2021).

32. ImageNet. Available online: http://www.image-net.org/ (accessed on 5 March 2021).

33. Cazzolato, M.T.; Ramos, J.S.; Rodrigues, L.S.; Scabora, L.C.; Chino, D.Y.T.; Jorge, A.E.S.; Azevedo-Marques, P.M.; Traina, C.; Traina, A.J.M. Semi-Automatic Ulcer Segmentation and Wound Area Measurement Supporting Telemedicine. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), Rochester, MN, USA, 28–30 July 2020; pp. 356–361.

34. Dorileo, E.A.; Frade, M.A.C.; Roselino, A.M.F.; Rangayyan, R.M.; Azevedo-Marques, P.M. Color Image Processing and Content-Based Image Retrieval Techniques for the Analysis of Dermatological Lesions. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 1230–1233.

35. Chino, D.Y.T.; Scabora, L.C.; Cazzolato, M.T.; Jorge, A.E.S.; Traina, C., Jr.; Traina, A.J.M. Segmenting Skin Ulcers and Measuring the Wound Area Using Deep Convolutional Networks. Comput. Methods Programs Biomed. 2020, 191, 105376.

36. Wu, W.-L.; Yong, K.Y.-W.; Federico, M.A.J.; Gan, S.K.-E. The APD Skin Monitoring App for Wound Monitoring: Image Processing, Area Plot, and Colour Histogram. Sci. Phone Apps Mob. Device 2019.

37. Liu, C.; Fan, X.; Guo, Z.; Mo, Z.; Chang, E.I.-C.; Xu, Y. Wound Area Measurement with 3D Transformation and Smartphone Images. BMC Bioinform. 2019, 20, 724.

38. Huang, C.-H.; Jhan, S.-D.; Lin, C.-H.; Liu, W.-M. Automatic Size Measurement and Boundary Tracing of Wound on a Mobile Device. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Taichung, Taiwan, 19–21 May 2018; pp. 1–2.

39. Naraghi, S.; Mutsvangwa, T.; Goliath, R.; Rangaka, M.X.; Douglas, T.S. Mobile Phone-Based Evaluation of Latent Tuberculosis Infection: Proof of Concept for an Integrated Image Capture and Analysis System. Comput. Biol. Med. 2018, 98, 76–84.

40. Chen, Y.-W.; Hsu, J.-T.; Hung, C.-C.; Wu, J.-M.; Lai, F.; Kuo, S.-Y. Surgical Wounds Assessment System for Self-Care. IEEE Trans. Syst. Man Cybern. Syst. 2019, 1–16.

41. UCI. Machine Learning Repository: Skin Segmentation Data Set. Available online: https://archive.ics.uci.edu/ml/datasets/Skin+Segmentation (accessed on 5 March 2021).

42. Tang, M.; Gary, K.; Guler, O.; Cheng, P. A Lightweight App Distribution Strategy to Generate Interest in Complex Commercial Apps: Case Study of an Automated Wound Measurement System. In Proceedings of the Hawaii International Conference on System Sciences 2017, Big Island, HI, USA, 3–7 January 2017.

43. Garcia-Zapirain, B.; Shalaby, A.; El-Baz, A.; Elmaghraby, A. Automated Framework for Accurate Segmentation of Pressure Ulcer Images. Comput. Biol. Med. 2017, 90, 137–145.

44. Dendere, R.; Mutsvangwa, T.; Goliath, R.; Rangaka, M.X.; Abubakar, I.; Douglas, T.S. Measurement of Skin Induration Size Using Smartphone Images and Photogrammetric Reconstruction: Pilot Study. JMIR Biomed. Eng. 2017, 2, e3.

45. Yee, A.; Patel, M.; Wu, E.; Yi, S.; Marti, G.; Harmon, J. IDr: An Intelligent Digital Ruler App for Remote Wound Assessment. In Proceedings of the 2016 IEEE First International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE), Washington, DC, USA, 27–29 June 2016; pp. 380–381.

46. Satheesha, T.Y.; Satyanarayana, D.; Prasad, G. Early Detection of Melanoma Using Color and Shape Geometry Feature. JBEMi 2015, 2.

47. Cheung, N.-M.; Pomponiu, V.; Toan, D.; Nejati, H. Mobile Image Analysis for Medical Applications. SPIE Newsroom 2015.

48. Bulan, O. Improved Wheal Detection from Skin Prick Test Images. In Proceedings of the IS&T/SPIE ELECTRONIC IMAGING, San Francisco, CA, USA, 2–6 February 2014.

49. Kanade, T.; Yin, Z.; Bise, R.; Huh, S.; Eom, S.; Sandbothe, M.F.; Chen, M. Cell Image Analysis: Algorithms, System and Applications. In Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; pp. 374–381.

50. Brownlee, J. Deep Learning with Python: Develop Deep Learning Models on Theano and TensorFlow Using Keras; Machine Learning Mastery: San Francisco, CA, USA, 2016.

51. Holdroyd, T. TensorFlow 2.0 Quick Start Guide: Get up to Speed with the Newly Introduced Features of TensorFlow 2.0; Packt Publishing Ltd.: Birmingham, UK, 2019; ISBN 978-1-78953-696-6.

52. Lameski, P.; Zdravevski, E.; Trajkovik, V.; Kulakov, A. Weed Detection Dataset with RGB Images Taken Under Variable Light Conditions. In ICT Innovations 2017; Trajanov, D., Bakeva, V., Eds.; Communications in Computer and Information Science; Springer International Publishing: Cham, Switzerland, 2017; Volume 778, pp. 112–119. ISBN 978-3-319-67596-1.

53. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–15 June 2015; pp. 3431–3440.

54. Yu, L.; Fan, G. DrsNet: Dual-Resolution Semantic Segmentation with Rare Class-Oriented Superpixel Prior. Multimed. Tools Appl. 2021, 80, 1687–1706.

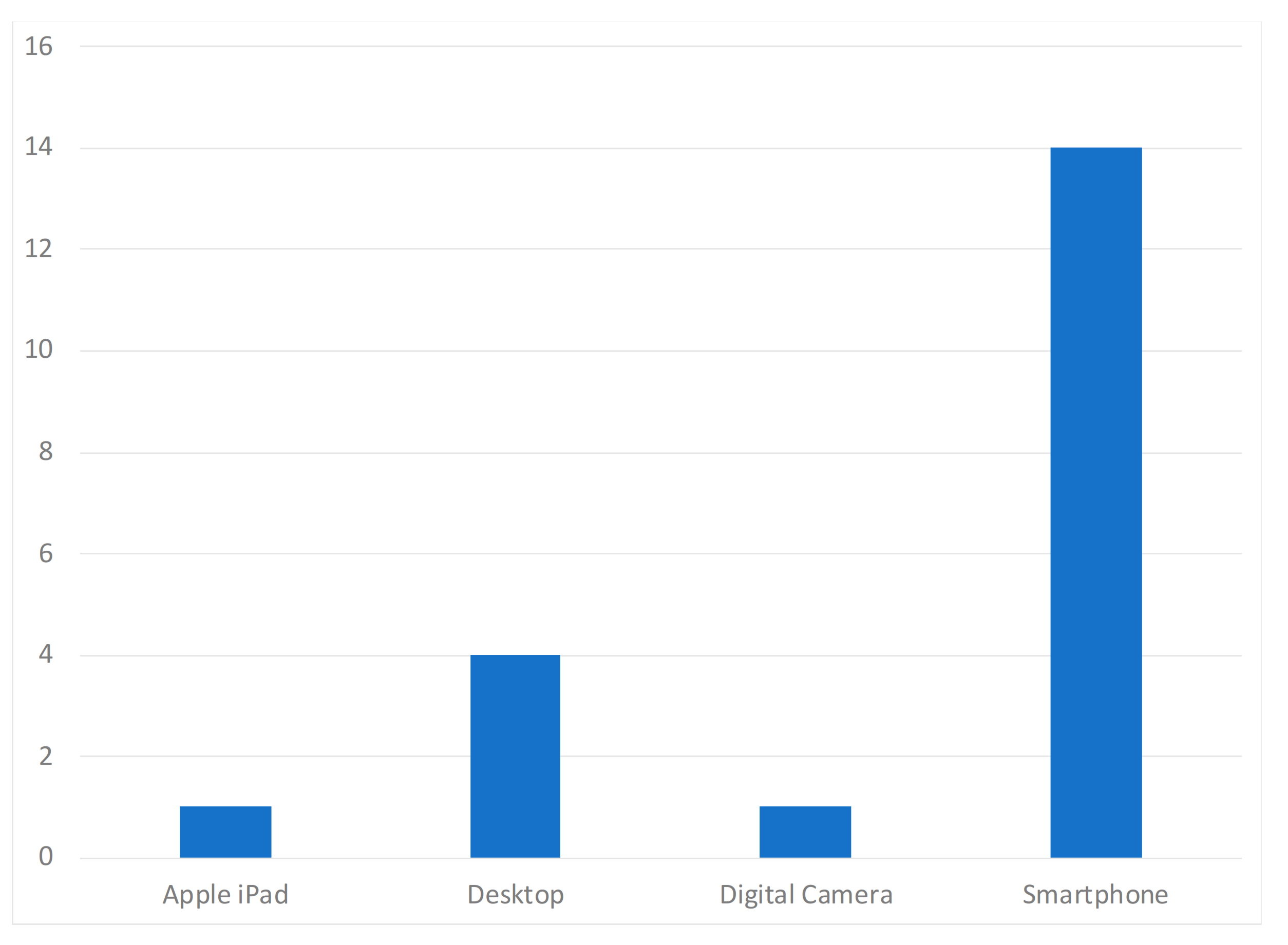

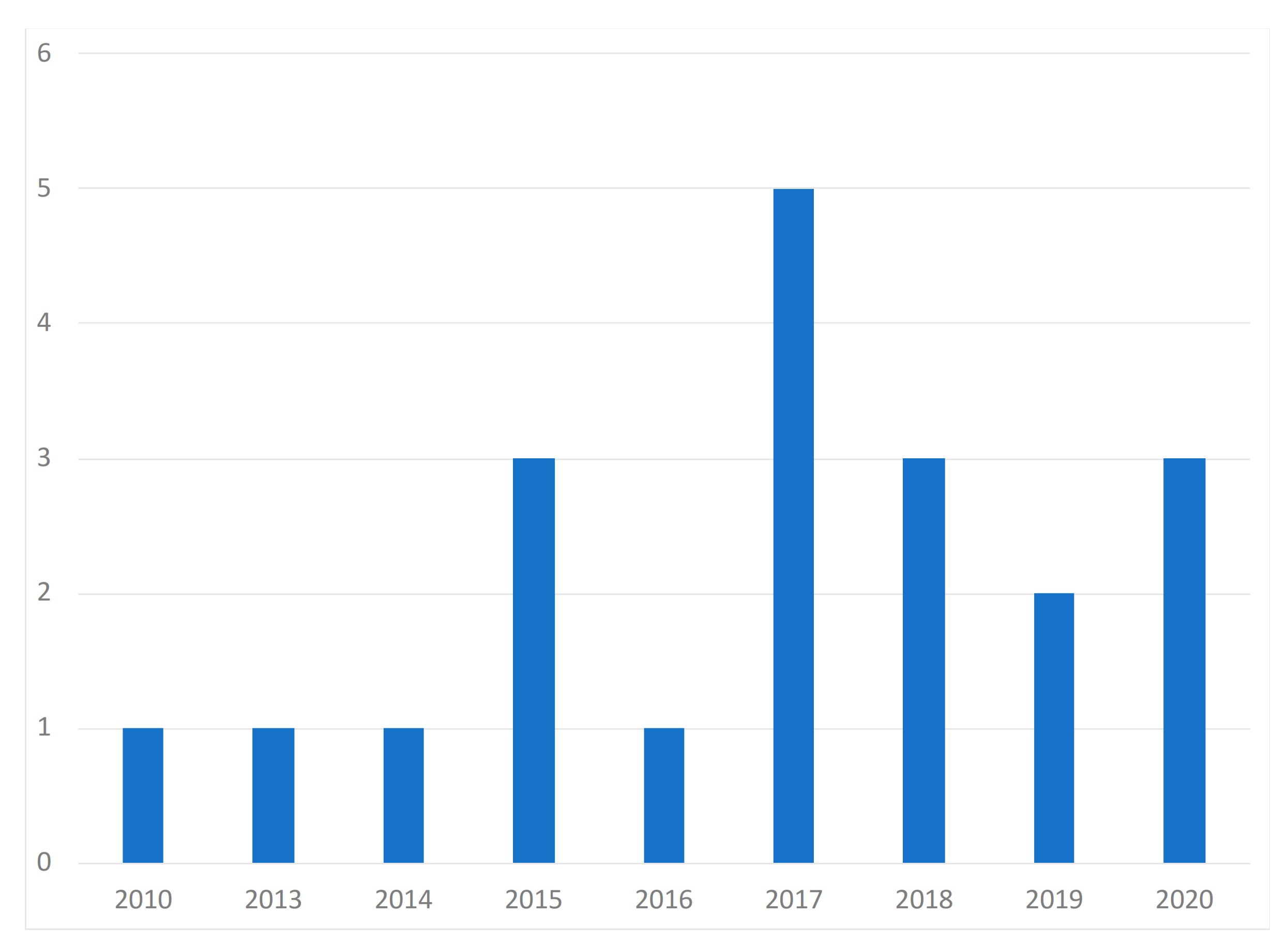

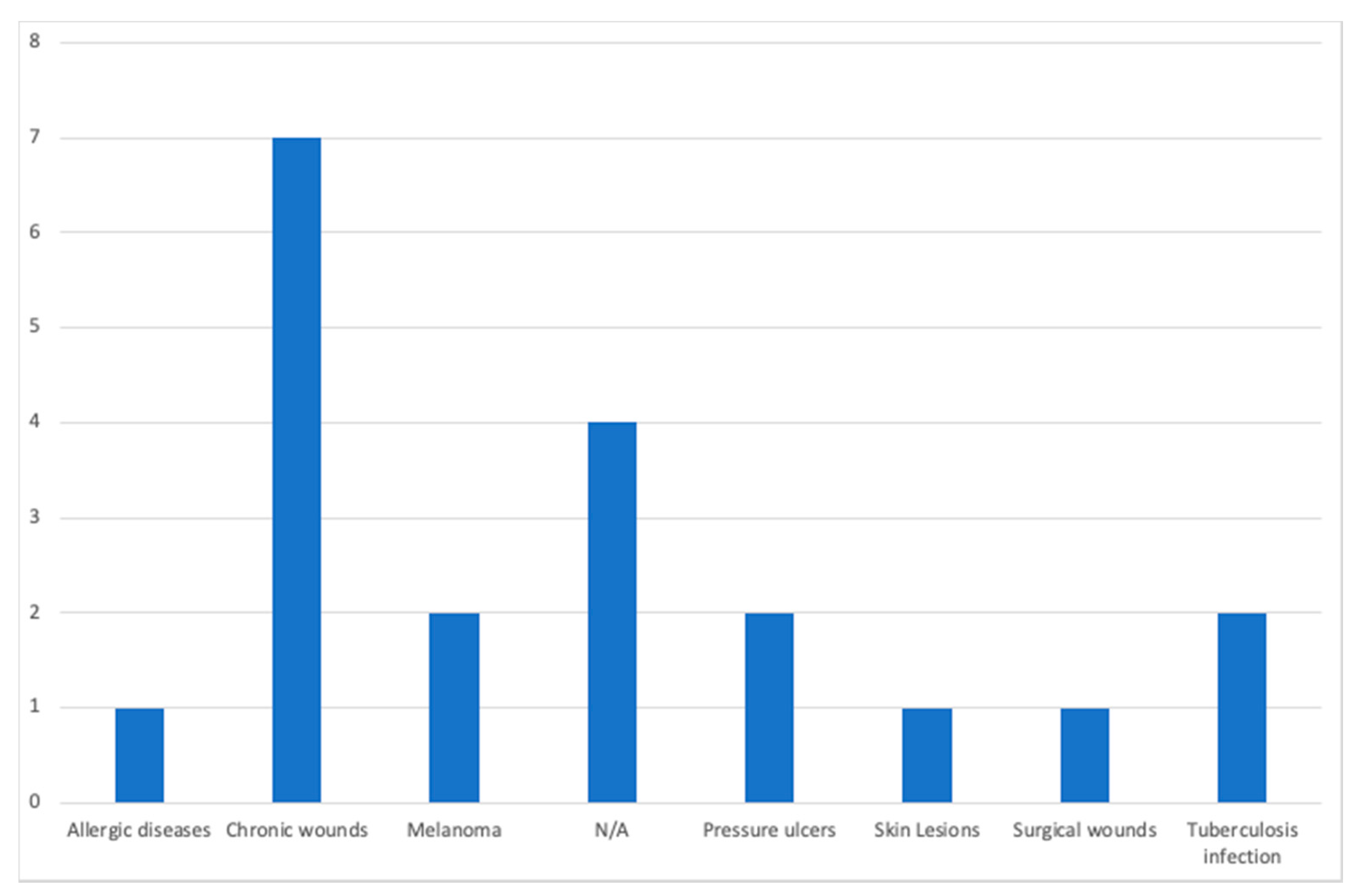

| Paper | Year of Publication | Population | Purpose of the Study | Devices | Dataset Availability | Methods | Diseases |
|---|---|---|---|---|---|---|---|
| Casal-Guisande et al. [29] | 2020 | N/A | Monitor and analyze the chronic wound treatment process | Desktop | Not available | Segmentation, threshold, color detection | Pressure ulcers |
| Zahia et al. [30] | 2020 | N/A | Measure the depth, area, volume, major axis, and minor axis of chronic wounds | Smartphone | MS COCO [31] and ImageNet [32] datasets | Mask region-based convolutional neural network (Mask R-CNN) model | Chronic wounds |
| Cazzolato et al. [33] | 2020 | People with chronic wounds | Segment and measure skin ulcers | Smartphone | Datasets from [34,35] | Rule-based ulcer segmentation and measurement (URule) framework | Pressure ulcers |
| Wu et al. [36] | 2019 | Volunteers | Detection of wounds with image processing techniques | Smartphone | Not available | Segmentation, threshold, color detection | N/A |
| Liu et al. [37] | 2019 | 54 patients | Detection and measurement of the wound area | Smartphone | Available on request | Least squares conformal map (LSCM) algorithm | N/A |
| Huang et al. [38] | 2018 | N/A | Measurement of wound size | Smartphone | Not available | Contrast-limited adaptive histogram equalization (CLAHE) to enhance local contrast | Chronic wounds |
| Naraghi et al. [39] | 2018 | People with tuberculosis | Detection of wounds in people with tuberculosis | Smartphone | Not available | Photogrammetric reconstruction | Tuberculosis infection |
| Chen et al. [40] | 2018 | N/A | Evaluation of a surgical wound | Smartphone | Dataset available in [41] | Segmentation, threshold, color detection | Surgical wounds |
| Gupta [24] | 2017 | 20 wound images | Mobile system for the segmentation and identification of a wound | Smartphone | Not available | Segmentation, threshold, color detection | Chronic wounds |
| Sirazitdinova and Deserno [25] | 2017 | N/A | Automatic wound reconstruction | Smartphone | Not available | Color correction, tissue segmentation | Skin lesions |
| Tang et al. [42] | 2017 | N/A | Analysis of the evolution of wounds | Smartphone | Not available | Scaling method | Chronic wounds |
| Garcia-Zapirain et al. [43] | 2017 | 24 clinical images of pressure ulcers | Segmentation and identification of chronic wounds | Desktop | Not publicly available | Linear combination of discrete Gaussians (LCDG) model | Chronic wounds |
| Dendere et al. [44] | 2017 | 10 subjects | Measure the size of a wound | Smartphone | Not available | Tuberculin skin test (TST) | Tuberculosis infection |
| Yee et al. [45] | 2016 | N/A | Measurement, tracking, and diagnosis of wounds | Smartphone | Not available | Seymour wound model 0910 | Chronic wounds |
| Satheesha et al. [46] | 2015 | People with skin cancer | Segmentation and analysis of wounds to detect their shape | Smartphone | Not available | PH2 dermoscopy image database, 2D fast Fourier transform | Melanoma |
| Cheung et al. [47] | 2015 | N/A | Diagnosis and treatment of chronic wounds | Smartphone | Not available | Photogrammetric reconstruction | Melanoma |
| Pires and Garcia [23] | 2015 | N/A | Calculation of the wound area | Desktop | Not available | Segmentation, threshold, color detection | N/A |
| Bulan [48] | 2014 | 36 patients with allergic diseases | Identification of a wound in images | Desktop | Not available | Linear discriminant analysis (LDA) | Allergic diseases |
| Hettiarachchi et al. [22] | 2013 | 20 patients | Measurement of wound area with segmentation techniques | Smartphone | Not available | Segmentation, threshold, color detection | Chronic wounds |
| Kanade et al. [49] | 2010 | Wide range of people | Restore, detect, and track cells and cellular tissues | Desktop | Not available | HCRF model | N/A |
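Several studies in the table above, such as Casal-Guisande et al. [29], Wu et al. [36], and Hettiarachchi et al. [22], combine segmentation, thresholding, and color detection. The color-detection step can be sketched as a per-pixel rule in the HSV color space using Python's standard `colorsys` module. This is a minimal sketch under stated assumptions: it treats wound tissue as saturated, reasonably bright red, and the hue, saturation, and value limits (and the function names) are hypothetical placeholders that would need tuning against labeled images and the lighting conditions of each application.

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[int, int, int]  # 8-bit red, green, blue


def is_wound_color(rgb: RGB, max_hue_deg: float = 20.0,
                   min_saturation: float = 0.4, min_value: float = 0.2) -> bool:
    """Hypothetical color rule: accept saturated, not-too-dark, reddish pixels."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # all components in [0, 1]
    hue_deg = h * 360.0
    # Red hues sit at both ends of the hue circle (near 0 and near 360 degrees).
    reddish = hue_deg <= max_hue_deg or hue_deg >= 360.0 - max_hue_deg
    return reddish and s >= min_saturation and v >= min_value


def color_mask(image: List[List[RGB]]) -> List[List[bool]]:
    """Binary mask marking the pixels that pass the color rule."""
    return [[is_wound_color(px) for px in row] for row in image]
```

With these placeholder limits, a saturated red pixel such as (180, 30, 30) passes the rule, while a light skin tone such as (224, 172, 150) fails the saturation test. Working in HSV separates hue from brightness, which makes a simple rule somewhat less sensitive to illumination than thresholds on raw RGB values.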

| Action | Occurrences |
|---|---|
| Perform segmentation with threshold | 11 |
| Measure the wound area as the number of pixels | 5 |
| Convert image to grayscale | 4 |
| Perform 3D reconstruction | 4 |
| Crop the center of the wound | 3 |
| Extract the saturation channel from the color space | 3 |
| Perform histogram equalization | 3 |
| Perform threshold to enhance the contrast of the image | 3 |
| Adjust the size of the rectangle to the wound | 2 |
| Apply the snakes (active contour) model to define an energy function of the image | 2 |
| Apply the level set algorithm to find the boundary of the wound | 2 |
| Convert an intensity image to a binary image | 2 |
| Extract asymmetry, border irregularity, color, and diameter | 2 |
| Extract skin superpixels | 2 |
| Find contours of the wound | 2 |
| Use a support vector machine (SVM) to classify the skin | 2 |
| Insert a rectangular box in the image | 2 |
| Perform a dilation operation | 2 |
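Two of the recurring preprocessing steps in the table above, converting the image to grayscale and performing histogram equalization, are short enough to sketch directly. This minimal example assumes 8-bit RGB input and uses the ITU-R BT.601 luma weights, which are one standard choice; the reviewed studies may use other conversions. It implements global histogram equalization only; the CLAHE algorithm listed for Huang et al. [38] is a local, contrast-limited variant that is not shown here.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def to_grayscale(image: List[List[RGB]]) -> List[List[int]]:
    """Luma conversion with the ITU-R BT.601 weights (0.299, 0.587, 0.114)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]


def equalize_histogram(gray: List[List[int]]) -> List[List[int]]:
    """Global histogram equalization of a non-empty 8-bit grayscale image."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1

    # Cumulative distribution function (CDF) of the intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest occupied level
    if total == cdf_min:
        return [row[:] for row in gray]  # single intensity: nothing to stretch

    # Look-up table that spreads the occupied levels over the full 0..255 range.
    lut = [max(0, round((c - cdf_min) * 255 / (total - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in gray]
```

For example, the image `[[10, 20], [30, 40]]` is stretched to `[[0, 85], [170, 255]]`. Equalizing before thresholding can make the wound and skin intensities easier to separate in low-contrast photographs, at the cost of also amplifying noise.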

| Action | Occurrences |
|---|---|
| Measure the wound area as the number of pixels | 4 |
| Perform segmentation with threshold | 4 |
| Find contours of the wound | 3 |
| Perform threshold to enhance the contrast of the image | 3 |
| Convert image to grayscale | 2 |
| Detect the wound | 2 |
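Contour-related steps that recur in the preceding tables, such as finding the contours of the wound and performing a dilation operation, can be illustrated with binary morphology. The sketch below is a minimal example, assuming a binary mask produced by an earlier segmentation step and a 3×3 square structuring element. It extracts the boundary as the mask minus its erosion, which is a simpler alternative to the ordered contour tracing (for example, OpenCV's `findContours`) that applications typically use. All function names are hypothetical.

```python
from typing import Iterator, List

Mask = List[List[bool]]  # True marks a wound pixel


def _window(mask: Mask, y: int, x: int) -> Iterator[bool]:
    """Yield the 3x3 neighborhood of (y, x); pixels outside the image are background."""
    h, w = len(mask), len(mask[0])
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            ny, nx = y + dy, x + dx
            yield 0 <= ny < h and 0 <= nx < w and mask[ny][nx]


def dilate(mask: Mask) -> Mask:
    """Binary dilation with a 3x3 square structuring element."""
    return [[any(_window(mask, y, x)) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def erode(mask: Mask) -> Mask:
    """Binary erosion with a 3x3 square structuring element."""
    return [[all(_window(mask, y, x)) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def boundary(mask: Mask) -> Mask:
    """Morphological boundary: mask pixels that do not survive erosion."""
    eroded = erode(mask)
    return [[m and not e for m, e in zip(mrow, erow)]
            for mrow, erow in zip(mask, eroded)]


def count(mask: Mask) -> int:
    """Number of True pixels, i.e., the area in pixels."""
    return sum(sum(row) for row in mask)
```

For a 5×5 mask containing a 3×3 wound block in the middle, `count` gives 9, the dilated mask covers all 25 pixels, and the boundary consists of the 8 outer pixels of the block. Dilation is often used to close small gaps in a thresholded mask before the contour is extracted.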

| Mobile Devices | Desktop Computers |
|---|---|
| Measure the wound area anywhere and at any time | Measure the wound area in a fixed location |
| Mobile devices now embed high-quality cameras | Measurement depends on external cameras, which are expensive |
| Mobile devices embed other sensors that may allow the cameras to be calibrated | Camera calibration depends on other external devices |
| Available resources are limited | Available resources can be expanded as needed, at additional cost |
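The comparison above notes that camera calibration matters for measurement, because a pixel count only becomes a wound area once the physical size of a pixel is known. One simple approach, shown here as an assumption rather than as the method of any reviewed study, is to photograph a square reference marker of known side length next to the wound. The sketch assumes that the marker and the wound lie in the same flat plane and that perspective distortion is negligible; approaches that use 3D information, such as the 3D transformation of Liu et al. [37], target cases where these assumptions do not hold. The function names are hypothetical.

```python
def area_per_pixel_cm2(marker_side_px: float, marker_side_cm: float) -> float:
    """Physical area covered by one pixel, derived from a square reference marker.

    Assumes the marker lies in the wound plane and the camera is roughly
    perpendicular to it, so one scale factor applies to both image axes.
    """
    if marker_side_px <= 0 or marker_side_cm <= 0:
        raise ValueError("marker sizes must be positive")
    cm_per_px = marker_side_cm / marker_side_px
    return cm_per_px * cm_per_px


def wound_area_cm2(wound_pixels: int, marker_side_px: float,
                   marker_side_cm: float) -> float:
    """Convert a wound pixel count (e.g., from segmentation) into square centimetres."""
    return wound_pixels * area_per_pixel_cm2(marker_side_px, marker_side_cm)
```

For example, a 2 cm marker that spans 40 pixels gives 0.05 cm per pixel, so a wound segmented as 1500 pixels corresponds to about 3.75 cm².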

| Action | Occurrences |
|---|---|
| Perform segmentation with threshold | 15 |
| Measure the wound area as the number of pixels | 13 |
| Convert image to grayscale | 6 |
| Perform threshold to enhance the contrast of the image | 6 |
| Find contours of the wound | 5 |
| Perform 3D reconstruction | 4 |
| Perform histogram equalization | 3 |
| Crop the center of the wound | 3 |
| Extract the saturation channel from the color space | 3 |
| Perform a dilation operation | 2 |
| Detect the wound | 2 |
| Insert a rectangular box in the image | 2 |
| Adjust the size of the rectangle to the wound | 2 |
| Apply the level set algorithm to find the boundary of the wound | 2 |
| Apply the snakes (active contour) model to define an energy function of the image | 2 |
| Convert an intensity image to a binary image | 2 |
| Extract asymmetry, border irregularity, color, and diameter | 2 |
| Use a support vector machine (SVM) to classify the skin | 2 |
| Extract skin superpixels | 2 |
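RQ2 identifies the points of the wound contour as a key feature, and finding the contours of the wound is among the most frequent actions in the table above. Once a contour is available as an ordered list of points, its enclosed area and perimeter follow directly. The sketch below computes the area with the shoelace formula; this is an assumption about how contour points might be used, not a description of a specific reviewed study. Results are in pixel units and still need a pixel-to-physical scale, and the polygon area can differ slightly from a pixel count of the same region because the contour runs through pixel positions rather than around pixel edges.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


def polygon_area(contour: List[Point]) -> float:
    """Area enclosed by an ordered, closed contour, via the shoelace formula.

    The contour is assumed to be a simple (non-self-intersecting) polygon;
    the last point connects back to the first.
    """
    n = len(contour)
    if n < 3:
        return 0.0
    twice_area = 0.0
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    # The sign depends on orientation (clockwise or counter-clockwise).
    return abs(twice_area) / 2.0


def perimeter(contour: List[Point]) -> float:
    """Length of the closed contour as a sum of Euclidean segment lengths."""
    n = len(contour)
    if n < 2:
        return 0.0
    return sum(math.hypot(contour[(i + 1) % n][0] - contour[i][0],
                          contour[(i + 1) % n][1] - contour[i][1])
               for i in range(n))
```

For the triangle with vertices (0, 0), (4, 0), and (0, 3), `polygon_area` returns 6.0 and `perimeter` returns 12.0; the same area is returned whichever direction the points are listed in.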

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ferreira, F.; Pires, I.M.; Costa, M.; Ponciano, V.; Garcia, N.M.; Zdravevski, E.; Chorbev, I.; Mihajlov, M. A Systematic Investigation of Models for Color Image Processing in Wound Size Estimation. Computers 2021, 10, 43. https://doi.org/10.3390/computers10040043
