Evolving and Novel Applications of Artificial Intelligence in Cancer Imaging

Simple Summary

Abstract

1. Introduction

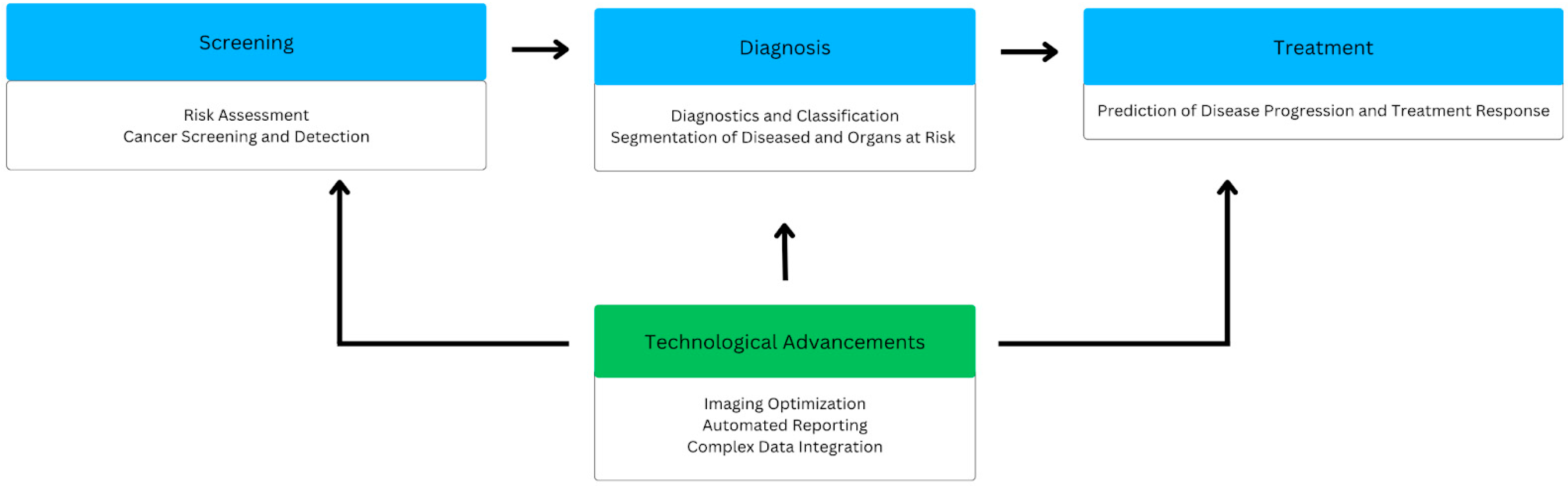

2. Screening

2.1. Risk Assessment

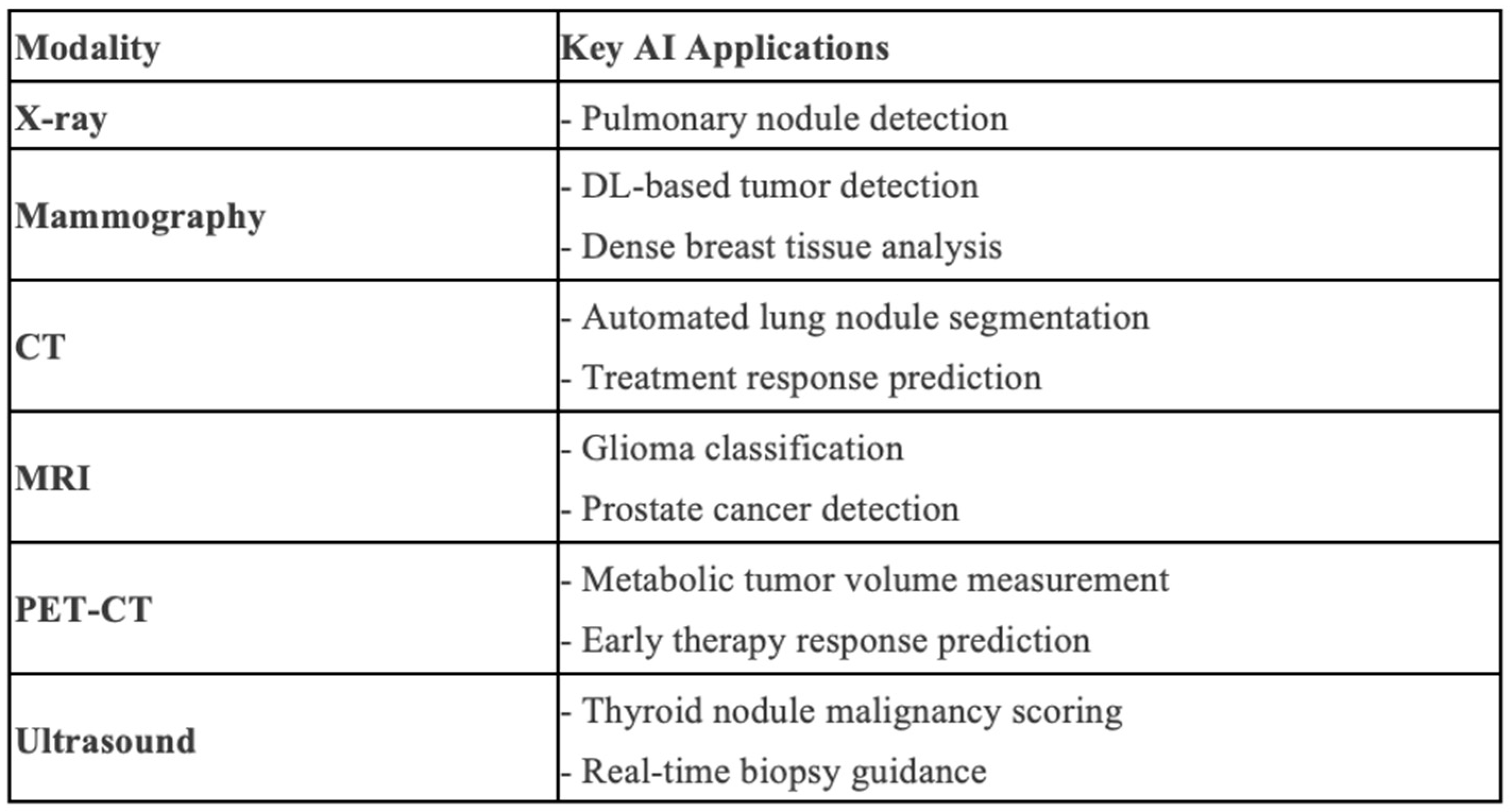

2.2. Cancer Detection

3. Diagnosis

3.1. Diagnostics and Classification

3.2. Segmentation of Disease and Organs at Risk

4. Treatment

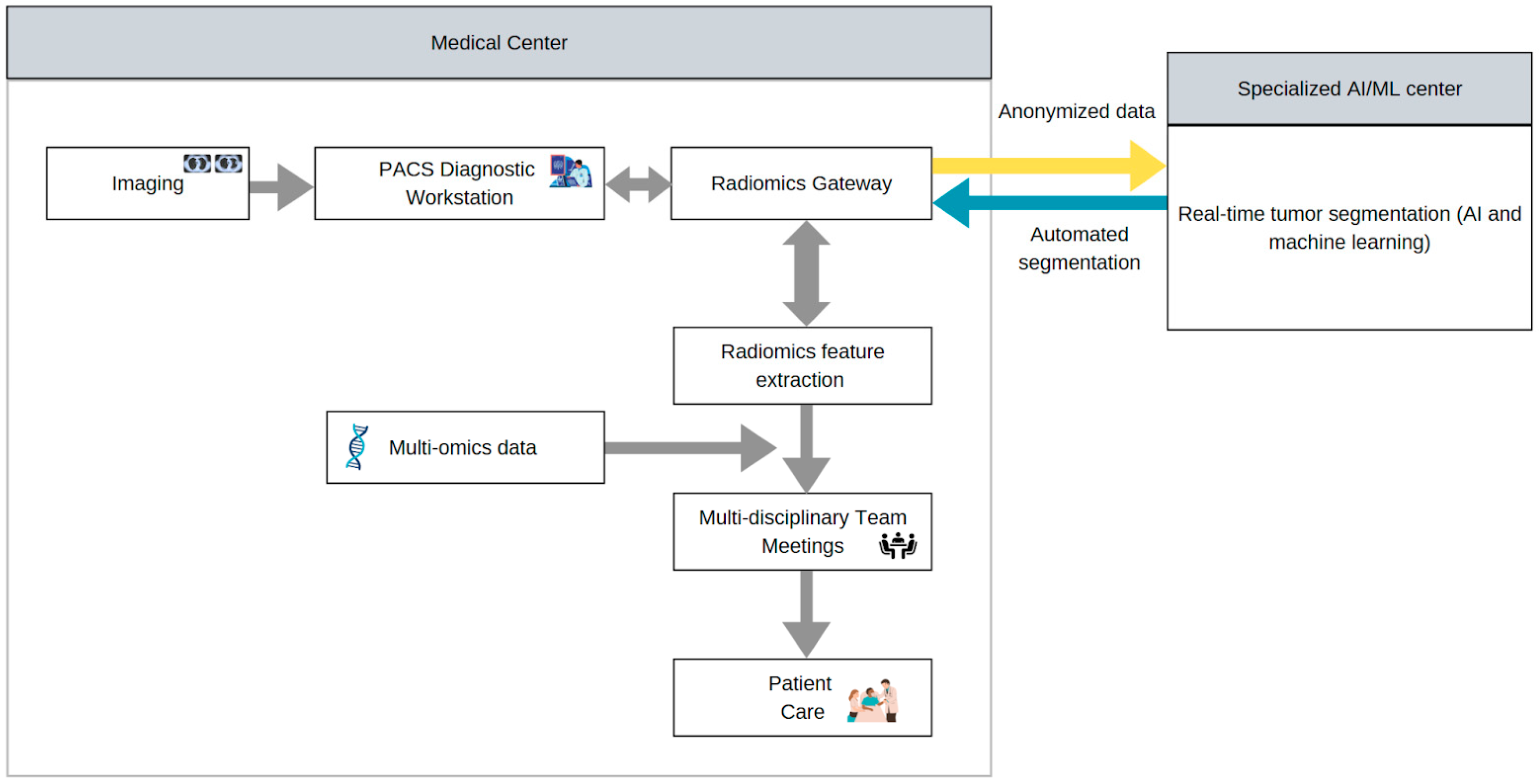

5. Technological Advancements

5.1. Imaging Optimization

5.2. Automated Reporting

5.3. Complex Data Integration

6. Limitations

6.1. Clinical Limitations

6.2. Professional Limitations

6.3. Technical Limitations

7. Future Directions

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |
| CT | Computed Tomography |
| PET | Positron Emission Tomography |
| AUC | Area Under the Curve |
| CLAIM | Checklist for Artificial Intelligence in Medical Imaging |
| TNBC | Triple-Negative Breast Cancer |
| PSMA | Prostate-Specific Membrane Antigen |
| PACS | Picture Archiving and Communication System |
| SNR | Signal-to-Noise Ratio |
| HCC | Hepatocellular Carcinoma |
| TACE | Transarterial Chemoembolization |
| PVE | Portal Vein Embolization |
| CRLM | Colorectal Liver Metastasis |
| EHR | Electronic Health Record |
| NLP | Natural Language Processing |
| LLM | Large Language Model |
| NSCLC | Non-Small-Cell Lung Carcinoma |
| TCGA | The Cancer Genome Atlas |
| TCIA | The Cancer Imaging Archive |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pallumeera, M.; Giang, J.C.; Singh, R.; Pracha, N.S.; Makary, M.S. Evolving and Novel Applications of Artificial Intelligence in Cancer Imaging. Cancers 2025, 17, 1510. https://doi.org/10.3390/cancers17091510
