Lung Cancer Risk Prediction in Patients with Persistent Pulmonary Nodules Using the Brock Model and Sybil Model

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Cohorts

2.2. Radiologic Assessment

2.3. Performance of Brock Model and Sybil Model

2.4. Development of Machine Learning Models

2.5. Statistical Analysis

3. Results

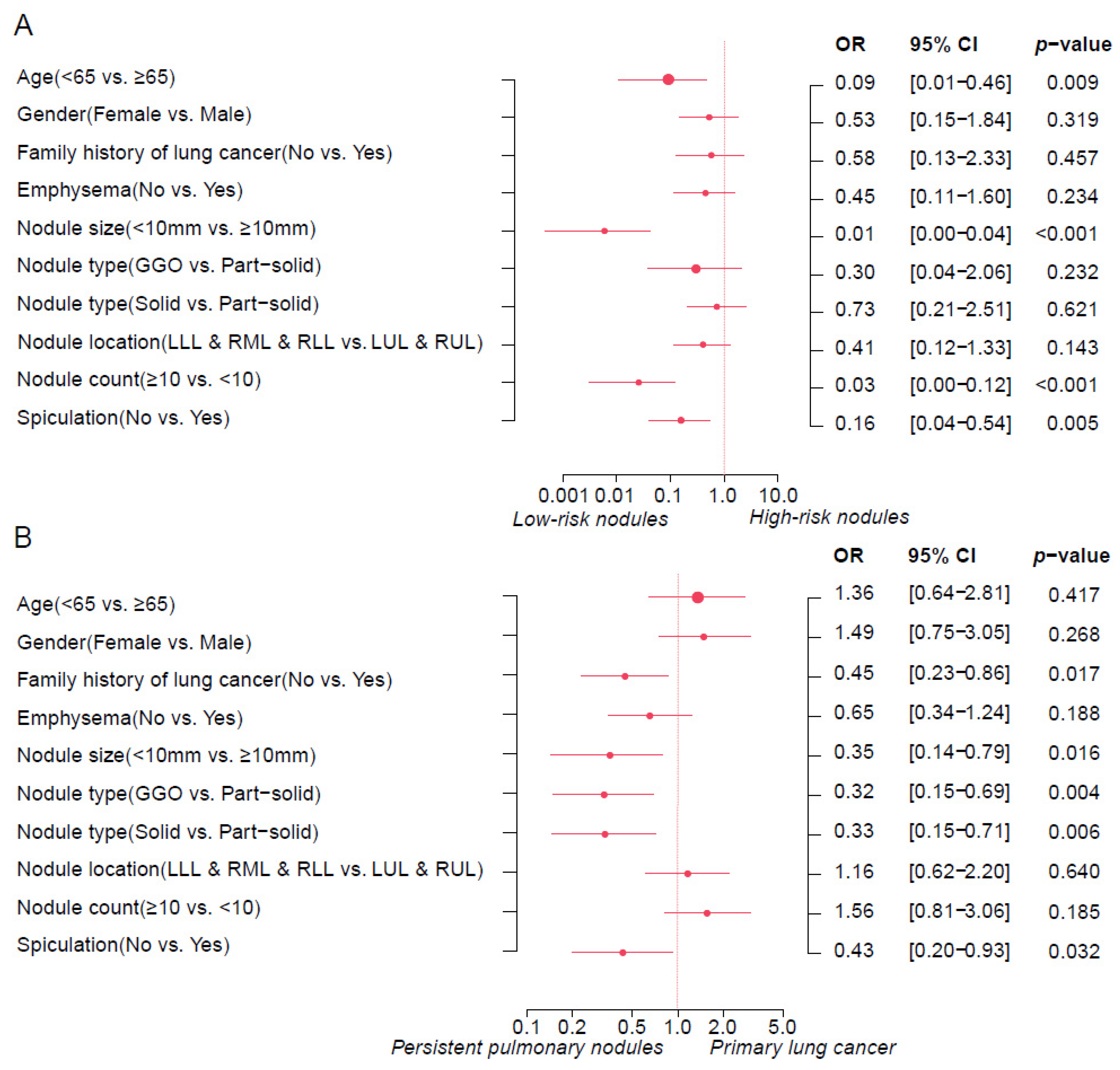

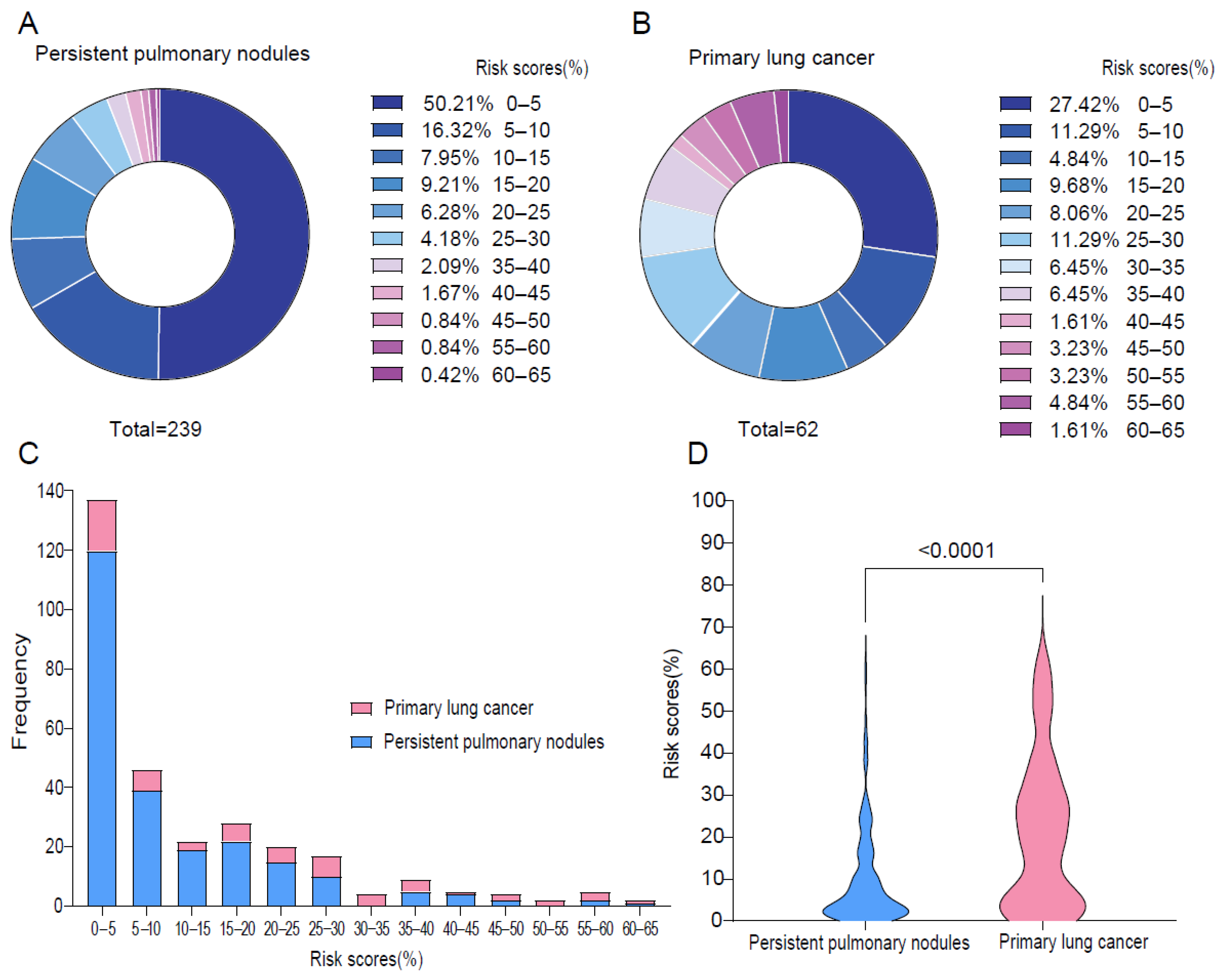

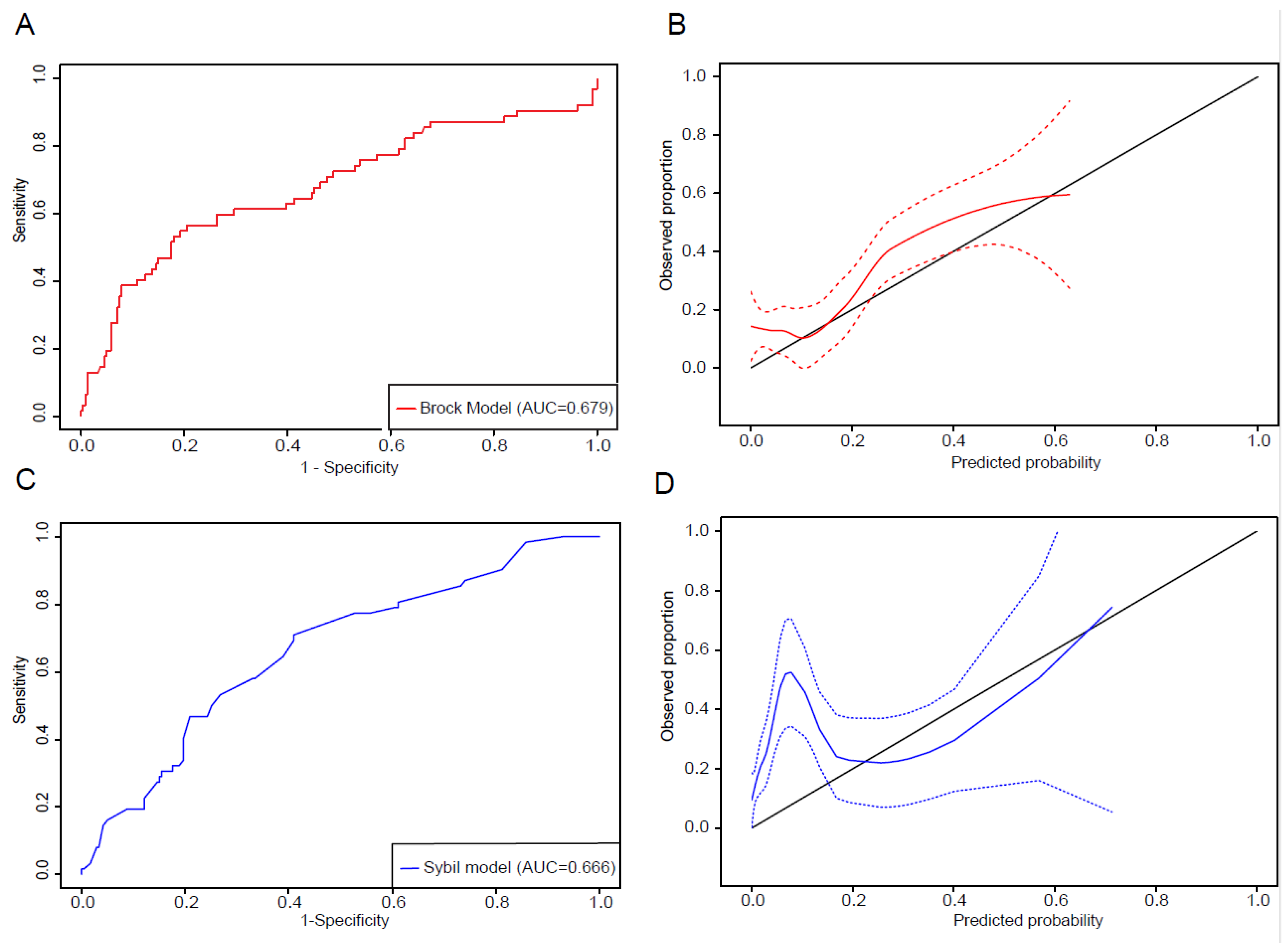

3.1. Lung Cancer Risk Prediction of Persistent Lung Nodules by Brock Model

3.2. Assessment of Sybil Model Performance

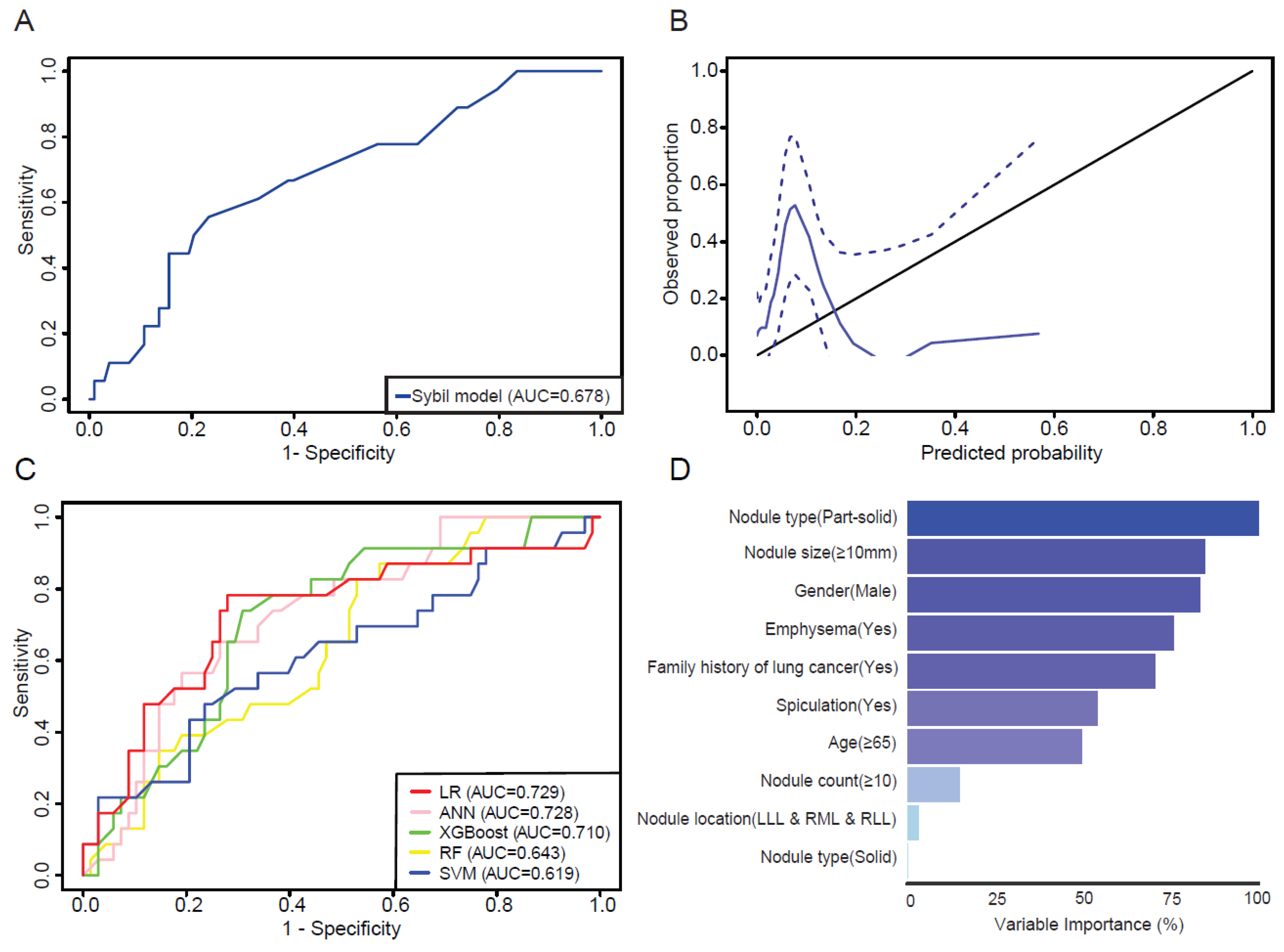

3.3. Evaluation of Machine Learning Models

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Overall (N = 130) | Low-Risk Nodules (N = 51) | High-Risk Nodules (N = 79) | p-Value |
|---|---|---|---|---|
| Age | | | | |
| <65 | 32 (24.6%) | 16 (31.4%) | 16 (20.3%) | 0.219 |
| ≥65 | 98 (75.4%) | 35 (68.6%) | 63 (79.7%) | |
| Gender | | | | |
| Male | 45 (34.6%) | 17 (33.3%) | 28 (35.4%) | 0.954 |
| Female | 85 (65.4%) | 34 (66.7%) | 51 (64.6%) | |
| Race | | | | |
| White | 108 (83.1%) | 41 (80.4%) | 67 (84.8%) | 0.804 * |
| Asian | 9 (6.9%) | 5 (9.8%) | 4 (5.1%) | |
| Black | 10 (7.7%) | 4 (7.8%) | 6 (7.6%) | |
| Others | 3 (2.3%) | 1 (2.0%) | 2 (2.5%) | |
| Ethnicity | | | | |
| Non-Hispanic or Latino | 117 (90.0%) | 45 (88.2%) | 72 (91.1%) | 0.818 * |
| Hispanic or Latino | 6 (4.6%) | 3 (5.9%) | 3 (3.8%) | |
| Unknown | 7 (5.4%) | 3 (5.9%) | 4 (5.1%) | |
| Smoking history | | | | |
| Current | 5 (3.8%) | 1 (2.0%) | 4 (5.1%) | 0.754 * |
| Former | 88 (67.7%) | 36 (70.6%) | 52 (65.8%) | |
| Never | 37 (28.5%) | 14 (27.5%) | 23 (29.1%) | |
| Histology | | | | |
| LUAD | 107 (82.3%) | 36 (70.6%) | 71 (89.9%) | 0.008 * |
| LUSC | 17 (13.1%) | 10 (19.6%) | 7 (8.9%) | |
| Others | 6 (4.6%) | 5 (9.8%) | 1 (1.3%) | |
| Family history of lung cancer | | | | |
| No | 94 (72.3%) | 34 (66.7%) | 60 (75.9%) | 0.340 |
| Yes | 36 (27.7%) | 17 (33.3%) | 19 (24.1%) | |
| Emphysema | | | | |
| No | 88 (67.7%) | 35 (68.6%) | 53 (67.1%) | 1.000 |
| Yes | 42 (32.3%) | 16 (31.4%) | 26 (32.9%) | |
| Nodule size (mm) | | | | |
| <10 | 29 (22.3%) | 26 (51.0%) | 3 (3.8%) | <0.001 * |
| ≥10 | 101 (77.7%) | 25 (49.0%) | 76 (96.2%) | |
| Nodule type | | | | |
| GGO | 20 (15.4%) | 13 (25.5%) | 7 (8.9%) | 0.032 |
| Part-solid | 56 (43.1%) | 18 (35.3%) | 38 (48.1%) | |
| Solid | 54 (41.5%) | 20 (39.2%) | 34 (43.0%) | |
| Nodule location | | | | |
| LUL & RUL | 73 (56.2%) | 24 (47.1%) | 49 (62.0%) | 0.134 |
| LLL & RML & RLL | 57 (43.8%) | 27 (52.9%) | 30 (38.0%) | |
| Nodule count | | | | |
| <10 | 74 (56.9%) | 19 (37.3%) | 55 (69.6%) | <0.001 |
| ≥10 | 56 (43.1%) | 32 (62.7%) | 24 (30.4%) | |
| Nodule spiculation | | | | |
| No | 56 (43.1%) | 37 (72.5%) | 19 (24.1%) | <0.001 |
| Yes | 74 (56.9%) | 14 (27.5%) | 60 (75.9%) | |

| | Overall (N = 301) | Persistent Pulmonary Nodules (N = 239) | Primary Lung Cancer (N = 62) | p-Value |
|---|---|---|---|---|
| Age | | | | |
| <65 | 76 (25.2%) | 61 (25.5%) | 15 (24.2%) | 0.960 |
| ≥65 | 225 (74.8%) | 178 (74.5%) | 47 (75.8%) | |
| Gender | | | | |
| Male | 102 (33.9%) | 85 (35.6%) | 17 (27.4%) | 0.291 |
| Female | 199 (66.1%) | 154 (64.4%) | 45 (72.6%) | |
| Race | | | | |
| White | 246 (81.7%) | 197 (82.4%) | 49 (79.0%) | 0.338 * |
| Asian | 24 (8.0%) | 18 (7.5%) | 6 (9.7%) | |
| Black | 18 (6.0%) | 12 (5.0%) | 6 (9.7%) | |
| Others | 13 (4.3%) | 12 (5.0%) | 1 (1.6%) | |
| Ethnicity | | | | |
| Non-Hispanic or Latino | 278 (92.4%) | 218 (91.2%) | 60 (96.8%) | 0.295 * |
| Hispanic or Latino | 17 (5.6%) | 16 (6.7%) | 1 (1.6%) | |
| Unknown | 6 (2.0%) | 5 (2.1%) | 1 (1.6%) | |
| Smoking history | | | | |
| Current | 18 (6.0%) | 14 (5.9%) | 4 (6.5%) | 0.170 * |
| Former | 201 (66.8%) | 154 (64.4%) | 47 (75.8%) | |
| Never | 82 (27.2%) | 71 (29.7%) | 11 (17.7%) | |
| Family history of lung cancer | | | | |
| No | 205 (68.1%) | 171 (71.5%) | 34 (54.8%) | 0.014 |
| Yes | 94 (31.2%) | 66 (27.6%) | 28 (45.2%) | |
| Missing | 2 (0.7%) | 2 (0.8%) | 0 (0%) | |
| Emphysema | | | | |
| No | 184 (61.1%) | 156 (65.3%) | 28 (45.2%) | 0.006 |
| Yes | 117 (38.9%) | 83 (34.7%) | 34 (54.8%) | |
| Nodule size (mm) | | | | |
| <10 | 100 (33.2%) | 92 (38.5%) | 8 (12.9%) | <0.001 |
| ≥10 | 201 (66.8%) | 147 (61.5%) | 54 (87.1%) | |
| Nodule type | | | | |
| GGO | 121 (40.2%) | 105 (43.9%) | 16 (25.8%) | <0.001 |
| Part-solid | 85 (28.2%) | 53 (22.2%) | 32 (51.6%) | |
| Solid | 95 (31.6%) | 81 (33.9%) | 14 (22.6%) | |
| Nodule location | | | | |
| LUL & RUL | 164 (54.5%) | 131 (54.8%) | 33 (53.2%) | 0.936 |
| LLL & RML & RLL | 137 (45.5%) | 108 (45.2%) | 29 (46.8%) | |
| Nodule count | | | | |
| <10 | 141 (46.8%) | 119 (49.8%) | 22 (35.5%) | 0.061 |
| ≥10 | 160 (53.2%) | 120 (50.2%) | 40 (64.5%) | |
| Nodule spiculation | | | | |
| No | 243 (80.7%) | 202 (84.5%) | 41 (66.1%) | 0.002 |
| Yes | 58 (19.3%) | 37 (15.5%) | 21 (33.9%) | |
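
The p-values for the two-level rows in the table above can be checked directly from the reported counts. The sketch below is only an illustration: this section does not state which test the authors used, so it assumes a Pearson chi-square test with Yates' continuity correction on each 2×2 table. The helper name `yates_chi2_pvalue` is hypothetical, and the counts are copied from the Emphysema and Nodule spiculation rows.

```python
import math


def yates_chi2_pvalue(a, b, c, d):
    """Chi-square statistic and p-value for the 2x2 table [[a, b], [c, d]].

    Assumption: Pearson's chi-square test with Yates' continuity correction
    (1 degree of freedom). The source does not name the test it used.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Yates' correction shrinks |ad - bc| by n/2, floored at zero.
    diff = max(abs(a * d - b * c) - n / 2, 0.0)
    chi2 = n * diff ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, P(X > chi2) equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows are No / Yes; columns are persistent nodules (N = 239) / primary lung cancer (N = 62).
emphysema = yates_chi2_pvalue(156, 28, 83, 34)
spiculation = yates_chi2_pvalue(202, 41, 37, 21)

print(f"Emphysema:   chi2 = {emphysema[0]:.2f}, p = {emphysema[1]:.3f}")
print(f"Spiculation: chi2 = {spiculation[0]:.2f}, p = {spiculation[1]:.3f}")
```

Under this assumption the computed p-values round to 0.006 for emphysema and 0.002 for nodule spiculation, which agrees with the table. Rows with more than two levels, and p-values marked with an asterisk, may come from a different test and are not covered by this sketch.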

| | Persistent Pulmonary Nodules (N = 239) | Primary Lung Cancer (N = 62) | p-Value |
|---|---|---|---|
| 1-year risk | | | |
| Mean (SD) | 0.0501 (0.0935) | 0.0991 (0.138) | <0.001 |
| Median [Min, Max] | 0.0109 [0, 0.569] | 0.0310 [0.00117, 0.714] | |
| 2-year risk | | | |
| Mean (SD) | 0.0761 (0.125) | 0.144 (0.173) | <0.001 |
| Median [Min, Max] | 0.0238 [0, 0.714] | 0.0598 [0.00255, 0.824] | |
| 3-year risk | | | |
| Mean (SD) | 0.0922 (0.131) | 0.167 (0.179) | <0.001 |
| Median [Min, Max] | 0.0382 [0, 0.744] | 0.0852 [0.00783, 0.828] | |
| 4-year risk | | | |
| Mean (SD) | 0.104 (0.136) | 0.181 (0.181) | <0.001 |
| Median [Min, Max] | 0.0561 [0, 0.763] | 0.0981 [0.0110, 0.851] | |
| 5-year risk | | | |
| Mean (SD) | 0.116 (0.142) | 0.197 (0.189) | <0.001 |
| Median [Min, Max] | 0.0683 [0, 0.800] | 0.109 [0.0184, 0.869] | |
| 6-year risk | | | |
| Mean (SD) | 0.151 (0.159) | 0.246 (0.206) | <0.001 |
| Median [Min, Max] | 0.0971 [0, 0.836] | 0.154 [0.0309, 0.882] | |
| CT types | | | |
| With contrast | 127 (53.1%) | 40 (64.5%) | 0.143 |
| Without contrast | 112 (46.9%) | 22 (35.5%) | |
| CT or PET/CT | | | |
| CT | 230 (96.2%) | 57 (91.9%) | 0.274 |
| PET/CT | 9 (3.8%) | 5 (8.1%) | |

| | Persistent Pulmonary Nodules (N = 103) | Primary Lung Cancer (N = 18) | p-Value |
|---|---|---|---|
| 1-year risk | | | |
| Mean (SD) | 0.0382 (0.0789) | 0.0731 (0.0957) | 0.016 |
| Median [Min, Max] | 0.0109 [0, 0.569] | 0.0310 [0.00178, 0.352] | |
| 2-year risk | | | |
| Mean (SD) | 0.0601 (0.105) | 0.112 (0.126) | 0.025 |
| Median [Min, Max] | 0.0238 [0.00157, 0.714] | 0.0598 [0.00528, 0.463] | |
| 3-year risk | | | |
| Mean (SD) | 0.0753 (0.108) | 0.134 (0.131) | 0.019 |
| Median [Min, Max] | 0.0382 [0.00295, 0.744] | 0.0852 [0.00904, 0.508] | |
| 4-year risk | | | |
| Mean (SD) | 0.0873 (0.112) | 0.151 (0.137) | 0.019 |
| Median [Min, Max] | 0.0561 [0.00490, 0.763] | 0.0981 [0.0132, 0.530] | |
| 5-year risk | | | |
| Mean (SD) | 0.0989 (0.118) | 0.165 (0.145) | 0.019 |
| Median [Min, Max] | 0.0683 [0.00836, 0.800] | 0.109 [0.0195, 0.573] | |
| 6-year risk | | | |
| Mean (SD) | 0.132 (0.133) | 0.210 (0.161) | 0.019 |
| Median [Min, Max] | 0.104 [0.0144, 0.836] | 0.154 [0.0330, 0.653] | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, H.; Salehjahromi, M.; Godoy, M.C.B.; Qin, K.; Plummer, C.M.; Zhang, Z.; Hong, L.; Heeke, S.; Le, X.; Vokes, N.; et al. Lung Cancer Risk Prediction in Patients with Persistent Pulmonary Nodules Using the Brock Model and Sybil Model. Cancers 2025, 17, 1499. https://doi.org/10.3390/cancers17091499
