1. Introduction

Breast cancer is the most common malignancy among women globally, with a persistent risk of local recurrence even after surgical resection. Radiotherapy (RT) has been proven to effectively reduce recurrence rates, establishing itself as a cornerstone of adjuvant therapy. With advancements in radiotherapy technology, volumetric modulated arc therapy (VMAT) has become widely adopted in breast cancer treatment because of its superior dose control, which balances tumor coverage with normal tissue sparing [1,2]. However, the proximity of the breast target volume to the skin surface renders the skin highly susceptible to radiation exposure, making radiation dermatitis (RD) the most prevalent acute side effect. Grade ≥ 2 RD, in particular, not only impairs patients’ quality of life but may also lead to treatment interruptions, compromising therapeutic efficacy [3,4,5]. Thus, accurate tools that predict RD risk during treatment planning and identify high-risk patients are of significant clinical value for optimizing treatment strategies and enhancing patient well-being.

Current clinical RD risk assessment relies primarily on clinical factors (e.g., age and BMI) and dose–volume histogram (DVH) parameters for modeling. While these provide some reference value, they fail to adequately capture interindividual tissue heterogeneity and microstructural differences, resulting in limited predictive performance [6]. Recent studies highlight this issue; for example, Lee et al. [7] integrated radiomics and dosiomics to predict RD in breast cancer, achieving an AUC of approximately 0.83, indicating that traditional feature fusion struggles to fully reflect the complexity of the dose distribution and tissue response. Similarly, Xiang et al. [8] applied deep dosiomics for RD prediction, achieving an AUC of 0.82, demonstrating the potential of deep features but limited by non-dose-guided region of interest (ROI) selection and insufficient model interpretability, which hinders clinical adoption. This study aims to address these challenges by leveraging dose-guided deep learning radiomics (DLR) combined with multimodal features to increase prediction accuracy and transparency.

In recent years, advanced artificial intelligence (AI) frameworks have increasingly combined traditional handcrafted features, deep learning radiomics, and clinical variables to increase diagnostic precision and interpretability in oncology. For example, Prakashan et al. [9] proposed a sustainable nanotechnology–AI framework to empower image-guided therapy for precision healthcare. Xie et al. [10] demonstrated that integrating radiomic and dosimetric parameters improved the prediction of acute radiation dermatitis in patients with breast cancer. Similarly, Selcuk et al. [11] developed an automated deep learning-based HER2 scoring system for pathological breast cancer images via pyramid sampling to capture multiscale information.

In addition to oncology, recent advances have demonstrated the efficacy of combining deep and handcrafted radiomic features for improved disease classification across multiple clinical domains. Arafa et al. [12] developed a multistage classification model (MSCADMpox) that achieved high diagnostic accuracy for monkeypox detection. Attallah [13] integrated lightweight CNNs with handcrafted features for lung and colon cancer diagnosis, enhancing cross-domain generalizability. Similarly, Ayyad et al. [14] proposed a multimodal MR-based CAD system for precise prostate cancer assessment, whereas Abhisheka et al. [15] reported that combining deep and handcrafted ultrasound features significantly improved breast cancer diagnostic performance. Collectively, these studies reinforce the growing role of multimodal fusion and explainable AI in advancing diagnostic performance, clinical decision support, and translational applicability.

The advent of radiomics has enabled the extraction of high-dimensional quantitative features from medical images, revealing the microstructural and biological characteristics of tumors and surrounding tissues that are not discernible through conventional imaging [16]. Traditional handcrafted radiomics (HCR) relies on predefined mathematical formulas, offering some interpretability but struggling to capture complex nonlinear patterns [17,18], as reported by Jeong et al. [19], where HCR yielded low performance in treatment response prediction (AUC < 0.70). In contrast, DLR employs convolutional neural networks to automatically learn multilevel image features, demonstrating superior predictive power [8]. However, DLR models often face challenges such as overfitting due to small sample sizes, data heterogeneity, and lack of decision transparency, limiting their clinical translation. Ensemble learning, particularly stacking ensembles, enhances model stability and generalizability by integrating multiple classifiers, as demonstrated in medical imaging applications such as Wu et al. [20] for side-effect prediction. Additionally, explainable artificial intelligence (XAI) techniques, such as SHAP (quantifying feature contributions) and Grad-CAM (visualizing attention regions), improve model transparency and clinical acceptance, as emphasized in recent methodological and healthcare-oriented XAI studies [21,22,23].

To address these challenges, this study proposes a hybrid AI framework comprising the following:

Dose-Guided ROI Design: The subcutaneous region receiving ≥ 5 Gy (DLRV5Gy) was introduced to enhance the alignment of deep features with clinical RD occurrence.

Multimodal feature integration: Combining DLR, HCR, clinical factors, and DVH parameters to leverage complementary strengths.

Ensemble Learning Architecture: Employing stacking ensembles to improve model stability and generalizability.

XAI Analysis: SHAP is utilized to quantify feature contributions, and Grad-CAM is used to visualize attention regions, ensuring decision validity and clinical acceptability.

The objective of this study was to develop and validate an ensemble model that integrates DLR, clinical factors, and DVH parameters to predict Grade ≥ 2 RD risk in breast cancer patients undergoing VMAT. We hypothesize that dose-guided DLR features combined with multimodal data will significantly enhance predictive performance, with interpretability analyses supporting clinical translation.

2. Materials and Methods

2.1. Overall Research Framework

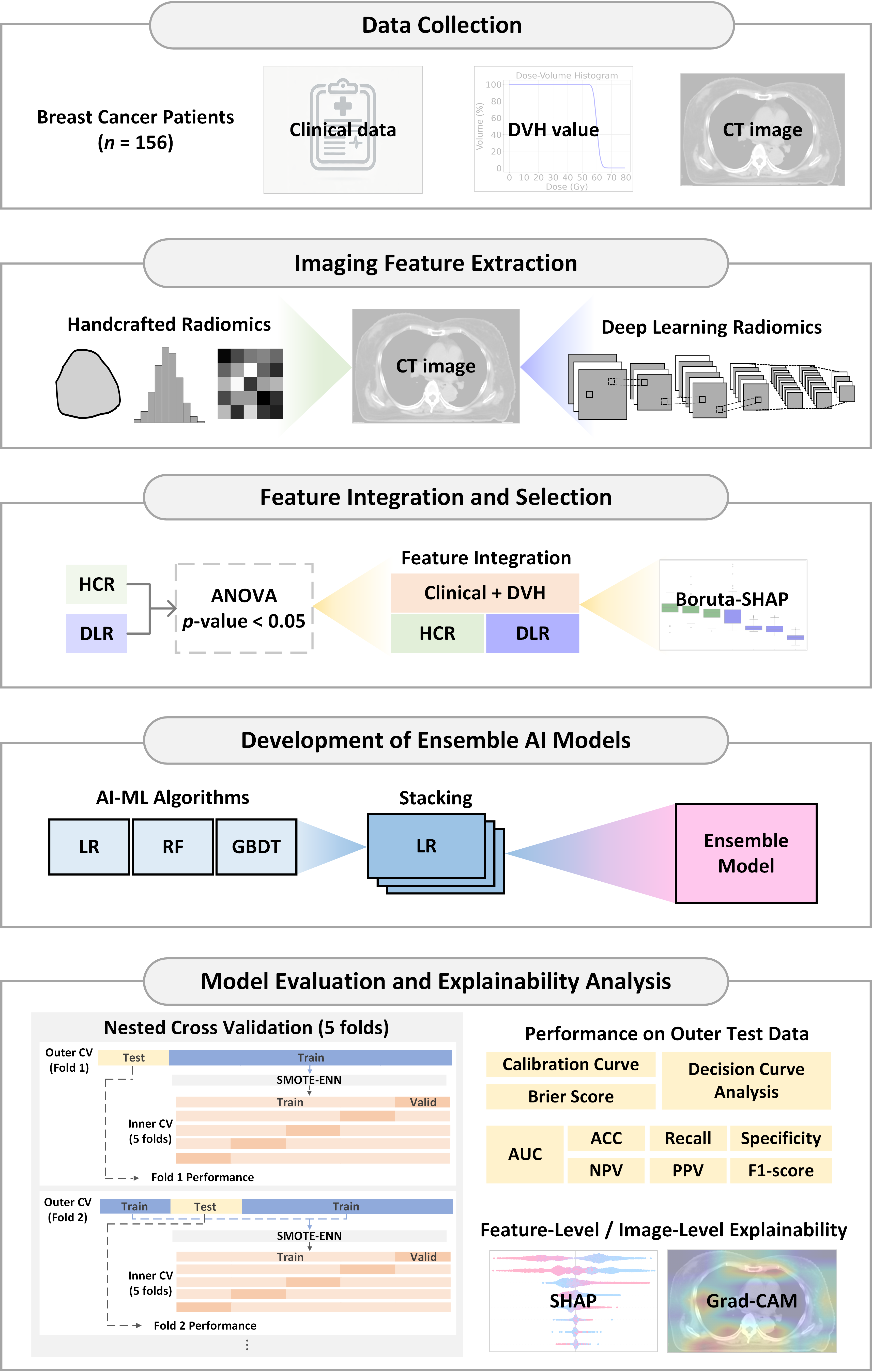

This study aims to develop a predictive model for RD risk in breast cancer patients undergoing VMAT by integrating HCR, DLR, clinical features, and DVH parameters. The research workflow, as shown in Figure 1, encompasses five main stages:

Data collection

Imaging feature extraction

Feature integration and selection

Development of ensemble AI models

Model evaluation and explainability analysis

Figure 1. Workflow of the proposed hybrid ensemble artificial intelligence (AI) framework for predicting radiation dermatitis in breast cancer patients. Abbreviations: DVH, Dose-Volume Histogram; CT, Computed Tomography; HCR, Handcrafted Radiomics; DLR, Deep Learning Radiomics; ANOVA, Analysis of Variance; LASSO, Least Absolute Shrinkage and Selection Operator; AI, Artificial Intelligence; ML, Machine Learning; LR, Logistic Regression; RF, Random Forest; GBDT, Gradient Boosting Decision Tree; AUC, Area Under the ROC Curve; ROC, Receiver Operating Characteristic; ACC, Accuracy; NPV, Negative Predictive Value; PPV, Positive Predictive Value; Grad-CAM, Gradient-weighted Class Activation Mapping; Valid, Validation set; SHAP, SHapley Additive exPlanations; SMOTE–ENN, Synthetic Minority Over-sampling Technique combined with Edited Nearest Neighbors.


2.2. Study Population and Data Collection

This retrospective study included 156 breast cancer patients treated with VMAT (prescribed dose: 50 Gy in 25 fractions) at Kaohsiung Veterans General Hospital (Taiwan) between 2018 and 2023. The study protocol was approved by the Institutional Review Board (IRB No. KSVGH23-CT12-09, approval date: 5 December 2023), and all patient data were anonymized in accordance with data protection regulations.

Eligible patients were those with pathologically confirmed breast cancer who received postoperative radiotherapy and had complete clinical, dosimetric, and imaging data, including DVH information and planning CT images enabling extraction of skin and planning target volume (PTV) features. Patients with missing records (n = 6) or insufficient skin region of interest (ROI) volume for reliable radiomic extraction (n = 2) were excluded, yielding a total of 156 analyzable patients.

RD was evaluated by radiation oncologists via the Radiation Therapy Oncology Group (RTOG) criteria, which have been consistently applied in our institution as the standard for toxicity assessment. For binary classification, RD Grade ≥ 2 was defined as a clinically significant (positive) event. All RD grades were documented in the hospital information system to ensure consistency across the 5-year study period.

All patients underwent pretreatment CT simulation (GE Discovery CT590 RT, GE Healthcare, Chicago, IL, USA; slice thickness: 2.5 mm). Treatment plans were generated via Pinnacle3 v9.14 (Philips Radiation Oncology Systems, Fitchburg, WI, USA) or Eclipse v13 systems (Varian Medical Systems, Palo Alto, CA, USA) and delivered via Elekta Synergy or Versa HD linear accelerators (Elekta AB, Stockholm, Sweden). The RT-Structure, RTDOSE, and CT datasets were exported in DICOM format for subsequent feature extraction. Clinical variables (n = 8: age, BMI, laterality, surgery type, AJCC stage, supraclavicular fossa (SCF) irradiation, internal mammary node (IMN) irradiation, and chemotherapy status) and 12 DVH parameters (Skin5mm V5–V50Gy, PTV100%, and PTV105%) were analyzed. The 5 mm subcutaneous region (Skin5mm) served as the primary ROI for evaluating the associations between dose distribution and RD occurrence.

2.3. Imaging Feature Extraction

To perform radiomic analysis, two types of imaging features were extracted from pretreatment CT images: HCR and DLR.

2.4. Handcrafted Radiomics (HCR)

HCR features were extracted via PyRadiomics (version 3.1.0) [24] on the basis of the ROIs delineated in the treatment planning system, adhering to the Image Biomarker Standardization Initiative (IBSI) standards. Each ROI yielded 105 features, including the following:

Shape features: 14

First-order features: 18

Texture features: 73, comprising a gray level co-occurrence matrix (GLCM), gray level run length matrix (GLRLM), gray level size zone matrix (GLSZM), gray level dependence matrix (GLDM), and neighborhood gray tone difference matrix (NGTDM).

2.5. Deep Learning Radiomics (DLR)

DLR features were extracted with PyTorch (version 2.6.0, CUDA 11.8, Python 3.9) using a VGG16 convolutional neural network (CNN) as the base architecture. The model used ImageNet-pretrained weights for transfer learning, leveraging low-level features (edges, textures) learned from large-scale natural images to enhance medical CT image analysis. All 13 convolutional layers of VGG16 were retained and frozen to prevent overfitting. Features were extracted from each convolutional layer, whereas the original fully connected layers were not used. Global average pooling was applied to the convolutional features to generate patient-level deep features.

Model Architecture and Feature Extraction: The VGG16 model retains its original 13 convolutional layers (Conv2d_1 to Conv2d_13). Global average pooling (GAP) was applied after each layer to collapse the spatial dimensions, yielding one value per channel. The feature counts per layer were 64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, and 512, totaling 4224 deep features. All HCR and DLR features were z-score standardized after extraction.

Image Preprocessing and Input Design: The input images were original-resolution CT slices (512 × 512 pixels) with channel standardization to meet feature extraction requirements. Three-dimensional CT images were processed slice-by-slice, extracting multilevel deep representations. The feature vectors from all the slices of a patient were averaged to generate individual-level DLR feature representations.
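The pooling and averaging steps described above can be illustrated with a minimal, dependency-free sketch. It is not the study's PyTorch code: feature maps are represented as nested lists, and all function names are hypothetical. The channel list is the standard VGG16 convolutional layout, whose sum reproduces the 4224-dimensional feature vector.

```python
# Output channels of the 13 convolutional layers in the standard VGG16 layout.
VGG16_CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]


def global_average_pool(feature_map):
    """Collapse a (channels x height x width) nested list to one mean per channel."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def concatenate_layer_features(feature_maps):
    """Pool each layer's feature map and join the results into one slice-level vector."""
    vector = []
    for fmap in feature_maps:
        vector.extend(global_average_pool(fmap))
    return vector


def average_over_slices(slice_vectors):
    """Average per-slice feature vectors into a single patient-level vector."""
    n_slices = len(slice_vectors)
    return [sum(column) / n_slices for column in zip(*slice_vectors)]


# Total number of deep features when every conv layer is pooled and concatenated.
total_dims = sum(VGG16_CONV_CHANNELS)
```

In a real pipeline the nested lists would be tensors produced by the frozen network; the arithmetic is unchanged.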

Four Input Source Designs (Figure 2): To enhance the relevance of features to clinical RD risk, four input strategies were designed:

DLROriginal: Original CT images without ROI masking, which capture the complete chest wall and adjacent anatomical structures.

DLRSkin5mm: 5 mm subcutaneous region, focusing on superficial skin and subcutaneous tissues associated with RD.

DLRPTV100%: Planning target volume (PTV) with 100% prescription dose coverage, exploring associations between the primary tumor irradiation region and RD.

DLRV5Gy: Subcutaneous region within 5 mm receiving ≥ 5 Gy, excluding nonirradiated tissues to enhance relevance to clinical side effects.

ROI Definition and Dose-Guided Design: The dose-guided ROI (DLRV5Gy) was defined as the intersection between the subcutaneous layer within 5 mm of the skin surface and the region receiving ≥ 5 Gy. The 5 Gy threshold corresponds to 10% of the prescribed 50 Gy dose and was selected based on radiobiological and dosimetric evidence indicating that early radiation-induced vascular and inflammatory skin responses arise within this low-to-moderate dose range [25,26]. In accordance with AAPM TG-218 recommendations for patient-specific quality assurance, voxels receiving <10% of the prescription dose were excluded to avoid scatter-dominated noise. Preliminary sensitivity testing with candidate thresholds (3 Gy, 5 Gy, 10 Gy) further confirmed that 5 Gy achieved the optimal balance between irradiated-region coverage and feature stability.
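As an illustration of the ROI definition above, the following minimal sketch builds the dose-guided mask as a voxel-wise intersection on a single slice. It assumes the skin mask and the dose grid have already been resampled onto the CT grid. The function names and the zero fill value for masked-out voxels are hypothetical choices, not details reported in the study.

```python
def dose_guided_mask(skin_mask, dose_gy, threshold_gy=5.0):
    """Keep voxels that lie inside the 5 mm skin mask AND receive >= threshold_gy."""
    return [
        [bool(in_skin) and dose >= threshold_gy
         for in_skin, dose in zip(mask_row, dose_row)]
        for mask_row, dose_row in zip(skin_mask, dose_gy)
    ]


def apply_mask(ct_slice, mask, fill_value=0):
    """Blank out every CT voxel outside the mask (fill_value is an assumption)."""
    return [
        [value if keep else fill_value for value, keep in zip(ct_row, mask_row)]
        for ct_row, mask_row in zip(ct_slice, mask)
    ]


# Toy 2 x 3 slice: 1 marks voxels within 5 mm of the skin surface.
skin = [[1, 1, 0], [1, 0, 0]]
dose = [[6.0, 4.9, 10.0], [5.0, 50.0, 0.0]]
ct = [[100, 200, 300], [400, 500, 600]]

roi = dose_guided_mask(skin, dose)
masked_ct = apply_mask(ct, roi)
```

Only voxels satisfying both conditions survive: a voxel at 50 Gy outside the skin layer is excluded, as is a skin voxel at 4.9 Gy.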

Design Principles and advantages: This DLR strategy balances anatomical information (DLROriginal, DLRPTV100%) and dosimetric effects (DLRSkin5mm, DLRV5Gy). The DLRV5Gy input, which targets the “actual irradiated skin region,” minimizes noise from nonirradiated areas, improving RD prediction performance. The extracted deep features encompass low-level textures and edges as well as high-level semantic features learned by the multilayer CNN, complementing HCR feature limitations in capturing complex image patterns.

2.6. Feature Integration and Selection

To evaluate the contributions of different data modalities to RD prediction, 11 feature sets were designed, covering single sources (clinical, DVH, HCR, or DLR) and multimodal combinations (e.g., clinical + DVH + DLR). This approach not only assesses the predictive potential of radiomics but also clarifies the additive value of clinical and dosimetric data. The detailed feature set definitions and corresponding model performance metrics are provided in Supplementary Tables S1 and S2.

An early feature-level fusion strategy was employed to integrate multimodal data. The clinical, DVH, and radiomic features were concatenated before model training to form a unified feature matrix. All continuous variables were normalized via z-score standardization (StandardScaler), followed by ANOVA and Boruta–SHAP for feature selection. The training portion of the fused dataset was then balanced via SMOTE-ENN before the stacking ensemble model was trained. This early fusion approach enables cross-modal learning and ensures balanced feature contributions across modalities.

To mitigate potential bias due to the higher dimensionality of DLR than HCR, the two modalities underwent independent feature selection via an identical two-stage process (ANOVA → Boruta–SHAP). ANOVA (Analysis of Variance): Initial dimensionality reduction was performed via one-way ANOVA (p < 0.05) to filter HCR and DLR features significantly associated with RD, reducing redundant variables. Boruta–SHAP: Further refinement was conducted via random forest and SHapley Additive exPlanations (SHAP) values to select features with stable and critical contributions to model decisions. The Boruta–SHAP algorithm was implemented via a random forest–based model without depth limitations (max_depth = None), allowing flexible feature representation. SHAP values were computed via the TreeSHAP algorithm, which was optimized for tree-based learners to estimate feature contributions efficiently. The feature importance estimation was iterated for 200 rounds, with shadow features regenerated at each run to minimize randomness and ensure stable, reproducible identification of important features across folds.

This independent yet standardized design prevented the higher-dimensional DLR features from overwhelming the importance of HCR features and ensured balanced feature contributions in the subsequent ensemble learning framework. The two-stage process also mitigates overfitting risk and computational burden while enhancing model interpretability and robustness, and it favors feature sets that are both statistically significant and clinically relevant.

Additionally, a nested cross-validation (NCV) framework was implemented to ensure rigorous model performance evaluation. Specifically, a fivefold stratified outer loop was used to assess the generalization ability, whereas a fivefold inner loop combined with RandomizedSearchCV was applied for hyperparameter optimization. This nested design minimizes overfitting risk and provides a reliable estimation of generalization in the absence of an external validation cohort.

To mitigate overfitting further, all convolutional layers of the pretrained VGG16 were frozen to limit trainable parameters. Model training and hyperparameter optimization were conducted under the NCV framework to ensure unbiased generalization assessment. Furthermore, a hybrid SMOTE-ENN resampling technique was applied only to the training subsets to balance the class distribution while noisy or ambiguous samples were removed. The final stacking ensemble integrates logistic regression, random forest, and gradient boosting decision tree classifiers to increase model robustness and stability.

Furthermore, the Boruta–SHAP procedure inherently includes an internal stability validation mechanism, ensuring that only robust and consistently important features are retained for model construction. This design enhances both the statistical reliability and clinical interpretability of high-dimensional radiomic analysis.

Dataset partitioning was performed on a per-patient basis to prevent data leakage, ensuring that each patient’s CT, DVH, and clinical data appeared in only one analysis fold. Stratification by RD grade maintained balanced class distributions across the outer and inner folds of the NCV framework. Clinical characteristics were confirmed to be comparable between the training and testing subsets, with no significant differences observed except for age and internal mammary node (IMN) irradiation.
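A minimal sketch of per-patient, stratified fold assignment is given below. It is an illustration rather than the study's code: the function name is hypothetical, and the 52/104 class split is an invented example chosen only to approximate a one-third event rate in 156 patients.

```python
import random
from collections import defaultdict


def stratified_patient_folds(labels_by_patient, n_folds=5, seed=0):
    """Assign each patient ID to exactly one fold, keeping class ratios similar."""
    by_class = defaultdict(list)
    for patient_id, label in labels_by_patient.items():
        by_class[label].append(patient_id)

    rng = random.Random(seed)
    fold_of = {}
    for label in sorted(by_class):
        ids = sorted(by_class[label])
        rng.shuffle(ids)
        # Deal the patients of this class round-robin across the folds.
        for position, patient_id in enumerate(ids):
            fold_of[patient_id] = position % n_folds
    return fold_of


# Hypothetical cohort: 52 patients with Grade >= 2 RD (label 1), 104 without (label 0).
labels = {f"P{i:03d}": (1 if i < 52 else 0) for i in range(156)}
folds = stratified_patient_folds(labels)

# Number of positive patients that ended up in each of the five folds.
positives_per_fold = [
    sum(1 for pid, fold in folds.items() if fold == k and labels[pid] == 1)
    for k in range(5)
]
```

Because folds are keyed by patient ID, all data belonging to one patient travel together, which is the property that prevents leakage between training and testing subsets.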

2.7. Development of Ensemble AI Models

To increase the predictive performance and model robustness, a stacking ensemble (SE) approach was adopted, which integrates three complementary base classifiers—logistic regression (LR), random forest (RF), and gradient boosting decision tree (GBDT)—to construct the RD risk prediction model.

The architecture employed a two-layer design:

First layer (base classifiers): LR, RF, and GBDT were trained separately, and the prediction probabilities were output.

Second Layer (Metaclassifier): Logistic regression serves as the metaclassifier, integrating first-layer outputs to generate final predictions. This heterogeneous ensemble strategy enhances the generalization and classification stability.

The model training process included the following steps:

Cross-validation: Nested 5-fold cross-validation was performed, with stratified splits to maintain consistent class proportions across folds.

Class Imbalance Handling: The synthetic minority oversampling technique combined with edited nearest neighbors (SMOTE–ENN) was applied to the training portion of each cross-validation fold to balance the positive and negative class samples.

Feature Preprocessing: Numerical features are standardized, and categorical features are one-hot encoded.

Hyperparameter Optimization: Randomized search for optimal hyperparameter combinations, followed by retraining on the full training set.

Models were validated on the held-out test subsets to assess predictive performance on unseen patients. All steps were implemented in Python with the scikit-learn and imbalanced-learn libraries.

To further evaluate component contributions, ablation analysis was performed by independently training and testing each base model (LR, RF, GBDT) and their partial combinations compared with the full SE. This modular structure enabled clear attribution of performance gains to each submodel. Additionally, GBDT hyperparameter sensitivity was assessed to confirm the stability of tree-based learners, which functionally serves as an internal ablation verification.

Logistic regression was adopted as the meta-learner in the stacking ensemble to provide interpretable and stable probabilistic outputs. Unlike nonlinear alternatives such as XGBoost, logistic regression offers transparent weighting of base learner predictions (GBDT, RF, and LR) and minimizes overfitting risk in moderate-sized datasets. Because the base classifiers already capture nonlinear relationships, a linear meta-learner ensures robust aggregation and reliable calibration under nested cross-validation.
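The two-layer design can be sketched with scikit-learn's `StackingClassifier`. This is a simplified illustration under stated assumptions: the data are synthetic (not the study cohort), the hyperparameters are arbitrary, and the SMOTE–ENN resampling and randomized hyperparameter search described above are omitted for brevity.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    StackingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data: 156 samples with roughly one-third positives.
X, y = make_classification(
    n_samples=156, n_features=20, n_informative=5,
    weights=[0.67, 0.33], random_state=0,
)

# First layer: three heterogeneous base classifiers (settings are illustrative).
base_learners = [
    ("lr", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
    ("gbdt", GradientBoostingClassifier(n_estimators=50, random_state=0)),
]

# Second layer: logistic regression trained on out-of-fold base probabilities.
stack = StackingClassifier(
    estimators=base_learners,
    final_estimator=LogisticRegression(max_iter=1000),
    stack_method="predict_proba",
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
)

# Outer stratified loop: one AUC per held-out fold.
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)
auc_per_fold = cross_val_score(stack, X, y, cv=outer_cv, scoring="roc_auc")

# Refit on all data to inspect the meta-learner's weight for each base model.
stack.fit(X, y)
meta_weights = stack.final_estimator_.coef_
```

For a binary outcome the meta-learner receives one positive-class probability per base model, so `meta_weights` holds three coefficients, which is what makes the linear aggregation easy to inspect.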

2.8. Model Evaluation and Explainability Analysis

Multiple performance metrics were used to comprehensively evaluate model classification performance for Grade ≥ 2 RD prediction, with the AUC as the primary metric. Additional metrics included accuracy, recall, specificity, positive predictive value (PPV), negative predictive value (NPV), and F1 score, which were calculated on the basis of the confusion matrix for robust and comparable results. For each performance metric (AUC, accuracy, recall, specificity, PPV, NPV, and F1 score), 95% confidence intervals (CIs) were computed across the outer folds of the nested cross-validation to quantify variability and assess model stability. Between-model comparisons were performed via averaged metrics and 95% confidence interval (CI) analysis derived from the outer folds of the nested cross-validation. Consistent performance gains and nonoverlapping CIs were interpreted as statistically meaningful differences. Additionally, Cohen’s d effect size was introduced to quantify the magnitude of practical differences and to evaluate performance stability across folds.
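For reference, a minimal sketch of Cohen's d computed from per-fold scores follows. It assumes the common pooled-standard-deviation form, which the text does not specify, and the fold-level AUC values are invented for illustration only.

```python
from statistics import mean, variance


def cohens_d(scores_a, scores_b):
    """Cohen's d with a pooled sample standard deviation (assumed form)."""
    n_a, n_b = len(scores_a), len(scores_b)
    pooled_var = (
        (n_a - 1) * variance(scores_a) + (n_b - 1) * variance(scores_b)
    ) / (n_a + n_b - 2)
    return (mean(scores_a) - mean(scores_b)) / pooled_var ** 0.5


# Hypothetical outer-fold AUCs for two models (not results from the study).
auc_model_a = [0.78, 0.74, 0.76, 0.75, 0.77]
auc_model_b = [0.72, 0.70, 0.74, 0.71, 0.73]

effect_size = cohens_d(auc_model_a, auc_model_b)
```

With only five folds per model the estimate is noisy, so the effect size is best read alongside the confidence intervals rather than on its own.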

Hyperparameter sensitivity analysis was conducted using results obtained from each inner RandomizedSearchCV iteration within the nested cross-validation framework. The effects of key parameters (n_estimators, max_depth, and learning_rate) were evaluated by summarizing their relationships with the average F1 score to assess model stability and robustness.

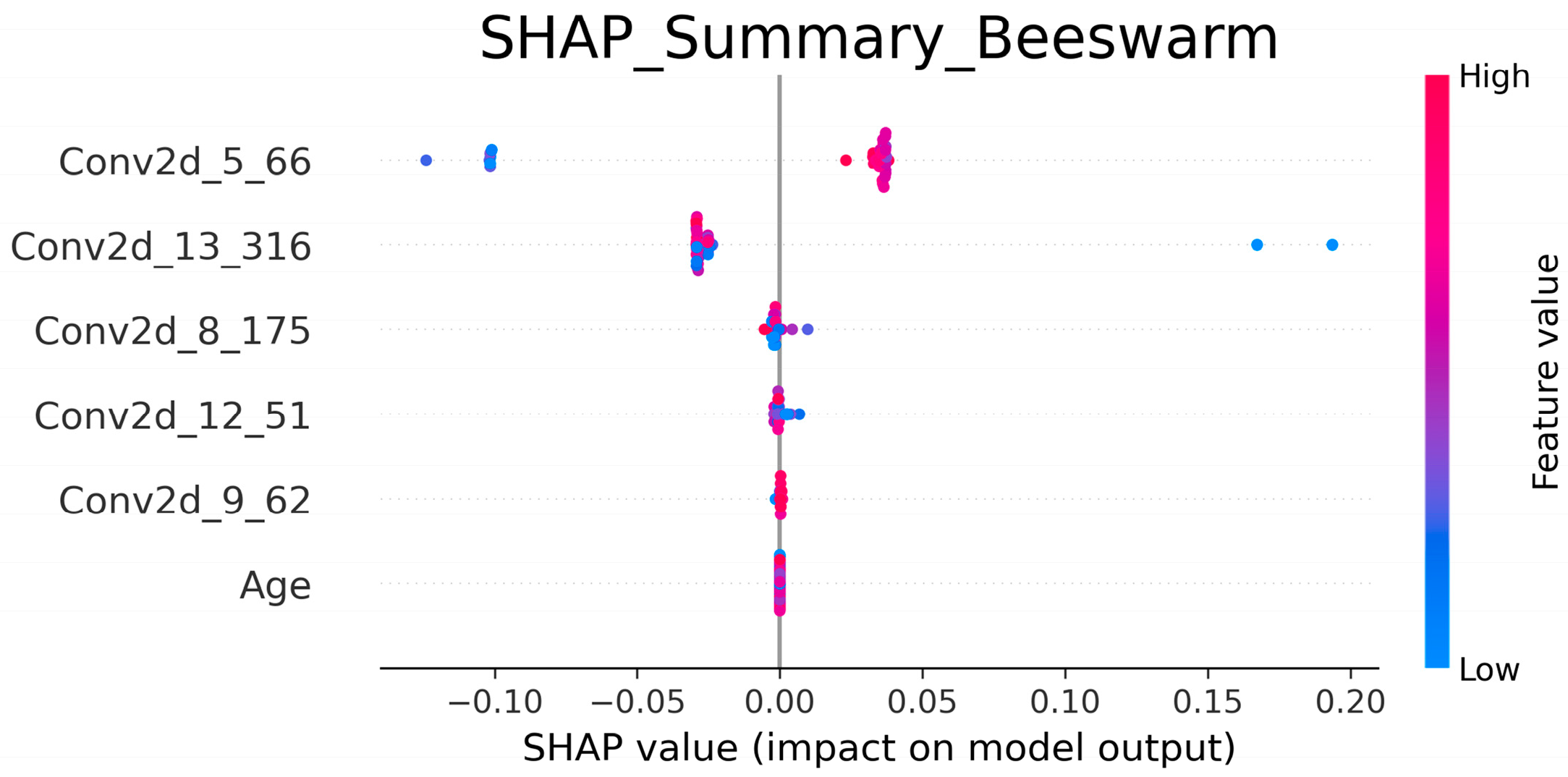

To enhance model transparency and interpretability, XAI techniques were employed:

SHAP analysis: SHapley Additive exPlanations (SHAP) quantifies the relative contribution of each feature to predictions, establishing feature importance rankings to clarify decision-making [27].

Grad-CAM analysis: Gradient-weighted class activation mapping (Grad-CAM) generates heatmaps to visualize the anatomical or dose-related regions focused on during model inference, validating biological plausibility [28].

By integrating multidimensional performance evaluation and XAI techniques, this study ensures the accuracy, robustness, and clinical validity of the ensemble model, providing a transparent and interpretable AI framework for RD risk prediction.

To assess clinical utility, decision curve analysis (DCA) was performed to estimate the net benefit of the ensemble model across a range of probability thresholds. Additionally, clinical utility metrics, including the PPV, NPV, false positive rate (FPR), and false negative rate (FNR), were calculated at both the default (0.5) and optimal thresholds derived from Youden’s index.
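The two threshold-based quantities mentioned above can be sketched as follows. Youden's index is J = sensitivity + specificity − 1, and the net benefit at threshold p_t follows the standard decision-curve definition TP/n − (FP/n) · p_t / (1 − p_t). The function names and the toy labels and probabilities are hypothetical, and both classes are assumed to be present.

```python
def confusion_counts(y_true, y_prob, threshold):
    """Return (TP, FP, TN, FN) when predicting positive for prob >= threshold."""
    tp = fp = tn = fn = 0
    for truth, prob in zip(y_true, y_prob):
        predicted = prob >= threshold
        if predicted and truth:
            tp += 1
        elif predicted and not truth:
            fp += 1
        elif truth:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def youden_threshold(y_true, y_prob):
    """Scan observed probabilities and return the cutoff maximizing Youden's J."""
    best_threshold, best_j = None, -1.0
    for threshold in sorted(set(y_prob)):
        tp, fp, tn, fn = confusion_counts(y_true, y_prob, threshold)
        j = tp / (tp + fn) + tn / (tn + fp) - 1
        if j > best_j:
            best_threshold, best_j = threshold, j
    return best_threshold, best_j


def net_benefit(y_true, y_prob, threshold):
    """Decision-curve net benefit of treating patients with prob >= threshold."""
    tp, fp, _, _ = confusion_counts(y_true, y_prob, threshold)
    n = len(y_true)
    return tp / n - fp / n * threshold / (1 - threshold)


# Toy example: six negatives followed by four positives.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_prob = [0.1, 0.2, 0.3, 0.35, 0.4, 0.6, 0.45, 0.55, 0.7, 0.9]

optimal_threshold, optimal_j = youden_threshold(y_true, y_prob)
nb_default = net_benefit(y_true, y_prob, 0.5)
```

A decision curve is obtained by evaluating `net_benefit` over a grid of thresholds and comparing it with the treat-all and treat-none strategies.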

Model performance was assessed via standard classification metrics derived from the confusion matrix: true positives (TPs), false positives (FPs), true negatives (TNs), and false negatives (FNs). The calculation formulas were defined as follows:
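A sketch of these definitions in their conventional confusion-matrix form, which the study is assumed to follow:

```latex
\begin{aligned}
\text{Accuracy}    &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Recall}      &= \frac{TP}{TP + FN}, \\
\text{Specificity} &= \frac{TN}{TN + FP}, &
\text{PPV}         &= \frac{TP}{TP + FP}, \\
\text{NPV}         &= \frac{TN}{TN + FN}, &
F_1                &= \frac{2 \cdot \text{PPV} \cdot \text{Recall}}{\text{PPV} + \text{Recall}}, \\
\text{FPR}         &= \frac{FP}{FP + TN} = 1 - \text{Specificity}, &
\text{FNR}         &= \frac{FN}{FN + TP} = 1 - \text{Recall}.
\end{aligned}
```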

These metrics were calculated for each outer fold of the nested cross-validation to obtain mean values and 95% confidence intervals.
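One way to summarize a metric across the five outer folds is a t-based interval, sketched below. The text does not state how the intervals were computed, so the use of Student's t with 4 degrees of freedom is an assumption, and the fold scores are invented for illustration.

```python
from statistics import mean, stdev

# Two-sided 95% critical value of Student's t for 4 degrees of freedom (5 folds).
T_CRIT_DF4 = 2.776


def mean_and_ci95(fold_scores, t_crit=T_CRIT_DF4):
    """Return (mean, lower, upper) of a t-based 95% CI over per-fold scores."""
    center = mean(fold_scores)
    half_width = t_crit * stdev(fold_scores) / len(fold_scores) ** 0.5
    return center, center - half_width, center + half_width


# Hypothetical outer-fold AUCs (not results from the study).
fold_auc = [0.78, 0.74, 0.76, 0.75, 0.77]
auc_mean, auc_low, auc_high = mean_and_ci95(fold_auc)
```

The critical value must be changed if the number of folds differs from five.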

4. Discussion

This study proposed a hybrid ensemble model that integrates DLR, HCR, clinical variables, and DVH parameters to predict the risk of RD in breast cancer patients undergoing VMAT. Models relying solely on clinical and DVH features achieved limited discrimination (AUC = 0.61), whereas incorporating HCR features provided a moderate improvement (AUC = 0.66). In contrast, DLR-based feature sets achieved consistently higher predictive performance (AUC = 0.70–0.74), indicating that deep learning–derived representations capture more discriminative imaging patterns. Notably, the dose-guided DLRV5Gy feature subset (subcutaneous 5 mm region receiving ≥ 5 Gy) demonstrated the highest single-modality performance (AUC = 0.72). Further integration of clinical, DVH, and DLRV5Gy features via a stacking ensemble (SE) model yielded the best overall results (AUC = 0.76, Recall = 0.70, F1 score = 0.60). These findings highlight the critical role of dose-guided deep features and multimodal fusion in improving clinical toxicity prediction. Importantly, the consistent superiority of ensemble models across all feature combinations underscores their robustness in mitigating overfitting and enhancing generalizability, which is consistent with previous findings by Wu et al. [20], who demonstrated that ensemble strategies outperform single classifiers in RD prediction tasks.

The ablation comparison (Table 3) revealed that while GBDT and RF individually achieved moderate accuracy (AUC ≈ 0.50–0.73), integration through the stacking ensemble consistently improved overall discrimination (AUC = 0.76, F1 = 0.60). These findings demonstrate that each component contributes complementary decision boundaries (GBDT capturing nonlinear dependencies, RF enhancing robustness, and LR improving calibration), thereby validating the ensemble’s synergistic effect.

The design of the input regions had a decisive effect on the model performance. DLROriginal and DLRSkin5mm achieved only modest AUCs of 0.74 and 0.72, with Grad-CAM visualizations revealing dispersed attention over irrelevant thoracic structures, which could introduce clinical misclassification risks. DLRPTV100% achieved comparable performance (AUC = 0.74) by focusing on the target volume, although residual deep-tissue signals decreased its specificity. While the AUCs of the DLR-based models were similar overall, DLRV5Gy achieved higher recall and exhibited more clinically consistent attention patterns. Grad-CAM attention for DLRV5Gy was concentrated on the chest wall and subcutaneous skin, regions clinically known to be at high risk for RD. Moreover, these heatmaps strongly overlapped with moderate- to high-dose regions (≥ 35 Gy), reinforcing the consistency between imaging-derived features and DVH analysis. Compared with prior studies that used fixed ROIs or whole-breast inputs [8], our dose–threshold ROI design (V5Gy) effectively guided the model toward clinically relevant structures, improving both accuracy and interpretability. This observation is consistent with that of Jeong et al. [19], who noted the limitations of conventional HCR-based ROI strategies, and Bagherpour et al. [29], who emphasized dose-driven region definitions for toxicity modeling.

Model interpretability further supported these conclusions. SHAP analysis revealed that mid-to-deep convolutional features (e.g., Conv2d_5_66, Conv2d_13_316) were the dominant drivers of prediction, whereas additional features such as Conv2d_8_175, Conv2d_12_51, and Conv2d_9_62 provided stable contributions, indicating that diverse deep-layer representations are crucial for capturing RD-relevant patterns. Clinical features such as age, although less impactful, were consistently retained by Boruta-SHAP, suggesting that they offer complementary signals to imaging features and enhance model stability. This synergy between radiomic features and structured variables echoes findings by Feng et al. [30] and Nie et al. [31], who reported that radiomic features dominate toxicity prediction but that nonimaging features can contribute supportive information. Together, the combination of Grad-CAM and SHAP provided transparency, confirmed the biological plausibility of the model, and strengthened clinicians’ trust in AI-assisted tools.

Grad-CAM Clinical Implications: Grad-CAM visualization provided further clinical insight into the spatial attention of different ROI designs. The DLROriginal and DLRSkin5mm models exhibited dispersed activations involving nonirradiated thoracic regions, reducing specificity, and DLRPTV100% focused on tumor boundaries and deeper tissues; in all three cases, correspondence to RD-prone regions remained limited. In contrast, DLRV5Gy consistently localized attention to the skin and subcutaneous layer from shallow to deeper convolutional layers, aligning closely with medium- to low-dose regions that are clinically recognized as RD high-risk areas. This highlights three key implications: (1) anatomical correspondence, as the skin and subcutaneous tissue represent the most common RD sites [32,33]; (2) dose dependency, as skin reactions depend on the cumulative radiation dose, consistent with the dose–response nature of RD [34,35]; and (3) explainability, as the consistency between AI-derived heatmaps and clinical RD patterns enhances model transparency and physician trust. Compared with Raghavan et al. [36], where conventional Grad-CAM produced broad and inconsistent breast activations, and Liang et al. [37], which primarily demonstrated dose-related correlations without anatomical specificity, the DLRV5Gy approach in the present study uniquely integrates anatomical and dosimetric guidance, providing superior clinical interpretability alongside predictive performance.

Compared with previous studies by Lee et al. (AUC = 0.83) [7] and Xiang et al. (AUC = 0.82) [8], the present study yielded a slightly lower cross-validated AUC (0.72) for the DLRV5Gy-only model. This difference likely reflects the use of a more rigorous nested cross-validation (NCV) framework, which provides a conservative and unbiased estimation of generalization performance. Nevertheless, the methodological rationale of the proposed DLRV5Gy approach remains distinct and robust.

Unlike earlier methods that extracted features from fixed or anatomically defined regions—such as the entire breast, superficial skin, or PTV-based ROIs—the DLRV5Gy design introduces a dose-guided ROI, defined as the intersection between the subcutaneous layer within 5 mm of the skin and the isodose volume receiving ≥ 5 Gy. This region better represents the clinically irradiated skin zone, reducing the dilution effect from non-irradiated tissue and improving the biological and dosimetric specificity of extracted features.

Furthermore, Grad-CAM visualizations confirmed that model attention was concentrated in dose-exposed skin areas consistent with radiation-induced toxicity patterns, reinforcing the interpretability and biological plausibility of the framework. When combined with clinical and DVH features in the stacking ensemble configuration, the model’s predictive performance improved further (AUC = 0.76), supporting the value of multimodal fusion in achieving stable, interpretable, and clinically relevant predictions of radiation dermatitis risk.

The inclusion of DCA and clinical utility metrics further underscores the practical relevance of the proposed model. The DCA results indicated consistent net benefit across a wide range of probability thresholds, suggesting that the stacking ensemble could assist clinicians in identifying patients at higher risk with fewer unnecessary interventions. Moreover, the improved PPV, NPV, and balanced false positive/negative rates confirm the model’s potential to support evidence-based decision-making in real-world radiotherapy management.
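For readers less familiar with decision curve analysis, the net benefit at a threshold probability p_t is conventionally computed as TP/N − (FP/N) × p_t/(1 − p_t). The sketch below implements that standard definition in plain Python; the function names and the ten-patient toy data are hypothetical and are not taken from this study.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit of treating patients whose predicted risk is >= threshold."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    # The odds of the threshold weight false positives against true positives.
    return tp / n - (fp / n) * threshold / (1.0 - threshold)


def net_benefit_treat_all(y_true, threshold):
    """Reference strategy: every patient is treated as high risk."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# Toy data for illustration only (1 = grade >= 2 RD).
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_prob = [0.9, 0.8, 0.2, 0.7, 0.1, 0.1, 0.2, 0.3, 0.1, 0.2]

for pt in (0.2, 0.3, 0.5):
    print(pt, net_benefit(y_true, y_prob, pt), net_benefit_treat_all(y_true, pt))
```

A model is useful at a given threshold when its net benefit exceeds both the treat-all reference and zero, the net benefit of treating no one.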

Several limitations warrant consideration. First, this was a single-center, retrospective study with 148 patients, which may limit its external generalizability. Although the homogeneity of imaging protocols and planning systems (Pinnacle and Eclipse) improved training consistency, such uniformity may also limit performance when the model is applied to heterogeneous external datasets. Second, RD grading was based on physician assessment according to the RTOG criteria, which may introduce subjective bias. Third, the high dimensionality of DLR features (4224 variables) relative to the cohort size poses overfitting risks; although our two-stage feature selection (ANOVA + Boruta-SHAP) reduces redundancy, residual overfitting cannot be excluded. Finally, class imbalance (33% RD incidence) may bias learning toward majority cases and therefore requires careful handling through resampling strategies.

Despite these limitations, this study has several distinctive strengths. To our knowledge, this is the first work to propose the DLRV5Gy ROI strategy, which directly embeds dose thresholds into deep feature learning, thereby combining anatomical and dosimetric information in a clinically meaningful way. Furthermore, the integration of SHAP and Grad-CAM provided dual interpretability—both feature-level importance and spatial relevance—ensuring that predictions align with clinical knowledge and dose–toxicity relationships.

The clinical implications of this work are considerable. The proposed model can be implemented during treatment planning to identify high-risk patients, enabling physicians to adjust skin dose constraints proactively. For those flagged as high risk, enhanced prophylactic skincare and closer monitoring could be applied to mitigate severe toxicity and reduce treatment interruptions. Embedding the model into radiotherapy planning systems could provide real-time decision support, aligning with the goals of precision oncology to improve the safety, adherence, and cost-effectiveness of breast cancer radiotherapy. Although formal clinician evaluation of the model’s predictions was not performed in this study, the explainable ensemble framework provides SHAP-based interpretability that aligns with clinical reasoning. Future studies will incorporate direct clinician assessment of the model outputs to confirm their clinical relevance and applicability in real-world decision-making.

The proposed ensemble model can be incorporated into existing radiotherapy workflows by integrating its predictive outputs into treatment planning and oncology information systems. Such integration could enable early identification of high-risk patients, assist in dose optimization, and inform personalized follow-up or prophylactic strategies. The explainable SHAP-based outputs further enhance clinical transparency, allowing oncologists to understand the influence of key features on risk prediction. Future implementation will require prospective validation and workflow integration within hospital information systems.

Potential biases inherent to the retrospective cohort were addressed through both data-level and model-level strategies. The synthetic minority oversampling technique combined with edited nearest neighbors (SMOTE-ENN) was applied only within the training folds to prevent data leakage, ensuring balanced class representation during model training. Moreover, the nested cross-validation framework reduced sampling bias and overfitting, providing a more reliable estimate of generalization performance despite the retrospective design.
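The principle of resampling only inside the training folds can be sketched as follows. This is an illustration rather than the study's code: plain random oversampling stands in for SMOTE-ENN, only a single cross-validation level is shown instead of the nested scheme, and the function names and toy labels are hypothetical.

```python
import random


def stratified_folds(labels, n_folds, seed=0):
    """Assign sample indices to folds while keeping class proportions similar."""
    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for cls in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(members)
        for position, index in enumerate(members):
            folds[position % n_folds].append(index)
    return folds


def oversample_minority(train_idx, labels, rng):
    """Random oversampling to equal class sizes (stand-in for SMOTE-ENN)."""
    by_class = {}
    for i in train_idx:
        by_class.setdefault(labels[i], []).append(i)
    target = max(len(members) for members in by_class.values())
    balanced = []
    for members in by_class.values():
        balanced.extend(members)
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


def leakage_safe_splits(labels, n_folds=5, seed=0):
    """Yield (resampled training indices, untouched test indices) per fold."""
    rng = random.Random(seed)
    folds = stratified_folds(labels, n_folds, seed)
    for k, test_idx in enumerate(folds):
        train_idx = [i for j, fold in enumerate(folds) if j != k for i in fold]
        # Resampling sees the training indices only; the test fold is never touched.
        yield oversample_minority(train_idx, labels, rng), test_idx


# Toy labels for illustration: 10 positive and 20 negative cases.
labels = [1] * 10 + [0] * 20
for train_idx, test_idx in leakage_safe_splits(labels):
    positives = sum(labels[i] for i in train_idx)
    print(len(train_idx), positives, len(test_idx))
```

Because the held-out fold keeps its original class distribution and shares no samples with the resampled training set, performance measured on it is not inflated by synthetic or duplicated cases.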

Future directions include multicenter and cross-platform validation to improve generalizability, prospective trials to evaluate clinical utility, and expansion to other cancer types, such as head and neck malignancies, where RD risk is also substantial. Additionally, the integration of other modalities (MRI, PET, surface imaging) and genomic data could further increase the predictive accuracy. Finally, coupling this framework with dose optimization algorithms could lead to the development of “RD-aware” planning systems, enabling real-time, patient-specific toxicity mitigation strategies. While this study employed ImageNet-pretrained VGG16 for deep feature extraction, future extensions will include domain-specific pretrained models (e.g., RadImageNet, Med3D) and CNNs trained from scratch to further evaluate the impact of different pretraining strategies on radiation dermatitis prediction.