Advances in Artificial Intelligence for Glioblastoma Radiotherapy Planning and Treatment

Simple Summary

Abstract

1. Essentials

- Deep learning-based auto segmentation models achieve high accuracy and substantially reduce inter-observer variability in glioblastoma radiotherapy planning

- Biologically informed mathematical modeling integrates tumor growth dynamics with imaging, enabling personalized radiotherapy dose mapping strategies

- Radiogenomic models integrating imaging and molecular data predict the status of key biomarkers, supporting non-invasive tumor subtyping and personalized therapy

- Multi-institutional datasets, model interpretability, and standardized validation protocols remain critical barriers to clinical adoption of artificial intelligence-guided radiotherapy

- Recent advances, including adaptive radiotherapy, multimodal integration, and foundation models, enable personalization and real-time adaptability in glioblastoma radiotherapy
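The second point above refers to mathematical models of tumor growth without naming one. As a minimal sketch, assuming the widely used Fisher–Kolmogorov reaction-diffusion form, du/dt = D d²u/dx² + ρu(1 − u), the following simulates a normalized tumor cell density on a one-dimensional grid. The function name, grid size, seed, and parameter values are illustrative assumptions and are not taken from the reviewed studies.

```python
def simulate_tumor_density(n_cells=101, dx=1.0, dt=0.1, steps=200,
                           diffusion=1.0, proliferation=0.1):
    """Explicit finite-difference sketch of du/dt = D*d2u/dx2 + rho*u*(1-u).

    u is a normalized tumor cell density in [0, 1]. All parameter values are
    placeholders chosen for numerical stability, not patient-derived.
    """
    # Stability condition of the explicit scheme for the diffusion term.
    if diffusion * dt / dx ** 2 > 0.5:
        raise ValueError("time step too large for the explicit scheme")
    u = [0.0] * n_cells
    u[n_cells // 2] = 0.5  # hypothetical seed at the grid center
    for _ in range(steps):
        nxt = u[:]
        for i in range(n_cells):
            left = u[i - 1] if i > 0 else u[i]             # zero-flux boundary
            right = u[i + 1] if i < n_cells - 1 else u[i]  # zero-flux boundary
            laplacian = (left - 2.0 * u[i] + right) / dx ** 2
            growth = proliferation * u[i] * (1.0 - u[i])   # logistic proliferation
            nxt[i] = u[i] + dt * (diffusion * laplacian + growth)
        u = nxt
    return u


density = simulate_tumor_density()
```

In a personalized setting, D and ρ would be estimated from a patient's imaging rather than fixed in advance. That calibration step is outside this sketch.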

2. Introduction

- Treatment preparation encompasses the delineation of target volumes and organs at risk, the adoption of dose prescriptions, and the determination of the treatment plan through simulation.

- Treatment delivery involves the fractionation of the simulated plan into multiple sessions and the systematic administration of radiation according to the established plan.

- Treatment adaptation entails the continuous monitoring of treatment execution and the modification of the plan when anatomical or physiological changes compromise the ability of the initial plan to satisfy predefined dosimetric and clinical constraints.
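The adaptation step described above amounts to re-checking the plan against its predefined constraints and flagging it for modification when any constraint fails. A minimal sketch of that check follows. The `DoseConstraint` class, the metric names, and every numeric value are hypothetical and chosen only for illustration. They are not clinical limits and do not come from the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DoseConstraint:
    name: str        # human-readable label
    metric: str      # key into the dose-metrics dictionary
    limit_gy: float  # threshold in Gy (hypothetical value)
    kind: str        # "min": metric must be >= limit; "max": metric must be <= limit


def violated_constraints(metrics, constraints):
    """Return the names of all constraints that the given dose metrics fail."""
    violated = []
    for c in constraints:
        value = metrics[c.metric]
        if c.kind == "min":
            satisfied = value >= c.limit_gy
        elif c.kind == "max":
            satisfied = value <= c.limit_gy
        else:
            raise ValueError(f"unknown constraint kind: {c.kind!r}")
        if not satisfied:
            violated.append(c.name)
    return violated


# Hypothetical constraints and dose metrics (illustrative numbers only).
constraints = [
    DoseConstraint("target coverage", "ptv_d95_gy", 57.0, "min"),
    DoseConstraint("organ-at-risk maximum", "oar_dmax_gy", 54.0, "max"),
]
planned = {"ptv_d95_gy": 58.2, "oar_dmax_gy": 52.0}
re_evaluated = {"ptv_d95_gy": 55.9, "oar_dmax_gy": 52.5}  # e.g. after anatomical change

needs_adaptation = bool(violated_constraints(re_evaluated, constraints))
```

Here the initial plan satisfies both constraints, while the re-evaluated dose fails target coverage, so the plan would be flagged for modification.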

3. Current Challenges in Glioblastoma Management and Treatment

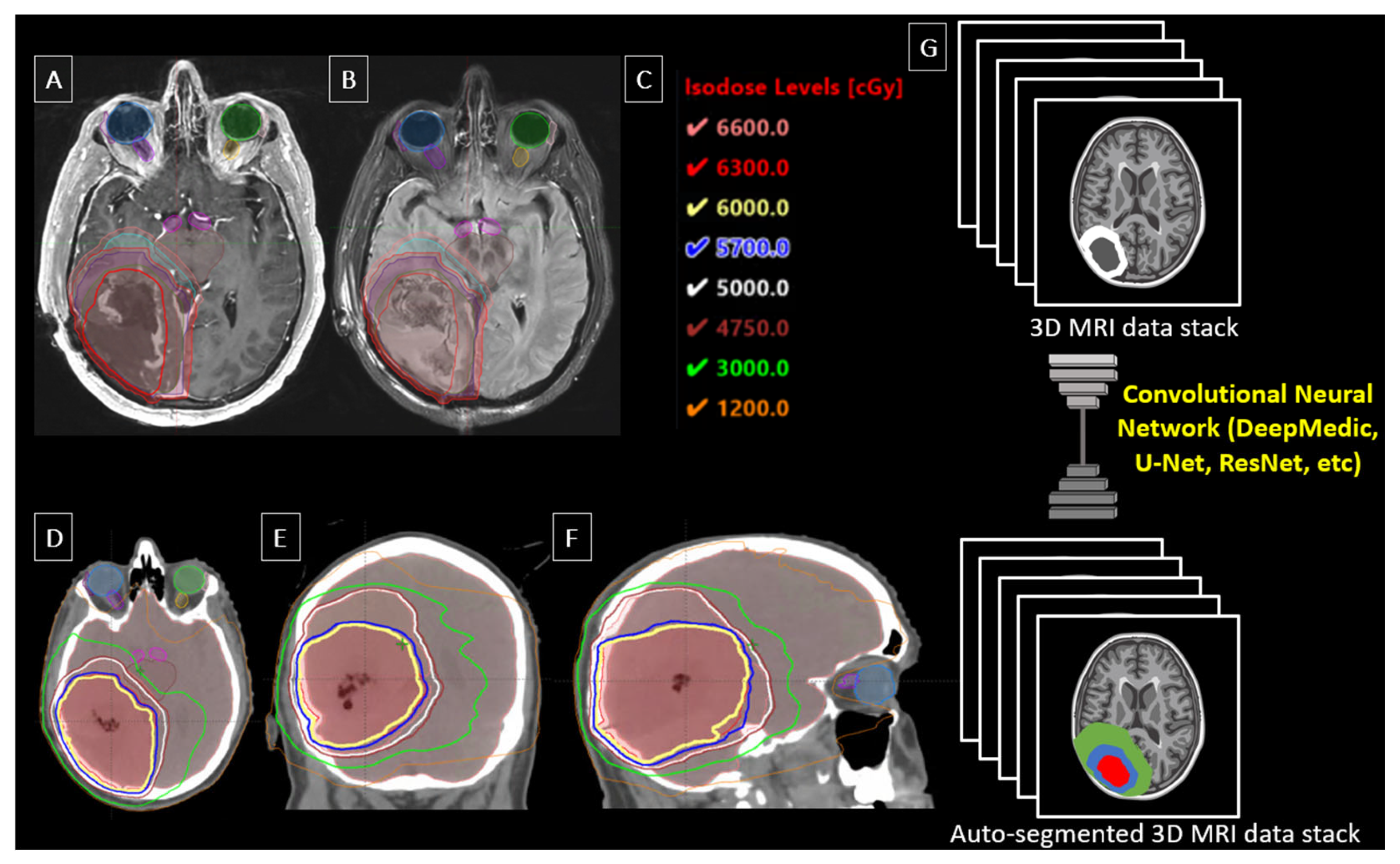

4. Tumor Delineation and Auto Segmentation

5. Personalized and Biologically Informed Tumor-Progression Radiotherapy

6. Modification of Treatment, Patient Response Prediction, and Triage During Therapy

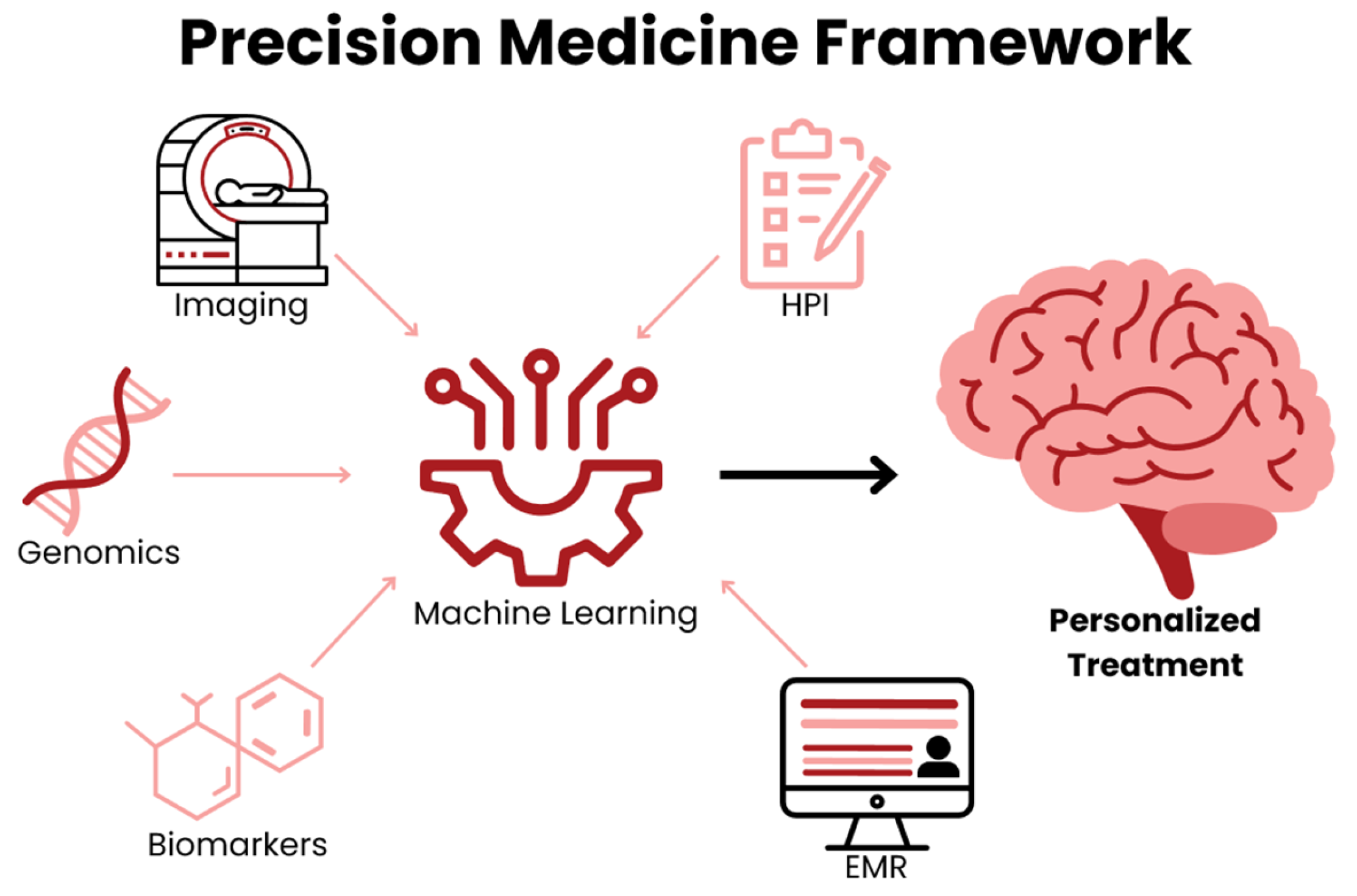

7. Radiogenomics and Non-Invasive Biomarker Integration

8. Interpretability and Explainability in Deep Learning Models

9. AI-Driven Solutions and Current Trends in Technology

10. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| RT | Radiotherapy |
| CTV | Clinical Target Volume |
| GTV | Gross Tumor Volume |
| PTV | Planning Target Volume |
| DL | Deep Learning |
| CNN | Convolutional Neural Network |
| ML | Machine Learning |
| DSC | Dice Similarity Coefficient |
| BRATS | Brain Tumor Segmentation |
| AUC | Area Under the Curve |

References

- Kanderi, T.; Munakomi, S.; Gupta, V. Glioblastoma Multiforme. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2025.

- Khagi, S.; Kotecha, R.; Gatson, N.T.N.; Jeyapalan, S.; Abdullah, H.I.; Avgeropoulos, N.G.; Batzianouli, E.T.; Giladi, M.; Lustgarten, L.; Goldlust, S.A. Recent advances in Tumor Treating Fields (TTFields) therapy for glioblastoma. Oncologist 2024, 30, oyae227.

- Stupp, R.; Taillibert, S.; Kanner, A.; Read, W.; Steinberg, D.; Lhermitte, B.; Toms, S.; Idbaih, A.; Ahluwalia, M.S.; Fink, K.; et al. Effect of Tumor-Treating Fields Plus Maintenance Temozolomide vs Maintenance Temozolomide Alone on Survival in Patients With Glioblastoma: A Randomized Clinical Trial. JAMA 2017, 318, 2306–2316.

- McKinnon, C.; Nandhabalan, M.; Murray, S.A.; Plaha, P. Glioblastoma: Clinical presentation, diagnosis, and management. BMJ 2021, 374, n1560.

- Brown, T.J.; Brennan, M.C.; Li, M.; Church, E.W.; Brandmeir, N.J.; Rakszawski, K.L.; Patel, A.S.; Rizk, E.B.; Suki, D.; Sawaya, R.; et al. Association of the Extent of Resection With Survival in Glioblastoma: A Systematic Review and Meta-analysis. JAMA Oncol. 2016, 2, 1460–1469.

- Weller, M.; van den Bent, M.; Preusser, M.; Le Rhun, E.; Tonn, J.C.; Minniti, G.; Bendszus, M.; Balana, C.; Chinot, O.; Dirven, L.; et al. EANO guidelines on the diagnosis and treatment of diffuse gliomas of adulthood. Nat. Rev. Clin. Oncol. 2021, 18, 170–186.

- Peeken, J.C.; Molina-Romero, M.; Diehl, C.; Menze, B.H.; Straube, C.; Meyer, B.; Zimmer, C.; Wiestler, B.; Combs, S.E. Deep learning derived tumor infiltration maps for personalized target definition in Glioblastoma radiotherapy. Radiother. Oncol. 2019, 138, 166–172.

- Rončević, A.; Koruga, N.; Soldo Koruga, A.; Rončević, R.; Rotim, T.; Šimundić, T.; Kretić, D.; Perić, M.; Turk, T.; Štimac, D. Personalized Treatment of Glioblastoma: Current State and Future Perspective. Biomedicines 2023, 11, 1579.

- Bibault, J.-E.; Giraud, P. Deep learning for automated segmentation in radiotherapy: A narrative review. Br. J. Radiol. 2024, 97, 13–20.

- Aman, R.A.; Pratama, M.G.; Satriawan, R.R.; Ardiansyah, I.R.; Suanjaya, I.K.A. Diagnostic and Prognostic Values of miRNAs in High-Grade Gliomas: A Systematic Review. F1000Research 2025, 13, 796.

- Erices, J.I.; Bizama, C.; Niechi, I.; Uribe, D.; Rosales, A.; Fabres, K.; Navarro-Martínez, G.; Torres, Á.; San Martín, R.; Roa, J.C.; et al. Glioblastoma Microenvironment and Invasiveness: New Insights and Therapeutic Targets. Int. J. Mol. Sci. 2023, 24, 7047.

- Fathi Kazerooni, A.; Nabil, M.; Zeinali Zadeh, M.; Firouznia, K.; Azmoudeh-Ardalan, F.; Frangi, A.F.; Davatzikos, C.; Saligheh Rad, H. Characterization of active and infiltrative tumorous subregions from normal tissue in brain gliomas using multiparametric MRI. J. Magn. Reson. Imaging 2018, 48, 938–950.

- Kruser, T.J.; Bosch, W.R.; Badiyan, S.N.; Bovi, J.A.; Ghia, A.J.; Kim, M.M.; Solanki, A.A.; Sachdev, S.; Tsien, C.; Wang, T.J.C.; et al. NRG brain tumor specialists consensus guidelines for glioblastoma contouring. J. Neurooncol. 2019, 143, 157–166.

- Poel, R.; Rüfenacht, E.; Ermis, E.; Müller, M.; Fix, M.K.; Aebersold, D.M.; Manser, P.; Reyes, M. Impact of random outliers in auto-segmented targets on radiotherapy treatment plans for glioblastoma. Radiat. Oncol. 2022, 17, 170.

- Sidibe, I.; Tensaouti, F.; Gilhodes, J.; Cabarrou, B.; Filleron, T.; Desmoulin, F.; Ken, S.; Noël, G.; Truc, G.; Sunyach, M.P.; et al. Pseudoprogression in GBM versus true progression in patients with glioblastoma: A multiapproach analysis. Radiother. Oncol. 2023, 181, 109486.

- Cramer, C.K.; Cummings, T.L.; Andrews, R.N.; Strowd, R.; Rapp, S.R.; Shaw, E.G.; Chan, M.D.; Lesser, G.J. Treatment of Radiation-Induced Cognitive Decline in Adult Brain Tumor Patients. Curr. Treat. Options Oncol. 2019, 20, 42.

- Kickingereder, P.; Isensee, F.; Tursunova, I.; Petersen, J.; Neuberger, U.; Bonekamp, D.; Brugnara, G.; Schell, M.; Kessler, T.; Foltyn, M.; et al. Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: A multicentre, retrospective study. Lancet Oncol. 2019, 20, 728–740.

- Mansoorian, S.; Schmidt, M.; Weissmann, T.; Delev, D.; Heiland, D.H.; Coras, R.; Stritzelberger, J.; Saake, M.; Höfler, D.; Schubert, P.; et al. Reirradiation for recurrent glioblastoma: The significance of the residual tumor volume. J. Neurooncol. 2025, 174, 243–252.

- Shaver, M.; Kohanteb, P.; Chiou, C.; Bardis, M.; Chantaduly, C.; Bota, D.; Filippi, C.; Weinberg, B.; Grinband, J.; Chow, D.; et al. Optimizing Neuro-Oncology Imaging: A Review of Deep Learning Approaches for Glioma Imaging. Cancers 2019, 11, 829.

- Doolan, P.J.; Charalambous, S.; Roussakis, Y.; Leczynski, A.; Peratikou, M.; Benjamin, M.; Ferentinos, K.; Strouthos, I.; Zamboglou, C.; Karagiannis, E. A clinical evaluation of the performance of five commercial artificial intelligence contouring systems for radiotherapy. Front. Oncol. 2023, 13, 1213068.

- Lu, S.-L.; Xiao, F.-R.; Cheng, J.C.-H.; Yang, W.-C.; Cheng, Y.-H.; Chang, Y.-C.; Lin, J.-Y.; Liang, C.-H.; Lu, J.-T.; Chen, Y.-F.; et al. Randomized multi-reader evaluation of automated detection and segmentation of brain tumors in stereotactic radiosurgery with deep neural networks. Neuro-Oncology 2021, 23, 1560–1568.

- Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans. Med. Imaging 2015, 34, 1993–2024.

- Marey, A.; Arjmand, P.; Alerab, A.D.S.; Eslami, M.J.; Saad, A.M.; Sanchez, N.; Umair, M. Explainability, transparency and black box challenges of AI in radiology: Impact on patient care in cardiovascular radiology. Egypt J. Radiol. Nucl. Med. 2024, 55, 183.

- Bakas, S.; Reyes, M.; Jakab, A.; Bauer, S.; Rempfler, M.; Crimi, A.; Shinohara, R.T.; Berger, C.; Ha, S.M.; Rozycki, M.; et al. Identifying the Best Machine Learning Algorithms for Brain Tumor Segmentation, Progression Assessment, and Overall Survival Prediction in the BRATS Challenge. arXiv 2018, arXiv:1811.02629.

- Dora, L.; Agrawal, S.; Panda, R.; Abraham, A. State-of-the-Art Methods for Brain Tissue Segmentation: A Review. IEEE Rev. Biomed. Eng. 2017, 10, 235–249.

- Işın, A.; Direkoğlu, C.; Şah, M. Review of MRI-based Brain Tumor Image Segmentation Using Deep Learning Methods. Procedia Comput. Sci. 2016, 102, 317–324.

- Baid, U.; Ghodasara, S.; Mohan, S.; Bilello, M.; Calabrese, E.; Colak, E.; Farahani, K.; Kalpathy-Cramer, J.; Kitamura, F.C.; Pati, S.; et al. The RSNA-ASNR-MICCAI-BraTS-2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv 2021, arXiv:2107.02314.

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

- Zeineldin, R.A.; Karar, M.E.; Burgert, O.; Mathis-Ullrich, F. Multimodal CNN Networks for Brain Tumor Segmentation in MRI: A BraTS 2022 Challenge Solution. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Bakas, S., Crimi, A., Baid, U., Malec, S., Pytlarz, M., Baheti, B., Zenk, M., Dorent, R., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 127–137.

- Ferreira, A.; Solak, N.; Li, J.; Dammann, P.; Kleesiek, J.; Alves, V.; Egger, J. Enhanced Data Augmentation Using Synthetic Data for Brain Tumour Segmentation. In Brain Tumor Segmentation, and Cross-Modality Domain Adaptation for Medical Image Segmentation; Baid, U., Dorent, R., Malec, S., Pytlarz, M., Su, R., Wijethilake, N., Bakas, S., Crimi, A., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 79–93.

- Moradi, N.; Ferreira, A.; Puladi, B.; Kleesiek, J.; Fatemizadeh, E.; Luijten, G.; Alves, V.; Egger, J. Comparative Analysis of nnUNet and MedNeXt for Head and Neck Tumor Segmentation in MRI-Guided Radiotherapy. In Head and Neck Tumor Segmentation for MR-Guided Applications; Wahid, K.A., Dede, C., Naser, M.A., Fuller, C.D., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 136–153.

- Xue, J.; Wang, B.; Ming, Y.; Liu, X.; Jiang, Z.; Wang, C.; Liu, X.; Chen, L.; Qu, J.; Xu, S.; et al. Deep learning–based detection and segmentation-assisted management of brain metastases. Neuro-Oncology 2020, 22, 505–514.

- Naceur, M.B.; Saouli, R.; Akil, M.; Kachouri, R. Fully Automatic Brain Tumor Segmentation using End-To-End Incremental Deep Neural Networks in MRI images. Comput. Methods Programs Biomed. 2018, 166, 39–49.

- Chang, K.; Beers, A.L.; Bai, H.X.; Brown, J.M.; Ly, K.I.; Li, X.; Senders, J.T.; Kavouridis, V.K.; Boaro, A.; Su, C.; et al. Automatic assessment of glioma burden: A deep learning algorithm for fully automated volumetric and bidimensional measurement. Neuro-Oncology 2019, 21, 1412–1422.

- Ranjbarzadeh, R.; Bagherian Kasgari, A.; Jafarzadeh Ghoushchi, S.; Anari, S.; Naseri, M.; Bendechache, M. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci. Rep. 2021, 11, 10930.

- Deng, W.; Shi, Q.; Luo, K.; Yang, Y.; Ning, N. Brain Tumor Segmentation Based on Improved Convolutional Neural Network in Combination with Non-quantifiable Local Texture Feature. J. Med. Syst. 2019, 43, 152.

- Zhuge, Y.; Krauze, A.V.; Ning, H.; Cheng, J.Y.; Arora, B.C.; Camphausen, K.; Miller, R.W. Brain tumor segmentation using holistically nested neural networks in MRI images. Med. Phys. 2017, 44, 5234–5243.

- Isensee, F.; Kickingereder, P.; Wick, W.; Bendszus, M.; Maier-Hein, K.H. Brain Tumor Segmentation and Radiomics Survival Prediction: Contribution to the BRATS 2017 Challenge. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Crimi, A., Bakas, S., Kuijf, H., Menze, B., Reyes, M., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 287–297.

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain Tumor Segmentation Using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251.

- Havaei, M.; Davy, A.; Warde-Farley, D.; Biard, A.; Courville, A.; Bengio, Y.; Pal, C.; Jodoin, P.-M.; Larochelle, H. Brain tumor segmentation with Deep Neural Networks. Med. Image Anal. 2017, 35, 18–31.

- Soltaninejad, M.; Zhang, L.; Lambrou, T.; Allinson, N.; Ye, X. Multimodal MRI brain tumor segmentation using random forests with features learned from fully convolutional neural network. arXiv 2017, arXiv:1704.08134.

- Hussain, S.; Anwar, S.M.; Majid, M. Brain tumor segmentation using cascaded deep convolutional neural network. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Republic of Korea, 11–15 July 2017; pp. 1998–2001.

- Tian, S.; Liu, Y.; Mao, X.; Xu, X.; He, S.; Jia, L.; Zhang, W.; Peng, P.; Wang, J. A multicenter study on deep learning for glioblastoma auto-segmentation with prior knowledge in multimodal imaging. Cancer Sci. 2024, 115, 3415–3425.

- Unkelbach, J.; Bortfeld, T.; Cardenas, C.E.; Gregoire, V.; Hager, W.; Heijmen, B.; Jeraj, R.; Korreman, S.S.; Ludwig, R.; Pouymayou, B.; et al. The role of computational methods for automating and improving clinical target volume definition. Radiother. Oncol. 2020, 153, 15–25.

- Metz, M.-C.; Ezhov, I.; Peeken, J.C.; Buchner, J.A.; Lipkova, J.; Kofler, F.; Waldmannstetter, D.; Delbridge, C.; Diehl, C.; Bernhardt, D.; et al. Toward image-based personalization of glioblastoma therapy: A clinical and biological validation study of a novel, deep learning-driven tumor growth model. Neurooncol. Adv. 2024, 6, vdad171.

- Dextraze, K.; Saha, A.; Kim, D.; Narang, S.; Lehrer, M.; Rao, A.; Narang, S.; Rao, D.; Ahmed, S.; Madhugiri, V.; et al. Spatial habitats from multiparametric MR imaging are associated with signaling pathway activities and survival in glioblastoma. Oncotarget 2017, 8, 112992–113001.

- Hanahan, D. Hallmarks of Cancer: New Dimensions. Cancer Discov. 2022, 12, 31–46.

- Lipkova, J.; Angelikopoulos, P.; Wu, S.; Alberts, E.; Wiestler, B.; Diehl, C.; Preibisch, C.; Pyka, T.; Combs, S.E.; Hadjidoukas, P.; et al. Personalized Radiotherapy Design for Glioblastoma: Integrating Mathematical Tumor Models, Multimodal Scans, and Bayesian Inference. IEEE Trans. Med. Imaging 2019, 38, 1875–1884.

- Hönikl, L.S.; Delbridge, C.; Yakushev, I.; Negwer, C.; Bernhardt, D.; Schmidt-Graf, F.; Meyer, B.; Wagner, A. Assessing the role of FET-PET imaging in glioblastoma recurrence: A retrospective analysis of diagnostic accuracy. Brain Spine 2025, 5, 105599.

- Yang, Z.; Zamarud, A.; Marianayagam, N.J.; Park, D.J.; Yener, U.; Soltys, S.G.; Chang, S.D.; Meola, A.; Jiang, H.; Lu, W.; et al. Deep learning-based overall survival prediction in patients with glioblastoma: An automatic end-to-end workflow using pre-resection basic structural multiparametric MRIs. Comput. Biol. Med. 2025, 185, 109436.

- Kwak, S.; Akbari, H.; Garcia, J.A.; Mohan, S.; Dicker, Y.; Sako, C.; Matsumoto, Y.; Nasrallah, M.P.; Shalaby, M.; O’Rourke, D.M.; et al. Predicting peritumoral glioblastoma infiltration and subsequent recurrence using deep-learning–based analysis of multi-parametric magnetic resonance imaging. J. Med. Imaging 2024, 11, 054001.

- Hong, J.C.; Eclov, N.C.W.; Dalal, N.H.; Thomas, S.M.; Stephens, S.J.; Malicki, M.; Shields, S.; Cobb, A.; Mowery, Y.M.; Niedzwiecki, D.; et al. System for High-Intensity Evaluation During Radiation Therapy (SHIELD-RT): A Prospective Randomized Study of Machine Learning–Directed Clinical Evaluations During Radiation and Chemoradiation. J. Clin. Oncol. 2020, 38, 3652–3661.

- Gutsche, R.; Lohmann, P.; Hoevels, M.; Ruess, D.; Galldiks, N.; Visser-Vandewalle, V.; Treuer, H.; Ruge, M.; Kocher, M. Radiomics outperforms semantic features for prediction of response to stereotactic radiosurgery in brain metastases. Radiother. Oncol. 2022, 166, 37–43.

- Tsang, D.S.; Tsui, G.; McIntosh, C.; Purdie, T.; Bauman, G.; Dama, H.; Laperriere, N.; Millar, B.-A.; Shultz, D.B.; Ahmed, S.; et al. A pilot study of machine-learning based automated planning for primary brain tumours. Radiat. Oncol. 2022, 17, 3.

- Di Nunno, V.; Fordellone, M.; Minniti, G.; Asioli, S.; Conti, A.; Mazzatenta, D.; Balestrini, D.; Chiodini, P.; Agati, R.; Tonon, C.; et al. Machine learning in neuro-oncology: Toward novel development fields. J. Neurooncol. 2022, 159, 333–346.

- Chang, K.; Bai, H.X.; Zhou, H.; Su, C.; Bi, W.L.; Agbodza, E.; Kavouridis, V.K.; Senders, J.T.; Boaro, A.; Beers, A.; et al. Residual Convolutional Neural Network for the Determination of IDH Status in Low- and High-Grade Gliomas from MR Imaging. Clin. Cancer Res. 2018, 24, 1073–1081.

- Wong, Q.H.-W.; Li, K.K.-W.; Wang, W.-W.; Malta, T.M.; Noushmehr, H.; Grabovska, Y.; Jones, C.; Chan, A.K.-Y.; Kwan, J.S.-H.; Huang, Q.J.-Q.; et al. Molecular landscape of IDH-mutant primary astrocytoma Grade IV/glioblastomas. Mod. Pathol. 2021, 34, 1245–1260.

- Ding, J.; Zhao, R.; Qiu, Q.; Chen, J.; Duan, J.; Cao, X.; Yin, Y. Developing and validating a deep learning and radiomic model for glioma grading using multiplanar reconstructed magnetic resonance contrast-enhanced T1-weighted imaging: A robust, multi-institutional study. Quant. Imaging Med. Surg. 2022, 12, 1517–1528.

- Zhang, X.; Yan, L.-F.; Hu, Y.-C.; Li, G.; Yang, Y.; Han, Y.; Sun, Y.-Z.; Liu, Z.-C.; Tian, Q.; Han, Z.-Y.; et al. Optimizing a machine learning based glioma grading system using multi-parametric MRI histogram and texture features. Oncotarget 2017, 8, 47816–47830.

- Fathi Kazerooni, A.; Akbari, H.; Hu, X.; Bommineni, V.; Grigoriadis, D.; Toorens, E.; Sako, C.; Mamourian, E.; Ballinger, D.; Sussman, R.; et al. The radiogenomic and spatiogenomic landscapes of glioblastoma and their relationship to oncogenic drivers. Commun. Med. 2025, 5, 55.

- Lu, J.; Zhang, Z.-Y.; Zhong, S.; Deng, D.; Yang, W.-Z.; Wu, S.-W.; Cheng, Y.; Bai, Y.; Mou, Y.-G. Evaluating the Diagnostic and Prognostic Value of Peripheral Immune Markers in Glioma Patients: A Prospective Multi-Institutional Cohort Study of 1282 Patients. J. Inflamm. Res. 2025, 18, 7477–7492.

- Hasani, F.; Masrour, M.; Jazi, K.; Ahmadi, P.; Hosseini, S.S.; Lu, V.M.; Alborzi, A. MicroRNA as a potential diagnostic and prognostic biomarker in brain gliomas: A systematic review and meta-analysis. Front. Neurol. 2024, 15, 1357321.

- Lakomy, R.; Sana, J.; Hankeova, S.; Fadrus, P.; Kren, L.; Lzicarova, E.; Svoboda, M.; Dolezelova, H.; Smrcka, M.; Vyzula, R.; et al. MiR-195, miR-196b, miR-181c, miR-21 expression levels and O-6-methylguanine-DNA methyltransferase methylation status are associated with clinical outcome in glioblastoma patients. Cancer Sci. 2011, 102, 2186–2190.

- Lan, F.; Yue, X.; Xia, T. Exosomal microRNA-210 is a potentially non-invasive biomarker for the diagnosis and prognosis of glioma. Oncol. Lett. 2020, 19, 1967–1974.

- Zhou, Q.; Liu, J.; Quan, J.; Liu, W.; Tan, H.; Li, W. MicroRNAs as potential biomarkers for the diagnosis of glioma: A systematic review and meta-analysis. Cancer Sci. 2018, 109, 2651–2659.

- Velu, U.; Singh, A.; Nittala, R.; Yang, J.; Vijayakumar, S.; Cherukuri, C.; Vance, G.R.; Salvemini, J.D.; Hathaway, B.F.; Grady, C.; et al. Precision Population Cancer Medicine in Brain Tumors: A Potential Roadmap to Improve Outcomes and Strategize the Steps to Bring Interdisciplinary Interventions. Cureus 2024, 16, e71305.

- Silva, P.J.; Silva, P.A.; Ramos, K.S. Genomic and Health Data as Fuel to Advance a Health Data Economy for Artificial Intelligence. BioMed Res. Int. 2025, 2025, 6565955.

- Silva, P.J.; Rahimzadeh, V.; Powell, R.; Husain, J.; Grossman, S.; Hansen, A.; Hinkel, J.; Rosengarten, R.; Ory, M.G.; Ramos, K.S. Health equity innovation in precision medicine: Data stewardship and agency to expand representation in clinicogenomics. Health Res. Policy Syst. 2024, 22, 170.

- Silva, P.; Janjan, N.; Ramos, K.S.; Udeani, G.; Zhong, L.; Ory, M.G.; Smith, M.L. External control arms: COVID-19 reveals the merits of using real world evidence in real-time for clinical and public health investigations. Front. Med. 2023, 10, 1198088.

- d’Este, S.H.; Nielsen, M.B.; Hansen, A.E. Visualizing Glioma Infiltration by the Combination of Multimodality Imaging and Artificial Intelligence, a Systematic Review of the Literature. Diagnostics 2021, 11, 592.

- Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and explainability of artificial intelligence in medicine. WIREs Data Min. Knowl. 2019, 9, e1312.

- AlBadawy, E.A.; Saha, A.; Mazurowski, M.A. Deep learning for segmentation of brain tumors: Impact of cross-institutional training and testing. Med. Phys. 2018, 45, 1150–1158.

- Bologna, G.; Hayashi, Y. Characterization of Symbolic Rules Embedded in Deep DIMLP Networks: A Challenge to Transparency of Deep Learning. J. Artif. Intell. Soft Comput. Res. 2017, 7, 265–286.

- Cui, S.; Traverso, A.; Niraula, D.; Zou, J.; Luo, Y.; Owen, D.; El Naqa, I.; Wei, L. Interpretable artificial intelligence in radiology and radiation oncology. Br. J. Radiol. 2023, 96, 20230142.

- Sangwan, H. Quantifying Explainable Ai Methods in Medical Diagnosis: A Study in Skin Cancer. medRxiv 2024.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Lundberg, S.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. arXiv 2017, arXiv:1705.07874.

- Sahlsten, J.; Jaskari, J.; Wahid, K.A.; Ahmed, S.; Glerean, E.; He, R.; Kann, B.H.; Mäkitie, A.; Fuller, C.D.; Naser, M.A.; et al. Application of simultaneous uncertainty quantification and segmentation for oropharyngeal cancer use-case with Bayesian deep learning. Commun. Med. 2024, 4, 110.

- Alruily, M.; Mahmoud, A.A.; Allahem, H.; Mostafa, A.M.; Shabana, H.; Ezz, M. Enhancing Breast Cancer Detection in Ultrasound Images: An Innovative Approach Using Progressive Fine-Tuning of Vision Transformer Models. Int. J. Intell. Syst. 2024, 2024, 6528752.

- Marin, T.; Zhuo, Y.; Lahoud, R.M.; Tian, F.; Ma, X.; Xing, F.; Moteabbed, M.; Liu, X.; Grogg, K.; Shusharina, N.; et al. Deep learning-based GTV contouring modeling inter- and intra-observer variability in sarcomas. Radiother. Oncol. 2022, 167, 269–276.

- Abbasi, S.; Lan, H.; Choupan, J.; Sheikh-Bahaei, N.; Pandey, G.; Varghese, B. Deep learning for the harmonization of structural MRI scans: A survey. Biomed. Eng. Online 2024, 23, 90.

- Ensenyat-Mendez, M.; Íñiguez-Muñoz, S.; Sesé, B.; Marzese, D.M. iGlioSub: An integrative transcriptomic and epigenomic classifier for glioblastoma molecular subtypes. BioData Min. 2021, 14, 42.

- Dona Lemus, O.M.; Cao, M.; Cai, B.; Cummings, M.; Zheng, D. Adaptive Radiotherapy: Next-Generation Radiotherapy. Cancers 2024, 16, 1206.

- Weykamp, F.; Meixner, E.; Arians, N.; Hoegen-Saßmannshausen, P.; Kim, J.-Y.; Tawk, B.; Knoll, M.; Huber, P.; König, L.; Sander, A.; et al. Daily AI-Based Treatment Adaptation under Weekly Offline MR Guidance in Chemoradiotherapy for Cervical Cancer 1: The AIM-C1 Trial. J. Clin. Med. 2024, 13, 957.

- Vuong, W.; Gupta, S.; Weight, C.; Almassi, N.; Nikolaev, A.; Tendulkar, R.D.; Scott, J.G.; Chan, T.A.; Mian, O.Y. Trial in Progress: Adaptive RADiation Therapy with Concurrent Sacituzumab Govitecan (SG) for Bladder Preservation in Patients with MIBC (RAD-SG). Int. J. Radiat. Oncol. 2023, 117, e447–e448.

- Guevara, B.; Cullison, K.; Maziero, D.; Azzam, G.A.; De La Fuente, M.I.; Brown, K.; Valderrama, A.; Meshman, J.; Breto, A.; Ford, J.C.; et al. Simulated Adaptive Radiotherapy for Shrinking Glioblastoma Resection Cavities on a Hybrid MRI-Linear Accelerator. Cancers 2023, 15, 1555.

- Paschali, M.; Chen, Z.; Blankemeier, L.; Varma, M.; Youssef, A.; Bluethgen, C.; Langlotz, C.; Gatidis, S.; Chaudhari, A. Foundation Models in Radiology: What, How, Why, and Why Not. Radiology 2025, 314, e240597.

- Putz, F.; Beirami, S.; Schmidt, M.A.; May, M.S.; Grigo, J.; Weissmann, T.; Schubert, P.; Höfler, D.; Gomaa, A.; Hassen, B.T.; et al. The Segment Anything foundation model achieves favorable brain tumor auto-segmentation accuracy in MRI to support radiotherapy treatment planning. Strahlenther. Onkol. 2025, 201, 255–265.

- Kebaili, A.; Lapuyade-Lahorgue, J.; Vera, P.; Ruan, S. Multi-modal MRI synthesis with conditional latent diffusion models for data augmentation in tumor segmentation. Comput. Med. Imaging Graph. 2025, 123, 102532.

- Baroudi, H.; Brock, K.K.; Cao, W.; Chen, X.; Chung, C.; Court, L.E.; El Basha, M.D.; Farhat, M.; Gay, S.; Gronberg, M.P.; et al. Automated Contouring and Planning in Radiation Therapy: What Is ‘Clinically Acceptable’? Diagnostics 2023, 13, 667.

| Author | Year | Study/Model | Input Data | Patients/Plans | Performance (DSC) | Notable Features |
|---|---|---|---|---|---|---|
| Peeken et al. [7] | 2019 | CNN | DTI; FLAIR | 33 | NA | Microscopic infiltration mapping aligned with clinical RT guidelines |
| Lu et al. [21] | 2021 | 3D U-Net + DeepMedic Ensemble | CECT; T1C | 1288 | 0.86–0.90 | Real-time clinical SRS workflow integration |
| Xue et al. [32] | 2020 | Cascaded 3D FCN | 3D-T1-MPRAGE | 1652 | 0.85 | High-volume validation |
| Naceur et al. [33] | 2018 | Incremental XCNet | T1C; T1; T2; FLAIR | 210 | 0.88 | Novel parallel CNNs + ELOBA_λ training; 20.87 s/segmentation |
| Kickingereder et al. [17] | 2019 | ANN | T1C; T2 | 455 | 0.89–0.93 | Segmentation output < 1 min; longitudinal validation |
| Chang et al. [34] | 2019 | AutoRANO (U-Net) | T1C; FLAIR | 843 | 0.94 | Outputs RANO metrics for volumetric tracking |
| Ranjbarzadeh et al. [35] | 2021 | Cascaded CNN w/Attention | T1; T1C; T2; FLAIR | 285 | 0.92, 0.87, 0.91 * | Reduced training time by 80% |
| Deng et al. [36] | 2019 | FCNN + DMDF | T1; T1C; T2; FLAIR | 100 | 0.91 | Segmentation output < 1 s |
| Zhuge et al. [37] | 2017 | HNN | T1; T1C; T2; FLAIR | 10 | 0.83 | Weighted-fusion; 10 h training |
| Isensee et al. [38] | 2018 | Modified U-Net | T1C; T1; T2; FLAIR | 220 | 0.90, 0.80, 0.73 * | Top performer that year |
| Pereira et al. [39] | 2016 | CNN (3 × 3 kernel) | T1C; T1; T2; FLAIR | 65 | 0.88, 0.83, 0.77 * | BRATS-2013 top performer & BRATS-2015 2nd overall |
| Havaei et al. [40] | 2017 | Cascaded CNN (2nd DNN input) | T1C; T1; T2; FLAIR | 65 | NA | Reduced segmentation time by 30-fold |
| Soltaninejad et al. [41] | 2017 | RF + FCN | T1C; T1; T2; FLAIR | 65 | 0.88, 0.80, 0.73 * | RF & FCN ensemble |
| Hussain et al. [42] | 2018 | Deep CNN w/dual-patch input | T1C; T1; T2; FLAIR | 274 | 0.87, 0.89, 0.92 * | Novel batch normalization |
| Tian et al. [43] | 2024 | 3D U-Net | NCCT; T1C; FLAIR | 148 | 0.92, 0.87, 0.91 * | Novel two-stage 3D U-Net |
| Moradi et al. [31] | 2025 | nnU-Net ensemble | T2; FLAIR | 150 | 0.83 | Synthetic training data; BRATS-2023 and 2024 top performer |
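The performance column above reports the Dice Similarity Coefficient, DSC = 2|A ∩ B| / (|A| + |B|), where A is the predicted mask and B is the reference mask. A minimal sketch of the computation on toy 2D binary masks follows. The function name and the masks are illustrative assumptions, and returning 1.0 for two empty masks is one common convention rather than a rule stated in the source.

```python
from itertools import chain


def dice_similarity(pred, truth):
    """Return DSC = 2|A ∩ B| / (|A| + |B|) for two binary masks given as 2D lists."""
    p = [bool(v) for v in chain.from_iterable(pred)]
    t = [bool(v) for v in chain.from_iterable(truth)]
    if len(p) != len(t):
        raise ValueError("masks must contain the same number of voxels")
    overlap = sum(a and b for a, b in zip(p, t))
    total = sum(p) + sum(t)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * overlap / total


# Toy masks: the reference covers 4 voxels, the prediction covers 6,
# and the two share 4 voxels.
truth = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
pred = [[0, 0, 0, 0],
        [0, 1, 1, 1],
        [0, 1, 1, 1],
        [0, 0, 0, 0]]

dsc = dice_similarity(pred, truth)  # 2 * 4 / (6 + 4) = 0.8
```

A DSC of 1.0 means the two masks coincide exactly and 0.0 means they do not overlap, which is the scale on which the tabulated values should be read.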

| Task | | Manual | Artificial Intelligence |
|---|---|---|---|
| Auto-segmentation | Pros | Physician-driven; context-aware decisions; customizable per patient | Faster segmentation; improved consistency; scalable across cases |
| | Cons | Time-consuming; intra-/inter-observer variability; inconsistent delineation standards | May miss subtle anatomical nuances; limited generalizability; requires clinician verification |
| Dose planning | Pros | Greater human oversight; flexible for complex cases | High-speed prediction; more standardized plans; learns from large datasets |
| | Cons | Labor-intensive; less reproducible; susceptible to planning variability | May not account for patient-specific anatomy; potential overfitting to training data |
| Biologically informed RT | Pros | Direct clinical judgment in biomarker relevance; custom-tailored escalation decisions | Can integrate complex genomic/radiomic data; identifies non-obvious dose–response patterns |
| | Cons | Limited by available validated biomarkers; not easily reproducible across institutions | Models may lack transparency; biological relevance not always clinically validated |
| Treatment response prediction | Pros | Based on clinician experience and medical history | Detects hidden correlations; potential for early outcome forecasting |
| | Cons | Subjective and inconsistent; cannot scale or track subtle data patterns | Risk of bias; generalizability across cohorts is limited |
| Radiogenomics integration | Pros | Precision when available; personalized to patient | Merges imaging and genetic data; hypothesis-generating at population level |
| | Cons | Not feasible at large scale; requires multidisciplinary interpretation | Often exploratory; lacks consistent clinical validation |
| Interpretability/Decision Support | Pros | Transparent reasoning; based on clinical logic | Synthesizes multimodal data; suggests patterns not obvious to clinicians |
| | Cons | May overlook complex data relationships; hard to scale to multi-omic inputs | Often black-box models; may reduce clinician trust if unexplained |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Master, R.; Rubin, N.; Sampson, J.; Yadav, K.K.; Pandita, S.; Sabbagh, A.; Krishnan, A.; Silva, P.J.; Ramos, K.S.; Gregoire, V.; Paragios, N.; Krishnan, S.; Pandita, T.K. Advances in Artificial Intelligence for Glioblastoma Radiotherapy Planning and Treatment. Cancers 2025, 17, 3762. https://doi.org/10.3390/cancers17233762