Predicting Radiotherapy Outcomes with Deep Learning Models Through Baseline and Adaptive Simulation Computed Tomography in Patients with Pharyngeal Cancer

Simple Summary

Abstract

1. Introduction

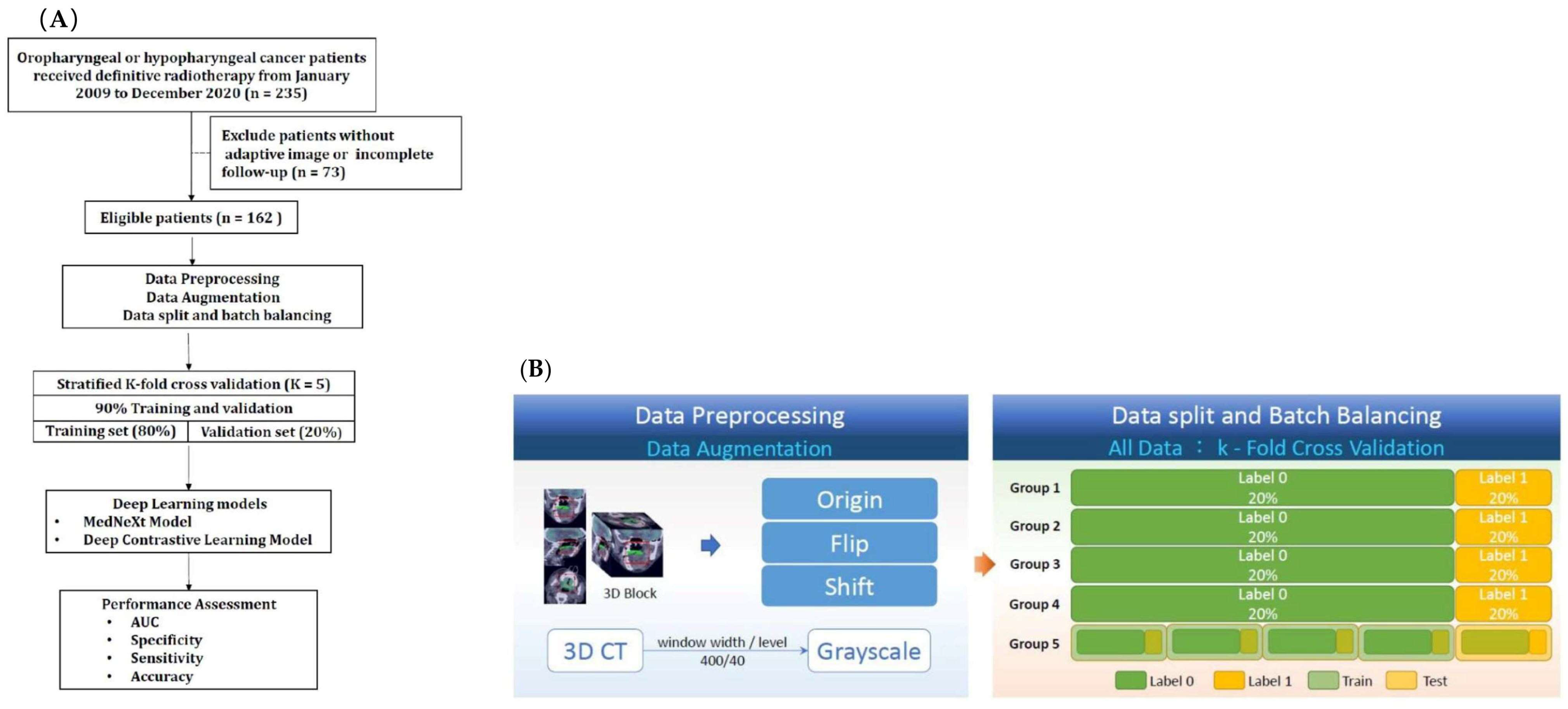

2. Materials and Methods

2.1. Study Population

1. Completed the prescribed RT or CRT and were followed for at least 6 months or until death.

2. Underwent a comprehensive staging process including physical examination, laryngoscopy, tumor biopsy, chest radiography, and either a CT scan of the neck or 18F-FDG PET/CT.

3. Were classified as having American Joint Committee on Cancer (AJCC) stage III to IVB disease, with a clear distinction between the primary tumor and nodal involvement.

4. Had a planned adaptive radiotherapy (ART) simulation CT scan performed approximately 4 to 5 weeks after the start of radiation therapy.
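The four inclusion criteria can be read as a single boolean filter over patient records. The sketch below is only an illustration of that reading: the `PatientRecord` layout and every field name are hypothetical and do not come from the study, and the "approximately 4 to 5 weeks" timing is reduced to a yes/no flag because no exact window is given.

```python
from dataclasses import dataclass

# Hypothetical record layout; field names are illustrative, not from the study.
@dataclass
class PatientRecord:
    completed_rt_or_crt: bool         # criterion 1: finished prescribed RT or CRT
    followup_months: float            # criterion 1: length of follow-up
    died: bool                        # criterion 1: follow-up ended by death
    staging_complete: bool            # criterion 2: full staging work-up performed
    ajcc_stage: str                   # criterion 3: e.g. "III", "IVA", "IVB"
    primary_and_nodes_distinct: bool  # criterion 3: primary tumor separable from nodes
    has_art_sim_ct: bool              # criterion 4: planned ART simulation CT acquired

ELIGIBLE_STAGES = {"III", "IVA", "IVB"}

def is_eligible(p: PatientRecord) -> bool:
    """Return True only when all four inclusion criteria hold."""
    followed = p.followup_months >= 6 or p.died
    return (
        p.completed_rt_or_crt
        and followed
        and p.staging_complete
        and p.ajcc_stage in ELIGIBLE_STAGES
        and p.primary_and_nodes_distinct
        and p.has_art_sim_ct
    )

example = PatientRecord(True, 34.0, False, True, "IVA", True, True)
print(is_eligible(example))
```

Keeping each criterion as its own field makes it easy to report how many patients each rule excludes, should that be needed.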

2.2. Study Endpoints and Design

2.3. Simulation CT Image Acquisition

2.4. Tumor Volume Delineation

2.5. Data Preprocessing

2.6. Data Augmentation

2.7. Data Split and Batch Balancing

2.8. Model Training and Optimization

2.9. Postprocessing

2.10. Treatment

2.11. Follow-Up

2.12. Statistical Analysis

3. Results

3.1. Patient Characteristics and Treatment Outcome

3.2. Patient-Based Prediction

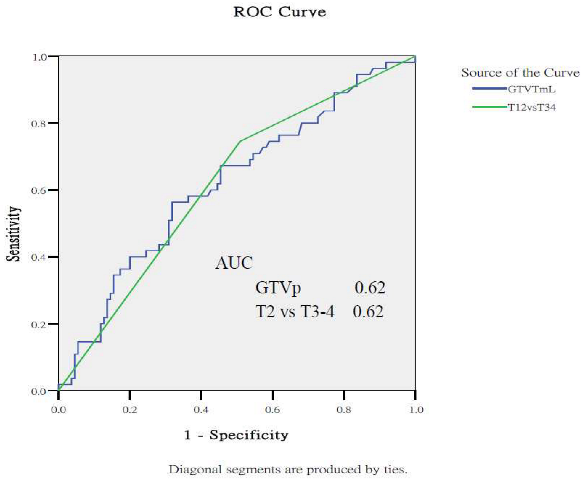

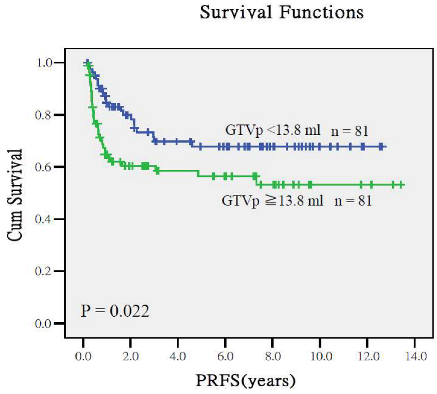

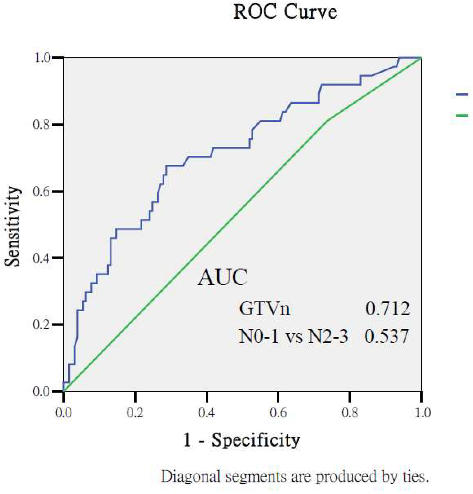

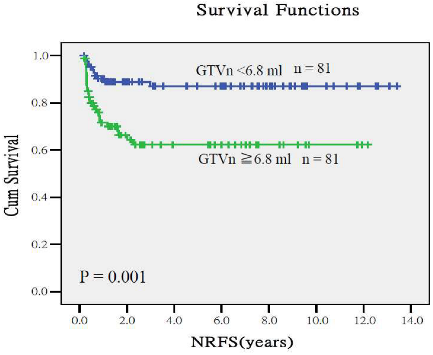

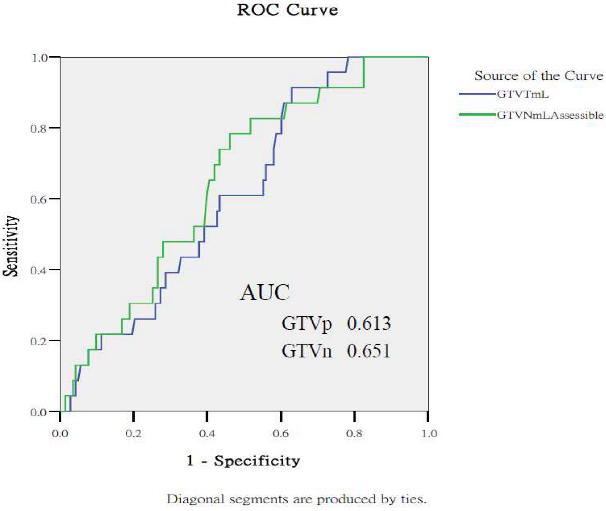

3.3. Comparison with the Prediction Performance from Clinical Stage and Gross Tumor Volumes

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Supervised Contrastive Loss Function and Model Optimization Strategy

Appendix A.2. Predictive Performance of Gross Tumor Volumes and Clinical Staging for Treatment Outcomes

| Variables | n (%) |
|---|---|
| Gender | |
| Male | 156 |
| Female | 6 |
| Age (years) | median 53 (range, 37 to 82) |
| Primary tumor site | |
| Oropharynx | 80 (49.4%) |
| Hypopharynx | 82 (50.6%) |
| ECOG performance status | |
| 0 | 24 (14.8%) |
| 1 | 136 (84.0%) |
| 2 | 2 (1.2%) |
| T classification | |
| T1 | 11 (6.8%) |
| T2 | 53 (32.7%) |
| T3 | 42 (25.9%) |
| T4 | 56 (34.6%) |
| N classification | |
| N0 | 3 (1.9%) |
| N1 | 34 (21.0%) |
| N2 | 117 (72.2%) |
| N3 | 8 (4.9%) |
| AJCC stage | |
| III | 23 (14.2%) |
| IVA | 125 (77.2%) |
| IVB | 14 (8.6%) |
| Smoking | |
| Smoker | 138 (84.1%) |
| Never-smoker | 24 (15.9%) |
| Betel nut quid | |
| Yes | 85 (51.9%) |
| Never | 77 (48.1%) |
| Alcohol drinking | |
| Alcoholism | 74 (45.7%) |
| Non-alcoholism | 88 (54.3%) |
| Radiation dose (Gy) | median 70.0 (range, 68.4–72.0) |
| Concurrent drug regimen | |
| Tri-weekly cisplatin | 131 (80.9%) |
| Cetuximab | 24 (14.8%) |
| None | 7 (4.3%) |
| Median follow-up duration (months) | 34 (range, 6 to 158) |

| Test | AUC | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|
| Baseline simulation CT | | | | |
| K1 | 0.572 | 0.588 | 0.545 | 0.667 |
| K2 | 0.658 | 0.625 | 0.619 | 0.636 |
| K3 | 0.788 | 0.75 | 0.762 | 0.727 |
| K4 | 0.697 | 0.6885 | 0.619 | 0.818 |
| K5 | 0.779 | 0.688 | 0.619 | 0.818 |
| Mean | 0.699 | 0.668 | 0.633 | 0.733 |
| Adaptive simulation CT | | | | |
| K1 | 0.674 | 0.559 | 0.545 | 0.583 |
| K2 | 0.71 | 0.688 | 0.667 | 0.727 |
| K3 | 0.662 | 0.531 | 0.429 | 0.727 |
| K4 | 0.658 | 0.594 | 0.524 | 0.727 |
| K5 | 0.71 | 0.656 | 0.667 | 0.636 |
| Mean | 0.683 | 0.606 | 0.566 | 0.680 |
| Merged ensemble model | | | | |
| K1 | 0.758 | 0.618 | 0.591 | 0.667 |
| K2 | 0.74 | 0.781 | 0.857 | 0.636 |
| K3 | 0.814 | 0.75 | 0.667 | 0.909 |
| K4 | 0.805 | 0.75 | 0.857 | 0.545 |
| K5 | 0.749 | 0.719 | 0.667 | 0.818 |
| Mean | 0.773 | 0.724 | 0.728 | 0.715 |
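The Mean rows in the table above appear to be unweighted arithmetic means over the five test folds K1 to K5. That is an assumption, but it is consistent with the reported figures. A minimal sketch that recomputes the "Merged ensemble model" Mean row from its per-fold values:

```python
from statistics import mean

# Per-fold metrics (K1..K5) copied from the "Merged ensemble model" rows above.
merged = {
    "AUC":         [0.758, 0.740, 0.814, 0.805, 0.749],
    "Accuracy":    [0.618, 0.781, 0.750, 0.750, 0.719],
    "Sensitivity": [0.591, 0.857, 0.667, 0.857, 0.667],
    "Specificity": [0.667, 0.636, 0.909, 0.545, 0.818],
}

def fold_means(folds):
    """Unweighted mean of each metric across folds, rounded to 3 decimals."""
    return {name: round(mean(values), 3) for name, values in folds.items()}

summary = fold_means(merged)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

An unweighted mean treats every fold equally regardless of its size. If fold sizes differed substantially, a pooled estimate over all test cases could differ slightly from these values.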

| Test | AUC | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|
| Baseline simulation CT | | | | |
| K1 | 0.6 | 0.636 | 0.68 | 0.5 |
| K2 | 0.725 | 0.667 | 0.64 | 0.75 |
| K3 | 0.583 | 0.563 | 0.56 | 0.571 |
| K4 | 0.731 | 0.656 | 0.64 | 0.714 |
| K5 | 0.537 | 0.563 | 0.56 | 0.571 |
| Mean | 0.635 | 0.617 | 0.616 | 0.621 |
| Adaptive simulation CT | | | | |
| K1 | 0.745 | 0.667 | 0.68 | 0.625 |
| K2 | 0.61 | 0.515 | 0.48 | 0.625 |
| K3 | 0.686 | 0.656 | 0.64 | 0.714 |
| K4 | 0.8 | 0.656 | 0.6 | 0.857 |
| K5 | 0.606 | 0.719 | 0.8 | 0.429 |
| Mean | 0.689 | 0.643 | 0.64 | 0.65 |
| Merged ensemble model | | | | |
| K1 | 0.795 | 0.879 | 1 | 0.5 |
| K2 | 0.69 | 0.667 | 0.68 | 0.625 |
| K3 | 0.709 | 0.781 | 0.84 | 0.571 |
| K4 | 0.851 | 0.844 | 0.88 | 0.714 |
| K5 | 0.691 | 0.563 | 0.52 | 0.714 |
| Mean | 0.747 | 0.747 | 0.784 | 0.625 |
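Accuracy, sensitivity and specificity in the table above are linked through the confusion matrix of each test fold. The sketch below shows the standard definitions. The counts used are an assumption: fold sizes are not reported in this section, and 25 positive and 8 negative cases were chosen only because they reproduce the K1 row of the merged ensemble model (sensitivity 1, specificity 0.5, accuracy 0.879).

```python
def metrics_from_counts(tp, fn, tn, fp):
    """Standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)                # true-positive rate
    specificity = tn / (tn + fp)                # true-negative rate
    accuracy = (tp + tn) / (tp + fn + tn + fp)  # overall fraction correct
    return sensitivity, specificity, accuracy

# Assumed counts (not reported in this section): 25 positives, all detected;
# 8 negatives, half of them correctly rejected.
sens, spec, acc = metrics_from_counts(tp=25, fn=0, tn=4, fp=4)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} accuracy={acc:.3f}")
```

Because accuracy is a class-size-weighted average of sensitivity and specificity, a fold dominated by one class can show high accuracy even when the minority-class rate is low, as in this row.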

| Test | AUC | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|
| Baseline simulation CT | | | | |
| K1 | 0.629 | 0.697 | 0.75 | 0.4 |
| K2 | 0.843 | 0.788 | 0.786 | 0.8 |
| K3 | 0.7 | 0.727 | 0.714 | 0.8 |
| K4 | 0.795 | 0.781 | 0.786 | 0.75 |
| K5 | 0.787 | 0.581 | 0.519 | 1 |
| Mean | 0.751 | 0.715 | 0.711 | 0.75 |
| Adaptive simulation CT | | | | |
| K1 | 0.586 | 0.727 | 0.786 | 0.4 |
| K2 | 0.65 | 0.848 | 0.929 | 0.4 |
| K3 | 0.821 | 0.788 | 0.821 | 0.6 |
| K4 | 0.643 | 0.750 | 0.786 | 0.5 |
| K5 | 0.63 | 0.742 | 0.741 | 0.75 |
| Mean | 0.666 | 0.771 | 0.812 | 0.53 |
| Merged ensemble model | | | | |
| K1 | 0.814 | 0.848 | 0.893 | 0.6 |
| K2 | 0.857 | 0.848 | 0.857 | 0.8 |
| K3 | 0.757 | 0.697 | 0.679 | 0.8 |
| K4 | 0.75 | 0.750 | 0.750 | 0.75 |
| K5 | 0.787 | 0.645 | 0.630 | 0.75 |
| Mean | 0.793 | 0.758 | 0.762 | 0.74 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, K.-C.; Chen, S.-W.; Chang, Y.-Y.; Wang, Y.-C.; Lin, Y.-C.; Chang, C.-J.; Hsu, Z.-K.; Chang, R.-F.; Kao, C.-H. Predicting Radiotherapy Outcomes with Deep Learning Models Through Baseline and Adaptive Simulation Computed Tomography in Patients with Pharyngeal Cancer. Cancers 2025, 17, 3492. https://doi.org/10.3390/cancers17213492
