Radiologists’ Perspectives on AI Integration in Mammographic Breast Cancer Screening: A Mixed Methods Study

Simple Summary

Abstract

1. Introduction

2. Methods

2.1. Study Design and Setting

2.2. Quantitative Methodology

2.3. Qualitative Methodology

2.4. Triangulation Approach

2.5. Statistical Analysis

2.6. Ethical Approval

3. Results

3.1. Overview of Findings

3.2. Triangulation of Findings

3.2.1. AI’s Role in Supporting Diagnostic Tasks and Ensuring Consistency

3.2.2. Integration and Functional Alignment of AI in Clinical Workflows

3.2.3. Attitudes Toward AI Adoption

3.2.4. Ethical and Regulatory Concerns

3.2.5. Unintended Consequences and Paradoxical Outcomes

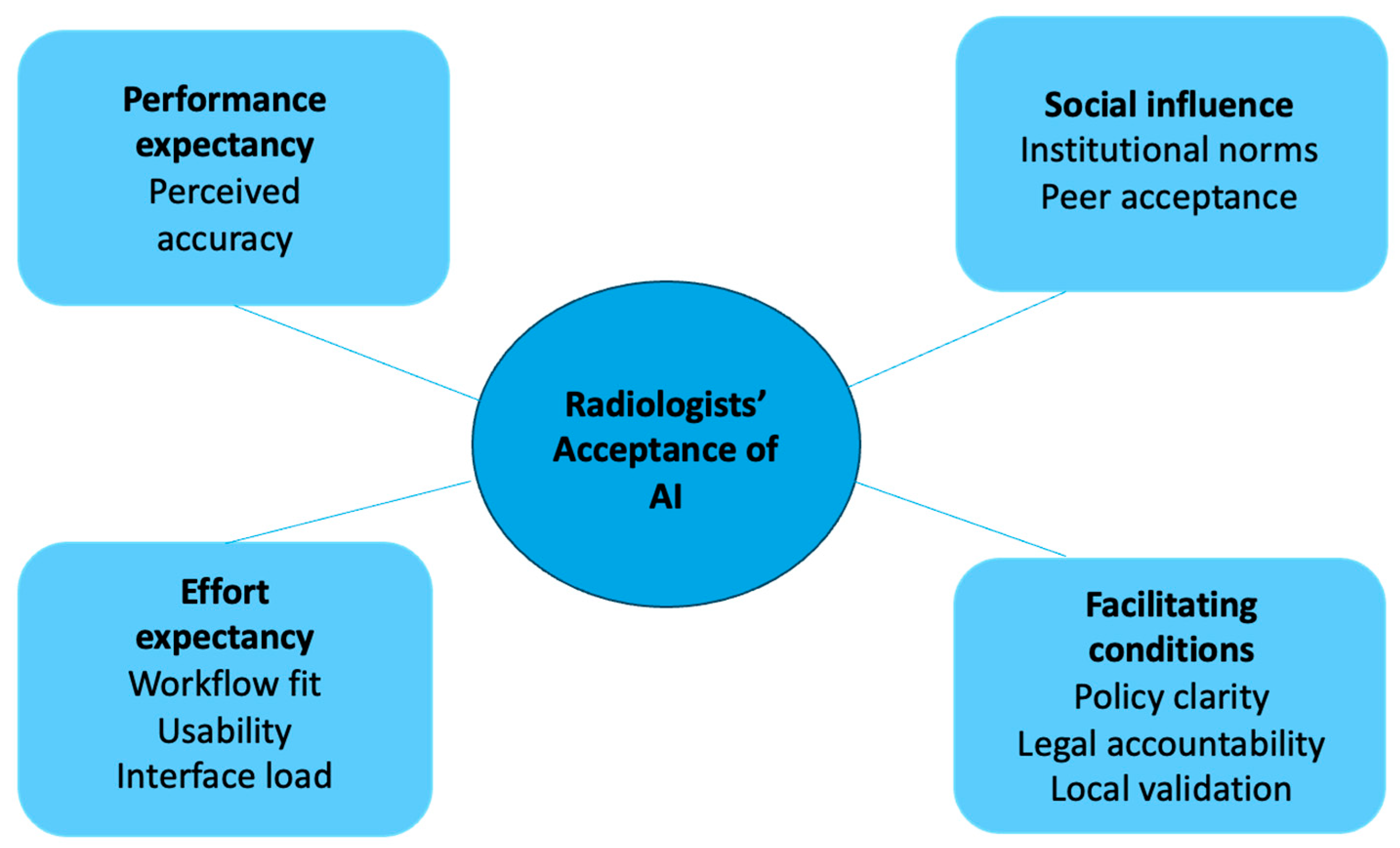

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Quantitative Findings | Qualitative Themes and Subthemes 1 | Meta-Inference |

|---|---|---|

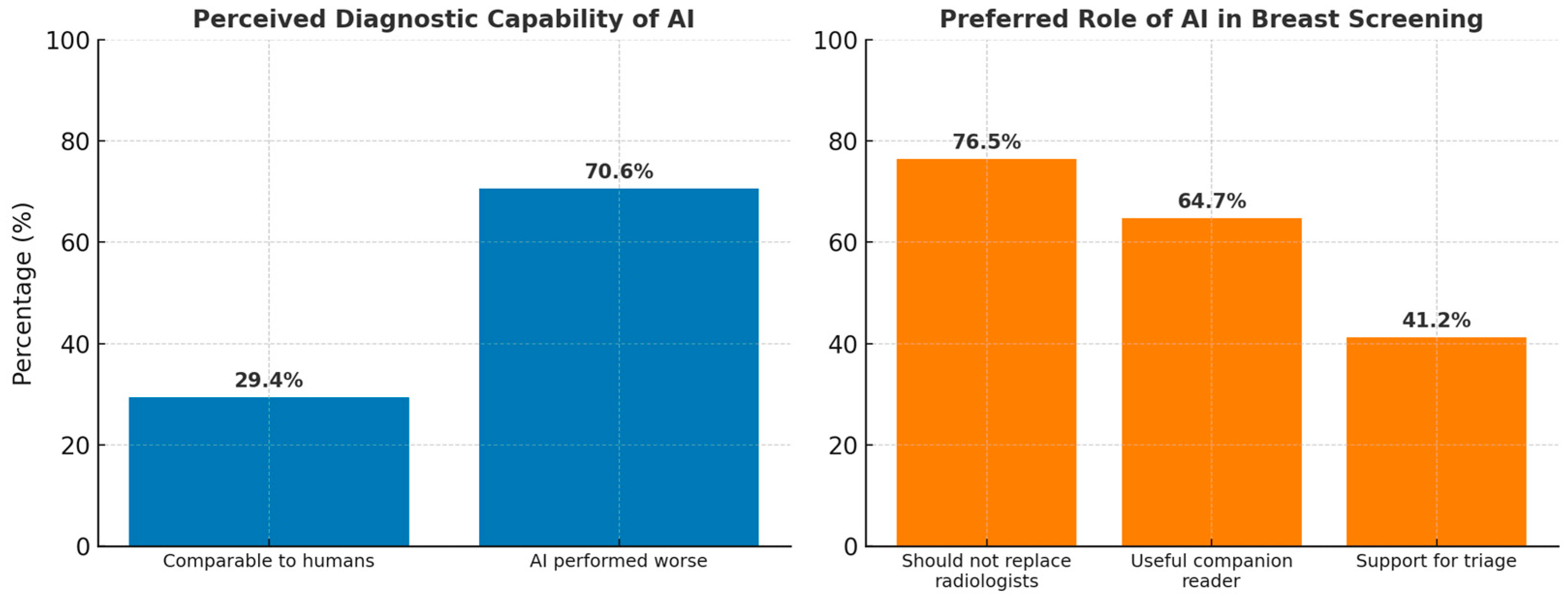

| 29.4% (5/17) of respondents rated AI as comparable to radiologists, while 70.6% (12/17) rated it as worse. | Theme 1: AI aids in routine diagnostic tasks but has limitations in complex interpretations. Subtheme 1.1: AI useful for routine tasks (Participant (P) P1, P2, P9) Subtheme 1.3: Limited in complex diagnostic integration (P4, P6) | Confirmation: The qualitative data aligns with survey responses, showing that AI is not yet viewed as capable of replacing human expertise for complex tasks. However, AI’s value lies in routine tasks and consistency. |

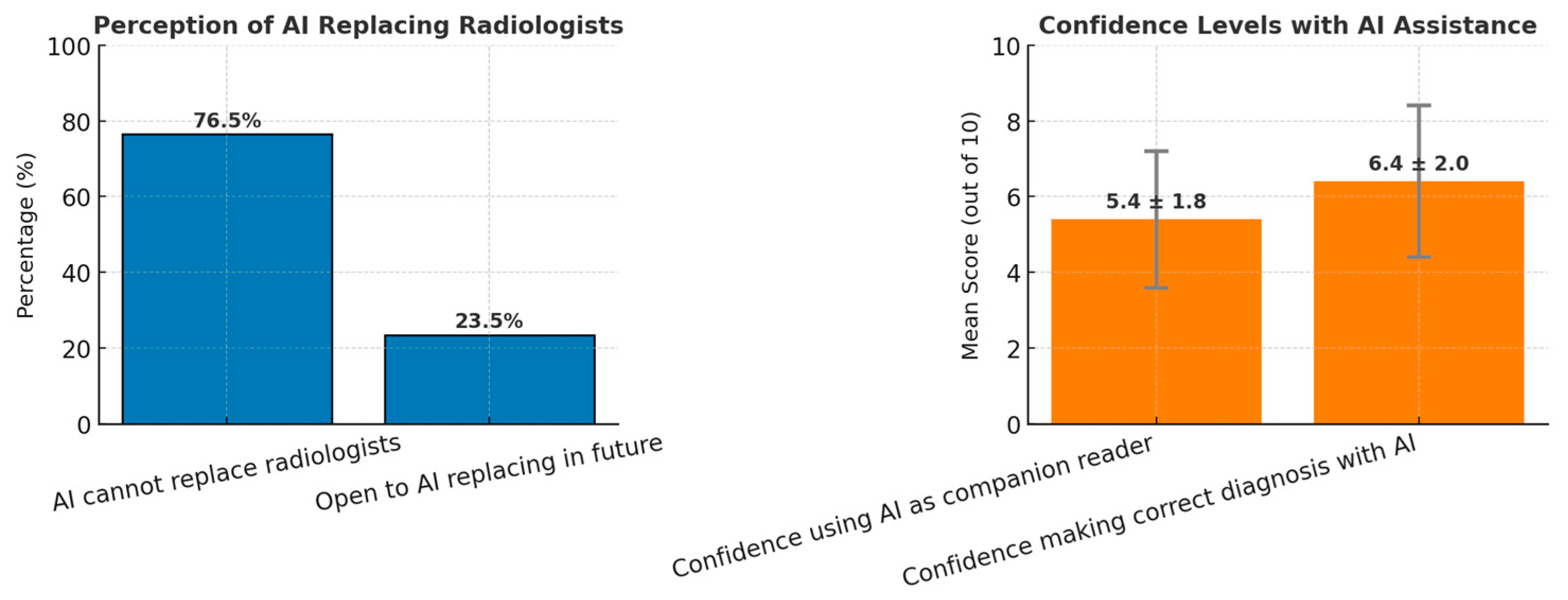

| 76.5% (13/17) indicated that AI cannot replace human radiologists, but 23.5% (4/17) were open to replacement. | Theme 3: Ambivalent attitudes towards AI adoption. Subtheme 3.2: Fear and uncertainty about AI replacing humans (P2, P8). | Expansion: AI is considered a useful companion, though resistance remains to full replacement. There is also fear among radiologists about AI potentially replacing their role in the future. |

| Mean score of 6.4 (out of 10) for confidence in AI-assisted diagnosis. | Theme 3: Technological anxiety from AI use. Subtheme 3.3: Fear of over-reliance and doubts about vendor processes (P4, P5). | Discordance: While respondents expressed moderate confidence, qualitative insights reveal anxiety and resistance regarding over-reliance on AI tools. |

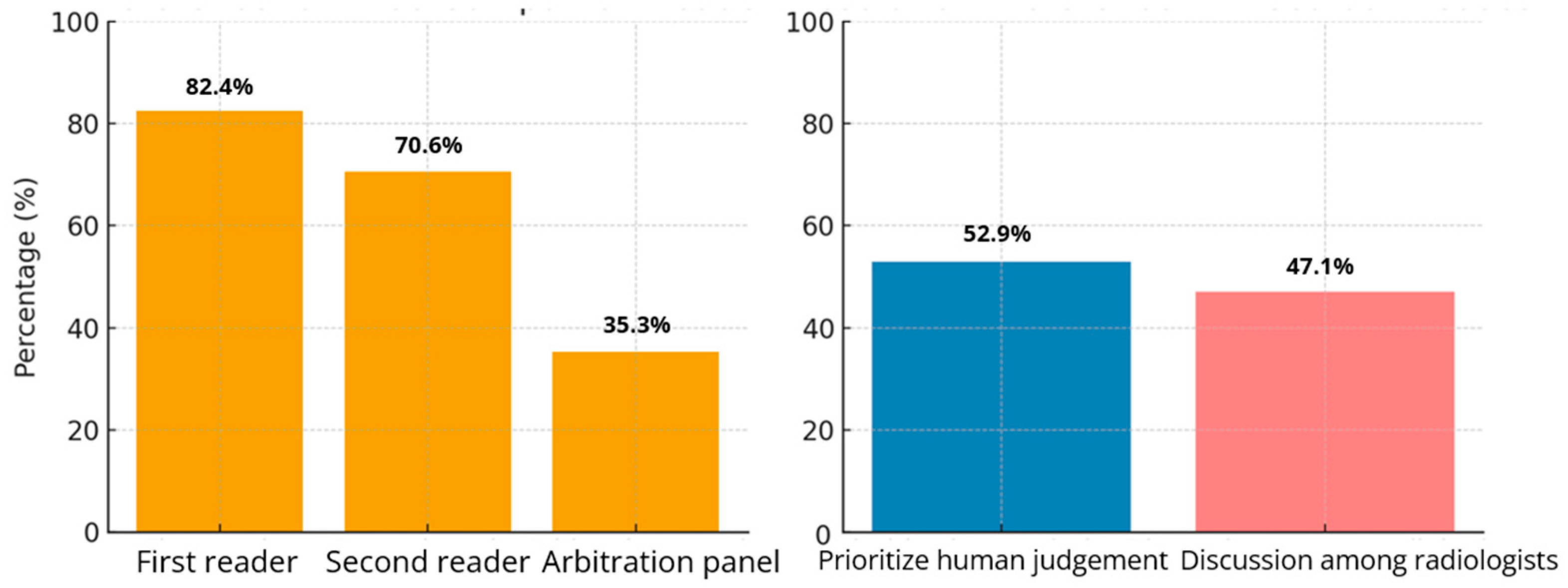

| 64.7% (11/17) recommended AI as a companion for either Reader 1 or 2, while 58.8% (10/17) specifically preferred its use for Reader 2. | Theme 2: Integration and functional Alignment of AI in Clinical Workflows Subtheme 2.3: Integration barriers and infrastructure issues (P6, P10). | Expansion: The preference for AI use with secondary readers suggests it is better suited for supportive roles rather than primary diagnostic responsibility. Integration challenges must be addressed to enhance adoption. |

| Heat maps were ranked as the most useful feature, while triaging ranked fourth. | Theme 1: AI enhances detection in specific tasks. Subtheme 1.2: Heat maps improve cancer detection (P7). | Confirmation: There is alignment between the perceived utility of heat maps in the survey and their value in practice. AI’s value lies in augmenting human detection in nuanced areas. |

| 52.9% (9/17) favored radiologists’ opinions prevailing over AI, and 47.1% (8/17) suggested discussion of discordant cases. | Theme 5: Increased workload from AI use. Subtheme 5.2: AI introduces workload paradox (P9). | Discordance: Although AI is intended to reduce workload, its use often increases work due to discordant case discussions and resulting additional investigations. |

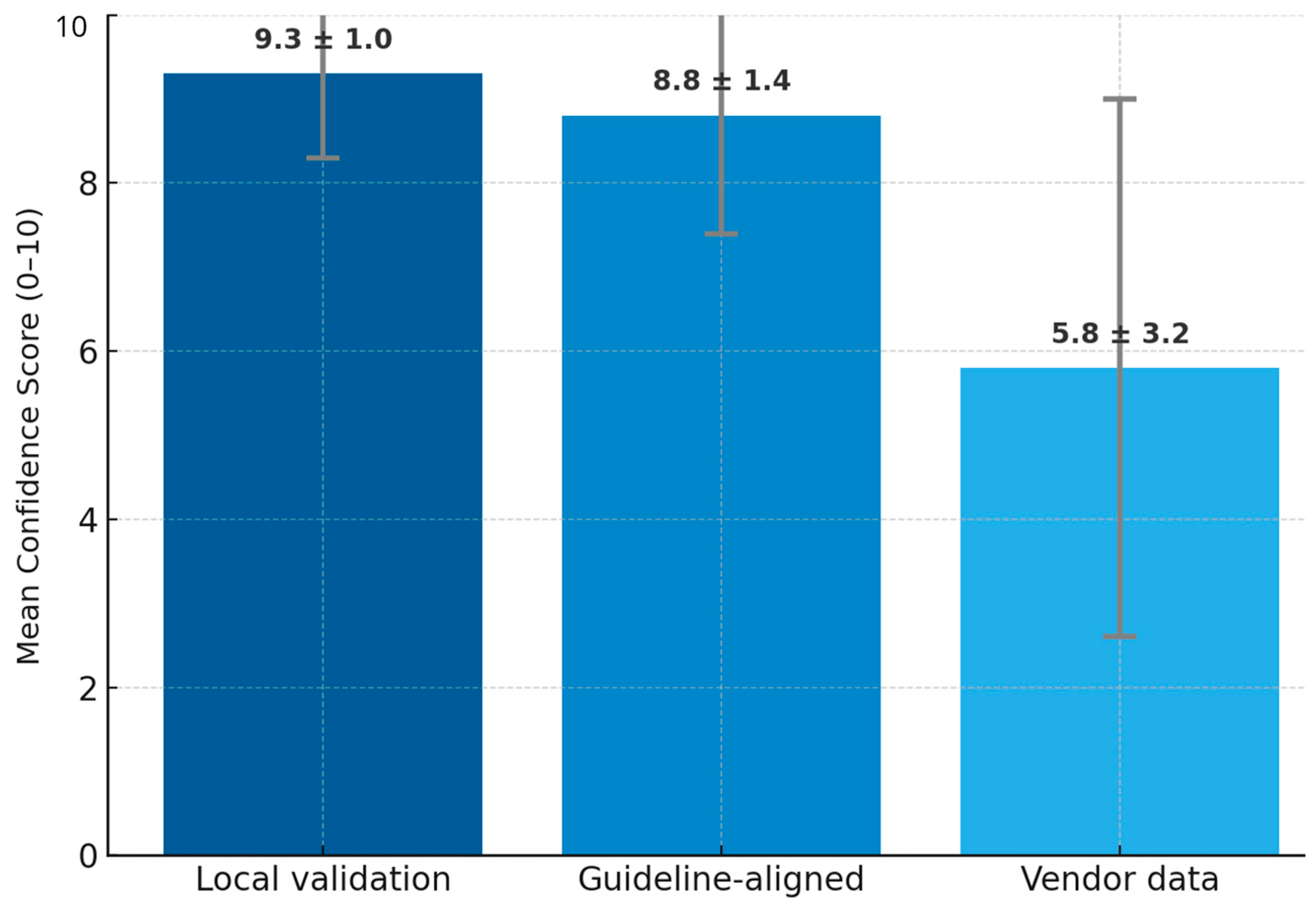

| Testing AI on local datasets had a mean confidence rating of 9.3 (out of 10), the highest-rated evidence type. | Theme 4: Ethical and regulatory concerns. Subtheme 4.2: Importance of regulatory frameworks and national standards (P2, P3). | Expansion: Need for locally validated AI models and government regulations to foster AI adoption. |
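Each percentage in the quantitative column of the joint display is a simple proportion of the 17 survey respondents. The minimal Python sketch below recomputes the reported figures from the raw counts and rounds them to one decimal place. It assumes that every item was answered by all 17 respondents, as the fractions in the table (e.g., 5/17) indicate. The item labels and the `percent` helper are illustrative names, not part of the study's materials.

```python
# Recompute the survey percentages reported in the joint display.
# Assumption: every item was answered by all 17 respondents, as the
# fractions shown in the table (e.g., 5/17) indicate.
# Labels below are illustrative shorthand, not the survey's wording.

RESPONDENTS = 17

# Response counts taken from the quantitative column of the table
counts = {
    "AI comparable to radiologists": 5,
    "AI worse than radiologists": 12,
    "AI cannot replace radiologists": 13,
    "Open to replacement": 4,
    "AI as companion for Reader 1 or 2": 11,
    "AI preferred for Reader 2": 10,
    "Radiologist opinion prevails": 9,
    "Discuss discordant cases": 8,
}


def percent(count: int, total: int = RESPONDENTS) -> float:
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


if __name__ == "__main__":
    for label, n in counts.items():
        print(f"{label}: {percent(n)}% ({n}/{RESPONDENTS})")
```

The paired options in the first, second, and sixth rows each sum to 17 (5 + 12, 13 + 4, 9 + 8), so within each of those items every respondent chose exactly one of the two listed options.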

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Goh, S.S.N.; Ng, Q.X.; Chan, F.J.H.; Goh, R.S.J.; Jagmohan, P.; Ali, S.H.; Koh, G.C.H. Radiologists’ Perspectives on AI Integration in Mammographic Breast Cancer Screening: A Mixed Methods Study. Cancers 2025, 17, 3491. https://doi.org/10.3390/cancers17213491

Goh SSN, Ng QX, Chan FJH, Goh RSJ, Jagmohan P, Ali SH, Koh GCH. Radiologists’ Perspectives on AI Integration in Mammographic Breast Cancer Screening: A Mixed Methods Study. Cancers. 2025; 17(21):3491. https://doi.org/10.3390/cancers17213491

Chicago/Turabian Style: Goh, Serene Si Ning, Qin Xiang Ng, Felicia Jia Hui Chan, Rachel Sze Jen Goh, Pooja Jagmohan, Shahmir H. Ali, and Gerald Choon Huat Koh. 2025. "Radiologists’ Perspectives on AI Integration in Mammographic Breast Cancer Screening: A Mixed Methods Study" Cancers 17, no. 21: 3491. https://doi.org/10.3390/cancers17213491

APA Style: Goh, S. S. N., Ng, Q. X., Chan, F. J. H., Goh, R. S. J., Jagmohan, P., Ali, S. H., & Koh, G. C. H. (2025). Radiologists’ Perspectives on AI Integration in Mammographic Breast Cancer Screening: A Mixed Methods Study. Cancers, 17(21), 3491. https://doi.org/10.3390/cancers17213491