Deep Learning and High-Resolution Anoscopy: Development of an Interoperable Algorithm for the Detection and Differentiation of Anal Squamous Cell Carcinoma Precursors—A Multicentric Study

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Patient Selection

2.2. High-Resolution Anoscopy Procedures

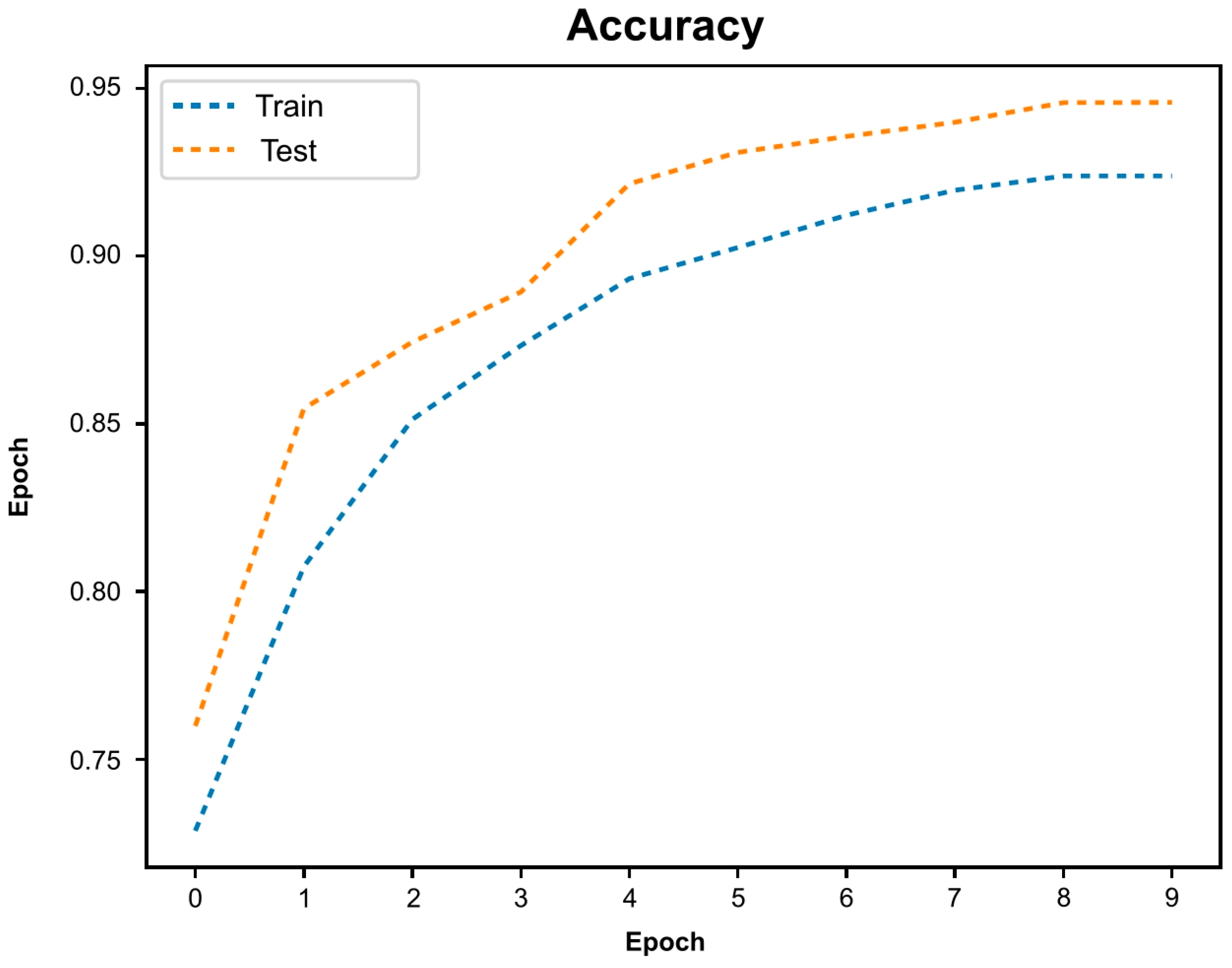

2.3. Image Processing, Dataset Organization and Development of the Convolutional Neural Network

2.4. Model Performance and Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Saraiva, M.M.; Spindler, L.; Manzione, T.; Ribeiro, T.; Fathallah, N.; Martins, M.; Cardoso, P.; Mendes, F.; Fernandes, J.; Ferreira, J.; et al. Deep Learning and High-Resolution Anoscopy: Development of an Interoperable Algorithm for the Detection and Differentiation of Anal Squamous Cell Carcinoma Precursors—A Multicentric Study. Cancers 2024, 16, 1909. https://doi.org/10.3390/cancers16101909

Chicago/Turabian Style: Saraiva, Miguel Mascarenhas, Lucas Spindler, Thiago Manzione, Tiago Ribeiro, Nadia Fathallah, Miguel Martins, Pedro Cardoso, Francisco Mendes, Joana Fernandes, João Ferreira, et al. 2024. "Deep Learning and High-Resolution Anoscopy: Development of an Interoperable Algorithm for the Detection and Differentiation of Anal Squamous Cell Carcinoma Precursors—A Multicentric Study" Cancers 16, no. 10: 1909. https://doi.org/10.3390/cancers16101909

APA Style: Saraiva, M. M., Spindler, L., Manzione, T., Ribeiro, T., Fathallah, N., Martins, M., Cardoso, P., Mendes, F., Fernandes, J., Ferreira, J., Macedo, G., Nadal, S., & de Parades, V. (2024). Deep Learning and High-Resolution Anoscopy: Development of an Interoperable Algorithm for the Detection and Differentiation of Anal Squamous Cell Carcinoma Precursors—A Multicentric Study. Cancers, 16(10), 1909. https://doi.org/10.3390/cancers16101909