Simple Summary

Early and accurate bladder cancer staging is important because it determines the mode of initial treatment. Non-muscle-invasive bladder cancer (NMIBC) can be treated with transurethral resection, whereas muscle-invasive bladder cancer (MIBC) requires neoadjuvant chemotherapy followed by cystectomy as indicated. Our hybrid machine/deep learning model demonstrates improved accuracy of bladder cancer staging on contrast-enhanced CT (CECT), which may facilitate appropriate clinical management of patients with bladder cancer and ultimately improve patient outcomes.

Abstract

Accurate clinical staging of bladder cancer aids clinical decision-making, allowing treatment and management to be tailored to each patient. While several radiomics approaches have been developed to facilitate the clinical diagnosis and staging of bladder cancer from grayscale computed tomography (CT) scans, their performance has been low, with little validation and no clear consensus on specific imaging signatures. We propose a hybrid framework comprising pre-trained deep neural networks for feature extraction, in combination with statistical machine learning techniques for classification, which performs the following classification tasks: (1) bladder cancer tissue vs. normal tissue, (2) muscle-invasive bladder cancer (MIBC) vs. non-muscle-invasive bladder cancer (NMIBC), and (3) post-treatment changes (PTC) vs. MIBC.

1. Introduction

Bladder cancer imaging can be misleading. Findings such as perivesical fat stranding, hydronephrosis, focal bladder wall thickening, or a small bladder lesion may be wrongly perceived as a more advanced stage of bladder cancer. It is common to see small lymph nodes in the pelvis after transurethral resection of bladder tumor (TURBT) [1,2,3] or at the time of diagnosis [4]. These otherwise non-significant lymph nodes may harbor metastases, which can be difficult to detect by visual inspection of CT scans alone [5,6]. Furthermore, bladder cancer is a heterogeneous disease with an extremely varied range of case-specific diagnoses [7,8]. From low-grade tumors such as Ta (noninvasive papillary carcinoma) and Tis (tumor in situ or “flat lesion”), which require TURBT or less aggressive endoscopic intervention [8], to high-grade muscle-invasive tumors, which require chemotherapy [9,10], bladder cancer management is highly dependent on the type and stage of the tumor [4,6,11].

Radiomics can provide tools to significantly improve the accuracy of clinical staging by analyzing multiple quantitative features, including but not restricted to texture features, raw digital data and deep model-generated embeddings.

Texture analysis captures local patterns in images from the intensity information they contain. Such features are very effective in identifying tissue types from grayscale medical scans. The texture-based features generally used in medical imaging can be broadly divided into two categories, namely statistical approaches and transformation-based approaches.

First, we review some of the most commonly used statistical texture features for bladder cancer detection and staging from medical scans. Ref. [12] used a set of functional, second-order statistical and morphological features to perform staging between T1 and T2 bladder cancer from MRI scans. The second-order statistical feature extraction included a total of 25 gray level co-occurrence matrix (GLCM) features and 16 gray level run length matrix (GLRLM) features obtained from 42 bladder MRIs (21 T1, 21 T2) after ROI segmentation, yielding an accuracy, sensitivity and specificity of 95.24% each and an area under the curve (AUC) of 98.64%. The authors also performed an extensive comparison between their proposed model and two state-of-the-art approaches [13,14]. Ref. [15] used local binary pattern (LBP) and GLCM features to perform primary tumor staging of bladder cancer into two groups: (1) tumors with the primary tumor located completely within the bladder; (2) tumors with the primary tumor extending outside the bladder. The support vector machine (SVM) classifier, which was trained on T2-weighted MRI scans from 65 bladder cancer patients, all with stage 1, reported an AUC of 80.60%. Ref. [16] predicted recurrence and progression of urothelial carcinoma from a dataset of 42 patients: 13 without recurrence, 14 with recurrence but no progression, and 15 with progression. Features extracted using LBP and local variance and classified with the RUSBoost classifier yielded an accuracy of 70% and a sensitivity of 84%. Ref. [17] used LBP and GLCM features to classify the invasiveness of bladder cancer. The dataset comprised preoperative T2-weighted MRI scans from 65 bladder cancer patients who subsequently underwent radical cystectomy. The proposed model reported a patient-level sensitivity of 74.20%, specificity of 82.40%, accuracy of 78.50% and AUC of 80.60%. Ref. [18] performed survival prediction of bladder urothelial carcinoma (BLCA) from contrast-enhanced CT (CECT) scans using LBP, wavelet and GLCM features. The dataset comprised scans from 62 bladder cancer patients with different stages of urothelial carcinoma. The radiomics features extracted from the CECTs were combined with RNA-seq data into a radiogenomics signature, which was used to predict patient survival. This study demonstrates the applicability of radiomics and transcriptomics data to predicting BLCA survival. However, owing to the small size of the dataset, the authors note that the model needs to be validated on a larger set of samples for a more robust analysis. The literature shows that statistical texture analysis is not only fast and easy to implement but also very effective for the classification, staging and segmentation of bladder cancer from medical images.
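As a rough illustration of the second-order statistics used in these studies, the following Python sketch builds a normalized, symmetric GLCM for a single pixel offset and derives four Haralick-style descriptors. It is a simplified NumPy sketch under stated assumptions (min-max quantization to 8 gray levels, one horizontal offset, function names chosen for illustration), not the implementation used in any of the cited works; libraries such as scikit-image or PyRadiomics provide fuller versions.

```python
import numpy as np


def quantize(roi, levels=8):
    """Min-max scale raw intensities (e.g. HU) to integer gray levels 0..levels-1."""
    roi = np.asarray(roi, dtype=float)
    span = roi.max() - roi.min()
    if span == 0:
        return np.zeros(roi.shape, dtype=int)
    q = np.floor((roi - roi.min()) / span * levels).astype(int)
    return np.clip(q, 0, levels - 1)


def glcm(img, levels, dx=1, dy=0, symmetric=True):
    """Normalized GLCM for one non-negative pixel offset (dy rows, dx columns)."""
    img = np.asarray(img, dtype=int)
    h, w = img.shape
    ref = img[: h - dy, : w - dx]      # reference pixels
    nbr = img[dy:, dx:]                # neighbors at the chosen offset
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)  # count gray-level pairs
    if symmetric:
        P = P + P.T                    # count each pair in both directions
    return P / P.sum()


def glcm_features(P):
    """Four Haralick-style descriptors of a normalized GLCM."""
    i, j = np.indices(P.shape)
    mu_i, mu_j = (i * P).sum(), (j * P).sum()
    sd_i = np.sqrt((((i - mu_i) ** 2) * P).sum())
    sd_j = np.sqrt((((j - mu_j) ** 2) * P).sum())
    cov = ((i - mu_i) * (j - mu_j) * P).sum()
    return {
        "contrast": (P * (i - j) ** 2).sum(),
        "homogeneity": (P / (1.0 + np.abs(i - j))).sum(),
        "asm": (P ** 2).sum(),  # angular second moment ("energy" squared in some libraries)
        # correlation is undefined for a constant ROI; 1.0 is an assumed convention
        "correlation": cov / (sd_i * sd_j) if sd_i > 0 and sd_j > 0 else 1.0,
    }


# Usage on a random stand-in for a CT ROI (hypothetical data).
rng = np.random.default_rng(0)
roi = rng.normal(40.0, 15.0, size=(32, 32))
features = glcm_features(glcm(quantize(roi, levels=8), levels=8))
```

In practice, several offsets and directions are usually averaged, and the number of gray levels strongly affects the resulting values.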

Transformation-based approaches such as the Fourier transform and the Gabor wavelet transform are very effective in learning textural patterns from images and are therefore popular among medical imaging researchers. Ref. [19] used Gabor features to classify carcinoma cells in biopsy images associated with 14 distinct cancer types, scanned from 14 different patients. An SVM classifier with a radial basis function (RBF) kernel was subsequently trained on these features, providing the highest cross-validation accuracy of 99.20% with a Gabor window size of 400 pixels and an image magnification of 10×. Ref. [20] used 2D Fourier-based features to identify cancer from 182 optical coherence tomography (OCT) scans of 68 different areas of the bladder, obtained from 21 patients identified as being at high risk of transitional cell carcinoma (TCC). The task was two-fold: (1) to classify lesions as non-cancerous, dysplasia, carcinoma in situ (CIS), or papillary lesions; (2) to predict the invasiveness of the lesion. In addition to the 2D Fourier transform, the authors applied four other statistical feature extraction approaches, resulting in a total of 74 features obtained from the raw OCT images. A simple cross-correlation-based filter with a correlation threshold of 0.85 was employed for feature selection, resulting in a final set of nine features, which were then classified with a decision tree. The authors reported a sensitivity of 92.00% and a specificity of 62.00% for non-cancerous versus cancerous classification. Ref. [21] reports that GLCM and GLDM features perform better than Fourier transform-based feature extractors in differentiating between tumors and peritumoral fat tissue.
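To make transformation-based texture features concrete, the sketch below computes one simple family of 2D Fourier descriptors: the fraction of spectral power that falls into concentric frequency rings. The ring layout (equal-width rings, four by default) and the function name are illustrative assumptions; the exact Fourier features used in Ref. [20] are not reproduced here.

```python
import numpy as np


def fourier_band_energies(roi, n_bands=4):
    """Fraction of 2D power-spectrum energy in equal-width concentric frequency rings."""
    roi = np.asarray(roi, dtype=float)
    power = np.abs(np.fft.fftshift(np.fft.fft2(roi))) ** 2  # DC term moved to the center
    h, w = roi.shape
    yy, xx = np.indices((h, w))
    radius = np.hypot(yy - h // 2, xx - w // 2)  # distance from the zero-frequency bin
    edges = np.linspace(0.0, radius.max(), n_bands + 1)
    band = np.digitize(radius, edges[1:-1])      # ring index 0..n_bands-1
    energy = np.bincount(band.ravel(), weights=power.ravel(), minlength=n_bands)
    total = energy.sum()
    return energy / total if total > 0 else energy
```

A homogeneous region concentrates its power in the innermost (lowest-frequency) ring, whereas fine, rapidly varying texture pushes power toward the outer rings, which is the kind of contrast these descriptors are meant to capture.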

From the literature reviewed above, it is evident that texture analysis is an effective approach when performing classification tasks on medical imaging, in general, and histologic analysis in particular.

Recently, deep learning-based models have gained popularity among researchers owing to their automatic feature extraction capabilities. Convolutional neural networks (CNNs) are the most commonly used type of deep model and a very popular framework for classification tasks in imaging-based applications in general and radiological data in particular. The authors of [22] designed a set of nine CNN-based models for the classification of MIBC and NMIBC from contrast-enhanced CT (CECT) images. The dataset comprised 1200 CT scans obtained from 369 patients undergoing radical cystectomy, of whom 249 had NMIBC and the remaining 120 had MIBC. The CNN models were pre-trained on the ImageNet dataset to improve classification performance. The model with the highest AUC on the test set used the VGG16 architecture, with an AUC of 99.70%, accuracy of 93.90%, sensitivity of 88.90%, specificity of 98.90%, precision of 98.80% and negative predictive value of 89.90%. In contrast, the authors of [13] used Haralick features, which are derived from the GLCM, to identify muscle invasiveness in MRI scans containing a total of 118 volumes of interest (VOIs) obtained from 68 patients: 34 volumes labeled NMIBC and 84 labeled MIBC. The final SVM classifier obtained an AUC of 86.10% and a Youden index of 71.92%.

Ref. [21] classified bladder cancers with and without response to chemotherapy from CT scans obtained before and after treatment. The authors compared three models: (1) a deep learning convolutional neural network-based model (DL-CNN); (2) a more deterministic radiomics feature-based classifier (RF-SL); (3) an intermediate model that extracts radiomics features from image patterns (RF-ROI). The training dataset comprised 87 bladder cancers in 82 patients, scanned pre- and post-chemotherapy, and the test set comprised 43 cancers in 41 patients. The radiomics feature-based model (RF-SL) performed best, with an AUC of 77.00%, compared with AUCs of 76.00% and 77.00% reported for two radiologists.

Ref. [23] proposed a CNN-based model for classification between low- and high-stage bladder cancer. The training dataset comprised 84 bladder cancer CT urography (CTU) images obtained from 76 patients (43 CTUs contained low-stage cancer and 41 contained high-stage cancer). The test set consisted of 90 bladder CTUs obtained from 86 patients. The CNN classifier had a test set prediction accuracy of 91.00%, which the authors report is higher than that of texture-based classification using an SVM on the same dataset (88.00%). In comparison, ref. [21] extracted GLCM- and histogram-based features from apparent diffusion coefficient (ADC) maps and diffusion-weighted images (DWI) to grade bladder cancer in 61 patients (32 low-grade and 29 high-grade). Of the 102 GLCM and histogram features initially extracted, 47 were selected using the Mann–Whitney U-test, and an SVM classifier distinguished high- from low-grade bladder cancer with an accuracy of 82.90% and an AUC of 86.10%. Ref. [24] used GLCM, wavelet filter and Laplacian of Gaussian filter features extracted from a small dataset of 145 patients to grade bladder cancer on CT scans. Of these 145 scans, 108 were used to train the model and the remaining 37 for validation. The model provided an accuracy of 83.80% on the validation set.
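The Mann–Whitney U-test screening mentioned above can be sketched as a per-feature two-sample test that keeps features whose distributions differ between the two classes. The significance level of 0.05, the lack of multiple-comparison correction and the function name are assumptions for illustration, not details reported for Ref. [21].

```python
import numpy as np
from scipy.stats import mannwhitneyu


def mann_whitney_filter(X, y, alpha=0.05):
    """Return indices of features whose two class distributions differ (p < alpha)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    classes = np.unique(y)
    if classes.size != 2:
        raise ValueError("expected exactly two classes")
    a, b = X[y == classes[0]], X[y == classes[1]]
    # Two-sided rank-sum test for every feature column
    pvalues = np.array([
        mannwhitneyu(a[:, k], b[:, k], alternative="two-sided").pvalue
        for k in range(X.shape[1])
    ])
    return np.flatnonzero(pvalues < alpha), pvalues
```

Because the test is rank-based, it does not assume normally distributed feature values, which suits skewed texture and histogram features.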

From the literature reviewed above, neural network-based classifiers often appear more effective than texture analysis for the identification and staging of bladder cancer from medical imaging alone.

The authors of [25] claim that feature extraction guided by domain knowledge performs better than CNN-based classifiers that generate features automatically. The task in this case was to classify two early stages of bladder cancer that are histologically difficult to differentiate, namely Ta (non-invasive) and T1 (superficially invasive). The dataset comprised a total of 1177 bladder scans: 460 non-invasive and 717 superficially invasive. The best CNN classifier achieved an accuracy of 84.00%, considerably lower than that of supervised machine learning classifiers trained on manually extracted features. The CNN studies discussed above use end-to-end models that are trained and tested on the same target dataset. The problem with such an approach is that deep neural networks require large quantities of training data to avoid the pervasive issue of overfitting. As an alternative to end-to-end deep models, researchers use transfer learning, in which the neural network is first pre-trained on a large dataset such as ImageNet and the learned weights are subsequently fine-tuned on the small target dataset. Because our dataset was small (200 ROIs from 100 CT scans), we used transfer learning to improve classification results and alleviate overfitting. Five deep models pre-trained on the publicly available ImageNet dataset (AlexNet, GoogleNet, InceptionV3, ResNet-50 and XceptionNet) were used to extract features from the bladder scans. After feature selection using a combination of supervised and unsupervised techniques, the extracted features were classified by five machine learning classifiers, namely k-nearest neighbor (KNN), support vector machine (SVM), linear discriminant analysis (LDA), decision tree (DT) and naive Bayes (NB).

2. Materials and Methods

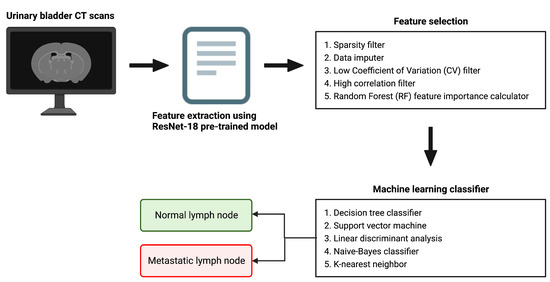

Figure 1 provides a pictorial depiction of the entire workflow, from the raw bladder scans to the prediction labels obtained after classification. The proposed methodology comprises feature extraction, feature selection and classification. In this hybrid approach, we extract feature vectors from the images using the activations of the last pooling layer of five widely used pre-trained deep models (computed with the fine-tuned weights), namely AlexNet, GoogleNet, InceptionV3, ResNet-50 and XceptionNet. We then apply our feature selection algorithm to these feature vectors. Finally, we apply five machine learning classification algorithms to the selected feature set, namely k-nearest neighbor (KNN), naive Bayes (NB), support vector machine (SVM), linear discriminant analysis (LDA) and decision tree (DT).

Figure 1.

A schematic representation of the overall workflow.

2.1. Feature Extraction Using Pre-Trained Deep Models

Five popular neural network-based deep models, namely AlexNet, GoogleNet, InceptionV3, ResNet-50 and XceptionNet, all pre-trained on the ImageNet dataset [26], were fine-tuned on our bladder CT scan data. The activations of the last pooling layer of each fine-tuned model were extracted and used as feature descriptors. Table 1 describes each of these models, including the pooling layer used and the size of the extracted feature vector.
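The relationship between a grayscale ROI and a pooled feature vector can be illustrated without a deep learning framework. This sketch assumes (i) that a grayscale ROI is min-max scaled, replicated to three channels and normalized with the standard ImageNet channel mean and standard deviation before entering the network, and (ii) that the last pooling layer performs global average pooling, so the feature length equals the number of channels (2048 for the final pooling layer of ResNet-50). It does not apply to max-pooling layers such as AlexNet's, and resizing and the forward pass itself are omitted; both function names are hypothetical.

```python
import numpy as np

# Standard ImageNet channel statistics used by many pre-trained models (assumed preprocessing).
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])


def to_network_input(roi):
    """Min-max scale a grayscale ROI, copy it to 3 channels, apply ImageNet normalization."""
    roi = np.asarray(roi, dtype=float)
    span = roi.max() - roi.min()
    scaled = (roi - roi.min()) / span if span > 0 else np.zeros_like(roi)
    rgb = np.stack([scaled, scaled, scaled], axis=0)  # shape (3, H, W)
    return (rgb - IMAGENET_MEAN[:, None, None]) / IMAGENET_STD[:, None, None]


def global_average_pool(activations):
    """Collapse a (C, H, W) activation map into a length-C feature descriptor."""
    return np.asarray(activations, dtype=float).mean(axis=(1, 2))
```

Replicating the single CT channel is a common way to reuse RGB-trained weights; it adds no information but keeps the input shape the pre-trained filters expect.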

Table 1.

A description of the five pre-trained models used for feature extraction from the bladder scans, namely AlexNet, GoogleNet, InceptionV3, ResNet-50 and XceptionNet. The table lists the total number of layers per model, the last pooling layer from which features were extracted, the layer number of that pooling layer, and the length of the extracted feature vector.

2.2. Feature Selection Mechanism

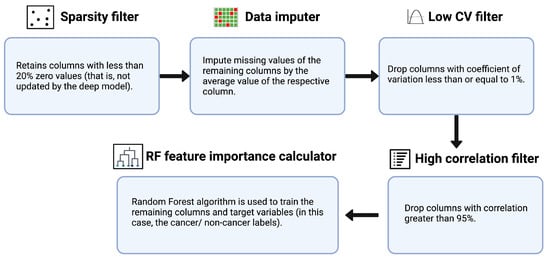

An ensemble feature selection technique was used to select the most important features from the originally extracted feature vectors, which were obtained using five different pre-trained models (AlexNet, GoogleNet, InceptionV3, ResNet-50 and XceptionNet), for each of the three classification tasks. First, a “sparsity filter” removed the features that were not updated by the deep model. This step not only excludes features with low variance but also automatically removes those that are less likely to affect classification. Next, a “data imputer” replaced the remaining unchanged values in each column with the mean value of that column. Subsequently, a “low coefficient of variation (CV) filter” dropped features with a CV of less than 0.01. The CV, i.e., the standard deviation normalized by the mean, is a measure of information content; a lower CV indicates a feature with lower normalized variance. In the next step, a correlation matrix containing the cross-correlation values between every pair of features was generated. For each pair with a correlation above 95%, the feature with the lower correlation to the output was dropped. Finally, a random forest, an ensemble of bagged decision trees, was trained on the remaining features and the target labels to generate feature importance scores. The number of trees was set to the default value of 100, and the Gini index was used as the metric for calculating feature importance. The first three steps were unsupervised (statistical approaches that operate on the features alone, not the labels); the correlation filter used the labels only to decide which feature of a highly correlated pair to keep; and the final feature importance step was supervised (the relationship between dependent and independent variables determines which features are selected). Figure 2 provides a schematic depiction of the feature selection algorithm.
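A minimal Python sketch of the five-step selection, assuming NumPy and scikit-learn, is given below. Several details are assumptions because the text does not specify them: features "not updated" by the model are taken to be columns that are zero for every sample, the remaining zeros are treated as the "unchanged values" to impute, correlation is Pearson's r, and the final ranking keeps a hypothetical `top_k` features. It is not the authors' code.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def select_features(X, y, cv_min=0.01, corr_max=0.95, top_k=20, seed=0):
    """Return original column indices of the features kept by the five steps."""
    X = np.asarray(X, dtype=float)
    y = np.unique(np.asarray(y), return_inverse=True)[1]  # labels -> 0..K-1
    idx = np.arange(X.shape[1])

    # 1) Sparsity filter: drop columns that are zero for every sample.
    keep = np.any(X != 0, axis=0)
    X, idx = X[:, keep], idx[keep]

    # 2) Mean imputation: treat remaining zeros as missing (assumption) and
    #    fill them with the mean of the column's non-zero entries.
    nonzero = X != 0
    col_means = X.sum(axis=0) / nonzero.sum(axis=0)
    X = np.where(nonzero, X, col_means)

    # 3) Coefficient-of-variation filter: keep columns with std/|mean| >= cv_min.
    with np.errstate(divide="ignore", invalid="ignore"):
        cv = X.std(axis=0) / np.abs(X.mean(axis=0))
    keep = cv >= cv_min
    X, idx = X[:, keep], idx[keep]

    # 4) Correlation filter: for each pair with |r| > corr_max, drop the
    #    member that is less correlated with the labels.
    n = X.shape[1]
    corr = np.abs(np.asarray(np.corrcoef(X, rowvar=False)).reshape(n, n))
    target = np.array([abs(np.corrcoef(X[:, k], y)[0, 1]) for k in range(n)])
    dropped = np.zeros(n, dtype=bool)
    for a in range(n):
        for b in range(a + 1, n):
            if not (dropped[a] or dropped[b]) and corr[a, b] > corr_max:
                dropped[b if target[b] < target[a] else a] = True
    X, idx = X[:, ~dropped], idx[~dropped]

    # 5) Supervised ranking: random forest of 100 bagged trees, Gini impurity.
    rf = RandomForestClassifier(n_estimators=100, criterion="gini", random_state=seed)
    rf.fit(X, y)
    order = np.argsort(rf.feature_importances_)[::-1][:top_k]
    return idx[order]
```

Returning the original column indices makes it possible to apply the same selection to new samples from the same deep model.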

Figure 2.

A pictorial representation of the feature selection procedure.

2.3. Machine Learning-Based Classification

The important features obtained using the feature selection algorithm were used as inputs to the machine learning classifiers. Three classification tasks were performed: (1) normal vs. bladder cancer, (2) NMIBC vs. MIBC, and (3) post-treatment changes (PTC) vs. MIBC. Ten-fold cross-validation was used to evaluate the prediction performance of the proposed model. The samples were randomly shuffled, and the dataset was split into 10 equal divisions. At each iteration, nine divisions were used for training and the remaining one for testing. This process was repeated for 10 iterations, each time with a different division as the test set, and the evaluation metrics calculated across the ten iterations were averaged and reported. Classification was performed using five different machine learning classifiers, namely k-nearest neighbor (KNN), naive Bayes (NB), support vector machine (SVM), linear discriminant analysis (LDA) and decision tree (DT).
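The evaluation protocol can be sketched with scikit-learn as shuffled 10-fold cross-validation of the five classifiers, averaging the per-fold F1-scores. The hyperparameters shown (k = 5 neighbors, an RBF-kernel SVM, feature standardization before every classifier) and the name `ten_fold_f1` are scikit-learn-style assumptions; the study itself used MATLAB's Statistics and Machine Learning Toolbox, whose defaults differ.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import KFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Assumed classifier settings (not taken from the study).
CLASSIFIERS = {
    "KNN": KNeighborsClassifier(n_neighbors=5),
    "NB": GaussianNB(),
    "SVM": SVC(kernel="rbf"),
    "LDA": LinearDiscriminantAnalysis(),
    "DT": DecisionTreeClassifier(random_state=0),
}


def ten_fold_f1(X, y, seed=0):
    """Mean and standard deviation of per-fold F1 for each classifier."""
    cv = KFold(n_splits=10, shuffle=True, random_state=seed)  # shuffle, then 10 equal folds
    results = {}
    for name, clf in CLASSIFIERS.items():
        model = make_pipeline(StandardScaler(), clf)  # scaler is refit inside each fold
        scores = cross_val_score(model, X, y, cv=cv, scoring="f1")
        results[name] = (scores.mean(), scores.std())
    return results
```

Wrapping the scaler in a pipeline keeps test-fold statistics out of training; a stratified splitter would additionally keep class proportions equal across folds, which the plain shuffled split described above does not guarantee.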

2.4. Evaluation Metrics

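In order to evaluate the efficacy of the classification model, the following five metrics were used:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Sensitivity (Recall)} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP is the number of true positives, TN is the number of true negatives, FP is the number of false positives and FN is the number of false negatives.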

Because our dataset is small and highly imbalanced, accuracy, precision and recall alone do not adequately represent the classification performance of the models. Accuracy is the overall percentage of correctly classified samples, precision is the percentage of samples identified as positive in which the condition actually exists, and recall is the proportion of samples with the condition that are correctly identified; none of them, taken alone, accounts for class imbalance. The F1-score, the harmonic mean of precision and recall, was therefore used to rank the classifiers in terms of diagnostic performance in this study.
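The five metrics can be computed directly from the confusion-matrix counts using the standard definitions of accuracy, sensitivity (recall), specificity, precision and F1-score. In this sketch, returning 0 when a denominator is zero is an assumed convention, and the function names are illustrative.

```python
def _ratio(num, den):
    """Safe division: 0.0 when the denominator is zero (assumed convention)."""
    return num / den if den else 0.0


def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall."""
    return _ratio(2 * precision * recall, precision + recall)


def binary_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity, specificity, precision and F1 from confusion counts."""
    sensitivity = _ratio(tp, tp + fn)  # recall / true positive rate
    precision = _ratio(tp, tp + fp)
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": _ratio(tn, tn + fp),
        "precision": precision,
        "f1": f1_from_pr(precision, sensitivity),
    }
```

As a consistency check, the best normal vs. cancer result reported in Section 5.1 (precision 83.07%, sensitivity 96.75%) gives `f1_from_pr(0.8307, 0.9675)` of about 0.8939, matching the reported F1-score of 89.39%.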

3. Software and Tools

MATLAB version R2021b (developed by MathWorks, Massachusetts, USA) was used for feature extraction and machine learning-based classification. The Deep Learning Toolbox was used to train the five pre-trained deep models, namely AlexNet, GoogleNet, InceptionV3, ResNet-50 and XceptionNet, and the Statistics and Machine Learning Toolbox was used to train the five machine learning classifiers, namely k-nearest neighbor, naive Bayes, support vector machine, linear discriminant analysis and decision tree.

ImageJ (developed by the National Institutes of Health, Maryland, USA) and RadiAnt DICOM Viewer (Medixant) were used to analyze the CT images. BioRender was used to generate all the illustrations in the paper (Figures 1–4).

Python 3 (open source programming language) was used to program the feature selection workflow and generate the plots containing the F1-scores per model per classifier (Figures 5–7).

4. Dataset

A urothelial carcinoma dataset was provided by Mayo Clinic, Arizona. The dataset contained de-identified grayscale CT scans obtained from patients who were imaged before radical cystectomy and pelvic lymph node dissection as part of a trial. The location of each bladder mass was confirmed, and labels of the preoperative CT data were generated. The labels were “cancer” (meaning malignant cells) and “normal” (normal bladder wall).

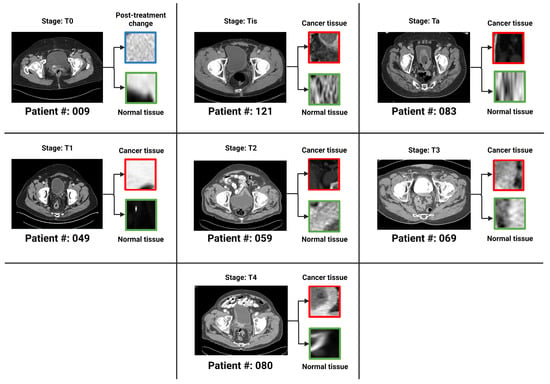

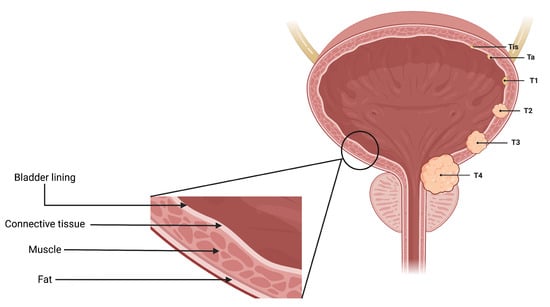

There were a total of 100 CT scans of the pelvis acquired with intravenous contrast and visualizing the bladder, obtained from 100 patients (one image per patient). On each scan, two radiologists familiar with bladder imaging manually annotated two masks, one encompassing the entire region of biopsy-proven malignancy and one encompassing normal-appearing bladder wall. The masks were used to extract the respective regions of interest (ROIs), resulting in 200 ROIs (100 normal tissue and 100 abnormal tissue), which were used as input for classification instead of the entire image. The patients were distributed across seven bladder cancer stages, namely T0, Ta, Tis, T1, T2, T3 and T4. Figure 3 shows an axial CT scan with IV contrast and its corresponding ROI on the bladder wall for each stage except T0. T0 represents a stage where the tissue of interest shows no evidence of malignancy, possibly but not necessarily following tumor resection and/or chemotherapy. Tis indicates carcinoma in situ, in which the malignant cells involve only the innermost lining of the bladder wall. T1 represents a stage in which malignant cells involve the connective tissue beyond the innermost lining without involvement of the bladder muscle. T2 represents malignant spread of the tumor into the bladder muscle. T3 represents a stage in which the malignant mass spreads outside the confines of the bladder muscle with involvement of the perivesical fat. T4 indicates spread of the tumor beyond the bladder with involvement of the abdominal/pelvic wall and/or nearby organs. Ta, Tis and T1 stages are classified as non-muscle-invasive bladder cancer (NMIBC); T2, T3 and T4 stages are classified as muscle-invasive bladder cancer (MIBC) [4,27]. Figure 4 is a pictorial depiction of the different stages of bladder cancer. As is evident from Figure 3, the stages are difficult to distinguish on visual inspection, which makes them a suitable target for AI-based classification. Table 2 summarizes the number of patients per stage.
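Cropping an ROI from an annotated mask can be sketched as follows. The sketch assumes binary masks on the same pixel grid as the image, crops to the mask's bounding box (optionally padded) and zeros pixels outside the mask; whether the study masked the surrounding pixels or only cropped is not stated, so `extract_roi` is a hypothetical helper.

```python
import numpy as np


def extract_roi(image, mask, pad=0):
    """Crop `image` to the bounding box of a binary `mask` (plus `pad` pixels);
    pixels outside the mask are set to 0 (an assumed convention)."""
    image = np.asarray(image, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    if image.shape != mask.shape or not mask.any():
        raise ValueError("mask must be non-empty and match the image shape")
    rows = np.flatnonzero(mask.any(axis=1))   # rows containing mask pixels
    cols = np.flatnonzero(mask.any(axis=0))   # columns containing mask pixels
    r0, r1 = max(rows[0] - pad, 0), min(rows[-1] + pad + 1, image.shape[0])
    c0, c1 = max(cols[0] - pad, 0), min(cols[-1] + pad + 1, image.shape[1])
    return np.where(mask, image, 0.0)[r0:r1, c0:c1]
```

Calling it once with the tumor mask and once with the normal-wall mask yields the pair of ROIs contributed by each scan.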

Figure 3.

One bladder CT scan per stage is shown, along with the corresponding regions of interest used in the various classification tasks. The 7 stages of urothelial carcinoma analyzed in the study are T0, Ta, Tis, T1, T2, T3 and T4 (T0 is not shown in the figure because it represents a stage where the tissue of interest shows no evidence of malignancy).

Figure 4.

A pictorial representation of the 7 stages of urothelial carcinoma analyzed in the study (T0 is not shown in the figure because it represents a stage where the tissue of interest shows no evidence of malignancy).

Table 2.

A summary of the number of patients per stage.

5. Results

The proposed model was used to perform three different classification tasks during the course of this study: (1) normal vs. bladder cancer; (2) NMIBC vs. MIBC; (3) post-treatment changes (PTC) vs. MIBC. In this section, for each of the three tasks, the best classification performances corresponding to each of the five pre-trained deep model-based features have been presented. The class-wise number of ROIs used for each of the individual tasks has also been summarized.

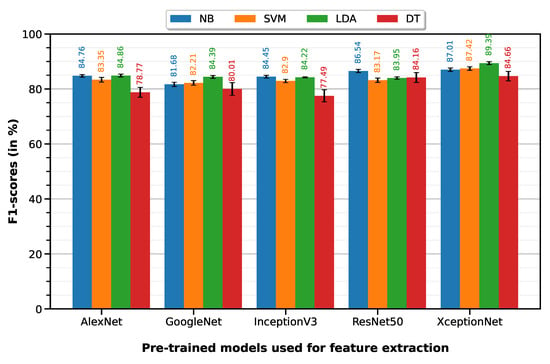

5.1. Normal vs. Cancer

Normal vs. cancer classification was performed with 10-fold cross-validation on a dataset of 165 ROIs (100 normal, 65 cancer); the 35 T0 ROIs were excluded because they represent post-treatment changes (PTC) rather than cancer. The LDA classifier on XceptionNet-based features provided the best performance, with an accuracy of 86.07%, sensitivity of 96.75%, specificity of 69.65%, precision of 83.07% and F1-score of 89.39%. Table 3 summarizes the 10-fold cross-validation performance of the machine learning classifiers on features extracted from each of the five pre-trained deep models (visualized in the associated Figure 5 bar plot).

Table 3.

Classification performance of the machine learning classifiers (10-fold cross-validation) for normal vs. cancer classification, on features extracted from each of the five pre-trained deep models.

Figure 5.

F1-scores of the machine learning classifiers (10-fold cross-validation) for normal vs. cancer classification, on features extracted from each of the five pre-trained deep models.

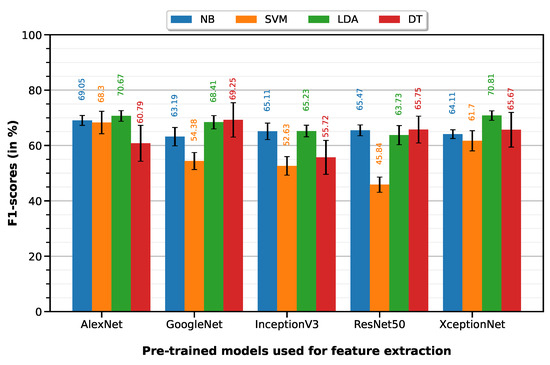

5.2. NMIBC vs. MIBC

NMIBC vs. MIBC classification was performed with 10-fold cross-validation on a dataset of 65 ROIs (24 NMIBC, 41 MIBC). The LDA classifier on XceptionNet-based features provided the best performance, with an accuracy of 79.72%, sensitivity of 66.62%, specificity of 87.39%, precision of 75.58% and F1-score of 70.81%. Table 4 summarizes the 10-fold cross-validation performance of the machine learning classifiers on features extracted from each of the five pre-trained deep models (visualized in the associated Figure 6 bar plot).

Table 4.

Classification performance of the machine learning classifiers (10-fold cross-validation) for NMIBC vs. MIBC classification, on features extracted from each of the five pre-trained deep models.

Figure 6.

F1-scores of the machine learning classifiers (10-fold cross-validation) for NMIBC vs. MIBC classification, on features extracted from each of the five pre-trained deep models.

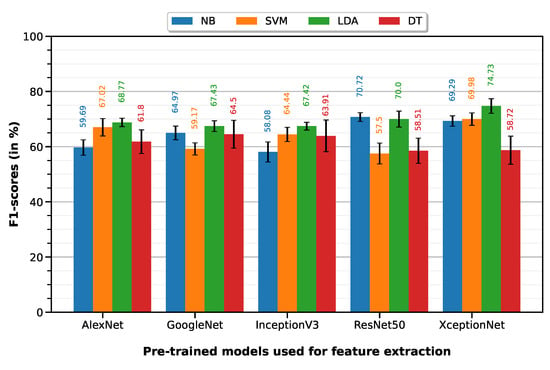

5.3. Post-Treatment Changes (PTC) vs. MIBC

PTC vs. MIBC classification was performed with 10-fold cross-validation on a dataset of 76 ROIs (35 PTC, 41 MIBC). The LDA classifier on XceptionNet-based features provided the best performance, with an accuracy of 74.96%, sensitivity of 80.51%, specificity of 70.22%, precision of 69.78% and F1-score of 74.73%. Table 5 summarizes the 10-fold cross-validation performance of the machine learning classifiers on features extracted from each of the five pre-trained deep models (visualized in the associated Figure 7 bar plot).

Table 5.

Classification performance of the machine learning classifiers (10-fold cross-validation) for PTC vs. MIBC classification, on features extracted from each of the five pre-trained deep models.

Figure 7.

F1-scores of the machine learning classifiers (10-fold cross-validation) for PTC vs. MIBC classification, on features extracted from each of the five pre-trained deep models.

6. Discussion

Optimal management of bladder cancer requires a multidisciplinary approach, with tumor stage being an important prognostic factor that determines the mode of initial treatment. Cystoscopy, together with biopsy, remains the primary mode of tumor detection and clinical staging in patients with suspected bladder cancer. CT is often the first imaging modality used for bladder cancer detection because of its wide availability and minimal associated complications. However, CT findings are nonspecific and of limited accuracy: at least one article [28] reported an accuracy of CT evaluation for bladder cancer as low as 49%.

Differentiating non-muscle-invasive bladder cancer (NMIBC), consisting of Ta, Tis and T1 stages, from muscle-invasive bladder cancer (MIBC), consisting of T2–T4 stages, is important because MIBC is more likely to spread to lymph nodes and other organs, requiring radical cystectomy with or without systemic chemotherapy [29]. In contrast, NMIBC carries a high risk of recurrence but can be effectively treated with intravesical chemotherapy, immunotherapy and transurethral resection of bladder tumor (TURBT) [30]. Early differentiation between these two groups is critical for the appropriate use of medical resources and the optimization of targeted treatment. Therefore, accurate initial staging on CT is crucial for therapeutic decision-making [31].

While image-based cancer detection using pre-trained neural network models is mainstream, in this project we faced the additional issues of imbalanced data and limited sample size. In comparison to [32], where the number of image samples was 1350 and the number of classification categories was 2 (1200 bladder cancer tissues, 1150 normal tissues), we had a total of only 100 tissue scans distributed across 7 stages of urothelial carcinoma (stage counts of 6, 9, 35, 9, 13, 24 and 4; see Table 2). Class imbalance could not be addressed with the synthetic minority oversampling technique (SMOTE) because the lack of samples in some of the categories meant that the synthetically generated samples were very similar to the few original ones, which decreased the variance of the training dataset and resulted in overfitting. The overfitting we encountered when performing end-to-end classification with pre-trained networks was addressed by: (1) a combined deep learning-machine learning approach in which features extracted by the pre-trained neural networks were classified with statistical machine learning methods; and (2) an ensemble statistical and supervised learning-based feature selection approach that removed unimportant features. Ref. [32], which focused on bladder cancer detection from cystoscopic images alone, reported an accuracy of 86.36% with deep learning-based classifiers and 84.09% with human surgical experts; however, no significant difference was found between the two (p > 0.05).
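Why oversampling adds little diversity here can be seen from how SMOTE constructs samples: each synthetic point lies on the segment between a minority sample and one of its nearest minority neighbors. The NumPy sketch below implements that interpolation under stated assumptions (k = 3 neighbors, Euclidean distance, and the hypothetical name `smote_like`); it is not the imbalanced-learn implementation.

```python
import numpy as np


def smote_like(minority, n_new, k=3, seed=0):
    """SMOTE-style oversampling: interpolate between minority samples and
    one of their k nearest minority-class neighbors."""
    X = np.asarray(minority, dtype=float)
    n = len(X)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)
    rng = np.random.default_rng(seed)
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)                 # a sample is not its own neighbor
    neighbors = np.argsort(dist, axis=1)[:, :k]    # k nearest minority neighbors
    base = rng.integers(0, n, size=n_new)          # randomly chosen seed samples
    partner = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                   # position along the segment, in [0, 1)
    return X[base] + gap * (X[partner] - X[base])
```

With only a few real samples per minority stage, every synthetic point falls inside the region those samples already span, so the augmented set adds little new variation.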

The LDA classifier on XceptionNet features achieved the best F1-score in all three experiments in our study, namely normal vs. cancer, NMIBC vs. MIBC and PTC vs. MIBC. For normal vs. cancer classification, LDA on XceptionNet had an F1-score of (89.39 ± 0.26)%, which may help clinicians detect lesions, because in medical images in general, and bladder scans in particular, flat and subtle lesions are often missed on visual inspection. For NMIBC vs. MIBC classification, LDA on XceptionNet had an F1-score of (70.81 ± 0.86)%. For PTC vs. MIBC classification, LDA on XceptionNet had an F1-score of (74.73 ± 1.31)%, which is especially encouraging because there is an unmet need for new non-invasive techniques that accurately predict recurrence and response to chemotherapy. Currently, patients with bladder cancer require repeat cystoscopies and bladder biopsies to assess response and recurrence. These procedures are costly and invasive, with several associated complications, including bladder perforation.

Our study has certain limitations. It has a retrospective design and is based on a small single-center dataset, which may lead to overestimation of the diagnostic performance of our model. Therefore, our next step is to validate the diagnostic model externally on prospective, multi-center datasets.

7. Conclusions

Our best model achieved F1-scores of 89.39%, 70.81% and 74.73% for normal vs. cancer, NMIBC vs. MIBC and PTC vs. MIBC classification, respectively; because the F1-score is the harmonic mean of precision and recall, a high value requires both to be high. We used the F1-score to compare our classifiers, and the LDA classifier on XceptionNet-based features achieved the highest F1-score in all three experiments.

Radiomics-assisted interpretation of CT may help radiologists diagnose bladder cancer more accurately. This could enable timely use of medical resources and consultation with oncologists and urologists, ultimately improving patients’ clinical outcomes.

Author Contributions

Conceptualization, S.S., K.M., W.I., A.C.S., P.S. and T.W.; methodology, S.S., T.W. and A.C.S.; software, S.S.; validation, S.S. and K.M.; formal analysis, S.S.; investigation, S.S.; resources, T.W., A.C.S. and P.S.; data curation, K.M., R.W.T., I.B.R., A.C.S., A.H.B., C.M., T.H.H., G.S., H.M.A.-M. and P.S.; writing—original draft preparation, S.S. and W.I.; writing—review and editing, S.S., K.M., W.I., T.W., A.C.S. and P.S.; visualization, S.S.; supervision, T.W., P.S. and A.C.S.; project administration, T.W., P.S. and A.C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board (or Ethics Committee) of Mayo Clinic Arizona (protocol code 20-012296 and date of approval 15 December 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Furthermore, all data used in the study have been de-identified of any personal information.

Data Availability Statement

Data will be made available upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Correction Statement

This article has been republished with a minor correction to the readability of Figure 2. This change does not affect the scientific content of the article.

References

- Kim, L.H.; Patel, M.I. Transurethral resection of bladder tumour (TURBT). Transl. Androl. Urol. 2020, 9, 3056.

- Furuse, H.; Ozono, S. Transurethral resection of the bladder tumour (TURBT) for non-muscle invasive bladder cancer: Basic skills. Int. J. Urol. 2010, 17, 698–699.

- Richterstetter, M.; Wullich, B.; Amann, K.; Haeberle, L.; Engehausen, D.G.; Goebell, P.J.; Krause, F.S. The value of extended transurethral resection of bladder tumour (TURBT) in the treatment of bladder cancer. BJU Int. 2012, 110, E76–E79.

- Sanli, O.; Dobruch, J.; Knowles, M.A.; Burger, M.; Alemozaffar, M.; Nielsen, M.E.; Lotan, Y. Bladder cancer. Nat. Rev. Dis. Primers 2017, 3, 1–19.

- Bostrom, P.J.; Van Rhijn, B.W.; Fleshner, N.; Finelli, A.; Jewett, M.; Thoms, J.; Hanna, S.; Kuk, C.; Zlotta, A.R. Staging and staging errors in bladder cancer. Eur. Urol. Suppl. 2010, 9, 2–9.

- Colombel, M.; Soloway, M.; Akaza, H.; Böhle, A.; Palou, J.; Buckley, R.; Lamm, D.; Brausi, M.; Witjes, J.A.; Persad, R. Epidemiology, staging, grading, and risk stratification of bladder cancer. Eur. Urol. Suppl. 2008, 7, 618–626.

- Kaufman, D.S.; Shipley, W.U.; Feldman, A.S. Bladder cancer. Lancet 2009, 374, 239–249.

- Kirkali, Z.; Chan, T.; Manoharan, M.; Algaba, F.; Busch, C.; Cheng, L.; Kiemeney, L.; Kriegmair, M.; Montironi, R.; Murphy, W.M.; et al. Bladder cancer: Epidemiology, staging and grading, and diagnosis. Urology 2005, 66, 4–34.

- Sharma, S.; Ksheersagar, P.; Sharma, P. Diagnosis and treatment of bladder cancer. Am. Fam. Physician 2009, 80, 717–723.

- Gofrit, O.; Shapiro, A.; Pode, D.; Sidi, A.; Nativ, O.; Leib, Z.; Witjes, J.; Van Der Heijden, A.; Naspro, R.; Colombo, R. Combined local bladder hyperthermia and intravesical chemotherapy for the treatment of high-grade superficial bladder cancer. Urology 2004, 63, 466–471.

- Sun, M.; Trinh, Q.D. Diagnosis and staging of bladder cancer. Hematol./Oncol. Clin. 2015, 29, 205–218.

- Hammouda, K.; Khalifa, F.; Soliman, A.; Ghazal, M.; Abou El-Ghar, M.; Badawy, M.A.; Darwish, H.E.; Khelifi, A.; El-Baz, A. A multiparametric MRI-based CAD system for accurate diagnosis of bladder cancer staging. Comput. Med. Imaging Graph. 2021, 90, 101911.

- Xu, X.; Liu, Y.; Zhang, X.; Tian, Q.; Wu, Y.; Zhang, G.; Meng, J.; Yang, Z.; Lu, H. Preoperative prediction of muscular invasiveness of bladder cancer with radiomic features on conventional MRI and its high-order derivative maps. Abdom. Radiol. 2017, 42, 1896–1905.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wang, C.; Udupa, J.K.; Tong, Y.; Chen, J.; Venigalla, S.; Odhner, D.; Guzzo, T.J.; Christodouleas, J.; Torigian, D.A. Urinary bladder cancer T-staging from T2-weighted MR images using an optimal biomarker approach. In Medical Imaging 2018: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2018; Volume 10575, pp. 526–531.

- Urdal, J.; Engan, K.; Kvikstad, V.; Janssen, E.A. Prognostic prediction of histopathological images by local binary patterns and RUSBoost. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos Island, Greece, 28 August–2 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2349–2353.

- Tong, Y.; Udupa, J.K.; Wang, C.; Chen, J.; Venigalla, S.; Guzzo, T.J.; Mamtani, R.; Baumann, B.C.; Christodouleas, J.P.; Torigian, D.A. Radiomics-guided therapy for bladder cancer: Using an optimal biomarker approach to determine extent of bladder cancer invasion from T2-weighted magnetic resonance images. Adv. Radiat. Oncol. 2018, 3, 331–338.

- Lin, P.; Wen, D.Y.; Chen, L.; Li, X.; Li, S.h.; Yan, H.B.; He, R.Q.; Chen, G.; He, Y.; Yang, H. A radiogenomics signature for predicting the clinical outcome of bladder urothelial carcinoma. Eur. Radiol. 2020, 30, 547–557.

- Çinar, U.; Çetin, Y.Y.; Çetin-Atalay, R.; Çetin, E. Classification of human carcinoma cells using multispectral imagery. In Medical Imaging 2016: Digital Pathology; SPIE: Bellingham, WA, USA, 2016; Volume 9791, pp. 341–346.

- Lingley-Papadopoulos, C.A.; Loew, M.H.; Manyak, M.J.; Zara, J.M. Computer recognition of cancer in the urinary bladder using optical coherence tomography and texture analysis. J. Biomed. Opt. 2008, 13, 024003.

- Zhang, X.; Xu, X.; Tian, Q.; Li, B.; Wu, Y.; Yang, Z.; Liang, Z.; Liu, Y.; Cui, G.; Lu, H. Radiomics assessment of bladder cancer grade using texture features from diffusion-weighted imaging. J. Magn. Reson. Imaging 2017, 46, 1281–1288.

- Yang, Y.; Zou, X.; Wang, Y.; Ma, X. Application of deep learning as a noninvasive tool to differentiate muscle-invasive bladder cancer and non–muscle-invasive bladder cancer with CT. Eur. J. Radiol. 2021, 139, 109666.

- Chapman-Sung, D.H.; Hadjiiski, L.; Gandikota, D.; Chan, H.P.; Samala, R.; Caoili, E.M.; Cohan, R.H.; Weizer, A.; Alva, A.; Zhou, C. Convolutional neural network-based decision support system for bladder cancer staging in CT urography: Decision threshold estimation and validation. In Medical Imaging 2020: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2020; Volume 11314, pp. 424–429.

- Zhang, G.; Xu, L.; Zhao, L.; Mao, L.; Li, X.; Jin, Z.; Sun, H. CT-based radiomics to predict the pathological grade of bladder cancer. Eur. Radiol. 2020, 30, 6749–6756.

- Yin, P.N.; Kc, K.; Wei, S.; Yu, Q.; Li, R.; Haake, A.R.; Miyamoto, H.; Cui, F. Histopathological distinction of non-invasive and invasive bladder cancers using machine learning approaches. BMC Med. Inform. Decis. Mak. 2020, 20, 1–11.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Magers, M.J.; Lopez-Beltran, A.; Montironi, R.; Williamson, S.R.; Kaimakliotis, H.Z.; Cheng, L. Staging of bladder cancer. Histopathology 2019, 74, 112–134.

- Tritschler, S.; Mosler, C.; Straub, J.; Buchner, A.; Karl, A.; Graser, A.; Stief, C.; Tilki, D. Staging of muscle-invasive bladder cancer: Can computerized tomography help us to decide on local treatment? World J. Urol. 2012, 30, 827–831.

- Chang, S.S.; Bochner, B.H.; Chou, R.; Dreicer, R.; Kamat, A.M.; Lerner, S.P.; Lotan, Y.; Meeks, J.J.; Michalski, J.M.; Morgan, T.M.; et al. Treatment of non-metastatic muscle-invasive bladder cancer: AUA/ASCO/ASTRO/SUO guideline. J. Urol. 2017, 198, 552–559.

- Chang, S.S.; Boorjian, S.A.; Chou, R.; Clark, P.E.; Daneshmand, S.; Konety, B.R.; Pruthi, R.; Quale, D.Z.; Ritch, C.R.; Seigne, J.D.; et al. Diagnosis and treatment of non-muscle invasive bladder cancer: AUA/SUO guideline. J. Urol. 2016, 196, 1021–1029.

- Brierley, J.D.; Gospodarowicz, M.K.; Wittekind, C. TNM Classification of Malignant Tumours; John Wiley & Sons: Hoboken, NJ, USA, 2017.

- Yang, R.; Du, Y.; Weng, X.; Chen, Z.; Wang, S.; Liu, X. Automatic recognition of bladder tumours using deep learning technology and its clinical application. Int. J. Med. Robot. Comput. Assist. Surg. 2021, 17, e2194.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).