Deep Learning for Medical Image-Based Cancer Diagnosis

Abstract

Simple Summary


1. Introduction

- (i) The principles and applications of radiological and histopathological images in cancer diagnosis are introduced in detail;

- (ii) This paper introduces 9 basic deep learning architectures, 12 classical pretrained models, and 5 typical methods for overcoming overfitting. In addition, advanced deep neural network techniques are introduced, such as vision transformers, transfer learning, ensemble learning, graph neural networks, and explainable deep neural networks;

- (iii) The application of deep learning to cancer diagnosis from medical images is analyzed in depth, covering image classification, image reconstruction, image detection, image segmentation, image registration, and image fusion;

- (iv) The current challenges and future research hotspots are discussed and analyzed with respect to data, labels, and models.

2. Common Imaging Techniques

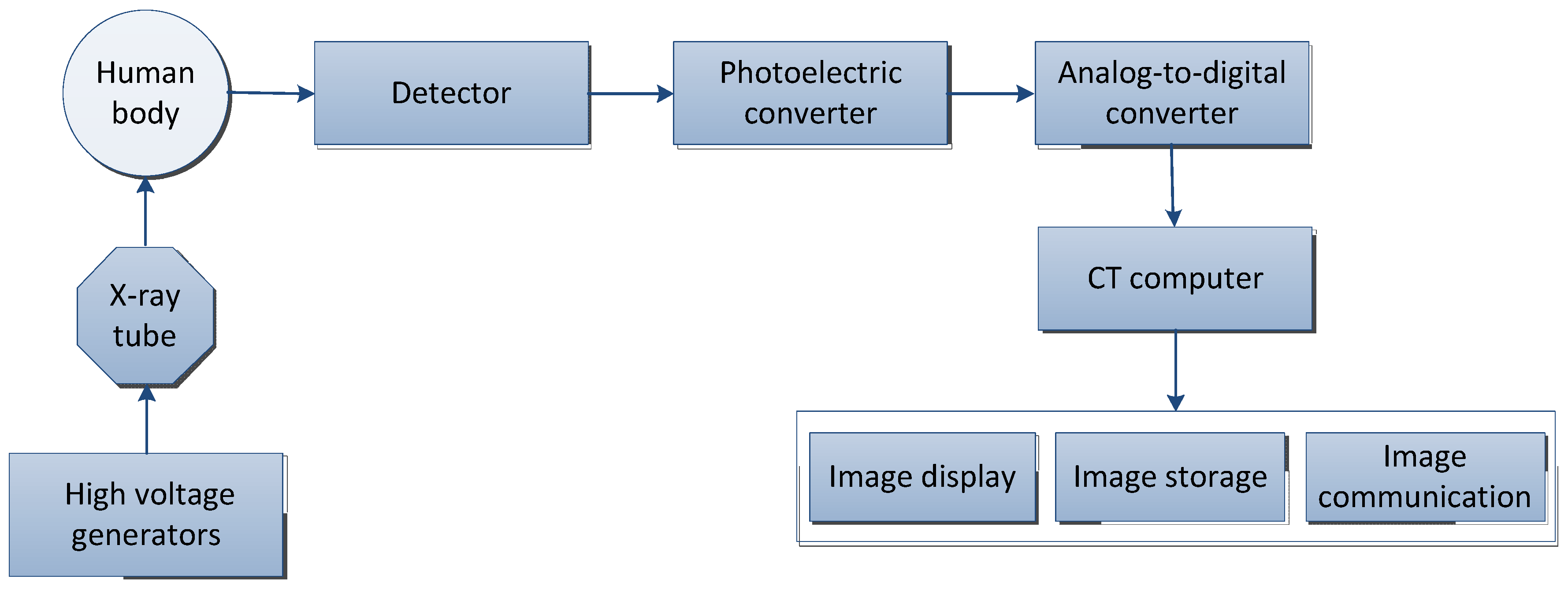

2.1. Computed Tomography

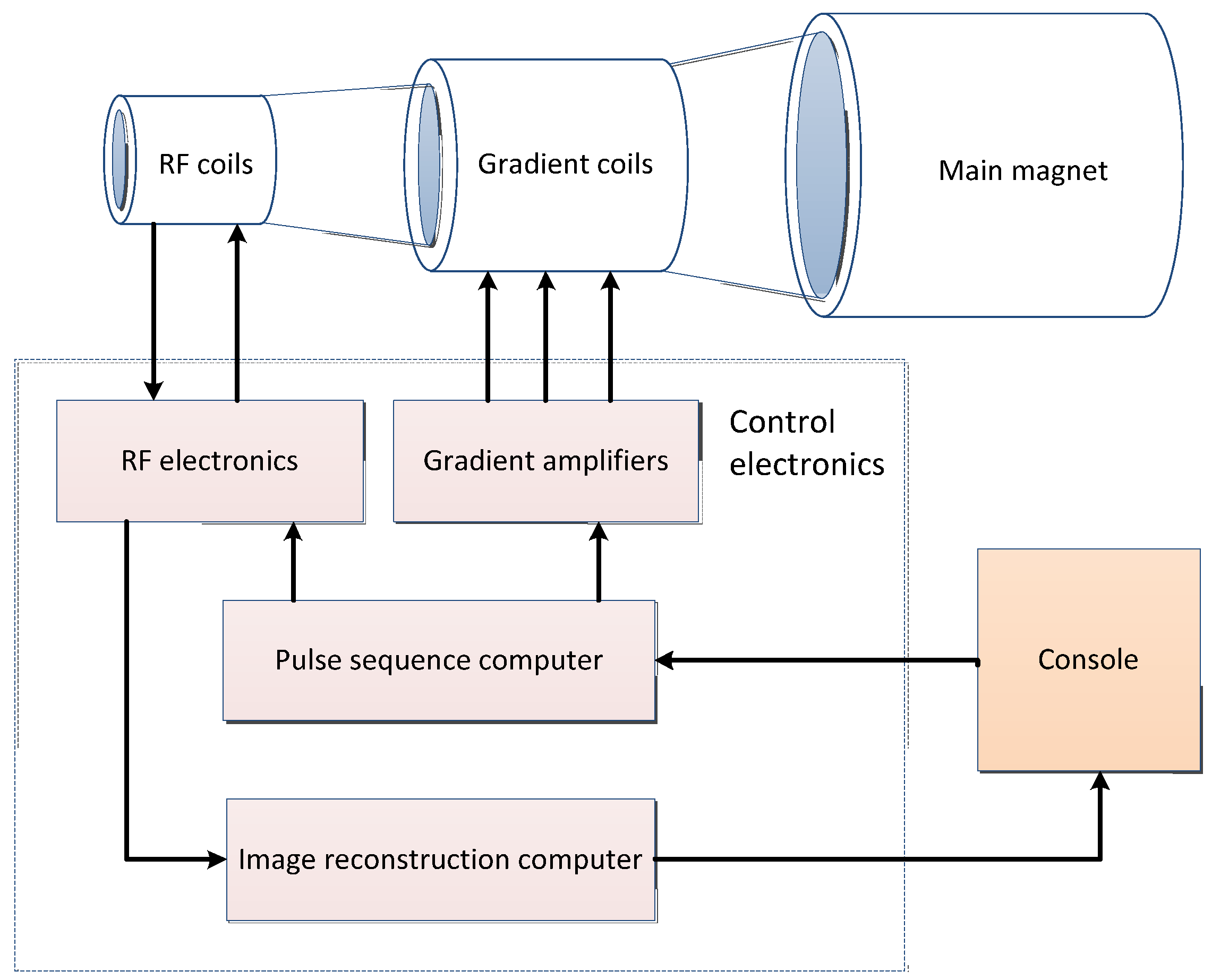

2.2. Magnetic Resonance Imaging

| MRI | Description |
|---|---|
| Concept | |
| Feature | |

2.3. Ultrasound

| Ultrasound | Description |
|---|---|
| Concept | |
| Feature | |

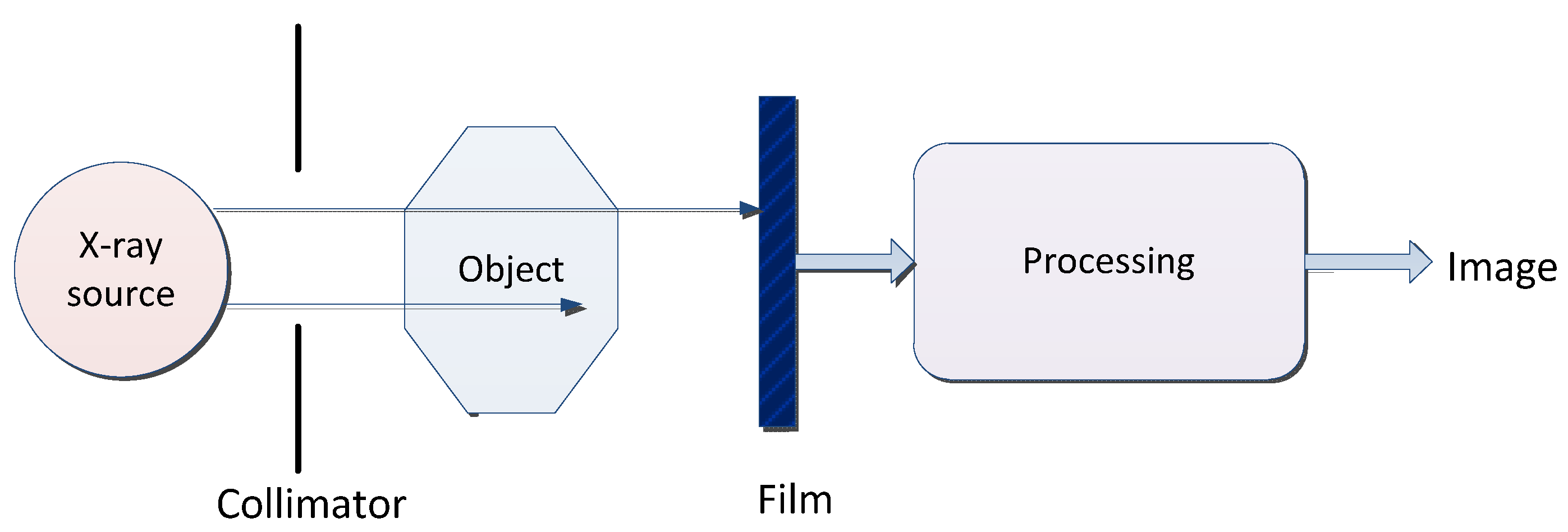

2.4. X-ray

| X-ray | Description |
|---|---|
| Concept | |
| Feature | |

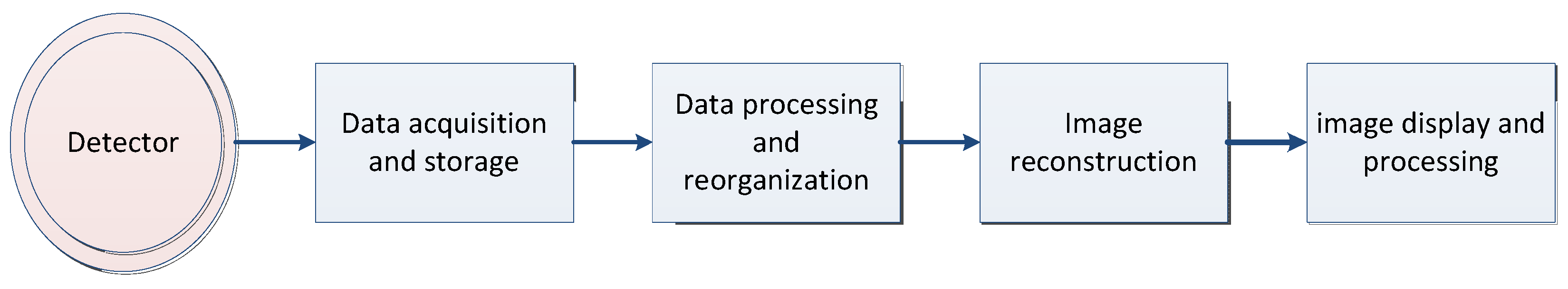

2.5. Positron Emission Tomography

| PET | Description |
|---|---|
| Concept | |
| Feature | |

2.6. Histopathology

3. Deep Learning

3.1. Basic Model

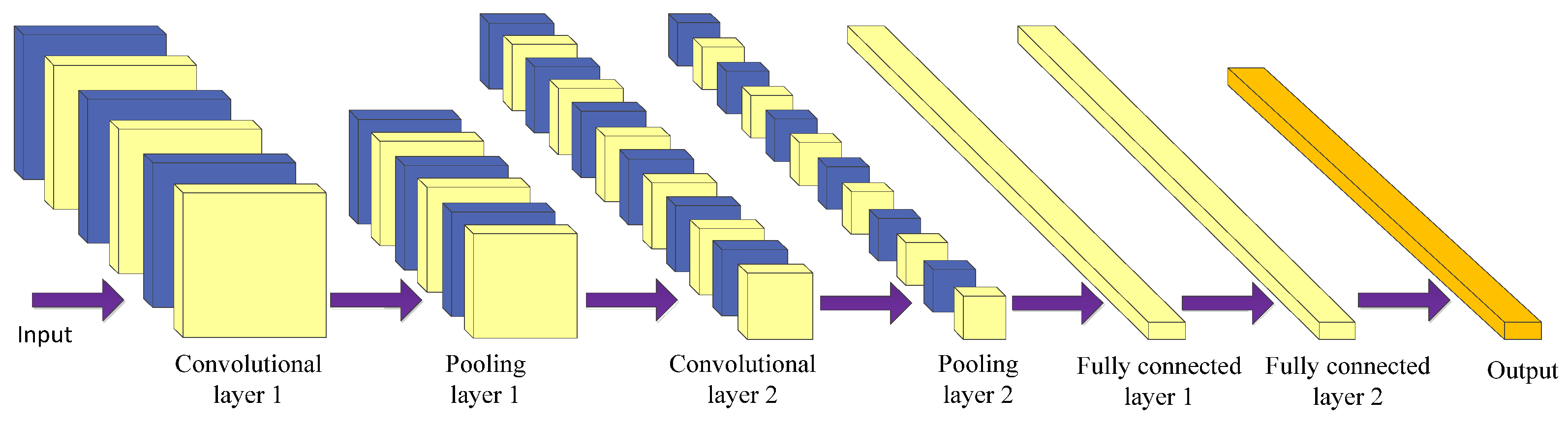

3.1.1. Convolutional Neural Network

3.1.2. Fully Convolutional Network

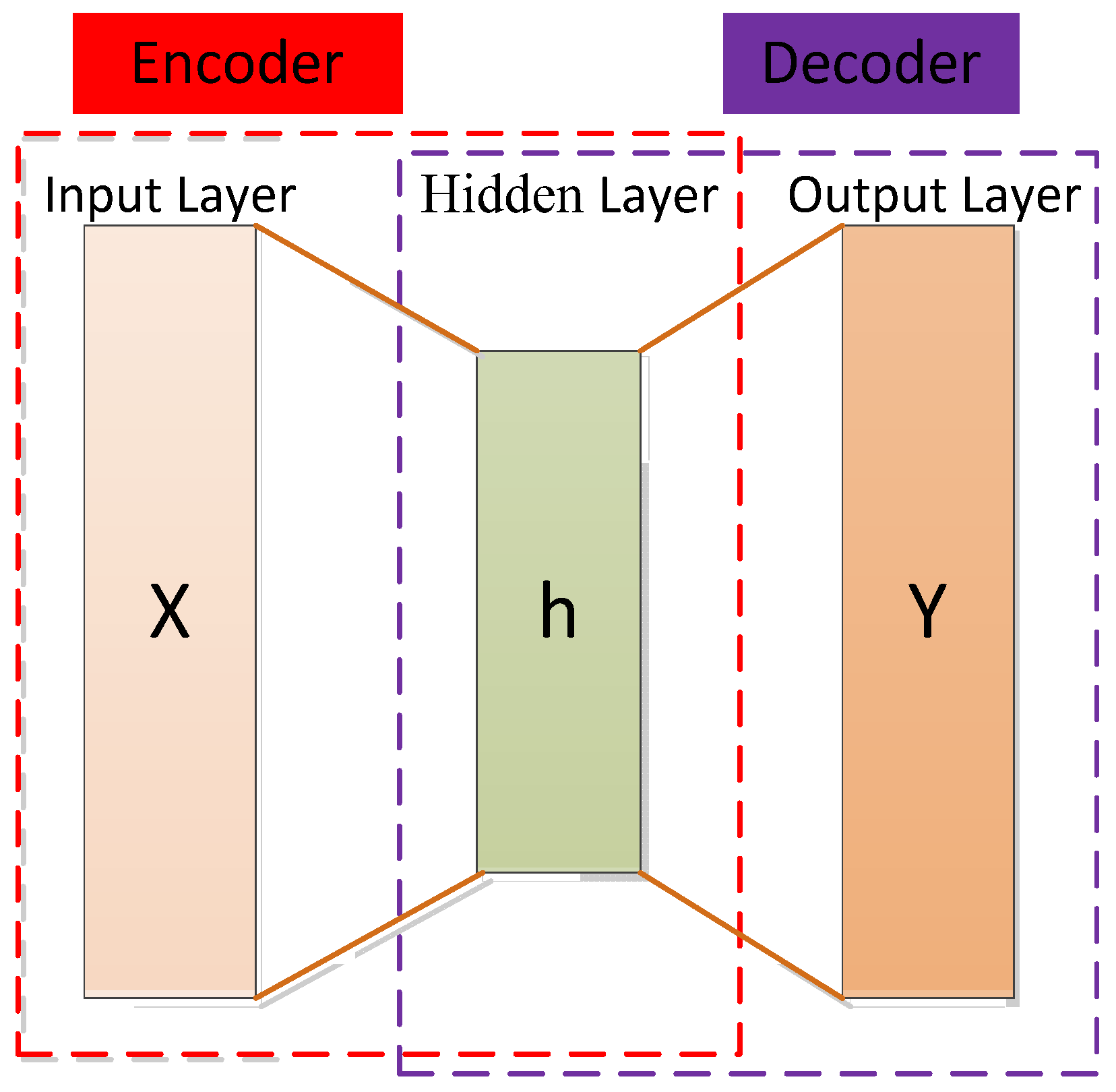

3.1.3. Autoencoder

| Author | Method | Year | Description | Feature |
|---|---|---|---|---|
| Bengio et al. [159] | SAE (stacked autoencoder) | 2007 | Uses greedy layer-wise training to learn the network parameters. | Pre-training fits the network to the structure of the training data to some extent. This places the initial parameters of the whole network in a suitable state and speeds up convergence in the subsequent supervised stage. |
| Vincent et al. [160] | DAE (denoising autoencoder) | 2008 | Adds random noise to the input data. | Learns to reconstruct clean inputs from corrupted ones. This allows high learning capacity while preventing the encoder and decoder from learning a useless identity function, which improves robustness and yields a more effective representation of the input. |
| Vincent et al. [152] | SDAE (stacked denoising autoencoder) | 2010 | Multiple DAEs are stacked to form a deep architecture. The input is corrupted (noised) only during training; once training is complete, no corruption is applied. | Strong feature extraction ability and good robustness. It is only a feature extractor and has no classification function of its own. |
| Ng [151] | Sparse autoencoder | 2011 | A regularization term that controls sparsity is added to the original loss function. | Features are learned automatically from unlabeled data and describe the data better than the raw input. |
| Rifai et al. [154] | CAE (contractive autoencoder) | 2011 | The autoencoder objective is regularized by the norm of the encoder's Jacobian matrix, so the encoder learns abstract features that are robust to small input perturbations. | It mainly mines the intrinsic characteristics of the training samples by using the gradient information of the samples themselves. |
| Masci et al. [161] | Convolutional autoencoder | 2011 | Combines the unsupervised learning scheme of the traditional autoencoder with the convolution and pooling operations of convolutional neural networks. | Through convolution, the convolutional autoencoder preserves the spatial information of two-dimensional signals well. |
| Kingma et al. [153] | VAE (variational autoencoder) | 2013 | Addresses the problem of non-regularized latent spaces in autoencoders and provides generative capability over the entire latent space. | It is probabilistic, so its output is stochastic; new instances that resemble the input data can be generated. |
| Srivastava et al. [162] | Dropout autoencoder | 2014 | Reduces the expressive power of the network and prevents overfitting by randomly dropping units during training. | Reduces overfitting, but training takes longer. |
| Srivastava et al. [163] | LAE (LSTM autoencoder) | 2015 | Learns compressed representations of sequence data. | The learned representations help improve classification accuracy, especially when there are few training examples. |
| Makhzani et al. [164] | AAE (adversarial autoencoder) | 2015 | An additional discriminator network determines whether the dimensionality-reduced hidden variables are sampled from the prior distribution. | Minimizes the reconstruction error of a traditional autoencoder while matching the aggregated posterior distribution of the latent variables to an arbitrary prior distribution. |
| Xu et al. [155] | SSAE (stacked sparse autoencoder) | 2015 | Captures high-level feature representations of pixel intensity in an unsupervised manner. | Learns only high-level features from pixel intensity to identify the distinguishing features of nuclei; efficient coding can be achieved. |
| Higgins et al. [165] | β-VAE | 2017 | A generalization of the VAE that only changes the relative weighting of the reconstruction loss and the divergence loss; the scalar β weights the divergence term. | Latent channel capacity and independence constraints can be balanced against reconstruction accuracy. Training is stable, makes few assumptions about the data, and relies on tuning a single hyperparameter. |
| Zhao et al. [166] | InfoVAE | 2017 | Modifies the ELBO objective to address cases where variational autoencoders fail to perform amortized inference or to learn meaningful latent features. | Significantly improves the quality of variational posteriors and makes effective use of latent features. |
| van den Oord et al. [167] | VQ-VAE (vector-quantized VAE) | 2017 | Combines VAEs with vector quantization to obtain discrete latent representations. | The encoder outputs discrete rather than continuous codes, and the prior is learned rather than static. |
| Dupont [168] | JointVAE | 2018 | Augments the continuous latent distribution of a variational autoencoder with a relaxed discrete distribution and controls the amount of information encoded in each latent unit. | Stable training and high sample diversity; jointly models complex continuous and discrete generative factors. |
| Kim et al. [169] | FactorVAE | 2018 | Encourages the distribution of representations to be factorial, and hence independent, across dimensions. | Achieves a better trade-off between disentanglement and reconstruction than β-VAE. |
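The autoencoder variants in the table differ mainly in the terms added to, or applied before, the reconstruction loss. The NumPy sketch below illustrates three of these terms. It is not code from the cited works, and the encoder and decoder networks are omitted.

- DAE-style input corruption.
- The KL sparsity penalty commonly used for sparse autoencoders. It assumes hidden activations lie in (0, 1), as with a sigmoid.
- The β-VAE objective with a Gaussian encoder and squared-error reconstruction. It reduces to the standard VAE loss when β = 1.

The noise level, the target sparsity ρ = 0.05, and the default β = 4 are assumed values.

```python
import numpy as np

rng = np.random.default_rng(0)


def corrupt(x, noise_std=0.1):
    """DAE-style corruption: add Gaussian noise to the input (applied during training only)."""
    return x + noise_std * rng.standard_normal(x.shape)


def sparsity_penalty(h, rho=0.05, eps=1e-8):
    """Sparse-AE penalty: sum over hidden units j of KL(rho || rho_hat_j),
    where rho_hat_j is the mean activation of unit j over the batch."""
    rho_hat = np.clip(h.mean(axis=0), eps, 1.0 - eps)
    return float(np.sum(rho * np.log(rho / rho_hat)
                        + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat))))


def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(sigma^2)) || N(0, I)) per sample, summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)


def beta_vae_loss(x, x_rec, mu, log_var, beta=4.0):
    """Squared-error reconstruction + beta * KL, averaged over the batch.
    Setting beta = 1 recovers the standard VAE objective."""
    rec = np.sum((x - x_rec) ** 2, axis=1)
    return float(np.mean(rec + beta * gaussian_kl(mu, log_var)))


# Usage with hypothetical data: a DAE encoder sees x_noisy but is trained to reconstruct x.
x = rng.random((8, 16))
x_noisy = corrupt(x)
h = np.full((8, 32), 0.05)      # hypothetical hidden activations already at the target sparsity
print(sparsity_penalty(h))      # approximately 0
```

Only the loss terms change between these variants, so the same encoder and decoder can be reused while switching between denoising, sparse, and β-weighted variational training.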

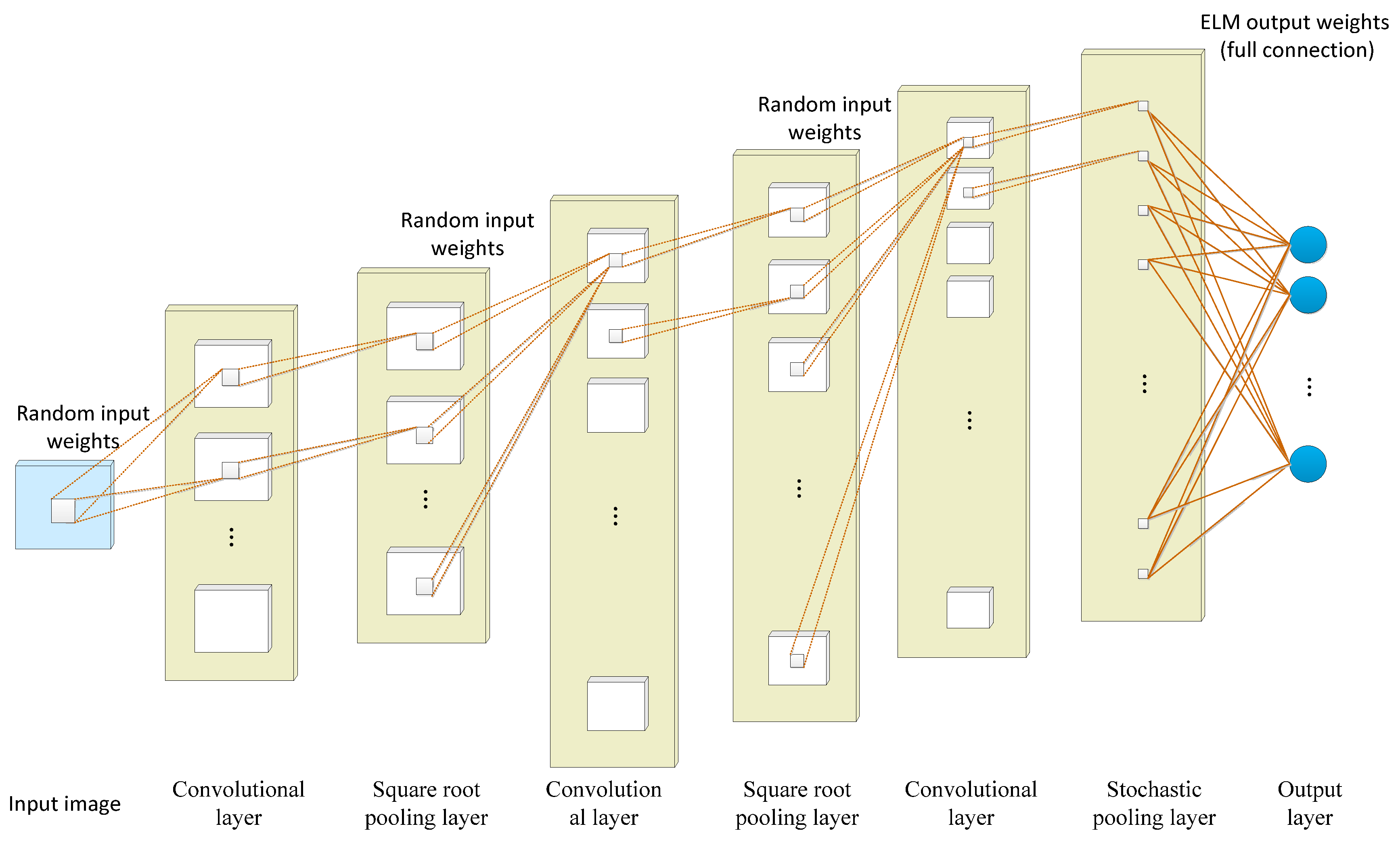

3.1.4. Deep Convolutional Extreme Learning Machine

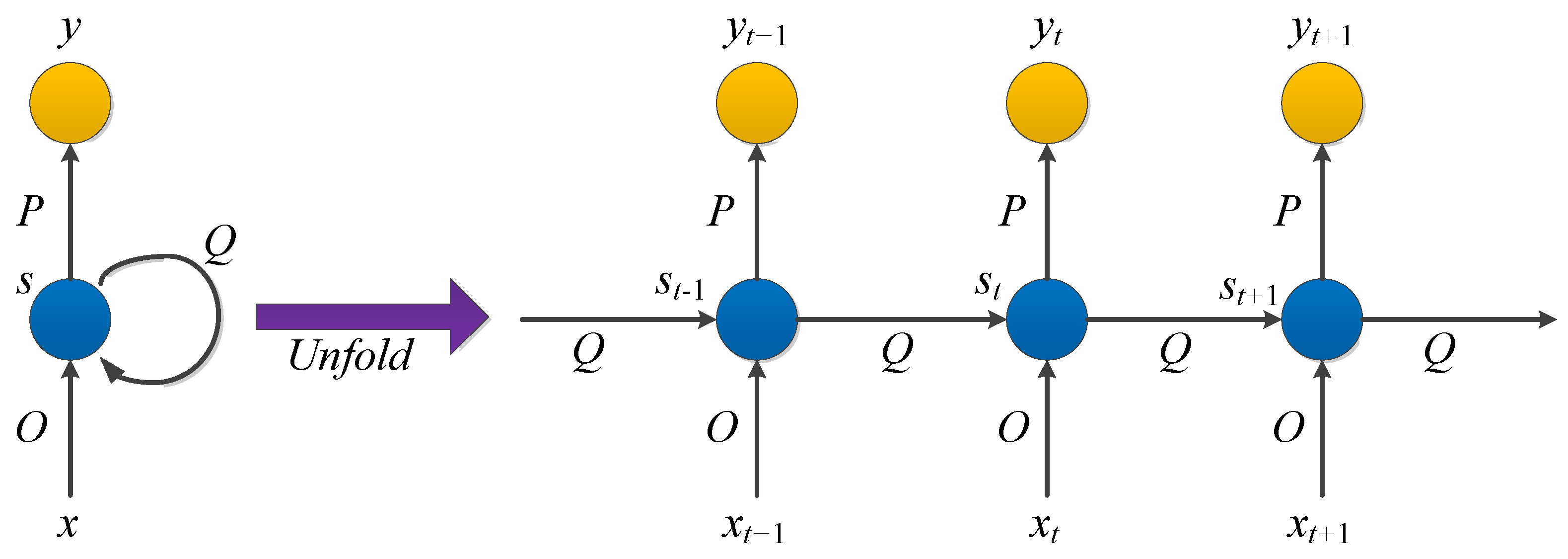

3.1.5. Recurrent Neural Network

3.1.6. Long Short-Term Memory

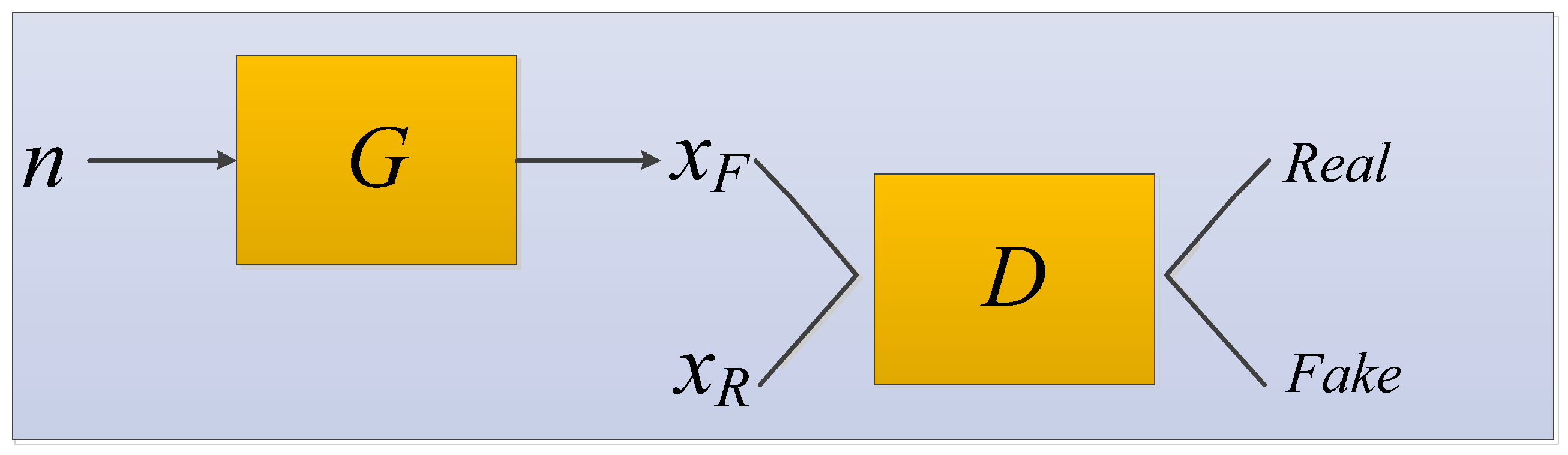

3.1.7. Generative Adversarial Network

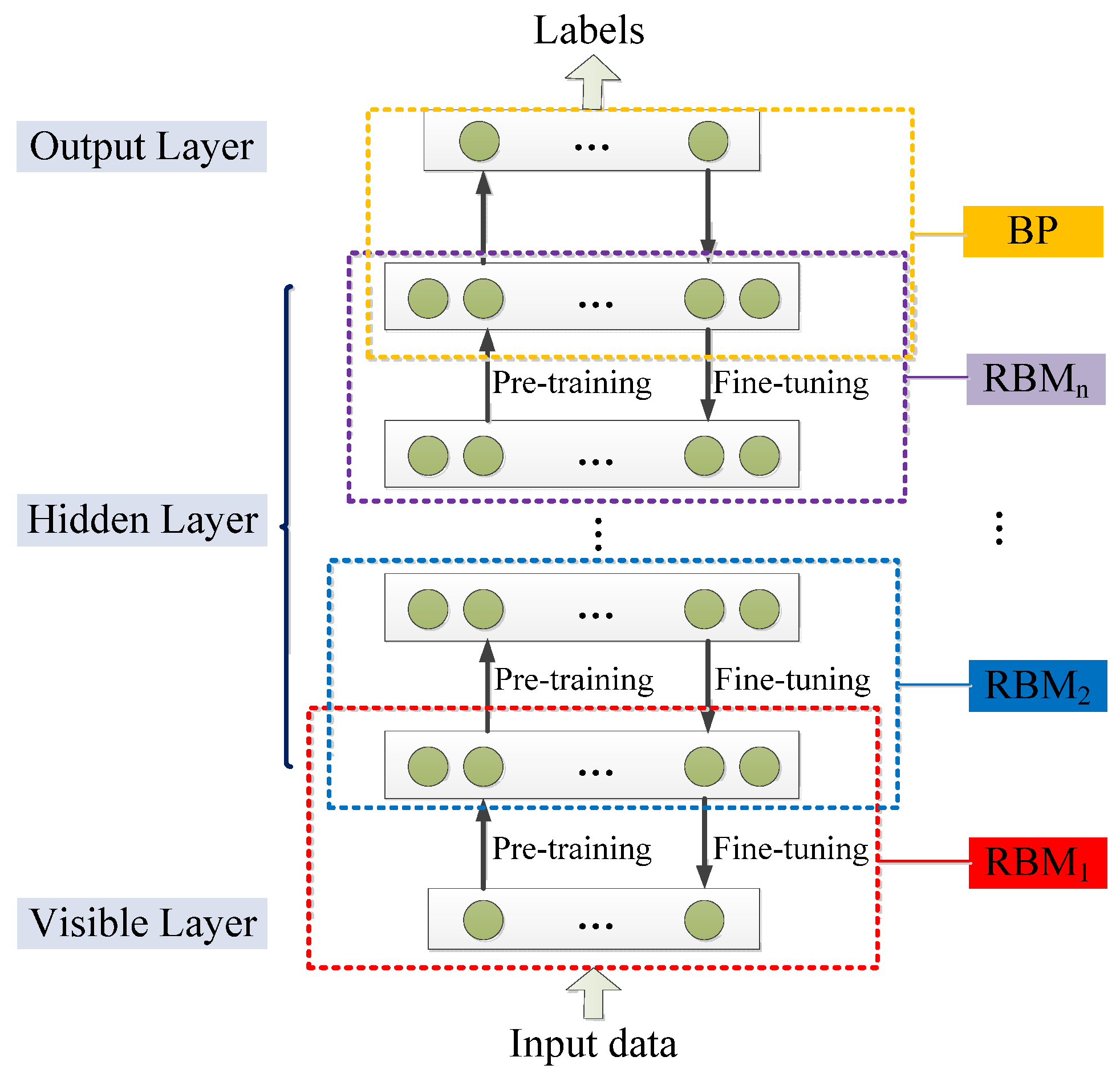

3.1.8. Deep Belief Network

3.1.9. Deep Boltzmann Machine

3.2. Classical Pretrained Model

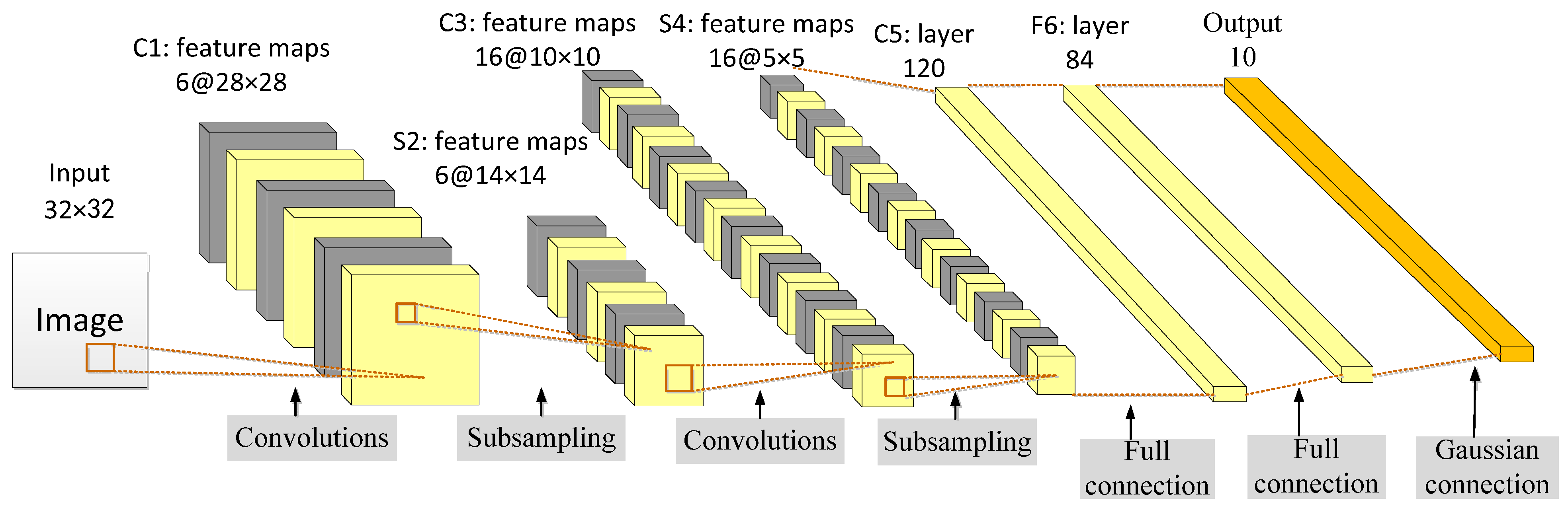

3.2.1. LeNet-5

3.2.2. AlexNet

3.2.3. ZF-Net

3.2.4. VGGNet

3.2.5. GoogLeNet

3.2.6. ResNet

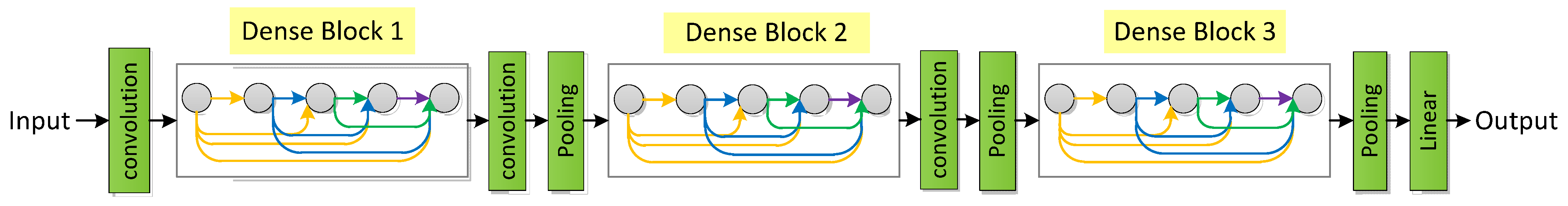

3.2.7. DenseNet

3.2.8. MobileNet

3.2.9. ShuffleNet

3.2.10. SqueezeNet

3.2.11. XceptionNet

3.2.12. U-net

3.3. Advanced Deep Neural Network

3.3.1. Transfer Learning

- (i) Instance-based transfer learning reuses part of the source-domain data by reweighting it for learning in the target domain;

- (ii) Feature-representation transfer learning aims to learn a good feature representation from the source domain, encode knowledge in the form of features, and transfer that knowledge from the source domain to the target domain to improve performance on target-domain tasks. Feature-based transfer learning assumes that the target and source domains share some overlapping features. Feature-based methods usually adopt a feature transformation strategy that maps each original feature to a new representation for knowledge transfer [279];

- (iii) Parameter-transfer learning means that the tasks of the target domain and source domain share model parameters or follow the same prior distribution. It assumes that individual models for related tasks should share a prior distribution over some parameters or hyperparameters. There are usually two ways to achieve this. The first is to initialize a new model with the parameters of the source model and then fine-tune it. The second is to freeze the source model, or some of its layers, as a feature extractor in the new model and then add an output layer for the target problem. Learning on this basis can effectively reuse prior knowledge and reduce training costs [274];

- (iv) Relational-knowledge transfer learning transfers knowledge between related domains. It assumes that the source and target domains are similar and share some logical relationships, and it attempts to transfer the logical relationships among the data from the source domain to the target domain.
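Parameter transfer with a frozen feature extractor can be sketched in a few lines of NumPy. This is the second option described under parameter-transfer learning. The sketch rests on several assumptions:

- The "pretrained" source layer is a hypothetical random matrix (`w_source`) standing in for real source-model weights.
- The target task is synthetic.
- The new output layer is a logistic-regression head trained by plain gradient descent.

Fine-tuning, the first option, would instead also update `w_source`, starting from its pretrained values.

```python
import numpy as np


def extract_features(x, w_frozen):
    """Frozen source-model layer reused as a fixed feature extractor: ReLU(x @ W)."""
    return np.maximum(x @ w_frozen, 0.0)


def log_loss(features, y, w, b):
    """Mean binary cross-entropy of a logistic output layer."""
    z = features @ w + b
    # log(1 + exp(z)) - y * z, computed stably with logaddexp
    return float(np.mean(np.logaddexp(0.0, z) - y * z))


def train_head(features, y, lr=0.1, epochs=300):
    """Fit only the new output layer on the target task; the source layer is never updated."""
    n, d = features.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        grad = p - y                              # d(loss)/dz for each sample
        w -= lr * features.T @ grad / n
        b -= lr * grad.mean()
    return w, b


rng = np.random.default_rng(0)
w_source = rng.standard_normal((5, 16))           # hypothetical stand-in for pretrained weights
w_source_before = w_source.copy()

x_target = rng.standard_normal((200, 5))          # small synthetic target-domain dataset
y_target = (x_target[:, 0] > 0).astype(float)

feats = extract_features(x_target, w_source)
w_head, b_head = train_head(feats, y_target)
print(log_loss(feats, y_target, np.zeros(16), 0.0), log_loss(feats, y_target, w_head, b_head))
```

Only the 17 head parameters (16 weights and a bias) are learned. This illustrates why freezing the source layers reduces training cost when target data are scarce.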

3.3.2. Ensemble Learning

3.3.3. Graph Neural Network

3.3.4. Explainable Deep Neural Network

3.3.5. Vision Transformer

- (i) The H × W × C image is reshaped into a sequence of shape N × (P² × C), where P is the side length of each square image patch. In other words, the image is cut into small patches and each patch is flattened. The sequence contains N = (H × W)/P² patches, each of dimension P² × C. After this transformation, N serves as the sequence length;

- (ii) Each flattened patch has dimension P² × C, but the Transformer operates on vectors of a fixed dimension D, so each patch must be embedded. That is, a trainable linear projection maps every patch to a D-dimensional vector.
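The two steps above can be sketched with NumPy as follows. The sizes (a 224 × 224 × 3 input, P = 16, D = 768) are assumed for illustration. The random projection matrix `E` is a hypothetical stand-in for the trainable embedding. The class token and position embeddings that ViT adds afterwards are not shown.

```python
import numpy as np


def patchify(img, p):
    """Split an H x W x C image into N = HW/P^2 flattened patches of length P*P*C."""
    h, w, c = img.shape
    if h % p or w % p:
        raise ValueError("H and W must be divisible by the patch size P")
    x = img.reshape(h // p, p, w // p, p, c)  # (H/P, P, W/P, P, C)
    x = x.transpose(0, 2, 1, 3, 4)            # (H/P, W/P, P, P, C): group pixels by patch
    return x.reshape(-1, p * p * c)           # (N, P^2 * C), patches in row-major order


def embed_patches(patches, e, bias):
    """Linear patch embedding: map each P^2*C vector to a D-dimensional token."""
    return patches @ e + bias


rng = np.random.default_rng(0)
H, W, C, P, D = 224, 224, 3, 16, 768                # sizes assumed for illustration
image = rng.random((H, W, C))
patches = patchify(image, P)
E = rng.standard_normal((P * P * C, D)) * 0.02      # hypothetical projection (trainable in practice)
tokens = embed_patches(patches, E, np.zeros(D))
print(patches.shape, tokens.shape)                  # (196, 768) (196, 768)
```

Here N = (224 × 224)/16² = 196 and P² × C = 768, so each image becomes a sequence of 196 tokens of dimension D.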

3.4. Overfitting Prevention Technique

3.4.1. Batch Normalization

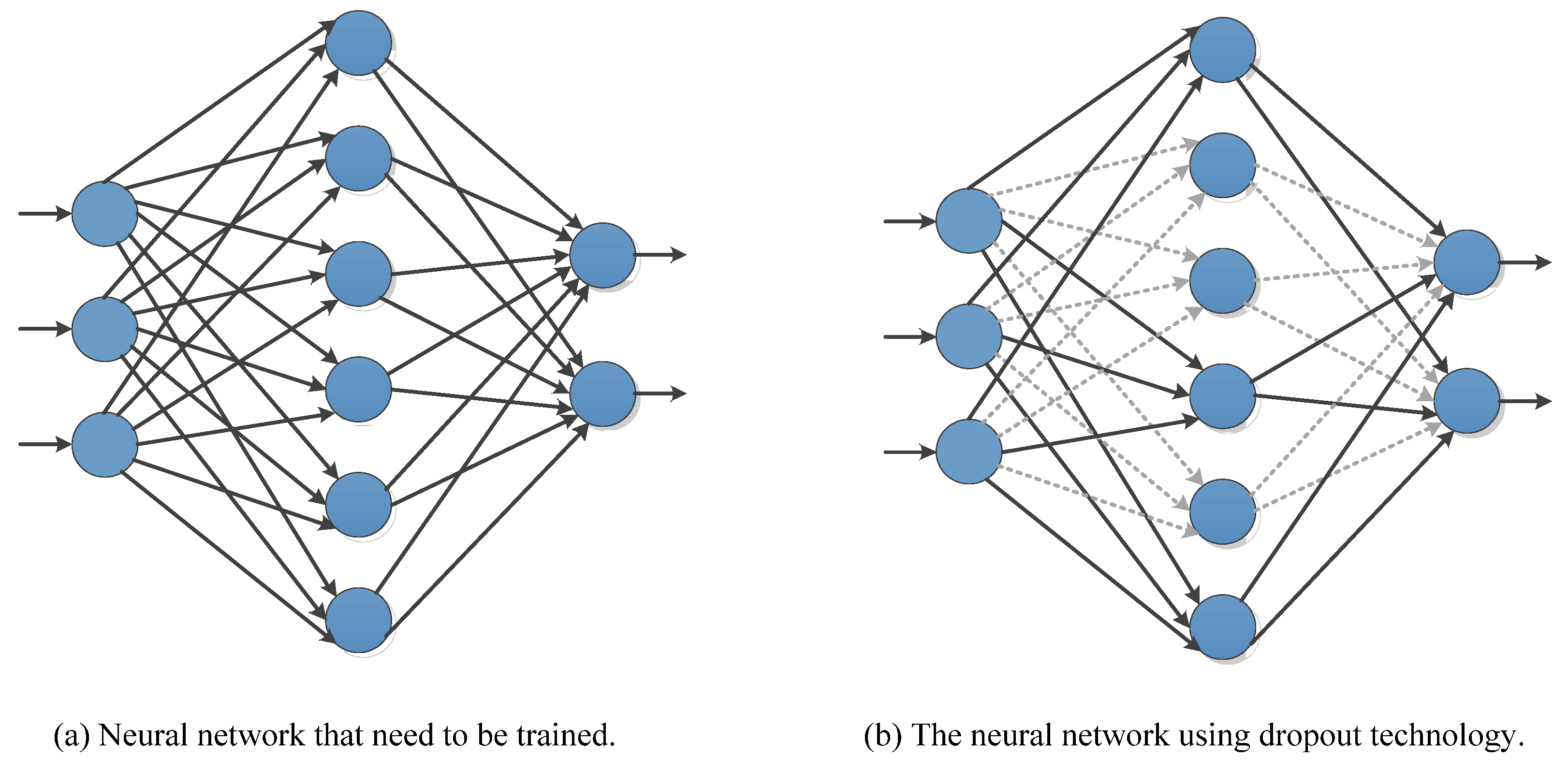

3.4.2. Dropout

3.4.3. Weight Initialization

3.4.4. Data Augmentation

4. Application of Deep Learning in Cancer Diagnoses

4.1. Image Classification

4.2. Image Detection

4.3. Image Segmentation

4.4. Image Registration

4.5. Image Reconstruction

4.6. Image Synthesis

5. Discussion

5.1. Data

5.1.1. Less Training Data

5.1.2. Class Imbalance

5.1.3. Image Fusion

5.2. Label

5.2.1. Insufficient Annotation Data

5.2.2. Noisy Labels

5.2.3. Supervised Paradigm

5.3. Model

5.3.1. Model Explainability

5.3.2. Model Robustness and Generalization

5.4. Radiomics

6. Conclusions

6.1. Limitations and Challenges

- (i) Dataset problems.

- (ii) Lack of model explainability.

- (iii) Poor generalization ability.

- (iv) Lack of high-performance models for multi-modal images.

6.2. Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chhikara, B.S.; Parang, K. Global Cancer Statistics 2022: The trends projection analysis. Chem. Biol. Lett. 2022, 10, 451.

- Sabarwal, A.; Kumar, K.; Singh, R.P. Hazardous effects of chemical pesticides on human health–Cancer and other associated disorders. Environ. Toxicol. Pharmacol. 2018, 63, 103–114.

- Hunter, B.; Hindocha, S.; Lee, R.W. The Role of Artificial Intelligence in Early Cancer Diagnosis. Cancers 2022, 14, 1524.

- Liu, Z.; Su, W.; Ao, J.; Wang, M.; Jiang, Q.; He, J.; Gao, H.; Lei, S.; Nie, J.; Yan, X.; et al. Instant diagnosis of gastroscopic biopsy via deep-learned single-shot femtosecond stimulated Raman histology. Nat. Commun. 2022, 13, 4050.

- Attallah, O. Cervical cancer diagnosis based on multi-domain features using deep learning enhanced by handcrafted descriptors. Appl. Sci. 2023, 13, 1916.

- Sargazi, S.; Laraib, U.; Er, S.; Rahdar, A.; Hassanisaadi, M.; Zafar, M.; Díez-Pascual, A.; Bilal, M. Application of Green Gold Nanoparticles in Cancer Therapy and Diagnosis. Nanomaterials 2022, 12, 1102.

- Zhu, F.; Zhang, B. Analysis of the Clinical Characteristics of Tuberculosis Patients based on Multi-Constrained Computed Tomography (CT) Image Segmentation Algorithm. Pak. J. Med. Sci. 2021, 37, 1705–1709.

- Wang, H.; Li, Y.; Liu, S.; Yue, X. Design Computer-Aided Diagnosis System Based on Chest CT Evaluation of Pulmonary Nodules. Comput. Math. Methods Med. 2022, 2022, 7729524.

- Chan, S.-C.; Yeh, C.-H.; Yen, T.-C.; Ng, S.-H.; Chang, J.T.-C.; Lin, C.-Y.; Yen-Ming, T.; Fan, K.-H.; Huang, B.-S.; Hsu, C.-L.; et al. Clinical utility of simultaneous whole-body 18F-FDG PET/MRI as a single-step imaging modality in the staging of primary nasopharyngeal carcinoma. Eur. J. Nucl. Med. Mol. Imaging 2018, 45, 1297–1308.

- Zhao, J.; Zheng, W.; Zhang, L.; Tian, H. Segmentation of ultrasound images of thyroid nodule for assisting fine needle aspiration cytology. Health Inf. Sci. Syst. 2013, 1, 5.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- Kavitha, R.; Jothi, D.K.; Saravanan, K.; Swain, M.P.; Gonzáles, J.L.A.; Bhardwaj, R.J.; Adomako, E. Ant colony optimization-enabled CNN deep learning technique for accurate detection of cervical cancer. BioMed Res. Int. 2023, 2023, 1742891.

- Castiglioni, I.; Rundo, L.; Codari, M.; Di Leo, G.; Salvatore, C.; Interlenghi, M.; Gallivanone, F.; Cozzi, A.; D’Amico, N.C.; Sardanelli, F. AI applications to medical images: From machine learning to deep learning. Phys. Medica 2021, 83, 9–24.

- Ker, J.; Wang, L.; Rao, J.; Lim, T. Deep Learning Applications in Medical Image Analysis. IEEE Access 2018, 6, 9375–9389.

- Greenspan, H.; van Ginneken, B.; Summers, R.M. Guest Editorial Deep Learning in Medical Imaging: Overview and Future Promise of an Exciting New Technique. IEEE Trans. Med. Imaging 2016, 35, 1153–1159.

- Ghanem, N.M.; Attallah, O.; Anwar, F.; Ismail, M.A. AUTO-BREAST: A fully automated pipeline for breast cancer diagnosis using AI technology. In Artificial Intelligence in Cancer Diagnosis and Prognosis, Volume 2: Breast and Bladder Cancer; IOP Publishing: Bristol, UK, 2022.

- Yildirim, K.; Bozdag, P.G.; Talo, M.; Yildirim, O.; Karabatak, M.; Acharya, U.R. Deep learning model for automated kidney stone detection using coronal CT images. Comput. Biol. Med. 2021, 135, 104569.

- Attallah, O.; Aslan, M.F.; Sabanci, K. A framework for lung and colon cancer diagnosis via lightweight deep learning models and transformation methods. Diagnostics 2022, 12, 2926.

- Ragab, D.A.; Attallah, O.; Sharkas, M.; Ren, J.; Marshall, S. A framework for breast cancer classification using multi-DCNNs. Comput. Biol. Med. 2021, 131, 104245.

- Punn, N.S.; Agarwal, S. Modality specific U-Net variants for biomedical image segmentation: A survey. Artif. Intell. Rev. 2022, 55, 5845–5889.

- Du, G.; Cao, X.; Liang, J.; Chen, X.; Zhan, Y. Medical image segmentation based on u-net: A review. J. Imaging Sci. Technol. 2020, 64, jist0710.

- Kaur, C.; Garg, U. Artificial intelligence techniques for cancer detection in medical image processing: A review. Mater. Today Proc. 2021, 81, 806–809.

- Rapidis, A.D. Orbitomaxillary mucormycosis (zygomycosis) and the surgical approach to treatment: Perspectives from a maxillofacial surgeon. Clin. Microbiol. Infect. Off. Publ. Eur. Soc. Clin. Microbiol. Infect. Dis. 2010, 15 (Suppl. 5), 98–102.

- Kim, W.; Park, M.S.; Lee, S.H.; Kim, S.H.; Jung, I.J.; Takahashi, T.; Misu, T.; Fujihara, K.; Kim, H.J. Characteristic brain magnetic resonance imaging abnormalities in central nervous system aquaporin-4 autoimmunity. Mult. Scler. 2010, 16, 1229–1236.

- Brinkley, C.K.; Kolodny, N.H.; Kohler, S.J.; Sandeman, D.C.; Beltz, B.S. Magnetic resonance imaging at 9.4 T as a tool for studying neural anatomy in non-vertebrates. J. Neurosci. Methods 2005, 146, 124–132.

- Grüneboom, A.; Kling, L.; Christiansen, S.; Mill, L.; Maier, A.; Engelke, K.; Quick, H.; Schett, G.; Gunzer, M. Next-generation imaging of the skeletal system and its blood supply. Nat. Rev. Rheumatol. 2019, 15, 533–549.

- Yang, Z.H.; Gao, J.B.; Yue, S.W.; Guo, H.; Yang, X.H. X-ray diagnosis of synchronous multiple primary carcinoma in the upper gastrointestinal tract. World J. Gastroenterol. 2011, 17, 1817–1824.

- Qin, B.; Jin, M.; Hao, D.; Lv, Y.; Liu, Q.; Zhu, Y.; Ding, S.; Zhao, J.; Fei, B. Accurate vessel extraction via tensor completion of background layer in X-ray coronary angiograms. Pattern Recognit. 2019, 87, 38–54.

- Geleijns, J.; Wondergem, J. X-ray imaging and the skin: Radiation biology, patient dosimetry and observed effects. Radiat. Prot. Dosim. 2005, 114, 121–125.

- Sebastian, T.B.; Tek, H.; Crisco, J.J.; Kimia, B.B. Segmentation of carpal bones from CT images using skeletally coupled deformable models. Med. Image Anal. 2003, 7, 21–45.

- Furukawa, A.; Sakoda, M.; Yamasaki, M.; Kono, N.; Tanaka, T.; Nitta, N.; Kanasaki, S.; Imoto, K.; Takahashi, M.; Murata, K.; et al. Gastrointestinal tract perforation: CT diagnosis of presence, site, and cause. Abdom. Imaging 2005, 30, 524–534.

- Cademartiri, F.; Nieman, K.; Aad, V.D.L.; Raaijmakers, R.H.; Mollet, N.; Pattynama, P.M.T.; De Feyter, P.J.; Krestin, G.P. Intravenous contrast material administration at 16-detector row helical CT coronary angiography: Test bolus versus bolus-tracking technique. Radiology 2004, 233, 817–823.

- Gao, Z.; Wang, X.; Sun, S.; Wu, D.; Bai, J.; Yin, Y.; Liu, X.; Zhang, H.; de Albuquerque, V.H.C. Learning physical properties in complex visual scenes: An intelligent machine for perceiving blood flow dynamics from static CT angiography imaging. Neural Netw. 2020, 123, 82–93.

- Stengel, D.; Rademacher, G.; Ekkernkamp, A.; Güthoff, C.; Mutze, S. Emergency ultrasound-based algorithms for diagnosing blunt abdominal trauma. Cochrane Database Syst. Rev. 2015, 2015, CD004446.

- Chew, C.; Halliday, J.L.; Riley, M.M.; Penny, D.J. Population-based study of antenatal detection of congenital heart disease by ultrasound examination. Ultrasound Obstet. Gynecol. 2010, 29, 619–624.

- Garne, E.; Stoll, C.; Clementi, M. Evaluation of prenatal diagnosis of congenital heart diseases by ultrasound: Experience from 20 European registries. Ultrasound Obstet. Gynecol. 2002, 17, 386–391.

- Fledelius, H.C. Ultrasound in ophthalmology. Ultrasound Med. Biol. 1997, 23, 365–375.

- Abinader, R.W.; Steven, L. Benefits and Pitfalls of Ultrasound in Obstetrics and Gynecology. Obstet. Gynecol. Clin. N. Am. 2019, 46, 367–378.

- Videbech, P. PET measurements of brain glucose metabolism and blood flow in major depressive disorder: A critical review. Acta Psychiatr. Scand. 2010, 101, 11–20.

- Taghanaki, S.A.; Duggan, N.; Ma, H.; Hou, X.; Celler, A.; Benard, F.; Hamarneh, G. Segmentation-free direct tumor volume and metabolic activity estimation from PET scans. Comput. Med. Imaging Graph. 2018, 63, 52–66.

- Schöder, H.; Gönen, M. Screening for Cancer with PET and PET/CT: Potential and Limitations. J. Nucl. Med. Off. Publ. Soc. Nucl. Med. 2007, 48 (Suppl. 1), 4S–18S.

- Seeram, E. Computed tomography: Physical principles and recent technical advances. J. Med. Imaging Radiat. Sci. 2010, 41, 87–109.

- Gładyszewski, K.; Gro, K.; Bieberle, A.; Schubert, M.; Hild, M.; Górak, A.; Skiborowski, M. Evaluation of performance improvements through application of anisotropic foam packings in rotating packed beds. Chem. Eng. Sci. 2021, 230, 116176.

- Sera, T. Computed Tomography, in Transparency in Biology; Springer: Berlin/Heidelberg, Germany, 2021; pp. 167–187.

- Brenner, D.J.; Hall, E.J. Computed tomography—An increasing source of radiation exposure. N. Engl. J. Med. 2007, 357, 2277–2284.

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961.

- Tian, P.; He, B.; Mu, W.; Liu, K.; Liu, L.; Zeng, H.; Liu, Y.; Jiang, L.; Zhou, P.; Huang, Z.; et al. Assessing PD-L1 expression in non-small cell lung cancer and predicting responses to immune checkpoint inhibitors using deep learning on computed tomography images. Theranostics 2021, 11, 2098–2107.

- Best, T.D.; Mercaldo, S.F.; Bryan, D.S.; Marquardt, J.P.; Wrobel, M.M.; Bridge, C.P.; Troschel, F.M.; Javidan, C.; Chung, J.H.; Muniappan, A. Multilevel body composition analysis on chest computed tomography predicts hospital length of stay and complications after lobectomy for lung cancer: A multicenter study. Ann. Surg. 2022, 275, e708–e715.

- Vangelov, B.; Bauer, J.; Kotevski, D.; Smee, R.I. The use of alternate vertebral levels to L3 in computed tomography scans for skeletal muscle mass evaluation and sarcopenia assessment in patients with cancer: A systematic review. Br. J. Nutr. 2022, 127, 722–735.

- Rodriguez, A. Principles of magnetic resonance imaging. Rev. Mex. De Física 2004, 50, 272–286.

- Vasireddi, A.K.; Leo, M.E.; Squires, J.H. Magnetic resonance imaging of pediatric liver tumors. Pediatr. Radiol. 2022, 52, 177–188.

- Shao, Y.-Y.; Wang, S.-Y.; Lin, S.-M.; Chen, K.-Y.; Tseng, J.-H.; Ho, M.-C.; Lee, R.-C.; Liang, P.-C.; Liao, L.-Y.; Huang, K.-W.; et al. Management consensus guideline for hepatocellular carcinoma: 2020 update on surveillance, diagnosis, and systemic treatment by the Taiwan Liver Cancer Association and the Gastroenterological Society of Taiwan. J. Formos. Med. Assoc. 2021, 120, 1051–1060.

- Yang, J.; Yu, S.; Gao, L.; Zhou, Q.; Zhan, S.; Sun, F. Current global development of screening guidelines for hepatocellular carcinoma: A systematic review. Zhonghua Liu Xing Bing Xue Za Zhi Zhonghua Liuxingbingxue Zazhi 2020, 41, 1126–1137.

- Pedrosa, I.; Alsop, D.C.; Rofsky, N.M. Magnetic resonance imaging as a biomarker in renal cell carcinoma. Cancer 2009, 115, 2334–2345.

- Wu, Y.; Kwon, Y.S.; Labib, M.; Foran, D.J.; Singer, E.A. Magnetic resonance imaging as a biomarker for renal cell carcinoma. Dis. Markers 2015, 2015, 648495.

- Schima, W.; Ba-Ssalamah, A.; Goetzinger, P.; Scharitzer, M.; Koelblinger, C. State-of-the-art magnetic resonance imaging of pancreatic cancer. Top. Magn. Reson. Imaging 2007, 18, 421–429.

- Saisho, H.; Yamaguchi, T. Diagnostic imaging for pancreatic cancer: Computed tomography, magnetic resonance imaging, and positron emission tomography. Pancreas 2004, 28, 273–278.

- Tiwari, A.; Srivastava, S.; Pant, M. Brain tumor segmentation and classification from magnetic resonance images: Review of selected methods from 2014 to 2019. Pattern Recognit. Lett. 2020, 131, 244–260.

- Tonarelli, L. Magnetic Resonance Imaging of Brain Tumor; CEwebsource.com: Diepoldsau, Switzerland, 2013.

- Hernández, M.L.; Osorio, S.; Florez, K.; Ospino, A.; Díaz, G.M. Abbreviated magnetic resonance imaging in breast cancer: A systematic review of literature. Eur. J. Radiol. Open 2021, 8, 100307.

- Park, J.W.; Jeong, W.G.; Lee, J.E.; Lee, H.-J.; Ki, S.Y.; Lee, B.C.; Kim, H.O.; Kim, S.K.; Heo, S.H.; Lim, H.S.; et al. Pictorial review of mediastinal masses with an emphasis on magnetic resonance imaging. Korean J. Radiol. 2021, 22, 139–154.

- Bak, S.H.; Kim, C.; Kim, C.H.; Ohno, Y.; Lee, H.Y. Magnetic resonance imaging for lung cancer: A state-of-the-art review. Precis. Future Med. 2022, 6, 49–77.

- Xia, L. Auxiliary Diagnosis of Lung Cancer with Magnetic Resonance Imaging Data under Deep Learning. Comput. Math. Methods Med. 2022, 2022, 1994082.

- Woo, S.; Suh, C.H.; Kim, S.Y.; Cho, J.Y.; Kim, S.H. Magnetic resonance imaging for detection of parametrial invasion in cervical cancer: An updated systematic review and meta-analysis of the literature between 2012 and 2016. Eur. Radiol. 2018, 28, 530–541.

- Wu, Q.; Wang, S.; Chen, X.; Wang, Y.; Dong, L.; Liu, Z.; Tian, J.; Wang, M. Radiomics analysis of magnetic resonance imaging improves diagnostic performance of lymph node metastasis in patients with cervical cancer. Radiother. Oncol. 2019, 138, 141–148.

- Wu, Q.; Wang, S.; Zhang, S.; Wang, M.; Ding, Y.; Fang, J.; Qian, W.; Liu, Z.; Sun, K.; Jin, Y.; et al. Development of a deep learning model to identify lymph node metastasis on magnetic resonance imaging in patients with cervical cancer. JAMA Netw. Open 2020, 3, e2011625.

- Panebianco, V.; Barchetti, F.; de Haas, R.J.; Pearson, R.A.; Kennish, S.J.; Giannarini, G.; Catto, J.W. Improving staging in bladder cancer: The increasing role of multiparametric magnetic resonance imaging. Eur. Urol. Focus 2016, 2, 113–121.

- Green, D.A.; Durand, M.; Gumpeni, N.; Rink, M.; Cha, E.K.; Karakiewicz, P.I.; Scherr, D.S.; Shariat, S.F. Role of magnetic resonance imaging in bladder cancer: Current status and emerging techniques. BJU Int. 2012, 110, 1463–1470.

- Zhao, Y.; Simpson, B.S.; Morka, N.; Freeman, A.; Kirkham, A.; Kelly, D.; Whitaker, H.C.; Emberton, M.; Norris, J.M. Comparison of multiparametric magnetic resonance imaging with prostate-specific membrane antigen positron-emission tomography imaging in primary prostate cancer diagnosis: A systematic review and meta-analysis. Cancers 2022, 14, 3497.

- Emmett, L.; Buteau, J.; Papa, N.; Moon, D.; Thompson, J.; Roberts, M.J.; Rasiah, K.; Pattison, D.A.; Yaxley, J.; Thomas, P.; et al. The additive diagnostic value of prostate-specific membrane antigen positron emission tomography computed tomography to multiparametric magnetic resonance imaging triage in the diagnosis of prostate cancer (PRIMARY): A prospective multicentre study. Eur. Urol. 2021, 80, 682–689.

- Brown, G.; Radcliffe, A.; Newcombe, R.; Dallimore, N.; Bourne, M.; Williams, G. Preoperative assessment of prognostic factors in rectal cancer using high-resolution magnetic resonance imaging. Br. J. Surg. 2003, 90, 355–364.

- Akasu, T.; Iinuma, G.; Takawa, M.; Yamamoto, S.; Muramatsu, Y.; Moriyama, N. Accuracy of high-resolution magnetic resonance imaging in preoperative staging of rectal cancer. Ann. Surg. Oncol. 2009, 16, 2787–2794.

- Wang, D.; Xu, J.; Zhang, Z.; Li, S.; Zhang, X.; Zhou, Y.; Zhang, X.; Lu, Y. Evaluation of rectal cancer circumferential resection margin using faster region-based convolutional neural network in high-resolution magnetic resonance images. Dis. Colon Rectum 2020, 63, 143–151.

- Lu, W.; Jing, H.; Ju-Mei, Z.; Shao-Lin, N.; Fang, C.; Xiao-Ping, Y.; Qiang, L.; Biao, Z.; Su-Yu, Z.; Ying, H. Intravoxel incoherent motion diffusion-weighted imaging for discriminating the pathological response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer. Sci. Rep. 2017, 7, 8496.

- Lu, B.; Yang, X.; Xiao, X.; Chen, Y.; Yan, X.; Yu, S. Intravoxel incoherent motion diffusion-weighted imaging of primary rectal carcinoma: Correlation with histopathology. Med. Sci. Monit. Int. Med. J. Exp. Clin. Res. 2018, 24, 2429–2436.

- de Lussanet, Q.G.; Backes, W.H.; Griffioen, A.W.; Padhani, A.R.; Baeten, C.I.; van Baardwijk, A.; Lambin, P.; Beets, G.L.; van Engelshoven, J.M.; Beets-Tan, R.G. Dynamic contrast-enhanced magnetic resonance imaging of radiation therapy-induced microcirculation changes in rectal cancer. Int. J. Radiat. Oncol. Biol. Phys. 2005, 63, 1309–1315.

- Ciolina, M.; Caruso, D.; De Santis, D.; Zerunian, M.; Rengo, M.; Alfieri, N.; Musio, D.; De Felice, F.; Ciardi, A.; Tombolini, V.; et al. Dynamic contrast-enhanced magnetic resonance imaging in locally advanced rectal cancer: Role of perfusion parameters in the assessment of response to treatment. La Radiol. Medica 2019, 124, 331–338.

- Wen, Z.; Chen, Y.; Yang, X.; Lu, B.; Liu, Y.; Shen, B.; Yu, S. Application of magnetic resonance diffusion kurtosis imaging for distinguishing histopathologic subtypes and grades of rectal carcinoma. Cancer Imaging 2019, 19, 8.

- Hu, F.; Tang, W.; Sun, Y.; Wan, D.; Cai, S.; Zhang, Z.; Grimm, R.; Yan, X.; Fu, C.; Tong, T.; et al. The value of diffusion kurtosis imaging in assessing pathological complete response to neoadjuvant chemoradiation therapy in rectal cancer: A comparison with conventional diffusion-weighted imaging. Oncotarget 2017, 8, 75597–75606.

- Jordan, K.W.; Nordenstam, J.; Lauwers, G.Y.; Rothenberger, D.A.; Alavi, K.; Garwood, M.; Cheng, L.L. Metabolomic characterization of human rectal adenocarcinoma with intact tissue magnetic resonance spectroscopy. Dis. Colon Rectum 2009, 52, 520–525.

- Pang, X.; Xie, P.; Yu, L.; Chen, H.; Zheng, J.; Meng, X.; Wan, X. A new magnetic resonance imaging tumour response grading scheme for locally advanced rectal cancer. Br. J. Cancer 2022, 127, 268–277.

- Clough, T.J.; Jiang, L.; Wong, K.-L.; Long, N.J. Ligand design strategies to increase stability of gadolinium-based magnetic resonance imaging contrast agents. Nat. Commun. 2019, 10, 1420.

- Bottrill, M.; Kwok, L.; Long, N.J. Lanthanides in magnetic resonance imaging. Chem. Soc. Rev. 2006, 35, 557–571.

- Fitch, A.A.; Rudisill, S.S.; Harada, G.K.; An, H.S. Magnetic Resonance Imaging Techniques for the Evaluation of the Subaxial Cervical Spine. In Atlas of Spinal Imaging; Elsevier: Amsterdam, The Netherlands, 2022; pp. 75–105.

- Lei, Y.; Shu, H.-K.; Tian, S.; Jeong, J.J.; Liu, T.; Shim, H.; Mao, H.; Wang, T.; Jani, A.B.; Curran, W.J.; et al. Magnetic resonance imaging-based pseudo computed tomography using anatomic signature and joint dictionary learning. J. Med. Imaging 2018, 5, 034001.

- Woo, S.; Suh, C.H.; Kim, S.Y.; Cho, J.Y.; Kim, S.H. Diagnostic performance of magnetic resonance imaging for the detection of bone metastasis in prostate cancer: A systematic review and meta-analysis. Eur. Urol. 2018, 73, 81–91.

- Fritz, B.; Müller, D.A.; Sutter, R.; Wurnig, M.C.; Wagner, M.W.; Pfirrmann, C.W.; Fischer, M.A. Magnetic resonance imaging–based grading of cartilaginous bone tumors: Added value of quantitative texture analysis. Investig. Radiol. 2018, 53, 663–672.

- Hetland, M.; Østergaard, M.; Stengaard-Pedersen, K.; Junker, P.; Ejbjerg, B.; Jacobsen, S.; Ellingsen, T.; Lindegaard, H.; Pødenphant, J.; Vestergaard, A. Anti-cyclic citrullinated peptide antibodies, 28-joint Disease Activity Score, and magnetic resonance imaging bone oedema at baseline predict 11 years’ functional and radiographic outcome in early rheumatoid arthritis. Scand. J. Rheumatol. 2019, 48, 1–8.

- Østergaard, M.; Boesen, M. Imaging in rheumatoid arthritis: The role of magnetic resonance imaging and computed tomography. La Radiol. Medica 2019, 124, 1128–1141.

- Kijowski, R.; Gold, G.E. Routine 3D magnetic resonance imaging of joints. J. Magn. Reson. Imaging 2011, 33, 758–771.

- Johnstone, E.; Wyatt, J.J.; Henry, A.M.; Short, S.C.; Sebag-Montefiore, D.; Murray, L.; Kelly, C.G.; McCallum, H.M.; Speight, R. Systematic review of synthetic computed tomography generation methodologies for use in magnetic resonance imaging–only radiation therapy. Int. J. Radiat. Oncol. Biol. Phys. 2018, 100, 199–217.

- Klenk, C.; Gawande, R.; Uslu, L.; Khurana, A.; Qiu, D.; Quon, A.; Donig, J.; Rosenberg, J.; Luna-Fineman, S.; Moseley, M.; et al. Ionising radiation-free whole-body MRI versus 18F-fluorodeoxyglucose PET/CT scans for children and young adults with cancer: A prospective, non-randomised, single-centre study. Lancet Oncol. 2014, 15, 275–285.

- Ghadimi, M.; Sapra, A. Magnetic resonance imaging contraindications. In StatPearls [Internet]; StatPearls Publishing: Treasure Island, FL, USA, 2021.

- Mohan, J.; Krishnaveni, V.; Guo, Y. A survey on the magnetic resonance image denoising methods. Biomed. Signal Process. Control 2014, 9, 56–69.

- Wells, P.N. Ultrasonic imaging of the human body. Rep. Prog. Phys. 1999, 62, 671. [Google Scholar] [CrossRef]

- Rajamanickam, K. Role of Ultrasonography in Cancer Theranostic Applications. Arch. Intern. Med. Res. 2020, 3, 32–43. [Google Scholar] [CrossRef]

- Nayak, G.; Bolla, V.; Balivada, S.K.; Prabhudev, P. Technological Evolution of Ultrasound Devices: A Review. Int. J. Health Technol. Innov. 2022, 1, 24–32. [Google Scholar]

- Bogani, G.; Chiappa, V.; Lopez, S.; Salvatore, C.; Interlenghi, M.; D’Oria, O.; Giannini, A.; Maggiore, U.L.R.; Chiarello, G.; Palladino, S.; et al. Radiomics and Molecular Classification in Endometrial Cancer (The ROME Study): A Step Forward to a Simplified Precision Medicine. Healthcare 2022, 10, 2464. [Google Scholar] [CrossRef] [PubMed]

- Hoskins, P.R.; Anderson, T.; Sharp, M.; Meagher, S.; McGillivray, T.; McDicken, W.N. Ultrasound B-mode 360° tomography in mice. In Proceedings of the IEEE Ultrasonics Symposium, Montreal, QC, Canada, 23–27 August 2004.

- Fite, B.Z.; Wang, J.; Ghanouni, P.; Ferrara, K.W. A review of imaging methods to assess ultrasound-mediated ablation. BME Front. 2022, 2022, 9758652.

- Jain, A.; Tiwari, A.; Verma, A.; Jain, S.K. Ultrasound-based triggered drug delivery to tumors. Drug Deliv. Transl. Res. 2018, 8, 150–164.

- Li, J.; Ma, Y.; Zhang, T.; Shung, K.K.; Zhu, B. Recent advancements in ultrasound transducer: From material strategies to biomedical applications. BME Front. 2022, 2022, 9764501.

- Shalaby, T.; Gawish, A.; Hamad, H. A Promising Platform of Magnetic Nanofluid and Ultrasonic Treatment for Cancer Hyperthermia Therapy: In Vitro and in Vivo Study. Ultrasound Med. Biol. 2021, 47, 651–665.

- Leighton, T.G. What is ultrasound? Prog. Biophys. Mol. Biol. 2007, 93, 3–83.

- Carovac, A.; Smajlovic, F.; Junuzovic, D. Application of ultrasound in medicine. Acta Inform. Medica 2011, 19, 168–171.

- Bandyopadhyay, O.; Biswas, A.; Bhattacharya, B.B. Bone-cancer assessment and destruction pattern analysis in long-bone X-ray image. J. Digit. Imaging 2019, 32, 300–313.

- Gaál, G.; Maga, B.; Lukács, A. Attention u-net based adversarial architectures for chest x-ray lung segmentation. arXiv 2020, arXiv:2003.10304.

- Bradley, S.H.; Abraham, S.; Callister, M.E.; Grice, A.; Hamilton, W.T.; Lopez, R.R.; Shinkins, B.; Neal, R.D. Sensitivity of chest X-ray for detecting lung cancer in people presenting with symptoms: A systematic review. Br. J. Gen. Pract. 2019, 69, e827–e835.

- Foley, R.W.; Nassour, V.; Oliver, H.C.; Hall, T.; Masani, V.; Robinson, G.; Rodrigues, J.C.; Hudson, B.J. Chest X-ray in suspected lung cancer is harmful. Eur. Radiol. 2021, 31, 6269–6274.

- Gang, P.; Zhen, W.; Zeng, W.; Gordienko, Y.; Kochura, Y.; Alienin, O.; Rokovyi, O.; Stirenko, S. Dimensionality reduction in deep learning for chest X-ray analysis of lung cancer. In Proceedings of the 2018 Tenth International Conference on Advanced Computational Intelligence (ICACI), Xiamen, China, 29–31 March 2018.

- Gordienko, Y.; Gang, P.; Hui, J.; Zeng, W.; Kochura, Y.; Alienin, O.; Rokovyi, O.; Stirenko, S. Deep learning with lung segmentation and bone shadow exclusion techniques for chest X-ray analysis of lung cancer. In Proceedings of the International Conference on Computer Science, Engineering and Education Applications, Kiev, Ukraine, 18–20 January 2018; Springer: Berlin/Heidelberg, Germany, 2018.

- Lu, L.; Sun, M.; Lu, Q.; Wu, T.; Huang, B. High energy X-ray radiation sensitive scintillating materials for medical imaging, cancer diagnosis and therapy. Nano Energy 2021, 79, 105437.

- Zeng, F.; Zhu, Z. Design of X-ray energy detector. Energy Rep. 2022, 8, 456–460.

- Preshlock, S.; Tredwell, M.; Gouverneur, V. 18F-Labeling of arenes and heteroarenes for applications in positron emission tomography. Chem. Rev. 2016, 116, 719–766.

- Gambhir, S.S. Molecular imaging of cancer with positron emission tomography. Nat. Rev. Cancer 2002, 2, 683–693.

- Lardinois, D.; Weder, W.; Hany, T.F.; Kamel, E.M.; Korom, S.; Seifert, B.; von Schulthess, G.K.; Steinert, H.C. Staging of non–small-cell lung cancer with integrated positron-emission tomography and computed tomography. N. Engl. J. Med. 2003, 348, 2500–2507.

- Anttinen, M.; Ettala, O.; Malaspina, S.; Jambor, I.; Sandell, M.; Kajander, S.; Rinta-Kiikka, I.; Schildt, J.; Saukko, E.; Rautio, P.; et al. A Prospective Comparison of 18F-prostate-specific Membrane Antigen-1007 Positron Emission Tomography Computed Tomography, Whole-body 1.5 T Magnetic Resonance Imaging with Diffusion-weighted Imaging, and Single-photon Emission Computed Tomography/Computed Tomography with Traditional Imaging in Primary Distant Metastasis Staging of Prostate Cancer (PROSTAGE). Eur. Urol. Oncol. 2021, 4, 635–644.

- Garg, P.K.; Singh, S.K.; Prakash, G.; Jakhetiya, A.; Pandey, D. Role of positron emission tomography-computed tomography in non-small cell lung cancer. World J. Methodol. 2016, 6, 105–111.

- Czernin, J.; Phelps, M.E. Positron emission tomography scanning: Current and future applications. Annu. Rev. Med. 2002, 53, 89–112.

- Phelps, M.E. Positron emission tomography provides molecular imaging of biological processes. Proc. Natl. Acad. Sci. USA 2000, 97, 9226–9233.

- Cherry, S.R. Fundamentals of positron emission tomography and applications in preclinical drug development. J. Clin. Pharmacol. 2001, 41, 482–491.

- Van der Laak, J.; Litjens, G.; Ciompi, F. Deep learning in histopathology: The path to the clinic. Nat. Med. 2021, 27, 775–784.

- Arevalo, J.; Cruz-Roa, A.; González, O.F.A. Histopathology image representation for automatic analysis: A state-of-the-art review. Rev. Med. 2014, 22, 79–91.

- Gurcan, M.N.; Boucheron, L.E.; Can, A.; Madabhushi, A.; Rajpoot, N.M.; Yener, B. Histopathological image analysis: A review. IEEE Rev. Biomed. Eng. 2009, 2, 147–171.

- Dabeer, S.; Khan, M.M.; Islam, S. Cancer diagnosis in histopathological image: CNN based approach. Inform. Med. Unlocked 2019, 16, 100231.

- Das, A.; Nair, M.S.; Peter, S.D. Computer-aided histopathological image analysis techniques for automated nuclear atypia scoring of breast cancer: A review. J. Digit. Imaging 2020, 33, 1091–1121.

- Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

- Raiko, T.; Valpola, H.; LeCun, Y. Deep Learning Made Easier by Linear Transformations in Perceptrons. In Proceedings of the Fifteenth International Conference on Artificial Intelligence and Statistics, La Palma, Canary Islands, 21–23 April 2012; Lawrence, N.D., Girolami, M., Eds.; PMLR, Proceedings of Machine Learning Research: Cambridge, MA, USA, 2012; pp. 924–932.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Representations by Back Propagating Errors. Nature 1986, 323, 533–536.

- Pang, S.; Yang, X. Deep convolutional extreme learning machine and its application in handwritten digit classification. Comput. Intell. Neurosci. 2016, 2016, 3049632.

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Salakhutdinov, R.; Hinton, G.E. Deep Boltzmann Machines. J. Mach. Learn. Res. Workshop Conf. Proc. 2009, 5, 448–455.

- Tajbakhsh, N.; Shin, J.Y.; Gurudu, S.R.; Hurst, R.T.; Kendall, C.B.; Gotway, M.B.; Liang, J. Convolutional Neural Networks for Medical Image Analysis: Full Training or Fine Tuning? IEEE Trans. Med. Imaging 2016, 35, 1299–1312.

- Anwar, S.M.; Majid, M.; Qayyum, A.; Awais, M.; Alnowami, M.; Khan, M.K. Medical Image Analysis using Convolutional Neural Networks: A Review. J. Med. Syst. 2018, 42, 226.

- Pan, Y.; Huang, W.; Lin, Z.; Zhu, W.; Zhou, J.; Wong, J.; Ding, Z. Brain tumor grading based on Neural Networks and Convolutional Neural Networks. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015.

- Abiyev, R.H.; Ma’aitah, M.K.S. Deep Convolutional Neural Networks for Chest Diseases Detection. J. Healthc. Eng. 2018, 2018, 4168538.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Into Imaging 2018, 9, 611–629.

- Wang, R.; Zhang, J.; Dong, W.; Yu, J.; Xie, C.; Li, R.; Chen, T.; Chen, H. A crop pests image classification algorithm based on deep convolutional neural network. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2017, 15, 1239–1246.

- Ben-Cohen, A.; Diamant, I.; Klang, E.; Amitai, M.; Greenspan, H. Fully Convolutional Network for Liver Segmentation and Lesions Detection. In Deep Learning and Data Labeling for Medical Applications; Springer International Publishing: Cham, Switzerland, 2016.

- Li, S.; Zhao, X.; Zhou, G. Automatic pixel-level multiple damage detection of concrete structure using fully convolutional network. Comput.-Aided Civ. Infrastruct. Eng. 2019, 34, 616–634.

- Bi, L.; Feng, D.; Kim, J. Dual-Path Adversarial Learning for Fully Convolutional Network (FCN)-Based Medical Image Segmentation. Vis. Comput. 2018, 34, 1043–1052.

- Huang, L.; Xia, W.; Zhang, B.; Qiu, B.; Gao, X. MSFCN-multiple supervised fully convolutional networks for the osteosarcoma segmentation of CT images. Comput. Methods Programs Biomed. 2017, 143, 67–74.

- Shao, H.; Jiang, H.; Zhao, H.; Wang, F. A novel deep autoencoder feature learning method for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2017, 95, 187–204.

- Yousuff, M.; Babu, R. Deep autoencoder based hybrid dimensionality reduction approach for classification of SERS for melanoma cancer diagnostics. J. Intell. Fuzzy Syst. 2022; preprint.

- Suk, H.-I.; Lee, S.-W.; Shen, D. Latent feature representation with stacked auto-encoder for AD/MCI diagnosis. Brain Struct. Funct. 2015, 220, 841–859.

- Ng, A. Sparse autoencoder. CS294A Lect. Notes 2011, 72, 1–19.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.-A.; Bottou, L. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.

- Rifai, S.; Vincent, P.; Muller, X.; Glorot, X.; Bengio, Y. Contractive auto-encoders: Explicit invariance during feature extraction. In Proceedings of the 28th International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; Omnipress: Madison, WI, USA, 2011.

- Xu, J.; Xiang, L.; Liu, Q.; Gilmore, H.; Wu, J.; Tang, J.; Madabhushi, A. Stacked sparse autoencoder (SSAE) for nuclei detection on breast cancer histopathology images. IEEE Trans. Med. Imaging 2015, 35, 119–130.

- Abraham, B.; Nair, M.S. Computer-aided diagnosis of clinically significant prostate cancer from MRI images using sparse autoencoder and random forest classifier. Biocybern. Biomed. Eng. 2018, 38, 733–744.

- Huang, G.; Wang, H.; Zhang, L. Sparse-Coding-Based Autoencoder and Its Application for Cancer Survivability Prediction. Math. Probl. Eng. 2022, 2022, 8544122.

- Munir, M.A.; Aslam, M.A.; Shafique, M.; Ahmed, R.; Mehmood, Z. Deep stacked sparse autoencoders-a breast cancer classifier. Mehran Univ. Res. J. Eng. Technol. 2022, 41, 41–52.

- Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy layer-wise training of deep networks. In Advances in Neural Information Processing Systems 19 (NIPS 2006); The MIT Press: Cambridge, MA, USA, 2006.

- Vincent, P.; Larochelle, H.; Bengio, Y.; Manzagol, P.-A. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; Association for Computing Machinery: New York, NY, USA, 2008.

- Masci, J.; Meier, U.; Cireşan, D.; Schmidhuber, J. Stacked convolutional auto-encoders for hierarchical feature extraction. In Artificial Neural Networks and Machine Learning–ICANN 2011, Proceedings of the 21st International Conference on Artificial Neural Networks, Espoo, Finland, 14–17 June 2011, Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2011.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Srivastava, N.; Mansimov, E.; Salakhudinov, R. Unsupervised learning of video representations using lstms. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; PMLR: Cambridge, MA, USA, 2015.

- Makhzani, A.; Shlens, J.; Jaitly, N.; Goodfellow, I.; Frey, B. Adversarial autoencoders. arXiv 2015, arXiv:1511.05644.

- Higgins, I.; Matthey, L.; Pal, A.; Burgess, C.; Glorot, X.; Botvinick, M.; Mohamed, S.; Lerchner, A. Beta-vae: Learning basic visual concepts with a constrained variational framework. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Zhao, S.; Song, J.; Ermon, S. Infovae: Information maximizing variational autoencoders. arXiv 2017, arXiv:1706.02262.

- Van Den Oord, A.; Vinyals, O.; Kavukcuoglu, K. Neural discrete representation learning. In Advances in Neural Information Processing Systems 30; The MIT Press: Cambridge, MA, USA, 2017.

- Dupont, E. Learning disentangled joint continuous and discrete representations. In Advances in Neural Information Processing Systems 31; The MIT Press: Cambridge, MA, USA, 2018.

- Kim, H.; Mnih, A. Disentangling by factorising. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; PMLR: Cambridge, MA, USA, 2018.

- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Wang, P.; Zhang, X.; Hao, Y. A method combining CNN and ELM for feature extraction and classification of SAR image. J. Sens. 2019, 2019, 6134610.

- Huang, G.-B.; Chen, L.; Siew, C.K. Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans. Neural Netw. 2006, 17, 879–892.

- Niu, X.-X.; Suen, C.Y. A novel hybrid CNN–SVM classifier for recognizing handwritten digits. Pattern Recognit. 2012, 45, 1318–1325.

- Chen, L.; Pan, X.; Zhang, Y.-H.; Liu, M.; Huang, T.; Cai, Y.-D. Classification of widely and rarely expressed genes with recurrent neural network. Comput. Struct. Biotechnol. J. 2019, 17, 49–60.

- Aher, C.N.; Jena, A.K. Rider-chicken optimization dependent recurrent neural network for cancer detection and classification using gene expression data. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2021, 9, 174–191.

- Navaneethakrishnan, M.; Vairamuthu, S.; Parthasarathy, G.; Cristin, R. Atom search-Jaya-based deep recurrent neural network for liver cancer detection. IET Image Process. 2021, 15, 337–349.

- Yan, R.; Ren, F.; Wang, Z.; Wang, L.; Zhang, T.; Liu, Y.; Rao, X.; Zheng, C.; Zhang, F. Breast cancer histopathological image classification using a hybrid deep neural network. Methods 2020, 173, 52–60.

- Moitra, D.; Mandal, R.K. Prediction of Non-small Cell Lung Cancer Histology by a Deep Ensemble of Convolutional and Bidirectional Recurrent Neural Network. J. Digit. Imaging 2020, 33, 895–902.

- Selvanambi, R.; Natarajan, J.; Karuppiah, M.; Islam, S.; Hassan, M.M.; Fortino, G. Lung cancer prediction using higher-order recurrent neural network based on glowworm swarm optimization. Neural Comput. Appl. 2020, 32, 4373–4386.

- Yang, Y.; Fasching, P.A.; Tresp, V. Predictive Modeling of Therapy Decisions in Metastatic Breast Cancer with Recurrent Neural Network Encoder and Multinomial Hierarchical Regression Decoder. In Proceedings of the 2017 IEEE International Conference on Healthcare Informatics (ICHI), Park City, UT, USA, 23–26 August 2017.

- Moitra, D.; Mandal, R.K. Automated AJCC staging of non-small cell lung cancer (NSCLC) using deep convolutional neural network (CNN) and recurrent neural network (RNN). Health Inf. Sci. Syst. 2019, 7, 14.

- Pan, Q.; Zhang, Y.; Chen, D.; Xu, G. Character-Based Convolutional Grid Neural Network for Breast Cancer Classification. In Proceedings of the 2017 International Conference on Green Informatics (ICGI), Fuzhou, China, 15–17 August 2017.

- Liu, S.; Li, T.; Ding, H.; Tang, B.; Wang, X.; Chen, Q.; Yan, J.; Zhou, Y. A hybrid method of recurrent neural network and graph neural network for next-period prescription prediction. Int. J. Mach. Learn. Cybern. 2020, 11, 2849–2856.

- Nurtiyasari, D.; Rosadi, D.; Abdurakhman. The application of Wavelet Recurrent Neural Network for lung cancer classification. In Proceedings of the 2017 3rd International Conference on Science and Technology-Computer (ICST), Yogyakarta, Indonesia, 11–12 July 2017.

- Tng, S.S.; Le, N.Q.K.; Yeh, H.-Y.; Chua, M.C.H. Improved prediction model of protein lysine Crotonylation sites using bidirectional recurrent neural networks. J. Proteome Res. 2021, 21, 265–273.

- Azizi, S.; Bayat, S.; Yan, P.; Tahmasebi, A.; Kwak, J.T.; Xu, S.; Turkbey, B.; Choyke, P.; Pinto, P.; Wood, B.; et al. Deep recurrent neural networks for prostate cancer detection: Analysis of temporal enhanced ultrasound. IEEE Trans. Med. Imaging 2018, 37, 2695–2703.

- SivaSai, J.G.; Srinivasu, P.N.; Sindhuri, M.N.; Rohitha, K.; Deepika, S. An Automated segmentation of brain MR image through fuzzy recurrent neural network. In Bio-Inspired Neurocomputing; Springer: Berlin/Heidelberg, Germany, 2021; pp. 163–179.

- Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

- Gao, R.; Tang, Y.; Xu, K.; Huo, Y.; Bao, S.; Antic, S.L.; Epstein, E.S.; Deppen, S.; Paulson, A.B.; Sandler, K.L.; et al. Time-distanced gates in long short-term memory networks. Med. Image Anal. 2020, 65, 101785.

- Wu, X.; Wang, H.-Y.; Shi, P.; Sun, R.; Wang, X.; Luo, Z.; Zeng, F.; Lebowitz, M.S.; Lin, W.-Y.; Lu, J.-J.; et al. Long short-term memory model—A deep learning approach for medical data with irregularity in cancer predication with tumor markers. Comput. Biol. Med. 2022, 144, 105362.

- Elsheikh, A.; Yacout, S.; Ouali, M.S. Bidirectional handshaking LSTM for remaining useful life prediction. Neurocomputing 2019, 323, 148–156.

- Koo, K.C.; Lee, K.S.; Kim, S.; Min, C.; Min, G.R.; Lee, Y.H.; Han, W.K.; Rha, K.H.; Hong, S.J.; Yang, S.C.; et al. Long short-term memory artificial neural network model for prediction of prostate cancer survival outcomes according to initial treatment strategy: Development of an online decision-making support system. World J. Urol. 2020, 38, 2469–2476.

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative Adversarial Networks: An Overview. IEEE Signal Process. Mag. 2017, 35, 53–65.

- Gonzalez-Abril, L.; Angulo, C.; Ortega, J.-A.; Lopez-Guerra, J.-L. Generative Adversarial Networks for Anonymized Healthcare of Lung Cancer Patients. Electronics 2021, 10, 2220.

- Hua, Y.; Guo, J.; Zhao, H. Deep belief networks and deep learning. In Proceedings of the 2015 International Conference on Intelligent Computing and Internet of Things, Harbin, China, 17–18 January 2015.

- Xing, Y.; Yue, J.; Chen, C.; Xiang, Y.; Chen, Y.; Shi, M. A deep belief network combined with modified grey wolf optimization algorithm for PM2.5 concentration prediction. Appl. Sci. 2019, 9, 3765.

- Hinton, G.E. Deep belief networks. Scholarpedia 2009, 4, 5947.

- Mohamed, A.-R.; Dahl, G.E.; Hinton, G. Acoustic modeling using deep belief networks. IEEE Trans. Audio Speech Lang. Process. 2011, 20, 14–22.

- Zhang, C.; Lim, P.; Qin, A.K.; Tan, K.C. Multiobjective Deep Belief Networks Ensemble for Remaining Useful Life Estimation in Prognostics. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2306–2318.

- Novitasari, D.C.R.; Foeady, A.Z.; Thohir, M.; Arifin, A.Z.; Niam, K.; Asyhar, A.H. Automatic Approach for Cervical Cancer Detection Based on Deep Belief Network (DBN) Using Colposcopy Data. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020.

- Ronoud, S.; Asadi, S. An evolutionary deep belief network extreme learning-based for breast cancer diagnosis. Soft Comput. 2019, 23, 13139–13159.

- Eslami, S.; Heess, N.; Williams, C.K.; Winn, J. The shape boltzmann machine: A strong model of object shape. Int. J. Comput. Vis. 2014, 107, 155–176.

- Wu, J.; Mazur, T.R.; Ruan, S.; Lian, C.; Daniel, N.; Lashmett, H.; Ochoa, L.; Zoberi, I.; Anastasio, M.A.; Gach, H.M.; et al. A deep Boltzmann machine-driven level set method for heart motion tracking using cine MRI images. Med. Image Anal. 2018, 47, 68–80.

- Syafiandini, A.F.; Wasito, I.; Yazid, S.; Fitriawan, A.; Amien, M. Multimodal Deep Boltzmann Machines for feature selection on gene expression data. In Proceedings of the 2016 International Conference on Advanced Computer Science and Information Systems (ICACSIS), Malang, Indonesia, 15–16 October 2016.

- Hess, M.; Lenz, S.; Binder, H. A deep learning approach for uncovering lung cancer immunome patterns. bioRxiv 2018, 291047.

- Yu, X.; Wang, J.; Hong, Q.-Q.; Teku, R.; Wang, S.-H.; Zhang, Y.-D. Transfer learning for medical images analyses: A survey. Neurocomputing 2022, 489, 230–254.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25; The MIT Press: Cambridge, MA, USA, 2012.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III; Springer: Berlin/Heidelberg, Germany, 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Redmon, J. Darknet: Open Source Neural Networks in C. 2013–2016. 2016. Available online: http://pjreddie.com/darknet/ (accessed on 20 April 2023).

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar]

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar]

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019. [Google Scholar]

- Tian, Y. Artificial Intelligence Image Recognition Method Based on Convolutional Neural Network Algorithm. IEEE Access 2020, 8, 125731–125744.

- Sun, Y.; Ou, Z.; Chen, J.; Qi, X.; Guo, Y.; Cai, S.; Yan, X. Evaluating Performance, Power and Energy of Deep Neural Networks on CPUs and GPUs. In Theoretical Computer Science. NCTCS 2021. Communications in Computer and Information Science; Springer: Singapore, 2021.

- Samir, S.; Emary, E.; El-Sayed, K.; Onsi, H. Optimization of a Pre-Trained AlexNet Model for Detecting and Localizing Image Forgeries. Information 2020, 11, 275.

- Li, M.; Tang, H.; Chan, M.D.; Zhou, X.; Qian, X. DC-AL GAN: Pseudoprogression and true tumor progression of glioblastoma multiform image classification based on DCGAN and AlexNet. Med. Phys. 2020, 47, 1139–1150.

- Suryawati, E.; Sustika, R.; Yuwana, R.S.; Subekti, A.; Pardede, H.F. Deep structured convolutional neural network for tomato diseases detection. In Proceedings of the 2018 International Conference on Advanced Computer Science and Information Systems (ICACSIS), Yogyakarta, Indonesia, 27–28 October 2018.

- Lv, X.; Zhang, X.; Jiang, Y.; Zhang, J. Pedestrian Detection Using Regional Proposal Network with Feature Fusion. In Proceedings of the 2018 Eighth International Conference on Image Processing Theory, Tools and Applications (IPTA), Xi’an, China, 7–10 November 2018.

- Zou, Z.; Wang, N.; Zhao, P.; Zhao, X. Feature recognition and detection for ancient architecture based on machine vision. In Smart Structures and NDE for Industry 4.0; SPIE: Bellingham, WA, USA, 2018.

- Yu, S.; Liu, J.; Shu, H.; Cheng, Z. Handwritten Digit Recognition using Deep Learning Networks. In Proceedings of the 2022 IEEE Conference on Telecommunications, Optics and Computer Science (TOCS), Dalian, China, 11–12 December 2022.

- Cai, L.; Gao, J.; Zhao, D. A review of the application of deep learning in medical image classification and segmentation. Ann. Transl. Med. 2020, 8, 713.

- Li, G.; Shen, X.; Li, J.; Wang, J. Diagonal-kernel convolutional neural networks for image classification. Digit. Signal Process. 2021, 108, 102898.

- Wang, W.; Yang, Y.; Wang, X.; Wang, W.; Li, J. Development of convolutional neural network and its application in image classification: A survey. Opt. Eng. 2019, 58, 040901.

- Li, H.; Zhuang, S.; Li, D.-A.; Zhao, J.; Ma, Y. Benign and malignant classification of mammogram images based on deep learning. Biomed. Signal Process. Control 2019, 51, 347–354.

- Zhang, C.-L.; Luo, J.-H.; Wei, X.-S.; Wu, J. In defense of fully connected layers in visual representation transfer. In Proceedings of the Pacific Rim Conference on Multimedia, Harbin, China, 28–29 September 2017; Springer: Berlin/Heidelberg, Germany, 2017.

- Attallah, O.; Zaghlool, S. AI-based pipeline for classifying pediatric medulloblastoma using histopathological and textural images. Life 2022, 12, 232.

- Yao, X.; Wang, X.; Wang, S.-H.; Zhang, Y.-D. A comprehensive survey on convolutional neural network in medical image analysis. Multimed. Tools Appl. 2020, 81, 41361–41405.

- Yu, X.; Yu, Z.; Ramalingam, S. Learning strict identity mappings in deep residual networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Zagoruyko, S.; Komodakis, N. Wide residual networks. arXiv 2016, arXiv:1605.07146.

- Pleiss, G.; Chen, D.; Huang, G.; Li, T.; Van Der Maaten, L.; Weinberger, K.Q. Memory-efficient implementation of DenseNets. arXiv 2017, arXiv:1707.06990.

- Huang, G.; Liu, Z.; Pleiss, G.; Maaten, L.V.D.; Weinberger, K.Q. Convolutional Networks with Dense Connectivity. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 8704–8716.

- Sae-Lim, W.; Wettayaprasit, W.; Aiyarak, P. Convolutional neural networks using MobileNet for skin lesion classification. In Proceedings of the 2019 16th International Joint Conference on Computer Science and Software Engineering (JCSSE), Chonburi, Thailand, 10–12 July 2019.

- Tseng, F.-H.; Yeh, K.-H.; Kao, F.-Y.; Chen, C.-Y. MiniNet: Dense squeeze with depthwise separable convolutions for image classification in resource-constrained autonomous systems. ISA Trans. 2022, 132, 120–130.

- Bi, C.; Wang, J.; Duan, Y.; Fu, B.; Kang, J.-R.; Shi, Y. MobileNet based apple leaf diseases identification. Mob. Netw. Appl. 2022, 27, 172–180.

- Dhouibi, M.; Salem, A.K.B.; Saidi, A.; Saoud, S.B. Accelerating deep neural networks implementation: A survey. IET Comput. Digit. Tech. 2021, 15, 79–96.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Attallah, O.; Anwar, F.; Ghanem, N.M.; Ismail, M.A. Histo-CADx: Duo cascaded fusion stages for breast cancer diagnosis from histopathological images. PeerJ Comput. Sci. 2021, 7, e493.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Obayya, M.; Maashi, M.S.; Nemri, N.; Mohsen, H.; Motwakel, A.; Osman, A.E.; Alneil, A.A.; Alsaid, M.I. Hyperparameter optimizer with deep learning-based decision-support systems for histopathological breast cancer diagnosis. Cancers 2023, 15, 885.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Zhou, T.; Hou, S.; Lu, H.; Zhao, Y.; Dang, P.; Dong, Y. Exploring and analyzing the improvement mechanism of U-Net and its application in medical image segmentation. Sheng Wu Yi Xue Gong Cheng Xue Za Zhi J. Biomed. Eng. Shengwu Yixue Gongchengxue Zazhi 2022, 39, 806–825.

- Jégou, S.; Drozdzal, M.; Vazquez, D.; Romero, A.; Bengio, Y. The one hundred layers tiramisu: Fully convolutional densenets for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017.

- AlGhamdi, M.; Abdel-Mottaleb, M.; Collado-Mesa, F. DU-Net: Convolutional Network for the Detection of Arterial Calcifications in Mammograms. IEEE Trans. Med. Imaging 2020, 39, 3240–3249.

- Khanna, A.; Londhe, N.D.; Gupta, S.; Semwal, A. A deep Residual U-Net convolutional neural network for automated lung segmentation in computed tomography images. Biocybern. Biomed. Eng. 2020, 40, 1314–1327.

- Lu, L.; Jian, L.; Luo, J.; Xiao, B. Pancreatic Segmentation via Ringed Residual U-Net. IEEE Access 2019, 7, 172871–172878.

- Lee, S.; Negishi, M.; Urakubo, H.; Kasai, H.; Ishii, S. Mu-net: Multi-scale U-net for two-photon microscopy image denoising and restoration. Neural Netw. 2020, 125, 92–103.

- Li, F.; Li, W.; Qin, S.; Wang, L. MDFA-Net: Multiscale dual-path feature aggregation network for cardiac segmentation on multi-sequence cardiac MR. Knowl.-Based Syst. 2021, 215, 106776.

- Coupé, P.; Mansencal, B.; Clément, M.; Giraud, R.; de Senneville, B.D.; Ta, V.-T.; Lepetit, V.; Manjon, J.V. AssemblyNet: A large ensemble of CNNs for 3D whole brain MRI segmentation. NeuroImage 2020, 219, 117026.

- Zhang, Z.; Wu, C.; Coleman, S.; Kerr, D. DENSE-INception U-net for medical image segmentation. Comput. Methods Programs Biomed. 2020, 192, 105395.

- Xu, G.; Cao, H.; Udupa, J.K.; Tong, Y.; Torigian, D.A. DiSegNet: A deep dilated convolutional encoder-decoder architecture for lymph node segmentation on PET/CT images. Comput. Med. Imaging Graph. 2021, 88, 101851.

- Li, J.; Yu, Z.L.; Gu, Z.; Liu, H.; Li, Y. Dilated-inception net: Multi-scale feature aggregation for cardiac right ventricle segmentation. IEEE Trans. Biomed. Eng. 2019, 66, 3499–3508.

- Li, C.; Tan, Y.; Chen, W.; Luo, X.; He, Y.; Gao, Y.; Li, F. ANU-Net: Attention-based Nested U-Net to exploit full resolution features for medical image segmentation. Comput. Graph. 2020, 90, 11–20.

- Guo, C.; Szemenyei, M.; Yi, Y.; Wang, W.; Chen, B.; Fan, C. SA-UNet: Spatial attention U-Net for retinal vessel segmentation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021.

- Li, Y.; Yang, J.; Ni, J.; Elazab, A.; Wu, J. TA-Net: Triple attention network for medical image segmentation. Comput. Biol. Med. 2021, 137, 104836.

- Wu, H.; Chen, S.; Chen, G.; Wang, W.; Lei, B.; Wen, Z. FAT-Net: Feature adaptive transformers for automated skin lesion segmentation. Med. Image Anal. 2022, 76, 102327.

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016, Proceedings of the 19th International Conference, Athens, Greece, 17–21 October 2016, Proceedings, Part II 19; Springer: Berlin/Heidelberg, Germany, 2016.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Alom, M.Z.; Hasan, M.; Yakopcic, C.; Taha, T.M.; Asari, V.K. Recurrent residual convolutional neural network based on U-Net (R2U-Net) for medical image segmentation. arXiv 2018, arXiv:1802.06955.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A nested U-Net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018, Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2018.

- Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87.

- Yeung, M.; Sala, E.; Schönlieb, C.-B.; Rundo, L. Focus U-Net: A novel dual attention-gated CNN for polyp segmentation during colonoscopy. Comput. Biol. Med. 2021, 137, 104815.

- Beeche, C.; Singh, J.P.; Leader, J.K.; Gezer, N.S.; Oruwari, A.P.; Dansingani, K.K.; Chhablani, J.; Pu, J. Super U-Net: A modularized generalizable architecture. Pattern Recognit. 2022, 128, 108669.

- Wang, J.; Zhu, H.; Wang, S.-H.; Zhang, Y.-D. A review of deep learning on medical image analysis. Mob. Netw. Appl. 2021, 26, 351–380.

- Khan, S.; Islam, N.; Jan, Z.; Din, I.U.; Rodrigues, J.J.C. A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognit. Lett. 2019, 125, 1–6.

- Zhen, X.; Chen, J.; Zhong, Z.; Hrycushko, B.; Zhou, L.; Jiang, S.; Albuquerque, K.; Gu, X. Deep convolutional neural network with transfer learning for rectum toxicity prediction in cervical cancer radiotherapy: A feasibility study. Phys. Med. Biol. 2017, 62, 8246–8263.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Sagi, O.; Rokach, L. Ensemble learning: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1249.

- Zhou, Z.-H. Ensemble Learning; Springer: Berlin/Heidelberg, Germany, 2021.

- Tsai, C.-F.; Sung, Y.-T. Ensemble feature selection in high dimension, low sample size datasets: Parallel and serial combination approaches. Knowl.-Based Syst. 2020, 203, 106097.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Hastie, T.; Rosset, S.; Zhu, J.; Zou, H. Multi-class AdaBoost. Stat. Its Interface 2009, 2, 349–360.

- Friedman, J.H. Stochastic gradient boosting. Comput. Stat. Data Anal. 2002, 38, 367–378.

- Müller, D.; Soto-Rey, I.; Kramer, F. An analysis on ensemble learning optimized medical image classification with deep convolutional neural networks. IEEE Access 2022, 10, 66467–66480.

- Brunese, L.; Mercaldo, F.; Reginelli, A.; Santone, A. An ensemble learning approach for brain cancer detection exploiting radiomic features. Comput. Methods Programs Biomed. 2020, 185, 105134.

- Abdar, M.; Makarenkov, V. CWV-BANN-SVM ensemble learning classifier for an accurate diagnosis of breast cancer. Measurement 2019, 146, 557–570.

- Wang, Y.; Wang, D.; Geng, N.; Wang, Y.; Yin, Y.; Jin, Y. Stacking-based ensemble learning of decision trees for interpretable prostate cancer detection. Appl. Soft Comput. 2019, 77, 188–204.

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 2008, 20, 61–80.

- Chen, Y.; Tang, X.; Qi, X.; Li, C.-G.; Xiao, R. Learning graph normalization for graph neural networks. Neurocomputing 2022, 493, 613–625.