1. Introduction

The organ and tumor movement during radiotherapy (RT) treatment delivery has an adverse impact on the effectiveness of RT and its clinical outcome [1]. To compensate for tumor motion and its related uncertainty, the standard of care is to add a safety margin to the target region. This expansion of the irradiated target region also exposes the surrounding organs at risk (OAR) to radiation dose [2,3]. Moreover, this unwanted OAR irradiation limits dose escalation. For instance, pancreatic cancer, with a 3-year survival rate of less than 10% [4], is a devastating disease that can greatly benefit from dose escalation. Studies have shown that dose-escalated RT for pancreatic cancer doubles and triples the 2-year and 3-year survival rates, respectively [5,6,7,8,9,10,11,12,13]. Real-time motion tracking is the most effective method for motion compensation [2]. In turn, it allows RT to be delivered with higher precision by adjusting the radiation beam to the tumor location [14] and thus enables dose escalation [15,16,17,18,19,20,21,22].

The major cause of intrafraction OAR and target motion is respiration. Respiratory-related tumor motion in pancreatic cancer can reach up to 35 mm [23]. This considerable motion uncertainty can be greatly mitigated by real-time tumor positioning. Tumor positioning methods can be divided into two major categories: direct and indirect [24]. Direct methods use imaging or sensing systems to track the tumor position directly. Such systems include kilovolt X-ray imaging-based methods [25,26,27,28], magnetic resonance (MR) imaging-based approaches [29], electromagnetic sensing systems [30,31], and ultrasound-based techniques [32,33,34,35]. Indirect methods track the motion trajectory using a surrogate that is highly correlated with the tumor motion, for instance, thoracoabdominal surface displacement measured with surface optical trackers. By tracking and analyzing the positions of markers placed on the skin, the external surface motion can be obtained [36,37]. Although indirect methods are non-invasive, their accuracy depends on an accurate model that converts body-surface motion into internal organ motion [2,36].

Direct tumor tracking methods are highly accurate but have drawbacks: X-ray imaging exposes patients to extra radiation dose, electromagnetic sensing requires implanting invasive electromagnetic markers, the associated costs are high, and MR imaging cannot be retrofitted to existing RT treatment machines. On the other hand, the ultrasound-based direct approach has several advantages [19,33,38]: (i) low cost, (ii) non-invasiveness, (iii) the ability to enhance images with contrast agents, and (iv) easy integration with the current standard of care. Ultrasound (US) tumor tracking has been successfully applied to prostate intrafraction motion tracking and is commercially available [36,37]. Even though studies have shown promising results for US-based abdominal intrafraction motion tracking [33,39,40,41], its clinical application has been greatly hindered, mainly because abdominal sites require a special probe holder. The holder must be compatible with the treatment setup, and the probe and holder must be placed so that they do not block the radiation beam [33]. Moreover, conventional US probes are rigid and operator-dependent [42], and they require contact pressure that may alter the patient's anatomy differently on each day of radiotherapy delivery. Because the treatment plan reflects the anatomy under the initial probe pressure and location, these changes can cause the delivered dose to deviate from the original plan [43,44,45].

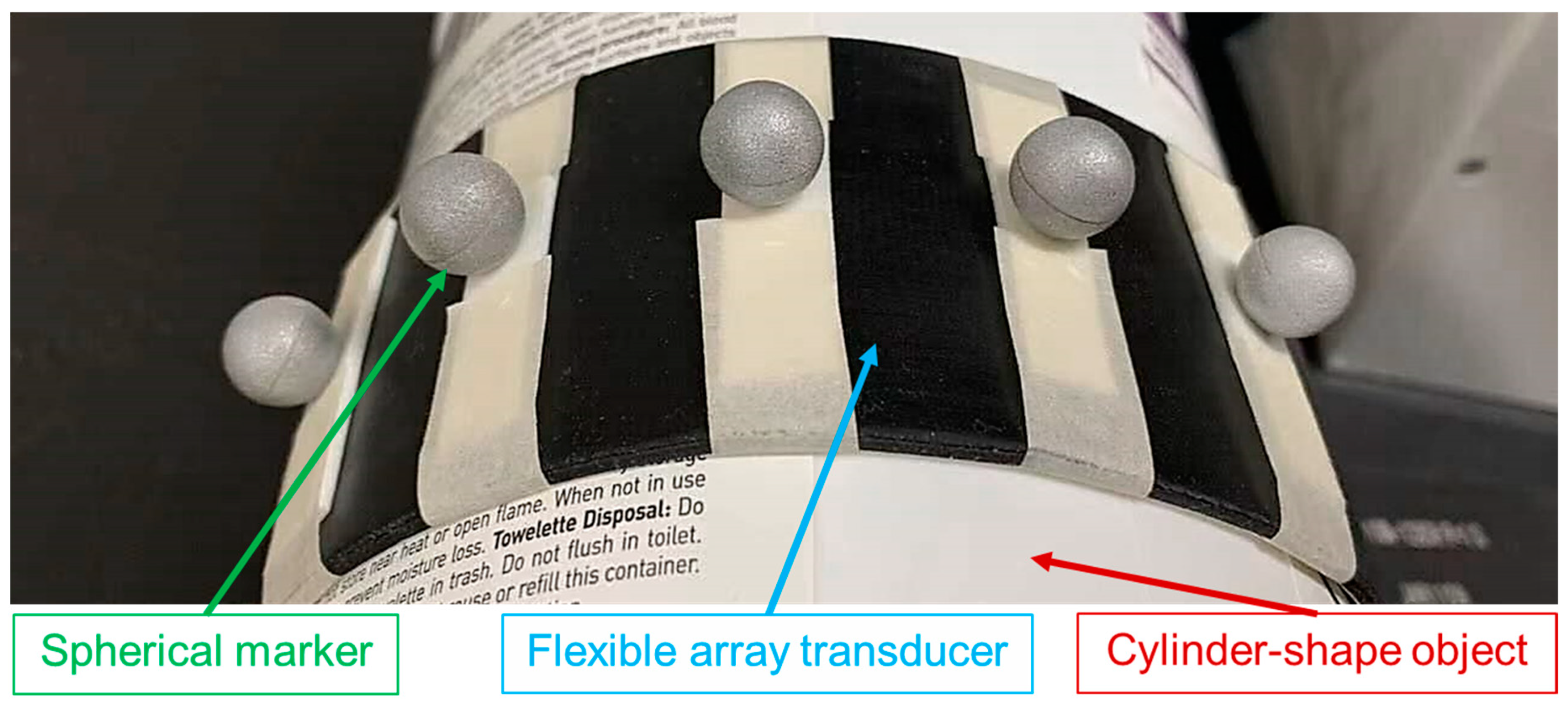

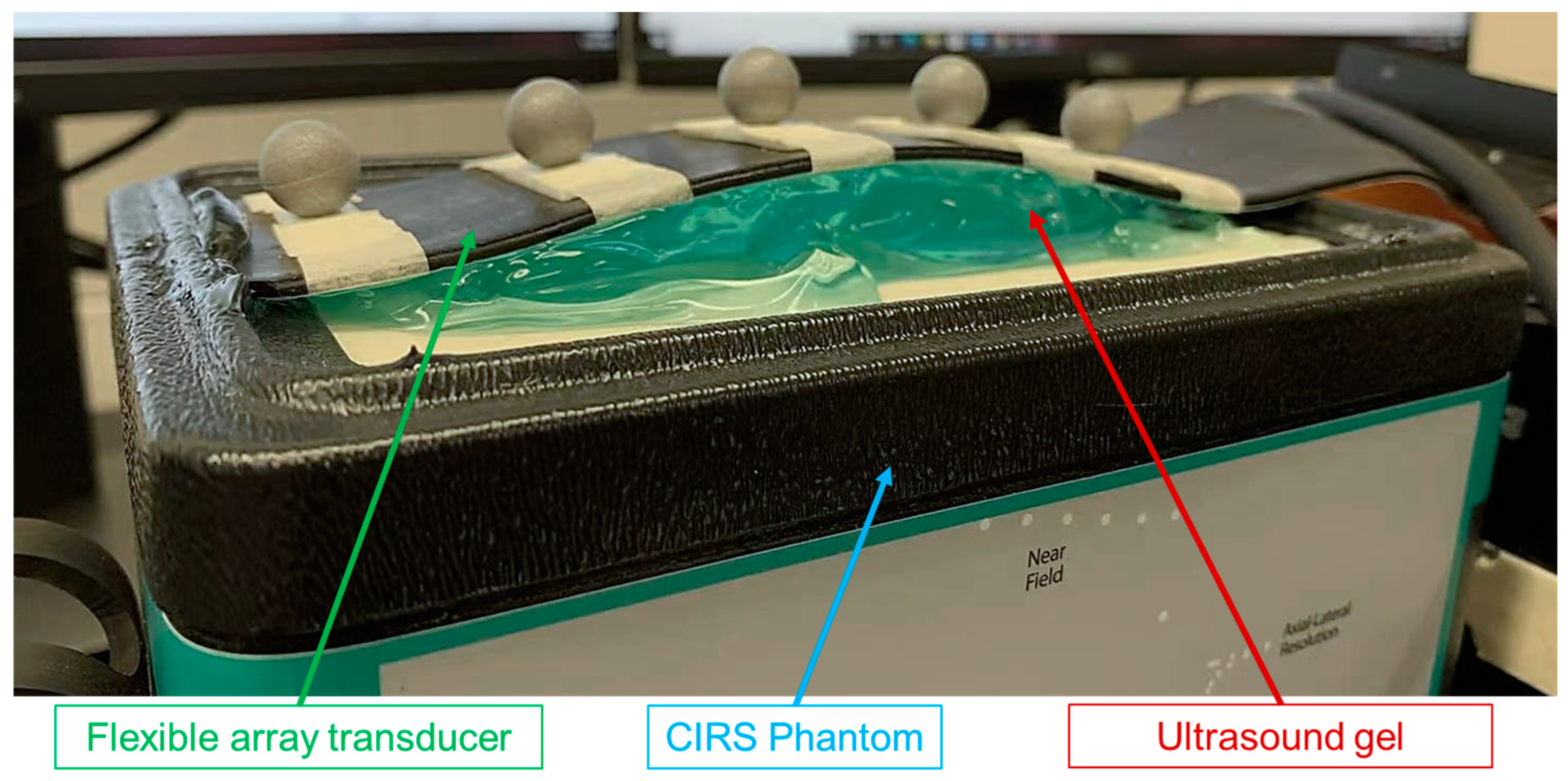

The flexible array transducer is a promising design that can address the user dependency and induced anatomical changes associated with conventional US transducers [46,47,48]. A flexible array transducer is a thin US transducer that can be attached to the body surface and adapt to different geometries [49,50]. In recent years, many studies have demonstrated applications of flexible array transducers in biomedical imaging [50,51]. Our group has been investigating the use of a flexible US probe for real-time tumor tracking during radiotherapy for both abdominal and head-and-neck cancers. Although the flexible probe is wearable and conforms to the subject's body, image reconstruction and ultrasound beamforming are very challenging because of its variable geometry.

The delay-and-sum (DAS) beamformer is commonly used for B-mode image reconstruction from radiofrequency (RF) channel data. DAS requires an accurate array shape to calculate the time of flight (ToF) between each element and the focal point and, accordingly, to apply the proper time delay to each channel [52]. As a result, using DAS with a flexible array transducer is very challenging, because the array shape is generally unknown at any given time and changes with the body surface. For a 5 MHz probe, a half-wavelength error in element position, i.e., 0.154 mm for a speed of sound of 1540 m/s, can cause wrongly delayed signals to be summed in opposite phase, resulting in signal loss [53]. Therefore, if the array shape is not correctly defined, the reconstructed US image is significantly defocused and distorted [54].
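To make the half-wavelength argument concrete, the following minimal Python sketch assumes a speed of sound of 1540 m/s, narrowband (single-frequency) echoes, and receive-side delays only. It illustrates the phase-cancellation effect and is not the beamformer used in this study; `receive_tof` and `coherent_gain` are hypothetical helper names.

```python
import numpy as np

C = 1540.0   # assumed speed of sound in soft tissue [m/s]
F0 = 5e6     # centre frequency of the example probe [Hz]

WAVELENGTH = C / F0               # 0.308 mm
HALF_WAVELENGTH = WAVELENGTH / 2  # 0.154 mm


def receive_tof(elements, focus, c=C):
    """One-way time of flight [s] from a focal point to each element (x, z in metres)."""
    return np.linalg.norm(np.asarray(elements) - np.asarray(focus), axis=1) / c


def coherent_gain(true_elements, assumed_elements, focus, f0=F0):
    """Normalised magnitude of a narrowband delay-and-sum at one focal point.

    Echoes arrive at the ToF of the TRUE geometry, but DAS compensates the ToF of
    the ASSUMED geometry. Any residual delay becomes a phase error at f0; the
    result is 1.0 for a perfect geometry and drops as channels fall out of phase.
    """
    residual = receive_tof(true_elements, focus) - receive_tof(assumed_elements, focus)
    phasors = np.exp(-2j * np.pi * f0 * residual)
    return float(np.abs(phasors.sum()) / len(phasors))


# Two elements imaging a point scatterer 30 mm deep.
focus = np.array([0.0, 30e-3])
true_elements = np.array([[-5e-3, 0.0], [5e-3, 0.0]])

# Misplace the second element by lambda/2 along its line of sight to the focus.
u = (true_elements[1] - focus) / np.linalg.norm(true_elements[1] - focus)
assumed_elements = true_elements.copy()
assumed_elements[1] += HALF_WAVELENGTH * u

print(coherent_gain(true_elements, true_elements, focus))     # 1.0
print(coherent_gain(true_elements, assumed_elements, focus))  # ~0: the two channels cancel
```

A quarter-wavelength error in the same direction gives a 90° phase error and a gain of about 0.71, so the loss grows continuously with the position error and becomes complete cancellation at half a wavelength.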

Numerous approaches have been proposed to address the beamforming problem. De Oliveira et al. [55] and Boerkamp et al. [56] developed flexible array transducers with strain gauges and piezoelectric sensors bonded to their surfaces to detect local curvature. Although these sensors could estimate curvature with reasonable accuracy, the additional hardware increases cost and limits the number of elements. Chang et al. [57] and Noda et al. [58] proposed mathematical model-based shape estimation algorithms to achieve optimal image quality with flexible array transducers. However, the estimation time of both algorithms is relatively long, resulting in a frame rate that is too low for real-time imaging. Moreover, Huang et al. [59] and Noda et al. [60] developed methods that use deep neural networks (DNNs) to directly reconstruct B-mode images, or to estimate the array geometry, from the RF data of flexible array transducers. Despite these efforts, both methods failed to reduce the array shape error to less than half the wavelength of the transmitted wave and produced blurry reconstructed images.

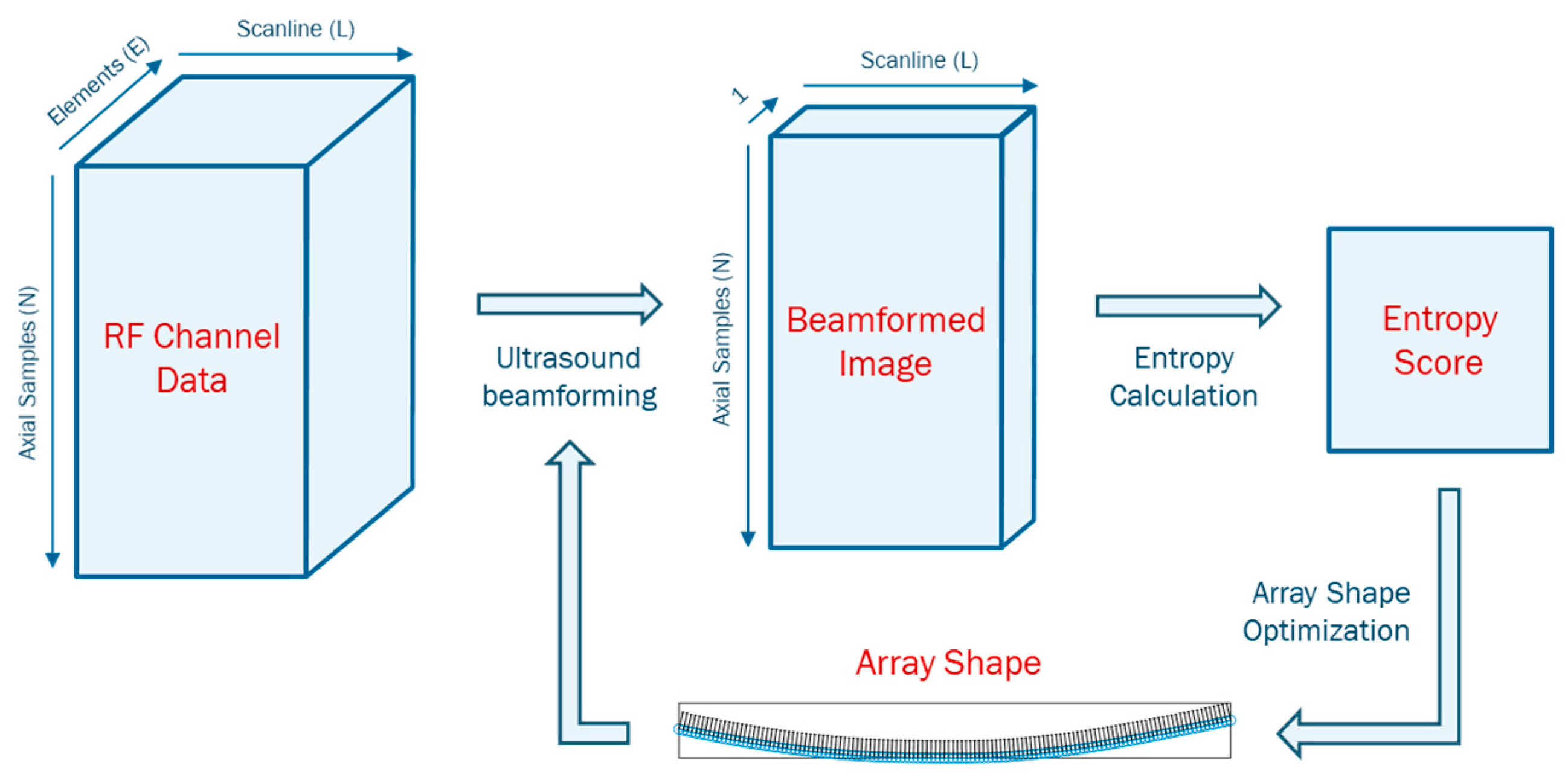

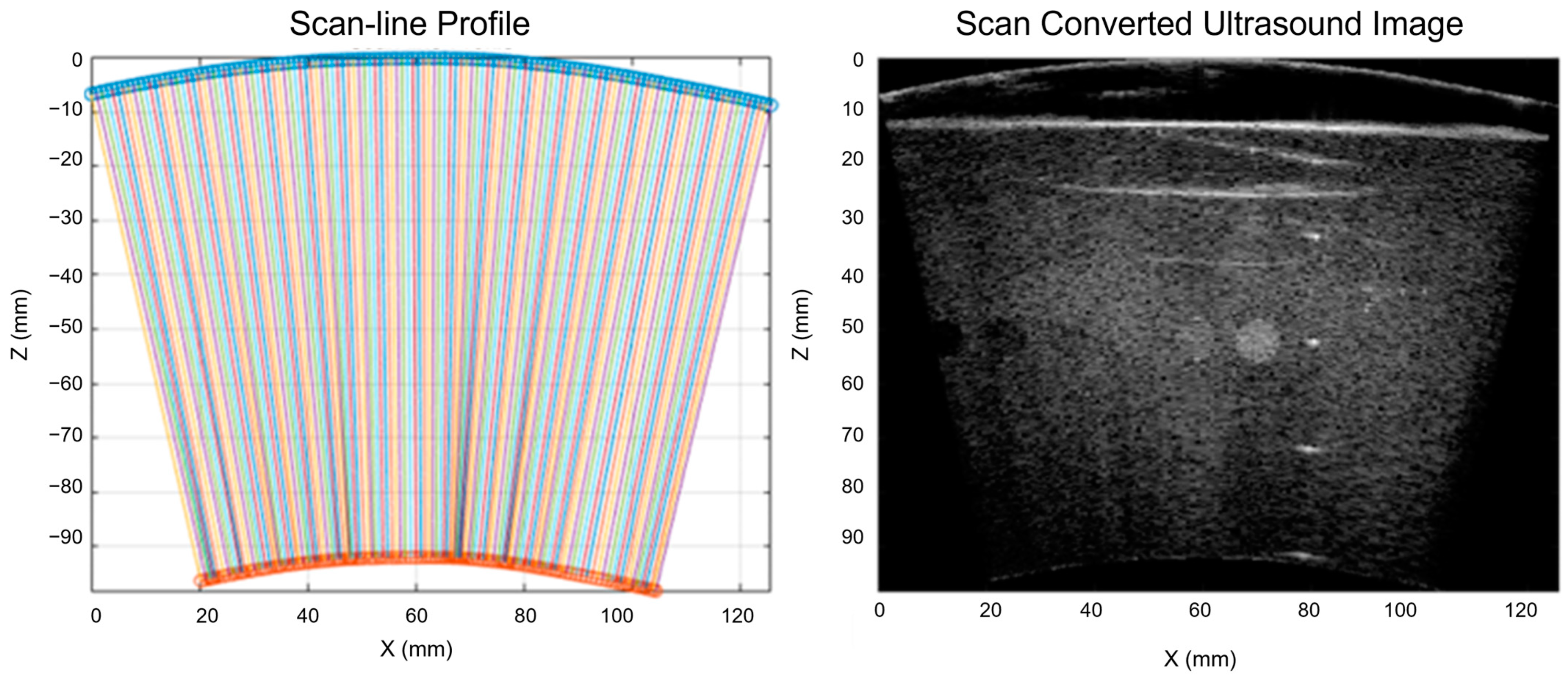

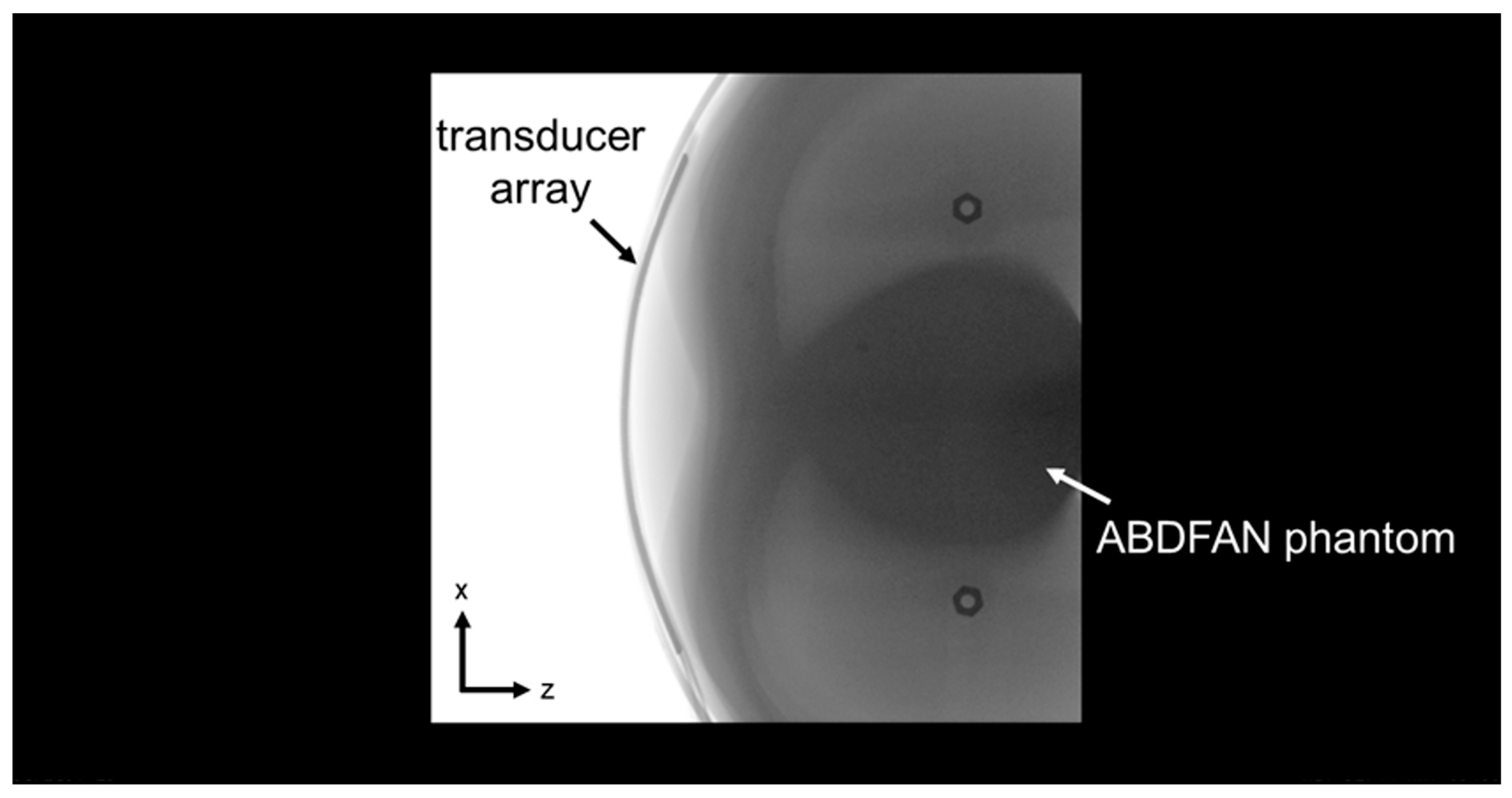

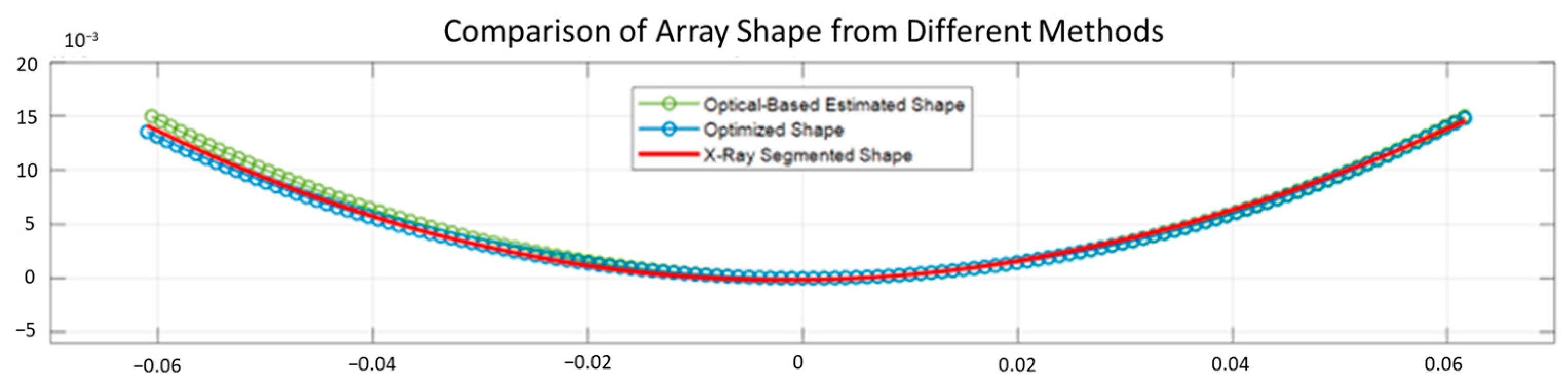

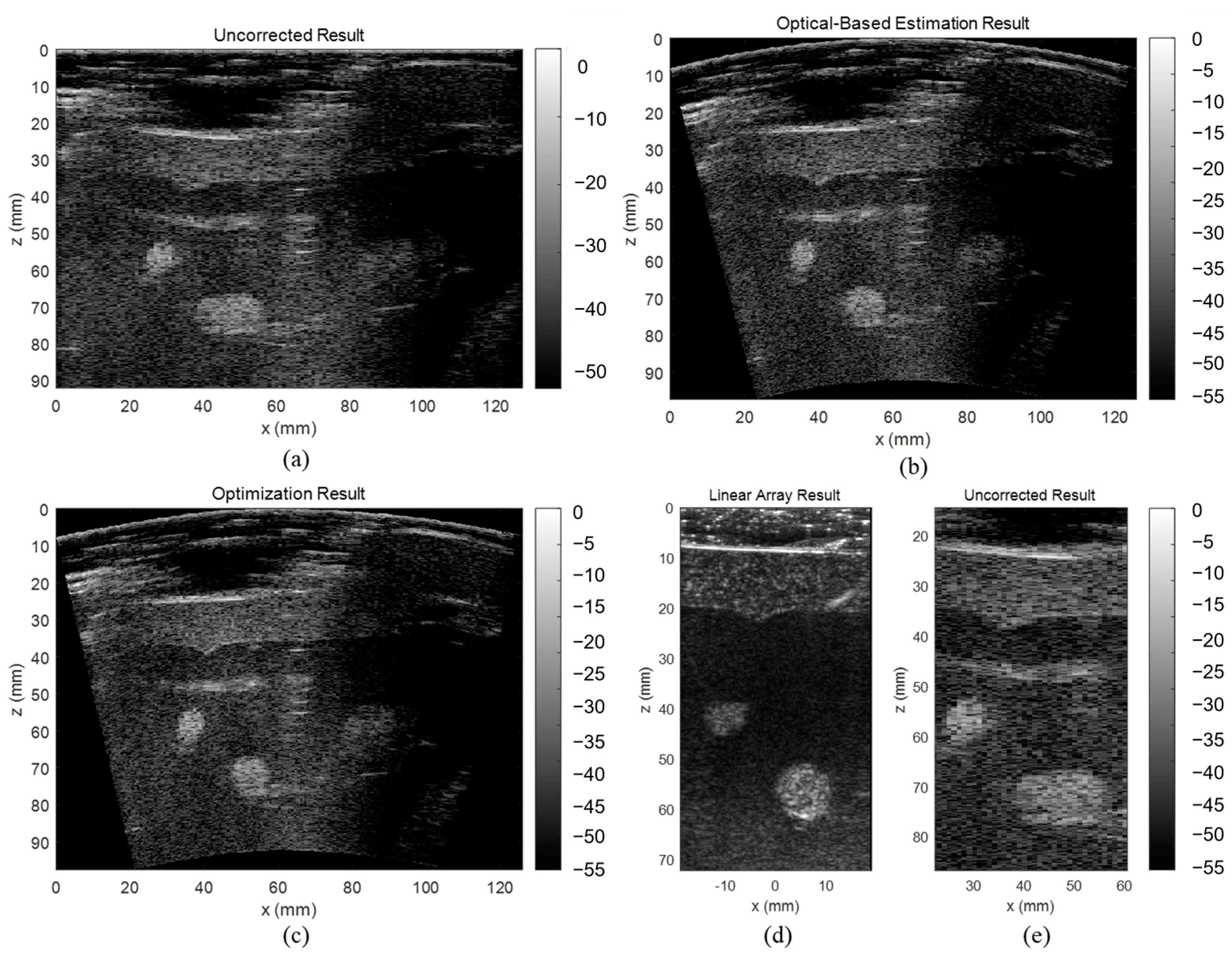

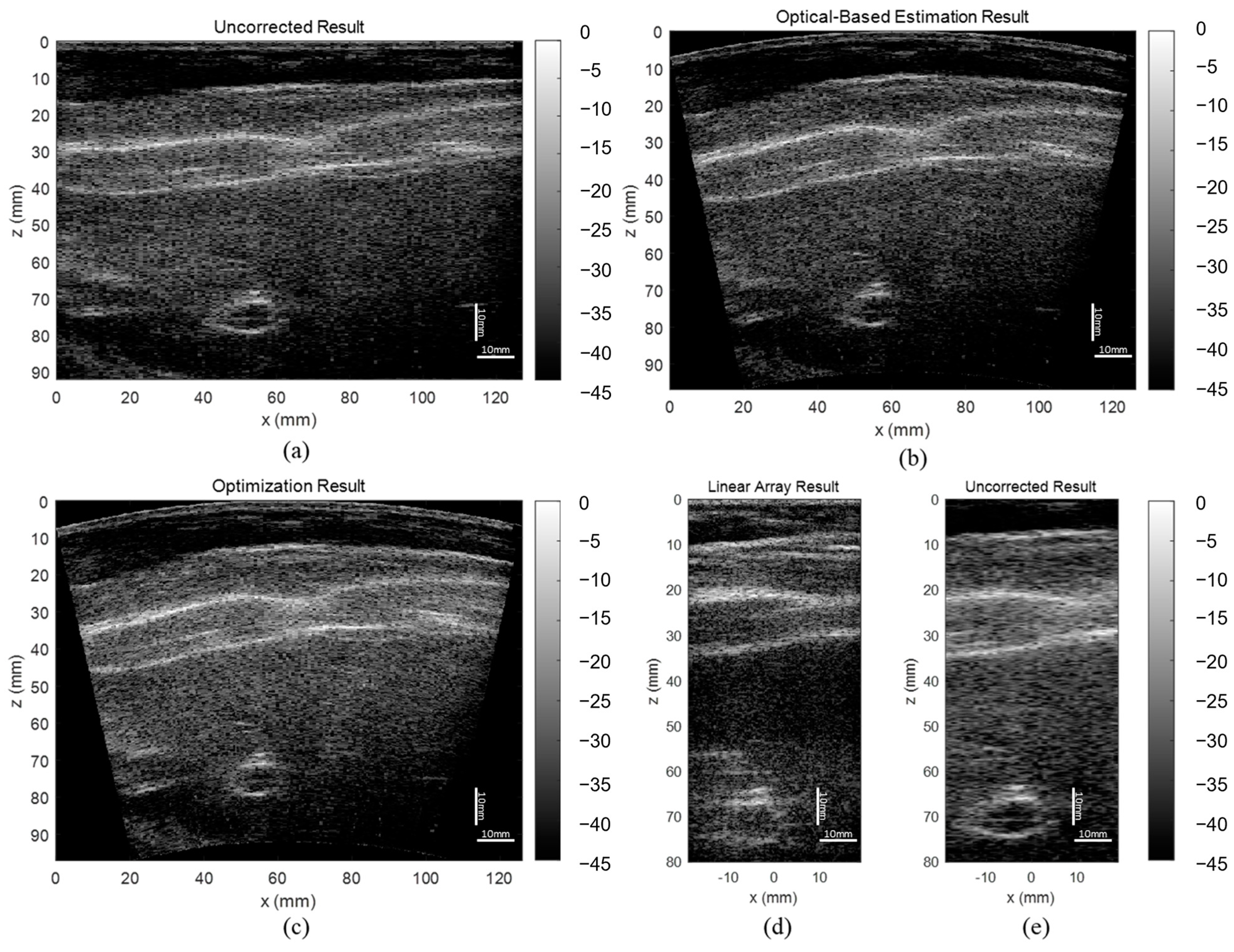

In this study, we propose a novel shape estimation method for a flexible array transducer that uses a sequential approach. First, an optical-based shape estimation algorithm uses an optical tracking system to collect the spatial coordinates of the array and estimate its shape. Then, a shape optimization algorithm refines the estimated shape, without any external device, by searching for the array shape that reconstructs the beamformed image with the highest quality. We conducted phantom and in vivo experiments and evaluated the accuracy of the estimated array shapes and of the reconstructed ultrasound images.
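The optical step can be pictured with a small sketch. It assumes, hypothetically, that several tracked passive markers lie along the array in order, that their 3D coordinates have already been projected into the imaging plane, and that the element pitch is known. The function name and the piecewise-linear curve model are illustrative assumptions, not the algorithm used in this study. A smoother curve model, such as a spline, could replace the linear interpolation.

```python
import numpy as np


def elements_from_markers(markers, n_elements, pitch):
    """Place array elements along a curve through tracked marker positions.

    markers    : (M, 2) marker coordinates (lateral x, axial z) in the imaging
                 plane, ordered from one end of the array to the other
    n_elements : number of transducer elements
    pitch      : element spacing measured along the curve (same unit as markers)

    The curve is approximated piecewise-linearly between markers. Elements are
    centred on the midpoint of the curve and spaced by `pitch` in arc length.
    """
    markers = np.asarray(markers, dtype=float)
    seg_len = np.linalg.norm(np.diff(markers, axis=0), axis=1)
    arc = np.concatenate([[0.0], np.cumsum(seg_len)])  # arc length at each marker

    offsets = (np.arange(n_elements) - (n_elements - 1) / 2) * pitch
    s = arc[-1] / 2 + offsets                           # arc length of each element
    if s[0] < 0 or s[-1] > arc[-1]:
        raise ValueError("aperture is longer than the tracked curve")

    # Interpolate x and z separately as functions of arc length.
    x = np.interp(s, arc, markers[:, 0])
    z = np.interp(s, arc, markers[:, 1])
    return np.column_stack([x, z])


# A straight but tilted array: two markers 5 mm apart along a 3-4-5 direction.
print(elements_from_markers([[0, 0], [3, 4]], n_elements=3, pitch=1.0))
```

Resampling by arc length rather than by x coordinate keeps the inter-element spacing equal to the physical pitch even when the array bends steeply.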

4. Discussion

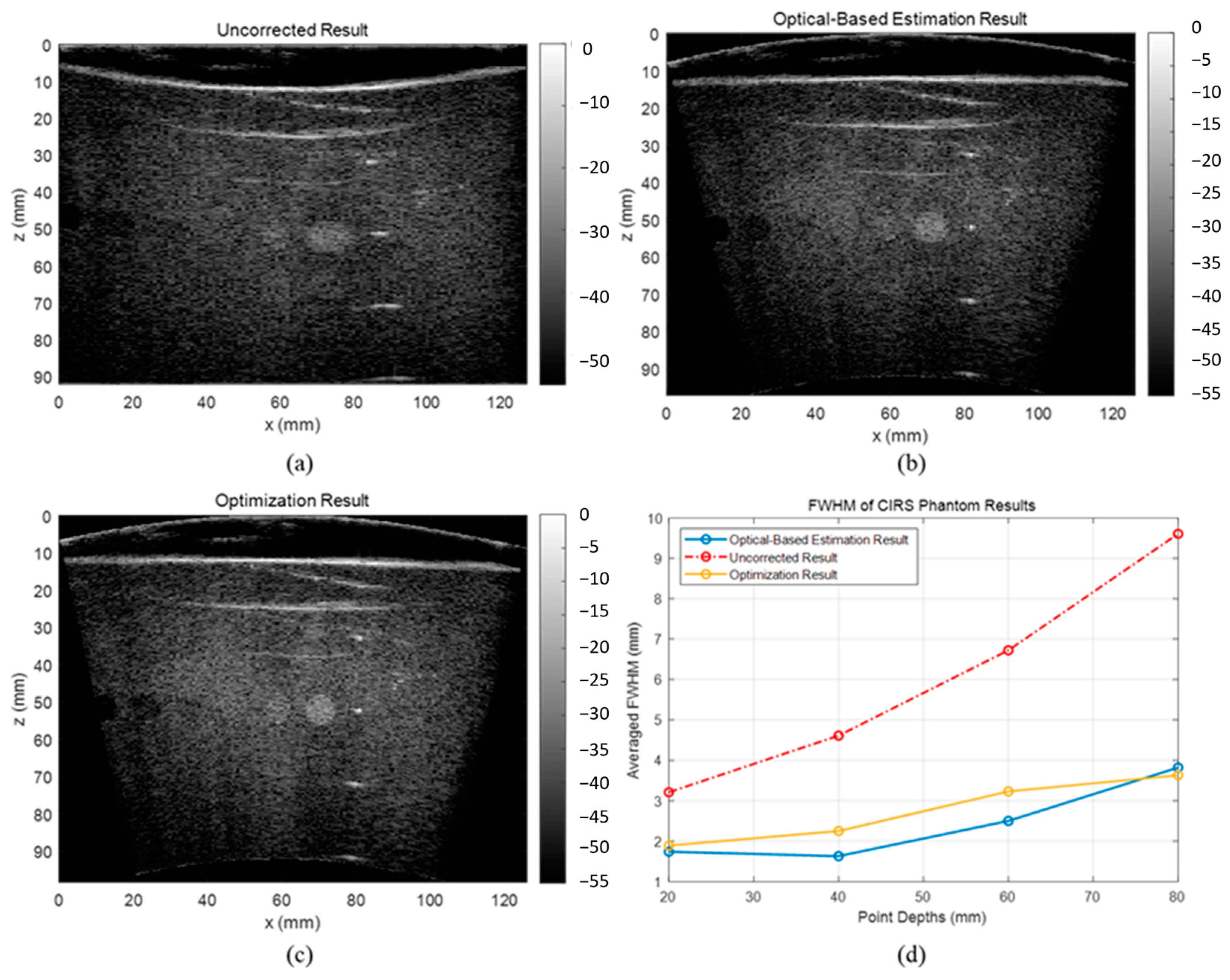

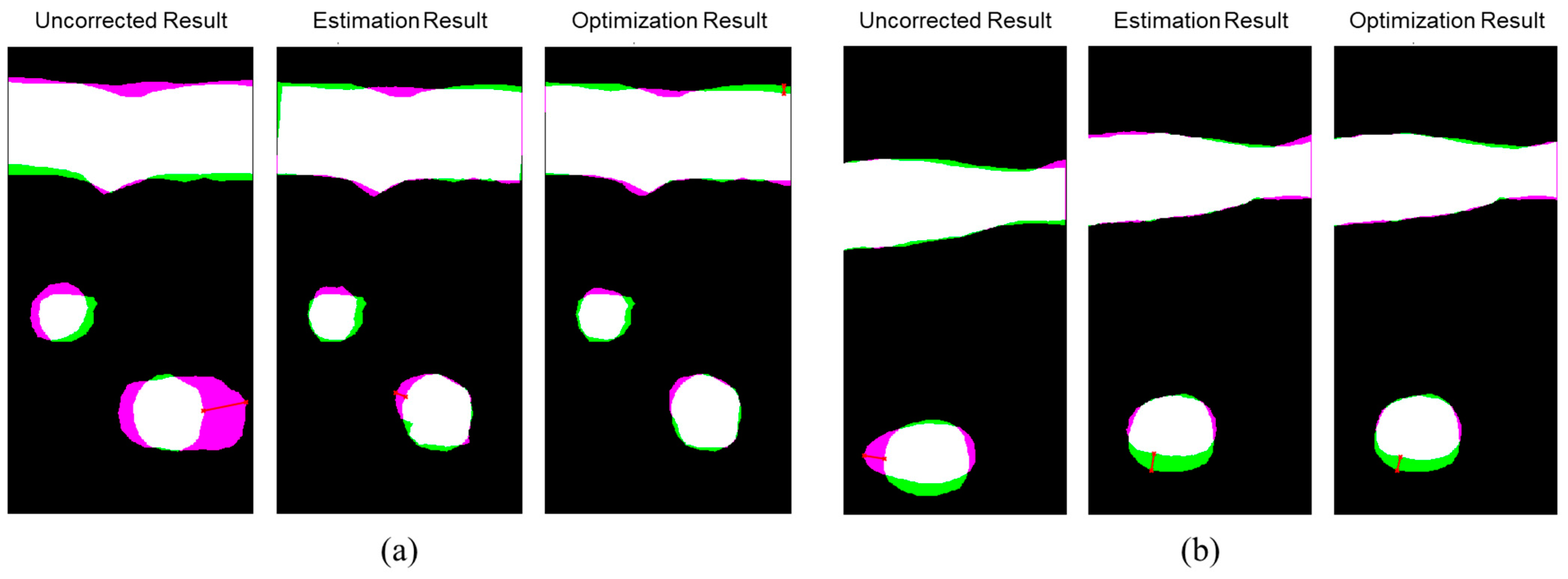

The evaluation results showed that the proposed optical-based shape estimation algorithm could estimate the array shape of the flexible array transducer with reasonable accuracy and reconstruct ultrasound images with significantly less defocusing and distortion than images reconstructed without any shape correction. The proposed shape optimization algorithm further improved the accuracy of the array shape estimation. The mean absolute error of the element positions was less than half the wavelength of the transmitted wave, which means the algorithm could reconstruct ultrasound images with optimal accuracy and quality. In addition, the computation time of the shape estimation algorithm was less than 0.01 s, whereas that of the shape optimization algorithm was about 1000 s for 200 iterations (roughly 5 s per iteration). Therefore, the shape estimation algorithm could support real-time ultrasound imaging with acceptable accuracy and frame rate. For specific frames that require higher accuracy and image quality, the shape optimization algorithm could be used to further improve the reconstructed images.
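The half-wavelength accuracy criterion can be checked with a few lines of Python. This sketch assumes that the "mean absolute error of the element positions" is the mean Euclidean distance between estimated and reference element positions. It also reuses the example values from the Introduction (5 MHz and 1540 m/s). These are assumptions, because the exact metric definition and probe frequency are specified in the Methods rather than here.

```python
import numpy as np

C = 1540.0  # assumed speed of sound [m/s]
F0 = 5e6    # assumed centre frequency [Hz]; substitute the probe's actual value


def element_position_mae(estimated, reference):
    """Mean Euclidean distance between estimated and reference element positions."""
    diff = np.asarray(estimated, float) - np.asarray(reference, float)
    return float(np.linalg.norm(diff, axis=1).mean())


def meets_half_wavelength(estimated, reference, c=C, f0=F0):
    """True if the mean position error is below lambda / 2 (positions in metres)."""
    return element_position_mae(estimated, reference) < c / f0 / 2


reference = np.zeros((3, 2))
estimated = np.array([[0.1e-3, 0.0], [0.0, 0.1e-3], [0.0, 0.0]])
print(element_position_mae(estimated, reference))   # mean of 0.1, 0.1 and 0 mm
print(meets_half_wavelength(estimated, reference))  # True (about 0.067 mm < 0.154 mm)
```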

The Shannon entropy is a measure of the information and uncertainty of random variables [67]. Hughes first used entropy to analyze ultrasound signals and indicated that it can quantitatively characterize changes in the microstructure of scattering media [74,75]. Tsui et al. applied the entropy of ultrasound backscattered signals to the assessment of multiple diseases [76,77]. These studies concluded that increasing the scatterer concentration produces stronger constructive wave interference and larger backscattered amplitudes. Under this condition, a wider range of echo amplitudes exists, and the uncertainty and unpredictability of the signal (its entropy) increase. Noda et al. assumed that a beam-summed image formed with a more accurate array shape would have smaller entropy [58], but this assumption was not discussed in depth. To the best of our knowledge, no study has evaluated the ultrasound beamforming process using entropy.
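For reference, one common way to compute the Shannon entropy of a beamformed image, H = −Σ p_i log2 p_i, is from the histogram of its normalised envelope amplitudes. The bin count (256), the normalisation by the image maximum, and the use of the linear (not log-compressed) envelope are assumptions made for this sketch; the exact definition used in this study is given in the Methods.

```python
import numpy as np


def image_entropy(envelope, n_bins=256):
    """Shannon entropy (bits) of the amplitude histogram of a beamformed image.

    envelope : envelope-detected image of any shape. Amplitudes are normalised
               by the image maximum, so the value reflects the shape of the
               amplitude distribution rather than the overall gain.
    """
    env = np.abs(np.asarray(envelope, dtype=float)).ravel()
    peak = env.max()
    if peak == 0:
        return 0.0  # an all-zero image carries no amplitude information
    counts, _ = np.histogram(env / peak, bins=n_bins, range=(0.0, 1.0))
    p = counts[counts > 0] / counts.sum()  # probabilities of occupied bins
    # -sum(p log2 p) written as sum(p log2(1/p)) so a single level gives +0.0.
    return float(np.sum(p * np.log2(1.0 / p)))


print(image_entropy(np.ones((4, 4))))             # 0.0: a single amplitude level
print(image_entropy(np.array([[1, 4], [1, 4]])))  # 1.0: two equally likely levels
```

Under this definition, an image whose amplitudes spread over many histogram bins has higher entropy than one concentrated in a few bins, which is the property the following discussion relies on.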

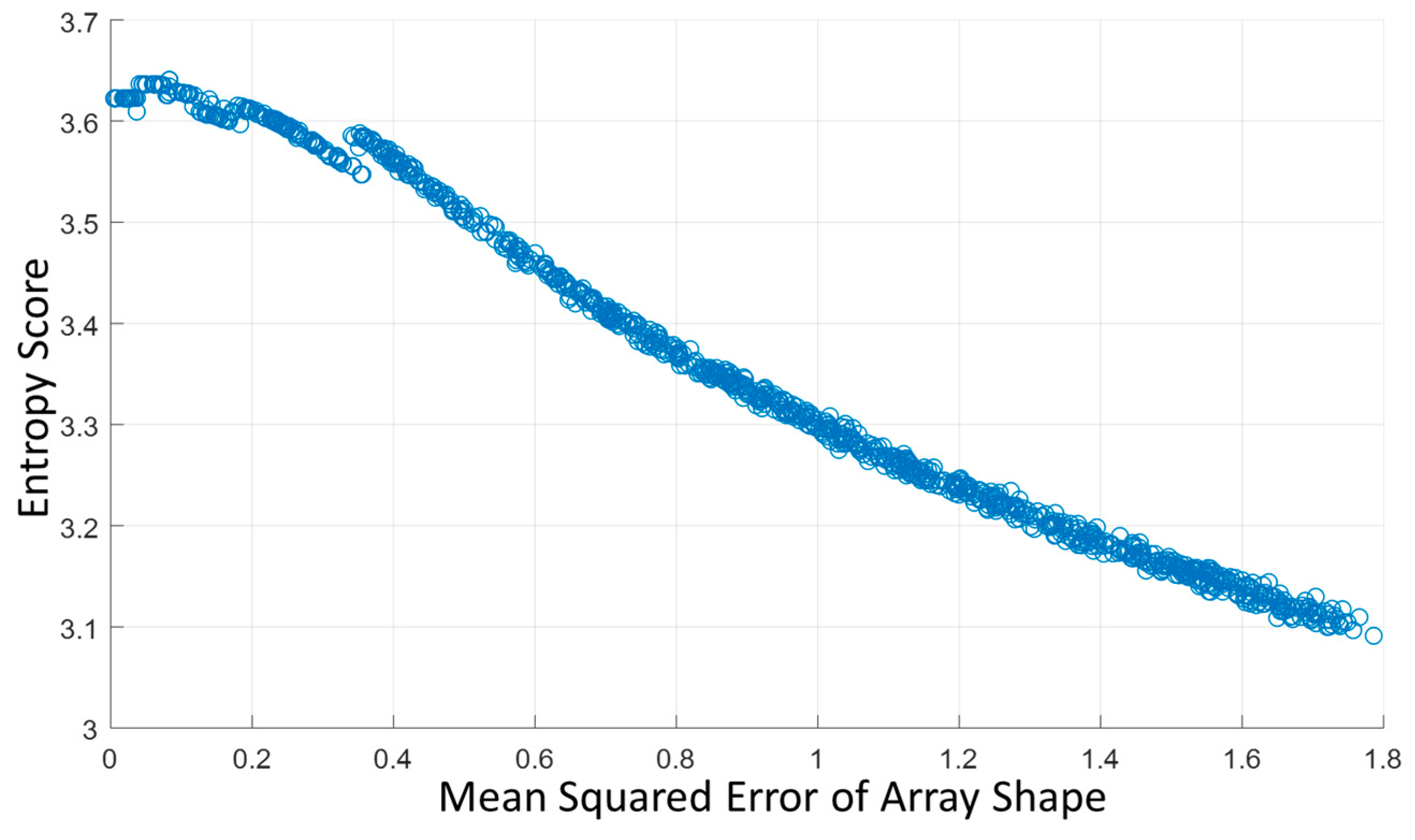

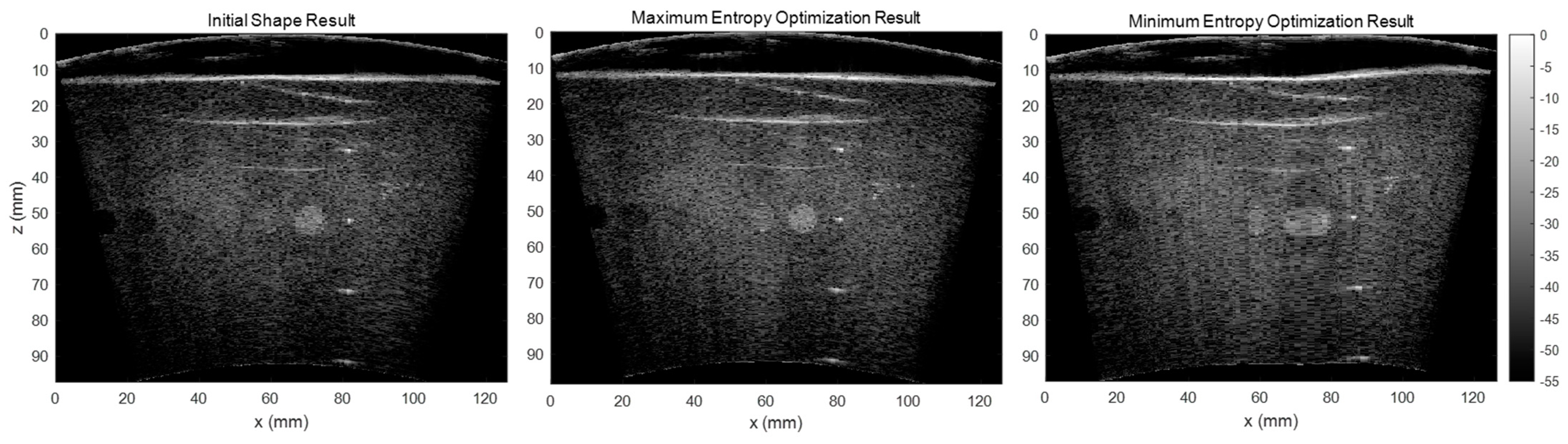

Because the RF channel data are delayed and summed according to the array shape to form the beamformed image, errors in the array shape cause the delayed RF data to be summed out of phase, which causes significant signal loss. This leads to smaller backscattered amplitudes and, following the reasoning above, a decrease in the signal's entropy. In addition to testing the correlation between array shape accuracy and beamformed image entropy, we also tested the effect of entropy by both maximizing and minimizing it in our shape optimization algorithm and compared the results.

Figure 13 shows the images reconstructed using the initial shape, the maximum entropy-optimized shape, and the minimum entropy-optimized shape. The results show that the array shape obtained by minimizing the entropy is worse than the initial shape. Therefore, we believe that a beamformed ultrasound image reconstructed with a more accurate array shape has larger entropy.

Here, we presented a shape estimation approach that consists of two sequential methods to estimate the array shape and reconstruct B-mode images acquired by a flexible array transducer using a deep learning method. One potential application of our method is improving ultrasound image reconstruction for in vivo tumor shape and size calculation [78]. With recent developments, nanomedicine has shown great potential for the diagnosis and treatment of many diseases [79]. Nanocarriers can actively and precisely target tumors by binding to receptors overexpressed by cancer cells [80]. However, tumor size and shape may be important considerations in designing nanocarriers [79]. The method presented in this paper may increase the accuracy of tumor shape and size estimation, which, in turn, can improve the efficiency of nanocarrier design. Future studies are warranted to demonstrate the effectiveness of our method for tumor shape and size estimation.

This study has some limitations. First, the computation time of the shape optimization algorithm is long. As explained in the Methods, the Kronecker delta function was used to reconstruct the beamformed image. Because the Kronecker delta function cannot be differentiated with respect to the shape parameters, our shape optimization algorithm was implemented with a global solver that does not use the gradient with respect to the shape parameters. This significantly increased the computation time compared with a gradient descent-based optimizer. Inspired by previous literature [58], a future direction of this study is to replace the Kronecker delta function with a function that is differentiable with respect to the shape parameters. Second, the optical-based estimation algorithm relies on external devices, including the infrared optical tracker and passive marker spheres, which may be occluded during radiotherapy. The optical tracking system should therefore be properly set up in advance to avoid any interference. Third, the speed of sound was assumed to be homogeneous in this study. Changes in the speed of sound alter the ToF to the focal points, which has the same effect as errors in the array shape. Therefore, the proposed shape optimization algorithm may simultaneously compensate for array shape errors and a heterogeneous speed of sound. Future work is needed to study how a heterogeneous speed of sound affects the performance of the proposed algorithms.
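A small sketch shows why a Kronecker delta blocks gradient-based optimization. It assumes that the delta function selects the RF sample nearest to the computed ToF. This is our interpretation for illustration, not a statement of the Methods. The sketch contrasts this sampler with linear interpolation, which is one possible differentiable replacement; the function names are hypothetical.

```python
import numpy as np


def sample_delta(rf, t, fs):
    """Kronecker-delta sampling: weight 1 on the sample nearest to t*fs, 0 elsewhere."""
    n = np.arange(len(rf))
    weights = (n == np.rint(t * fs)).astype(float)
    return float(weights @ rf)


def sample_linear(rf, t, fs):
    """Linear interpolation: triangular weights that vary continuously with t."""
    n = np.arange(len(rf))
    weights = np.clip(1.0 - np.abs(n - t * fs), 0.0, None)
    return float(weights @ rf)


def finite_diff(f, rf, t, fs, dt):
    """Central finite-difference derivative of sampler f with respect to the delay t."""
    return (f(rf, t + dt, fs) - f(rf, t - dt, fs)) / (2 * dt)


rf = np.array([0.0, 1.0, 4.0, 9.0, 16.0])  # toy RF trace, one sample per unit time
fs, t, dt = 1.0, 1.3, 0.05
print(finite_diff(sample_delta, rf, t, fs, dt))   # 0.0: no gradient information
print(finite_diff(sample_linear, rf, t, fs, dt))  # ~3.0: slope between samples 1 and 2
```

The ToF is a smooth function of the element positions. A sampler that is differentiable in the delay therefore makes the summed image differentiable in the shape parameters by the chain rule, which a gradient descent-based optimizer needs. With the delta sampler, the derivative is zero almost everywhere, leaving only gradient-free global search.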