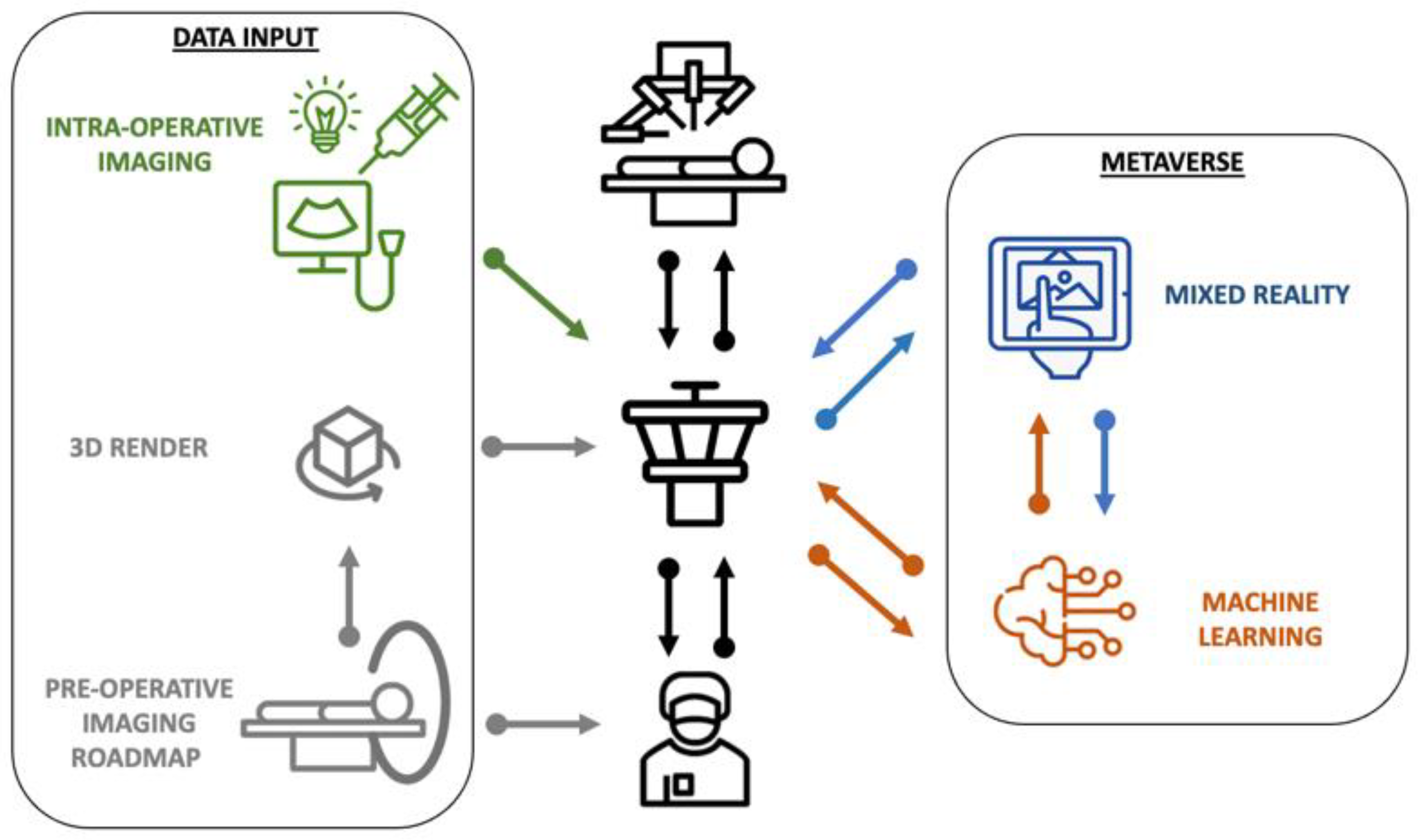

Where Robotic Surgery Meets the Metaverse

Author Contributions

Conflicts of Interest

References

- Giannone, F.; Felli, E.; Cherkaoui, Z.; Mascagni, P.; Pessaux, P. Augmented Reality and Image-Guided Robotic Liver Surgery. Cancers 2021, 13, 6268.

- Wendler, T.; van Leeuwen, F.W.B.; Navab, N.; van Oosterom, M.N. How molecular imaging will enable robotic precision surgery: The role of artificial intelligence, augmented reality, and navigation. Eur. J. Nucl. Med. Mol. Imaging 2021, 48, 4201–4224.

- Greco, F.; Cadeddu, J.A.; Gill, I.S.; Kaouk, J.H.; Remzi, M.; Thompson, R.H.; van Leeuwen, F.W.B.; van der Poel, H.G.; Fornara, P.; Rassweiler, J. Current Perspectives in the Use of Molecular Imaging To Target Surgical Treatments for Genitourinary Cancers. Eur. Urol. 2014, 65, 947–964.

- Alvarez Paez, A.M.; Brouwer, O.R.; Veenstra, H.J.; van der Hage, J.A.; Wouters, M.; Nieweg, O.E.; Valdés-Olmos, R.A. Decisive role of SPECT/CT in localization of unusual periscapular sentinel nodes in patients with posterior trunk melanoma: Three illustrative cases and a review of the literature. Melanoma Res. 2012, 22, 278–283.

- Porpiglia, F.; Checcucci, E.; Amparore, D.; Piramide, F.; Volpi, G.; Granato, S.; Verri, P.; Manfredi, M.; Bellin, A.; Piazzolla, P.; et al. Three-dimensional Augmented Reality Robot-assisted Partial Nephrectomy in Case of Complex Tumours (PADUA ≥ 10): A New Intraoperative Tool Overcoming the Ultrasound Guidance. Eur. Urol. 2020, 78, 229–238.

- Schneider, C.; Nguan, C.; Rohling, R.; Salcudean, S. Tracked “Pick-Up” Ultrasound for Robot-Assisted Minimally Invasive Surgery. IEEE Trans. Biomed. Eng. 2016, 63, 260–268.

- van Oosterom, M.N.; van der Poel, H.G.; Navab, N.; van de Velde, C.J.H.; van Leeuwen, F.W.B. Computer-assisted surgery: Virtual- and augmented-reality displays for navigation during urological interventions. Curr. Opin. Urol. 2018, 28, 205–213.

- Mezger, U.; Jendrewski, C.; Bartels, M. Navigation in surgery. Langenbecks Arch. Surg. 2013, 398, 501–514.

- Meershoek, P.; van den Berg, N.S.; Lutjeboer, J.; Burgmans, M.C.; van der Meer, R.W.; van Rijswijk, C.S.P.; van Oosterom, M.N.; van Erkel, A.R.; van Leeuwen, F.W.B. Assessing the value of volume navigation during ultrasound-guided radiofrequency- and microwave-ablations of liver lesions. Eur. J. Radiol. Open 2021, 8, 100367.

- Amparore, D.; Checcucci, E.; Piazzolla, P.; Piramide, F.; De Cillis, S.; Piana, A.; Verri, P.; Manfredi, M.; Fiori, C.; Vezzetti, E.; et al. Indocyanine Green Drives Computer Vision Based 3D Augmented Reality Robot Assisted Partial Nephrectomy: The Beginning of “Automatic” Overlapping Era. Urology 2022, 164, e312–e316.

- Ma, R.; Vanstrum, E.B.; Lee, R.; Chen, J.; Hung, A.J. Machine learning in the optimization of robotics in the operative field. Curr. Opin. Urol. 2020, 30, 808–816.

- Judkins, T.N.; Oleynikov, D.; Stergiou, N. Objective evaluation of expert and novice performance during robotic surgical training tasks. Surg. Endosc. 2009, 23, 590–597.

- Boekestijn, I.; Azargoshasb, S.; van Oosterom, M.N.; Slof, L.J.; Dibbets-Schneider, P.; Dankelman, J.; van Erkel, A.R.; Rietbergen, D.D.D.; van Leeuwen, F.W.B. Value-assessment of computer-assisted navigation strategies during percutaneous needle placement. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1775–1785.

- Hung, A.J.; Chen, J.; Che, Z.; Nilanon, T.; Jarc, A.; Titus, M.; Oh, P.J.; Gill, I.S.; Liu, Y. Utilizing Machine Learning and Automated Performance Metrics to Evaluate Robot-Assisted Radical Prostatectomy Performance and Predict Outcomes. J. Endourol. 2018, 32, 438–444.

- Bogomolova, K.; van Merriënboer, J.J.G.; Sluimers, J.E.; Donkers, J.; Wiggers, T.; Hovius, S.E.R.; van der Hage, J.A. The effect of a three-dimensional instructional video on performance of a spatially complex procedure in surgical residents in relation to their visual-spatial abilities. Am. J. Surg. 2021, 222, 739–745.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

van Leeuwen, F.W.B.; van der Hage, J.A. Where Robotic Surgery Meets the Metaverse. Cancers 2022, 14, 6161. https://doi.org/10.3390/cancers14246161
