Deep Learning Techniques to Diagnose Lung Cancer

Simple Summary

Abstract

1. Introduction

2. Lung Imaging Techniques

3. Deep Learning-Based Imaging Techniques

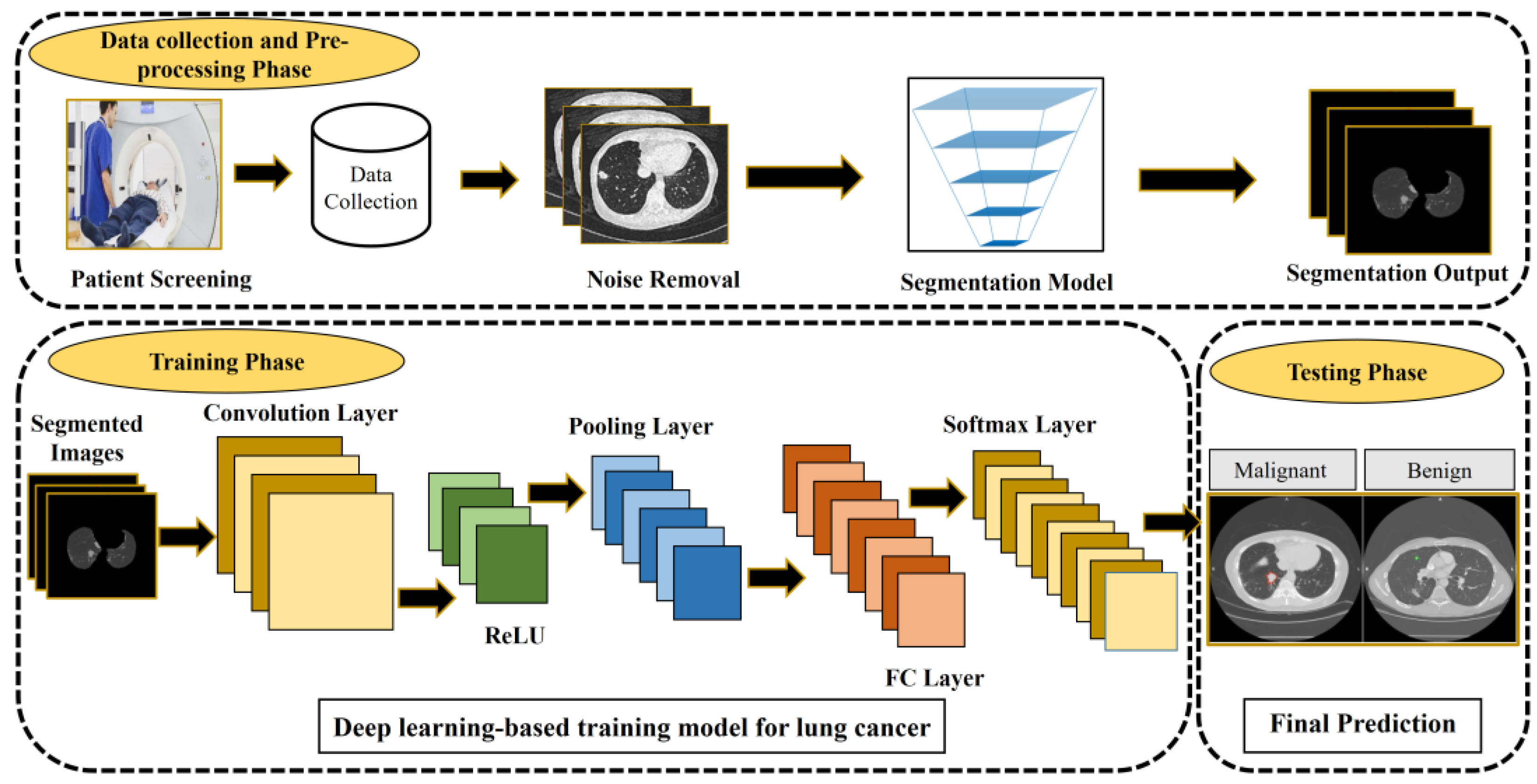

4. Lung Cancer Prediction Using Deep Learning

4.1. Imaging Pre-Processing Techniques and Evaluation

4.1.1. Pre-Processing Techniques

4.1.2. Performance Metrics

4.2. Datasets

4.3. Lung Image Segmentation

4.4. Lung Nodule Detection

4.5. Lung Nodule Classification

5. Challenges and Future Research Directions

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

References

- Siegel, R.L.; Miller, K.D.; Fuchs, H.E.; Jemal, A. Cancer statistics. A Cancer J. Clin. 2022, 72, 7–33. [Google Scholar] [CrossRef] [PubMed]

- Bade, B.C.; Cruz, C. Lung cancer. Clin. Chest Med. 2020, 41, 1–24. [Google Scholar] [CrossRef]

- Stamatis, G.; Eberhard, W.; Pöttgen, C. Surgery after multimodality treatment for non-small-cell lung cancer. Lung Cancer 2004, 45, S107–S112. [Google Scholar] [CrossRef] [PubMed]

- Chiang, T.A.; Chen, P.H.; Wu, P.F.; Wang, T.N.; Chang, P.Y.; Ko, A.M.; Huang, M.S.; Ko, Y.C. Important prognostic factors for the long-term survival of lung cancer subjects in Taiwan. BMC Cancer 2008, 8, 324. [Google Scholar] [CrossRef] [PubMed]

- Journy, N.; Rehel, J.L.; Pointe, H.D.L.; Lee, C.; Brisse, H.; Chateil, J.F.; Caer-Lorho, S.; Laurier, D.; Bernier, M.O. Are the studies on cancer risk from ct scans biased by indication? Elements of answer from a large-scale cohort study in France. Br. J. Cancer 2015, 112, 1841–1842. [Google Scholar] [CrossRef]

- National Lung Screening Trial Research Team. Reduced lung-cancer mortality with low-dose computed tomographic screening. N. Engl. J. Med. 2011, 365, 395–409. [Google Scholar] [CrossRef]

- Ippolito, D.; Capraro, C.; Guerra, L.; De Ponti, E.; Messa, C.; Sironi, S. Feasibility of perfusion CT technique integrated into conventional (18) FDG/PET-CT studies in lung cancer patients: Clinical staging and functional information in a single study. Eur. J. Nucl. Med. Mol. Imaging 2013, 40, 156–165. [Google Scholar] [CrossRef]

- Park, S.Y.; Cho, A.; Yu, W.S.; Lee, C.Y.; Lee, J.G.; Kim, D.J.; Chung, K.Y. Prognostic value of total lesion glycolysis by F-18-FDG PET/CT in surgically resected stage IA non-small cell lung cancer. J. Nucl. Med. 2015, 56, 45–49. [Google Scholar] [CrossRef][Green Version]

- Griffiths, H. Magnetic induction tomography. Meas. Sci. Technol. 2011, 12, 1126–1131. [Google Scholar] [CrossRef]

- Brown, M.S.; Lo, P.; Goldin, J.G.; Barnoy, E.; Kim, G.H.J.; Mcnitt-Gray, M.F.; Aberle, D.R. Toward clinically usable CAD for lung cancer screening with computed tomography. Eur. Radiol. 2020, 30, 1822. [Google Scholar] [CrossRef]

- Roberts, H.C.; Patsios, D.; Kucharczyk, M.; Paul, N.; Roberts, T.P. The utility of computer-aided detection (CAD) for lung cancer screening using low-dose CT. Int. Congr. Ser. 2005, 1281, 1137–1142. [Google Scholar] [CrossRef]

- Abdul, L.; Rajasekar, S.; Lin, D.S.Y.; Venkatasubramania Raja, S.; Sotra, A.; Feng, Y.; Liu, A.; Zhang, B. Deep-lumen assay-human lung epithelial spheroid classification from brightfield images using deep learning. Lab A Chip 2021, 21, 447–448. [Google Scholar] [CrossRef] [PubMed]

- Armato, S.G.I. Deep learning demonstrates potential for lung cancer detection in chest radiography. Radiology 2020, 297, 697–698. [Google Scholar] [CrossRef] [PubMed]

- Ali, S.; Li, J.; Pei, Y.; Khurram, R.; Rehman, K.U.; Rasool, A.B. State-of-the-Art Challenges and Perspectives in Multi-Organ Cancer Diagnosis via Deep Learning-Based Methods. Cancers 2021, 13, 5546. [Google Scholar] [CrossRef]

- Riquelme, D.; Akhloufi, M.A. Deep Learning for Lung Cancer Nodules Detection and Classification in CT Scans. AI 2020, 1, 28–67. [Google Scholar] [CrossRef]

- Zhukov, T.A.; Johanson, R.A.; Cantor, A.B.; Clark, R.A.; Tockman, M.S. Discovery of distinct protein profiles specific for lung tumors and pre-malignant lung lesions by SELDI mass spectrometry. Lung Cancer 2003, 40, 267–279. [Google Scholar] [CrossRef]

- Zeiser, F.A.; Costa, C.; Ramos, G.; Bohn, H.C.; Santos, I.; Roehe, A.V. Deepbatch: A hybrid deep learning model for interpretable diagnosis of breast cancer in whole-slide images. Expert Syst. Appl. 2021, 185, 115586. [Google Scholar] [CrossRef]

- Mandal, M.; Vipparthi, S.K. An empirical review of deep learning frameworks for change detection: Model design, experimental frameworks, challenges and research needs. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6101–6122. [Google Scholar] [CrossRef]

- Alireza, H.; Cheikh, M.; Annika, K.; Jari, V. Deep learning for forest inventory and planning: A critical review on the remote sensing approaches so far and prospects for further applications. Forestry 2022, 95, 451–465. [Google Scholar]

- Highamcatherine, F.; Highamdesmond, J. Deep learning. SIAM Rev. 2019, 32, 860–891. [Google Scholar]

- Latifi, K.; Dilling, T.J.; Feygelman, V.; Moros, E.G.; Stevens, C.W.; Montilla-Soler, J.L.; Zhang, G.G. Impact of dose on lung ventilation change calculated from 4D-CT using deformable image registration in lung cancer patients treated with SBRT. J. Radiat. Oncol. 2015, 4, 265–270. [Google Scholar] [CrossRef]

- Lakshmanaprabu, S.K.; Mohanty, S.N.; Shankar, K.; Arunkumar, N.; Ramirez, G. Optimal deep learning model for classification of lung cancer on CT images. Future Gener. Comput. Syst. 2019, 92, 374–382. [Google Scholar]

- Shim, S.S.; Lee, K.S.; Kim, B.T.; Chung, M.J.; Lee, E.J.; Han, J.; Choi, J.Y.; Kwon, O.J.; Shim, Y.M.; Kim, S. Non-small cell lung cancer: Prospective comparison of integrated FDG PET/CT and CT alone for preoperative staging. Radiology 2005, 236, 1011–1019. [Google Scholar] [CrossRef] [PubMed]

- Ab, G.D.C.; Domínguez, J.F.; Bolton, R.D.; Pérez, C.F.; Martínez, B.C.; García-Esquinas, M.G.; Carreras Delgado, J.L. PET-CT in presurgical lymph node staging in non-small cell lung cancer: The importance of false-negative and false-positive findings. Radiologia 2017, 59, 147–158. [Google Scholar]

- Yaturu, S.; Patel, R.A. Metastases to the thyroid presenting as a metabolically inactive incidental thyroid nodule with stable size in 15 months. Case Rep. Endocrinol. 2014, 2014, 643986. [Google Scholar] [CrossRef][Green Version]

- Eschmann, S.M.; Friedel, G.; Paulsen, F.; Reimold, M.; Hehr, T.; Budach, W.; Langen, H.J.; Bares, R. 18F-FDG PET for assessment of therapy response and preoperative re-evaluation after neoadjuvant radio-chemotherapy in stage III non-small cell lung cancer. Eur. J. Nucl. Med. Mol. Imaging 2007, 34, 463–471. [Google Scholar] [CrossRef]

- Lee, W.K.; Lau, E.W.; Chin, K.; Sedlaczek, O.; Steinke, K. Modern diagnostic and therapeutic interventional radiology in lung cancer. J. Thorac. Dis. 2013, 5, 511–523. [Google Scholar]

- Zurek, M.; Bessaad, A.; Cieslar, K.; Crémillieux, Y. Validation of simple and robust protocols for high-resolution lung proton MRI in mice. Magn. Reson. Med. 2010, 64, 401–407. [Google Scholar] [CrossRef]

- Burris, N.S.; Johnson, K.M.; Larson, P.E.Z.; Hope, M.D.; Nagle, S.K.; Behr, S.C.; Hope, T.A. Detection of small pulmonary nodules with ultrashort echo time sequences in oncology patients by using a PET/MR system. Radiology 2016, 278, 239–246. [Google Scholar] [CrossRef]

- Fink, C.; Puderbach, M.; Biederer, J.; Fabel, M.; Dietrich, O.; Kauczor, H.U.; Reiser, M.F.; Schönberg, S.O. Lung MRI at 1.5 and 3 tesla: Observer preference study and lesion contrast using five different pulse sequences. Investig. Radiol. 2007, 42, 377–383. [Google Scholar] [CrossRef]

- Cieszanowski, A.; Anyszgrodzicka, A.; Szeszkowski, W.; Kaczynski, B.; Maj, E.; Gornicka, B.; Grodzicki, M.; Grudzinski, I.P.; Stadnik, A.; Krawczyk, M.; et al. Characterization of focal liver lesions using quantitative techniques: Comparison of apparent diffusion coefficient values and T2 relaxation times. Eur. Radiol. 2012, 22, 2514–2524. [Google Scholar] [CrossRef] [PubMed]

- Hughes, D.; Tiddens, H.; Wild, J.M. Lung imaging in cystic fibrosis. Imaging Decis. MRI 2009, 13, 28–37. [Google Scholar] [CrossRef]

- Groth, M.; Henes, F.O.; Bannas, P.; Muellerleile, K.; Adam, G.; Regier, M. Intraindividual comparison of contrast-enhanced MRI and unenhanced SSFP sequences of stenotic and non-stenotic pulmonary artery diameters. Rofo 2011, 183, 47–53. [Google Scholar] [CrossRef] [PubMed]

- Chong, A.L.; Chandra, R.V.; Chuah, K.C.; Roberts, E.L.; Stuckey, S.L. Proton density MRI increases detection of cervical spinal cord multiple sclerosis lesions compared with T2-weighted fast spin-echo. Am. J. Neuroradiol. 2016, 37, 180–184. [Google Scholar] [CrossRef] [PubMed]

- Alzeibak, S.; Saunders, N.H. A feasibility study of in vivo electromagnetic imaging. Phys. Med. Biol. 1993, 38, 151–160. [Google Scholar] [CrossRef] [PubMed]

- Merwa, R.; Hollaus, K.; Brunner, P.; Scharfetter, H. Solution of the inverse problem of magnetic induction tomography (MIT). Physiol. Meas. 2006, 26, 241–250. [Google Scholar] [CrossRef] [PubMed]

- Fernandes, S.L.; Gurupur, V.P.; Lin, H.; Martis, R.J. A novel fusion approach for early lung cancer detection using computer aided diagnosis techniques. J. Med. Imaging Health Inform. 2017, 7, 1841–1850. [Google Scholar] [CrossRef]

- Lu, H. Computer-aided diagnosis research of a lung tumor based on a deep convolutional neural network and global features. BioMed Res. Int. 2021, 2021, 5513746. [Google Scholar] [CrossRef] [PubMed]

- Standford.edu. Deep Learning Tutorial. Available online: http://deeplearning.stanford.edu/tutorial/ (accessed on 5 October 2022).

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language Modeling with Gated Convolutional Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 933–941. [Google Scholar]

- Jeong, J.; Lei, Y.; Shu, H.K.; Liu, T.; Wang, L.; Curran, W.; Shu, H.-K.; Mao, H.; Yang, X. Brain tumor segmentation using 3D mask R-CNN for dynamic susceptibility contrast enhanced perfusion imaging. Med. Imaging Biomed. Appl. Mol. Struct. Funct. Imaging 2020, 65, 185009. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 6. [Google Scholar] [CrossRef]

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Liu, W.; Chen, W.; Wang, C.; Mao, Q.; Dai, X. Capsule Embedded ResNet for Image Classification. In Proceedings of the 2021 5th International Conference on Computer Science and Artificial Intelligence (CSAI 2021), Beijing, China, 4–6 December 2021. [Google Scholar]

- Guan, X.; Gao, W.; Peng, H.; Shu, N.; Gao, D.W. Image-based incipient fault classification of electrical substation equipment by transfer learning of deep convolutional neural network. IEEE Can. J. Electr. Comput. Eng. 2021, 45, 1–8. [Google Scholar] [CrossRef]

- Warin, K.; Limprasert, W.; Suebnukarn, S.; Jinaporntham, S.; Jantana, P. Performance of deep convolutional neural network for classification and detection of oral potentially malignant disorders in photographic images. Int. J. Oral Maxillofac. Surg. 2022, 51, 699–704. [Google Scholar] [CrossRef] [PubMed]

- Magge, A.; Weissenbacher, D.; Sarker, A.; Scotch, M.; Gonzalez-Hernandez, G. Bi-directional recurrent neural network models for geographic location extraction in biomedical literature. Pac. Symp. Biocomput. 2019, 24, 100–111. [Google Scholar] [PubMed]

- Garg, J.S. Improving segmentation by denoising brain MRI images through interpolation median filter in ADTVFCM. Int. J. Comput. Trends Technol. 2013, 4, 187–188. [Google Scholar]

- Siddeq, M. De-noise color or gray level images by using hybred dwt with wiener filter. Hepato-Gastroenterology 2014, 61, 1308–1312. [Google Scholar]

- Rajendran, K.; Tao, S.; Zhou, W.; Leng, S.; Mccollough, C. Spectral prior image constrained compressed sensing reconstruction for photon-counting detector based CT using a non-local means filtered prior (NLM-SPICCS). Med. Phys. 2018, 6, 45. [Google Scholar]

- Powers, D.M.W. Evaluation: From precision, recall and f-measure to roc., informedness, markedness & correlation. J. Mach. Learn. Technol. 2011, 2, 37–63. [Google Scholar]

- Das, A.; Rajendra Acharya, U.; Panda, S.S.; Sabut, S. Deep learning-based liver cancer detection using watershed transform and Gaussian mixture model techniques. Cogn. Syst. Res. 2019, 54, 165–175. [Google Scholar] [CrossRef]

- Lung Image Database Consortium (LIDC). Available online: https://imaging.nci.nih.gov/ncia/login.jsf (accessed on 5 October 2022).

- Armato Samuel, G.; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Zhao, B.; Aberle, D.R.; Henschke, C.I.; Hoffman, E.A.; et al. Data from LIDC-IDRI. 2015. Available online: https://wiki.cancerimagingarchive.net/display/Public/LIDC-IDRI (accessed on 5 October 2022).

- Setio, A.A.A.; Traverso, A.; de Bel, T.; Berens, M.S.N.; van den Bogaard, C.; Cerello, P.; Chen, H.; Dou, Q.; Fantacci, M.E.; Geurts, B.; et al. Validation, Comparison, and Combination of Algorithms for Automatic Detection of Pulmonary Nodules in Computed Tomography Images: The LUNA16 Challenge. Med. Image Anal. 2017, 42, 1–13. [Google Scholar] [CrossRef]

- ELCAP Public Lung Image Database. 2014. Available online: http://www.via.cornell.edu/lungdb.html (accessed on 5 October 2022).

- Pedrosa, J.; Aresta, G.; Ferreira, C.; Rodrigues, M.; Leito, P.; Carvalho, A.S.; Rebelo, J.; Negrao, E.; Ramos, I.; Cunha, A.; et al. LNDb: A Lung Nodule Database on Computed Tomography. arXiv 2019, arXiv:1911.08434. [Google Scholar]

- Prasad, D.; Ujjwal, B.; Sanjay, T. LNCDS: A 2D-3D cascaded CNN approach for lung nodule classification, detection and segmentation. Biomed. Signal Process. Control. 2021, 67, 102527. [Google Scholar]

- Shiraishi, J.; Katsuragawa, S.; Ikezoe, A.; Matsumoto, T.; Kobayashi, T.; Komatsu, K.; Matsiu, M.; Fujita, H.; Kodera, Y.; Doi, K. Development of a digital image database for chest radiographs with and without a lung nodule: Receiver operating characteristic analysis of radiologists’ detection of pulmonary nodules. Am. J. Roentgen. 2000, 174, 71–74. [Google Scholar] [CrossRef] [PubMed]

- Costa, D.D.; Broodman, I.; Hoogsteden, H.; Luider, T.; Klaveren, R.V. Biomarker identification for early detection of lung cancer by proteomic techniques in the NELSON lung cancer screening trial. Cancer Res. 2008, 68, 3961. [Google Scholar]

- Van Ginneken, B.; Armato, S.G.; de Hoop, B.; van Amelsvoort-van de Vorst, S.; Duindam, T.; Niemeijer, M.; Murphy, K.; Schilham, A.; Retico, A.; Fantacci, M.E.; et al. Comparing and Combining Algorithms for Computer-Aided Detection of Pulmonary Nodules in Computed Tomography Scans: The ANODE09 Study. Med. Image Anal. 2010, 14, 707–722. [Google Scholar] [CrossRef] [PubMed]

- Hospital, S.Z. A Trial to Evaluate the Impact of Lung-Protective Intervention in Patients Undergoing Esophageal Cancer Surgery; US National Library of Medicine: Bethesda, MD, USA, 2013. [Google Scholar]

- Armato Samuel, G., III; Hadjiiski, L.; Tourassi, G.D.; Drukker, K.; Giger, M.L.; Li, F.; Redmond, G.; Farahani, K.; Kirby, J.S.; Clarke, L.P. SPIE-AAPM-NCI Lung Nodule Classification Challenge Dataset. 2015. Available online: https://wiki.cancerimagingarchive.net/display/Public/SPIE-AAPM+Lung+CT+Challenge (accessed on 5 October 2022).

- Li, T.Y.; Li, S.P.; Zhang, Q.L. Protective effect of ischemic preconditioning on lung injury induced by intestinal ischemia/reperfusion in rats. Mil. Med. J. South China 2011, 25, 107–110. [Google Scholar]

- Li, Y.; Zhang, L.; Chen, H.; Yang, N. Lung nodule detection with deep learning in 3D thoracic MR images. IEEE Access 2019, 7, 37822–37832. [Google Scholar] [CrossRef]

- Aerts, H.; Velazquez, E.; Leijenaar, R.; Parmar, C.; Grossman, P.; Carvalho, S.; Bussink, J.; Monshouwer, R.; Haibe-Kains, B.; Rietveld, D. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 2014, 5, 4006. [Google Scholar] [CrossRef]

- Danish Lung Cancer Screening Trial (DLCST)—Full Text View—ClinicalTrials.Gov. Available online: https://clinicaltrials.gov/ct2/show/NCT00496977 (accessed on 5 October 2022).

- Trial Summary—Learn—NLST—The Cancer Data Access System. Available online: https://biometry.nci.nih.gov/cdas/learn/nlst/trial-summary/ (accessed on 5 October 2022).

- Hu, S.; Hoffman, E.A.; Reinhardt, J.M. Accurate lung segmentation for accurate quantization of volumetric X-ray CT images. IEEE Trans. Med. Imaging 2001, 20, 490–498. [Google Scholar] [CrossRef]

- Dawoud, A. Lung segmentation in chest radiographs by fusing shape information in iterative thresholding. Comput. Vis. IET 2011, 5, 185–190. [Google Scholar] [CrossRef]

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Sys. Man Cyber. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Peng, T.; Wang, C.; Zhang, Y.; Wang, J. H-SegNet: Hybrid segmentation network for lung segmentation in chest radiographs using mask region-based convolutional neural network and adaptive closed polyline searching method. Phys. Med. Biol. 2022, 67, 075006. [Google Scholar] [CrossRef] [PubMed]

- Tseng, L.Y.; Huang, L.C. An adaptive thresholding method for automatic lung segmentation in CT images. In Proceedings of the IEEE Africon, Nairobi, Kenya, 23–25 September 2009; pp. 1–5. [Google Scholar]

- Dehmeshki, J.; Amin, H.; Valdivieso, M.; Ye, X. Segmentation of pulmonary nodules in thoracic CT scans: A region growing approach. IEEE Trans. Med. Imaging 2008, 27, 467–480. [Google Scholar] [CrossRef] [PubMed]

- Fabijacska, A. The influence of pre-processing of CT images on airway tree segmentation using 3D region growing. In Proceedings of the 5th International Conference on Perspective Technologies and Methods in MEMS Design, Lviv, Ukraine, 22–24 April 2009. [Google Scholar]

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331. [Google Scholar] [CrossRef]

- Lan, Y.; Xu, N.; Ma, X.; Jia, X. Segmentation of Pulmonary Nodules in Lung CT Images based on Active Contour Model. In Proceedings of the 14th IEEE International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 20–21 August 2022. [Google Scholar]

- Wang, S.; Zhou, M.; Olivier, G.; Tang, Z.C.; Dong, D.; Liu, Z.Y.; Tian, J. A Multi-view Deep Convolutional Neural Networks for Lung Nodule Segmentation. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 11–15 July 2017; pp. 1752–1755. [Google Scholar]

- Hamidian, S.; Sahiner, B.; Petrick, N.; Pezeshk, A. 3D Convolutional Neural Network for Automatic Detection of Lung Nodules in Chest CT. Proc. SPIE Int. Soc. Opt. Eng. 2017, 10134, 1013409. [Google Scholar]

- Sun, X.F.; Lin, H.; Wang, S.Y.; Zheng, L.M. Industrial robots sorting system based on improved faster RCNN. Comput. Syst. Appl. 2019, 28, 258–263. [Google Scholar]

- Cao, H.C.; Liu, H.; Song, E.; Hung, C.C.; Ma, G.Z.; Xu, X.Y.; Jin, R.C.; Jianguo Lu, J.G. Dual-branch residual network for lung nodule segmentation. Appl. Soft Comput. 2020, 86, 105934. [Google Scholar] [CrossRef]

- Banu, S.F.; Sarker, M.; Abdel-Nasser, M.; Puig, D.; Raswan, H.A. AWEU-Net: An attention-aware weight excitation u-net for lung nodule segmentation. arXiv 2021, arXiv:2110.05144. [Google Scholar] [CrossRef]

- Dutta, K. Densely connected recurrent residual (DENSE R2UNET) convolutional neural network for segmentation of lung CT images. arXiv 2021, arXiv:2102.00663. [Google Scholar]

- Keshani, M.; Azimifar, Z.; Tajeripour, F.; Boostani, R. Lung nodule segmentation and recognition using SVM classifier and active contour modeling: A complete intelligent system. Comput. Biol. Med. 2013, 43, 287–300. [Google Scholar] [CrossRef]

- Qi, S.L.; Si, G.L.; Yue, Y.; Meng, X.F.; Cai, J.F.; Kang, Y. Lung nodule segmentation based on thoracic CT images. Beijing Biomed. Eng. 2014, 33, 29–34. [Google Scholar]

- Wang, X.P.; Wen, Z.; Ying, C. Tumor segmentation in lung CT images based on support vector machine and improved level set. Optoelectron. Lett. 2015, 11, 395–400. [Google Scholar] [CrossRef]

- Shen, S.; Bui, A.; Cong, J.J.; Hsu, W. An automated lung segmentation approach using bidirectional chain codes to improve nodule detection accuracy. Comput. Biol. Med. 2015, 57(C), 139–149. [Google Scholar] [CrossRef] [PubMed]

- Roth, H.R.; Farag, A.; Le, L.; Turkbey, E.B.; Summers, R.M. Deep convolutional networks for pancreas segmentation in CT imaging. Proc. SPIE 2015, 9413, 94131G. [Google Scholar]

- Yip, S.; Chintan, P.; Daniel, B.; Jose, E.; Steve, P.; John, K.; Aerts, H.J.W.L. Application of the 3D slicer chest imaging platform segmentation algorithm for large lung nodule delineation. PLoS ONE 2017, 12, e0178944. [Google Scholar] [CrossRef]

- Firdouse, M.J.; Balasubramanian, M. A survey on lung segmentation methods. Adv. Comput. Sci. Technol. 2017, 10, 2875–2885. [Google Scholar]

- Khosravan, N.; Bagci, U. Semi-Supervised Multi-Task Learning for Lung Cancer Diagnosis. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2018, 2018, 710–713. [Google Scholar]

- Tong, G.; Li, Y.; Chen, H.; Zhang, Q.; Jiang, H. Improved U-NET network for pulmonary nodules segmentation. Optik 2018, 174, 460–469. [Google Scholar] [CrossRef]

- Jiang, J.; Hu, Y.C.; Liu, C.J.; Darragh, H.; Hellmann, M.D.; Deasy, J.O.; Mageras, G.; Veeraraghavan, H. Multiple resolution residually connected feature streams for automatic lung tumor segmentation from CT images. IEEE Trans. Med. Imaging 2019, 38, 134–144. [Google Scholar] [CrossRef]

- Burlutskiy, N.; Gu, F.; Wilen, L.K.; Backman, M.; Micke, P. A deep learning framework for automatic diagnosis in lung cancer. In Proceedings of the 1st Conference on Medical Imaging with Deep Learning (MIDL 2018), Amsterdam, The Netherlands, 4–6 July 2018. [Google Scholar]

- Yan, H.; Lu, H.; Ye, M.; Yan, K.; Jin, Q. Improved Mask R-CNN for Lung Nodule Segmentation. In Proceedings of the 2019 10th International Conference on Information Technology in Medicine and Education (ITME), Qingdao, China, 23–25 August 2019; pp. 137–147. [Google Scholar]

- Xiao, Z.; Liu, B.; Geng, L.; Zhang, F.; Liu, Y. Segmentation of lung nodules using improved 3D-Unet neural network. Symmetry 2020, 12, 1787. [Google Scholar] [CrossRef]

- Kashyap, M.; Panjwani, N.; Hasan, M.; Huang, C.; Bush, K.; Dong, P.; Zaky, S.; Chin, A.; Vitzthum, L.; Loo, B.; et al. Deep learning based identification and segmentation of lung tumors on computed tomography images. Int. J. Radiat. Oncol. Biol. Phys. 2021, 111(3S), E92–E93. [Google Scholar] [CrossRef]

- Chen, C.; Zhou, K.; Zha, M.; Qu, X.; Xiao, R. An effective deep neural network for lung lesions segmentation from COVID-19 CT images. IEEE Trans. Ind. Inform. 2021, 17, 6528–6538. [Google Scholar] [CrossRef]

- Zhang, M.; Li, H.; Pan, S.; Lyu, J.; Su, S. Convolutional neural networks based lung nodule classification: A surrogate-assisted evolutionary algorithm for hyperparameter optimization. IEEE Trans. Evol. Comput. 2021, 25, 869–882. [Google Scholar] [CrossRef]

- Jalali, Y.; Fateh, M.; Rezvani, M.; Abolghasemi, V.; Anisi, M.H. ResBCDU-Net: A deep learning framework for lung ct image segmentation. Sensors 2021, 21, 268. [Google Scholar] [CrossRef]

- Balaha, H.; Balaha, M.; Ali, H. Hybrid COVID-19 segmentation and recognition framework (HMB-HCF) using deep learning and genetic algorithms. Artif. Intell. Med. 2021, 119, 102156. [Google Scholar] [CrossRef]

- Lin, X.; Jiao, H.; Pang, Z.; Chen, H.; Wu, W.; Wang, X.; Xiong, L.; Chen, B.; Huang, Y.; Li, S.; et al. Lung cancer and granuloma identification using a deep learning model to extract 3-dimensional radiomics features in CT imaging. Clin. Lung Cancer 2021, 22, e756–e766. [Google Scholar] [CrossRef]

- Gan, W.; Wang, H.; Gu, H.; Duan, Y.; Xu, Z. Automatic segmentation of lung tumors on CT images based on a 2D & 3D hybrid convolutional neural network. Br. J. Radiol. 2021, 94, 20210038. [Google Scholar]

- Protonotarios, N.E.; Katsamenis, I.; Sykiotis, S.; Dikaios, N.; Kastis, G.A.; Chatziioannou, S.N.; Metaxas, M.; Doulamis, N.; Doulamis, A. A FEW-SHOT U-NET deep learning model for lung cancer lesion segmentation via PET/CT imaging. Biomed. Phys. Eng. Express 2022, 8, 025019. [Google Scholar] [CrossRef]

- Kim, H.M.; Ko, T.; Young, C.I.; Myong, J.P. Asbestosis diagnosis algorithm combining the lung segmentation method and deep learning model in computed tomography image. Int. J. Med. Inform. 2022, 158, 104667. [Google Scholar] [CrossRef]

- Chang, C.Y.; Chen, S.J.; Tsai, M.F. Application of support-vector-machine-based method for feature selection and classification of thyroid nodules in ultrasound images. Pattern Recognit. 2010, 43, 3494–3506. [Google Scholar] [CrossRef]

- Nithila, E.E.; Kumar, S.S. Segmentation of lung nodule in CT data using active contour model and Fuzzy C-mean clustering. Alex. Eng. J. 2016, 55, 2583–2588. [Google Scholar] [CrossRef]

- Zhang, X. Computer-Aided Detection of Pulmonary Nodules in Helical CT Images. Ph.D. Dissertation, The University of Iowa, Iowa City, IA, USA, 2005. [Google Scholar]

- Hwang, J.; Chung, M.J.; Bae, Y.; Shin, K.M.; Jeong, S.Y.; Lee, K.S. Computer-aided detection of lung nodules. J. Comput. Assist. Tomogr. 2010, 34, 31–34. [Google Scholar] [CrossRef] [PubMed]

- Young, S.; Lo, P.; Kim, G.; Brown, M.; Hoffman, J.; Hsu, W.; Wahi-Anwar, W.; Flores, C.; Lee, G.; Noo, F.; et al. The effect of radiation dose reduction on computer-aided detection (CAD) performance in a low-dose lung cancer screening population. Med. Phys. 2017, 44, 1337–1346. [Google Scholar] [CrossRef] [PubMed]

- Tajbakhsh, N.; Suzuki, K. Comparing two classes of end-to-end machine-learning models in lung nodule detection and classification: MTANNS VS. CNNS. Pattern Recognit. 2017, 63, 476–486. [Google Scholar] [CrossRef]

- Liu, X.; Hou, F.; Hong, Q.; Hao, A. Multi-view multi-scale CNNs for lung nodule type classification from CT images. Pattern Recognit. 2018, 77, 262–275. [Google Scholar] [CrossRef]

- Cao, H.C.; Liu, H.; Song, E.; Ma, G.Z.; Xu, X.Y.; Jin, R.C.; Liu, T.Y.; Hung, C.C. A Two-Stage Convolutional Neural Networks for Lung Nodule Detection. IEEE J. Biomed. Health Inform. 2020, 24, 2006–2015. [Google Scholar] [CrossRef]

- Alakwaa, W.; Nassef, M.; Badr, A. Lung cancer detection and classification with 3D convolutional neural network (3D-CNN). Lung Cancer 2017, 8, 409–417. [Google Scholar] [CrossRef]

- Anirudh, R.; Thiagarajan, J.J.; Bremer, T.; Kim, H. Lung nodule detection using 3D convolutional neural networks trained on weakly labeled data. In Proceedings of the Medical Imaging 2016: Computer-Aided Diagnosis, International Society for Optics and Photonics, San Diego, CA, USA, 27 February–3 March 2016; Volume 9785, pp. 1–6. [Google Scholar]

- Feng, Y.; Hao, P.; Zhang, P.; Liu, X.; Wu, F.; Wang, H. Supervoxel based weakly-supervised multi-level 3D CNNs for lung nodule detection and segmentation. J. Ambient. Intell. Humaniz. Comput. 2019. [Google Scholar] [CrossRef]

- Perez, G.; Arbelaez, P. Automated lung cancer diagnosis using three-dimensional convolutional neural networks. Med. Biol. Eng. Comput. 2020, 58, 1803–1815. [Google Scholar] [CrossRef]

- Vipparla, V.K.; Chilukuri, P.K.; Kande, G.B. Attention based multi-patched 3D-CNNs with hybrid fusion architecture for reducing false positives during lung nodule detection. J. Comput. Commun. 2021, 9, 1–26. [Google Scholar] [CrossRef]

- Dutande, P.; Baid, U.; Talbar, S. Deep residual separable convolutional neural network for lung tumor segmentation. Comput. Biol. Med. 2022, 141, 105161. [Google Scholar] [CrossRef] [PubMed]

- Luo, X.; Song, T.; Wang, G.; Chen, J.; Chen, Y.; Li, K.; Metaxas, D.N.; Zhang, S. SCPM-Net: An anchor-free 3D lung nodule detection network using sphere representation and center points matching. Med. Image Anal. 2022, 75, 102287. [Google Scholar] [CrossRef] [PubMed]

- Franck, C.; Snoeckx, A.; Spinhoven, M.; Addouli, H.E.; Zanca, F. Pulmonary nodule detection in chest CT using a deep learning-based reconstruction algorithm. Radiat. Prot. Dosim. 2021, 195, 158–163. [Google Scholar] [CrossRef] [PubMed]

- Dou, Q.; Chen, H.; Yu, L.; Qin, J.; Heng, P.A. Multi-level contextual 3D CNNs for false positive reduction in pulmonary nodule detection. IEEE Trans. Biomed. Eng. 2016, 64, 1558–1567. [Google Scholar] [CrossRef]

- Setio, A.; Ciompi, F.; Litjens, G.; Gerke, P.; Jacobs, C.; Riel, S.; Wille, M.M.; Naqibullah, M.; Sanchez, C.I.; van Ginneken, B. Pulmonary nodule detection in CT images: False positive reduction using multi-view convolutional networks. IEEE Trans. Med. Imaging 2016, 35, 1160–1169. [Google Scholar] [CrossRef] [PubMed]

- Mercy Theresa, M.; Subbiah Bharathi, V. CAD for lung nodule detection in chest radiography using complex wavelet transform and shearlet transform features. Indian J. Sci. Technol. 2016, 9, 1–12. [Google Scholar] [CrossRef]

- Jin, T.; Hui, C.; Shan, Z.; Wang, X. Learning Deep Spatial Lung Features by 3D Convolutional Neural Network for Early Cancer Detection. In Proceedings of the 2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, Australia, 29 November–1 December 2017. [Google Scholar]

- Zhu, W.; Liu, C.; Fan, W.; Xie, X. DeepLung: Deep 3D dual path nets for automated pulmonary nodule detection and classification. arXiv 2017, arXiv:1709.05538. [Google Scholar]

- Eun, H.Y.; Kim, D.Y.; Jung, C.; Kim, C. Single-view 2D CNNs with Fully Automatic Non-nodule Categorization for False Positive Reduction in Pulmonary Nodule Detection. Comput. Methods Programs Biomed. 2018, 165, 215–224. [Google Scholar] [CrossRef]

- Ramachandran, S.; George, J.; Skaria, S.; Varun, V.V. Using yolo based deep learning network for real time detection and localization of lung nodules from low dose CT scans. In Proceedings of the SPIE 10575, Medical Imaging 2018: Computer-Aided Diagnosis, 105751I (2018). Houston, TX, USA, 27 February 2018. [Google Scholar] [CrossRef]

- Serj, M.F.; Lavi, B.; Hoff, G.; Valls, D.P. A deep convolutional neural network for lung cancer diagnostic. arXiv 2018, arXiv:1804.08170. [Google Scholar]

- Zhang, J.; Xia, Y.; Zeng, H.; Zhang, Y. Nodule: Combining constrained multi-scale log filters with densely dilated 3D deep convolutional neural network for pulmonary nodule detection. Neurocomputing 2018, 317, 159–167. [Google Scholar] [CrossRef]

- Schwyzer, M.; Ferraro, D.A.; Muehlematter, U.J.; Curioni-Fontecedro, A.; Messerli, M. Automated detection of lung cancer at ultralow dose PET/CT by deep neural networks—Initial results. Lung Cancer 2018, 126, 170–173. [Google Scholar] [CrossRef] [PubMed]

- Gerard, S.E.; Patton, T.J.; Christensen, G.E.; Bayouth, J.E.; Reinhardt, J.M. Fissurenet: A deep learning approach for pulmonary fissure detection in CT images. IEEE Trans. Med. Imaging 2018, 38, 156–166. [Google Scholar] [CrossRef] [PubMed]

- Zhong, Z.S.; Kim, Y.S.; Plichta, K.; Allen, B.G.; Zhou, L.X.; Buatti, J.; Wu, X.D. Simultaneous cosegmentation of tumors in PET-CT images using deep fully convolutional networks. Med. Phys. 2019, 2, 619–633.

- Kim, B.C.; Choi, J.S.; Suk, H.I. Multi-scale gradual integration CNN for false positive reduction in pulmonary nodule detection. Neural Netw. 2019, 115, 1–10.

- Masood, A.; Sheng, B.; Li, P.; Hou, X.; Wei, X.; Qin, J.; Feng, D. Computer-assisted decision support system in pulmonary cancer detection and stage classification on CT images. J. Biomed. Inform. 2018, 79, 117–128.

- Nam, J.G.; Park, S.; Hwang, E.J.; Lee, J.H.; Jin, K.-N.; Lim, K.Y.; Vu, T.H.; Sohn, J.H.; Hwang, S.; Goo, J.M.; et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Clin. Infect. Dis. 2019, 69, 739–747.

- Choi, W.; Oh, J.H.; Riyahi, S.; Liu, C.J.; Lu, W. Radiomics analysis of pulmonary nodules in low-dose CT for early detection of lung cancer. Med. Phys. 2018, 45, 1537–1549.

- Tan, J.X.; Huo, Y.M.; Liang, Z.; Li, L. Expert knowledge-infused deep learning for automatic lung nodule detection. J. X-Ray Sci. Technol. 2019, 27, 17–35.

- Ozdemir, O.; Russell, R.L.; Berlin, A.A. A 3D probabilistic deep learning system for detection and diagnosis of lung cancer using low-dose CT scans. IEEE Trans. Med. Imaging 2020, 39, 1419–1429.

- Cha, M.J.; Chung, M.J.; Lee, J.H.; Lee, K.S. Performance of deep learning model in detecting operable lung cancer with chest radiographs. J. Thorac. Imaging 2019, 34, 86–91.

- Pham, H.; Futakuchi, M.; Bychkov, A.; Furukawa, T.; Fukuoka, J. Detection of lung cancer lymph node metastases from whole-slide histopathologic images using a two-step deep learning approach. Am. J. Pathol. 2019, 189, 2428–2439.

- Li, D.; Vilmun, B.M.; Carlsen, J.F.; Albrecht-Beste, E.; Lauridsen, C.A.; Nielsen, M.B.; Hansen, K.L. The performance of deep learning algorithms on automatic pulmonary nodule detection and classification tested on different datasets that are not derived from LIDC-IDRI: A systematic review. Diagnostics 2019, 9, 207.

- Li, X.; Jin, W.; Li, G.; Yin, C. YOLOv2 network with asymmetric convolution kernel for lung nodule detection of CT image. Chin. J. Biomed. Eng. 2019, 38, 401–408.

- Guo, T.; Xie, S.P. Automated segmentation and identification of pulmonary nodule images. Comput. Eng. Des. 2019, 40, 467–472.

- Huang, W.; Hu, L. Using a noisy U-net for detecting lung nodule candidates. IEEE Access 2019, 7, 67905–67915.

- Gu, Y.; Lu, X.; Zhang, B.; Zhao, Y.; Zhou, T. Automatic lung nodule detection using multi-scale dot nodule-enhancement filter and weighted support vector machines in chest computed tomography. PLoS ONE 2019, 14, e0210551.

- Kumar, A.; Fulham, M.J.; Feng, D.; Kim, J. Co-learning feature fusion maps from PET-CT images of lung cancer. IEEE Trans. Med. Imaging 2019, 39, 204–217.

- Pesce, E.; Withey, S.; Ypsilantis, P.P.; Bakewell, R.; Goh, V.; Montana, G. Learning to detect chest radiographs containing pulmonary lesions using visual attention networks. Med. Image Anal. 2019, 53, 26–38.

- Huang, X.; Lei, Q.; Xie, T.; Zhang, Y.; Hu, Z.; Zhou, Q. Deep transfer convolutional neural network and extreme learning machine for lung nodule diagnosis on CT images. Knowl. Based Syst. 2020, 204, 105230.

- Zheng, S.; Cornelissen, L.J.; Cui, X.; Jing, X.; Ooijen, P. Deep convolutional neural networks for multiplanar lung nodule detection: Improvement in small nodule identification. Med. Phys. 2021, 48, 733–744.

- Xu, X.; Wang, C.; Guo, J.; Gan, Y.; Yi, Z. MSCS-DeepLN: Evaluating lung nodule malignancy using multi-scale cost-sensitive neural networks. Med. Image Anal. 2020, 65, 101772.

- Yektai, H.; Manthouri, M. Diagnosis of lung cancer using multi-scale convolutional neural network. Biomed. Eng. Appl. Basis Commun. 2020, 32, 2050030.

- Heuvelmans, M.A.; Ooijen, P.; Ather, S.; Silva, C.F.; Oudkerk, M. Lung cancer prediction by deep learning to identify benign lung nodules. Lung Cancer 2021, 154, 1–4.

- Hsu, H.H.; Ko, K.H.; Chou, Y.C.; Wu, Y.C.; Chiu, S.H.; Chang, C.K.; Chang, W.C. Performance and reading time of lung nodule identification on multidetector CT with or without an artificial intelligence-powered computer-aided detection system. Clin. Radiol. 2021, 76, 626.e23.

- Lee, J.M.; Choi, E.J.; Chung, J.H.; Lee, K.W.; Oh, J.W. A DNA-derived phage nose using machine learning and artificial neural processing for diagnosing lung cancer. Biosens. Bioelectron. 2021, 194, 113567.

- Afshar, P.; Naderkhani, F.; Oikonomou, A.; Rafiee, M.J.; Plataniotis, K.N. MIXCAPS: A capsule network-based mixture of experts for lung nodule malignancy prediction. Pattern Recognit. 2021, 116, 107942.

- Lai, K.D.; Nguyen, T.T.; Le, T.H. Detection of lung nodules on CT images based on the convolutional neural network with attention mechanism. Ann. Emerg. Technol. Comput. 2021, 5, 78–89.

- Bu, Z.; Zhang, X.; Lu, J.; Lao, H.; Liang, C.; Xu, X.; Wei, Y.; Zeng, H. Lung nodule detection based on YOLOv3 deep learning with limited datasets. Mol. Cell. Biomech. 2022, 19, 17–28.

- Al-Shabi, M.; Shak, K.; Tan, M. ProCAN: Progressive growing channel attentive non-local network for lung nodule classification. Pattern Recognit. 2022, 122, 108309.

- Farag, A.; Ali, A.; Graham, J.; Farag, A.; Elshazly, S.; Falk, R. Evaluation of geometric feature descriptors for detection and classification of lung nodules in low dose CT scans of the chest. In Proceedings of the 2011 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Chicago, IL, USA, 30 March–2 April 2011; pp. 169–172.

- Orozco, H.M.; Villegas, O.O.V.; Domínguez, H.D.J.O.; Sanchez, V.G.C. Lung nodule classification in CT thorax images using support vector machines. In Proceedings of the 2013 12th Mexican International Conference on Artificial Intelligence (MICAI), Mexico City, Mexico, 24–30 November 2013; pp. 277–283.

- Krewer, H.; Geiger, B.; Hall, L.O.; Goldgof, D.B.; Gu, Y.; Tockman, M.; Gillies, R.J. Effect of texture features in computer aided diagnosis of pulmonary nodules in low-dose computed tomography. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Manchester, UK, 13–16 October 2013; pp. 3887–3891.

- Parveen, S.S.; Kavitha, C. Classification of lung cancer nodules using SVM Kernels. Int. J. Comput. Appl. 2014, 95, 975–8887.

- Dandıl, E.; Çakiroğlu, M.; Ekşi, Z.; Özkan, M.; Kurt, Ö.K.; Canan, A. Artificial neural network-based classification system for lung nodules on computed tomography scans. In Proceedings of the 2014 6th International Conference of Soft Computing and Pattern Recognition (SoCPar), Tunis, Tunisia, 11–14 August 2014; pp. 382–386.

- Hua, K.L.; Hsu, C.H.; Hidayati, S.C.; Cheng, W.H.; Chen, Y.J. Computer-aided classification of lung nodules on computed tomography images via deep learning technique. OncoTargets Ther. 2015, 8, 2015–2022.

- Kumar, D.; Wong, A.; Clausi, D.A. Lung nodule classification using deep features in CT images. In Proceedings of the 2015 12th Conference on Computer and Robot Vision (CRV), Halifax, NS, Canada, 3–5 June 2015; pp. 133–138.

- Shen, S.; Han, S.X.; Aberle, D.R.; Bui, A.A.; Hsu, W. An interpretable deep hierarchical semantic convolutional neural network for lung nodule malignancy classification. Expert Syst. Appl. 2019, 128, 84–95.

- Shen, W.; Zhou, M.; Yang, F.; Yang, C.; Tian, J. Multi-scale convolutional neural networks for lung nodule classification. Inf. Process. Med. Imaging 2015, 24, 588–599.

- Cheng, J.Z.; Ni, D.; Chou, Y.H.; Qin, J.; Tiu, C.M.; Chang, Y.C.; Huang, C.S.; Shen, D.; Chen, C.M. Computer-aided diagnosis with deep learning architecture: Applications to breast lesions in US images and pulmonary nodules in CT scans. Sci. Rep. 2016, 6, 24454.

- Kwajiri, T.L.; Tezukam, T. Classification of Lung Nodules Using Deep Learning. Trans. Jpn. Soc. Med. Biol. Eng. 2017, 55, 516–517.

- Shen, W.; Zhou, M.; Yang, F.; Yu, D.; Dong, D.; Yang, C.; Tian, J.; Zang, Y. Multi-crop convolutional neural networks for lung nodule malignancy suspiciousness classification. Pattern Recognit. 2017, 61, 663–673.

- Abbas, Q. Lung-deep: A computerized tool for detection of lung nodule patterns using deep learning algorithms. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 112–116.

- Da Silva, G.L.F.; da Silva Neto, O.P.; Silva, A.C.; Gattass, M. Lung nodules diagnosis based on evolutionary convolutional neural network. Multimed. Tools Appl. 2017, 76, 19039–19055.

- Zhang, B.; Qi, S.; Monkam, P.; Li, C.; Qian, W. Ensemble learners of multiple deep CNNs for pulmonary nodules classification using CT images. IEEE Access 2019, 7, 110358–110371.

- Sahu, P.; Yu, D.; Dasari, M.; Hou, F.; Qin, H. A lightweight multi-section CNN for lung nodule classification and malignancy estimation. IEEE J. Biomed. Health Inform. 2019, 23, 960–968.

- Ali, I.; Muzammil, M.; Ulhaq, D.I.; Khaliq, A.A.; Malik, S. Efficient lung nodule classification using transferable texture convolutional neural network. IEEE Access 2020, 8, 175859–175870.

- Marques, S.; Schiavo, F.; Ferreira, C.A.; Pedrosa, J.; Cunha, A.; Campilho, A. A multi-task CNN approach for lung nodule malignancy classification and characterization. Expert Syst. Appl. 2021, 184, 115469.1–115469.9.

- Thamilarasi, V.; Roselin, R. Automatic classification and accuracy by deep learning using CNN methods in lung chest x-ray images. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1055, 012099.

- Kawathekar, I.D.; Areeckal, A.S. Performance analysis of texture characterization techniques for lung nodule classification. J. Phys. Conf. Ser. 2022, 2161, 012045.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2016, arXiv:1511.06434.

- Chuquicusma, M.J.M.; Hussein, S.; Burt, J.; Bagci, U. How to fool radiologists with generative adversarial networks? A visual Turing test for lung cancer diagnosis. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 240–244.

- Zhao, D.; Zhu, D.; Lu, J.; Luo, Y.; Zhang, G. Synthetic Medical Images Using F&BGAN for Improved Lung Nodules Classification by Multi-Scale VGG16. Symmetry 2018, 10, 519.

- Teramoto, A.; Yamada, A.; Kiriyama, Y.; Tsukamoto, T.; Fujita, H. Automated classification of benign and malignant cells from lung cytological images using deep convolutional neural network. Inform. Med. Unlocked 2019, 16, 100205.

- Rani, K.V.; Jawhar, S.J. Superpixel with nanoscale imaging and boosted deep convolutional neural network concept for lung tumor classification. Int. J. Imaging Syst. Technol. 2020, 30, 899–915.

- Kuruvilla, J.; Gunavathi, K. Lung cancer classification using neural networks for CT images. Comput. Methods Programs Biomed. 2014, 113, 202–209.

- Ciompi, F.; Chung, K.; Riel, S.V.; Setio, A.; Gerke, P.K.; Jacobs, C.; Scholten, E.T.; Schaefer-Prokop, C.; Wille, M.M.W.; Marchianò, A.; et al. Corrigendum: Towards automatic pulmonary nodule management in lung cancer screening with deep learning. Sci. Rep. 2017, 7, 46878.

- Nurtiyasari, D.; Rosadi, D.; Abdurakhman. The application of Wavelet Recurrent Neural Network for lung cancer classification. In Proceedings of the 2017 3rd International Conference on Science and Technology—Computer (ICST), Yogyakarta, Indonesia, 11–12 July 2017; pp. 127–130.

- De Carvalho Filho, A.O.; Silva, A.C.; de Paiva, A.C.; Nunes, R.A.; Gattass, M. Classification of patterns of benignity and malignancy based on CT using topology-based phylogenetic diversity index and convolutional neural network. Pattern Recognit. 2018, 81, 200–212.

- Lindsay, W.; Wang, J.; Sachs, N.; Barbosa, E.; Gee, J. Transfer learning approach to predict biopsy-confirmed malignancy of lung nodules from imaging data: A pilot study. In Image Analysis for Moving Organ, Breast, and Thoracic Images; Springer: Berlin/Heidelberg, Germany, 2018; pp. 295–301.

- Keming, M.; Renjie, T.; Xinqi, W.; Weiyi, Z.; Haoxiang, W. Feature representation using deep autoencoder for lung nodule image classification. Complexity 2018, 3078374.

- Matsuyama, E.; Tsai, D.Y. Automated classification of lung diseases in computed tomography images using a wavelet based convolutional neural network. J. Biomed. Sci. Eng. 2018, 11, 263–274.

- Sathyan, H.; Panicker, J.V. Lung Nodule Classification Using Deep ConvNets on CT Images. In Proceedings of the 2018 9th International Conference on Computing, Communication and Networking Technologies, Bengaluru, India, 10–12 July 2018; p. 18192544.

- Xie, Y.; Xia, Y.; Zhang, J.; Song, Y.; Feng, D.; Fulham, M.; Cai, W. Knowledge-based collaborative deep learning for benign-malignant lung nodule classification on chest CT. IEEE Trans. Med. Imaging 2019, 38, 991–1004.

- Nasrullah, N.; Sang, J.; Alam, M.S.; Mateen, M.; Cai, B.; Hu, H. Automated lung nodule detection and classification using deep learning combined with multiple strategies. Sensors 2019, 19, 3722.

- Shakeel, P.M.; Burhanuddin, M.A.; Desa, M.I. Lung cancer detection from CT image using improved profuse clustering and deep learning instantaneously trained neural networks. Measurement 2019, 145, 702–712.

- Suresh, S.; Mohan, S. Roi-based feature learning for efficient true positive prediction using convolutional neural network for lung cancer diagnosis. Neural Comput. Appl. 2020, 32, 15989–16009.

- Su, R.; Xie, W.; Tan, T. 2.75D convolutional neural network for pulmonary nodule classification in chest CT. arXiv 2020, arXiv:2002.04251.

- Zia, M.B.; Zhao, J.J.; Ning, X. Detection and classification of lung nodule in diagnostic CT: A TSDN method based on improved 3D-FASTER R-CNN and multi-scale multi-crop convolutional neural network. Int. J. Hybrid Inf. Technol. 2020, 13, 45–56.

- Lin, C.J.; Li, Y.C. Lung nodule classification using taguchi-based convolutional neural networks for computer tomography images. Electronics 2020, 9, 1066.

- Mmmap, A.; Sjj, B.; Gjm, C. Optimal deep belief network with opposition based pity beetle algorithm for lung cancer classification: A DBNOPBA approach. Comput. Methods Programs Biomed. 2021, 199, 105902.

- Baranwal, N.; Doravari, P.; Kachhoria, R. Classification of histopathology images of lung cancer using convolutional neural network (CNN). arXiv 2021, arXiv:2112.13553.

- Shiwei, L.I.; Liu, D. Automated classification of solitary pulmonary nodules using convolutional neural network based on transfer learning strategy. J. Mech. Med. Biol. 2021, 21, 2140002.

- Arumuga Maria Devi, T.; Mebin Jose, V.I. Three Stream Network Model for Lung Cancer Classification in the CT Images. Open Comput. Sci. 2021, 11, 251–261.

- Naik, A.; Edla, D.R.; Kuppili, V. Lung Nodule Classification on Computed Tomography Images Using Fractalnet. Wirel. Pers. Commun. 2021, 119, 1209–1229.

- Ibrahim, D.M.; Elshennawy, N.M.; Sarhan, A.M. Deep-chest: Multi-classification deep learning model for diagnosing COVID-19, pneumonia, and lung cancer chest diseases. Comput. Biol. Med. 2021, 132, 104348.

- Fu, X.; Bi, L.; Kumar, A.; Fulham, M.; Kim, J. An attention-enhanced cross-task network to analyse lung nodule attributes in CT images. Pattern Recognit. 2022, 126, 108576.

- Vaiyapuri, T.; Liyakathunisa; Alaskar, H.; Parvathi, R.; Pattabiraman, V.; Hussain, A. CAT Swarm Optimization-Based Computer-Aided Diagnosis Model for Lung Cancer Classification in Computed Tomography Images. Appl. Sci. 2022, 12, 5491.

- Halder, A.; Chatterjee, S.; Dey, D. Adaptive morphology aided 2-pathway convolutional neural network for lung nodule classification. Biomed. Signal Process. Control 2022, 72, 103347.

- Forte, G.C.; Altmayer, S.; Silva, R.F.; Stefani, M.T.; Libermann, L.L.; Cavion, C.C.; Youssef, A.; Forghani, R.; King, J.; Mohamed, T.-L.; et al. Deep Learning Algorithms for Diagnosis of Lung Cancer: A Systematic Review and Meta-Analysis. Cancers 2022, 14, 3856.

| Reference | Dataset | Sample Number |

|---|---|---|

| [53] | Lung image database consortium (LIDC) | 399 CT images |

| [54] | Lung image database consortium and image database resource initiative (LIDC-IDRI) | 1018 CT images from 1010 patients |

| [55] | Lung nodule analysis challenge 2016 (LUNA16) | 888 CT images from LIDC-IDRI dataset |

| [56] | Early lung cancer action program (ELCAP) | 50 LDCT lung images & 379 unduplicated lung nodule CT images |

| [57] | Lung Nodule Database (LNDb) | 294 CT images from Centro Hospitalar e Universitário de São João |

| [58] | Indian Lung CT Image Database (ILCID) | CT images from 400 patients |

| [59] | Japanese Society of Radiological Technology (JSRT) | 154 nodules & 93 non-nodules with labels |

| [60] | Nederland-Leuvens Longkanker Screenings Onderzoek (NELSON) | CT images from 15,523 human subjects |

| [61] | Automatic nodule detection 2009 (ANODE09) | 5 examples & 50 test images |

| [62] | Shanghai Zhongshan hospital database | CT images from 350 patients |

| [63] | Society of Photo-Optical Instrumentation Engineers in conjunction with the American Association of Physicists in Medicine and the National Cancer Institute (SPIE-AAPM-NCI) LungX | 60 thoracic CT scans with 73 nodules |

| [64] | General Hospital of Guangzhou military command (GHGMC) dataset | 180 benign & 120 malignant lung nodules |

| [65] | First Affiliated Hospital of Guangzhou Medical University (FAHGMU) dataset | 142 T2-weighted MR images |

| [66] | Non-small cell lung cancer (NSCLC)-Radiomics database | 13,482 CT images from 89 patients |

| [67] | Danish lung nodule screening trial (DLCST) | CT images from 4104 subjects |

| [68] | U.S. National Lung Screening Trial (NLST) | CT images from 1058 patients with lung cancer & 9310 patients with benign lung nodules |

| Reference | Year | Method | Imaging | Datasets | Results |

|---|---|---|---|---|---|

| [84] | 2013 | Support vector machine (SVM) | CT images | Shiraz University of Medical Sciences | Accuracy: 98.1% |

| [85] | 2014 | Lung nodule segmentation | CT images | 85 patients | Accuracy: >90% |

| [86] | 2015 | SVM | CT images | 193 CT images | Accuracy: 94.67% for benign tumors; Accuracy: 96.07% for adhesion tumor |

| [87] | 2015 | Bidirectional chain coding combined with SVM | CT images | LIDC | Accuracy: 92.6% |

| [88] | 2015 | Convolutional networks (ConvNets) | CT images | 82 patients | DSC: 68% ± 10% |

| [77] | 2017 | Multi-view convolutional neural networks (MV-CNN) | CT images | LIDC-IDRI | DSC: 77.67% |

| [80] | 2017 | Two-stage CAD | CT images | LIDC-IDRI | F1-score: 85.01% |

| [89] | 2017 | 3D Slicer chest imaging platform (CIP) | CT images | LIDC | median DSC: 99% |

| [90] | 2017 | Deep computer aided detection (CAD) | CT images | LIDC-IDRI | Sensitivity: 88% |

| [91] | 2018 | 3D deep multi-task CNN | CT images | LUNA16 | DSC: 91% |

| [92] | 2018 | Improved U-Net | CT images | LUNA16 | DSC: 73.6% |

| [93] | 2018 | Incremental-multiple resolution residually connected network (MRRN) | CT images | TCIA | DSC: 74% ± 0.13 |

| | | | | MSKCC | DSC: 75% ± 0.12 |

| | | | | LIDC | DSC: 68% ± 0.23 |

| [94] | 2018 | U-Net | hematoxylin-eosin-stained slides | 712 lung cancer patients operated in Uppsala Hospital, Stanford TMA cores | Precision: 80% |

| [95] | 2019 | Mask R-CNN | CT images | LIDC-IDRI | Average precision: 78% |

| [96] | 2020 | 3D-UNet | CT images | LUNA16 | DSC: 95.30% |

| [81] | 2020 | Dual-branch Residual Network (DB-ResNet) | CT images | LIDC-IDRI | DSC: 82.74% |

| [97] | 2021 | End-to-end deep learning | CT images | 1916 lung tumors in 1504 patients | Sensitivity: 93.2% |

| [98] | 2021 | 3D Attention U-Net | COVID-19 CT images | Fifth Medical Center of the PLA General Hospital | Accuracy: 94.43% |

| [99] | 2021 | Improved U-Net | CT images | LIDC-IDRI | Precision: 84.91% |

| [82] | 2021 | Attention-aware weight excitation U-Net (AWEU-Net) | CT images | LUNA16 | DSC: 89.79% |

| | | | | LIDC-IDRI | DSC: 90.35% |

| [83] | 2021 | Dense Recurrent Residual Convolutional Neural Network (Dense R2U CNN) | CT images | LUNA | Sensitivity: 99.4% ± 0.2% |

| [100] | 2021 | Modified U-Net in which the encoder is replaced with a pre-trained ResNet-34 network (Res BCDU-Net) | CT images | LIDC-IDRI | Accuracy: 97.58% |

| [101] | 2021 | Hybrid COVID-19 segmentation and recognition framework (HMB-HCF) | X-Ray images | COVID-19 dataset from 8 sources * | Accuracy: 99.30% |

| [102] | 2021 | Clinical image radiomics DL (CIRDL) | CT images | First Affiliated Hospital of Guangzhou Medical University | Sensitivity: 0.8763 |

| [103] | 2021 | 2D & 3D hybrid CNN | CT scans | 260 patients with lung cancer treated | Median DSC: 0.73 |

| [104] | 2022 | Few-shot learning U-Net (U-Net FSL) | PET/CT images | Lung-PET-CT-DX TCIA | Accuracy: 99.27% ± 0.03 |

| | | U-Net CT | | | Accuracy: 99.08% ± 0.05 |

| | | U-Net PET | | | Accuracy: 98.78% ± 0.06 |

| | | U-Net PET/CT | | | Accuracy: 98.92% ± 0.09 |

| | | CNN | | | Accuracy: 98.89% ± 0.08 |

| | | Co-learning | | | Accuracy: 99.94% ± 0.09 |

| [105] | 2022 | DenseNet201 | CT images | Seoul St. Mary’s Hospital dataset | Sensitivity: 96.2% |

| Reference | Year | Method | Imaging | Datasets | Results |

|---|---|---|---|---|---|

| [122] | 2016 | 3D CNN | CT images | LUNA16 | Sensitivity: >87% at 4 FPs/scan |

| [123] | 2016 | 2D multi-view convolutional networks (ConvNets) | CT images | LIDC-IDRI | Sensitivity: 85.4% at 1 FP/scan, 90.1% at 4 FPs/scan |

| [124] | 2016 | Thresholding method | CT images | JSRT | Accuracy: 96% |

| [110] | 2017 | Computer aided detection (CAD) | LDCT | NLST | Mean sensitivity: 74.1% |

| [125] | 2017 | 3D CNN | LDCT | KDSB17 | Accuracy: 87.5% |

| [126] | 2017 | 3D Faster R-CNN with U-Net-like encoder | CT scans | LUNA16 | Accuracy: 81.41% |

| | | | | LIDC-IDRI | Accuracy: 90.44% |

| [127] | 2018 | Single-view 2D CNN | CT scans | LUNA16 | metric score: 92.2% |

| [128] | 2018 | DetectNet | CT scans | LIDC | Sensitivity: 89% |

| [129] | 2018 | 3D CNN | CT scans | KDSB17 | Sensitivity: 87% |

| [130] | 2018 | Novel pulmonary nodule detection algorithm (NODULe) based on 3D CNN | CT scans | LUNA16 | CPM score: 94.7% |

| | | | | LIDC-IDRI | Sensitivity: 94.9% |

| [131] | 2018 | Deep neural networks (DNN) | PET images | 50 lung cancer patients, & 50 patients without lung cancer | Sensitivity: 95.9% |

| | | | Ultralow-dose PET | | Sensitivity: 91.5% |

| [132] | 2018 | FissureNet | 3DCT | COPDGene | AUC: 0.98 |

| | | U-Net | | | AUC: 0.963 |

| | | Hessian | | | AUC: 0.158 |

| [133] | 2018 | DFCN-based cosegmentation (DFCN-CoSeg) | CT scans | 60 NSCLC patients | Score: 0.865 ± 0.034 |

| | | | PET images | | Score: 0.853 ± 0.063 |

| [134] | 2018 | Multi-scale Gradual Integration CNN (MGI-CNN) | CT scans | LUNA16; V1 dataset includes 551,065 candidates; V2 dataset includes 754,975 candidates | CPM: 0.908 for the V1 dataset, 0.942 for the V2 dataset |

| [135] | 2018 | Deep fully CNN (DFCNet) | CT scans | LIDC-IDRI | Accuracy: 84.58% |

| | | CNN | | | Accuracy: 77.6% |

| [136] | 2018 | Deep learning–based automatic detection algorithm (DLAD) | CT scans | Seoul National University Hospital | Sensitivity: 69.9% |

| [137] | 2018 | SVM classifier coupled with a least absolute shrinkage and selection operator (SVM-LASSO) | CT scans | LIDC-IDRI | Accuracy: 84.6% |

| [138] | 2019 | CNN | CT scans | LIDC-IDRI | Sensitivity: 88% at 1.9 FPs/scan; 94.01% at 4.01 FPs/scan |

| [139] | 2019 | 3D CNN | LDCT | LUNA16 and Kaggle datasets | Average metric: 92.1% |

| [140] | 2019 | Deep learning model (DLM) based on DCNN | Chest radiographs (CXRs) | 3500 CXRs contain lung nodules & 13,711 normal CXRs | Sensitivity: 76.8% |

| [141] | 2019 | Two-Step Deep Learning | CT scans | Nagasaki University Hospital | Sensitivity of 79.6% with sizes ≤0.6 mm; Sensitivity of 75.5% with sizes ≤0.7 mm |

| [142] | 2019 | Faster R-CNN network and false positive (FP) | CT scans | FAHGMU | Sensitivity: 85.2% |

| [143] | 2019 | YOLOv2 with Asymmetric Convolution Kernel | CT scans | LIDC-IDRI | Sensitivity: 94.25% |

| [144] | 2019 | VGG-16 network | CT scans | LIDC-IDRI | Accuracy: 92.72% |

| [145] | 2019 | Noisy U-Net (NU-Net) | CT scans | LUNA16 | Sensitivity: 97.1% |

| [146] | 2019 | CAD using a multi-scale dot nodule-enhancement filter | CT scans | LIDC | Sensitivity: 87.81% |

| [147] | 2019 | Co-Learning Feature Fusion CNN | PET-CT scans | 50 NSCLC patients | Accuracy: 99.29% |

| [148] | 2019 | Convolution networks with attention feedback (CONAF) | Chest radiographs | 430,000 CXRs | Sensitivity: 78% |

| [148] | 2019 | Recurrent attention model with annotation feedback (RAMAF) | Chest radiographs | 430,000 CXRs | Sensitivity: 74% |

| [113] | 2020 | Two-Stage CNN (TSCNN) | CT scans | LUNA16 & LIDC-IDRI | CPM: 0.911 |

| [149] | 2020 | Deep Transfer CNN and Extreme Learning Machine (DTCNN-ELM) | CT scans | LIDC-IDRI & FAHGMU | Sensitivity: 93.69% |

| [150] | 2020 | U-Net++ | CT scans | LIDC-IDRI | Sensitivity: 94.2% at 1 FP/scan, 96% at 2 FPs/scan |

| [151] | 2020 | MSCS-DeepLN | CT scans | LIDC-IDRI & DeepLN | |

| [152] | 2020 | Multi-scale CNN (MCNN) | CT scans | LIDC-IDRI | Accuracy: 93.7% ± 0.3 |

| [153] | 2021 | Lung Cancer Prediction CNN (LCP-CNN) | CT scans | U.S. NLST | Sensitivity: 99% |

| [154] | 2021 | Automatic AI-powered CAD | CT scans | 150 images include 340 nodules | mean sensitivity: 82% for second-reading mode, 80% for concurrent-reading mode |

| [155] | 2021 | DNA-derived phage nose (D2pNose) using machine learning and ANN | CT scans | Pusan National University | Detection accuracy: >75%; Classification accuracy: >86% |

| [156] | 2021 | Capsule network-based mixture of experts (MIXCAPS) | CT scans | LIDC-IDRI | Sensitivity: 89.5% |

| [157] | 2021 | CNN with attention mechanism | CT scans | LUNA16 | Specificity: 98.9% |

| [121] | 2021 | Deep learning image reconstruction (DLIR) | CT scans | LIDC-IDRI | AUC of 0.555, 0.561, 0.557, 0.558 for ASIR-V, DL-L, DL-M, DL-H |

| [58] | 2021 | 2D-3D cascaded CNN | CT scans | LIDC-IDRI | Sensitivity: 90.01% |

| [120] | 2022 | 3D sphere representation-based center-points matching detection network (SCPM-Net) | CT scans | LUNA16 | Average sensitivity: 89.2% |

| [158] | 2022 | YOLOv3 | CT scans | RIDER | Accuracy: 95.17% |

| [118] | 2022 | 3D Attention CNN | CT scans | LUNA16 | CPM: 0.931 |

| [159] | 2022 | Progressive Growing Channel Attentive Non-Local (ProCAN) network | CT scans | LIDC-IDRI | Accuracy: 95.28% |

| Reference | Year | Method | Imaging | Datasets | Results |

|---|---|---|---|---|---|

| [185] | 2014 | FF-BPNN | CT scans | LIDC | Sensitivity: 91.4% |

| [168] | 2015 | Multi-scale CNN | CT scans | LIDC-IDRI | Accuracy: 86.84% |

| [166] | 2015 | CAD using deep features | CT scans | LIDC-IDRI | Sensitivity: 83.35% |

| [165] | 2015 | Deep belief network (DBN) | CT scans | LIDC | Sensitivity: 73.4% |

| [165] | 2015 | CNN | CT scans | LIDC | Sensitivity: 73.3% |

| [165] | 2015 | Fractal | CT scans | LIDC | Sensitivity: 50.2% |

| [165] | 2015 | Scale-invariant feature transform (SIFT) | CT scans | LIDC | Sensitivity: 75.6% |

| [186] | 2016 | Intensity features + SVM | CT scans | DLCST | Accuracy: 27.0% |

| [186] | 2016 | Unsupervised features + SVM | CT scans | DLCST | Accuracy: 39.9% |

| [186] | 2016 | ConvNets 1 scale | CT scans | DLCST | Accuracy: 84.4% |

| [186] | 2016 | ConvNets 2 scale | CT scans | DLCST | Accuracy: 85.6% |

| [186] | 2016 | ConvNets 3 scale | CT scans | DLCST | Accuracy: 85.6% |

| [171] | 2017 | Multi-crop CNN | CT scans | LIDC-IDRI | Accuracy: 87.14% |

| [171] | 2017 | Deep 3D DPN | CT scans | LIDC-IDRI | Accuracy: 88.74% |

| [171] | 2017 | Deep 3D DPN + GBM | CT scans | LIDC-IDRI | Accuracy: 90.44% |

| [111] | 2017 | Massive-training ANN (MTANN) | CT scans | LDCT | AUC: 0.8806 |

| [111] | 2017 | CNN | CT scans | LDCT | AUC: 0.7755 |

| [187] | 2017 | Wavelet Recurrent Neural Network | Chest X-Ray | Japanese Society of Radiological Technology (JSRT) | Sensitivity: 88.24% |

| [171] | 2017 | Multi-crop convolutional neural network (MC-CNN) | CT scans | LIDC-IDRI | Sensitivity: 77% |

| [188] | 2018 | Topology-based phylogenetic diversity index classification CNN | CT scans | LIDC | Sensitivity: 90.70% |

| [189] | 2018 | Transfer learning deep 3D CNN | CT scans | Institution records | Accuracy: 71% |

| [128] | 2018 | CNN | CT scans | Kaggle Data Science Bowl 2017 | Sensitivity: 87% |

| [190] | 2018 | Feature Representation Using Deep Autoencoder | CT scans | ELCAP | Accuracy: 93.9% |

| [112] | 2018 | Multi-view multi-scale CNN | CT scans | LIDC-IDRI & ELCAP | overall classification rates: 92.3% for LIDC-IDRI; overall classification rates: 90.3% for ELCAP |

| [191] | 2018 | Wavelet-Based CNN | CT scans | 448 images include four categories | Accuracy: 91.9% |

| [192] | 2018 | Deep ConvNets | CT scans | LIDC-IDRI | Accuracy: 98% |

| [182] | 2018 | Forward and Backward GAN (F&BGAN) | CT scans | LIDC-IDRI | Sensitivity: 98.67% |

| [174] | 2019 | Ensemble learner of multiple deep CNN | CT scans | LIDC-IDRI | Accuracy: 84.0% |

| [175] | 2019 | Lightweight Multi-Section CNN | CT scans | LIDC-IDRI | Accuracy: 93.18% |

| [167] | 2019 | Deep hierarchical semantic CNN (HSCNN) | CT scans | LIDC | Sensitivity: 70.5% |

| [193] | 2019 | Multi-view knowledge-based collaborative (MV-KBC) | CT scans | LIDC-IDRI | Accuracy: 91.60% |

| [167] | 2019 | 3D CNN | CT scans | LIDC | Sensitivity: 66.8% |

| [183] | 2019 | DCNN | CT scans | 46 images from interventional cytology | Sensitivity: 89.3% |

| [194] | 2019 | 3D MixNet | CT scans | LIDC-IDRI & LUNA16 | Accuracy: 88.83% |

| [194] | 2019 | 3D MixNet + GBM | CT scans | LIDC-IDRI & LUNA16 | Accuracy: 90.57% |

| [194] | 2019 | 3D CMixNet + GBM | CT scans | LIDC-IDRI & LUNA16 | Accuracy: 91.13% |

| [194] | 2019 | 3D CMixNet + GBM + Biomarkers | CT scans | LIDC-IDRI & LUNA16 | Accuracy: 94.17% |

| [195] | 2019 | Deep Learning with Instantaneously Trained Neural Networks (DITNN) | CT scans | Cancer Imaging Archive (CIA) | Accuracy: 98.42% |

| [184] | 2020 | DCNN | CT scans | LIDC | Accuracy: 97.3% |

| [196] | 2020 | CNN | CT scans | LIDC | Sensitivity: 93.4% |

| [197] | 2020 | 2.75D CNN | CT scans | LUNA16 | AUC: 0.9842 |

| [198] | 2020 | Two-step Deep Network (TsDN) | CT scans | LIDC-IDRI | Sensitivity: 88.5% |

| [176] | 2020 | Transferable texture CNN | CT scans | LIDC-IDRI & LUNGx | Accuracy: 96.69% ± 0.72% |

| [199] | 2020 | Taguchi-Based CNN | X-ray & CT images | 245,931 images | Accuracy: 99.6% |

| [200] | 2021 | Optimal Deep Belief Network with Opposition-based Pity Beetle Algorithm | CT scans | LIDC-IDRI | Sensitivity: 96.86% |

| [177] | 2021 | Multi-task CNN | CT scans | LIDC-IDRI | AUC: 0.783 |

| [178] | 2021 | CNN | CT scans | JSRT | Accuracy: 86.67% |

| [201] | 2021 | Inception_ResNet_V2 | CT scans | LC25000 | Accuracy: 99.7% |

| [201] | 2021 | VGG19 | CT scans | LC25000 | Accuracy: 92% |

| [201] | 2021 | ResNet50 | CT scans | LC25000 | Accuracy: 99% |

| [201] | 2021 | DenseNet121 | CT scans | LC25000 | Accuracy: 99.4% |

| [202] | 2021 | Improved Faster R-CNN and transfer learning | CT scans | Heilongjiang Provincial Hospital | Accuracy: 89.7% |

| [203] | 2021 | Three-stream network | CT scans | LIDC-IDRI | Accuracy: 98.2% |

| [204] | 2021 | FractalNet | CT scans | LUNA16 | Sensitivity: 96.68% |

| [205] | 2021 | VGG19+CNN | X-ray & CT images | GitHub | Specificity: 99.5% |

| [205] | 2021 | ResNet152V2 | X-ray & CT images | GitHub | Specificity: 98.4% |

| [205] | 2021 | ResNet152V2+GRU | X-ray & CT images | GitHub | Specificity: 98.7% |

| [205] | 2021 | ResNet152V2+Bi-GRU | X-ray & CT images | GitHub | Specificity: 97.8% |

| [179] | 2022 | Machine learning | CT scans | LNDb | Accuracy: 94% |

| [159] | 2022 | Progressively Growing Channel Attentive Non-Local (ProCAN) | CT scans | LIDC-IDRI | Accuracy: 95.28% |

| [206] | 2022 | CNN-based multi-task learning (CNN-MTL) | CT scans | LIDC-IDRI | Sensitivity: 96.2% |

| [207] | 2022 | Cat swarm optimization-based CAD for lung cancer classification (CSO-CADLCC) | CT scans | Benchmark | Specificity: 99.17% |

| [208] | 2022 | 2-Pathway Morphology-based CNN (2PMorphCNN) | CT scans | LIDC-IDRI | Sensitivity: 96.85% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L. Deep Learning Techniques to Diagnose Lung Cancer. Cancers 2022, 14, 5569. https://doi.org/10.3390/cancers14225569
