Simple Summary

Breast cancer is the most common cancer and caused the deaths of 700,000 people around the world in 2020. Various imaging modalities have been utilized to detect and analyze breast cancer. However, manually detecting cancer in the large images produced by these imaging modalities is usually time-consuming and can be inaccurate. Early and accurate detection of breast cancer plays a critical role in improving the prognosis, bringing the patient survival rate to 50%. Recently, artificial-intelligence-based approaches such as deep learning algorithms have shown remarkable advances in early breast cancer diagnosis. This review first introduces various breast cancer imaging modalities and their available public datasets, and then surveys the most recent studies that use deep-learning-based models for breast cancer analysis. This study systematically summarizes various imaging modalities, relevant public datasets, deep learning architectures used for different imaging modalities, model performance on different tasks such as classification and segmentation, and research directions.

Abstract

Breast cancer is among the most common and fatal diseases for women, and no permanent treatment has been discovered. Thus, early detection is a crucial step in controlling and curing breast cancer and can save the lives of millions of women. For example, in 2020, more than 65% of breast cancer patients were diagnosed at an early stage of cancer, all of whom survived. Although early detection is the most effective approach for cancer treatment, breast cancer screening conducted by radiologists is very expensive and time-consuming. More importantly, conventional methods of analyzing breast cancer images suffer from high false-detection rates. Different breast cancer imaging modalities are used to extract and analyze the key features affecting the diagnosis and treatment of breast cancer. These imaging modalities can be divided into subgroups such as mammograms, ultrasound, magnetic resonance imaging, histopathological images, or any combination of them. Radiologists or pathologists analyze the images produced by these methods manually, which increases the risk of wrong decisions in cancer detection. Thus, new automatic methods are required to analyze all kinds of breast screening images and assist radiologists in interpreting them. Recently, artificial intelligence (AI) has been widely used to improve the early detection and treatment of different types of cancer, specifically breast cancer, thereby enhancing patients' chances of survival. Advances in AI algorithms, such as deep learning, and the availability of datasets obtained from various imaging modalities have opened an opportunity to overcome the limitations of current breast cancer analysis methods. In this article, we first review breast cancer imaging modalities and their strengths and limitations. Then, we explore and summarize the most recent studies that employed AI in breast cancer detection using various breast imaging modalities.
In addition, we report available datasets for the breast cancer imaging modalities, which are important for developing AI-based algorithms and training deep learning models. In conclusion, this review aims to provide a comprehensive resource for researchers working on breast cancer image analysis.

1. Introduction

Breast cancer is the second most fatal disease in women and a leading cause of death for millions of women around the world [1]. According to the American Cancer Society, approximately 20% of women who have been diagnosed with breast cancer die [2,3]. Generally, breast tissue is divided into four groups: normal, benign, in situ carcinoma, and invasive carcinoma [1]. A benign tumor is an abnormal but noncancerous collection of cells in which only minor structural changes occur [1]. In contrast, in situ carcinoma and invasive carcinoma are classified as cancer [4]. In situ carcinoma remains confined to its organ of origin and does not affect other organs, whereas invasive carcinoma spreads to surrounding organs and causes many cancerous cells to develop in them [5,6]. Early detection of breast cancer is a decisive step for treatment and is critical to preventing further progression of cancer and its complications [7]. There are several well-known imaging modalities to detect breast cancer at an early stage and support its treatment, including mammograms (MM) [8], breast thermography (BTD) [9], magnetic resonance imaging (MRI) [10], positron emission tomography (PET) [11], computed tomography (CT) [11], ultrasound (US) [12], and histopathology (HP) [13]. Among these modalities, mammograms (MMs) and histopathology (HP), which involves image analysis of removed tissue stained with hematoxylin and eosin to increase visibility, are widely used [14,15]. Mammography aims to screen a large population for initial breast cancer symptoms, while histopathology captures microscopic images at the highest possible resolution to find exact cancerous tissues at the molecular level [16,17]. In breast cancer screening practice, radiologists or pathologists observe and examine breast images manually for diagnosis, prognosis, and treatment decisions [7].
Such screening often leads to over- or under-treatment because of inaccurate detection, resulting in a prolonged diagnosis process [18]. It is worth noting that only 0.6% to 0.7% of cancer detections in women during screening are validated, and 15–35% of cancer screenings fail due to errors related to the imaging process, image quality, and human fatigue [19,20,21]. Several decades ago, computer-aided detection (CAD) systems were first employed to assist radiologists in their decision-making. CAD systems generally analyze imaging data and other cancer-related data, either alone or in combination with other clinical information [22]. Additionally, based on statistical models, CAD systems can estimate the probability of diseases such as breast cancer [23]. CAD systems have been widely used to help radiologists in patient care processes such as cancer staging [23]. However, conventional CAD systems, which are based on traditional image processing techniques, have been limited in their utility and capability.

To tackle these problems, enhance efficiency, and decrease false cancer detection rates, precise automated methods are needed to complement or replace human work. AI is one of the most effective approaches and has attracted much attention in medical image analysis, especially for the automated analysis and extraction of relevant information from imaging modalities such as MMs and HPs [24,25]. Many available AI-based image recognition tools for breast cancer detection have exhibited better performance than traditional CAD systems and than manual examination by expert radiologists or pathologists, owing to the limitations of current manual approaches [26]. In other words, AI-based methods avoid expensive and time-consuming manual inspection and effectively extract key, decisive information from high-resolution image data [26,27]. For example, a spectrum of diseases is associated with specific features, such as mammographic features. AI can learn these types of features from the structure of image data and then detect the corresponding disease, assisting the radiologist or histopathologist. It is worth noting that, in contrast to human inspection, algorithms largely behave as black boxes and cannot understand the context, mode of collection, or meaning of the images they see, which leads to the problem of “shortcut” learning [28,29]. Thus, building interpretable AI-based models is necessary. AI models that interpret and extract information from image data can generally be categorized into two groups: (1) traditional machine learning algorithms, which require handcrafted features derived from raw image data in preprocessing steps; and (2) deep learning algorithms, which process raw images and extract features through mathematical optimization and multiple levels of abstraction [30].
Although both approaches have shown promising results in breast cancer detection, the latter has recently attracted more interest, mainly because of its capability to learn the most salient representations of the data without human intervention and thereby produce superior performance [31,32]. This review assesses and summarizes recent datasets and AI-based models, specifically deep learning models, used on DBT, PET, MRI, US, HP, and MM in breast cancer screening and detection. We also highlight future directions in breast cancer detection via deep learning. This study is organized as follows: (1) a review of different imaging modalities for breast cancer screening; (2) a comparison of different deep learning models proposed in the most recent studies and their performance on breast cancer classification, segmentation, detection, and other analyses; and (3) the conclusion of the paper and suggestions for future research directions. The main contributions of this paper are as follows:

- We reviewed different imaging tasks, such as classification, segmentation, and detection, performed with deep learning algorithms, whereas most existing review papers focus on a single task.

- We covered all available imaging modalities for breast cancer analysis, in contrast to most existing studies, which focus on one or two imaging modalities.

- For each imaging modality, we summarized all available datasets.

- We considered the most recent studies (2019–2022) on breast cancer imaging diagnosis employing deep learning models.

2. Imaging Modalities and Available Datasets for Breast Cancer

In this study, we summarize well-known imaging modalities for breast cancer diagnosis and analysis. As many existing studies have shown, there are several imaging modalities, including mammography, histopathology, ultrasound, magnetic resonance imaging, positron emission tomography, digital breast tomosynthesis, and combinations of these modalities (multimodality) [10,32,33]. Various public and private datasets exist for these modalities. Approximately 70% of available public datasets are related to mammography and ultrasound, demonstrating the prevalence of these methods, especially mammography, for breast cancer screening [31,32]. On the other hand, researchers have also widely used other modalities, such as histopathology and MRI, to confirm cancer and to deal with difficulties of mammography and ultrasound imaging, such as large variations in image shape, morphological structure, and breast tissue density. Here, we outline the aforementioned imaging modalities and the available datasets for breast cancer detection.

2.1. Mammograms (MMs)

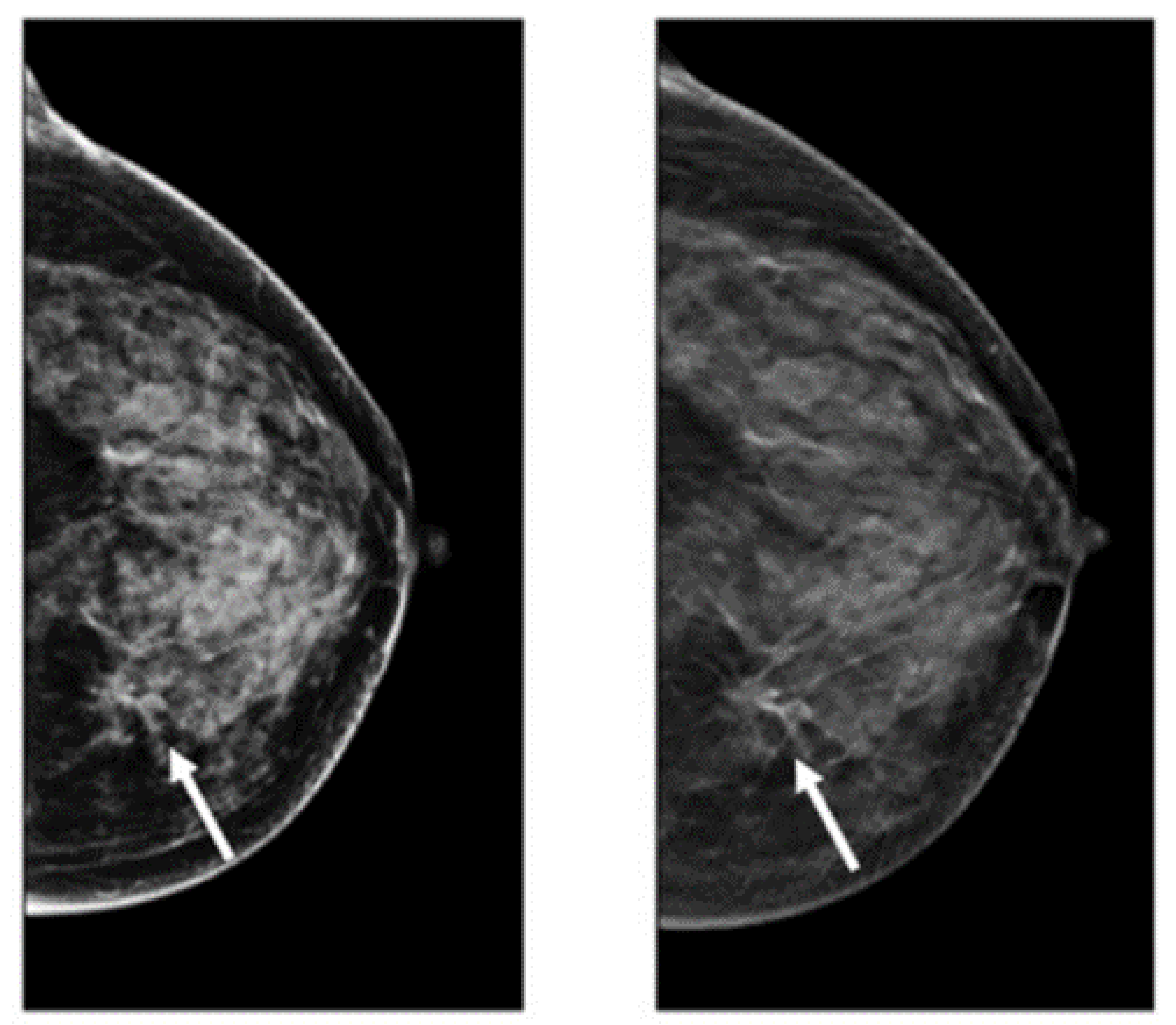

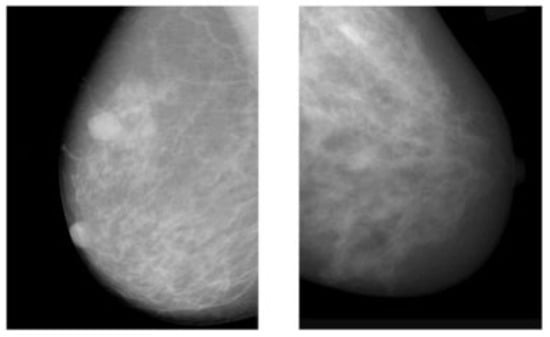

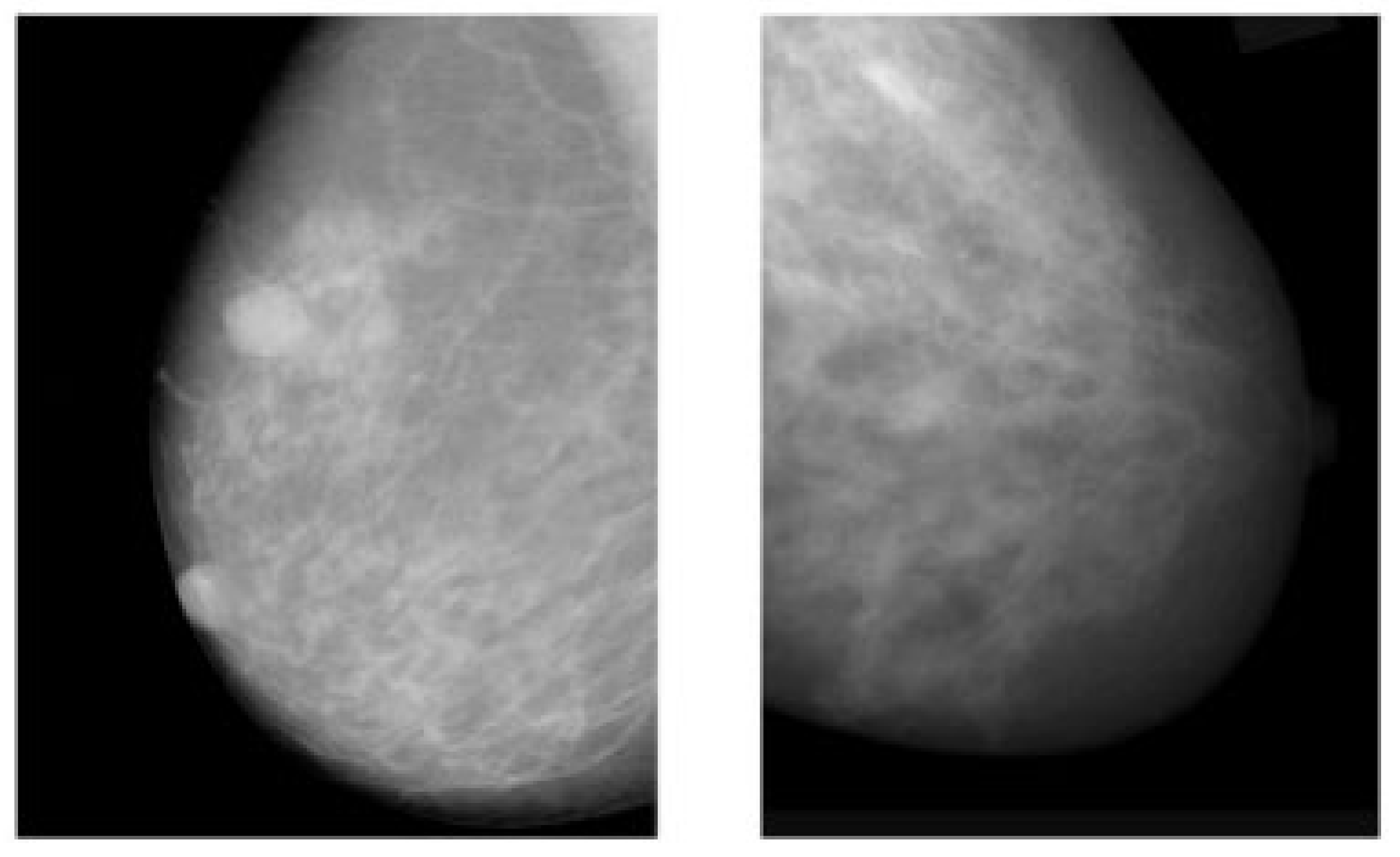

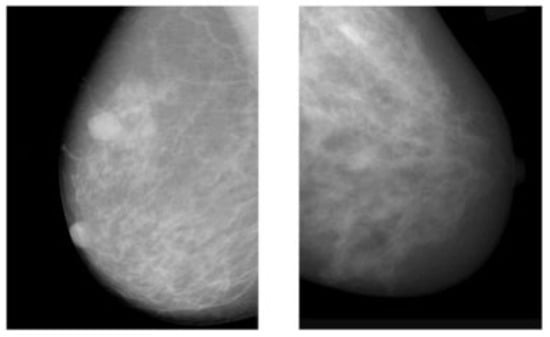

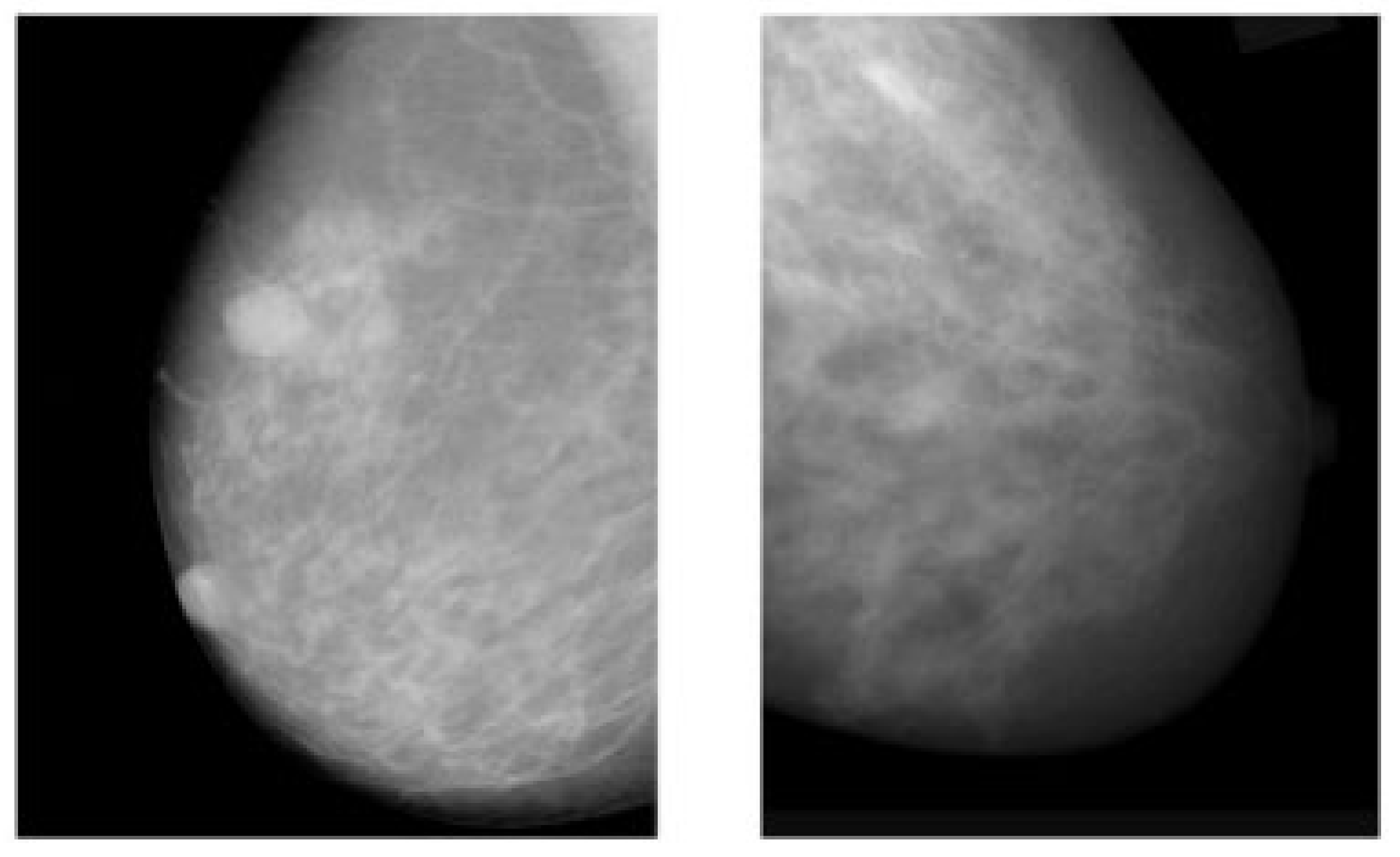

Owing to advantages such as the cost-effective detection of tumors at an initial stage, before they develop, MMs are the most promising screening imaging technique in clinical practice. MMs are images of the breast produced by low-intensity X-rays (Figure 1) [33]. In this imaging modality, cancerous regions appear brighter and clearer than other parts of the breast tissue, which helps to detect small variations in tissue composition; therefore, MMs are used for the diagnosis and analysis of breast cancer [34,35]. Although MMs are the standard approach for breast cancer analysis, they are an inappropriate imaging modality for women with dense breasts [36], since the performance of MMs depends highly on specific tumor morphological characteristics [36,37]. To deal with this problem, automated whole breast ultrasound (AWBU) or other methods are suggested alongside MMs to produce more detailed images of breast tissue [38].

For various tasks in breast cancer analysis, such as breast lesion detection and classification, MMs are generally divided into two forms: screen-film mammograms (SFM) and digital mammograms (DMM). DMM is commonly divided into three categories: full-field digital mammograms (FFDM), digital breast tomosynthesis (DBT), and contrast-enhanced digital mammograms (CEDM) [39,40,41,42,43,44]. SFM was the standard imaging method in MMs because of its high sensitivity (100%) in analyzing and detecting lesions in breasts composed primarily of fatty tissue [45]. However, it has several drawbacks: (1) SFM imaging may need to be repeated with a higher radiation dose, because some parts of an SFM image have low contrast that cannot be improved after acquisition; and (2) different regions of the breast image are rendered according to the characteristic response of the film [19,45]. Since 2010, DMM has replaced film as the primary screening modality. The main advantages of digital imaging over film systems are higher contrast resolution and the ability to magnify the image or change its contrast and brightness. These advantages help radiologists detect subtle abnormalities more easily, particularly against a background of dense breast tissue. Most studies comparing digital and film mammography have found little difference in cancer detection rates [46]. Digital mammography increases the chance of detecting invasive cancer in premenopausal and perimenopausal women and in women with dense breasts; however, it also increases false-positive findings [46]. Randomized mammographic trials/randomized controlled trials (RMT/RCT) represent the most important use of MMs, through which large-scale breast cancer screening is performed. Despite the great capability of MMs for early-stage cancer detection, it is difficult to rely on MMs alone, because additional screening tests, such as breast self-examination (BSE) and clinical breast examination (CBE), are needed alongside mammographic trials/RMT as more feasible methods to detect breast cancer at early stages and improve survival [38,47,48]. Additionally, BSE and CBE avoid the considerable harm associated with MM screening, such as repeated imaging. More details about the advantages and disadvantages of MMs are provided in Table 1.
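The ability of digital mammograms to change contrast and brightness after acquisition is usually implemented as a window/level mapping from raw detector values to display intensities. The following is a minimal sketch, assuming a grayscale image stored as a NumPy array; the function name and the 12-bit example values are hypothetical, and the idea is conceptually similar to (but not identical with) the Window Center/Window Width transform defined in DICOM.

```python
import numpy as np


def window_level(image: np.ndarray, center: float, width: float) -> np.ndarray:
    """Map raw pixel values to a [0, 1] display range with a linear window.

    Values at or below center - width/2 become 0 (black), values at or above
    center + width/2 become 1 (white), and values in between scale linearly.
    Narrowing the window increases displayed contrast; moving the center
    changes brightness.
    """
    if width <= 0:
        raise ValueError("window width must be positive")
    low = center - width / 2.0
    scaled = (image.astype(np.float64) - low) / width
    return np.clip(scaled, 0.0, 1.0)


# Hypothetical 12-bit raw values (0-4095)
raw = np.array([[500, 1800, 2000], [2100, 2200, 4000]])
narrow = window_level(raw, center=2000, width=400)   # stretches the 1800-2200 range
full = window_level(raw, center=2048, width=4096)    # shows the full 12-bit range
```

With the narrow window, the values 2000 and 2100 are displayed 0.25 apart instead of about 0.02 apart, which is how subtle density differences become easier to see on screen.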

Figure 1.

Example of breast cancer images using traditional film MMs. Reprinted/adapted with permission from [49]. 2021, Elsevier.

Table 1.

Advantages and limitations of various imaging modalities.

2.2. Digital Breast Tomosynthesis (DBT)

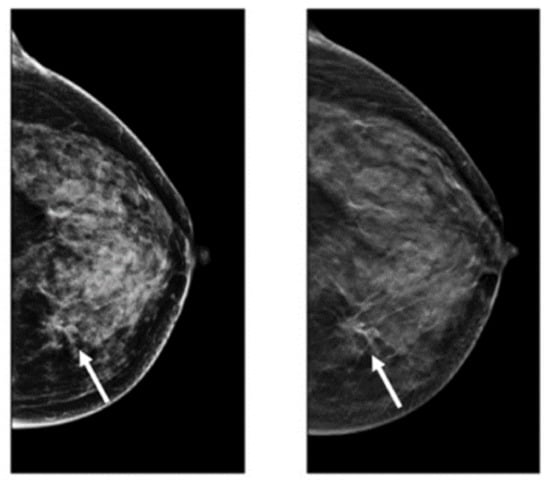

DBT is a novel imaging modality that produces 3D images of the breast from X-ray projections captured at different angles [50]. The procedure is similar to mammography, except that the X-ray tube moves in an arc around the breast [51,52,53] (Figure 2). Repeated exposures of the breast tissue at different angles produce DBT images in half-millimeter slices. Computational methods then combine the information from these X-ray projections into z-stack breast images and 2D reconstructed images [53,54]. In contrast to conventional SFM, DBT can readily image tumors ranging from small to large, and it is particularly advantageous for small lesions and dense breasts [55]. However, the main challenge of DBT is the long reading time, caused by the number of mammograms and z-stack images, as well as the recall rate for the architectural-distortion type of breast cancer abnormality [56]. After FFDM, DBT is the most commonly used imaging modality. Many recent studies have used it for breast cancer detection because of its favorable sensitivity and accuracy in screening and its better depiction of tissue detail [57,58,59,60]. Table 1 provides details of the pros and cons of DBT for breast cancer analysis.
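Combining projections taken at different angles into in-focus slices can be illustrated with shift-and-add, the simplest classical tomosynthesis reconstruction. The sketch below is a deliberately simplified 1D toy, assuming integer per-angle shift factors (standing in for the tangent of each tube angle) and point-like objects; the function names and geometry are hypothetical, and clinical DBT systems typically use more elaborate reconstructions such as filtered back-projection or iterative methods.

```python
import numpy as np


def project(points, shifts, width):
    """Simulate 1D projections of point objects, one row per tube angle.

    A point at (x, z) appears at x + z * s in the projection whose shift
    factor is s, so objects at different heights move by different amounts.
    """
    projections = np.zeros((len(shifts), width))
    for k, s in enumerate(shifts):
        for x, z in points:
            projections[k, x + z * s] += 1.0
    return projections


def shift_and_add(projections, shifts, z_plane):
    """Reconstruct the plane at height z_plane.

    Each projection is shifted back by the displacement an object at z_plane
    would have had, then all projections are averaged: objects in that plane
    line up, objects at other heights are smeared out.
    """
    shifted = [np.roll(p, -z_plane * s) for p, s in zip(projections, shifts)]
    return np.mean(shifted, axis=0)


shifts = [-2, -1, 0, 1, 2]        # hypothetical per-angle shift factors
points = [(20, 3), (40, 6)]       # (x position, height) of two small objects
proj = project(points, shifts, width=64)
plane3 = shift_and_add(proj, shifts, z_plane=3)   # in focus: object at x = 20
plane6 = shift_and_add(proj, shifts, z_plane=6)   # in focus: object at x = 40
```

In each reconstructed plane the in-focus object keeps its full intensity, while the object at the other height is spread over five positions at one fifth of its intensity, a toy analogue of out-of-plane blur in DBT slices.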

Figure 2.

Images of cancerous breast tissue by DBT imaging modality [61]. Reprinted/adapted with permission from [61]. 2021, Elsevier.

2.3. Ultrasound (US)

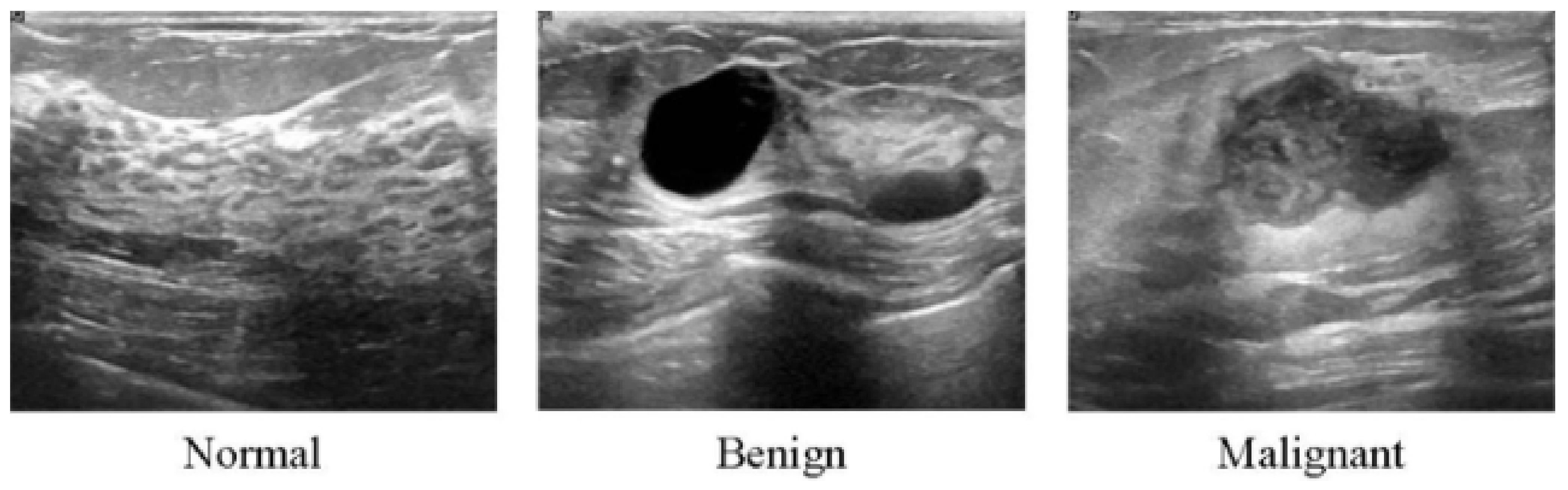

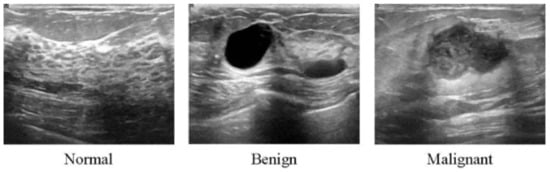

All of the aforementioned modalities can endanger patients and radiologists because of possible overexposure to ionizing radiation, making these approaches somewhat risky for certain sensitive patients [62]. Additionally, these methods show low specificity, that is, a limited ability to correctly classify disease-free tissue as negative. Therefore, although these imaging modalities are widely used for early breast cancer detection, US has also been adopted as a safe imaging modality [62,63,64,65,66,67] (Figure 3). Compared with MMs, US is more convenient for women with dense breasts. It is also useful for characterizing abnormal regions and negative tumors detected by MMs [68]. Some studies have shown the high accuracy of US in detecting and discriminating benign and malignant masses [69]. US images are used in three broad forms: (i) simple two-dimensional grayscale US images, (ii) color US images with added shear wave elastography (SWE) features, and (iii) Nakagami-colored US images, none of which requires ionizing radiation [70,71]. Nakagami-colored US images facilitate region-of-interest extraction through better detection of irregular masses in the breast. Moreover, US can be used as a complement to MMs owing to its availability, its low cost compared to other modalities, and its being well tolerated by patients [70,72,73]. In a recent retrospective study, US breast imaging showed high predictive value when combined with MM images [74]. US images, along with MMs, improved overall detection by about 20% and reduced unnecessary biopsies by 40% [67]. Moreover, US is a reliable and valuable tool for metastatic lymph node screening in breast cancer patients; it is a cheap, noninvasive, easy-to-handle, and cost-effective diagnostic method [75]. However, US has some limitations.
For instance, US images are difficult to interpret and require an expert radiologist to understand them comprehensively, because of their complex nature and the presence of speckle noise [76,77]. To deal with this issue, new technologies have been introduced in breast US imaging, such as automated breast ultrasound (ABUS), which produces 3D images using wider probes. Shin et al. [78] showed that ABUS allows more appropriate image evaluation of large breast masses than conventional breast US. On the other hand, ABUS showed the lowest reliability in predicting residual tumor size and pCR (pathological complete response) [79]. Table 1 highlights more details about the weaknesses and strengths of the US imaging modality.
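Nakagami imaging characterizes the local statistics of the ultrasound echo envelope through the Nakagami shape parameter m. Below is a minimal sketch of the moment-based estimator m = (E[R²])² / Var(R²) applied over image windows, assuming the envelope data (not the log-compressed B-mode image) are available as a NumPy array; the function names and window size are hypothetical, practical systems often use overlapping sliding windows, and the color mapping of the resulting m values is omitted.

```python
import numpy as np


def nakagami_m(envelope: np.ndarray) -> float:
    """Moment-based estimate of the Nakagami shape parameter m.

    m = (E[R^2])^2 / Var(R^2), where R is the echo envelope amplitude.
    m < 1: pre-Rayleigh, m = 1: Rayleigh (fully developed speckle),
    m > 1: post-Rayleigh scattering.
    """
    intensity = envelope.astype(np.float64) ** 2
    return float(intensity.mean() ** 2 / intensity.var())


def nakagami_map(envelope_image: np.ndarray, window: int) -> np.ndarray:
    """Estimate m in non-overlapping square windows to form a parametric map."""
    rows = envelope_image.shape[0] // window
    cols = envelope_image.shape[1] // window
    m_map = np.empty((rows, cols))
    for i in range(rows):
        for j in range(cols):
            block = envelope_image[i * window:(i + 1) * window,
                                   j * window:(j + 1) * window]
            m_map[i, j] = nakagami_m(block)
    return m_map


rng = np.random.default_rng(0)
speckle = rng.rayleigh(scale=1.0, size=(256, 256))  # simulated fully developed speckle
m_global = nakagami_m(speckle)
m_local = nakagami_map(speckle, window=32)
```

For Rayleigh-distributed speckle the estimate should lie close to 1; regions whose scatterer statistics differ (for example, irregular masses) shift m away from 1, which is what the colored map makes visible.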

Figure 3.

Ultrasound images of normal, benign, and malignant breast tissue [80].

2.4. Magnetic Resonance Imaging (MRI)

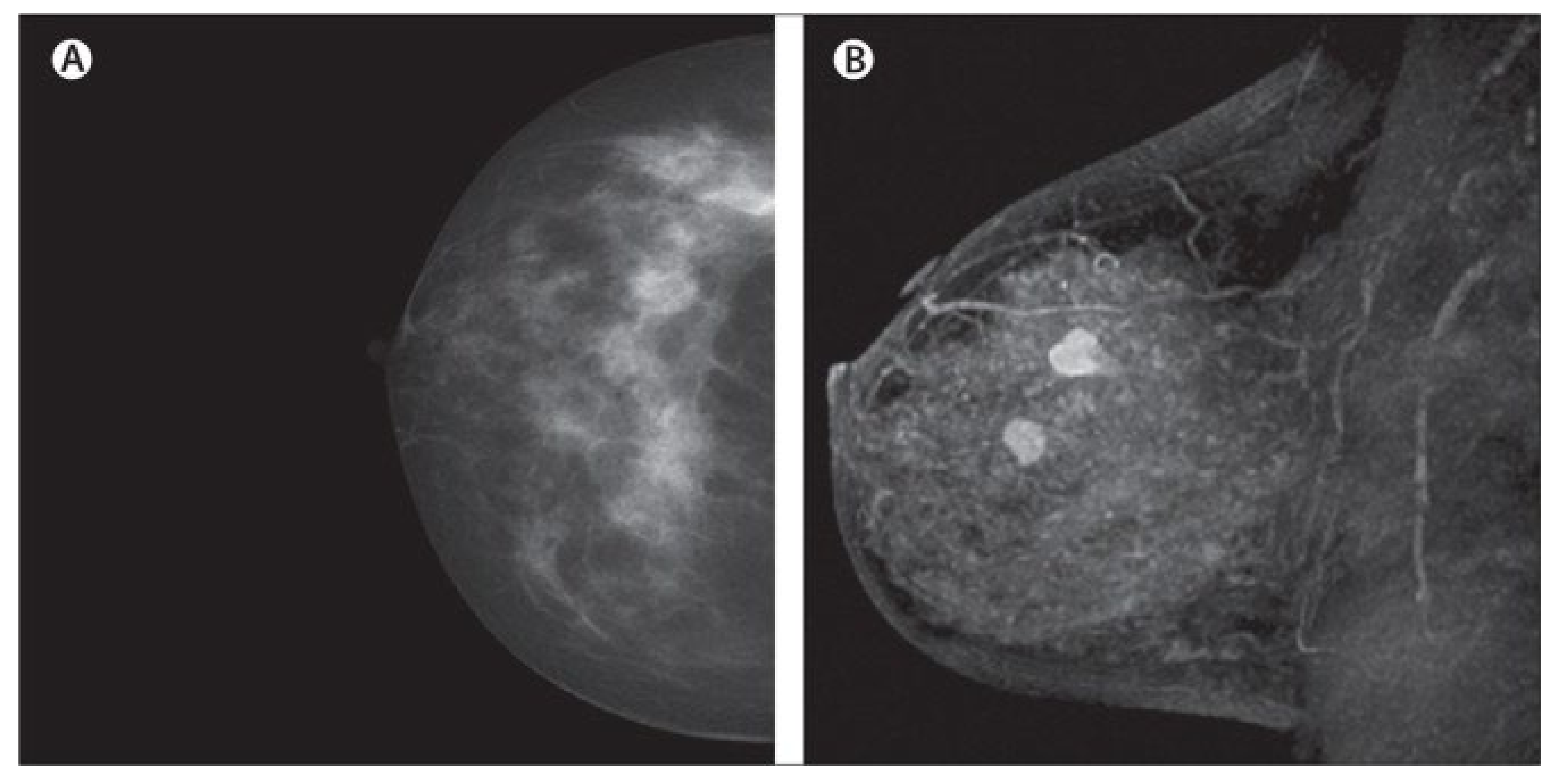

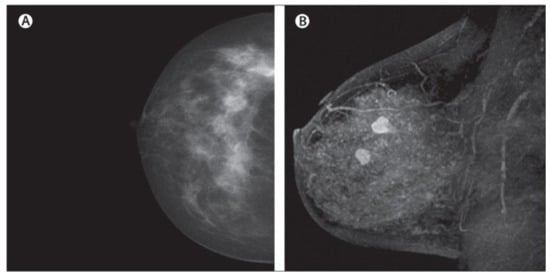

MRI creates images of the whole breast and presents them as thin slices that cover the entire breast volume. It is based on the radiofrequency absorption of nuclei in the presence of strong magnetic fields: MRI uses a magnetic field together with radio waves to capture multiple images of the breast tissue at different angles [81,82,83] (Figure 4). Combining these images produces clear and detailed images of tissue. Hence, MRI creates much clearer images for breast cancer analysis than other imaging modalities [84]. For instance, MRI images show many details clearly, making it easy to detect lesions that would be considered benign in other imaging modalities. Additionally, MRI is the most favorable method for screening women with dense breasts, as it avoids the ionizing radiation and other health risks seen in modalities such as MMs [85,86]. Another notable feature of MRI is its ability to produce high-quality images with a clearer view through the use of a contrast agent administered before imaging [87,88]. Furthermore, MRI is more accurate than MM, DBT, and US in evaluating residual tumors and predicting pCR [79,89], which helps clinicians select appropriate patients who may avoid surgery after neoadjuvant chemotherapy (chemotherapy given before surgery) when pCR is obtained [90,91]. Even though MRI exhibits promising advantages, such as high sensitivity, it shows low specificity, and it is time-consuming and expensive, especially because of its long reading time [92,93]. Notably, some new MRI-based methods, such as ultrafast breast MRI (UF-MRI), create much more efficient images with high screening specificity and a short reading time [94,95].
Additionally, diffusion-weighted MR imaging (DWI-MRI) and dynamic contrast-enhanced MRI (DCE-MRI) provide higher volumetric resolution for better lesion visualization, as well as the temporal enhancement patterns of lesions, for use in breast cancer diagnosis, prognosis, and correlation with genomics [53,81,96,97,98]. Details about various MRI-based methods and their pros and cons are available in Table 1.
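The temporal enhancement pattern of DCE-MRI is often summarized by the shape of a lesion's time-signal curve. The following is a minimal sketch of a rule-based curve classification, assuming the curve is given as mean region-of-interest signal values with the pre-contrast value first; the 10% threshold is a commonly used convention that we adopt here as an assumption, and the function names and sample curves are hypothetical.

```python
def initial_enhancement(signal, peak_index):
    """Percent signal increase from the pre-contrast value signal[0] to the early peak."""
    baseline = signal[0]
    return 100.0 * (signal[peak_index] - baseline) / baseline


def delayed_curve_type(signal, peak_index, threshold=10.0):
    """Classify the delayed phase of a DCE-MRI time-signal curve.

    Compares the last sample with the early-phase peak: a rise of more than
    `threshold` percent -> "persistent", a drop of more than `threshold`
    percent -> "washout", otherwise "plateau".
    """
    early = signal[peak_index]
    late = signal[-1]
    change = 100.0 * (late - early) / early
    if change > threshold:
        return "persistent"
    if change < -threshold:
        return "washout"
    return "plateau"


# Hypothetical region-of-interest mean signals: pre-contrast, then post-contrast samples
curves = {
    "lesion_a": [100, 250, 240, 200],
    "lesion_b": [100, 180, 200, 230],
    "lesion_c": [100, 200, 205, 195],
}
for name, signal in curves.items():
    print(name, initial_enhancement(signal, 1), delayed_curve_type(signal, 1))
```

Rule-based curve types like these are interpretable features; deep learning models applied to DCE-MRI can instead learn temporal patterns directly from the image series.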

Figure 4.

MRI images of dense breast tissue acquired from different angles. (A) Normal; (B) malignant [82]. Reprinted/adapted with permission from [82]. 2011, Elsevier.

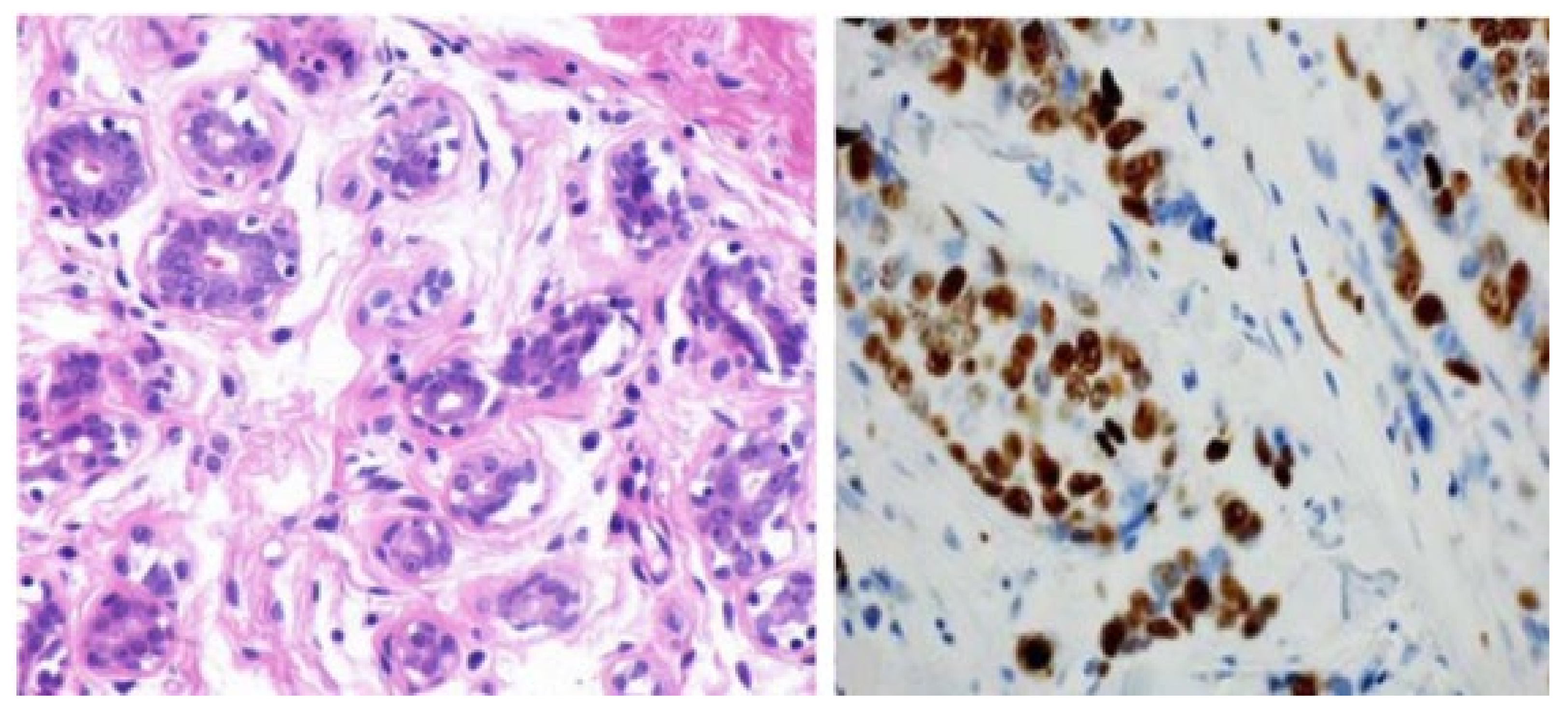

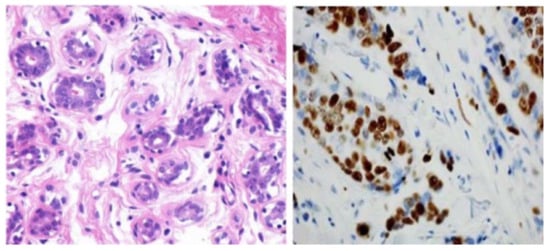

2.5. Histopathology

Recently, various studies have confirmed that the gold standard for confirming breast cancer diagnosis and guiding its treatment and management is the histopathological analysis of a section of the suspected area by a pathologist [99,100,101]. Histopathology consists of examining tissue lesion samples stained, for example, with hematoxylin and eosin (H&E) to produce colored histopathologic (HP) images for better visualization and detailed analysis of tissues [102,103,104] (Figure 5). Generally, HP images are obtained from a piece of suspicious human tissue to be tested and analyzed by a pathologist [105]. HP images are typically gigapixel whole-slide images (WSI), from which small patches are extracted to facilitate analysis (Figure 5). In other words, pathologists extract small patches related to the ROI from the WSI to diagnose breast cancer subtypes; this is a great advantage of HPs, enabling them to classify multiple classes of breast cancer [106,107] for prognosis and treatment. Additionally, much more meaningful ROIs can be derived from HPs than from other imaging modalities, which makes HPs highly reliable for breast cancer classification, especially subtype classification. Furthermore, one of the most important advantages of HPs is that they can be integrated with multi-omics features to analyze and diagnose breast cancer with high confidence [108]. TCGA (The Cancer Genome Atlas) is the most popular resource for breast histopathological images and is widely employed in multi-omics integration studies. Within TCGA, HPs provide contextual features from which morphological properties are extracted, while molecular information from omics data at different levels, including microRNA, copy number variation (CNV), and DNA methylation [108], is also available for each patient. Integrating morphology and multi-omics information provides an opportunity to detect and classify breast cancer more accurately.
Despite these advantages, HPs have some limitations. For example, analyzing multiple biopsy sections, including converting an invasive biopsy into digital images, is a lengthy process that requires intense concentration because of the microscopic size of cell structures [109]. More drawbacks and advantages of the HP imaging modality are summarized in Table 1.
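The patch-based workflow described above can be sketched as tiling a slide into fixed-size patches and discarding those that are mostly background. This is a minimal sketch, assuming the slide (or a downsampled level of it) already fits in memory as an RGB NumPy array; in practice, gigapixel WSIs are usually read region by region with a library such as OpenSlide. The patch size, the brightness threshold for background, and the minimum tissue fraction are hypothetical parameters.

```python
import numpy as np


def extract_patches(slide, patch_size, min_tissue_fraction=0.5, background_threshold=220):
    """Tile an RGB image into non-overlapping patches and keep tissue-rich ones.

    A pixel counts as background when its mean RGB value is brighter than
    `background_threshold` (unstained glass appears near-white in H&E slides).
    Returns a list of ((row, col), patch) pairs, where (row, col) is the
    top-left corner of the patch in the slide.
    """
    height, width = slide.shape[:2]
    kept = []
    for r in range(0, height - patch_size + 1, patch_size):
        for c in range(0, width - patch_size + 1, patch_size):
            patch = slide[r:r + patch_size, c:c + patch_size]
            tissue = patch.mean(axis=2) < background_threshold
            if tissue.mean() >= min_tissue_fraction:
                kept.append(((r, c), patch))
    return kept


# Synthetic "slide": near-white background with one stained (darker) region
slide = np.full((512, 512, 3), 240, dtype=np.uint8)
slide[0:256, 0:256] = (180, 80, 150)  # hypothetical pink/purple H&E-like color
patches = extract_patches(slide, patch_size=128)
```

Only the four patches covering the stained region are kept; a patch classifier would then be applied to each, and patch-level predictions aggregated back to the slide.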

Figure 5.

H&E (haematoxylin and eosin)-stained breast tissue image of a benign case obtained with the histopathology imaging modality [105]. Reprinted/adapted with permission from [105]. 2017, Elsevier.

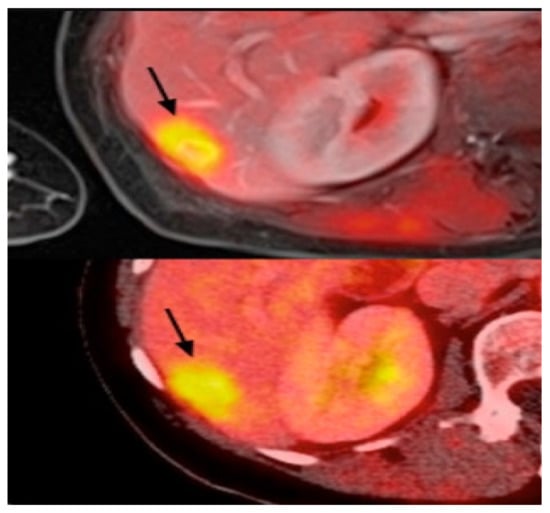

2.6. Positron Emission Tomography (PET)

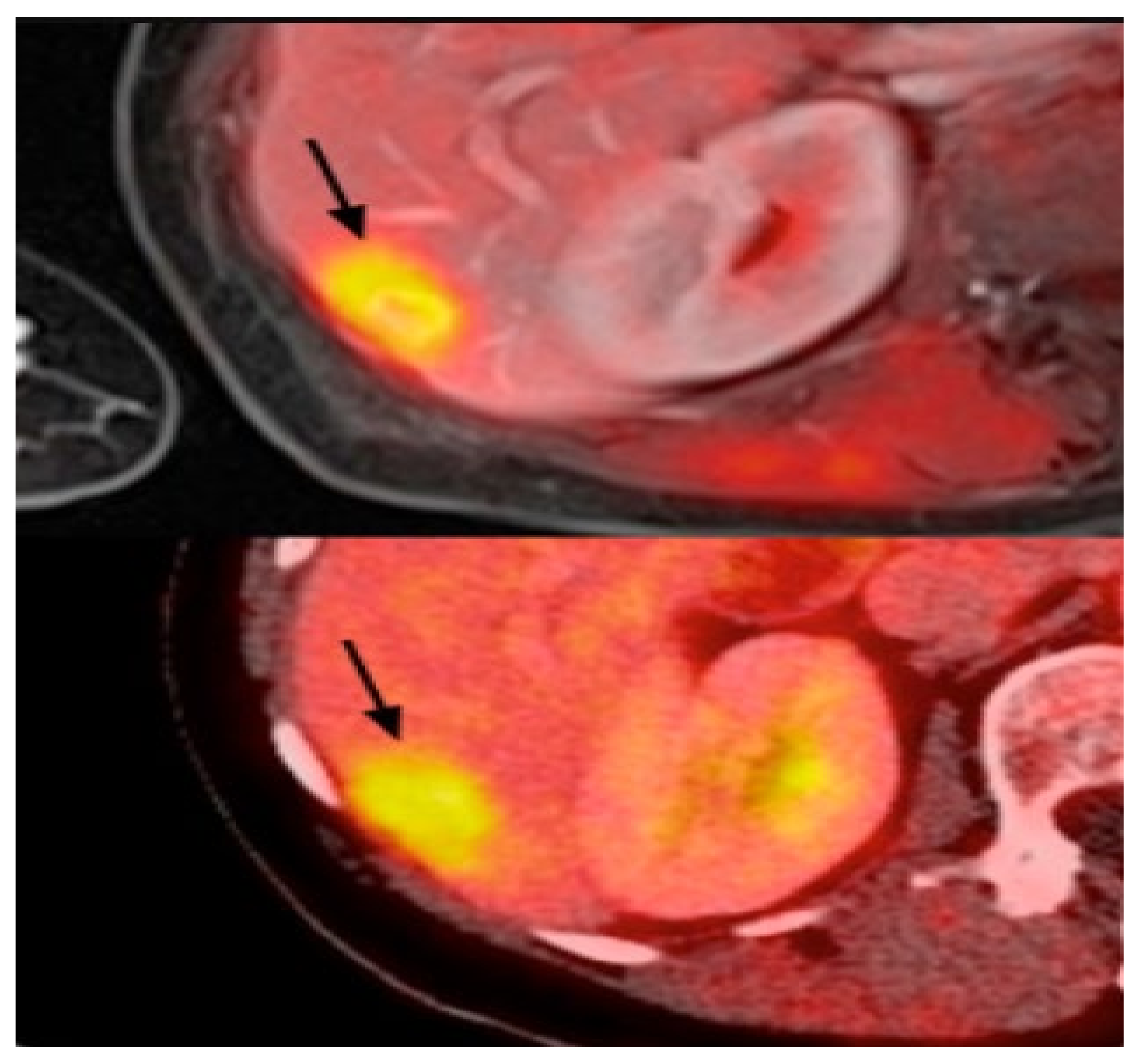

PET uses radiotracers to visualize and measure changes in metabolic processes and other physiological activities, such as blood flow, regional chemical composition, and absorption. PET is a recent, effective imaging method with a promising capability to measure the in vivo cellular, molecular, and biochemical properties of tissues (Figure 6). One of the key applications of PET is breast cancer analysis [110]. Studies have highlighted that PET is a useful tool for staging advanced and inflammatory breast cancer and for evaluating the response to treatment of recurrent disease [34,35]. In contrast to anatomic imaging methods, PET offers more specific targeting of breast cancer with a larger margin between tumor and normal tissue, representing a step forward in cancer detection beyond anatomic modalities [111,112,113]. Thus, PET is used in hybrid modalities with CT for specific organ imaging, combining the advantages of PET with improved spatial resolution. Additionally, PET combines radionuclides with certain elements or pharmaceutical compounds to form radiotracers, which improves its performance [114]. Fluorodeoxyglucose (FDG), a glucose analog, is the radiotracer most commonly used in breast cancer PET imaging studies [115]. Recent studies have clarified a specific correlation between the degree of FDG uptake and several phenotypic features, including tumor histologic type and grade, cell receptor expression, and cellular proliferation [116,117]. These correlations have led to the use of FDG-PET for breast cancer analysis, including diagnosis, staging, re-staging, and treatment response evaluation [111,118,119]. Another PET system is a breast-dedicated high-resolution PET system designed for imaging the hanging breast.
Some studies demonstrate that these PET-based modalities can detect almost all breast lesions and cancerous regions [120]. Table 1 summarizes some limitations and advantages of PET-based imaging modalities. In addition, Table 2 lists the most commonly used public datasets for different imaging modalities in breast cancer detection.
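The degree of FDG uptake is commonly quantified with the standardized uptake value (SUV): the measured tissue activity concentration normalized by injected dose per unit body weight. Below is a minimal sketch of body-weight SUV, assuming activity in kBq/mL, dose in MBq, weight in kg, a tissue density of 1 g/mL, and decay correction of the injected dose to scan time using the approximate F-18 half-life; the function names are hypothetical, and clinical software may handle decay correction differently.

```python
import math

F18_HALF_LIFE_MIN = 109.8  # approximate physical half-life of fluorine-18, in minutes


def decay_corrected_dose(injected_mbq, minutes_elapsed, half_life_min=F18_HALF_LIFE_MIN):
    """Activity remaining at scan time after exponential radioactive decay."""
    return injected_mbq * math.exp(-math.log(2) * minutes_elapsed / half_life_min)


def suv_bw(tissue_kbq_per_ml, injected_mbq, body_weight_kg, minutes_elapsed=0.0):
    """Body-weight SUV, assuming a tissue density of 1 g/mL so the ratio is unitless.

    SUV = C_tissue [kBq/mL] / (dose [MBq] / weight [kg]); the factor of 1000
    between MBq and kBq cancels the factor of 1000 between kg and g (= mL).
    """
    dose = decay_corrected_dose(injected_mbq, minutes_elapsed)
    return tissue_kbq_per_ml * body_weight_kg / dose


# Hypothetical values: 5 kBq/mL in a region, 370 MBq injected, 74 kg patient
suv_now = suv_bw(5.0, 370.0, 74.0)
suv_later = suv_bw(5.0, 370.0, 74.0, minutes_elapsed=F18_HALF_LIFE_MIN)
```

Measuring the same concentration one half-life after injection doubles the SUV, because only half of the injected activity remains in the body at that time.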

Figure 6.

Example of PET images for breast cancer analysis [118]. Reprinted/adapted with permission from [118]. 2021, Elsevier.

Table 2.

Public datasets for different imaging modalities for breast cancer analysis.

3. Artificial Intelligence in Medical Image Analysis

Artificial intelligence (AI) has become very popular in the past few years because it brings human capabilities, e.g., learning, reasoning, and perception, to software accurately and efficiently; as a result, computers gain the ability to perform tasks that are usually carried out by humans. Recent advances in computing resources, the availability of large datasets, and the development of new AI algorithms have opened the path to the use of AI in many different areas, including but not limited to image synthesis [121], speech recognition [122,123], and engineering [124,125,126]. AI has also been employed in healthcare for applications such as protein engineering [127,128,129,130], cancer detection [131], and drug discovery [132,133]. More specifically, AI algorithms have shown an outstanding capability to discover complex patterns and extract discriminative features from medical images, providing higher-quality analysis and better quantitative results efficiently and automatically. AI has been a great help to physicians in achieving more accurate results in imaging-related tasks, such as disease detection and diagnosis [134]. Deep learning (DL) [30] is part of a broader family of AI methods and imitates the way humans learn. DL uses multiple layers to gain knowledge, and the complexity of the learned features increases hierarchically. DL algorithms have been applied in many applications, and in some of them, they have outperformed humans. DL algorithms have also been used for various cancer diagnosis tasks on images from different modalities, including detecting cancer cells, classifying cancer types, and segmenting lesions. To learn more about DL, we refer interested readers to [135].
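One concrete way to see why learned features become more complex with depth is the receptive field: each unit in a deeper convolutional layer depends on a larger region of the input image. The following is a minimal sketch of the standard receptive-field recurrence for a stack of convolution or pooling layers, assuming no dilation; the layer configurations are hypothetical examples, not taken from any specific model in this review.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one output unit after a stack of layers.

    Each layer is a (kernel_size, stride) pair. Starting from r = j = 1, the
    standard recurrence is r_out = r_in + (kernel_size - 1) * j_in and
    j_out = j_in * stride, where j is the spacing of units in input pixels.
    """
    r, j = 1, 1
    for kernel_size, stride in layers:
        r += (kernel_size - 1) * j
        j *= stride
    return r


three_convs = [(3, 1)] * 3                              # three 3x3 convolutions
with_pooling = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]  # convolutions with 2x2 pooling
```

Three stacked 3x3 convolutions see a 7x7 input region, and interleaving two pooling layers grows a comparable stack to an 18x18 region, so early units respond to local edges and textures while deeper units can respond to larger structures such as masses.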

3.1. Benefits of Using DL for Medical Image Analysis

Compared with other areas, decision-making is much more crucial in healthcare systems because it directly affects people's lives. For example, a physician's wrong decision in diagnosing a disease can lead to a patient's death. Complex and constrained clinical environments and workflows make the physician's decision-making very challenging, especially for image-related tasks, which require high visual perception and cognitive ability [136]. In these situations, AI can be a great tool to decrease false-diagnosis rates by extracting specific, known features from the images or even by giving the physician an initial guess at the solution. Nowadays, more and more healthcare providers are encouraged to use AI algorithms because of the availability of computing resources, advances in image analysis tools, and the strong performance of AI methods.

3.2. Deep Learning Models for Breast Cancer Detection

This section briefly discusses the deep learning algorithms applied to images from each breast cancer modality.

3.2.1. Digital Mammography and Digital Breast Tomosynthesis (MM-DBT)

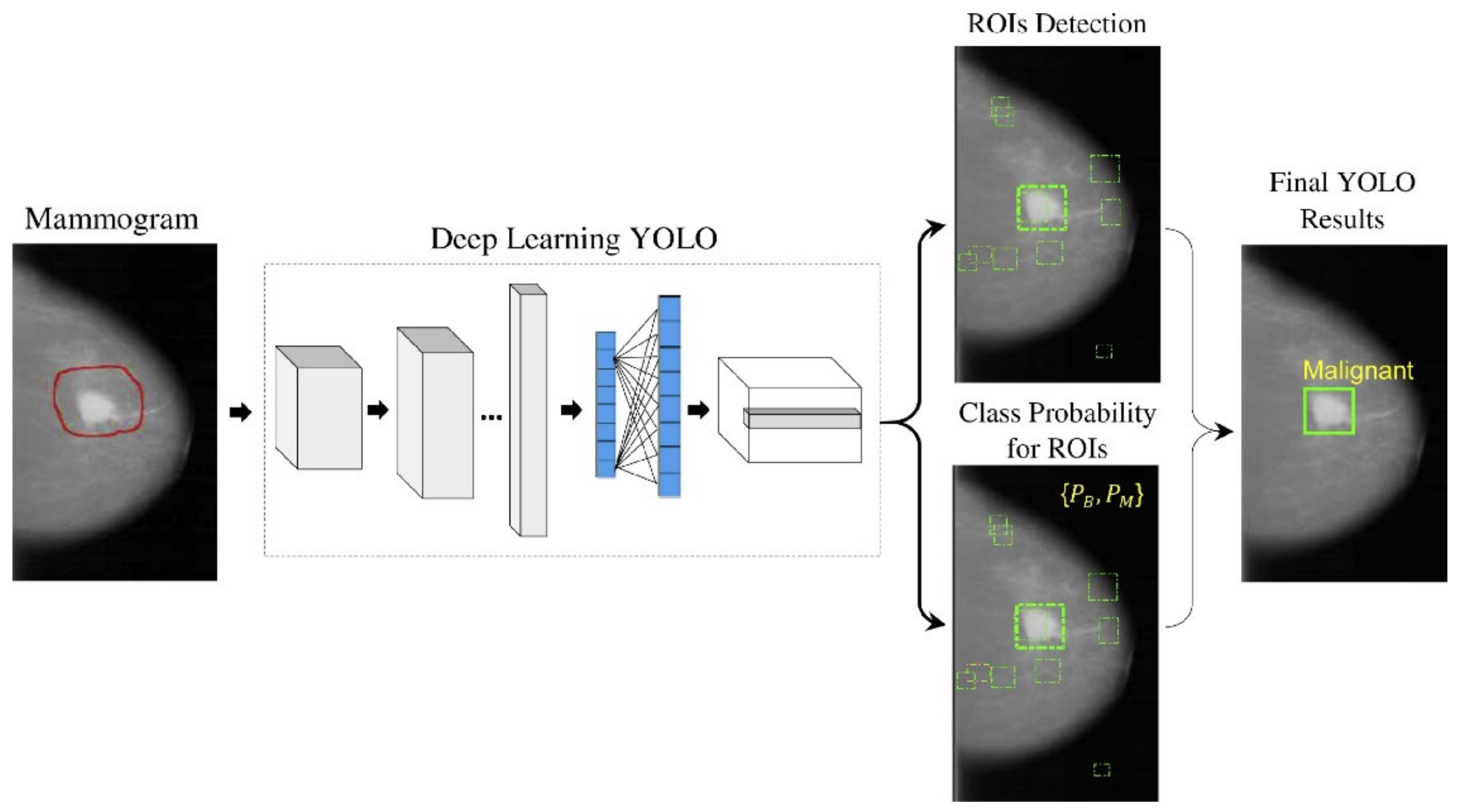

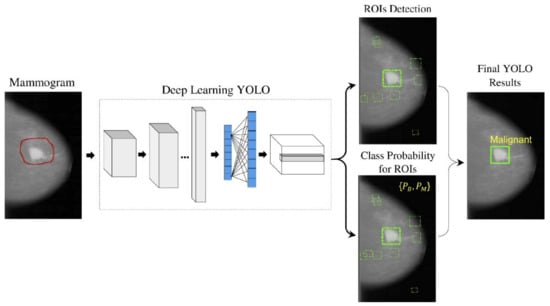

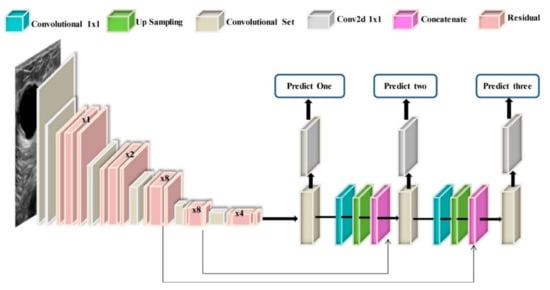

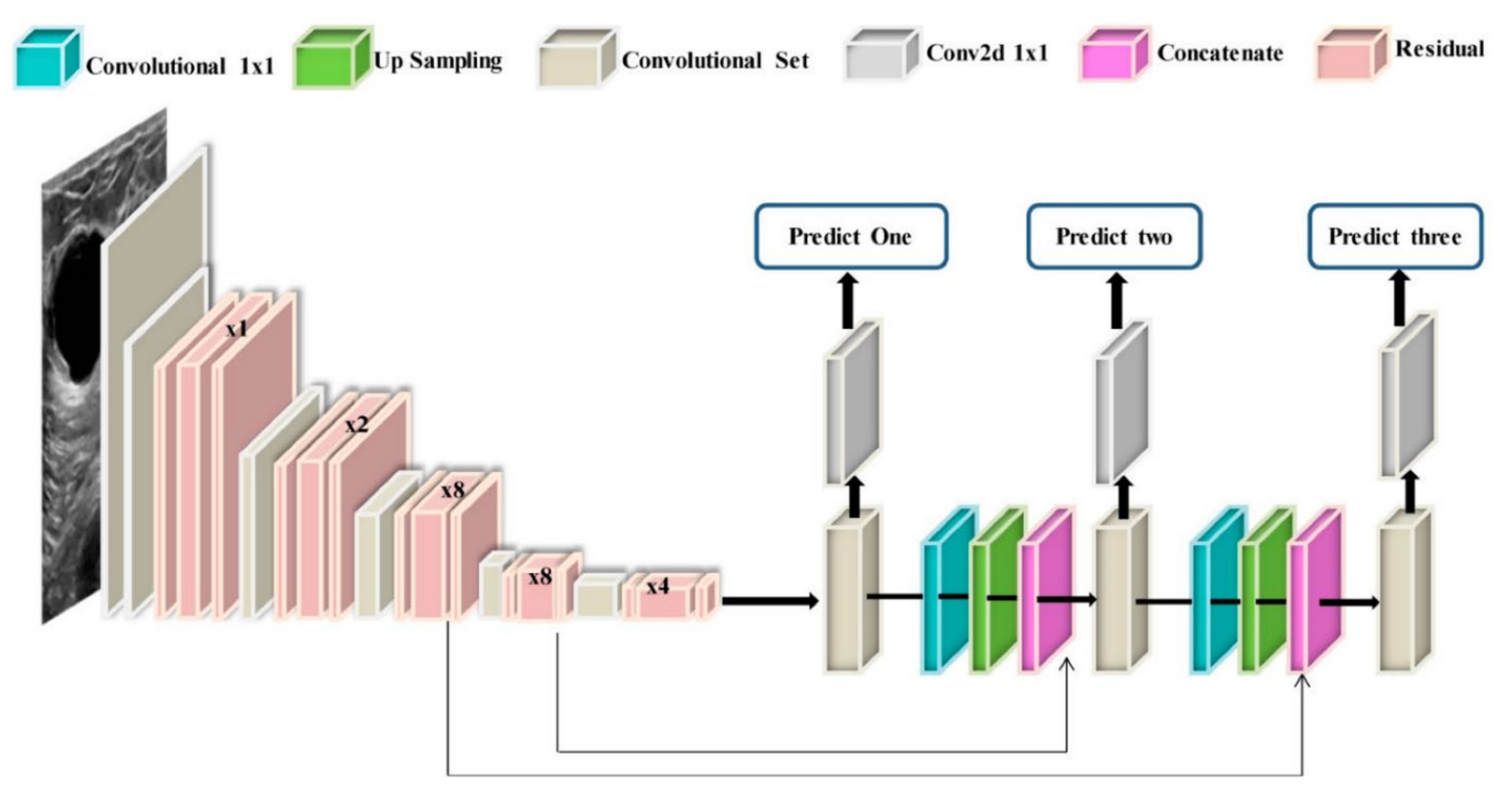

With recent technological developments, MM images have followed the same trend and taken more advanced forms, e.g., digital breast tomosynthesis (DBT). Each MM form has been widely used for breast cancer detection and classification. One of the first attempts to use deep learning for MMs was carried out by [137]. The authors in [137] used a convolutional neural network (CNN)-based model to learn features from mammography images before feeding them to a support vector machine (SVM) classifier. Their algorithm achieved an AUC of 86% in lesion classification, about 6% higher than the best conventional approach before that paper. Following [137], further studies [138,139,140] also used CNN-based algorithms for lesion classification. However, in these papers, the region of interest was extracted without the help of a deep learning algorithm, i.e., by traditional image processing methods [139] or by an expert [140]. More specifically, the authors in [138] first divided MM images into patches, extracted features from the patches using a conventional image-processing algorithm, and then used a random forest classifier to select good candidate patches for their CNN algorithm. Their approach achieved an AUC of 92.9%, slightly better than the baseline conventional method with an AUC of 91%. With the advancement of DL algorithms and the availability of complex and powerful DL architectures, DL methods have been used to extract ROIs from full MM images. As a result, the input to the algorithm is no longer small patches; the full MM image can be used instead. For example, the method proposed in [131] uses YOLO [141], a well-known detection and classification algorithm, to simultaneously extract and classify ROIs in the whole image. Their results show that their algorithm performs similarly to a CNN model trained on small patches, with an AUC of 97%.
Figure 7 shows the overall structure of the proposed model in [131].
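Because the studies above are compared mainly through AUC, it helps to recall what the number means: the probability that a randomly chosen positive (e.g., malignant) case receives a higher score than a randomly chosen negative case. Below is a minimal pure-Python sketch of this Mann-Whitney formulation, with ties counted as one half; the scores and labels are hypothetical, and libraries such as scikit-learn (`roc_auc_score`) compute the same quantity more efficiently.

```python
def auc_mann_whitney(scores, labels):
    """AUC as the fraction of positive-negative pairs ranked correctly.

    labels: 1 for positive cases, 0 for negative cases.
    A pair scores 1 if the positive case has the higher score, 0.5 on a tie.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical model outputs for five lesions
scores = [0.9, 0.8, 0.4, 0.35, 0.1]
labels = [1, 1, 0, 1, 0]
auc = auc_mann_whitney(scores, labels)
```

Here five of the six positive-negative pairs are ordered correctly, giving an AUC of 5/6; the nested loop is written for clarity rather than speed.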

Figure 7.

Schematic diagram of the proposed YOLO-based CAD system in [131]. Reprinted/adapted with permission from [131]. 2021, Elsevier.

DBT has emerged as a predominant breast-imaging modality for increasing the accuracy of cancer detection: compared with full-field digital mammography (FFDM), it has been shown to increase the cancer detection rate (CDR) while decreasing recall rates (RR) [142,143,144]. Accordingly, several DL algorithms have been proposed for cancer detection in DBT images [145,146,147,148,149]. For instance, the authors in [150] proposed a deep learning model based on the ResNet architecture to classify input images as normal, benign, high-risk, or malignant. They trained the model on an FFDM dataset and then fine-tuned it using 2D reconstructions of DBT images obtained with the maximum intensity projection (MIP) method. Their method achieved an AUC of 84.7% on the DBT dataset. In [145], a deep CNN was developed that uses DBT volumes to classify masses. This approach obtained an AUC of 84.7%, about 2% higher than a conventional CAD method with hand-crafted features.
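
To make the MIP step used in [150] concrete, here is a minimal sketch that collapses a stack of reconstructed DBT slices into one 2D image by keeping the brightest value at each pixel position. It assumes the volume is a list of equally sized 2D slices and that the projection runs across slices; the function name and the toy volume are illustrative only, not taken from [150].

```python
from typing import List

Volume = List[List[List[float]]]  # indexed as volume[slice][row][col]
Image = List[List[float]]

def max_intensity_projection(volume: Volume) -> Image:
    """For every (row, col) position, keep the maximum value over all slices."""
    if not volume:
        raise ValueError("volume must contain at least one slice")
    rows, cols = len(volume[0]), len(volume[0][0])
    return [
        [max(slc[r][c] for slc in volume) for c in range(cols)]
        for r in range(rows)
    ]

# Toy 3-slice "DBT volume" of 2 x 2 pixels.
dbt = [
    [[0.1, 0.2], [0.3, 0.0]],
    [[0.5, 0.1], [0.2, 0.4]],
    [[0.2, 0.9], [0.1, 0.3]],
]
print(max_intensity_projection(dbt))  # [[0.5, 0.9], [0.3, 0.4]]
```

With NumPy, the same projection is `volume.max(axis=0)` on an array shaped `(slices, rows, cols)`.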

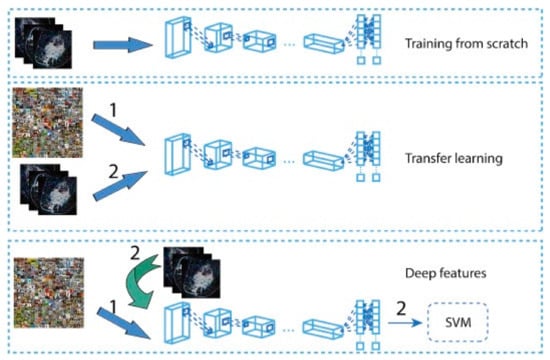

Although deep learning models perform very well in medical image analysis, their major bottleneck is their need for large training datasets, and collecting and labeling data in the medical field is very expensive. Some studies have used transfer learning to overcome this problem. In [151], the authors developed a two-stage transfer learning approach to classify DBT images as mass or normal. In the first stage, they fine-tuned a pretrained AlexNet [152] on FFDM images; in the second stage, this fine-tuned model was further trained on DBT images. The second-stage CNN was then used as a feature extractor for DBT images, and a random forest classifier classified the extracted features as mass or normal. They obtained an AUC of 90% on their test dataset. In [153], the authors used a VGG19 [154] network pretrained on the ImageNet dataset as a feature extractor for FFDM and DBT images for malignant/benign classification. The extracted features were fed to an SVM classifier to estimate the probability of malignancy. Their method obtained AUCs of 98% and 97% on DBT images in the craniocaudal (CC) and mediolateral oblique (MLO) views, respectively. These methods show that, with transfer learning, deep learning models can perform well even when the training dataset is relatively small. Most of the aforementioned studies compare their DL algorithms with traditional CAD methods. However, a more informative way to evaluate a DL method is to compare it directly with radiologists. For example, the performance of DL systems on FFDM and DBT was investigated in [155]. The study shows that, on FFDM images, a DL system can achieve sensitivity comparable to that of radiologists while decreasing the recall rate. On DBT images, an AI system performed on par with radiologists, although the recall rate increased.
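
Comparisons such as those in [142,143,144] and [155] are expressed through sensitivity, recall rate, and cancer detection rate. The sketch below spells out these standard screening definitions: the recall rate is the share of all screened women who are called back, and the CDR is the number of detected cancers per 1000 screens. The class name and all counts are hypothetical, chosen only to show how two readers can have the same sensitivity but different recall rates.

```python
from dataclasses import dataclass

@dataclass
class ScreeningCounts:
    """Outcome counts for one reader (radiologist or AI) on a screening cohort."""
    tp: int  # cancers that were recalled
    fn: int  # cancers that were missed
    fp: int  # women without cancer who were recalled
    tn: int  # women without cancer who were not recalled

    @property
    def total(self) -> int:
        return self.tp + self.fn + self.fp + self.tn

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def recall_rate(self) -> float:
        # Share of all screened women who are called back for further work-up.
        return (self.tp + self.fp) / self.total

    def cancer_detection_rate_per_1000(self) -> float:
        return 1000 * self.tp / self.total

# Hypothetical cohort of 1000 screens containing 10 cancers.
radiologist = ScreeningCounts(tp=8, fn=2, fp=90, tn=900)
ai_system = ScreeningCounts(tp=8, fn=2, fp=60, tn=930)
for name, reader in [("radiologist", radiologist), ("AI", ai_system)]:
    print(name, reader.sensitivity(), round(reader.recall_rate(), 3),
          reader.cancer_detection_rate_per_1000())
```

Both hypothetical readers detect 8 of 10 cancers, but the second recalls 30 fewer women without cancer, which is the kind of trade-off reported when DL systems are compared with radiologists.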

Table 3 lists recent DL-based models used for MM and DBT, along with their performances. The application of DL in breast cancer detection is not limited to mammography images; in the following sections, we discuss DL applications in other breast imaging modalities.

Table 3.

The summary of the studies that used MM and DBT datasets.

3.2.2. Ultrasound (US)

As explained in Section 2, ultrasound performs well in detecting cancers and reduces unnecessary biopsies [183]. It is therefore not surprising that researchers have used US images in their DL models for cancer detection [184,185,186]. For instance, in [184], a CNN based on GoogLeNet [187] was trained on the suspicious ROIs of US images. The method achieved an AUC of 96%, which is 6% higher than a CAD-based method with hand-crafted features. The authors in [188,189,190] trained CNN models directly on whole US images without extracting ROIs. For example, the authors in [190] combined VGG19 and ResNet152 and trained the ensemble network on US images, achieving an AUC of 95% on a balanced, independent test dataset. Figure 8 shows an example of a CNN model for breast cancer subtype classification.
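
The study in [190] combined VGG19 and ResNet152 into an ensemble; how the two networks were fused is not detailed here. One common option is soft voting, i.e., averaging the class probabilities produced by each member, sketched below with hypothetical stand-in functions in place of trained networks. This is an illustration of the general technique, not the method of [190].

```python
from typing import Callable, List, Sequence

# A "model" here is any function mapping an input to class probabilities.
Model = Callable[[Sequence[float]], List[float]]

def soft_vote(models: Sequence[Model], x: Sequence[float]) -> List[float]:
    """Average the class-probability vectors that several models predict for x."""
    outputs = [m(x) for m in models]
    n_classes = len(outputs[0])
    return [sum(o[k] for o in outputs) / len(outputs) for k in range(n_classes)]

# Hypothetical stand-ins for two trained backbones (e.g., VGG19 and ResNet152),
# each returning [P(benign), P(malignant)] for an ultrasound image.
def vgg_like(x: Sequence[float]) -> List[float]:
    return [0.2, 0.8]

def resnet_like(x: Sequence[float]) -> List[float]:
    return [0.4, 0.6]

print(soft_vote([vgg_like, resnet_like], x=[]))  # approximately [0.3, 0.7]
```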

Compared with mammography, there are fewer US datasets, and they usually contain far fewer images. Therefore, most of the proposed DL models use some form of data augmentation, such as rotation, to increase the size of the training data and improve model performance. However, US images should be augmented with care, since some augmentations may decrease model performance. For example, it has been shown in [186] that rotating the image or shifting it in the longitudinal direction can degrade model performance. Generative adversarial networks (GANs) can also be used to generate synthetic US images with or without tumors [191]; these images can be added to the original training images to improve the model's accuracy.
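
The caution about augmentation can be encoded as an explicit policy that only applies transformations considered safe. The sketch below is hypothetical: it allows random left-right flips and small lateral shifts and leaves out rotation and vertical shifts, under the assumption that the image's vertical axis corresponds to the direction that [186] found harmful to perturb. Which axis that is depends on how the images were acquired and stored, so it should be checked before reuse.

```python
import random
from typing import List

Image = List[List[float]]  # image[row][col]

def hflip(img: Image) -> Image:
    """Mirror the image left-right."""
    return [row[::-1] for row in img]

def shift_horizontal(img: Image, dx: int) -> Image:
    """Shift every row by dx pixels (positive = right), zero-padding the gap."""
    width = len(img[0])
    dx = max(-width, min(width, dx))
    if dx >= 0:
        return [[0.0] * dx + row[: width - dx] for row in img]
    return [row[-dx:] + [0.0] * (-dx) for row in img]

def augment_us(img: Image, rng: random.Random, max_shift: int = 2) -> Image:
    """Hypothetical US augmentation policy: random flip plus small lateral shift.

    Rotation and vertical shifts are deliberately excluded, assuming the
    vertical image axis is the direction that should not be perturbed.
    """
    out = hflip(img) if rng.random() < 0.5 else img
    return shift_horizontal(out, rng.randint(-max_shift, max_shift))

rng = random.Random(0)
toy = [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0]]
print(augment_us(toy, rng))
```

Passing a seeded `random.Random` keeps the augmentation reproducible, and every output row is derived only from the same input row, so no content moves vertically.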

US images have also been used for lesion detection, in which the CAD system decides whether a lesion is present in a given image. One challenge with conventional US images in this type of problem is that an ultrasound physician must manually select the images that contain lesions for the models. This depends on the physicians' availability, is usually expensive and time-consuming, and introduces human error into the system [192]. To solve this problem, a method was developed in [193] to detect lesions in real time during US scanning. Another type of US imaging is the 3D automated breast US scan, which captures the entire breast [194,195]. The authors in [195] developed a CNN model based on the VGGNet, ResNet [196], and DenseNet [197] networks, which obtained an AUC of 97% on their private dataset and an AUC of 97.11% on the breast ultrasound image (BUSI) dataset [80].

Some methods combine the detection and classification of lesions in US images in one step [198]. An extensive study in [199] compared different DL architectures for US image detection and classification. Their results show that DenseNet is a good candidate for classifying US images, providing accuracies of 85% and 87.5% for full-image classification and for pre-defined ROIs, respectively. The authors in [200] developed a weakly supervised DL algorithm based on VGG16, ResNet34, and GoogLeNet, trained using 1000 unannotated US images, and reported an average AUC of 88%.

Some studies [201,202,203] validated the performance of DL algorithms against expert interpretation, showing that DL algorithms can greatly help radiologists. This is mostly in cases where the lesion had already been detected by an expert and the DL model was used to classify it. However, unlike the mammography studies, most US studies have not been validated by multiple physicians and do not demonstrate the generalizability of their methods on multiple datasets; these gaps should be addressed in future validations. Table 4 lists recent algorithms used for US images and their performances.

Table 4.

The summary of the studies that used ultrasound dataset.

Figure 8.

Example of a model architecture for breast cancer subtypes classification from US images via CNN models [222].

3.2.3. Magnetic Resonance Imaging (MRI)

As explained in Section 2, MRI has higher sensitivity than MM and US for breast cancer detection in dense breasts [223]. However, a major difference is that MRI is a 3D scan, whereas MM and US produce 2D images. Moreover, in dynamic contrast-enhanced MRI (DCE-MRI), sequences are captured over time, which increases the dimensionality to 4D. This makes MRI more challenging for DL algorithms than MM and US images, as most current DL algorithms are built for 2D images. One way to address this challenge is to convert the 3D image to 2D, either by dividing 3D MRIs into 2D slices [224,225] or by using MIP to build a 2D representation [226]. In addition, most DL algorithms have been developed for color images, which have a third dimension holding the color channels, whereas MRIs are grayscale. Therefore, some MRI models stack three consecutive grayscale slices to form a three-channel image [227,228]. Other approaches modify existing 2D DL architectures to make them suitable for 3D MRI scans [229].
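
The slice-stacking approach of [227,228] can be sketched as follows: for slice i, the three channels are slices i-1, i, and i+1, so a network that expects three color channels receives local 3D context instead. The edge handling (repeating the first or last slice), the channel-first layout, and the function name are assumptions for illustration, not details reported in those studies.

```python
from typing import List

Slice = List[List[float]]  # one 2D grayscale slice, slice[row][col]

def stack_neighbour_slices(volume: List[Slice], i: int) -> List[Slice]:
    """Three-channel input for slice i built from slices i-1, i, i+1 (channel-first).

    At the first and last slice the missing neighbour is replaced by repeating
    the edge slice; this padding choice is an assumption.
    """
    n = len(volume)
    if not 0 <= i < n:
        raise IndexError("slice index out of range")
    return [volume[max(i - 1, 0)], volume[i], volume[min(i + 1, n - 1)]]

# Toy volume: 4 slices of 1 x 1 pixel whose value equals the slice index.
mri = [[[float(z)]] for z in range(4)]
print([ch[0][0] for ch in stack_neighbour_slices(mri, 0)])  # [0.0, 0.0, 1.0]
print([ch[0][0] for ch in stack_neighbour_slices(mri, 2)])  # [1.0, 2.0, 3.0]
```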

All the above approaches have been used in DL algorithms for lesion classification. For example, [230] used 2D slices of the ROIs as input to a CNN model and obtained an accuracy of 85% on their test dataset. The MIP technique was used in [231], which obtained an AUC of 89.5%. In the study by Zhou et al. [229], the authors stacked grayscale MRIs to build 3D images for their DL methods, and their algorithm obtained an AUC of 92%. In another study [193], the proposed algorithm used the actual 3D MRI scans and obtained an AUC of 85.9% with a 3D version of DenseNet [197]. It is worth mentioning that the performances of these 2D and 3D approaches cannot be directly compared, since they used different datasets. However, some studies compared their proposed methods with radiologists' interpretations [228,229]. Figure 9 shows a schematic of a framework for cancer subtype classification with MRI.

As with MM and US images, DL methods have been widely used for lesion detection and segmentation in MRI. A CNN algorithm based on RetinaNet [232] was developed in [233] to detect lesions in 4D MR scans, obtaining a sensitivity of 95%. One study [234] used a mask-guided hierarchical learning (MHL) framework based on the U-net architecture for breast tumor segmentation and achieved a Dice similarity coefficient (DSC) of 72%. In another work [235], the authors proposed a U-net-based CNN model called 3TP U-net for lesion segmentation, which obtained a DSC of 61.24%. Alternatively, the authors in [236] refined the U-net architecture to build a CNN-based model for segmenting lesions in MRIs, achieving a DSC of 86.5%. Note that most lesion segmentation algorithms require, as ground truth for training, a mask indicating which pixels belong to the breast. These masks help the models focus on the relevant region and ignore areas that carry no information. Table 5 lists recent algorithms used for MRI images and their performances.
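
The segmentation results above are reported as the Dice similarity coefficient, DSC = 2|A ∩ B| / (|A| + |B|), where A is the predicted mask and B the ground-truth mask (often quoted as a percentage). A minimal sketch for binary masks stored as nested lists of 0/1 values; treating two empty masks as a perfect match (DSC = 1) is a convention assumed here.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # binary mask, 1 = lesion pixel

def dice(pred: Mask, truth: Mask) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    intersection = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 1.0 if total == 0 else 2.0 * intersection / total

pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(dice(pred, truth))  # 2 * 2 / (3 + 3), about 0.667
```

The masks are assumed to have identical shapes; `zip` would silently truncate mismatched inputs.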

Figure 9.

A model architecture for cancer subtypes prediction via ResNet 50 and CNN models from MRI images [237]. Reprinted/adapted with permission from [237]. 2019, Elsevier.

Table 5.

Summary of the studies that used MRI datasets.

3.2.4. Histopathology

In contrast to other modalities, histopathology images are color images, provided either as whole-slide images (WSIs) or as image patches extracted from the WSI, i.e., ROIs selected by pathologists. Histopathology images are a valuable means of diagnosing breast cancer types that cannot be identified from radiology images such as MRIs. Moreover, because of the tissue detail they contain, these images have been used to detect cancer subtypes. They are therefore widely used with DL algorithms for cancer detection. For example, Ref. [258] employed a CNN-based DL algorithm to classify histopathology images into four classes: normal tissue, benign lesion, in situ carcinoma, and invasive carcinoma. They combined the classification results of all the image patches to obtain the final image-wise classification. They also used their model to classify the images into two classes: carcinoma and non-carcinoma. An SVM was also trained on the features extracted by the CNN to classify the images. Their method obtained an accuracy of 77.8% on four-class classification and 83.3% on binary classification. In [259], two CNN models were developed, one for predicting malignancy and the other for predicting malignancy and image magnification level simultaneously. They used images of size 700 × 460 pixels at different magnification levels, and their average benign/malignant binary classification accuracy was 83.25%. A novel framework was proposed in [260] that uses a hybrid attention-based mechanism to classify histopathology images; the attention mechanism automatically finds the useful regions in raw images.
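
The patch-to-image step of [258] can be illustrated with a simple majority vote over patch predictions, followed by the four-to-two class mapping (in situ and invasive carcinoma as "carcinoma", normal and benign as "non-carcinoma"). Majority voting is only one possible rule, and the tie-breaking toward the more severe class is a hypothetical choice; the exact aggregation used in [258] may differ.

```python
from collections import Counter
from typing import List

# Four-class labels, ordered from least to most severe.
CLASSES = ["normal", "benign", "in_situ", "invasive"]

def image_label_by_majority(patch_labels: List[str]) -> str:
    """Image-wise label = most frequent patch-wise label.

    Ties go to the more severe class (later in CLASSES); this rule is hypothetical.
    """
    if not patch_labels or not set(patch_labels) <= set(CLASSES):
        raise ValueError("need at least one patch label, all from CLASSES")
    counts = Counter(patch_labels)
    best = max(counts.values())
    return [c for c in CLASSES if counts[c] == best][-1]

def to_binary(label: str) -> str:
    """Collapse the four classes into carcinoma vs. non-carcinoma."""
    return "carcinoma" if label in ("in_situ", "invasive") else "non-carcinoma"

patches = ["benign", "invasive", "invasive", "normal"]
label = image_label_by_majority(patches)
print(label, to_binary(label))  # invasive carcinoma
```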

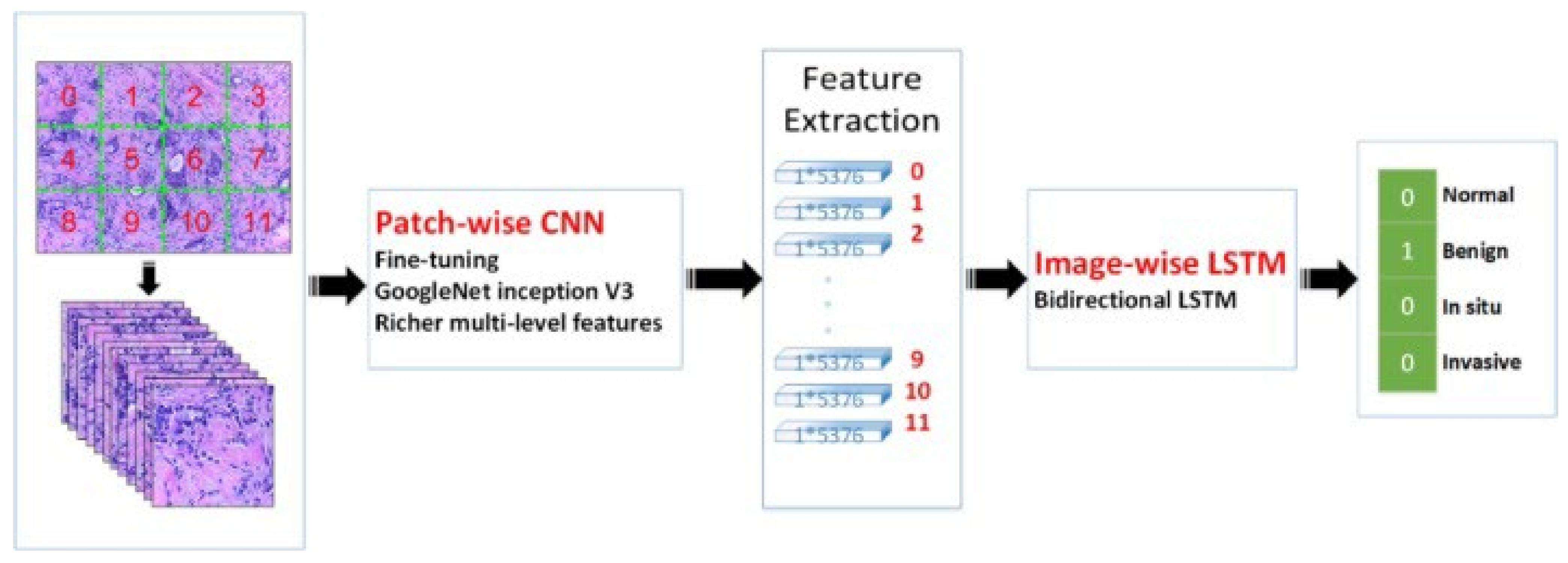

Transfer learning has also been employed in analyzing histopathology images, since histopathology datasets lack the large amounts of data required by deep learning models. For example, the method developed in [261] fine-tunes pretrained Inception-V3 [187] and Inception-ResNet-V2 [262] models for both binary and multiclass classification of histology images, obtaining an accuracy of 97.9% in binary classification and 92.07% in multiclass classification. In another work [263], the authors developed a framework for classifying malignant and benign cells that extracts features from images using GoogLeNet, VGGNet, and ResNet and then combines those features for use in the classifier; it obtained an average accuracy of 97%. The authors in [264] used a fine-tuned GoogLeNet to extract features from small patches of pathological images and fed the extracted features to a bidirectional long short-term memory (LSTM) layer for classification, obtaining an accuracy of 91.3%. Figure 10 shows an overview of the method proposed in [264]. GANs have also been combined with transfer learning to further increase classification accuracy. In [265], StyleGAN [266] and Pix2Pix [267] were used to generate synthetic images, and VGG-16 and VGG-19 were then fine-tuned to classify images. The proposed method achieved an accuracy of 98.1% in binary classification.

Figure 10.

Prediction of breast cancer grades from extracted patches from histopathology images via patch-wise LSTM architecture [264]. Reprinted/adapted with permission from [264]. 2019, Elsevier.

Histopathology images have been widely used for detection and segmentation tasks, including nuclei detection and segmentation. For instance, [268] developed a novel framework called HASHI that automatically detects invasive breast cancer in whole-slide images; it obtained a Dice coefficient of 76% on an independent test dataset. In [269], a series of handcrafted features and features extracted by a CNN were combined for better nuclei detection; the method was evaluated on three different datasets and obtained an F-score of 90%. The authors in [270] presented a fully automated workflow for nuclei segmentation in histopathology images based on deep learning and morphological properties extracted from the images, which achieved an accuracy of 95.4% and an F1-score of 80.5%. In [271], the authors first extracted small patches from the high-resolution whole slides and segmented each patch using a CNN with an encoder-decoder structure; to combine the local segmentation results, they then used an improved merging strategy based on a fully connected conditional random field. Their algorithm obtained a segmentation accuracy of 95.6%. Table 6 shows the performance of recently developed DL methods on histology images.
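
Working on patches, as in [271], starts by tiling the whole slide. The sketch below generates the top-left corners of a regular grid of square patches, snapping the last row and column to the slide border so that every pixel is covered. Overlapping patches (stride smaller than the tile size) are what a merging step, such as the CRF-based strategy of [271], later has to reconcile. Tile size, stride, and function names are hypothetical; reading the pixels themselves would typically go through a WSI library such as OpenSlide.

```python
from typing import Iterator, List, Tuple

def _starts(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis; the last tile is snapped to the border."""
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] != length - tile:
        starts.append(length - tile)
    return starts

def tile_coordinates(width: int, height: int, tile: int, stride: int) -> Iterator[Tuple[int, int]]:
    """Yield top-left (x, y) corners of tile x tile patches that cover the slide."""
    if stride <= 0 or tile > min(width, height):
        raise ValueError("need stride > 0 and a tile no larger than the slide")
    for y in _starts(height, tile, stride):
        for x in _starts(width, tile, stride):
            yield x, y

print(list(tile_coordinates(10, 6, tile=4, stride=3)))
# [(0, 0), (3, 0), (6, 0), (0, 2), (3, 2), (6, 2)]
```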

3.2.5. Positron Emission Tomography (PET)/Computed Tomography (CT)

PET/CT is a nuclear medicine imaging technique that improves the detection and classification of axillary lymph nodes and distant staging [272]. However, it has difficulty detecting early-stage breast cancer, so it is not surprising that PET/CT has rarely been used with DL algorithms. Nevertheless, PET/CT has some important applications to which DL algorithms can be applied. For example, as discussed in [273], breast cancer is one of the most common causes of bone metastasis. A CNN-based algorithm was developed in [274] to detect breast cancer metastasis on whole-body scintigraphy scans; it obtained 92.5% accuracy in the binary classification of whole-body scans.

In another application, PET/CT can be used to quantify the whole-body metabolic tumor volume (MTV), and automating this quantification reduces the labor and cost of obtaining MTV. For example, in [275], a model trained on the MTV of lymphoma and lung cancer patients was used to detect lesions in PET/CT scans of breast cancer patients; it detected 92% of the measurable lesions.
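
Once lesions have been delineated, whether manually or by a DL model as in [275], MTV itself is simple arithmetic: the number of lesion voxels whose standardized uptake value (SUV) reaches a threshold, multiplied by the volume of one voxel. The sketch assumes a flattened SUV array, a boolean lesion mask supplied by some upstream segmentation, and a fixed SUV threshold of 2.5; this threshold is one common convention among several (e.g., a percentage of SUVmax), not necessarily the one used in [275].

```python
from typing import Sequence

def metabolic_tumor_volume(
    suv: Sequence[float],
    lesion_mask: Sequence[bool],
    voxel_volume_ml: float,
    threshold: float = 2.5,
) -> float:
    """MTV in mL: lesion voxels with SUV >= threshold, times the volume of one voxel."""
    if len(suv) != len(lesion_mask):
        raise ValueError("suv and lesion_mask must have the same length")
    n_voxels = sum(1 for v, m in zip(suv, lesion_mask) if m and v >= threshold)
    return n_voxels * voxel_volume_ml

# Toy flattened scan: 6 voxels of 4 x 4 x 4 mm = 0.064 mL each.
suv = [0.8, 3.1, 4.0, 2.4, 6.2, 5.0]
# The last voxel has high uptake but lies outside any lesion (e.g., physiological uptake).
mask = [False, True, True, True, True, False]
print(metabolic_tumor_volume(suv, mask, voxel_volume_ml=0.064))  # 3 voxels, about 0.192 mL
```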

Table 6.

The summary of the studies that used histopathology datasets.

| Paper | Year | Task | Model | Dataset | Evaluation |
|---|---|---|---|---|---|
| Zainudin et al. [276] | 2019 | Breast Cancer Cell Detection/Classification | CNN | MITOS | Acc = 84.5%; TP = 80.55%; FP = 11.6% |
| Li et al. [277] | 2019 | Breast Cancer Cell Detection/Classification | Deep cascade CNN | MITOSIS; AMIDA13; TUPAC16 | MITOSIS: F-score = 56.2%; AMIDA13: F-score = 67.3%; TUPAC16: F-score = 66.9% |
| Das et al. [278] | 2019 | Breast Cancer Cell Detection/Classification | CNN | MITOS; ATYPIA14 | MITOS: F1-score = 84.05%; ATYPIA14: F1-score = 59.76% |
| Gour et al. [279] | 2020 | Classification | CNN | BreakHis | Acc = 92.52%; F1-score = 93.45% |
| Saxena et al. [280] | 2020 | Classification | CNN | BreakHis | Avg. Acc = 88% |
| Hirra et al. [281] | 2021 | Classification | DBN | DRYAD | Acc = 86% |
| Senan et al. [282] | 2021 | Classification | CNN | BreakHis | Acc = 95%; AUC = 99.36% |
| Zewdie et al. [283] | 2021 | Classification | CNN | Private; BreakHis; Zendo | Binary Acc = 96.75%; Grade classification Acc = 93.86% |
| Kushwaha et al. [284] | 2021 | Classification | CNN | BreakHis | Acc = 97% |
| Gheshlaghi et al. [285] | 2021 | Classification | Auxiliary Classifier GAN | BreakHis | Binary Acc = 90.15%; Sub-type classification Acc = 86.33% |
| Reshma et al. [286] | 2022 | Classification | Genetic Algorithm with CNN | BreakHis | Acc = 89.13% |
| Joseph et al. [287] | 2022 | Classification | CNN | BreakHis | Avg. Multiclass Acc = 97% |
| Ahmad et al. [288] | 2022 | Classification | CNN | BreakHis | Avg. Binary Acc = 99%; Avg. Multiclass Acc = 95% |
| Mathew et al. [289] | 2022 | Breast Cancer Cell Detection/Classification | CNN | ATYPIA; MITOS | F1-score = 61.91% |
| Singh and Kumar [290] | 2022 | Classification | Inception ResNet | BHI; BreakHis | BHI: Acc = 85.21%; BreakHis: Avg. Acc = 84% |
| Mejbri et al. [291] | 2019 | Tissue-level Segmentation | DNNs | Private | U-Net: Dice = 86%; SegNet: Dice = 87%; FCN: Dice = 86%; DeepLab: Dice = 86% |
| Guo et al. [292] | 2019 | Cancer Regions Segmentation | Transfer learning based on Inception-V3 and ResNet-101 | Camelyon16 | IoU = 80.4%; AUC = 96.2% |
| Priego-Torres et al. [271] | 2020 | Tumor Segmentation | CNN | Private | Acc = 95.62%; IoU = 92.52% |
| Budginaitė et al. [293] | 2021 | Cell Nuclei Segmentation | Micro-Net | Private | Dice = 81% |
| Pedersen et al. [294] | 2022 | Tumor Segmentation | CNN | Norwegian cohort [295] | Dice = 93.3% |
| Khalil et al. [296] | 2022 | Lymph Node Segmentation | CNN | Private | F1-score = 84.4%; IoU = 74.9% |

4. Discussion

Breast cancer is a major cause of death among women worldwide, and detecting it at an early stage is essential to reduce mortality. Recently, many imaging modalities have been used to give more detailed insights into breast cancer. However, manually analyzing the huge number of images these modalities produce is difficult and time-consuming, which can lead to inaccurate diagnoses and an increased false-detection rate. An automated approach is therefore needed to tackle these problems. A widely used approach for medical image analysis is computer-aided detection/diagnosis (CAD). CAD systems are designed to help physicians reduce errors in analyzing medical images: a CAD system highlights suspicious features in images (e.g., masses) and helps radiologists reduce false-negative readings. However, CAD systems usually produce more false marks than true ones, and it is the radiologist's responsibility to evaluate the results. This characteristic increases the reading time and limits the number of cases that radiologists can evaluate. Recent advances in AI, especially DL-based methods, can effectively speed up the image analysis process and help radiologists in early breast cancer diagnosis.

Considering the importance of DL-based CAD systems for breast cancer detection and diagnosis, in this paper we have discussed the applications of different DL algorithms in breast cancer detection. We first reviewed the imaging modalities used for breast cancer screening and diagnosis, discussed the advantages and limitations of each modality, and summarized the public datasets available for each modality, with links to the datasets. We then reviewed recent DL algorithms used for breast imaging analysis, along with details of their datasets and results. The studies presented promising results for DL-based CAD systems. However, DL-based CAD tools still face many challenges that hinder their clinical use. Here, we discuss some of these challenges as well as future directions for cancer detection studies.

One of the main obstacles to building a robust DL-based CAD tool is the cost of collecting medical images. The medical images used for DL algorithms should include reliable annotations and come from many different patients. Collecting sufficient abnormal data is far more costly than collecting normal cases, since abnormal cases are much rarer (e.g., only several abnormal cases per thousand patients in the breast cancer screening population). Data collection also depends on the number of patients who undergo a specific examination and on the availability of equipment and protocols in different clinical settings. For example, MM datasets are usually very large, including thousands of patients, whereas MRI or PET/CT datasets contain far fewer patients. Because large public MM datasets exist, many more DL algorithms have been developed and validated for MM than for other modalities. One way to create large datasets for different imaging modalities is multi-institutional collaboration. Datasets obtained from such collaborations cover a large group of patients with different characteristics, imaging equipment, clinical settings, and protocols, and can make DL algorithms more robust and reliable.

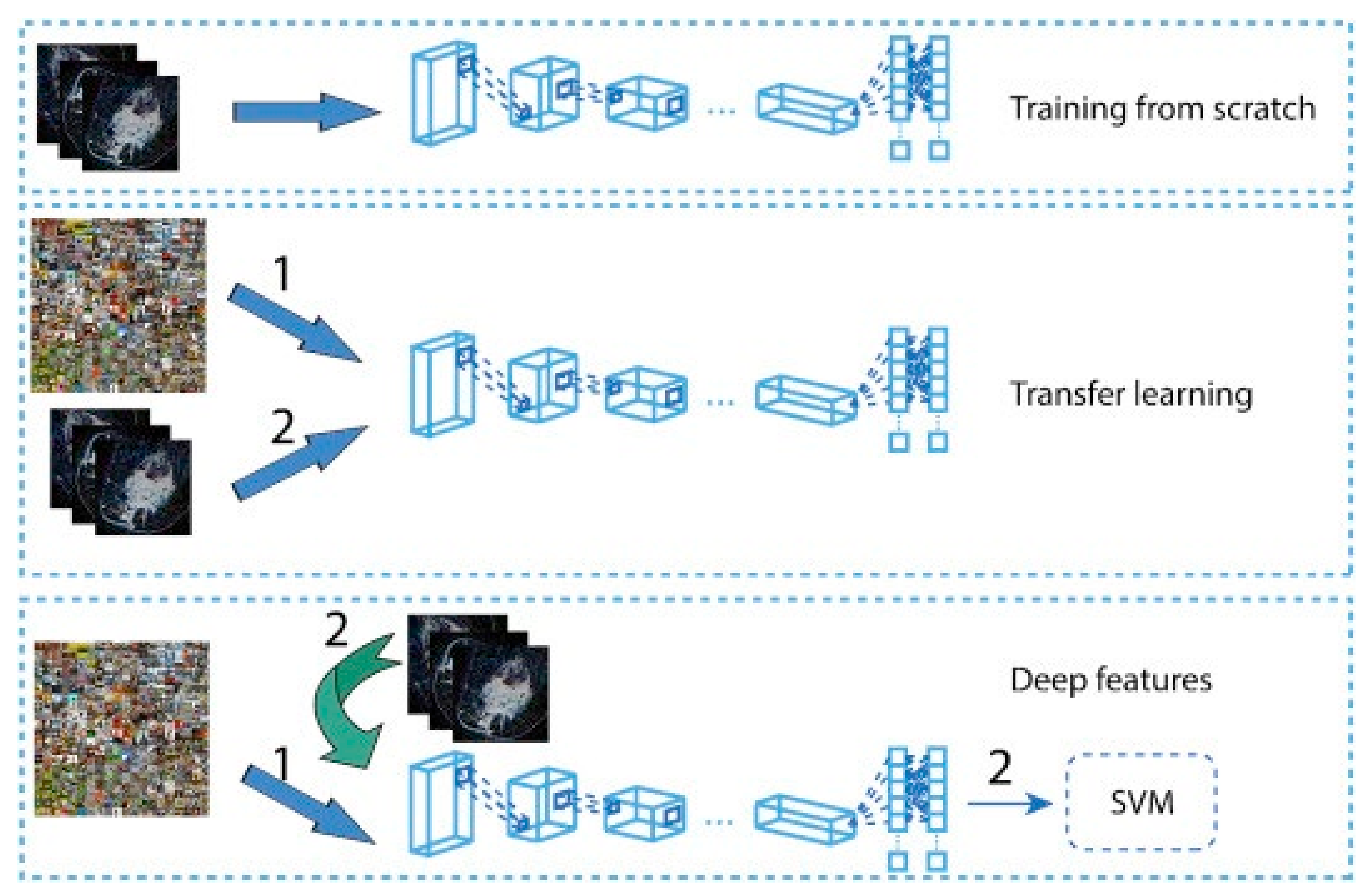

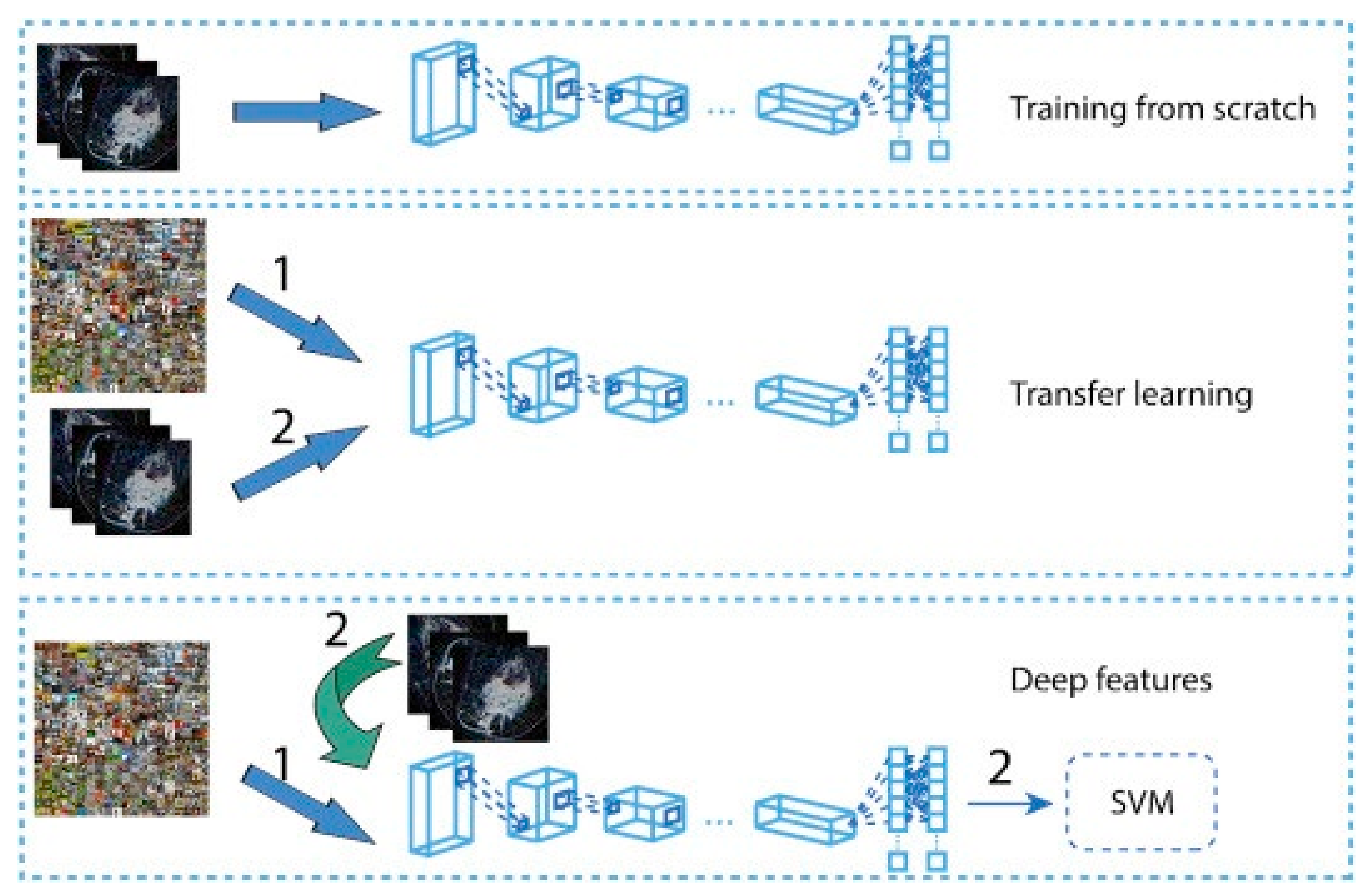

Currently available medical image datasets usually contain a small amount of data, which makes it challenging to exploit the capabilities of DL, since DL algorithms need to be trained on large datasets to perform well. Several possible solutions can help overcome the problems related to small datasets. For example, datasets from different medical centers can be combined into a bigger one, although patient privacy policies usually need to be addressed. Another solution is federated learning [297], in which the data stay at each center while the model travels between the centers and is trained locally on each center's dataset. Federated learning is not yet popular or widely implemented. Moreover, in most cases the training data cannot be publicly shared; therefore, there is no way to evaluate the DL methods and reproduce the results reported in these studies. Many studies used transfer learning to overcome the problem of small datasets. Some used a pretrained model to extract features from the medical images and then used the extracted features to train a model for the target task, while others initialized their model with pretrained weights and then fine-tuned it on the medical image dataset. Although transfer learning shows some improvement for small datasets, the performance of the target model depends strongly on how different the characteristics of the source and target datasets are. When they differ substantially, negative transfer [298] may occur, in which the source domain reduces learning performance in the target domain. Some studies used data augmentation rather than transfer learning to increase the size of the dataset artificially and improve model performance. However, augmented data do not introduce independent features to the model; therefore, augmentation provides much less new knowledge to a DL model than new, independent images do.
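
The aggregation step of federated learning can be sketched with federated averaging (FedAvg), one widely used scheme: each center trains locally and sends only its model parameters, and a server averages them weighted by local dataset size. FedAvg is a named technique chosen here for illustration; [297] may describe other variants, local training and communication are omitted, and parameters are flattened into plain lists of floats for simplicity.

```python
from typing import List, Sequence

def federated_average(
    client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]
) -> List[float]:
    """Weighted average of client parameter vectors, weighted by local dataset size."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one parameter vector per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]

# Three hypothetical hospitals with different amounts of local data.
hospital_models = [[0.2, -1.0], [0.4, -0.5], [0.0, 0.0]]
hospital_sizes = [100, 300, 100]
print(federated_average(hospital_models, hospital_sizes))  # approximately [0.28, -0.5]
```

Only these parameter vectors leave each hospital; the images themselves never do, which is what makes the approach attractive under patient privacy policies.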

The shortage of comprehensive, fully labeled/annotated datasets is another challenge that DL-based CAD systems face. Most DL methods are supervised algorithms that need fully labeled/annotated datasets. However, creating a large, fully annotated dataset is very challenging, since annotating medical images is time-consuming and prone to human error. To avoid the need for annotated datasets, some papers used unsupervised algorithms, but these obtained less accurate results than supervised algorithms.

Another important challenge is the generalizability of DL algorithms. Most of the proposed approaches are developed on datasets acquired with specific imaging characteristics and cannot be applied to datasets obtained from different populations, clinical settings, or imaging equipment and protocols. This is an obstacle to the wide use of AI methods for cancer detection in medical centers. Before any clinical use, each health clinic should design and conduct a testing protocol for DL-based CAD systems using data obtained from its local patient population. During the testing period, users should identify the strengths and weaknesses of the system from its outputs on different input cases: they should learn the characteristics of the cases in which the system fails or succeeds, and recognize when it makes mistakes and when it works well. This testing procedure not only evaluates DL-based CAD models but also teaches users how best to use DL-based CAD systems.
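
A local testing protocol of this kind can start from something as simple as stratifying errors by case characteristics. The sketch below computes sensitivity separately for each subgroup of cancer cases; the function name, the grouping by breast density, and the example cases are hypothetical, and a real protocol would also examine specificity, recall rate, and other attributes such as scanner vendor.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def sensitivity_by_group(cases: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """Per-group sensitivity from (group, true_label, predicted_label) triples, 1 = cancer."""
    detected: Dict[str, int] = defaultdict(int)
    cancers: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in cases:
        if y_true == 1:  # sensitivity only considers actual cancers
            cancers[group] += 1
            detected[group] += int(y_pred == 1)
    return {g: detected[g] / cancers[g] for g in cancers}

# Hypothetical local validation cases, grouped by breast density.
cases = [
    ("dense", 1, 1), ("dense", 1, 0), ("dense", 0, 0),
    ("fatty", 1, 1), ("fatty", 1, 1), ("fatty", 0, 1),
]
print(sensitivity_by_group(cases))  # {'dense': 0.5, 'fatty': 1.0}
```

A gap like the one in this toy output would tell local users to be more cautious with the system's negative calls in that subgroup.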

Another limitation is the interpretability of DL algorithms. Most DL algorithms behave like black boxes: feature selection happens implicitly during training, and no suitable explanation is provided for their decisions. Radiologists are usually reluctant to use such uninterpretable DL algorithms, because they need to understand the physical meaning of the decisions taken by the algorithms and which parts of the images are highly discriminative. Recently, explanation methods such as DeepSHAP [299] have been introduced to give more insight into the decision-making of DL algorithms in medical image analysis. Therefore, to increase physicians' confidence in, and the reliability of, decisions made by DL tools, interpretable approaches and proper explanations of DL algorithms are required for breast cancer analysis, which would help bring DL technology into wider use in clinical care.
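
DeepSHAP approximates SHAP values, which are Shapley values that distribute a model's prediction among its input features. For intuition, the sketch below computes Shapley values exactly by enumerating every feature subset for a tiny toy function, replacing "absent" features with a single baseline value. Using one baseline is a simplifying assumption (DeepSHAP averages over a background set), and brute-force enumeration is only feasible for a handful of features, which is why approximations such as DeepSHAP are needed for images. The function names and the toy model are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List, Sequence

def shapley_values(
    f: Callable[[List[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley value of every feature of x for model f.

    Features outside a coalition are set to their baseline value.
    Cost grows as 2**n, so this is only usable for very few features.
    """
    n = len(x)

    def value(coalition: FrozenSet[int]) -> float:
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for members in combinations(others, k):
                s = frozenset(members)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def toy_model(z: List[float]) -> float:
    # Hypothetical "model": a main effect of feature 0 plus an interaction of features 1 and 2.
    return 2.0 * z[0] + z[1] * z[2]

print(shapley_values(toy_model, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0]))
```

The attributions add up to f(x) minus f(baseline); here feature 0 receives about 2.0, and the interaction term z1·z2 is split equally, about 0.5 each, between features 1 and 2.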

DL algorithms show outstanding performance in analyzing imaging data. However, as discussed, they still face many challenges. Beyond DL on imaging data, some studies show that using omics data instead of imaging data may lead to higher classification accuracy [108,300]. Omics data contain fewer but more informative features than imaging data. Moreover, DL methods may extract features from images that are not relevant to the final label, and those features may decrease model performance. On the other hand, processing omics data is more expensive than image processing. Moreover, many more algorithms are available for image processing than for omics processing, and far more imaging data are available than omics data.

5. Conclusions

Detecting cancer at an early stage can improve the survival rate and reduce mortality. Rapid developments in deep learning-based medical image analysis, along with the availability of large datasets and computational resources, have made it possible to improve breast cancer detection, diagnosis, prognosis, and treatment. Owing to their capabilities, deep learning algorithms, particularly CNNs, have become very popular in the research community. In this review, we have provided a comprehensive overview of the most recent deep learning methods for different imaging modalities and applications (e.g., classification and segmentation). Despite their outstanding performance, deep learning methods still face many challenges that must be addressed before they can influence clinical practice. Beyond these challenges, ethical issues related to the explainability and interpretability of these systems need to be considered before deep learning can reach its full potential in clinical breast cancer imaging practice. Therefore, it is the responsibility of the research community to make deep learning algorithms fully explainable before considering these systems as decision-making candidates in clinical practice.

Author Contributions

Data curation, M.M. and M.M.B.; writing—original draft preparation, M.M. and M.M.B.; writing—review and editing, M.M., M.M.B. and S.N.; supervision, S.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhou, X.; Li, C.; Rahaman, M.M.; Yao, Y.; Ai, S.; Sun, C.; Wang, Q.; Zhang, Y.; Li, M.; Li, X.; et al. A comprehensive review for breast histopathology image analysis using classical and deep neural networks. IEEE Access 2020, 8, 90931–90956.

- Global Burden of 87 Risk Factors in 204 Countries and Territories, 1990–2019: A Systematic Analysis for the Global Burden of Disease Study 2019—ScienceDirect. Available online: https://www.sciencedirect.com/science/article/pii/S0140673620307522 (accessed on 21 July 2022).

- Anastasiadi, Z.; Lianos, G.D.; Ignatiadou, E.; Harissis, H.V.; Mitsis, M. Breast cancer in young women: An overview. Updat. Surg. 2017, 69, 313–317.

- Chiao, J.-Y.; Chen, K.-Y.; Liao, K.Y.-K.; Hsieh, P.-H.; Zhang, G.; Huang, T.-C. Detection and classification the breast tumors using mask R-CNN on sonograms. Medicine 2019, 98, e15200.

- Cruz-Roa, A. Accurate and reproducible invasive breast cancer detection in whole-slide images: A Deep Learning approach for quantifying tumour extent. Sci. Rep. 2017, 7, 46450.

- Richie, R.C.; Swanson, J.O. Breast cancer: A review of the literature. J. Insur. Med. 2003, 35, 85–101.

- Youlden, D.R.; Cramb, S.M.; Dunn, N.A.M.; Muller, J.M.; Pyke, C.M.; Baade, P.D. The descriptive epidemiology of female breast cancer: An international comparison of screening, incidence, survival and mortality. Cancer Epidemiol. 2012, 36, 237–248.

- Moghbel, M.; Ooi, C.Y.; Ismail, N.; Hau, Y.W.; Memari, N. A review of breast boundary and pectoral muscle segmentation methods in computer-aided detection/diagnosis of breast mammography. Artif. Intell. Rev. 2019, 53, 1873–1918.

- Moghbel, M.; Mashohor, S. A review of computer assisted detection/diagnosis (CAD) in breast thermography for breast cancer detection. Artif. Intell. Rev. 2013, 39, 305–313.

- Murtaza, G.; Shuib, L.; Wahab, A.W.A.; Mujtaba, G.; Nweke, H.F.; Al-Garadi, M.A.; Zulfiqar, F.; Raza, G.; Azmi, N.A. Deep learning-based breast cancer classification through medical imaging modalities: State of the art and research challenges. Artif. Intell. Rev. 2019, 53, 1655–1720.

- Domingues, I.; Pereira, G.; Martins, P.; Duarte, H.; Santos, J.; Abreu, P.H. Using deep learning techniques in medical imaging: A systematic review of applications on CT and PET. Artif. Intell. Rev. 2019, 53, 4093–4160.

- Kozegar, E.; Soryani, M.; Behnam, H.; Salamati, M.; Tan, T. Computer aided detection in automated 3-D breast ultrasound images: A survey. Artif. Intell. Rev. 2019, 53, 1919–1941.

- Saha, M.; Chakraborty, C.; Racoceanu, D. Efficient deep learning model for mitosis detection using breast histopathology images. Comput. Med. Imaging Graph. 2018, 64, 29–40.

- Suh, Y.J.; Jung, J.; Cho, B.-J. Automated Breast Cancer Detection in Digital Mammograms of Various Densities via Deep Learning. J. Pers. Med. 2020, 10, 211.

- Cheng, H.D.; Shi, X.J.; Min, R.; Hu, L.M.; Cai, X.P.; Du, H.N. Approaches for automated detection and classification of masses in mammograms. Pattern Recognit. 2006, 39, 646–668.

- Van Ourti, T.; O’Donnell, O.; Koç, H.; Fracheboud, J.; de Koning, H.J. Effect of screening mammography on breast cancer mortality: Quasi-experimental evidence from rollout of the Dutch population-based program with 17-year follow-up of a cohort. Int. J. Cancer 2019, 146, 2201–2208.

- Sutanto, D.H.; Ghani, M.K.A. A Benchmark of Classification Framework for Non-Communicable Disease Prediction: A Review. ARPN J. Eng. Appl. Sci. 2015, 10, 15.

- Van Luijt, P.A.; Heijnsdijk, E.A.M.; Fracheboud, J.; Overbeek, L.I.H.; Broeders, M.J.M.; Wesseling, J.; Heeten, G.J.D.; de Koning, H.J. The distribution of ductal carcinoma in situ (DCIS) grade in 4232 women and its impact on overdiagnosis in breast cancer screening. Breast Cancer Res. 2016, 18, 47.

- Baines, C.J.; Miller, A.B.; Wall, C.; McFarlane, D.V.; Simor, I.S.; Jong, R.; Shapiro, B.J.; Audet, L.; Petitclerc, M.; Ouimet-Oliva, D. Sensitivity and specificity of first screen mammography in the Canadian National Breast Screening Study: A preliminary report from five centers. Radiology 1986, 160, 295–298.

- Houssami, N.; Macaskill, P.; Bernardi, D.; Caumo, F.; Pellegrini, M.; Brunelli, S.; Tuttobene, P.; Bricolo, P.; Fantò, C.; Valentini, M. Breast screening using 2D-mammography or integrating digital breast tomosynthesis (3D-mammography) for single-reading or double-reading–evidence to guide future screening strategies. Eur. J. Cancer 2014, 50, 1799–1807.

- Houssami, N.; Hunter, K. The epidemiology, radiology and biological characteristics of interval breast cancers in population mammography screening. NPJ Breast Cancer 2017, 3, 12.

- Massafra, R.; Comes, M.C.; Bove, S.; Didonna, V.; Diotaiuti, S.; Giotta, F.; Latorre, A.; La Forgia, D.; Nardone, A.; Pomarico, D.; et al. A machine learning ensemble approach for 5-and 10-year breast cancer invasive disease event classification. PLoS ONE 2022, 17, e0274691.

- Chan, H.P.; Samala, R.K.; Hadjiiski, L.M. CAD and AI for breast cancer—Recent development and challenges. Br. J. Radiol. 2019, 93, 20190580.

- Jannesari, M.; Habibzadeh, M.; Aboulkheyr, H.; Khosravi, P.; Elemento, O.; Totonchi, M.; Hajirasouliha, I. Breast Cancer Histopathological Image Classification: A Deep Learning Approach. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2405–2412.

- Rodriguez-Ruiz, A.; Lång, K.; Gubern-Merida, A.; Broeders, M.; Gennaro, G.; Clauser, P.; Helbich, T.H.; Chevalier, M.; Tan, T.; Mertelmeier, T.; et al. Stand-alone artificial intelligence for breast cancer detection in mammography: Comparison with 101 radiologists. JNCI J. Natl. Cancer Inst. 2019, 111, 916–922.

- McKinney, S.M.; Sieniek, M.; Godbole, V.; Godwin, J.; Antropova, N.; Ashrafian, H.; Back, T.; Chesus, M.; Corrado, G.S.; Darzi, A.; et al. International evaluation of an AI system for breast cancer screening. Nature 2020, 577, 89–94.

- Obermeyer, Z.; Emanuel, E.J. Predicting the Future—Big Data, Machine Learning, and Clinical Medicine. N. Engl. J. Med. 2016, 375, 1216–1219.

- Geirhos, R.; Jacobsen, J.H.; Michaelis, C.; Zemel, R.; Brendel, W.; Bethge, M.; Wichmann, F.A. Shortcut learning in deep neural networks. Nat. Mach. Intell. 2020, 2, 665–673.

- Freeman, K.; Geppert, J.; Stinton, C.; Todkill, D.; Johnson, S.; Clarke, A.; Taylor-Phillips, S. Use of artificial intelligence for image analysis in breast cancer screening programmes: Systematic review of test accuracy. BMJ 2021, 374.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Burt, J.R.; Torosdagli, N.; Khosravan, N.; RaviPrakash, H.; Mortazi, A.; Tissavirasingham, F.; Hussein, S.; Bagci, U. Deep learning beyond cats and dogs: Recent advances in diagnosing breast cancer with deep neural networks. Br. J. Radiol. 2018, 91, 20170545.

- Sharma, S.; Mehra, R. Conventional Machine Learning and Deep Learning Approach for Multi-Classification of Breast Cancer Histopathology Images—A Comparative Insight. J. Digit. Imaging 2020, 33, 632–654.

- Hadadi, I.; Rae, W.; Clarke, J.; McEntee, M.; Ekpo, E. Diagnostic performance of adjunctive imaging modalities compared to mammography alone in women with non-dense and dense breasts: A systematic review and meta-analysis. Clin. Breast Cancer 2021, 21, 278–291.

- Yassin, N.I.R.; Omran, S.; el Houby, E.M.F.; Allam, H. Machine learning techniques for breast cancer computer aided diagnosis using different image modalities: A systematic review. Comput. Methods Programs Biomed. 2018, 156, 25–45.

- Saslow, D.; Boetes, C.; Burke, W.; Harms, S.; Leach, M.O.; Lehman, C.D.; Morris, E.; Pisano, E.; Schnall, M.; Sener, S.; et al. American Cancer Society guidelines for breast screening with MRI as an adjunct to mammography. CA A Cancer J. Clin. 2007, 57, 75–89.

- Park, J.; Chae, E.Y.; Cha, J.H.; Shin, H.J.; Choi, W.J.; Choi, Y.W.; Kim, H.H. Comparison of mammography, digital breast tomosynthesis, automated breast ultrasound, magnetic resonance imaging in evaluation of residual tumor after neoadjuvant chemotherapy. Eur. J. Radiol. 2018, 108, 261–268.

- Huang, S.; Houssami, N.; Brennan, M.; Nickel, B. The impact of mandatory mammographic breast density notification on supplemental screening practice in the United States: A systematic review. Breast Cancer Res. Treat. 2021, 187, 11–30.

- Cho, N.; Han, W.; Han, B.K.; Bae, M.S.; Ko, E.S.; Nam, S.J.; Chae, E.Y.; Lee, J.W.; Kim, S.H.; Kang, B.J.; et al. Breast cancer screening with mammography plus ultrasonography or magnetic resonance imaging in women 50 years or younger at diagnosis and treated with breast conservation therapy. JAMA Oncol. 2017, 3, 1495–1502.

- Arevalo, J.; Gonzalez, F.A.; Ramos-Pollan, R.; Oliveira, J.L.; Lopez, M.A.G. Convolutional neural networks for mammography mass lesion classification. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 797–800.

- Duraisamy, S.; Emperumal, S. Computer-aided mammogram diagnosis system using deep learning convolutional fully complex-valued relaxation neural network classifier. IET Comput. Vis. 2017, 11, 656–662.

- Khan, M.H.-M. Automated breast cancer diagnosis using artificial neural network (ANN). In Proceedings of the 2017 3rd Iranian Conference on Intelligent Systems and Signal Processing (ICSPIS), Shahrood, Iran, 20–21 December 2017; pp. 54–58.

- Hadad, O.; Bakalo, R.; Ben-Ari, R.; Hashoul, S.; Amit, G. Classification of breast lesions using cross-modal deep learning. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, VIC, Australia, 18–21 April 2017; pp. 109–112.

- Kim, D.H.; Kim, S.T.; Ro, Y.M. Latent feature representation with 3-D multi-view deep convolutional neural network for bilateral analysis in digital breast tomosynthesis. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 927–931.

- Comstock, C.E.; Gatsonis, C.; Newstead, G.M.; Snyder, B.S.; Gareen, I.F.; Bergin, J.T.; Rahbar, H.; Sung, J.S.; Jacobs, C.; Harvey, J.A.; et al. Comparison of Abbreviated Breast MRI vs Digital Breast Tomosynthesis for Breast Cancer Detection Among Women with Dense Breasts Undergoing Screening. JAMA 2020, 323, 746–756.

- Debelee, T.G.; Schwenker, F.; Ibenthal, A.; Yohannes, D. Survey of deep learning in breast cancer image analysis. Evol. Syst. 2019, 11, 143–163.

- Screening for Breast Cancer. Available online: https://www.clinicalkey.com/#!/content/book/3-s2.0-B9780323640596001237 (accessed on 27 July 2022).

- Chen, T.H.H.; Yen, A.M.F.; Fann, J.C.Y.; Gordon, P.; Chen, S.L.S.; Chiu, S.Y.H.; Hsu, C.Y.; Chang, K.J.; Lee, W.C.; Yeoh, K.G.; et al. Clarifying the debate on population-based screening for breast cancer with mammography: A systematic review of randomized controlled trials on mammography with Bayesian meta-analysis and causal model. Medicine 2017, 96, e5684.

- Vieira, R.A.d.; Biller, G.; Uemura, G.; Ruiz, C.A.; Curado, M.P. Breast cancer screening in developing countries. Clinics 2017, 72, 244–253.

- Abdelrahman, L.; al Ghamdi, M.; Collado-Mesa, F.; Abdel-Mottaleb, M. Convolutional neural networks for breast cancer detection in mammography: A survey. Comput. Biol. Med. 2021, 131, 104248.

- Hooley, R.J.; Durand, M.A.; Philpotts, L.E. Advances in Digital Breast Tomosynthesis. Am. J. Roentgenol. 2017, 208, 256–266.

- Gur, D.; Abrams, G.S.; Chough, D.M.; Ganott, M.A.; Hakim, C.M.; Perrin, R.L.; Rathfon, G.Y.; Sumkin, J.H.; Zuley, M.L.; Bandos, A.I. Digital breast tomosynthesis: Observer performance study. Am. J. Roentgenol. 2009, 193, 586–591.

- Østerås, B.H.; Martinsen, A.C.T.; Gullien, R.; Skaane, P. Digital Mammography versus Breast Tomosynthesis: Impact of Breast Density on Diagnostic Performance in Population-based Screening. Radiology 2019, 293, 60–68.

- Zhang, J.; Ghate, S.V.; Grimm, L.J.; Saha, A.; Cain, E.H.; Zhu, Z.; Mazurowski, M.A. Convolutional encoder-decoder for breast mass segmentation in digital breast tomosynthesis. In Medical Imaging 2018: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2018; Volume 10575, pp. 639–644.

- Poplack, S.P.; Tosteson, T.D.; Kogel, C.A.; Nagy, H.M. Digital breast tomosynthesis: Initial experience in 98 women with abnormal digital screening mammography. AJR Am. J. Roentgenol. 2007, 189, 616–623.

- Mun, H.S.; Kim, H.H.; Shin, H.J.; Cha, J.H.; Ruppel, P.L.; Oh, H.Y.; Chae, E.Y. Assessment of extent of breast cancer: Comparison between digital breast tomosynthesis and full-field digital mammography. Clin. Radiol. 2013, 68, 1254–1259.

- Lourenco, A.P.; Barry-Brooks, M.; Baird, G.L.; Tuttle, A.; Mainiero, M.B. Changes in recall type and patient treatment following implementation of screening digital breast tomosynthesis. Radiology 2015, 274, 337–342.

- Heywang-Köbrunner, S.H.; Jänsch, A.; Hacker, A.; Weinand, S.; Vogelmann, T. Digital breast tomosynthesis (DBT) plus synthesised two-dimensional mammography (s2D) in breast cancer screening is associated with higher cancer detection and lower recalls compared to digital mammography (DM) alone: Results of a systematic review and meta-analysis. Eur. Radiol. 2021, 32, 2301–2312.

- Alabousi, M.; Wadera, A.; Kashif Al-Ghita, M.; Kashef Al-Ghetaa, R.; Salameh, J.P.; Pozdnyakov, A.; Zha, N.; Samoilov, L.; Dehmoobad Sharifabadi, A.; Sadeghirad, B. Performance of digital breast tomosynthesis, synthetic mammography, and digital mammography in breast cancer screening: A systematic review and meta-analysis. JNCI J. Natl. Cancer Inst. 2020, 113, 680–690.

- Durand, M.A.; Friedewald, S.M.; Plecha, D.M.; Copit, D.S.; Barke, L.D.; Rose, S.L.; Hayes, M.K.; Greer, L.N.; Dabbous, F.M.; Conant, E.F. False-negative rates of breast cancer screening with and without digital breast tomosynthesis. Radiology 2021, 298, 296–305.

- Alsheik, N.; Blount, L.; Qiong, Q.; Talley, M.; Pohlman, S.; Troeger, K.; Abbey, G.; Mango, V.L.; Pollack, E.; Chong, A.; et al. Outcomes by race in breast cancer screening with digital breast tomosynthesis versus digital mammography. J. Am. Coll. Radiol. 2021, 18, 906–918.

- Boisselier, A.; Mandoul, C.; Monsonis, B.; Delebecq, J.; Millet, I.; Pages, E.; Taourel, P. Reader performances in breast lesion characterization via DBT: One or two views and which view? Eur. J. Radiol. 2021, 142, 109880.

- Fiorica, J.V. Breast Cancer Screening, Mammography, and Other Modalities. Clin. Obstet. Gynecol. 2016, 59, 688–709.

- Jesneck, J.L.; Lo, J.Y.; Baker, J.A. Breast Mass Lesions: Computer-aided Diagnosis Models with Mammographic and Sonographic Descriptors. Radiology 2007, 244, 390–398.

- Cheng, H.D.; Shan, J.; Ju, W.; Guo, Y.; Zhang, L. Automated breast cancer detection and classification using ultrasound images: A survey. Pattern Recognit. 2010, 43, 299–317.

- Maxim, L.D.; Niebo, R.; Utell, M.J. Screening tests: A review with examples. Inhal. Toxicol. 2014, 26, 811–828. [Google Scholar] [CrossRef]