Feasibility of Synthetic Computed Tomography Images Generated from Magnetic Resonance Imaging Scans Using Various Deep Learning Methods in the Planning of Radiation Therapy for Prostate Cancer

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Patients

2.2. CT and MRI Acquisition

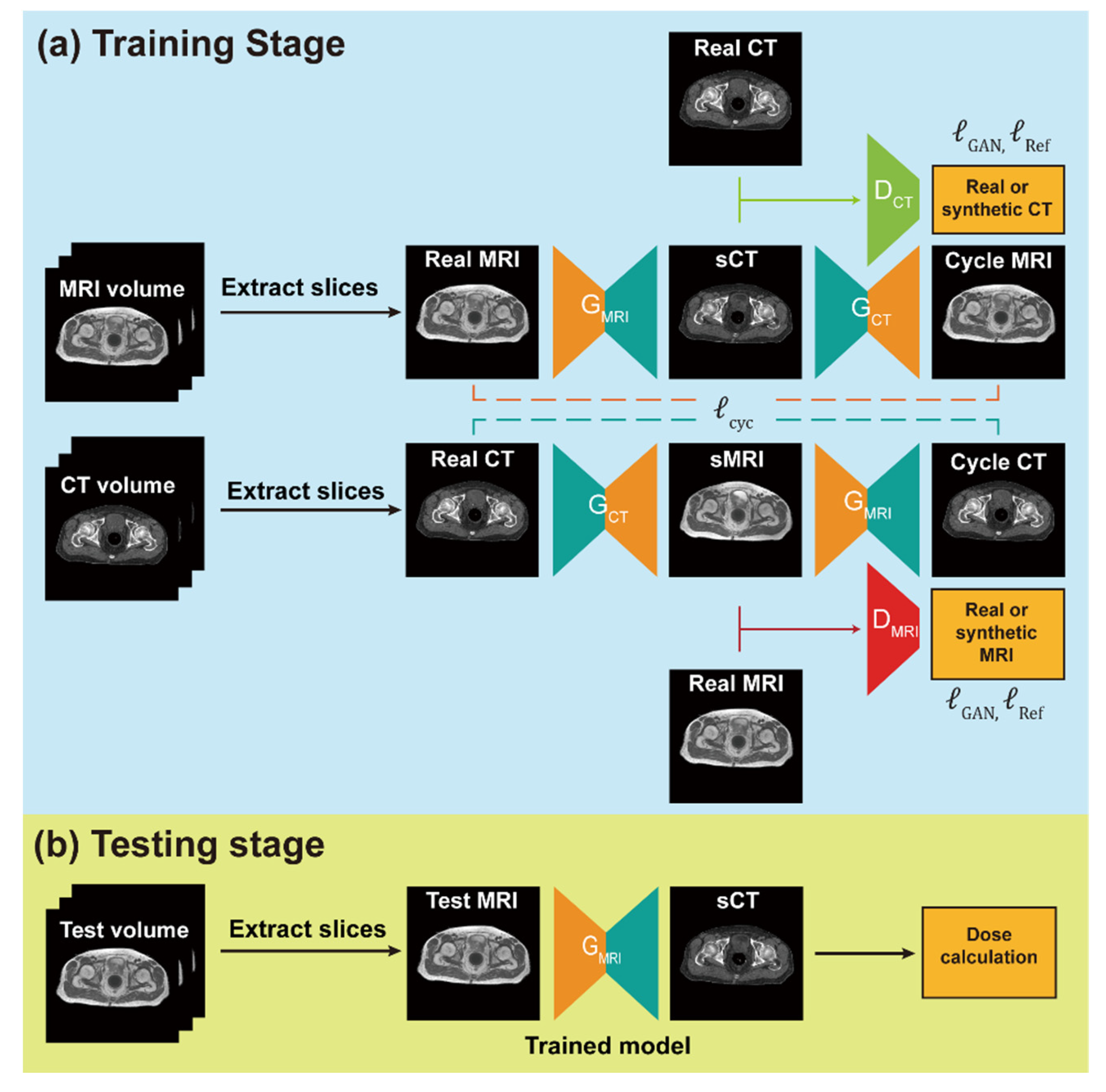

2.3. Network Training and sCT Generation

2.4. Data Pre-Processing

2.5. Network Training and sCT Generation

2.6. Assessment of sCT Quality

2.7. Statistical Analysis

3. Results

3.1. Patients’ Characteristics

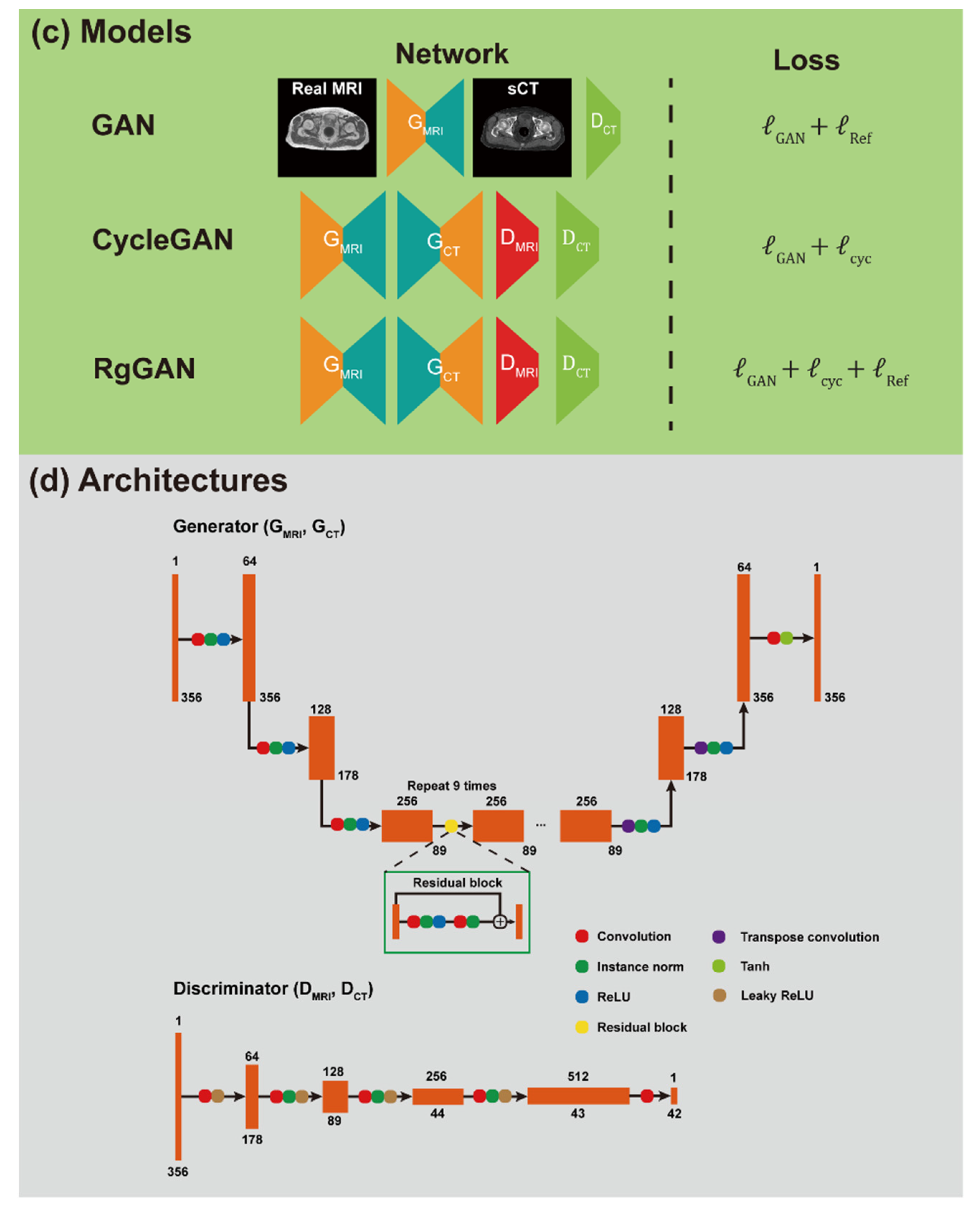

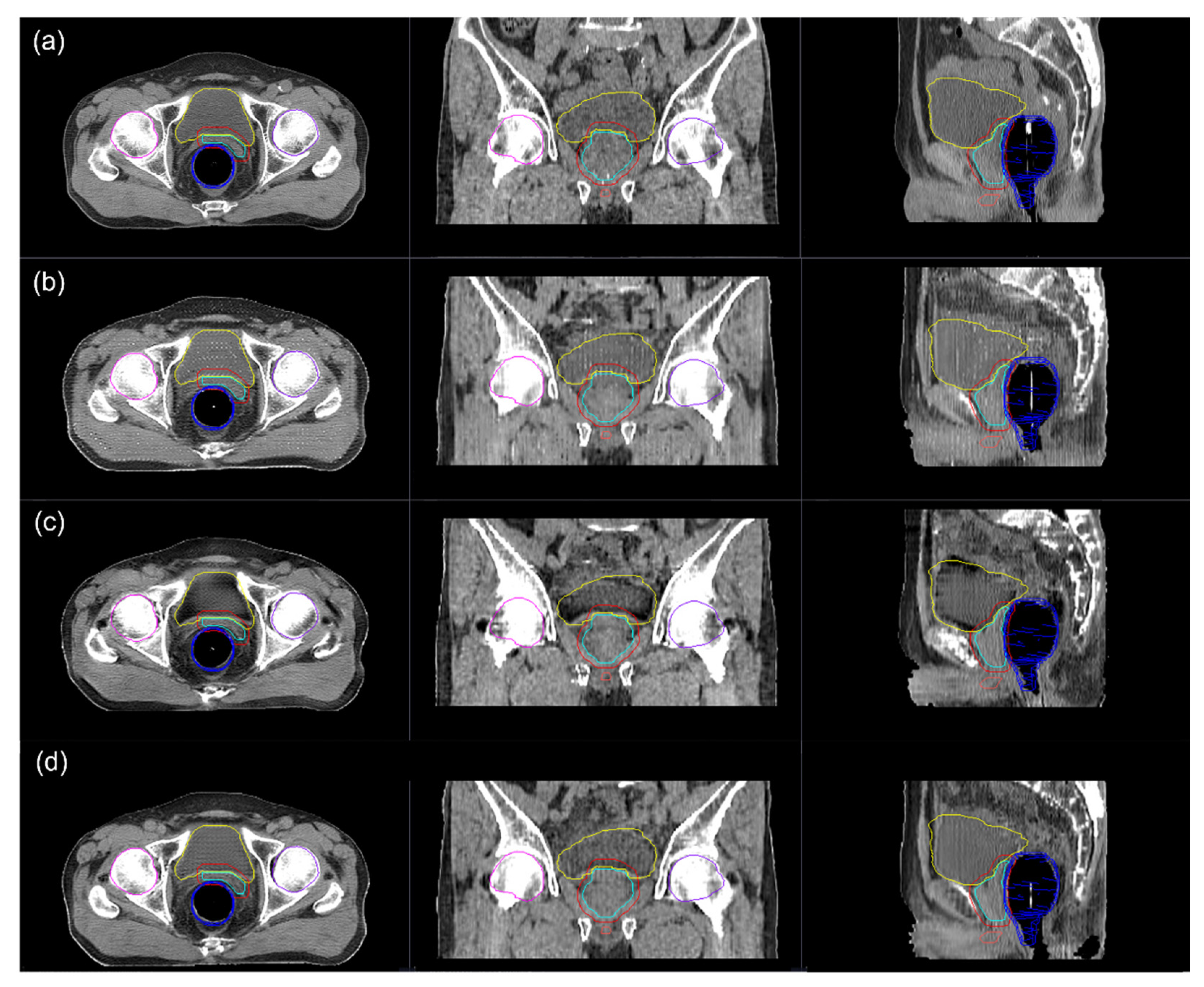

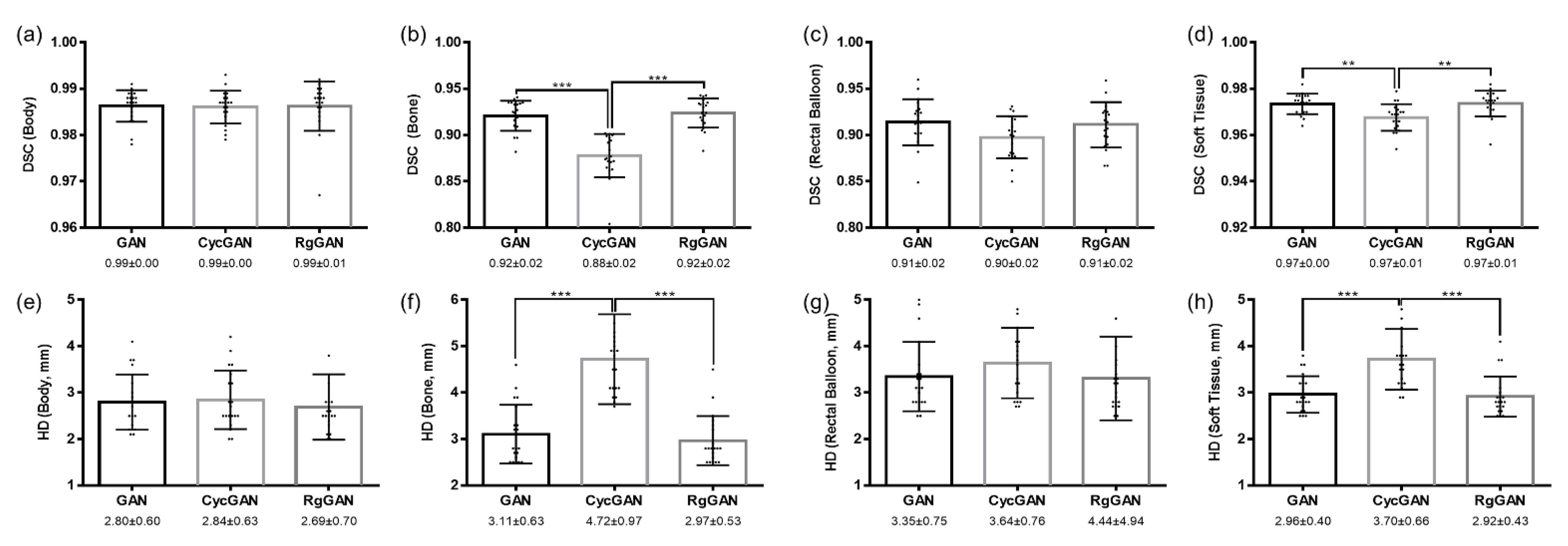

3.2. Image Quality

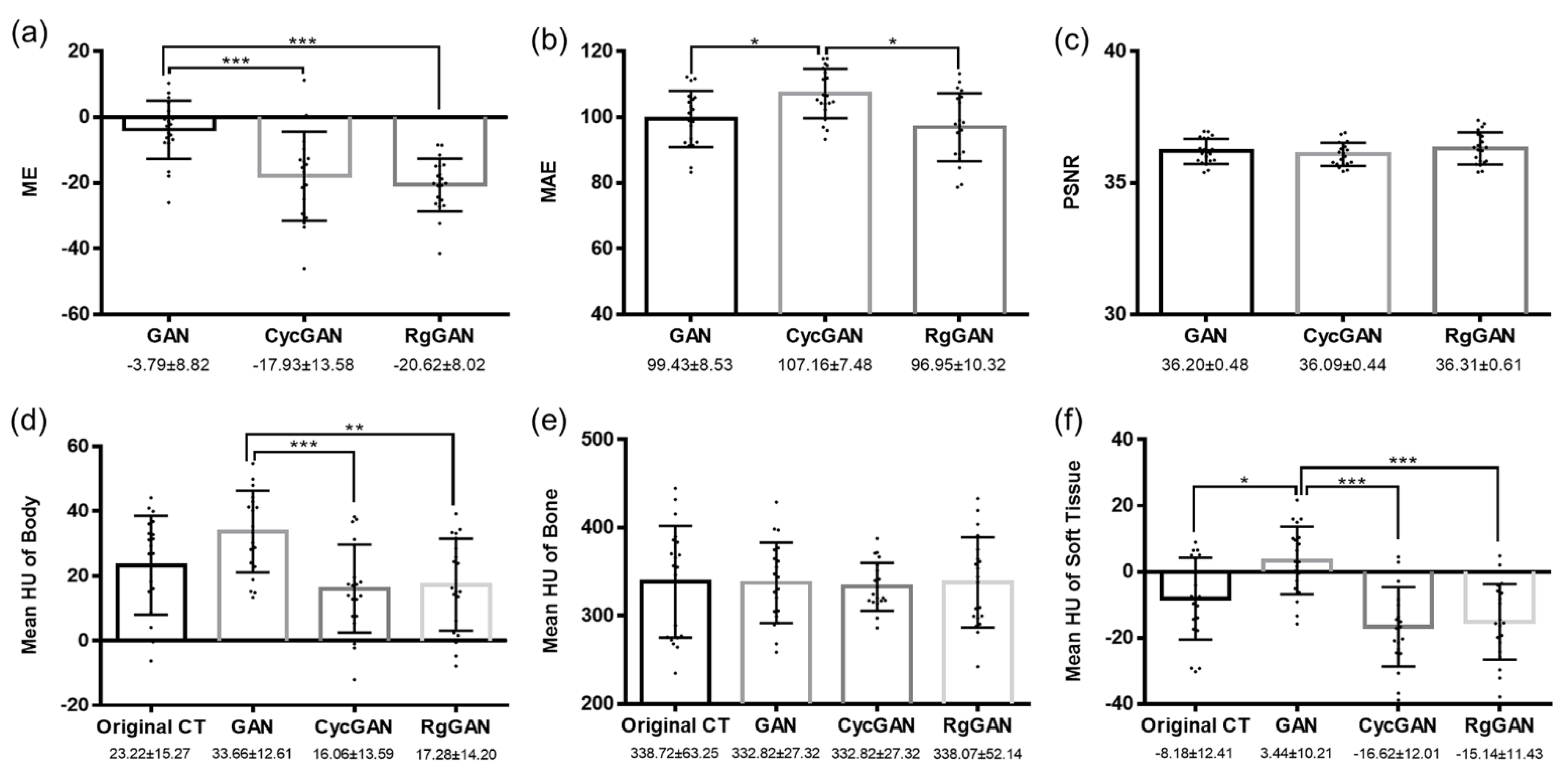

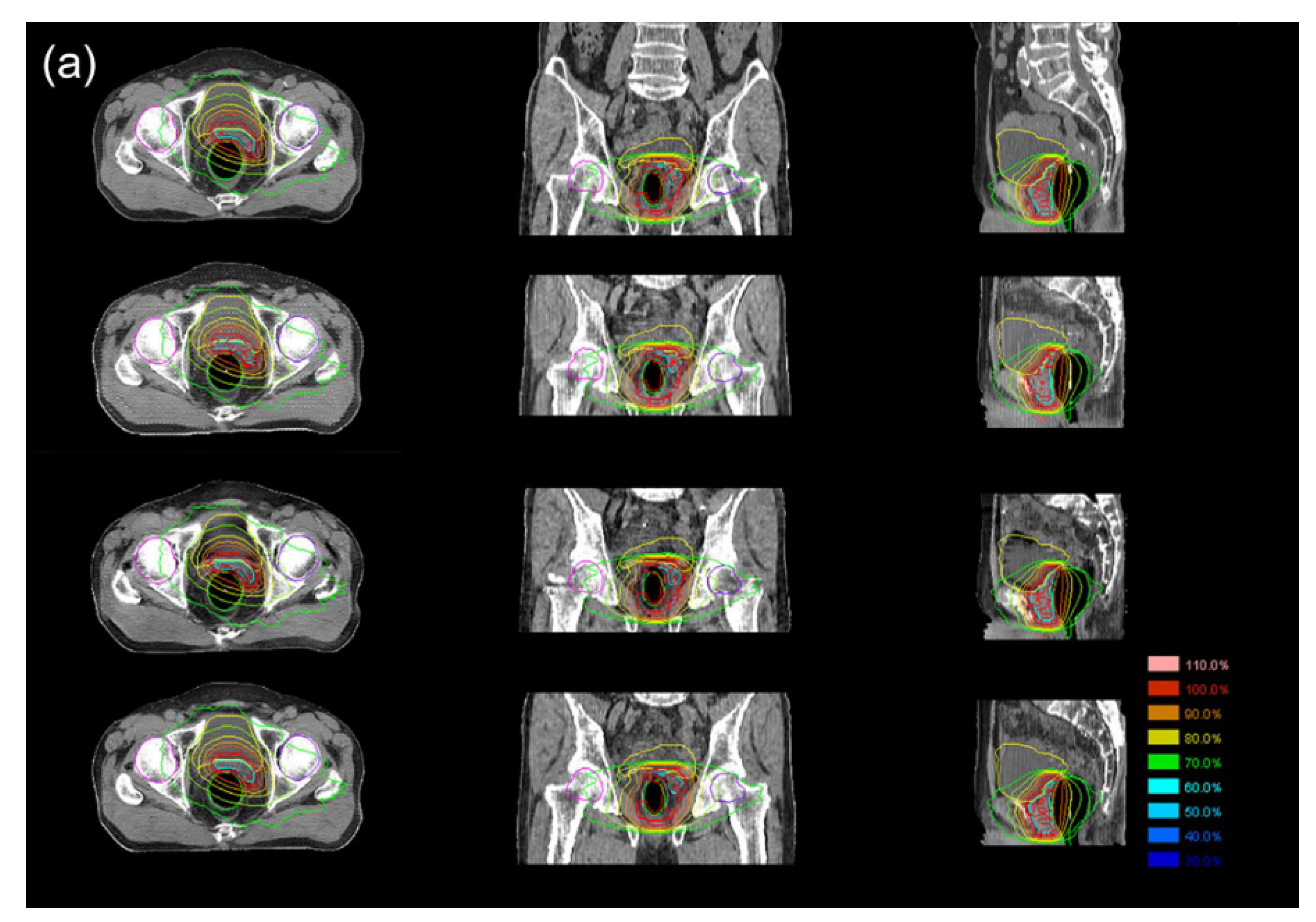

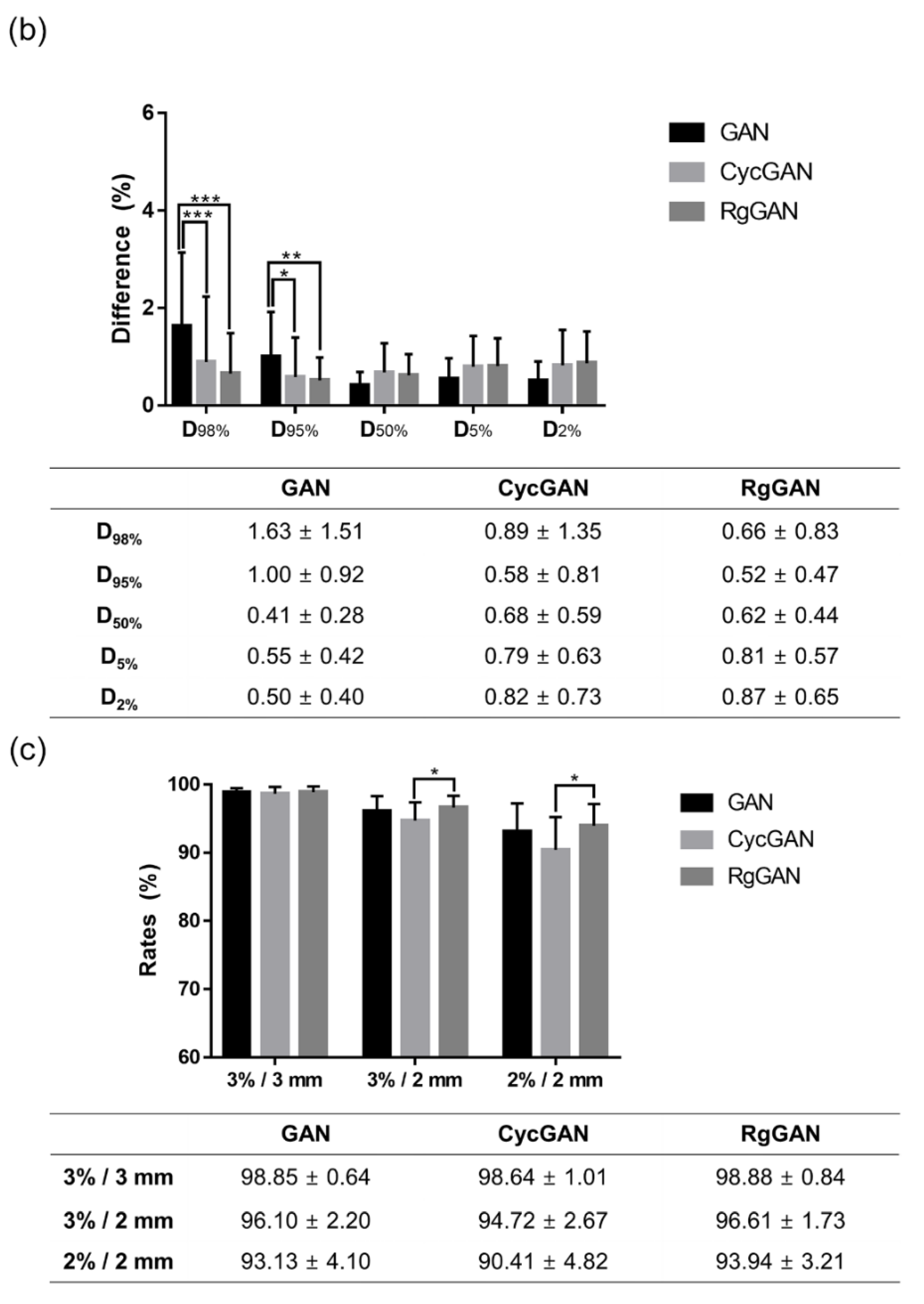

3.3. Dosimetric Comparison

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Characteristics | Training/Validation Group (n = 93), Number (%) | Test Group (n = 20), Number (%) | p Value |
|---|---|---|---|
| Median age, years (range) | 72 (58–82) | 69 (58–88) | 0.908 |
| T stage | | | 0.476 |
| 1–2 | 32 (34.4) | 9 (45.0) | |
| 3–4 | 61 (65.6) | 11 (55.0) | |
| N stage | | | 0.778 |
| 0 | 68 (73.1) | 16 (80.0) | |
| 1 | 25 (26.9) | 4 (20.0) | |
| M stage | | | 1.000 |
| 0 | 85 (91.4) | 19 (95.0) | |
| 1 | 8 (8.6) | 1 (5.0) | |
| Prostatectomy | | | 0.443 |
| Yes | 9 (9.7) | 3 (15.0) | |
| No | 84 (90.3) | 17 (85.0) | |
| Radiotherapy modality | | | 0.379 |
| X-ray | 86 (92.5) | 17 (85.0) | |
| Proton | 7 (7.5) | 3 (15.0) | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoo, G.S.; Luu, H.M.; Kim, H.; Park, W.; Pyo, H.; Han, Y.; Park, J.Y.; Park, S.-H. Feasibility of Synthetic Computed Tomography Images Generated from Magnetic Resonance Imaging Scans Using Various Deep Learning Methods in the Planning of Radiation Therapy for Prostate Cancer. Cancers 2022, 14, 40. https://doi.org/10.3390/cancers14010040
