The Challenge of Choosing the Best Classification Method in Radiomic Analyses: Recommendations and Applications to Lung Cancer CT Images

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

Non-Small-Cell Lung Cancer (NSCLC) Patients’ Original Dataset

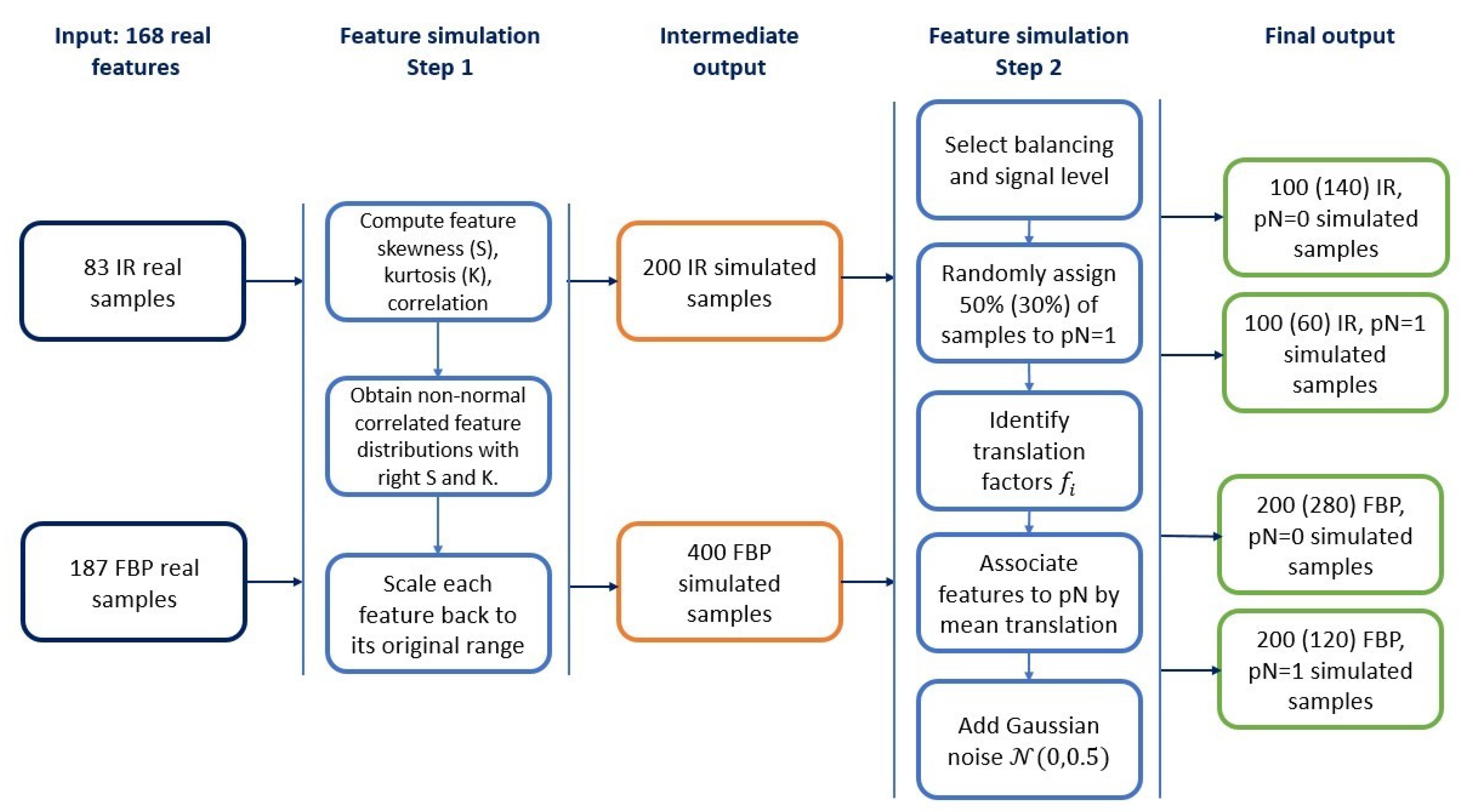

2.2. Simulated Datasets

2.2.1. Radiomic Features Simulation

2.2.2. Construction of Simulated Datasets

2.3. Feature Selection and Classifier Methods

2.4. Classification of Simulated and Real Datasets

2.4.1. Classification Framework

2.4.2. Application to Simulated and NSCLC Data

3. Results

3.1. Data Simulation Step

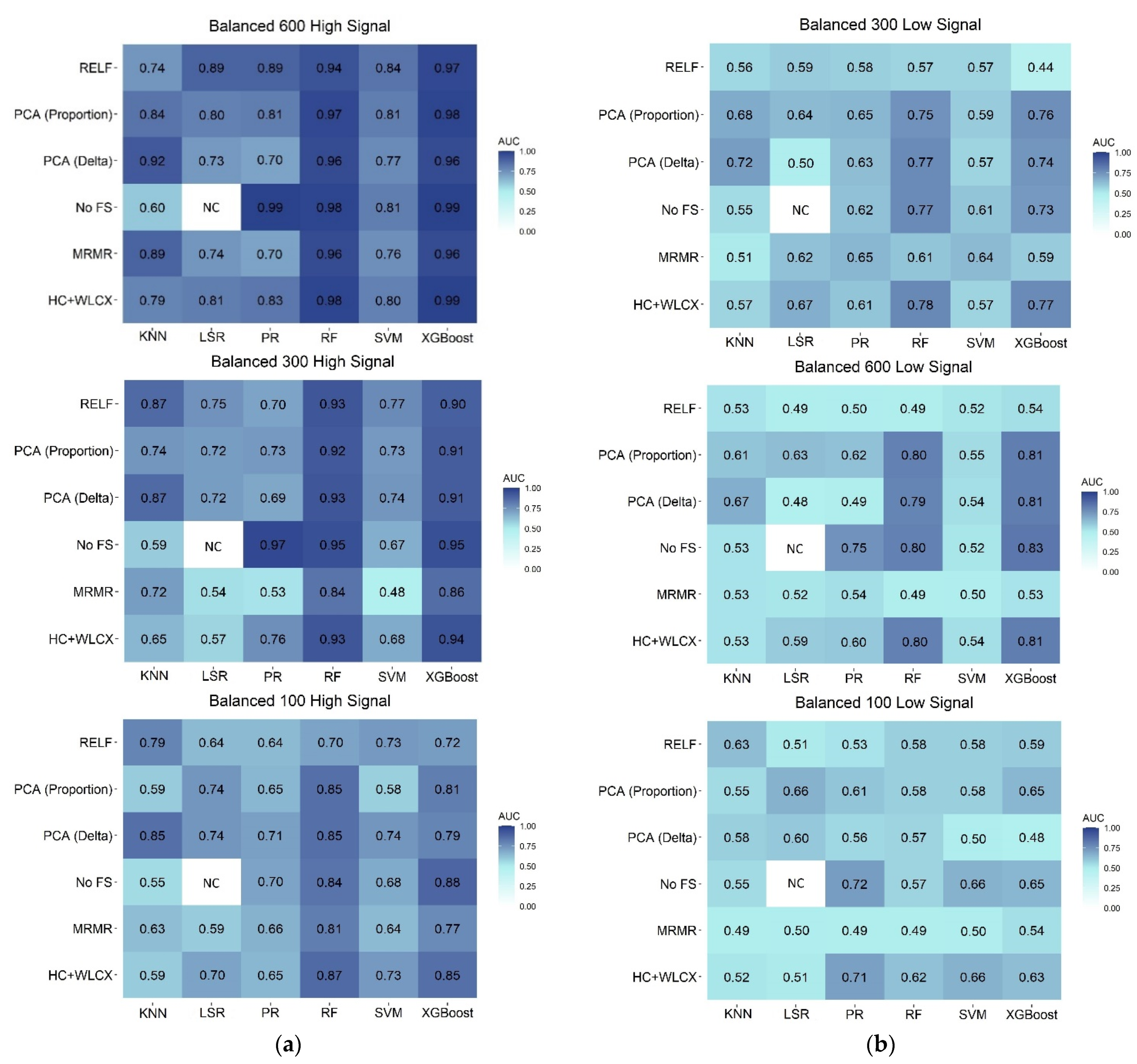

3.2. Classification Performances on Balanced Samples

3.3. Classification Performances on Unbalanced Samples

3.4. Overall Comparison of Feature Selection and Classification Methods

3.5. Application to NSCLC Real Data

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sample Size | Balancing | FBP pN0 | FBP pN1 | IR pN0 | IR pN1 | Total pN0 (test + validation set) | Total pN1 (test + validation set) | Total, all pN (test + validation set) |
|---|---|---|---|---|---|---|---|---|
| Large | Balanced (50% pN = 0, 50% pN = 1) | 200 | 200 | 100 | 100 | 300 | 300 | 600 |
| Medium | Balanced (50% pN = 0, 50% pN = 1) | 100 | 100 | 50 | 50 | 150 | 150 | 300 |
| Small | Balanced (50% pN = 0, 50% pN = 1) | 34 | 33 | 16 | 17 | 50 | 50 | 100 |
| Large | Unbalanced (70% pN = 0, 30% pN = 1) | 280 | 120 | 140 | 60 | 420 | 180 | 600 |
| Medium | Unbalanced (70% pN = 0, 30% pN = 1) | 140 | 60 | 70 | 30 | 210 | 90 | 300 |
| Small | Unbalanced (70% pN = 0, 30% pN = 1) | 47 | 20 | 23 | 10 | 70 | 30 | 100 |
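
The arithmetic behind the sample-size table can be checked with a short script. The following is a minimal sketch in Python, not the authors' code: the dictionary layout and the names `DESIGN` and `check_design` are assumptions made for illustration, and the counts are copied from the table. It verifies that the FBP and IR counts add up to the totals and reports the resulting pN = 1 fraction for each scenario.

```python
# Cell counts copied from the sample-size table.
# Key: (sample size, balancing)
# Value: (FBP pN0, FBP pN1, IR pN0, IR pN1, total pN0, total pN1, total)
DESIGN = {
    ("Large", "balanced"): (200, 200, 100, 100, 300, 300, 600),
    ("Medium", "balanced"): (100, 100, 50, 50, 150, 150, 300),
    ("Small", "balanced"): (34, 33, 16, 17, 50, 50, 100),
    ("Large", "unbalanced"): (280, 120, 140, 60, 420, 180, 600),
    ("Medium", "unbalanced"): (140, 60, 70, 30, 210, 90, 300),
    ("Small", "unbalanced"): (47, 20, 23, 10, 70, 30, 100),
}


def check_design(design):
    """Verify the row sums and return the pN = 1 fraction per scenario."""
    fractions = {}
    for key, (fbp0, fbp1, ir0, ir1, tot0, tot1, total) in design.items():
        # FBP and IR counts must add up to the per-class totals.
        assert fbp0 + ir0 == tot0, key
        assert fbp1 + ir1 == tot1, key
        # Per-class totals must add up to the overall sample size.
        assert tot0 + tot1 == total, key
        fractions[key] = tot1 / total
    return fractions


PN1_FRACTION = check_design(DESIGN)
for key, fraction in PN1_FRACTION.items():
    print(key, f"pN1 fraction = {fraction:.2f}")
```

Every row passes the consistency checks, and the pN = 1 fractions match the 50% and 30% stated in the table headers.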

| FS Acronym | FS Method | Classifier Acronym | Classifier |
|---|---|---|---|
| No FS | No feature selection step | PR | Penalized Regression |
| HC + WLCX | Hierarchical clustering + Wilcoxon | RF | Random Forest |
| PCA (Delta) + WLCX | Principal Component Analysis clustering with Delta plot stop criterion + Wilcoxon | XGBoost | Extreme Gradient Boosting |
| PCA (Proportion) + WLCX | Principal Component Analysis clustering with proportion stop criterion + Wilcoxon | LSR | Logistic Step-wise Regression |
| MRMR | Minimum Redundancy Maximum Relevance | KNN | K-Nearest Neighbor |
| RELF | Relief | SVM | Support Vector Machine |
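
Pairing every feature selection method with every classifier gives the grid of pipelines that a comparison of this kind has to cover. The sketch below enumerates that grid in Python. It is an illustration only: the function `pipelines` and its `skip` parameter are hypothetical names, and the acronyms are copied from the table above.

```python
from itertools import product

# Acronyms copied from the methods table.
FS_METHODS = [
    "No FS",
    "HC + WLCX",
    "PCA (Delta) + WLCX",
    "PCA (Proportion) + WLCX",
    "MRMR",
    "RELF",
]
CLASSIFIERS = ["PR", "RF", "XGBoost", "LSR", "KNN", "SVM"]


def pipelines(fs_methods, classifiers, skip=()):
    """Return every (feature selection, classifier) pair not listed in skip."""
    return [
        (fs, clf)
        for fs, clf in product(fs_methods, classifiers)
        if (fs, clf) not in skip
    ]


ALL_PIPELINES = pipelines(FS_METHODS, CLASSIFIERS)
# The real-data results table has no row for LSR without feature selection.
REPORTED_PIPELINES = pipelines(FS_METHODS, CLASSIFIERS, skip={("No FS", "LSR")})

print(len(ALL_PIPELINES), len(REPORTED_PIPELINES))
```

The full grid has 36 combinations; excluding the No FS and LSR pair leaves the 35 combinations reported for the NSCLC data.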

| Method | AUC Mean | AUC SD | Sensitivity Mean | Sensitivity SD | Specificity Mean | Specificity SD |
|---|---|---|---|---|---|---|
| **Classification methods** | | | | | | |
| KNN | 0.63 | 0.12 | 0.43 | 0.21 | 0.65 | 0.21 |
| LSR | 0.64 | 0.11 | 0.45 | 0.22 | 0.74 | 0.14 |
| PR | 0.67 | 0.12 | 0.45 | 0.24 | 0.78 | 0.15 |
| RF | 0.78 | 0.16 | 0.58 | 0.24 | 0.82 | 0.15 |
| SVM | 0.63 | 0.12 | 0.44 | 0.29 | 0.68 | 0.30 |
| XGBoost | 0.79 | 0.16 | 0.62 | 0.23 | 0.81 | 0.13 |
| **Feature selection methods** | | | | | | |
| No FS | 0.72 | 0.12 | 0.56 | 0.24 | 0.76 | 0.15 |
| HC + WLCX | 0.71 | 0.12 | 0.54 | 0.21 | 0.74 | 0.21 |
| MRMR | 0.63 | 0.11 | 0.41 | 0.22 | 0.73 | 0.14 |
| PCA (Delta) + WLCX | 0.72 | 0.16 | 0.51 | 0.24 | 0.76 | 0.15 |
| PCA (Proportion) + WLCX | 0.71 | 0.12 | 0.52 | 0.29 | 0.76 | 0.30 |
| RELF | 0.67 | 0.16 | 0.44 | 0.23 | 0.74 | 0.13 |
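
One way to read the classifier rows of this table is to rank the methods by mean AUC and by a single summary of sensitivity and specificity. The Python sketch below uses Youden's J (sensitivity + specificity - 1) for the second ranking. J is not reported in the source and is used here only as an illustrative summary; because J is linear, applying it to the mean sensitivity and specificity gives the mean J. The names `CLASSIFIER_MEANS`, `youden_j` and `rank_by` are assumptions.

```python
# (AUC mean, sensitivity mean, specificity mean) copied from the table.
CLASSIFIER_MEANS = {
    "KNN": (0.63, 0.43, 0.65),
    "LSR": (0.64, 0.45, 0.74),
    "PR": (0.67, 0.45, 0.78),
    "RF": (0.78, 0.58, 0.82),
    "SVM": (0.63, 0.44, 0.68),
    "XGBoost": (0.79, 0.62, 0.81),
}


def youden_j(sensitivity, specificity):
    """Youden's J statistic: 0 for a chance classifier, 1 for a perfect one."""
    return sensitivity + specificity - 1.0


def rank_by(means, score):
    """Return method names sorted from highest to lowest score."""
    return sorted(means, key=lambda name: score(means[name]), reverse=True)


BY_AUC = rank_by(CLASSIFIER_MEANS, lambda m: m[0])
BY_J = rank_by(CLASSIFIER_MEANS, lambda m: youden_j(m[1], m[2]))

print("Ranked by mean AUC:", BY_AUC)
print("Ranked by Youden's J:", BY_J)
```

Both rankings place XGBoost first and RF second, in line with the mean AUC values of 0.79 and 0.78 in the table.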

| Feature Selection | Classification | AUC (CI 95%) | Sensitivity | Specificity |
|---|---|---|---|---|
| No FS | PR | 0.60 (0.46, 0.73) | 0.50 | 0.61 |
| No FS | RF | 0.62 (0.49, 0.75) | 0.50 | 0.63 |
| No FS | XGBoost | 0.64 (0.51, 0.76) | 0.50 | 0.70 |
| No FS | KNN | 0.57 (0.45, 0.67) | 0.54 | 0.59 |
| No FS | SVM | 0.52 (0.38, 0.65) | 0.50 | 0.57 |
| HC + WLCX | PR | 0.63 (0.50, 0.76) | 0.46 | 0.63 |
| HC + WLCX | RF | 0.67 (0.54, 0.79) | 0.50 | 0.70 |
| HC + WLCX | XGBoost | 0.64 (0.51, 0.76) | 0.54 | 0.65 |
| HC + WLCX | LSR | 0.57 (0.43, 0.71) | 0.50 | 0.61 |
| HC + WLCX | KNN | 0.55 (0.44, 0.67) | 0.37 | 0.73 |
| HC + WLCX | SVM | 0.55 (0.42, 0.68) | 0.62 | 0.59 |
| PCA (Delta) + WLCX | PR | 0.60 (0.47, 0.72) | 0.58 | 0.63 |
| PCA (Delta) + WLCX | RF | 0.65 (0.52, 0.78) | 0.67 | 0.61 |
| PCA (Delta) + WLCX | XGBoost | 0.69 (0.57, 0.80) | 0.67 | 0.62 |
| PCA (Delta) + WLCX | LSR | 0.59 (0.46, 0.72) | 0.50 | 0.64 |
| PCA (Delta) + WLCX | KNN | 0.58 (0.45, 0.70) | 0.54 | 0.59 |
| PCA (Delta) + WLCX | SVM | 0.58 (0.45, 0.70) | 0.62 | 0.57 |
| PCA (Proportion) + WLCX | PR | 0.57 (0.44, 0.71) | 0.42 | 0.65 |
| PCA (Proportion) + WLCX | RF | 0.64 (0.50, 0.77) | 0.54 | 0.65 |
| PCA (Proportion) + WLCX | XGBoost | 0.69 (0.55, 0.81) | 0.54 | 0.65 |
| PCA (Proportion) + WLCX | LSR | 0.58 (0.43, 0.72) | 0.50 | 0.68 |
| PCA (Proportion) + WLCX | KNN | 0.58 (0.46, 0.69) | 0.45 | 0.70 |
| PCA (Proportion) + WLCX | SVM | 0.60 (0.48, 0.72) | 0.66 | 0.59 |
| MRMR | PR | 0.55 (0.42, 0.68) | 0.42 | 0.63 |
| MRMR | RF | 0.64 (0.50, 0.77) | 0.46 | 0.72 |
| MRMR | XGBoost | 0.66 (0.52, 0.78) | 0.54 | 0.65 |
| MRMR | LSR | 0.54 (0.40, 0.67) | 0.42 | 0.68 |
| MRMR | KNN | 0.50 (0.39, 0.61) | 0.33 | 0.67 |
| MRMR | SVM | 0.61 (0.48, 0.73) | 0.62 | 0.63 |
| RELF | PR | 0.70 (0.57, 0.83) | 0.21 | 0.94 |
| RELF | RF | 0.60 (0.47, 0.73) | 0.50 | 0.69 |
| RELF | XGBoost | 0.67 (0.53, 0.79) | 0.46 | 0.79 |
| RELF | LSR | 0.69 (0.55, 0.82) | 0.62 | 0.69 |
| RELF | KNN | 0.45 (0.35, 0.56) | 0.29 | 0.61 |
| RELF | SVM | 0.75 (0.63, 0.86) | 0.54 | 0.87 |
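
A quick screen of the real-data results is to ask which pipelines have a 95% confidence interval for the AUC that lies entirely above 0.5, the value expected from chance. The Python sketch below applies that screen to the values in the table. It is an illustrative reading aid rather than an analysis from the source, and the names `ROWS`, `ABOVE_CHANCE` and `BEST` are assumptions.

```python
# (feature selection, classifier, AUC, CI lower, CI upper) copied from the table.
ROWS = [
    ("No FS", "PR", 0.60, 0.46, 0.73), ("No FS", "RF", 0.62, 0.49, 0.75),
    ("No FS", "XGBoost", 0.64, 0.51, 0.76), ("No FS", "KNN", 0.57, 0.45, 0.67),
    ("No FS", "SVM", 0.52, 0.38, 0.65),
    ("HC + WLCX", "PR", 0.63, 0.50, 0.76), ("HC + WLCX", "RF", 0.67, 0.54, 0.79),
    ("HC + WLCX", "XGBoost", 0.64, 0.51, 0.76), ("HC + WLCX", "LSR", 0.57, 0.43, 0.71),
    ("HC + WLCX", "KNN", 0.55, 0.44, 0.67), ("HC + WLCX", "SVM", 0.55, 0.42, 0.68),
    ("PCA (Delta) + WLCX", "PR", 0.60, 0.47, 0.72),
    ("PCA (Delta) + WLCX", "RF", 0.65, 0.52, 0.78),
    ("PCA (Delta) + WLCX", "XGBoost", 0.69, 0.57, 0.80),
    ("PCA (Delta) + WLCX", "LSR", 0.59, 0.46, 0.72),
    ("PCA (Delta) + WLCX", "KNN", 0.58, 0.45, 0.70),
    ("PCA (Delta) + WLCX", "SVM", 0.58, 0.45, 0.70),
    ("PCA (Proportion) + WLCX", "PR", 0.57, 0.44, 0.71),
    ("PCA (Proportion) + WLCX", "RF", 0.64, 0.50, 0.77),
    ("PCA (Proportion) + WLCX", "XGBoost", 0.69, 0.55, 0.81),
    ("PCA (Proportion) + WLCX", "LSR", 0.58, 0.43, 0.72),
    ("PCA (Proportion) + WLCX", "KNN", 0.58, 0.46, 0.69),
    ("PCA (Proportion) + WLCX", "SVM", 0.60, 0.48, 0.72),
    ("MRMR", "PR", 0.55, 0.42, 0.68), ("MRMR", "RF", 0.64, 0.50, 0.77),
    ("MRMR", "XGBoost", 0.66, 0.52, 0.78), ("MRMR", "LSR", 0.54, 0.40, 0.67),
    ("MRMR", "KNN", 0.50, 0.39, 0.61), ("MRMR", "SVM", 0.61, 0.48, 0.73),
    ("RELF", "PR", 0.70, 0.57, 0.83), ("RELF", "RF", 0.60, 0.47, 0.73),
    ("RELF", "XGBoost", 0.67, 0.53, 0.79), ("RELF", "LSR", 0.69, 0.55, 0.82),
    ("RELF", "KNN", 0.45, 0.35, 0.56), ("RELF", "SVM", 0.75, 0.63, 0.86),
]

CHANCE_AUC = 0.5

# Pipelines whose lower confidence bound is strictly above chance level.
ABOVE_CHANCE = [(fs, clf) for fs, clf, auc, lower, upper in ROWS if lower > CHANCE_AUC]

# Pipeline with the highest point estimate of the AUC.
BEST = max(ROWS, key=lambda row: row[2])

print(f"{len(ABOVE_CHANCE)} of {len(ROWS)} pipelines have a CI above {CHANCE_AUC}")
print("Highest AUC:", BEST[0], "+", BEST[1], "=", BEST[2])
```

Under this strict criterion, intervals whose lower bound is reported as exactly 0.50 are not counted as above chance; since the bounds are rounded to two decimals, those cases are borderline.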

| Sample Size | Balancing | High Association | Low Association |
|---|---|---|---|
| Large (600) | Balanced | PR | RF * |
| Large (600) | Unbalanced | PR | RF * |
| Medium (300) | Balanced | PR | RF * |
| Medium (300) | Unbalanced | PR | RF * |
| Small (100) | Balanced | RF * | PR |
| Small (100) | Unbalanced | XGBoost | XGBoost |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Corso, F.; Tini, G.; Lo Presti, G.; Garau, N.; De Angelis, S.P.; Bellerba, F.; Rinaldi, L.; Botta, F.; Rizzo, S.; Origgi, D.; et al. The Challenge of Choosing the Best Classification Method in Radiomic Analyses: Recommendations and Applications to Lung Cancer CT Images. Cancers 2021, 13, 3088. https://doi.org/10.3390/cancers13123088


Corso, F., Tini, G., Lo Presti, G., Garau, N., De Angelis, S. P., Bellerba, F., Rinaldi, L., Botta, F., Rizzo, S., Origgi, D., Paganelli, C., Cremonesi, M., Rampinelli, C., Bellomi, M., Mazzarella, L., Pelicci, P. G., Gandini, S., & Raimondi, S. (2021). The Challenge of Choosing the Best Classification Method in Radiomic Analyses: Recommendations and Applications to Lung Cancer CT Images. Cancers, 13(12), 3088. https://doi.org/10.3390/cancers13123088