1. Introduction

Reliability data plotting is more of an art than a science. The goal of plotting raw data in a particular format is to match the science or theoretical physics underlying the observed phenomenon. It is important to understand that an assumed physics or degradation mechanism determines the plotting axes. The axes are determined based on the extrapolation of the expected degradation [1]. That is to say, the chosen axes prejudice the extrapolation based on an assumed theory. Often the theory will require that the extrapolation be according to a power law rather than a log-of-time X-axis. Nonetheless, the tendency is to use the log of time for the X-axis and to transform the Y-axis data to be the log of a change in parameter versus the log of time. This way, the slope will indicate what the power law is for the specific degradation mechanism being observed. For example, NBTI data is often plotted as the log of change in threshold voltage versus the log of time [2,3].

For many years, the International Reliability Physics Symposium, as well as other forums, has had scientists presenting degradation data and proving or disproving degradation mechanisms based on a developed theory fit to experimental data. Consistently, due to the basic assumption of thermodynamics and an Arrhenius theory of defect creation and propagation, the log-of-time axis is chosen, regardless of the theory that is applied. This is basically justified as it is consistent with Weibull plotting for failure probability, and it is consistent with most physics-based accelerated life-test principles. The assumption is that an expected time to fail will be accelerated on a logarithmic time axis with linearly increasing stress conditions, consistent with the Arrhenius law.

Joe McPherson, in his now classic book, “Reliability Physics and Engineering: Time-To-Failure Modeling,” has canonized today’s approach for plotting degradation based on a power-law assumption as a more generalized scheme compared to plotting on a semi-log graph [1]. The log of time is the X-axis; however, rather than a linear ordinate, which would work for the special case of a log-time dependence on stress, he suggested using a log-of-S(t) Y-axis. In principle, this assumption works out well for most failure data, as McPherson points out in his second chapter, since the data will generally not fit properly to a linear versus log-of-time plot. Aside from this book, I could not find another microelectronics reference describing a different approach to extrapolate a time to fail from degradation data. This seems to be the definitive approach used ubiquitously in the literature, and there is no controversy or alternative.

The assumption for a degradation profile, according to McPherson, is that the parameter, S(t), evolves over time at a given stress level as

$$S(t) = S_0\left[1 \pm c\,t^{1/m}\right] \tag{1}$$

So, to find the parameters $S_0$, c, and m, we need to transform the variables such that we can make a log–log plot of the change in S(t) versus time. This is a very straightforward and common-sense way to find the divergence of the indication parameter, S(t), from some initially determined $S_0$.

For the most part, this is the assumption behind most reliability data plots for electronic devices, including silicon NBTI [2,3], HCI [4], SiC [5] devices, as well as GaN [6] transistor degradation. However, if we look at the required Y-axis, which is to be plotted on a log–log scale, we notice an interesting phenomenon that perhaps needs to be analyzed from a fresh perspective. According to McPherson, we plot X and Y such that

$$X = \ln(t) \tag{2}$$

and

$$Y = \ln\left(\frac{\left|S(t) - S_0\right|}{S_0}\right) \tag{3}$$

At first glance, this looks perfectly fine, and it is the way our industry has been plotting its data for decades. Assuming that the line is straight, c and m can be calculated directly from the fit. This allows extrapolation in time to an assumed failure criterion, $S(t) = S_F$, where $S_F$ is the failure criterion. In general, when plotting ring oscillator frequency degradation, for example, NBTI data, this criterion may be 10% degradation (i.e., $S_F = 1.1\,S_0$) [7], or it could be 20% degradation ($1.2\,S_0$), as is typical for discrete power devices [8]. The calculation of the Y-axis value, and therefore the extracted values of m and c, depends critically on an exact value for $S_0$, with nearly zero margin of error. Because $S_0$ is both in the numerator and denominator of (3), the sensitivity to any variation in this parameter is extremely high.

2. Problem with Initial State Measurement

The assumption from Equation (1) is that the initial parameter remains mostly unchanged for the initial portion of the test and only slowly deviates over time. However, when changes in this parameter are plotted on a log scale, which has no ‘zero’ value, the data becomes very awkward at short times, where $\Delta S/S_0 \approx 0$. As a result, the plots can only begin when the data consistently shows $S(t) > S_0$ (or $S(t) < S_0$ when measuring a decreasing parameter). Hence, we are limited to including data whose difference is above the noise margin of the measurements. As it turns out, the parameters are so sensitive to an exact measurement of $S_0$ that any small noise margin will tremendously affect the calculations for both c and m. The extracted m will generally be much higher than the actual value, leading to orders-of-magnitude errors in the time to fail from an error of only fractions of a percent in $S_0$.
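To see where this sensitivity comes from, the following short derivation is offered as a sketch. It assumes that Equation (1) has the increasing power-law form $S(t) = S_0\,(1 + c\,t^{1/m})$ and that the value used for normalization is $P\,S_0$ rather than $S_0$, where the factor $P$ is slightly below 1.

$$
\begin{aligned}
\frac{S(t) - P\,S_0}{P\,S_0} &= \frac{(1 - P) + c\,t^{1/m}}{P}, \\
\frac{d}{d\ln t}\,\ln\!\left[\frac{S(t) - P\,S_0}{P\,S_0}\right] &= \frac{1}{m}\cdot\frac{c\,t^{1/m}}{(1 - P) + c\,t^{1/m}}.
\end{aligned}
$$

For $P = 1$ the log–log slope is exactly $1/m$. For $P < 1$ the slope is smaller wherever $c\,t^{1/m}$ is comparable to $1 - P$, which is the early part of the test, so under these assumptions a straight-line fit returns an apparent $m$ that is too large.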

This sensitivity can be best illustrated by example.

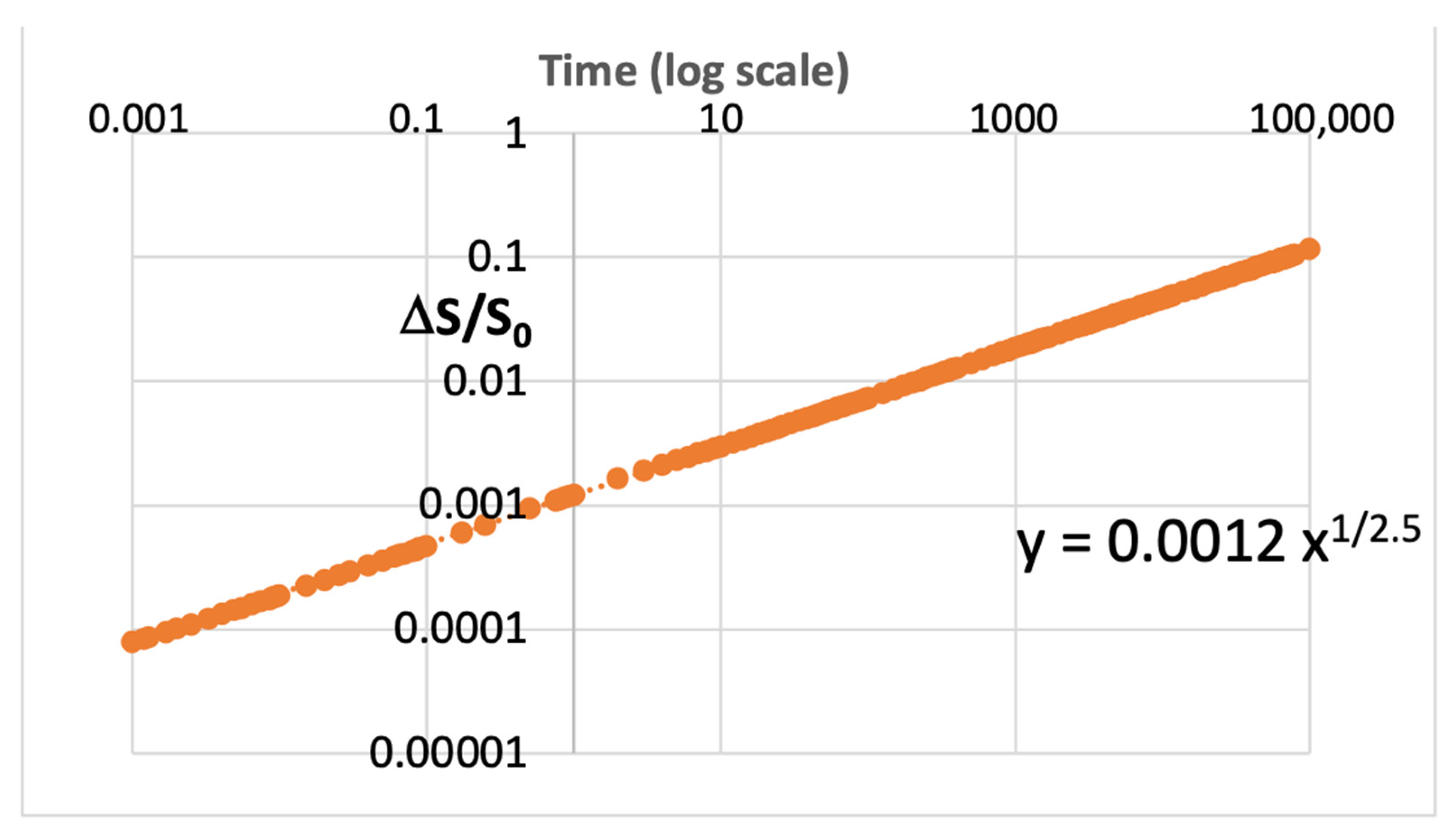

Figure 1 shows synthesized data generated with a parameter, S(t), that has a power-law increase over time, consistent with the theory of Equation (1). The data mimics typical degradation characteristics observed in microelectronics. The fitted relation for Y is shown with 1/m = 0.40, thus m = 2.5.

Figure 2 shows the same data as Figure 1, but with the value of $S_0$ multiplied by a factor P, which is varied from 97.5% to 99.5% of the actual value. Again, this synthesized data illustrates the sensitivity to very small variations in $S_0$. These plots show the least squares calculations for m based on a linear fit to the log–log plots, using Equation (3) for the Y value. We see that m ≈ 8 in the root law for the time axis where the error in $S_0$ was only 2.5% (P = 97.5%).

The plot of Figure 2 shows the dramatic effect that a very small deviation in the initial calculated parameter, $S_0$, has on the resulting calculation for ‘m’, which ranges from 2.5 to 8. On a log scale, the averaged value used in the plotting of $\Delta S/S_0$ will extrapolate to some assumption for $S_0$ but will never be exact, since there is always noise associated with making that measurement. This alone can explain why the power-law exponent is often reported as 1/4 or even as small as 1/8, depending on the uncertainty of that first point used for the calculation. Very often, there will be physics principles or theories as to exactly what value should be expected for this exponent. However, our example shows, without any theoretical motivation, that the power law of time can take on nearly any value when it is found empirically from such a plot. Hence, the data plotting methodology is a critically important part of evaluating the time to fail or device lifetime under applied stress conditions.
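The sensitivity described above can be reproduced numerically. The following is a minimal sketch, assuming a noise-free power law of the form S(t) = S0(1 + c·t^(1/m)) with illustrative values (S0 = 1, c = 0.01, m = 2.5, four decades of time) that are not taken from the figures; the helper name `apparent_m` is hypothetical. It misstates S0 by a factor P and refits the log–log slope.

```python
import numpy as np

def apparent_m(t, s, s0_assumed):
    """Fit ln(dS/S0) versus ln(t) and return the apparent m = 1/slope."""
    ds = (s - s0_assumed) / s0_assumed
    keep = ds > 0                      # a log axis cannot show non-positive values
    slope, _ = np.polyfit(np.log(t[keep]), np.log(ds[keep]), 1)
    return 1.0 / slope

# Illustrative (assumed) synthetic data, not the data behind Figures 1 and 2
S0, C, M_TRUE = 1.0, 0.01, 2.5
t = np.logspace(0, 4, 50)
s = S0 * (1.0 + C * t ** (1.0 / M_TRUE))

# Refit with the initial value misstated as P * S0
results = {p: apparent_m(t, s, p * S0) for p in (1.0, 0.995, 0.99, 0.975)}
for p, m in results.items():
    print(f"P = {p:.3f}  ->  apparent m = {m:.2f}")
```

With P = 1 the fit recovers m = 2.5, and the apparent m rises as P decreases. The exact values depend on c and on the time window chosen, so they should not be expected to match Figure 2.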

3. Properly Plotting Power-Law Data

We need to know, with greater certainty, the correct power law when extrapolating time to fail from degradation data. It is imperative to use an accurate plotting scheme to present the data such that there is no sensitivity error in finding the initial point, $S_0$. There needs to be an accurate fit across most of the data so that a proper time-to-fail extrapolation can be achieved. Again, this applies only when degradation theory dictates a power-law process causing the change over time. McPherson suggests that this would generally be the case. The proper transformation to make for Equation (1) would, thus, be to let

$$X = t^{1/m} \tag{4}$$

and

$$Y = S(t) \tag{5}$$

This way, when a least squares fit is made for Y versus X, we have a simple linear fit. Hence, with a properly fit ‘m’, a perfectly linear extrapolation can be made to any given failure criterion. However, there is one difficulty that must be resolved: that is to find the correct ‘m.’ This is accomplished by determining that the data forming the curve is actually straight, without any curvature. By inspection of

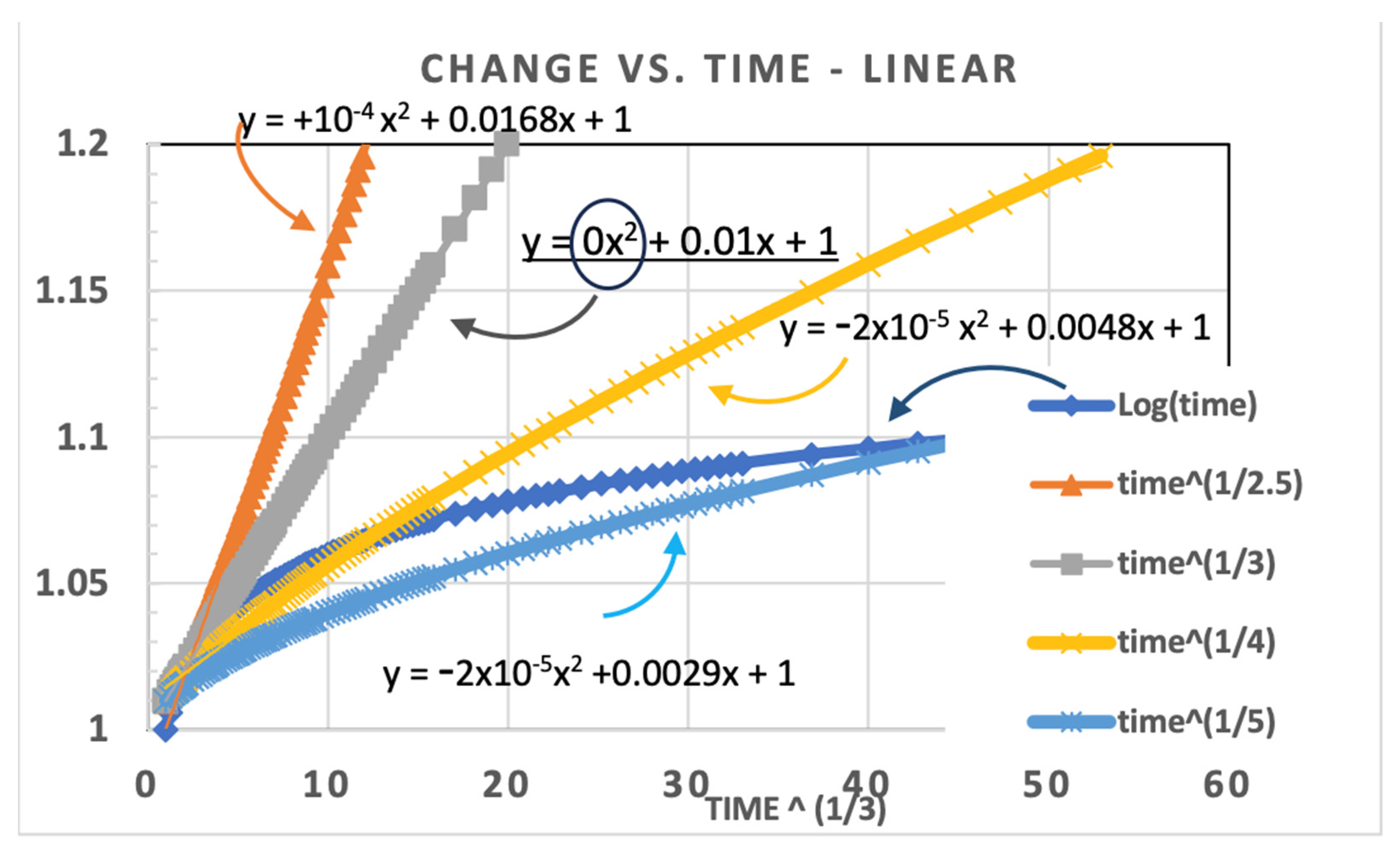

Figure 2, we see that a curvature will result in an improper extrapolation. This makes sense since a curve represents a deviation from the assumed axes transformation, which can lead to a very large deviation if the curve were to continue. Therefore, the proper extrapolation must be perfectly straight along transformed axes. The curve shown in

Figure 3 uses a different dataset than

Figure 1 and

Figure 2. Here, the power exponent was chosen as m = 3, not 2.5. This allows us to see the result of overestimating or underestimating the power-law using the same values for ‘m.’

For the trendline (in Excel), we used a second-order polynomial fit to compare different assumptions for which value of m correctly reflects the data trend. Figure 3 illustrates how we can compare different assumptions for ‘m’ and see the effect on the $x^2$ term. Figure 3 shows that the simulated data is exactly $Y = 1 + 0.01\,t^{1/3}$, plotted on a linear Y versus X, where $X = t^{1/3}$. We see in this plot that the $x^2$ term is zero only for m = 3, while the $x^2$ coefficient is negative for m > 3 (i.e., m = 4 or 5) and positive ($+10^{-4}$) for m = 2.5 (i.e., m < 3). This way we know that the extrapolation of degradation versus time continues straight along the uncurved line with zero deviation. The figure also compares to the log-time function (in blue), which saturates and is never straight under a power-law transformation.
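The sign behavior of the quadratic term can be checked with a few lines of code. This is a minimal sketch that assumes, as the description of Figure 3 suggests, that curves of the form Y = 1 + 0.01·t^(1/m) for several values of m are all plotted against the same axis X = t^(1/3); the time grid and the helper name `x2_coefficient` are assumptions made for illustration.

```python
import numpy as np

M_AXIS = 3.0                          # exponent used for the X-axis, X = t**(1/3)
t = np.linspace(1.0, 1000.0, 200)     # assumed time grid, arbitrary units
x = t ** (1.0 / M_AXIS)

def x2_coefficient(m_data):
    """Quadratic coefficient of a 2nd-order fit of Y = 1 + 0.01*t**(1/m_data) versus x."""
    y = 1.0 + 0.01 * t ** (1.0 / m_data)
    return np.polyfit(x, y, 2)[0]

# Compare curves generated with different m against the same X = t**(1/3) axis
coeffs = {m: x2_coefficient(m) for m in (2.5, 3.0, 4.0, 5.0)}
for m, a2 in coeffs.items():
    print(f"m = {m}: x^2 coefficient = {a2:+.2e}")
```

Under these assumptions the coefficient vanishes (to rounding error) only for m = 3, is positive for m = 2.5, and is negative for m = 4 and m = 5. Its magnitude depends on the time range, so it need not match the value quoted for the figure.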

4. Application to Real Data

We can now apply this methodology of extrapolating the time to failure based on real data that was collected from GaN power transistors from EPC corporation of El Segundo CA. The devices tested were EPC2016 and operated at 110 V (10% over specification) for 13 h. The ON state resistance (R

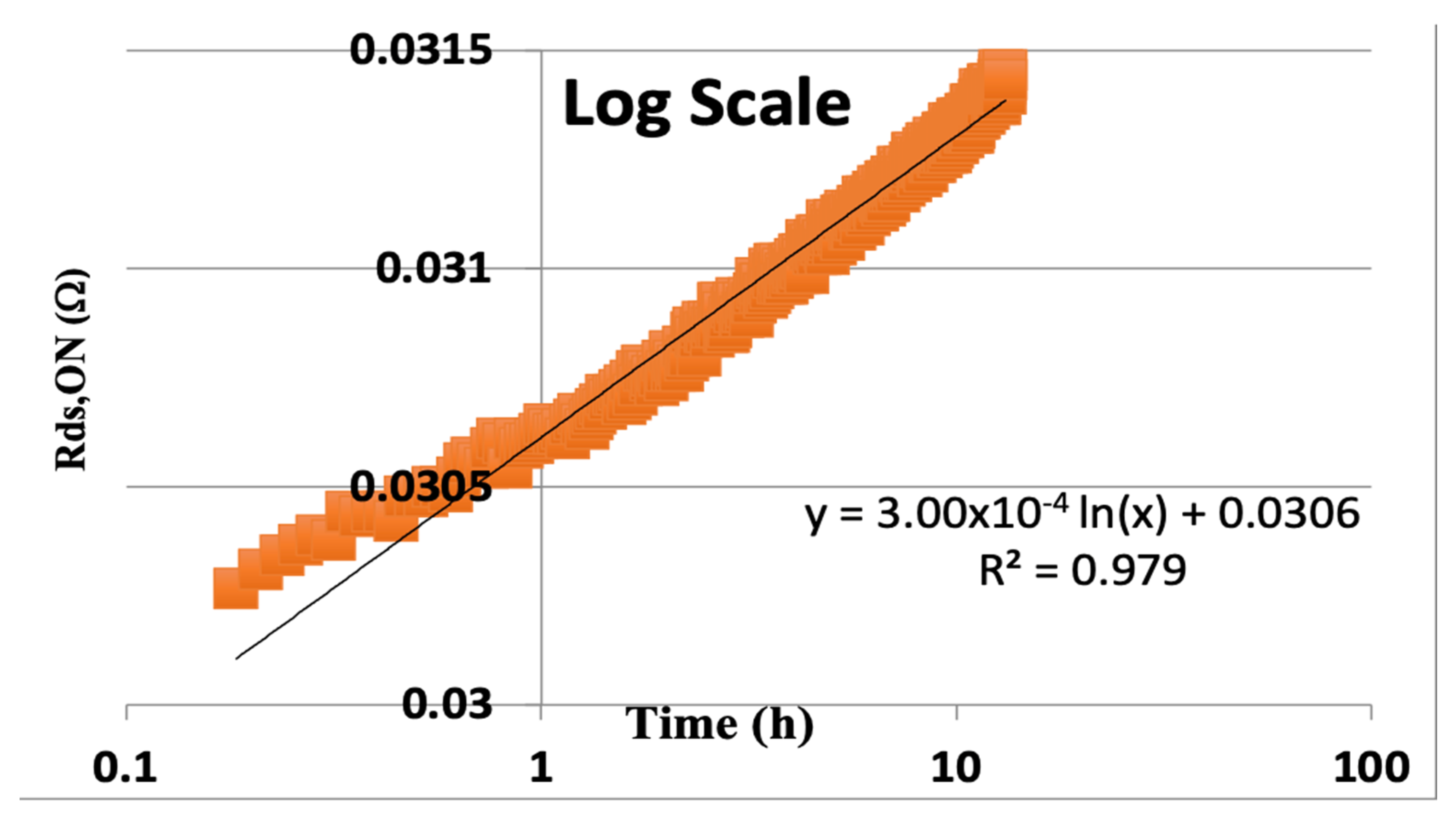

DS,ON) was recorded versus time in hours and plotted on a log scale for time, as is traditionally performed. We see what looks like a very nice least squares fit to the data, as shown in

Figure 4.

The data in Figure 4 shows the curve fit for this real data, which would extrapolate the time to fail (TTF) on a log scale to the time (x) when the change in resistance would be 20% of the initial resistance of 0.0306 Ohms, that is, when $3.00 \times 10^{-4} \times \ln(x) = 0.2 \times 0.0306 = 6.12 \times 10^{-3}$. Thus, the extrapolated time to 20% degradation is

$$x = \exp\left(\frac{6.12 \times 10^{-3}}{3.00 \times 10^{-4}}\right) = e^{20.4} \approx 7.2 \times 10^{8}\ \text{h}$$

This result is equivalent to about 82,000 years! That would be a very optimistic result, if only it could be believed. However, we can replot this same data using our proposed calculation for the exponent of time, whereby the second-order term of the fit is driven to zero, as seen in Figure 5. Here, we found the value of m that brings the $x^2$ term to zero using the Excel Solver function. The term vanishes at m = 4.47. This choice of m took away the curvature of the plot, and we have a realistic extrapolation to the time for 20% degradation.
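The search for the exponent that removes the curvature can be sketched outside of Excel as follows. This is not the procedure used above but a simple bisection stand-in for the Solver step, run on synthetic data whose values (intercept 0.0296, relative slope 0.035, m = 4.47) are assumptions chosen only to resemble the example; the function names `x2_term` and `solve_m` are hypothetical.

```python
import numpy as np

def x2_term(m, t, y):
    """Quadratic coefficient of a 2nd-order polynomial fit of y versus X = t**(1/m)."""
    return np.polyfit(t ** (1.0 / m), y, 2)[0]

def solve_m(t, y, lo=1.0, hi=10.0, tol=1e-6):
    """Bisection on m for the point where the x^2 term changes sign inside [lo, hi]."""
    f_lo = x2_term(lo, t, y)
    if f_lo * x2_term(hi, t, y) > 0:
        raise ValueError("x^2 term does not change sign in the bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = x2_term(mid, t, y)
        if f_lo * f_mid <= 0:
            hi = mid                   # sign change lies in the lower half
        else:
            lo, f_lo = mid, f_mid      # sign change lies in the upper half
    return 0.5 * (lo + hi)

# Synthetic stand-in for measured data (assumed values, not the EPC2016 measurements)
t = np.linspace(0.1, 13.0, 100)                       # hours
y = 0.0296 * (1.0 + 0.035 * t ** (1.0 / 4.47))

m_fit = solve_m(t, y)
slope, intercept = np.polyfit(t ** (1.0 / m_fit), y, 1)
print(f"m = {m_fit:.3f}, intercept = {intercept:.4f}, slope = {slope:.5f}")
```

On noise-free data the bisection recovers the generating exponent, and the intercept of the final linear fit gives the initial value directly. With noisy measurements the x² term may not cross zero cleanly, in which case minimizing its magnitude over m is a reasonable alternative.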

We can now extrapolate a more realistic time to fail by finding $X_F = \mathrm{TTF}^{1/4.47}$, the point at which $R_{DS,ON}$ has increased by 20% over the initial $R_0$. From the least squares fit of the 2nd-order polynomial in Excel, with m chosen such that the $x^2$ term is zero (or as close to zero as possible), we obtain a proper initial value, $R_0 = 0.0296$, and a slope on the new axis of 0.00104 per unit X (where $X = t^{1/4.47}$). Therefore, we want to find X such that the increase in resistance is 20%, or 0.2 × 0.0296 = 0.00592, so

$$X_F = \frac{0.00592}{0.00104} \approx 5.69, \qquad \mathrm{TTF} = X_F^{4.47} \approx 2.4 \times 10^{3}\ \text{h}$$

This result, based on finding the appropriate time axis by eliminating the curvature of the data, results in two important insights into the expected time to fail:

The predicted time to fail in hours is much more realistic for overvoltage testing (this is 10% above the rated voltage).

The extrapolation is based on a perfectly straight data extrapolation since we solved for the value, m, that brings the $x^2$ term to zero, guaranteeing a linear fit.

By choosing the time axis exponent properly and leaving the Y-axis linear, the dependence on the initial value, $S_0$, as in Equation (3), is eliminated. The result is that a more appropriate fit can be made based on the entirety of the data. In this example, the initially assumed value ($R_0$) is 0.0306; however, when we use the entirety of the data, we find that the power law is 1/m with m = 4.47, and the $R_0$ obtained from this time-exponent calculation is 0.0296, which is P = 96.7% of the originally assumed value. Thus, we can tell from the calculations of Figure 2 that the m-value determined by the classical approach, using Equations (2) and (3), would give a very incorrect result for the power law and a huge overestimation of the actual extrapolated time to failure. In a case like this one, the proposed methodology yields a more conservative extrapolation of the time to fail (TTF) and is likely to be correct.
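The two GaN extrapolations can be checked with a few lines of arithmetic. The sketch below uses only the fit coefficients quoted in this section (the log-time slope of 3.00 × 10⁻⁴ with R0 = 0.0306, and the power-law-axis fit with R0 = 0.0296, slope 0.00104, and m = 4.47); the variable names are illustrative.

```python
import math

HOURS_PER_YEAR = 8760.0

# Log-of-time fit (Figure 4): delta_R = 3.00e-4 * ln(t), with R0 = 0.0306 ohm
ttf_log_h = math.exp(0.2 * 0.0306 / 3.00e-4)

# Power-law axis fit (Figure 5): R = 0.0296 + 0.00104 * X, with X = t**(1/4.47)
x_fail = 0.2 * 0.0296 / 0.00104
ttf_power_h = x_fail ** 4.47

print(f"log-time fit : {ttf_log_h:.2e} h = {ttf_log_h / HOURS_PER_YEAR:,.0f} years")
print(f"power-law fit: {ttf_power_h:.0f} h = {ttf_power_h / 24:.0f} days")
```

The first line reproduces the figure of roughly 82,000 years, while the second comes to roughly 2,400 h with these rounded coefficients, which illustrates how strongly the choice of time axis drives the extrapolated lifetime.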

5. Silicon NBTI Degradation Example

This same methodology can be applied to the most common application of reliability prediction in silicon devices. We demonstrate this with real data taken from accelerated testing of a 28 nm FPGA technology at a high temperature, 140 °C. These devices were configured to observe the degradation of ring oscillators (RO). The principle here is that device degradation is observed by measuring the change in frequency over time under a given stress voltage. It is understood from the principles of negative bias temperature instability (NBTI) degradation that negative bias on the PMOS devices increases the charge in the gate and leads to a shift in the threshold voltage of all the PMOS devices being stressed [9]. The set of data presented here was from a 28 nm technology node FPGA from Xilinx. The initial frequency, $F_0$, was taken as the average measured frequency for the first 40 min of operation. This value was then subtracted from the measured frequency over time, F(t).

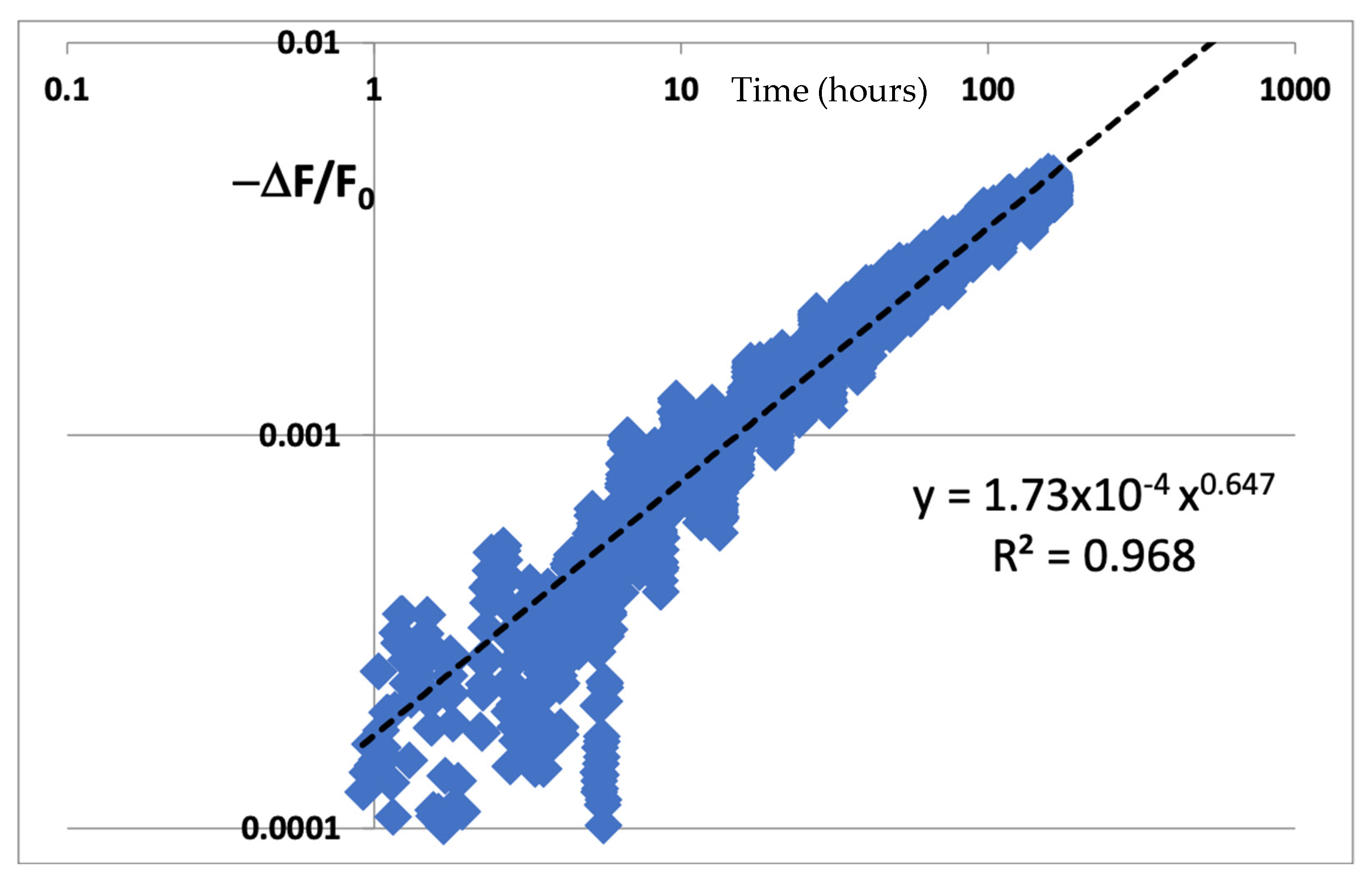

The assumed degradation here is understood to be a decrease in the frequency over time, so we use Equation (1) to assume a power-law decrease in frequency over time. The difference in the frequency is then normalized by $F_0$ as per Equation (3) to calculate Y. X, of course, is the time on a log scale. We see the data plotted as per the instructions of McPherson, and the data looks very linear on a log–log scale. Then we use the Excel trendline function to calculate the trend and extrapolate the time to fail. We see what looks like an excellent and proper fit to a power-law trendline with $y = 1.73 \times 10^{-4}\,t^{0.647}$. We can then find that m = 1/0.647 = 1.5456.

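In most cases, for example, in Naouss and Marc [2] and in many other examples of NBTI data plotting [3], the time to fail (TTF) is extrapolated by extending the power-law curve to 10% degradation, that is, to the point where the trendline reaches 0.1:

$$1.73 \times 10^{-4}\,t^{0.647} = 0.1 \;\Rightarrow\; \mathrm{TTF} = \left(\frac{0.1}{1.73 \times 10^{-4}}\right)^{1/0.647} \approx 1.9 \times 10^{4}$$

(in the time units of the test data).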

However, if we look carefully at the curve of Figure 6, it is possible to notice a slight bowing of the data with respect to the trendline. As in the GaN example shown above, this is easily overlooked, since the $R^2$ value is very good and there is the expected noise at the beginning of the data plot. Also, to fit the plot on a proper log–log scale, it was necessary to exclude some early data that were below zero due to the difference calculation, $\Delta F = F(t) - F_0$, since negative values cannot be plotted on a log scale. Together, these two phenomena bring the entire calculation into question. Hence, we can compare this figure to a plot of the same data plotted linearly as a function of time to the power 1/m. From the data itself, we can minimize the curvature (not the $R^2$ value) such that the line is perfectly straight. This is what we see next.

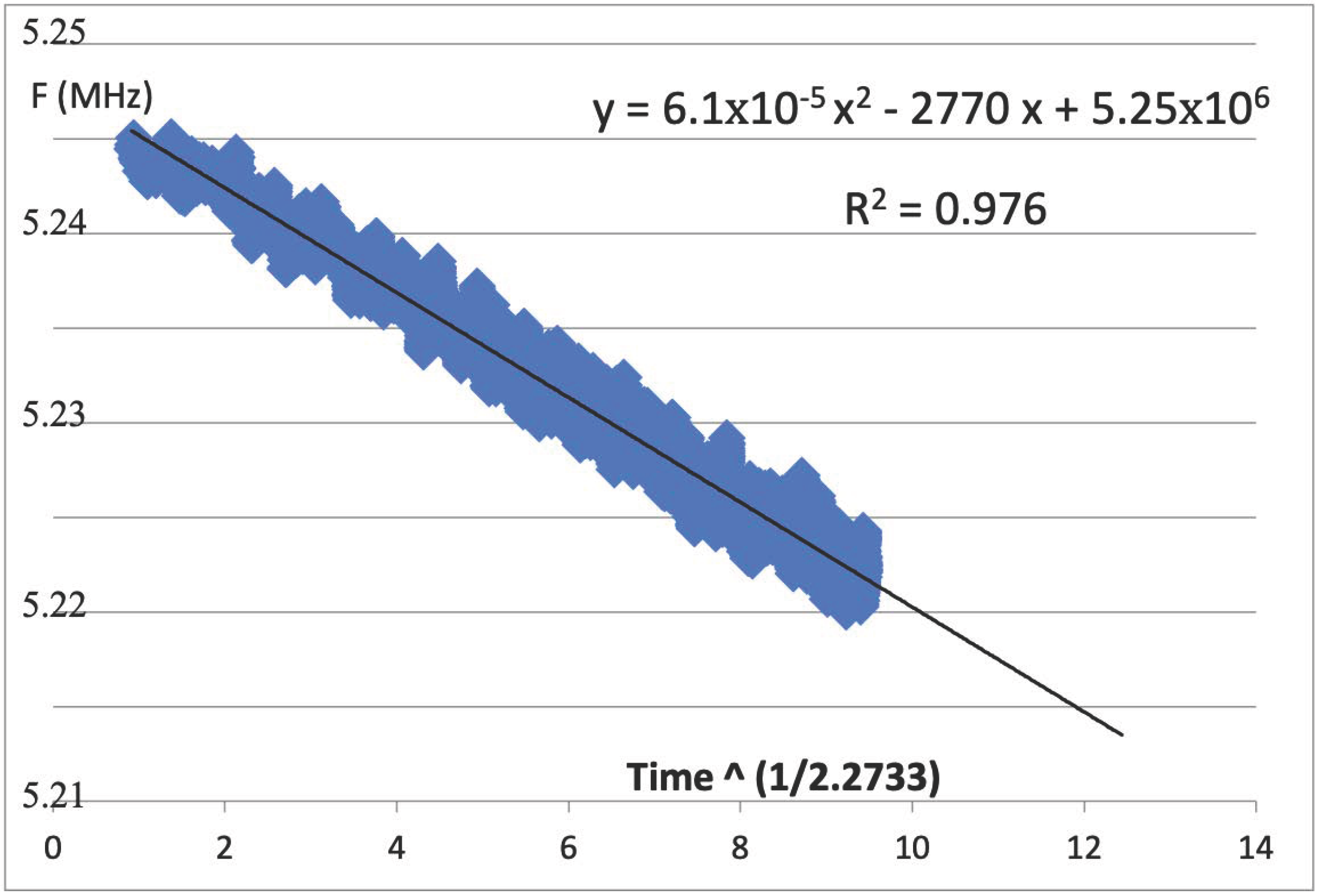

In Figure 7, we see the trendline fit to a 2nd-order polynomial where the $x^2$ term was solved to be as close to zero as is reasonable to achieve. For our data, $6.11 \times 10^{-5}$ is, for all practical purposes, zero. We see that the correlation coefficient, $R^2$, is also quite good, but the power law that was derived is m = 2.733. Thus, the proper way to plot this data is to use the actual frequency values for the Y-axis, as seen from Equation (5), while the X-axis should be the time transformed to the power 1/m, as we see from Equation (4); thus, Y = F(t), the raw frequencies measured directly from the FPGA.

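We have here the raw data, F(t), plotted against the transformed time axis. We can calculate the time to failure, TTF, as the time to 10% degradation from the value at t = 0 ($F_0$). That initial frequency is seen here to be 5.25 MHz, while the slope is −2770 per unit X. We now find the X value for which the trendline reaches $Y = 0.9\,F_0$ (10% degradation in frequency). From here, taking the slope to be in Hz per unit X, we find

$$X_F = \frac{0.1 \times 5.25 \times 10^{6}}{2770} \approx 189.5, \qquad \mathrm{TTF} = X_F^{2.733} \approx 1.7 \times 10^{6}$$

(in the time units of the test data).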

In this example, we see that the correctly calculated TTF is nearly 100 times greater than the result from the classical calculation of Figure 6. This would suggest a severe underestimate by the traditional approach for extrapolating time to fail from degradation data. Thus, we see two examples of how properly determining the power law of time can give a drastically different result, either much greater or much lower than what would have been found by transforming the Y-axis and leaving the X-axis simply as the log of time.
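The comparison between the two NBTI extrapolations can likewise be reproduced from the quoted fit coefficients. This is a sketch only: it assumes that the slope of 2770 is expressed in Hz per unit X (so that it is commensurate with F0 = 5.25 MHz) and that both fits use the same, unstated, time unit; the variable names are illustrative.

```python
# Classical log-log trendline (Figure 6): dF/F0 = 1.73e-4 * t**0.647
ttf_classical = (0.1 / 1.73e-4) ** (1.0 / 0.647)

# Linear fit on the transformed axis (Figure 7): F = F0 - 2770 * X, X = t**(1/2.733)
F0_HZ = 5.25e6            # assumption: the quoted slope of 2770 is in Hz per unit X
x_fail = 0.1 * F0_HZ / 2770.0
ttf_transformed = x_fail ** 2.733

ratio = ttf_transformed / ttf_classical
print(f"classical TTF   : {ttf_classical:.3g}")
print(f"transformed TTF : {ttf_transformed:.3g}")
print(f"ratio           : {ratio:.0f}x")
```

Under these assumptions the ratio comes out at roughly two orders of magnitude, in line with the statement that the transformed-axis TTF is nearly 100 times greater than the classical result.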