Abstract

This study proposes a deep learning-based method to improve overlay alignment precision in laser direct writing systems. Alignment errors arise from multiple sources in nanoscale processes, including optical aberrations, mechanical drift, and fiducial mark imperfections. A significant portion of the residual alignment error stems from the interpretation of mark coordinates by the vision system and algorithms. Here, we developed a convolutional neural network (CNN) model to predict the coordinates calculation error of 66,000 sets of computer-generated defective crosshair marks (simulating real fiducial mark imperfections). We compared 14 neural network architectures (8 CNN variants and 6 feedforward neural network (FNN) configurations) and found a well-performing, simple CNN structure achieving a mean squared error (MSE) of 0.0011 on the training sets and 0.0016 on the validation sets, demonstrating 90% error reduction compared to the FNN structure. Experimental results on test datasets showed the CNN’s capability to maintain prediction errors below 100 nm in both X/Y coordinates, significantly outperforming traditional FNN approaches. The proposed method’s success stems from the CNN’s inherent advantages in local feature extraction and translation invariance, combined with a simplified network architecture that prevents overfitting while maintaining computational efficiency. This breakthrough establishes a new paradigm for precision enhancement in micro–nano optical device fabrication.

1. Introduction

Laser direct writing technology plays a very important role in the fields of integrated circuits, flat panel displays, and micro–nano optical manufacturing [1,2,3,4,5,6]. The precision alignment and overlay process through laser direct writing is an important technique to realize complex and multilayered micro–nano structures in optoelectronic devices [7,8,9,10,11]. The overlay alignment accuracy largely determines the accuracy and performance of the device [12,13,14].

In the mainstream laser direct writing overlay process, high-contrast, sharp-edged patterns (e.g., cross-shaped metal markers) serve as fiducial targets for submicron alignment under microscopy. While miniaturized markers enhance positional resolution, they are more vulnerable to fabrication imperfections. Alignment precision is governed by the following: (i) optical system’s NA/resolution, (ii) mechanical stability/repeatability of air-bearing stages and nanopositioning actuators, and (iii) environmental controls (e.g., dual-frequency laser interferometry and thermal regulation). State-of-the-art systems achieve ≤100 nm absolute positioning accuracy through integrated metrology and motion control architectures. However, stochastic overlay errors (>500 nm peak) arise from defects in fiducial patterns (e.g., edge roughness, partial occlusion), which compromise feature recognition algorithms. For high-precision micro-/nanophotonic devices (e.g., multi-level diffractive optical elements), mitigating these errors is critical to preserving wavefront fidelity and optical performance. Advanced fiducial design strategies and machine learning-based defect compensation techniques are actively pursued to address this challenge [15,16,17,18,19].

The emergence and rise of deep learning technology have provided new ideas for solving the current process optimization problems in the field of laser precision machining [20,21,22]. Deep neural networks mainly consist of four major types: feedforward neural networks (FNNs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative neural networks (GNNs) [23]. Among them, CNNs are constructed imitating the biological visual mechanism, and the implicit convolutional kernels can extract features from images, thus the CNN is most widely used in deep learning research related to image processing.

To address the problem of excessive alignment errors in laser direct writing overlay alignment, we took the technological advantages of deep learning, considering the randomly distributed defects in optical alignment marks, and proposed a method based on deep learning to improve the accuracy of laser direct writing overlay alignment. This approach has reduced the alignment error in mark recognition by an order of magnitude, providing a new idea for enhancing the alignment accuracy of optical alignment marks and laying a technical foundation for overlay alignment applications with higher precision.

2. Methods

Figure 1 below depicts a typical marking pattern utilized in the laser direct writing overlay process, consisting of a crosshair alignment mark and primary and secondary verniers. The crosshair alignment mark serves the purpose of alignment positioning, while the primary and secondary verniers function to indicate alignment errors. During the actual operation process, the crosshair alignment mark and the primary vernier are first fabricated on the substrate surface along with the first layer pattern. Subsequently, the secondary vernier is fabricated on the substrate surface along with the second layer pattern. The deviation between the primary and secondary verniers can then reflect the overlay alignment error between the two layers. Figure 1a illustrates the ideal overlay process where there is no alignment error between the primary vernier and the secondary vernier. However, Figure 1b–d depict the realistic scenario where randomly distributed alignment errors occur between the primary and secondary verniers during the overlay process. Apart from the resolution errors of the optical system and image processing system and the ranging errors of interference caused by ambient temperature drift, the sources of these alignment errors are mainly caused by the defects in the production of positioning marks, including line width errors, line edge roughness, sand holes, burrs, etc. The main reasons for these defects include defects in coating quality, nonuniform exposure dose of photolithography, and inhomogeneous distribution of development and wet etching rates.

Figure 1.

(a) Schematic of ideal overlay result with no alignment errors. (b–d) Schematics of real overlay result with typical alignment errors. The schematics show defective nature of patterns fabricated by photolithography (DWL66+, Heidelberg Instruments, Heidelberg, Germany) with laser wavelength 405 nm and spot size 600 nm. The overlay error stems from multiple sources including optical aberrations, mechanical drift, and fiducial mark imperfections, and we focus on fiducial mark imperfections in this work.

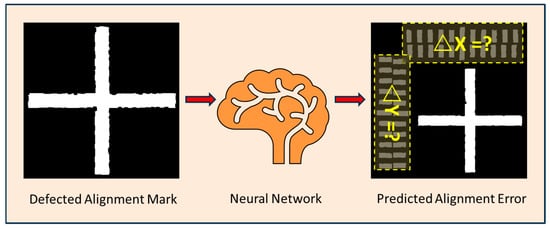

Due to the presence of these defects, the image processing system will generate alignment errors when identifying and locating alignment marks. Typical alignment errors are shown in Figure 1b–d, with errors within ±500 nm in both the X and Y directions, and the absolute error depends on the severity of the marker defects. The main innovation of this work lies in the adoption of deep learning methods to restore defective positioning markers to their defect-free state. The principle of this method is illustrated in Figure 2, where the input to the neural network is a grayscale image of a crosshair positioning marker with defects such as linewidth errors, rough edges, and sand holes. The output of the neural network is the alignment error, which represents the difference between the predicted true coordinates of the center position of the crosshair positioning marker and the measured coordinates.

Figure 2.

Concept diagram of predicting alignment error of fiducial mark with imperfections via a neural network. The input to the neural network was a grayscale map of crosshair mark with random defects simulating line edge roughness, asymmetric linewidth error, and sand holes. The output was the predicted alignment error. The defects primarily come from process imperfections including exposure nonuniformity, asymmetric development, and wet etching.

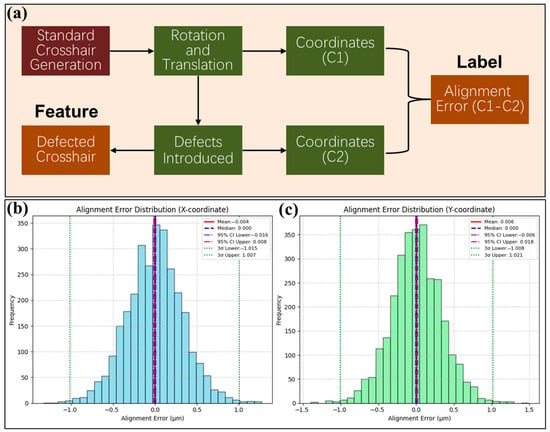

Firstly, FNNs with different structures were built for experiments. In order to obtain sufficient training data, this paper referred to the real crosshair marking defects and used a notebook computer (with Intel i5-12500H 2.50 GHz CPU and 16.0 GB RAM, Santa Clara, CA, USA) to generate 60,000 sets of training data, 3000 sets of validation data, and 3000 sets of test data. Among them, the training data were used to optimize the weight parameters of the neural network through the backpropagation algorithm. The validation data were used for model tuning and to avoid overfitting. The test data was used to evaluate the generalization ability of the model. The data generation method, shown in Figure 3, was based on the standard crosshair gray image, with random translation and rotation generated. The center coordinates of the defect-free crosshair (C1) at this time were recorded. Then, the crosshair was randomly eroded to simulate various defects and coordinates of the defective crosshair (C2) were obtained by pixel calculation. Finally, the crosshair gray images with translation, rotation, and defects were saved as the dataset features, and the difference between C1 and C2 was saved as dataset labels.

Figure 3.

(a) Diagram of training data generation method. Firstly, random translations and rotations are applied to a standard crosshair grayscale image, and the center coordinates (c1) of the crosshair at this time are recorded. Subsequently, various defects are introduced by randomly eroding the crosshair, and the center coordinates (c2) at this stage are noted. The difference between c1 and c2 (representing the alignment error) is saved as the dataset label. Finally, the crosshair grayscale images with translations, rotations, and defects are saved as the dataset features. (b) Labeled alignment error distribution for the X coordinate in the test set (3000 data). (c) Labeled alignment error distribution for the Y coordinate in the test set (3000 data). The 3σ value of alignment error for both coordinates is near 1 μm.

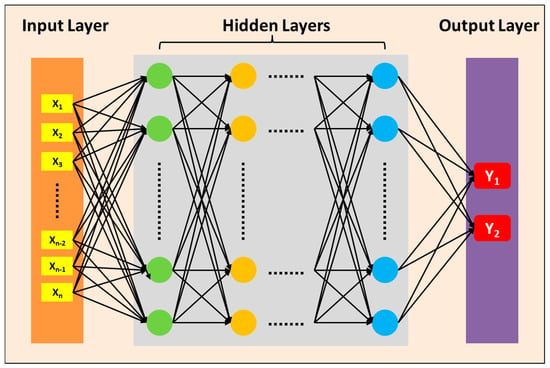

The structure of FNNs is illustrated in Figure 4, comprising an input layer, several hidden layers, and an output layer. The input layer data consists of one-dimensional vectors corresponding to the grayscale crosshair images, while the output layer data represents the predicted X coordinate error and the Y coordinate error of the crosshair center by the neural network. The process of using an FNN to predict the ideal center coordinates of a defective image relies primarily on its network structure and training data. The defective image is converted into a numerical form suitable for FNN processing and input into the input layer. In the hidden layers, the FNN transforms and extracts features from the input data through fully connected connections, utilizing sufficient hidden layers and neurons to learn and represent the overall features of the image. In the output layer, the FNN performs a regression task, outputting the predicted center coordinates, which are based on the entire network’s understanding and transformation of the input image. Through optimization methods such as the backpropagation algorithm and gradient descent, the FNN continuously adjusts its weights and bias parameters during training to minimize the error between the predicted center coordinates and the actual center coordinates. This requires a large amount of training data to ensure that the FNNs can learn effective feature representations and prediction models. By adjusting the number of neurons in the hidden layers and the number of hidden layers, experiments compare the predictive capabilities of FNNs with different structures.

Figure 4.

The structure of the FNNs, consisting of an input layer, several hidden layers, and an output layer. The input layer data corresponds to a one-dimensional vector of the crosshair gray image, while the output layer data represents the predicted X and Y coordinates errors of the crosshair center by the neural network.

3. Results and Discussions

The FNNs was built using the Keras framework, with ReLU as the activation function, Adam as the optimizer, Mean Square Error (MSE) as the loss function, and a Batch Size of 128. The results were compared after 100 epochs of training. The training results are shown in Table 1 below. It is evident that as the number of hidden layers and neurons increases, the training set loss decreases continuously, from 0.02 to below 0.01. However, the validation set loss remain unchanged above 0.02. Furthermore, with the increase in the number of neurons and hidden layers, overfitting becomes more pronounced. It is assumed that further optimizing the structure of the FNNs is unlikely to significantly lower validation loss.

Table 1.

Training results of FNNs with different structures.

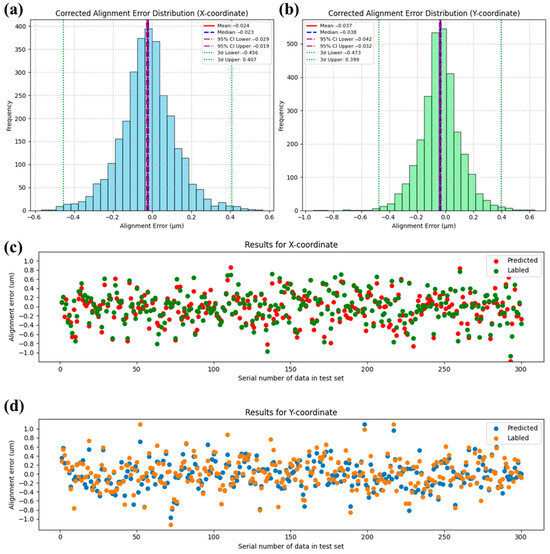

To demonstrate the model’s prediction capability on the test set, we showed statistical distribution of both labeled and predicted alignment error for 3000 results in the test set (Figure 5a,b), and first 300 results of predicted and actual marker coordinates (more data would make the graph less clear) in Figure 5c,d. Statistical results show that the corrected alignment error, obtained by subtracting the predicted alignment error from the labeled alignment error, has a 3σ value reduced to less than 50% of the labeled value. This indicates that the FNN model is capable of reducing the alignment error caused by defective marker recognition by more than 50%. The distribution of the first 300 sets of data also intuitively shows that the FNN model can predict the alignment errors caused by random marker defects to a certain extent. Both in the X coordinate and Y coordinate, the predicted trends are correct, but the approximated values are not ideal, especially for some alignment errors with larger magnitudes. We can observe that there are still many points with deviations greater than 100 nm.

Figure 5.

The prediction results of the FNN-6 network (Table 1, No. 6) on the test set are as follows: panel (a) represents the corrected alignment error distribution for the X-coordinate, and panel (b) represents the corrected alignment error distribution for the Y coordinate. Panel (c) shows the first 300 predicted and labeled alignment error for the X coordinate in the test set, and panel (d) shows the first 300 predicted and labeled alignment error for the Y coordinate in the test set.

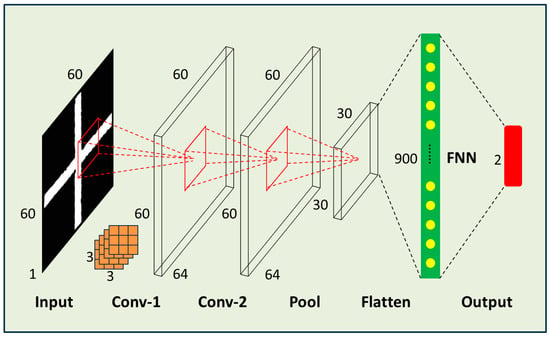

Based on the FNN, we added convolutional layers and pooling layers to form a Convolutional Neural Network (CNN). The convolutional layers mimic the receptive fields of biological vision to extract features from the input image. Each convolutional kernel extracts a specific feature, and multiple kernels can extract various features. The pooling layers retain the main features while discarding the less important ones, which also compresses the data volume and improves training efficiency. Through multiple convolutional and pooling operations, CNNs can automatically extract key features from the input image and use these features to predict the center coordinates of the graphic. Additionally, CNNs’ translation invariance and end-to-end training approach provide strong support for their performance in graphic center coordinate prediction tasks. By adjusting the network structure and hyperparameters of CNNs, we compared the training loss and validation loss of different models—the result is shown in Table 2 below. A total of eight different network structures’ training results were compared. The first network structure was the most complex architecture, but its training result was the worst in the table. Upon actual prediction, it was found that all coordinates were 0. Therefore, we started to simplify the network structure, reduce the number of convolutional layers, adjust the number of convolution kernels, and remove redundant fully connected layers. Eventually, the eighth network structure performed the best. Figure 6 shows the No. 8 CNN structure that achieved good practical results. The input is a grayscale image, which is processed through two convolutional layers and one pooling layer, then converted into a 1D vector and passed through a fully connected layer to the output layer. The input grayscale image has a size of 60 × 60 × 1; the convolutional kernels have a size of 3 × 3; the pooling layer has a size of 2 × 2; the activation function is ReLU; the optimizer is Adam; the loss function is Mean Squared Error (MSE); and the output layer predicts the positioning marker coordinate error.

Table 2.

Training results of CNNs with different structures.

Figure 6.

Demonstrated CNN structure with good practical performance. The input is a grayscale image, which undergoes two convolutional processing stages and one pooling stage. After having been flattened, it passes through one fully connected layer before reaching the output layer. The size of the convolutional kernels is 3 × 3.

The initial design of this network structure was inspired by the classic VGG network structure, which uses more convolutional layers with more neurons and additional fully connected layers. However, practical tests showed that this simple CNN structure outperformed the more complex VGG-like structure. The reason may be that this task involves a limited number of features. The typical VGG network structure is deeper and uses too many fully connected neurons (over 1000), ultimately leading to severe overfitting.

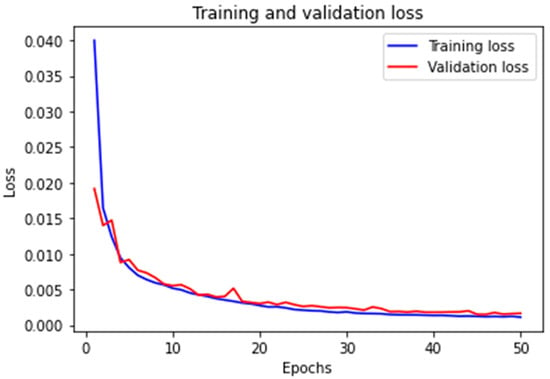

This structure efficiently extracts relevant features from the grayscale image through the convolutional layers, reduces the dimensionality and retains important features through the pooling layer, and finally maps these features to the predicted output through the fully connected layer. The use of 3 × 3 convolutional kernels allows for the extraction of detailed spatial information while maintaining a manageable computational load. The training and validation loss of the CNN model after 50 epochs of training are shown in Figure 7 below. The model achieved convergence with a MSE training loss of 0.0011 and validation loss of 0.0016, which represents a marked improvement over the prior FNN baseline (showing 90% reduction in error). The baseline VGG-style network (five convolutional layers, three fully connected layers) required 15.5 billion FLOPs per inference due to its deep structure with 512-channel convolutions and high-dimensional fully connected layers (4096 nodes). Our streamlined architecture reduces this to 3.8 billion FLOPs through three strategic optimizations: (1) Layer pruning eliminating 60% of convolutional layers (5→2) and 67% of FC layers (3→1); (2) channel compression limiting maximum feature maps to 64 channels (vs 512 in VGG); and (3) implementation of 2 × 2 pooling that progressively halves spatial dimensions from 60 × 60 to 15 × 15. These architectural changes achieved 99.7% parameter reduction (138 M→0.35 M) while maintaining feature extraction capability through preserved 3 × 3 convolutional kernels. Empirical validation on an Intel i5-12500H system confirmed proportional resource savings-training time per epoch decreased 75% (18→4.5 min); GPU memory usage dropped 76.5% (9.8→2.3 GB); and model storage shrank 99.7% (528 MB→1.4 MB). The FLOPs reduction (75.5% theoretical) and actual runtime improvements (75% measured) demonstrate strong correlation, proving the optimization’s effectiveness. This efficiency gain enables real-time defect correction on consumer-grade hardware while achieving sub-100 nm precision, resolving the critical throughput-precision tradeoff in laser direct writing systems.

Figure 7.

Training and validation loss of the CNN. After 50 epochs, the training loss reaches 0.0011, and the validation loss reaches 0.0016. The loss function is an MSE.

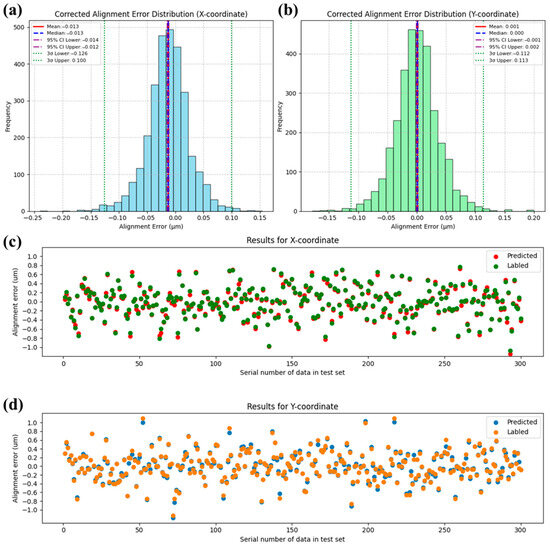

The optimized CNN architecture achieved a mean squared error (MSE) of 0.0015 on the test set, demonstrating superior alignment prediction accuracy compared to conventional FNN approaches. As illustrated in Figure 8, statistical results show that the corrected alignment error, obtained by subtracting the predicted alignment error from the labeled alignment error, has a 3σ value reduced to less than 10% of the labeled value. This indicates that the CNN model is capable of reducing the alignment error caused by defective marker recognition by more than 90%. The distribution of the first 300 sets of data also intuitively shows that the CNN model can predict the alignment errors caused by random marker defects to a greater extent than its FNN counterpart. Both in the X coordinate and the Y coordinate, the predicted trends are correct, and the approximated values are very close, even for some alignment errors with larger magnitudes. We can observe that there are few points with deviations greater than 100 nm. This performance stems from the network’s hierarchical feature extraction mechanism: 3 × 3 convolutional kernels systematically capture critical spatial patterns (edge gradients, texture variations) through localized receptive fields, while parameter sharing across translationally invariant operations substantially reduces model complexity. The architecture’s shallow topology (two convolutional layers followed by max-pooling and a single fully connected layer) proves optimal for this defect-correction task, balancing sufficient feature abstraction with minimized overfitting risks—a crucial advantage given the limited morphological complexity of alignment markers. Compared to FNN baselines, the CNN reduces trainable parameters by 98.7% through its weight-sharing paradigm while maintaining spatial robustness to marker positioning variations. This design synergy enables precise coordinate regression (σ < 50 nm) with 75% faster inference speeds than deeper networks, effectively resolving the accuracy–efficiency tradeoff in high-precision overlay alignment systems.

Figure 8.

The prediction results of the CNN-8 network (Table 2, No. 8) on the test set are as follows: panel (a) represents the corrected alignment error distribution for the X coordinate, and panel (b) represents the corrected alignment error distribution for the Y coordinate. Panel (c) shows the first 300 predicted and labeled alignment error for the X coordinate in the test set, and panel (d) shows the first 300 predicted and labeled alignment error for the Y coordinate in the test set.

4. Conclusions

This research demonstrates the effectiveness of deep learning in addressing laser direct writing overlay alignment challenges. Three key findings emerge: (1) CNN architectures significantly outperform FNN counterparts, reducing alignment errors by 90% through localized feature extraction and parameter sharing mechanisms. (2) Simplified network structures (two convolutional + one pooling + one FC layer) achieve optimal performance for this specific task, outperforming complex VGG-like architectures while reducing computational demands by 75%. (3) The developed defect correction model successfully maintains prediction errors below 100 nm, meeting the precision requirements for multi-layer diffractive optical elements. The method’s success is attributed to three innovative aspects: (a) Simulated defect generation strategy mimicking real process imperfections; (b) dual-coordinate error prediction through end-to-end image regression; (c) architecture optimization balancing feature extraction capability and model complexity. Future work should focus on real-time implementation and extension to 3D alignment scenarios. This approach provides a viable solution for next-generation lithography systems requiring sub-100 nm overlay accuracy, with potential applications in advanced semiconductor manufacturing and nano-photonic device fabrication.

Author Contributions

Conceptualization, G.G.; Methodology, G.G. and J.W.; Investigation, X.L.; Data curation, G.G.; Writing—original draft, G.G.; Writing—review & editing, J.B.; Project administration, J.D.; Funding acquisition, H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

National Key R&D Program of China (No. 2016YFB0500200) and the National Natural Science Foundation of China (No. 62075220).

Data Availability Statement

Data underlying the results presented in this paper are not publicly available at this time but may be obtained from the authors upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Poleshchuk, A.; Korolkov, V. Laser writing systems and technologies for fabrication of binary and continuous-relief diffractive optical elements-art. no. 67320X. In Proceedings of the International Conference on Lasers, Applications, and Technologies 2007: Laser-Assisted Micro-and Nanotechnologies, Minsk, Belarus, 28 May–1 June 2007; SPIE: Washington, DC, USA, 2007; Volume 6732, pp. 130–139. [Google Scholar] [CrossRef]

- Zhong, K.; Gao, Y.; Li, F.; Luo, N.; Zhang, W. Fabrication of continuous relief micro-optic elements using real-time maskless lithography technique based on DMD. Opt. Laser Technol. 2014, 56, 367–371. [Google Scholar] [CrossRef]

- Ng, C.K.; Chen, C.; Yang, Y.; Zhang, F.; Ju, B.F.; Chen, Y.L. Femtosecond laser micro-machining of three-dimensional surface profiles on flat single crystal sapphire. Opt. Laser Technol. 2024, 170, 110205. [Google Scholar] [CrossRef]

- Petrov, V.V.; Kryuchyn, A.A.; Beliak, I.V.; Manko, D.Y.; Kosyak, I.V.; Melnik, O.G. Advantages of Direct Laser Writing for Enhancing the Resolution of Diffractive Optical Element Fabrication Processes. Phys. Chem. Solid State 2024, 25, 587–594. [Google Scholar] [CrossRef]

- Pinheiro, T.; Morais, M.; Silvestre, S.; Carlos, E.; Coelho, J.; Almeida, H.V.; Barquinha, P.; Fortunato, E.; Martins, R. Direct Laser Writing: From Materials Synthesis and Conversion to Electronic Device Processing. Adv. Mater. 2024, 36, e2402014. [Google Scholar] [CrossRef] [PubMed]

- Reiser, A. Direct laser 3D nanowriting of metals and their alloys. Nat. Mater. 2024, 23, 1604–1605. [Google Scholar] [CrossRef] [PubMed]

- Lam, A.; Ypma, A.; Gatefait, M.; Deckers, D.; Koopman, A.; van Haren, R.; Beltman, J. Pattern recognition and data mining techniques to identify factors in wafer processing and control determining overlay error. In Metrology, Inspection, and Process Control for Microlithography XXIX; SPIE: Washington, DC, USA, 2015; Volume 9424, pp. 545–554. [Google Scholar]

- Haq, A.U.; Djurdjanovic, D. Robust Control of Overlay Errors in Photolithography Processes. IEEE Trans. Semicond. Manuf. 2019, 32, 320–333. [Google Scholar] [CrossRef]

- Harry, J.L.; Moshe, E.P.; Patrick, J.L. Minimization of total overlay errors on product wafers using an advanced optimization scheme. In Optical Microlithography X; SPIE: Washington, DC, USA, 1997; Volume 3051, pp. 362–373. [Google Scholar]

- Lee, K.B.; Kim, C.O. Marker Layout for Optimizing the Overlay Alignment in a Photolithography Process. IEEE Trans. Semicond. Manuf. 2019, 32, 212–219. [Google Scholar] [CrossRef]

- Timothy, A.B.; Vinayan, C.M.; Cheuk, W.W.; Oleg, G.; Michael, P.B.; Nelson, M.F.; Christopher, P.A.; Pradeep, V.; Sathish, V.; Jaydeep, K.S. Characterization of wafer geometry and overlay error on silicon wafers with nonuniform stress. J. Micro/Nanolithography MEMS MOEMS 2013, 12, 043002. [Google Scholar] [CrossRef]

- Zhang, H.; Liu, H.; Xu, W.; Lu, Z. Large aperture diffractive optical telescope: A review. Opt. Laser Technol. 2020, 130, 106356. [Google Scholar] [CrossRef]

- Bai, Y.; Zhang, Z.; Chen, T.; Wang, R.; Wei, H.; Zeng, X.; Zhang, X. Evaluation of wavefront aberrations induced by overlay errors in stitching computer-generated holograms. Opt. Lasers Eng. 2022, 152, 106944. [Google Scholar] [CrossRef]

- Ma, S.; Ying, H.; Ng, B.; Tok, M.K. Process Challenges attributing to Lithography Overlay Misalignment: A Case Study on BEOL Lithography Process. In Proceedings of the 2025 36th Annual SEMI Advanced Semiconductor Manufacturing Conference (ASMC), Albany, NY, USA, 5–8 May 2025; pp. 1–4. [Google Scholar] [CrossRef]

- Gao, G.; Mao, D.; Fan, B.; Guan, C. Effect of Wet Expansion Behavior on Polyimide Membrane Diffractive Lens. Coatings 2019, 9, 559. [Google Scholar] [CrossRef]

- Gao, G.; Mao, D.; Jiang, R.; Li, Z.; Liu, X.; Lei, B.; Bian, J.; Wu, S.; Fan, B. Investigation of Photoelastic Property and Stress Analysis for Optical Polyimide Membrane through Stress Birefringence Method. Coatings 2020, 10, 56. [Google Scholar] [CrossRef]

- Gao, G.; Shi, H.; Wang, L.; Liu, D.; Wang, J.; Du, J.; Bian, J.; Fan, B.; Yang, H. Large aperture high diffraction efficiency off-axis Fresnel lens fabrication and analysis. Opt. Express 2022, 30, 28932–28940. [Google Scholar] [CrossRef] [PubMed]

- Gao, G.; Wang, L.; Shi, H.; Liu, D.; Fan, B.; Guan, C. Facile large-area uniform photolithography of membrane diffractive lens based on vacuum assisted self contact method. Sci. Rep. 2020, 10, 9005. [Google Scholar] [CrossRef] [PubMed]

- Luo, Q.; Gao, G.; Liu, D.; Du, J. Wavefront Measurement of Large Aperture High Image Quality Off-axis Fresnel Lens. Opt. Express 2022, 31, 1249–1257. [Google Scholar] [CrossRef] [PubMed]

- Heath, D.J.; Grant-Jacob, J.A.; Xie, Y.; Mackay, B.S.; Baker, J.A.G.; Eason, R.W.; Mills, B. Machine learning for 3D simulated visualization of laser machining. Opt. Express 2018, 26, 21574–21584. [Google Scholar] [CrossRef]

- Mills, B.; Heath, D.J.; Grant-Jacob, J.A.; Eason, R.W. Predictive capabilities for laser machining via a neural network. Opt. Express 2018, 26, 17245–17253. [Google Scholar] [CrossRef] [PubMed]

- Ren, Z.; Gao, L.; Clark, S.J.; Fezzaa, K.; Shevchenko, P.; Choi, A.; Everhart, W.; Rollett, A.D.; Chen, L.; Sun, T. Machine learning–aided real-time detection of keyhole pore generation in laser powder bed fusion. Science 2023, 379, 89–94. [Google Scholar] [CrossRef] [PubMed]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).