Low-Power-Management Engine: Driving DDR Towards Ultra-Efficient Operations

Abstract

1. Introduction

2. Foundations of LPME

2.1. Theoretical Basis

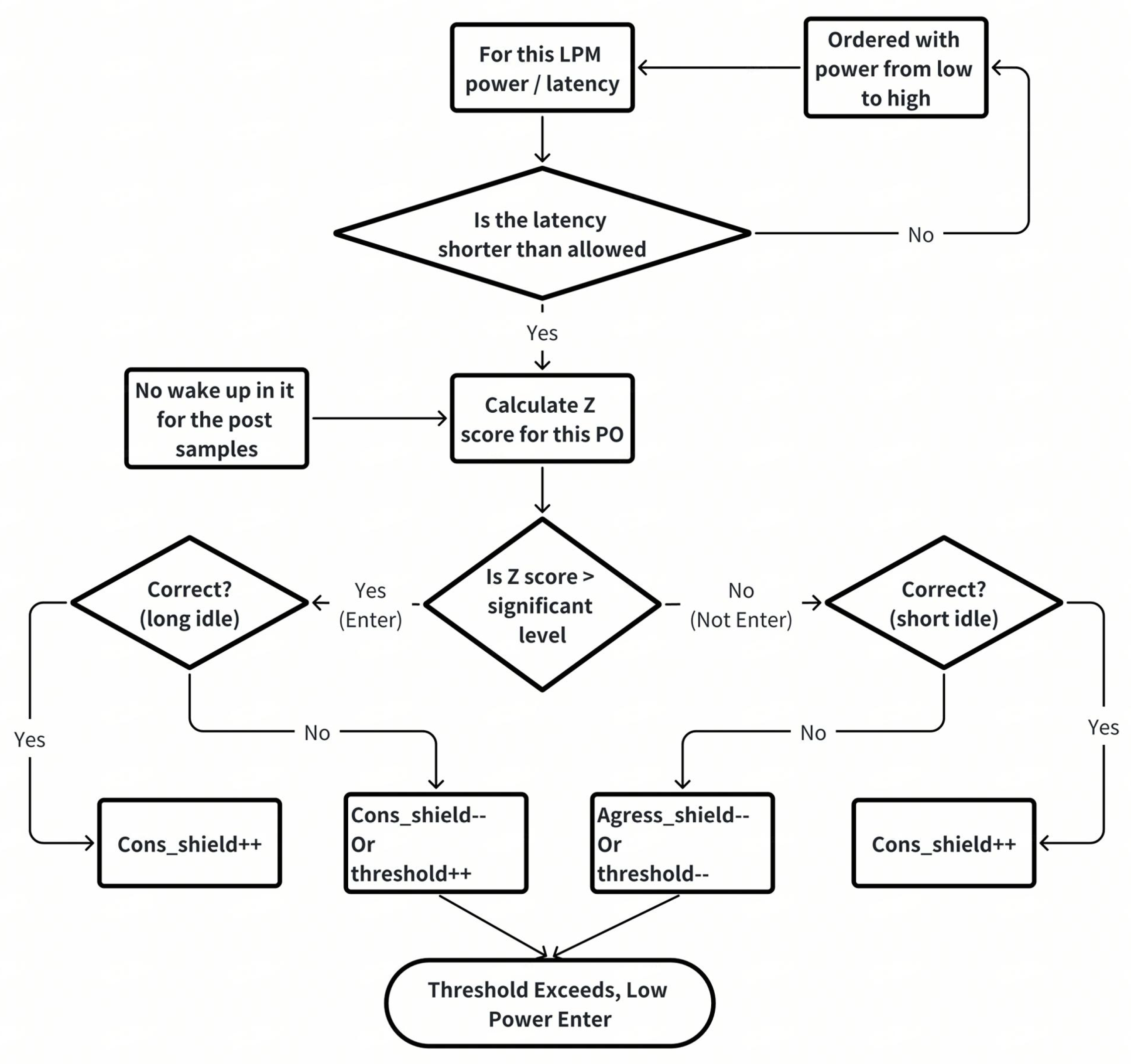

2.2. Z-Test Application for LPM Control

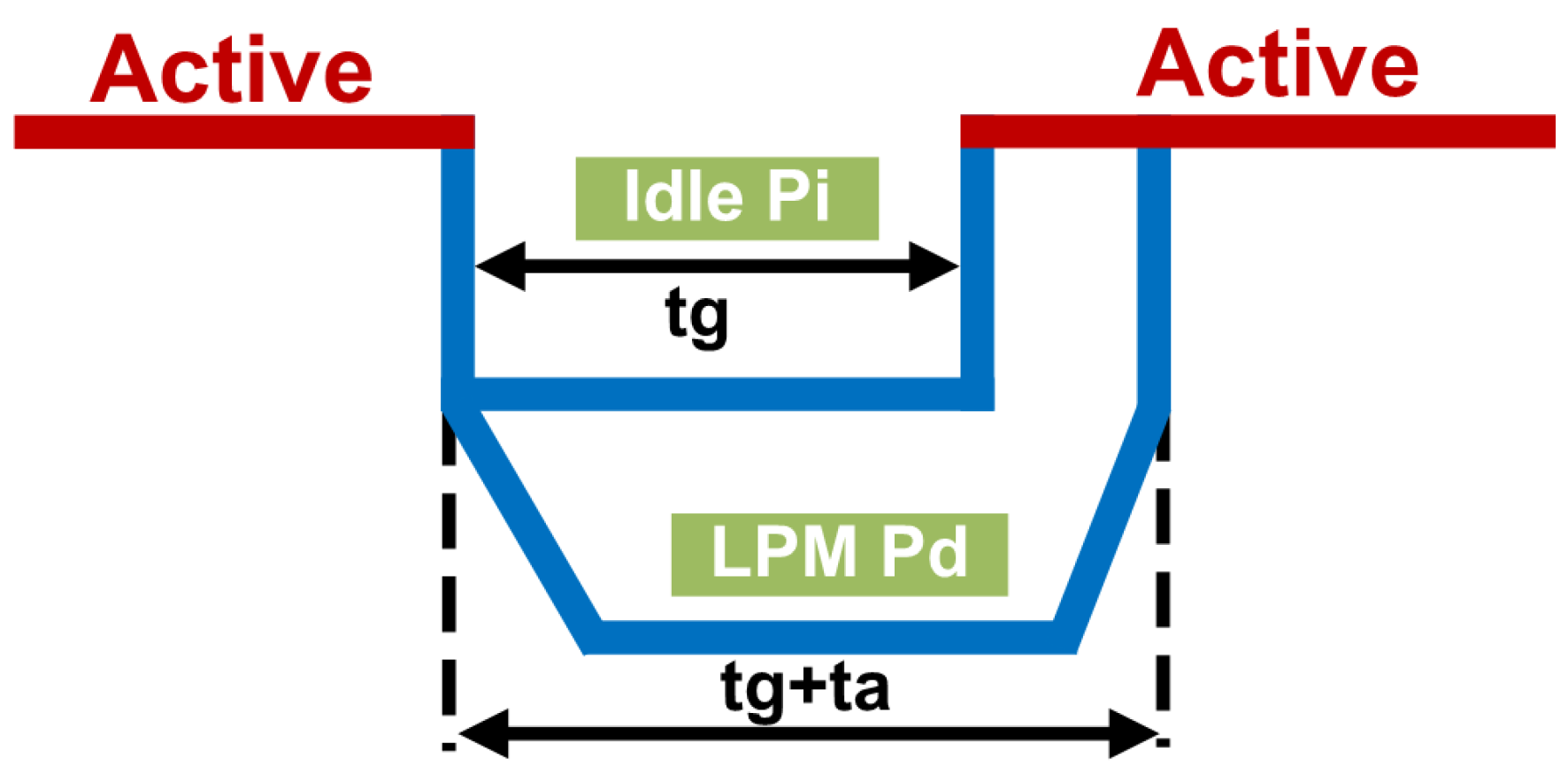

2.3. Power-Gain Analysis and Decision-Making

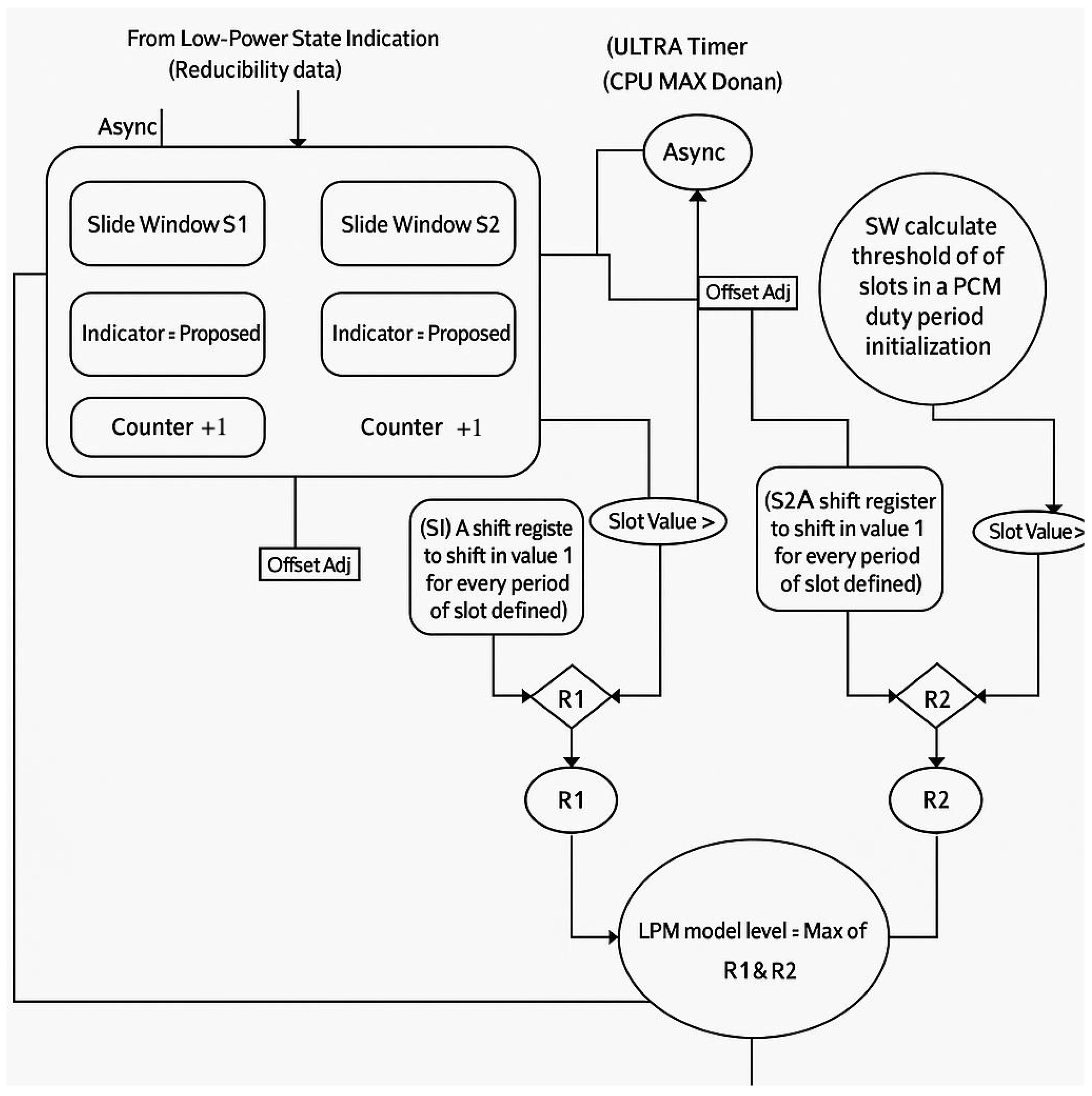

3. Implementation

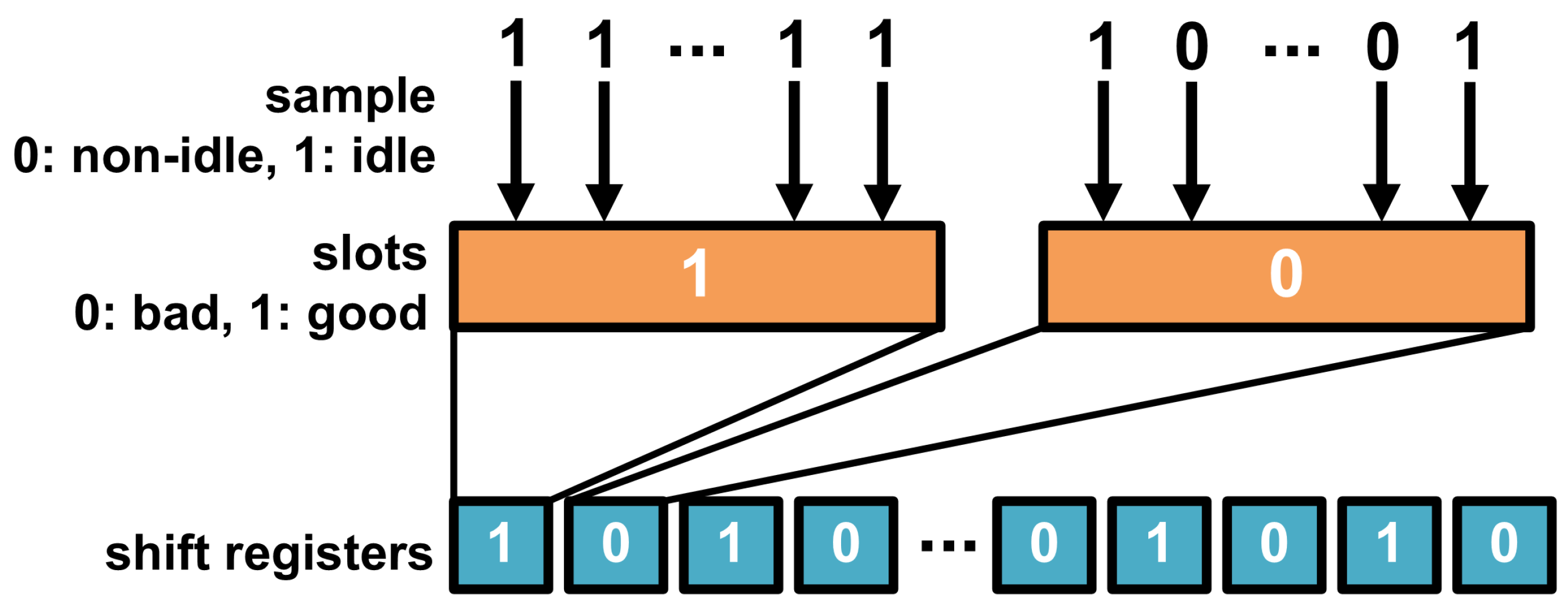

3.1. Sampling Mechanism: Detecting Idle States for Power Optimization

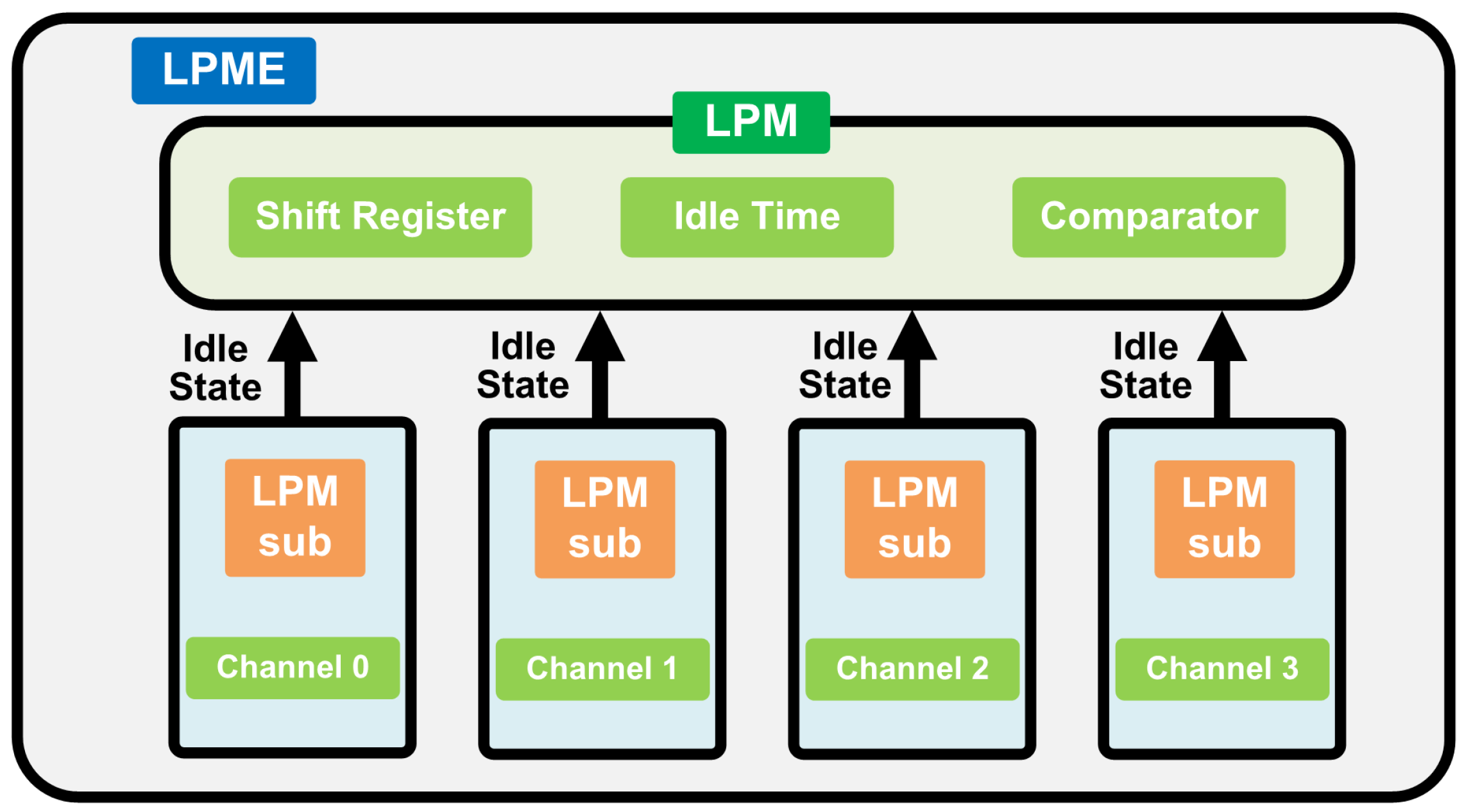

3.2. Multi-Mode Operation: Independent Decision Making in LPM

3.3. Adaptive Feedback Mechanism: Refining LPM Decisions for Optimal Power Management

3.4. Hardware Complexity

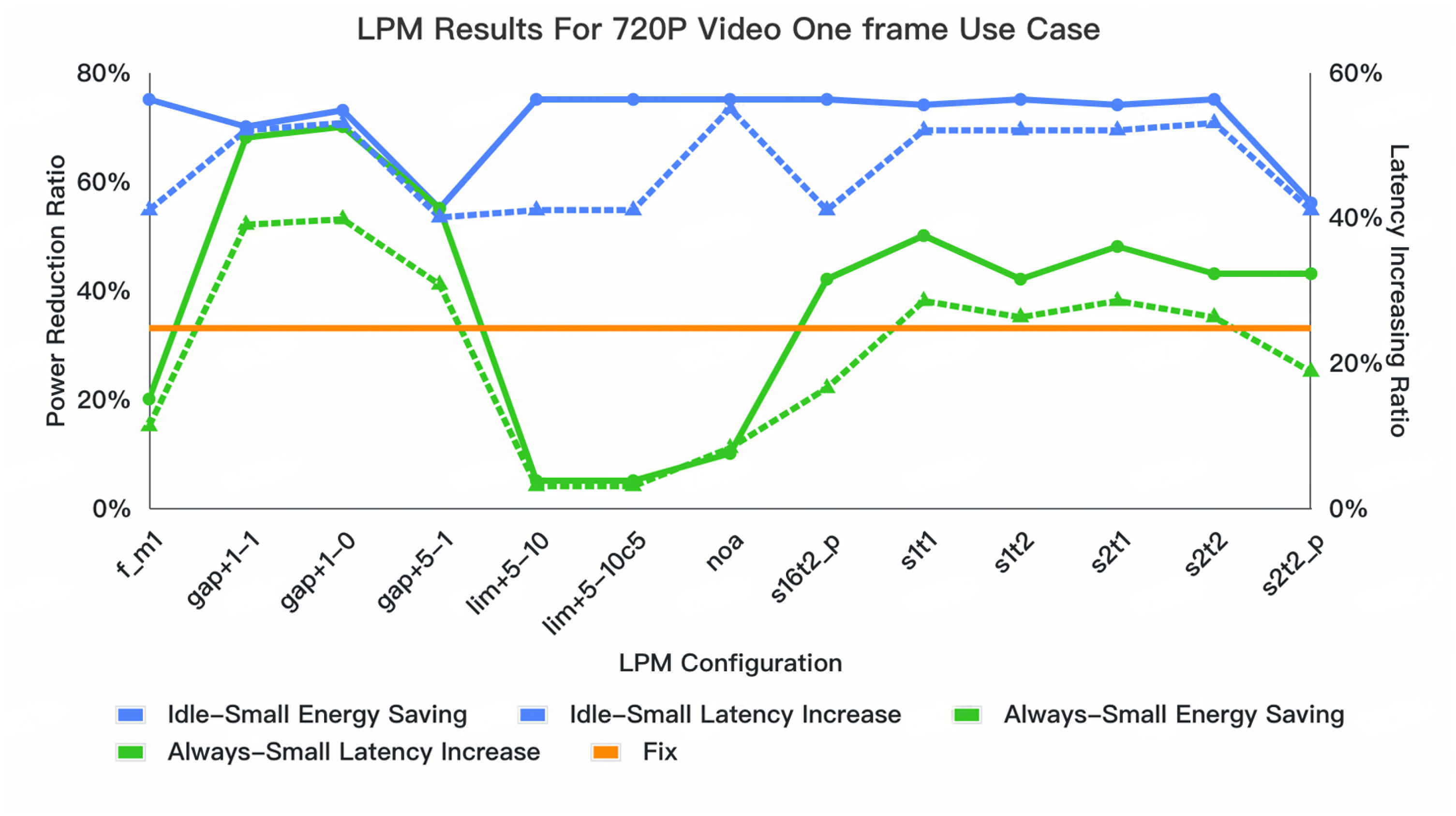

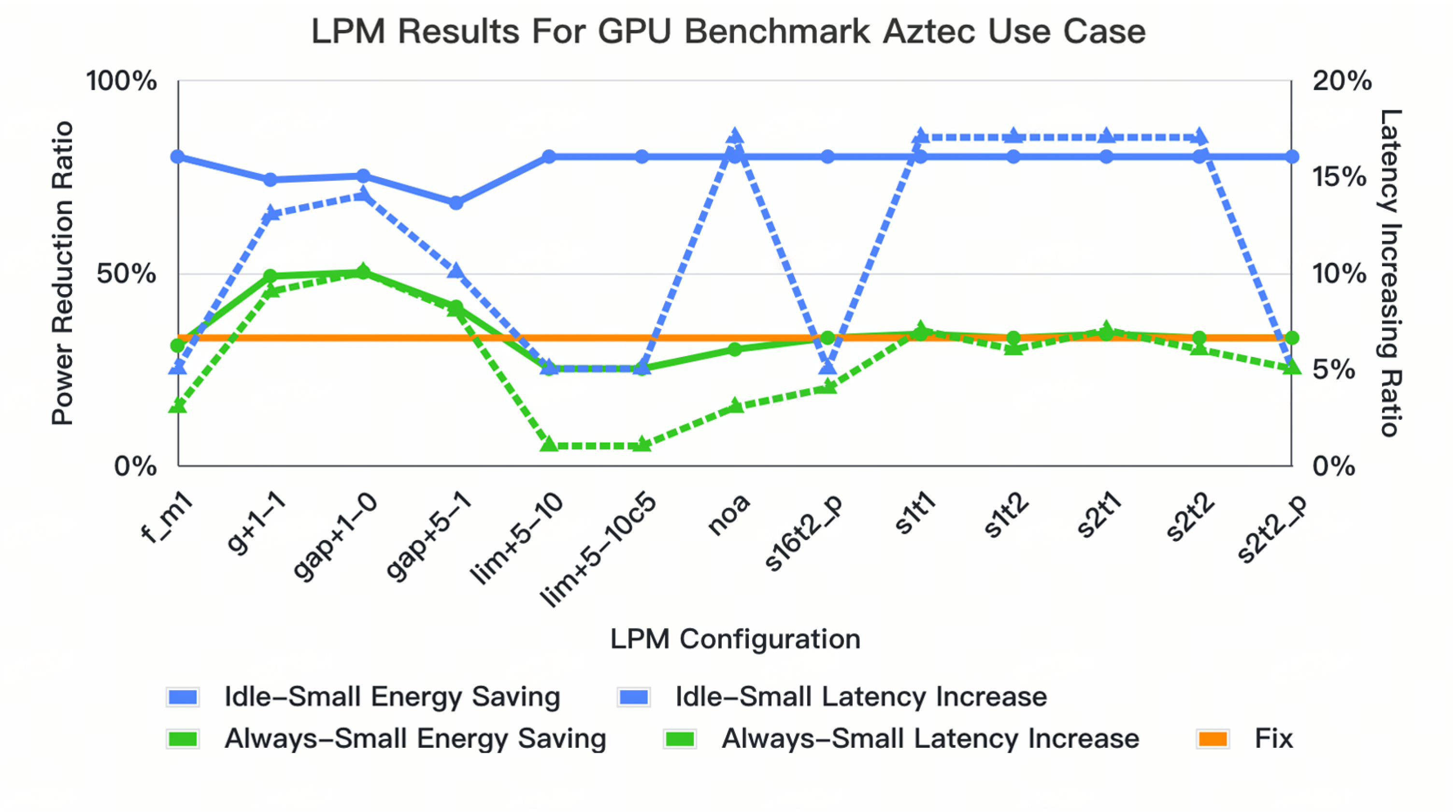

4. Evaluation Results

4.1. Advantages in Power Efficiency Across Test Scenarios

4.2. In-Depth Analysis of Sampling and Slot-Window Configurations

4.3. Dynamic Tuning and Configuration Selection for Optimal Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Metric | No LPM | LPM | Ratio |
|---|---|---|---|
| Total Transaction Time (ns) | 299,491 | 299,495 | 0.01% |
| Bandwidth (MB/s) | 6338.96 | 6338.88 | 0.01% |
| Total Power (mW) | 121.33 | 94.26 | −22% |
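The Ratio column appears to report the relative change of the LPM run against the No LPM run. Assuming that definition, (LPM - No LPM) / No LPM, the sketch below recomputes it from the table values. `relative_change` and `MEASUREMENTS` are hypothetical names used only for illustration.

```python
def relative_change(baseline: float, value: float) -> float:
    """Relative change of `value` versus `baseline`, in percent (assumed Ratio definition)."""
    return (value - baseline) / baseline * 100.0


# Rows of the table above: metric -> (No LPM, LPM).
MEASUREMENTS = {
    "Total Transaction Time (ns)": (299_491, 299_495),
    "Bandwidth (MB/s)": (6338.96, 6338.88),
    "Total Power (mW)": (121.33, 94.26),
}

if __name__ == "__main__":
    for metric, (no_lpm, lpm) in MEASUREMENTS.items():
        # Print the signed relative change with four decimals.
        print(f"{metric}: {relative_change(no_lpm, lpm):+.4f}%")
```

Under this assumption, total power changes by about −22.3%, consistent with the −22% shown, while the transaction-time and bandwidth changes come out at roughly +0.0013% and −0.0013%, both below the 0.01% magnitude reported.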

| Case | Algorithm | PR Ratio ᵃ | PE Ratio ᵇ | Idle ᶜ |
|---|---|---|---|---|
| VideoEncoder | fixed | 16.78 | 7.2 | 37,514 |
| Douyin | fixed | 15.83 | 7.33 | 29,848 |
| Perf joy yuv | fixed | 52.03 | 5.02 | 17,678 |
| VideoEncoder | always-small | 9.68 | 3.85 | 82,586 |
| Douyin | always-small | 4.21 | 3.22 | 53,681 |
| Perf joy yuv | always-small | 66.97 | 11.49 | 14,498 |
| VideoEncoder | always-big | 9.33 | 4 | 82,405 |
| Douyin | always-big | 4.05 | 3.45 | 53,324 |
| Perf joy yuv | always-big | 66.75 | 11.7 | 3860 |
| VideoEncoder | idle-small | 9.68 | 4.28 | 84,912 |
| Douyin | idle-small | 4.05 | 6.84 | 56,572 |
| Perf joy yuv | idle-small | 69.73 | 10.67 | 12,946 |
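The table above compares three test cases under four algorithms, but the footnotes a, b and c that define PR Ratio, PE Ratio and Idle are not part of this text, so the sketch below makes no judgment about which direction is better. It only normalizes each algorithm against the `fixed` row of the same case. `ROWS`, `METRIC_COLUMN` and `ratio_to_baseline` are hypothetical names chosen for illustration; the numbers are copied from the table.

```python
# Rows of the table above: (case, algorithm, PR ratio, PE ratio, idle).
ROWS = [
    ("VideoEncoder", "fixed", 16.78, 7.2, 37_514),
    ("Douyin", "fixed", 15.83, 7.33, 29_848),
    ("Perf joy yuv", "fixed", 52.03, 5.02, 17_678),
    ("VideoEncoder", "always-small", 9.68, 3.85, 82_586),
    ("Douyin", "always-small", 4.21, 3.22, 53_681),
    ("Perf joy yuv", "always-small", 66.97, 11.49, 14_498),
    ("VideoEncoder", "always-big", 9.33, 4.0, 82_405),
    ("Douyin", "always-big", 4.05, 3.45, 53_324),
    ("Perf joy yuv", "always-big", 66.75, 11.7, 3_860),
    ("VideoEncoder", "idle-small", 9.68, 4.28, 84_912),
    ("Douyin", "idle-small", 4.05, 6.84, 56_572),
    ("Perf joy yuv", "idle-small", 69.73, 10.67, 12_946),
]

# Column index of each numeric metric within a row (illustrative names).
METRIC_COLUMN = {"pr_ratio": 2, "pe_ratio": 3, "idle": 4}


def ratio_to_baseline(rows, metric, baseline="fixed"):
    """Divide each non-baseline row's metric by the baseline value of the same case."""
    col = METRIC_COLUMN[metric]
    # One baseline value per test case.
    base = {row[0]: row[col] for row in rows if row[1] == baseline}
    return {
        (row[0], row[1]): row[col] / base[row[0]]
        for row in rows
        if row[1] != baseline
    }


if __name__ == "__main__":
    for (case, algorithm), r in sorted(ratio_to_baseline(ROWS, "idle").items()):
        print(f"{case:<13} {algorithm:<13} idle vs fixed: {r:.2f}x")
```

Applied to the Idle column, the normalized values are above 1 for VideoEncoder and Douyin under all three non-fixed algorithms (about 2.26x for VideoEncoder with idle-small) and below 1 for every Perf joy yuv variant (about 0.22x with always-big).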

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; Li, Y.; Zeng, X. Low-Power-Management Engine: Driving DDR Towards Ultra-Efficient Operations. Micromachines 2025, 16, 543. https://doi.org/10.3390/mi16050543


Chicago/Turabian style: Liu, Zhuorui, Yan Li, and Xiaoyang Zeng. 2025. "Low-Power-Management Engine: Driving DDR Towards Ultra-Efficient Operations." Micromachines 16, no. 5: 543. https://doi.org/10.3390/mi16050543
