Temperature Compensation Method Based on an Improved Firefly Algorithm Optimized Backpropagation Neural Network for Micromachined Silicon Resonant Accelerometers

Abstract

1. Introduction

2. Establishing the IFA-BP Neural Network Compensation Model

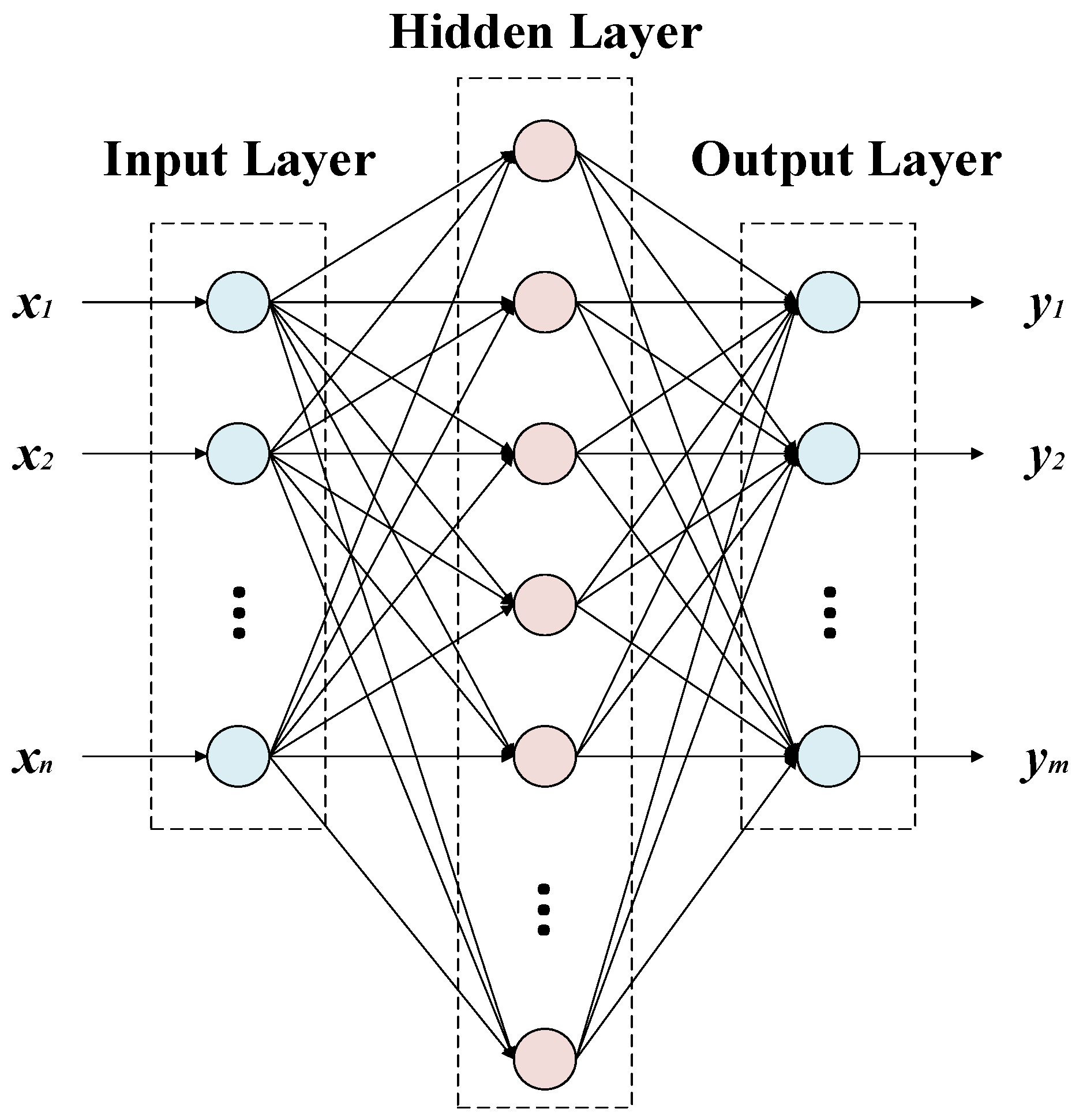

2.1. BP Neural Network
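A backpropagation (BP) network passes inputs forward through weighted layers, then propagates the output error backward to adjust every weight and threshold by gradient descent. The Python sketch below does this for a network with one sigmoid hidden layer and a linear output, trained online on the squared error. The class name `BPNetwork`, the layer sizes, the uniform [−1, 1] initialization, and the learning rate are assumptions for illustration, not the paper's settings.

```python
import math
import random


def sigmoid(z):
    """Logistic activation used in the hidden layer."""
    return 1.0 / (1.0 + math.exp(-z))


class BPNetwork:
    """Single-hidden-layer network (sigmoid hidden units, linear output) trained by backpropagation."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        # Weights and thresholds (biases) start uniform in [-1, 1] (assumed initialization).
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = rng.uniform(-1, 1)

    def forward(self, x):
        """Return the hidden activations and the scalar output for input vector x."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sum(w * hi for w, hi in zip(self.w2, h)) + self.b2
        return h, y

    def train_step(self, x, target, lr):
        """One online gradient-descent step on E = 0.5 * (y - target)^2; returns E."""
        h, y = self.forward(x)
        err = y - target  # dE/dy
        # Hidden-layer error terms, computed with the output weights before they change.
        delta_h = [err * w * hi * (1.0 - hi) for w, hi in zip(self.w2, h)]
        # Output layer update.
        self.w2 = [w - lr * err * hi for w, hi in zip(self.w2, h)]
        self.b2 -= lr * err
        # Hidden layer update.
        for j, d in enumerate(delta_h):
            self.w1[j] = [w - lr * d * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * d
        return 0.5 * err * err

    def mse(self, data):
        """Mean squared error over (x, target) pairs."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)
```

For example, `BPNetwork(1, 5)` trained for a few thousand epochs on samples of a smooth drift curve such as 0.5·t² + 0.2·t on t ∈ [−1, 1] brings the mean squared error below 0.01. Because the outcome depends on the random initial weights and thresholds, a metaheuristic such as the firefly algorithm can be used to choose them.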

2.2. Firefly Algorithm

2.2.1. Standard Firefly Algorithm
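The standard firefly algorithm moves each firefly i toward every brighter firefly j by x_i ← x_i + β0·exp(−γ·r_ij²)·(x_j − x_i) + α·(ε − 1/2), where r_ij is the distance between the two fireflies and ε is uniform on [0, 1). The sketch below implements that rule for minimization (lower cost means brighter). Scaling the random term by the width of the search range, clipping to the bounds, and the default parameter values are assumptions made for illustration.

```python
import math
import random


def firefly_minimize(f, dim, bounds, n=15, iters=50, beta0=1.0, gamma=1.0,
                     alpha=0.2, seed=0):
    """Standard firefly algorithm for minimization: lower cost = brighter firefly."""
    rng = random.Random(seed)
    lo, hi = bounds

    def clip(v):
        return min(hi, max(lo, v))

    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    costs = [f(x) for x in xs]
    for _ in range(iters):
        for i in range(n):
            for j in range(n):
                if costs[j] < costs[i]:  # firefly j is brighter, so i moves toward j
                    r2 = sum((a - b) ** 2 for a, b in zip(xs[i], xs[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attractiveness decays with distance
                    # Attraction term plus a random step of size alpha (scaled to the range).
                    xs[i] = [clip(a + beta * (b - a) + alpha * (rng.random() - 0.5) * (hi - lo))
                             for a, b in zip(xs[i], xs[j])]
                    costs[i] = f(xs[i])
    best = min(range(n), key=costs.__getitem__)
    return xs[best], costs[best]
```

In this version a firefly moves only when a brighter one exists, so the brightest firefly stays put and the population's best cost never increases. With a constant α, however, the search around the optimum stays coarse, which is a usual motivation for modifying the step size.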

2.2.2. Improved Firefly Algorithm

1. Improvement of the step size strategy

2. Improvement of the best firefly

3. Improvement of the firefly position update strategy
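The three improvements are listed here without their formulas. The sketch below illustrates two generic mechanisms of the kind the first two items name: an iteration-dependent step size that shrinks α over time, and a Metropolis acceptance rule (as used in simulated annealing) for a random trial move of the brightest firefly. The geometric schedule, the Metropolis rule, and the names `step_size`, `metropolis_accept`, and `move_best` are assumptions, not the paper's equations; the position-update improvement is not sketched.

```python
import math
import random


def step_size(t, t_max, alpha_max=0.5, alpha_min=0.01):
    """Hypothetical schedule: alpha decays geometrically from alpha_max (t = 0) to alpha_min (t = t_max)."""
    return alpha_max * (alpha_min / alpha_max) ** (t / t_max)


def metropolis_accept(cost_old, cost_new, temperature, rng):
    """Metropolis rule: always accept an improvement; accept a worse move with prob. exp(-delta / T)."""
    if cost_new <= cost_old:
        return True
    return rng.random() < math.exp(-(cost_new - cost_old) / temperature)


def move_best(x_best, f, alpha, temperature, bounds, rng):
    """Hypothetical update for the brightest firefly: a random trial step filtered by the Metropolis rule."""
    lo, hi = bounds
    trial = [min(hi, max(lo, a + alpha * (rng.random() - 0.5) * (hi - lo))) for a in x_best]
    cost_old, cost_new = f(x_best), f(trial)
    if metropolis_accept(cost_old, cost_new, temperature, rng):
        return trial, cost_new
    return list(x_best), cost_old
```

In a full optimizer one would also lower `temperature` over the iterations and keep a separate record of the best solution found, because the Metropolis rule can deliberately let the brightest firefly move to a worse position in order to escape a local minimum.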

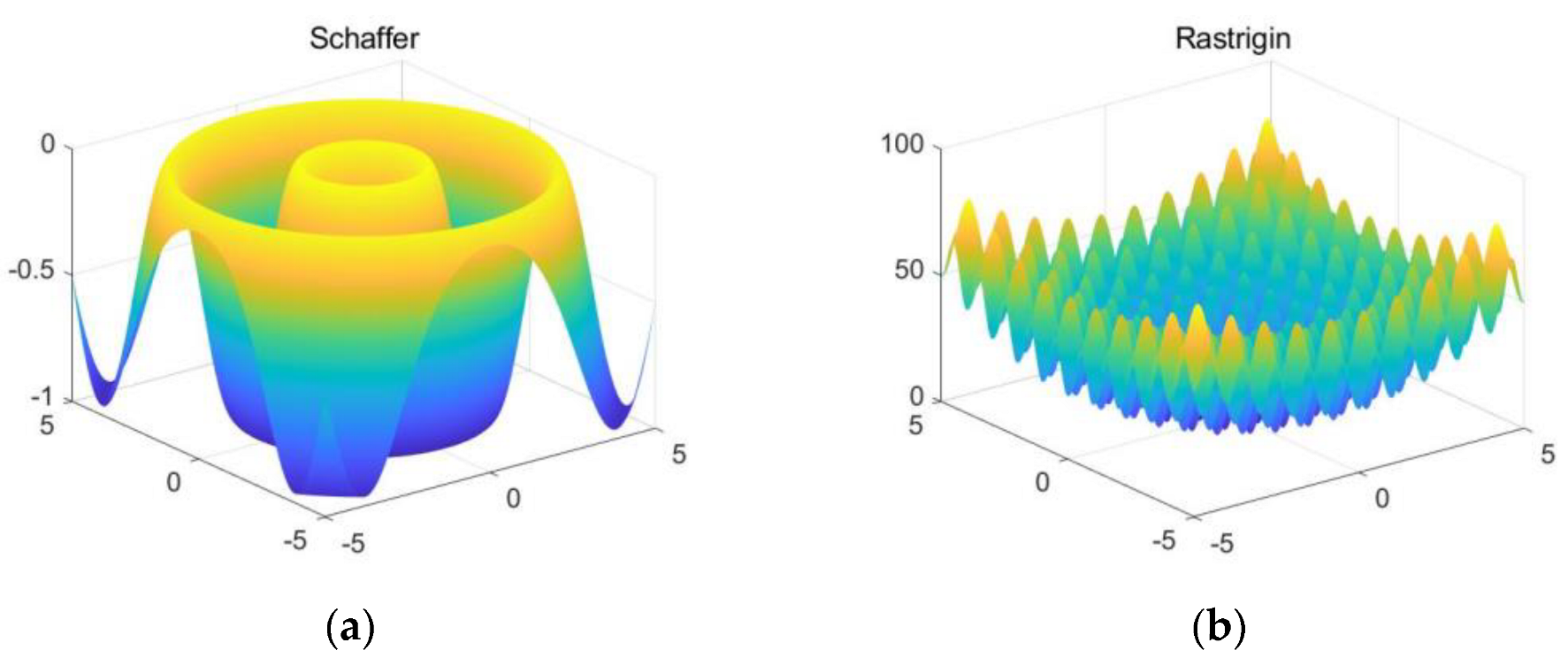

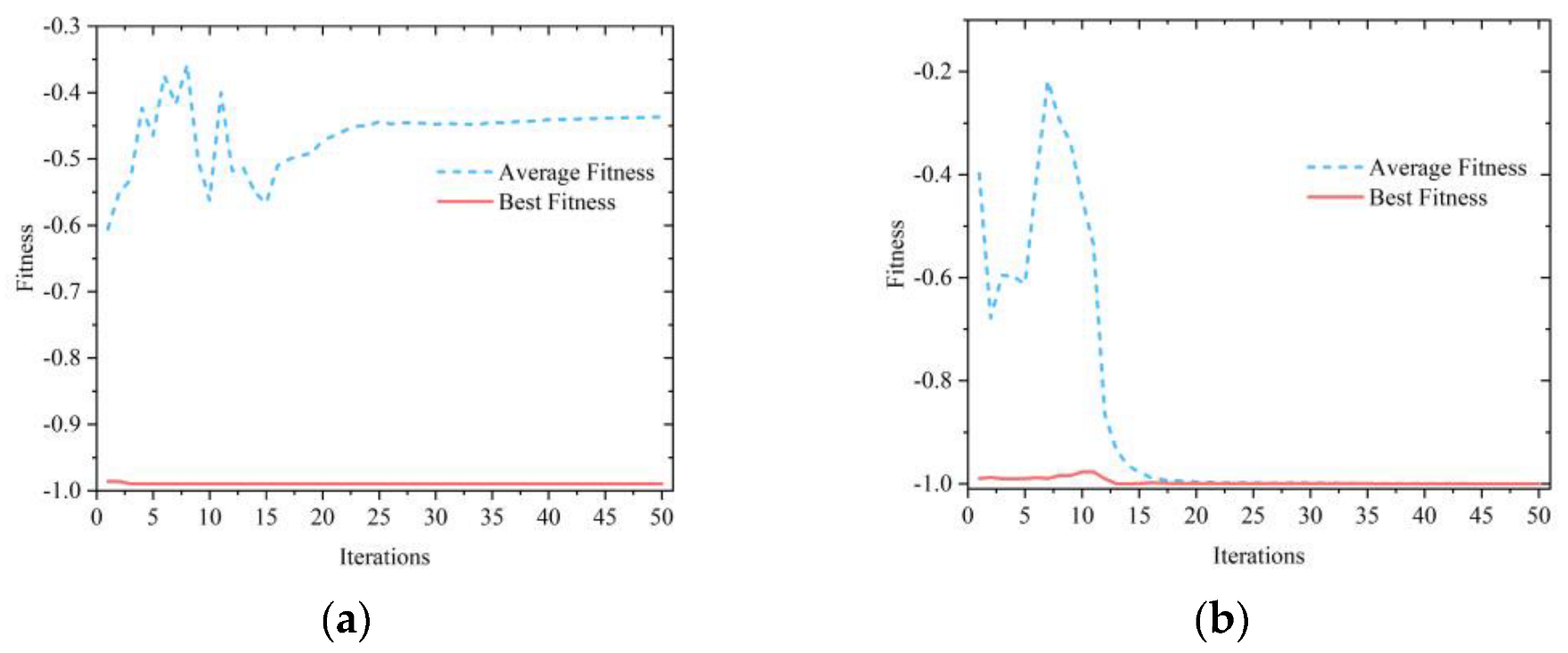

2.2.3. Simulation Analysis of Optimization Algorithm Based on Test Functions
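Test functions for comparing optimizers usually have a known global minimum, so that convergence accuracy and speed can be measured directly. The functions below are common benchmark choices (Sphere, Rastrigin, Ackley, Griewank), each with minimum value 0 at the origin; which functions and dimensions the paper actually used is an assumption left open here.

```python
import math


def sphere(x):
    """Unimodal bowl: sum of squares."""
    return sum(xi * xi for xi in x)


def rastrigin(x):
    """Highly multimodal: a cosine ripple superimposed on a bowl."""
    return 10.0 * len(x) + sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x)


def ackley(x):
    """Nearly flat outer region with a deep central funnel and many local minima."""
    n = len(x)
    s1 = sum(xi * xi for xi in x) / n
    s2 = sum(math.cos(2.0 * math.pi * xi) for xi in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e


def griewank(x):
    """Multimodal with product-coupled coordinates."""
    s = sum(xi * xi for xi in x) / 4000.0
    p = 1.0
    for i, xi in enumerate(x, start=1):
        p *= math.cos(xi / math.sqrt(i))
    return s - p + 1.0
```

Running a standard and an improved optimizer on each function for several random seeds and comparing the best cost reached per iteration gives the usual convergence comparison.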

2.3. IFA-BP Neural Network Model
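In an IFA-BP model the optimizer searches over the BP network's weights and thresholds. A common way to connect the two, assumed here rather than taken from the paper, is to flatten all weights and biases into one firefly position vector and to use the network's training mean squared error as the firefly's cost (lower cost means brighter). The helper names `n_params`, `decode`, `predict`, and `fitness`, the single sigmoid hidden layer, and the single output are illustrative assumptions.

```python
import math


def n_params(n_in, n_hidden):
    """Length of the firefly position vector: w1, b1, w2 and b2 of a single-output net."""
    return n_hidden * n_in + n_hidden + n_hidden + 1


def decode(vec, n_in, n_hidden):
    """Split a flat firefly position into hidden weights/thresholds and output weights/threshold."""
    if len(vec) != n_params(n_in, n_hidden):
        raise ValueError("position vector has the wrong length")
    end_w1 = n_hidden * n_in
    w1 = [list(vec[r * n_in:(r + 1) * n_in]) for r in range(n_hidden)]
    b1 = list(vec[end_w1:end_w1 + n_hidden])
    w2 = list(vec[end_w1 + n_hidden:end_w1 + 2 * n_hidden])
    b2 = vec[end_w1 + 2 * n_hidden]
    return w1, b1, w2, b2


def predict(params, x):
    """Forward pass: sigmoid hidden layer, linear output."""
    w1, b1, w2, b2 = params
    h = [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
         for row, b in zip(w1, b1)]
    return sum(w * hi for w, hi in zip(w2, h)) + b2


def fitness(vec, data, n_in, n_hidden):
    """Firefly cost: mean squared error of the network encoded by vec (lower = brighter)."""
    params = decode(vec, n_in, n_hidden)
    return sum((predict(params, x) - t) ** 2 for x, t in data) / len(data)
```

A firefly search would minimize `fitness` over vectors of length `n_params(n_in, n_hidden)`; in the usual metaheuristic-plus-BP scheme, the best vector is decoded into initial weights that backpropagation then refines. The inputs `x` and targets `t` are placeholders, not the paper's feature set.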

3. Experiments and Results

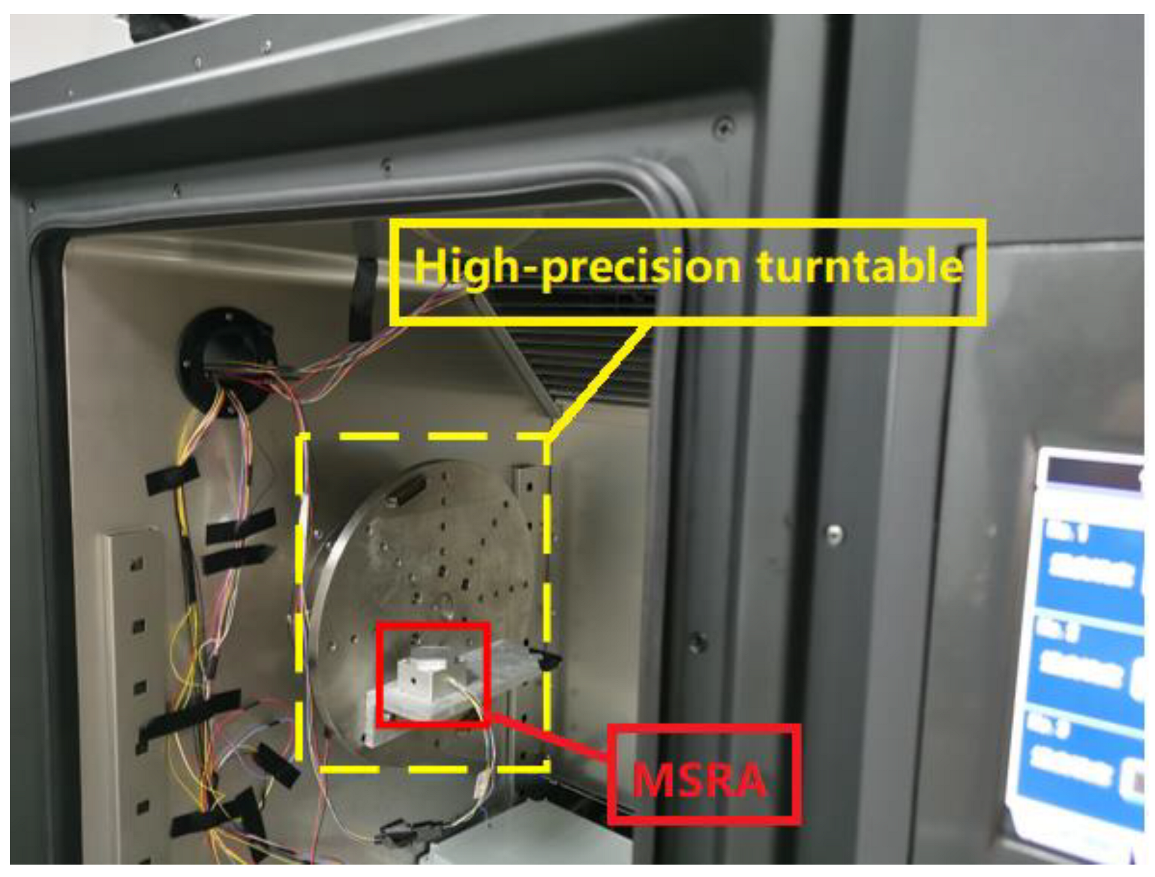

3.1. Experiments

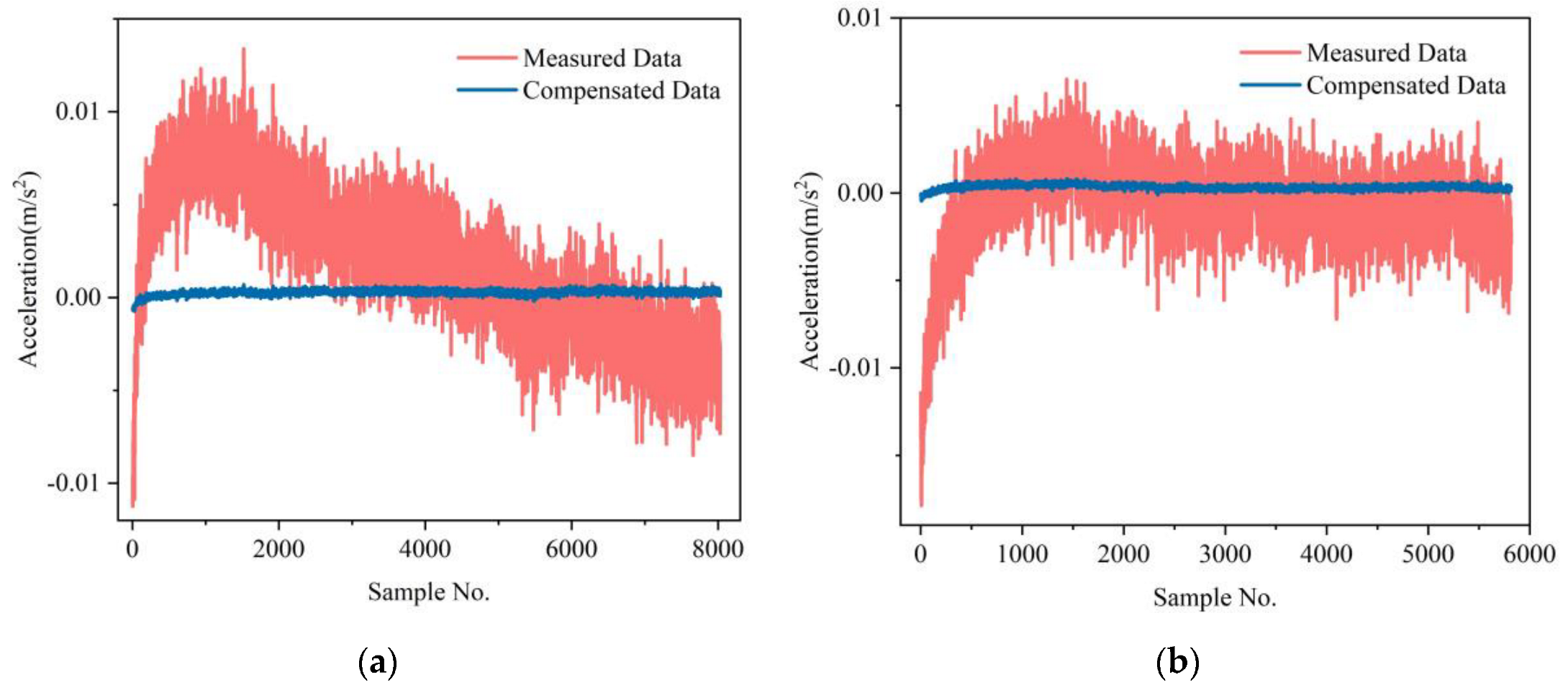

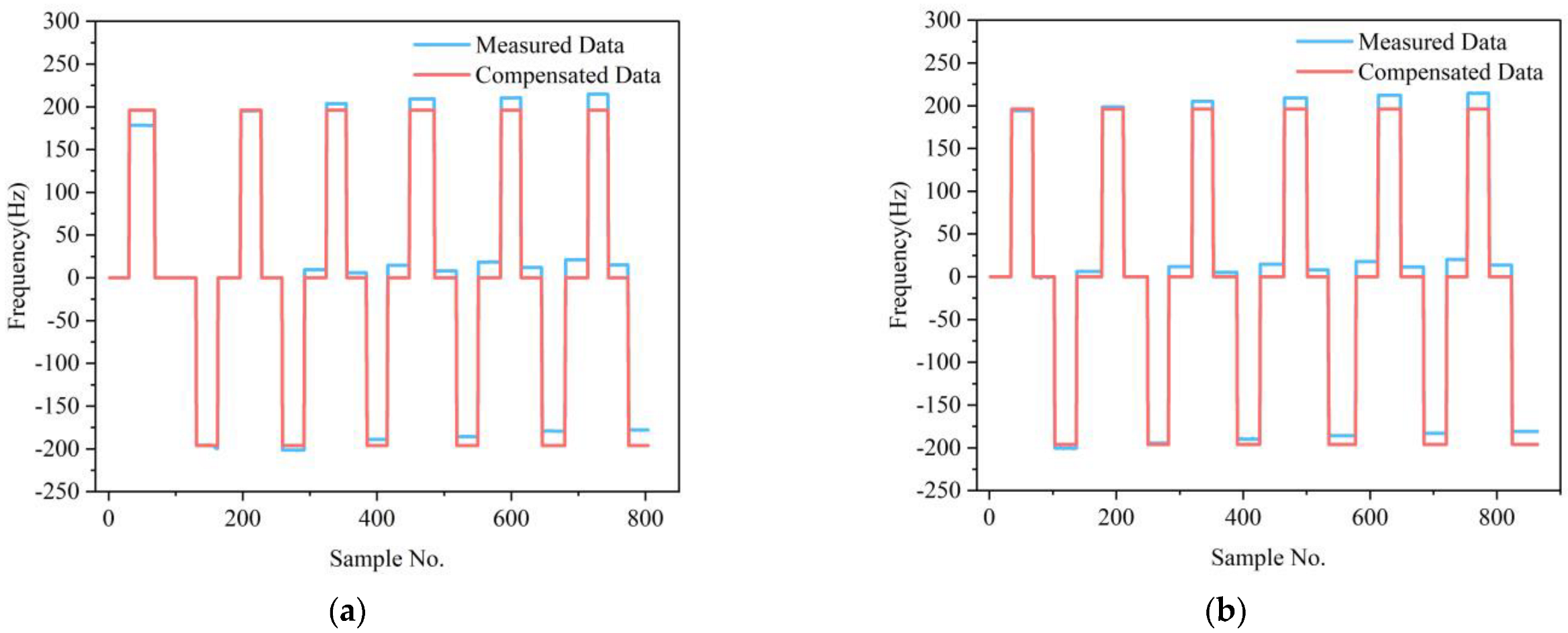

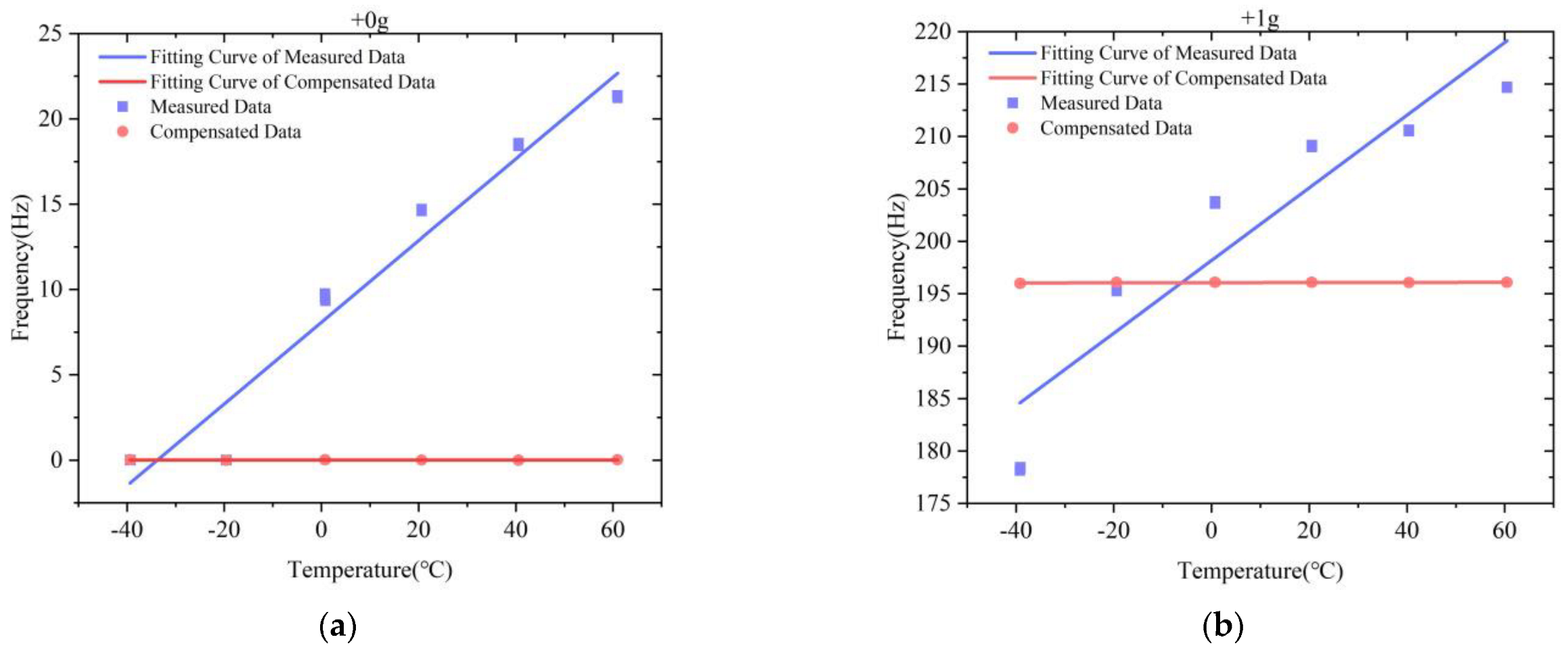

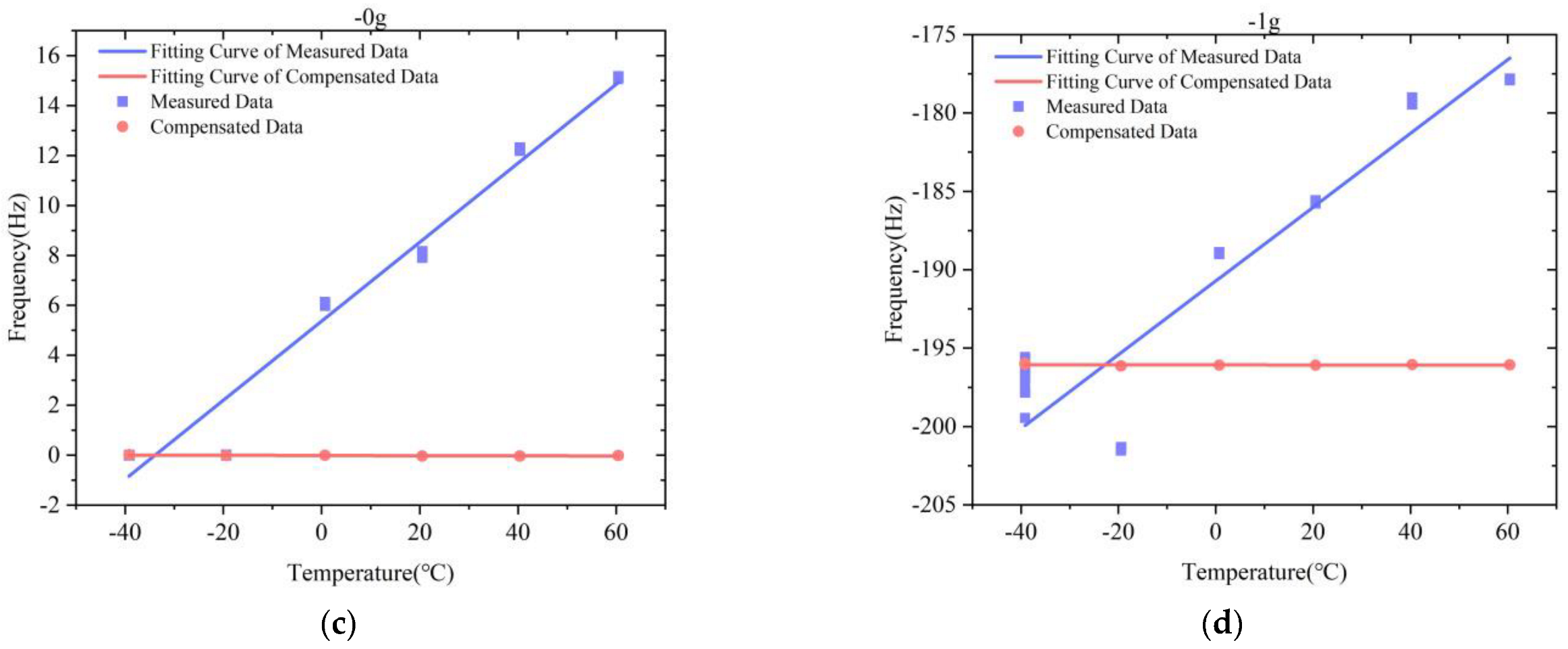

3.2. Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | Measured Data (Test Dataset 1) | Measured Data (Test Dataset 2) | FA-BP (Test Dataset 1) | FA-BP (Test Dataset 2) | IFA-BP (Test Dataset 1) | IFA-BP (Test Dataset 2) |
|---|---|---|---|---|---|---|
| Zero-bias stability after 30 min of startup () | 186.47 | 109.56 | 15.867 | 14.476 | 7.7562 | 7.4809 |
| Zero-bias stability after 20 min of startup () | 231.63 | 136.38 | 16.902 | 19.417 | 9.6671 | 10.127 |
| Zero-start zero-bias stability () | 283.05 | 227.98 | 24.848 | 25.907 | 11.868 | 12.750 |
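The zero-bias stability values in the table above can be summarized as improvement factors. The sketch below divides the measured and FA-BP values by the IFA-BP values for each row and test dataset; it uses only the tabulated numbers, and the dictionary layout and the function name `ratios` are illustrative.

```python
# Zero-bias stability from the table: (Test Dataset 1, Test Dataset 2) per method.
ZERO_BIAS = {
    "after 30 min of startup": {
        "measured": (186.47, 109.56), "FA-BP": (15.867, 14.476), "IFA-BP": (7.7562, 7.4809)},
    "after 20 min of startup": {
        "measured": (231.63, 136.38), "FA-BP": (16.902, 19.417), "IFA-BP": (9.6671, 10.127)},
    "zero-start": {
        "measured": (283.05, 227.98), "FA-BP": (24.848, 25.907), "IFA-BP": (11.868, 12.750)},
}


def ratios(table, numerator, denominator):
    """Per row and dataset: numerator / denominator, i.e. how many times smaller the denominator is."""
    return {
        row: tuple(n / d for n, d in zip(cols[numerator], cols[denominator]))
        for row, cols in table.items()
    }


if __name__ == "__main__":
    for base in ("measured", "FA-BP"):
        for row, (r1, r2) in ratios(ZERO_BIAS, base, "IFA-BP").items():
            print(f"{base} / IFA-BP, {row}: {r1:.2f} (dataset 1), {r2:.2f} (dataset 2)")
```

The FA-BP to IFA-BP ratios fall between about 1.75 and 2.09, so on both test datasets IFA-BP leaves roughly half the residual zero-bias instability of FA-BP; relative to the measured data, the reduction is between about 13.5 and 24 times.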

| | Measured Data (Test Dataset 1) | Measured Data (Test Dataset 2) | FA-BP (Test Dataset 1) | FA-BP (Test Dataset 2) | IFA-BP (Test Dataset 1) | IFA-BP (Test Dataset 2) |
|---|---|---|---|---|---|---|
| Variation of the scale factor over the full temperature range () | 20,600 | 2153.2 | 578.77 | 107.59 | 214.86 | 30.806 |
| Variation of the bias over the full temperature range () | 39,152 | 32,873 | 89.431 | 103.57 | 32.967 | 36.556 |
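For the full-temperature variations in the second table, a percentage reduction relative to the measured data is a convenient summary. The helper below is a sketch: the numbers are copied from the table, while the data layout and the names `reduction_pct` and `reductions` are illustrative.

```python
# Full-temperature variations from the table: (Test Dataset 1, Test Dataset 2) per method.
FULL_TEMP = {
    "scale factor variation": {
        "measured": (20600, 2153.2), "FA-BP": (578.77, 107.59), "IFA-BP": (214.86, 30.806)},
    "bias variation": {
        "measured": (39152, 32873), "FA-BP": (89.431, 103.57), "IFA-BP": (32.967, 36.556)},
}


def reduction_pct(measured, compensated):
    """Percentage by which compensation reduces a variation relative to the raw data."""
    return 100.0 * (1.0 - compensated / measured)


def reductions(table, method):
    """Per row and dataset: percentage reduction achieved by `method`."""
    return {
        row: tuple(reduction_pct(m, c) for m, c in zip(cols["measured"], cols[method]))
        for row, cols in table.items()
    }


if __name__ == "__main__":
    for method in ("FA-BP", "IFA-BP"):
        for row, pcts in reductions(FULL_TEMP, method).items():
            print(f"{method:7s} {row}: " + ", ".join(f"{p:.2f}%" for p in pcts))
```

By this measure IFA-BP reduces the scale-factor variation by about 99.0% (dataset 1) and 98.6% (dataset 2), and the bias variation by more than 99.8% on both datasets, in each case exceeding the FA-BP reduction.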

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, L.; Jiang, L.; Zhao, L.; Ding, X. Temperature Compensation Method Based on an Improved Firefly Algorithm Optimized Backpropagation Neural Network for Micromachined Silicon Resonant Accelerometers. Micromachines 2022, 13, 1054. https://doi.org/10.3390/mi13071054
