Embedded Machine Learning Using a Multi-Thread Algorithm on a Raspberry Pi Platform to Improve Prosthetic Hand Performance

Abstract

1. Introduction

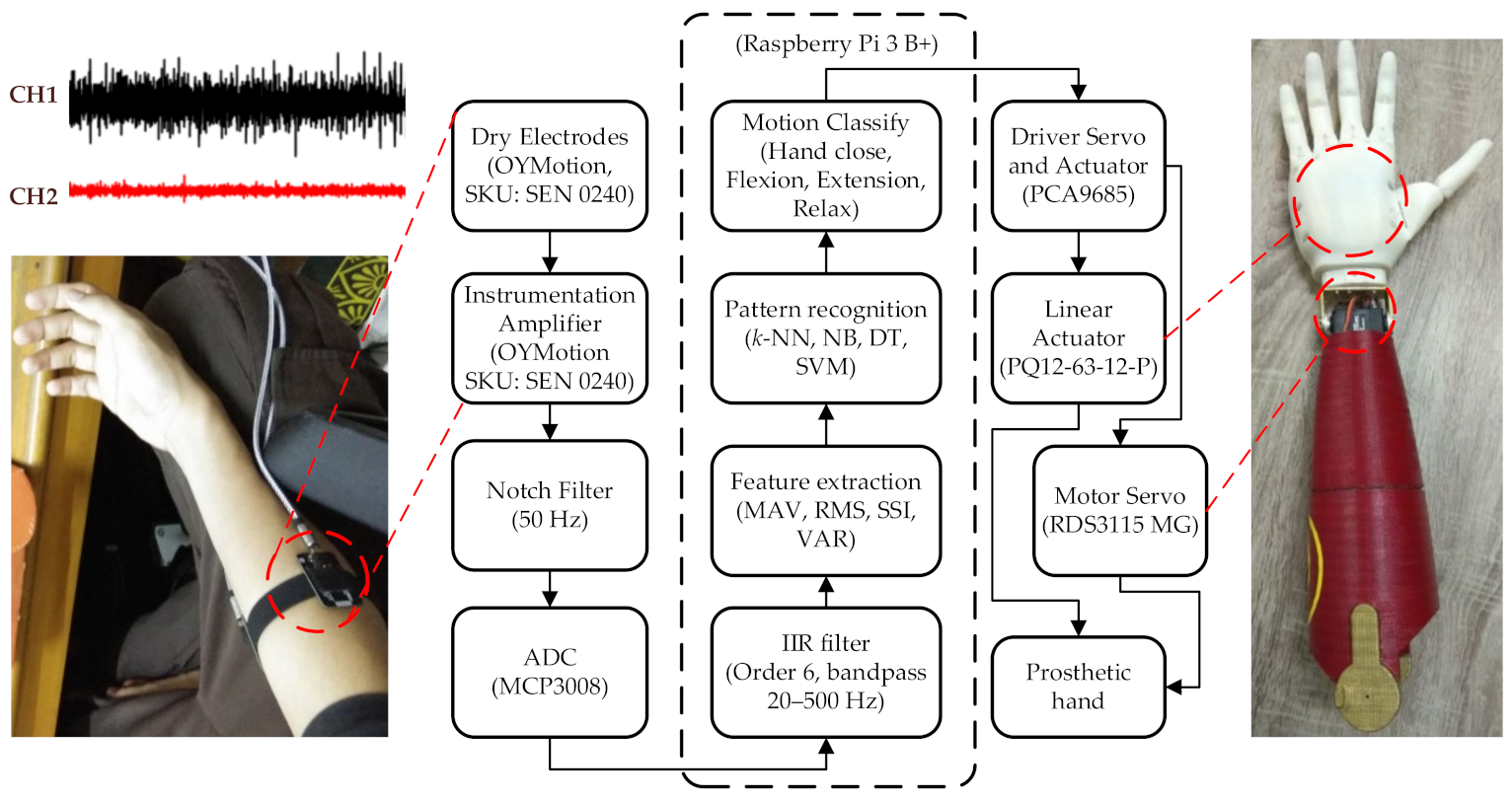

2. Materials and Methods

2.1. Minimum System

2.2. Proposed Method

2.3. Data Collection

3. Results

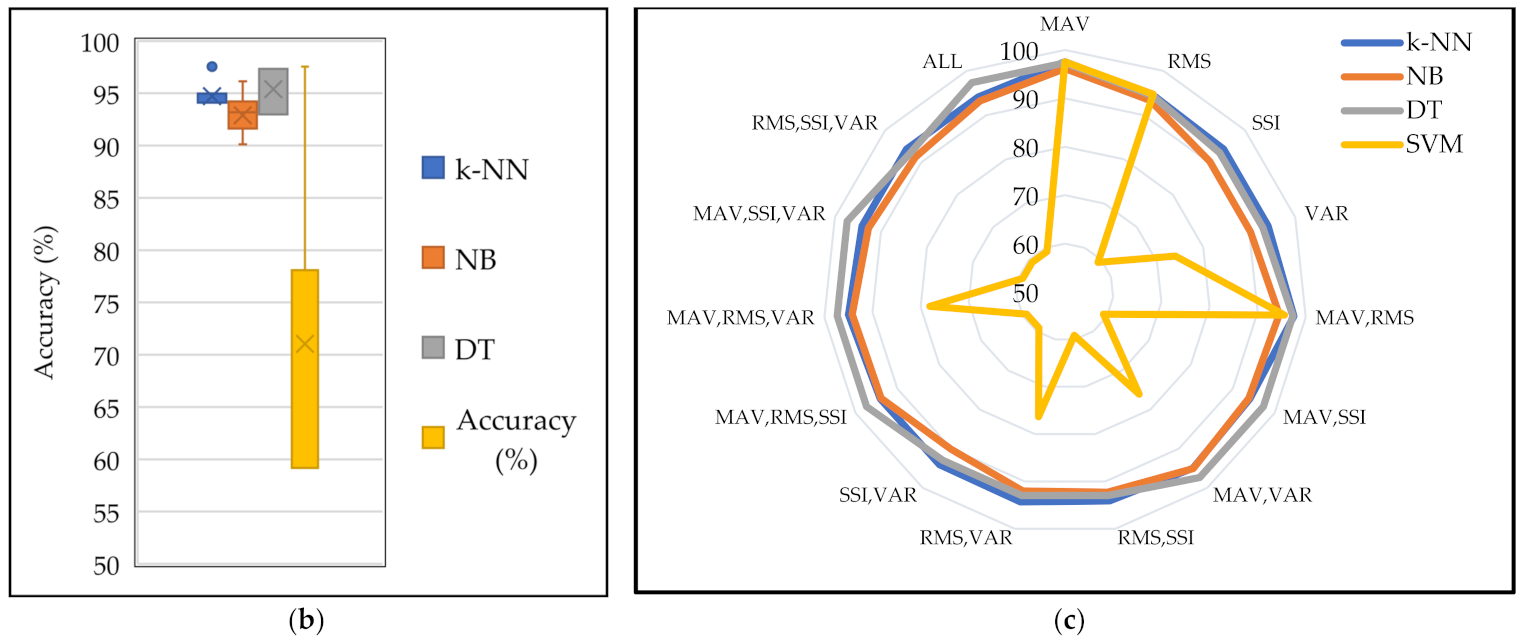

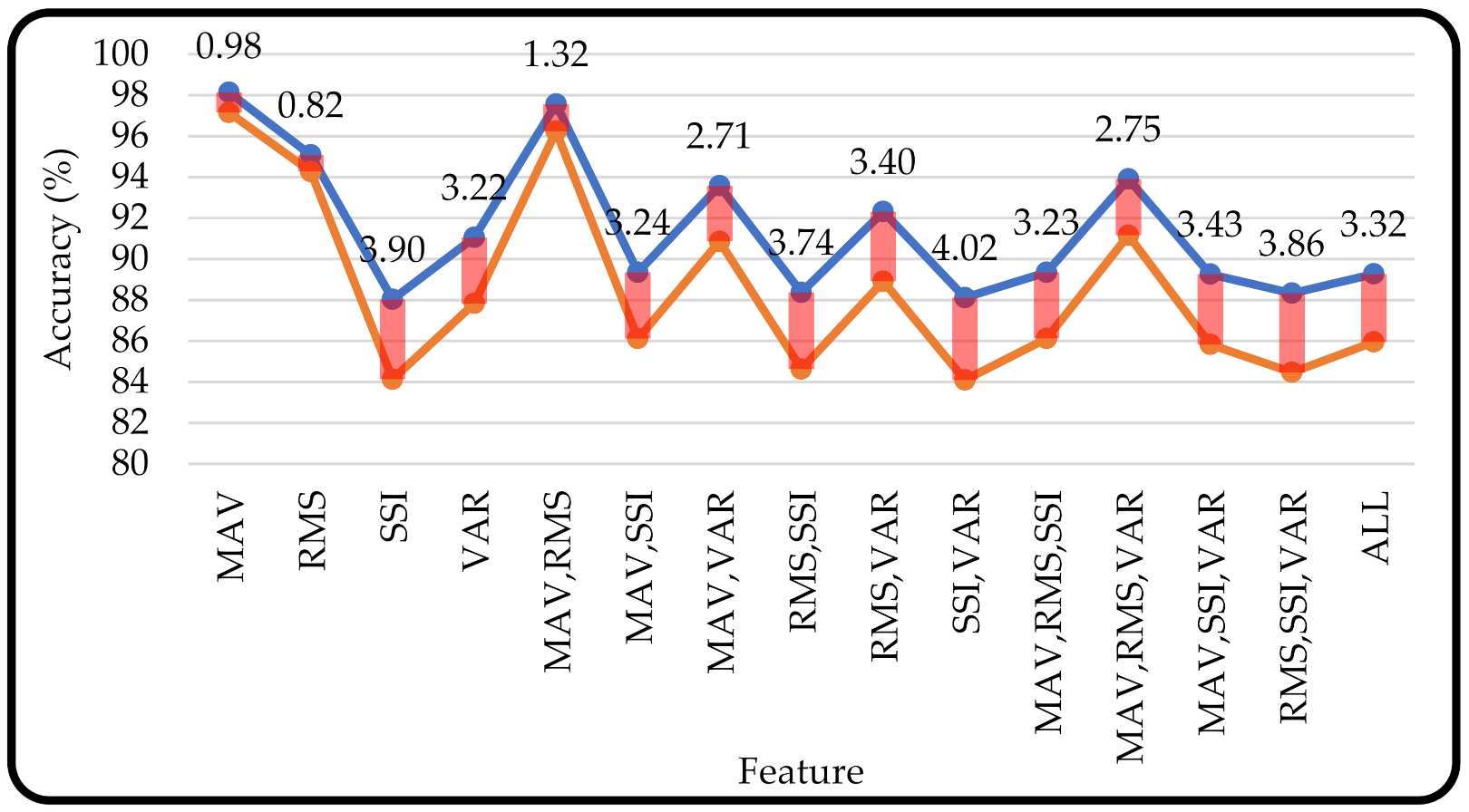

3.1. Machine Learning Accuracy

3.2. Accuracy for Each Motion

3.3. Confusion Matrices

3.4. Computation Time

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Kumar, D.K.; Jelfs, B.; Sui, X.; Arjunan, S.P. Prosthetic hand control: A multidisciplinary review to identify strengths, shortcomings, and the future. Biomed. Signal Process. Control 2019, 53, 101588.

- Noce, E.; Bellingegni, A.D.; Ciancio, A.L.; Sacchetti, R.; Davalli, A.; Guglielmelli, E.; Zollo, L. EMG and ENG-envelope pattern recognition for prosthetic hand control. J. Neurosci. Methods 2019, 311, 38–46.

- Vujaklija, I.; Farina, D.; Aszmann, O. New developments in prosthetic arm systems. Orthop. Res. Rev. 2016, 8, 31–39.

- Lenzi, T.; Lipsey, J.; Sensinger, J.W. The RIC arm: A small anthropomorphic transhumeral prosthesis. IEEE/ASME Trans. Mechatron. 2016, 21, 2660–2671.

- Van Der Niet, O.; van der Sluis, C.K. Functionality of i-LIMB and i-LIMB pulse hands: Case report. J. Rehabil. Res. Dev. 2013, 50, 1123.

- Medynski, C.; Rattray, B. Bebionic prosthetic design. In Proceedings of the Myoelectric Symposium, UNB, Fredericton, NB, Canada, 14–19 August 2011.

- Schulz, S. First experiences with the vincent hand. In Proceedings of the Myoelectric Symposium, UNB, Fredericton, NB, Canada, 14–19 August 2011.

- Várszegi, K. Detecting Hand Motions from EEG Recordings; Budapest University of Technology and Economics: Budapest, Hungary, 2015.

- Ahmed, M.R.; Halder, R.; Uddin, M.; Mondal, P.C.; Karmaker, A.K. Prosthetic arm control using electromyography (EMG) signal. In Proceedings of the International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE), Gazipur, Bangladesh, 22–24 November 2018; pp. 1–4.

- Pratomo, M.R.; Irianto, B.G.; Triwiyanto, T.; Utomo, B.; Setioningsih, E.D.; Titisari, D. Prosthetic hand with 2-dimensional motion based EOG signal control. In Proceedings of the International Symposium on Materials and Electrical Engineering 2019 (ISMEE 2019), Bandung, Indonesia, 17 July 2019; Volume 850.

- Roy, R.; Kianoush, N. A low-cost Raspberry PI-based vision system for upper-limb prosthetics. In Proceedings of the 27th IEEE International Conference on Electronics, Circuits and Systems (ICECS), Glasgow, UK, 23–25 November 2020; pp. 20–23.

- Triwiyanto, T.; Rahmawati, T.; Pawana, I.P.A.; Lamidi, L. State-of-the-art method in prosthetic hand design: A review. J. Biomim. Biomater. Biomed. Eng. 2021, 50, 15–24.

- Triwiyanto, T.; Pawana, I.P.A.; Hamzah, T.; Luthfiyah, S. Low-cost and open-source anthropomorphic prosthetics hand using linear actuators. Telkomnika 2020, 18, 953–960.

- Kuiken, T.A.; Miller, L.A.; Turner, K.; Hargrove, L.J. A comparison of pattern recognition control and direct control of a multiple degree-of-freedom transradial prosthesis. IEEE J. Transl. Eng. Health Med. 2016, 4, 1–8.

- Ma, J.; Thakor, N.V.; Matsuno, F. Hand and wrist movement control of myoelectric prosthesis based on synergy. IEEE Trans. Hum.-Mach. Syst. 2015, 45, 74–83.

- Jaramillo, A.G.; Benalcazar, M.E. Real-time hand gesture recognition with EMG using machine learning. In Proceedings of the IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 16–20 October 2017; pp. 1–5.

- Geethanjali, P.; Ray, K.K. A low-cost real-time research platform for EMG pattern recognition-based prosthetic hand. IEEE/ASME Trans. Mechatron. 2015, 20, 1948–1955.

- Pancholi, S.; Joshi, A.M. Sensor systems electromyography-based hand gesture recognition system for upper limb amputees. IEEE Sens. Lett. 2019, 3, 1–4.

- Chen, X.; Wang, Z.J. Biomedical signal processing and control pattern recognition of number gestures based on a wireless surface EMG system. Biomed. Signal Process. Control 2013, 8, 184–192.

- Benatti, S.; Casamassima, F.; Milosevic, B.; Farella, E.; Schönle, P.; Fateh, S.; Burger, T.; Huang, Q.; Benini, L. A Versatile Embedded Platform for EMG Acquisition and Gesture Recognition. IEEE Trans. Biomed. Circuits Syst. 2015, 9, 620–630.

- Lee, S.; Kim, M.; Kang, T.; Park, J.; Choi, Y. Knit band sensor for myoelectric control of surface EMG-based prosthetic hand. IEEE Sens. J. 2018, 18, 8578–8586.

- Raurale, S.; McAllister, J.; del Rincon, J.M. EMG wrist-hand motion recognition system for real-time embedded platform. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1523–1527.

- Tavakoli, M.; Benussi, C.; Lourenco, J.L. Single channel surface EMG control of advanced prosthetic hands: A simple, low cost and efficient approach. Expert Syst. Appl. 2017, 79, 322–332.

- Gaetani, F.; Primiceri, P.; Zappatore, G.A.; Visconti, P. Hardware design and software development of a motion control and driving system for transradial prosthesis based on a wireless myoelectric armband. IET Sci. Meas. Technol. 2019, 13, 354–362.

- Chen, X.; Ke, A.; Ma, X.; He, J. SoC-based architecture for robotic prosthetics control using surface electromyography. In Proceedings of the 8th International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 27–28 August 2016; pp. 134–137.

- De Oliveira de Souza, J.O.; Bloedow, M.D.; Rubo, F.C.; de Figueiredo, R.M.; Pessin, G.; Rigo, S.J. Investigation of different approaches to real-time control of prosthetic hands with electromyography signals. IEEE Sens. J. 2021, 21, 20674–20684.

- Martini, F.H.; Nath, J.L.; Bartholomew, E.F. Fundamentals of Anatomy and Physiology, 9th ed.; Pearson Education: Boston, MA, USA, 2012; ISBN 9780321709332.

- Triwiyanto, T.; Wahyunggoro, O.; Nugroho, H.A.; Herianto, H. An investigation into time domain features of surface electromyography to estimate the elbow joint angle. Adv. Electr. Electron. Eng. 2017, 15, 448–458.

- Phinyomark, A.; Scheme, E. A feature extraction issue for myoelectric control based on wearable EMG sensors. In Proceedings of the IEEE Sensors Applications Symposium (SAS), Seoul, Korea, 12–14 March 2018; pp. 1–6.

- Chu, J.U.; Moon, I.; Mun, M.S. A real-time EMG pattern recognition system based on linear-nonlinear feature projection for a multifunction myoelectric hand. IEEE Trans. Biomed. Eng. 2006, 53, 2232–2239.

- Smith, L.H.; Hargrove, L.J.; Lock, B.A.; Kuiken, T.A. Determining the optimal window length for pattern recognition-based myoelectric control: Balancing the competing effects of classification error and controller delay. IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 186–192.

- Smith, S.W. Digital Signal Processing; California Technical Publishing: San Diego, CA, USA, 1999; ISBN 0-9660176-6-8.

- Kaur, R. A new greedy search method for the design of digital IIR filter. J. King Saud Univ.-Comput. Inf. Sci. 2015, 27, 278–287.

- Kumar, V. A new proposal for time domain features of EMG signal on individual basis over conventional space. In Proceedings of the 4th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 21–23 September 2017; pp. 531–535.

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431.

- Shi, W.T.; Lyu, Z.J.; Tang, S.T.; Chia, T.L.; Yang, C.Y. A bionic hand controlled by hand gesture recognition based on surface EMG signals: A preliminary study. Biocybern. Biomed. Eng. 2018, 38, 126–135.

- Yochum, M.; Bakir, T.; Binczak, S.; Lepers, R. Multi axis representation and Euclidean distance of muscle fatigue indexes during evoked contractions. In Proceedings of the 2014 IEEE Region 10 Symposium, Kuala Lumpur, Malaysia, 14–16 April 2014; pp. 446–449.

- Pati, S.; Joshi, D.; Mishra, A. Locomotion classification using EMG signal. In Proceedings of the International Conference on Information and Emerging Technologies, Karachi, Pakistan, 14–16 June 2010; pp. 1–6.

- Paul, Y.; Goyal, V.; Jaswal, R.A. Comparative analysis between SVM & KNN classifier for EMG signal classification on elementary time domain features. In Proceedings of the 4th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 21–23 September 2017; pp. 169–175.

- Mitchell, T.M. Machine Learning; McGraw-Hill Science: New York, NY, USA, 2009.

- Konrad, P. The ABC of EMG: A practical introduction to kinesiological electromyography. In A Practical Introduction to Kinesiological Electromyography; Noraxon Inc.: Scottsdale, AZ, USA, 2005; pp. 1–60. ISBN 0977162214.

- Basmajian, J.V.; de Luca, C.J. Chapter 8. Muscle fatigue and time-dependent parameters of the surface EMG signal. In Muscles Alive: Their Functions Revealed by Electromyography; Williams & Wilkins: Baltimore, MD, USA, 1985; pp. 201–222. ISBN 0471675806.

- Phinyomark, A.; Quaine, F.; Charbonnier, S.; Serviere, C. EMG feature evaluation for improving myoelectric pattern recognition robustness. Expert Syst. Appl. 2013, 40, 4832–4840.

- Triwiyanto, T.; Wahyunggoro, O.; Nugroho, H.A.; Herianto, H. Muscle fatigue compensation of the electromyography signal for elbow joint angle estimation using adaptive feature. Comput. Electr. Eng. 2018, 71, 284–293.

| System Parameter | Ref. [19] | Ref. [20] | Ref. [21] | Ref. [18] | Proposed Study |
|---|---|---|---|---|---|
| Implementation | Computer | Embedded | Computer | Embedded | Embedded |
| Training stage | Offline | Offline | Offline | Online | Offline |
| Testing stage | Online | Online | Online | Online | Online |
| Machine learning | SVM | SVM | ANN | LDA | DT |
| Number of motions | 9 | 7 | 6 | 6 | 4 |
| Number of channels | 4 | 8 | 8 | 8 | 2 |
| Accuracy | 90% | 90% | 93.20% | 94.14% | 98.13% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Triwiyanto, T.; Caesarendra, W.; Purnomo, M.H.; Sułowicz, M.; Wisana, I.D.G.H.; Titisari, D.; Lamidi, L.; Rismayani, R. Embedded Machine Learning Using a Multi-Thread Algorithm on a Raspberry Pi Platform to Improve Prosthetic Hand Performance. Micromachines 2022, 13, 191. https://doi.org/10.3390/mi13020191
