Combined Distributed Shared-Buffered and Diagonally-Linked Mesh Topology for High-Performance Interconnect

Abstract

1. Introduction

- We elaborate on the initial R-NoC concept, focusing in particular on the freedom R-NoC offers in its internal topology, in the number of ports, and in supporting concentrated networks. We propose a construction algorithm that takes design parameters as inputs and generates deadlock-free router topologies.

- We present the synchronous-elastic implementation of R-NoC in detail and thoroughly evaluate its network-level power consumption under different traffic patterns. We show that R-NoC consumes less power than typical input-buffered routers, which confirms our claim that the performance improvement of R-NoC does not come at the expense of power.

- We investigate how network performance scales as the number of cores increases from 4 to 64, for various traffic patterns. The experimental results show that R-NoC scales better than typical input-buffered routers.

- We propose a low-area-overhead router, named R-NoC-D, dedicated to DMesh networks. We show that R-NoC-D improves performance over its mesh counterparts by up to 24% for uniform traffic patterns and by up to 59% for non-uniform traffic patterns. We assess the implementation of R-NoC-D and observe that these performance improvements come at a lower area overhead than that of a DMesh implementation of a typical input-buffered router.

2. Related Works

2.1. Conventional Packet-Switching Routers

2.2. Router-Less, Buffer-Less and Shared-Buffer Routers

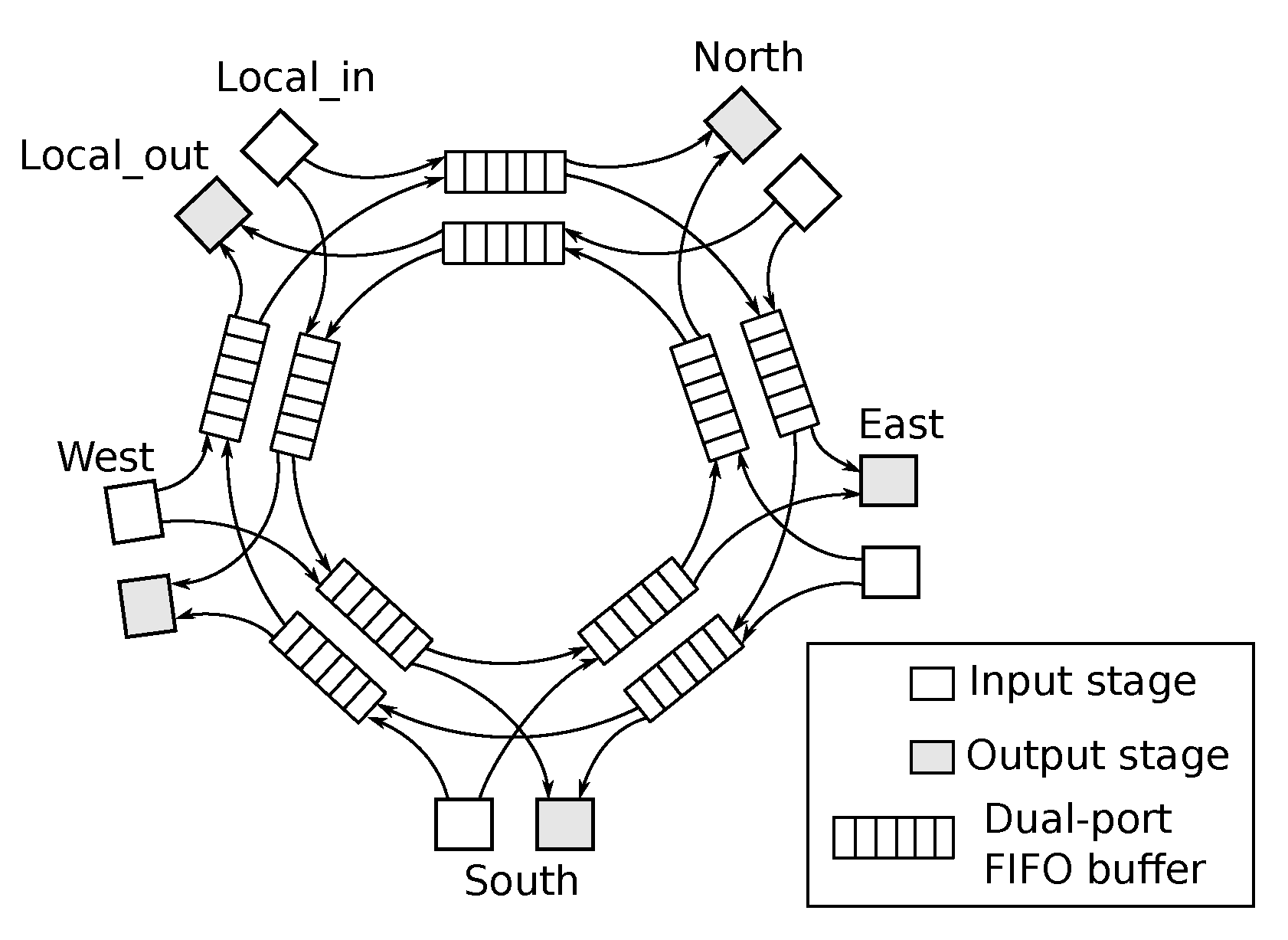

3. R-NoC Router for Resource Sharing

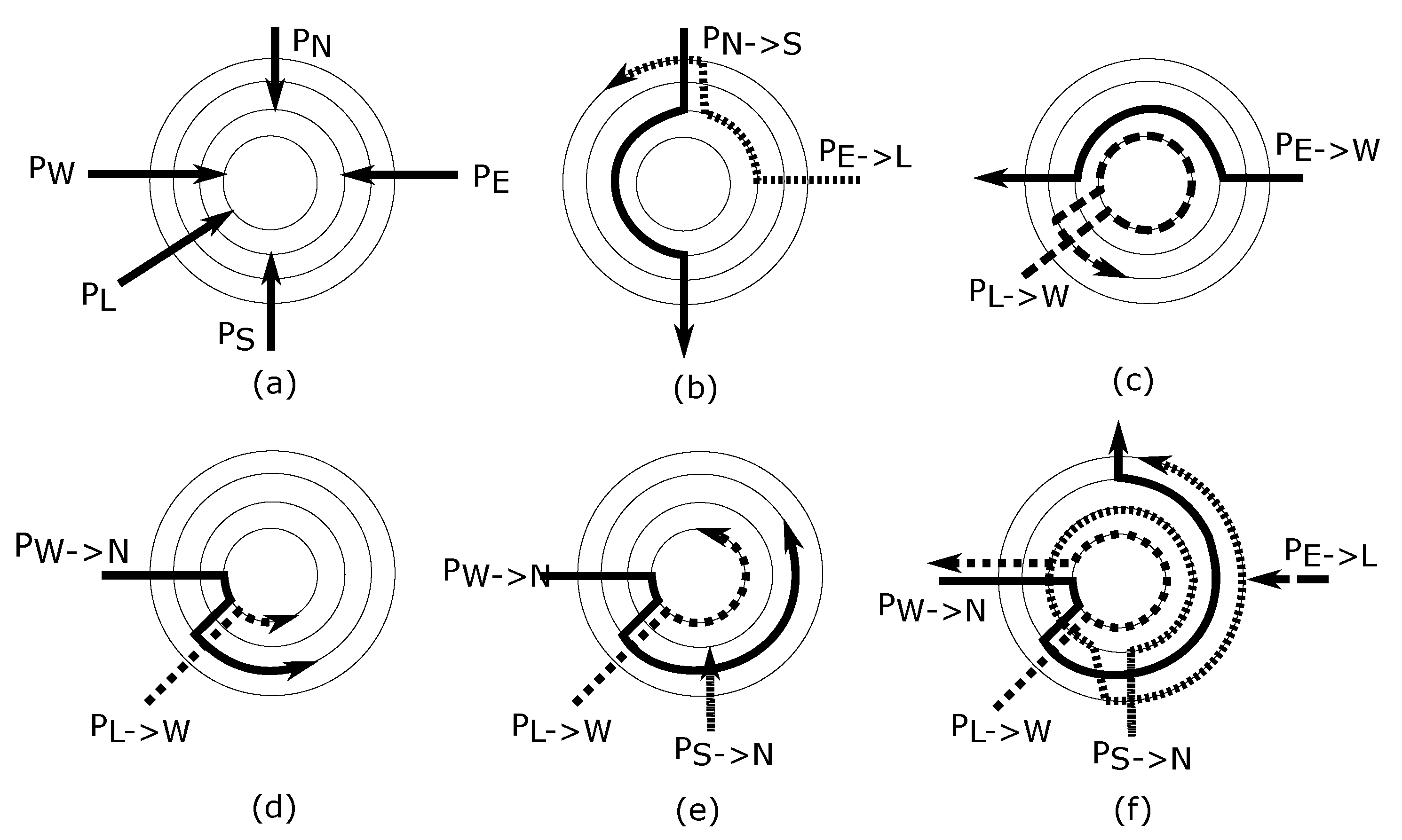

3.1. Intuition on the Data-Flow Principle in R-NoC Router

- the router can have N lanes, where . The lanes are partitioned into primary and secondary lanes with an arbitrary lane count in each;

- the output ports are connected to both primary and secondary lanes, while the input ports are only connected to the primary lanes;

- packets can switch from primary to secondary lanes when either their path is blocked or their output is unavailable.
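The three rules above can be illustrated with a small behavioural model. This is a hedged Python sketch, not the R-NoC hardware: the `Lane` class, the `entry_lanes` and `choose_lane` helpers, the per-lane sets of reachable outputs, and the policy of taking the first free secondary lane are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Lane:
    name: str
    primary: bool
    outputs: frozenset  # output ports this lane can reach (hypothetical)
    busy: bool = False  # whether the lane currently holds a packet


def entry_lanes(lanes):
    """Input ports connect only to primary lanes."""
    return [lane for lane in lanes if lane.primary]


def choose_lane(lanes, current, dest, path_blocked, output_unavailable):
    """Return the lane a packet should occupy next (hypothetical policy).

    A packet on a primary lane stays there unless its path is blocked or its
    output is unavailable; it then moves to a free secondary lane that can
    also reach `dest`. If no such lane exists, it keeps waiting on `current`.
    """
    if not (path_blocked or output_unavailable) or not current.primary:
        return current
    for lane in lanes:
        if not lane.primary and not lane.busy and dest in lane.outputs:
            return lane
    return current


ALL_PORTS = frozenset({"East", "West", "North", "South", "Local"})
lanes = [
    Lane("L0", True, frozenset({"East", "North"})),
    Lane("L1", True, frozenset({"West", "South"})),
    Lane("L2", False, ALL_PORTS),
    Lane("L3", False, ALL_PORTS, busy=True),
]
print([lane.name for lane in entry_lanes(lanes)])                 # ['L0', 'L1']
print(choose_lane(lanes, lanes[0], "East", False, False).name)    # L0
print(choose_lane(lanes, lanes[0], "East", False, True).name)     # L2
```

Taking the first free secondary lane is only one possible choice; any policy that respects the three rules (inject on primary lanes, divert to secondary lanes only on blocking or output contention) fits the description above.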

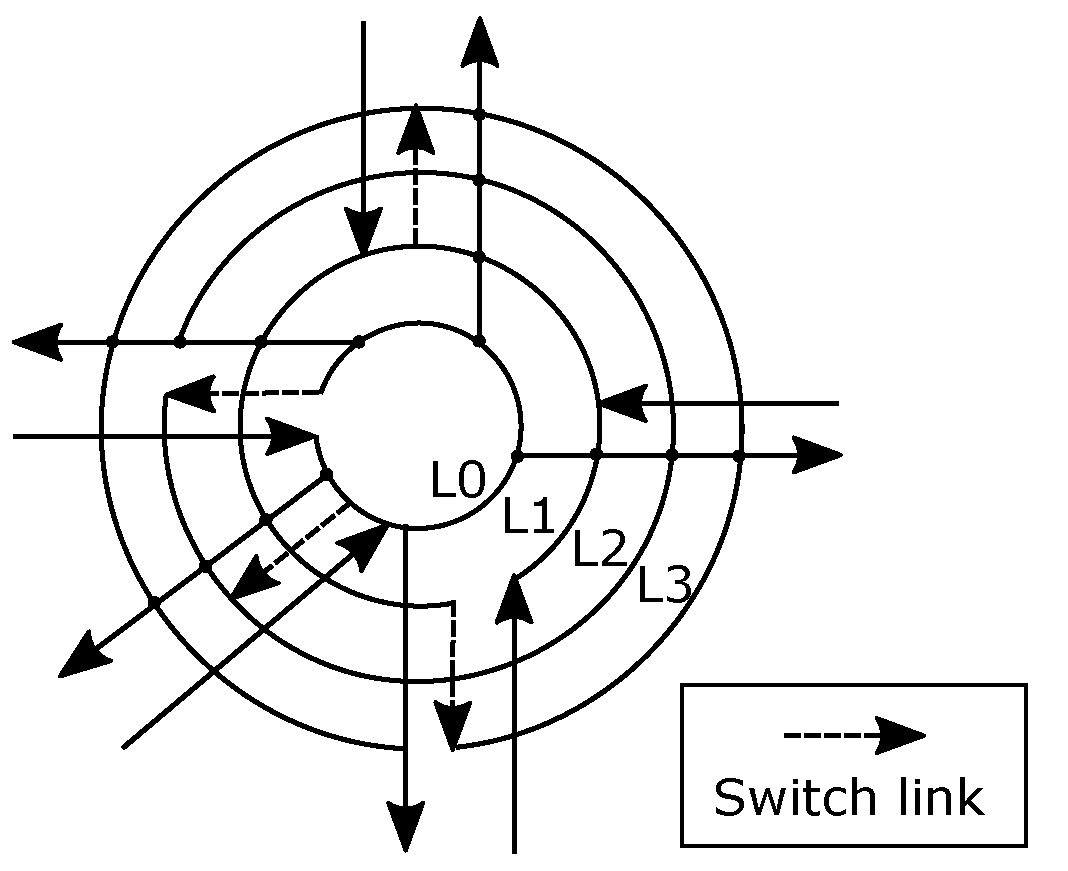

3.2. The R-NoC Router Concept

3.3. Generating Deadlock-Free R-NoC Topologies

Algorithm 1: R-NoC topology generation algorithm
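Algorithm 1 itself is not reproduced in this version of the text. As a hedged illustration of the property it targets, the sketch below applies the classic sufficient condition for deadlock freedom: a routing structure cannot deadlock if its channel (here, lane) dependency graph is acyclic. The dict-of-lists representation, the lane names, and the `add_dependency_if_safe` helper are assumptions for illustration; this is not the authors' construction algorithm.

```python
from collections import deque


def is_acyclic(dependencies):
    """Return True if the lane dependency graph has no cycle (Kahn's algorithm).

    `dependencies` maps each lane to the lanes a packet holding it may wait
    for next (hypothetical representation of an R-NoC topology).
    """
    nodes = set(dependencies)
    for targets in dependencies.values():
        nodes.update(targets)
    indegree = {node: 0 for node in nodes}
    for targets in dependencies.values():
        for target in targets:
            indegree[target] += 1
    ready = deque(node for node in nodes if indegree[node] == 0)
    visited = 0
    while ready:
        node = ready.popleft()
        visited += 1
        for target in dependencies.get(node, ()):
            indegree[target] -= 1
            if indegree[target] == 0:
                ready.append(target)
    # All nodes are visited if and only if the graph is acyclic.
    return visited == len(nodes)


def add_dependency_if_safe(dependencies, src, dst):
    """Add the edge src -> dst only if the graph stays acyclic."""
    dependencies.setdefault(src, []).append(dst)
    if is_acyclic(dependencies):
        return True
    dependencies[src].pop()  # roll back the edge that closed a cycle
    return False


# Hypothetical 4-lane topology: primary lanes P0, P1 feed secondary lanes S0, S1.
topology = {"P0": ["S0"], "P1": ["S0", "S1"], "S0": ["S1"], "S1": []}
print(is_acyclic(topology))                          # True
print(add_dependency_if_safe(topology, "S1", "P0"))  # False: P0 -> S0 -> S1 -> P0
print(add_dependency_if_safe(topology, "P0", "S1"))  # True
```

A generator built this way only ever accepts lane-to-lane links that keep the dependency graph acyclic, so every topology it emits satisfies the condition by construction.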

3.4. Example of R-NoC Router Topology Generation

4. Implementation of R-NoC Router and NoC

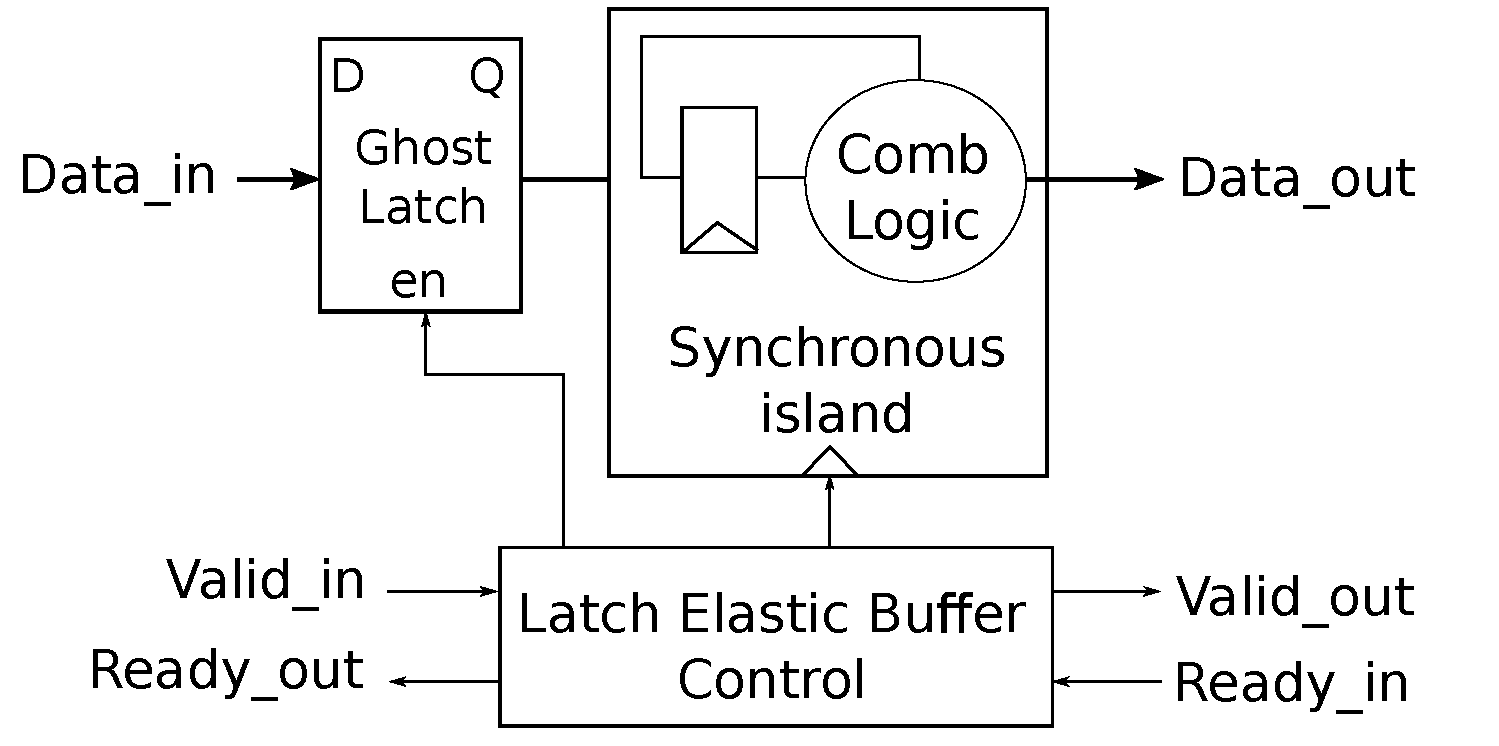

4.1. Synchronous-Elastic Design Style

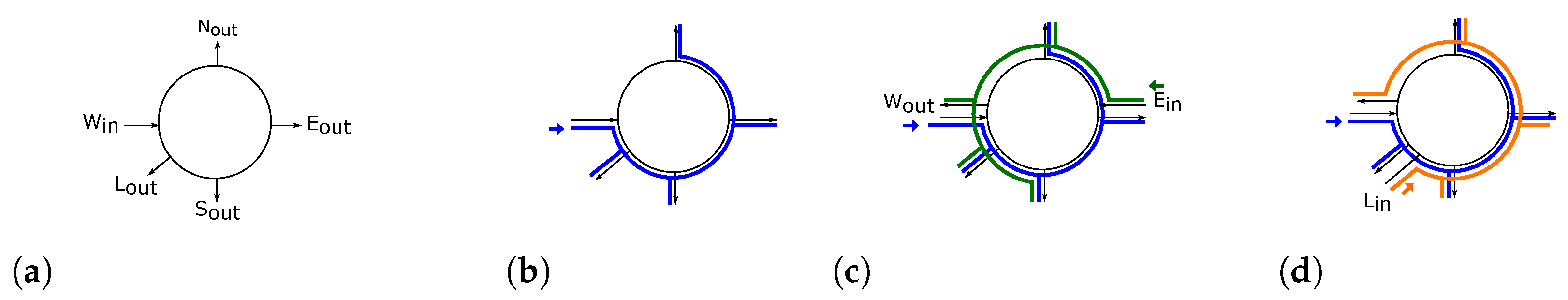

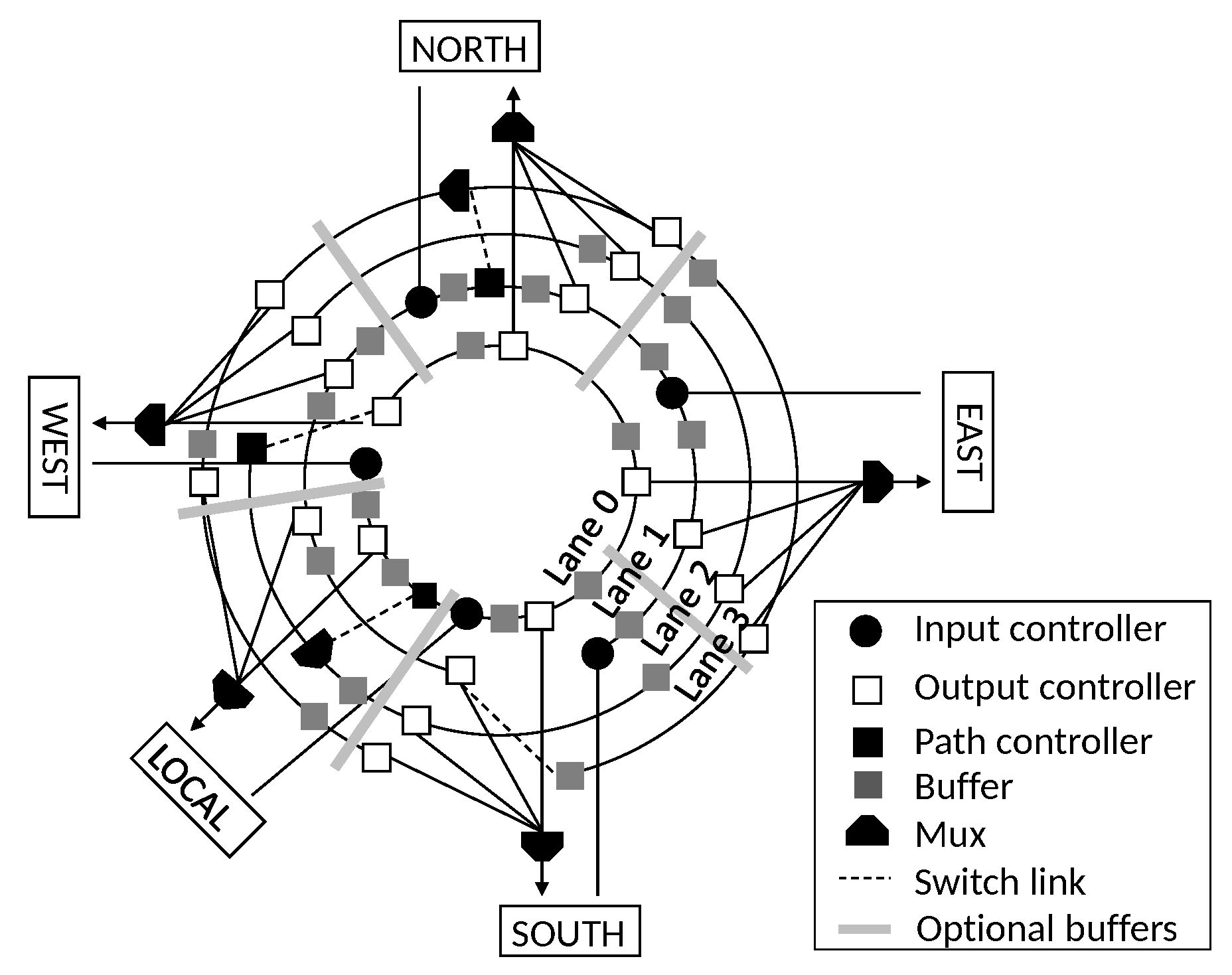

4.2. R-NoC Topology

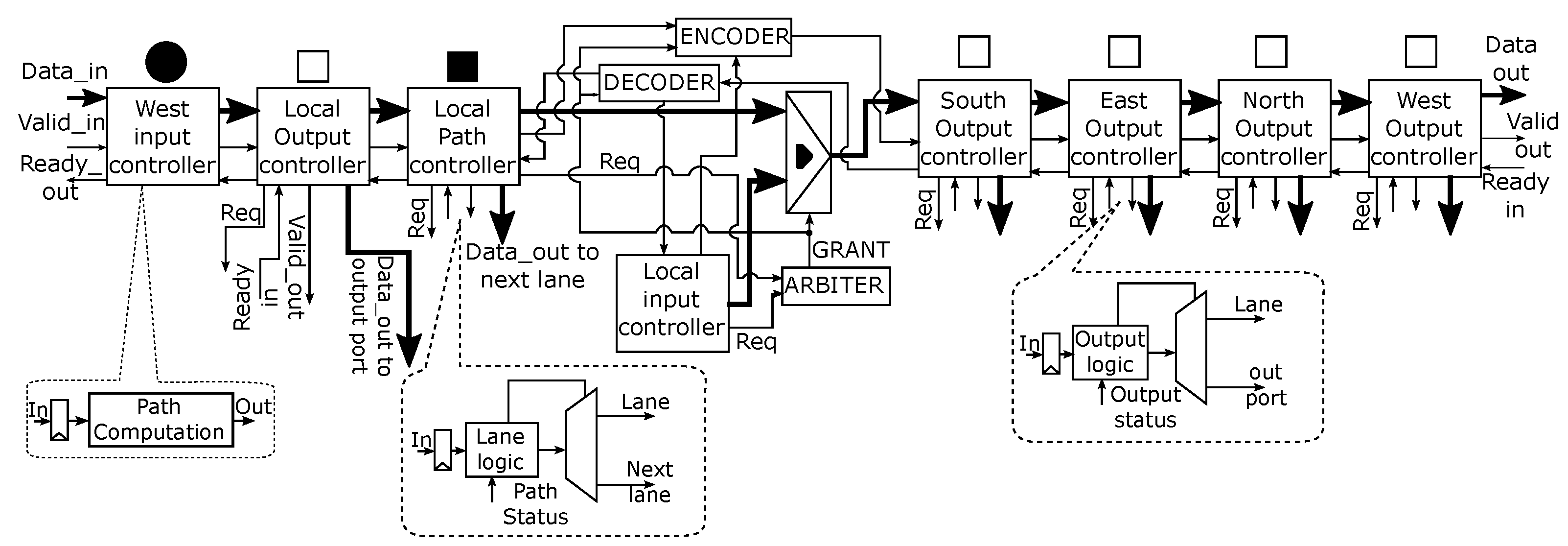

4.3. Lane Pipeline

4.4. Input Controller

4.5. Output and Path Controller

- output port address matches and output port is free;

- output port address matches but output port is busy and is not local;

- output port address matches but output port is busy and is local;

- output port address does not match;

- output port address matches and the output port controller is located on one of the secondary lanes (Lane 2 or Lane 3 in Figure 7).
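The five conditions above can be written as a small classifier. The text lists the conditions but not the action taken in each, so this hedged Python sketch only reports which conditions hold; the argument names, the `Condition` enum, and the lane labels in `SECONDARY_LANES` are hypothetical. Because the fifth condition can coincide with the first three, the function returns a list rather than a single case.

```python
from enum import Enum


class Condition(Enum):
    MATCH_FREE = 1
    MATCH_BUSY_NOT_LOCAL = 2
    MATCH_BUSY_LOCAL = 3
    NO_MATCH = 4
    MATCH_ON_SECONDARY_LANE = 5


# Secondary lanes of the example topology in Figure 7 (labels assumed).
SECONDARY_LANES = {"Lane 2", "Lane 3"}


def classify(flit_dest, port_addr, port_busy, port_is_local, controller_lane):
    """Return every listed condition that holds for one output/path controller."""
    if flit_dest != port_addr:
        return [Condition.NO_MATCH]
    if not port_busy:
        conditions = [Condition.MATCH_FREE]
    elif port_is_local:
        conditions = [Condition.MATCH_BUSY_LOCAL]
    else:
        conditions = [Condition.MATCH_BUSY_NOT_LOCAL]
    if controller_lane in SECONDARY_LANES:
        conditions.append(Condition.MATCH_ON_SECONDARY_LANE)
    return conditions


print(classify("East", "East", True, False, "Lane 2"))
```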

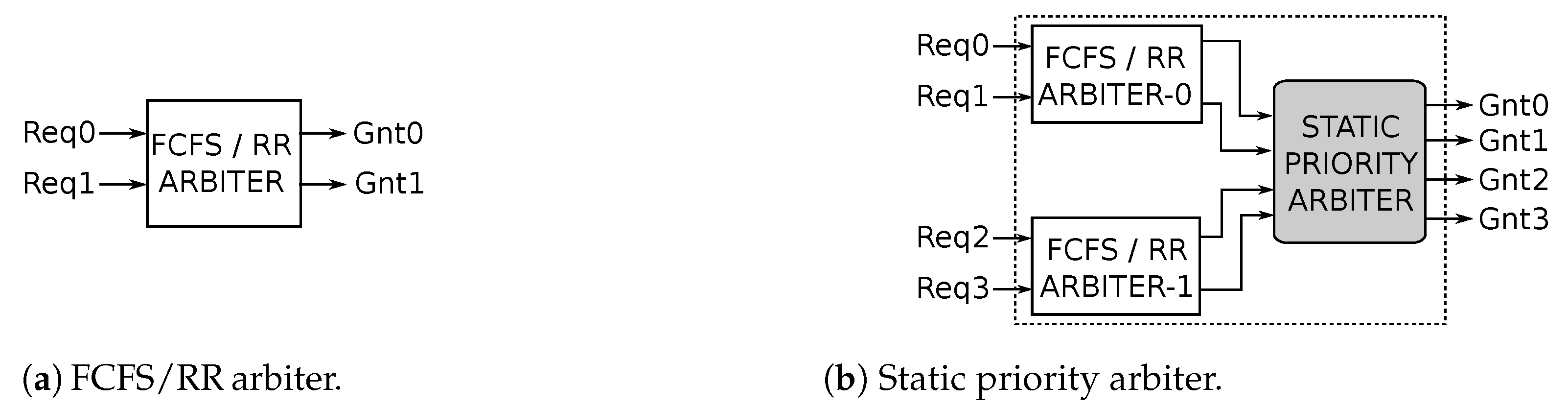

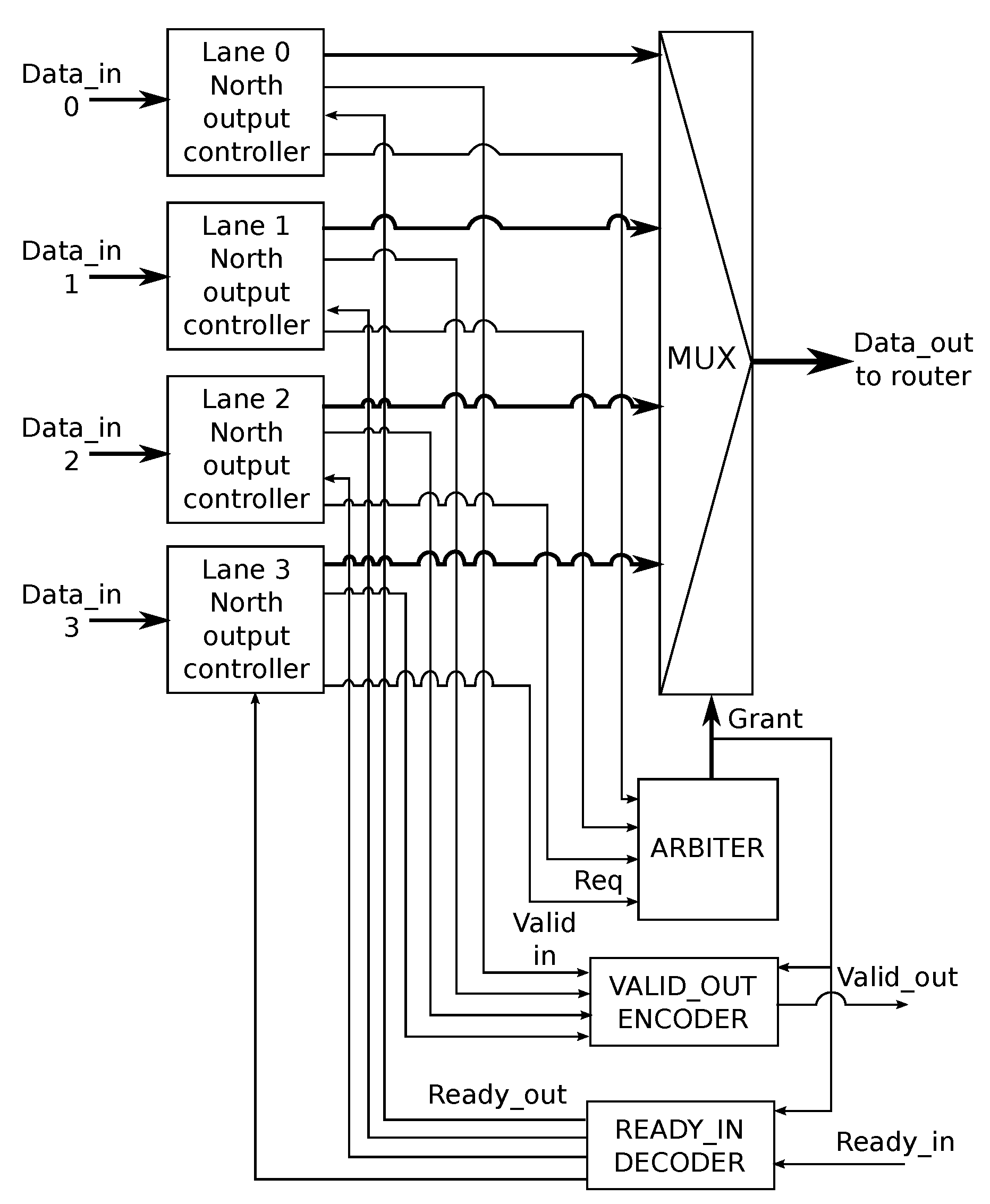

4.6. Arbitration

4.7. R-NoC Output Port Block

- only the valid signal of the output controller for which access has been granted is sent to the receiver.

- the receiver’s status, indicated by the "ready_in" signal, is communicated only to the output controller that has been granted access to the output port.
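Both rules amount to a multiplexer on the valid path and a demultiplexer on the ready path, both steered by the arbiter's grant. The following Python sketch models one output port combinationally; the function name, the argument names other than `ready_in`, and the use of `None` for "no grant" are assumptions for illustration.

```python
def output_port_block(valids, ready_in, grant):
    """Combinational model of one output port (hypothetical signal names).

    valids   -- valid bit from each output controller competing for the port
    ready_in -- the receiver's ready signal
    grant    -- index of the controller granted by the arbiter, or None
    Returns (valid_out, readies): the valid forwarded to the receiver and
    the ready seen by each output controller.
    """
    if grant is None:
        return False, [False] * len(valids)
    # Only the granted controller's valid reaches the receiver.
    valid_out = valids[grant]
    # Only the granted controller sees the receiver's status.
    readies = [ready_in if i == grant else False for i in range(len(valids))]
    return valid_out, readies


print(output_port_block([True, False, True], True, 2))  # (True, [False, False, True])
```

Under the usual valid/ready handshake, which we assume here, a flit crosses the output port in a cycle where the returned valid and `ready_in` are both high; controllers that were not granted see ready low and hold their data.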

5. R-NoC Evaluation

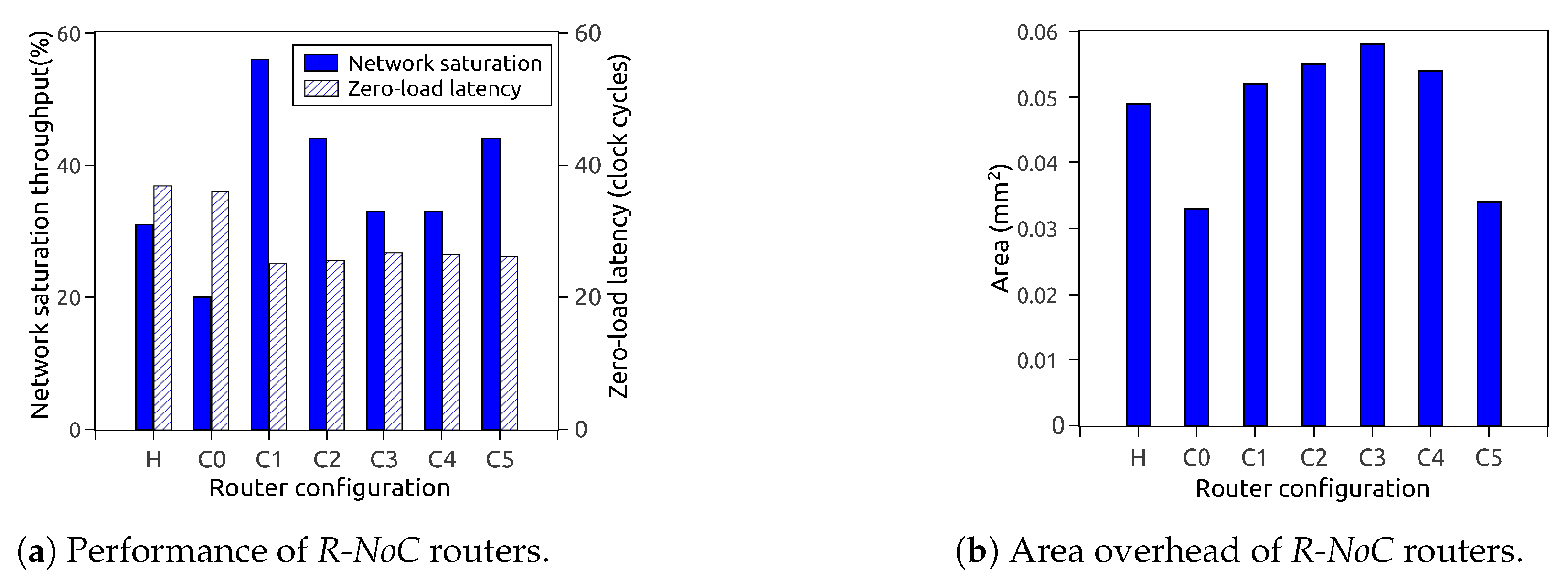

5.1. Topology Exploration

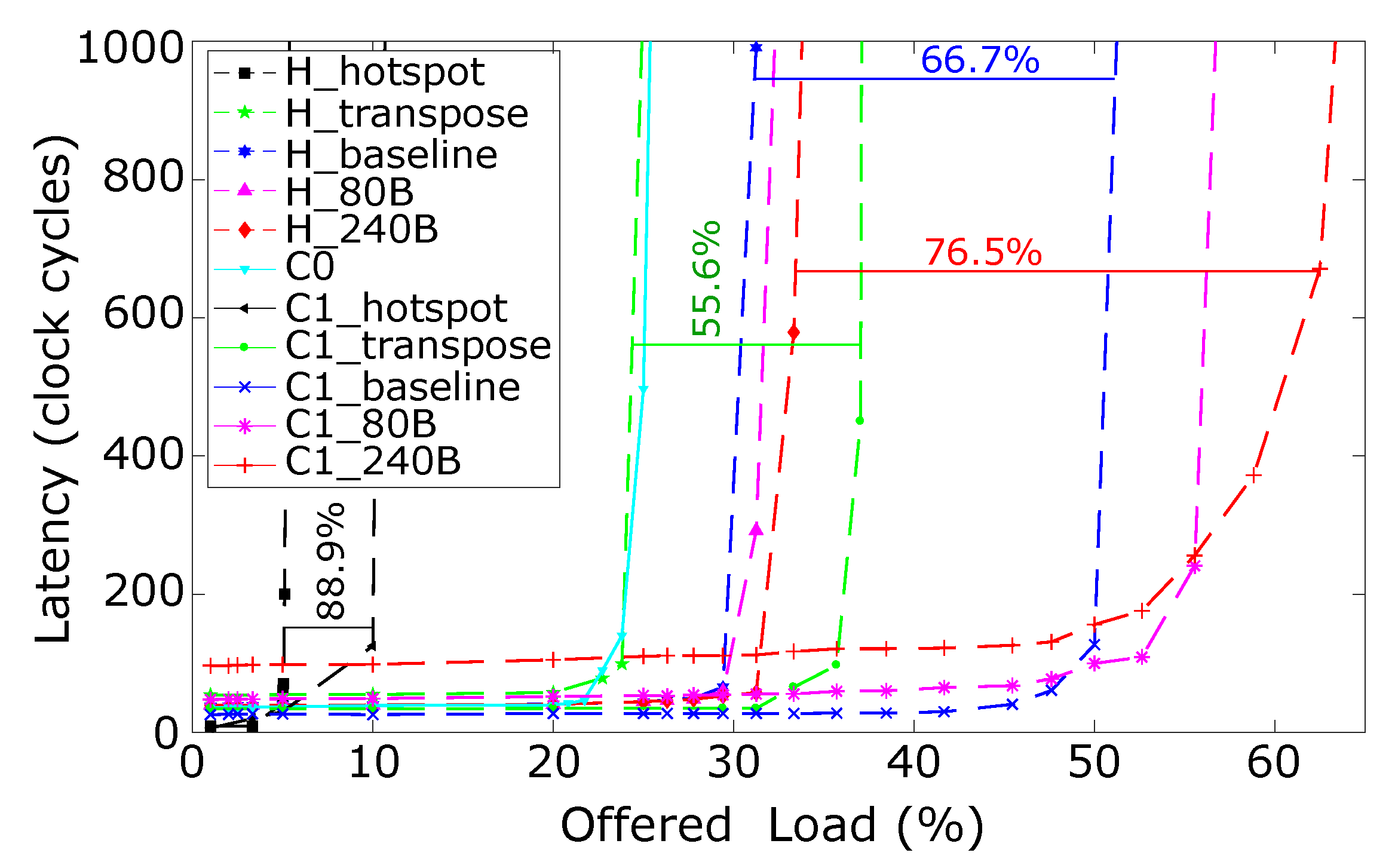

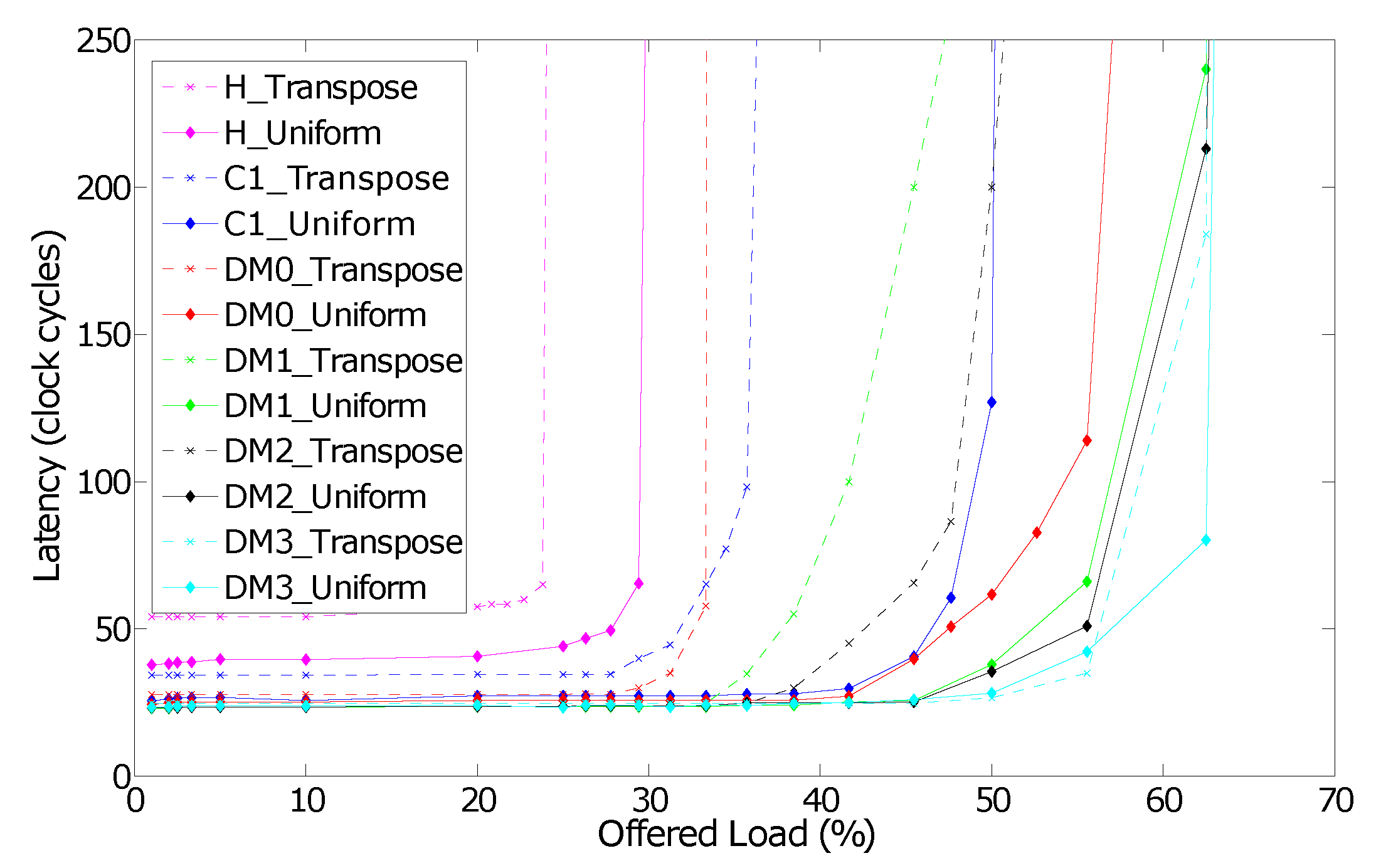

5.1.1. Performance for Different Traffic Patterns

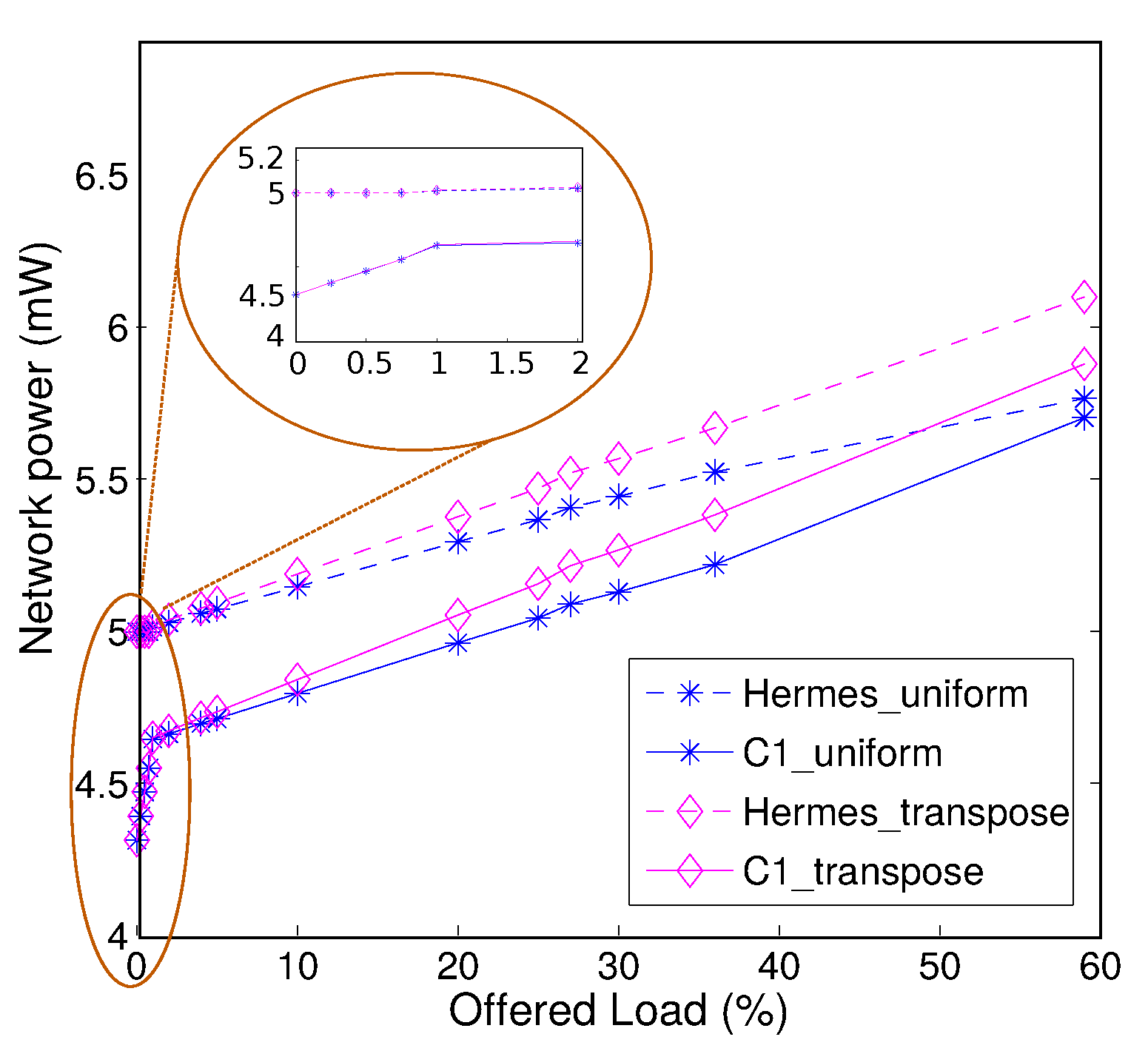

5.1.2. R-NoC Network-Level Power Consumption

5.1.3. Scalability for Different Network Sizes

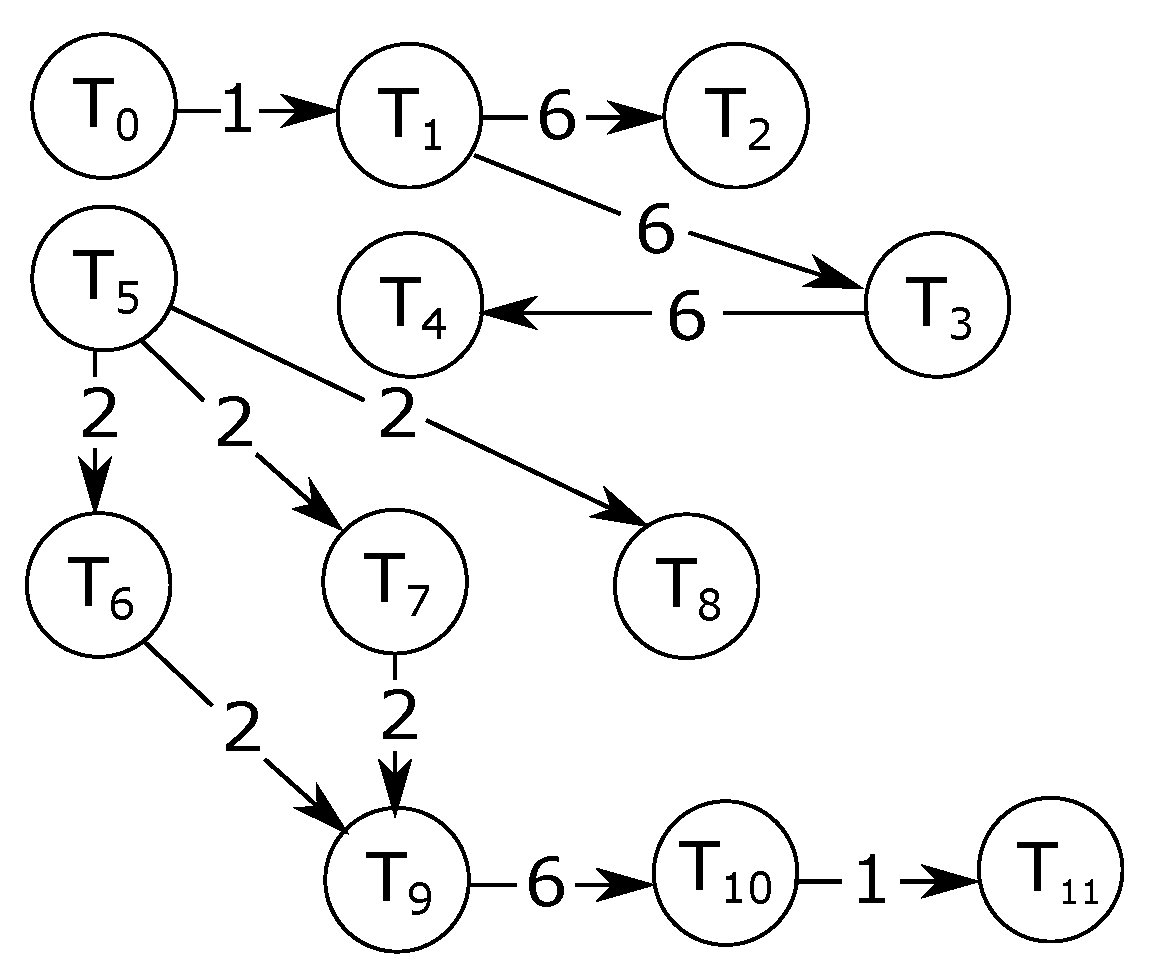

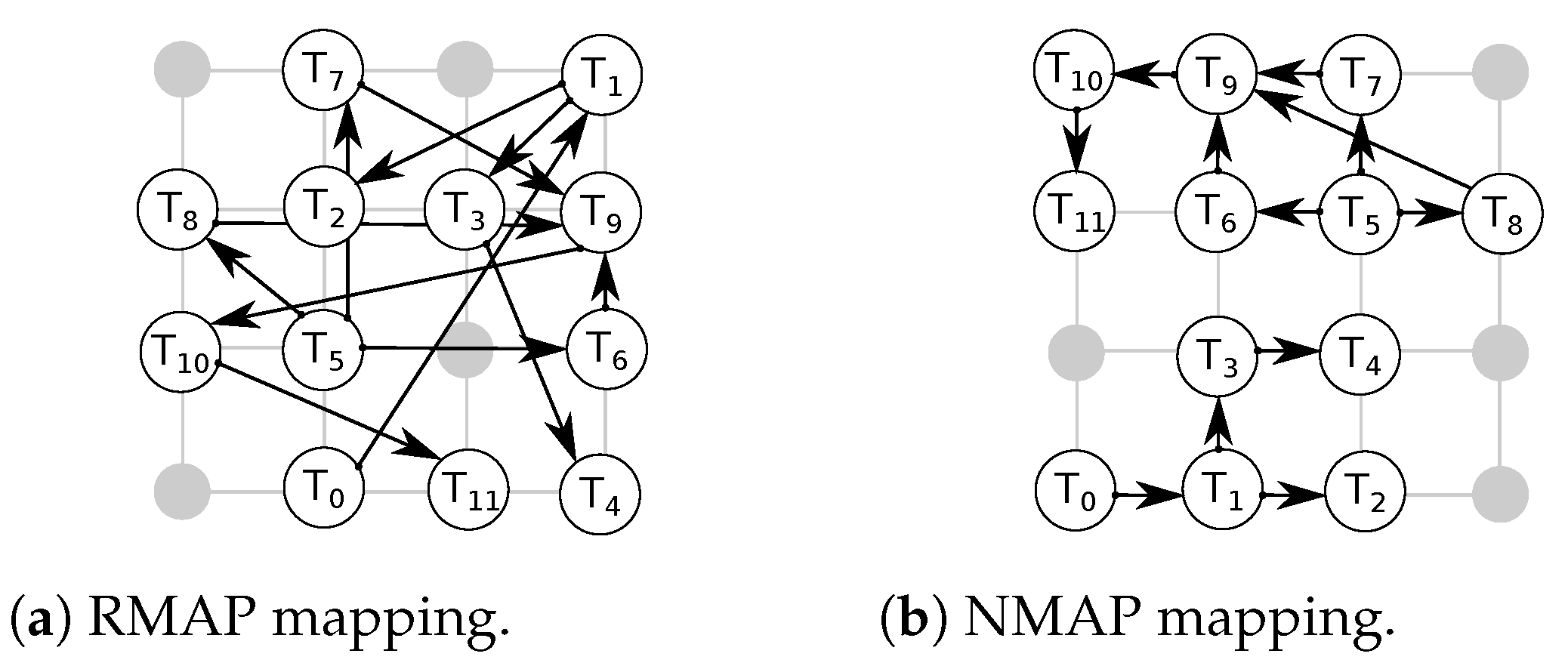

5.1.4. Application Performance

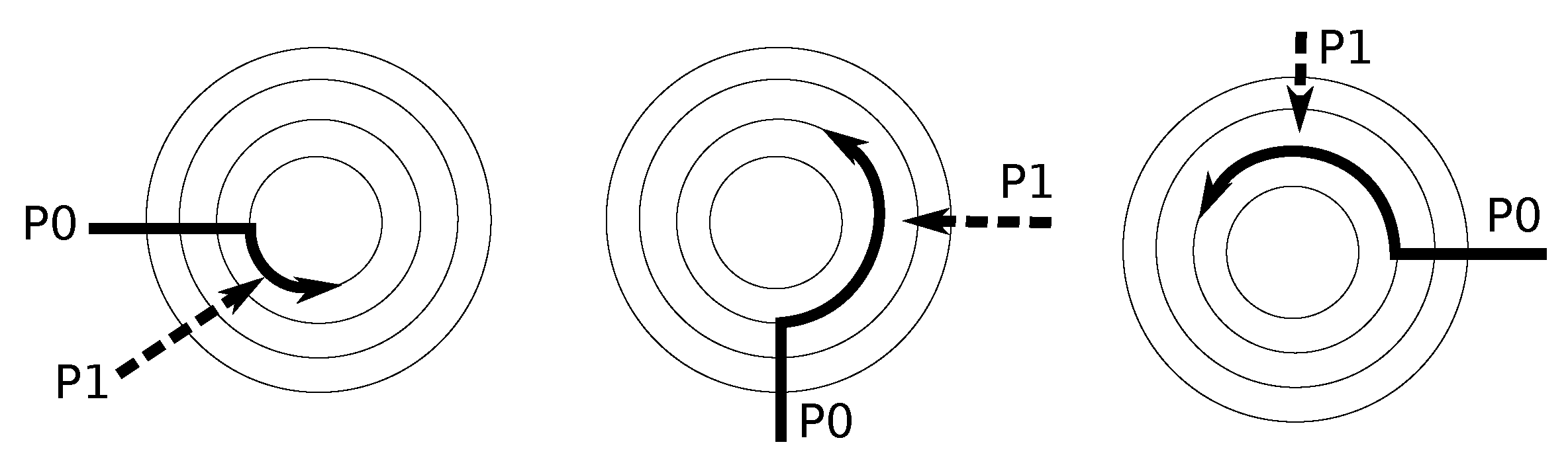

6. Evaluation of R-NoC for Diagonally Linked Mesh NoCs

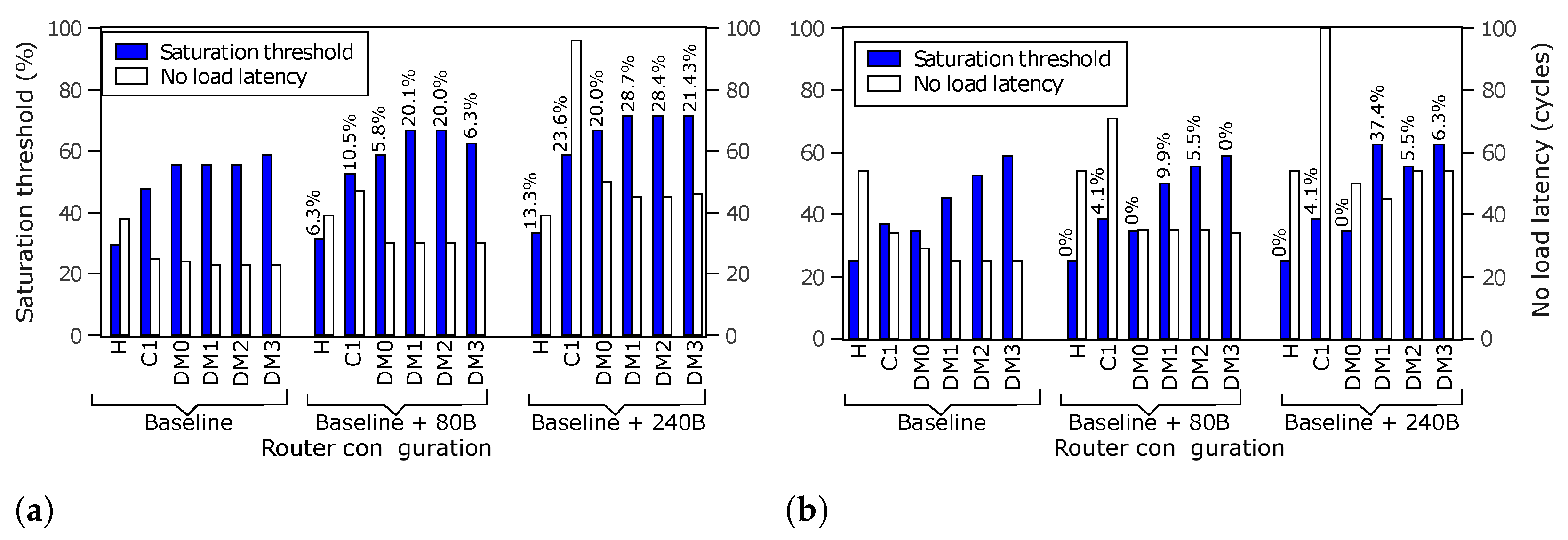

6.1. Performance Scaling through Buffer Insertion

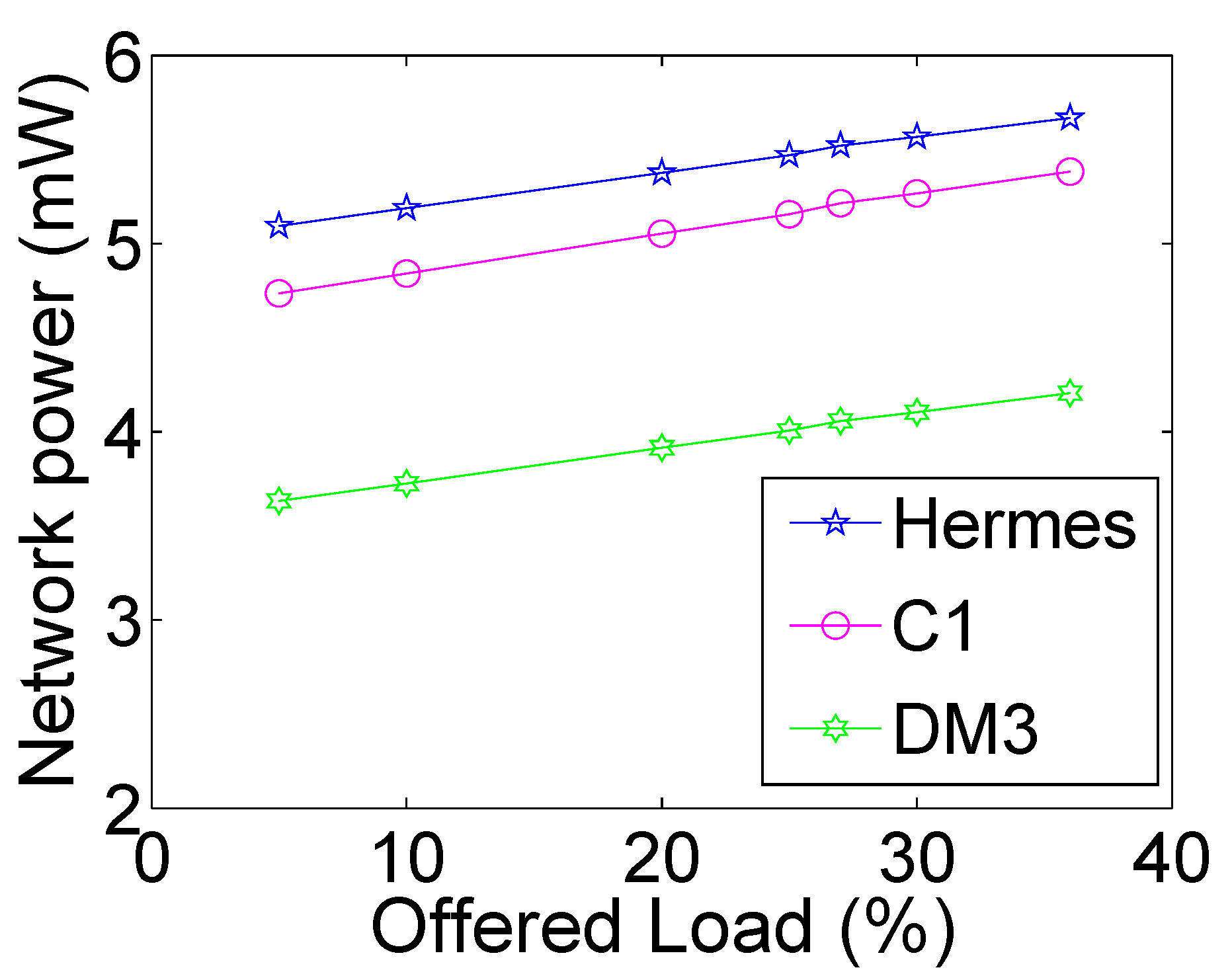

6.2. R-NoC-D Network-Level Power Consumption

6.3. Comparison with Existing Solutions

6.3.1. Comparison with Rotary

6.3.2. Comparison with VC and Single Cycle Routers

7. Conclusions and Perspectives

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Benini, L.; De Micheli, G. Networks on Chips: A New SoC Paradigm. Computer 2002, 35, 70–78.

2. Tran, A.T.; Baas, B.M. Achieving High-Performance On-Chip Networks With Shared-Buffer Routers. IEEE Trans. Very Large Scale Integ. (VLSI) Syst. 2014, 22, 1391–1403.

3. Tran, A.T.; Baas, B.M. DLABS: A dual-lane buffer-sharing router architecture for networks on chip. In Proceedings of the 2010 IEEE Workshop On Signal Processing Systems, San Francisco, CA, USA, 6–8 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 327–332.

4. Effiong, C.; Sassatelli, G.; Gamatié, A. Scalable and Power-Efficient Implementation of an Asynchronous Router with Buffer Sharing. In Proceedings of the Euromicro Conference on Digital System Design, DSD’17, Vienna, Austria, 30 August–1 September 2017.

5. Effiong, C.; Sassatelli, G.; Gamatié, A. Exploration of a scalable and power-efficient asynchronous Network-on-Chip with dynamic resource allocation. Microprocess. Microsyst. 2018, 60, 173–184.

6. Abad, P.; Puente, V.; Gregorio, J.A.; Prieto, P. Rotary Router: An Efficient Architecture for CMP Interconnection Networks. SIGARCH Comput. Archit. News 2007, 35, 116–125.

7. Wang, C.; Hu, W.H.; Lee, S.E.; Bagherzadeh, N. Area and Power-efficient Innovative Congestion-aware Network-on-Chip Architecture. J. Syst. Archit. 2011, 57, 24–38.

8. Guerrier, P.; Greiner, A. A generic architecture for on-chip packet-switched interconnections. In Proceedings of the Proceedings Design, Automation and Test in Europe Conference and Exhibition 2000 (Cat. No. PR00537), Paris, France, 27–30 March 2000; pp. 250–256.

9. Dally, W.; Towles, B. Route packets, not wires: On-chip interconnection networks. In Proceedings of the 38th Design Automation Conference (IEEE Cat. No.01CH37232), Las Vegas, NV, USA, 18–22 June 2001; pp. 684–689.

10. Moraes, F.; Calazans, N.; Mello, A.; Möller, L.; Ost, L. HERMES: An Infrastructure for Low Area Overhead Packet-switching Networks on Chip. Integr. VLSI J. 2004, 38, 69–93.

11. Alazemi, F.; AziziMazreah, A.; Bose, B.; Chen, L. Routerless Network-on-Chip. In Proceedings of the 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), Vienna, Austria, 24–28 February 2018; pp. 492–503.

12. Lin, T.R.; Penney, D.; Pedram, M.; Chen, L. A Deep Reinforcement Learning Framework for Architectural Exploration: A Routerless NoC Case Study. In Proceedings of the 2020 IEEE International Symposium on High Performance Computer Architecture (HPCA), San Diego, CA, USA, 22–26 February 2020; pp. 99–110.

13. Xiang, X.; Sigdel, P.; Tzeng, N. Bufferless Network-on-Chips With Bridged Multiple Subnetworks for Deflection Reduction and Energy Savings. IEEE Trans. Comput. 2020, 69, 577–590.

14. Jung, D.C.; Davidson, S.; Zhao, C.; Richmond, D.; Taylor, M.B. Ruche Networks: Wire-Maximal, No-Fuss NoCs: Special Session Paper. In Proceedings of the 2020 14th IEEE/ACM International Symposium on Networks-on-Chip (NOCS), Hamburg, Germany, 24–25 September 2020; pp. 1–8.

15. Moscibroda, T.; Mutlu, O. A Case for Bufferless Routing in On-chip Networks. SIGARCH Comput. Archit. News 2009, 37, 196–207.

16. Dally, W.; Towles, B. Principles and Practices of Interconnection Networks; Morgan Kaufmann: San Francisco, CA, USA, 2003.

17. Soteriou, V.; Ramanujam, R.S.; Lin, B.; Peh, L.S. A High-Throughput Distributed Shared-Buffer NoC Router. IEEE Comput. Archit. Lett. 2009, 8, 21–24.

18. Nicopoulos, C.A.; Park, D.; Kim, J.; Vijaykrishnan, N.; Yousif, M.S.; Das, C.R. ViChaR: A Dynamic Virtual Channel Regulator for Network-on-Chip Routers. In Proceedings of the 2006 39th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO’06), Orlando, FL, USA, 9–13 December 2006; pp. 333–346.

19. Zaruba, F.; Schuiki, F.; Benini, L. Manticore: A 4096-core RISC-V Chiplet Architecture for Ultra-efficient Floating-point Computing. IEEE Micro 2020, 41, 36–42.

20. Wang, X.M.; Bandi, L. X-Network: An area-efficient and high-performance on-chip wormhole interconnect network. Microprocess. Microsyst. 2013, 37, 1208–1218.

21. Duato, J. A Necessary and Sufficient Condition for Deadlock-Free Adaptive Routing in Wormhole Networks. IEEE Trans. Parallel Distrib. Syst. 1995, 6, 1055–1067.

22. Effiong, C.; Sassatelli, G.; Gamatié, A. Distributed and Dynamic Shared-Buffer Router for High-Performance Interconnect. In Proceedings of the Eleventh IEEE/ACM International Symposium on Networks-on-Chip, NOCS ’17, Seoul, Republic of Korea, 19–20 October 2017; pp. 2:1–2:8.

23. Michelogiannakis, G.; Dally, W.J. Elastic Buffer Flow Control for On-Chip Networks. IEEE Trans. Comput. 2013, 62, 295–309.

24. Jacobson, H.M.; Kudva, P.N.; Bose, P.; Cook, P.W.; Schuster, S.E.; Mercer, E.G.; Myers, C.J. Synchronous interlocked pipelines. In Proceedings of the Proceedings Eighth International Symposium on Asynchronous Circuits and Systems, Manchester, UK, 8–11 April 2002; pp. 3–12.

25. Matoussi, O. NoC Performance Model for Efficient Network Latency Estimation. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 994–999.

26. Cho, S.; Jin, L. Managing distributed, shared L2 caches through OS-level page allocation. In Proceedings of the 39th Annual IEEE/ACM International Symposium on Microarchitecture, Orlando, FL, USA, 9–13 December 2006; pp. 455–468.

27. Dick, R. Embedded System Synthesis Benchmarks Suite (E3S). 2013. Available online: http://ziyang.eecs.umich.edu/~dickrp/e3s/ (accessed on 15 October 2022).

28. Palesi, M.; Holsmark, R.; Kumar, S.; Catania, V. Application Specific Routing Algorithms for Networks on Chip. IEEE Trans. Parallel Dist. Syst. 2009, 20, 316–330.

29. Gebhardt, D.; You, J.; Stevens, K.S. Design of an Energy-Efficient Asynchronous NoC and Its Optimization Tools for Heterogeneous SoCs. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2011, 30, 1387–1399.

30. Bertozzi, D.; Jalabert, A.; Murali, S.; Tamhankar, R.; Stergiou, S.; Benini, L.; De Micheli, G. NoC synthesis flow for customized domain specific multiprocessor systems-on-chip. IEEE Trans. Parallel Dist. Syst. 2005, 16, 113–129.

31. Latif, K.; Rahmani, A.M.; Guang, L.; Seceleanu, T.; Tenhunen, H. PVS-NoC: Partial Virtual Channel Sharing NoC Architecture. In Proceedings of the 2011 19th International Euromicro Conference on Parallel, Distributed and Network-Based Processing, Valladolid, Spain, 9–11 March 2011; pp. 470–477.

32. van der Tol, E.B.; Jaspers, E.G. Mapping of MPEG-4 decoding on a flexible architecture platform. In Media Processors 2002; SPIE: Bellingham, WA, USA, 2001; Volume 4674, pp. 1–13.

33. Tran, A.T.; Truong, D.N.; Baas, B.M. A complete real-time 802.11a baseband receiver implemented on an array of programmable processors. In Proceedings of the 2008 42nd Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 26–29 October 2008; pp. 165–170.

34. Adiga, N.R.; Blumrich, M.A.; Chen, D.; Coteus, P.; Gara, A.; Giampapa, M.E.; Heidelberger, P.; Singh, S.; Steinmacher-Burow, B.D.; Takken, T.; et al. Blue Gene/L torus interconnection network. IBM J. Res. Dev. 2005, 49, 265–276.

35. Mullins, R.; West, A.; Moore, S. Low-Latency Virtual-Channel Routers for On-Chip Networks. SIGARCH Comput. Archit. News 2004.

36. Chen, Y.; Lu, Z.; Xie, L.; Li, J.; Zhang, M. A single-cycle output buffered router with layered switching for Networks-on-Chips. Comput. Electr. Eng. 2012, 38, 906–916.

| Source (Input Port) | Destinations (Output Ports) |
|---|---|
| Local | West, South, East, North |
| West | Local, South, East, North |
| East | West, South, Local, North |
| North | South, Local |
| South | North, Local |
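The table above is consistent with XY (dimension-order) routing on a 2D mesh: packets arriving from North or South are already travelling along Y and may only continue straight or eject to Local, packets arriving on the X ports may turn freely, and no port sends a packet back where it came from. The routing algorithm is our assumption, not stated in the table; the Python sketch below regenerates the table from it so the two can be compared. `DIRECTIONS`, `xy_allowed_outputs`, and `TABLE` are hypothetical names.

```python
# Unit vectors of the mesh ports (x grows toward East, y toward North).
DIRECTIONS = {"East": (1, 0), "West": (-1, 0), "North": (0, 1), "South": (0, -1)}


def xy_allowed_outputs(input_port):
    """Output ports a packet arriving on `input_port` may use under XY routing."""
    if input_port == "Local":
        return set(DIRECTIONS)  # injected packets may leave in any direction
    _, dy = DIRECTIONS[input_port]
    outputs = {"Local"}  # any arriving packet may eject at this router
    for port, (ox, _) in DIRECTIONS.items():
        if port == input_port:
            continue  # no U-turn back through the arrival port
        if dy != 0 and ox != 0:
            continue  # a packet travelling along Y may not turn back to X
        outputs.add(port)
    return outputs


# Source/destination table from the text.
TABLE = {
    "Local": {"West", "South", "East", "North"},
    "West": {"Local", "South", "East", "North"},
    "East": {"West", "South", "Local", "North"},
    "North": {"South", "Local"},
    "South": {"North", "Local"},
}

for port, expected in TABLE.items():
    print(port, xy_allowed_outputs(port) == expected)  # True for every row
```

Pruning the Y-to-X turns is what allows the North and South inputs to connect to only two outputs, which keeps those parts of the router smaller than the West and East inputs.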

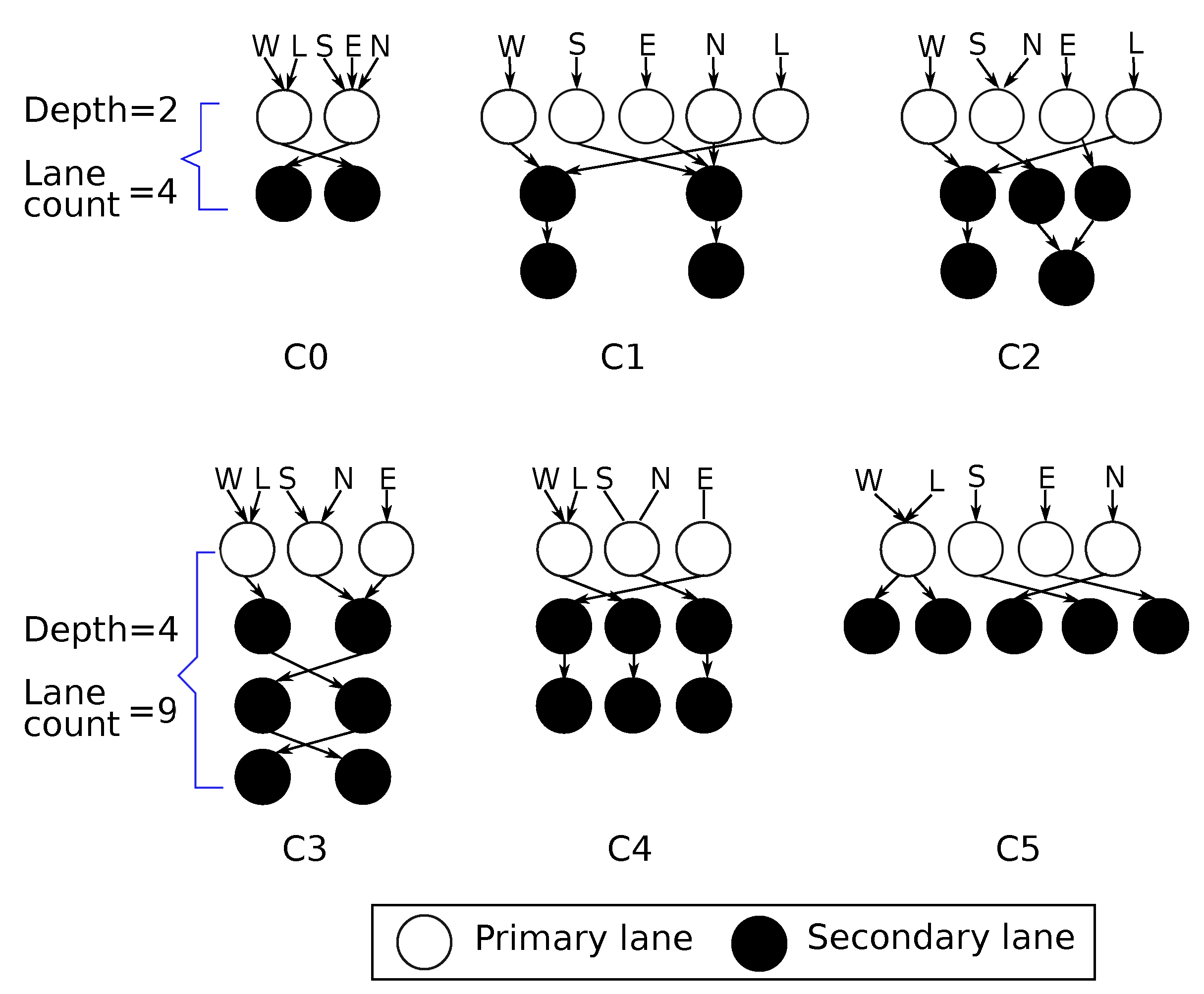

| Config. | Lane Depth | Parallelism Level | P/S (%) | No. of Lanes |
|---|---|---|---|---|
| C0 | 2 | 2 | 50 | 4 |
| C1 | 3 | 5 | 56 | 9 |
| C2 | 3 | 4 | 44 | |
| C3 | 4 | 3 | 33 | |
| C4 | 3 | 3 | 33 | |
| C5 | 2 | 3 | 44 | |

| Applications | Number of Tasks |
|---|---|
| E3S auto-indust (E3S-AUTO) [27] | 24 |
| E3S networking (E3S-NET) [27] | 12 |
| E3S telecom (E3S-TEL) [27] | 30 |
| Multimedia system (MMS) [28] | 25 |
| MPEG4 application (MPEG4) [29] | 12 |
| Multi-window display (MWD) [30] | 12 |
| Video conference encoder (VCE) [31] | 25 |
| Video object plane decoder (VOPD) [32] | 16 |
| Wifi application (WIFI) [33] | 20 |

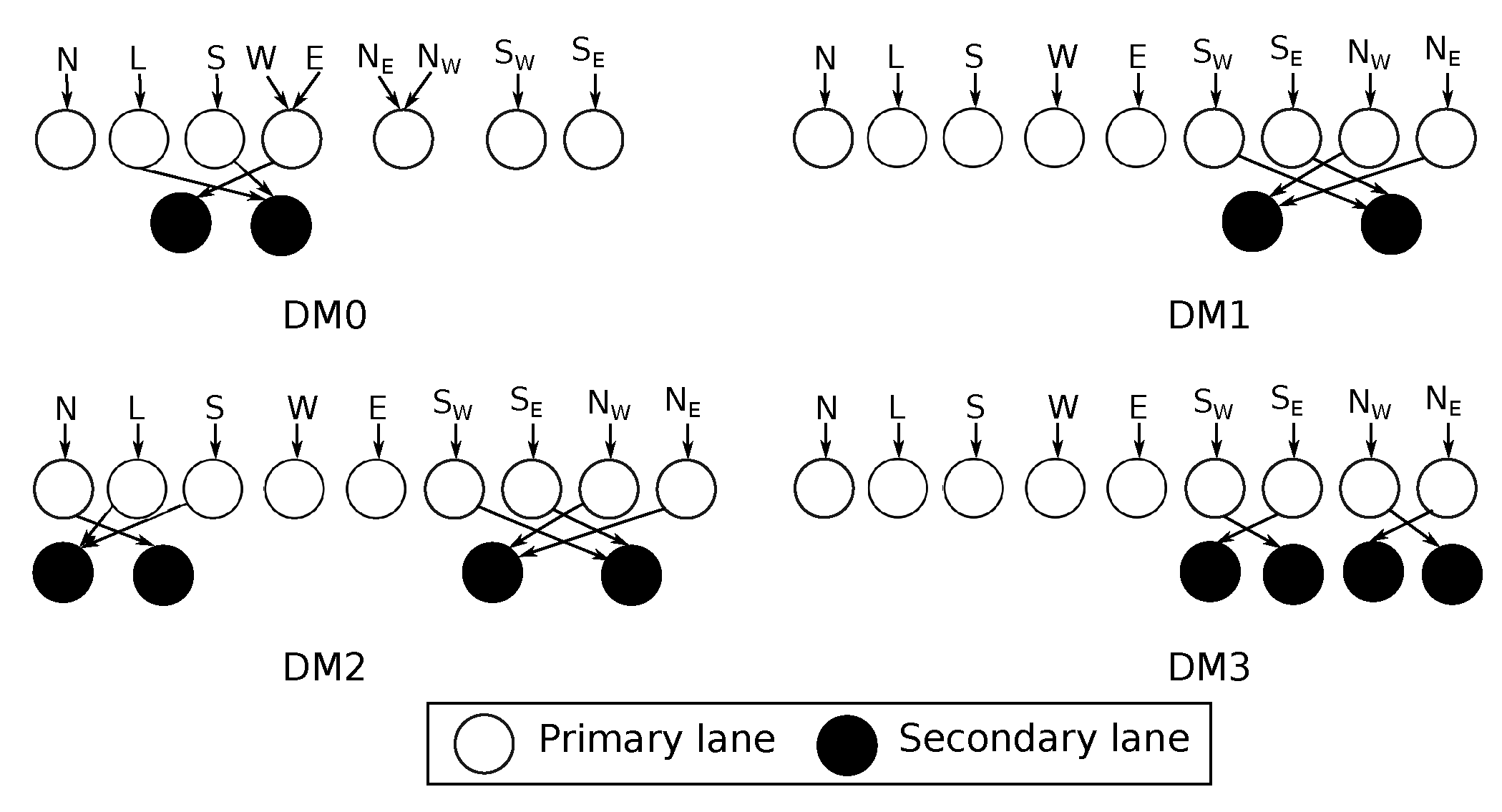

| Config. | No. of Ports | Parallelism Level | No. of Lanes |
|---|---|---|---|
| C1 | 5 | 5 | 9 |
| DM0 | 9 | 5 | 9 |
| DM1 | 9 | 11 | |
| DM2 | 9 | 13 | |
| DM3 | 9 | 13 | |

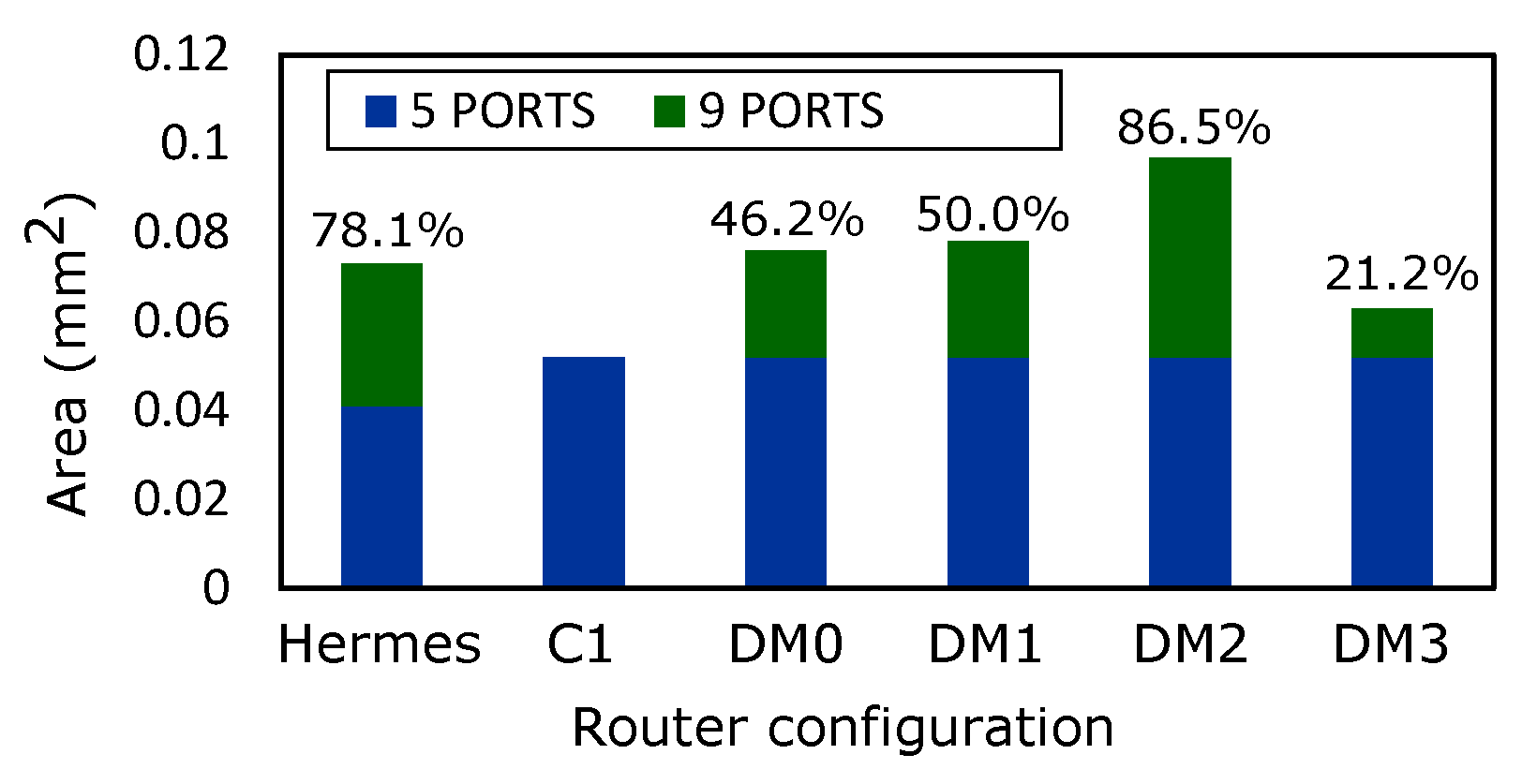

| Router | Area (mm²) | Power (mW) |
|---|---|---|
| Hermes (9 ports) | 0.073 | 3.6 |
| R-NoC-D (DM3) | 0.063 | 3.3 (min) to 4.0 (max) |
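For a quick reading of the table, the following Python snippet computes the relative difference of R-NoC-D (DM3) against the 9-port Hermes router, using only the values above and assuming the area unit is mm². It gives a 13.7% smaller area, with power ranging from 8.3% below to 11.1% above Hermes. The constant names and the `relative_change` helper are illustrative.

```python
HERMES_AREA_MM2, HERMES_POWER_MW = 0.073, 3.6
RNOC_D_AREA_MM2 = 0.063
RNOC_D_POWER_MW = (3.3, 4.0)  # (min, max) reported for R-NoC-D (DM3)


def relative_change(value, baseline):
    """Signed change of `value` relative to `baseline`, in percent."""
    return 100.0 * (value - baseline) / baseline


area_delta = relative_change(RNOC_D_AREA_MM2, HERMES_AREA_MM2)
power_deltas = [relative_change(p, HERMES_POWER_MW) for p in RNOC_D_POWER_MW]
print(f"area: {area_delta:+.1f}%")                    # area: -13.7%
print(", ".join(f"{d:+.1f}%" for d in power_deltas))  # -8.3%, +11.1%
```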

| Router | Head-Flit (Cycles) | Saturation (%) | Flow Control | Network Topology |
|---|---|---|---|---|
| Rotary [6] | 4 | 75 | VCT/bubble | 2D-Torus |
| C1 | 2 | 50 | Wormhole | Mesh |
| DM3 | | 63 | | DMesh |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Effiong, C.; Sassatelli, G.; Gamatié, A. Combined Distributed Shared-Buffered and Diagonally-Linked Mesh Topology for High-Performance Interconnect. Micromachines 2022, 13, 2246. https://doi.org/10.3390/mi13122246
