Design and Development of a Wearable Assistive Device Integrating a Fuzzy Decision Support System for Blind and Visually Impaired People

Abstract

1. Introduction

2. Materials and Methods

2.1. Design Requirements

- The first point concerns positioning systems, which provide location information. The choice of these components depends mainly on the requirements of the desired solution and on the application environment (indoor/outdoor); given these two factors, the appropriate technology is selected with cost taken into account;

- The second point is environmental monitoring, which is essential in this type of application, particularly for detecting static and dynamic obstacles;

- The third point is the user interface, which relies on the data collected about the environment and navigation and conveys this information to blind users to guide them while navigating.

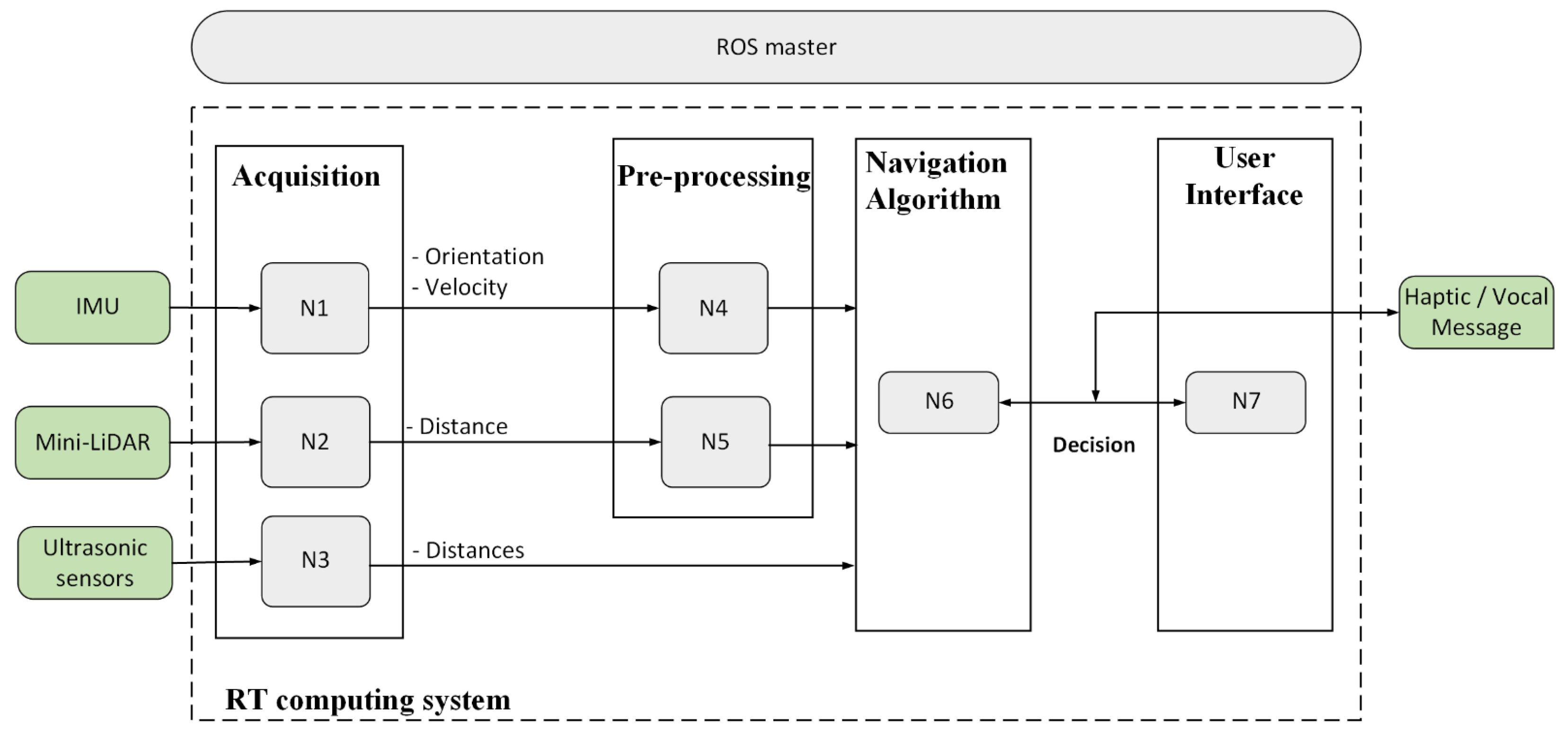

2.1.1. Unit Description

- Compute the velocity from the acquired position data (from the UM7);

- Extract the yaw value from the magnetometer data and apply a smoothing filter to the speed and orientation data (from the UM7) and to the distance data (from the mini-LiDAR);

- Normalize the rectified data used as fuzzy classifier inputs by mapping all values to [0, 10].
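The velocity, smoothing, and normalization steps above can be illustrated with a minimal Python sketch. It is not the author's implementation: the moving-average filter, the sampling period `dt`, the window size, and the sensor range `lo`/`hi` are all assumptions chosen for illustration, since the text does not specify them. Yaw extraction is omitted.

```python
import math

def velocity_from_positions(positions, dt):
    """Finite-difference speed between consecutive (x, y) positions sampled every dt seconds."""
    speeds = []
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        speeds.append(math.hypot(x1 - x0, y1 - y0) / dt)
    return speeds

def moving_average(values, window=3):
    """Causal moving average (assumed filter); the window is shorter at the start of the series."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

def normalize(value, lo, hi, scale=10.0):
    """Map value from the assumed sensor range [lo, hi] to [0, scale], clamping outliers."""
    clamped = min(max(value, lo), hi)
    return scale * (clamped - lo) / (hi - lo)

# Hypothetical position samples in metres, one every 0.5 s.
positions = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (2.0, 0.0)]
speeds = velocity_from_positions(positions, dt=0.5)
smooth = moving_average(speeds, window=2)
fuzzy_inputs = [normalize(s, lo=0.0, hi=2.0) for s in smooth]
print(fuzzy_inputs)
```

The same `normalize` helper could be applied to the mini-LiDAR distance, with `lo` and `hi` set to that sensor's working range.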

2.1.2. Navigation System Architecture

2.1.3. Mechanical Design

- Modeling of the 3D geometry of the desired wearable device using CAD software;

- Processing of the acquired data through dedicated software;

- Realization of the designed device using a 3D printer.

2.1.4. Electrical Design

- The importance of the lower area in the navigation of visually impaired and blind people. The ground may contain a low obstacle or a hole, which are the main factors affecting the safety of the navigation path. The sensor used must therefore cover a wide scanning area with high precision and accuracy;

- The shape of the ground. Identifying the slope of the ground is indispensable for safe navigation. This requires a sensor that can measure the distance to an obstacle even when its shape is complex;

- The ground scanning must be as continuous as possible during navigation, which makes the hand the best location for the sensor. This constraint requires the sensor to be fully integrated, small, and lightweight.

- Higher gyro bias stability and lower noise;

- A new communication architecture for improved flexibility;

- UTC time-synchronization with external GPS;

- Support for third-order temperature compensation on all sensors.

2.2. Navigation Approach

- 1. IF “depth” IS “very low” AND “human velocity” IS “low” THEN “safety level” IS “very low”;

- 2. IF “depth” IS “low” AND “human velocity” IS “low” THEN “safety level” IS “low”;

- 3. IF “depth” IS “medium” AND “human velocity” IS “low” THEN “safety level” IS “medium”;

- 4. IF “depth” IS “high” AND “human velocity” IS “low” THEN “safety level” IS “medium”;

- 5. IF “depth” IS “very high” AND “human velocity” IS “low” THEN “safety level” IS “high”;

- 6. IF “depth” IS “very low” AND “human velocity” IS “medium” THEN “safety level” IS “low”;

- 7. IF “depth” IS “low” AND “human velocity” IS “medium” THEN “safety level” IS “medium”;

- 8. IF “depth” IS “medium” AND “human velocity” IS “medium” THEN “safety level” IS “medium”;

- 9. IF “depth” IS “high” AND “human velocity” IS “medium” THEN “safety level” IS “high”;

- 10. IF “depth” IS “very high” AND “human velocity” IS “medium” THEN “safety level” IS “very high”;

- 11. IF “depth” IS “very low” AND “human velocity” IS “high” THEN “safety level” IS “medium”;

- 12. IF “depth” IS “low” AND “human velocity” IS “high” THEN “safety level” IS “medium”;

- 13. IF “depth” IS “medium” AND “human velocity” IS “high” THEN “safety level” IS “high”;

- 14. IF “depth” IS “high” AND “human velocity” IS “high” THEN “safety level” IS “very high”;

- 15. IF “depth” IS “very high” AND “human velocity” IS “high” THEN “safety level” IS “very high”.
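To show how the fifteen rules above can be evaluated, the following Python sketch encodes them as a lookup table and applies a simplified fuzzy inference. Only the rule base comes from the text. The triangular membership functions, their breakpoints on the [0, 10] scale, the use of `min` for AND, and the weighted average of output label centers (instead of a full centroid defuzzification) are assumptions made for illustration.

```python
def tri(x, a, b, c):
    """Triangular membership with feet a and c and peak b (a == b or b == c gives a shoulder)."""
    if x < a or x > c:
        return 0.0
    if x == b:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

# Assumed membership functions on the normalized [0, 10] scale.
DEPTH = {
    "very low": (0, 0, 2.5), "low": (0, 2.5, 5), "medium": (2.5, 5, 7.5),
    "high": (5, 7.5, 10), "very high": (7.5, 10, 10),
}
VELOCITY = {"low": (0, 0, 5), "medium": (0, 5, 10), "high": (5, 10, 10)}
# Assumed representative value of each output label.
SAFETY_CENTER = {"very low": 0.0, "low": 2.5, "medium": 5.0, "high": 7.5, "very high": 10.0}

# Rules 1-15 from the text: (depth, human velocity) -> safety level.
RULES = {
    ("very low", "low"): "very low", ("low", "low"): "low",
    ("medium", "low"): "medium", ("high", "low"): "medium",
    ("very high", "low"): "high",
    ("very low", "medium"): "low", ("low", "medium"): "medium",
    ("medium", "medium"): "medium", ("high", "medium"): "high",
    ("very high", "medium"): "very high",
    ("very low", "high"): "medium", ("low", "high"): "medium",
    ("medium", "high"): "high", ("high", "high"): "very high",
    ("very high", "high"): "very high",
}

def safety_level(depth, velocity):
    """Crisp safety score in [0, 10] from normalized depth and velocity."""
    num = den = 0.0
    for (d_label, v_label), s_label in RULES.items():
        # Firing strength of the rule: AND modelled as min.
        w = min(tri(depth, *DEPTH[d_label]), tri(velocity, *VELOCITY[v_label]))
        num += w * SAFETY_CENTER[s_label]
        den += w
    return num / den if den else 0.0

print(safety_level(5, 5))
```

With these assumed membership functions, inputs that sit exactly on a label peak fire a single rule, so the score equals the center of that rule's output label.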

3. Case Study

- The eyeglasses, containing three ultrasonic sensors with the related vibrator modules, assembled in a headband and attached with a hook-and-loop (Velcro-type) fastener;

- The wearable hand accessory, including the mini-LiDAR and the related vibration module, which is also attached with a simple hook-and-loop fastener;

- Control and power systems embedded inside a handbag carried by the user.

4. Experimental Results

4.1. Experimental Setup and Tests with Healthy Participants

4.2. Experimental Setup and Tests with Visually Impaired Subjects

- The ease of wearing and the portability of the device;

- The assistance provided when moving along unknown paths and in unfamiliar environments;

- How safe they felt when using our device;

- The real-time decisions about the safety of the path and the avoidance of dynamic objects;

- Suggestions for product improvement.

5. Conclusions

Funding

Conflicts of Interest


| Depth \ Velocity | Low | Medium | High |
|---|---|---|---|
| Very Low | Very Low | Low | Medium |
| Low | Low | Medium | Medium |
| Medium | Medium | Medium | High |
| High | Medium | High | Very High |
| Very High | High | Very High | Very High |

| Simulated Environments | Haptic Feedback | Vocal Message | Safety Estimation | Power Management | Real-Time Decision Making | Safe Path Generation |
|---|---|---|---|---|---|---|
| Indoor | Reliable | Reliable | Good | Excellent | Yes | Good |
| Outdoor | Reliable | Acceptable | Acceptable | Excellent | Yes | Acceptable |

| Scenario | Path | Length (m) | Static Obstacles | Dynamic Obstacles | Dangerous Obstacles | Average Time (s), Ours | Average Time (s), Cane | Total Collisions, Ours | Total Collisions, Cane | Dangerous Obstacles Detection, Ours | Dangerous Obstacles Detection, Cane | Trust Safe Path Generation, Ours | Trust Safe Path Generation, Cane |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Indoor | Path1 | 32 | 3 | 0 | 0 | 114 | 121 | 0 | 0 | - | - | - | - |
| Indoor | Path2 | 44 | 5 | 1 | 0 | 165 | 178 | 0 | 4 | - | - | - | - |
| Indoor | Path3 | 54 | 11 | 2 | 1 | 215 | 244 | 0 | 8 | 1 | 1 | Yes | No |
| Outdoor | Path4 | 241 | 28 | 10 | 2 | 569 | 681 | 3 | 28 | 2 | 2 | Yes | No |
| Outdoor | Path5 | 316 | 39 | 16 | 5 | 754 | 988 | 5 | 40 | 4 | 3 | Yes | No |
| Outdoor | Path6 | 411 | 55 | 22 | 11 | 911 | 1058 | 12 | 61 | 9 | 5 | Yes | No |

| Total Collisions, Ours | Total Collisions, Cane | Static Obstacles, Ours | Static Obstacles, Cane | Dynamic Obstacles, Ours | Dynamic Obstacles, Cane | Collisions with High Obstacles, Ours | Collisions with High Obstacles, Cane | Collisions with Middle Obstacles, Ours | Collisions with Middle Obstacles, Cane | Collisions with Ground Obstacles and Cavities, Ours | Collisions with Ground Obstacles and Cavities, Cane |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0 | 4 | 0 | 3 | 0 | 1 | 0 | 3 | 0 | 0 | 0 | 1 |
| 0 | 8 | 0 | 6 | 0 | 2 | 0 | 6 | 0 | 1 | 0 | 1 |
| 3 | 28 | 0 | 20 | 3 | 8 | 1 | 14 | 0 | 4 | 2 | 10 |
| 5 | 40 | 1 | 25 | 4 | 15 | 2 | 22 | 0 | 5 | 3 | 13 |
| 12 | 61 | 2 | 42 | 10 | 19 | 4 | 32 | 1 | 8 | 7 | 21 |

| Participants | Totally Blind or Partially Sighted | Easiness | Assistance | Safety | Real-Time | Suggestions |
|---|---|---|---|---|---|---|
| Participant 1 | Partially sighted | Yes | Yes | Yes | Yes | The device should be more compact |
| Participant 2 | Partially sighted | Yes | Yes | Yes | Yes | – |
| Participant 3 | Totally blind | Questionable | Yes | Questionable | Yes | Add face recognition |
| Participant 4 | Totally blind | Yes | Yes | Questionable | Yes | Add object identification |
| Participant 5 | Totally blind | Yes | Yes | Yes | Yes | Add character recognition, cash recognition |
| Participant 6 | Partially sighted | Yes | Yes | Yes | Yes | Reduce cost of product |
| Participant 7 | Partially sighted | Questionable | Yes | Yes | Yes | Add a GPS |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bouteraa, Y. Design and Development of a Wearable Assistive Device Integrating a Fuzzy Decision Support System for Blind and Visually Impaired People. Micromachines 2021, 12, 1082. https://doi.org/10.3390/mi12091082
