Blood Vessel Segmentation of Retinal Image Based on Dense-U-Net Network

Abstract

1. Introduction

2. Principle of Retinal Image Segmentation

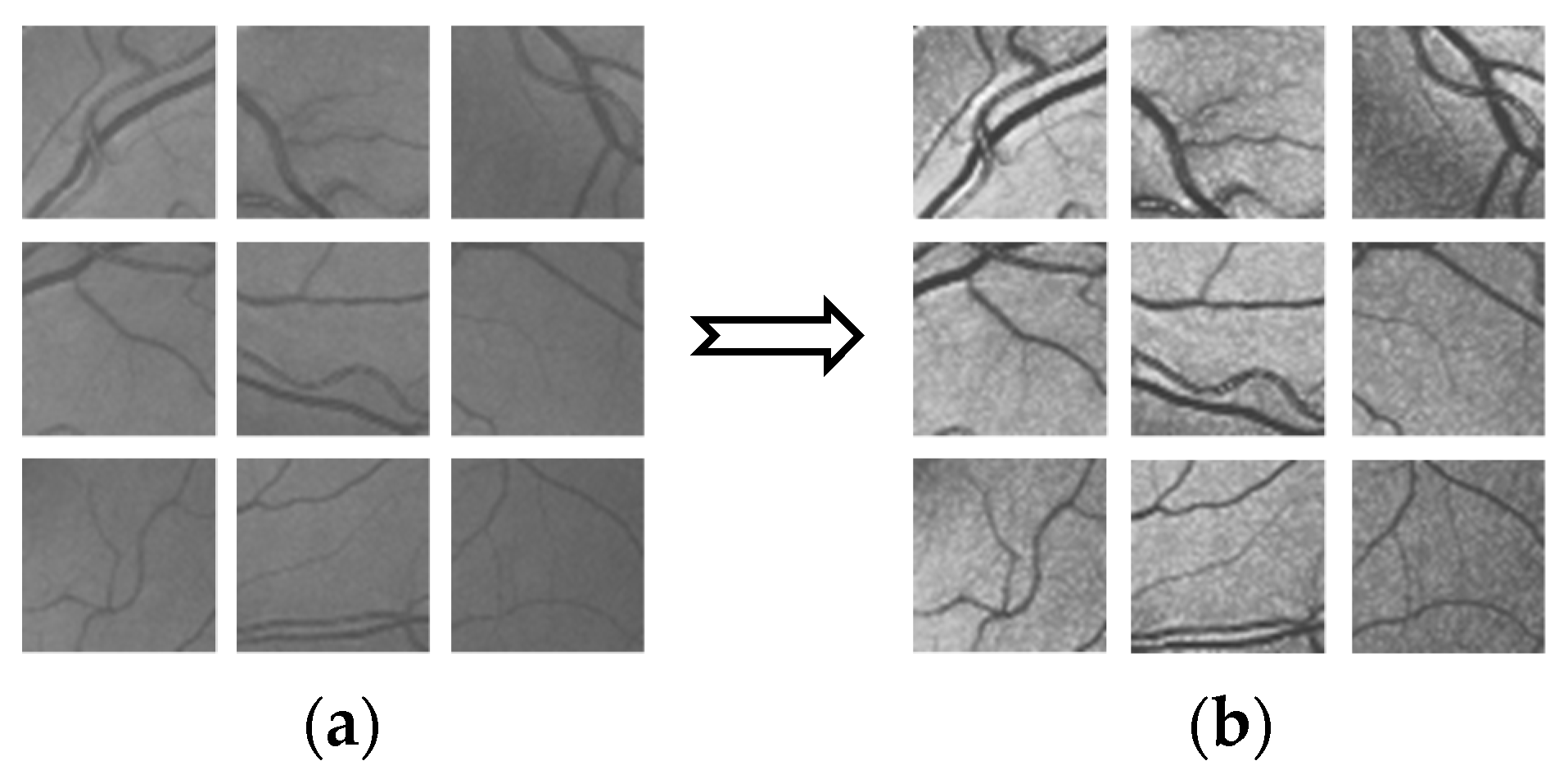

2.1. Image Preprocessing

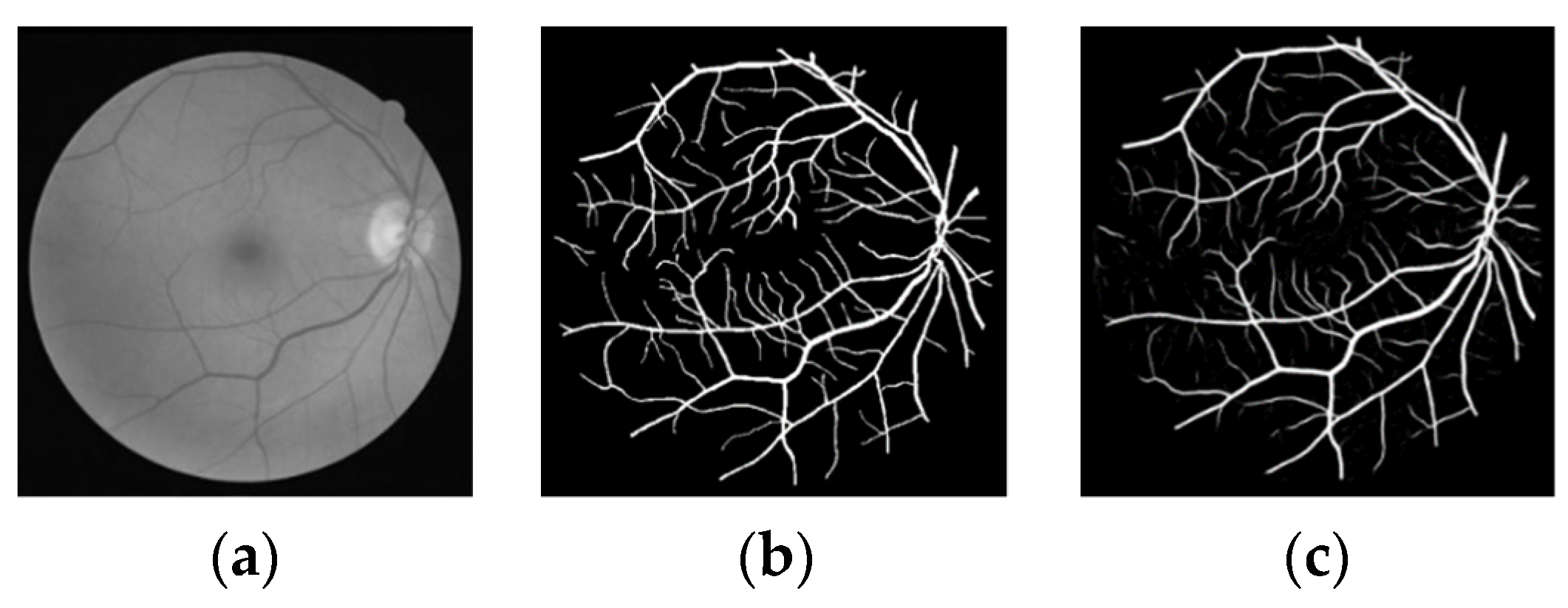

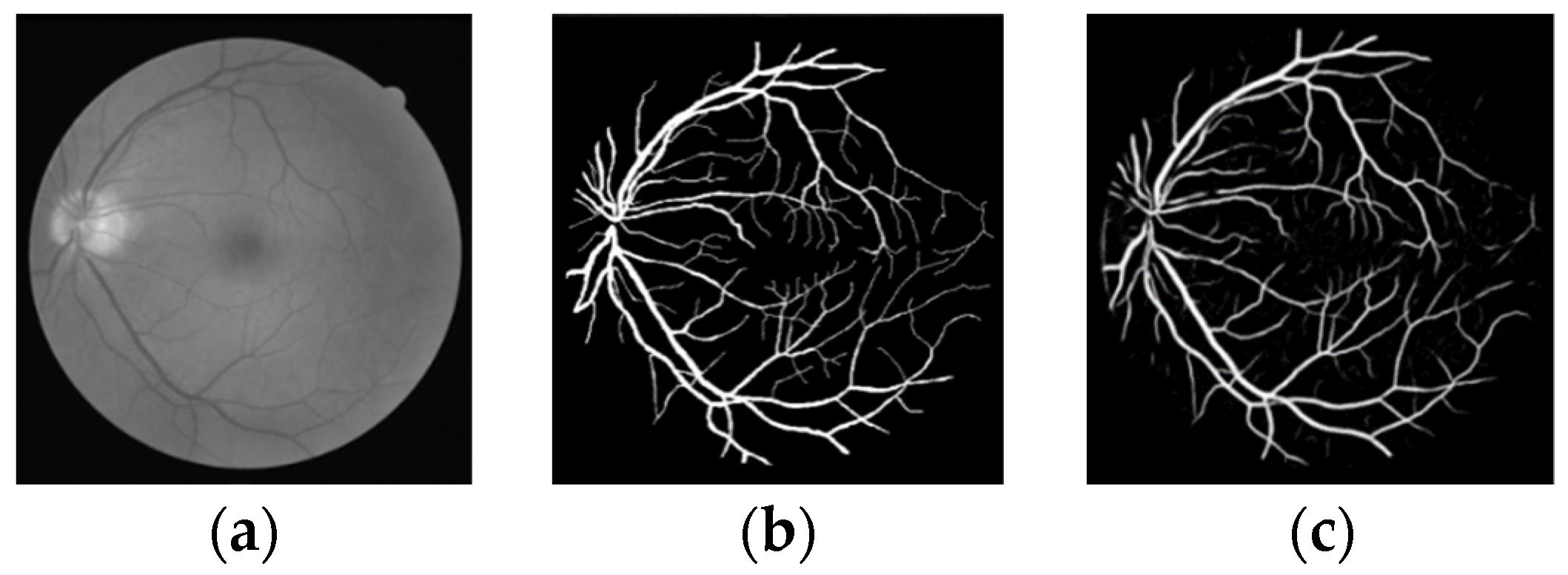

- (a) Green channel extraction. The green-channel image has high contrast and low noise, so it is used as the input data.

- (b) Contrast-limited adaptive histogram equalization (CLAHE). CLAHE improves contrast and suppresses noise, which makes vascular information easier to extract.

- (c) Median filtering. Median filtering removes interference from lesions and the pipeline, which better highlights the vessel features.

- (d) Data normalization. The pixel values of the image are mapped to the range [0, 1] by min-max normalization:

  $$x^{*} = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

  where $x$ is the pixel value of the input image, $x_{\min}$ and $x_{\max}$ are the minimum and maximum pixel values in the image sample data, respectively, and $x^{*}$ is the normalized pixel value.

- (e) Adaptive gamma correction [23]. Adaptive gamma correction enhances the brightness of the darker parts of the vessels in the image while effectively preserving the quality of the brighter parts.

- (f) Multi-scale morphological transformation [24]. Four scales are selected, with control factors that regulate the edge gradient information of the image. The model is defined as:

  $$I_{\text{out}} = I_{\text{in}} + \omega\,\left(D_{\text{b}} - D_{\text{d}}\right)$$

  where $\omega$ is the image detail enhancement factor; $I_{\text{in}}$ and $I_{\text{out}}$ are the input and output images, respectively; and $D_{\text{b}}$ and $D_{\text{d}}$ are the bright-detail and dark-detail features, respectively. The preprocessing results are shown in Figure 2.
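
The six preprocessing steps above can be chained into a single function. The sketch below is a minimal NumPy-only illustration under stated assumptions, not the authors' code: CLAHE is left as an optional caller-supplied function (for example, a thin wrapper around `skimage.exposure.equalize_adapthist` or OpenCV's `cv2.createCLAHE`); the 3 × 3 median window, the four scales (3, 5, 7, 9) and the enhancement weight 0.5 are hypothetical choices; and `adaptive_gamma` uses a simple mean-to-0.5 heuristic rather than the algorithm of [23]. Step (f) assumes the form output = input + ω (bright details − dark details), with bright and dark details taken as white and black top-hat responses summed over the four scales. All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _window_reduce(img, size, reducer):
    """Apply `reducer` over a size x size square window centred on every pixel.

    `size` must be odd; borders are handled by edge (nearest-pixel) padding.
    """
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return reducer(windows, axis=(-2, -1))


def median_filter(img, size=3):
    """Step (c): median filtering (the 3 x 3 window is a hypothetical choice)."""
    return _window_reduce(img, size, np.median)


def erode(img, size):
    """Grey-level erosion with a flat square structuring element."""
    return _window_reduce(img, size, np.min)


def dilate(img, size):
    """Grey-level dilation with a flat square structuring element."""
    return _window_reduce(img, size, np.max)


def minmax_normalize(img):
    """Step (d): x* = (x - x_min) / (x_max - x_min), mapping values into [0, 1]."""
    lo, hi = float(img.min()), float(img.max())
    if hi == lo:  # constant image: avoid division by zero
        return np.zeros_like(img, dtype=float)
    return (img - lo) / (hi - lo)


def adaptive_gamma(img):
    """Step (e) stand-in: a hypothetical heuristic, NOT the algorithm of [23].

    Gamma is chosen so that the mean intensity maps to 0.5. For a dark image
    (mean < 0.5) this gives gamma < 1, which lifts low and mid-range values
    while leaving values near 1 almost unchanged. Expects values in [0, 1].
    """
    mean = float(np.clip(img.mean(), 1e-6, 1 - 1e-6))
    gamma = np.log(0.5) / np.log(mean)
    return img ** gamma


def multiscale_tophat(img, scales=(3, 5, 7, 9), weight=0.5):
    """Step (f), assuming out = in + weight * (bright details - dark details).

    Bright details are white top-hats (img - opening) and dark details are
    black top-hats (closing - img), summed over four hypothetical scales.
    """
    bright = np.zeros_like(img)
    dark = np.zeros_like(img)
    for s in scales:
        opened = dilate(erode(img, s), s)  # opening removes small bright structures
        closed = erode(dilate(img, s), s)  # closing fills small dark structures
        bright += img - opened
        dark += closed - img
    return np.clip(img + weight * (bright - dark), 0.0, 1.0)


def preprocess(rgb, clahe=None):
    """Chain steps (a) to (f) on an H x W x 3 RGB fundus image."""
    green = rgb[..., 1].astype(float)        # (a) green channel
    if clahe is not None:
        green = clahe(green)                  # (b) caller-supplied CLAHE
    smoothed = median_filter(green, size=3)   # (c) median filtering
    normalized = minmax_normalize(smoothed)   # (d) min-max normalization
    brightened = adaptive_gamma(normalized)   # (e) gamma correction
    return multiscale_tophat(brightened)      # (f) multi-scale top-hat
```

Calling `preprocess(rgb_image)` returns a float array in [0, 1] with the same height and width as the input. The sliding-window reductions keep the sketch dependency-free; for full-size fundus images, `scipy.ndimage.median_filter`, `scipy.ndimage.grey_opening` and `scipy.ndimage.grey_closing` are faster alternatives, although their default boundary handling may differ.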

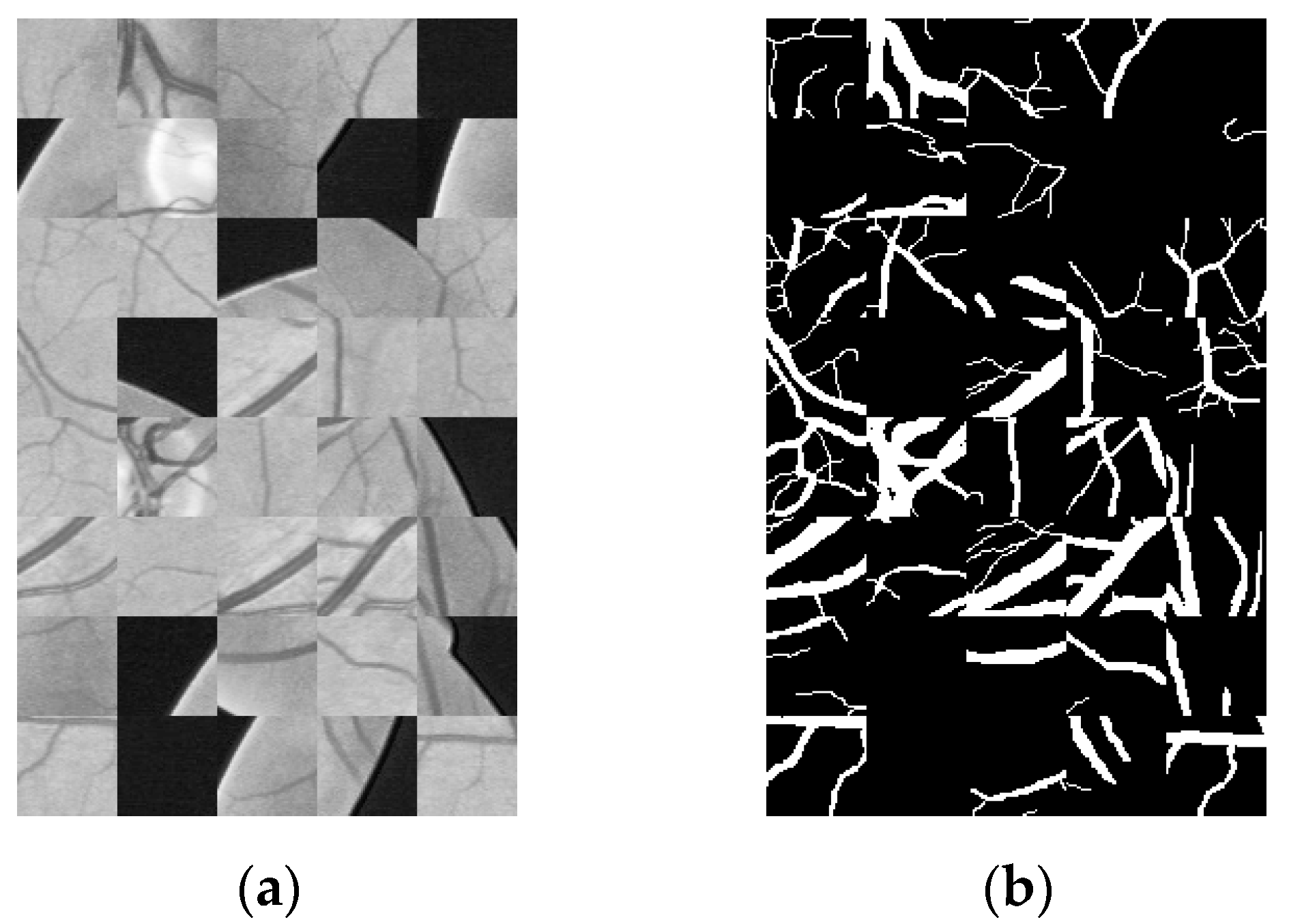

2.2. Data Augmentation

2.3. Dense-U-Net Model

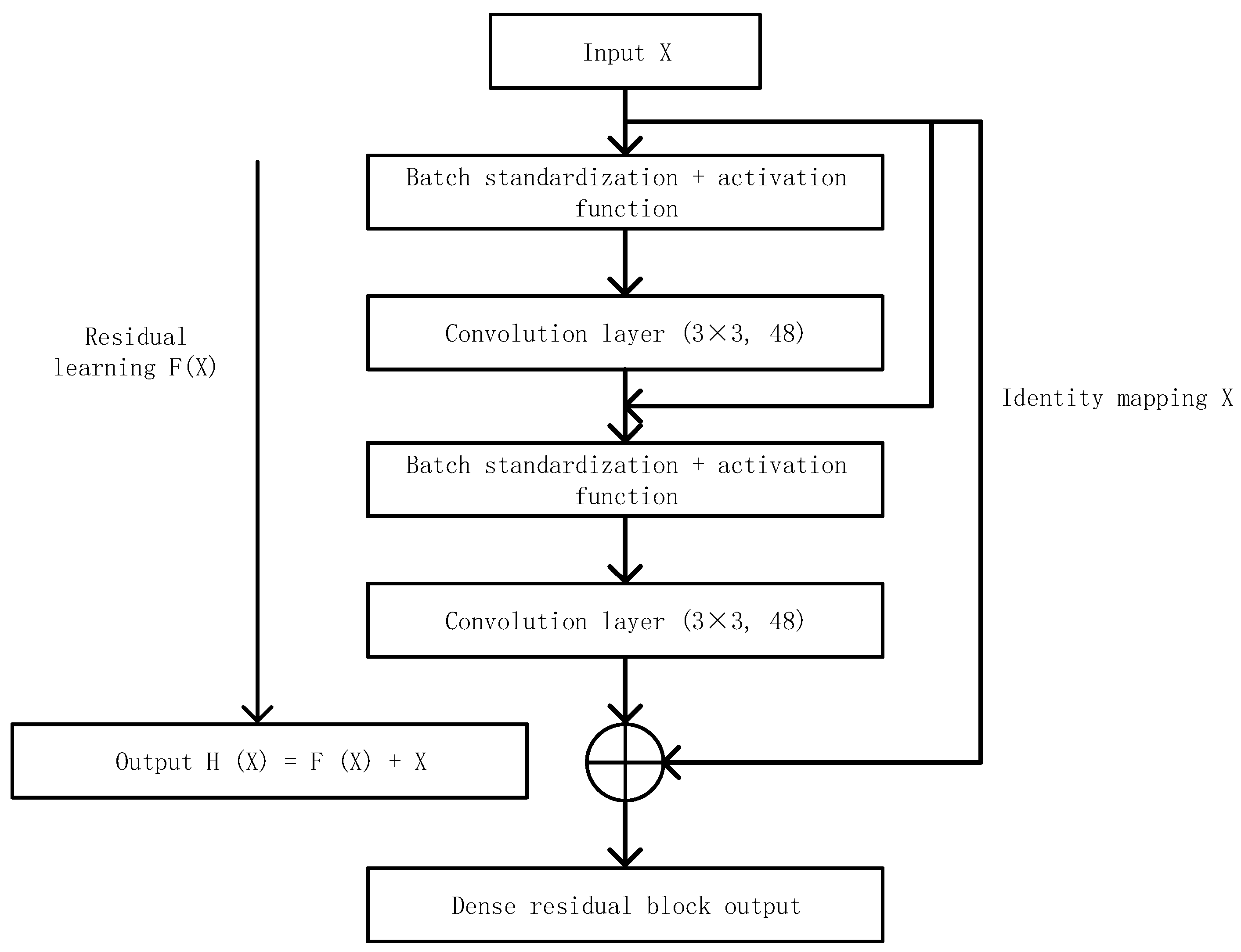

2.3.1. Dense Block

2.3.2. Loss Function

3. Experiment

3.1. Experimental Data Set

3.2. Evaluation Indicators

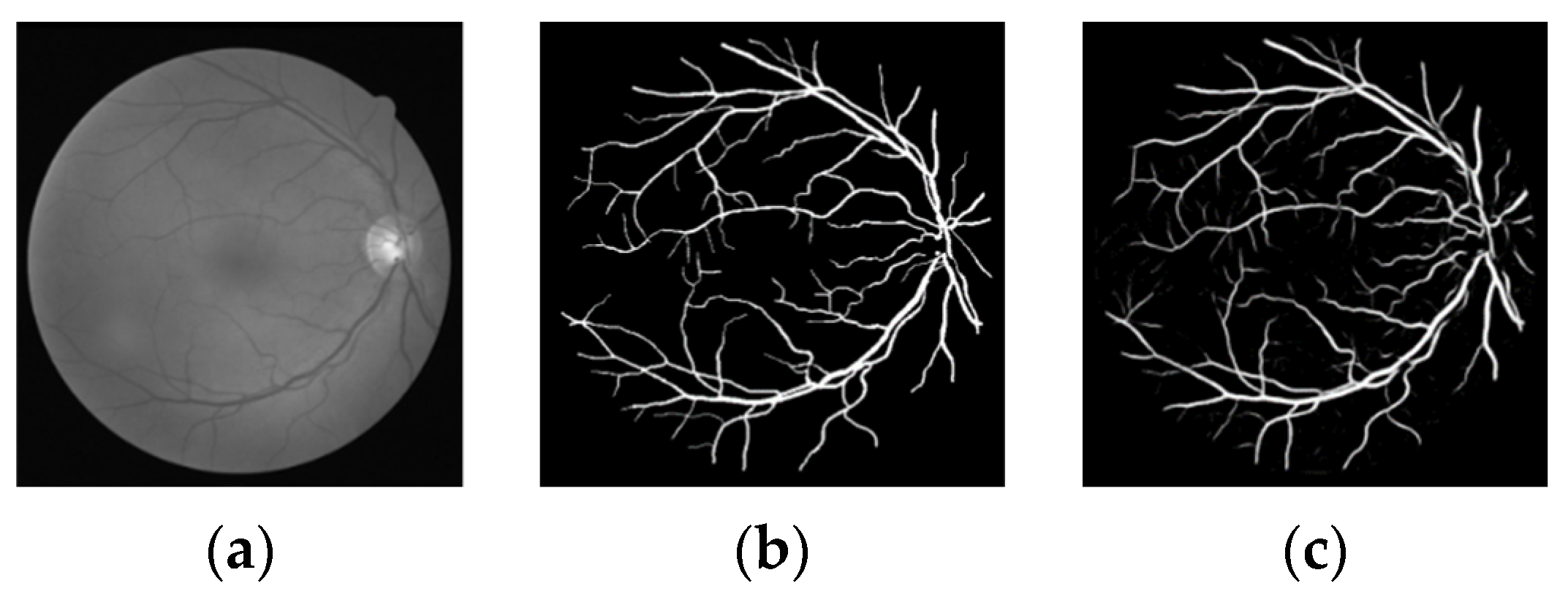

3.3. Experimental Results and Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Roychowdhury, S.; Koozekanani, D.D.; Parhi, K.K. Blood Vessel Segmentation of Fundus Images by Major Vessel Extraction and Sub-image Classification. IEEE J. Biomed. Health Inform. 2015, 19, 1118–1128.

2. Chaudhuri, S.; Chatterjee, S.; Katz, N.; Nelson, M.; Goldbaum, M. Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imaging 1989, 8, 263–269.

3. Zana, F.; Klein, J.C. Segmentation of vessel-like patterns using mathematical morphology and curvature evaluation. IEEE Trans. Image Process. 2001, 10, 1010–1019.

4. Liu, I.; Sun, Y. Recursive tracking of vascular networks in angiograms based on the detection-deletion scheme. IEEE Trans. Med. Imaging 1993, 12, 334–341.

5. Jaspreet, K.; Sinha, H.P. Automated Detection of Retinal Blood Vessels in Diabetic Retinopathy Using Gabor Filter. Int. J. Comput. Sci. Netw. Secur. 2012, 4, 109–116.

6. Wang, X.; Jiang, X. Retinal vessel segmentation by a divide-and-conquer funnel-structured classification framework. Signal Process. 2019, 165, 104–114.

7. Yan, Z.; Yang, X.; Cheng, K.T. A Three-Stage Deep Learning Model for Accurate Retinal Vessel Segmentation. IEEE J. Biomed. Health Inform. 2019, 23, 1427–1436.

8. Fraz, M.M.; Barman, S.A.; Remagnino, P.; Hoppe, A.; Basit, A.; Uyyanonvara, B.; Rudnicka, A.; Owen, C. An approach to localize the retinal blood vessels using bit planes and centerline detection. Comput. Methods Programs Biomed. 2012, 108, 600–616.

9. Soares, J.; Leandro, J.; Cesar, R.M. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans. Med. Imaging 2006, 25, 1214–1222.

10. Saffarzadeh, V.M.; Osareh, A.; Shadgar, B. Vessel Segmentation in Retinal Images Using Multi-scale Line Operator and K-Means Clustering. J. Med. Signals Sens. 2014, 4, 122–129.

11. Gu, Z.W.; Cheng, J.; Fu, H.Z.; Zhou, K.; Hao, H.; Zhao, Y.; Zhang, T.; Gao, S.; Liu, J. CE-Net: Context encoder network for 2D medical image segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292.

12. Choy, S.K.; Ng, T.C.; Yu, C. Unsupervised Fuzzy Model-based Image Segmentation. Signal Process. 2020, 171, 107483.

13. Zhang, J.; Li, H.; Nie, Q.; Cheng, L. A retinal vessel boundary tracking method based on Bayesian theory and multi-scale line detection. Comput. Med. Imaging Graph. 2014, 38, 517–525.

14. Orlando, J.I.; Prokofyeva, E.; Blaschko, M.B. A discriminatively trained fully connected conditional random field model for blood vessel segmentation in fundus images. IEEE Trans. Biomed. Eng. 2017, 64, 16–27.

15. Soomro, T.A.; Afifi, A.J.; Zheng, L.; Soomro, S.; Gao, J.; Hellwich, O.; Paul, M. Deep Learning Models for Retinal Blood Vessels Segmentation: A Review. IEEE Access 2019, 7, 71696–71717.

16. Wang, S.; Yin, Y.; Cao, G.; Wei, B.; Zheng, Y.; Yang, G. Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing 2015, 149, 708–717.

17. Liskowski, P.; Krawiec, K. Segmenting Retinal Blood Vessels with Deep Neural Networks. IEEE Trans. Med. Imaging 2016, 35, 2369–2380.

18. Suryani, E.; Susilo, M. The hybrid method of SOM artificial neural network and median thresholding for segmentation of blood vessels in the retina image fundus. Int. J. Fuzzy Log. Intell. Syst. 2019, 19, 323–331.

19. Zhou, Y.K.; Chen, Z.L.; Shen, H.L.; Zheng, X.; Zhao, R.; Duan, X. A refined equilibrium generative adversarial network for retinal vessel segmentation. Neurocomputing 2021, 437, 118–130.

20. Fu, H.; Xu, Y.; Lin, S.; Wong, D.W.K.; Liu, J. DeepVessel: Retinal Vessel Segmentation via Deep Learning and Conditional Random Field. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; Springer: Cham, Switzerland, 2016; pp. 132–139.

21. Zhou, L.; Yu, Q.; Xu, X.; Gu, Y.; Yang, J. Improving dense conditional random field for retinal vessel segmentation by discriminative feature learning and thin-vessel enhancement. Comput. Methods Programs Biomed. 2017, 148, 13–25.

22. Pan, X.; Zhang, Q.; Zhang, H. A Fundus Retinal Vessels Segmentation Scheme Based on the Improved Deep Learning U-Net Model. IEEE Access 2019, 7, 122634–122643.

23. Rahman, S.; Rahman, M.M.; Abdullah-Al-Wadud, M.; Al-Quaderi, G.D.; Shoyaib, M. An adaptive gamma correction for image enhancement. EURASIP J. Image Video Process. 2016, 2016, 35.

24. Nahar, S.; Munir, M.B. Automatic detection of premature ventricular contraction beat using morphological transformation and cross-correlation. In Proceedings of the International Conference on Signal Processing & Communication Systems, Omaha, NE, USA, 28–30 September 2009; pp. 1–4.

25. Oliveira, A.; Pereira, S.; Silva, C.A. Augmenting data when training a CNN for retinal vessel segmentation: How to warp? In Proceedings of the 2017 IEEE 5th Portuguese Meeting on Bioengineering (ENBENG), Coimbra, Portugal, 16–18 February 2017; IEEE Press: Manhattan, NY, USA, 2017; pp. 1–4.

26. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

27. He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

28. Xie, S.; Tu, Z. Holistically-Nested Edge Detection. Int. J. Comput. Vis. 2017, 125, 3–18.

29. Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; IEEE Press: Manhattan, NY, USA, 2016; pp. 565–571.

30. Staal, J.; Abramoff, M.D.; Niemeijer, M.; Viergever, M.A.; Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 2004, 23, 501–509.

31. Wang, C.; Zhao, Z.; Ren, Q.; Xu, Y.; Yu, Y. Dense U-net Based on Patch-Based Learning for Retinal Vessel Segmentation. Entropy 2019, 21, 168.

32. Chen, Y. A Labeling-Free Approach to Supervising Deep Neural Networks for Retinal Blood Vessel Segmentation. arXiv 2017, arXiv:1704.07502.

33. Strisciuglio, N.; Azzopardi, G.; Vento, M.; Petkov, N. Supervised vessel delineation in retinal fundus images with the automatic selection of B-COSFIRE filters. Mach. Vis. Appl. 2016, 27, 1137–1149.

34. Guo, C.; Szemenyei, M.; Pei, Y.; Yi, Y.; Zhou, W. SD-U-net: A Structured Dropout U-Net for Retinal Vessel Segmentation. In Proceedings of the IEEE 19th International Conference on Bioinformatics and Bioengineering, Athens, Greece, 28–30 October 2019; pp. 439–444.

35. Alom, M.Z.; Yakopcic, C.; Hasan, M.; Taha, T.M.; Asari, V.K. Recurrent residual U-Net for medical image segmentation. J. Med. Imaging 2019, 6, 014006.

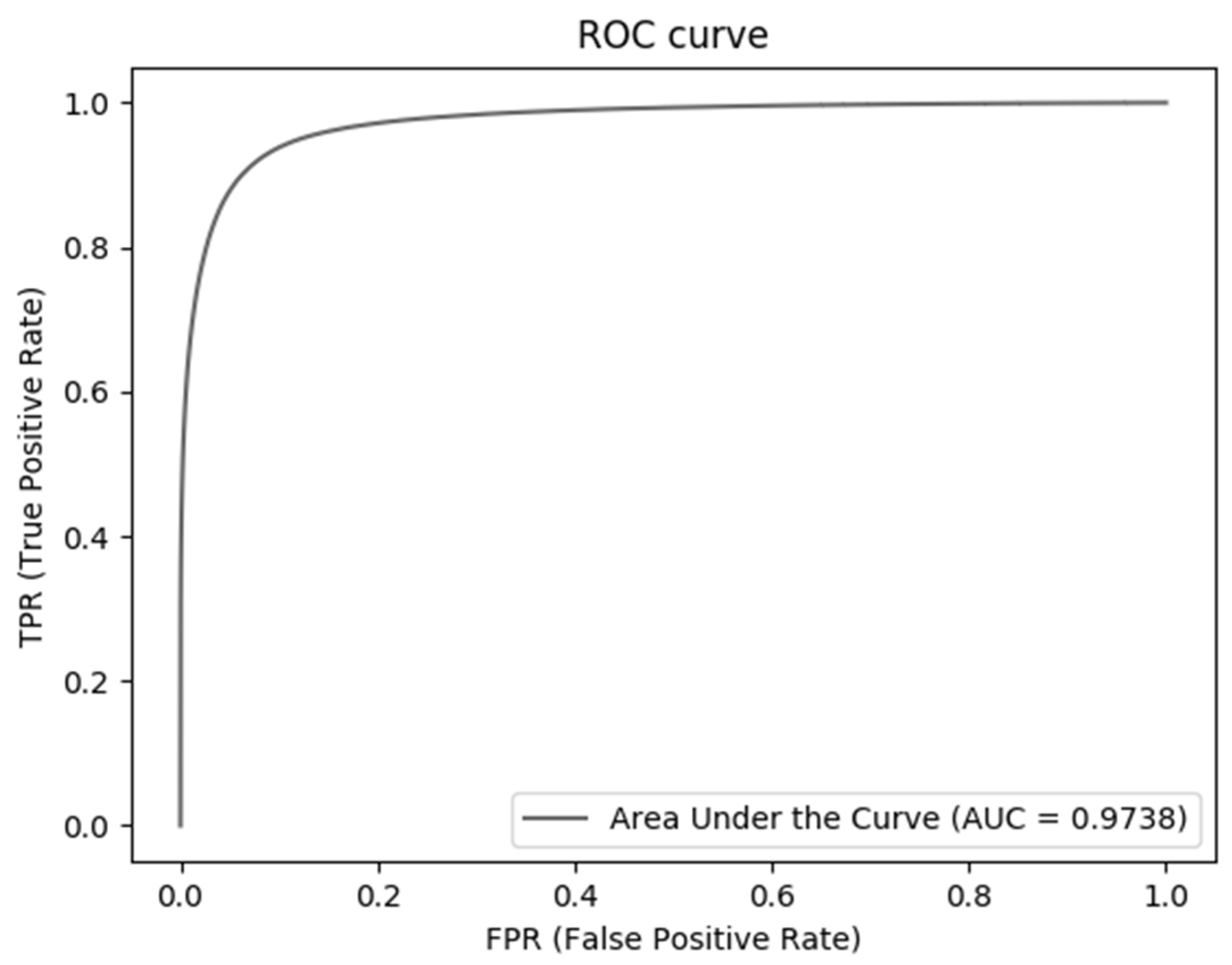

| Method | PPV (precision) | Sp (specificity) | Sn (sensitivity) | Acc (accuracy) | AUC |
|---|---|---|---|---|---|
| Wang [31] | - | 0.9736 | 0.7986 | 0.9511 | 0.9740 |
| Chen [32] | - | 0.9735 | 0.7426 | 0.9453 | 0.9516 |
| Strisciuglio [33] | - | 0.9724 | 0.7731 | 0.9467 | 0.9588 |
| Guo [34] | 0.8335 | 0.9848 | 0.7891 | 0.9674 | 0.9836 |
| Alom [35] | - | 0.9813 | 0.7792 | 0.9556 | 0.9784 |
| Proposed method | 0.8946 | 0.9896 | 0.7931 | 0.9698 | 0.9738 |
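
The table columns are pixel-wise measures of agreement between a predicted vessel map and a reference annotation. As a minimal sketch, assuming the standard confusion-matrix definitions (PPV = TP/(TP+FP), Sp = TN/(TN+FP), Sn = TP/(TP+FN), Acc = (TP+TN)/(TP+TN+FP+FN)) and computing AUC from a soft probability map with the rank-sum (Mann–Whitney) formulation, the metrics could be computed as follows. The function names are hypothetical and this is not the authors' evaluation code.

```python
import numpy as np


def _ratio(num, den):
    """Return num / den as a float, or NaN when the denominator is zero."""
    return float(num) / float(den) if den else float("nan")


def confusion_metrics(pred, truth):
    """PPV, Sp, Sn and Acc for binary maps where True/1 marks a vessel pixel."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # vessel predicted as vessel
    tn = int(np.sum(~pred & ~truth))  # background predicted as background
    fp = int(np.sum(pred & ~truth))   # background predicted as vessel
    fn = int(np.sum(~pred & truth))   # vessel predicted as background
    return {
        "PPV": _ratio(tp, tp + fp),
        "Sp": _ratio(tn, tn + fp),
        "Sn": _ratio(tp, tp + fn),
        "Acc": _ratio(tp + tn, tp + tn + fp + fn),
    }


def rank_auc(scores, truth):
    """AUC via the rank-sum statistic; tied scores receive their average rank."""
    s = np.asarray(scores, dtype=float).ravel()
    t = np.asarray(truth, dtype=bool).ravel()
    _, inverse, counts = np.unique(s, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)              # highest 1-based rank in each tie group
    avg_rank = ends - (counts - 1) / 2.0  # mean rank of each tie group
    ranks = avg_rank[inverse.ravel()]
    n_pos = int(t.sum())
    n_neg = t.size - n_pos
    if n_pos == 0 or n_neg == 0:
        return float("nan")
    return float((ranks[t].sum() - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg))
```

Here `pred` would come from thresholding the network's probability output (the threshold is an assumption, for example 0.5), while `rank_auc` takes the probabilities directly. Restricting `pred`, `truth` and `scores` to the field-of-view mask before calling these functions is a common convention, and it changes Sp and Acc.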

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Jia, M.; Yang, X.; Xu, M. Blood Vessel Segmentation of Retinal Image Based on Dense-U-Net Network. Micromachines 2021, 12, 1478. https://doi.org/10.3390/mi12121478

Chicago/Turabian style: Li, Zhenwei, Mengli Jia, Xiaoli Yang, and Mengying Xu. 2021. "Blood Vessel Segmentation of Retinal Image Based on Dense-U-Net Network." Micromachines 12, no. 12: 1478. https://doi.org/10.3390/mi12121478