Vari-Focal Light Field Camera for Extended Depth of Field

Abstract

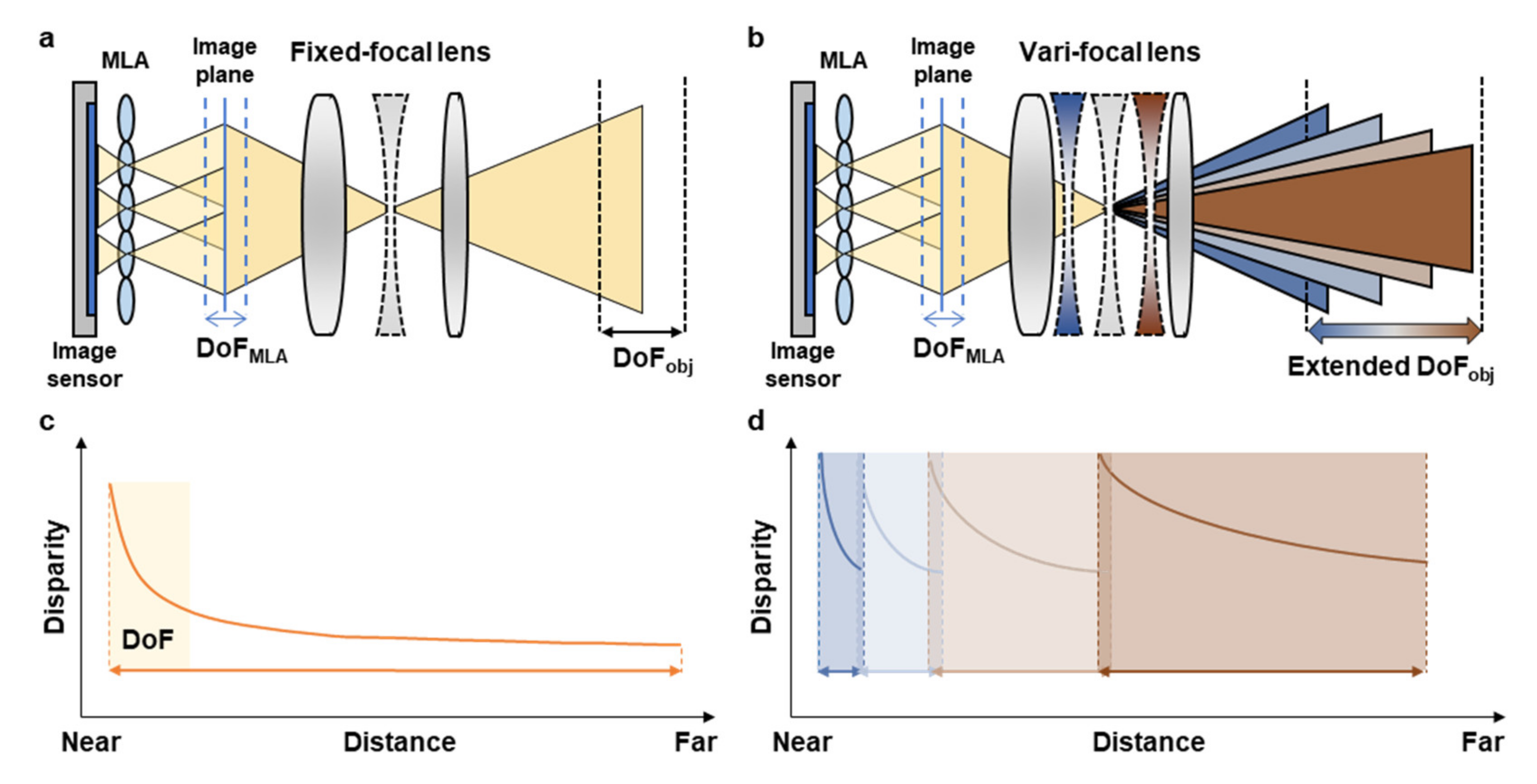

1. Introduction

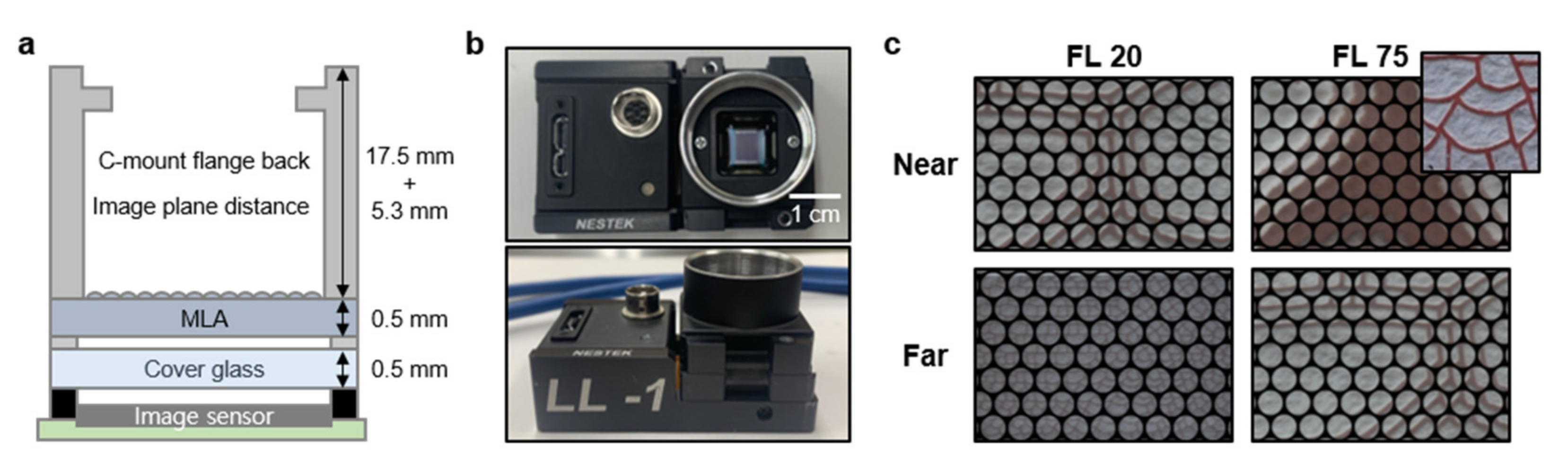

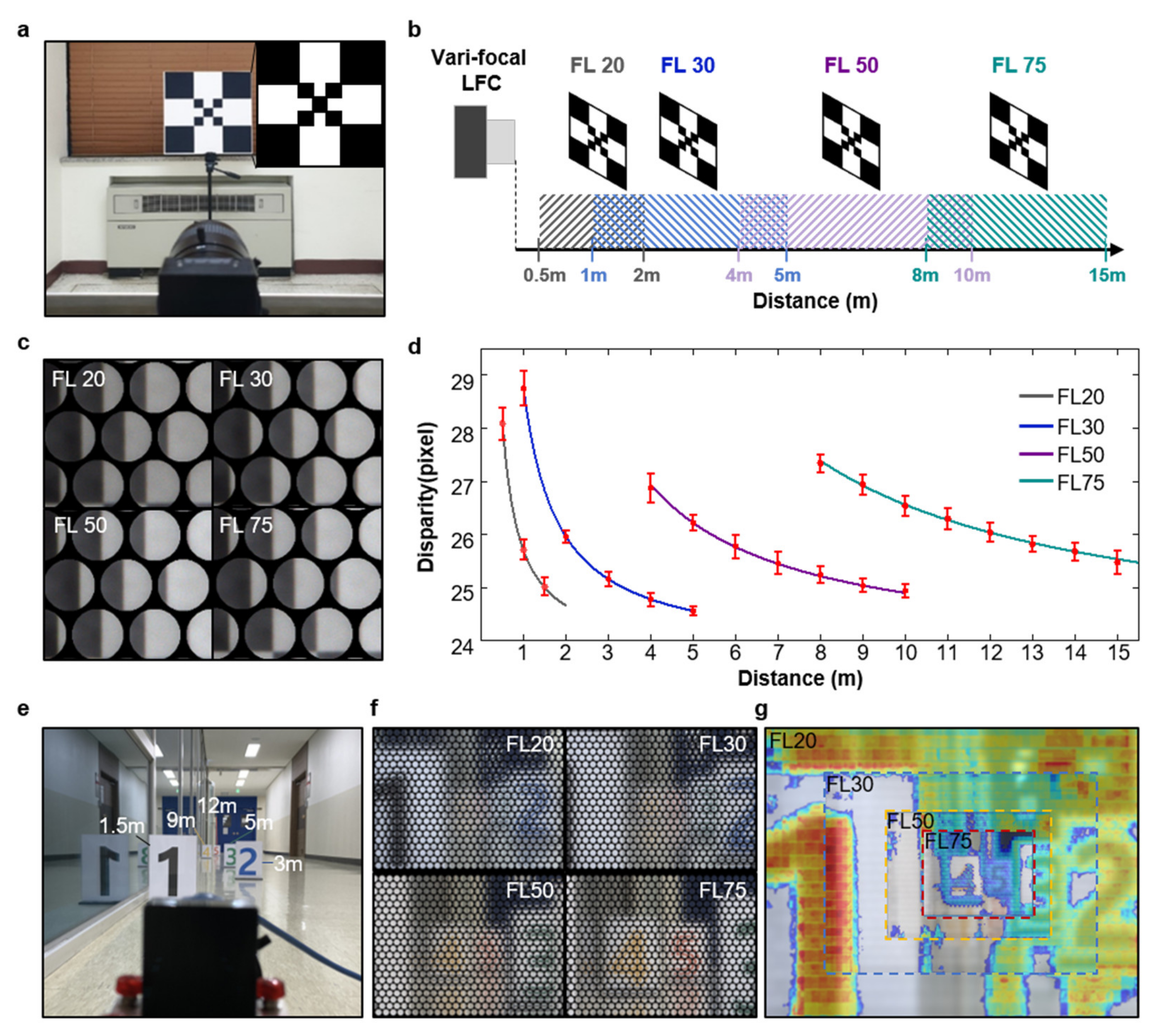

2. Materials and Methods

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lee, G.J.; Choi, C.; Kim, D.H.; Song, Y.M. Bioinspired Artificial Eyes: Optic Components, Digital Cameras, and Visual Prostheses. Adv. Funct. Mater. 2018, 28, 1705202.

2. Song, Y.M.; Xie, Y.; Malyarchuk, V.; Xiao, J.; Jung, I.; Choi, K.J.; Liu, Z.; Park, H.; Lu, C.; Kim, R.H.; et al. Digital cameras with designs inspired by the arthropod eye. Nature 2013, 497, 95–99.

3. Gu, L.; Poddar, S.; Lin, Y.; Long, Z.; Zhang, D.; Zhang, Q.; Shu, L.; Qiu, X.; Kam, M.; Javey, A.; et al. A biomimetic eye with a hemispherical perovskite nanowire array retina. Nature 2020, 581, 278–282.

4. Kim, M.S.; Lee, G.J.; Choi, C.; Kim, M.S.; Lee, M.; Liu, S.; Cho, K.W.; Kim, H.M.; Cho, H.; Choi, M.K.; et al. An aquatic-vision-inspired camera based on a monocentric lens and a silicon nanorod photodiode array. Nat. Electron. 2020, 3, 546–553.

5. Ives, H.E. Parallax Panoramagrams Made with a Large Diameter Lens. J. Opt. Soc. Am. 1930, 20, 332–342.

6. Lippmann, G. Épreuves réversibles donnant la sensation du relief. J. Phys. Theor. Appl. 1908, 7, 821–825.

7. Ng, R.; Levoy, M.; Brédif, M.; Duval, G.; Horowitz, M.; Hanrahan, P. Light Field Photography with a Hand-Held Plenoptic Camera; Research Report CSTR 2005-02; Stanford University: Stanford, CA, USA, 2005.

8. Georgiev, T.; Lumsdaine, A. Superresolution with Plenoptic 2.0 Cameras. In Frontiers in Optics 2009/Laser Science XXV/Fall 2009 OSA Optics & Photonics Technical Digest, OSA Technical Digest (CD); Optical Society of America: Washington, DC, USA, 2009; paper STuA6.

9. Kim, H.M.; Kim, M.S.; Lee, G.J.; Yoo, Y.J.; Song, Y.M. Large area fabrication of engineered microlens array with low sag height for light-field imaging. Opt. Express 2019, 27, 4435–4444.

10. Bok, Y.; Jeon, H.G.; Kweon, I.S. Geometric Calibration of Micro-Lens-Based Light Field Cameras Using Line Features. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 287–300.

11. Jeon, H.G.; Park, J.; Choe, G.; Park, J.; Bok, Y.; Tai, Y.W.; Kweon, I.S. Accurate depth map estimation from a lenslet light field camera. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1547–1555.

12. Kim, H.M.; Kim, M.S.; Lee, G.J.; Jang, H.J.; Song, Y.M. Miniaturized 3D Depth Sensing-Based Smartphone Light Field Camera. Sensors 2020, 20, 2129.

13. Perwaß, C.; Wietzke, L. Single lens 3D-camera with extended depth-of-field. In Human Vision and Electronic Imaging XVII; Proceedings of SPIE; SPIE: Bellingham, WA, USA, 2012; Volume 8291, ID 829108.

14. Georgiev, T.; Lumsdaine, A. The multifocus plenoptic camera. In Digital Photography VIII; Proceedings of SPIE; SPIE: Bellingham, WA, USA, 2012; Volume 8299, ID 829908.

15. Lee, J.H.; Chang, S.; Kim, M.S.; Kim, Y.J.; Kim, H.M.; Song, Y.M. High-Identical Numerical Aperture, Multifocal Microlens Array through Single-Step Multi-Sized Hole Patterning Photolithography. Micromachines 2020, 11, 1068.

16. Lei, Y.; Tong, Q.; Zhang, X.; Sang, H.; Xie, C. Plenoptic camera based on a liquid crystal microlens array. In Novel Optical Systems Design and Optimization XVIII; Proceedings of SPIE; SPIE: Bellingham, WA, USA, 2015; Volume 9579, ID 95790T.

17. Chen, M.; He, W.; Wei, D.; Hu, C.; Shi, J.; Zhang, X.; Wang, H.; Xie, C. Depth-of-Field-Extended Plenoptic Camera Based on Tunable Multi-Focus Liquid-Crystal Microlens Array. Sensors 2020, 20, 4142.

18. Hsieh, P.Y.; Chou, P.Y.; Lin, H.A.; Chu, C.Y.; Huang, C.T.; Chen, C.H.; Qin, Z.; Corral, M.M.; Javidi, B.; Huang, Y.P. Long working range light field microscope with fast scanning multifocal liquid crystal microlens array. Opt. Express 2018, 26, 10981–10996.

19. Xin, Z.; Wei, D.; Xie, X.; Chen, M.; Zhang, X.; Liao, J.; Wang, H.; Xie, C. Dual-polarized light-field imaging micro-system via a liquid-crystal microlens array for direct three-dimensional observation. Opt. Express 2018, 26, 4035–4049.

20. Kwon, H.; Kizu, Y.; Kizaki, Y.; Ito, M.; Kobayashi, M.; Ueno, R.; Suzuki, K.; Funaki, H. A Gradient Index Liquid Crystal Microlens Array for Light-Field Camera Applications. IEEE Photonics Technol. Lett. 2015, 27, 836–839.

21. Algorri, J.F.; Morawiak, P.; Bennis, N.; Zografopoulos, D.C.; Urruchi, V.; Rodríguez-Cobo, L.; Jaroszewicz, L.R.; Sánchez-Pena, J.M.; López-Higuera, J.M. Positive-negative tunable liquid crystal lenses based on a microstructured transmission line. Sci. Rep. 2020, 10, 10153.

22. Pertuz, S.; Pulido-Herrera, E.; Kamarainen, J.K. Focus model for metric depth estimation in standard plenoptic cameras. ISPRS J. Photogramm. Remote Sens. 2018, 144, 38–47.

23. Palmieri, L. The Plenoptic Toolbox 2.0. Available online: https://github.com/PlenopticToolbox/PlenopticToolbox2.0 (accessed on 17 October 2018).

24. Monteiro, N.B.; Marto, S.; Barreto, J.P.; Gaspar, J. Depth range accuracy for plenoptic cameras. Comput. Vis. Image Underst. 2018, 168, 104–117.

25. Chen, Y.; Jin, X.; Dai, Q. Distance measurement based on light field geometry and ray tracing. Opt. Express 2017, 25, 59–76.

26. Bae, S.I.; Kim, K.; Jang, K.W.; Kim, H.K.; Jeong, K.H. High Contrast Ultrathin Light-Field Camera Using Inverted Microlens Arrays with Metal–Insulator–Metal Optical Absorber. Adv. Opt. Mater. 2021, 9, 2001657.

27. Zeller, N.; Quint, F.; Stilla, U. Depth estimation and camera calibration of a focused plenoptic camera for visual odometry. ISPRS J. Photogramm. Remote Sens. 2016, 118, 83–100.

28. Ibrahim, M.M.; Liu, Q.; Khan, R.; Yang, J.; Adeli, E.; Yang, Y. Depth map artefacts reduction: A review. IET Image Process. 2020, 14, 2630–2644.

29. Tao, M.W.; Su, J.C.; Wang, T.C.; Malik, J.; Ramamoorthi, R. Depth Estimation and Specular Removal for Glossy Surfaces Using Point and Line Consistency with Light-Field Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1155–1169.

| Microlens Array | | Image Sensor | |
|---|---|---|---|
| Pitch | 240 µm | Model | IMX178 (Sony, Tokyo, Japan) |
| Focal length | 965 µm | Pixel size | 2.4 µm |
| Array type | Hexagonal | Number of pixels | 3096 (H) × 2080 (V) |

| 1.235 | 101.8 | 20, 30, 50, 75 | 5.3 |
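The microlens array and image sensor values tabulated above imply a few simple geometric quantities. The following is a minimal Python sketch of that arithmetic, not the authors' method. The function names are hypothetical, and the f-number estimate assumes the clear aperture of each microlens equals its pitch (full fill factor), which the table does not state.

```python
# Values copied from the specification table (microlens array and image sensor).
MLA_PITCH_UM = 240.0         # microlens pitch
MLA_FOCAL_LENGTH_UM = 965.0  # microlens focal length
PIXEL_SIZE_UM = 2.4          # sensor pixel size
SENSOR_PIXELS_H = 3096       # horizontal pixel count
SENSOR_PIXELS_V = 2080       # vertical pixel count


def pixels_per_microlens(pitch_um: float, pixel_size_um: float) -> float:
    """Number of sensor pixels spanned by one microlens pitch (one axis)."""
    return pitch_um / pixel_size_um


def microlens_f_number(focal_length_um: float, pitch_um: float) -> float:
    """Approximate f-number, ASSUMING aperture diameter equals the pitch."""
    return focal_length_um / pitch_um


def sensor_size_mm(pixels_h: int, pixels_v: int, pixel_size_um: float):
    """Active sensor area (width, height) in millimetres."""
    return (pixels_h * pixel_size_um / 1000.0,
            pixels_v * pixel_size_um / 1000.0)


if __name__ == "__main__":
    n_px = pixels_per_microlens(MLA_PITCH_UM, PIXEL_SIZE_UM)
    f_num = microlens_f_number(MLA_FOCAL_LENGTH_UM, MLA_PITCH_UM)
    width_mm, height_mm = sensor_size_mm(
        SENSOR_PIXELS_H, SENSOR_PIXELS_V, PIXEL_SIZE_UM
    )
    print(f"Pixels per microlens pitch: {n_px:.1f}")
    print(f"Microlens f-number (aperture = pitch assumed): {f_num:.2f}")
    print(f"Sensor active area: {width_mm:.2f} mm x {height_mm:.2f} mm")
```

Under these assumptions each microlens spans about 100 pixels per axis and has an f-number of roughly 4. The count of microlenses over the sensor is left out because it depends on the hexagonal packing and on alignment details not given in the table.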

| Light Field Type | Objective Lens | Depth of Field | Main Characteristics | Reference |
|---|---|---|---|---|
| Unfocused LFC (1.0) | Zoom lens | 0.05–2 m | Lytro 1st generation | [24] |
| Unfocused LFC (1.0) | Zoom lens | 0.5–0.9 m | - | [25] |
| Focused LFC (2.0) | 3.04 mm | 0.05–0.25 m | Small form factor | [26] |
| Focused LFC (2.0) | 35 mm | 0.7–5.2 m | Raytrix camera | [27] |
| Focused LFC (2.0) | 39.8 mm | ~0.59 m | LC MLA (tunable) | [19] |
| Focused LFC (2.0) | Zoom lens | 0.5–15 m | Vari-focal lens | Ours |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, H.M.; Kim, M.S.; Chang, S.; Jeong, J.; Jeon, H.-G.; Song, Y.M. Vari-Focal Light Field Camera for Extended Depth of Field. Micromachines 2021, 12, 1453. https://doi.org/10.3390/mi12121453