The Nutritional Content of Meal Images in Free-Living Conditions—Automatic Assessment with goFOOD™

Abstract

1. Introduction

2. Materials and Methods

2.1. goFOOD™Lite Application

2.2. Feasibility Study

2.3. Database

2.4. Automatic Dietary Assessment

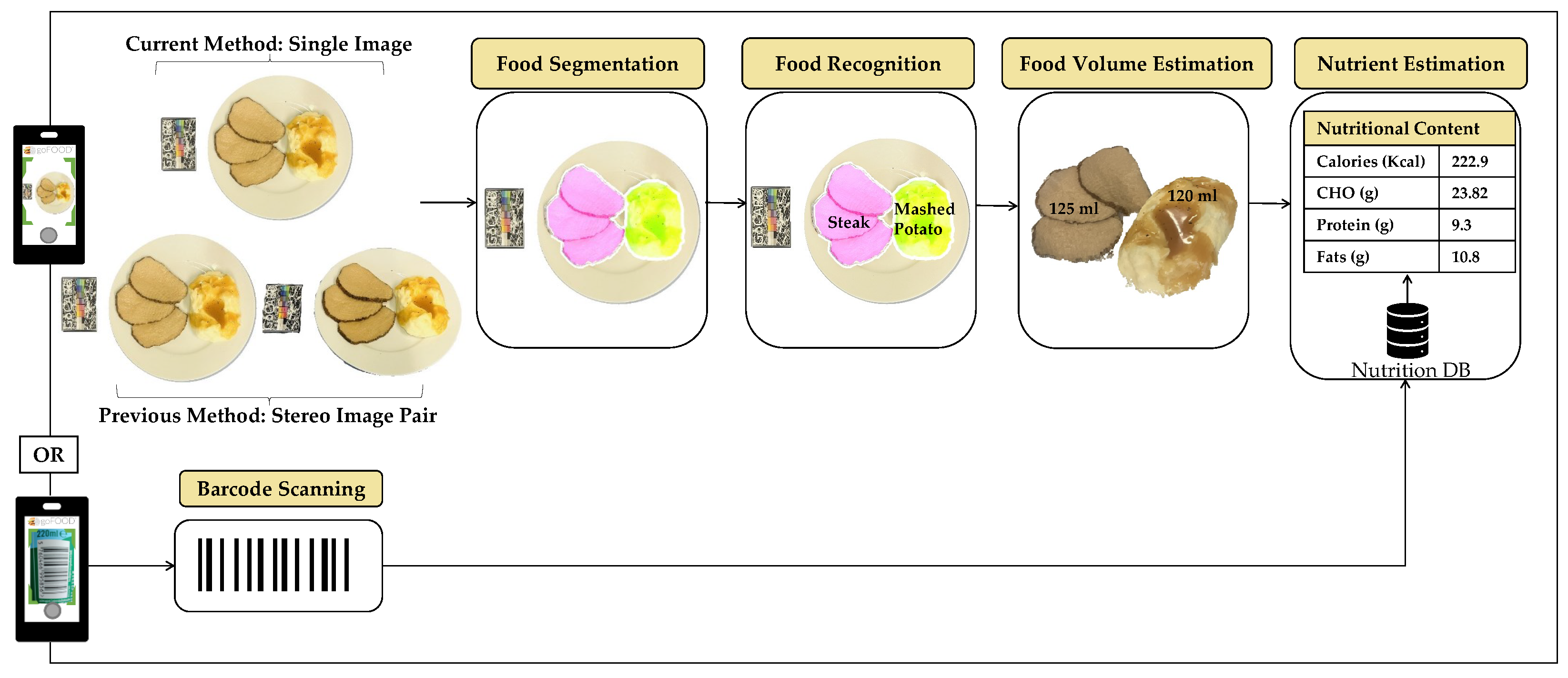

2.4.1. System Pipeline

2.4.2. Food Segmentation

2.4.3. Food Recognition

2.4.4. Food Volume Estimation

Neural-Based Approach

Geometry-Based Approach

2.4.5. Nutrient Estimation

3. Results

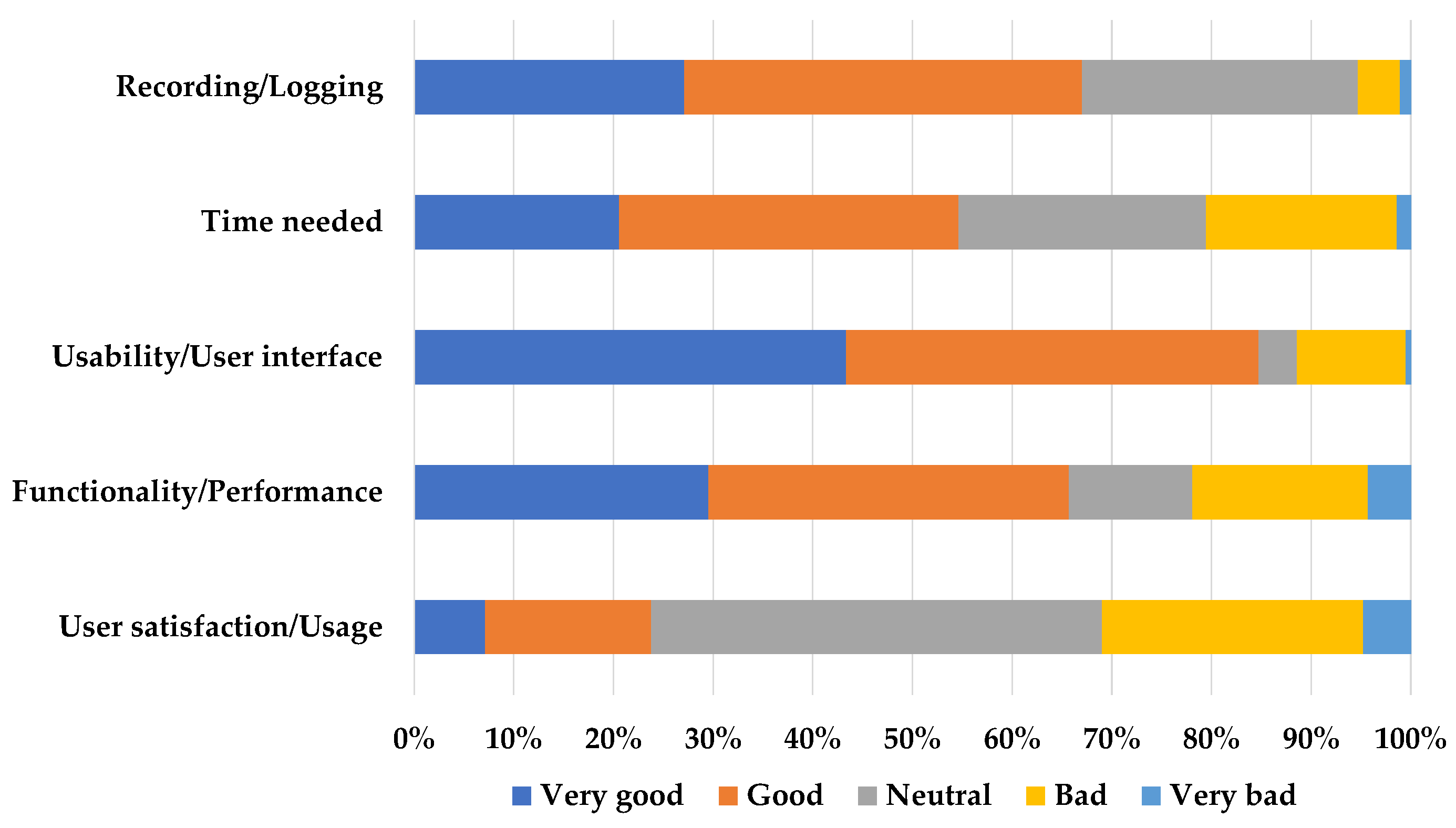

3.1. Feasibility Study

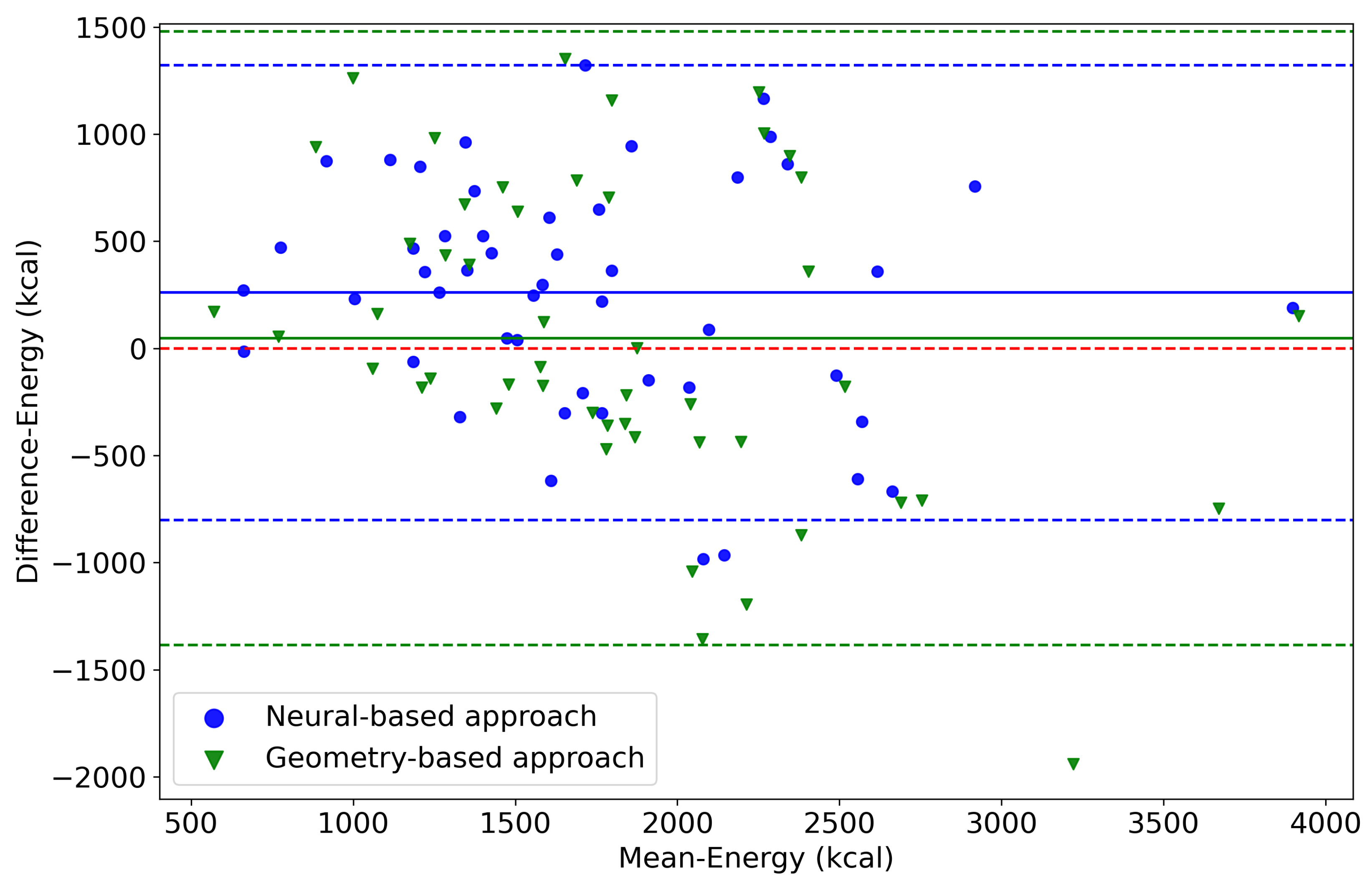

3.2. Automatic Dietary Assessment

3.2.1. Food Segmentation and Recognition Evaluation

3.2.2. System Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CHO | Carbohydrates |
| CNN | Convolutional Neural Network |
| FFQ | Food Frequency Questionnaire |
| GT | Ground Truth |
| IoU | Intersection over Union |
| kcal | Kilocalories |


| Characteristic | Value |
|---|---|
| Sex (n, %) | Female (n = 35, 70%) |
| | Male (n = 15, 30%) |
| Mean age in years (SD) | 29.2 (11.4) |
| Mean BMI in kg/m² (SD) | 27.2 (11.8) |
| Ethnicity (n, %) | White (n = 45, 90%) |
| | Hispanic/Latino (n = 2, 4%) |
| | Asian/Pacific Islander (n = 2, 4%) |
| | Half European/Half Latino (n = 1, 2%) |
| Highest Educational Level (n, %) | High School/Apprenticeship (n = 16, 32%) |
| | Bachelor’s Degree (n = 19, 38%) |
| | Master’s Degree (n = 7, 14%) |
| | PhD (n = 3, 6%) |
| | Other (n = 5, 10%) |
| Occupation (n, %) | Student (n = 27, 54%) |
| | Employed full time (n = 15, 30%) |
| | Employed part time (n = 5, 10%) |
| | Self-employed (n = 1, 2%) |
| | Retired (n = 1, 2%) |
| | Homemaker (n = 1, 2%) |

| Models | Fine Top-1 | Fine Top-5 | Middle Top-1 | Middle Top-3 | Coarse Top-1 | Coarse Top-3 |
|---|---|---|---|---|---|---|
| ResNet-50 | 52.9 | 67.1 | 68.2 | 85.3 | 75.3 | 88.8 |
| RegNetY-16GF | 56.4 | 70.6 | 74.1 | 86.8 | 80.3 | 91.1 |
| ResNet-50 + DivideMix | 55.4 | 66.5 | 70.6 | 85.0 | 75.9 | 88.1 |
| RegNetY-16GF + DivideMix | 58.7 | 78.8 | 75.0 | 89.0 | 79.3 | 91.1 |
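The Top-1, Top-3, and Top-5 columns above presumably follow the usual definition of top-k accuracy: a prediction counts as correct when the true category is among the k highest-scoring categories. The sketch below illustrates that definition only. The function name `top_k_accuracy`, the toy scores, and the three-class setup are assumptions made for illustration and are not taken from the goFOOD™ evaluation code.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Percentage of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this sample.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top_k
    return 100.0 * hits / len(labels)


# Toy scores for 4 images over 3 hypothetical classes (illustrative only,
# not data from the study).
toy_scores = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.4, 0.5, 0.1],
    [0.2, 0.3, 0.5],
]
toy_labels = [0, 1, 0, 0]

top1 = top_k_accuracy(toy_scores, toy_labels, k=1)  # 25.0
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)  # 75.0
```

Under this definition, accuracy can only stay the same or grow as k increases, which matches the pattern in every row of the table.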

| Complete System | kcal | Carbohydrates | Protein | Fat |
|---|---|---|---|---|
| Geometry-based | 31.32 (22.3) | 37.84 (36.4) | 42.41 (25.1) | 51.75 (57.4) |
| Neural-based | 27.41 (16.9) | 31.27 (22.4) | 39.17 (33.9) | 43.24 (32.4) |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Papathanail, I.; Abdur Rahman, L.; Brigato, L.; Bez, N.S.; Vasiloglou, M.F.; van der Horst, K.; Mougiakakou, S. The Nutritional Content of Meal Images in Free-Living Conditions—Automatic Assessment with goFOOD™. Nutrients 2023, 15, 3835. https://doi.org/10.3390/nu15173835
