Performance of the Digital Dietary Assessment Tool MyFoodRepo

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.1.1. Controlled Values Measured from the Weighted Food Diaries

2.1.2. Measurements Made by MFR

2.2. Data Analysis

2.2.1. Segmentation

2.2.2. Classification

2.2.3. Portion Size Estimation

2.2.4. Overall Performance for Energy and Macronutrient Content

3. Results

3.1. Segmentation

3.2. Classification

3.3. Portion Size Estimation

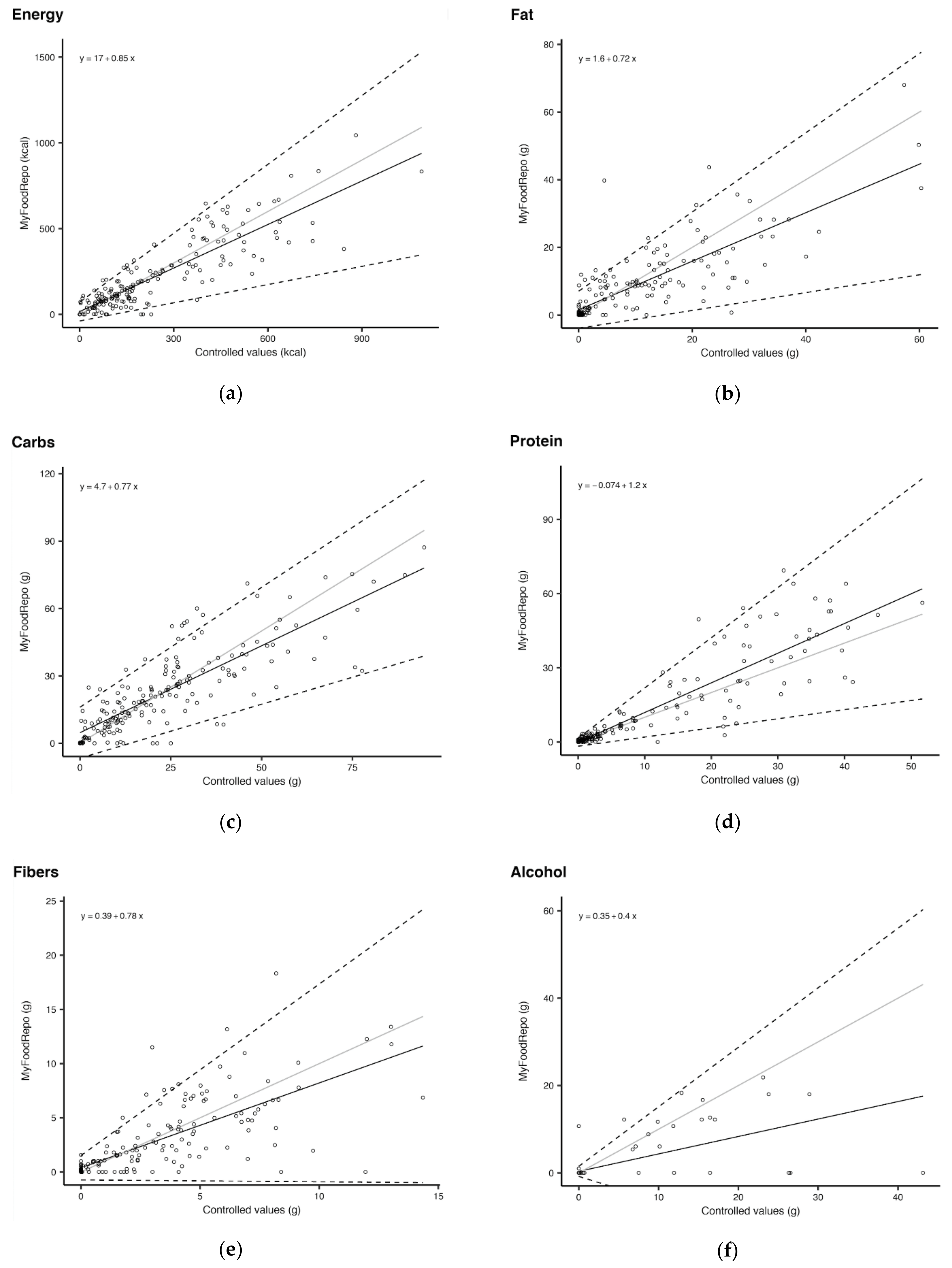

3.4. Overall Performance for Energy and Macronutrient Content

4. Discussion

5. Conclusions and Recommendations

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Segments | Total (n = 352) | Composite Foods (n = 208) | Simple Foods (n = 79) | Composite Beverages (n = 32) | Simple Beverages (n = 33) |
|---|---|---|---|---|---|
| %Found (n) | 98.0% ± 1.5 (n = 345) | 97.6% ± 2.1 (n = 203) | 98.7% ± 2.5 (n = 78) | 96.9% ± 6.1 (n = 31) | 100.0% ± 0.0 (n = 33) |
| %Omitted (n) | 2.0% ± 1.5 (n = 7) | 2.4% ± 2.1 (n = 5) | 1.3% ± 2.5 (n = 1) | 3.1% ± 6.1 (n = 1) | 0.0% (n = 0) |
| %Intruded (n) | 1.4% ± 1.2 (n = 5) | 1.5% ± 1.7 (n = 3) | 2.5% ± 3.5 (n = 2) | 0.0% (n = 0) | 0.0% (n = 0) |
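The ± values in the segmentation table are not defined in this excerpt. They appear numerically consistent with the half-width of a 95% normal-approximation (Wald) interval for a proportion; that reading is an inference from the reported numbers, not a statement from the source. A minimal Python sketch under that assumption (the function name `wald_half_width` is hypothetical):

```python
import math


def wald_half_width(successes: int, n: int, z: float = 1.96) -> float:
    """Half-width, in percentage points, of a Wald interval for a proportion.

    Assumption: the table's "±" is z * sqrt(p * (1 - p) / n) with z = 1.96.
    The source does not state which interval was used.
    """
    p = successes / n
    return 100 * z * math.sqrt(p * (1 - p) / n)


# Counts taken from the "Total" column: 345 of 352 segments found.
found, total = 345, 352
print(f"{100 * found / total:.1f}% ± {wald_half_width(found, total):.1f}")
# prints "98.0% ± 1.5"
```

The same function gives 2.1 for composite foods (203 of 208) and 0.0 for simple beverages (33 of 33), matching the corresponding cells.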

| Records Classification | Total (n = 345) | Composite Foods (n = 203) | Simple Foods (n = 78) | Composite Beverages (n = 31) | Simple Beverages (n = 33) |
|---|---|---|---|---|---|
| %Exact match (n) | 87.5% ± 3.5 (n = 302) | 90.1% ± 4.1 (n = 183) | 96.2% ± 4.3 (n = 75) | 41.9% ± 17.7 (n = 13) | 93.9% ± 8.3 (n = 31) |
| %Close match (n) | 8.4% ± 3.0 (n = 29) | 6.9% ± 3.5 (n = 14) | 3.8% ± 4.3 (n = 3) | 38.7% ± 17.4 (n = 12) | 0.0% (n = 0) |
| %Far match (n) | 1.2% ± 1.1 (n = 4) | 2.0% ± 1.9 (n = 4) | 0.0% (n = 0) | 0.0% (n = 0) | 0.0% (n = 0) |
| %Mismatch (n) | 2.9% ± 1.8 (n = 10) | 1.0% ± 1.4 (n = 2) | 0.0% (n = 0) | 19.4% ± 14.1 (n = 6) | 6.1% ± 8.3 (n = 2) |

| Food Groups | Cohen Kappa | Cohen Kappa Std. Err. | Uniform Kappa | Uniform Kappa [95% CI] | Sensitivity [%] | Sensitivity [95% CI] | Specificity [%] | Specificity [95% CI] |
|---|---|---|---|---|---|---|---|---|
| NaNs beverages | 0.8607 | 0.0533 | 0.977 | [0.954;0.994] | 100 | [75.3;100] | 98.8 | [96.9;99.7] |
| Vegetables | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [95.3;100] | 100 | [98.6;100] |
| Fruit | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [85.8;100] | 100 | [98.9;100] |
| Juice | 0.7721 | 0.0524 | 0.977 | [0.954;0.994] | 100 | [59;100] | 98.8 | [97.0;99.7] |
| Meat & poultry | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [83.9;100] | 100 | [98.9;100] |
| Fish & seafood | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [54.1;100] | 100 | [98.9;100] |
| Unclassified meat | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [39.8;100] | 100 | [98.9;100] |
| Eggs & meat substitutes | 0.8874 | 0.0535 | 0.994 | [0.983;1] | 100 | [39.8;100] | 99.7 | [98.4;100] |
| Dairy products (excl. milk) | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [80.5;100] | 100 | [98.9;100] |
| Milk & milk-based beverages | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [29.2;100] | 100 | [98.9;100] |
| Seeds & nuts | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [29.2;100] | 100 | [98.9;100] |
| Fats & oils | 0.8542 | 0.0538 | 0.988 | [0.971;1] | 85.7 | [42.1;99.6] | 99.7 | [98.4;100] |
| Cereals & cereal-based products | 0.9598 | 0.0538 | 0.988 | [0.971;1] | 96.3 | [81;99.9] | 99.7 | [98.3;100] |
| Rice, rice-based products | 0.9319 | 0.0537 | 0.994 | [0.983;1] | 100 | [59;100] | 99.7 | [98.4;100] |
| Potatoes, legumes & beans | 0.9469 | 0.0538 | 0.988 | [0.971;1] | 90.5 | [69.6;98.8] | 100 | [98.9;100] |
| Salty snacks | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [54.1;100] | 100 | [98.9;100] |
| Sweet dishes | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [78.2;100] | 100 | [98.9;100] |
| Sweeteners | 0.9076 | 0.0536 | 0.994 | [0.983;1] | 83.3 | [35.9;99.6] | 100 | [98.9;100] |
| NaS beverages | 0.8122 | 0.0536 | 0.977 | [0.954;0.994] | 75.0 | [42.8;94.5] | 99.7 | [98.3;100] |
| Alcoholic beverages | 0.8574 | 0.0533 | 0.971 | [0.936;0.994] | 76.2 | [52.8;91.8] | 100 | [98.9;100] |
| Condiments & sauces | 0.9665 | 0.0538 | 0.988 | [0.971;1] | 97.0 | [84.2;99.9] | 99.7 | [98.2;100] |
| Milk substitutes | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [2.5;100] | 100 | [98.9;100] |
| Soups | 1.0000 | 0.0538 | 1 | [1;1] | 100 | [69.2;100] | 100 | [98.9;100] |
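To make the four agreement measures in the food-group table concrete, the sketch below computes them from a 2×2 table (food group assigned by the app versus the reference, for one group against all others). It assumes that "Uniform Kappa" is the chance-corrected agreement with equal chance probability per category, which for two categories is (p_o − 0.5) / 0.5; the excerpt does not define it. The counts are hypothetical: the source reports no confusion matrices, and these values were chosen only because they are consistent with the first row of the table. The function name `agreement_stats` is likewise illustrative.

```python
def agreement_stats(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Cohen kappa, uniform kappa, sensitivity and specificity for a 2x2 table.

    tp/fp/fn/tn count records where the app's label is compared with the
    reference label for a single food group (group vs. not group).
    """
    n = tp + fp + fn + tn
    p_observed = (tp + tn) / n
    # Chance agreement from the marginal totals (Cohen).
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    cohen = (p_observed - p_chance) / (1 - p_chance)
    # Assumption: uniform kappa fixes chance agreement at 1/2 for two categories.
    uniform = (p_observed - 0.5) / (1 - 0.5)
    return {
        "cohen_kappa": cohen,
        "uniform_kappa": uniform,
        "sensitivity": 100 * tp / (tp + fn),
        "specificity": 100 * tn / (tn + fp),
    }


# Hypothetical counts (not from the source), summing to 345 records.
stats = agreement_stats(tp=13, fp=4, fn=0, tn=328)
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

With these illustrative counts the sketch gives a Cohen kappa of about 0.8607, a uniform kappa of about 0.977, sensitivity 100 and specificity about 98.8. It also shows why Cohen kappa can sit well below uniform kappa for rare food groups: when one category dominates, the marginal-based chance agreement is already high.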

| Level of Granularity | Cohen Kappa | Cohen Kappa Std. Err. | Uniform Kappa | Uniform Kappa [95% CI] |
|---|---|---|---|---|
| Food Categories | 0.9603 | 0.0254 | 0.963 | [0.943;0.983] |
| Food Groups | 0.9554 | 0.0158 | 0.958 | [0.933;0.979] |
| Food Types | 0.9559 | 0.0145 | 0.958 | [0.934;0.979] |

| | Coefficient of Variation Cυ at True Values’ 25th Percentile | Coefficient of Variation Cυ at True Values’ Median | Coefficient of Variation Cυ at True Values’ 75th Percentile | Mean Coefficient of Variation (All Records) |
|---|---|---|---|---|
| Energy | 0.58 | 0.45 | 0.37 | 0.35 |
| Fat | 1.68 | 0.83 | 0.52 | 0.42 |
| Carbohydrates | 0.65 | 0.45 | 0.37 | 0.31 |
| Protein | 1.96 | 0.63 | 0.40 | 0.38 |
| Fiber | 1.47 | 0.72 | 0.62 | 0.58 |
| Alcohol | 1.70 | 1.70 | 1.70 | 1.25 |

| | Mean Coefficient of Variation (All Records) | Mean Coefficient of Variation (Foods) | Mean Coefficient of Variation (Beverages) |
|---|---|---|---|
| Energy | 0.35 | 0.33 | 0.75 |
| Fat | 0.42 | 0.41 | 1.18 |
| Carbohydrates | 0.31 | 0.32 | 0.27 |
| Protein | 0.38 | 0.37 | 0.65 |
| Fiber | 0.58 | 0.52 | 2.01 |
| Alcohol | 1.25 | 3.14 | 1.23 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zuppinger, C.; Taffé, P.; Burger, G.; Badran-Amstutz, W.; Niemi, T.; Cornuz, C.; Belle, F.N.; Chatelan, A.; Paclet Lafaille, M.; Bochud, M.; et al. Performance of the Digital Dietary Assessment Tool MyFoodRepo. Nutrients 2022, 14, 635. https://doi.org/10.3390/nu14030635
