Integration of Hyperspectral Imaging and AI Techniques for Crop Type Mapping: Present Status, Trends, and Challenges

Abstract

1. Introduction

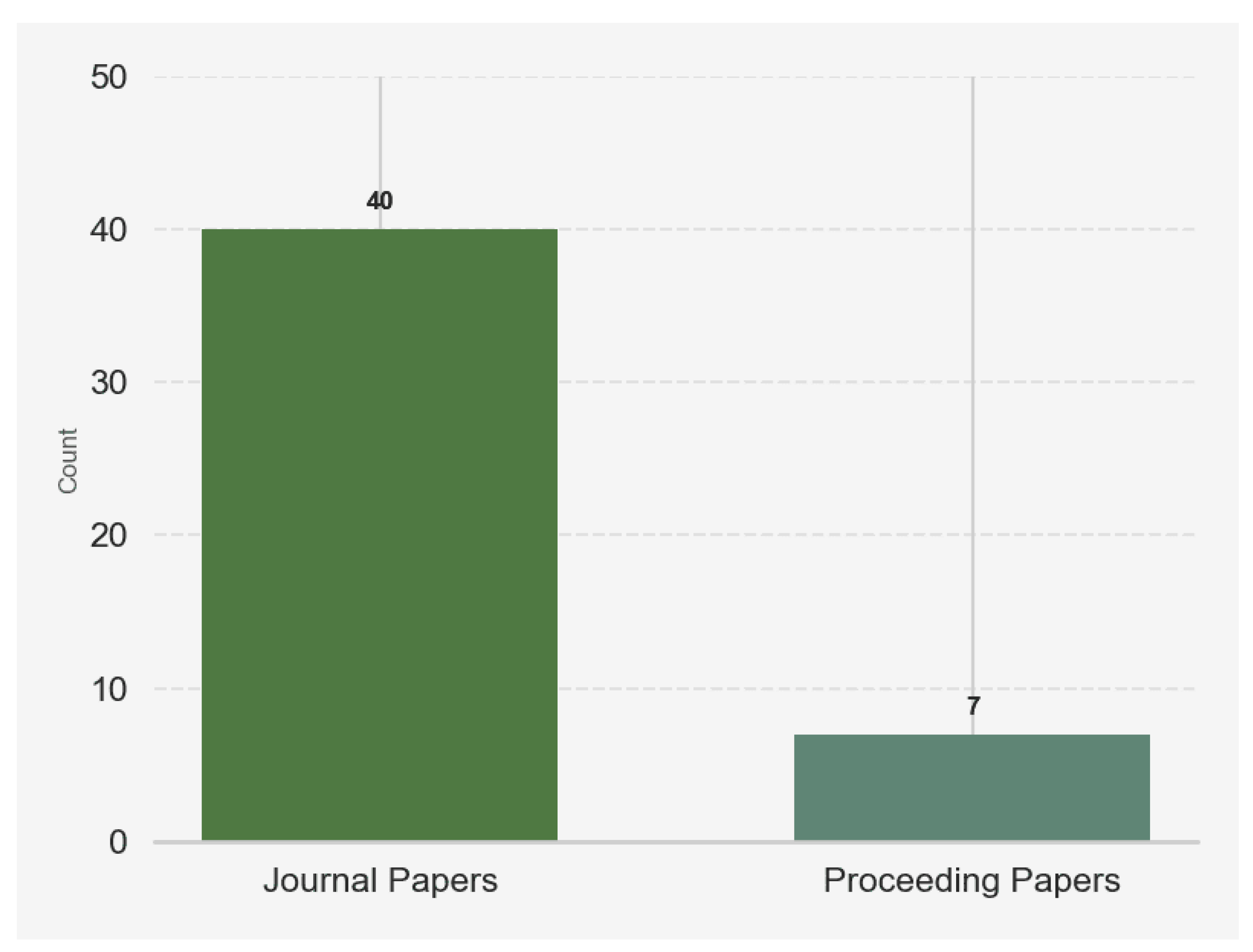

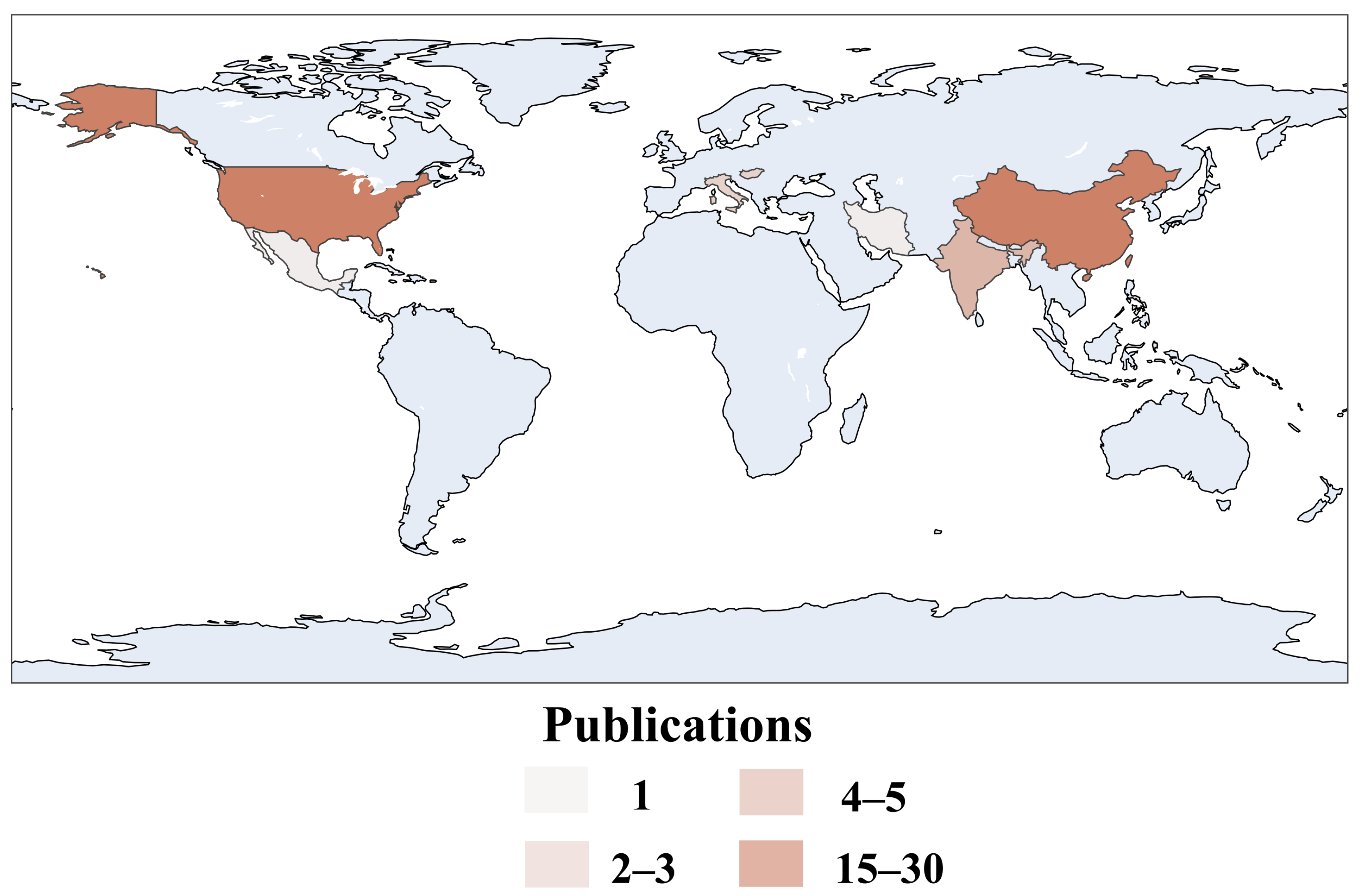

2. Literature Identification

2.1. Formulating Research Questions

- Q.1: What are the most common hyperspectral remote sensing platforms and sensors used for crop mapping in the literature?

- Q.2: What hyperspectral imaging (HSI) features are employed in the literature to identify crops accurately?

- Q.3: Which machine learning (ML) and deep learning (DL) models have been tested in the literature for crop type mapping using HSI?

- Q.4: What challenges do researchers face when using HSI for classification?

2.2. Search Strategy

2.3. Selection Criteria

3. Overview of Existing Surveys Compared with the Current Review Study

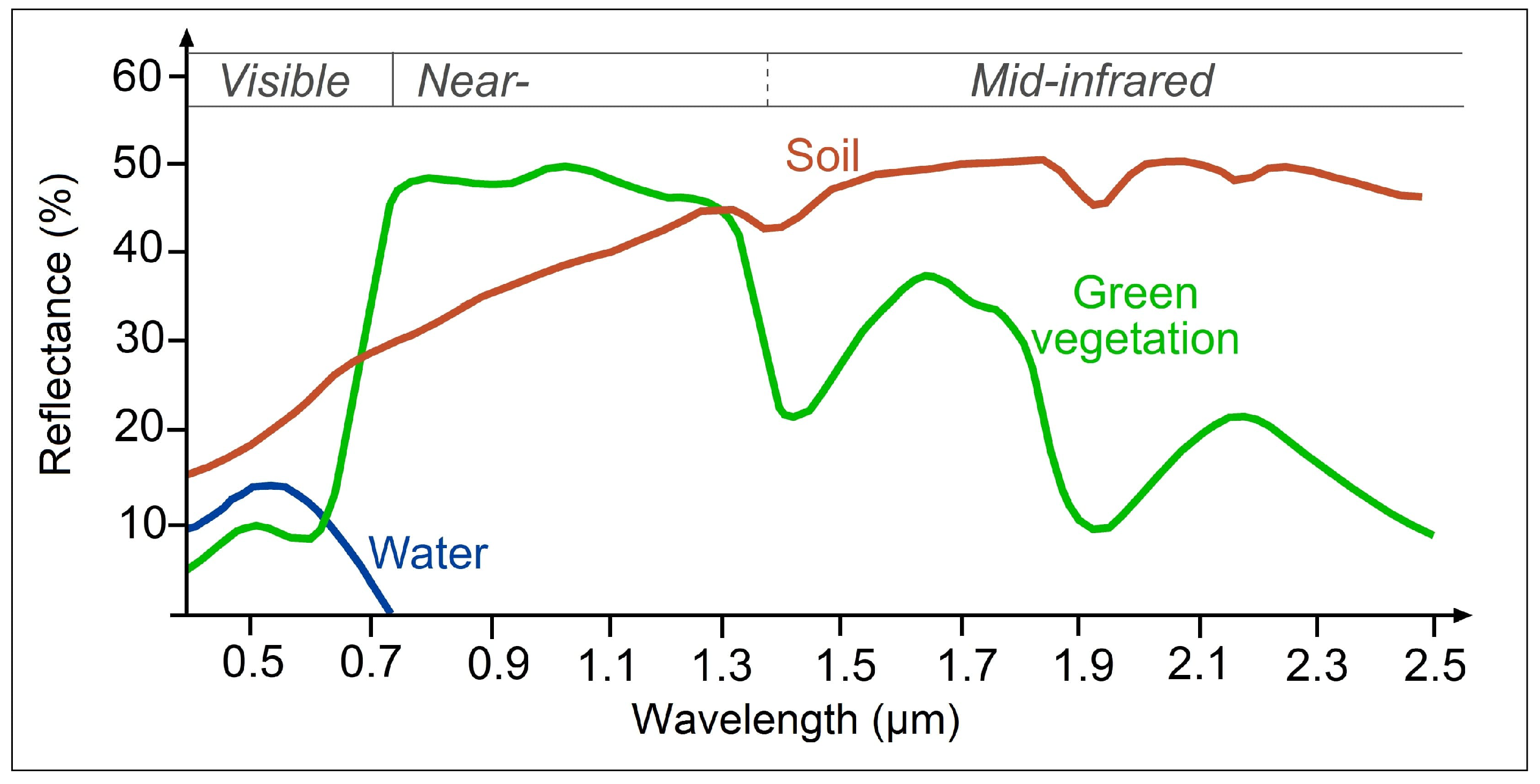

4. Overview of Hyperspectral Imaging Sensors and Platforms Used for Crop Type Mapping

4.1. Satellite-Mounted Sensors

4.2. Airborne and UAV-Mounted Sensors

5. Overview of Artificial Intelligence Models for Crop Mapping Using Hyperspectral Imaging

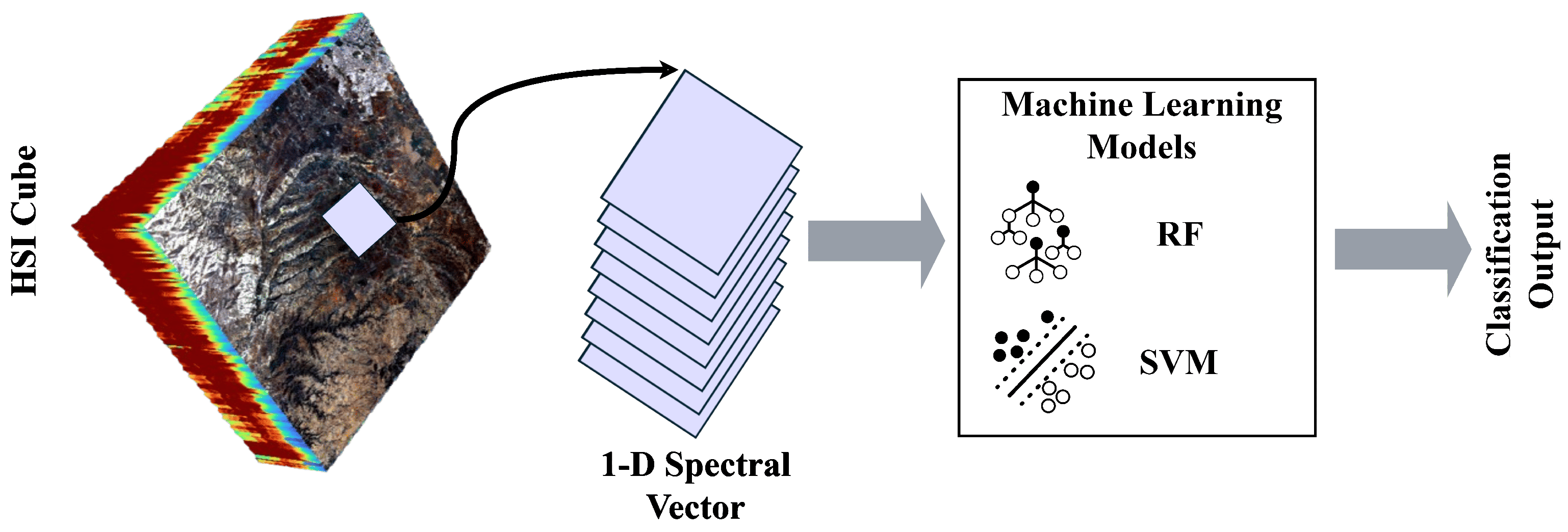

5.1. Conventional AI Approaches in Crop Mapping

5.1.1. Support Vector Machine

5.1.2. Random Forest

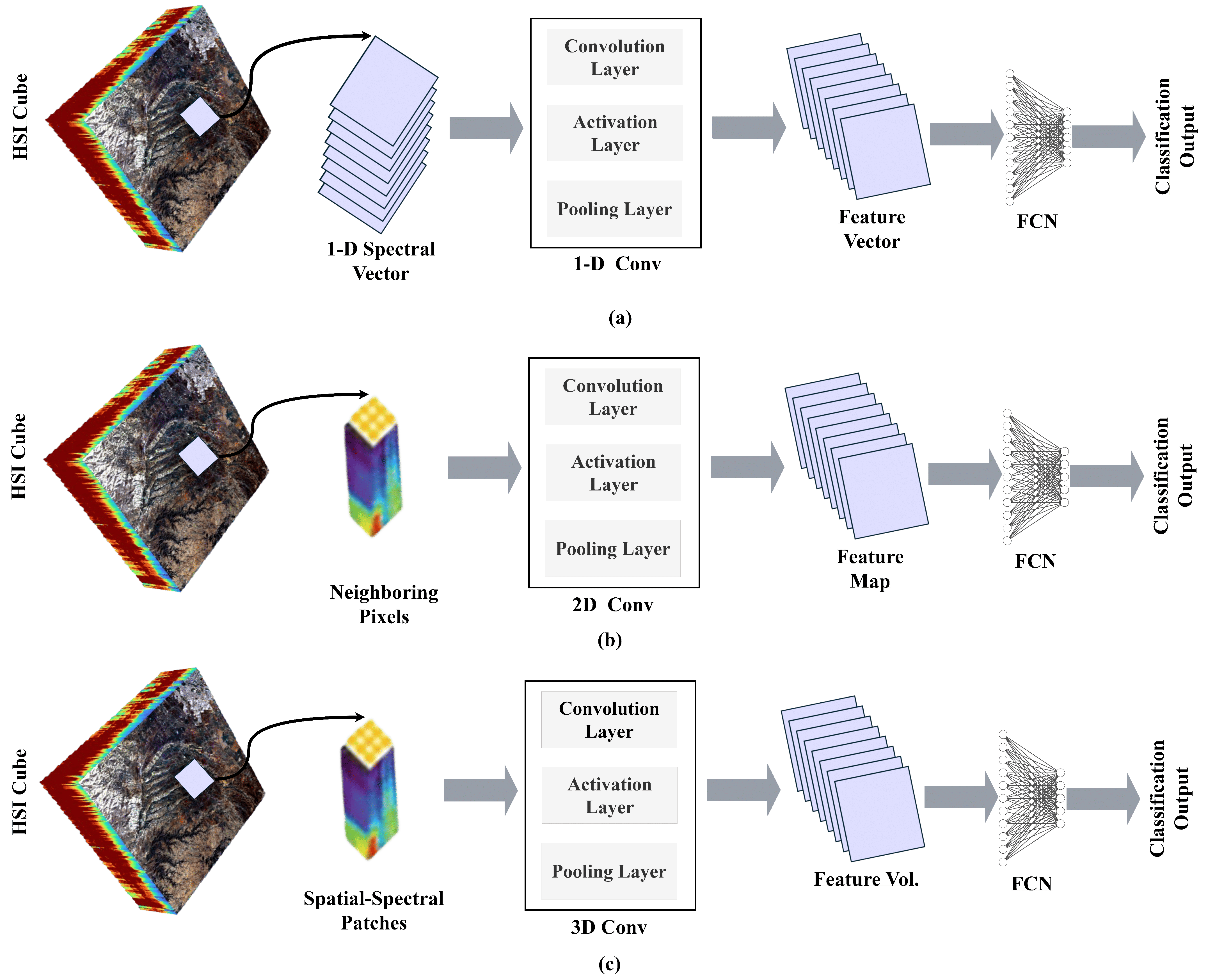

5.1.3. Convolutional Neural Network

5.1.4. Recurrent Neural Networks

5.2. Advanced AI Approaches in Crop Mapping

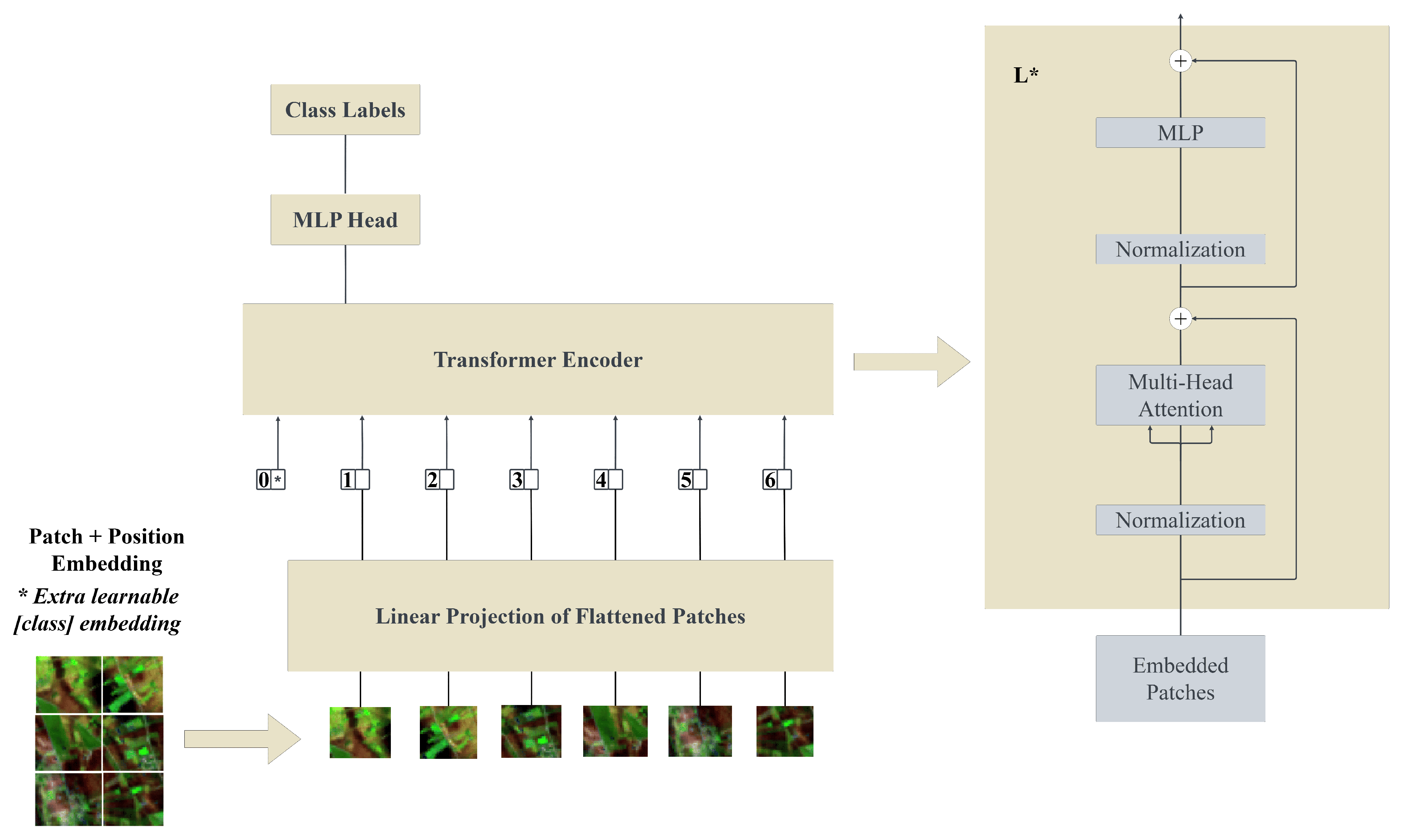

5.2.1. Transformer

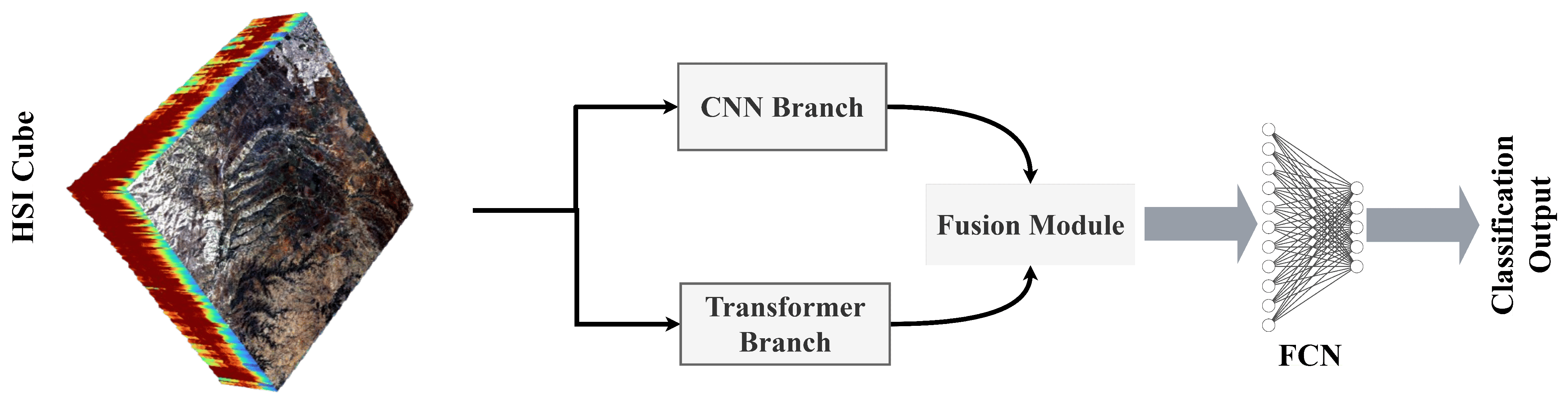

5.2.2. Hybrid Architectures

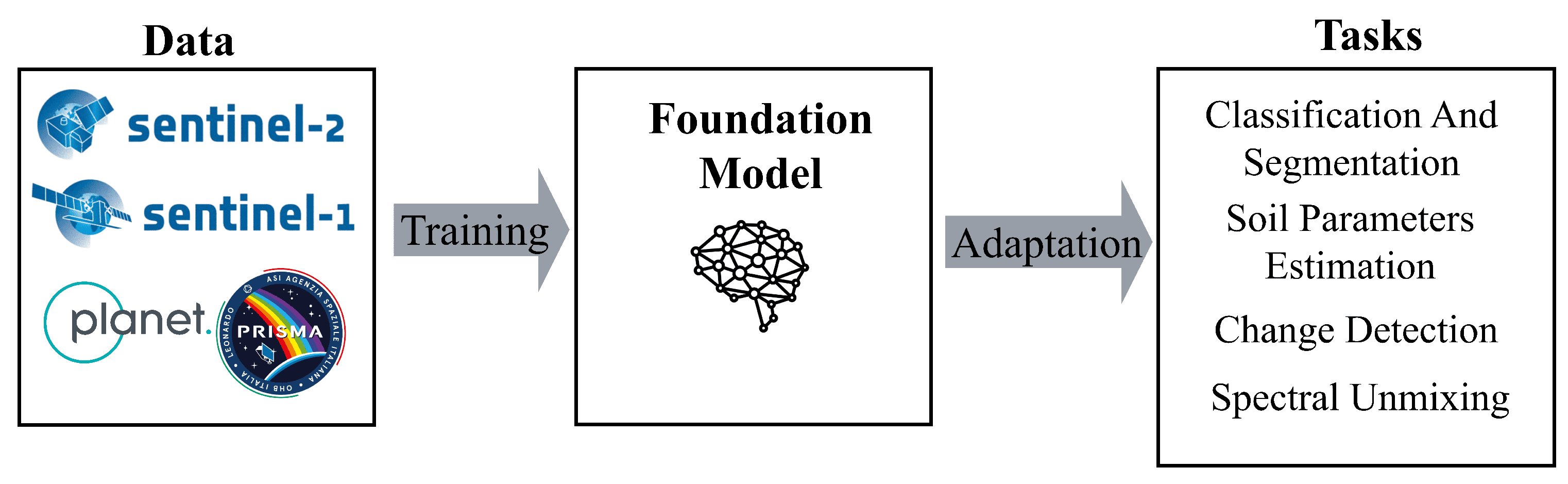

5.2.3. Geospatial Foundation Models

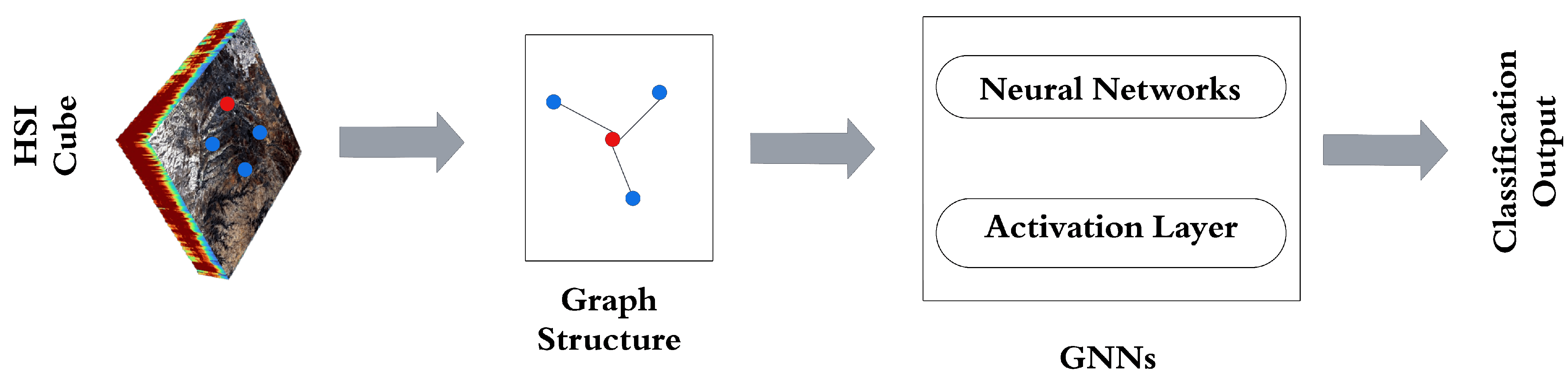

5.2.4. Graph Neural Networks

6. Results and Discussion

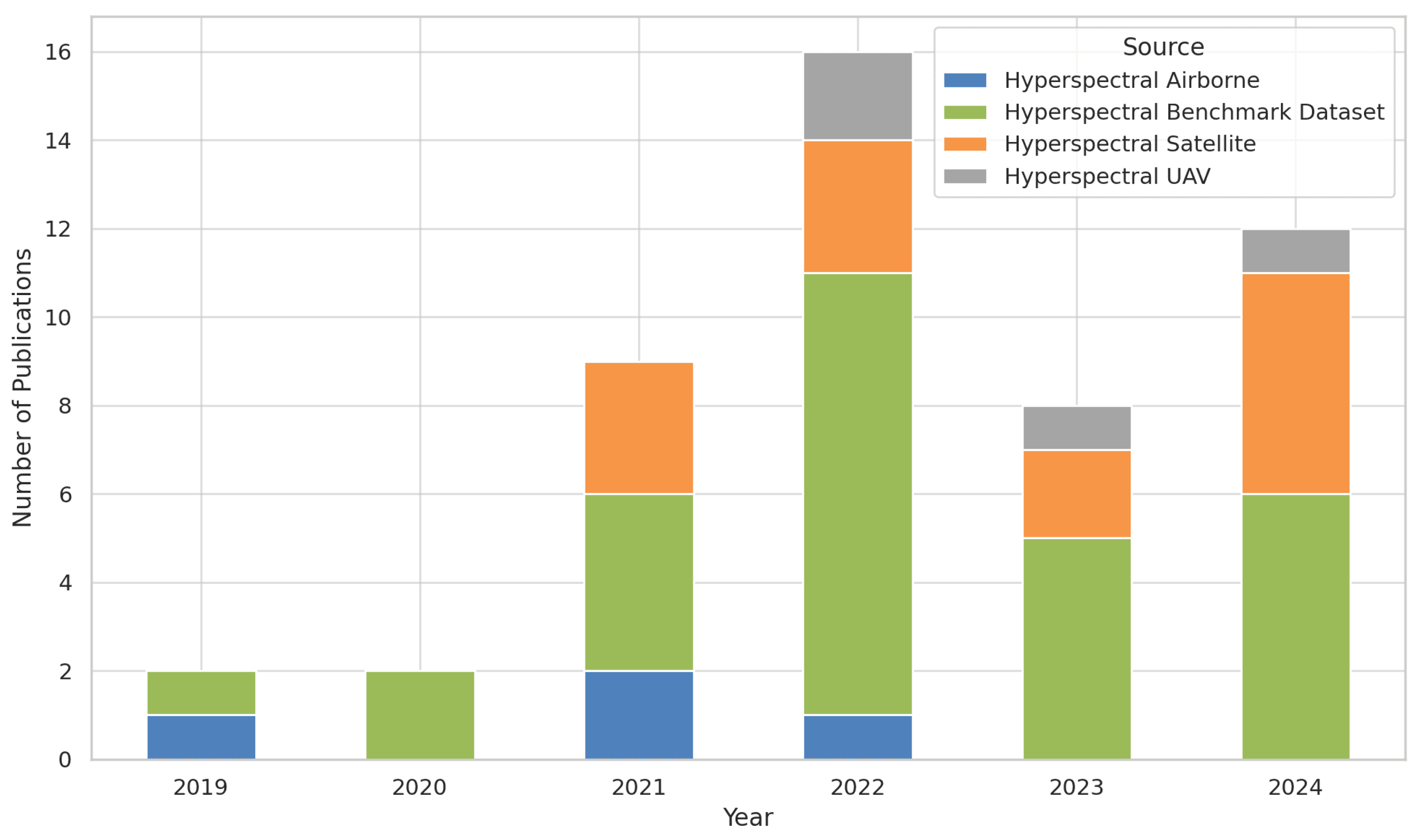

6.1. Sensors and Platforms

6.2. Modeling

6.3. Synthesis of Key Challenges in Crop Type Mapping Using HSI

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yailymova, H.; Yailymov, B.; Mazur, Y.; Kussul, N.; Shelestov, A. Sustainable Development Goal 2.4.1 for Ukraine Based on Geospatial Data. Proc. Int. Conf. Appl. Innov. IT 2023, 11, 67–73. [Google Scholar] [CrossRef]

- Calicioglu, O.; Flammini, A.; Bracco, S.; Bellù, L.; Sims, R. The Future Challenges of Food and Agriculture: An Integrated Analysis of Trends and Solutions. Sustainability 2019, 11, 222. [Google Scholar] [CrossRef]

- United Nations, Department of Economic and Social Affairs, Population Division. Population 2030: Demographic Challenges and Opportunities for Sustainable Development Planning; ST/ESA/SER.A/389; United Nations: New York, NY, USA, 2015. [Google Scholar]

- Choukri, M.; Laamrani, A.; Chehbouni, A. Use of Optical and Radar Imagery for Crop Type Classification in Africa: A Review. Sensors 2024, 24, 3618. [Google Scholar] [CrossRef] [PubMed]

- Devkota, K.P.; Bouasria, A.; Devkota, M.; Nangia, V. Predicting Wheat Yield Gap and Its Determinants Combining Remote Sensing, Machine Learning, and Survey Approaches in Rainfed Mediterranean Regions of Morocco. Eur. J. Agron. 2024, 158, 127195. [Google Scholar] [CrossRef]

- Khechba, K.; Laamrani, A.; Dhiba, D.; Misbah, K.; Chehbouni, A. Monitoring and Analyzing Yield Gap in Africa through Soil Attribute Best Management Using Remote Sensing Approaches: A Review. Remote Sens. 2021, 13, 4602. [Google Scholar] [CrossRef]

- Defourny, P.; Jarvis, I.; Blaes, X. JECAM Guidelines for Cropland and Crop Type Definition and Field Data Collection. 2014. Available online: http://jecam.org/wp-content/uploads/2018/10/JECAM_Guidelines_for_Field_Data_Collection_v1_0.pdf (accessed on 19 February 2025).

- Korotkova, I.; Efremova, N. AI for Agriculture: The Comparison of Semantic Segmentation Methods for Crop Mapping with Sentinel-2 Imagery. arXiv 2023, arXiv:2311.12993. [Google Scholar]

- Kamenova, I.; Chanev, M.; Dimitrov, P.; Filchev, L.; Bonchev, B.; Zhu, L.; Dong, Q. Crop Type Mapping and Winter Wheat Yield Prediction Utilizing Sentinel-2: A Case Study from Upper Thracian Lowland, Bulgaria. Remote Sens. 2024, 16, 1144. [Google Scholar] [CrossRef]

- Zhang, J.; You, S.; Liu, A.; Xie, L.; Huang, C.; Han, X.; Li, P.; Wu, Y.; Deng, J. Winter Wheat Mapping Method Based on Pseudo-Labels and U-Net Model for Training Sample Shortage. Remote Sens. 2024, 16, 2553. [Google Scholar] [CrossRef]

- Gao, M.; Lu, T.; Wang, L. Crop Mapping Based on Sentinel-2 Images Using Semantic Segmentation Model of Attention Mechanism. Sensors 2023, 23, 7008. [Google Scholar] [CrossRef]

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659. [Google Scholar] [CrossRef]

- Alami Machichi, M.; El Mansouri, L.; Imani, Y.; Bourja, O.; Hadria, R.; Lahlou, O.; Benmansour, S.; Zennayi, Y.; Bourzeix, F. CerealNet: A Hybrid Deep Learning Architecture for Cereal Crop Mapping Using Sentinel-2 Time-Series. Informatics 2022, 9, 96. [Google Scholar] [CrossRef]

- Aneece, I.; Thenkabail, P. Accuracies Achieved in Classifying Five Leading World Crop Types and Their Growth Stages Using Optimal Earth Observing-1 Hyperion Hyperspectral Narrowbands on Google Earth Engine. Remote Sens. 2018, 10, 2027. [Google Scholar] [CrossRef]

- Hamzeh, S.; Hajeb, M.; Alavipanah, S.K.; Verrelst, J. RETRIEVAL OF SUGARCANE LEAF AREA INDEX FROM PRISMA HYPERSPECTRAL DATA. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, X-4-W1-2022, 271–277. [Google Scholar] [CrossRef]

- Aneece, I.; Foley, D.; Thenkabail, P.; Oliphant, A.; Teluguntla, P. New Generation Hyperspectral Data From DESIS Compared to High Spatial Resolution PlanetScope Data for Crop Type Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7846–7858. [Google Scholar] [CrossRef]

- Meng, S.; Wang, X.; Hu, X.; Luo, C.; Zhong, Y. Deep Learning-Based Crop Mapping in the Cloudy Season Using One-Shot Hyperspectral Satellite Imagery. Comput. Electron. Agric. 2021, 186, 106188. [Google Scholar] [CrossRef]

- Thiele, S.T.; Bnoulkacem, Z.; Lorenz, S.; Bordenave, A.; Menegoni, N.; Madriz, Y.; Dujoncquoy, E.; Gloaguen, R.; Kenter, J. Mineralogical Mapping with Accurately Corrected Shortwave Infrared Hyperspectral Data Acquired Obliquely from UAVs. Remote Sens. 2022, 14, 5. [Google Scholar] [CrossRef]

- Alonso, K.; Bachmann, M.; Burch, K.; Carmona, E.; Cerra, D.; de los Reyes, R.; Dietrich, D.; Heiden, U.; Hölderlin, A.; Ickes, J.; et al. Data Products, Quality and Validation of the DLR Earth Sensing Imaging Spectrometer (DESIS). Sensors 2019, 19, 4471. [Google Scholar] [CrossRef] [PubMed]

- Cogliati, S.; Sarti, F.; Chiarantini, L.; Cosi, M.; Lorusso, R.; Lopinto, E.; Miglietta, F.; Genesio, L.; Guanter, L.; Damm, A.; et al. The PRISMA Imaging Spectroscopy Mission: Overview and First Performance Analysis. Remote Sens. Environ. 2021, 262, 112499. [Google Scholar] [CrossRef]

- Chabrillat, S.; Foerster, S.; Segl, K.; Beamish, A.; Brell, M.; Asadzadeh, S.; Milewski, R.; Ward, K.J.; Brosinsky, A.; Koch, K.; et al. The EnMAP Spaceborne Imaging Spectroscopy Mission: Initial Scientific Results Two Years after Launch. Remote Sens. Environ. 2024, 315, 114379. [Google Scholar] [CrossRef]

- Nieke, J.; Despoisse, L.; Gabriele, A.; Weber, H.; Strese, H.; Ghasemi, N.; Gascon, F.; Alonso, K.; Boccia, V.; Tsonevska, B.; et al. The Copernicus Hyperspectral Imaging Mission for the Environment (CHIME): An Overview of Its Mission, System and Planning Status. In Proceedings of the SPIE 12729, Sensors, Systems, and Next-Generation Satellites XXVII, Amsterdam, The Netherlands, 19 October 2023; p. 7. [Google Scholar] [CrossRef]

- Petropoulos, G.P.; Detsikas, S.E.; Lemesios, I.; Raj, R. Obtaining LULC Distribution at 30-m Resolution from Pixxel’s First Technology Demonstrator Hyperspectral Imagery. Int. J. Remote Sens. 2024, 45, 4883–4896. [Google Scholar] [CrossRef]

- Li, W.; Arundel, S.; Gao, S.; Goodchild, M.; Hu, Y.; Wang, S.; Zipf, A. GeoAI for Science and the Science of GeoAI. J. Spat. Inf. Sci. 2024, 29, 1–17. [Google Scholar] [CrossRef]

- Guerri, M.F.; Distante, C.; Spagnolo, P.; Bougourzi, F.; Taleb-Ahmed, A. Deep Learning Techniques for Hyperspectral Image Analysis in Agriculture: A Review. ISPRS Open J. Photogramm. Remote Sens. 2024, 12, 100062. [Google Scholar] [CrossRef]

- Addimando, N.; Engel, M.; Schwarz, F.; Batič, M. A DEEP LEARNING APPROACH FOR CROP TYPE MAPPING BASED ON COMBINED TIME SERIES OF SATELLITE AND WEATHER DATA. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B3-2022, 1301–1308. [Google Scholar] [CrossRef]

- Snyder, H. Literature Review as a Research Methodology: An Overview and Guidelines. J. Bus. Res. 2019, 104, 333–339. [Google Scholar] [CrossRef]

- Ecarnot, F.; Seronde, M.F.; Chopard, R.; Schiele, F.; Meneveau, N. Writing a Scientific Article: A Step-by-Step Guide for Beginners. Eur. Geriatr. Med. 2015, 6, 573–579. [Google Scholar] [CrossRef]

- Alami Machichi, M.; Mansouri, l.E.; Imani, y.; Bourja, O.; Lahlou, O.; Zennayi, Y.; Bourzeix, F.; Hanadé Houmma, I.; Hadria, R. Crop Mapping Using Supervised Machine Learning and Deep Learning: A Systematic Literature Review. Int. J. Remote. Sens. 2023, 44, 2717–2753. [Google Scholar] [CrossRef]

- Orynbaikyzy, A.; Gessner, U.; Conrad, C. Crop Type Classification Using a Combination of Optical and Radar Remote Sensing Data: A Review. Int. J. Remote Sens. 2019, 40, 6553–6595. [Google Scholar] [CrossRef]

- Karfi, K.E.; Fkihi, S.E.; Mansouri, L.E.; Naggar, O. Classification of Hyperspectral Remote Sensing Images for Crop Type Identification: State of the Art. In Proceedings of the 2nd International Conference on Advanced Technologies for Humanity—Volume 1: ICATH; SciTePress: Rabat, Morocco, 2020; pp. 11–18. [Google Scholar] [CrossRef]

- Ram, B.G.; Oduor, P.; Igathinathane, C.; Howatt, K.; Sun, X. A Systematic Review of Hyperspectral Imaging in Precision Agriculture: Analysis of Its Current State and Future Prospects. Comput. Electron. Agric. 2024, 222, 109037. [Google Scholar] [CrossRef]

- Moharram, M.A.; Sundaram, D.M. Land Use and Land Cover Classification with Hyperspectral Data: A Comprehensive Review of Methods, Challenges and Future Directions. Neurocomputing 2023, 536, 90–113. [Google Scholar] [CrossRef]

- Akewar, M.; Chandak, M. Hyperspectral Imaging Algorithms and Applications: A Review. TechRxiv 2024. [Google Scholar]

- Barbedo, J.G.A. A Review on the Combination of Deep Learning Techniques with Proximal Hyperspectral Images in Agriculture. Comput. Electron. Agric. 2023, 210, 107920. [Google Scholar] [CrossRef]

- Siegmund, A.; Menz, G. Fernes nah gebracht–Satelliten-und Luftbildeinsatz zur Analyse von Umweltveränderungen im Geographieunterricht. Geogr. Sch. 2005, 154, 2–10. [Google Scholar]

- Khan, A.; Vibhute, A.; Mali, S.; Patil, C. A Systematic Review on Hyperspectral Imaging Technology with a Machine and Deep Learning Methodology for Agricultural Applications. Ecol. Inform. 2022, 69, 101678. [Google Scholar] [CrossRef]

- Aiazzi, B.; Alparone, L.; Baronti, S.; Lastri, C.; Selva, M. Spectral Distortion in Lossy Compression of Hyperspectral Data. J. Electr. Comput. Eng. 2012, 2012, 850637. [Google Scholar] [CrossRef]

- Liu, N.; Li, W.; Wang, Y.; Tao, R.; Du, Q.; Chanussot, J. A Survey on Hyperspectral Image Restoration: From the View of Low-Rank Tensor Approximation. Sci. China Inf. Sci. 2023, 66, 140302. [Google Scholar] [CrossRef]

- Vuolo, F.; Neuwirth, M.; Immitzer, M.; Atzberger, C.; Ng, W.T. How much does multi-temporal Sentinel-2 data improve crop type classification? Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 122–130. [Google Scholar]

- Wang, M.; Wang, J.; Cui, Y.; Liu, J.; Chen, L. Agricultural Field Boundary Delineation with Satellite Image Segmentation for High-Resolution Crop Mapping: A Case Study of Rice Paddy. Agronomy 2022, 12, 2342. [Google Scholar] [CrossRef]

- Liu, Y.; Diao, C.; Mei, W.; Zhang, C. CropSight: Towards a large-scale operational framework for object-based crop type ground truth retrieval using street view and PlanetScope satellite imagery. ISPRS J. Photogramm. Remote Sens. 2024, 216, 66–89. [Google Scholar]

- Kokhan, S.; Vostokov, A. Application of nanosatellites PlanetScope data to monitor crop growth. E3S Web Conf. 2020, 171, 02014. [Google Scholar]

- Bourriz, M.; Laamrani, A.; El-Battay, A.; Hajji, H.; Elbouanani, N.; Ait Abdelali, H.; Bourzeix, F.; Amazirh, A.; Chehbouni, A. An Intercomparison of Two Satellite-Based Hyperspectral Imagery (PRISMA & EnMAP) for Agricultural Mapping: Potential of These Sensors to Produce Hyperspectral Time-Series Essential for Tracking Crop Phenology and Enhancing Crop Type Mapping. 2025. Available online: https://meetingorganizer.copernicus.org/EGU25/EGU25-18417.html (accessed on 15 March 2025). [CrossRef]

- Chakraborty, R.; Rachdi, I.; Thiele, S.; Booysen, R.; Kirsch, M.; Lorenz, S.; Gloaguen, R.; Sebari, I. A Spectral and Spatial Comparison of Satellite-Based Hyperspectral Data for Geological Mapping. Remote Sens. 2024, 16, 2089. [Google Scholar] [CrossRef]

- Bostan, S.; Ortak, M.A.; Tuna, C.; Akoguz, A.; Sertel, E.; Berk Ustundag, B. Comparison of Classification Accuracy of Co-Located Hyperspectral & Multispectral Images for Agricultural Purposes. In Proceedings of the 2016 Fifth International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Tianjin, China, 18–20 July 2016; pp. 1–4. [Google Scholar] [CrossRef]

- Surase, R.; Kale, K.; Solankar, M.; Hanumant, G.; Varpe, A.; Vibhute, A.; Gaikwad, S.; Nalawade, D. Assessment of EO-1 Hyperion Imagery for Crop Discrimination Using Spectral Analysis. In Microelectronics, Electromagnetics and Telecommunications; Springer: Singapore, 2018; pp. 505–515. [Google Scholar]

- Aneece, I.; Thenkabail, P. Classifying Crop Types Using Two Generations of Hyperspectral Sensors (Hyperion and DESIS) with Machine Learning on the Cloud. Remote Sensing 2021, 13, 4704. [Google Scholar] [CrossRef]

- Transon, J.; D’Andrimont, R.; Maugnard, A.; Defourny, P. Survey of Hyperspectral Earth Observation Applications from Space in the Sentinel-2 Context. Remote Sens. 2018, 10, 157. [Google Scholar] [CrossRef]

- Aneece, I.; Thenkabail, P. New Generation Hyperspectral Sensors DESIS and PRISMA Provide Improved Agricultural Crop Classifications. Photogramm. Eng. Remote Sens. 2022, 88, 715–729. [Google Scholar] [CrossRef]

- Aneece, I.; Thenkabail, P.S.; McCormick, R.; Alifu, H.; Foley, D.; Oliphant, A.J.; Teluguntla, P. Machine Learning and New-Generation Spaceborne Hyperspectral Data Advance Crop Type Mapping. Photogramm. Eng. Remote Sens. 2024, 90, 687–698. [Google Scholar] [CrossRef]

- Sedighi, A.; Hamzeh, S.; Firozjaei, M.; Goodarzi, H.; Naseri, A. Comparative Analysis of Multispectral and Hyperspectral Imagery for Mapping Sugarcane Varieties. PFG—J. Photogramm. Remote Sens. Geoinf. Sci. 2023, 91, 453–470. [Google Scholar] [CrossRef]

- Chakraborty, R.; Thiele, S.; Naik, P.; Gloaguen, R. Evaluation of Spectral and Spatial Reconstruction in Resolution-Enhanced Hyperspectral Data for Effective Mineral Mapping. 2025. Available online: https://www.researchgate.net/publication/389263998?channel=doi&linkId=67bb7129f5cb8f70d5bd618e&showFulltext=true (accessed on 15 February 2025). [CrossRef]

- Eltner, A.; Hoffmeister, D.; Kaiser, A.; Karrasch, P.; Klingbeil, L.; Stöcker, C.; Rovere, A. (Eds.) UAVs for the Environmental Sciences: Methods and Applications; wbg Academic: Darmstadt, Germany, 2022; ISBN 978-3-534-40588-6. [Google Scholar]

- Ambrosi, G. UAV4PrecisAg. Image Created by Ambrosi, Gianluca. Available online: https://lms.geoversity.io/course/view.php?id=4 (accessed on 23 January 2025).

- Spiller, D.; Ansalone, L.; Carotenuto, F.; Mathieu, P.P. Crop Type Mapping Using Prisma Hyperspectral Images and One-Dimensional Convolutional Neural Network. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 8166–8169. [Google Scholar] [CrossRef]

- Thoreau, R.; Risser, L.; Achard, V.; Berthelot, B.; Briottet, X. Toulouse Hyperspectral Data Set: A benchmark data set to assess semi-supervised spectral representation learning and pixel-wise classification techniques. ISPRS J. Photogramm. Remote Sens. 2024, 212, 323–337. [Google Scholar] [CrossRef]

- Roupioz, L.; Briottet, X.; Adeline, K.; Al Bitar, A.; Barbon-Dubosc, D.; Barda-Chatain, R.; Barillot, P.; Bridier, S.; Carroll, E.; Cassante, C.; et al. Multi-source datasets acquired over Toulouse (France) in 2021 for urban microclimate studies during the CAMCATT/AI4GEO field campaign. Data Brief 2023, 48, 109109. [Google Scholar] [CrossRef] [PubMed]

- Joshi, A.; Pradhan, B.; Gite, S.; Chakraborty, S. Remote-Sensing Data and Deep-Learning Techniques in Crop Mapping and Yield Prediction: A Systematic Review. Remote Sens. 2023, 15, 2014. [Google Scholar] [CrossRef]

- Misbah, K.; Laamrani, A.; Chehbouni, A.; Dhiba, D.; Ezzahar, J. Use of Hyperspectral Prisma Level-1 Data and ISDA Soil Fertility Map for Soil Macronutrient Availability Quantification in a Moroccan Agricultural Land. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 7051–7054. [Google Scholar] [CrossRef]

- Arroyo-Mora, J.P.; Kalacska, M.; Inamdar, D.; Soffer, R.; Lucanus, O.; Gorman, J.; Naprstek, T.; Schaaf, E.S.; Ifimov, G.; Elmer, K.; et al. Implementation of a UAV–Hyperspectral Pushbroom Imager for Ecological Monitoring. Drones 2019, 3, 12. [Google Scholar] [CrossRef]

- Yokoya, N.; Chan, J.C.W.; Segl, K. Potential of Resolution-Enhanced Hyperspectral Data for Mineral Mapping Using Simulated EnMAP and Sentinel-2 Images. Remote Sens. 2016, 8, 172. [Google Scholar] [CrossRef]

- Li, H.; Ye, W.; Liu, J.; Tan, W.; Pirasteh, S.; Fatholahi, S.N.; Li, J. High-Resolution Terrain Modeling Using Airborne LiDAR Data with Transfer Learning. Remote Sens. 2021, 13, 3448. [Google Scholar] [CrossRef]

- Cao, J.; Liu, K.; Zhuo, L.; Liu, L.; Zhu, Y.; Peng, L. Combining UAV-based hyperspectral and LiDAR data for mangrove species classification using the rotation forest algorithm. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102414. [Google Scholar]

- Stewart, A.J.; Robinson, C.; Corley, I.A.; Ortiz, A.; Ferres, J.M.L.; Banerjee, A. Torchgeo: Deep learning with geospatial data. In Proceedings of the 30th International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 1–4 November 2022; pp. 1–12. [Google Scholar]

- Ahmad, M.; Shabbir, S.; Roy, S.K.; Hong, D.; Wu, X.; Yao, J.; Khan, A.M.; Mazzara, M.; Distefano, S.; Chanussot, J. Hyperspectral Image Classification—Traditional to Deep Models: A Survey for Future Prospects. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 968–999. [Google Scholar] [CrossRef]

- Akewar, M.; Chandak, M. An Integration of Natural Language and Hyperspectral Imaging: A Review. IEEE Geosci. Remote Sens. Mag. 2024, 13, 32–54. [Google Scholar] [CrossRef]

- Lebrini, Y.; Boudhar, A.; Hadria, R.; Lionboui, H.; Elmansouri, L.; Arrach, R.; Ceccato, P.; Benabdelouahab, T. Identifying Agricultural Systems Using SVM Classification Approach Based on Phenological Metrics in a Semi-arid Region of Morocco. Earth Syst. Environ. 2019, 3, 277–288. [Google Scholar] [CrossRef]

- Jacon, A.; Galvão, L.; Santos, J.; Sano, E. Seasonal Characterization and Discrimination of Savannah Physiognomies in Brazil Using Hyperspectral Metrics from Hyperion/EO-1. Int. J. Remote Sens. 2017, 38, 4494–4516. [Google Scholar] [CrossRef]

- Reddy, B.S.; Sharma, S.; Shwetha, H.R. Crop Classification Based on Optimal Hyperspectral Narrow Bands Using Machine Learning and Hyperion Data. In Proceedings of the 2023 IEEE India Geoscience and Remote Sensing Symposium (InGARSS), Bangalore, India, 10–13 December 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Erdanaev, E.; Kappas, M.; Wyss, D. Irrigated Crop Types Mapping in Tashkent Province of Uzbekistan with Remote Sensing-Based Classification Methods. Sensors 2022, 22, 5683. [Google Scholar] [CrossRef] [PubMed]

- Ustuner, M.; Sanli, F.B. Evaluating Training Data for Crop Type Classifıcation Using Support Vector Machine and Random Forests. Geod. Glas. 2017, 51, 125–133. [Google Scholar] [CrossRef]

- Monaco, M.; Sileo, A.; Orlandi, D.; Battagliere, M.; Candela, L.; Cimino, M.; Vivaldi, G.; Giannico, V. Semantic Segmentation of Crops via Hyperspectral PRISMA Satellite Images. In Proceedings of the 10th International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2024), Angers, France, 2–4 May 2024; SciTePress: Setubal, Portugal, 2024; pp. 187–194. [Google Scholar] [CrossRef]

- Bhosle, K.; Musande, V. Evaluation of Deep Learning Convolutional Neural Network for Crop Classification. Int. J. Recent Technol. Eng. 2019, 8, 3960–3963. [Google Scholar] [CrossRef]

- Liu, N.; Zhao, Q.; Williams, R.; Barrett, B. Enhanced Crop Classification through Integrated Optical and SAR Data: A Deep Learning Approach for Multi-Source Image Fusion. Int. J. Remote Sens. 2023, 45, 7605–7633. [Google Scholar] [CrossRef]

- Shuai, L.; Li, Z.; Chen, Z.; Luo, D.; Mu, J. A Research Review on Deep Learning Combined with Hyperspectral Imaging in Multiscale Agricultural Sensing. Comput. Electron. Agric. 2024, 217, 108577. [Google Scholar] [CrossRef]

- LeCun, Y.; Kavukcuoglu, K.; Farabet, C. Convolutional networks and applications in vision. In Proceedings of the 2010 IEEE International Symposium on Circuits and Systems, Paris, France, 30 May–2 June 2010; pp. 253–256. [Google Scholar]

- Janga, B.; Asamani, G.P.; Sun, Z.; Cristea, N. A Review of Practical AI for Remote Sensing in Earth Sciences. Remote Sens. 2023, 15, 4112. [Google Scholar] [CrossRef]

- Kanthi, M.; Sarma, T.H.; Bindu, C.S. A 3d-Deep CNN Based Feature Extraction and Hyperspectral Image Classification. In Proceedings of the 2020 IEEE India Geoscience and Remote Sensing Symposium (InGARSS), Ahmedabad, India, 1–4 December 2020; pp. 229–232. [Google Scholar] [CrossRef]

- Mirzaei, S.; Pascucci, S.; Carfora, M.F.; Casa, R.; Rossi, F.; Santini, F.; Palombo, A.; Laneve, G.; Pignatti, S. Early-Season Crop Mapping by PRISMA Images Using Machine/Deep Learning Approaches: Italy and Iran Test Cases. Remote Sens. 2024, 16, 2431. [Google Scholar] [CrossRef]

- Ashraf, M.; Chen, L.; Innab, N.; Umer, M.; Baili, J.; Kim, T.H.; Ashraf, I. Novel 3-D Deep Neural Network Architecture for Crop Classification Using Remote Sensing-Based Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 12649–12665. [Google Scholar] [CrossRef]

- Meghraoui, K.; Sebari, I.; Pilz, J.; Kadi, K.; Bensiali, S. Applied Deep Learning-Based Crop Yield Prediction: A Systematic Analysis of Current Developments and Potential Challenges. Technologies 2024, 12, 43. [Google Scholar] [CrossRef]

- Sahoo, A.R.; Chakraborty, P. Hybrid CNN Bi-LSTM Neural Network for Hyperspectral Image Classification. arXiv 2024, arXiv:2402.10026. [Google Scholar]

- Zhou, F.; Hang, R.; Liu, Q.; Yuan, X. Hyperspectral Image Classification Using Spectral-Spatial LSTMs. Neurocomputing 2019, 328, 39–47. [Google Scholar] [CrossRef]

- Ahmad, M.; Distifano, S.; Khan, A.M.; Mazzara, M.; Li, C.; Yao, J.; Li, H.; Aryal, J.; Vivone, G.; Hong, D. A Comprehensive Survey for Hyperspectral Image Classification: The Evolution from Conventional to Transformers. arXiv 2024, arXiv:2404.14955. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762. [Google Scholar]

- Xie, J.; Hua, J.; Chen, S.; Wu, P.; Gao, P.; Sun, D.; Lyu, Z.; Lyu, S.; Xue, X.; Lu, J. HyperSFormer: A Transformer-Based End-to-End Hyperspectral Image Classification Method for Crop Classification. Remote Sens. 2023, 15, 3491. [Google Scholar] [CrossRef]

- Zhang, H.; Feng, S.; Wu, D.; Zhao, C.; Liu, X.; Zhou, Y.; Wang, S.; Deng, H.; Zheng, S. Hyperspectral Image Classification on Large-Scale Agricultural Crops: The Heilongjiang Benchmark Dataset, Validation Procedure, and Baseline Results. Remote Sens. 2024, 16, 478. [Google Scholar] [CrossRef]

- Li, J.; Cai, Y.; Li, Q.; Kou, M.; Zhang, T. A Review of Remote Sensing Image Segmentation by Deep Learning Methods. Int. J. Digit. Earth 2024, 17, 2328827. [Google Scholar] [CrossRef]

- Guo, F.; Feng, Q.; Yang, S.; Yang, W. CMTNet: Convolutional Meets Transformer Network for Hyperspectral Images Classification. arXiv 2024, arXiv:2406.14080. [Google Scholar]

- Wang, A.; Xing, S.; Zhao, Y.; Wu, H.; Iwahori, Y. A Hyperspectral Image Classification Method Based on Adaptive Spectral Spatial Kernel Combined with Improved Vision Transformer. Remote Sens. 2022, 14, 3705. [Google Scholar] [CrossRef]

- Liu, Y.; Han, T.; Ma, S.; Zhang, J.; Yang, Y.; Tian, J.; He, H.; Li, A.; He, M.; Liu, Z.; et al. Summary of chatgpt-related research and perspective towards the future of large language models. Meta-Radiology 2023, 1, 100017. [Google Scholar] [CrossRef]

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805. [Google Scholar]

- Lu, S.; Guo, J.; Zimmer-Dauphinee, J.R.; Nieusma, J.M.; Wang, X.; VanValkenburgh, P.; Wernke, S.A.; Huo, Y. AI foundation models in remote sensing: A survey. arXiv 2024, arXiv:2408.03464. [Google Scholar]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026. [Google Scholar]

- Xiong, Z.; Wang, Y.; Zhang, F.; Zhu, X.X. One for All: Toward Unified Foundation Models for Earth Vision. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024. [Google Scholar]

- Xu, Y.; Ma, Y.; Zhang, Z. Self-Supervised Pre-Training for Large-Scale Crop Mapping Using Sentinel-2 Time Series. ISPRS J. Photogramm. Remote Sens. 2024, 207, 312–325. [Google Scholar] [CrossRef]

- Ghorbanzadeh, O.; Shahabi, H.; Piralilou, S.T.; Crivellari, A.; Rosa, L.E.C.l.; Atzberger, C.; Li, J.; Ghamisi, P. Contrastive Self-Supervised Learning for Globally Distributed Landslide Detection. IEEE Access 2024, 12, 118453–118466. [Google Scholar] [CrossRef]

- Wang, Y.; Albrecht, C.M.; Braham, N.A.A.; Mou, L.; Zhu, X.X. Self-Supervised Learning in Remote Sensing: A Review. IEEE Geosci. Remote Sens. Mag. 2022, 10, 213–247. [Google Scholar] [CrossRef]

- Wang, D.; Hu, M.; Jin, Y.; Miao, Y.; Yang, J.; Xu, Y.; Qin, X.; Ma, J.; Sun, L.; Li, C.; et al. HyperSIGMA: Hyperspectral Intelligence Comprehension Foundation Model. arXiv 2024, arXiv:2406.11519. [Google Scholar]

- Braham, N.A.A.; Albrecht, C.M.; Mairal, J.; Chanussot, J.; Wang, Y.; Zhu, X.X. SpectralEarth: Training Hyperspectral Foundation Models at Scale. arXiv 2024, arXiv:2408.08447. [Google Scholar]

- Xiong, Z.; Wang, Y.; Zhang, F.; Stewart, A.J.; Hanna, J.; Borth, D.; Papoutsis, I.; Le Saux, B.; Camps-Valls, G.; Zhu, X.X. Neural plasticity-inspired foundation model for observing the Earth crossing modalities. arXiv 2024, arXiv:2403.15356. [Google Scholar]

- Zhao, S.; Chen, Z.; Xiong, Z.; Shi, Y.; Saha, S.; Zhu, X.X. Beyond Grid Data: Exploring graph neural networks for Earth observation. IEEE Geosci. Remote Sens. Mag. 2024, 13, 175–208. [Google Scholar]

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5966–5978. [Google Scholar] [CrossRef]

- Wu, G.; Al-qaness, M.A.A.; Al-Alimi, D.; Dahou, A.; Abd Elaziz, M.; Ewees, A.A. Hyperspectral Image Classification Using Graph Convolutional Network: A Comprehensive Review. Expert Syst. Appl. 2024, 257, 125106. [Google Scholar] [CrossRef]

- Liu, J.; Guan, R.; Li, Z.; Zhang, J.; Hu, Y.; Wang, X. Adaptive Multi-Feature Fusion Graph Convolutional Network for Hyperspectral Image Classification. Remote Sens. 2023, 15, 5483. [Google Scholar] [CrossRef]

- Yang, A.; Li, M.; Ding, Y.; Fang, L.; Cai, Y.; He, Y. Graphmamba: An efficient graph structure learning vision mamba for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5537414. [Google Scholar]

- Estudillo, J.P.; Kijima, Y.; Sonobe, T. Introduction: Agricultural Development in Asia and Africa. In Agricultural Development in Asia and Africa: Essays in Honor of Keijiro Otsuka; Estudillo, J.P., Kijima, Y., Sonobe, T., Eds.; Springer: Singapore, 2023; pp. 1–17. ISBN 978-981-19-5542-6. [Google Scholar] [CrossRef]

- Farmonov, N.; Esmaeili, M.; Abbasi-Moghadam, D.; Sharifi, A.; Amankulova, K.; Mucsi, L. HypsLiDNet: 3-D–2-D CNN Model and Spatial–Spectral Morphological Attention for Crop Classification With DESIS and LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11969–11996. [Google Scholar] [CrossRef]

- Patel, H.; Bhagia, N.; Vyas, T.; Bhattacharya, B.; Dave, K. Crop Identification and Discrimination Using AVIRIS-NG Hyperspectral Data Based on Deep Learning Techniques. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July 2019–2 August 2019; pp. 3728–3731. [Google Scholar] [CrossRef]

- Wei, L.; Yu, M.; Zhong, Y.; Zhao, J.; Liang, Y.; Hu, X. Spatial–Spectral Fusion Based on Conditional Random Fields for the Fine Classification of Crops in UAV-borne Hyperspectral Remote Sensing Imagery. Remote Sens. 2019, 11, 780. [Google Scholar] [CrossRef]

- Zhao, J.; Zhong, Y.; Hu, X.; Wei, L.; Zhang, L. A robust spectral-spatial approach to identifying heterogeneous crops using remote sensing imagery with high spectral and spatial resolutions. Remote Sens. Environ. 2020, 239, 111605. [Google Scholar] [CrossRef]

- Wan, S.; Yeh, M.L.; Ma, H.L. An Innovative Intelligent System with Integrated CNN and SVM: Considering Various Crops through Hyperspectral Image Data. ISPRS Int. J. Geo-Inf. 2021, 10, 242.

- Wei, L.; Wang, K.; Lu, Q.; Liang, Y.; Li, H.; Wang, Z.; Wang, R.; Cao, L. Crops Fine Classification in Airborne Hyperspectral Imagery Based on Multi-Feature Fusion and Deep Learning. Remote Sens. 2021, 13, 2917.

- Shi, H.; Cao, G.; Ge, Z.; Zhang, Y.; Fu, P. Double-Branch Network with Pyramidal Convolution and Iterative Attention for Hyperspectral Image Classification. Remote Sens. 2021, 13, 1403.

- Liu, S.; Shi, Q.; Zhang, L. Few-Shot Hyperspectral Image Classification With Unknown Classes Using Multitask Deep Learning. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5085–5102.

- Cheng, J.; Xu, Y.; Kong, L. Hyperspectral Imaging Classification Based on LBP Feature Extraction and Multimodel Ensemble Learning. Comput. Electr. Eng. 2021, 92, 107199.

- Liu, D.; Wang, Y.; Liu, P.; Li, Q.; Yang, H.; Chen, D.; Liu, Z.; Han, G. A Multibranch Crossover Feature Attention Network for Hyperspectral Image Classification. Remote Sens. 2022, 14, 5778.

- Agilandeeswari, L.; Prabukumar, M.; Radhesyam, V.; Phaneendra, K.L.B.; Farhan, A. Crop Classification for Agricultural Applications in Hyperspectral Remote Sensing Images. Appl. Sci. 2022, 12, 1670.

- Singh, P.; Srivastava, P.K.; Shah, D.; Pandey, M.K.; Anand, A.; Prasad, R.; Dave, R.; Verrelst, J.; Bhattacharya, B.K.; Raghubanshi, A. Crop Type Discrimination Using Geo-Stat Endmember Extraction and Machine Learning Algorithms. Adv. Space Res. 2022, 73, 1331–1348.

- Galodha, A.; Vashisht, R.; Nidamanuri, R.; Ramiya, A. Deep Convolution Neural Networks with ResNet Architecture for Spectral-Spatial Classification of Drone Borne and Ground Based High Resolution Hyperspectral Imagery. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2022, 43, 577–584.

- Bhosle, K.; Musande, V. Evaluation of CNN Model by Comparing with Convolutional Autoencoder and Deep Neural Network for Crop Classification on Hyperspectral Imagery. Geocarto Int. 2022, 37, 813–827.

- Niu, B.; Feng, Q.; Chen, B.; Ou, C.; Liu, Y.; Yang, J. HSI-TransUNet: A Transformer Based Semantic Segmentation Model for Crop Mapping from UAV Hyperspectral Imagery. Comput. Electron. Agric. 2022, 201, 107297.

- Qing, Y.; Huang, Q.; Feng, L.; Qi, Y.; Liu, W. Multiscale Feature Fusion Network Incorporating 3D Self-Attention for Hyperspectral Image Classification. Remote Sens. 2022, 14, 742.

- Wu, H.; Zhou, H.; Wang, A.; Iwahori, Y. Precise Crop Classification of Hyperspectral Images Using Multi-Branch Feature Fusion and Dilation-Based MLP. Remote Sens. 2022, 14, 2713.

- Zheng, X.; Sun, H.; Lu, X.; Xie, W. Rotation-Invariant Attention Network for Hyperspectral Image Classification. IEEE Trans. Image Process. 2022, 31, 4251–4265.

- Hamza, M.A.; Alrowais, F.; Alzahrani, J.S.; Mahgoub, H.; Salem, N.M.; Marzouk, R. Squirrel Search Optimization with Deep Transfer Learning-Enabled Crop Classification Model on Hyperspectral Remote Sensing Imagery. Appl. Sci. 2022, 12, 5650.

- Chen, H.; Qiu, Y.; Yin, D.; Chen, J.; Chen, X.; Liu, S.; Liu, L. Stacked Spectral Feature Space Patch: An Advanced Spectral Representation for Precise Crop Classification Based on Convolutional Neural Network. Crop J. 2022, 10, 1460–1469.

- Chen, H.; Li, X.; Zhou, J.; Wang, Y. TPPI: A Novel Network Framework and Model for Efficient Hyperspectral Image Classification. Photogramm. Eng. Remote Sens. 2022, 88, 535–546.

- Sarma, A.; Rao Nidamanuri, R. Evolutionary Optimisation Techniques for Band Selection in Drone-Based Hyperspectral Images for Vegetable Crops Mapping. In Proceedings of the IGARSS 2023—2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; Volume 2023, pp. 7384–7387.

- Farmonov, N.; Amankulova, K.; Szatmari, J.; Sharifi, A.; Abbasi-Moghadam, D.; Nejad, S.M.M.; Mucsi, L. Crop Type Classification by DESIS Hyperspectral Imagery and Machine Learning Algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1576–1588.

- Alajmi, M.; Mengash, H.; Eltahir, M.; Assiri, M.; Ibrahim, S.; Salama, A. Exploiting Hyperspectral Imaging and Optimal Deep Learning for Crop Type Detection and Classification. IEEE Access 2023, 11, 124985–124995.

- Scheibenreif, L.; Mommert, M.; Borth, D. Masked Vision Transformers for Hyperspectral Image Classification. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 2166–2176.

- Bai, Y.; Xu, M.; Zhang, L.; Liu, Y. Pruning Multi-Scale Multi-Branch Network for Small-Sample Hyperspectral Image Classification. Electronics 2023, 12, 674.

- Huang, X.; Zhou, Y.; Yang, X.; Zhu, X.; Wang, K. SS-TMNet: Spatial–Spectral Transformer Network with Multi-Scale Convolution for Hyperspectral Image Classification. Remote Sens. 2023, 15, 1206.

- Liang, J.; Yang, Z.; Bi, Y.; Qu, B.; Liu, M.; Xue, B.; Zhang, M. A Multitree Genetic Programming-Based Feature Construction Approach to Crop Classification Using Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–17.

- Islam, M.R.; Islam, M.T.; Uddin, M.P.; Ulhaq, A. Improving Hyperspectral Image Classification with Compact Multi-Branch Deep Learning. Remote Sens. 2024, 16, 2069.

- Zhang, B.; Chen, Y.; Li, Z.; Xiong, S.; Lu, X. SANet: A Self-Attention Network for Agricultural Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Sankararao, A.U.G.; Rajalakshmi, P.; Choudhary, S. UC-HSI: UAV-Based Crop Hyperspectral Imaging Datasets and Machine Learning Benchmark Results. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Chen, R.; Vivone, G.; Li, G.; Dai, C.; Hong, D.; Chanussot, J. Graph U-Net With Topology-Feature Awareness Pooling for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–14.

- Cheng, W.; Ye, H.; Wen, X.; Su, Q.; Hu, H.; Zhang, J.; Zhang, F. A Crop’s Spectral Signature Is Worth a Compressive Text. Comput. Electron. Agric. 2024, 227, 109576.

- Ravirathinam, P.; Ghosh, R.; Khandelwal, A.; Jia, X.; Mulla, D.; Kumar, V. Combining Satellite and Weather Data for Crop Type Mapping: An Inverse Modelling Approach. In Proceedings of the 2024 SIAM International Conference on Data Mining (SDM), SIAM, Houston, TX, USA, 18–20 April 2024; pp. 445–453.

- Elbouanani, N.; Laamrani, A.; El-Battay, A.; Hajji, H.; Bourriz, M.; Bourzeix, F.; Ait Abdelali, H.; Amazirh, A.; Chehbouni, A. Enhancing Soil Fertility Mapping with Hyperspectral Remote Sensing and Advanced AI: A Comparative Study of Dimensionality Reduction Techniques in Morocco. 2025. Available online: https://meetingorganizer.copernicus.org/EGU25/EGU25-18418.html (accessed on 15 March 2025).

- Ghassemi, B.; Immitzer, M.; Atzberger, C.; Vuolo, F. Evaluation of Accuracy Enhancement in European-Wide Crop Type Mapping by Combining Optical and Microwave Time Series. Land 2022, 11, 1397.

- Kakhani, N.; Rangzan, M.; Jamali, A.; Attarchi, S.; Alavipanah, S.K.; Mommert, M.; Tziolas, N.; Scholten, T. SSL-SoilNet: A Hybrid Transformer-based Framework with Self-Supervised Learning for Large-scale Soil Organic Carbon Prediction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4509915.

- Höhl, A.; Obadic, I.; Torres, M.Á.F.; Najjar, H.; Oliveira, D.; Akata, Z.; Dengel, A.; Zhu, X.X. Opening the Black-Box: A Systematic Review on Explainable AI in Remote Sensing. arXiv 2024, arXiv:2402.13791.

- Abbas, A.; Linardi, M.; Vareille, E.; Christophides, V.; Paris, C. Towards Explainable AI4EO: An Explainable Deep Learning Approach for Crop Type Mapping Using Satellite Images Time Series. In Proceedings of the IGARSS 2023—2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; pp. 1088–1091.

- Ghamisi, P.; Rasti, B.; Yokoya, N.; Wang, Q.; Hofle, B.; Bruzzone, L.; Bovolo, F.; Chi, M.; Anders, K.; Gloaguen, R.; et al. Multisource and Multitemporal Data Fusion in Remote Sensing: A Comprehensive Review of the State of the Art. IEEE Geosci. Remote Sens. Mag. 2019, 7, 6–39.

- Villa, A.; Chanussot, J.; Benediktsson, J.A.; Jutten, C. Spectral Unmixing for the Classification of Hyperspectral Images at a Finer Spatial Resolution. IEEE J. Sel. Top. Signal Process. 2011, 5, 521–533.

- Gumma, M.K.; Panjala, P.; Teluguntla, P. Mapping Heterogeneous Land Use/Land Cover and Crop Types in Senegal Using Sentinel-2 Data and Machine Learning Algorithms. Int. J. Digit. Earth 2024, 17, 2378815.

- Ouzemou, J.E.; El Harti, A.; Lhissou, R.; El Moujahid, A.; Bouch, N.; El Ouazzani, R.; Bachaoui, E.; El Ghmari, A. Crop Type Mapping from Pansharpened Landsat 8 NDVI Data: A Case of a Highly Fragmented and Intensive Agricultural System. Remote Sens. Appl. Soc. Environ. 2018, 11, 94–103.

- Misbah, K.; Laamrani, A.; Voroney, P.; Khechba, K.; Casa, R.; Chehbouni, A. Ensemble Band Selection for Quantification of Soil Total Nitrogen Levels from Hyperspectral Imagery. Remote Sens. 2024, 16, 2549.

- Misbah, K.; Laamrani, A.; Casa, R.; Voroney, P.; Dhiba, D.; Ezzahar, J.; Chehbouni, A. Spatial Prediction of Soil Attributes from PRISMA Hyperspectral Imagery Using Wrapper Feature Selection and Ensemble Modeling. PFG—J. Photogramm. Remote Sens. Geoinf. Sci. 2024, 93, 197–215.

| Main Search Term | Search Query | Boolean Operator Between Query |
|---|---|---|
| Crop Mapping | (TITLE-ABS-KEY (“Crop Mapping” OR “Crop Types Classification” OR “Crop Identification”)) | AND |
| Hyperspectral Data | TITLE-ABS-KEY (“Hyperspectral Imaging” OR “Hyperspectral Remote Sensing” OR “Hyperspectral Satellite” OR “Hyperspectral UAV”) | AND |
| AI Algorithms | TITLE-ABS-KEY (“Machine Learning” OR “Deep Learning” OR “Transformers” OR “Foundation Models”)) | - |
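
The three term groups in the table above combine with OR inside a group and AND between groups. A minimal sketch of that composition follows. The helper name `build_query` and the list `TERM_GROUPS` are hypothetical and introduced only for illustration, and the exact parenthesization of the query the authors submitted cannot be recovered from the table, so the output is an approximation of it.

```python
# Illustrative only: build_query and TERM_GROUPS are hypothetical names,
# not part of the reviewed study or of any database client library.

def build_query(term_groups):
    """Join phrases with OR inside a group and groups with AND."""
    clauses = []
    for phrases in term_groups:
        quoted = " OR ".join(f'"{phrase}"' for phrase in phrases)
        # TITLE-ABS-KEY restricts matching to title, abstract and keywords.
        clauses.append(f"TITLE-ABS-KEY ({quoted})")
    return " AND ".join(clauses)


# Phrases copied from the search-term table.
TERM_GROUPS = [
    ["Crop Mapping", "Crop Types Classification", "Crop Identification"],
    ["Hyperspectral Imaging", "Hyperspectral Remote Sensing",
     "Hyperspectral Satellite", "Hyperspectral UAV"],
    ["Machine Learning", "Deep Learning", "Transformers", "Foundation Models"],
]

query = build_query(TERM_GROUPS)
print(query)
```

Keeping the phrases in a list makes it easy to rerun the search with an added synonym without hand-editing nested parentheses.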

| Sensor | Hyperion | DESIS | PRISMA | EnMAP | EMIT |
|---|---|---|---|---|---|
| Spectral Range (nm) | 400–2500 | 400–1000 | 400–2500 | 420–2450 | 380–2500 |
| Number of Bands | 220 | 235 | 240 | 246 | 285 |
| Spectral Resolution (nm) | VNIR 60; SWIR 10 | VNIR 2.55; SWIR - | VNIR 12; SWIR 12 | VNIR 6.5; SWIR 10 | VNIR 7.4; SWIR 7.4 |
| Spatial Resolution (m) | 30 | 30 | 30 | 30 | 10 |
| Temporal Resolution (days) | 16 | 10 | 29 | 27 | - |
| Operator | NASA | DLR/TBE | ASI | DLR | NASA |
| Lifetime | 2000–2017 | 2018–present | 2019–present | 2022–present | 2022–present |

| Dataset | Year | Source | Spectral Bands | Wavelengths (nm) | Spatial Resolution (m) |
|---|---|---|---|---|---|
| Indian Pines | 1992 | NASA AVIRIS | 220 | 400–2500 | 20 |
| Salinas | 1998 | NASA AVIRIS | 224 | 360–2500 | 3.7 |
| Xuzhou | 2014 | HySpex | 436 | 415–2508 | 0.73 |
| Toulouse | 2021 | Aisa (FENIX) | 420 | 400–2500 | 1 |

| Dataset | Year | Source | Spectral Bands | Wavelengths (nm) | Spatial Resolution (m) |
|---|---|---|---|---|---|
| WHU-Hi-HanChuan | 2016 | Headwall Nano-Hyperspec | 274 | 400–1000 | 0.109 |
| WHU-Hi-HongHu | 2017 | Headwall Nano-Hyperspec | 270 | 400–1000 | 0.043 |
| WHU-Hi-LongKou | 2018 | Headwall Nano-Hyperspec | 270 | 400–1000 | 0.463 |
| UAV-borne Crop Hyperspectral Image (UC-HSI) | 2024 | Resonon’s Pika-L | 300 | 385–1021 | 0.011 |

| Year | Publication Topic | Models | Max Overall Accuracy (%) | Classes | Imagery Source | Features Used | Reference |
|---|---|---|---|---|---|---|---|
| 2019 | Developed a Parallel CNN architecture to classify crops and their growth stages using AVIRIS-NG hyperspectral data. | PCNN, ANN, SVM (Linear, RBF), KNN, SAM | 99.1 | 13 | Airborne Hyperspectral Imagery | Spatial–Spectral | Patel et al. [110] |
| 2019 | Developed a spatial–spectral fusion method based on conditional random fields (SSF-CRF) for fine crop classification using UAV-borne hyperspectral imagery. | SSF-CRF, SVM, MS, MSVC, SVRFMC, DPSCRF | WHU-Han: 94.60, WHU-Hon: 97.95 | 9; 18 | Hyperspectral Benchmark Dataset | Spatial–Spectral, Texture, Morphology | Wei et al. [111] |
| 2020 | Development of a novel 3D-Deep Feature Extraction CNN (3D-DFE-CNN) for hyperspectral image classification. | 3D-DFE-CNN, SVM, 2D-CNN, 3D-CNN | IP: 99.94, SA: 99.99 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Kanthi et al. [79] |
| 2020 | Proposed a robust spectral–spatial classification method using Conditional Random Fields (SCRFs) to handle heterogeneous agricultural areas. | SCRF, SSVM, SVM, RF, OO-FNEA, SSRN | SA: 99.40, WHU-Han: 88.24 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Zhao et al. [112] |
| 2021 | Developed a two-stage classification system combining SVM and CNN for crop classification. | SVM + CNN, SVM, CNN | 100 | 7 | Airborne Hyperspectral Imagery | Spatial–Spectral | Wan et al. [113] |
| 2021 | Compared crop classification using two generations of hyperspectral sensors. | SVM, RF, Naive Bayes, WekaXMeans | EO-1: 100, DE: 85 | 3 | Satellite Hyperspectral Imagery | Spectral | Aneece and Thenkabail [48] |
| 2021 | Demonstrated the utility of PRISMA hyperspectral imagery for binary crop type classification using a 1D-CNN model. | 1D-CNN | 100 | 2 | Satellite Hyperspectral Imagery, Airborne Hyperspectral Imagery | Spectral | Spiller et al. [56] |
| 2021 | Developed a fine classification method for airborne hyperspectral imagery using multi-feature fusion and a DNN-CRF framework. | DNN + CRF with stacking fusion, SVM | WHU-Hon: 98.71, WHU-Xio: 99.71 | 18; 20 | Hyperspectral Benchmark Dataset | Spectral, Texture, Spatial, Morphological | Wei et al. [114] |
| 2021 | Developed a deep learning-based framework using hyperspectral satellite imagery for large-area crop mapping in cloudy conditions. | 3D-CNN, 2D-CNN, 1D-CNN, SVM, RF | 94.65 | 6 | Hyperspectral Satellite Imagery | Spatial–Spectral | Meng et al. [17] |
| 2021 | Proposed a novel HSI classification model integrating pyramidal convolution and iterative attention mechanisms to improve accuracy. | Double-Branch Network with Pyramidal Convolution + Iterative Attention, SVM, CDCNN, SSRN, FDSSC, DBMA, DBDA | IP: 95.90, SA: 98.33 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Shi et al. [115] |
| 2021 | Developed a multitask deep learning model for hyperspectral image classification. | MDL4OW, CROS2R, ResNet, WCRN, DCCNN, SVM, RF | SA: 94.34, IP: 82.61 | 8; 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Liu et al. [116] |
| 2021 | Developed a multi-model ensemble learning method integrating LBP features and sparse representation for hyperspectral image classification. | LBP_SRPMC, SVM, JSRC, CCJSR, JSACR | IP: 98.92 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Cheng et al. [117] |
| 2022 | Proposed a hybrid method combining adaptive spectral–spatial kernel and improved ViT with re-attention and locality mechanisms. | Adaptive Spectral Spatial Kernel + Improved Vision Transformer (ViT), RBF-SVM, CNN, HybridSN, PvResNet, SSRN, SSFTT, A2S2KResNet | IP: 98.81, Xu: 99.80, WHU-Lon: 99.89 | 9; 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Wang et al. [91] |
| 2022 | Developed a Multibranch Crossover Feature Attention Network (MCFANet) for hyperspectral image classification. | MCFANet, SVM, RF, KNN, GaussianNB, SSRN, FDSSC, 2D-CNN, 3D-CNN, HybridSN, MBDA, MSRN | IP: 98.91, SA: 99.54 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Liu et al. [118] |
| 2022 | Developed a partition-based band selection approach integrating entropy, NDVI, and MNDWI for crop mapping. | Partition-Based Band Selection + CNN, 3DGSVM, CNN+MFL, SS3FC, WEDCT-MI | IP: 97.62, SA: 96.08 | 16 | Hyperspectral Benchmark Dataset | Spectral Indices, Spatial–Spectral | Agilandeeswari et al. [119] |
| 2022 | Developed a Geo-Stat Endmember Extraction (GSEE) algorithm for pure pixel identification and applied advanced classifiers for crop type discrimination. | CNN, SAM, SID, SVM, RUSBoost, AdaBoost, Tree Bag | 89.07 | 10 | Airborne Hyperspectral Imagery | Spatial | Singh et al. [120] |
| 2022 | Developed a ResNet-based deep learning architecture for spatial–spectral crop classification using UAV and ground-based hyperspectral imagery. | ResNet + DCNN | 97.16 | 5 | Drone Hyperspectral Imagery, Terrestrial Hyperspectral Imagery | Spatial–Spectral | Galodha et al. [121] |
| 2022 | Evaluation of a CNN compared with a Convolutional Autoencoder and DNN for crop classification using hyperspectral data. | Optimized CNN, Convolutional Autoencoder, DNN | IP: 97, EO-1: 78 | 4; 16 | Hyperspectral Benchmark Dataset, Hyperspectral Satellite Imagery | Spatial | Bhosle and Musande [122] |
| 2022 | Developed HSI-TransUNet, a transformer-based semantic segmentation model for precise crop mapping using UAV hyperspectral imagery. | HSI-TransUNet, TransUNet, UNet, SegNet, SETR | 86.05 | 30 | Drone Hyperspectral Imagery | Spatial–Spectral | Niu et al. [123] |
| 2022 | Introduced a 3D Self-Attention Multiscale Feature Fusion Network for hyperspectral image classification. | 3DSA-MFN, SVM, 3D-CNN, SSAN, SSRN, HSI-BERT, SAT | SA: 99.92, IP: 99.52 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Qing et al. [124] |
| 2022 | Compared classification performance and optimal band selections between PRISMA and DESIS hyperspectral sensors for crop mapping. | SVM, RF | PR: 90, DE: 83 | 7 | Hyperspectral Satellite Imagery | Spectral | Aneece and Thenkabail [50] |
| 2022 | Compared crop classification performance of hyperspectral DESIS data versus high-resolution PlanetScope data for major crops in California’s Central Valley. | SVM, RF | DE: 85, PL: 79 | 8 | Hyperspectral Satellite Imagery | Spectral | Aneece et al. [16] |
| 2022 | Proposed a multi-branch feature fusion framework (DMLPFFN) using dilation-based MLP to enhance hyperspectral crop classification accuracy. | DMLPFFN, DMLP, DFFN, CNN, ResNet, RBF-SVM, EMP-SVM | SA: 99.05, WHU-Lon: 99.16, WHU-Han: 98.05 | 9; 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Wu et al. [125] |
| 2022 | Developed a Rotation-Invariant Attention Network (RIAN) to address rotation sensitivity in hyperspectral image classification. | RIAN, 1-D CNN, 2-D CNN, DHCNet, SpecAN, SSAN, MGCN | SA: 97.5 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Zheng et al. [126] |
| 2022 | Proposed a squirrel search optimization and deep transfer learning-enabled crop classification model for hyperspectral imaging. | SSODTL-CC, CNN, CNN-CRF, SVM, FNEA-OO, SVRFMC | WHU-Lon: 99.23, WHU-Han: 97.15 | 9; 16 | Hyperspectral Benchmark Dataset | Spatial | Hamza et al. [127] |
| 2022 | Proposed a framework using advanced spectral feature representation for precise crop classification in hyperspectral imagery. | SSFSP-CNN, TFP-CN | WHU-Hon: 99.21, SA: 99.24 | 16; 22 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Chen et al. [128] |
| 2022 | Proposed a novel framework for efficient pixel-level dense hyperspectral image classification. | TPPI-Net, HybridSN, pResNet, SSRN, 2D-CNN, 3D-CNN, SSAN | IP: 98.18, SA: 99.06 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Chen et al. [129] |
| 2023 | Developed an active learning-enhanced crop classification method using Marine Predators Algorithm for band optimization. | Optimized RF | 99.69 | 3 | Drone Hyperspectral Imagery | Spectral | Sarma and Nidamanuri [130] |
| 2023 | Proposed Adaptive Multi-Feature Fusion Graph Convolutional Network (AMF-GCN) for hyperspectral image classification. | AMF-GCN, HybridSN, DR-CNN, SAGE-A, MDGCN, MBCUT, JSDF | SA: 98.21 | 16 | Hyperspectral Benchmark Dataset | Spectral, Spatial, Texture | Liu et al. [106] |
| 2023 | Developed a model integrating wavelet transform and attention mechanisms for crop type classification using DESIS imagery. | WA-GCN, MSRN, MDBRSSN, RF, SVM | 97.89 | 5 | Satellite Hyperspectral Imagery | Spatial–Spectral | Farmonov et al. [131] |
| 2023 | Proposed an optimizer for hyperparameter tuning in a deep transfer learning model for crop type classification using HSI. | DODTL-CTDC, CNN, SVM, CNN-CRF, FNEA-OO, SVRFMC, SSODTL-CC | WHU-Lon: 99.47, WHU-Han: 97.66 | 9; 11 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Alajmi et al. [132] |
| 2023 | Proposed a transformer-based model for hyperspectral crop classification. | HyperSFormer, FPGA, SSDGL, SeqFormer | IP: 98.4, WHU-Han: 95.3, WHU-Hon: 98 | 16; 22 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Xie et al. [87] |
| 2023 | Developed a masked vision transformer leveraging spatial–spectral interactions for hyperspectral classification with improved label efficiency. | Masked Spatial–Spectral Transformer, 3D-CNN, ViT-RGB, Spectral T., SST | 82 | 8 | Satellite Hyperspectral Imagery | Spatial–Spectral | Scheibenreif et al. [133] |
| 2023 | Introduced a pruning multi-scale multi-branch hybrid convolutional network for small-sample hyperspectral image classification. | PMSMBN, 2D-CNN, 3D-CNN, SSRN, HybridSN, SSAN, DMCN | SA: 99.78, IP: 96.28 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Bai et al. [134] |
| 2023 | Proposed a spatial–spectral transformer network with multi-scale convolution for hyperspectral image classification. | SS-TMNet, ViT, 3D-CNN, HybridSN, CrossViT, LeViT | IP: 84.67 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Huang et al. [135] |
| 2024 | Proposed a multitree genetic programming-based feature selection for hyperspectral crop classification. | AMTGP with SVM, MLP, LSTM, 1D-CNN | SA: 96.86, WHU-Lon: 93.13 | 9; 16 | Hyperspectral Benchmark Dataset | Spectral, Texture, Spectral Indices | Liang et al. [136] |
| 2024 | Early-season crop mapping using PRISMA and Sentinel-2 satellite data with machine and deep learning algorithms. | 1D-CNN, 3D-CNN, RF, SVM, KNN, MNB | 89 (Winter), 91 (Summer), 92 (Perennial), WHU-Hon: 73.54 | 19 | Satellite Hyperspectral Imagery | Spectral, Spatial–Spectral | Mirzaei et al. [80] |
| 2024 | Introduced the Spectral–Spatial Feature Tokenization Transformer for large-scale agricultural crops. | SSFTT, SpectralFormer, ViT, SSRN, DBDA-MISH, 2D-Deform, SVM | HLJ-Raohe: 95.72, HLJ-Yan: 92.41 | 7; 8 | Satellite Hyperspectral Imagery | Spatial–Spectral | Zhang et al. [88] |
| 2024 | Introduced HypsLiDNet, a 3D-2D CNN model integrating hyperspectral and LiDAR data with spatial–spectral morphological attention for crop classification. | HypsLiDNet, AMSSE-Net, MFT, MCACN, DEGL, HLDC, SVM, RF, 2DCNN, 3DCNN, ViT | 98.86 | 6 | Satellite Hyperspectral Imagery, LiDAR | Spatial–Spectral, Morphological Operators | Farmonov et al. [109] |
| 2024 | Developed a multi-branch deep learning model integrating Factor Analysis and Minimum Noise Fraction for spectral–spatial HSI classification. | CMD, Fast 3D CNN, HybridSN, SpectralNET, MBDA, Tri-CNN | SA: 99.35, IP: 98.45 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Islam et al. [137] |
| 2024 | Investigated hyperspectral crop classification using DESIS and PRISMA with machine learning algorithms. | SVM, RF, SAM | DE+PR: 99, DE: 97, PR: 96 | 5 | Satellite Hyperspectral Imagery | Spectral | Aneece et al. [51] |
| 2024 | Developed a novel 3D-UNet architecture for hyperspectral image classification. | 3D-UNet, HyperUNet, EddyNet, CEU-Net, UNet | IP: 99.60, XI: 99.81, WHU-Hon: 98.85, SA: 99.92 | 16; 20 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Ashraf et al. [81] |
| 2024 | Proposed SANet, a self-attention-based network for agricultural HSI classification. | SANet, ResNet, PyResNet, SSRN, A2S2KResNet, SSFTT, MATNet | WHU-Han: 95.81, WHU-Lon: 99.65, WHU-Hon: 97.40 | 9; 22 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Zhang et al. [138] |
| 2024 | Developed a two-stage semantic segmentation pipeline for agricultural crop classification. | 2D-CNN, 1D-CNN, Random Forest | 92 | 2 | Satellite Hyperspectral Imagery | Spectro-Temporal | Monaco et al. [73] |
| 2024 | Introduced a novel HyperConvFormer model for hyperspectral crop classification and growth stage analysis. | HyperConvFormer, 3D-CNN, ViT, 2D+1D-CNN, SpectralFormer | 95.26 | 10 | Drone Hyperspectral Imagery | Spatial–Spectral | Sankararao et al. [139] |
| 2024 | Introduced a novel graph U-Net with topology feature awareness pooling for hyperspectral image classification. | TFAP graph U-Net, 3D-CNN, SSRN, SSTN, 3DGAN | 97.19 | 16 | Hyperspectral Benchmark Dataset | Spatial–Spectral | Chen et al. [140] |
| 2024 | Introduced a lightweight, non-training framework for hyperspectral crop classification, featuring a Channel2Vec module for spectral embedding and a spectral tokenizer for local sequence extraction. | Channel2Vec, kNN, SVM, 1D-CNN, 2D-CNN, 3D-CNN, ViT, SpectralFormer, GCN | XJM: 95.15, XI: 99.11, SA: 99.17 | 6; 16 | Hyperspectral Benchmark Dataset | Spectral | Cheng et al. [141] |
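
The "Max Overall Accuracy (%)" column is easier to compare across rows with the usual definition in mind: overall accuracy is conventionally the share of test samples that fall on the diagonal of the confusion matrix. The sketch below illustrates that definition only. The function name `overall_accuracy` and the toy matrix are hypothetical and not taken from any listed study, and the individual papers may differ in sampling and evaluation protocol.

```python
# Illustrative only: the confusion matrix below is made-up toy data,
# not a result from any of the studies in the table.

def overall_accuracy(confusion):
    """Return the percentage of samples on the diagonal of a square matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return 100.0 * correct / total


toy_confusion = [
    [50, 2, 3],   # true class 0
    [4, 40, 1],   # true class 1
    [1, 2, 47],   # true class 2
]

oa = overall_accuracy(toy_confusion)
print(f"Overall accuracy: {oa:.2f}%")
```

Because overall accuracy weights every sample equally, a dominant class can mask poor performance on rare crops, which is one reason values in the table are not directly comparable across datasets with different class counts.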

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bourriz, M.; Hajji, H.; Laamrani, A.; Elbouanani, N.; Abdelali, H.A.; Bourzeix, F.; El-Battay, A.; Amazirh, A.; Chehbouni, A. Integration of Hyperspectral Imaging and AI Techniques for Crop Type Mapping: Present Status, Trends, and Challenges. Remote Sens. 2025, 17, 1574. https://doi.org/10.3390/rs17091574
