A Taxonomy of Sensors, Calibration and Computational Methods, and Applications of Mobile Mapping Systems: A Comprehensive Review

Abstract

1. Introduction

2. Sensors

2.1. Global Positioning

2.2. Inertial Measurement Unit (IMU)

2.3. Camera

2.3.1. Camera Calibration

2.3.2. Simultaneous Localization and Mapping (SLAM)

2.3.3. Automatic Image Registration and Image-Based Point Cloud Generation

2.3.4. Why Are Cameras Almost Always Required?

2.4. Light Detection and Ranging (LiDAR)

2.4.1. Geometric Calibration of LiDARs

2.4.2. LiDAR Camera Calibration

2.4.3. LiDAR-SLAM

2.5. Robot Operating System (ROS)

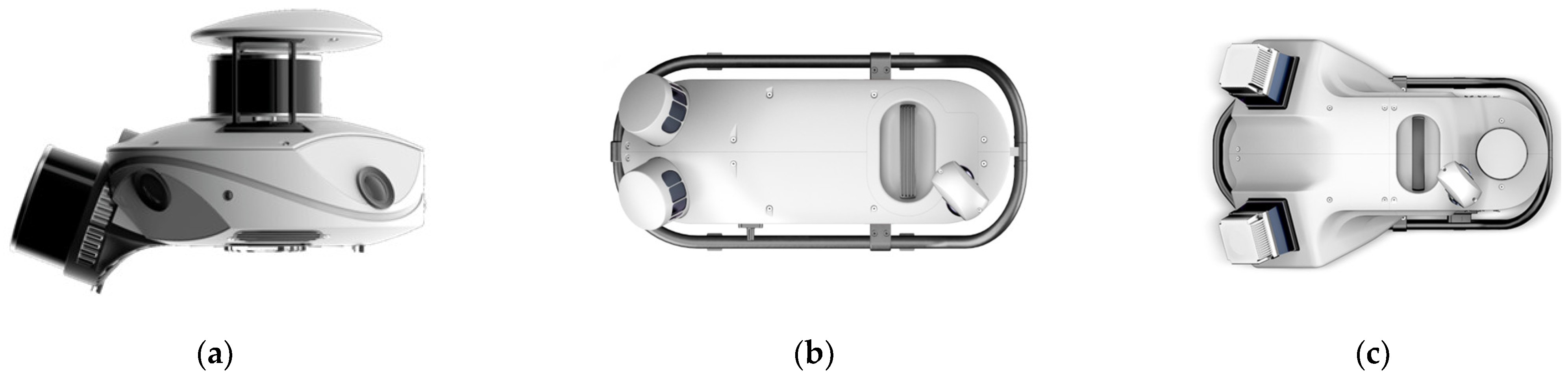

3. Mobile Mapping Systems

3.1. MMS Research Outputs

3.2. Custom MMS Configurations

3.3. Commercial MMSs

3.4. Alternative MMS Platforms

4. Recent Advancements in Applications

4.1. Natural Resource Monitoring and Management

4.2. Forest Management

4.3. Precision Agriculture

4.4. Mapping and Map Updating

4.5. Road Inventory and City Asset Management

4.6. Gamification and Virtual Reality

| Category | Selected Literature | Key Applications |
|---|---|---|
| Natural resource monitoring | Individual tree segmentation [114], forest digital twin [136] | Individual tree detection; trunk recognition from point clouds |
| Forest management and monitoring | Forest parameter estimation at single-tree level [137], 3D mapping [138], biomass and CO2 estimation [139], individual tree detection [140] | Biomass estimation; forest digital twin; CO2 estimation |
| Environment monitoring | Rocky landslide monitoring [141], water body monitoring [142] | Camera-based MMS; hazardous site mapping; image-based point clouds; UAV MMS platforms |
| Precision agriculture | Production estimation [121], crop classification [123], irrigation [143] | Harvest estimation; yield mapping and monitoring; crop classification; irrigation planning and monitoring; water stress monitoring; pest detection |
| Mapping | Map updating [144,145], robotic 3D mapping [146] | Car-mounted MMS; deep-learning classification |
| Real estate | Flood risk mapping [147] | Per-building flood risk modeling |
| Mining industries | Outdoor and indoor mapping [129] | Geotechnical and geological study |
| Road mapping, inventory, and asset management | Traffic infrastructure road property survey [148], highway infrastructure survey, road inventory [133], evaluation of road infrastructure in urban and rural corridors [149], road refurbishment [150], rockfall risk management [151], road boundary extraction [152] | Road property survey; spatial accuracy investigation; traffic sign detection; lighting pole detection; road centerline and building corner detection; enhanced road safety through hazardous object monitoring |
| Construction | Large-scale project monitoring [153], tunnel inspection [154] | MMS with ground penetrating radar (GPR); site mapping |
| Underwater | Underwater mapping [155] | Ocean depth mapping |
| Low-cost developments | Combining low-cost UAV footage with MMS point cloud data [156] | Combining terrestrial MMS and UAV; 3D urban map generation |
| Gamification | Identification of road assets [157] | Applications of game engines |
| Data | SLAM dataset for urban mobile mapping [114], semantic segmentation [158], map updating using autonomous vehicles [159] | Large open datasets; 3D point cloud annotation; MMS data capture by autonomous vehicles |
| Localization | SLAM on an MMS with multi-camera and tilted LiDAR [160] | Customized SLAM application by sensor fusion |
| Custom MMS | Ground penetrating radar MMS [153] | Additional sensors |
| Cultural heritage documentation | Forgotten cultural heritage under forest environments [161], continuous monitoring [132] | Indoor and outdoor mapping; site geometric documentation |
| Wireless networks | Network coverage estimation [128] | Ray tracing using 3D maps; wireless propagation models |

4.7. Adoptability of Different MMSs in Various Applications

5. Discussion and Future Directions

Author Contributions

Funding

Conflicts of Interest

References

- Lockwood, M.; Davidson, J.; Curtis, A.; Stratford, E.; Griffith, R. Governance Principles for Natural Resource Management. Soc. Nat. Resour. 2010, 23, 986–1001.

- Hofmann-Wellenhof, B.; Lichtenegger, H.; Collins, J. Global Positioning System: Theory and Practice; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Li, X.; Zhang, X.; Ren, X.; Fritsche, M.; Wickert, J.; Schuh, H. Precise positioning with current multi-constellation global navigation satellite systems: GPS, GLONASS, Galileo and BeiDou. Sci. Rep. 2015, 5, 8328.

- Olanoff, D. Inside Google Street View: From Larry Page’s Car To The Depths Of The Grand Canyon. Retrieved July 2013, 29, 2019.

- Rizaldy, A.; Firdaus, W. Direct georeferencing: A new standard in photogrammetry for high accuracy mapping. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 5–9.

- Gledhill, D.; Tian, G.Y.; Taylor, D.; Clarke, D. Panoramic imaging—A review. Comput. Graph. 2003, 27, 435–445.

- Explore Street View and Add Your Own 360 Images to Google Maps. Available online: https://www.google.com/streetview/ (accessed on 1 April 2025).

- Yang, B. Developing a mobile mapping system for 3D GIS and smart city planning. Sustainability 2019, 11, 3713.

- Sofia, H.; Anas, E.; Faïz, O. Mobile mapping, machine learning and digital twin for road infrastructure monitoring and maintenance: Case study of Mohammed VI bridge in Morocco. In Proceedings of the 2020 IEEE International Conference of Moroccan Geomatics (Morgeo), Casablanca, Morocco, 11–13 May 2020; pp. 1–6.

- Zhao, G.; Lian, M.; Li, Y.; Duan, Z.; Zhu, S.; Mei, L.; Svanberg, S. Mobile lidar system for environmental monitoring. Appl. Opt. 2017, 56, 1506–1516.

- Blaser, S.; Nebiker, S.; Wisler, D. Portable image-based high performance mobile mapping system in underground environments–system configuration and performance evaluation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 255–262.

- Barazzetti, L.; Remondino, F.; Scaioni, M. Combined use of photogrammetric and computer vision techniques for fully automated and accurate 3D modeling of terrestrial objects. In Proceedings of the Videometrics, Range Imaging, and Applications X, San Diego, CA, USA, 2–3 August 2009; Volume 7447, pp. 183–194.

- Ravi, R.; Lin, Y.-J.; Elbahnasawy, M.; Shamseldin, T.; Habib, A. Simultaneous system calibration of a multi-lidar multicamera mobile mapping platform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1694–1714.

- Hennessy, A.; Clarke, K.; Lewis, M. Hyperspectral classification of plants: A review of waveband selection generalisability. Remote Sens. 2020, 12, 113.

- Manish, R.; Lin, Y.-C.; Ravi, R.; Hasheminasab, S.M.; Zhou, T.; Habib, A. Development of a miniaturized mobile mapping system for in-row, under-canopy phenotyping. Remote Sens. 2021, 13, 276.

- Spinhirne, J.D. Micro pulse lidar. IEEE Trans. Geosci. Remote Sens. 1993, 31, 48–55.

- Garrett, D. Apollo 17. 1972. Available online: https://ntrs.nasa.gov/citations/20090012049 (accessed on 1 April 2025).

- Raj, T.; Hanim Hashim, F.; Baseri Huddin, A.; Ibrahim, M.F.; Hussain, A. A survey on LiDAR scanning mechanisms. Electronics 2020, 9, 741.

- Di Stefano, F.; Chiappini, S.; Gorreja, A.; Balestra, M.; Pierdicca, R. Mobile 3D scan LiDAR: A literature review. Geomat. Nat. Hazards Risk 2021, 12, 2387–2429.

- Kaasinen, E. User needs for location-aware mobile services. Pers. Ubiquitous Comput. 2003, 7, 70–79.

- Karam, S.; Vosselman, G.; Peter, M.; Hosseinyalamdary, S.; Lehtola, V. Design, calibration, and evaluation of a backpack indoor mobile mapping system. Remote Sens. 2019, 11, 905.

- Tachi, T.; Wang, Y.; Abe, R.; Kato, T.; Maebashi, N.; Kishimoto, N. Development of versatile mobile mapping system on a small scale. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 271–275.

- Elhashash, M.; Albanwan, H.; Qin, R. A review of mobile mapping systems: From sensors to applications. Sensors 2022, 22, 4262.

- Luetzenburg, G.; Kroon, A.; Bjørk, A.A. Evaluation of the Apple iPhone 12 Pro LiDAR for an Application in Geosciences. Sci. Rep. 2021, 11, 22221.

- Chase, P.; Clarke, K.; Hawkes, A.; Jabari, S.; Jakus, J. Apple iPhone 13 Pro LiDAR accuracy assessment for engineering applications. In Proceedings of the Transforming Construction with Reality Capture Technologies: The Digital Reality of Tomorrow, Fredericton, NB, Canada, 23–25 August 2022.

- Balado, J.; González, E.; Arias, P.; Castro, D. Novel approach to automatic traffic sign inventory based on mobile mapping system data and deep learning. Remote Sens. 2020, 12, 442.

- Niu, X.; Liu, T.; Kuang, J.; Li, Y. A novel position and orientation system for pedestrian indoor mobile mapping system. IEEE Sens. J. 2020, 21, 2104–2114.

- Nebiker, S.; Meyer, J.; Blaser, S.; Ammann, M.; Rhyner, S. Outdoor mobile mapping and AI-based 3D object detection with low-cost RGB-D cameras: The use case of on-street parking statistics. Remote Sens. 2021, 13, 3099.

- Deng, Y.; Ai, H.; Deng, Z.; Gao, W.; Shang, J. An overview of indoor positioning and mapping technology standards. Standards 2022, 2, 157–183.

- Xie, J.; Wang, H.; Li, P.; Meng, Y. Overview of Navigation Satellite Systems. In Satellite Navigation Systems and Technologies; Space Science and Technologies; Springer: Singapore, 2021; pp. 35–66.

- Zaminpardaz, S.; Teunissen, P.J.; Khodabandeh, A. GLONASS–only FDMA+ CDMA RTK: Performance and outlook. GPS Solut. 2021, 25, 96.

- Henkel, P.; Mittmann, U.; Iafrancesco, M. Real-time kinematic positioning with GPS and GLONASS. In Proceedings of the 2016 24th European Signal Processing Conference (EUSIPCO), Budapest, Hungary, 29 August–2 September 2016; pp. 1063–1067.

- Landau, H.; Vollath, U.; Chen, X. Virtual reference station systems. J. Glob. Position. Syst. 2002, 1, 137–143.

- Kouba, J.; Lahaye, F.; Tétreault, P. Precise Point Positioning. In Springer Handbook of Global Navigation Satellite Systems; Teunissen, P.J.G., Montenbruck, O., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 723–751.

- Elsheikh, M.; Iqbal, U.; Noureldin, A.; Korenberg, M. The implementation of precise point positioning (PPP): A comprehensive review. Sensors 2023, 23, 8874.

- Li, X.; Huang, J.; Li, X.; Shen, Z.; Han, J.; Li, L.; Wang, B. Review of PPP–RTK: Achievements, challenges, and opportunities. Satell. Navig. 2022, 3, 28.

- Zhang, W.; Wang, J.; El-Mowafy, A.; Rizos, C. Integrity monitoring scheme for undifferenced and uncombined multi-frequency multi-constellation PPP-RTK. GPS Solut. 2023, 27, 68.

- Zhang, P.; Zhao, Y.; Lin, H.; Zou, J.; Wang, X.; Yang, F. A novel GNSS attitude determination method based on primary baseline switching for a multi-antenna platform. Remote Sens. 2020, 12, 747.

- Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems; Artech House: Norwood, MA, USA, 2013.

- Cai, X.; Hsu, H.; Chai, H.; Ding, L.; Wang, Y. Multi-antenna GNSS and INS integrated position and attitude determination without base station for land vehicles. J. Navig. 2019, 72, 342–358.

- Zhang, C.; Dong, D.; Chen, W.; Cai, M.; Peng, Y.; Yu, C.; Wu, J. High-accuracy attitude determination using single-difference observables based on multi-antenna GNSS receiver with a common clock. Remote Sens. 2021, 13, 3977.

- Mechanical Design of MEMS Gyroscopes. In MEMS Vibratory Gyroscopes; Springer: Boston, MA, USA, 2009; pp. 1–38.

- Malykin, G.B. The Sagnac effect: Correct and incorrect explanations. Phys.-Uspekhi 2000, 43, 1229.

- Lu, J.; Ye, L.; Zhang, J.; Luo, W.; Liu, H. A new calibration method of MEMS IMU plus FOG IMU. IEEE Sens. J. 2022, 22, 8728–8737.

- Niu, W. Summary of research status and application of MEMS accelerometers. J. Comput. Commun. 2018, 6, 215.

- Kyynäräinen, J.; Saarilahti, J.; Kattelus, H.; Kärkkäinen, A.; Meinander, T.; Oja, A.; Pekko, P.; Seppä, H.; Suhonen, M.; Kuisma, H. A 3D micromechanical compass. Sens. Actuators Phys. 2008, 142, 561–568.

- Chow, W.W.; Gea-Banacloche, J.; Pedrotti, L.M.; Sanders, V.E.; Schleich, W.; Scully, M.O. The ring laser gyro. Rev. Mod. Phys. 1985, 57, 61–104.

- Kang, H.; Yang, J.; Chang, H. A closed-loop accelerometer based on three degree-of-freedom weakly coupled resonator with self-elimination of feedthrough signal. IEEE Sens. J. 2018, 18, 3960–3967.

- Grinberg, B.; Feingold, A.; Koenigsberg, L.; Furman, L. Closed-loop MEMS accelerometer: From design to production. In Proceedings of the 2016 DGON Inertial Sensors and Systems (ISS), Karlsruhe, Germany, 20–21 September 2016; pp. 1–16.

- Zwahlen, P.; Balmain, D.; Habibi, S.; Etter, P.; Rudolf, F.; Brisson, R.; Ullah, P.; Ragot, V. Open-loop and closed-loop high-end accelerometer platforms for high demanding applications. In Proceedings of the 2016 IEEE/ION Position, Location and Navigation Symposium (PLANS), Savannah, GA, USA, 11–14 April 2016; pp. 932–937.

- Santosh Kumar, S.; Tanwar, A. Development of a MEMS-based barometric pressure sensor for micro air vehicle (MAV) altitude measurement. Microsyst. Technol. 2020, 26, 901–912.

- Fiorillo, A.S.; Critello, C.D.; Pullano, S.A. Theory, technology and applications of piezoresistive sensors: A review. Sens. Actuators Phys. 2018, 281, 156–175.

- Thess, A.; Votyakov, E.V.; Kolesnikov, Y. Lorentz Force Velocimetry. Phys. Rev. Lett. 2006, 96, 164501.

- Chulliat, A.; Macmillan, S.; Alken, P.; Beggan, C.; Nair, M.; Hamilton, B.; Woods, A.; Ridley, V.; Maus, S.; Thomson, A. The US/UK World Magnetic Model for 2015–2020. 2015. Available online: https://www.ngdc.noaa.gov/geomag/WMM/data/WMM2015/WMM2015_Report.pdf (accessed on 1 April 2025).

- Skog, I.; Händel, P. Calibration of a MEMS inertial measurement unit. In Proceedings of the XVII IMEKO World Congress, Rio de Janeiro, Brazil, 17–22 September 2006; pp. 1–6.

- Tedaldi, D.; Pretto, A.; Menegatti, E. A robust and easy to implement method for IMU calibration without external equipments. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 3042–3049.

- Albl, C.; Kukelova, Z.; Larsson, V.; Polic, M.; Pajdla, T.; Schindler, K. From two rolling shutters to one global shutter. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2505–2513.

- Vautherin, J.; Rutishauser, S.; Schneider-Zapp, K.; Choi, H.F.; Chovancova, V.; Glass, A.; Strecha, C. Photogrammetric accuracy and modeling of rolling shutter cameras. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 139–146.

- Kauhanen, H.; Rönnholm, P. Wired and wireless camera triggering with Arduino. In Frontiers in Spectral Imaging and 3D Technologies for Geospatial Solutions; International Society for Photogrammetry and Remote Sensing (ISPRS): Vienna, Austria, 2017; pp. 101–106.

- Chowdhury, S.A.H.; Nguyen, C.; Li, H.; Hartley, R. Fixed-Lens camera setup and calibrated image registration for multifocus multiview 3D reconstruction. Neural Comput. Appl. 2021, 33, 7421–7440.

- Lapray, P.-J.; Wang, X.; Thomas, J.-B.; Gouton, P. Multispectral filter arrays: Recent advances and practical implementation. Sensors 2014, 14, 21626–21659.

- Genser, N.; Seiler, J.; Kaup, A. Camera array for multi-spectral imaging. IEEE Trans. Image Process. 2020, 29, 9234–9249.

- Park, J.-I.; Lee, M.-H.; Grossberg, M.D.; Nayar, S.K. Multispectral imaging using multiplexed illumination. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio De Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Olagoke, A.S.; Ibrahim, H.; Teoh, S.S. Literature survey on multi-camera system and its application. IEEE Access 2020, 8, 172892–172922.

- Ramírez-Hernández, L.R.; Rodríguez-Quiñonez, J.C.; Castro-Toscano, M.J.; Hernández-Balbuena, D.; Flores-Fuentes, W.; Rascón-Carmona, R.; Lindner, L.; Sergiyenko, O. Improve three-dimensional point localization accuracy in stereo vision systems using a novel camera calibration method. Int. J. Adv. Robot. Syst. 2020, 17, 1729881419896717.

- Porto, L.R.; Imai, N.N.; Berveglieri, A.; Miyoshi, G.T.; Moriya, É.A.; Tommaselli, A.M.G.; Honkavaara, E. Comparison between two radiometric calibration methods applied to UAV multispectral images. In Image and Signal Processing for Remote Sensing XXVI; SPIE: Bellingham, WA, USA, 2020; Volume 11533, pp. 362–369.

- Huang, B.; Tang, Y.; Ozdemir, S.; Ling, H. A fast and flexible projector-camera calibration system. IEEE Trans. Autom. Sci. Eng. 2020, 18, 1049–1063.

- Zhang, Z. Camera Parameters (Intrinsic, Extrinsic). In Computer Vision; Ikeuchi, K., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 135–140.

- Habib, A.; Detchev, I.; Kwak, E. Stability analysis for a multi-camera photogrammetric system. Sensors 2014, 14, 15084–15112.

- Huai, J.; Shao, Y.; Jozkow, G.; Wang, B.; Chen, D.; He, Y.; Yilmaz, A. Geometric Wide-Angle Camera Calibration: A Review and Comparative Study. Sensors 2024, 24, 6595.

- Honkavaara, E.; Hakala, T.; Markelin, L.; Rosnell, T.; Saari, H.; Mäkynen, J. A process for radiometric correction of UAV image blocks. Photogramm. Fernerkund. Geoinf. 2012, 2, 115–127.

- Suomalainen, J.; Oliveira, R.A.; Hakala, T.; Koivumäki, N.; Markelin, L.; Näsi, R.; Honkavaara, E. Direct reflectance transformation methodology for drone-based hyperspectral imaging. Remote Sens. Environ. 2021, 266, 112691.

- Aulinas, J.; Petillot, Y.; Salvi, J.; Lladó, X. The SLAM problem: A survey. In Frontiers in Artificial Intelligence and Applications; IOS Press: Amsterdam, The Netherlands, 2008.

- He, M.; Zhu, C.; Huang, Q.; Ren, B.; Liu, J. A review of monocular visual odometry. Vis. Comput. 2020, 36, 1053–1065.

- Yang, C.; Chen, Q.; Yang, Y.; Zhang, J.; Wu, M.; Mei, K. SDF-SLAM: A deep learning based highly accurate SLAM using monocular camera aiming at indoor map reconstruction with semantic and depth fusion. IEEE Access 2022, 10, 10259–10272.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Murai, R.; Dexheimer, E.; Davison, A.J. MASt3R-SLAM: Real-Time Dense SLAM with 3D Reconstruction Priors. arXiv 2024, arXiv:2412.12392.

- Teed, Z.; Deng, J. DROID-SLAM: Deep visual SLAM for monocular, stereo, and RGB-D cameras. Adv. Neural Inf. Process. Syst. 2021, 34, 16558–16569.

- Li, S.; Zhang, D.; Xian, Y.; Li, B.; Zhang, T.; Zhong, C. Overview of deep learning application on visual SLAM. Displays 2022, 74, 102298.

- Zitova, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Ye, Y.; Zhu, B.; Tang, T.; Yang, C.; Xu, Q.; Zhang, G. A robust multimodal remote sensing image registration method and system using steerable filters with first-and second-order gradients. ISPRS J. Photogramm. Remote Sens. 2022, 188, 331–350.

- Xiao, X.; Guo, B.; Shi, Y.; Gong, W.; Li, J.; Zhang, C. Robust and rapid matching of oblique UAV images of urban area. In MIPPR 2013: Pattern Recognition and Computer Vision; SPIE: Bellingham, WA, USA, 2013; Volume 8919, pp. 223–230.

- Hossein-Nejad, Z.; Nasri, M. An adaptive image registration method based on SIFT features and RANSAC transform. Comput. Electr. Eng. 2017, 62, 524–537.

- Khoramshahi, E.; Campos, M.B.; Tommaselli, A.M.G.; Vilijanen, N.; Mielonen, T.; Kaartinen, H.; Kukko, A.; Honkavaara, E. Accurate calibration scheme for a multi-camera mobile mapping system. Remote Sens. 2019, 11, 2778.

- Hirschmuller, H. Accurate and efficient stereo processing by semi-global matching and mutual information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 21–23 September 2005; Volume 2, pp. 807–814.

- Seki, A.; Pollefeys, M. SGM-Nets: Semi-global matching with neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 231–240.

- Cao, Z.; Wang, Y.; Zheng, W.; Yin, L.; Tang, Y.; Miao, W.; Liu, S.; Yang, B. The algorithm of stereo vision and shape from shading based on endoscope imaging. Biomed. Signal Process. Control 2022, 76, 103658.

- Long, X.; Guo, Y.-C.; Lin, C.; Liu, Y.; Dou, Z.; Liu, L.; Ma, Y.; Zhang, S.-H.; Habermann, M.; Theobalt, C. Wonder3D: Single image to 3D using cross-domain diffusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 9970–9980.

- Wang, D.; Watkins, C.; Xie, H. MEMS mirrors for LiDAR: A review. Micromachines 2020, 11, 456.

- Zheng, H.; Ma, R.; Liu, M.; Zhu, Z. A linear-array receiver analog front-end circuit for rotating scanner LiDAR application. IEEE Sens. J. 2019, 19, 5053–5061.

- Xue, F.; Lu, W.; Chen, Z.; Webster, C.J. From LiDAR point cloud towards digital twin city: Clustering city objects based on Gestalt principles. ISPRS J. Photogramm. Remote Sens. 2020, 167, 418–431.

- Allouis, T.; Bailly, J.; Pastol, Y.; Le Roux, C. Comparison of LiDAR waveform processing methods for very shallow water bathymetry using Raman, near-infrared and green signals. Earth Surf. Process. Landf. 2010, 35, 640–650.

- Szafarczyk, A.; Toś, C. The use of green laser in LiDAR bathymetry: State of the art and recent advancements. Sensors 2022, 23, 292.

- Goyer, G.G.; Watson, R. The laser and its application to meteorology. Bull. Am. Meteorol. Soc. 1963, 44, 564–570.

- Zou, Q.; Sun, Q.; Chen, L.; Nie, B.; Li, Q. A comparative analysis of LiDAR SLAM-based indoor navigation for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6907–6921.

- Rahman, M.F.; Onoda, Y.; Kitajima, K. Forest canopy height variation in relation to topography and forest types in central Japan with LiDAR. For. Ecol. Manag. 2022, 503, 119792.

- Du, L.; Pang, Y.; Ni, W.; Liang, X.; Li, Z.; Suarez, J.; Wei, W. Forest terrain and canopy height estimation using stereo images and spaceborne LiDAR data from GF-7 satellite. Geo-Spat. Inf. Sci. 2024, 27, 811–821.

- Uciechowska-Grakowicz, A.; Herrera-Granados, O.; Biernat, S.; Bac-Bronowicz, J. Usage of Airborne LiDAR Data and High-Resolution Remote Sensing Images in Implementing the Smart City Concept. Remote Sens. 2023, 15, 5776.

- Dhanani, N.; Vignesh, V.P.; Venkatachalam, S. Demonstration of LiDAR on Accurate Surface Damage Measurement: A Case of Transportation Infrastructure. In ISARC. Proceedings of the International Symposium on Automation and Robotics in Construction; IAARC Publications: Chennai, India, 2023; Volume 40, pp. 553–560.

- Wang, H.; Feng, D. Rapid Geometric Evaluation of Transportation Infrastructure Based on a Proposed Low-Cost Portable Mobile Laser Scanning System. Sensors 2024, 24, 425.

- Viswanath, K.; Jiang, P.; Saripalli, S. Reflectivity Is All You Need!: Advancing LiDAR Semantic Segmentation. arXiv 2024, arXiv:2403.13188.

- Zang, Y.; Yang, B.; Liang, F.; Xiao, X. Novel adaptive laser scanning method for point clouds of free-form objects. Sensors 2018, 18, 2239.

- Hashimoto, T.; Saito, M. Normal Estimation for Accurate 3D Mesh Reconstruction with Point Cloud Model Incorporating Spatial Structure. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019; Volume 1, pp. 1–10.

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690.

- Jones, K.; Lichti, D.D.; Radovanovic, R. Synthetic Images for Georeferencing Camera Images in Mobile Mapping Point-clouds. Can. J. Remote Sens. 2024, 50, 2300328.

- Ihmeida, M.; Wei, H. Image registration techniques and applications: Comparative study on remote sensing imagery. In Proceedings of the 2021 14th International Conference on Developments in Esystems Engineering (DeSE), Sharjah, United Arab Emirates, 7–10 December 2021; pp. 142–148.

- Yang, H.; Shi, J.; Carlone, L. TEASER: Fast and certifiable point cloud registration. IEEE Trans. Robot. 2020, 37, 314–333.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In IEEE ICRA Workshop on Open Source Software; IEEE: Kobe, Japan, 2009; Volume 3, p. 5.

- Macenski, S.; Foote, T.; Gerkey, B.; Lalancette, C.; Woodall, W. Robot Operating System 2: Design, architecture, and uses in the wild. Sci. Robot. 2022, 7, eabm6074.

- Trybała, P.; Kujawa, P.; Romańczukiewicz, K.; Szrek, A.; Remondino, F. Designing and evaluating a portable LiDAR-based SLAM system. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 191–198.

- Menna, F.; Torresani, A.; Battisti, R.; Nocerino, E.; Remondino, F. A modular and low-cost portable VSLAM system for real-time 3D mapping: From indoor and outdoor spaces to underwater environments. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 48, 153–162.

- Będkowski, J. Open source, open hardware hand-held mobile mapping system for large scale surveys. SoftwareX 2024, 25, 101618.

- Zhang, Y.; Ahmadi, S.; Kang, J.; Arjmandi, Z.; Sohn, G. YUTO MMS: A comprehensive SLAM dataset for urban mobile mapping with tilted LiDAR and panoramic camera integration. Int. J. Robot. Res. 2025, 44, 3–21.

- Xu, D.; Wang, H.; Xu, W.; Luan, Z.; Xu, X. LiDAR applications to estimate forest biomass at individual tree scale: Opportunities, challenges and future perspectives. Forests 2021, 12, 550.

- Zhang, M.; Li, W.; Zhao, X.; Liu, H.; Tao, R.; Du, Q. Morphological transformation and spatial-logical aggregation for tree species classification using hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

- Asner, G.P.; Ustin, S.L.; Townsend, P.; Martin, R.E. Forest biophysical and biochemical properties from hyperspectral and LiDAR remote sensing. In Remote Sensing Handbook, Volume IV; CRC Press: Boca Raton, FL, USA, 2024; pp. 96–124.

- Ahmad, U.; Alvino, A.; Marino, S. A review of crop water stress assessment using remote sensing. Remote Sens. 2021, 13, 4155.

- Guo, Y.; Chen, W.Y. Monitoring tree canopy dynamics across heterogeneous urban habitats: A longitudinal study using multi-source remote sensing data. J. Environ. Manag. 2024, 356, 120542.

- Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.-L.; He, Y. Intelligent agriculture: Deep learning in UAV-based remote sensing imagery for crop diseases and pests detection. Front. Plant Sci. 2024, 15, 1435016.

- Li, F.; Bai, J.; Zhang, M.; Zhang, R. Yield estimation of high-density cotton fields using low-altitude UAV imaging and deep learning. Plant Methods 2022, 18, 55.

- Lambertini, A.; Mandanici, E.; Tini, M.A.; Vittuari, L. Technical challenges for multi-temporal and multi-sensor image processing surveyed by UAV for mapping and monitoring in precision agriculture. Remote Sens. 2022, 14, 4954.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Deep learning techniques to classify agricultural crops through UAV imagery: A review. Neural Comput. Appl. 2022, 34, 9511–9536.

- Moradi, S.; Bokani, A.; Hassan, J. UAV-based smart agriculture: A review of UAV sensing and applications. In Proceedings of the 2022 32nd International Telecommunication Networks and Applications Conference (ITNAC), Wellington, New Zealand, 30 November–2 December 2022; pp. 181–184.

- Dong, H.; Dong, J.; Sun, S.; Bai, T.; Zhao, D.; Yin, Y.; Shen, X.; Wang, Y.; Zhang, Z.; Wang, Y. Crop water stress detection based on UAV remote sensing systems. Agric. Water Manag. 2024, 303, 109059.

- Treccani, D.; Adami, A.; Brunelli, V.; Fregonese, L. Mobile mapping system for historic built heritage and GIS integration: A challenging case study. Appl. Geomat. 2024, 16, 293–312.

- Naeem, N.; Rana, I.A.; Nasir, A.R. Digital real estate: A review of the technologies and tools transforming the industry and society. Smart Constr. Sustain. Cities 2023, 1, 15.

- Hoydis, J.; Cammerer, S.; Aoudia, F.A.; Vem, A.; Binder, N.; Marcus, G.; Keller, A. Sionna: An Open-Source Library for Next-Generation Physical Layer Research. arXiv 2023, arXiv:2203.11854. [Google Scholar] [CrossRef]

- Vassena, G. Outdoor and indoor mapping of a mining site by indoor mobile mapping and geo referenced Ground Control Scans. In Proceedings of the XXVII FIG CONFERENCE, Warsaw, Poland, 11–15 September 2022; Volume 1, pp. 1–10. [Google Scholar]

- Hasegawa, H.; Sujaswara, A.A.; Kanemoto, T.; Tsubota, K. Possibilities of using UAV for estimating earthwork volumes during process of repairing a small-scale forest road, case study from Kyoto Prefecture, Japan. Forests 2023, 14, 677. [Google Scholar] [CrossRef]

- Yıldız, S.; Kıvrak, S.; Arslan, G. Using drone technologies for construction project management: A narrative review. J. Constr. Eng. Manag. Innov. 2021, 4, 229–244. [Google Scholar] [CrossRef]

- Campi, M.; Falcone, M.; Sabbatini, S. Towards continuous monitoring of architecture. Terrestrial laser scanning and mobile mapping system for the diagnostic phases of the cultural heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 46, 121–127.

- Kurşun, H. Accuracy comparison of mobile mapping system for road inventory. Mersin Photogramm. J. 2023, 5, 55–66.

- Agarwal, D.; Kucukpinar, T.; Fraser, J.; Kerley, J.; Buck, A.R.; Anderson, D.T.; Palaniappan, K. Simulating city-scale aerial data collection using unreal engine. In Proceedings of the 2023 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), St. Louis, MO, USA, 27–29 September 2023; pp. 1–9.

- Sabir, A.; Hussain, R.; Pedro, A.; Soltani, M.; Lee, D.; Park, C.; Pyeon, J.-H. Synthetic Data Generation with Unity 3D and Unreal Engine for Construction Hazard Scenarios: A Comparative Analysis. In International Conference on Construction Engineering and Project Management; Korea Institute of Construction Engineering and Management: Seoul, Republic of Korea, 2024; pp. 1286–1288.

- Iwaszczuk, D.; Goebel, M.; Du, Y.; Schmidt, J.; Weinmann, M. Potential of Mobile Mapping To Create Digital Twins of Forests. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 199–206.

- Spadavecchia, C.; Belcore, E.; Grasso, N.; Piras, M. A fully automatic forest parameters extraction at single-tree level: A comparison of mls and tls applications. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 457–463.

- Fol, C.; Murtiyoso, A.; Kükenbrink, D.; Remondino, F.; Griess, V. Terrestrial 3D Mapping of Forests: Georeferencing Challenges and Sensors Comparisons. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 55–61.

- Spadavecchia, C. Innovative LiDAR-Based Solution for Automatic Assessment of Forest Parameters for Estimating Aboveground Biomass and CO2 Storage. Doctoral Dissertation, Politecnico di Torino, Torino, Italy, July 2024. Available online: https://tesidottorato.depositolegale.it/bitstream/20.500.14242/170710/1/conv_final_phd_thesis_spadavecchia.pdf (accessed on 1 April 2025).

- Yang, T.; Ryu, Y.; Kwon, R.; Choi, C.; Zhong, Z.; Nam, Y.; Jo, S. Mapping Carbon Stock of Individual Street Trees Using Lidar-Camera Fusion-Based Mobile Mapping System. Available online: https://ssrn.com/abstract=4762402 (accessed on 1 April 2025).

- Cabrelles, M.; Lerma, J.; García-Asenjo, L.; Garrigues, P.; Martínez, L. Long and Close-Range Terrestrial Photogrammetry for Rocky Landscape Deformation Monitoring. In Proceedings of the 5th Joint International Symposium on Deformation Monitoring (JISDM 2022), Valencia, Spain, 20–22 June 2023; pp. 485–491.

- Șmuleac, A.; Șmuleac, L.; Popescu, C.A.; Herban, S.; Man, T.E.; Imbrea, F.; Horablaga, A.; Mihai, S.; Paşcalău, R.; Safar, T. Geospatial Technologies Used in the Management of Water Resources in West of Romania. Water 2022, 14, 3729.

- Bwambale, E.; Abagale, F.K.; Anornu, G.K. Smart irrigation monitoring and control strategies for improving water use efficiency in precision agriculture: A review. Agric. Water Manag. 2022, 260, 107324.

- Hwang, J.; Yun, H.; Jeong, T.; Suh, Y.; Huang, H. Frequent unscheduled updates of the national base map using the land-based mobile mapping system. Remote Sens. 2013, 5, 2513–2533.

- Fryskowska, A.; Wroblewski, P. Mobile Laser Scanning accuracy assessment for the purpose of base-map updating. Geod. Cartogr. 2018, 67, 35–55.

- Maset, E.; Scalera, L.; Beinat, A.; Visintini, D.; Gasparetto, A. Performance investigation and repeatability assessment of a mobile robotic system for 3D mapping. Robotics 2022, 11, 54.

- Feng, Y.; Xiao, Q.; Brenner, C.; Peche, A.; Yang, J.; Feuerhake, U.; Sester, M. Determination of building flood risk maps from LiDAR mobile mapping data. Comput. Environ. Urban Syst. 2022, 93, 101759.

- Yuan, Q.; Yao, L.; Xu, Z.; Liu, H. Survey of expressway infrastructure based on vehicle-borne mobile mapping system. In International Conference on Intelligent Traffic Systems and Smart City (ITSSC 2021); SPIE: Bellingham, WA, USA, 2022; Volume 12165, pp. 404–410.

- Espinel-Gomez, D.; Fernandez-Gomez, W.; Moreno-Moreno, J.; Carranza-Leguizamo, D.; Marrugo, C. A Smart Mobile Mapping Application for the Evaluation of Road Infrastructure in Urban and Rural Corridors. In Applied Computer Sciences in Engineering; Communications in Computer and Information Science; Figueroa-García, J.C., Hernández, G., Suero Pérez, D.F., Gaona García, E.E., Eds.; Springer Nature: Cham, Switzerland, 2025; Volume 2222, pp. 175–185.

- Simon, M.; Neo, O.; Șmuleac, L.; Șmuleac, A. The Use of lidar technology-mobile mapping in urban road infrastructure. Res. J. Agric. Sci. 2023, 55, 229.

- Simeoni, L.; Vitti, A.; Ferro, E.; Corsini, A.; Ronchetti, F.; Lelli, F.; Costa, C.; Quattrociocchi, D.; Rover, S.; Beltrami, A. Mobile Terrestrial LiDAR survey for rockfall risk management along a highway. Procedia Struct. Integr. 2024, 62, 499–505.

- Suleymanoglu, B.; Soycan, M.; Toth, C. 3d road boundary extraction based on machine learning strategy using lidar and image-derived mms point clouds. Sensors 2024, 24, 503.

- Lisjak, J.; Petrinović, M.; Keleminec, S. Harnessing Remote Sensing Technologies for Successful Large-Scale Projects. Teh. Glas. 2024, 18, 104–109.

- Sjölander, A.; Belloni, V.; Ansell, A.; Nordström, E. Towards automated inspections of tunnels: A review of optical inspections and autonomous assessment of concrete tunnel linings. Sensors 2023, 23, 3189.

- Menna, F.; Battisti, R.; Nocerino, E.; Remondino, F. FROG: A portable underwater mobile mapping system. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 295–302.

- Farkoushi, M.G.; Hong, S.; Sohn, H.-G. Generating Seamless Three-Dimensional Maps by Integrating Low-Cost Unmanned Aerial Vehicle Imagery and Mobile Mapping System Data. Sensors 2025, 25, 822.

- Barros-Sobrín, Á.; Balado, J.; Soilán, M.; Mingueza-Bauzá, E. Gamification for road asset inspection from Mobile Mapping System data. J. Spat. Sci. 2024, 69, 443–466.

- Peters, T.; Brenner, C.; Schindler, K. Semantic segmentation of mobile mapping point clouds via multi-view label transfer. ISPRS J. Photogramm. Remote Sens. 2023, 202, 30–39.

- Hyyppä, E.; Manninen, P.; Maanpää, J.; Taher, J.; Litkey, P.; Hyyti, H.; Kukko, A.; Kaartinen, H.; Ahokas, E.; Yu, X. Can the perception data of autonomous vehicles be used to replace mobile mapping surveys? A case study surveying roadside city trees. Remote Sens. 2023, 15, 1790.

- Zhang, Y.; Kang, J.; Sohn, G. PVL-Cartographer: Panoramic vision-aided lidar cartographer-based slam for maverick mobile mapping system. Remote Sens. 2023, 15, 3383.

- Maté-González, M.Á.; Di Pietra, V.; Piras, M. Evaluation of different LiDAR technologies for the documentation of forgotten cultural heritage under forest environments. Sensors 2022, 22, 6314.

- Oleiwi, B.K.; Mahfuz, A.; Roth, H. Application of fuzzy logic for collision avoidance of mobile robots in dynamic-indoor environments. In Proceedings of the 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 5–7 January 2021; pp. 131–136.

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2022, 2, 10–19.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

- Reichert, M.; Di Candia, R.; Win, M.Z.; Sanz, M. Quantum-enhanced Doppler lidar. Npj Quantum Inf. 2022, 8, 147.

| Sensor | Technologies | | | | |
|---|---|---|---|---|---|
| Compass, Magnetometer, Accelerometer, Pressure, Temperature | Mechanical | MEMS | | | |
| Ultrasonic | Electrical | Flash light | | | |
| GNSS | Differential | RTK | PPP | PPP-RTK | NRTK |
| | Multi-constellation | Multi-antenna | | | |
| IMU | Mechanical | FOG | MEMS | RLG | |
| | Kalman Filter | | | | |
| LiDAR | Non-scanning | Non-mechanical | Multi-temporal | Hyper temporal | MEMS |
| | Scanning | Mechanical | OPA | SLAM | |
| | Motorized Optomechanical | | | | |
| Camera | RGB | Infrared | Multi-spectral | Multi-camera | Multi-fisheye |
| | RGB-D | Hyper-spectral | Multi-projective | | |
| | Geometric Calibration | Radiometric Calibration | SLAM | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khoramshahi, E.; Nezami, S.; Pellikka, P.; Honkavaara, E.; Chen, Y.; Habib, A. A Taxonomy of Sensors, Calibration and Computational Methods, and Applications of Mobile Mapping Systems: A Comprehensive Review. Remote Sens. 2025, 17, 1502. https://doi.org/10.3390/rs17091502
