Collaborative Static-Dynamic Teaching: A Semi-Supervised Framework for Stripe-like Space Target Detection

Abstract

1. Introduction

- Novel MRSA-Net: We design a network specialized for stripe-like space target detection (SSTD). It extracts features from variable, low-SNR stripe-like targets through multi-receptive field feature extraction and multi-level weighted feature fusion.

- Innovative CSDT Architecture: It learns stripe-like patterns from unlabeled space images, which reduces the dependency on large sets of pixel-level annotations that are labor-intensive to produce and often inaccurate. This marks the first application of semi-supervised learning techniques to SSTD.

- New Adaptive Pseudo-Labeling (APL) Strategy: It draws on both the static teacher and the dynamic teacher models to select the most reliable pseudo-labels during training, reducing the risk of overfitting.

- Comprehensive Validation: Extensive experiments show that the CSDT framework achieves state-of-the-art performance on the AstroStripeSet dataset and generalizes robustly in a zero-shot setting to diverse real-world datasets from both ground-based and space-based sources.
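The contributions above refer to a static teacher and a dynamic teacher. As a minimal sketch only, and assuming (this is not stated in the text above) that the static teacher stays frozen while the dynamic teacher tracks the student through an exponential moving average, as in Mean Teacher [19], the update could look like the following. The function `ema_update`, the `decay` value, and the toy weights are hypothetical and chosen purely for illustration.

```python
# Sketch under stated assumptions: EMA update of a "dynamic teacher" from a student.
# The EMA rule itself is an assumption borrowed from Mean Teacher [19].

def ema_update(teacher, student, decay=0.99):
    """Return new teacher weights: decay * teacher + (1 - decay) * student."""
    return {
        name: [decay * t + (1.0 - decay) * s
               for t, s in zip(teacher[name], student[name])]
        for name in teacher
    }

# Toy weights (hypothetical): one parameter tensor flattened into a list.
student = {"w": [1.0, 2.0]}
static_teacher = {"w": [0.5, 0.5]}   # assumed frozen, so it is never updated here
dynamic_teacher = {"w": [0.0, 0.0]}

# One update step after a (hypothetical) student optimization step.
dynamic_teacher = ema_update(dynamic_teacher, student, decay=0.9)
```

With `decay=0.9`, the dynamic teacher moves one tenth of the way toward the student weights, while `static_teacher` is left untouched.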

2. Related Work

2.1. Traditional Unsupervised Methods for SSTD

2.2. Fully Supervised Learning Methods for Target Detection

2.3. Semi-Supervised Learning Methods for Target Detection

3. Proposed Framework

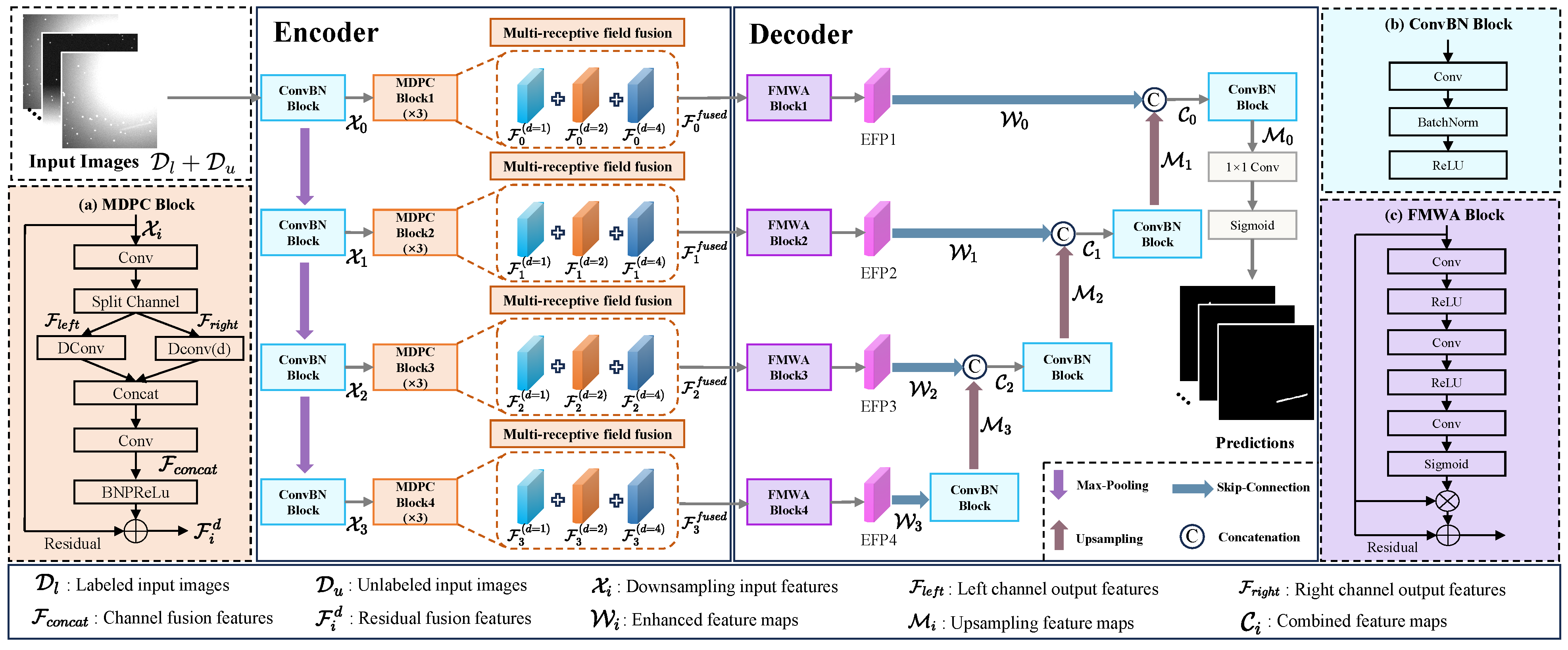

3.1. MRSA-Net Configuration

3.1.1. Multi-Receptive Feature Extraction Encoder

3.1.2. Multi-Level Feature Fusion Decoder

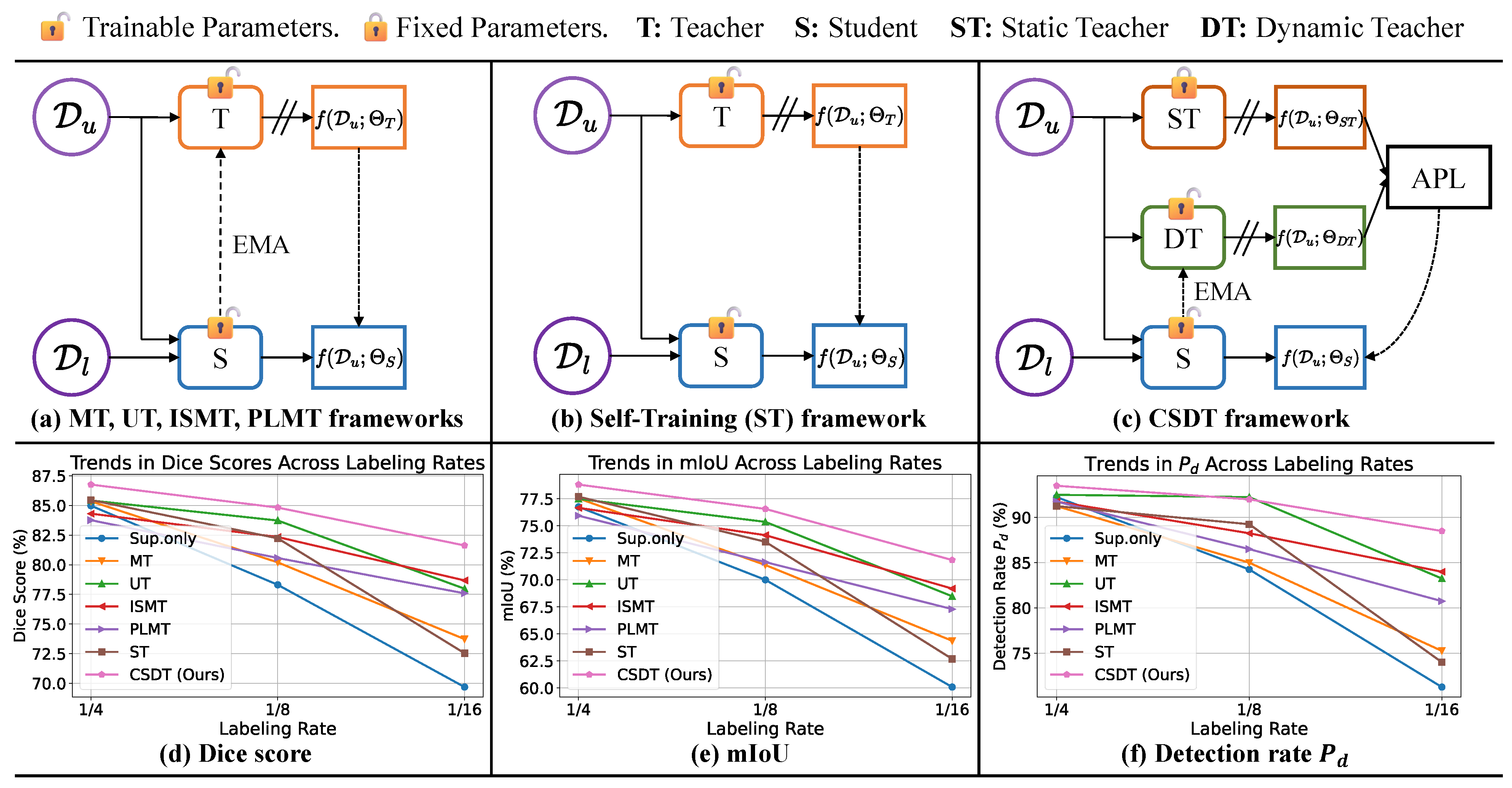

3.2. CSDT Semi-Supervised Learning Architecture

3.2.1. The Role of the Static Teacher Model

Algorithm 1. CSDT training and updating strategies

3.2.2. The Role of the Dynamic Teacher Model

3.2.3. The Role of the Student Model

3.3. Adaptive Pseudo-Labeling Strategy

4. Experiments

4.1. Experimental Setup

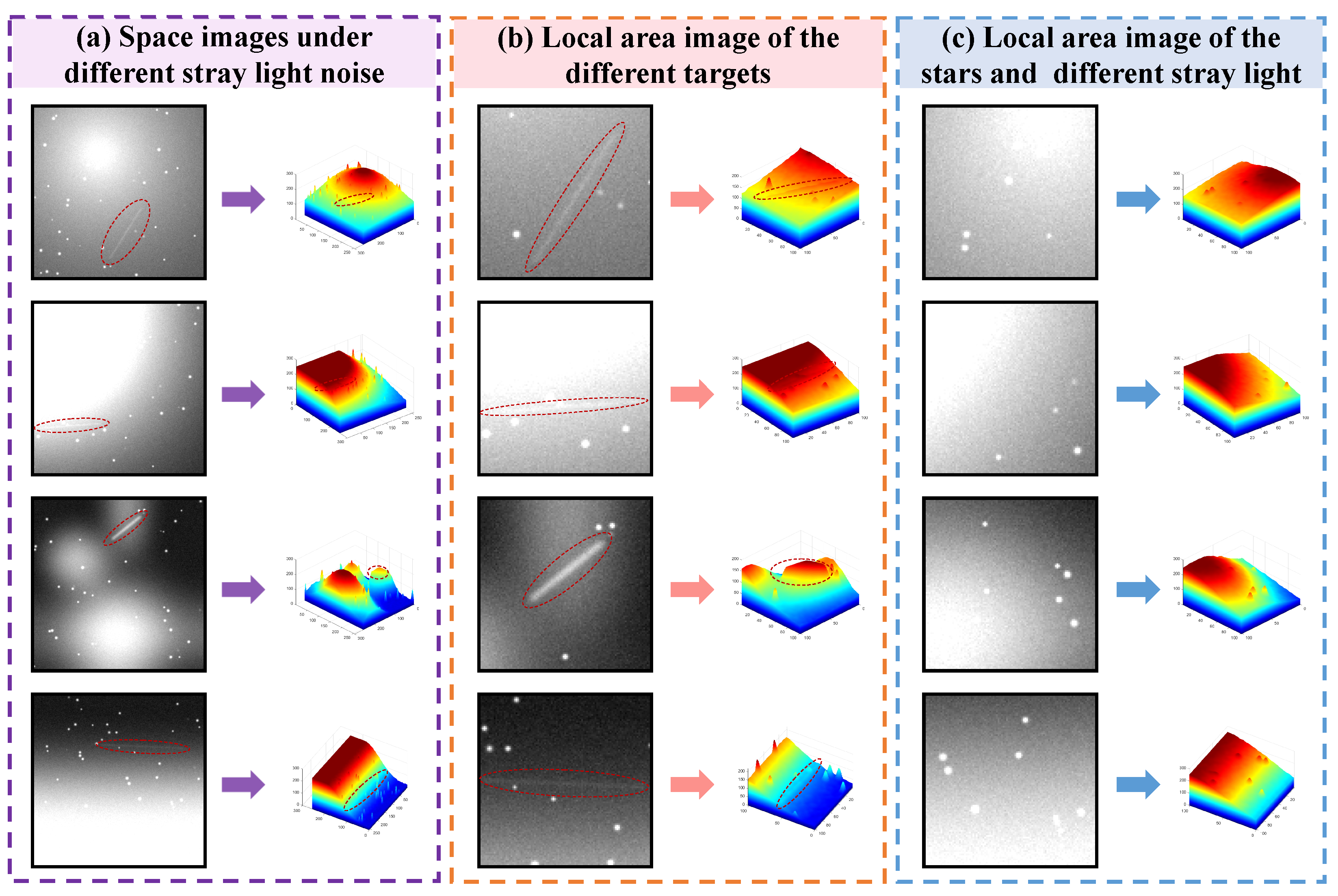

4.1.1. Dataset

4.1.2. Evaluation Metrics
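The result tables report Dice and mIoU alongside Pd and Fa. As a hedged illustration, the sketch below computes the standard Dice coefficient and intersection over union (IoU) for binary masks. Treating mIoU as the per-image IoU averaged over images is an assumption, and the function names are hypothetical. Pd and Fa are not sketched because their exact definitions are not given here.

```python
# Sketch: standard Dice and IoU for binary masks given as nested lists of 0/1.
from itertools import chain

def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two binary masks of identical shape."""
    p = list(chain.from_iterable(pred))
    t = list(chain.from_iterable(target))
    inter = sum(a * b for a, b in zip(p, t))
    p_sum, t_sum = sum(p), sum(t)
    if p_sum + t_sum == 0:
        # Both masks empty: treat as perfect agreement (a convention, not a rule).
        return 1.0, 1.0
    dice = 2.0 * inter / (p_sum + t_sum)
    iou = inter / (p_sum + t_sum - inter)
    return dice, iou

def mean_iou(pairs):
    """Average per-image IoU over (pred, target) pairs (assumed mIoU definition)."""
    return sum(dice_and_iou(p, t)[1] for p, t in pairs) / len(pairs)

pred = [[1, 1], [0, 0]]
target = [[1, 0], [1, 0]]
dice, iou = dice_and_iou(pred, target)  # one overlapping pixel out of 2 + 2
```

For this toy pair, the overlap is one pixel, so Dice is 2·1/(2+2) = 0.5 and IoU is 1/3.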

4.1.3. Implementation Details

4.2. Comparison with SOTA Semi-Supervised Learning Methods

4.2.1. Quantitative Comparison

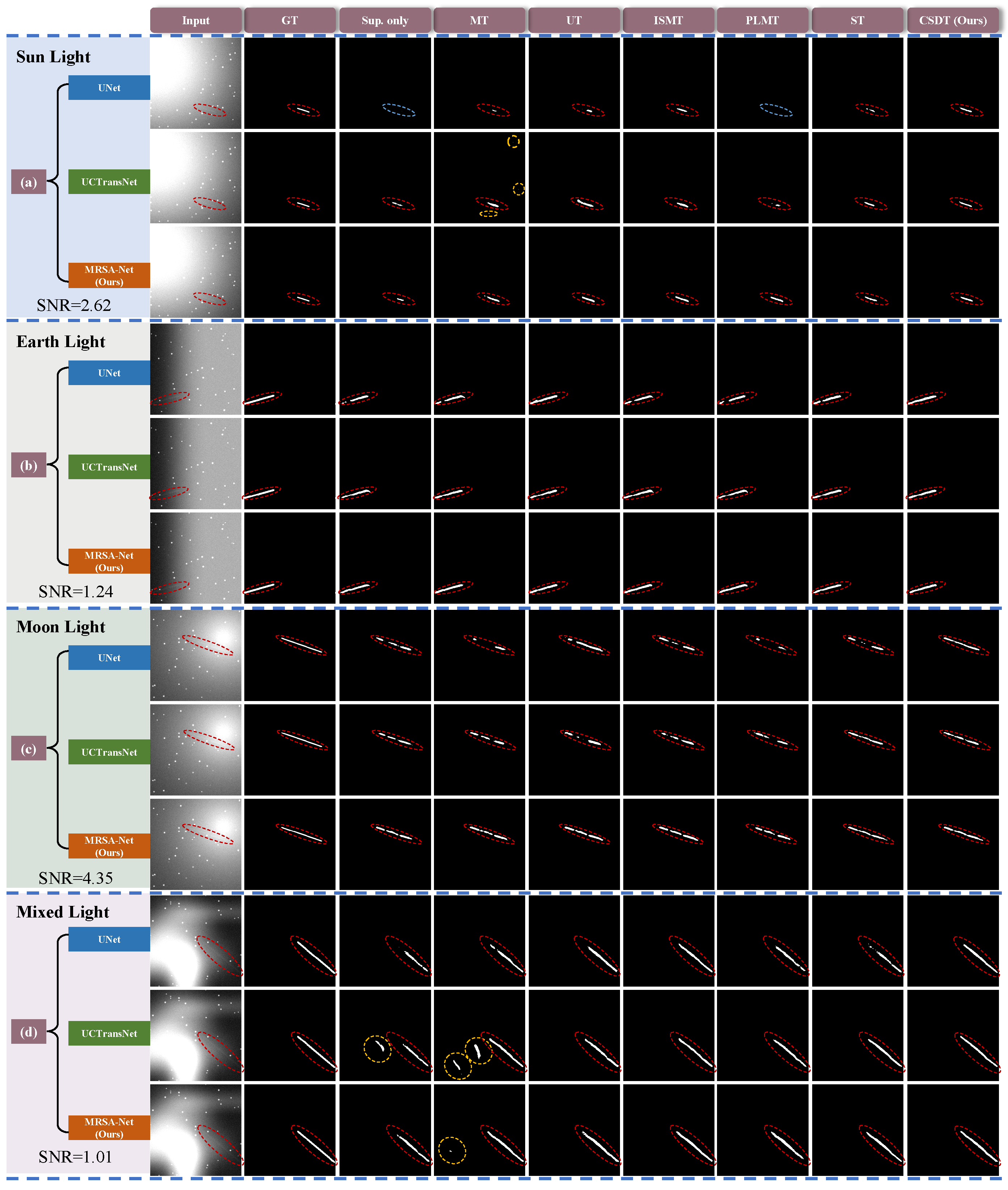

4.2.2. Visual Effect Assessments

4.3. Ablation Study

4.3.1. Zero-Shot Generalization Capabilities

4.3.2. Contribution of MRSA-Net Components

4.3.3. Single-Teacher vs. Dual-Teacher Supervision

4.3.4. Evaluation of APL Strategy

4.3.5. Impact of Loss Functions

4.3.6. Comparison with Other Networks

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, Z.; Wang, Y.; Zheng, W. Space-based optical observations on space debris via multipoint of view. Int. J. Aerosp. Eng. 2020, 2020, 8328405.

2. Wirnsberger, H.; Baur, O.; Kirchner, G. Space debris orbit prediction errors using bi-static laser observations. Case study: ENVISAT. Adv. Space Res. 2015, 55, 2607–2615.

3. Zhang, J.; Shi, A.; Yang, K. Dynamics of tethered-coulomb formation for debris deorbiting in geosynchronous orbit. J. Aerosp. Eng. 2022, 35, 04022015.

4. Liu, D.; Chen, B.; Chin, T.J.; Rutten, M.G. Topological sweep for multi-target detection of geostationary space objects. IEEE Trans. Signal Process. 2020, 68, 5166–5177.

5. Diprima, F.; Santoni, F.; Piergentili, F.; Fortunato, V.; Abbattista, C.; Amoruso, L. Efficient and automatic image reduction framework for space debris detection based on GPU technology. Acta Astronaut. 2018, 145, 332–341.

6. Liu, D.; Wang, X.; Xu, Z.; Li, Y.; Liu, W. Space target extraction and detection for wide-field surveillance. Astron. Comput. 2020, 32, 100408.

7. Yao, Y.; Zhu, J.; Liu, Q.; Lu, Y.; Xu, X. An adaptive space target detection algorithm. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517605.

8. Felt, V.; Fletcher, J. Seeing Stars: Learned Star Localization for Narrow-Field Astrometry. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Nashville TN, USA, 11–15 June 2024; pp. 8297–8305.

9. Lin, B.; Zhong, L.; Zhuge, S.; Yang, X.; Yang, Y.; Wang, K.; Zhang, X. A New Pattern for Detection of Streak-like Space Target From Single Optical Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5616113.

10. Jiang, P.; Liu, C.; Yang, W.; Kang, Z.; Li, Z. Automatic space debris extraction channel based on large field of view photoelectric detection system. Publ. Astron. Soc. Pac. 2022, 134, 024503.

11. Lu, K.; Li, H.; Lin, L.; Zhao, R.; Liu, E.; Zhao, R. A Fast Star-Detection Algorithm under Stray-Light Interference. Photonics 2023, 10, 889.

12. Xu, Z.; Liu, D.; Yan, C.; Hu, C. Stray light nonuniform background correction for a wide-field surveillance system. Appl. Opt. 2020, 59, 10719–10728.

13. Hickson, P. A fast algorithm for the detection of faint orbital debris tracks in optical images. Adv. Space Res. 2018, 62, 3078–3085.

14. Levesque, M.P.; Buteau, S. Image Processing Technique for Automatic Detection of Satellite Streaks; Defense Research and Development Canada Valcartier: Quebec, QC, Canada, 2007.

15. Levesque, M. Automatic reacquisition of satellite positions by detecting their expected streaks in astronomical images. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference, Wailea, HI, USA, 19–22 September 2009; p. E81.

16. Jia, P.; Liu, Q.; Sun, Y. Detection and classification of astronomical targets with deep neural networks in wide-field small aperture telescopes. Astron. J. 2020, 159, 212.

17. Li, Y.; Niu, Z.; Sun, Q.; Xiao, H.; Li, H. BSC-Net: Background Suppression Algorithm for Stray Lights in Star Images. Remote Sens. 2022, 14, 4852.

18. Liu, L.; Niu, Z.; Li, Y.; Sun, Q. Multi-Level Convolutional Network for Ground-Based Star Image Enhancement. Remote Sens. 2023, 15, 3292.

19. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Adv. Neural Inf. Process. Syst. 2017, 30, 789.

20. Liu, Y.C.; Ma, C.Y.; He, Z.; Kuo, C.W.; Chen, K.; Zhang, P.; Wu, B.; Kira, Z.; Vajda, P. Unbiased teacher for semi-supervised object detection. arXiv 2021, arXiv:2102.09480.

21. Yang, Q.; Wei, X.; Wang, B.; Hua, X.S.; Zhang, L. Interactive self-training with mean teachers for semi-supervised object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5941–5950.

22. Mao, Z.; Tong, X.; Luo, Z. Semi-Supervised Remote Sensing Image Change Detection Using Mean Teacher Model for Constructing Pseudo-Labels. In Proceedings of the ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

23. Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. St++: Make self-training work better for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4268–4277.

24. Zhu, Z.; Zia, A.; Li, X.; Dan, B.; Ma, Y.; Liu, E.; Zhao, R. SSTD: Stripe-Like Space Target Detection using Single-Point Supervision. arXiv 2024, arXiv:2407.18097.

25. Wang, H.; Cao, P.; Wang, J.; Zaiane, O.R. Uctransnet: Rethinking the skip connections in u-net from a channel-wise perspective with transformer. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 2441–2449.

26. Ma, T.; Wang, H.; Liang, J.; Peng, J.; Ma, Q.; Kai, Z. MSMA-Net: An Infrared Small Target Detection Network by Multi-scale Super-resolution Enhancement and Multi-level Attention Fusion. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5602620.

27. Li, B.; Xiao, C.; Wang, L.; Wang, Y.; Lin, Z.; Li, M.; An, W.; Guo, Y. Dense nested attention network for infrared small target detection. IEEE Trans. Image Process. 2022, 32, 1745–1758.

28. Jiang, P.; Liu, C.; Yang, W.; Kang, Z.; Fan, C.; Li, Z. Space Debris Automation Detection and Extraction Based on a Wide-field Surveillance System. Astrophys. J. Suppl. Ser. 2022, 259, 4.

29. Cegarra Polo, M.; Yanagisawa, T.; Kurosaki, H. Real-time processing pipeline for automatic streak detection in astronomical images implemented in a multi-GPU system. Publ. Astron. Soc. Jpn. 2022, 74, 777–790.

30. Nir, G.; Zackay, B.; Ofek, E.O. Optimal and efficient streak detection in astronomical images. Astron. J. 2018, 156, 229.

31. Dawson, W.A.; Schneider, M.D.; Kamath, C. Blind detection of ultra-faint streaks with a maximum likelihood method. arXiv 2016, arXiv:1609.07158.

32. Sara, R.; Cvrcek, V. Faint streak detection with certificate by adaptive multi-level bayesian inference. In Proceedings of the European Conference on Space Debris, Darmstadt, Germany, 18–21 April 2017.

33. Virtanen, J.; Poikonen, J.; Säntti, T.; Komulainen, T.; Torppa, J.; Granvik, M.; Muinonen, K.; Pentikäinen, H.; Martikainen, J.; Näränen, J.; et al. Streak detection and analysis pipeline for space-debris optical images. Adv. Space Res. 2016, 57, 1607–1623.

34. Huang, T.; Yang, G.; Tang, G. A fast two-dimensional median filtering algorithm. IEEE Trans. Acoust. Speech Signal Process. 1979, 27, 13–18.

35. Serra, J.; Vincent, L. An overview of morphological filtering. Circuits Syst. Signal Process. 1992, 11, 47–108.

36. Xi, J.; Wen, D.; Ersoy, O.K.; Yi, H.; Yao, D.; Song, Z.; Xi, S. Space debris detection in optical image sequences. Appl. Opt. 2016, 55, 7929–7940.

37. Duarte, P.; Gordo, P.; Peixinho, N.; Melicio, R.; Valério, D.; Gafeira, R. Space Surveillance payload camera breadboard: Star tracking and debris detection algorithms. Adv. Space Res. 2023, 72, 4215–4228.

38. Wu, X.; Hong, D.; Chanussot, J. UIU-Net: U-Net in U-Net for infrared small object detection. IEEE Trans. Image Process. 2022, 32, 364–376.

39. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric contextual modulation for infrared small target detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 950–959.

40. Sun, H.; Bai, J.; Yang, F.; Bai, X. Receptive-Field and Direction Induced Attention Network for Infrared Dim Small Target Detection with a Large-Scale Dataset IRDST. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5000513.

41. Zhang, M.; Zhang, R.; Yang, Y.; Bai, H.; Zhang, J.; Guo, J. ISNet: Shape matters for infrared small target detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 877–886.

42. Zhang, T.; Cao, S.; Pu, T.; Peng, Z. AGPCNet: Attention-guided pyramid context networks for infrared small target detection. arXiv 2021, arXiv:2111.03580.

43. Cao, K.; Liu, Y.; Zeng, X.; Qin, X.; Wu, R.; Wan, L.; Deng, B.; Zhong, J.; Ni, G.; Liu, Y. Semi-supervised 3D retinal fluid segmentation via correlation mutual learning with global reasoning attention. Biomed. Opt. Express 2024, 15, 6905–6921.

44. Hu, S.; Tang, H.; Luo, Y. Identifying retinopathy in optical coherence tomography images with less labeled data via contrastive graph regularization. Biomed. Opt. Express 2024, 15, 4980–4994.

45. Chen, H.; Li, Z.; Wu, J.; Xiong, W.; Du, C. SemiRoadExNet: A semi-supervised network for road extraction from remote sensing imagery via adversarial learning. ISPRS J. Photogramm. Remote Sens. 2023, 198, 169–183.

46. Sun, B.; Zhang, Y.; Zhou, Q.; Zhang, X. Effectiveness of semi-supervised learning and multi-source data in detailed urban landuse mapping with a few labeled samples. Remote Sens. 2022, 14, 648.

47. Chen, Z.; Wang, R.; Xu, Y. Semi-Supervised Remote Sensing Building Change Detection with Joint Perturbation and Feature Complementation. Remote Sens. 2024, 16, 3424.

48. Yang, Y.; Lang, P.; Yin, J.; He, Y.; Yang, J. Data Matters: Rethinking the Data Distribution in Semi-Supervised Oriented SAR Ship Detection. Remote Sens. 2024, 16, 2551.

49. Sajjadi, M.; Javanmardi, M.; Tasdizen, T. Regularization with stochastic transformations and perturbations for deep semi-supervised learning. Adv. Neural Inf. Process. Syst. 2016, 29.

50. Laine, S.; Aila, T. Temporal ensembling for semi-supervised learning. arXiv 2016, arXiv:1610.02242.

51. Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

52. Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML, Atlanta, GA, USA, 17–19 June 2013; Volume 3, p. 896.

53. Sohn, K.; Zhang, Z.; Li, C.L.; Zhang, H.; Lee, C.Y.; Pfister, T. A simple semi-supervised learning framework for object detection. arXiv 2020, arXiv:2005.04757.

54. Xu, M.; Zhang, Z.; Hu, H.; Wang, J.; Wang, L.; Wei, F.; Bai, X.; Liu, Z. End-to-end semi-supervised object detection with soft teacher. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3060–3069.

55. Zhou, Y.; Jiang, X.; Chen, Z.; Chen, L.; Liu, X. A Semi-Supervised Arbitrary-Oriented SAR Ship Detection Network based on Interference Consistency Learning and Pseudo Label Calibration. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5893–5904.

56. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; proceedings, part III 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

57. Mei, C.; Yang, X.; Zhou, M.; Zhang, S.; Chen, H.; Yang, X.; Wang, L. Semi-supervised image segmentation using a residual-driven mean teacher and an exponential Dice loss. Artif. Intell. Med. 2024, 148, 102757.

58. Yang, X.; Song, Z.; King, I.; Xu, Z. A survey on deep semi-supervised learning. IEEE Trans. Knowl. Data Eng. 2022, 35, 8934–8954.

59. Liu, Q.; Liu, R.; Zheng, B.; Wang, H.; Fu, Y. Infrared small target detection with scale and location sensitivity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 17490–17499.

| Network | Parameters | Inference Time |
|---|---|---|
| UCTransNet [25] | 66.5 M | 32 ms |
| UNet [56] | 15.0 M | 20 ms |
| MRSA-Net (Ours) | 32.0 M | 22 ms |

| Network | Method | Source | 1/4 (250) Dice | 1/4 (250) mIoU | 1/4 (250) Pd | 1/4 (250) Fa | 1/8 (125) Dice | 1/8 (125) mIoU | 1/8 (125) Pd | 1/8 (125) Fa | 1/16 (62) Dice | 1/16 (62) mIoU | 1/16 (62) Pd | 1/16 (62) Fa |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UNet [56] | Sup.only [56] | MICCAI (2015) | 77.07 | 68.82 | 79.75 | 3.44 | 72.20 | 62.72 | 71.50 | 3.22 | 58.0 | 48.45 | 55.25 | 2.50 |
| UNet [56] | MT [19] | NeurIPS (2017) | 81.72 | 73.04 | 84.75 | 3.19 | 77.34 | 68.25 | 78.50 | 2.96 | 72.27 | 62.74 | 73.0 | 3.18 |
| UNet [56] | UT [20] | ICLR (2021) | 81.22 | 73.29 | 88.25 | 6.32 | 79.86 | 70.29 | 84.75 | 7.39 | 74.18 | 63.81 | 75.75 | 11.74 |
| UNet [56] | ISMT [21] | CVPR (2021) | 83.27 | 74.86 | 88.25 | 3.78 | 79.60 | 71.27 | 86.0 | 5.92 | 73.19 | 62.46 | 73.75 | 16.73 |
| UNet [56] | PLMT [22] | ICASSP (2023) | 79.09 | 70.74 | 83.0 | 4.05 | 72.83 | 63.22 | 73.75 | 3.86 | 64.72 | 54.36 | 61.0 | 3.60 |
| UNet [56] | ST [23] | CVPR (2022) | 82.39 | 73.86 | 85.50 | 3.25 | 75.06 | 65.61 | 74.75 | 2.02 | 63.58 | 53.47 | 60.75 | 2.22 |
| UNet [56] | CSDT | Ours | 83.35 | 75.72 | 89.75 | 3.67 | 81.36 | 72.52 | 86.50 | 3.45 | 76.72 | 66.96 | 82.0 | 3.37 |
| UCTransNet [25] | Sup.only [25] | AAAI (2022) | 81.04 | 72.36 | 86.50 | 4.48 | 74.92 | 65.94 | 78.50 | 3.87 | 60.21 | 50.65 | 59.0 | 2.86 |
| UCTransNet [25] | MT [19] | NeurIPS (2017) | 84.99 | 75.98 | 91.0 | 6.73 | 76.41 | 66.85 | 79.25 | 4.01 | 71.86 | 61.71 | 72.0 | 3.69 |
| UCTransNet [25] | UT [20] | ICLR (2021) | 83.99 | 74.68 | 91.75 | 5.88 | 83.02 | 73.41 | 90.50 | 5.49 | 76.43 | 66.14 | 78.25 | 8.21 |
| UCTransNet [25] | ISMT [21] | CVPR (2021) | 83.65 | 74.63 | 90.0 | 4.84 | 80.67 | 70.99 | 87.0 | 4.77 | 77.16 | 66.72 | 81.75 | 4.03 |
| UCTransNet [25] | PLMT [22] | ICASSP (2023) | 82.20 | 72.78 | 89.0 | 4.63 | 75.90 | 66.85 | 81.0 | 4.0 | 61.68 | 52.04 | 60.25 | 3.79 |
| UCTransNet [25] | ST [23] | CVPR (2022) | 82.82 | 74.64 | 89.25 | 3.81 | 76.95 | 67.44 | 79.0 | 2.76 | 56.43 | 46.85 | 53.75 | 1.40 |
| UCTransNet [25] | CSDT | Ours | 85.70 | 77.09 | 92.0 | 4.56 | 83.39 | 73.92 | 90.75 | 4.13 | 78.72 | 68.36 | 83.25 | 4.17 |
| MRSA-Net (Ours) | Sup.only | Ours | 84.98 | 76.76 | 92.25 | 4.61 | 78.31 | 70.01 | 84.25 | 4.50 | 69.69 | 60.09 | 71.25 | 7.93 |
| MRSA-Net (Ours) | MT [19] | NeurIPS (2017) | 85.35 | 77.54 | 91.25 | 4.95 | 80.20 | 71.38 | 85.0 | 4.22 | 73.73 | 64.37 | 75.25 | 3.95 |
| MRSA-Net (Ours) | UT [20] | ICLR (2021) | 85.42 | 77.50 | 92.50 | 6.19 | 83.73 | 75.37 | 92.25 | 6.38 | 77.98 | 68.49 | 83.25 | 13.43 |
| MRSA-Net (Ours) | ISMT [21] | CVPR (2021) | 84.31 | 76.67 | 91.75 | 5.66 | 82.34 | 74.14 | 88.25 | 4.69 | 78.68 | 69.20 | 84.0 | 9.94 |
| MRSA-Net (Ours) | PLMT [22] | ICASSP (2023) | 83.76 | 75.94 | 91.75 | 4.94 | 80.57 | 71.65 | 86.50 | 4.69 | 77.59 | 67.30 | 80.75 | 5.92 |
| MRSA-Net (Ours) | ST [23] | CVPR (2022) | 85.46 | 77.70 | 91.25 | 3.75 | 82.23 | 73.53 | 89.25 | 4.16 | 72.54 | 62.69 | 74.0 | 4.92 |
| MRSA-Net (Ours) | CSDT | Ours | 86.76 | 78.82 | 93.50 | 4.34 | 84.82 | 76.57 | 92.0 | 4.55 | 81.63 | 71.84 | 88.50 | 5.75 |

| Network | Method | Source | Sun Light Dice | Sun Light mIoU | Sun Light Pd | Sun Light Fa | Earth Light Dice | Earth Light mIoU | Earth Light Pd | Earth Light Fa | Moon Light Dice | Moon Light mIoU | Moon Light Pd | Moon Light Fa | Mixed Light Dice | Mixed Light mIoU | Mixed Light Pd | Mixed Light Fa |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UNet [56] | Sup.only [56] | MICCAI (2015) | 66.81 | 57.71 | 64.67 | 2.25 | 71.26 | 62.11 | 73.0 | 2.88 | 71.47 | 62.83 | 72.67 | 3.52 | 66.83 | 57.34 | 65.0 | 3.53 |
| UNet [56] | MT [19] | NeurIPS (2017) | 75.88 | 66.53 | 76.67 | 2.77 | 79.51 | 70.31 | 82.67 | 3.25 | 78.43 | 69.76 | 81.0 | 3.74 | 74.62 | 65.43 | 73.67 | 2.68 |
| UNet [56] | UT [20] | ICLR (2021) | 78.53 | 69.16 | 82.67 | 5.79 | 80.63 | 71.39 | 86.67 | 6.25 | 80.33 | 71.23 | 87.0 | 6.54 | 74.18 | 64.74 | 75.33 | 15.35 |
| UNet [56] | ISMT [21] | CVPR (2021) | 79.14 | 69.67 | 83.0 | 6.16 | 81.56 | 72.64 | 87.33 | 6.15 | 78.85 | 69.85 | 83.33 | 6.84 | 75.19 | 65.97 | 77.0 | 16.09 |
| UNet [56] | PLMT [22] | ICASSP (2023) | 70.29 | 60.87 | 69.67 | 3.10 | 75.25 | 65.51 | 77.33 | 3.94 | 73.33 | 64.48 | 75.0 | 4.08 | 69.98 | 60.21 | 68.33 | 4.23 |
| UNet [56] | ST [23] | CVPR (2022) | 70.80 | 61.51 | 69.67 | 1.93 | 76.50 | 67.10 | 78.0 | 2.64 | 75.13 | 66.12 | 75.67 | 2.99 | 72.27 | 62.53 | 71.33 | 2.41 |
| UNet [56] | CSDT | Ours | 80.39 | 71.60 | 86.33 | 3.09 | 82.41 | 73.63 | 88.67 | 3.87 | 80.66 | 72.32 | 87.33 | 4.14 | 78.44 | 69.39 | 82.00 | 2.88 |
| UCTransNet [25] | Sup.only [25] | AAAI (2022) | 69.68 | 60.87 | 72.67 | 2.83 | 74.05 | 64.85 | 77.0 | 3.52 | 74.04 | 65.26 | 79.0 | 4.45 | 70.45 | 60.94 | 70.0 | 4.15 |
| UCTransNet [25] | MT [19] | NeurIPS (2017) | 75.92 | 66.16 | 78.67 | 3.75 | 80.11 | 70.59 | 83.33 | 4.39 | 78.38 | 69.01 | 82.67 | 5.09 | 76.60 | 66.97 | 78.33 | 5.99 |
| UCTransNet [25] | UT [20] | ICLR (2021) | 81.44 | 71.58 | 88.0 | 5.70 | 82.40 | 72.72 | 88.67 | 6.55 | 81.24 | 71.80 | 88.0 | 6.44 | 79.51 | 69.54 | 82.67 | 7.42 |
| UCTransNet [25] | ISMT [21] | CVPR (2021) | 80.43 | 70.52 | 86.67 | 4.05 | 81.83 | 72.42 | 89.0 | 4.74 | 81.19 | 71.40 | 86.33 | 5.38 | 78.50 | 68.70 | 83.0 | 4.01 |
| UCTransNet [25] | PLMT [22] | ICASSP (2023) | 71.78 | 62.54 | 76.33 | 3.29 | 74.56 | 64.97 | 78.0 | 4.18 | 74.94 | 65.85 | 79.33 | 4.49 | 71.77 | 62.20 | 73.33 | 4.59 |
| UCTransNet [25] | ST [23] | CVPR (2022) | 69.92 | 61.08 | 72.33 | 2.03 | 75.15 | 65.70 | 78.67 | 2.95 | 73.25 | 64.55 | 76.67 | 3.35 | 69.94 | 60.58 | 68.33 | 2.30 |
| UCTransNet [25] | CSDT | Ours | 82.61 | 73.32 | 90.0 | 3.87 | 84.21 | 74.89 | 90.67 | 4.46 | 82.86 | 73.59 | 90.0 | 5.11 | 80.73 | 70.70 | 84.0 | 3.71 |
| MRSA-Net (Ours) | Sup.only | Ours | 77.0 | 68.28 | 82.33 | 4.12 | 79.38 | 70.59 | 85.0 | 4.74 | 78.94 | 70.54 | 85.33 | 5.12 | 75.31 | 66.40 | 77.67 | 8.72 |
| MRSA-Net (Ours) | MT [19] | NeurIPS (2017) | 78.94 | 70.01 | 82.67 | 3.99 | 82.14 | 73.58 | 87.33 | 4.84 | 81.63 | 73.14 | 86.67 | 4.86 | 76.34 | 67.65 | 78.67 | 3.79 |
| MRSA-Net (Ours) | UT [20] | ICLR (2021) | 84.05 | 75.30 | 91.67 | 5.77 | 84.19 | 75.71 | 91.67 | 7.26 | 82.48 | 74.07 | 90.67 | 6.65 | 78.78 | 70.06 | 83.33 | 14.99 |
| MRSA-Net (Ours) | ISMT [21] | CVPR (2021) | 82.47 | 73.48 | 89.0 | 5.42 | 83.86 | 75.60 | 91.33 | 6.26 | 81.61 | 73.74 | 88.67 | 5.82 | 79.17 | 70.36 | 83.33 | 9.54 |
| MRSA-Net (Ours) | PLMT [22] | ICASSP (2023) | 80.11 | 70.65 | 84.67 | 4.69 | 83.28 | 74.28 | 89.33 | 5.88 | 80.0 | 71.29 | 86.67 | 5.40 | 79.16 | 70.30 | 84.67 | 4.75 |
| MRSA-Net (Ours) | ST [23] | CVPR (2022) | 78.61 | 69.73 | 82.67 | 3.14 | 82.26 | 73.46 | 88.0 | 4.01 | 81.80 | 73.40 | 87.67 | 4.21 | 77.63 | 68.65 | 81.0 | 5.74 |
| MRSA-Net (Ours) | CSDT | Ours | 84.99 | 76.04 | 92.33 | 4.12 | 86.12 | 77.67 | 93.33 | 5.06 | 84.62 | 76.07 | 91.33 | 5.14 | 81.89 | 73.19 | 88.33 | 5.20 |

The image columns are grouped under two headings, Others and Ours.

| Network | Method | Source | Img (a) | Img (b) | Img (c) | Img (d) | Img (e) | Img (f) | Img (g) | Img (h) |
|---|---|---|---|---|---|---|---|---|---|---|
| MRSA-Net (Ours) | MT [19] | NeurIPS (2017) | 84.23 | 76.36 | 61.85 | 0.0 | 71.93 | 51.83 | 78.26 | 82.48 |
| MRSA-Net (Ours) | UT [20] | ICLR (2021) | 89.0 | 78.87 | 91.93 | 0.0 | 74.41 | 69.68 | 64.31 | 77.02 |
| MRSA-Net (Ours) | ISMT [21] | CVPR (2021) | 85.16 | 78.25 | 54.19 | 42.22 | 54.31 | 54.46 | 79.86 | 87.16 |
| MRSA-Net (Ours) | PLMT [22] | ICASSP (2023) | 87.14 | 75.46 | 85.17 | 10.09 | 71.60 | 63.85 | 73.02 | 74.77 |
| MRSA-Net (Ours) | ST [23] | CVPR (2022) | 87.65 | 78.95 | 53.63 | 68.40 | 70.29 | 60.37 | 52.25 | 79.62 |
| MRSA-Net (Ours) | CSDT | Ours | 90.25 | 91.77 | 84.01 | 73.09 | 75.05 | 77.97 | 90.02 | 87.57 |

| Network | MDPC | FMWA | Avg. Dice | Avg. mIoU | Avg. Pd | Avg. Fa |
|---|---|---|---|---|---|---|
| Baseline | ✓ | ✓ | 84.98 | 76.76 | 92.25 | 4.61 |
| Baseline | ✓ | ✕ | 83.50 | 75.59 | 90.50 | 5.13 |
| Baseline | ✕ | ✓ | 80.17 | 72.09 | 84.25 | 5.34 |
| Baseline | ✕ | ✕ | 77.07 | 68.82 | 79.75 | 3.44 |

| Module | Numbers | Avg. Dice | Avg. mIoU | Avg. Pd | Avg. Fa |
|---|---|---|---|---|---|
| MDPC | 1 | 81.96 | 74.30 | 88.25 | 4.85 |
| MDPC | 2 | 83.82 | 76.10 | 91.50 | 4.83 |
| MDPC | 3 | 84.98 | 76.76 | 92.25 | 4.61 |
| MDPC | 4 | 82.50 | 74.65 | 89.50 | 4.77 |

| Network | Teacher: DT | Teacher: ST | Avg. Dice | Avg. mIoU | Avg. Pd | Avg. Fa |
|---|---|---|---|---|---|---|
| MRSA-Net (Ours) | ✓ | ✓ | 81.63 | 71.84 | 88.50 | 5.75 |
| MRSA-Net (Ours) | ✕ | ✓ | 75.49 | 65.55 | 75.50 | 6.27 |
| MRSA-Net (Ours) | ✓ | ✕ | 76.59 | 67.16 | 81.25 | 6.07 |
| MRSA-Net (Ours) | ✕ | ✕ | 69.69 | 60.09 | 71.25 | 7.93 |

| Network | PL Strategy | Epochs | Avg. Dice | Avg. mIoU | Avg. Pd | Avg. Fa |
|---|---|---|---|---|---|---|
| MRSA-Net (Ours) | APL (Ours) | Overall | 81.63 | 71.84 | 88.50 | 5.75 |
| MRSA-Net (Ours) | ST ∩ DT | Overall | 75.74 | 66.24 | 77.50 | 6.42 |
| MRSA-Net (Ours) | ST ∪ DT | Overall | 76.86 | 67.29 | 80.0 | 6.95 |
| MRSA-Net (Ours) | ST → DT | 30 | 80.67 | 71.02 | 86.5 | 7.94 |
| MRSA-Net (Ours) | ST → DT | 40 | 80.74 | 71.16 | 86.0 | 5.99 |
| MRSA-Net (Ours) | ST → DT | 50 | 80.32 | 70.61 | 85.50 | 7.32 |
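Two of the pseudo-labeling baselines in this table, ST ∩ DT and ST ∪ DT, can be read as pixel-wise intersection and union of the two teachers' binary predictions. The sketch below illustrates that reading only. It is not the APL strategy, whose selection rule is not described here, and the function names and toy masks are hypothetical.

```python
# Sketch: pixel-wise intersection and union of two binary pseudo-label masks.
# Masks are nested lists of 0/1 with identical shape (an assumed representation).

def intersect_masks(st_mask, dt_mask):
    """Keep a pixel only if both teachers mark it as target (ST ∩ DT)."""
    return [[a & b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(st_mask, dt_mask)]

def union_masks(st_mask, dt_mask):
    """Keep a pixel if either teacher marks it as target (ST ∪ DT)."""
    return [[a | b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(st_mask, dt_mask)]

# Toy predictions from the static teacher (ST) and dynamic teacher (DT).
st_pred = [[1, 1, 0],
           [0, 0, 0]]
dt_pred = [[0, 1, 1],
           [0, 0, 0]]

both = intersect_masks(st_pred, dt_pred)    # [[0, 1, 0], [0, 0, 0]]
either = union_masks(st_pred, dt_pred)      # [[1, 1, 1], [0, 0, 0]]
```

Intersection yields the more conservative pseudo-label and union the more permissive one, which matches the general trade-off between missed targets and false alarms that such fixed rules imply.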

| Network | Loss Function | | | Avg. Dice | Avg. mIoU | Avg. Pd | Avg. Fa |
|---|---|---|---|---|---|---|---|
| MRSA-Net (Ours) | ✓ | ✓ | ✓ | 81.63 | 71.84 | 88.50 | 5.75 |
| MRSA-Net (Ours) | ✓ | ✓ | ✕ | 80.55 | 70.84 | 85.75 | 5.80 |
| MRSA-Net (Ours) | ✓ | ✕ | ✓ | 76.59 | 67.16 | 81.25 | 6.07 |
| MRSA-Net (Ours) | ✓ | ✕ | ✕ | 69.69 | 60.09 | 71.25 | 7.93 |

| Network | Avg. Dice | Avg. mIoU | Avg. Pd | Avg. Fa |
|---|---|---|---|---|
| UNet | 77.07 | 68.82 | 79.75 | 3.44 |
| UCTransNet | 81.04 | 72.36 | 86.50 | 4.48 |
| MSHNet | 76.58 | 67.31 | 79.25 | 5.15 |
| RDIAN | 76.49 | 67.15 | 78.50 | 5.26 |
| MRSA-Net (Ours) | 84.98 | 76.76 | 92.25 | 4.61 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Z.; Zia, A.; Li, X.; Dan, B.; Ma, Y.; Long, H.; Lu, K.; Liu, E.; Zhao, R. Collaborative Static-Dynamic Teaching: A Semi-Supervised Framework for Stripe-like Space Target Detection. Remote Sens. 2025, 17, 1341. https://doi.org/10.3390/rs17081341
