1. Introduction

Earth observation has entered a new era with the advent of CubeSat constellations, which provide imagery with global coverage at meter-scale resolution and aim for daily or even sub-daily revisit times [1]. Such high spatial and temporal resolution is made possible by fleets consisting of a large number (hundreds) of small satellites. PlanetScope Doves formed the first CubeSat constellation of its kind, imaging the Earth's landmass on a daily basis with ~3 m spatial resolution and four spectral channels [2]. The follow-up mission has gone a step further by launching a new generation of CubeSats, called SuperDoves, that enhance the spectral resolution by capturing eight spectral bands within the visible and near-infrared portion of the spectrum. The spectral bands of SuperDoves are narrower than those of earlier-generation Doves, and the additional bands include coastal blue (443 nm), extra green (531 nm), yellow (610 nm), and red edge (705 nm) channels [3]. A key characteristic of both the Dove and SuperDove constellations is their ability to provide abundant cloud-free images. This capability has been made possible by a large number of CubeSats (>150 SuperDoves currently in orbit) deployed in two specially designed near-polar orbits. The two orbits have opposite inclinations, so that the Earth is imaged on both ascending and descending passes. Swaths overlap between subsequent CubeSats in each orbit, allowing images to be captured only 90 s apart. Moreover, because images are acquired on both ascending and descending passes, the Planet constellation provides additional sub-daily imagery with time lags ranging from a few minutes to a few hours [1,4]. Thus, the Planet CubeSat constellation enables the unprecedented capture of daily and sub-daily images with global coverage at high spatial resolution. The frequent revisits increase the likelihood of capturing cloud-free conditions. The wealth of temporal imagery from CubeSats can potentially open new opportunities for remote sensing of aquatic systems from both methodological and application-oriented perspectives.

The radiometric quality of optical imagery is of particular importance in aquatic applications [5]. Water bodies typically have very low reflectance, so the water-leaving radiance is a small portion (<15%) of the at-sensor radiance and can be severely contaminated by confounding factors such as atmospheric effects and sun glint from the water surface [6,7]. Sensors with high radiometric sensitivity are therefore needed to resolve subtle variations in water-leaving radiance, which are linked to variations in the biophysical parameters of interest (e.g., bathymetry and concentrations of constituents) [8]. Doves and SuperDoves record spectral information with a relatively high radiometric resolution (12-bit). However, CubeSats carry small and inexpensive sensors, so their radiometric quality might not be as consistent as that of standard, larger sensors such as those onboard Landsat-8/9 and Sentinel-2A/B [9,10]. Despite these concerns about radiometric quality, CubeSat data have already shown promise for retrieving water depth and turbidity [11,12].

Bathymetry retrieval in inland waters, including fluvial systems, is essential for flood risk assessment, environmental conservation, and infrastructure planning. Information on water depth also supports habitat modeling and the preservation of aquatic ecosystems [13,14]. CubeSat imagery has become increasingly appealing to aquatic scientists and managers, particularly those working in inland waters, due to the high spatial and temporal resolution of these data [15]. The meter-scale resolution makes it possible to capture even small water bodies, such as river channels, that cannot be resolved at the spatial resolution of publicly available satellite images (e.g., 10–30 m for Sentinel-2A/B and Landsat-8/9). Although commercial spaceborne missions like WorldView provide meter-scale spatial resolution, these data are acquired only on a tasked, on-demand basis, as opposed to the routine daily imaging of the globe by CubeSats. The daily (or sub-daily) imagery offered by CubeSats increases the chance of capturing cloud-free imagery and of monitoring changes in a timely manner. Because SuperDove imagery has been available only since early 2022, previous studies in aquatic systems have mostly been based on four-band Dove data (e.g., [9,11,15]). For instance, Dove data provided promising results in mapping water turbidity in San Francisco Bay, USA, and in coastal waters around the UK [11]. Bathymetry retrieval using Dove imagery has also yielded promising results in coastal waters [16] and rivers [12].

The spectrally based methods for standard bathymetry retrieval from single images fall into two main approaches: physics-based and empirical [17,18,19,20]. Physics-based models require an atmospheric correction designed for aquatic applications to derive accurate remote sensing reflectance (Rrs) data [21]. Moreover, information on the optical properties of the water column and on bottom reflectance is needed to invert a radiative transfer model [21,22,23,24,25]. Empirical methods, on the other hand, estimate bathymetry by developing a regression model that relates spectral characteristics to depths measured in the field. Empirical models are more straightforward to apply because there is no need to model the underlying physics. Thus, they can be applied to top-of-atmosphere (TOA) data without characterizing the bio-optical conditions of the water column or the substrate properties. This study builds upon the regression-based approach to bathymetry retrieval. The literature covers a range of regression models employed for water depth retrieval, from different forms of band ratio models [26] to more recent machine learning techniques such as the neural network-based depth retrieval (NNDR) technique. Here, we leverage NNDR as a state-of-the-art technique that provided promising results in previous studies [12,27], and we incorporate NNDR into a new methodology for temporal ensembling of SuperDove imagery.

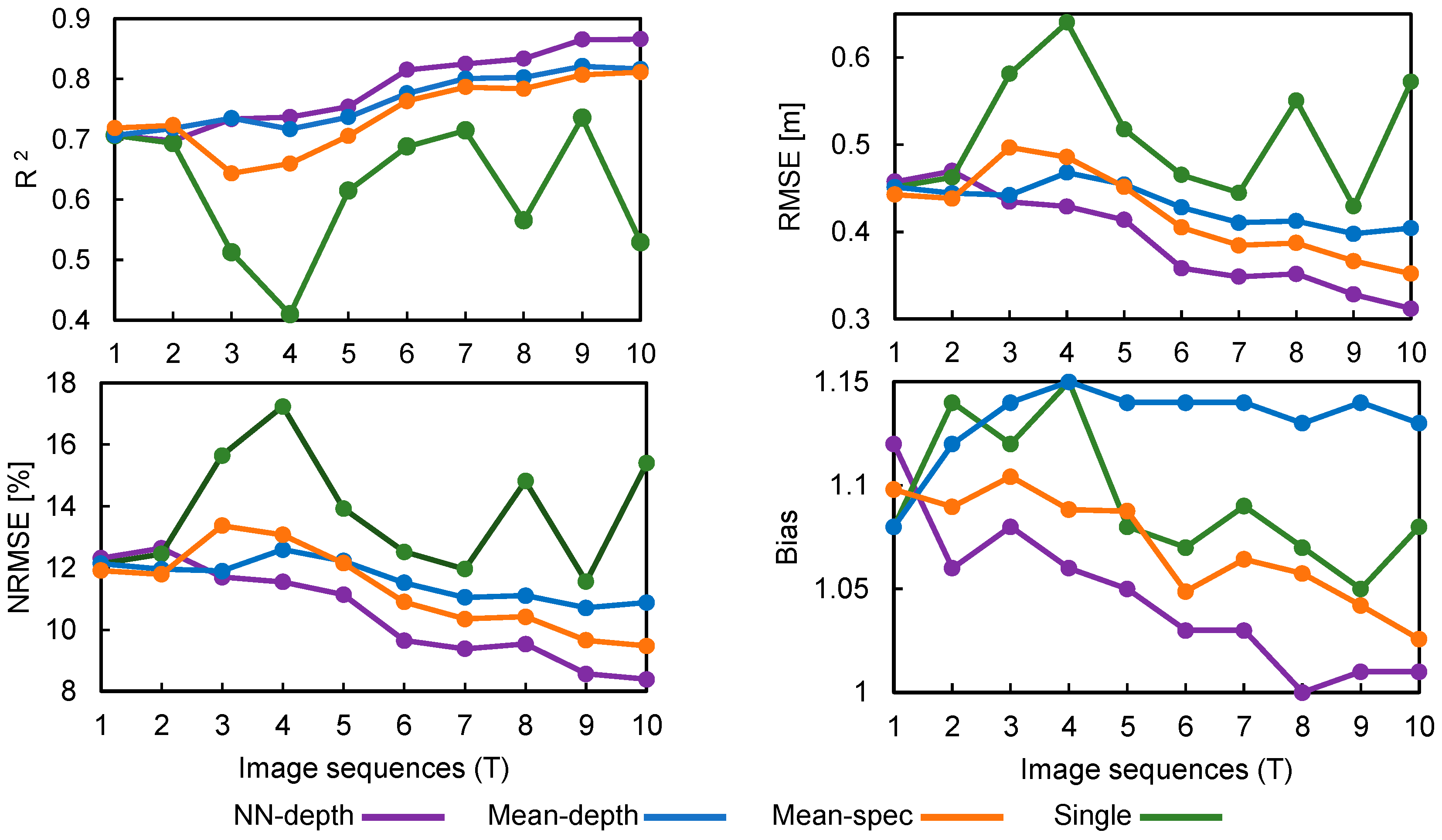

This study focuses on empirical bathymetry retrieval from the newly available SuperDoves and introduces a machine learning-based model for ensembling depth estimates from dense time series of images. In a recent study, we exploited four-band Dove image sequences with short time lags (from seconds to hours) by averaging spectral data (mean–spec ensembling) to improve bathymetry retrieval relative to standard single-image analysis [12]. Time-averaging the original spectral data has been the predominant ensembling technique in prior studies that leveraged temporal imagery to enhance bathymetry retrieval [12,28]. Here, we introduce an alternative approach that performs the ensembling on the bathymetry products derived from individual images, rather than ensembling the spectral data first and then proceeding to depth retrieval. Although empirical methods do not require absolute radiance values, the spectral data must at least be internally consistent in order to obtain an accurate and robust regression model. However, confounding factors such as surface glint and the optical properties of the water column vary not only in space but also in time. Consequently, the adverse effects of these factors are amplified when the spectral data are directly ensembled over time: any variation in these confounding factors across the multitemporal data degrades the pixel-by-pixel consistency of a time-averaged image created by mean–spec ensembling. For example, variability in atmospheric effects can lead to pronounced artifacts in the time-averaged image, particularly when the time series spans a longer period of several days. This issue can be further exacerbated by radiometric inconsistencies among different SuperDove sensors. The rationale behind the proposed ensembling is to mitigate the propagation of any or all of these confounding factors by treating the images individually in the first step and then ensembling the resulting depth estimates over time. The foundation of this approach is a neural network (NN) regressor (NN–depth ensembling) that, in effect, weights the contribution of the depth estimates from each time instance to derive the final depth estimate at each location.

This study addresses the following objectives: (i) develop an NN-based method for ensembling temporal retrievals of water depth (NN–depth ensembling) from dense time series of SuperDove imagery; (ii) compare the performance of the proposed NN–depth ensembling with that of the mean–spec and average depth (mean–depth) ensembling approaches, as well as standard single-image analysis; and (iii) perform bathymetry retrieval over a wide range of water depths in multiple case studies to evaluate the performance of the proposed NN–depth ensembling. This study also provides further insight into the utility of the newly available SuperDove imagery for bathymetric applications.

Section 2 describes the proposed NN–depth ensembling method along with other ensembling techniques. The case studies and datasets are introduced in Section 3. The results and discussion are provided in Section 4. The manuscript concludes in Section 5 by providing a summary and suggesting directions for additional studies.

2. Methods

Let $\{I_1(x), \ldots, I_T(x)\}$ represent a set of temporally dense images of the same area captured by CubeSats at time instances $t_1$ to $t_T$. We assume that flow conditions remain relatively steady throughout the period over which these images are acquired and that in situ bathymetry data ($d$) from one of these time instances are available for developing the NN model. Note that in coastal waters, applying a tide correction to a datum such as mean sea level helps maintain consistent bathymetry across the period when images are acquired. We split the field measurements into training ($d_{Train}$) and validation ($d_{Val}$) sets. In a recent study, the authors proposed spectral averaging of short-time-lag (seconds to hours) image sequences from Dove CubeSats as a means of ensembling and showed that this approach improves bathymetry retrieval relative to standard single-image analysis [12]. We refer to this method as mean–spec ensembling hereafter. The spectrally averaged image at a given wavelength ($\lambda$) can thus be derived as:

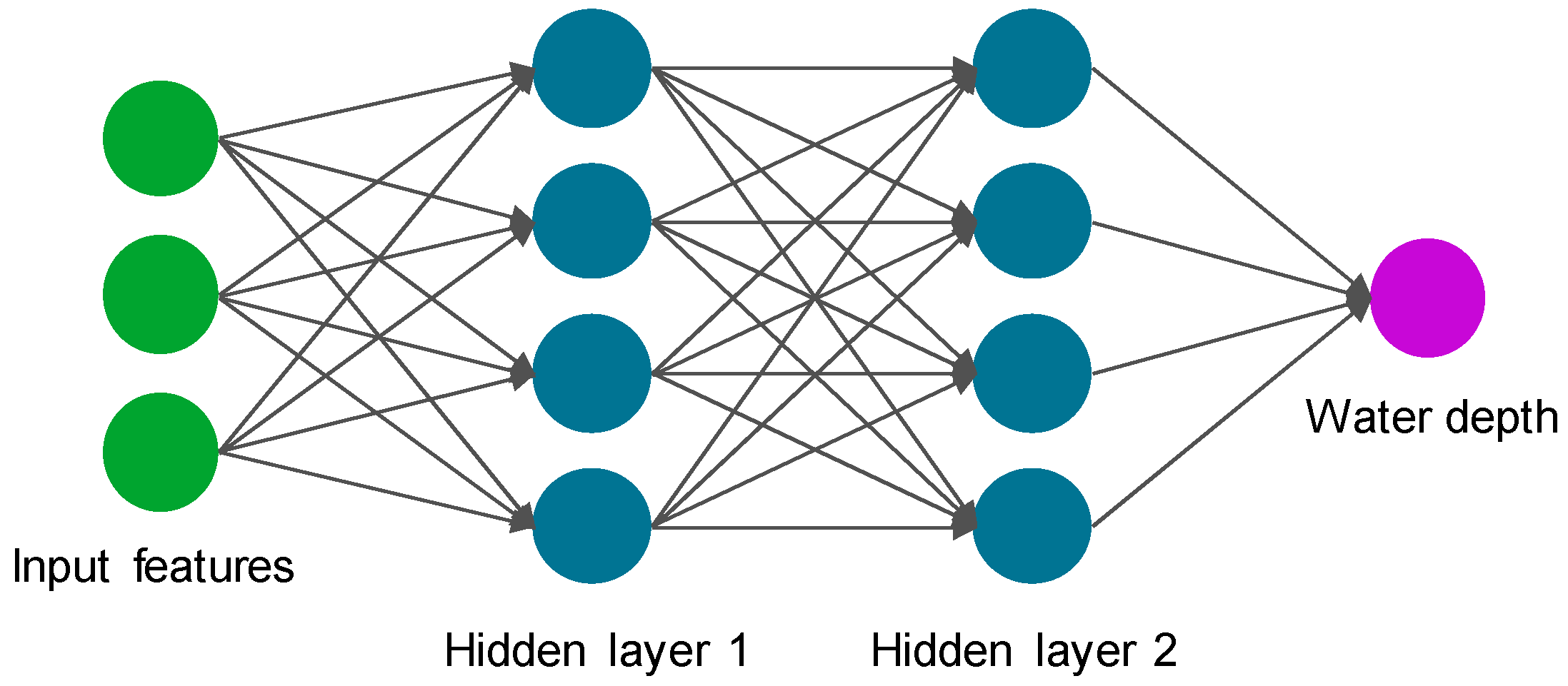
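The spectral averaging above amounts to a per-band, per-pixel temporal mean over a co-registered image stack. The following NumPy sketch assumes the T scenes have already been converted to TOA reflectance and resampled to a common grid, stored as an array of shape (T, n_bands, n_rows, n_cols); the function names `mean_spec` and `sample_pixels` are illustrative and are not part of the original (MATLAB) workflow.

```python
import numpy as np


def mean_spec(stack):
    """Per-band, per-pixel temporal mean of an image stack.

    stack: array of shape (T, n_bands, n_rows, n_cols), one co-registered
    scene (e.g., TOA reflectance) per time instance.
    Returns the time-averaged image with shape (n_bands, n_rows, n_cols).
    """
    stack = np.asarray(stack, dtype=float)
    if stack.ndim != 4:
        raise ValueError("expected shape (T, n_bands, n_rows, n_cols)")
    return stack.mean(axis=0)


def sample_pixels(image, rows, cols):
    """Spectra at the training locations as an (n_samples, n_bands) matrix."""
    return image[:, rows, cols].T


# Synthetic stack: T = 10 scenes, 8 bands, 50 x 60 pixels
rng = np.random.default_rng(0)
stack = rng.random((10, 8, 50, 60))
i_bar = mean_spec(stack)
rows, cols = np.array([3, 10, 42]), np.array([5, 7, 59])
features = sample_pixels(i_bar, rows, cols)
print(features.shape)  # (3, 8)
```

The resulting feature matrix is what a single depth-retrieval model would be trained on under mean–spec ensembling.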

The mean–spec ensembling approach retrieves the bathymetry by training an NN depth retrieval (NNDR) model on the time-averaged image [12,27]. NN-based regressors automate the process of feature extraction and account for complex nonlinear relations between the features and the response variable [29]. The NNDR is trained using the spectral bands of the time-averaged image at the training samples, $\bar{I}(x)$, as input features and the associated in situ depths $d_{Train}(x)$ as the network's response variable, where $x$ denotes the spatial location of a training sample. NNDR is a fully connected network with two hidden layers. Bayesian optimization is employed to tune the hyperparameters of NNDR, including the number of neurons in each layer and the type of activation function [30,31]. The hyperparameter search covers layer sizes of up to 100 neurons and selects the configuration that minimizes the cross-validation error. The rectified linear unit (ReLU) was identified as the optimal activation function; ReLU effectively handles the exploding and vanishing gradient problems [32].
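For readers who want to experiment outside MATLAB, the following NumPy sketch shows the kind of regressor NNDR describes: a fully connected network with two ReLU hidden layers, trained on spectra (inputs) and in situ depths (response), with predictions averaged over independently initialized replicates. It is a hypothetical stand-in, not the authors' implementation: the layer sizes (20 and 20), learning rate, and epoch count are fixed assumptions in place of Bayesian hyperparameter optimization, and training uses plain full-batch gradient descent on the mean squared error.

```python
import numpy as np


class TwoHiddenLayerReLU:
    """Fully connected regressor with two ReLU hidden layers (NumPy sketch)."""

    def __init__(self, n1=20, n2=20, lr=0.03, epochs=2000, seed=0):
        self.n1, self.n2, self.lr, self.epochs = n1, n2, lr, epochs
        self.rng = np.random.default_rng(seed)

    def _he(self, fan_in, fan_out):
        # He initialization, a common choice for ReLU layers
        return self.rng.normal(0.0, np.sqrt(2.0 / fan_in), (fan_in, fan_out))

    def _forward(self, X):
        z1 = X @ self.W1 + self.b1
        a1 = np.maximum(z1, 0.0)
        z2 = a1 @ self.W2 + self.b2
        a2 = np.maximum(z2, 0.0)
        return z1, a1, z2, a2, (a2 @ self.W3 + self.b3).ravel()

    def fit(self, X, y):
        X, y = np.asarray(X, float), np.asarray(y, float)
        # Standardize features and target so one learning rate suits all bands
        self.mu, self.sd = X.mean(axis=0), X.std(axis=0) + 1e-12
        self.y_mu, self.y_sd = y.mean(), y.std() + 1e-12
        Xs, ys = (X - self.mu) / self.sd, (y - self.y_mu) / self.y_sd
        self.W1, self.b1 = self._he(X.shape[1], self.n1), np.zeros(self.n1)
        self.W2, self.b2 = self._he(self.n1, self.n2), np.zeros(self.n2)
        self.W3, self.b3 = self._he(self.n2, 1), np.zeros(1)
        n = len(ys)
        for _ in range(self.epochs):
            z1, a1, z2, a2, out = self._forward(Xs)
            g3 = (2.0 / n) * (out - ys)[:, None]  # d(MSE)/d(output)
            g2 = (g3 @ self.W3.T) * (z2 > 0)      # backprop through ReLU 2
            g1 = (g2 @ self.W2.T) * (z1 > 0)      # backprop through ReLU 1
            grads = (Xs.T @ g1, g1.sum(0), a1.T @ g2, g2.sum(0), a2.T @ g3, g3.sum(0))
            params = (self.W1, self.b1, self.W2, self.b2, self.W3, self.b3)
            for p, g in zip(params, grads):
                p -= self.lr * g                  # in-place gradient step
        return self

    def predict(self, X):
        Xs = (np.asarray(X, float) - self.mu) / self.sd
        return self._forward(Xs)[-1] * self.y_sd + self.y_mu


def nndr_predict(X_train, y_train, X_new, n_replicates=10):
    """Average the predictions of independently initialized replicates."""
    preds = [TwoHiddenLayerReLU(seed=s).fit(X_train, y_train).predict(X_new)
             for s in range(n_replicates)]
    return np.mean(preds, axis=0)


# Synthetic example: 300 "pixels" with 8 bands and a smooth, invented depth law
rng = np.random.default_rng(42)
X = rng.uniform(0.02, 0.2, size=(300, 8))
depth = 1.0 + 5.0 * np.sqrt(X[:, 1]) - 3.0 * X[:, 3]
pred = nndr_predict(X, depth, X)
print(np.sqrt(np.mean((pred - depth) ** 2)), depth.std())
```

Replacing the fixed layer sizes with a search over up to 100 neurons per layer, scored by cross-validation error, would bring the sketch closer to the tuning described above.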

Figure 1 schematically illustrates the NNDR architecture.

This approach performs the time averaging on the spectral data (mean–spec) before any depth retrieval. The originally developed mean–spec ensembling leveraged CubeSat imagery only with short time lags to ensure relatively steady conditions in terms of water depth, water surface glint, and atmospheric effects [12]. The temporal consistency of spectral data can be degraded by variations in sun glint from the water surface, atmospheric effects, and the optical properties of the water column, and possibly by inter-sensor radiometric inconsistencies between individual SuperDoves. As a result, approaches such as mean–spec that ensemble the spectral data are subject to temporally aggregated confounding factors. To tackle this issue, we propose an alternative approach that performs the ensembling at the product level, on the time series of bathymetry estimates. The proposed NN–depth ensembling first retrieves bathymetry independently for each image in the time series, employing a separate NNDR for each time instance (NNDR$_1$ to NNDR$_T$) that uses $\{I_t(x), d_{Train}(x)\}$ for training. Then, the temporal depth estimates for the training samples, $D_t(x)$, are fed as features into another network, NNDR$_{Ens}$, with the field measurements $d_{Train}(x)$ as the response. The structure of NNDR$_{Ens}$ is similar to that of NNDR, but the input features differ: NNDR$_{Ens}$ performs the ensembling by regressing the initial round of temporal depth estimates $\{D_1(x), D_2(x), \ldots, D_T(x)\}$ against the corresponding in situ values $d_{Train}(x)$. Thus, the number of input features for NNDR$_{Ens}$ equals the number of time instances $T$. Because the ensembling is based on an NN regressor rather than simple averaging, the proposed ensembling automatically weights the contribution of the depths estimated at different time instances to the final bathymetry product. Moreover, the depth-to-depth (input-to-output) ensembling provides a means of compensating for errors in $D_t$, particularly systematic biases. For comparison, we also implemented simple averaging of $D_t$ as an alternative ensembling approach (mean–depth). In this case, the time-averaged depths $\bar{D}(x)$ can be derived as follows:

$$\bar{D}(x) = \frac{1}{T}\sum_{t=1}^{T} D_t(x)$$
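The two-stage structure of NN–depth ensembling, together with the mean–depth baseline, can be sketched as below. To keep the example short and deterministic, an ordinary least-squares regressor (`fit_ols`, a hypothetical helper) stands in for every NNDR, so this is not the authors' network; the point is the structure: T first-stage models, each trained on one image with the same $d_{Train}$, produce depth features $D_t$ that a second-stage model regresses against $d_{Train}$.

```python
import numpy as np


def fit_ols(X, y):
    """Least-squares regression with intercept, returned as a predict function.

    Hypothetical stand-in for an NNDR model; any regressor with the same
    (X, y) -> predict-function shape could be swapped in.
    """
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda X_new: np.column_stack([np.ones(len(X_new)), X_new]) @ coef


def depth_ensembles(spectra_train, spectra_map, d_train, fit=fit_ols):
    """Stage 1: one model per image; stage 2: regress depths on depths.

    spectra_train: list of T arrays (n_train, n_bands), image t at training pixels
    spectra_map:   list of T arrays (n_map, n_bands), image t at pixels to map
    d_train:       (n_train,) in situ depths, identical for every model
    Returns (stacked ensemble estimate, mean-depth estimate) at the mapped pixels.
    """
    stage1 = [fit(X_t, d_train) for X_t in spectra_train]        # NNDR_1 ... NNDR_T
    D_train = np.column_stack([m(X) for m, X in zip(stage1, spectra_train)])
    D_map = np.column_stack([m(X) for m, X in zip(stage1, spectra_map)])
    ensemble = fit(D_train, d_train)                             # NNDR_Ens, T features
    return ensemble(D_map), D_map.mean(axis=1)                   # stacked, mean-depth


rng = np.random.default_rng(1)


def synthetic_scene(d, gain, offset, noise):
    # Invented 2-band "image": band 1 tracks depth, band 2 is pure noise
    return np.column_stack([gain * d + offset + rng.normal(0, noise, d.size),
                            rng.normal(0, 1, d.size)])


d_train, d_val = rng.uniform(0, 5, 300), rng.uniform(0, 5, 300)
settings = [(1.0, 0.0, 0.05), (0.8, 0.3, 0.5), (1.2, -0.2, 0.5)]  # one clean, two noisy
X_train = [synthetic_scene(d_train, *s) for s in settings]
X_val = [synthetic_scene(d_val, *s) for s in settings]
d_stacked, d_mean = depth_ensembles(X_train, X_val, d_train)
rmse = lambda est: np.sqrt(np.mean((est - d_val) ** 2))
print(f"stacked RMSE {rmse(d_stacked):.3f} m, mean-depth RMSE {rmse(d_mean):.3f} m")
```

In this synthetic setup, the second stage learns to rely mostly on the cleaner scene, whereas mean–depth weights all scenes equally; this mirrors the automatic weighting attributed to NNDR$_{Ens}$ above.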

Figure 2 provides a schematic representation of the three ensembling approaches for leveraging temporally dense CubeSat imagery in bathymetry retrieval. For consistency among the different analyses, whether based on single images or on one of the ensembling approaches, the training samples $d_{Train}(x)$ remain the same for all the NNs involved at each stage of the various ensemble-based methods. We also use the same validation set ($d_{Val}$) to evaluate all cases. The training is repeated ten times for all NNs, and the estimates from these ten replicates are averaged to produce the final retrievals. The NNs are implemented using the Deep Learning Toolbox of MATLAB (R2024a) [33]. Note also that we evaluated using the median rather than the mean in both the mean–spec and mean–depth approaches, but found that the mean outperformed the median-based alternative. For brevity, results derived using the median are not included.

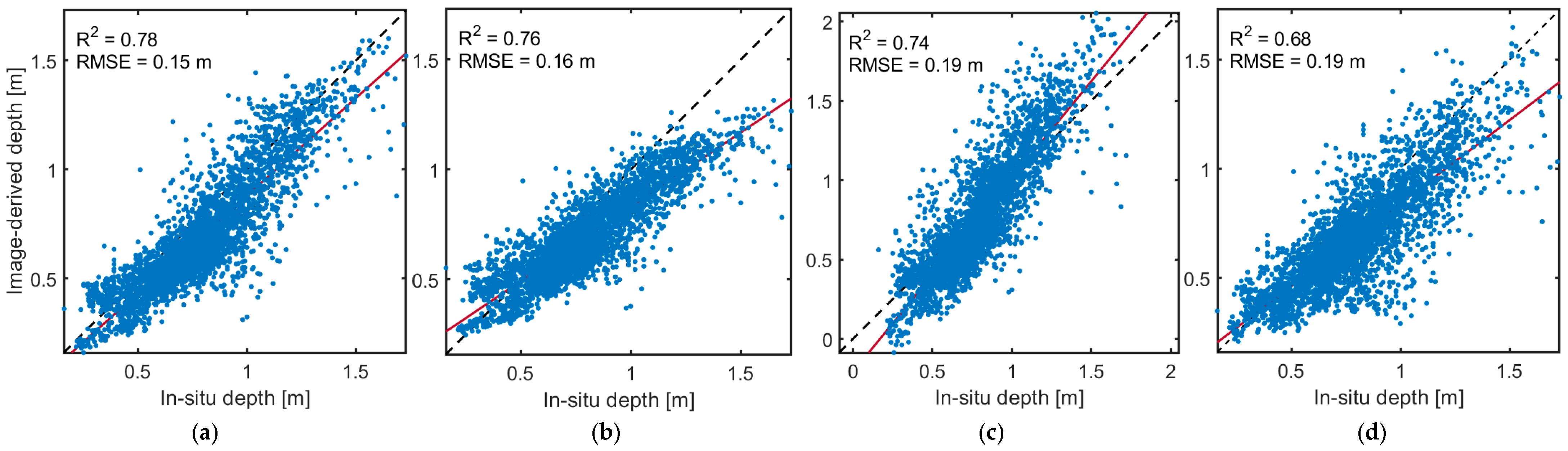

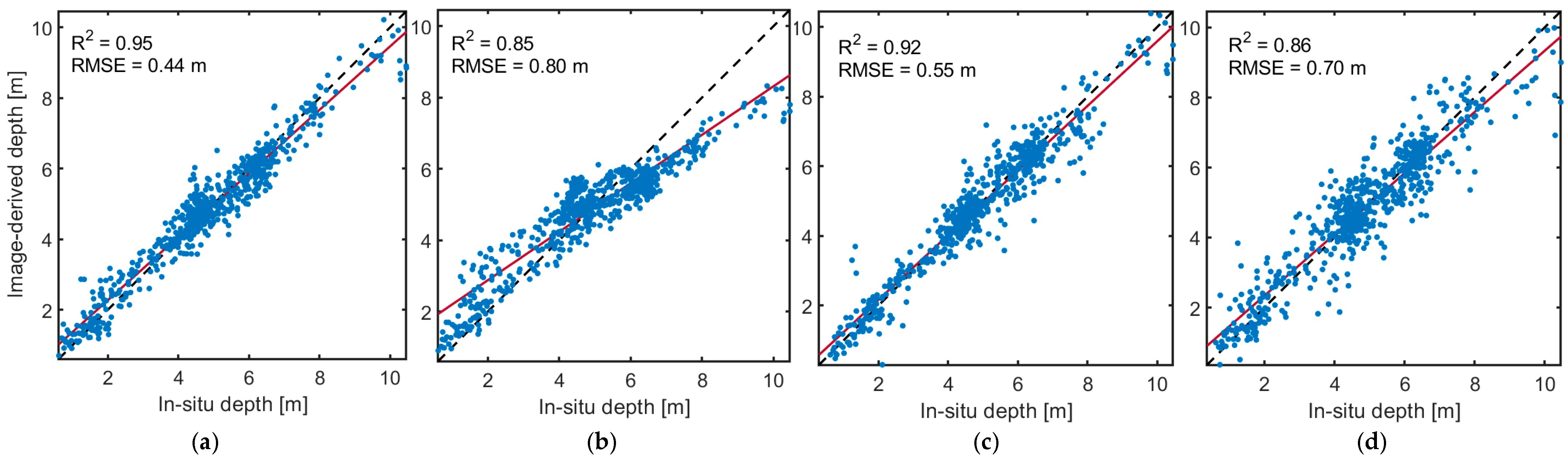

We used a set of metrics to compare the retrieved depths with the in situ validation measurements: the coefficient of determination (R²); root mean square error (RMSE); normalized RMSE (NRMSE), which expresses the RMSE as a percentage of the range of water depth (max–min); and bias. Bias values close to 1 indicate that the depth estimates are subject to minimal systematic errors, whereas overestimation and underestimation lead to bias >1 and <1, respectively. For instance, a bias of 1.1 indicates that the estimated depths are overestimated by 10% on average [34].
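The four metrics can be computed as in the following sketch. The text does not give formulas for R² or bias, so two definitions are assumed here: R² as 1 − SS_res/SS_tot, and bias as a multiplicative (log-space) bias, 10 raised to the mean of log10(estimate/observation), which equals 1.1 when every depth is overestimated by 10%. Other definitions (e.g., squared Pearson correlation for R², or a ratio of means for bias) can give somewhat different numbers on real data.

```python
import numpy as np


def depth_metrics(est, obs):
    """Accuracy metrics for retrieved vs. in situ depths (both in m, > 0).

    R2 is taken as 1 - SS_res / SS_tot and bias as a multiplicative
    (log-space) bias; both definitions are assumptions.
    """
    est, obs = np.asarray(est, float), np.asarray(obs, float)
    resid = est - obs
    rmse = np.sqrt(np.mean(resid ** 2))
    return {
        "R2": 1.0 - np.sum(resid ** 2) / np.sum((obs - obs.mean()) ** 2),
        "RMSE": rmse,
        "NRMSE_pct": 100.0 * rmse / (obs.max() - obs.min()),  # % of depth range
        "bias": 10.0 ** np.mean(np.log10(est / obs)),         # 1 = unbiased, >1 = over
    }


obs = np.array([1.0, 2.0, 3.0, 4.0])
m = depth_metrics(1.1 * obs, obs)  # every depth overestimated by 10%
print({k: round(float(v), 3) for k, v in m.items()})
# {'R2': 0.94, 'RMSE': 0.274, 'NRMSE_pct': 9.129, 'bias': 1.1}
```

The log-space bias requires strictly positive estimates; a retrieval that returns zero or negative depths would need to be screened before this metric is applied.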

3. Case Studies and Datasets

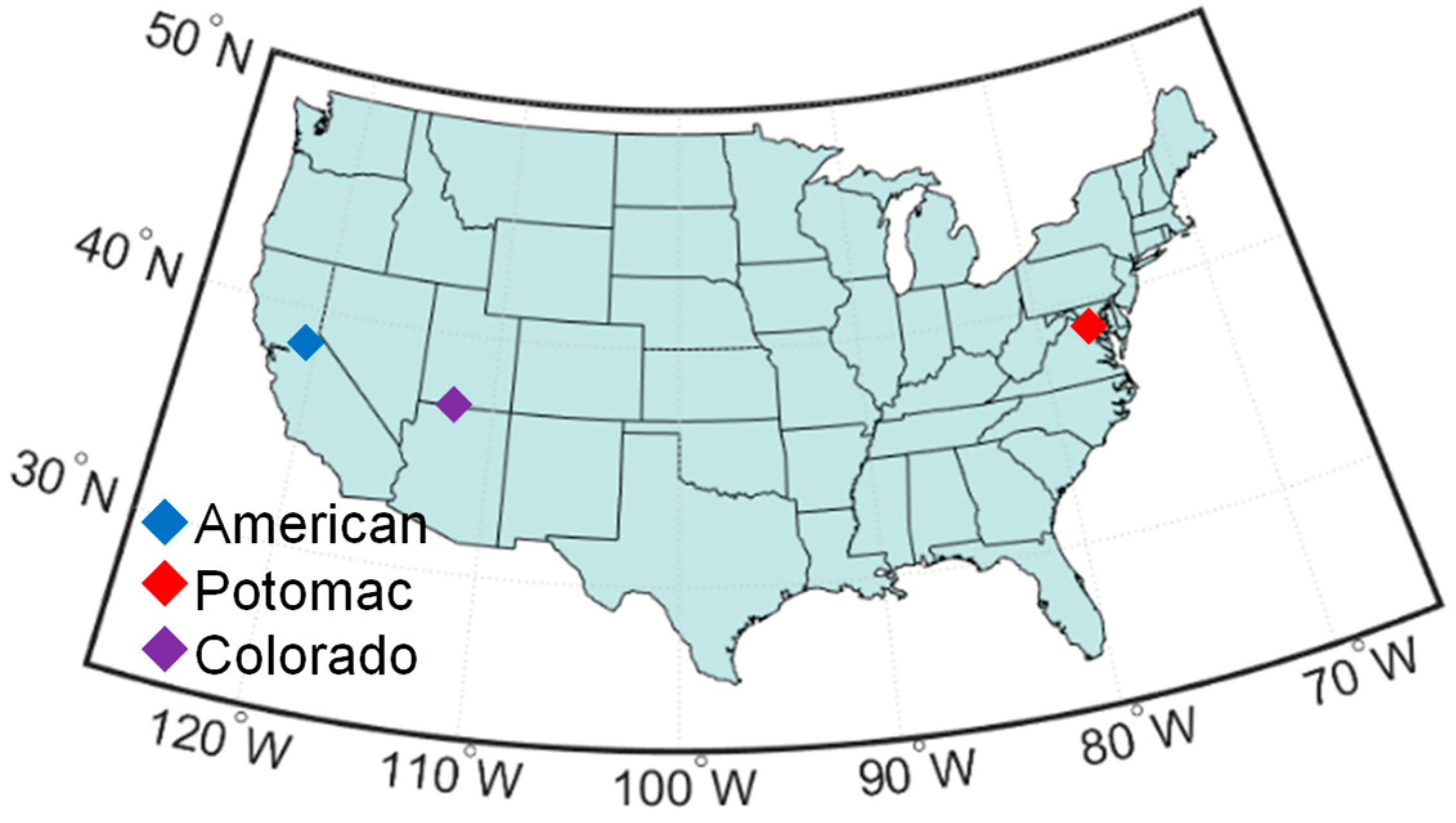

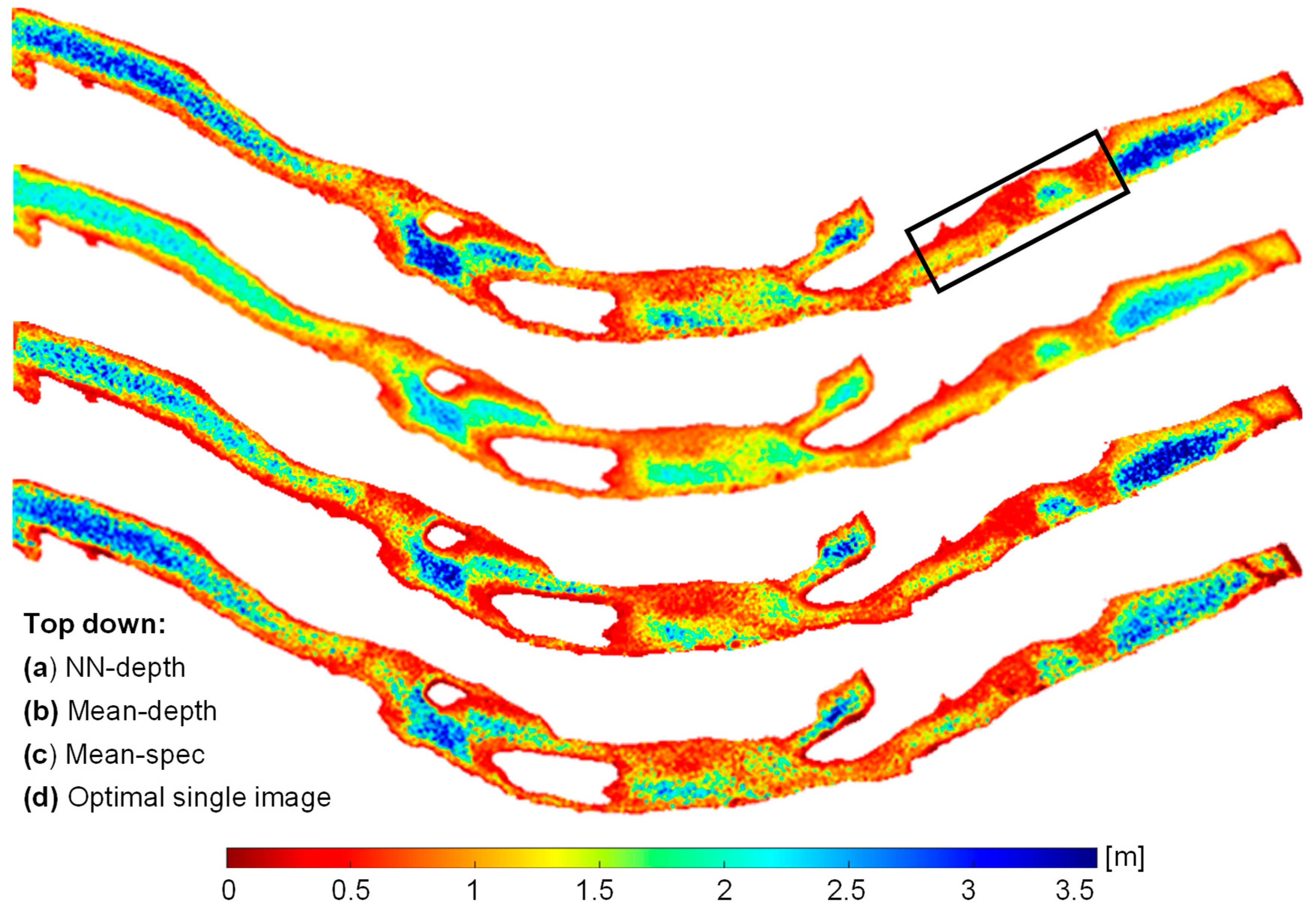

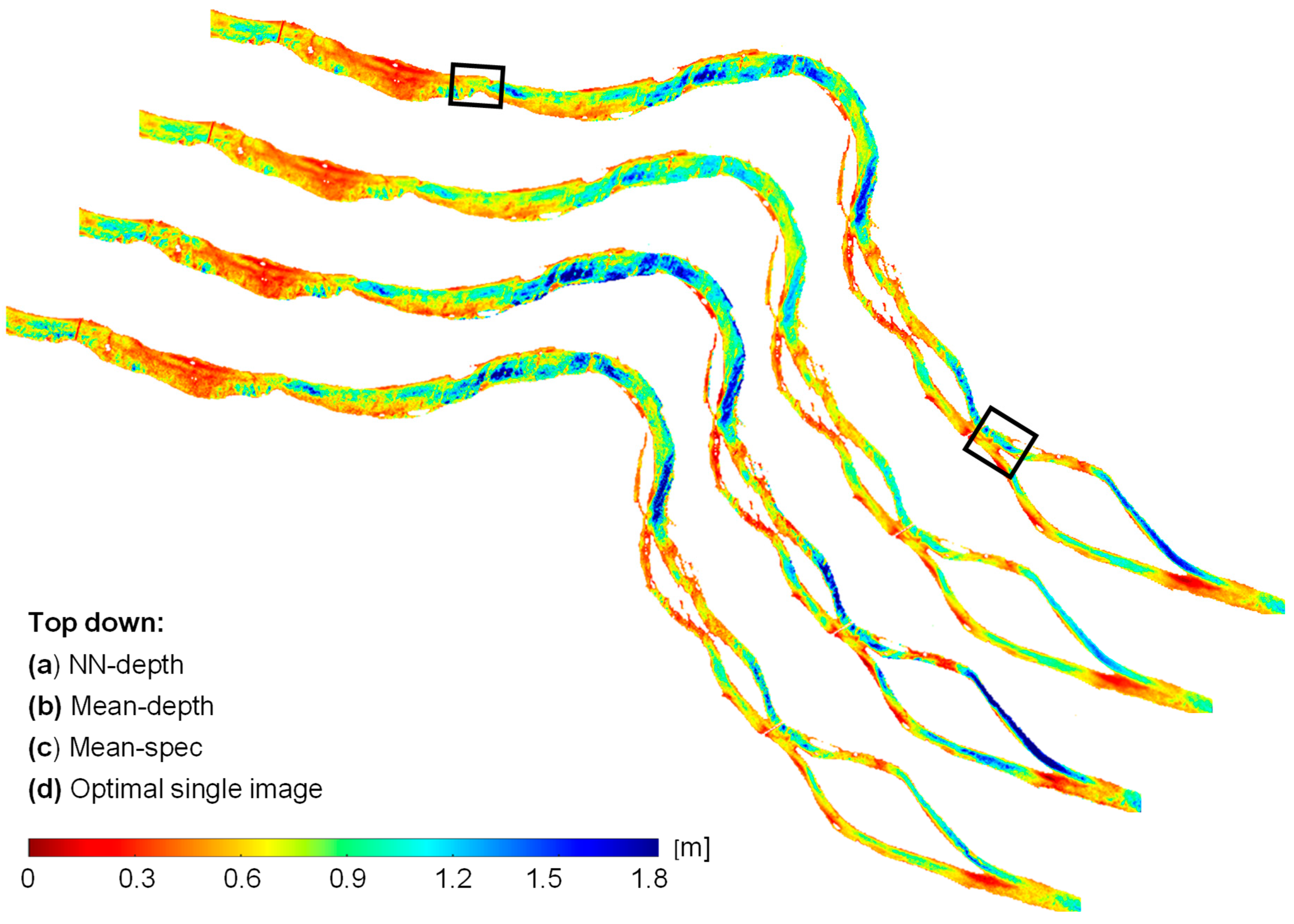

Three reaches of the American, Potomac, and Colorado rivers in the United States were studied to examine the effectiveness of different temporal ensembling methods in retrieving bathymetry (Figure 3). The study sites represent clear waters suitable for spectrally based bathymetry retrieval, but the river reaches differ in their range of water depths. The Potomac reach involves very shallow waters up to a maximum of ~2 m. Although the bottom-reflected signal (i.e., the radiance component of interest for bathymetry) is pronounced in such a shallow setting, variations in bottom type can pose challenges to depth retrieval. The American site represents deeper waters of up to ~3.5 m, whereas the Colorado reach includes waters as deep as ~10 m. Thus, the selected sites permit a thorough assessment of the proposed NN–depth ensembling across a range of depths in clear waters.

We employed in situ bathymetry data collected previously in each river for training and validation of the depth retrieval models.

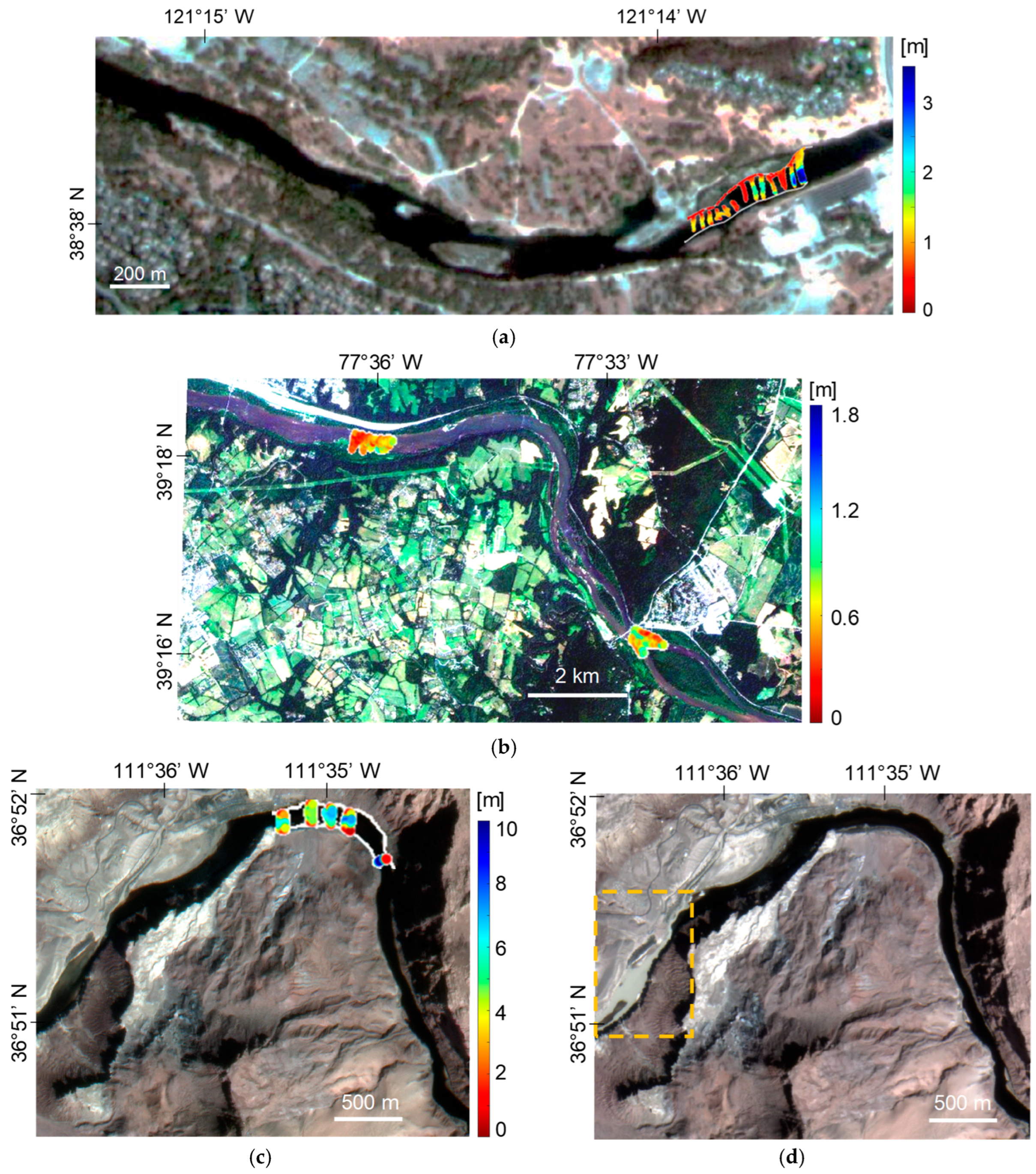

Figure 4 shows the in situ data superimposed on the SuperDove imagery of the study sites. Because the in situ data were collected with a spatial resolution on the order of a few centimeters, all depth measurements within each pixel were averaged to align them with the spectral data at the resolution of an image pixel. The high density of field measurements ensured that this approach provides representative pixel-scale mean depths. The field data and details of the measurements can be found in the U.S. Geological Survey (USGS) data releases [35,36,37]. For the American and Colorado rivers, half of the in situ samples were used for training, and the other half was held out for validation (Table 1). The field survey in the Potomac River covers two distinct reaches (Figure 4b). We therefore used the northern reach for training (1715 samples) and the other reach for validation (3484 samples).
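The pixel-scale aggregation of the dense soundings, followed by a 50/50 training/validation split, might look like the sketch below. It assumes projected coordinates in meters and a north-up grid with a known upper-left corner; the random split is also an assumption, since the text states only that half of the samples were used for training (and, for the Potomac, the split was made by reach rather than at random). The function names are illustrative.

```python
import numpy as np


def pixel_mean_depths(x, y, depth, x0, y0, pixel=3.0):
    """Average dense point soundings within each image pixel.

    x, y:   projected coordinates of the soundings (m)
    x0, y0: coordinates of the grid's upper-left corner (m); north-up grid assumed
    pixel:  pixel size (m); 3 m is used here for SuperDove
    Returns rows, cols, mean depth, and sounding count for each occupied pixel.
    """
    cols = np.floor((np.asarray(x, float) - x0) / pixel).astype(int)
    rows = np.floor((y0 - np.asarray(y, float)) / pixel).astype(int)  # rows grow southward
    keys, inverse, counts = np.unique(np.column_stack([rows, cols]), axis=0,
                                      return_inverse=True, return_counts=True)
    sums = np.bincount(inverse.ravel(), weights=np.asarray(depth, float),
                       minlength=len(keys))
    return keys[:, 0], keys[:, 1], sums / counts, counts


def half_split(n_pixels, seed=0):
    """Random 50/50 split of pixel indices into training and validation sets."""
    idx = np.random.default_rng(seed).permutation(n_pixels)
    return idx[: n_pixels // 2], idx[n_pixels // 2:]


# Three soundings: the first two fall in the same 3 m pixel
rows, cols, mean_d, n = pixel_mean_depths([0.5, 1.0, 4.0], [-0.5, -1.0, -0.5],
                                          [1.0, 3.0, 5.0], x0=0.0, y0=0.0)
print(rows, cols, mean_d, n)  # [0 0] [0 1] [2. 5.] [2 1]
```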

A set of temporal SuperDove images was downloaded for each river: 10, 11, and 35 images for the American, Potomac, and Colorado, respectively. A tributary called the Paria River feeds into the Colorado River and can introduce significant sediment during flash floods. One of the Colorado images, acquired at time instance 10 ($T_{10}$), captured a sediment plume introduced by the Paria River in the western part of the studied reach. However, the area affected by the sediment plume lies outside the area surveyed in the field, so the plume did not affect the training and validation of the models. Nevertheless, this image can provide insight into the robustness of the different ensembling approaches when depth retrieval is extended to larger reaches in the presence of such environmental perturbations. The $T_{10}$ image from the Colorado River was therefore included in our analysis to evaluate the behavior of the different ensembling approaches. The images acquired before and during the flood are shown in Figures 4c and 4d, respectively.

In this study, we employed all eight bands (443 to 865 nm) of SuperDove imagery. However, Planet's surface reflectance products are not suitable for aquatic applications: they yield unrealistic spectral shapes and large temporal inconsistencies over water bodies, particularly shallow rivers [12,27]. We therefore used the TOA radiance data, converting them to TOA reflectance with the image-specific coefficients provided in the metadata. The radiance-to-reflectance conversion minimizes the effect of temporal variations in solar illumination geometry. The selected images are cloud-free and represent discharges similar to those during in situ data acquisition; USGS gage records were used to identify the dates with similar discharges (Table 1). Visual inspection of the in situ data superimposed on the SuperDove imagery (Figure 4) indicated that the field data fall within the wetted channel depicted in the images. To quantify the geolocational accuracy of the imagery, we used very-high-resolution (0.6 m) aerial imagery from the National Agriculture Imagery Program (NAIP) [38] to select reference points. Corresponding points on the SuperDove imagery were then identified to build a set of tie points. For each study site, we extracted the spatial coordinates of 20 tie points, focusing on distinct features that were easily recognizable in both the aerial and SuperDove images, such as road intersections, building corners, or other landmarks. The RMSE for each tie point was then calculated from the spatial coordinates, and the average RMSE was computed to quantify the absolute geolocational accuracy of an image. This process was repeated for all the temporal imagery of a given study site using the same reference points derived from the aerial image. After calculating an average RMSE for each SuperDove image, we computed the mean ($M$) and standard deviation ($SD$) of the RMSEs across the temporal imagery of each site (Table 1). The SD of the RMSEs served as an indicator of the temporal stability of the SuperDove imagery in terms of geolocational accuracy. These analyses indicated sub-pixel absolute geometric accuracy at all study sites, given the 3 m spatial resolution of the SuperDove imagery. Moreover, the SD was very small (0.1 m), indicating high temporal stability of the imagery. This result aligns with the findings of a recent study [39]. The high temporal stability of the geolocational accuracy implies that these data are well suited to our temporal ensembling depth retrieval model.
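Two processing steps in this paragraph can be sketched numerically: scaling a radiance product to TOA reflectance with per-band, image-specific coefficients, and summarizing tie-point geolocation errors across a time series as M and SD. The multiplicative form of the coefficients, the definition of the per-point error as the planimetric offset sqrt(dx² + dy²), and the use of the sample SD are assumptions; reading the coefficients from Planet's metadata files is not shown.

```python
import numpy as np


def toa_reflectance(dn, coeffs):
    """Scale a (n_bands, rows, cols) radiance product to TOA reflectance.

    coeffs: one image-specific multiplicative coefficient per band, assumed
    to have been read from the scene metadata beforehand.
    """
    return np.asarray(dn, float) * np.asarray(coeffs, float)[:, None, None]


def geolocation_stats(ref_xy, img_xy):
    """Tie-point geolocation error of a time series of images.

    ref_xy: (n_points, 2) reference coordinates (m), e.g., from aerial imagery
    img_xy: (T, n_points, 2) the same points located in each of T images
    Per-point error is the planimetric offset sqrt(dx^2 + dy^2) (assumed).
    Returns per-image mean error, their mean (M), and their sample SD.
    """
    offsets = np.asarray(img_xy, float) - np.asarray(ref_xy, float)[None]
    err = np.linalg.norm(offsets, axis=2)  # shape (T, n_points)
    per_image = err.mean(axis=1)
    return per_image, per_image.mean(), per_image.std(ddof=1)


# Two images, three tie points each, with uniform offsets of 5 m and 1 m
ref = np.zeros((3, 2))
img = np.stack([np.tile([3.0, 4.0], (3, 1)), np.tile([0.0, 1.0], (3, 1))])
per_image, M, SD = geolocation_stats(ref, img)
print(per_image, M, round(SD, 3))  # [5. 1.] 3.0 2.828
```

An M below the 3 m pixel size corresponds to the sub-pixel accuracy reported above, and a small SD to temporal stability.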

6. Conclusions and Future Outlook

In this study, we proposed a machine learning approach to enhance bathymetry retrieval from time-series imagery. The new method involves ensembling water depths derived from individual images rather than ensembling the spectral data first and then proceeding to depth retrieval. The proposed NN–depth ensembling provided more accurate and robust retrievals of bathymetry than the other approaches, including the mean–spec and mean–depth ensembling models. Given its reduced sensitivity to temporal variations in confounding factors, NN–depth ensembling can benefit from temporal imagery spanning a relatively long period, provided the bathymetry remains steady. The robust behavior of NN–depth ensembling would be particularly valuable in an operational monitoring context in which images are processed as they are acquired, without any automatic means of accounting for episodic perturbations such as a sediment plume. In addition, this study provides further evidence of the utility of the newly available SuperDove imagery for bathymetric applications, as initially demonstrated in a comparative study with Landsat-9 and Sentinel-2A/B [27].

Similar to other empirical bathymetric models, the proposed method is constrained by the availability of in situ data. However, the in situ data required for training NN–depth ensembling need not be a lengthy time series; in fact, field data from only a single time instance are required, under the assumption that the images are acquired under steady flow conditions. Thus, the required field data are minimal and equivalent to those needed for the analysis of a single image. Moreover, because shallow neural networks are used, the number of training samples does not need to be unusually large. Although we performed bathymetry retrieval in rivers, the proposed methodology is generic and can be applied to any optically shallow environment. This study leveraged NNs to ensemble the temporal depths; future studies could evaluate other machine/deep learning models, such as decision trees, support vector machines, and convolutional NNs. In this study, we used automatic hyperparameter tuning to define the structure of the NNs in the proposed ensembling. However, we have also shown that a simple, fixed architecture can be used when training and prediction are applied to individual images [12]. Applying a common, fixed architecture to multitemporal data could facilitate routine application of the proposed method. We used TOA data because Planet's standard surface reflectance products are not useful for aquatic applications. The dark spectrum fitting (DSF) atmospheric correction and the exponential model implemented in the ACOLITE processor are currently the only aquatic-specific atmospheric corrections that can be applied to SuperDove imagery [11,41]. However, these methods are mainly suitable for turbid waters [42,43], which is not the case for bathymetric applications. The effect of atmospheric correction on the performance of the ensembling approaches can be investigated as more methods become available. This effect is expected to be minimal for the proposed NN–depth ensembling, because the approach treats every image individually in the first stage, and regression-based depth retrieval on a single image can achieve even better results using TOA rather than bottom-of-atmosphere (BOA) data, particularly when the atmospheric correction is not reliable [12]. In contrast, mean–spec ensembling could benefit from an accurate atmospheric correction, which would enhance the consistency among temporal images and thus the quality of the time-averaged image. In the future, CubeSat images will be acquired even more frequently, with up to 30 images captured each day, as a result of a new Planet mission called Pelican [44]. The novel approach to ensembling described herein will provide an effective means of incorporating the temporally rich data from this new constellation to produce accurate, highly robust bathymetric data products. Notably, the proposed NN–depth ensembling approach is not limited to CubeSat data and could also be applied to time-series imagery from other satellite or airborne missions.