EFCNet: Expert Feature-Based Convolutional Neural Network for SAR Ship Detection

Abstract

1. Introduction

- (1) CNN-based methods rely heavily on the amount of training data. For a model to capture the discriminative features of SAR ship targets, it needs substantial training data. However, SAR images are difficult and expensive to acquire, so the amount of available data is limited. This scarcity is a significant bottleneck: it makes it hard for CNNs to learn generalizable features and prevents them from realizing their full potential in SAR ship detection. It is therefore worth exploring how to enable a model to extract robust, target-related features from limited data and thereby achieve better SAR ship detection.

- (2)

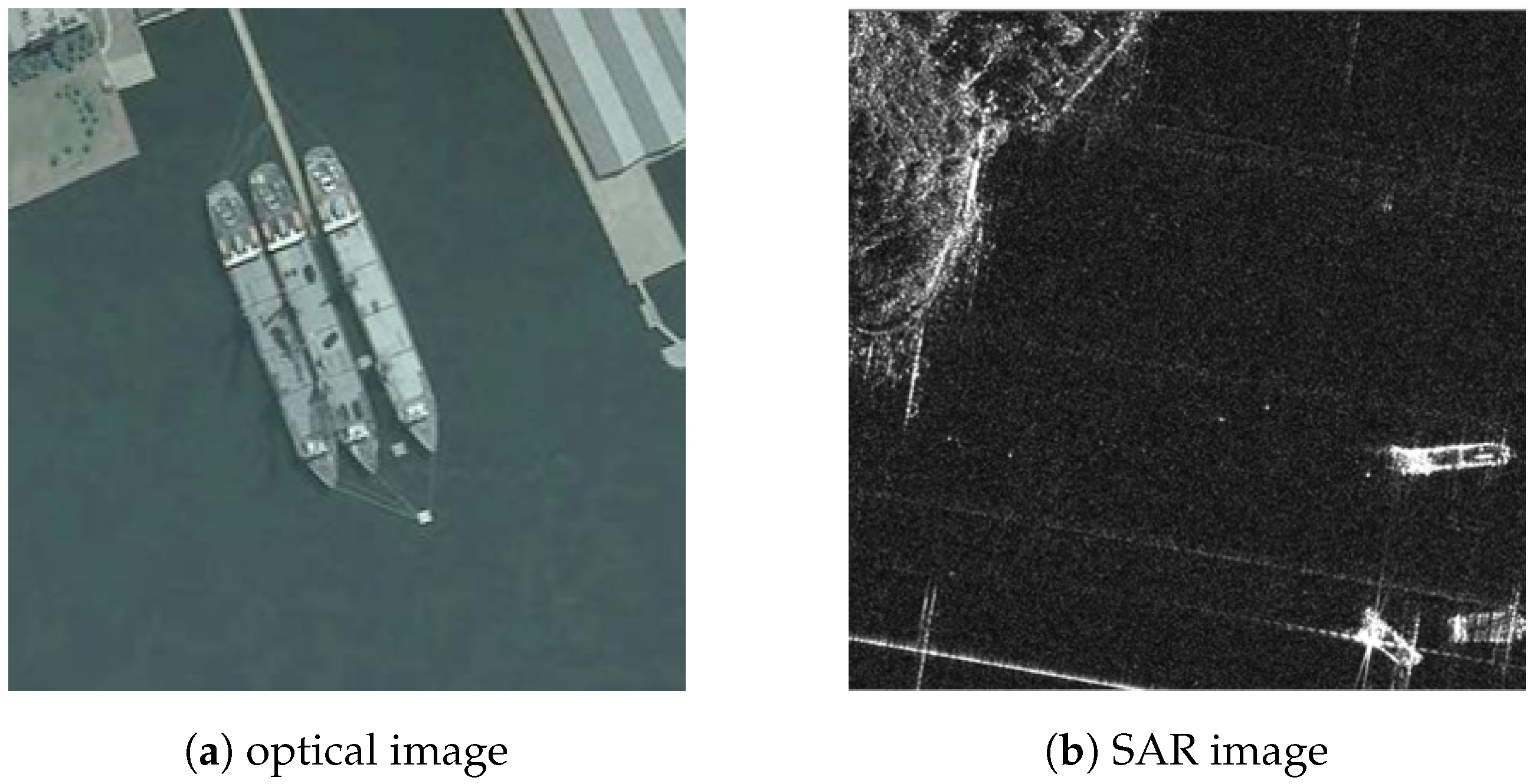

- Existing CNN-based approaches are notably vulnerable to interference arising from multiple sources, such as noise perturbations, alterations in target orientation, and variations in imaging angles. As shown in Figure 1, unlike optical images, SAR images are prone to substantial background clutter, potentially obfuscating the discernible features of target. Besides, the inherent relative motion between the radar apparatus and the target may induce azimuthal and distance blurring, introducing uncertainty regarding the target’s spatial positioning and morphology. These intrinsic attributes render the detection of ship targets within SAR images a notably intricate endeavor. If the SAR ship-detection task is directly treated as an optical target-detection task, it may not achieve the same performance.

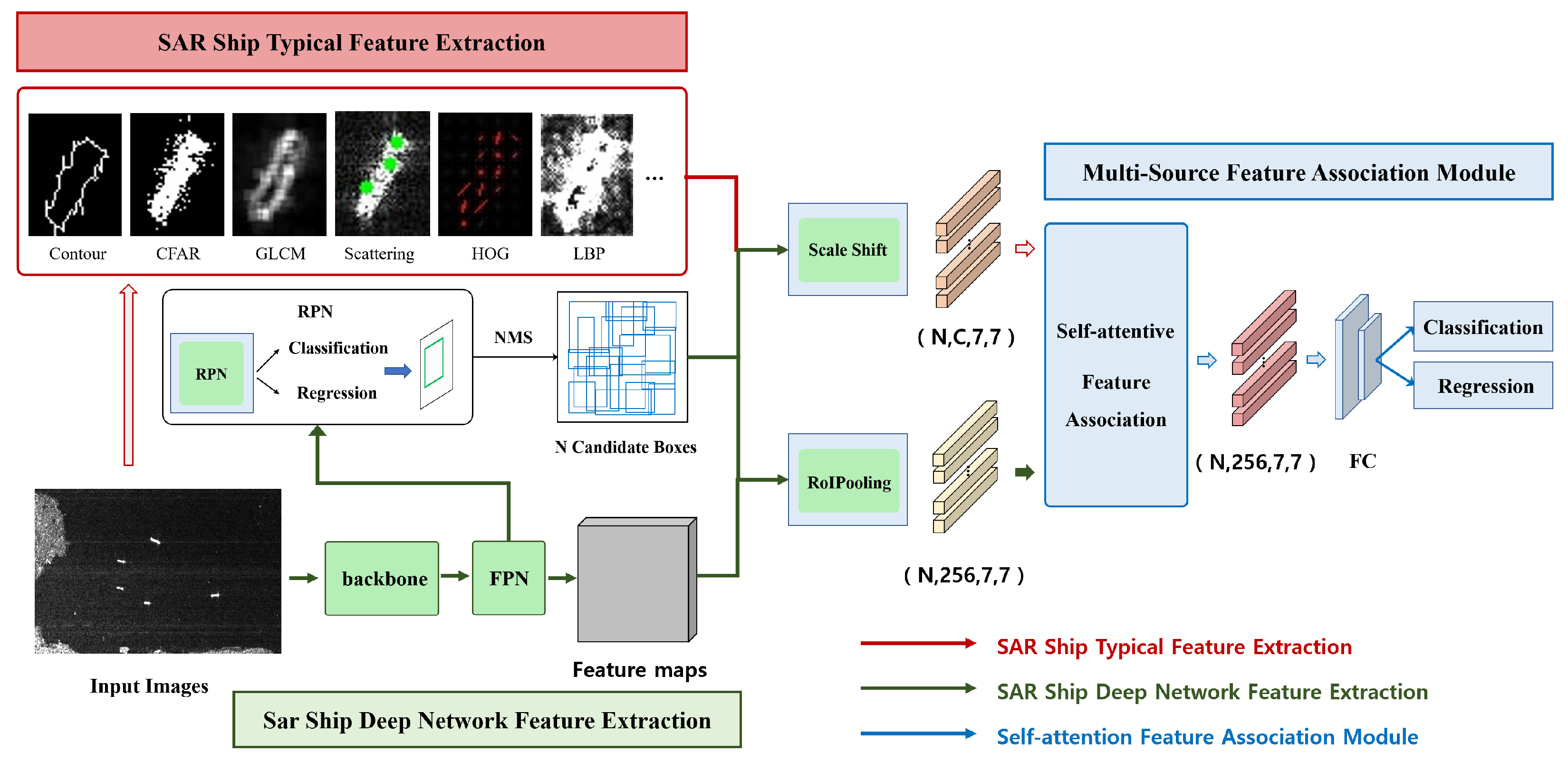

2. Method

2.1. Overview

2.2. Deep-Feature Extraction

2.3. Expert Feature Extraction

2.3.1. Strong Scattering Point Extraction

2.3.2. Peak Features

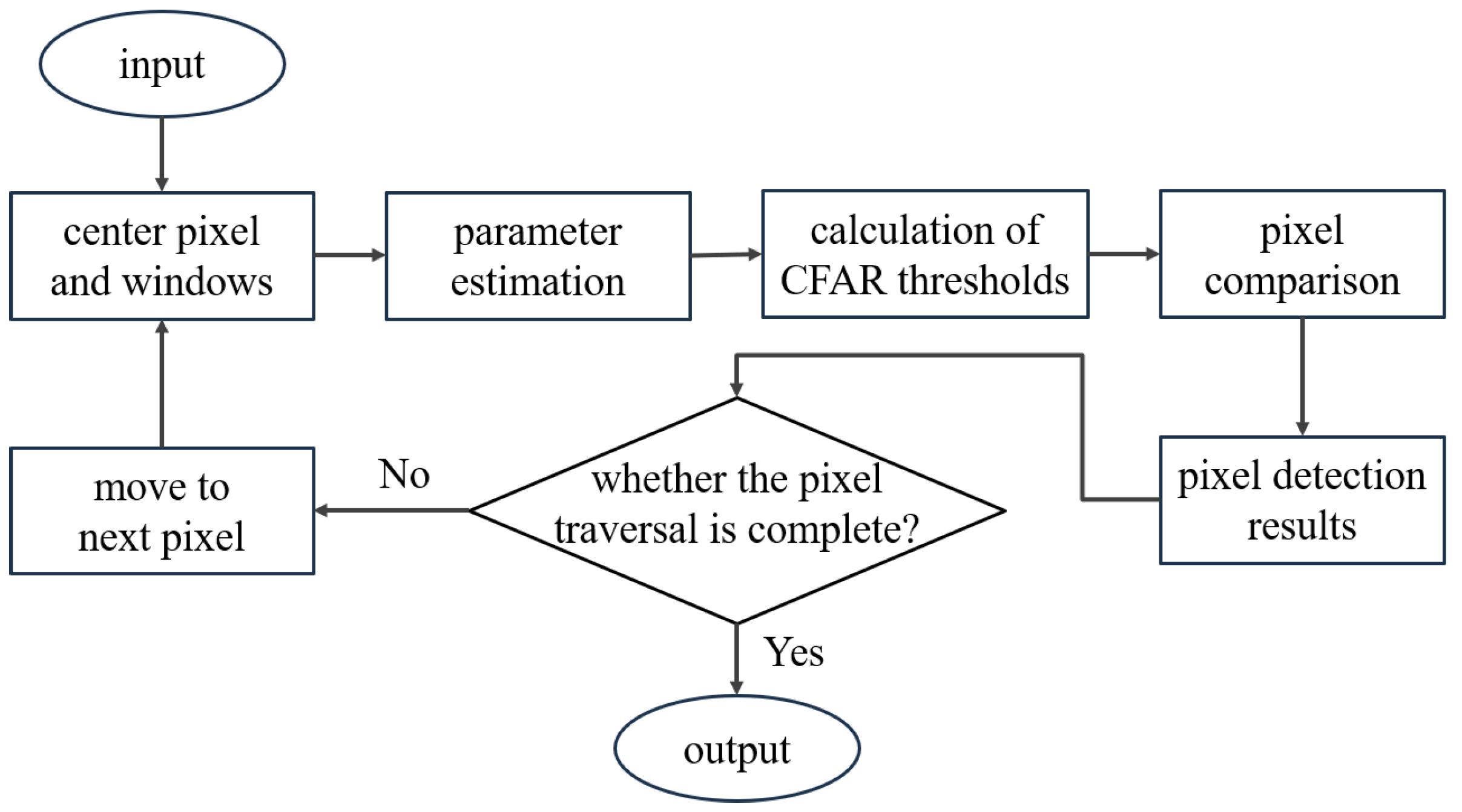

2.3.3. CFAR

2.4. Multi-Source Features Association Module

3. Results

3.1. Datasets and Experimental Details

3.2. Ablation Experiment and Analysis

3.3. Comparison Experiments and Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Reigber, A.; Scheiber, R.; Jager, M.; Prats-Iraola, P.; Hajnsek, I.; Jagdhuber, T.; Papathanassiou, K.P.; Nannini, M.; Aguilera, E.; Baumgartner, S.; et al. Very-high-resolution airborne synthetic aperture radar imaging: Signal processing and applications. Proc. IEEE 2012, 101, 759–783.

2. Li, J.; Xu, C.; Su, H.; Gao, L.; Wang, T. Deep learning for SAR ship detection: Past, present and future. Remote Sens. 2022, 14, 2712.

3. Yasir, M.; Jianhua, W.; Mingming, X.; Hui, S.; Zhe, Z.; Shanwei, L.; Colak, A.T.I.; Hossain, M.S. Ship detection based on deep learning using SAR imagery: A systematic literature review. Soft Comput. 2023, 27, 63–84.

4. Bi, H.; Deng, J.; Yang, T.; Wang, J.; Wang, L. CNN-Based Target Detection and Classification When Sparse SAR Image Dataset is Available. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2021, 14, 6815–6826.

5. Li, J.; Chen, J.; Cheng, P.; Yu, Z.; Yu, L.; Chi, C. A Survey on Deep-Learning-Based Real-Time SAR Ship Detection. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2023, 16, 3218–3247.

6. Zhang, T.; Zhang, X.; Shi, J.; Wei, S. High-speed ship detection in SAR images by improved YOLOv3. In Proceedings of the 2019 16th International Computer Conference on Wavelet Active Media Technology and Information Processing, Chengdu, China, 14–15 December 2019; IEEE: New York, NY, USA, 2019; pp. 149–152.

7. Li, D.; Liang, Q.; Liu, H.; Liu, Q.; Liu, H.; Liao, G. A novel multidimensional domain deep learning network for SAR ship detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5203213.

8. Pan, D.; Wu, Y.; Dai, W.; Miao, T.; Zhao, W.; Gao, X.; Sun, X. TAG-Net: Target Attitude Angle-Guided Network for Ship Detection and Classification in SAR Images. Remote Sens. 2024, 16, 944.

9. Du, Y.; Du, L.; Guo, Y.; Shi, Y. Semisupervised SAR ship detection network via scene characteristic learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5201517.

10. Song, S.; Xu, B.; Yang, J. SAR target recognition via supervised discriminative dictionary learning and sparse representation of the SAR-HOG feature. Remote Sens. 2016, 8, 683.

11. Agrawal, A.; Mangalraj, P.; Bisherwal, M.A. Target detection in SAR images using SIFT. In Proceedings of the 2015 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Abu Dhabi, United Arab Emirates, 7–10 December 2015; pp. 90–94.

12. Zhang, T.; Ji, J.; Li, X.; Yu, W.; Xiong, H. Ship Detection From PolSAR Imagery Using the Complete Polarimetric Covariance Difference Matrix. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2824–2839.

13. Bai, J.; Lu, J.; Xiao, Z.; Chen, Z.; Jiao, L. Generative adversarial networks based on transformer encoder and convolution block for hyperspectral image classification. Remote Sens. 2022, 14, 3426.

14. Bai, J.; Yu, W.; Xiao, Z.; Havyarimana, V.; Regan, A.C.; Jiang, H.; Jiao, L. Two-stream spatial–temporal graph convolutional networks for driver drowsiness detection. IEEE Trans. Geosci. Remote Sens. 2021, 52, 13821–13833.

15. Bai, J.; Ding, B.; Xiao, Z.; Jiao, L.; Chen, H.; Regan, A.C. Hyperspectral image classification based on deep attention graph convolutional network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5504316.

16. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

17. Li, Y.; Wang, H.; Jin, Q.; Hu, J.; Chemerys, P.; Fu, Y.; Wang, Y.; Tulyakov, S.; Ren, J. SnapFusion: Text-to-image diffusion model on mobile devices within two seconds. Adv. Neural Inf. Process. Syst. 2024, 36, 20662–20678.

18. Wang, Y.; Bai, J.; Xiao, Z.; Chen, Z.; Xiong, Y.; Jiang, H.; Jiao, L. AutoSMC: An Automated Machine Learning Framework for Signal Modulation Classification. IEEE Trans. Inf. Forensics Secur. 2024, 19, 6225–6236.

19. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

20. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

21. Bai, J.; Huang, S.; Xiao, Z.; Li, X.; Zhu, Y.; Regan, A.C.; Jiao, L. Few-shot hyperspectral image classification based on adaptive subspaces and feature transformation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5523917.

22. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

23. He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

24. Bai, J.; Shi, W.; Xiao, Z.; Regan, A.C.; Ali, T.A.A.; Zhu, Y.; Zhang, R.; Jiao, L. Hyperspectral image classification based on superpixel feature subdivision and adaptive graph structure. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5524415.

25. Bai, J.; Yuan, A.; Xiao, Z.; Zhou, H.; Wang, D.; Jiang, H.; Jiao, L. Class incremental learning with few-shots based on linear programming for hyperspectral image classification. IEEE Trans. Cybern. 2020, 52, 5474–5485.

26. Liu, Y.; Bai, J.; Sun, F. Visual localization method for unmanned aerial vehicles in urban scenes based on shape and spatial relationship matching of buildings. Remote Sens. 2024, 16, 3065.

27. Bai, J.; Zhou, Z.; Chen, Z.; Xiao, Z.; Wei, E.; Wen, Y.; Jiao, L. Cross-dataset model training for hyperspectral image classification using self-supervised learning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5538017.

28. Bai, J.; Shi, W.; Xiao, Z.; Ali, T.A.A.; Ye, F.; Jiao, L. Achieving better category separability for hyperspectral image classification: A spatial–spectral approach. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 9621–9635.

29. Bai, J.; Liu, R.; Zhao, H.; Xiao, Z.; Chen, Z.; Shi, W.; Xiong, Y.; Jiao, L. Hyperspectral image classification using geometric spatial–spectral feature integration: A class incremental learning approach. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5531215.

30. Bai, J.; Wen, Z.; Xiao, Z.; Ye, F.; Zhu, Y.; Alazab, M.; Jiao, L. Hyperspectral image classification based on multibranch attention transformer networks. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5535317.

31. Gao, G.; Chen, Y.; Feng, Z.; Zhang, C.; Duan, D.; Li, H.; Zhang, X. R-LRBPNet: A Lightweight SAR Image Oriented Ship Detection and Classification Method. Remote Sens. 2024, 16, 1533.

32. Zhang, T.; Zhang, X. Injection of traditional hand-crafted features into modern CNN-based models for SAR ship classification: What, why, where, and how. Remote Sens. 2021, 13, 2091.

33. Yasir, M.; Liu, S.; Mingming, X.; Wan, J.; Pirasteh, S.; Dang, K.B. ShipGeoNet: SAR image-based geometric feature extraction of ships using convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5202613.

34. Chang, S.; Deng, Y.; Zhang, Y.; Zhao, Q.; Wang, R.; Zhang, K. An advanced scheme for range ambiguity suppression of spaceborne SAR based on blind source separation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5230112.

35. Liangjun, Z.; Feng, N.; Yubin, X.; Gang, L.; Zhongliang, H.; Yuanyang, Z. MSFA-YOLO: A Multi-Scale SAR Ship Detection Algorithm Based on Fused Attention. IEEE Access 2024, 12, 24554–24568.

36. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

37. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

38. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

39. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

40. Wu, K.; Zhang, Z.; Chen, Z.; Liu, G. Object-Enhanced YOLO Networks for Synthetic Aperture Radar Ship Detection. Remote Sens. 2024, 16, 1001.

41. Liu, Y.; Ma, Y.; Chen, F.; Shang, E.; Yao, W.; Zhang, S.; Yang, J. YOLOv7oSAR: A Lightweight High-Precision Ship Detection Model for SAR Images Based on the YOLOv7 Algorithm. Remote Sens. 2024, 16, 913.

42. Li, Y.; Zhang, S.; Wang, W.Q. A lightweight faster R-CNN for ship detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2020, 19, 4006105.

43. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis. Remote Sens. 2021, 13, 3690.

44. Gui, Y.; Li, X.; Xue, L. A multilayer fusion light-head detector for SAR ship detection. Sensors 2019, 19, 1124.

45. Zhang, T.; Zhang, X.; Shi, J.; Wei, S. Depthwise separable convolution neural network for high-speed SAR ship detection. Remote Sens. 2019, 11, 2483.

46. Wang, S.; Cai, Z.; Yuan, J. Automatic SAR Ship Detection Based on Multifeature Fusion Network in Spatial and Frequency Domains. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4102111.

47. Zhang, T.; Zhang, X.; Ke, X.; Liu, C.; Xu, X.; Zhan, X.; Wang, C.; Ahmad, I.; Zhou, Y.; Pan, D.; et al. HOG-ShipCLSNet: A Novel Deep Learning Network with HOG Feature Fusion for SAR Ship Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5210322.

48. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

49. Zhang, J.; Xing, M.; Xie, Y. FEC: A feature fusion framework for SAR target recognition based on electromagnetic scattering features and deep CNN features. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2174–2187.

50. Suzuki, S.; Abe, K. Topological structural analysis of digitized binary images by border following. Comput. Vision Graph. Image Process. 1985, 30, 32–46.

51. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

52. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

53. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

54. Harris, C.G.; Stephens, M.J. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988.

55. Zhang, T.; Zhang, X. A polarization fusion network with geometric feature embedding for SAR ship classification. Pattern Recognit. 2022, 123, 108365.

56. Kuiying, Y.; Lin, J.; Changchun, Z.; Jin, J. SAR automatic target recognition based on shadow contour. In Proceedings of the 2013 Fourth International Conference on Digital Manufacturing & Automation, ICDMA, Shinan, China, 29–30 June 2013; IEEE: New York, NY, USA, 2013; pp. 1179–1183.

57. Finn, H.M. Adaptive detection mode with threshold control as a function of spatially sampled clutter-level estimates. RCA Rev. 1968, 29, 414–465.

58. Steenson, B.O. Detection performance of a mean-level threshold. IEEE Trans. Aerosp. Electron. Syst. 1968, AES-4, 529–534.

59. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; IEEE: New York, NY, USA, 1999; Volume 2, pp. 1150–1157.

60. Bai, J.; Ren, J.; Yang, Y.; Xiao, Z.; Yu, W.; Havyarimana, V.; Jiao, L. Object detection in large-scale remote-sensing images based on time-frequency analysis and feature optimization. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5405316.

61. Szegedy, C.; Toshev, A.; Erhan, D. Deep neural networks for object detection. Adv. Neural Inf. Process. Syst. 2013, 26, 2553–2561.

62. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

63. Chen, S.; Sun, P.; Song, Y.; Luo, P. DiffusionDet: Diffusion model for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 19830–19843.

64. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

65. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

66. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

67. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

68. Lu, X.; Li, B.; Yue, Y.; Li, Q.; Yan, J. Grid R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7363–7372.

69. Wu, Y.; Chen, Y.; Yuan, L.; Liu, Z.; Wang, L.; Li, H.; Fu, Y. Rethinking classification and localization for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10186–10195.

70. Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C.; et al. Sparse R-CNN: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 14454–14463.

71. Xia, R.; Chen, J.; Huang, Z.; Wan, H.; Wu, B.; Sun, L.; Yao, B.; Xiang, H.; Xing, M. CRTransSar: A Visual Transformer Based on Contextual Joint Representation Learning for SAR Ship Detection. Remote Sens. 2022, 14, 1488.

72. Ren, X.; Bai, Y.; Liu, G.; Zhang, P. YOLO-Lite: An Efficient Lightweight Network for SAR Ship Detection. Remote Sens. 2023, 15, 3771.

73. Zhang, Y.; Hao, L.Y.; Li, Y. SD-YOLO: An Attention Mechanism Guided YOLO Network for Ship Detection. In Proceedings of the 2024 14th International Conference on Information Science and Technology (ICIST), Chengdu, China, 6–9 December 2024; IEEE: New York, NY, USA, 2024; pp. 769–776.

74. Bayındır, C.; Altintas, A.A.; Ozaydin, F. Self-localized solitons of a q-deformed quantum system. Commun. Nonlinear Sci. Numer. Simul. 2021, 92, 105474.

75. Fujimoto, W.; Miratsu, R.; Ishibashi, K.; Zhu, T. Estimation and Use of Wave Information for Ship Monitoring. ClassNK Tech. J. 2022, 2022, 79–92.

76. Wang, H.; Nie, D.; Zuo, Y.; Tang, L.; Zhang, M. Nonlinear ship wake detection in SAR images based on electromagnetic scattering model and YOLOv5. Remote Sens. 2022, 14, 5788.

| Feature | Recall | AP@0.5:0.95 | AP@0.5 |
|---|---|---|---|
| Faster R-CNN (Baseline) | 0.661 | 0.609 | 0.970 |
| *Feature association type: channel stacking & self-attention mechanism* | | | |
| (1) Scattering | 0.648 | 0.529 | 0.974 |
| (2) Peak | 0.666 | 0.610 | 0.973 |
| (3) CFAR | 0.642 | 0.583 | 0.960 |
| (4) Contour | 0.647 | 0.592 | 0.973 |
| (5) HOG | 0.662 | 0.608 | 0.974 |
| (6) GLCM | 0.614 | 0.540 | 0.952 |
| (7) Canny | 0.652 | 0.599 | 0.969 |
| (8) Harris | 0.642 | 0.580 | 0.959 |
| *Feature association type: multi-source features association module* | | | |
| (1) Scattering | 0.683 | 0.631 | 0.976 |
| (2) Peak | 0.670 | 0.613 | 0.970 |
| (3) CFAR | 0.662 | 0.611 | 0.974 |
| (4) Contour | 0.662 | 0.607 | 0.975 |
| (5) HOG | 0.670 | 0.619 | 0.975 |
| (6) GLCM | 0.664 | 0.607 | 0.973 |
| (7) Canny | 0.656 | 0.604 | 0.974 |
| (8) Harris | 0.667 | 0.613 | 0.974 |
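The single-feature results are easier to compare as differences from the baseline. The short Python sketch below recomputes each feature's AP@0.5:0.95 change relative to the Faster R-CNN baseline (0.609), using only values transcribed from the table above; the dictionary names and the `gains` helper are illustrative and not part of EFCNet. With these numbers, the multi-source features association module scores higher than channel stacking with self-attention for all eight features, strong scattering points give the largest gain over the baseline, and only the peak feature beats the baseline under channel stacking.

```python
BASELINE_AP = 0.609  # Faster R-CNN baseline, AP@0.5:0.95

# AP@0.5:0.95 per feature, transcribed from the single-feature ablation table.
STACK_ATTENTION = {  # channel stacking & self-attention mechanism
    "Scattering": 0.529, "Peak": 0.610, "CFAR": 0.583, "Contour": 0.592,
    "HOG": 0.608, "GLCM": 0.540, "Canny": 0.599, "Harris": 0.580,
}
ASSOCIATION_MODULE = {  # multi-source features association module
    "Scattering": 0.631, "Peak": 0.613, "CFAR": 0.611, "Contour": 0.607,
    "HOG": 0.619, "GLCM": 0.607, "Canny": 0.604, "Harris": 0.613,
}


def gains(table, baseline):
    """AP@0.5:0.95 change relative to the baseline, rounded to 3 decimals."""
    return {name: round(ap - baseline, 3) for name, ap in table.items()}


stack_gain = gains(STACK_ATTENTION, BASELINE_AP)
module_gain = gains(ASSOCIATION_MODULE, BASELINE_AP)
# Per-feature margin of the association module over channel stacking.
module_margin = {name: round(ASSOCIATION_MODULE[name] - STACK_ATTENTION[name], 3)
                 for name in ASSOCIATION_MODULE}

best = max(module_gain, key=module_gain.get)
print("best feature with the module:", best, f"+{module_gain[best]:.3f}")
print("module beats stacking for every feature:",
      all(m > 0 for m in module_margin.values()))
print("features above baseline with stacking:",
      [n for n, g in stack_gain.items() if g > 0])
```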

| Feature combination | Recall | AP@0.5:0.95 | AP@0.5 |
|---|---|---|---|
| Faster R-CNN (Baseline) | 0.661 | 0.609 | 0.970 |
| *Feature association type: channel stacking & self-attention mechanism* | | | |
| (4) + (5) | 0.643 | 0.589 | 0.964 |
| (1) + (3) | 0.643 | 0.575 | 0.966 |
| (1) + (2) + (3) | 0.633 | 0.574 | 0.966 |
| (4) + (5) + (8) | 0.637 | 0.573 | 0.959 |
| (1) + (4) + (5) + (8) | 0.640 | 0.578 | 0.959 |
| (1) + (3) + (4) + (7) + (8) | 0.612 | 0.542 | 0.935 |
| *Feature association type: multi-source features association module* | | | |
| (4) + (5) | 0.665 | 0.613 | 0.961 |
| (1) + (3) | 0.664 | 0.612 | 0.973 |
| (1) + (2) + (3) | 0.667 | 0.611 | 0.971 |
| (4) + (5) + (8) | 0.657 | 0.606 | 0.975 |
| (1) + (4) + (5) + (8) | 0.666 | 0.608 | 0.974 |
| (1) + (3) + (4) + (7) + (8) | 0.661 | 0.619 | 0.975 |

Feature indices: (1) Scattering, (2) Peak, (3) CFAR, (4) Contour, (5) HOG, (6) GLCM, (7) Canny, (8) Harris.
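A similar check can be run on the feature combinations, again using only the table values. In the sketch below, the `FEATURES` mapping mirrors the index legend and the helper names are hypothetical. It shows that none of the channel-stacking combinations exceeds the baseline AP@0.5:0.95 of 0.609, whereas four of the six combinations do with the association module; the best combination (0.619) still falls short of strong scattering points alone (0.631 in the single-feature table).

```python
FEATURES = {1: "Scattering", 2: "Peak", 3: "CFAR", 4: "Contour",
            5: "HOG", 6: "GLCM", 7: "Canny", 8: "Harris"}
BASELINE_AP = 0.609     # Faster R-CNN baseline, AP@0.5:0.95
BEST_SINGLE_AP = 0.631  # Scattering alone with the association module (single-feature table)

# Feature indices -> (channel stacking & self-attention AP, association-module AP),
# both AP@0.5:0.95, transcribed from the combination table.
COMBOS = {
    (4, 5): (0.589, 0.613),
    (1, 3): (0.575, 0.612),
    (1, 2, 3): (0.574, 0.611),
    (4, 5, 8): (0.573, 0.606),
    (1, 4, 5, 8): (0.578, 0.608),
    (1, 3, 4, 7, 8): (0.542, 0.619),
}


def describe(indices):
    """Turn a tuple of legend indices into readable feature names."""
    return " + ".join(FEATURES[i] for i in indices)


stack_above = [describe(c) for c, (stack, _) in COMBOS.items() if stack > BASELINE_AP]
module_above = [describe(c) for c, (_, module) in COMBOS.items() if module > BASELINE_AP]
best_combo = max(COMBOS, key=lambda c: COMBOS[c][1])

print("stacking combos above baseline:", stack_above)
print("module combos above baseline:", len(module_above), "of", len(COMBOS))
print("best combo:", describe(best_combo), COMBOS[best_combo][1],
      "vs best single feature", BEST_SINGLE_AP)
```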

| Data proportion | Recall (Baseline) | AP@0.5:0.95 (Baseline) | AP@0.5 (Baseline) | Recall (EFCNet) | AP@0.5:0.95 (EFCNet) | AP@0.5 (EFCNet) |
|---|---|---|---|---|---|---|
| 10% | 0.473 | 0.352 | 0.722 | 0.507 | 0.495 | 0.880 |
| 20% | 0.498 | 0.409 | 0.777 | 0.517 | 0.543 | 0.891 |
| 30% | 0.515 | 0.421 | 0.795 | 0.612 | 0.554 | 0.914 |
| 40% | 0.566 | 0.475 | 0.833 | 0.631 | 0.579 | 0.925 |
| 50% | 0.595 | 0.524 | 0.862 | 0.645 | 0.591 | 0.927 |
| 60% | 0.609 | 0.534 | 0.889 | 0.627 | 0.576 | 0.919 |
| 70% | 0.636 | 0.565 | 0.905 | 0.664 | 0.612 | 0.947 |
| 80% | 0.638 | 0.568 | 0.907 | 0.670 | 0.615 | 0.958 |
| 90% | 0.676 | 0.618 | 0.936 | 0.676 | 0.623 | 0.960 |
| 100% | 0.661 | 0.609 | 0.970 | 0.661 | 0.619 | 0.975 |

Baseline: Faster R-CNN. EFCNet: ours.
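The table above compares the two detectors at different data proportions, presumably the share of the training set used. The sketch below transcribes only the AP@0.5 columns (the variable names are illustrative) and computes EFCNet's gain at each proportion, plus the smallest proportion at which the baseline reaches the AP@0.5 that EFCNet obtains with 10% of the data. On these values, the gain is largest at 10% (+0.158), and the baseline needs 60% of the data to match EFCNet's 10% result.

```python
# Data proportion (%) -> (Faster R-CNN AP@0.5, EFCNet AP@0.5), transcribed from the table.
AP50 = {
    10: (0.722, 0.880), 20: (0.777, 0.891), 30: (0.795, 0.914),
    40: (0.833, 0.925), 50: (0.862, 0.927), 60: (0.889, 0.919),
    70: (0.905, 0.947), 80: (0.907, 0.958), 90: (0.936, 0.960),
    100: (0.970, 0.975),
}

# Absolute AP@0.5 gain of EFCNet over the baseline at each proportion.
gain = {p: round(efc - base, 3) for p, (base, efc) in AP50.items()}
largest = max(gain, key=gain.get)

# Smallest proportion at which the baseline matches or exceeds EFCNet's score at 10%.
efcnet_at_10 = AP50[10][1]
matched_at = min(p for p, (base, _) in AP50.items() if base >= efcnet_at_10)

print(f"largest gain: +{gain[largest]:.3f} at {largest}%")
print(f"baseline needs {matched_at}% to reach EFCNet's 10% AP@0.5 ({efcnet_at_10:.3f})")
```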

| Method | AP@0.5:0.95 | AP@0.5 |
|---|---|---|
| EfficientNet [64] | 0.507 | 0.866 |
| YOLOv3 [39] | 0.563 | 0.915 |
| SSD [65] | 0.558 | 0.948 |
| RetinaNet [66] | 0.585 | 0.900 |
| Faster R-CNN [37] | 0.609 | 0.970 |
| Cascade R-CNN [67] | 0.624 | 0.945 |
| Grid R-CNN [68] | 0.531 | 0.958 |
| Double-Head R-CNN [69] | 0.605 | 0.944 |
| Sparse R-CNN [70] | 0.612 | 0.932 |
| CRTransSar [71] | - | 0.970 |
| YOLO-Lite [72] | - | 0.944 |
| SD-YOLO [73] | 0.623 | 0.961 |
| EFCNet (ours) | 0.631 | 0.976 |
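Because two methods do not report AP@0.5:0.95, ranking the comparison table requires skipping missing entries. The minimal sketch below uses `None` to stand in for a "-" entry (an illustrative choice) and ranks methods by each metric with the values transcribed from the table.

```python
# (AP@0.5:0.95, AP@0.5) per method, transcribed from the comparison table.
# None stands in for a "-" entry (metric not reported).
RESULTS = {
    "EfficientNet": (0.507, 0.866),
    "YOLOv3": (0.563, 0.915),
    "SSD": (0.558, 0.948),
    "RetinaNet": (0.585, 0.900),
    "Faster R-CNN": (0.609, 0.970),
    "Cascade R-CNN": (0.624, 0.945),
    "Grid R-CNN": (0.531, 0.958),
    "Double-Head R-CNN": (0.605, 0.944),
    "Sparse R-CNN": (0.612, 0.932),
    "CRTransSar": (None, 0.970),
    "YOLO-Lite": (None, 0.944),
    "SD-YOLO": (0.623, 0.961),
    "EFCNet (ours)": (0.631, 0.976),
}


def ranking(metric_index):
    """Return (method, score) pairs sorted best-first, skipping unreported scores.

    metric_index: 0 for AP@0.5:0.95, 1 for AP@0.5.
    """
    scored = [(name, scores[metric_index]) for name, scores in RESULTS.items()
              if scores[metric_index] is not None]
    # sorted() is stable, so methods with equal scores keep their table order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ap_rank = ranking(0)
ap50_rank = ranking(1)

for label, rank in (("AP@0.5:0.95", ap_rank), ("AP@0.5", ap50_rank)):
    (top, top_score), (runner_up, second_score) = rank[0], rank[1]
    print(f"{label}: {top} leads {runner_up} by {top_score - second_score:.3f}")
```

With these values, EFCNet leads Cascade R-CNN by 0.007 in AP@0.5:0.95 and leads Faster R-CNN and CRTransSar (tied at 0.970) by 0.006 in AP@0.5.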

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Z.; Zhang, Y.; Bai, J.; Hou, B. EFCNet: Expert Feature-Based Convolutional Neural Network for SAR Ship Detection. Remote Sens. 2025, 17, 1239. https://doi.org/10.3390/rs17071239
