Abstract

Remote sensing image captioning (RSIC) aims to describe ground objects and scenes within remote sensing images in natural language form. As the complexity and diversity of scenes in remote sensing images increase, existing methods, although effective in specific tasks, are largely trained on particular scene images and corpora. This limits their ability to generate descriptions for scenes not encountered during training. Given the finite resources for data annotation and the expanding range of application scenarios, training data typically cover only a subset of common scenes, leaving many potential scene types unrepresented. Consequently, developing models capable of effectively handling unseen scenes with limited training data is imperative. This study introduces an innovative remote sensing image captioning model based on scene attribute learning—SALCap. The proposed model defines scene attributes and employs a specifically designed global object scene attribute extractor to capture these attributes. It then uses an attribute inference module to predict scene information through scene attributes, ensuring that this part of the scene’s information is reused in sentence generation through additional attribute loss. Experiments show that the method not only improves the accuracy of the description but also significantly enhances the model’s adaptability and generalizability relative to unseen scenes. This advancement expands the practical utility of remote sensing image captioning across diverse scenarios, particularly under the constraints of limited annotations.

1. Introduction

Remote sensing technology has become an indispensable tool for monitoring and understanding the Earth’s surface. Remote sensing image captioning takes a remote sensing image as input and generates a sentence that describes its content. The task supports remote sensing image understanding [1] and image retrieval [2], which lowers the barrier to remote sensing image research to a certain extent. With the proliferation of high-resolution satellite and aerial imagery, there is a growing need for automated systems that can interpret these images and provide natural language descriptions of their content.

However, with the continuous enrichment of scenes in remote sensing images, the task of remote sensing image captioning faces significant challenges. Existing methods [3,4,5], although performing well on specific tasks, are primarily trained on particular scene images and corpora. This approach limits their ability to generate descriptions for scenes not encountered during training. Given the finite resources for data annotation and the expanding range of application scenarios, training data typically cover only a subset of common scenes, leaving many potential scene types unrepresented. Consequently, developing models capable of effectively handling unseen scenes with limited training data is imperative. Such a capability is crucial for enhancing the model’s practicality and generalizability, thereby better supporting a variety of real-world applications and maximizing the utility of existing data under the constraints of limited annotations.

Unseen scenes are geographic areas and environments that do not appear in the training images, or that exhibit feature combinations not present in them. For example, if the training dataset contains common scenes such as residential areas and basketball courts but does not cover a specific location such as a school, with its unique structural layout and activity patterns, the model faces an “unseen scene” when it encounters a remote sensing image that contains a school. Unseen scenes are not limited to specific types of locations (such as schools); they also include composite scenes, which may be composed of known elements whose special arrangement, functional use, or dynamic changes form a completely new scene.

Due to the diverse nature of land features in remote sensing images, such as buildings, vegetation, and water bodies, which vary in shape, size, and spatial distribution, the manual annotation of remote sensing image captioning datasets is labor-intensive and costly. Therefore, research on using existing training data to describe remote sensing images from a wider range of scenarios is of great interest.

Zhou et al. [6] proposed a few-shot remote sensing image captioning framework. It focuses on optimizing the decoder using parameter integration, multi-model integration, and self-distillation techniques for efficient few-shot caption generation. Unlike few-shot learning, the goal of remote sensing image captioning in unseen scenes is to train models that can provide reasonable descriptions of scenes that have not appeared in the caption training set. To achieve this goal, we refer to the human reasoning process when facing remote sensing images of unseen scenes.

Humans can explore rich scenes because they recognize various landforms, urban structures, vegetation types, and water distributions in familiar remote sensing images and, at the same time, relate this visual information to the scene of the overall image, building a system of reasoning from individual ground objects to scene information. This learning process lets us treat individual targets in a scene, such as farmland, buildings, rivers, aircraft, and runways, as attributes of that scene. Humans can not only quickly recognize these targets but also infer the scene in which they are located as a whole, e.g., agricultural land or an airport. Even when confronted with a specific scene that we have not seen before, we can make reasonable conjectures and descriptions based on known combinations of attributes.

Based on these observations, this study proposes SALCap, a scene-attribute-learning-based remote sensing image captioning method. We define a subset of the target objects as scene attributes and build an understanding of the entire scene from the distribution and combination of these attributes, thereby establishing a mapping between attributes and scene information. The method first extracts scene attribute features with a global object scene attribute extractor and then relates the attribute features to scene information through the attribute inference module. The extracted scene information is used in the decoding stage to help generate scene words for unseen scenes. The method extends the descriptive ability of the model to richer scenes and produces reasonable inferences and descriptions for scene images that do not appear in the training set. Consequently, it broadens the scope of applications for the remote sensing image captioning task.

2. Related Studies

Remote sensing image captioning is an important branch of image captioning. At present, most remote sensing image captioning methods fall into four general categories: retrieval-based [7,8], detection-based [9,10], pre-training-based [11,12], and encoder–decoder-based methods [3,13,14].

2.1. Retrieval-Based and Detection-Based Remote Sensing Image Captioning Methods

Hoxha et al. [15] combined caption and remote sensing image retrieval tasks by first using beam search [16] to obtain many sentences, and then they retrieved images, along with their reference captions, that are comparable to the original image. With the development of cross-modal pre-training models, Li et al. [17] proposed a novel approach, called Cross-Modal Retrieval and Semantic Refinement (CRSR), to enhance RSIC by integrating a semantic refinement module with a CLIP-based retrieval model. The disadvantage of retrieval-based methods lies in their dependence on retrieval results, making them difficult to adapt to the description of unseen scenes.

Image captioning based on object detection [18] utilizes the average of the extracted target frame features of Faster R-CNN [19] as region features. This feature can be directly located in the local region in the image, and each local region is directly used for subsequent caption generation. Shi et al. [20] decomposed image descriptions into three key sub-tasks: detecting key objects, analyzing environmental elements, and evaluating landscapes. Addressing multi-scale problems, Li et al. [21] proposed multi-scale methods, including multi-scale attention (MSA) and multi-task attention (MFA), which integrate object-level and scene-level features and use object detection to enhance contextual features. In remote sensing images, the scale, distribution, and quantity of objects (such as buildings, roads, and natural features) often vary significantly. This variability can lead to incomplete or inaccurate feature extraction during the object detection process. For instance, small-scale objects might be overlooked, while large-scale objects might not be fully captured, resulting in a loss of critical information needed for accurate captioning.

Moreover, in complex scenes with multiple types of objects, correctly describing the relationships between different objects is extremely challenging. Traditional object detection methods typically focus on identifying individual objects but struggle to capture the contextual relationships between them. This limitation makes it difficult to generate coherent and accurate captions that reflect the true semantic content of the scene.

2.2. Remote Sensing Image Captioning Methods for Unseen or Novel Scenes

Describing remote sensing images of unseen or novel scenes has received relatively little attention. In the field of natural images, existing captioning methods for unseen objects mainly fall into two categories: object-detection-based and pre-trained-model-based methods. For natural image captioning of novel objects, the method in [22] uses the local features of novel objects to adapt and optimize the model so that it can describe the new target. Due to the significant variation in target sizes in remote sensing images, directly applying Faster R-CNN for object detection results in a large number of detection boxes with substantial overlap and significant background noise within each box. Therefore, object-detection-based methods struggle to adapt to the description of remote sensing images in unseen scenes.

Methods based on pre-trained models mainly rely on large language models (LLMs) [23] and large-scale pre-trained vision–language models (VLMs) [24,25] for implementation. LLaVA [11] is a VLM that leverages LLMs and visual extractors. By learning the mapping from visual modality to linguistic modality, it significantly reduces the training costs and resource requirements. LLaMa Vision [12] likely employs a similar strategy, combining pre-trained visual encoders with language models to generate image descriptions. QWen-VL [26], through large-scale pre-training, high-quality supervised fine-tuning (SFT), and reinforcement learning from human feedback (RLHF), demonstrates emerging capabilities in language understanding, generation, and reasoning. ViECap [27] utilizes entity-aware decoding to generate descriptions in both seen and unseen scenes.

However, these large models usually face some challenges, such as training stability issues, deployment difficulties, and poor model interpretability. In addition, VLMs often rely heavily on carefully designed prompts, and their performance may vary significantly depending on the prompt’s formulation. These models face significant challenges when applied to remotely sensed imagery due to key differences in resolution, spectral information, and background complexity compared to natural imagery, and they suffer from high computational effort and unstable generated results.

2.3. Encoder–Decoder-Based Remote Sensing Image Captioning Methods

Encoder–decoder architectures have gained significant attention in recent years due to their effectiveness in understanding and describing visual content. The multi-mode encoder–decoder [1] is the first remote sensing image captioning model based on the NIC model [13]; it uses a CNN to extract remote sensing image information and then feeds it into an RNN [28] for text generation. Afterward, to better understand the content of the image, the authors of [18,29] introduced the attention mechanism.

Due to the large field of view in remote sensing images, conventional feature extractors cannot effectively represent the relationships between ground objects in the image. The transformer’s multi-head attention [30] addresses this problem. Different attention heads focus on different aspects, thus capturing the correlation between a single feature and multiple other features. Even if some attention heads obtain inaccurate (and therefore redundant) association information, the final weighting module can suppress it, so that the useful associations determine the final attention value. The multi-head attention module is computed as follows:

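$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}$$

$$\mathrm{head}_i = \mathrm{Attention}(Q W_i^{Q}, K W_i^{K}, V W_i^{V})$$

where $\mathrm{MultiHead}$ represents the multi-head attention mechanism; $\mathrm{head}_i$ represents the $i$-th attention head; $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the weight matrices corresponding to the three inputs of the multi-head attention module; and $W^{O}$ is the weight matrix that combines the outputs of the attention heads.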
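As a rough illustration of the multi-head attention computation described in this subsection, the following pure-Python sketch projects the inputs once per head, applies scaled dot-product attention, concatenates the head outputs, and applies a final projection. The helper names (`matmul`, `softmax`, `attention`, `multi_head`) and the toy weights are illustrative assumptions and are not taken from [30] or from the SALCap implementation.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(q k^T / sqrt(d_k)) v."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def multi_head(q, k, v, heads, w_o):
    """heads is a list of (W_q, W_k, W_v) projection triples, one per head."""
    outs = [attention(matmul(q, wq), matmul(k, wk), matmul(v, wv))
            for wq, wk, wv in heads]
    # Concatenate the per-head outputs along the feature axis, then project.
    concat = [[x for out in outs for x in out[i]] for i in range(len(q))]
    return matmul(concat, w_o)

# Toy example: 2 tokens, model width 2, two heads that each keep one feature.
x = [[1.0, 0.0], [0.0, 1.0]]
select_first = [[1.0], [0.0]]    # hypothetical projection keeping feature 0
select_second = [[0.0], [1.0]]   # hypothetical projection keeping feature 1
heads = [(select_first,) * 3, (select_second,) * 3]
w_o = [[1.0, 0.0], [0.0, 1.0]]   # identity output projection
out = multi_head(x, x, x, heads, w_o)
```

In this toy setup, each head attends more strongly to the token that carries its selected feature, which is the sense in which different heads capture different associations.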

For the better identification of targets in remotely sensed images, Meng et al. [31] initially developed the PKG-transformer, integrating a prior knowledge-guided attention (PKA) mechanism to enhance object selection relevance in remote sensing images and achieving good results. However, it fails to make full use of multi-scale features; thus, the generated captions are not comprehensive enough. In order to integrate features from different transformer coding layers, the authors of [4,14] used aggregation strategies to optimize cross-layer features in order to obtain higher quality representations, realizing better performance.

3. Methods

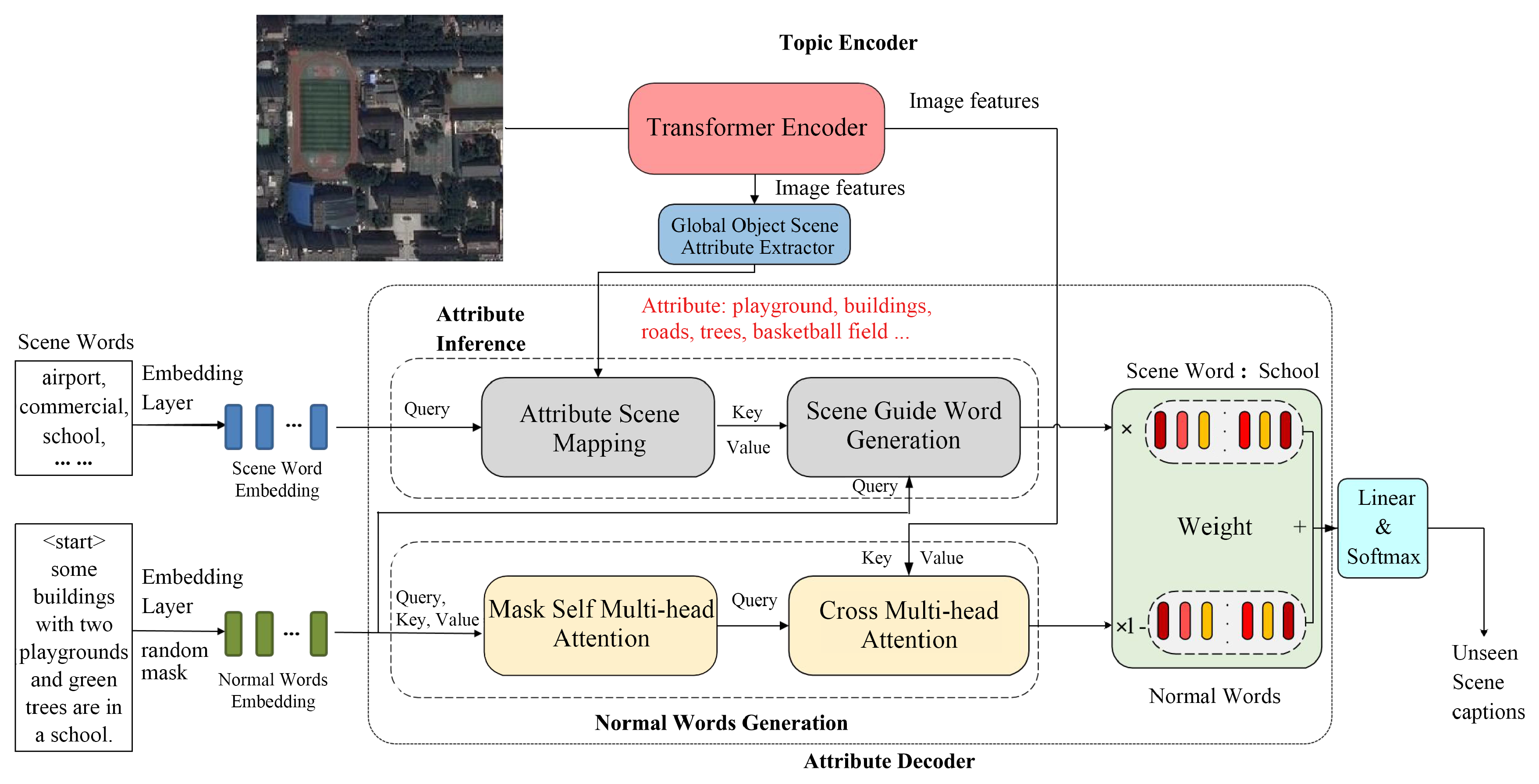

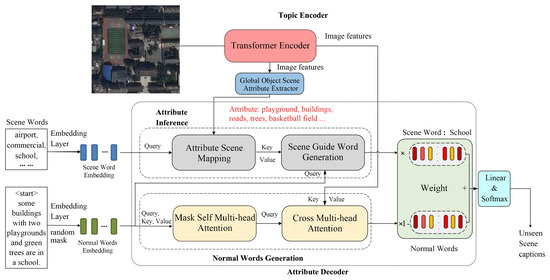

This section introduces the overall structure of SALCap, which is shown in Figure 1. The model adopts an encoder–decoder structure. It first uses the topic encoder [3] to extract image features. Then, the global object scene attribute extractor acquires the attribute information in the image. Finally, the attribute-guided decoder uses the attribute information to infer scene information and uses it to guide caption generation.

Figure 1. Overview of SALCap. First, remote sensing image features are extracted using transformer encoders. Then, the global object scene attribute extractor identifies semantic attribute information for various objects in the image. Subsequently, in the attribute inference module, the attribute-to-scene map first maps attribute information to a scene probability distribution, which is then used to guide the generation of scene-based text. Finally, a weighted combination of descriptions generated based on scenes and descriptions generated via normal procedures produces the final caption for unseen scenes.

3.1. Global Object Scene Attribute Extractor

Considering the diversity of scenes in remote sensing images, covering scenes from different times and environments would require a substantial amount of training data. To achieve accurate scene prediction for unseen scenes, we therefore adopt attribute learning.

Attribute learning starts from the characteristics and attributes of objects: if an object has certain attributes, its category can be inferred. We treat object features in a scene as semantic properties of the scene and use them as attribute words, e.g., factories and storage tanks. Attribute words represent semantic features localized in the image, i.e., the object features of specific regions. The distribution of attribute words is also crucial, since it reflects the relationships between objects and contributes to the overall representation of the scene. For example, a scene described by “pond” and “trees” may be considered a natural scene rather than an industrial scene. This distinction is vital for the accurate representation of the scene’s semantic content. Therefore, we introduce the global object scene attribute extractor, which aims to recognize the attributes of objects and their distributions in a scene. By utilizing the underlying semantic attributes of the scene, the model is better able to handle a variety of scenes, including those that may be considered “unseen”.

The scene category of the image is used as the scene word, such as “industrial area” or “airport”, and it offers a broad categorization of the image’s content, providing a general idea of the scene’s type without delving into specific details. The normal words refer to any words found in captions or descriptions that may not have a direct correlation with the scene’s attributes or categories. They can be descriptive but lack the specificity needed for detailed scene inference. This includes common verbs, adjectives, and even nouns (e.g., some, many, green, red, etc.). They are not significantly helpful in identifying the scene’s type or its key features.

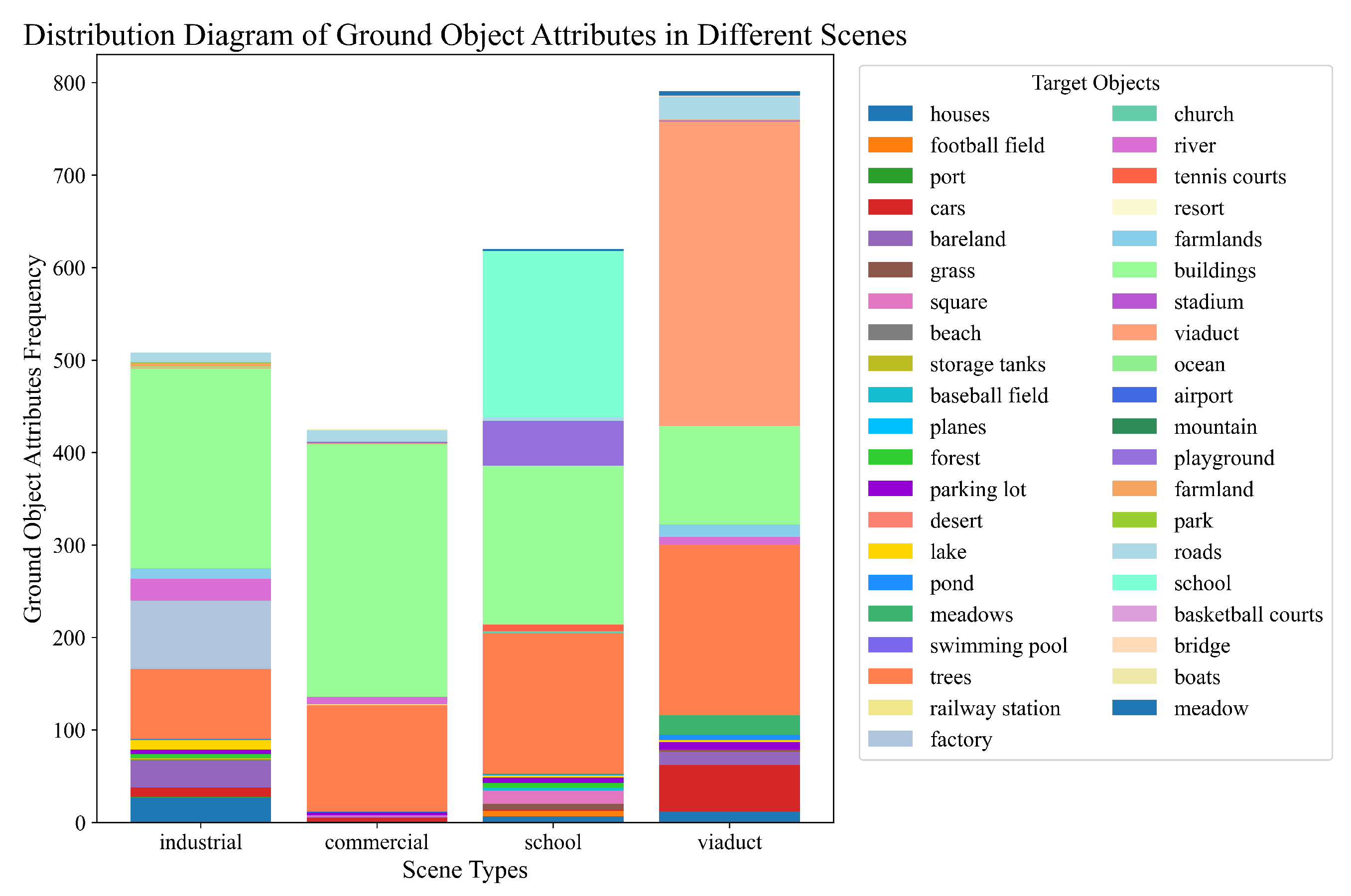

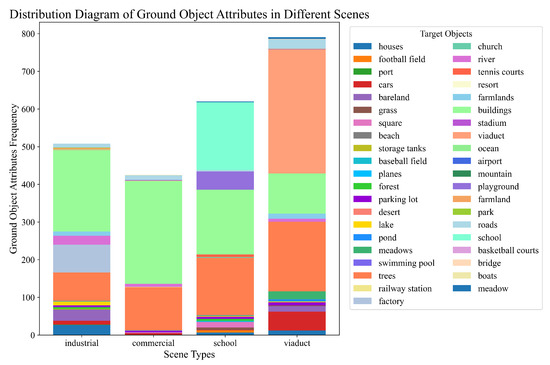

The distribution of ground object attributes varies across different scenes, as shown in Figure 2. It reveals significant differences in object attributes in various types of scenes. In viaduct scenes, roads and bridges are the predominant features, while attributes such as buildings are relatively rare. Industrial areas are characterized mainly by buildings and factories, reflecting their functions in production and storage. Commercial areas are predominantly composed of buildings, with a relatively limited variety with respect to ground objects. In contrast, school scenes not only include a significant proportion of buildings but also feature large areas of playgrounds and grass. Through the combination and association of specific attributes, a mapping between attributes and scene information can be established. In this case, even if a new scene appears, since the objects in the scene have all appeared before, we only need to add the association between previous objects and the new scene instead of training a new scene classifier from the perspective of the features of the image.

Figure 2. Distribution diagram of ground object attributes in different scenes.

To simulate this inference process, we first need to extract the attribute features of the scene from the image. Considering the large size difference of different feature targets in remote sensing images, the use of global information allows us to ignore the specific size and details of different feature targets, which helps the system in understanding and inferring the meaning of the entire scene. Moreover, compared to detailed local feature extraction, extracting global information can significantly reduce the amount of data processing and facilitate the expansion of subsequent tasks. Therefore, we chose to adopt a multi-label classification method to obtain the attribute information of the image instead of the corresponding local image features.

To ensure that the scene attribute extractor extracts object feature information that is closely related to the scene and usable by the subsequent decoder, we pre-train it on a multi-label classification sub-task. Specifically, we used the Stanford part-of-speech tagger to tag each word in the captions, selected the nouns with a word frequency greater than 50 as candidate attribute words, and then deleted nouns that had no practical significance. The remaining 40 words form the attribute corpus, as shown in Figure 3. In the RSICD dataset, each image corresponds to five captions. When a word in the attribute corpus appears in an image’s captions, we treat that word as an attribute label of the image.
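The label construction described above can be sketched as follows. This is a minimal illustration under stated assumptions: the captions are assumed to be already tagged by an external part-of-speech tagger with Penn Treebank style tags, the function names `build_attribute_vocab` and `attribute_labels` are hypothetical, and no lemmatization is applied, so singular and plural forms are counted separately.

```python
from collections import Counter

def build_attribute_vocab(tagged_captions, min_count=50, stop_nouns=()):
    """Keep nouns whose corpus frequency exceeds min_count.

    tagged_captions: list of captions, each a list of (word, pos_tag) pairs,
    assumed to come from an external part-of-speech tagger.
    stop_nouns: nouns removed by hand because they carry no scene meaning.
    """
    counts = Counter(
        word.lower()
        for caption in tagged_captions
        for word, tag in caption
        if tag.startswith("NN")  # Penn Treebank noun tags: NN, NNS, ...
    )
    return sorted(w for w, c in counts.items()
                  if c > min_count and w not in stop_nouns)

def attribute_labels(captions, vocab):
    """Multi-hot label: 1 if the attribute word occurs in any caption of the image."""
    words = {w.lower() for caption in captions for w in caption.split()}
    return [1 if attr in words else 0 for attr in vocab]

# Toy usage with a lowered threshold so the example is self-contained.
tagged = [
    [("many", "JJ"), ("buildings", "NNS"), ("near", "IN"), ("a", "DT"), ("river", "NN")],
    [("a", "DT"), ("river", "NN"), ("and", "CC"), ("some", "DT"), ("place", "NN")],
]
vocab = build_attribute_vocab(tagged, min_count=0, stop_nouns={"place"})
labels = attribute_labels(
    ["a river runs through farmland", "green trees near a river"], vocab
)
```

With the threshold of 50 and the manual filtering reported in the text, the resulting vocabulary would contain the 40 attribute words, and each image would receive a 40-dimensional multi-hot target for the multi-label classification sub-task.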

Figure 3. Attribute words.

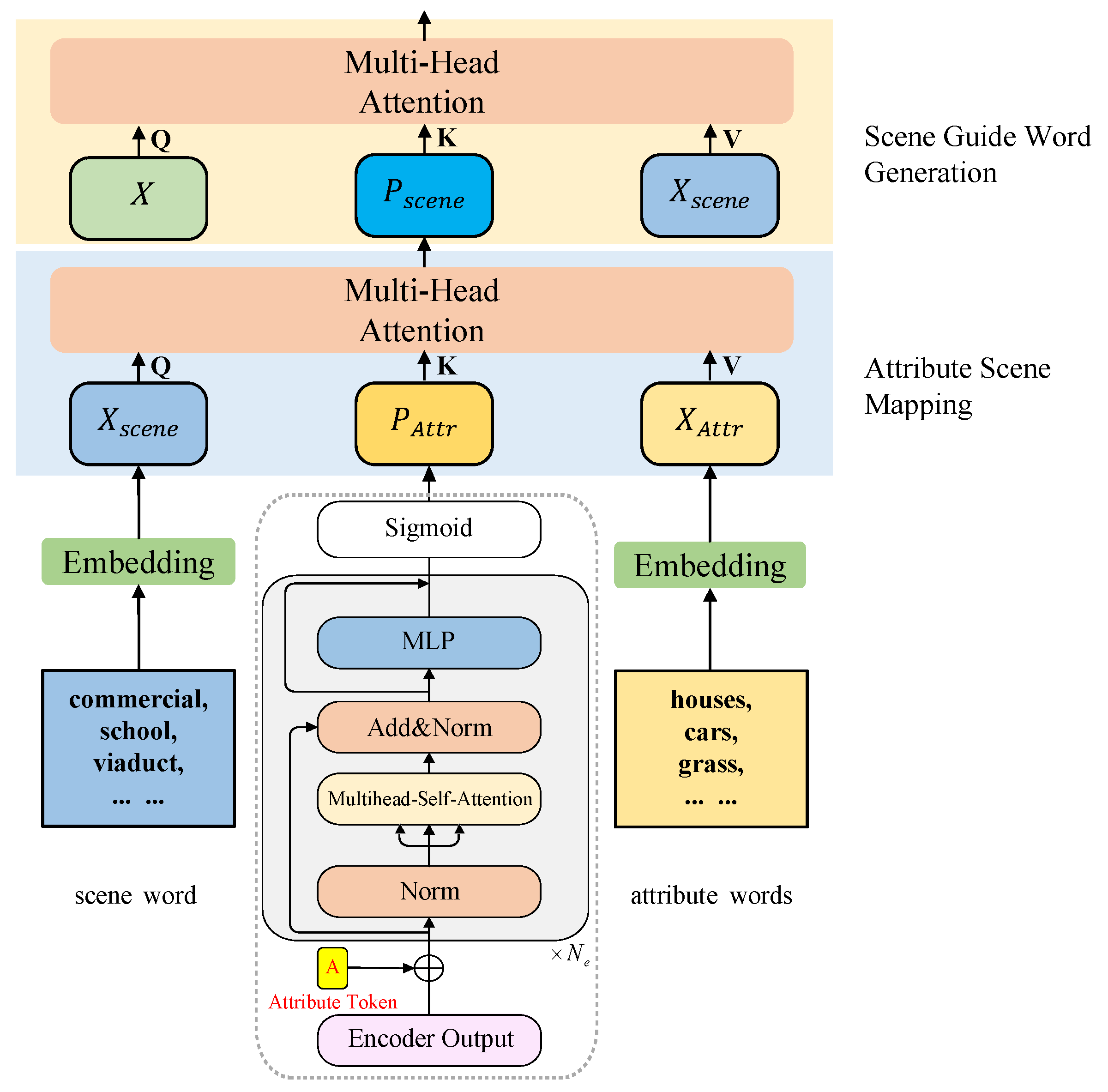

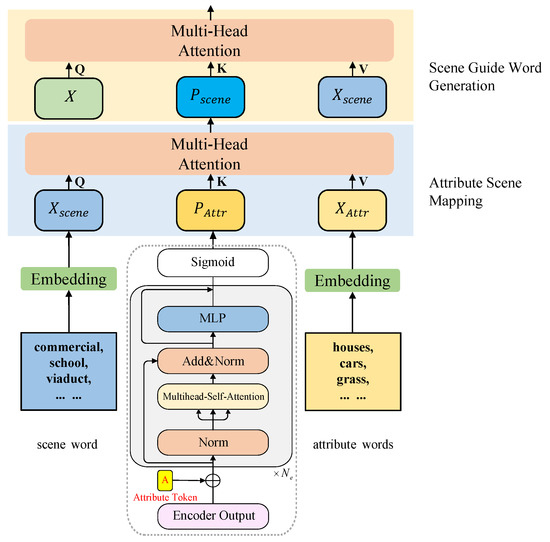

Our scene attribute extractor adopts transformer as the main framework. Its structure is shown in the dashed box in Figure 4. The attribute token A is derived from attribute labels through an embedding layer. The input to the encoder consists of image-encoded features, which are associated with the attribute token via a multi-head self-attention mechanism; finally, the probability distribution corresponding to each attribute is obtained.

Figure 4. Global object scene attribute extractor and attribute inference module.

After pre-training on the sub-task, the scene attribute extractor predicts a probability for each of the 40 attribute words for each image. In the subsequent decoder, this attribute probability distribution is fed into the attribute inference module to generate the scene word.

3.2. Attribute-Guided Decoder

After obtaining the attributes of the image, the decoder must infer the corresponding scene words from the combination of attributes and ensure that these scene words are reflected in the final caption, so that the unseen scene is described accurately.

Note that caption generation for unseen scenes has the following characteristic: the unseen scene and its corresponding captions do not appear in the training set, but the words that name the unseen scene must already exist in the corpus, because the model needs them to describe the scene. The model must also be able to obtain the unseen scene’s information; if this information never reaches the model, it cannot be used.

To establish the mapping between attributes and scenes, we must ensure that it can be learned from the training set. The method is divided into two steps: (1) the model learns the mapping between attributes and scenes through training, which is supported by the training set; (2) the obtained scene information is used to generate captions, which is not supported by the training set. Therefore, we need to use the limited training set to ensure, at the level of sentence composition, that the captions generated by the model are guided by the scene information obtained in the first step.

Inspired by this, we can express the scene’s information in an image by obtaining the probability distribution of scene words. We designed an attribute inference module, as shown in Figure 4, which consists of two parts: attribute scene mapping and scene-guided word generation. The inputs come from the encoding of scene words and attribute words, as well as the output of the global object scene attribute extractor.

The attribute scene mapping module infers scene probabilities from the attribute distribution, while the scene-guided word generation module transforms these probabilities into scene text descriptions. First, the attribute word embedding vectors and the scene word embedding vectors are set up; they are obtained by passing the attribute words and the scene words through an embedding layer. Then, the attribute scene mapping module uses attention to construct a scene word probability distribution from the attribute word distribution produced by the global object scene attribute extractor; this distribution characterizes the scene features of the image. Finally, the scene-guided word generation module uses attention again to combine the scene word probability distribution with the sentence embedding X and generate caption text related to the image scene. The output of the attribute inference module can be written as follows:

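$$\hat{P}_s = \mathrm{Attention}(E_s, E_a, P_a), \qquad O_{attr} = \mathrm{Attention}(X, E_s, \hat{P}_s)$$

where $P_a$ is the attribute probability from the global object scene attribute extractor, $\hat{P}_s$ is the prediction of scene probability, $E_s$ and $E_a$ are the word embeddings of the scene and attribute, $X$ is the sentence embedding, and $O_{attr}$ is the output of the attribute inference module.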

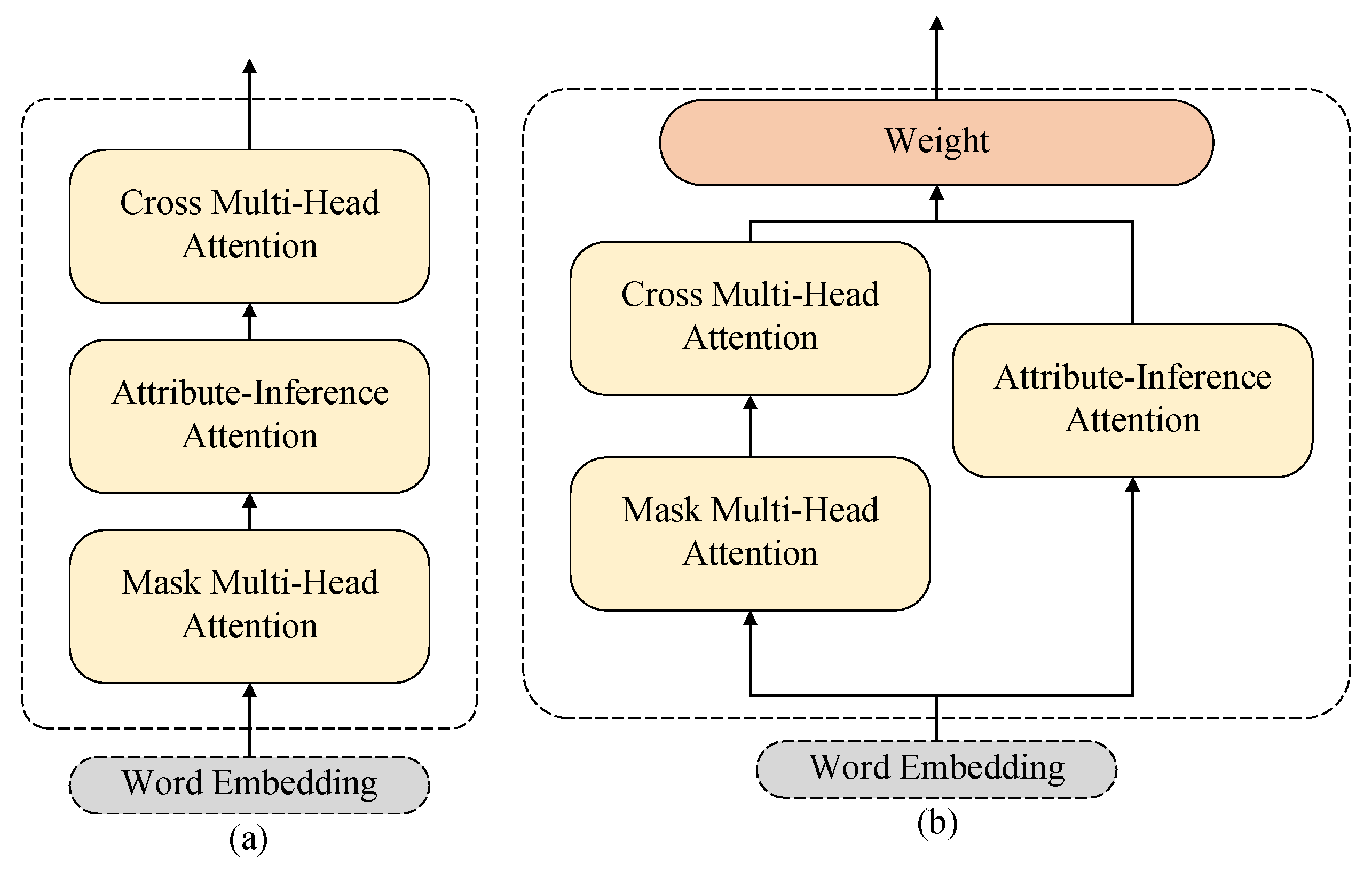

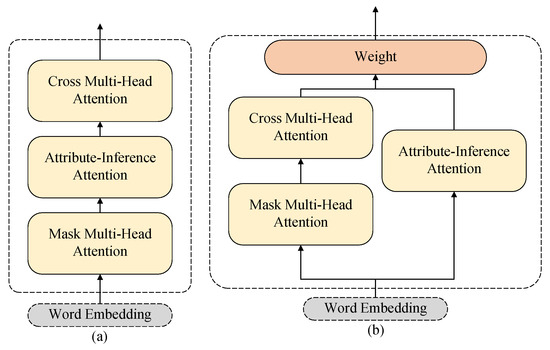

After setting up the attribute inference module, it needs to be combined with the original decoder. We considered two ways of combining them, serial and parallel, as shown in Figure 5. Preliminary experiments (DCA in Table 1, Table 2 and Table 3) revealed that the serial design in Figure 5a does not work well. By design, the attribute inference module is mainly intended to generate specific scene words and should not have a substantial impact on other parts of the sentence. Therefore, the module is separated from the conventional decoder, as shown in Figure 5b, and an additional weighting module balances the attention outputs of the two parts. The conventional decoder generates the main components of the sentence, while the attribute inference module mainly generates specific scene words; the weights of the two parts sum to 1. The weighting module learns the composition of sentences: at positions where scene words should be generated, the weight is concentrated on the attribute inference module, while at positions of regular words, the weight is concentrated on the masked attention and cross-modal attention parts. The weight is calculated as follows:

$$w = \mathrm{Sigmoid}(\mathrm{FC}(X))$$

where $X$ is the sentence word embedding and $\mathrm{FC}$ is the fully connected layer, converting the embedding dimension to 1. The final output of the attribute inference decoder is as follows:

$$O = w \cdot O_{attr} + (1 - w) \cdot O_{dec}$$

where $O_{attr}$ is the output of the attribute inference module in generating scene words such as “school” and $O_{dec}$ is the output of the conventional decoder for generating the main parts in the sentence.
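The parallel combination can be illustrated with a small sketch for a single time step. It assumes that the scalar weight is obtained by applying a sigmoid to the output of the fully connected layer, which is one natural way to keep the weight between 0 and 1 so that the two branch weights sum to 1; the function names and all numbers are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def gate_weight(x_t, fc_weight, fc_bias=0.0):
    """Map one word embedding to a scalar weight in (0, 1) via a linear layer."""
    return sigmoid(sum(x * w for x, w in zip(x_t, fc_weight)) + fc_bias)

def combine(o_attr, o_dec, w):
    """Convex combination: the two branch weights w and 1 - w sum to 1."""
    return [w * a + (1.0 - w) * d for a, d in zip(o_attr, o_dec)]

# Toy example for one time step (all numbers are made up).
x_t = [0.5, -1.0, 2.0]          # sentence word embedding at this position
fc_weight = [1.0, 0.0, 1.0]     # hypothetical fully connected layer weights
w = gate_weight(x_t, fc_weight)
mixed = combine([1.0, 0.0], [0.0, 1.0], w)
```

A weight close to 1 lets the attribute inference branch dominate, as would be expected at a scene-word position, while a weight close to 0 leaves the conventional decoder output almost unchanged.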

Figure 5. Regular decoder and two attribute decoders with different connections: (a) serial combination; (b) parallel combination.

3.3. Attribute Loss Function

In the context of image captioning, maintaining a balance between the diversity and accuracy of generated captions is crucial. In MTT [3], the masked cross-entropy loss function is proposed, which can greatly improve the diversity of caption generation without losing the accuracy of caption generation. Therefore, we still use masked cross-entropy as the basic loss function for the caption generation of remote sensing images in unseen scenes:

$$\mathcal{L}_{mce}(\theta) = -\sum_{t=1}^{L} \log p_{\theta}\left(y_t \mid \tilde{y}_{1:t-1}\right)$$

where $y$ is a sentence’s ground truth, $\theta$ is the model parameter, $\tilde{y}$ is the sentence that is randomly masked, and $L$ is the length of the sentence. For each predicted time step $t$, the probability of the word $y_t$ is obtained from the randomly masked words that precede that time step.

However, this loss function has not been specifically optimized for the description of unseen scenes. A new loss function is needed to ensure that the decoder receives guidance from the attribute inference module.

Firstly, to ensure that the correct scene distribution can be obtained from attribute mapping, a classification cross-entropy loss value is set to constrain the attribute mapping module in order to obtain scene information:

$$\mathcal{L}_{s} = -\sum_{i=1}^{C} p_i \log \hat{p}_i$$

where $C$ is the number of scene categories, $p_i$ is the probability of the $i$-th scene obtained from the encoder, and $\hat{p}_i$ is the probability of the $i$-th scene obtained based on attribute association.

In order to ensure that scene information can be reflected in the sentence, the weighted information of attribute attention output is used during classification. As it is weighted information, this information can accurately represent the role of attribute inference in the sentence:

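$$\mathcal{L}_{w} = -\sum_{i=1}^{C} p_i \log \tilde{p}_i, \qquad \tilde{p} = \sum_{t=1}^{T} w_t\, O_{attr}^{(t)}$$

where $\tilde{p}$ is the probability distribution of the scene obtained by weighting and summing the attribute inference module, $T$ is the length of the sentence, $w_t$ is the weight of the attribute inference module at time step $t$, and $O_{attr}^{(t)}$ is the output of the attribute inference module at time step $t$. As in the classification loss, $C$ is the number of scene categories and $p_i$ is the probability of the $i$-th scene obtained from the encoder.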

The final attribute loss function is the weighted sum of three loss functions:

$$\mathcal{L} = \mathcal{L}_{mce} + \lambda_1 \mathcal{L}_{s} + \lambda_2 \mathcal{L}_{w}$$

where $\lambda_1$ and $\lambda_2$ are the two loss weights corresponding to the attention part of the attribute, both of which are set to 0.8 in the experiment. $\mathcal{L}_{mce}$ is the masked cross-entropy loss, which ensures the generation of conventional text information. $\mathcal{L}_{s}$ ensures that the mapping of attributes to the scene is correct, and $\mathcal{L}_{w}$ ensures that the information derived from attribute reasoning is captured correctly by the decoder.
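To make the weighted sum of the three loss terms concrete, the sketch below computes two cross-entropy terms over a toy scene distribution and adds them to a caption loss. It assumes that the masked cross-entropy term enters with weight 1 and that the two scene-related terms share the reported weight of 0.8; the function names and all numbers are illustrative only.

```python
import math

def cross_entropy(target, predicted, eps=1e-12):
    """-sum_i target_i * log(predicted_i) for two distributions over scenes."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))

def attribute_loss(l_mce, l_scene, l_weighted, lam1=0.8, lam2=0.8):
    """Caption loss plus the two weighted scene-related terms."""
    return l_mce + lam1 * l_scene + lam2 * l_weighted

# Toy example with three scene categories (numbers are made up).
p_true = [0.0, 1.0, 0.0]        # reference scene distribution
p_attr = [0.1, 0.8, 0.1]        # scene distribution from attribute mapping
p_weighted = [0.2, 0.6, 0.2]    # scene distribution from the weighted decoder output
l_scene = cross_entropy(p_true, p_attr)
l_weighted = cross_entropy(p_true, p_weighted)
total = attribute_loss(1.5, l_scene, l_weighted)  # 1.5 stands in for the caption loss
```

Raising either weight makes scene errors more costly relative to ordinary word errors, which matches the trade-off between scene-word generation and sentence integrity discussed in the experimental settings.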

4. Experiments

4.1. Dataset

To highlight the adaptability of the model to multi-scene images, the dataset should include as many scenes as possible. Because we needed to set up unseen scenes, we could not directly use the standard partitions of the three original standard datasets. In addition, the UCM and Sydney datasets [1] have few scene categories overall, which is not conducive to learning the attribute inference part or to dividing the test set. Therefore, the basic dataset is derived from the RSICD dataset [32], which includes 10,921 images from 30 categories, each with a size of 224 × 224. It is currently one of the most widely used datasets in the field of remote sensing image captioning. To reflect the characteristics of captioning unseen scenes, we selected two scenes, schools and viaducts, as unseen test scenes and completely removed the data of these two categories from the training set. For the other scenes, the test partition still follows the publicly available data split.

Although the data of the two unseen scenes are removed from the caption training set, the model still needs access to the unseen scene information. Therefore, when pre-training the encoder and the scene attribute extractor, we did not remove the images of the two unseen scenes; instead, we used a random dataset partition over all scenes.
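The caption-training split can be sketched as a simple filter. The sketch assumes that every image of an unseen scene is moved to the test set, that the remaining images keep their publicly available split, and that validation images are ignored for brevity; the record layout and the function name `split_unseen` are hypothetical.

```python
def split_unseen(samples, unseen_scenes, official_split):
    """Build caption train/test sets with the unseen scenes held out.

    samples: list of dicts with 'image', 'scene', and 'captions' keys.
    official_split: dict mapping image name -> 'train' | 'val' | 'test'.
    """
    unseen = set(unseen_scenes)
    train, test = [], []
    for s in samples:
        if s["scene"] in unseen:
            test.append(s)                 # never used for caption training
        elif official_split[s["image"]] == "train":
            train.append(s)
        elif official_split[s["image"]] == "test":
            test.append(s)
    return train, test

# Toy records (file names and captions are made up).
samples = [
    {"image": "school_1.jpg", "scene": "school", "captions": ["a school with a playground"]},
    {"image": "park_1.jpg", "scene": "park", "captions": ["a park with many trees"]},
    {"image": "park_2.jpg", "scene": "park", "captions": ["a pond in the park"]},
    {"image": "viaduct_1.jpg", "scene": "viaduct", "captions": ["a viaduct over a road"]},
]
official_split = {
    "school_1.jpg": "train",
    "park_1.jpg": "train",
    "park_2.jpg": "test",
    "viaduct_1.jpg": "val",
}
train, test = split_unseen(samples, ["school", "viaduct"], official_split)
```

Pre-training of the encoder and the scene attribute extractor would not use this filter, since the text states that a random partition over all scenes is used there.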

In addition to the classic datasets mentioned above, in recent years, there have also been remote sensing image description datasets with varying scales and diversity, such as the NWPU-Captions dataset [33]. It is constructed based on the NWPU-RESISC45 dataset [34], one of the largest benchmark datasets for remote sensing classification tasks. The dataset includes 31,500 images collected from Google Earth, covering 45 scene classes, with each class containing 700 images. The images are uniformly sized at 256 × 256 pixels. For this dataset, we selected three scenarios as unseen scenes, namely, snowberg, park, and farmland, and processed them in the same manner as the RSICD dataset.

4.2. Evaluation Metrics

To assess the performance of our image captioning model, we utilized a suite of widely accepted metrics: BLEU [35], ROUGE-L [36], METEOR [37], and CIDEr [38].

BLEU: derived from machine translation, BLEU quantifies the overlap between generated and reference captions through n-gram matching, with sub-scores from unigrams to four-grams (BLEU-1 to BLEU-4), assessing caption accuracy and fluency.

ROUGE-L: focusing on recall, ROUGE-L identifies the longest common subsequence between captions, highlighting the retention of key reference information in the generated text.

METEOR: incorporating stemming, synonyms, and paraphrasing, METEOR employs a recall-weighted harmonic mean of unigram precision and recall, offering a nuanced measure of caption quality that accommodates linguistic variations.

CIDEr: prioritizing semantic richness, CIDEr weighs n-gram similarity with a focus on less common, more informative words, thus emphasizing the essence of the caption over generic terms.

For any of the above metrics, a higher score indicates better performance. These metrics collectively provide a robust framework for evaluating the quality, accuracy, and diversity of our model’s image captioning capabilities.

4.3. Experimental Setting

The model was trained in a Python 3.7 environment using PyTorch 1.6 on an NVIDIA RTX 2080 GPU with 8 GB of memory. During training, the batch size was set to five, the Adam optimizer was used, and the initial learning rate was set to 3 × 10⁻⁵ and decayed to 80% of its value every three epochs. The model was trained for 20 epochs, and the masking probability in the masked cross-entropy was 30%. In the attribute loss function module, the weight ranges from 0 to 1. When the weight is small (0 to 0.5), the attribute reasoning module plays only a minor role in the model, which then cannot easily generate the attribute words of unseen scenes. When the weight is large (0.9 to 1), the model pays too much attention to generating unseen scene words and neglects the integrity of the sentences. Through experiments, we found that the best results are achieved with a weight of 0.8. In the caption-generation stage, beam search with a beam size of three was applied to improve the accuracy of caption generation.
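The learning-rate decay described above can be written out as a short sketch. The function name is hypothetical, and the code only reproduces the stated schedule: an initial rate of 3 × 10⁻⁵ multiplied by 0.8 every three epochs over 20 epochs. It is the same staircase that PyTorch's `torch.optim.lr_scheduler.StepLR` produces with `step_size=3` and `gamma=0.8`, although whether the authors used that scheduler is an assumption.

```python
def learning_rate(epoch, base_lr=3e-5, decay=0.8, step=3):
    """Staircase decay: multiply the rate by `decay` once every `step` epochs."""
    return base_lr * decay ** (epoch // step)


# Learning rate used in each of the 20 training epochs (epochs counted from 0).
schedule = [learning_rate(epoch) for epoch in range(20)]
```

Under this schedule the rate has been reduced six times by the last epoch, ending at roughly a quarter of its initial value.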

4.4. Experimental Results

Because little research exists on generating captions for unseen scenes in remote sensing images, results on unseen-scene datasets are not available for most existing models. We therefore compared against a selection of models with publicly available code. In addition, several variants of our model were used as comparison models. These comparison models are introduced as follows:

ConZIC [39] is a training-free method based on frozen pre-trained large models, involving no training process.

DCA (direct connect attribute reference): this refers to the direct connection of the attribute inference module to the decoder of the model, as shown in Figure 5b.

AS (attribute reference without scene): this refers to using the extracted attribute information directly for caption-generation guidance—rather than establishing a mapping of attributes to scene information—and then using specific scene information for guidance.

Table 1.

The result comparisons of different models relative to the RSICD dataset.

| Method | Bleu-1 | Bleu-2 | Bleu-3 | Bleu-4 | METEOR | ROUGE | CIDEr |
|---|---|---|---|---|---|---|---|
| MLAT [4] | 62.94 | 48.89 | 39.45 | 32.41 | 29.83 | 54.06 | 165.5 |
| SCAMET [40] | 47.00 | 31.40 | 22.56 | 16.89 | 12.61 | 34.83 | 78.1 |
| HCNet [14] | 64.50 | 50.58 | 41.39 | 34.51 | 37.12 | 56.74 | 176.8 |
| ViECap [27] | 62.96 | 48.38 | 38.75 | 31.80 | 31.84 | 52.13 | 159.5 |
| DeCap [41] | 50.98 | 34.57 | 25.09 | 18.83 | 22.87 | 40.74 | 99.03 |
| ConZIC * [39] | 10.88 | 2.60 | 0.29 | 0.00 | 5.65 | 10.39 | 2.18 |
| MTT [3] | 64.58 | 51.07 | 41.90 | 34.90 | 30.84 | 58.02 | 194.7 |
| DCA | 66.64 | 53.05 | 43.84 | 36.86 | 30.91 | 57.46 | 194.6 |
| AS | 66.77 | 53.06 | 43.49 | 36.30 | 30.18 | 55.30 | 188.9 |
| SALCap (ours) | 68.64 | 55.22 | 45.82 | 38.65 | 31.05 | 57.70 | 200.1 |

* means no training has been conducted.

Table 1 compares the results of different models on the RSICD dataset. Compared with the traditional data division, holding out two unseen scenes increases the amount of test data by 70% and decreases the amount of training data by 10%, which lowers the metrics overall. Even so, our model performs well on several evaluation metrics. It achieves the highest scores on all four Bleu metrics, and its CIDEr score of 200.1 is the highest in the table, ahead of MTT (194.7). Its ROUGE score is second only to that of MTT, and its METEOR score ranks third, behind HCNet and ViECap.

In order to further illustrate the effectiveness of the model on unseen scene images, we conducted separate tests on the images of the “viaduct” and “school” scenes, and the results are shown in Table 2.

Table 2.

The result comparison of different models relative to only unseen scenes in the RSICD dataset.

| Method | Bleu-1 | Bleu-2 | Bleu-3 | Bleu-4 | METEOR | ROUGE | CIDEr |
|---|---|---|---|---|---|---|---|
| MLAT [4] | 49.92 | 33.60 | 24.44 | 18.33 | 22.27 | 39.59 | 40.59 |
| SCAMET [40] | 40.74 | 23.76 | 15.16 | 9.89 | 11.23 | 29.06 | 27.84 |
| HCNet [14] | 50.84 | 33.52 | 25.66 | 19.68 | 23.10 | 43.05 | 35.68 |
| ViECap [27] | 50.93 | 33.02 | 22.94 | 16.58 | 20.30 | 38.93 | 39.96 |
| DeCap [41] | 41.64 | 21.65 | 12.20 | 6.86 | 16.72 | 27.77 | 26.68 |
| MTT [3] | 49.42 | 32.21 | 24.61 | 18.07 | 21.05 | 36.73 | 39.41 |
| DCA | 50.28 | 31.54 | 23.82 | 18.21 | 20.46 | 36.74 | 43.52 |
| AS | 50.44 | 31.28 | 22.76 | 17.80 | 20.17 | 35.55 | 42.36 |
| SALCap (ours) | 51.30 | 33.88 | 24.65 | 18.87 | 21.11 | 37.86 | 47.76 |

The decrease in metrics is even more pronounced in Table 2, an independent evaluation that contains only images of unseen scenes. Nevertheless, our attribute-learning model obtained the best Bleu-1, Bleu-2, and CIDEr scores. Our approach does not perform as well as HCNet on the BLEU-3, BLEU-4, METEOR, and ROUGE metrics for unseen scenes; however, because these metrics measure the similarity between the generated text and the reference text, they may not adequately capture whether the generated text names the correct scene. Therefore, we analyzed the text generated by the model to determine whether it contained the correct scene words for unseen scenes such as “school” and “viaduct”. Specifically, we counted how often these scene words appeared in the descriptions generated by the model. Based on these statistics, we calculated the model’s accuracy, recall, and F1 score to evaluate how accurately it uses scene words. The scores obtained were 0.67 for accuracy, 0.23 for recall, and 0.34 for the F1 score. In contrast, the comparison algorithms rarely generated descriptions containing the correct scene words, and most of their scores were 0.
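One way such scene-word statistics could be computed is sketched below. The counting rule is an assumption, since the text does not spell out the exact procedure: a caption counts as a hit when it contains the image's true scene word, as a false positive when it contains another unseen scene word, and as a miss when the true word is absent. The captions are made up. The reported F1 of 0.34 is consistent with the harmonic mean of 0.67 and 0.23, that is, with the first score acting as precision.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def scene_word_scores(samples, scene_words):
    """samples: (generated_caption, true_scene) pairs; returns (P, R, F1)."""
    tp = fp = fn = 0
    for caption, true_scene in samples:
        tokens = set(caption.lower().split())
        for word in scene_words:
            predicted = word in tokens
            actual = word == true_scene
            if predicted and actual:
                tp += 1
            elif predicted:
                fp += 1
            elif actual:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


# Hypothetical generated captions paired with the true scene of each image.
samples = [
    ("a viaduct with many cars crosses the green trees", "viaduct"),
    ("many buildings and green trees are near a road", "viaduct"),
    ("a school with a playground is near some buildings", "school"),
    ("a viaduct is near a large building", "school"),
]
precision, recall, f1 = scene_word_scores(samples, ["viaduct", "school"])

# Consistency check on the scores reported in the text.
reported_f1 = f1_score(0.67, 0.23)
```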

Table 3 and Table 4 compare the results of different models on the NWPU dataset for all scenes and for unseen scenes only, respectively. On the entire dataset (Table 3), our model obtained the best Bleu-1 and Bleu-2 scores and remained competitive on the other metrics. On the unseen scenes (Table 4), our model outperformed the comparison algorithms on all metrics, with clear advantages on key metrics such as Bleu-4, METEOR, and ROUGE. This performance indicates that our model copes well with unseen data and generalizes well.

Table 3.

The result comparison of different models relative to the NWPU dataset.

| Method | Bleu-1 | Bleu-2 | Bleu-3 | Bleu-4 | METEOR | ROUGE | CIDEr |
|---|---|---|---|---|---|---|---|
| MLAT [4] | 77.55 | 65.49 | 57.84 | 52.30 | 33.02 | 63.98 | 129.82 |
| SCAMET [40] | 55.85 | 38.04 | 26.46 | 18.91 | 18.49 | 41.34 | 24.96 |
| HCNet [14] | 78.49 | 64.90 | 56.48 | 52.06 | 30.53 | 64.09 | 131.06 |
| ViECap [27] | 56.73 | 38.81 | 28.17 | 21.62 | 20.37 | 42.08 | 49.86 |
| DeCap [41] | 68.80 | 50.50 | 37.70 | 28.30 | 23.90 | 48.90 | 63.60 |
| MTT [3] | 78.75 | 65.71 | 56.88 | 50.13 | 30.87 | 62.50 | 117.74 |
| DCA | 73.51 | 58.58 | 46.74 | 37.96 | 24.96 | 54.13 | 88.95 |
| AS | 77.86 | 65.03 | 56.32 | 49.70 | 31.28 | 62.85 | 120.02 |
| SALCap (ours) | 79.25 | 65.92 | 56.88 | 49.94 | 30.99 | 63.17 | 118.04 |

Table 4.

The result comparison of different models in only unseen scenes relative to the NWPU dataset.

| Method | Bleu-1 | Bleu-2 | Bleu-3 | Bleu-4 | METEOR | ROUGE | CIDEr |
|---|---|---|---|---|---|---|---|
| MLAT [4] | 46.20 | 24.10 | 12.89 | 6.24 | 10.24 | 29.50 | 3.68 |
| SCAMET [40] | 54.40 | 36.20 | 23.70 | 15.00 | 17.10 | 39.40 | 7.20 |
| HCNet [14] | 60.57 | 40.70 | 30.95 | 24.75 | 19.23 | 41.79 | 14.51 |
| ViECap [27] | 47.94 | 26.38 | 14.98 | 7.91 | 14.84 | 32.08 | 6.62 |
| DeCap [41] | 60.68 | 39.26 | 26.14 | 16.69 | 18.70 | 41.19 | 9.88 |
| MTT [3] | 56.87 | 37.49 | 24.57 | 14.34 | 15.02 | 41.98 | 11.59 |
| DCA | 59.53 | 39.53 | 25.37 | 15.87 | 16.47 | 40.45 | 11.60 |
| AS | 62.91 | 42.94 | 32.17 | 24.95 | 19.12 | 45.23 | 12.86 |
| SALCap (ours) | 66.38 | 46.17 | 35.28 | 27.70 | 20.02 | 48.21 | 14.64 |

4.5. Discussion and Analysis

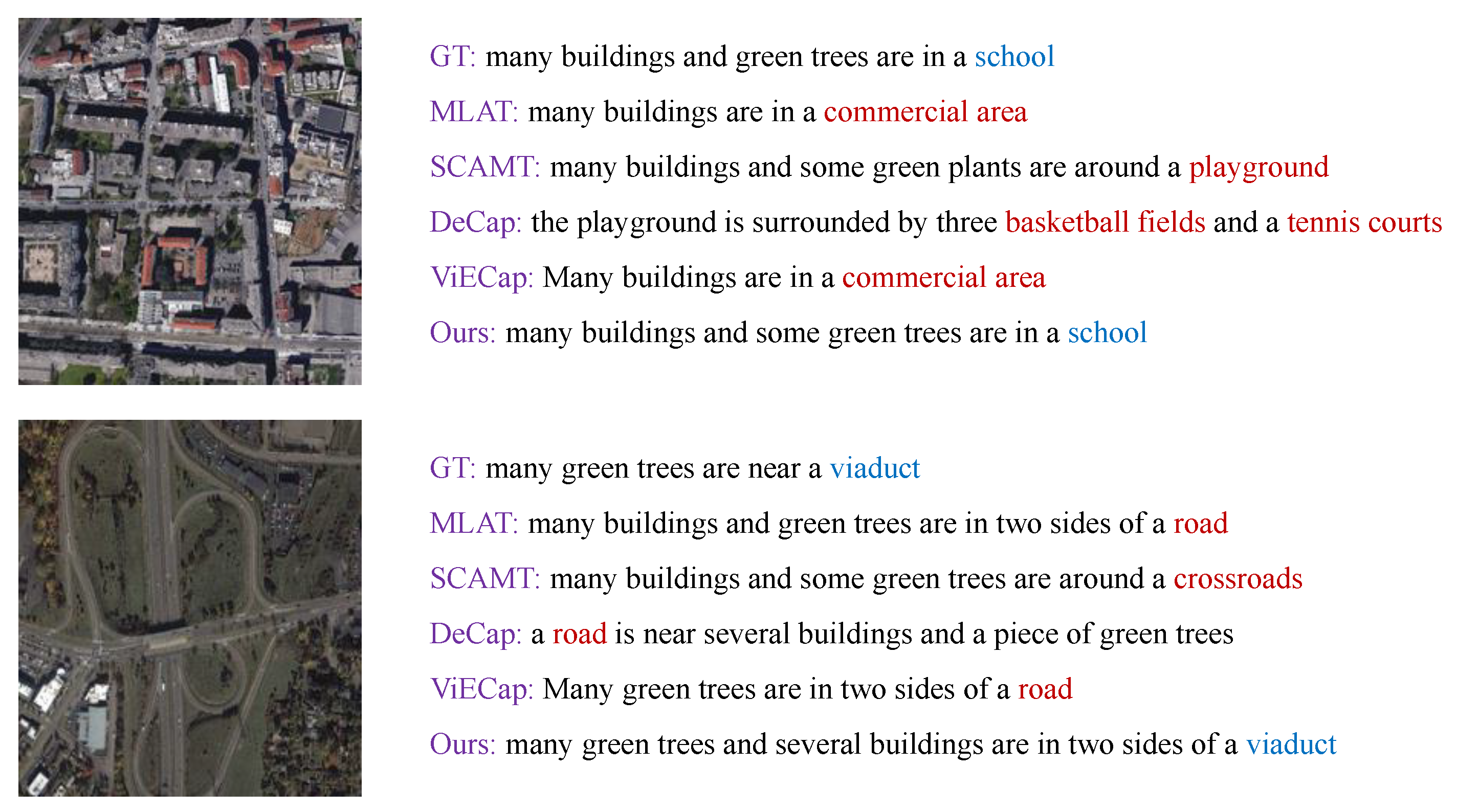

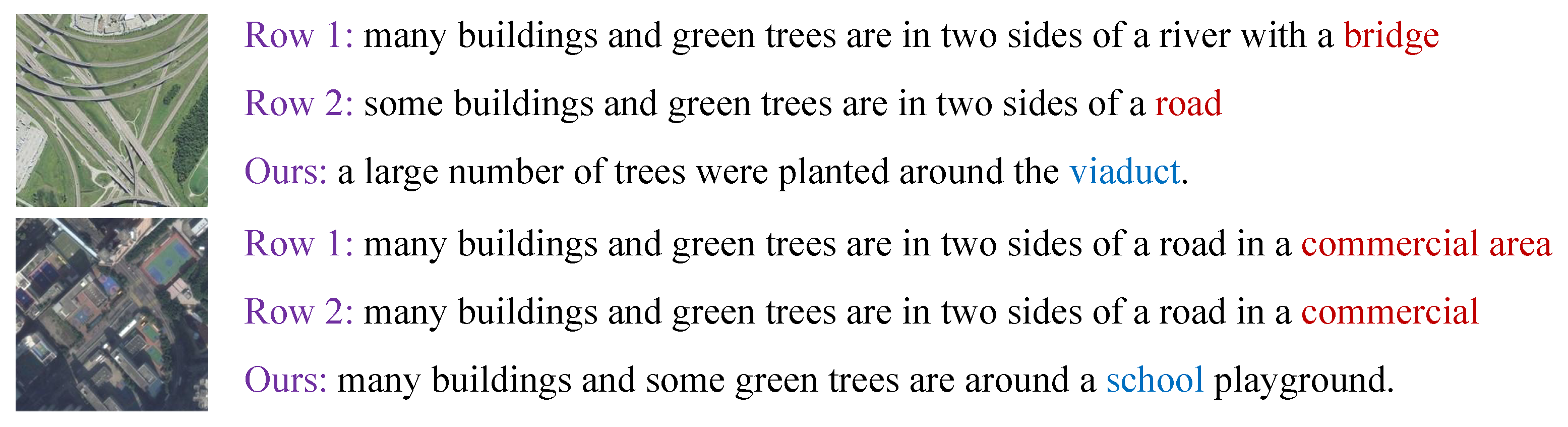

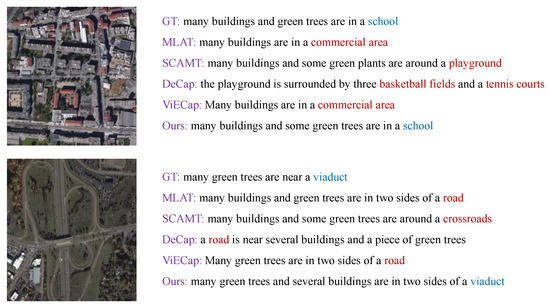

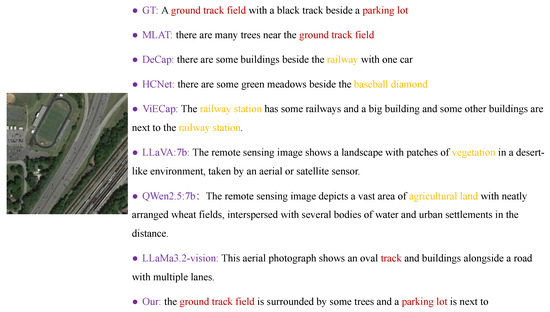

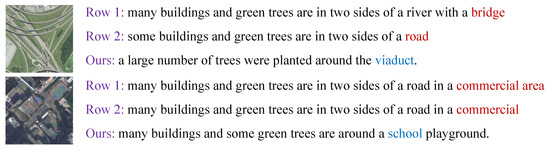

In the task of generating captions for unseen scenes, our main purpose is to assess whether the model can generate appropriate descriptions of those scenes. The adaptability of the model to unseen scenes may not be easy to observe from the metric values alone. To demonstrate the effectiveness of the model more comprehensively, we selected two images from unseen scenes and show the captions generated by different models in Figure 6.

Figure 6.

Captions generated by different models relative to the RSICD dataset with unseen scene images.

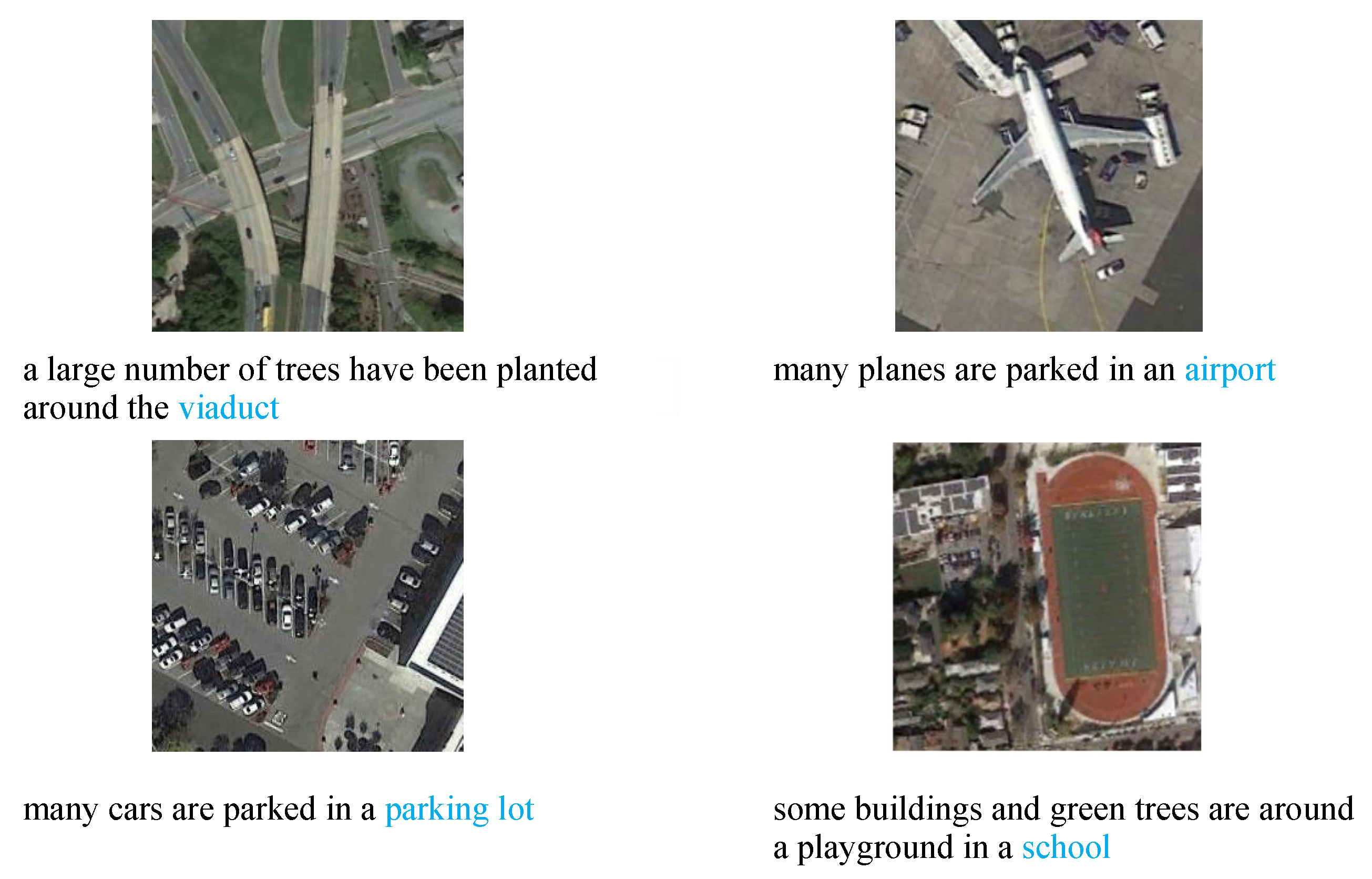

As Figure 6 shows, when existing models face an unseen scene, they can describe some of the objects in the remote sensing image, such as buildings and trees, but they cannot describe the scene itself correctly; only our method generates the scene words accurately. For example, in the viaduct scene, the MLAT, DeCap, and SCAMET models all incorrectly identify the scene as a road or a crossroad, ignoring the key viaduct feature. The ViECap model correctly recognizes the presence of green trees and their position relative to the road, but it also ignores the key feature of the scene. Our proposed model accurately captures the presence of green trees and buildings and correctly identifies the key feature of the scene as a “viaduct”, which closely matches the ground truth. This shows that first obtaining scene information through attribute mapping and then applying it in the caption-generation stage can yield a correct description of the unseen scene. Our method also performs well on remote sensing images of known scenes. As shown in Figure 7, it not only generates correct scene descriptions but also provides rich information.

Figure 7.

Captions generated by different models relative to the RSICD dataset with known scene images.

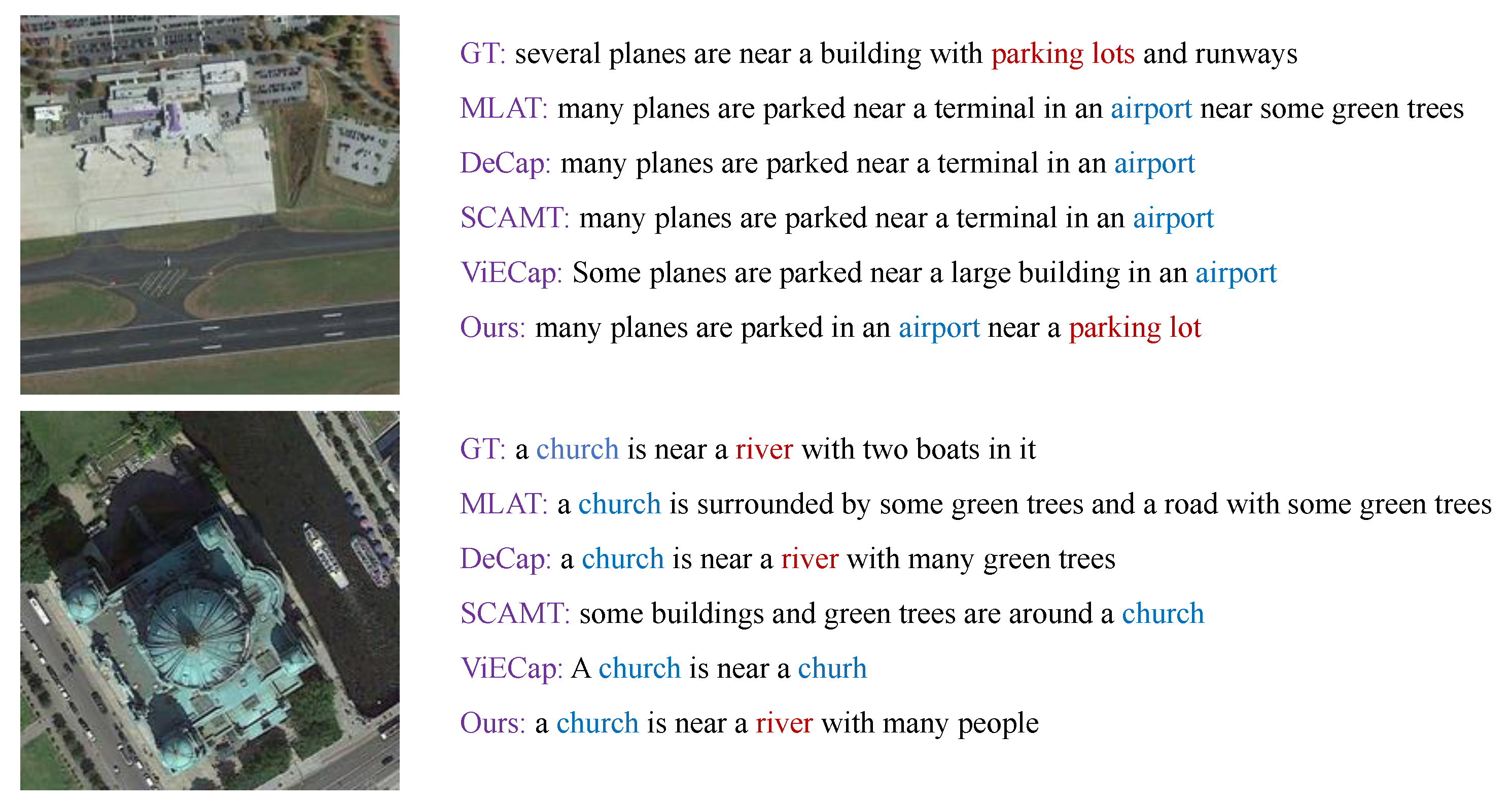

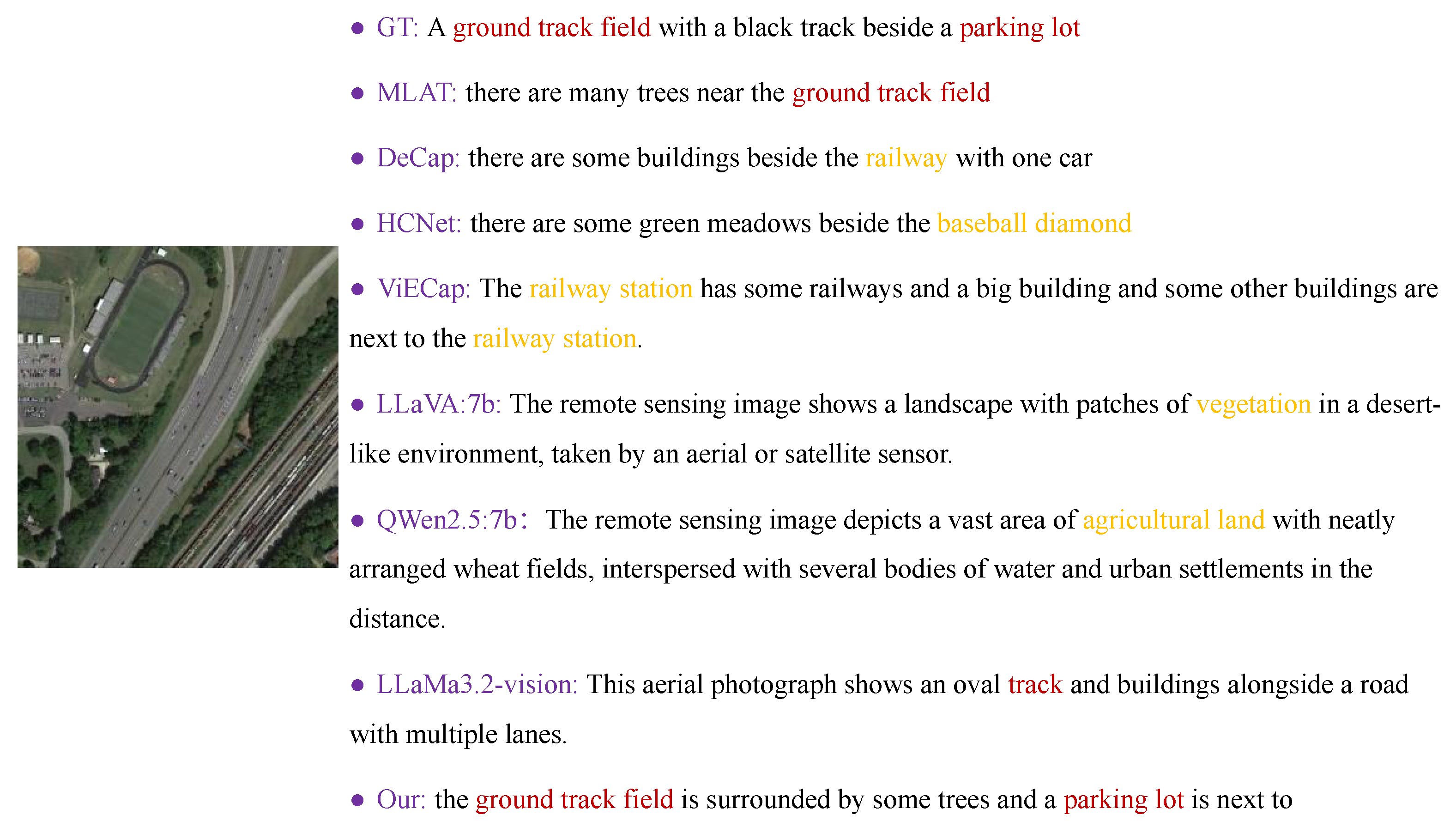

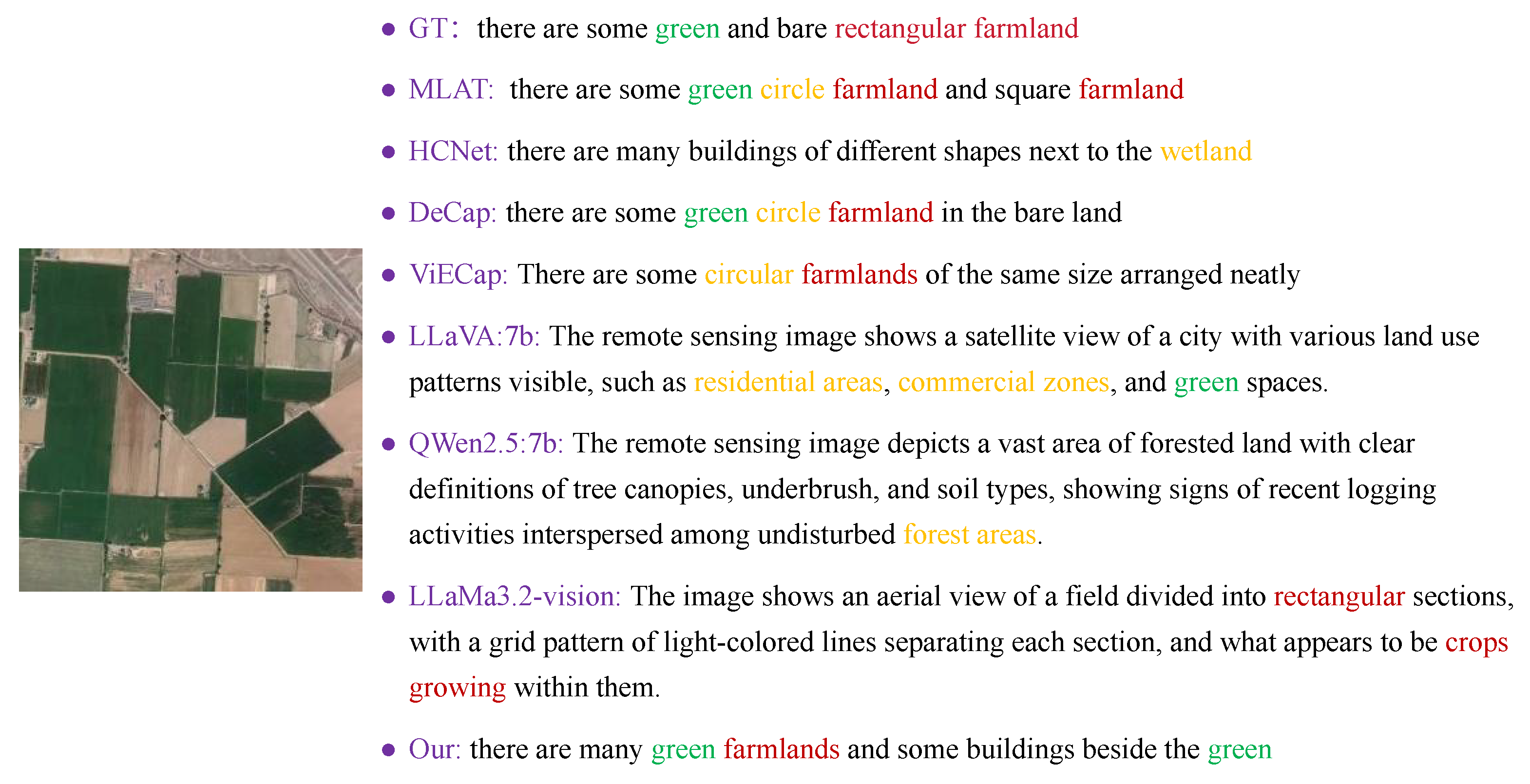

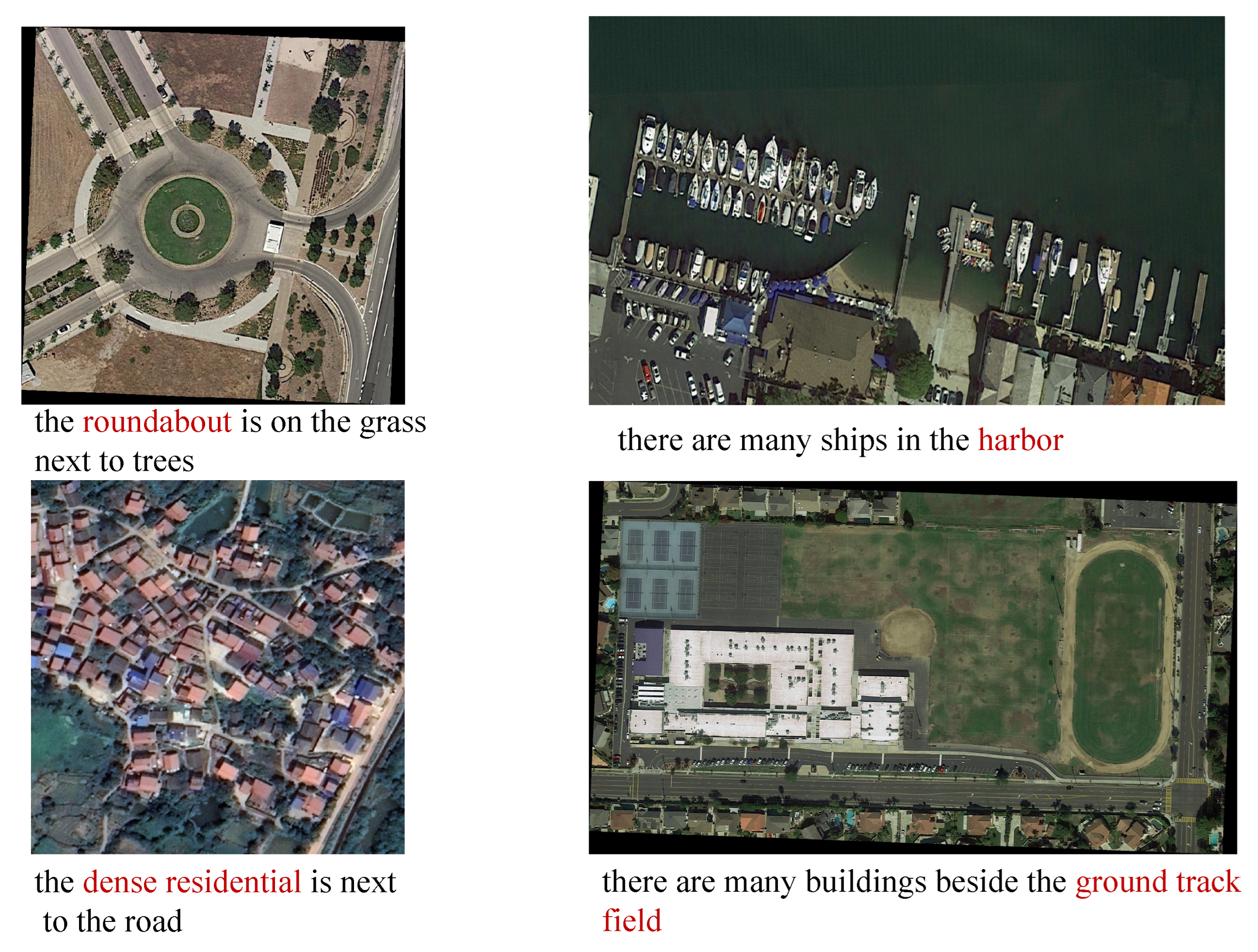

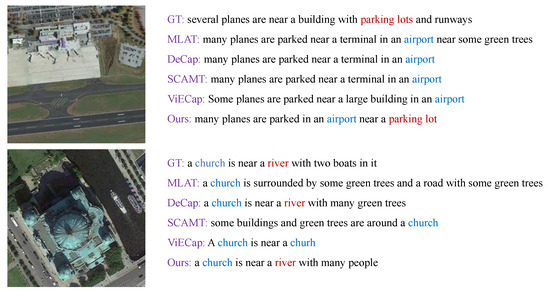

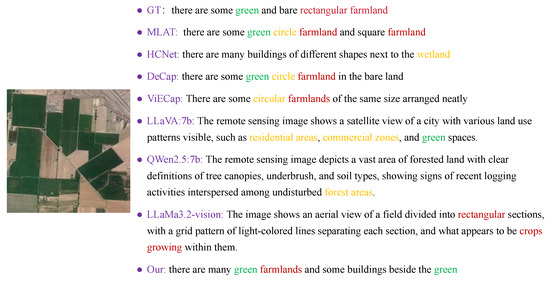

To compare against captions generated by large models, the generation results on the NWPU dataset are shown in Figure 8 and Figure 9. When describing the remote sensing images, we gave the LLaVA, QWen-VL, and Llama models the same prompt: “Please briefly describe the remotely sensed image in one sentence”.

Figure 8.

Captions generated by different models relative to the NWPU dataset with known scene images.

Figure 9.

Captions generated by different models relative to the NWPU dataset with unknown scene images.

Figure 8 shows a ground track field with a parking lot next to it. MLAT notes that there are many trees near the track but does not mention the parking lot. DeCap, SCAMET, and ViECap all misjudge the scene, and their descriptions do not match the ground truth (GT). The LLaVA model incorrectly identifies the scene as a desert-like environment, while the QWen-VL model judges it to be agricultural land. Although the Llama model correctly describes the multi-lane roads in the image, it fails to identify the actual scene as a ground track field. Our model accurately describes the trees around the track and mentions the parking lot next to it, producing a more complete and richer description.

Figure 9 shows some green and bare rectangular agricultural fields. Most algorithms make obvious errors in determining the shape of the farmland, whereas our model avoids shape misjudgments and attends more to the overall scene information. The large models also misjudge the scene: LLaVA inaccurately identifies it as comprising residential areas, commercial zones, and green spaces, while QWen-VL misjudges it as forested land. Although the Llama model correctly describes the rectangular farmland, large language models face the further challenge of producing inconsistent results across multiple generations.

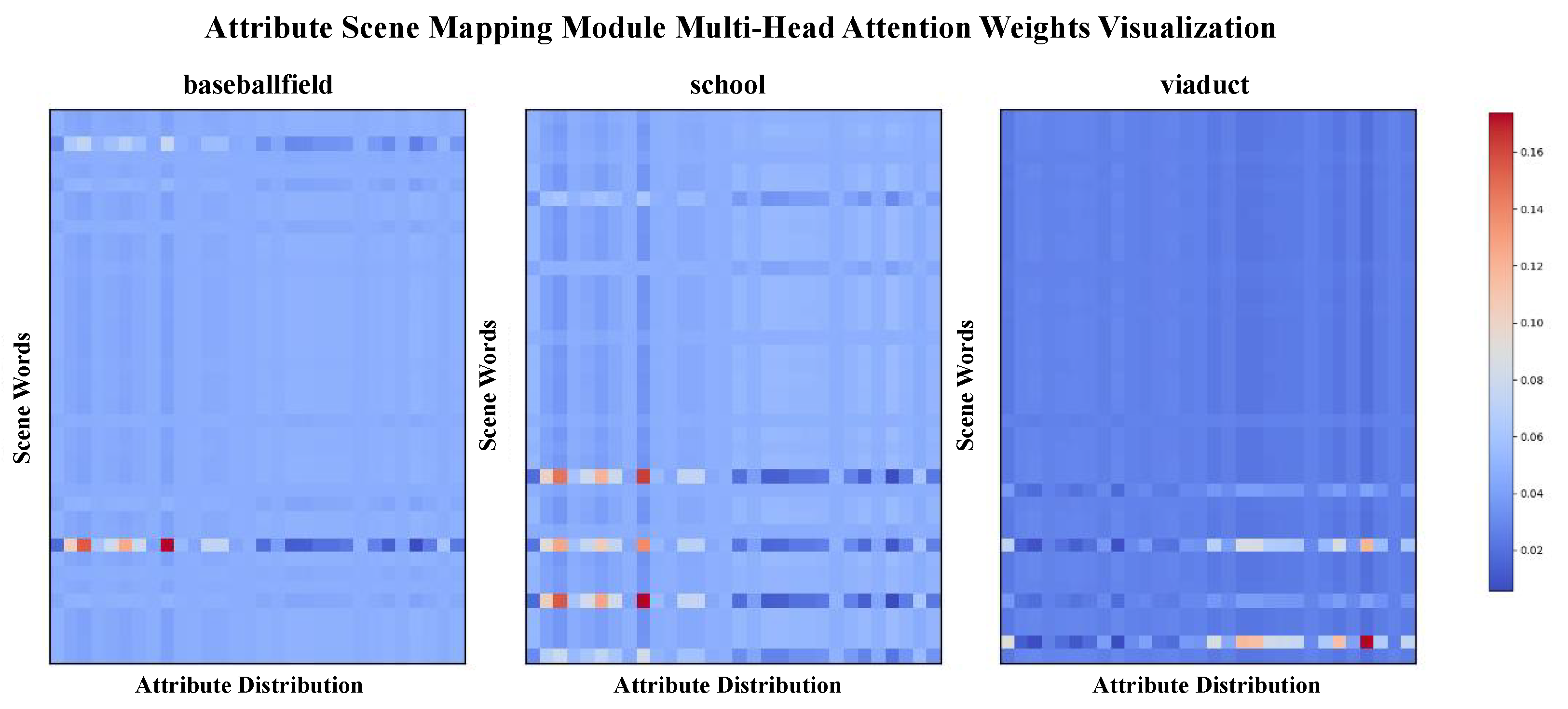

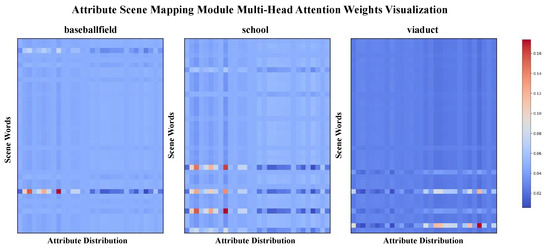

To highlight the effectiveness of our method in capturing the interactions between scenes and objects, we visualized the multi-head attention of the attribute-scene-mapping module for three different scenes (“baseballfield”, “school”, and “viaduct”), as shown in Figure 10. It can be observed that different attribute distributions correspond to different scene words.

Figure 10.

Attribute-scene-mapping multi-head attention weight module visualization.

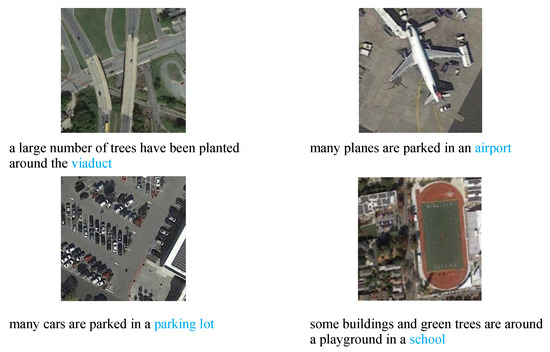

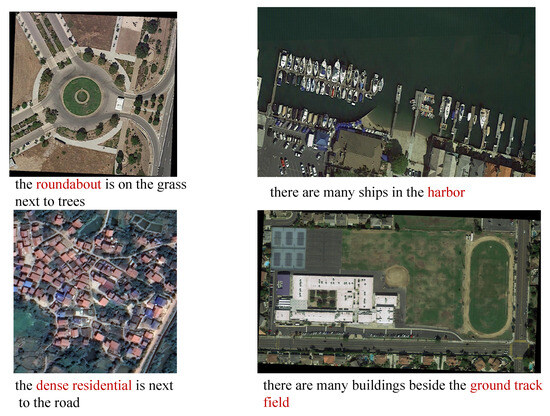

To further verify the feasibility and scalability of the attribute-learning-based caption-generation model, we tested it on scene images from outside the captioning datasets. Specifically, we used a publicly available remote sensing image classification dataset, PatternNet [42], which contains 38 scene categories, and selected four scenes for caption-generation testing: overpasses, airports, parking lots, and schools. The results are shown in Figure 11. To validate the generalizability of the model, images from more diverse vision–language datasets, such as GeoChat [43] and EarthVQA [44], were also used for evaluation, as shown in Figure 12. Although the images in these datasets do not have captions, they have category labels, which can be used to check whether the generated captions describe the scene correctly.

Figure 11.

Generation results of scene image captions under different data distributions relative to the PatternNet dataset.

Figure 12.

Generation results of scene image captions under different data distributions relative to the GeoChat and EarthVQA datasets.

Figure 11 presents the descriptions generated by our model for the overpass, airport, parking lot, and school scenes in the PatternNet dataset, and Figure 12 displays its descriptions for roundabouts, harbors, dense residential areas, and ground track fields from the GeoChat and EarthVQA datasets. These results show that, even for images with different data distributions and for both unseen and known scenes, the attribute-based learning model generates correct captions, which supports the scalability of the model across different remote sensing images.

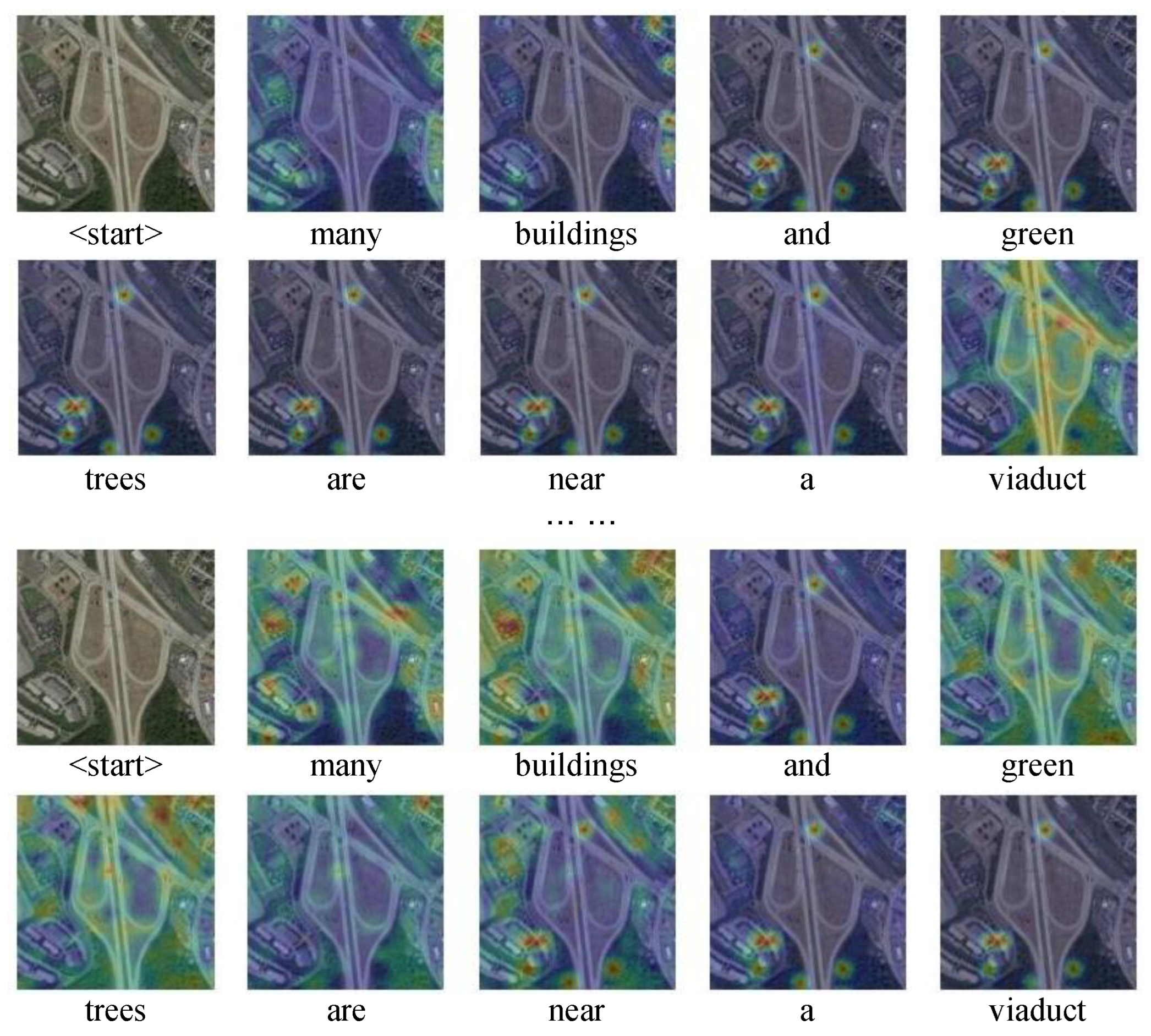

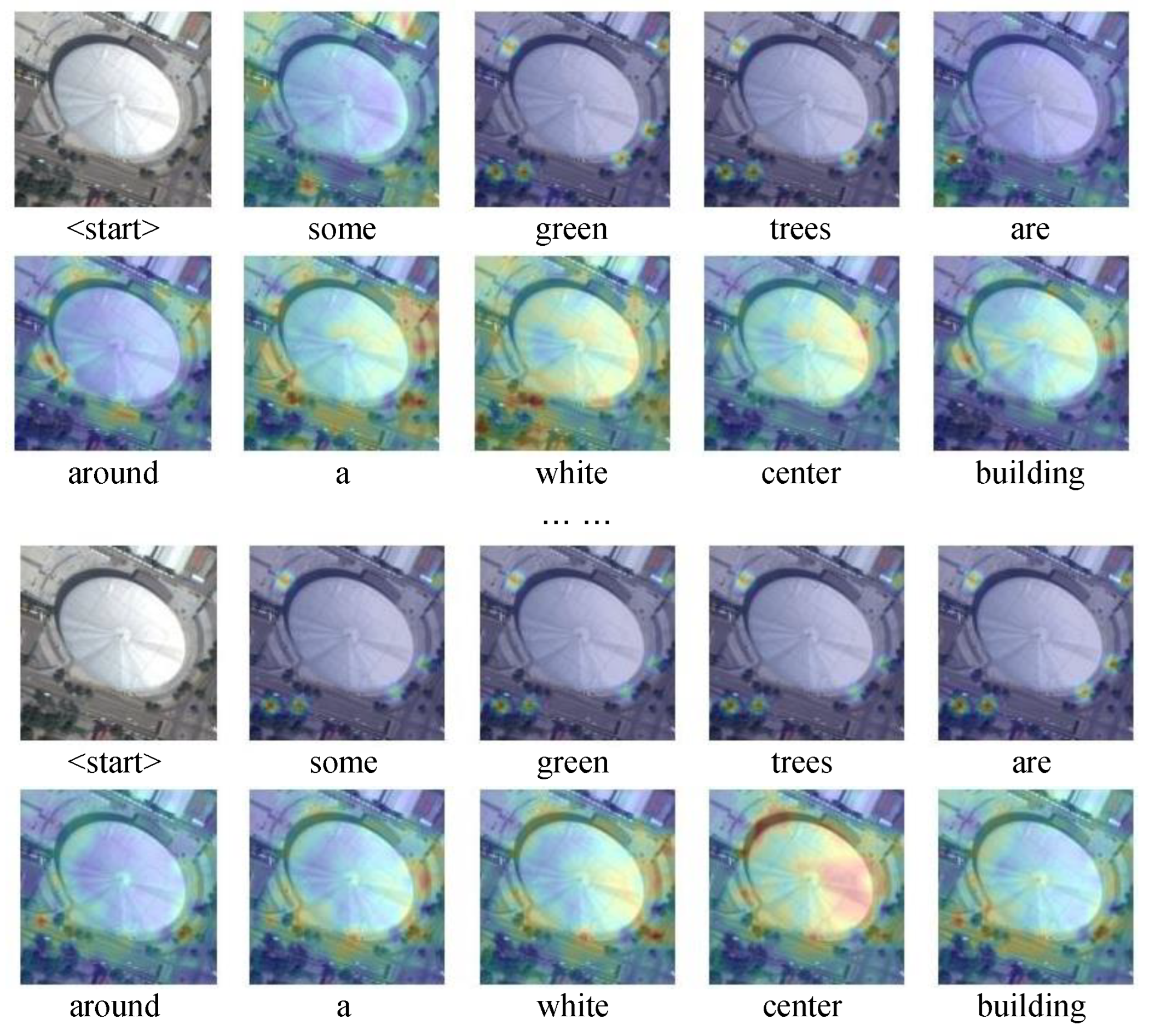

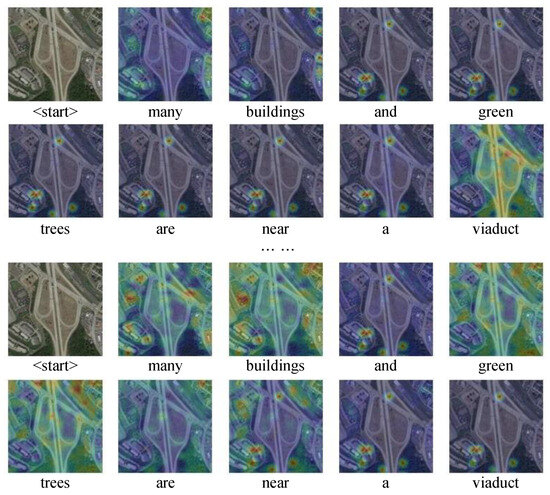

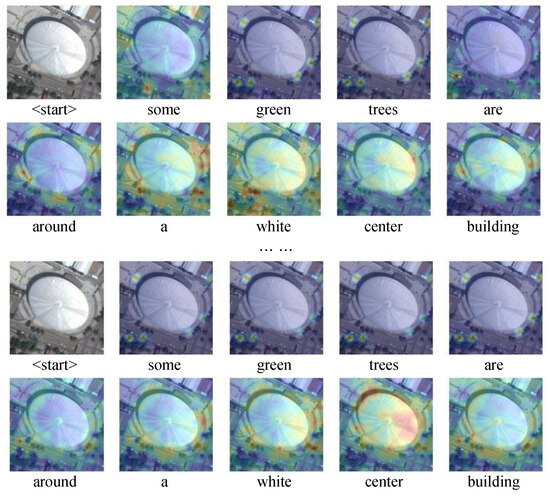

In Figure 13 and Figure 14, we present visualizations of the multi-head attention mechanism during the image captioning process in an unknown scene (viaduct) and in a known scene (center), respectively. It is evident from these figures that different attention heads focus on different regions of the image. When generating words such as “some” and “many”, attention is broadly distributed across the image, reflecting the model’s consideration of the overall scene context. In contrast, when producing nouns such as “building” or “tree”, which denote specific attributes, attention becomes more localized and concentrated within the regions corresponding to these objects, albeit with some dispersion, indicating the model’s ability to home in on the relevant features. Furthermore, when the model processes spatial relationship terms such as “around” and “near”, attention is directed toward areas of the image that are associated with spatial positioning, exemplified by the relative placement of trees to the “center”. Finally, when generating scene words such as “viaduct” and “center”, attention is centered on the defining structures while also encompassing the distribution of surrounding objects.
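For readers who want to produce similar maps, the sketch below shows one simple way to turn per-head attention weights into a two-dimensional map for a single generated word. It assumes the image features form a regular grid of positions, which the text above does not confirm, and all names and toy values are hypothetical.

```python
def average_heads(attention_heads):
    """Average the attention weights of all heads, position by position."""
    num_heads = len(attention_heads)
    return [sum(weights) / num_heads for weights in zip(*attention_heads)]


def to_grid(weights, height, width):
    """Reshape a flat list of weights into `height` rows of `width` values."""
    if len(weights) != height * width:
        raise ValueError("weights do not fill the grid")
    return [weights[row * width:(row + 1) * width] for row in range(height)]


# Toy attention over a 2 x 2 grid for one word: each head attends to a
# different corner, so the averaged map highlights both corners.
heads = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
mean_map = to_grid(average_heads(heads), height=2, width=2)
```

Plotting each head separately, as in the figures, shows the differences between heads; averaging gives a single summary map per word.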

Figure 13.

Visualization of generated captions and attention weights in unseen scenes.

Figure 14.

Visualization of generated captions and attention weights in seen scenes.

These visualizations demonstrate the model’s dynamic adjustment of its focus during caption generation. It not only concentrates on image regions that align semantically with the output’s vocabulary but also synthesizes the overall scene by integrating the content of the image.

4.6. Ablation Experiments

Ablation experiments were conducted to evaluate the effectiveness of two components in captioning unseen scenes: the attribute inference module and the attribute loss function. The ablation experiments were performed as follows:

- (1) The attribute inference module (Attr) is removed, and the classification loss function is obtained using the entire sentence for classification.

- (2) The attribute loss function (CLS) is removed, and only the masked cross-entropy loss function is used. Without additional constraints on the model, the attribute reasoning module is used to obtain sentences.

As shown in Table 5, if the attribute learning module is added without being constrained by the attribute loss function, it does not benefit the model, and the redundant information may even degrade performance. In addition, the captions in Figure 15 show that removing either the attribute learning part (row 1) or the additional classification loss function (row 2) not only lowers the evaluation metrics but, more importantly, leaves the model unable to generate correct descriptions of the unseen scenes. For example, for a viaduct scene, the ablated model generates words such as “road” and “bridge”, and it incorrectly identifies a school scene as a commercial area. It can only produce scene words that were present in the training data, which are generally somewhat similar to the unseen scenes. This indirectly supports the role of the attribute inference module and the additional attribute loss function proposed in this study.

Table 5.

The ablation experiment of different modules.

Figure 15.

Ablation-experiment-generated caption.

5. Conclusions and Future Studies

This study addresses the inability of existing models to accurately describe unseen scenes in remote sensing images. By leveraging attribute learning, we introduce an attribute inference module that establishes a mapping between attribute features and scenes, thereby generating scene information. An additional classification loss ensures that this information guides caption generation effectively. Extensive experiments demonstrated the effectiveness of our method, which enhances the model’s adaptability and generalizability and enables it to describe unseen scenes with limited training data. This expands the practical utility of remote sensing image captioning across diverse scenarios.

Looking ahead, our future research may explore two key directions. One potential direction is the construction of caption-generation datasets that encompass a richer variety of scene types using existing remote sensing image data. Another possible focus is the exploration of new evaluation metrics that are designed to more intuitively capture the performance enhancements in remote sensing image captioning.

Author Contributions

Conceptualization, H.L., Z.R. and Z.G.; funding acquisition, Z.G. and R.L.; methodology, H.L. and Z.R.; software, H.L.; supervision, S.G. and L.J.; writing—original draft, H.L. and Z.R.; writing—review and editing, S.G., Z.G. and R.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (no. 62301395 and 62102296), the Shaanxi Province Postdoctoral Science Foundation (no. 2023BSHEDZZ177), and the Fundamental Research Funds for the Central Universities (no. XJSJ24071).

Data Availability Statement

The dataset used in this study is publicly accessible.

Acknowledgments

The authors would like to express their gratitude to the editors and anonymous reviewers for their insightful comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Qu, B.; Li, X.; Tao, D.; Lu, X. Deep semantic understanding of high resolution remote sensing image. In Proceedings of the 2016 International Conference on Computer, Information and Telecommunication Systems (Cits), Kunming, China, 6–8 July 2016; pp. 1–5.

2. Hoxha, G.; Melgani, F.; Demir, B. Toward remote sensing image retrieval under a deep image captioning perspective. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4462–4475.

3. Ren, Z.; Gou, S.; Guo, Z.; Mao, S.; Li, R. A mask-guided transformer network with topic token for remote sensing image captioning. Remote Sens. 2022, 14, 2939.

4. Liu, C.; Zhao, R.; Shi, Z. Remote-sensing image captioning based on multilayer aggregated transformer. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506605.

5. Ni, Z.; Zong, Z.; Ren, P. Incorporating object counts into remote sensing image captioning. Int. J. Digit. Earth 2024, 17, 2392847.

6. Zhou, H.; Xia, L.; Du, X.; Li, S. FRIC: A framework for few-shot remote sensing image captioning. Int. J. Digit. Earth 2024, 17, 2337240.

7. Ordonez, V.; Han, X.; Kuznetsova, P.; Kulkarni, G.; Mitchell, M.; Yamaguchi, K.; Stratos, K.; Goyal, A.; Dodge, J.; Mensch, A.; et al. Large scale retrieval and generation of image descriptions. Int. J. Comput. Vis. 2016, 119, 46–59.

8. Kuznetsova, P.; Ordonez, V.; Berg, A.; Berg, T.; Choi, Y. Collective generation of natural image descriptions. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Jeju Island, Republic of Korea, 8–14 July 2012; pp. 359–368.

9. Kulkarni, G.; Premraj, V.; Ordonez, V.; Dhar, S.; Li, S.; Choi, Y.; Berg, A.C.; Berg, T.L. Babytalk: Understanding and generating simple image descriptions. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2891–2903.

10. Gupta, A.; Mannem, P. From image annotation to image description. In Proceedings of the International Conference on Neural Information Processing, Doha, Qatar, 12–15 November 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 196–204.

11. Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. Adv. Neural Inf. Process. Syst. 2024, 36, 34892–34916.

12. Chu, X.; Su, J.; Zhang, B.; Shen, C. VisionLLaMA: A Unified LLaMA Interface for Vision Tasks. arXiv 2024, arXiv:2403.00522.

13. Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3156–3164.

14. Yang, Z.; Li, Q.; Yuan, Y.; Wang, Q. HCNet: Hierarchical Feature Aggregation and Cross-Modal Feature Alignment for Remote Sensing Image Captioning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5624711.

15. Hoxha, G.; Melgani, F.; Slaghenauffi, J. A new CNN-RNN framework for remote sensing image captioning. In Proceedings of the 2020 Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Tunis, Tunisia, 9–11 March 2020; pp. 1–4.

16. Chowdhary, C.L.; Goyal, A.; Vasnani, B.K. Experimental assessment of beam search algorithm for improvement in image caption generation. J. Appl. Sci. Eng. 2019, 22, 691–698.

17. Li, Z.; Zhao, W.; Du, X.; Zhou, G.; Zhang, S. Cross-modal retrieval and semantic refinement for remote sensing image captioning. Remote Sens. 2024, 16, 196.

18. Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6077–6086.

19. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

20. Shi, Z.; Zou, Z. Can a machine generate humanlike language descriptions for a remote sensing image? IEEE Trans. Geosci. Remote Sens. 2017, 55, 3623–3634.

21. Li, Y.; Zhang, X.; Cheng, X.; Tang, X.; Jiao, L. Learning consensus-aware semantic knowledge for remote sensing image captioning. Pattern Recognit. 2024, 145, 109893.

22. Demirel, B.; Cinbis, R.G.; Ikizler-Cinbis, N. Image captioning with unseen objects. arXiv 2019, arXiv:1908.00047.

23. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

24. Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 4904–4916.

25. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

26. Wang, P.; Bai, S.; Tan, S.; Wang, S.; Fan, Z.; Bai, J.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; et al. Qwen2-vl: Enhancing vision-language model’s perception of the world at any resolution. arXiv 2024, arXiv:2409.12191.

27. Fei, J.; Wang, T.; Zhang, J.; He, Z.; Wang, C.; Zheng, F. Transferable decoding with visual entities for zero-shot image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3136–3146.

28. Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329.

29. Huang, L.; Wang, W.; Chen, J.; Wei, X.Y. Attention on attention for image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4634–4643.

30. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

31. Meng, L.; Wang, J.; Yang, Y.; Xiao, L. Prior Knowledge-Guided Transformer for Remote Sensing Image Captioning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4706213.

32. Lu, X.; Wang, B.; Zheng, X.; Li, X. Exploring models and data for remote sensing image caption generation. IEEE Trans. Geosci. Remote Sens. 2017, 56, 2183–2195.

33. Cheng, Q.; Huang, H.; Xu, Y.; Zhou, Y.; Li, H.; Wang, Z. NWPU-captions dataset and MLCA-net for remote sensing image captioning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–19.

34. Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

35. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

36. Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Text Summarization Branches out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

37. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

38. Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. Cider: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

39. Zeng, Z.; Zhang, H.; Lu, R.; Wang, D.; Chen, B.; Wang, Z. Conzic: Controllable zero-shot image captioning by sampling-based polishing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23465–23476.

40. Gajbhiye, G.O.; Nandedkar, A.V. Generating the captions for remote sensing images: A spatial-channel attention based memory-guided transformer approach. Eng. Appl. Artif. Intell. 2022, 114, 105076.

41. Li, W.; Zhu, L.; Wen, L.; Yang, Y. Decap: Decoding clip latents for zero-shot captioning via text-only training. arXiv 2023, arXiv:2303.03032.

42. Zhou, W.; Newsam, S.; Li, C.; Shao, Z. PatternNet: A benchmark dataset for performance evaluation of remote sensing image retrieval. ISPRS J. Photogramm. Remote Sens. 2018, 145, 197–209.

43. Kuckreja, K.; Danish, M.S.; Naseer, M.; Das, A.; Khan, S.; Khan, F.S. Geochat: Grounded large vision-language model for remote sensing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 27831–27840.

44. Wang, J.; Zheng, Z.; Chen, Z.; Ma, A.; Zhong, Y. Earthvqa: Towards queryable earth via relational reasoning-based remote sensing visual question answering. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 5481–5489.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).