UOrtos: Methodology for Co-Registration and Subpixel Georeferencing of Satellite Imagery for Coastal Monitoring

Abstract

1. Introduction

2. Materials and Methods

- Feature pairing: for every pair of images, whenever feasible, a set of feature-pixel pairs consistent with the proposed transformation (namely, a rotation plus a translation) is found in two steps:

  - (I) The Oriented FAST and Rotated BRIEF (ORB) or, alternatively, the Scale-Invariant Feature Transform (SIFT) feature detection algorithms;

  - (II) Normalized cross-correlations locally around each feature pair.

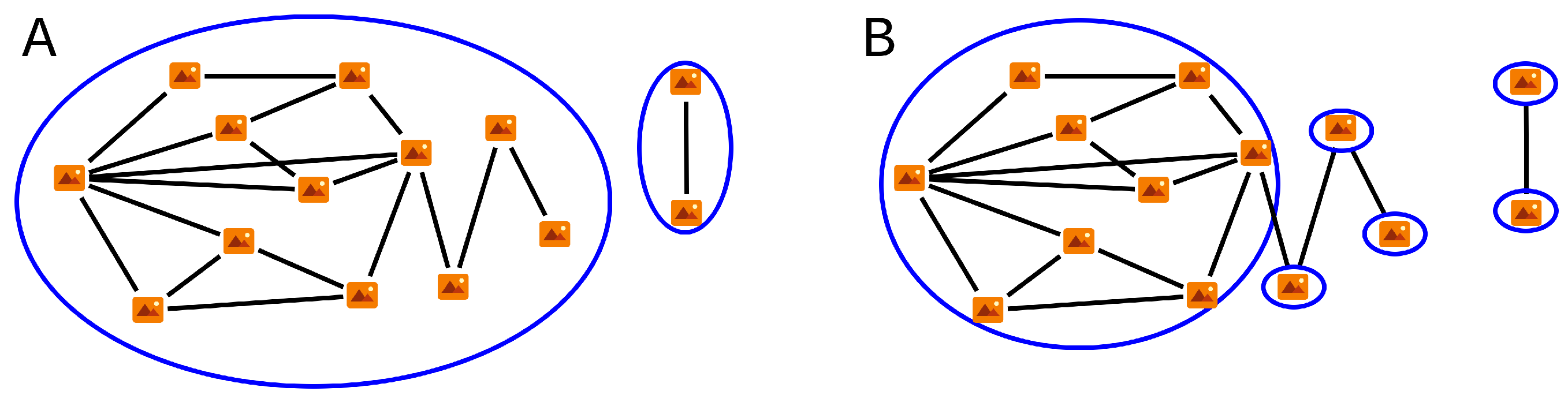

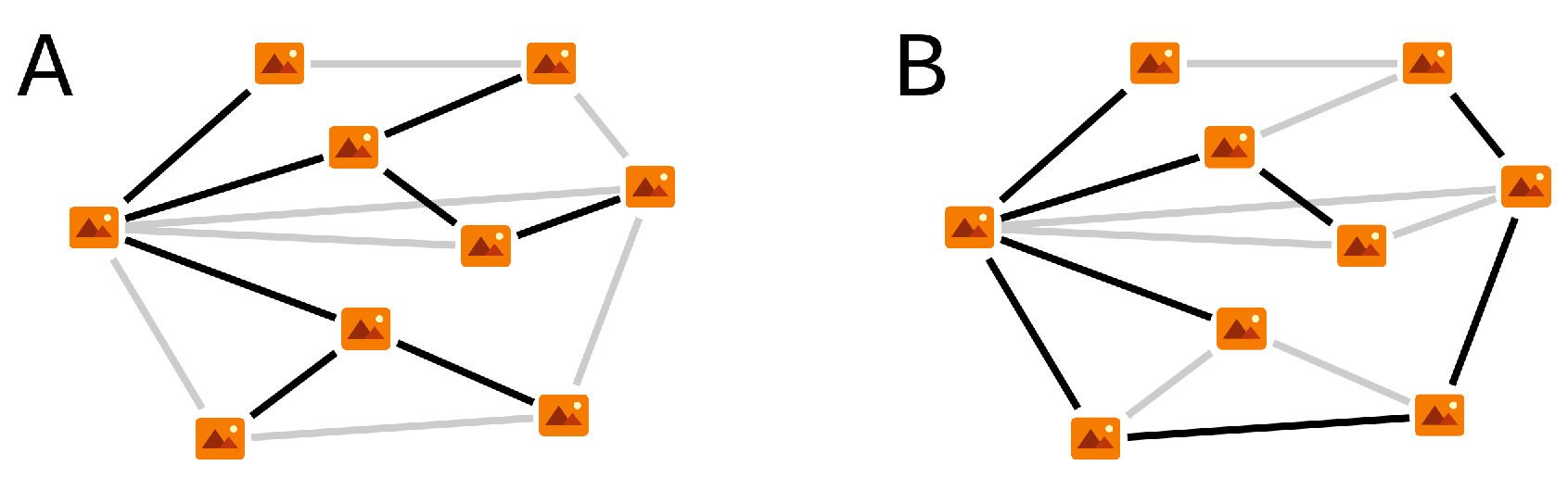

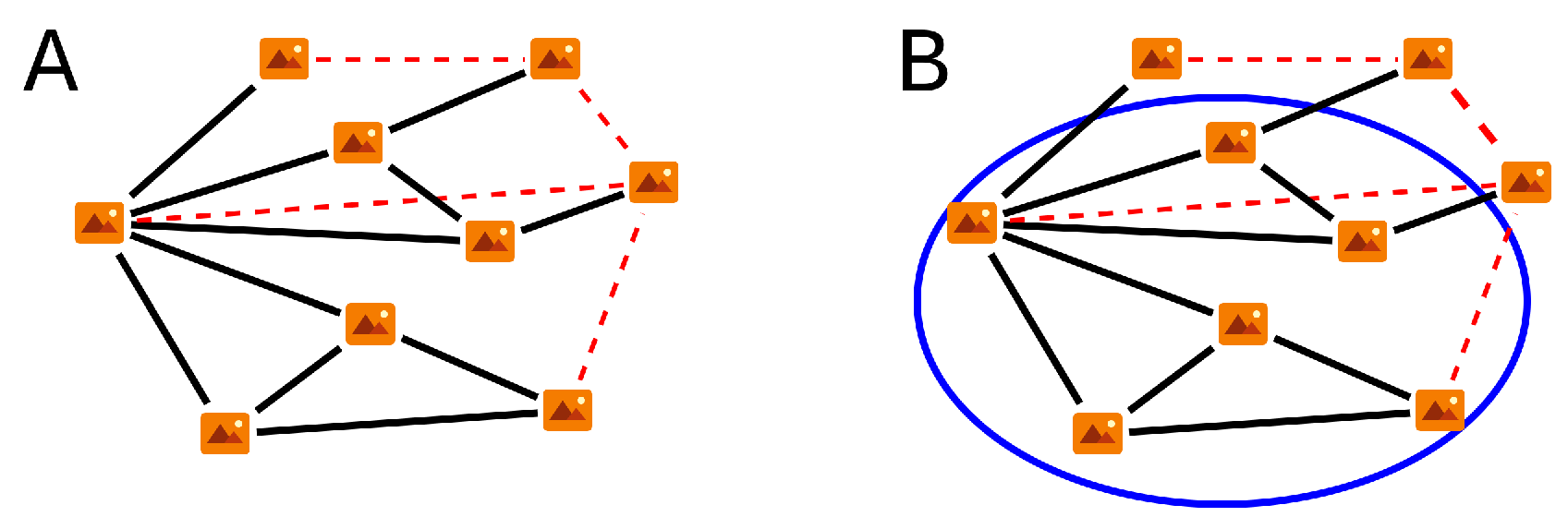

In both cases, RANdom SAmple Consensus (RANSAC) is used to validate that the pixel pairs are consistent with the proposed transformation. The outcome of this feature-matching process is a set of observed connections for some of the image-to-image pairs, each consisting of pixel pairs consistent with the given transformation (whose coefficients are not kept).

- Image clustering: once a set of observed connections among the images is available, the set of transformations for co-registration is obtained through a cluster analysis in two steps:

  - (I) The images are clustered taking into account the image-to-image observed connections and a required degree of connectivity;

  - (II) For the largest cluster (or group), the set of transformations is obtained with a RANSAC approach that uses the image-to-image pairs from the feature pairing step.

As a result of this second step, we obtain a subset of images together with the transformations required to co-register them. The (usually few) images not included in this subset are considered impossible to co-register.
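The RANSAC validation of candidate pixel pairs can be illustrated with a minimal Python sketch, assuming that feature pairing has already produced candidate pairs `src[k]` ↔ `dst[k]` for one image pair. The names `fit_rigid`, `apply_rigid` and `ransac_rigid`, the pixel tolerance `tol` and the iteration count are illustrative assumptions, not UOrtos parameters. Two randomly drawn pairs fix a rotation plus a translation, the hypothesis with the most inliers is kept, and the model is refitted by least squares (2-D Procrustes) on that consensus set.

```python
import math
import random

# Hypothetical sketch: names, tolerance and iteration count are assumptions, not the UOrtos API.

def fit_rigid(src, dst):
    """Least-squares rotation + translation mapping src points onto dst points (2-D Procrustes)."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    a = b = 0.0
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - sx, y - sy, u - dx, v - dy
        a += x * u + y * v  # accumulates the cos(theta) term
        b += x * v - y * u  # accumulates the sin(theta) term
    theta = math.atan2(b, a)  # angle that best aligns the centred point sets
    c, s = math.cos(theta), math.sin(theta)
    # translation takes the rotated source centroid onto the destination centroid
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def apply_rigid(t, p):
    """Apply a (theta, tx, ty) transformation to a pixel (x, y)."""
    theta, tx, ty = t
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def ransac_rigid(src, dst, n_iter=1000, tol=2.0, seed=0):
    """Return (transformation, inlier indices) for candidate pairs src[k] <-> dst[k]."""
    rng = random.Random(seed)
    idx = range(len(src))
    best = []
    for _ in range(n_iter):
        i, j = rng.sample(idx, 2)  # minimal sample: two pairs fix rotation + translation
        t = fit_rigid([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in idx if math.dist(apply_rigid(t, src[k]), dst[k]) < tol]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 2:
        return None, []
    # final least-squares refit on the consensus set
    return fit_rigid([src[k] for k in best], [dst[k] for k in best]), best
```

In this reading, the pairs flagged as outliers would simply be dropped from the observed connection; only the surviving pixel pairs, not the fitted coefficients, would be kept for the clustering step.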

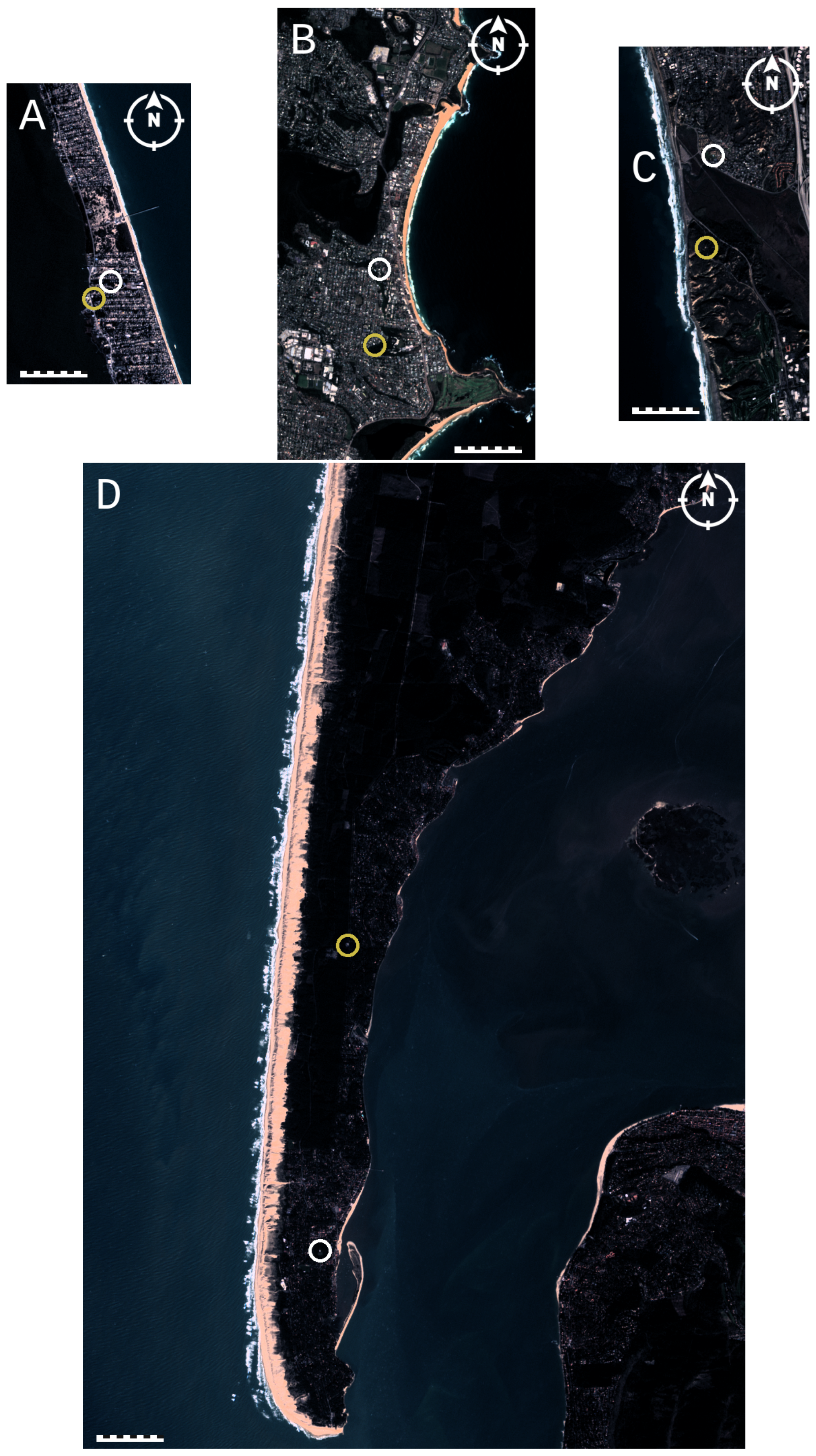

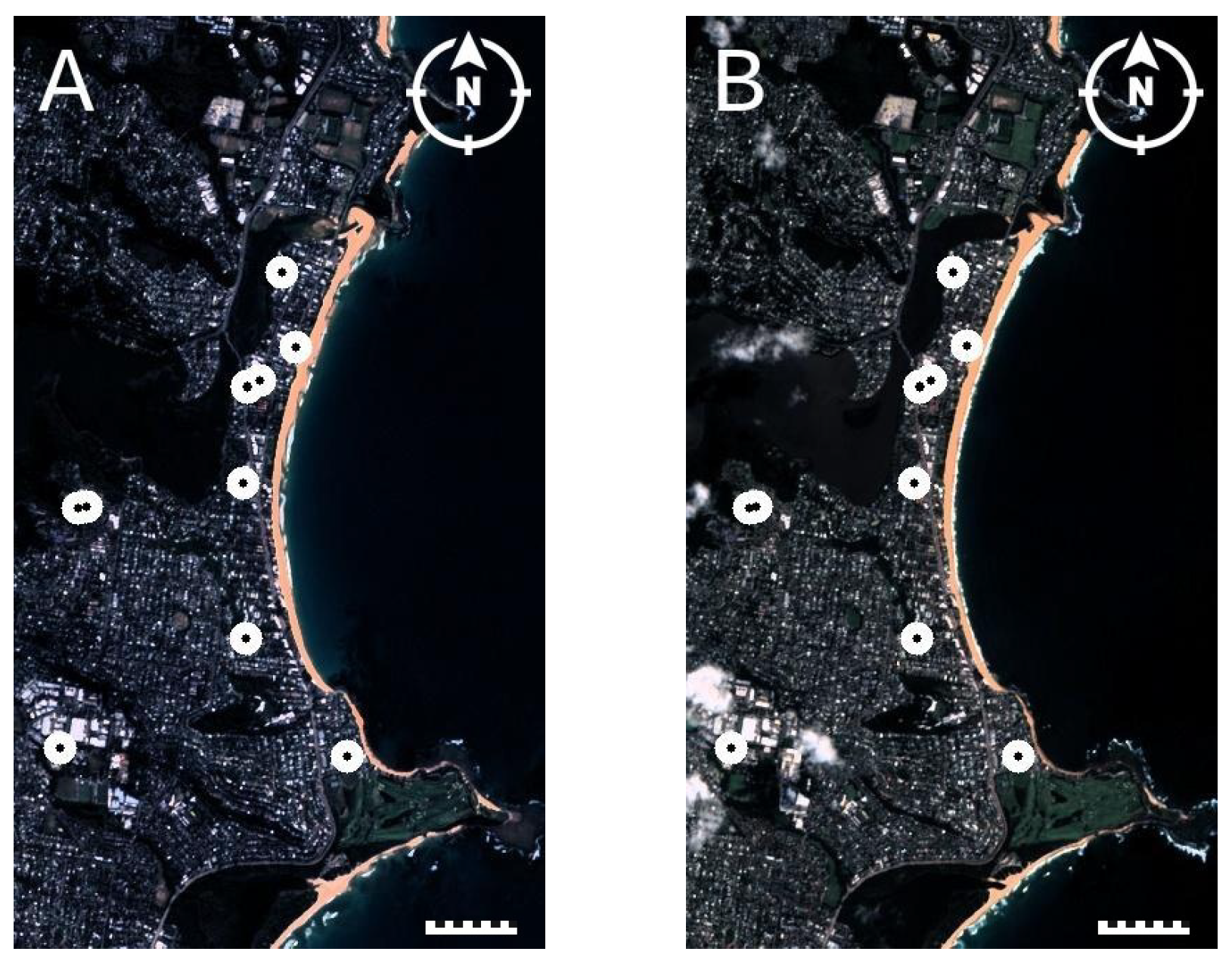

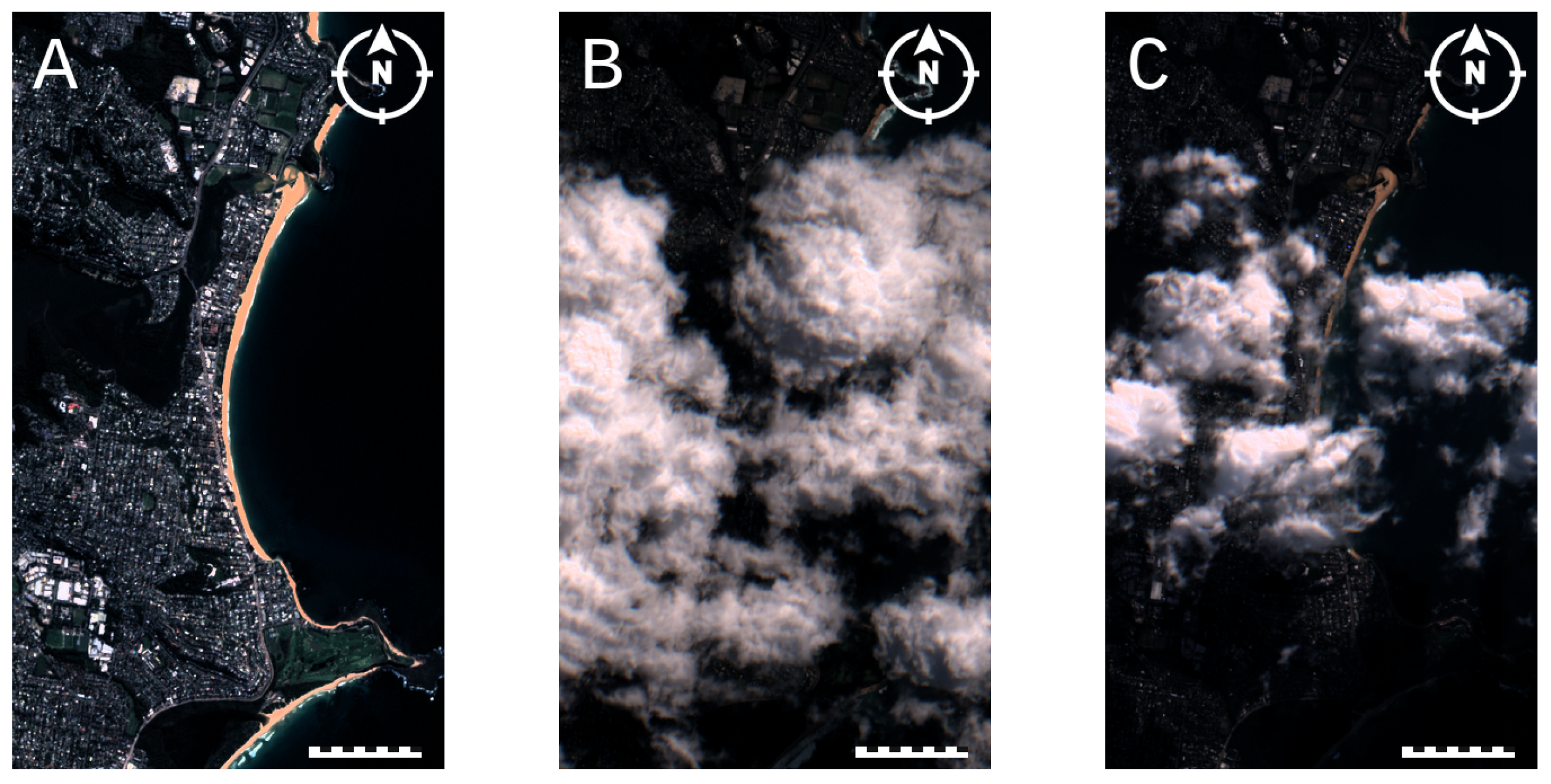

2.1. Study Sites

2.2. Feature Pairing

2.2.1. Feature Pairing (I): Global ORB

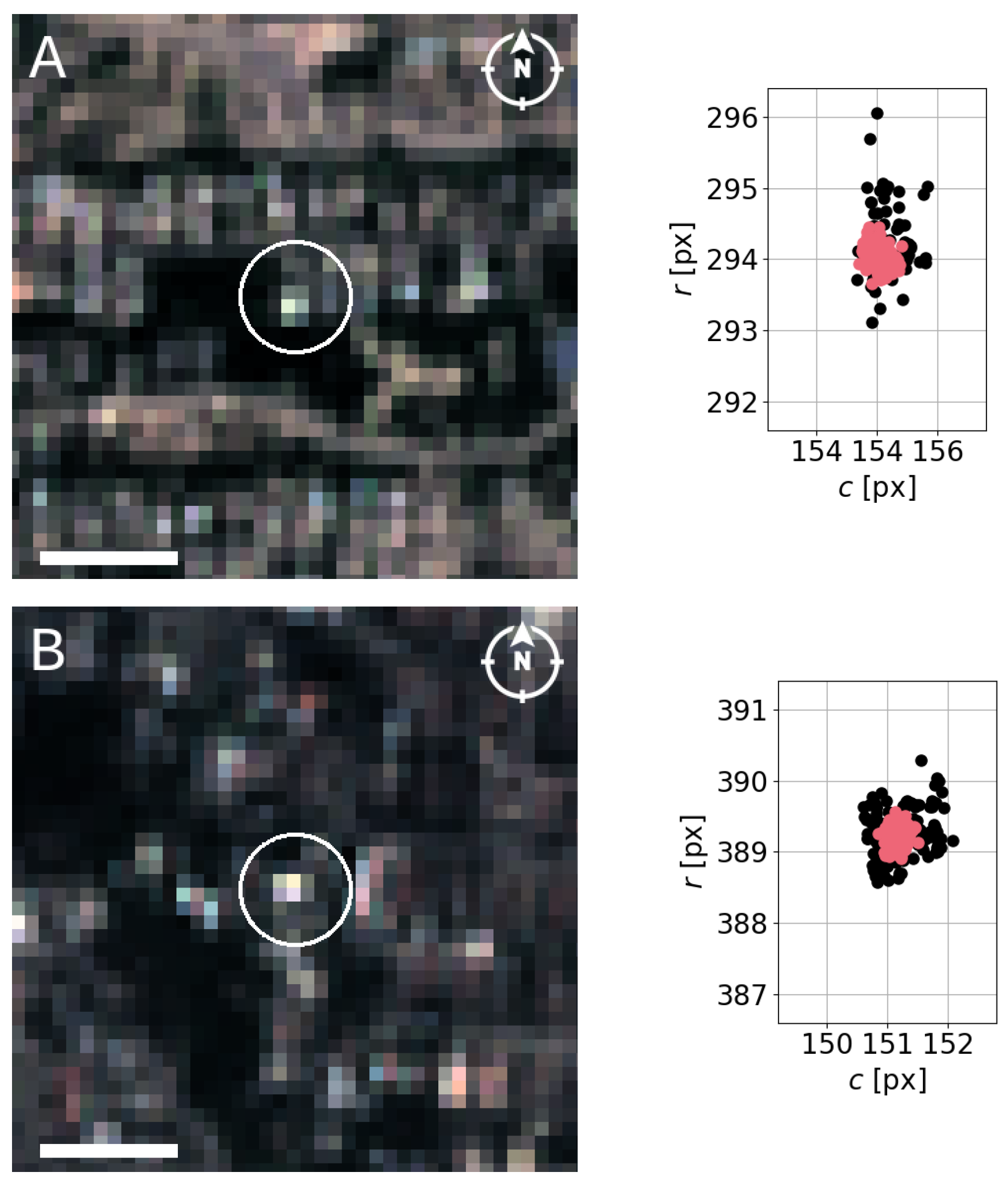
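ORB describes each keypoint with a 256-bit binary descriptor, so candidate pairs are usually found with the Hamming distance. The sketch below is a hedged illustration rather than the UOrtos code: descriptors are stored as Python integers, and only mutual nearest neighbours (cross-check) are kept, which mirrors OpenCV's `BFMatcher` with `NORM_HAMMING` and `crossCheck=True`. The `max_dist` threshold is a hypothetical parameter; the surviving matches would then be screened with RANSAC.

```python
# Hypothetical sketch of ORB-style descriptor matching; max_dist is an assumed threshold.

def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def cross_check_match(desc1, desc2, max_dist=64):
    """Brute-force Hamming matching that keeps mutual nearest neighbours only."""
    if not desc1 or not desc2:
        return []
    # nearest descriptor of image 2 for each descriptor of image 1, and vice versa
    best12 = [min(range(len(desc2)), key=lambda j: hamming(d, desc2[j])) for d in desc1]
    best21 = [min(range(len(desc1)), key=lambda i: hamming(desc1[i], d)) for d in desc2]
    matches = []
    for i, j in enumerate(best12):
        # cross-check: i and j must pick each other and be close enough
        if best21[j] == i and hamming(desc1[i], desc2[j]) <= max_dist:
            matches.append((i, j))
    return matches
```

For example, `cross_check_match([0xF0, 0x0F, 0xAA], [0x0E, 0xF1, 0x55])` keeps `(0, 1)` and `(1, 0)` but rejects the third descriptor, whose nearest neighbour prefers another partner.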

2.2.2. Feature Pairing (II): Local Correlation
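The local correlation step can be illustrated, under simplifying assumptions, with zero-mean normalized cross-correlation (NCC) between a window around a feature in one image and shifted windows around its tentative partner in the other image. Images are plain lists of lists, and the function names, the window half-size `h` and the search radius `search` are hypothetical; the sketch returns only the integer-pixel correlation peak.

```python
import math

# Hypothetical sketch: window size and search radius are assumptions, not UOrtos defaults.

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized 2-D windows."""
    va = [x for row in a for x in row]
    vb = [x for row in b for x in row]
    ma, mb = sum(va) / len(va), sum(vb) / len(vb)
    num = sum((x - ma) * (y - mb) for x, y in zip(va, vb))
    den = math.sqrt(sum((x - ma) ** 2 for x in va) * sum((y - mb) ** 2 for y in vb))
    return num / den if den > 0 else 0.0  # flat windows carry no information

def window(img, r, c, h):
    """(2h+1) x (2h+1) window centred on row r, column c, or None if it leaves the image."""
    if r - h < 0 or c - h < 0 or r + h >= len(img) or c + h >= len(img[0]):
        return None
    return [row[c - h:c + h + 1] for row in img[r - h:r + h + 1]]

def refine_match(img1, p1, img2, p2, h=3, search=2):
    """Search +/- `search` px around p2 for the window of img2 that best matches img1 at p1."""
    tmpl = window(img1, p1[0], p1[1], h)
    best = (-1.0, p2)
    if tmpl is None:
        return best
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            cand = window(img2, p2[0] + dr, p2[1] + dc, h)
            if cand is not None:
                score = ncc(tmpl, cand)
                if score > best[0]:
                    best = (score, (p2[0] + dr, p2[1] + dc))
    return best  # (correlation peak, matched (row, col) in img2)
```

If `img2` is `img1` shifted by one row down and one column left, a feature at `(10, 10)` in `img1` with initial guess `(10, 10)` is moved to `(11, 9)` with a correlation close to 1.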

2.3. Image Clustering and Transformations

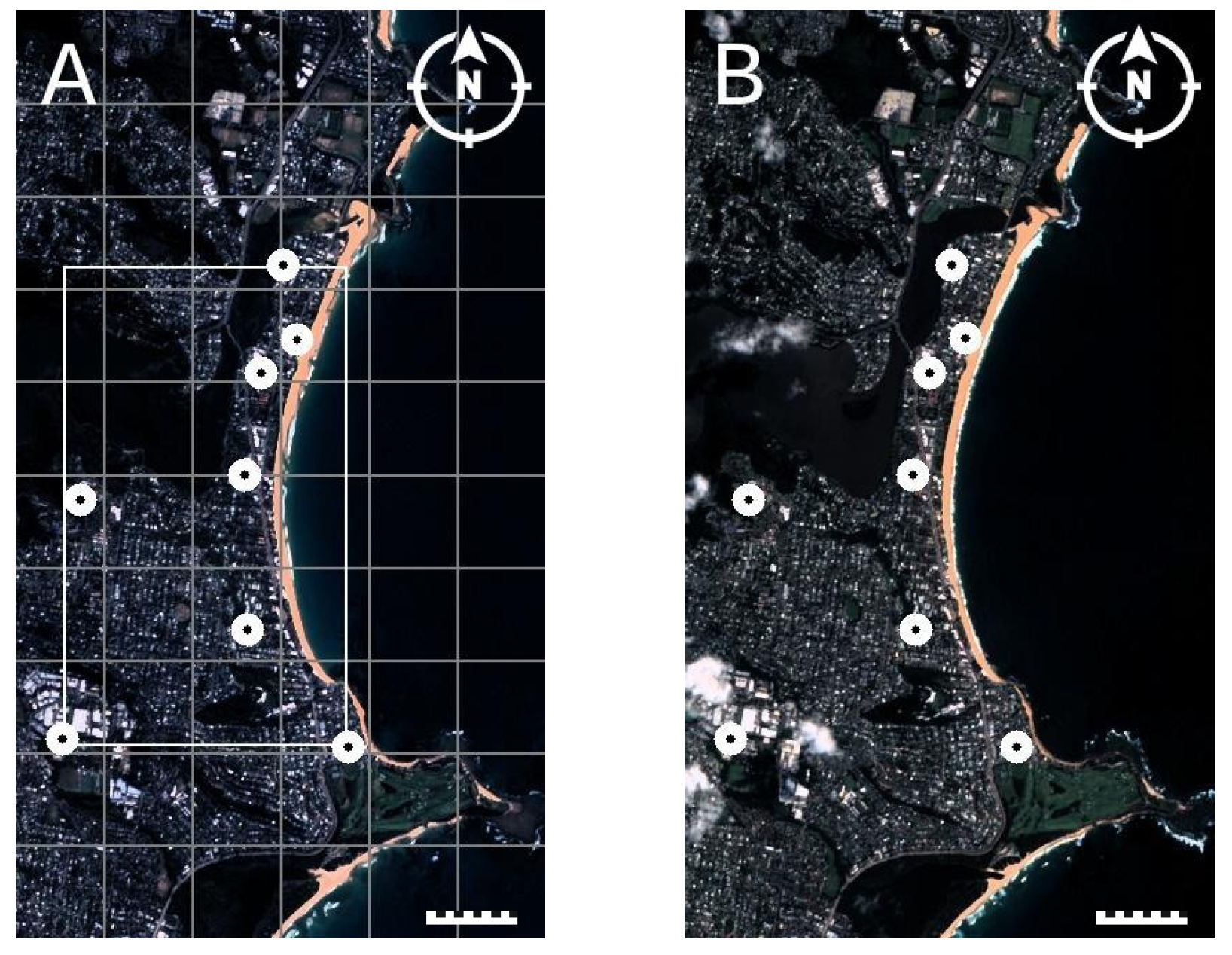

2.3.1. Image Clustering (I): Initial Clustering
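A minimal sketch of the initial clustering, assuming that the "required degree of connectivity" means a minimum number of observed connections per image: images with fewer than `min_degree` connections are removed iteratively (a k-core of the connection graph), and the largest remaining connected group is kept. `cluster_images` and `min_degree` are hypothetical names, and the exact criterion used in UOrtos may differ.

```python
# Hypothetical sketch: the k-core reading of "degree of connectivity" is an assumption.

def cluster_images(edges, min_degree=2):
    """Largest connected group of images after pruning weakly connected ones."""
    adj = {}
    for a, b in edges:
        if a != b:
            adj.setdefault(a, set()).add(b)
            adj.setdefault(b, set()).add(a)
    # k-core pruning: dropping an image lowers its neighbours' degree, so iterate
    changed = True
    while changed:
        weak = [n for n, nb in adj.items() if len(nb) < min_degree]
        changed = bool(weak)
        for n in weak:
            for m in adj.pop(n):
                adj[m].discard(n)
    # connected groups of the remaining images (iterative depth-first search)
    seen, groups = set(), []
    for start in adj:
        if start in seen:
            continue
        comp, stack = set(), [start]
        while stack:
            n = stack.pop()
            if n not in comp:
                comp.add(n)
                stack.extend(adj[n] - comp)
        seen |= comp
        groups.append(comp)
    return max(groups, key=len) if groups else set()
```

With a triangle of images plus a pendant image, a separate square with a diagonal, and an isolated pair, the pendant image and the pair are pruned and the four-image group is returned as the largest cluster.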

2.3.2. Image Clustering (II): Transformations, RANSAC Analysis and Final Clustering

- Then, for a given random walk, the corresponding transformations are obtained by choosing, for each edge (observed connection), random pairs of pixels (recall that there are at least pairs of feature pixels for each connection); the resulting set of transformations relates any two images of the group.
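The step above can be sketched by reading the random walk as a random spanning tree of the group's connection graph, drawn here with the Aldous–Broder walk (an assumption; the walk used in UOrtos may differ). For each tree edge, two randomly chosen pixel pairs fix the rotation plus translation of that edge, and composing edge transformations along the tree maps every image into a root image, from which any two images can be related. All function names are hypothetical, and `pairs[(a, b)]` is assumed to list pixel pairs as (pixel in image a, pixel in image b), with at least two pairs per connection.

```python
import math
import random

# Hypothetical sketch: function names and the Aldous-Broder reading are assumptions.

def apply_rigid(t, p):
    """Apply a (theta, tx, ty) rotation + translation to a pixel (x, y)."""
    th, tx, ty = t
    c, s = math.cos(th), math.sin(th)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def compose(outer, inner):
    """Transformation equivalent to applying `inner` first and then `outer`."""
    to, txo, tyo = outer
    ti, txi, tyi = inner
    c, s = math.cos(to), math.sin(to)
    return (to + ti, c * txi - s * tyi + txo, s * txi + c * tyi + tyo)

def invert(t):
    """Inverse of a (theta, tx, ty) transformation."""
    th, tx, ty = t
    c, s = math.cos(th), math.sin(th)
    return (-th, -(c * tx + s * ty), s * tx - c * ty)

def two_point_rigid(p1, p2, q1, q2):
    """Rotation + translation taking p1 to q1 and aligning segment p1p2 with q1q2."""
    th = math.atan2(q2[1] - q1[1], q2[0] - q1[0]) - math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    c, s = math.cos(th), math.sin(th)
    return (th, q1[0] - (c * p1[0] - s * p1[1]), q1[1] - (s * p1[0] + c * p1[1]))

def random_walk_tree(adj, root, rng):
    """Aldous-Broder: walk at random, keeping the edge that first reaches each image.
    Assumes the group is connected (otherwise the walk never ends)."""
    visited, tree, node = {root}, [], root
    while len(visited) < len(adj):
        nxt = rng.choice(sorted(adj[node]))
        if nxt not in visited:
            visited.add(nxt)
            tree.append((node, nxt))  # (parent, child); parents always appear first
        node = nxt
    return tree

def transforms_from_walk(pairs, root, seed=0):
    """Map every image of a connected group into the root image's pixel frame."""
    rng = random.Random(seed)
    adj = {}
    for a, b in pairs:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    to_root = {root: (0.0, 0.0, 0.0)}
    for parent, child in random_walk_tree(adj, root, rng):
        # pixel pairs oriented as (pixel in child, pixel in parent)
        links = pairs.get((child, parent)) or [(q, p) for p, q in pairs[(parent, child)]]
        (p1, q1), (p2, q2) = rng.sample(links, 2)  # two random pixel pairs on this edge
        to_root[child] = compose(to_root[parent], two_point_rigid(p1, p2, q1, q2))
    return to_root

def relate(to_root, i, j):
    """Transformation from image i pixels to image j pixels, via the root image."""
    return compose(invert(to_root[j]), to_root[i])
```

Repeating this for many seeds and scoring each candidate set of transformations against all observed pixel pairs would be one way to embed the sketch in the RANSAC analysis named in this subsection's title.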

3. Results

3.1. Feature Pairing

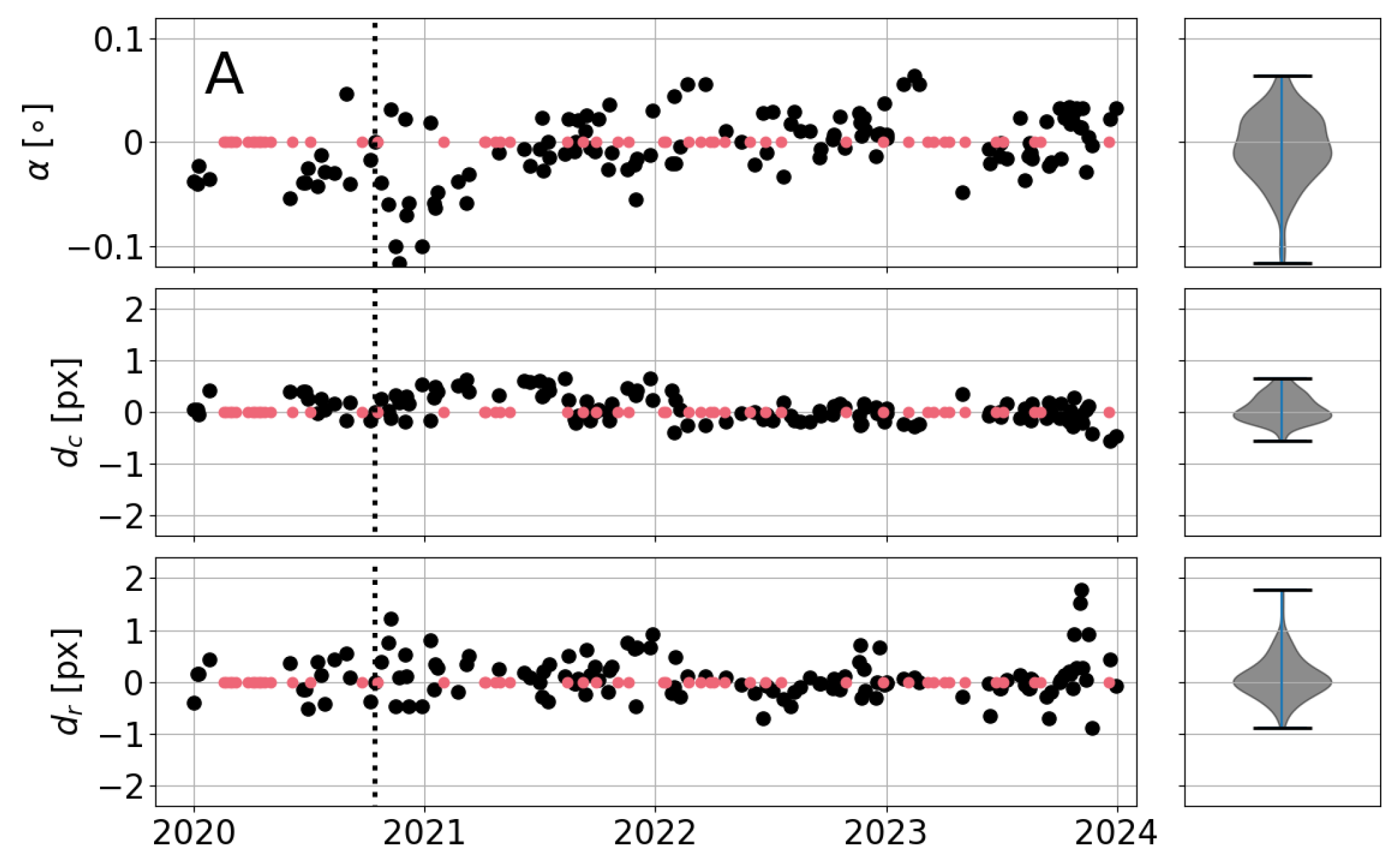

3.2. Image Clustering and Transformations

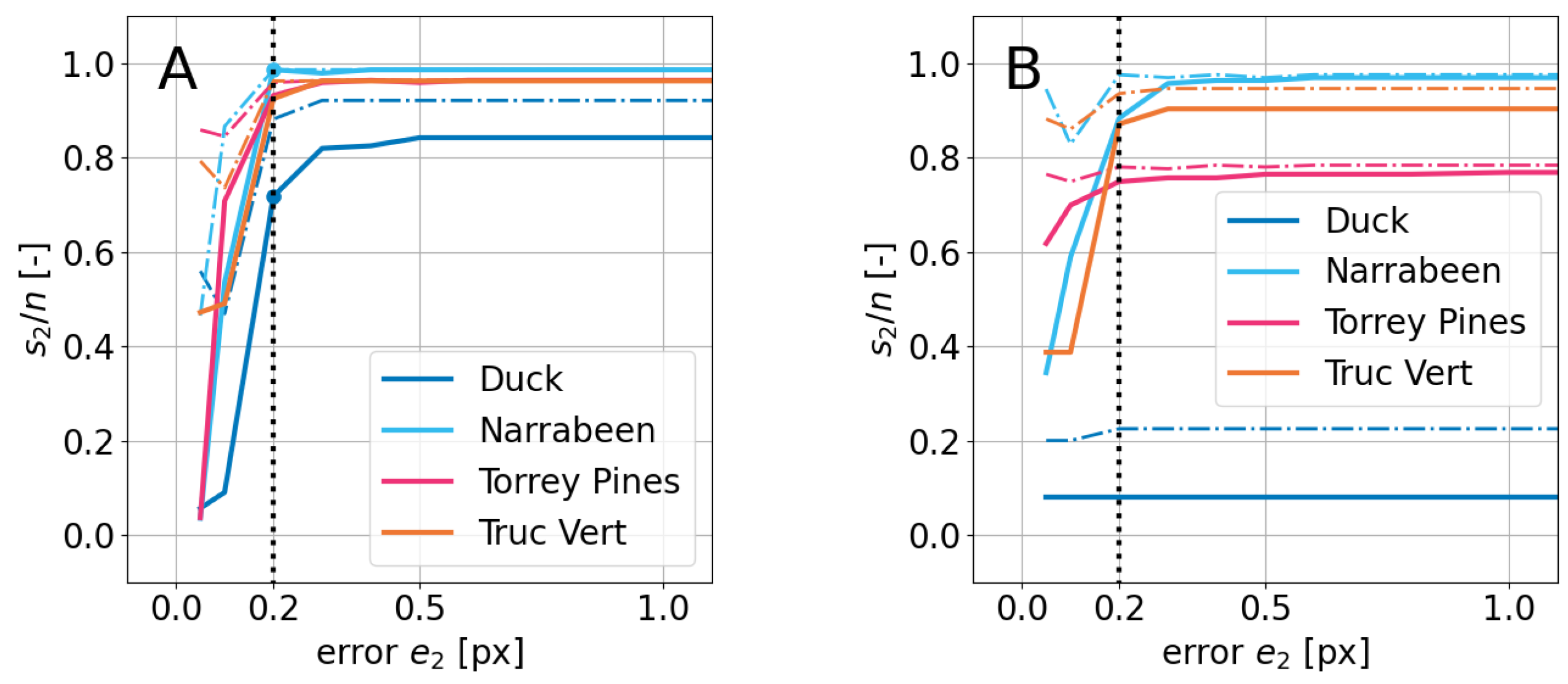

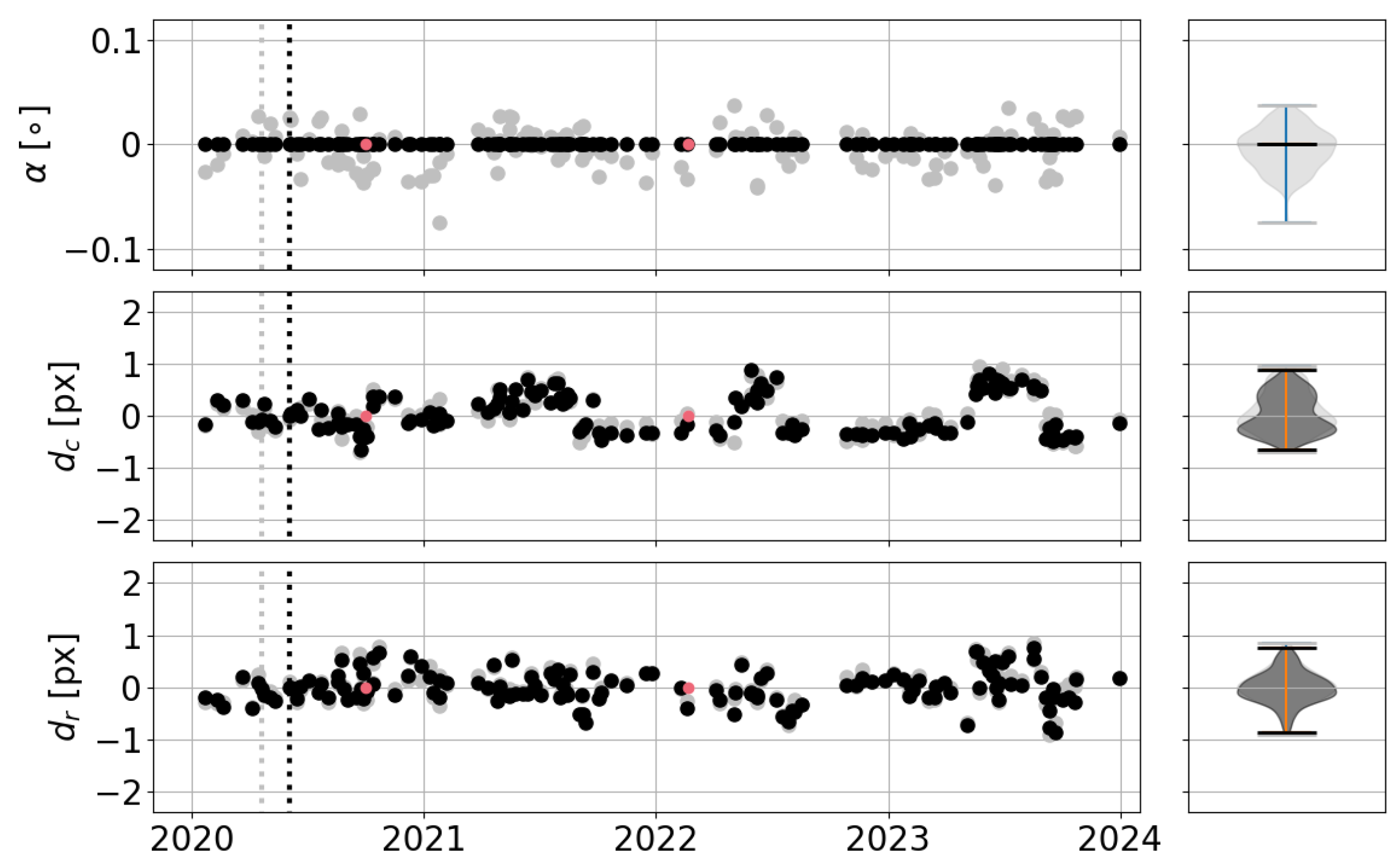

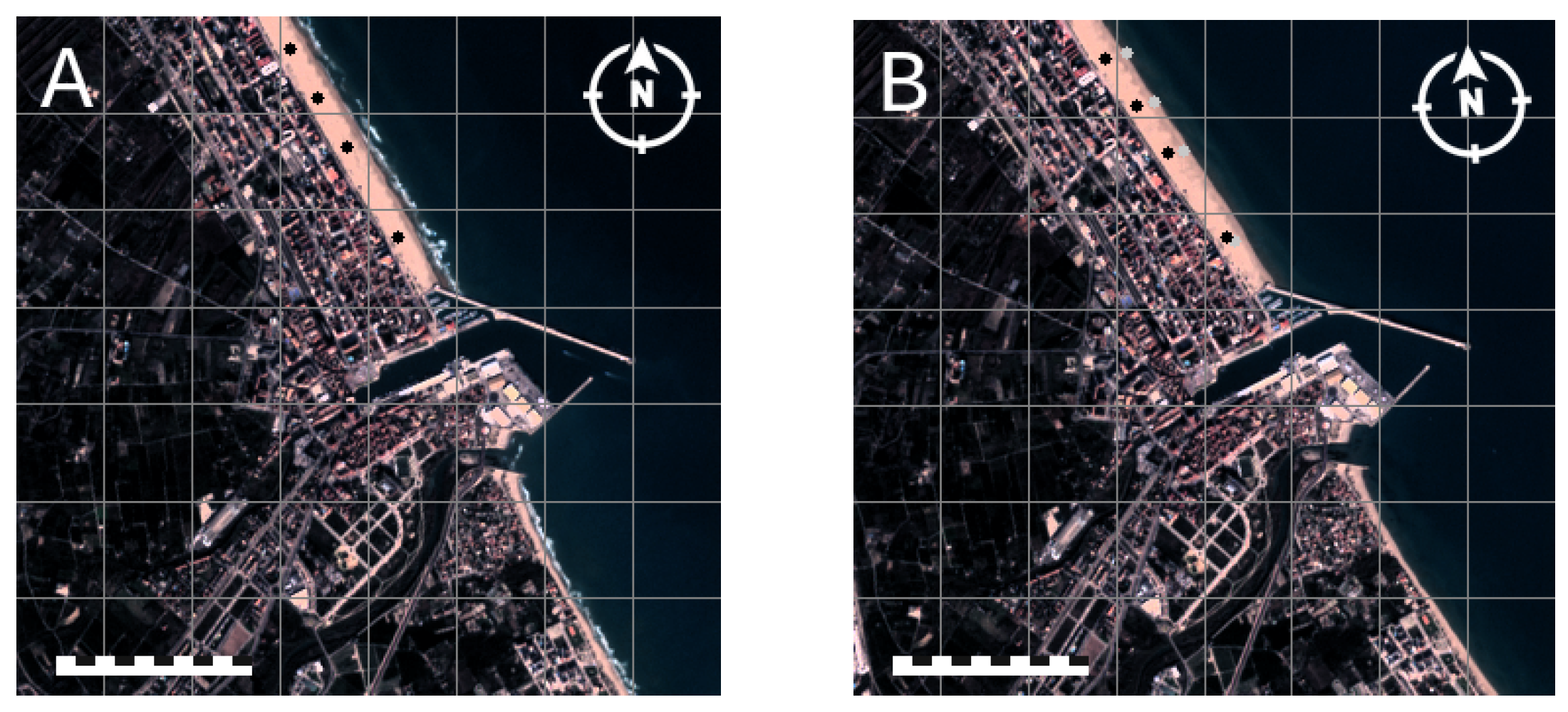

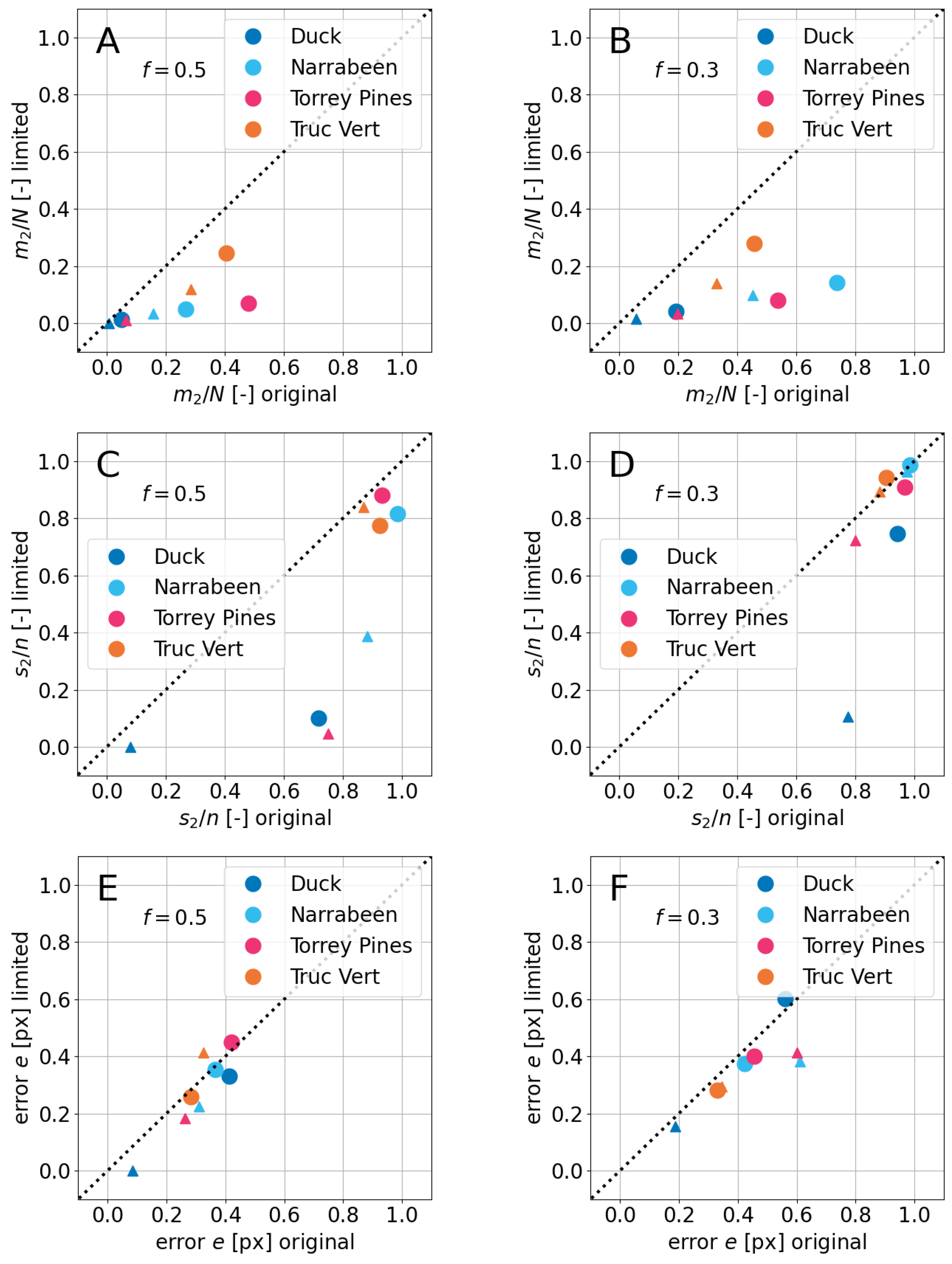

3.3. Validation

4. Discussion

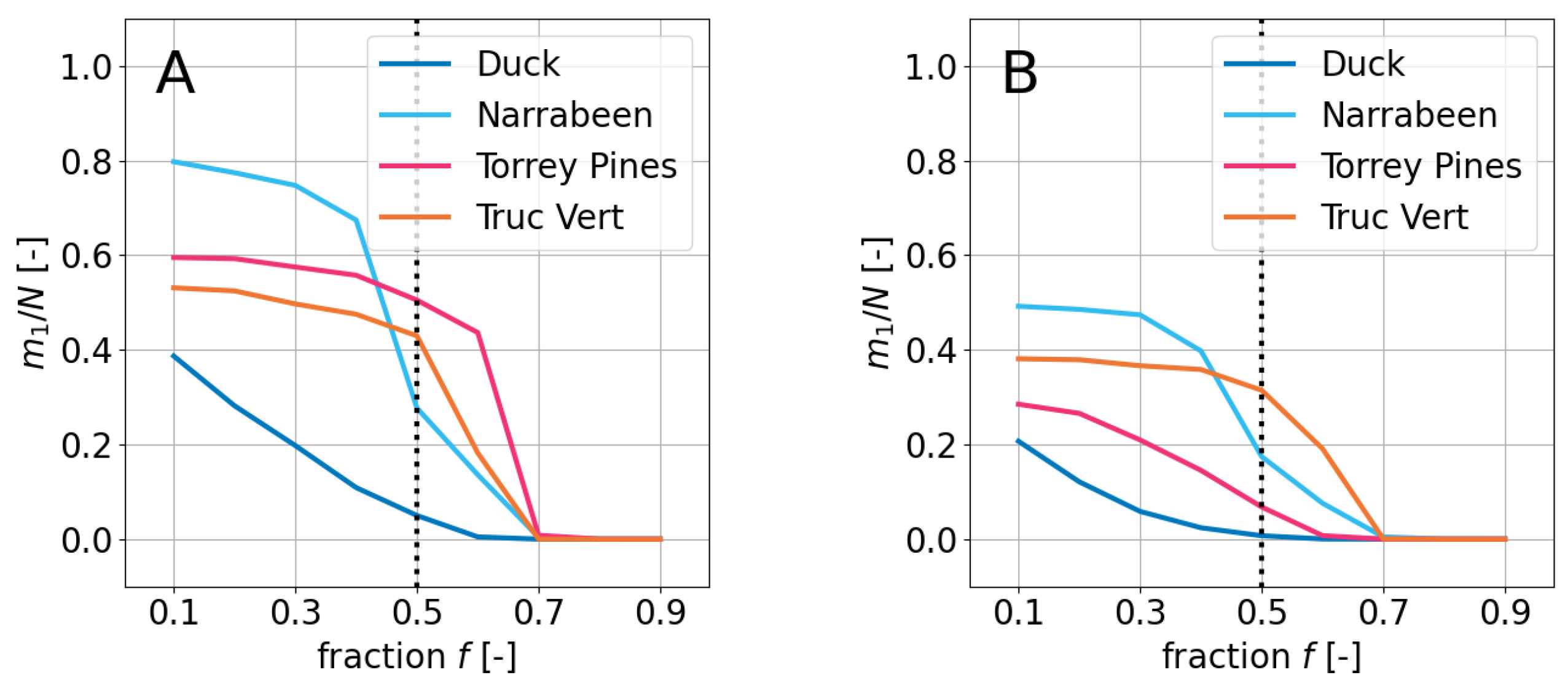

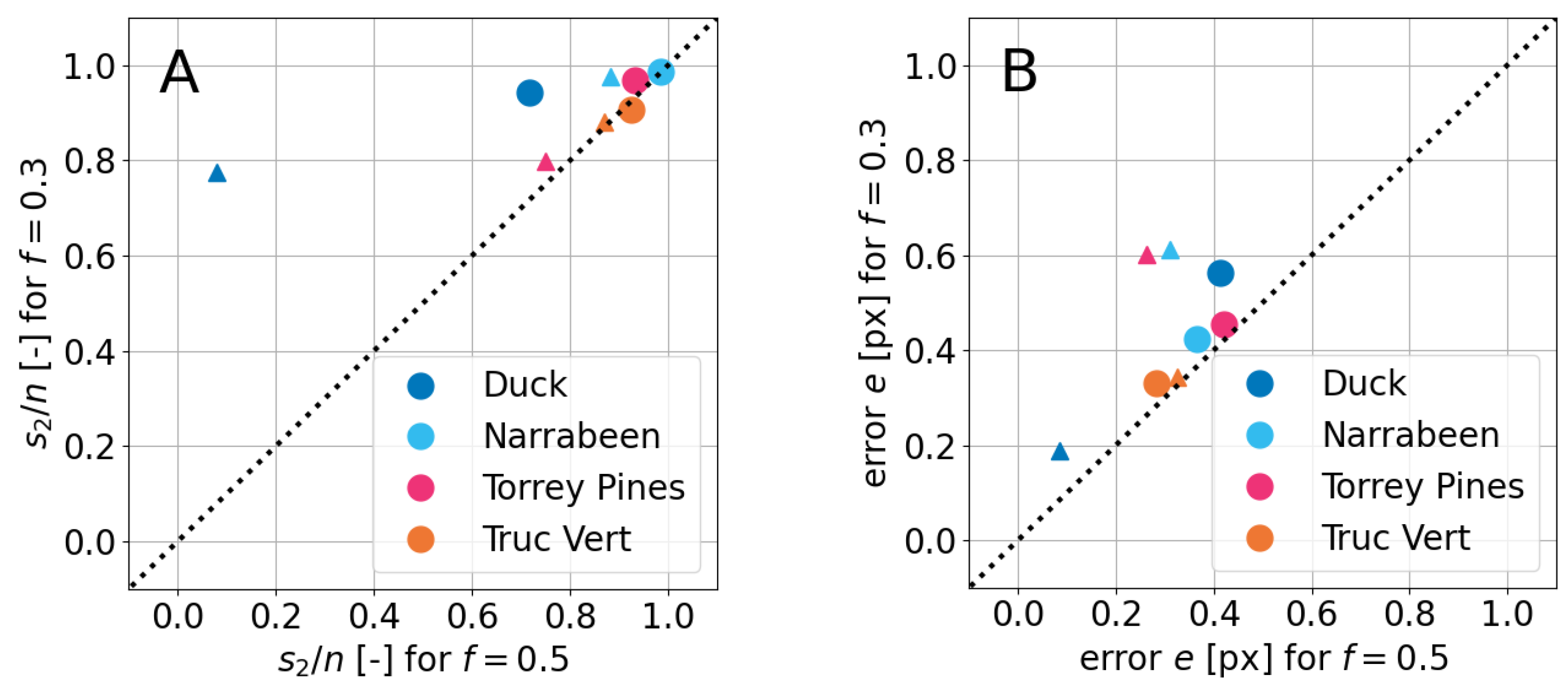

4.1. On the Influence of f

4.2. Null Rotation Case

4.3. Very Large Datasets

5. Conclusions

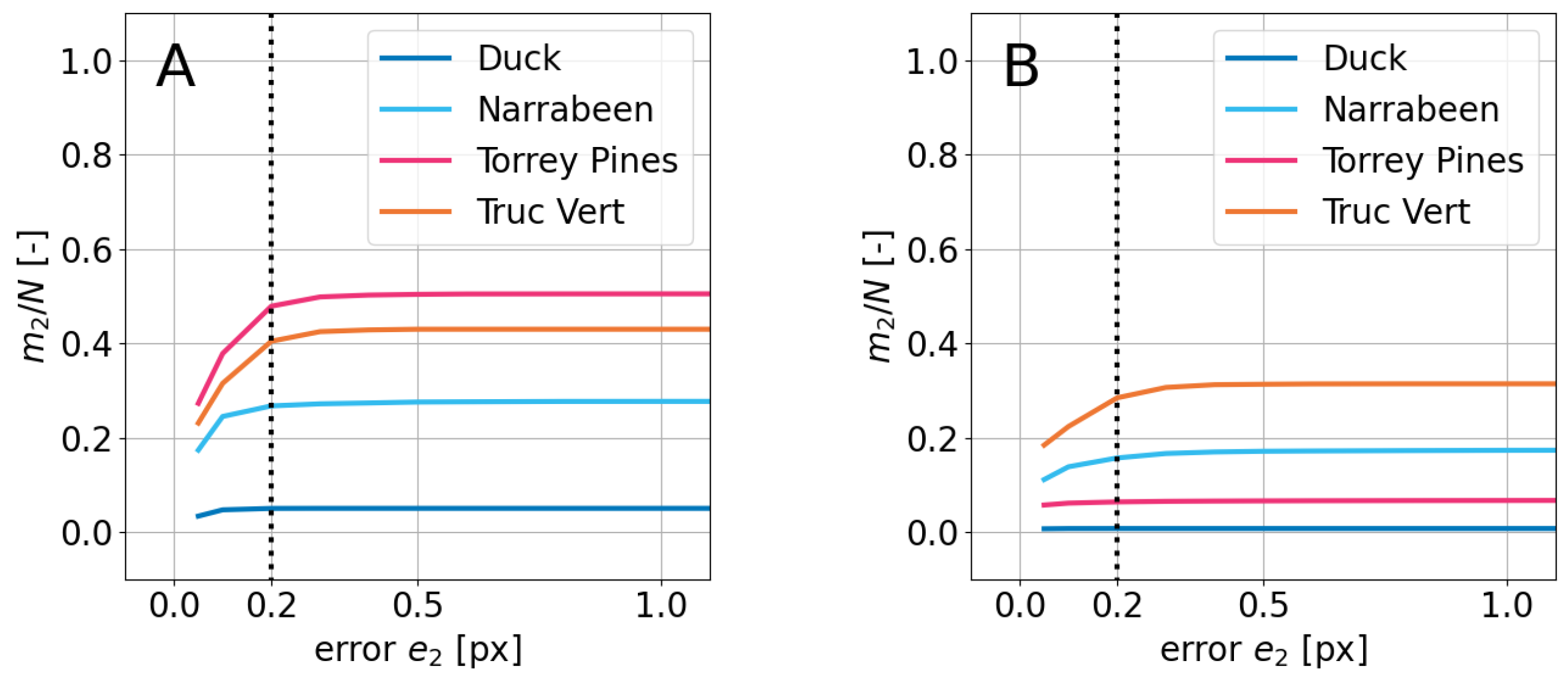

- Improved Image Alignment: The co-registration method, based on ORB/SIFT, local cross-correlation, and RANSAC, improves the alignment of satellite images, potentially reducing georeferencing errors from 1 px to at most 0.4 px (and probably less, on the order of 0.2 px) in the tested 2020–2023 images of four sites. This could significantly benefit shoreline detection by reducing its present-day errors of 0.5–1 px, and thereby enhance coastal monitoring.

- Outlier Reduction: The RANSAC-based filtering process appears to help eliminate erroneous pixel-pair connections as well as bad image transformations, making the transformations more reliable across most of the image set.

- Rotation Handling: The ability to account for image rotation, especially in cases involving different projections (e.g., Gandia), underscores the method’s flexibility. This suggests that the approach may work well even under challenging conditions.

- Flexibility and Applicability: The approach can be applied to different coastal environments and image resolutions, as demonstrated by the present examples across multiple locations. Moreover, it adapts to various datasets with minimal user input.

- Potential Applications: While further validation is needed, the method holds potential for applications in coastal management, disaster preparedness, and studying climate change impacts, particularly for monitoring short-term events such as storms and long-term shoreline evolution.

- Future Improvements: The method could benefit from future advances in feature-matching algorithms to further enhance its accuracy and efficiency. Other possible improvements include optimizing the algorithm for very large datasets and integrating additional environmental data. The open-source nature of the tool could foster further development and broader applications within the remote sensing community.
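As a reading aid, the pixel errors quoted above convert to ground distances through the pixel size $\Delta x$; for the 10-m and 30-m resolutions considered here, this simple product gives:

$$
\varepsilon_{\mathrm{m}} = \varepsilon_{\mathrm{px}}\,\Delta x:\qquad
\begin{array}{c|ccc}
\Delta x & 1\ \mathrm{px} & 0.4\ \mathrm{px} & 0.2\ \mathrm{px}\\ \hline
10\ \mathrm{m} & 10\ \mathrm{m} & 4\ \mathrm{m} & 2\ \mathrm{m}\\
30\ \mathrm{m} & 30\ \mathrm{m} & 12\ \mathrm{m} & 6\ \mathrm{m}
\end{array}
$$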

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AoI | Area of Interest |
| ESA | European Space Agency |
| GEE | Google Earth Engine |
| NASA | National Aeronautics and Space Administration (USA) |
| ORB | Oriented FAST and Rotated BRIEF |
| RANSAC | RANdom SAmple Consensus |
| RMSE | Root Mean Squared Error |
| SDS | Satellite-Derived Shorelines |
| SIFT | Scale-Invariant Feature Transform |


| Location | Lon [°] | Lat [°] | Width [km] | Height [km] |
|---|---|---|---|---|
| Duck | | | | |
| Narrabeen | | | | |
| Torrey Pines | | | | |
| Truc Vert | | | | |
| Gandia | | | | |

| Location | 10-m resolution | 30-m resolution |
|---|---|---|
| Duck | 177 | 200 |
| Narrabeen | 142 | 163 |
| Torrey Pines | 219 | 259 |
| Truc Vert | 53 | 93 |
| Gandia | 201 | 275 |

| Step | rot | f | d | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| feature pairing (I) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | — | — | — |
| feature pairing (II) | — | ✓ | — | ✓ | ✓ | ✓ | ✓ | — | — |
| image clustering (I) | — | — | — | — | — | — | — | ✓ | — |
| image clustering (II) | — | ✓ | — | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| default values | 1000 | free | 50 | 5 | 2 | 500 | | | |

| Location | Pre co-registration, 10-m | Pre co-registration, 30-m | Post co-registration, 10-m | Post co-registration, 30-m |
|---|---|---|---|---|
| Duck | | | | |
| Narrabeen | | | | |
| Torrey Pines | | | | |
| Truc Vert | | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Simarro, G.; Calvete, D.; Ribas, F.; Castillo, Y.; Puig-Polo, C. UOrtos: Methodology for Co-Registration and Subpixel Georeferencing of Satellite Imagery for Coastal Monitoring. Remote Sens. 2025, 17, 1160. https://doi.org/10.3390/rs17071160
