Abstract

Few-shot object detection (FSOD) in remote sensing images (RSIs) faces challenges such as data scarcity, difficulty in detecting small objects, and underutilization of frequency-domain information. Existing methods often rely on spatial-domain features, neglecting the complementary insights from low- and high-frequency characteristics. Additionally, their performance in detecting small objects is hindered by inadequate feature extraction in cluttered backgrounds. To tackle these problems, we propose a novel detection framework of Spatial-Frequency Interaction and Distribution Matching (SFIDM), which significantly enhances FSOD performance in RSIs. SFIDM focuses on rapid adaptation to target datasets and efficient fine-tuning with limited data. First, to improve feature representation, we introduce the Spatial-Frequency Interaction (SFI) module, which leverages the complementarity between low-frequency and high-frequency information. By decomposing input images into their frequency components, the SFI module extracts features critical for classification and precise localization, enabling the framework to capture fine details essential for detecting small objects. Secondly, to resolve the limitations of traditional label assignment strategies when dealing with small bounding boxes, we construct the Distribution Matching (DM) module, which models bounding boxes as 2D Gaussian distributions. This allows for the accurate detection of subtle offsets and overlapping or non-overlapping small objects. Moreover, to leverage the learned base-class information for improved performance on novel class detection, we employ a feature reweighting module, which adaptively fuses features extracted from the backbone network to generate representations better suited for downstream detection tasks. We conducted extensive experiments on two benchmark FSOD datasets to demonstrate the effectiveness and performance improvements achieved by the proposed SFIDM framework.

1. Introduction

With the rapid advancement of satellite technology and the proliferation of large-scale image datasets [1,2,3], deep learning has become a cornerstone of the interpretation of remote sensing images (RSIs), encompassing key tasks such as object detection [4,5,6], image classification [7,8,9], object recognition [10,11,12], image segmentation [13,14,15], and object tracking [16]. Among these, object detection (OD) serves as a foundational task in RSI analysis by providing instance-level information on object categories and locations, thereby supporting downstream applications such as environmental monitoring [17], urban planning [15], and disaster management [18].

Although recent advances in computer vision have greatly enhanced the analytical capabilities of RSIs, these achievements typically rely on large-scale annotated datasets [19]. In the remote sensing domain, the acquisition of such datasets is constrained by high costs and labor-intensive annotation processes, further limiting the construction of large, high-quality datasets [20,21]. Moreover, the increasing complexity of object detection models necessitates even larger datasets to fully exploit their potential. This disparity between the scarcity of RSI data and the demands of modern models poses a significant challenge, often leading to overfitting or performance degradation, especially for the OD task.

To tackle the unique challenges of RSIs, including large intra-class variance, high inter-class similarity, scale variations, sparse annotations, and imbalanced data distributions, researchers have explored innovative approaches such as advanced feature extraction, optimized model architectures, and customized training strategies [22,23,24,25,26]. Despite these efforts, the complexity of RSIs and the scarcity of labeled data continue to hinder the progress of OD in this domain, often resulting in feature misalignment, incomplete supervision, and attention biases. Few-Shot Object Detection (FSOD) has emerged as a promising solution, enabling models to generalize to new categories with minimal labeled examples by leveraging knowledge from data-rich base categories. Fine-tuning-based FSOD methods, in particular, have garnered significant attention [27,28,29] due to their streamlined architectures, minimized dependency on structured data, and enhanced practical applicability. These methods typically involve a two-stage process: training on base categories to learn generalizable feature representations, followed by fine-tuning on novel categories with limited samples. However, directly applying FSOD techniques to RSIs presents additional challenges due to the significant variability in object sizes within the same scene. Objects in RSIs can range from small vehicles to large infrastructure, making it challenging for models to effectively capture features across such diverse scales. Notably, the extreme case of FSOD, One-Shot Object Detection (OSOD), further intensifies the challenge because only a single annotated instance is available, especially under the inherent scale variations of RSIs [30,31,32,33,34]. Integrating segmentation methods has also emerged as a direction in few-shot object detection [35,36,37,38,39,40]. Incorporating segmentation-assisted modules has shown promise in few-shot object detection by providing pixel-level foreground guidance to suppress background noise and enhance feature discriminability [41]. While segmentation-guided approaches could theoretically benefit both FSOD and OSOD scenarios, such methods often require additional segmentation annotations during training, which may introduce higher annotation costs or depend on weakly supervised approximations. Furthermore, existing FSOD and OSOD methods often fail to fully explore how frequency-domain information can be effectively integrated with spatial-domain features to improve detection performance. These challenges underscore the need for FSOD models specifically tailored to the unique scale variations and characteristics of RSIs, by incorporating domain-specific optimizations to enhance detection accuracy under data-limited conditions.

Building on these advancements, we propose a novel FSOD framework tailored for RSIs: Spatial-Frequency Interaction and Distribution Matching (SFIDM). Our approach integrates spatial and frequency-domain interactions with a robust distribution matching mechanism to address the unique challenges of FSOD in RSIs. We summarize our contributions as follows:

- To effectively leverage complementary frequency information, we introduce a mechanism that decomposes images into low-frequency and high-frequency components. Low-frequency components capture global structural patterns essential for classification, while high-frequency components focus on fine details crucial for precise localization. This design enhances the detection of small objects, particularly in complex backgrounds where traditional methods struggle.

- To improve label assignment, we employ a novel strategy of distribution matching that models bounding boxes as 2D Gaussian distributions. By utilizing Kullback–Leibler (KL) divergence [42] as a localization metric, this approach overcomes the limitations of traditional IoU-based heuristics, enabling accurate detection of subtle offsets and addressing the challenges posed by small or overlapping objects.

- We conducted experiments on publicly available datasets, DIOR and NWPU VHR-10, and performed comparative analyses between our proposed method and previous approaches. The results demonstrate the effectiveness and superiority of SFIDM. Furthermore, as a plug-and-play model, SFIDM can be seamlessly integrated with different backbone networks and detection frameworks, showcasing its versatility and adaptability.

The rest of this paper is organized as follows: Section 2 reviews the related work, focusing on few-shot object detection (FSOD) and its application in remote sensing scenarios. Section 3 presents our proposed method, detailing the overall framework and the design of its individual modules. Section 4 discusses the experimental results, highlighting the performance of our approach on the NWPU VHR-10 and DIOR datasets. Finally, Section 5 concludes the paper, summarizing the key contributions and findings.

2. Related Work

2.1. Few-Shot Object Detection in Natural Images

Few-Shot Object Detection (FSOD) aims to identify new or previously unseen object categories using only a small number of labeled examples. The primary challenge of FSOD lies in efficiently transferring existing knowledge to scenarios with limited data while maintaining strong generalization capabilities [29,43,44,45]. Currently, FSOD methods can be broadly categorized into three approaches: transfer learning-based methods, metric learning-based methods, and meta-learning-based methods.

2.1.1. Transfer Learning-Based Methods

Transfer learning-based methods fine-tune pre-trained models by transferring object detection models trained on base-class datasets to new classes [46,47,48,49]. The main advantage of this approach is that it eliminates the need to design complex training tasks, instead optimizing detection performance for new classes by leveraging transferred knowledge. Specifically, these methods utilize model parameters pre-trained on large-scale base-class datasets and transfer them to new-class data, enabling efficient object detection under few-shot conditions.

For example, LSTD [50], one of the early transfer learning methods, significantly improved detection accuracy by extracting rich features from base-class data and effectively transferring these features to target data. Meanwhile, TFA [51] simplified the training process by only fine-tuning the classification head and normalizing features using cosine similarity, successfully reducing intra-class variance and improving detection performance for new classes. Additionally, FSCE [52] introduced contrastive loss and optimized proposal encoding, further enhancing the discriminative ability of new-class features.

Although transfer learning methods show promising results, several challenges remain in few-shot object detection. In particular, reducing category confusion between new and base classes and enhancing feature representations for new classes remain critical issues. Another significant challenge is maintaining base-class performance while improving new-class detection. Further research is therefore needed on category confusion and feature degradation, so that improvements in new-class detection do not come at the expense of base-class performance.

2.1.2. Metric Learning-Based Methods

Metric learning-based methods aim to improve the ability to differentiate between various object categories by learning their similarities in the feature space, especially for the bounding-box classification tasks. These methods frame FSOD as a few-shot classification problem, employing a “comparison” strategy to address the issue of data scarcity. The core idea is to optimize feature representations through metric learning, providing effective classification cues for object detection. Optimization primarily focuses on three aspects: (1) enhancing the feature representation of support set images through class prototype representations [53,54], (2) improving inter-class separability and enhancing intra-class consistency through improved metric mechanisms [55,56], and (3) designing suitable loss functions to further optimize the model’s learning ability [32,57].

Note that object detection not only requires accurate classification but also precise localization of the target regions. Effective class comparison can only occur once the target’s location is determined. Therefore, metric learning-based detection must integrate target region capture mechanisms into the traditional metric learning framework. For instance, RepMet [55] models categories using Gaussian Mixture Models and optimizes the intra-class and inter-class distance differences through embedding loss, enhancing inter-class separability. Attention RPN [58] leverages support features to enhance region proposal generation, significantly reducing background noise interference. Although metric learning-based methods show significant advantages in feature representation and inter-class distinction, their performance still depends on the optimization of prototype design, metric mechanisms, and loss functions. Therefore, combining target localization techniques while retaining the advantages of metric learning remains an important direction in this line.

2.1.3. Meta-Learning-Based Methods

Meta-learning-based methods play a crucial role in FSOD. The core idea is to simulate a series of similar few-shot tasks, transferring prior knowledge learned from data-rich base classes to data-scarce new classes, thus addressing the issue of insufficient samples. In the meta-learning framework, the model is trained on tasks, typically by sampling both tasks and data to construct diverse new few-shot tasks. This strategy enables the model to quickly update parameters using a few support-set samples, achieving rapid generalization to new tasks, and, in some cases, even eliminating the need for further fine-tuning.

Meta-learning methods have shown significant success in FSOD. For example, MetaYOLO [43] improves the model’s adaptability to new classes by generating weight coefficients from support samples and dynamically adjusting the weight of query features. FsDetView [59] proposed a feature aggregation strategy that combines query and support features in a weighted manner, effectively enhancing detection performance for new classes. However, meta-learning methods are typically complex in design, requiring dynamic adjustments of multiple tasks during training. This may lead to convergence difficulties during iterations, limiting their practical application. Additionally, although these methods perform well in few-shot tasks, they are often constrained by low training efficiency and limited generalization ability.

Generally, few-shot object detection is a challenging research area, with ongoing advancements in methods and the combination of various strategies, such as integrating transfer learning with metric learning, to enhance the model’s ability to learn features for new classes. Other challenges include optimizing training efficiency, reducing category confusion, etc. Addressing these issues will be crucial to advancing the field of FSOD.

2.2. Few-Shot Object Detection in Remote Sensing Images

Remote sensing images (RSIs) have distinct characteristics, such as complex backgrounds, multi-directional objects, and significant scale variations, making the detection of small objects from these images a formidable challenge. Due to the significant differences between remote sensing and natural image acquisition principles, traditional object detection methods designed for natural images often struggle in remote sensing scenarios, especially when dealing with small objects and large-scale variations. Small objects are particularly prominent in remote sensing images and are often obscured by complex background information, making their detection difficult [60,61,62].

Focusing on data augmentation strategies, several studies have presented different solutions to small object detection in remote sensing images. For instance, Zhang et al. [63] introduced a directional feature enhancement method based on a dual-pipeline solution. This method enhances features by rotating single samples at multiple angles, which alleviates the issue of excessive intra-class variability. Cheng et al. [64] proposed a data augmentation strategy by randomly rotating each object instance five times at different angles, aiming to increase the diversity of the samples. However, while these methods somewhat mitigate the problem, the effectiveness of this augmentation approach is limited because the rotated samples still bear significant similarities to the original samples. In another line, several studies [65,66] integrated multi-scale feature fusion techniques to detect objects at various scales and enrich the diversity of feature representations. Attention mechanisms and learning strategies have been widely researched in few-shot settings. Huang et al. [67] proposed a few-shot fine-tuning network with a shared attention module (SAM) for object detection in remote sensing images. Xiao et al. [68] proposed a few-shot object detection method with a self-adaptive attention network (SAAN) tailored for remote sensing images. Liu et al. [69] proposed a novel few-shot object detection method that addresses inconsistent labeling by introducing a coarse-to-fine regression method (Gradual RPN) to enhance region proposal network (RPN) recall. Li et al. [70] proposed MM-RCNN, a meta-memory-based few-shot object detection approach that uses a memory module to store category-specific knowledge and memory-based external attention (MEA) to aggregate information. Zhang et al. [71] proposed ST-FSOD, a self-training-based few-shot object detection method using a two-branch RPN and a student-teacher mechanism to include confident unlabeled targets as pseudo-labels. Zang et al. [72] proposed a Gaussian-scale enhancement (GSE) strategy and a multi-branch patch-embedding attention aggregation (MPEAA) module for cross-scale few-shot object detection in remote sensing images. Meanwhile, small sample detection in RSIs has also spurred innovative approaches, including weakly supervised learning to reduce annotation costs and cross-modal fusion to compensate for limited training data [73,74,75,76,77,78,79,80]. Despite their positive impact on enhancing sample diversity and detection capabilities, these methods mostly remain limited to spatial-domain features.

Building on the above observations, we propose the Spatial-Frequency Interaction and Distribution Matching (SFIDM) network to address the challenges of FSOD in remote sensing images. SFIDM leverages spatial-frequency interactions and a KL-divergence-based selection mechanism to enhance few-shot detection accuracy, particularly for small objects in complex scenes. By effectively utilizing low- and high-frequency information for recognition and localization, SFIDM outperforms existing methods, offering a robust solution for few-shot detection tasks.

3. Our Method

3.1. Preliminary Knowledge

In the task of few-shot object detection (FSOD) in remote sensing images, the dataset is divided into two parts: the base class set $D_{\mathrm{base}}$ and the novel class set $D_{\mathrm{novel}}$, each consisting of pairs $(x, y)$. Here, $x$ represents the input data, and $y$ denotes the corresponding labels. The categories of the base class set and the novel class set are disjoint, i.e., $C_{\mathrm{base}} \cap C_{\mathrm{novel}} = \emptyset$.

The training process involves two stages. In the first stage, the model is trained on the base class set $D_{\mathrm{base}}$ to learn generalizable feature representations and initialize model parameters. In the second stage, $K$ samples (i.e., $K$-shot) are randomly selected from $D_{\mathrm{base}}$ and $D_{\mathrm{novel}}$ for each category to fine-tune the model, enabling it to adapt to novel class detection tasks. This two-stage training framework allows the model to fully utilize the labeled data of the base classes and transfer the learned features to the novel classes. This protocol provides an effective solution to the few-shot object detection challenges in remote sensing images.
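The sampling step of the second stage can be illustrated with a minimal sketch. The annotation format (a list of `(image_id, category)` pairs), the function name `sample_k_shot`, and the category names are assumptions made for illustration only; they are not taken from the SFIDM implementation.

```python
import random
from collections import defaultdict

def sample_k_shot(annotations, k, seed=0):
    """Pick up to k annotated instances per category.

    annotations: list of (image_id, category) pairs (hypothetical format).
    Returns a dict mapping category -> list of selected image_ids.
    """
    rng = random.Random(seed)
    by_category = defaultdict(list)
    for image_id, category in annotations:
        by_category[category].append(image_id)
    support = {}
    for category, image_ids in by_category.items():
        # Sample without replacement; keep everything if fewer than k exist.
        support[category] = rng.sample(image_ids, min(k, len(image_ids)))
    return support

# Toy data: two base classes and one novel class (names are illustrative).
categories = ["airplane"] * 5 + ["ship"] * 4 + ["windmill"] * 3
annotations = [(f"img{i}", c) for i, c in enumerate(categories)]
support = sample_k_shot(annotations, k=3)
```

Fixing the seed keeps the selected support set reproducible across fine-tuning runs, which matters when results for different values of $K$ are compared.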

3.2. General Architecture

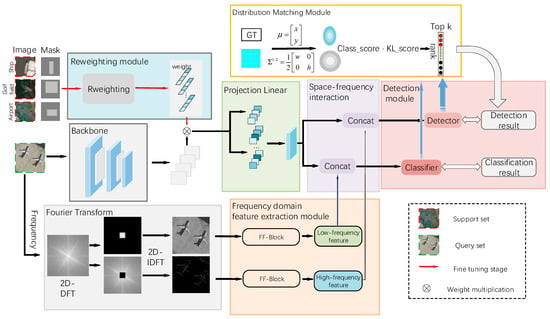

The overall structure of SFIDM, illustrated in Figure 1, emphasizes the interaction between the spatial and frequency-domain information through the integration of respective modules to enhance feature expressiveness. The framework begins with two feature extraction branches. Spatial features are extracted by a backbone network, while the frequency module decomposes the images into high-frequency and low-frequency components by a 2D discrete Fourier transform (2D-DFT). These components, capturing edge details and smooth regions, respectively, are then reconstructed via a 2D inverse discrete Fourier transform (2D-IDFT). Through further feature extractors, the output high-frequency features enhance localization accuracy, and low-frequency features improve classification stability. Frequency-domain features are concatenated with spatial features to provide complementary information for subsequent detection modules. Moreover, in the distribution matching module, a Kullback–Leibler Divergence (KLD) [42] sample selection mechanism is exploited to address biases in traditional label assignment strategies, ensuring fair and precise label assignment. For the detector, we utilize YOLO’s detection and localization capabilities to implement the solution. During base class training, the reweighting module is not applied, while in the few-shot fine-tuning phase, this reweighting network generates weight vectors from base class images, adapting channel features of few-shot images to emphasize critical meta-features for novel object detection. Leveraging these innovative strategies, SFIDM excels in detecting both large and small objects, achieving superior accuracy, adaptability, and generalization in diverse scenarios. The following sections detail the design and implementation of each module.

Figure 1.

The overall architecture of SFIDM. The framework mainly consists of a backbone network, a frequency-domain feature extraction module, a reweighting module, and a distribution matching mechanism. The frequency module extracts complementary high- and low-frequency features, which are then integrated with spatial features provided by the backbone to enhance detection performance. The KL divergence-based matching module refines label assignment and localization, while the reweighting network ensures effective adaptation for few-shot learning tasks. Note that the reweighting module is only active in the fine-tuning stage.

3.3. Frequency-Domain Feature Extraction Module

Frequency-domain information is key to understanding image structure and texture. Analyzing high- and low-frequency components separately can improve detail recovery and image denoising, which makes frequency analysis an effective tool for image processing. In our SFIDM network, we design the frequency-domain feature extraction module to extract frequency-domain features that highlight the different contributions of the low-frequency and high-frequency components. The input image is first transformed with the DFT to obtain its frequency-domain representation. A threshold is then applied to separate the high-frequency and low-frequency components. These components are reconstructed into low-frequency and high-frequency images through the IDFT. The extracted low-frequency features, representing global structural information, are used for target classification, while high-frequency features, capturing fine image details, are leveraged for precise target localization.
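The DFT, threshold, and IDFT steps can be sketched with NumPy as follows. The thresholding rule used here (a circular mask of a given `radius` around the zero-frequency component) and the function name `split_frequencies` are assumptions; the text only states that a threshold separates the two bands.

```python
import numpy as np

def split_frequencies(image, radius):
    """Split a single-channel image into low- and high-frequency images.

    radius: cutoff in frequency-index units (an assumed thresholding rule).
    """
    h, w = image.shape
    # 2D-DFT, with the zero-frequency component shifted to the center.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    low_mask = dist <= radius
    # 2D-IDFT of each band; the masks are symmetric, so the result is real
    # up to floating-point error.
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)).real
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * ~low_mask)).real
    return low, high

rng = np.random.default_rng(0)
image = rng.random((64, 64))
low, high = split_frequencies(image, radius=8)
```

Because the two masks are complementary and the transform is linear, `low + high` recovers the input image, so no information is discarded by the decomposition itself.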

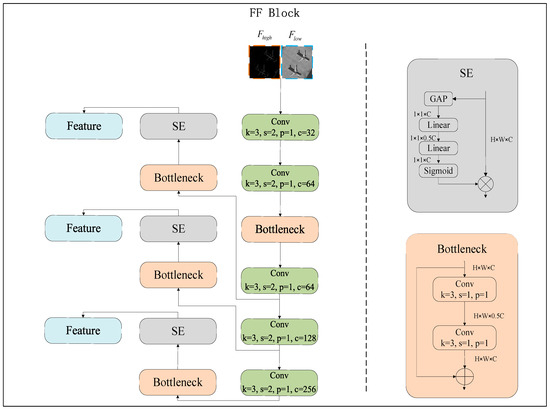

FF-Block is the core component of the frequency domain feature extraction module. In this module, the reconstructed high-frequency and low-frequency images are processed by their respective FF-Block for feature extraction. These extracted features are then integrated into the Spatial-Frequency Interaction module, where they are concatenated and interact with the corresponding spatial information. The structural design of the FF-Block is illustrated in Figure 2. Mathematically, the features produced by the FF-Block can be represented as:

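$$F = \mathrm{DFT}(I), \qquad f_{\mathrm{low}} = \Phi_{\mathrm{low}}\big(\mathrm{IDFT}(F_{l})\big), \qquad f_{\mathrm{high}} = \Phi_{\mathrm{high}}\big(\mathrm{IDFT}(F_{h})\big)$$

In the FF-Block, the process begins with the Fourier-transformed features $F$ of the input image $I$, which are separated by the threshold $\tau$ into the low-frequency part $F_{l}$ and the high-frequency part $F_{h}$. The low-frequency features $f_{\mathrm{low}}$ are obtained through the low-frequency feature extractor $\Phi_{\mathrm{low}}$, while the high-frequency features $f_{\mathrm{high}}$ are extracted using the high-frequency feature extractor $\Phi_{\mathrm{high}}$.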

Figure 2.

The structure of FF-Block. By extracting high-frequency and low-frequency features via separate modules, it aims to optimize frequency-domain data representation for classification and localization.

The FF-Block processes single-channel images using a combination of convolution layers, Batch Normalization (BatchNorm), ReLU activation, Bottleneck layers, and Squeeze-and-Excitation (SE) modules [81] to efficiently extract and represent features. The Bottleneck layers compress intermediate dimensions, reducing model parameters while preserving critical information. The SE modules enhance feature extraction by dynamically recalibrating channel-wise feature responses, focusing on inter-channel dependencies to highlight meaningful features. To ensure compatibility with the backbone, input images in both the low-frequency and high-frequency domains undergo downsampling, aligning the dimensions of their feature maps with those of the backbone. Notably, the FF-Block parameters for low- and high-frequency features are independent, allowing the network to tailor low-frequency features for robust classification and high-frequency features for precise localization. In the final Spatial-Frequency Interaction stage, the spatial feature extracted from the backbone is concatenated with low-frequency and high-frequency features, respectively. This comprehensive fusion enhances the model’s capacity to handle diverse classification and localization tasks, significantly improving its performance in object detection.
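The channel recalibration performed by an SE module can be sketched in a few lines of NumPy. This is a generic squeeze-and-excitation step with randomly initialized weights, not the FF-Block itself; the reduction ratio `r`, the weight shapes, and the function name `squeeze_excite` are illustrative assumptions.

```python
import numpy as np

def squeeze_excite(features, w1, w2):
    """Generic SE step. features: (C, H, W); w1: (C // r, C); w2: (C, C // r)."""
    squeezed = features.mean(axis=(1, 2))          # squeeze: global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)        # channel reduction + ReLU
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # channel expansion + sigmoid, in (0, 1)
    # Excite: scale every channel by its gate.
    return features * gates[:, None, None], gates

rng = np.random.default_rng(0)
C, r = 8, 4
features = rng.standard_normal((C, 16, 16))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
out, gates = squeeze_excite(features, w1, w2)
```

Each channel is multiplied by a single scalar gate, so the spatial layout of the feature map is preserved and only the relative importance of channels changes.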

3.4. Distribution Matching Module

According to natural observation, the distribution of foreground and background pixels in small objects is often concentrated near the center and edges of their bounding boxes. Unlike IoU and other region-based positioning metrics, which struggle to evaluate both overlapping and non-overlapping bounding boxes, a distribution matching module is presented in this paper to assess positioning quality. To better capture the distribution in the feature space, we transform the parameterized bounding box into a two-dimensional Gaussian distribution, encoding both the center and boundary variations. This approach ensures that the results of bounding box regression are independent of the target size, addressing the limitations of traditional allocation strategies that tend to favor larger targets. Furthermore, this module effectively measures the offsets in non-overlapping bounding boxes and improves small target detection performance during training.

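The probability density function (PDF) of the two-dimensional Gaussian distribution is defined as follows:

$$f(\mathbf{x} \mid \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \frac{\exp\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^{\top}\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right)}{(2\pi)^{d/2}\,|\boldsymbol{\Sigma}|^{1/2}}$$

where $\mathbf{x}$ represents the coordinates of the bounding box (e.g., the center point) and $d$ is its dimensionality. $\boldsymbol{\mu}$ is the mean vector of the two-dimensional Gaussian distribution, representing the center position of the bounding box. $\boldsymbol{\Sigma}$ is the covariance matrix of the two-dimensional Gaussian distribution, describing the boundary shape (e.g., width and height) and variations.

Using this formulation, the bounding box is modeled as a probabilistic distribution to describe the spatial relationship between predicted and ground truth bounding boxes. When $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ are fixed, the formula is related only to the quadratic form in the exponent. With the eigendecomposition $\boldsymbol{\Sigma} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{\top}$, this quadratic form becomes

$$(\mathbf{x}-\boldsymbol{\mu})^{\top}\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu}) = \mathbf{y}^{\top}\boldsymbol{\Lambda}^{-1}\mathbf{y}, \qquad \mathbf{y} = \mathbf{U}^{\top}(\mathbf{x}-\boldsymbol{\mu})$$

Here, $\mathbf{y}$ is the result obtained after transformation by matrix $\mathbf{U}$, commonly used for simplifying bounding box position calculations. Written out per component,

$$\mathbf{y}^{\top}\boldsymbol{\Lambda}^{-1}\mathbf{y} = \sum_{i=1}^{d} \frac{y_i^{2}}{\lambda_i}$$

where $\lambda_i$ represents the eigenvalues of $\boldsymbol{\Sigma}$, which weight the positional differences along each principal axis, and $d$ denotes the dimensionality.

Note that in the special case of $d = 2$, a contour of constant density satisfies, for a constant $r$:

$$\frac{y_1^{2}}{\lambda_1} + \frac{y_2^{2}}{\lambda_2} = r^{2}$$

Now the Gaussian distribution projects as an ellipse on the plane, where the major and minor axes are proportional to $\sqrt{\lambda_1}$ and $\sqrt{\lambda_2}$.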

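In bounding box representation, a horizontal box $(c_x, c_y, w, h)$ (center coordinates, width, and height) can be modeled as a 2D Gaussian distribution $\mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ with:

$$\boldsymbol{\mu} = \begin{bmatrix} c_x \\ c_y \end{bmatrix}, \qquad \boldsymbol{\Sigma} = \begin{bmatrix} \dfrac{w^{2}}{4} & 0 \\ 0 & \dfrac{h^{2}}{4} \end{bmatrix}$$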

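Although earlier research used the Gaussian Wasserstein distance (GWD) to measure distribution differences, its lack of scale invariance limits localization capabilities. Here, we use Kullback–Leibler Divergence (KLD) [42] to measure the difference between the two distributions $\mathcal{N}_p(\boldsymbol{\mu}_p, \boldsymbol{\Sigma}_p)$ and $\mathcal{N}_t(\boldsymbol{\mu}_t, \boldsymbol{\Sigma}_t)$ as:

$$D_{\mathrm{KL}}(\mathcal{N}_p \,\|\, \mathcal{N}_t) = \frac{1}{2}(\boldsymbol{\mu}_p-\boldsymbol{\mu}_t)^{\top}\boldsymbol{\Sigma}_t^{-1}(\boldsymbol{\mu}_p-\boldsymbol{\mu}_t) + \frac{1}{2}\mathrm{Tr}\left(\boldsymbol{\Sigma}_t^{-1}\boldsymbol{\Sigma}_p\right) + \frac{1}{2}\ln\frac{\det \boldsymbol{\Sigma}_t}{\det \boldsymbol{\Sigma}_p} - 1$$

The terms $\boldsymbol{\mu}_p$ and $\boldsymbol{\mu}_t$ represent the mean vectors of the predicted and ground truth bounding boxes, respectively, while $\boldsymbol{\Sigma}_p$ and $\boldsymbol{\Sigma}_t$ denote their covariance matrices. The determinant of a matrix is indicated by “det”, and the trace operator, “Tr”, sums the diagonal elements of the matrix.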

This equation quantitatively measures positional and shape differences between the predicted and ground truth bounding boxes. By calculating KLD, the difference between two Gaussian distributions is effectively represented. Although IoU is still widely used as a baseline for evaluation, it is primarily a simplified metric. In this work, the adoption of KLD measure demonstrates the advantages of precise distribution matching, especially for small and complex objects.
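A small numerical sketch shows why a KLD-based measure remains informative where IoU does not. It assumes the common convention of modeling a horizontal box $(c_x, c_y, w, h)$ as a Gaussian with mean $(c_x, c_y)$ and covariance $\mathrm{diag}(w^2/4, h^2/4)$, and uses the standard closed-form KL divergence between two 2D Gaussians; the function names are illustrative.

```python
import numpy as np

def box_to_gaussian(cx, cy, w, h):
    """Model a horizontal box as N(mu, Sigma) (assumed convention)."""
    mu = np.array([cx, cy], dtype=float)
    sigma = np.diag([w ** 2 / 4.0, h ** 2 / 4.0])
    return mu, sigma

def kld(box_p, box_t):
    """KL divergence from the predicted box Gaussian to the target one (d = 2)."""
    mu_p, sig_p = box_to_gaussian(*box_p)
    mu_t, sig_t = box_to_gaussian(*box_t)
    inv_t = np.linalg.inv(sig_t)
    diff = mu_p - mu_t
    return (0.5 * diff @ inv_t @ diff
            + 0.5 * np.trace(inv_t @ sig_p)
            + 0.5 * np.log(np.linalg.det(sig_t) / np.linalg.det(sig_p))
            - 1.0)

target = (0.0, 0.0, 4.0, 4.0)
near = (10.0, 0.0, 4.0, 4.0)   # does not overlap the target
far = (20.0, 0.0, 4.0, 4.0)    # does not overlap the target either
kld_same = kld(target, target)
kld_near = kld(near, target)
kld_far = kld(far, target)
```

Both `near` and `far` have an IoU of zero with the target, yet their divergences differ, so the measure still ranks non-overlapping candidates by how far off they are.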

3.5. Reweighting Module

Our feature reweighting module aims to extract meta-knowledge from support images. To achieve this, we designed a lightweight convolutional neural network (CNN) that maps each support image to a set of reweighting vectors, with each scale corresponding to one reweighting vector. These reweighting vectors are used to adjust the contributions of meta-features, highlighting the features that are crucial for detecting new targets.

Assume that the support samples come from $N$ object categories. Our feature reweighting module takes support images and their masks as inputs. For each of the $N$ categories, a single support image $I_j$ and its corresponding bounding box annotation $M_j$ are randomly selected from the support set. Then, our feature reweighting module $\mathcal{M}$ maps them to a category-specific representation $w_{ij} = \mathcal{M}(I_j, M_j)$, where $i$ indexes the feature scale and $j \in \{1, \dots, N\}$ indexes the category. The reweighting vector $w_{ij}$ is used to reweight the meta-features, emphasizing information relevant to the scale and category.

Table 1 presents the network architecture of our feature reweighting module. The output reweighting vectors are obtained from each Global MaxPooling layer, and each reweighting vector matches the dimensions of the corresponding meta-feature. After obtaining the meta-features $F_i$ and reweighting vectors $w_{ij}$, the category-specific reweighted feature map $F_{ij}$ is calculated using the following formula:

$$F_{ij} = F_i \otimes w_{ij}$$

where $\otimes$ denotes element-wise multiplication. $w_{ij}$ is the convolutional weight, whose range is from 0 to 1. The reweighting coefficients focus on weight calibration from the support feature set, dynamically adjusting the feature importance. After the reweighting stage, the feature map generated by the reweighting module is assigned to the corresponding object category within the support images.
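The reweighting step can be sketched for a single scale as a broadcast multiplication between one meta-feature map and one reweighting vector per category. The array shapes and the function name `reweight` are assumptions for illustration; the toy vectors are hand-picked values in the range 0 to 1 rather than outputs of the reweighting network.

```python
import numpy as np

def reweight(meta_features, reweighting_vectors):
    """Apply one reweighting vector per category to a meta-feature map.

    meta_features: (C, H, W) features at one scale.
    reweighting_vectors: (N, C), one vector per category, values in [0, 1].
    Returns (N, C, H, W): one reweighted feature map per category.
    """
    return meta_features[None, :, :, :] * reweighting_vectors[:, :, None, None]

meta = np.ones((4, 2, 2))
vectors = np.array([[0.0, 0.5, 1.0, 0.25],   # category 0 suppresses channel 0
                    [1.0, 1.0, 1.0, 1.0]])   # category 1 keeps every channel
reweighted = reweight(meta, vectors)
```

The same meta-features therefore yield a different feature map for each category, which is what allows the detection head to be conditioned on the support examples.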

Table 1.

Detailed layers and blocks of reweighting module.

The reweighting module in our framework is designed to dynamically adjust feature importance across multiple scales to enhance the detection of small and diverse objects. It incorporates a hierarchical structure of convolutional layers, max-pooling layers, dilated convolutions, residual blocks, and global pooling operations. The convolutional layers extract features at various resolutions, while max-pooling layers reduce spatial dimensions, enabling the network to capture contextual information. Dilated convolutions expand the receptive field without increasing the number of parameters, which is crucial for capturing fine-grained details in small objects. Residual blocks ensure efficient gradient flow and allow the network to learn deeper representations. Furthermore, the inclusion of global max pooling aggregates global information, ensuring robust feature recalibration and better feature representation across channels. This multi-layered approach ensures that the reweighting module effectively integrates local and global information, enhancing the overall detection performance in handling complex and cluttered scenes.

3.6. Loss

The total loss function of the model includes classification loss, regression loss, and DFL (Distribution Focal Loss).

3.6.1. Classification Loss

The classification loss is calculated using the cross-entropy loss. Cross-entropy loss measures the difference between the predicted and ground truth labels for each category. Specifically, for each target in detection, the model predicts the confidence of each category, and the cross-entropy loss compares these predictions with the ground truth labels.

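The calculation formula is as follows:

$$L_{cls} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log\left(p_{i,c}\right)$$

where $N$ is the total number of samples, $C$ is the total number of categories, $y_{i,c}$ is the ground truth label, $p_{i,c}$ is the predicted confidence of sample $i$ belonging to category $c$, and $\log$ denotes the natural logarithm. If sample $i$ belongs to category $c$, then $y_{i,c} = 1$; otherwise $y_{i,c} = 0$.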
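As a rough illustration of the cross-entropy computation described above, the following sketch averages the negative log-confidence of the true category over all samples. The function name `cross_entropy_loss`, the averaging over samples, and the clipping constant `eps` are assumptions made for this example and are not taken from the paper's implementation.

```python
import math


def cross_entropy_loss(probs, labels, eps=1e-12):
    """Mean cross-entropy over all samples.

    probs[i][c]  : predicted confidence that sample i belongs to category c
    labels[i][c] : one-hot ground truth (1 if sample i is in category c, else 0)
    eps          : hypothetical clipping constant to avoid log(0)
    """
    total = 0.0
    for p_row, y_row in zip(probs, labels):
        for p, y in zip(p_row, y_row):
            if y:  # only the true category contributes for one-hot labels
                total -= y * math.log(max(p, eps))
    return total / len(probs)


# Two samples, three categories.
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
labels = [[1, 0, 0], [0, 1, 0]]
loss = cross_entropy_loss(probs, labels)
print(round(loss, 4))
```

The loss shrinks toward zero as the confidence assigned to the correct category approaches 1.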

3.6.2. Regression Loss

The regression loss is calculated using the Distance-IoU (DIoU) loss [82], which is defined as:

$$L_{reg} = 1 - IoU + \frac{\rho^{2}\left(b, b^{gt}\right)}{c^{2}}$$

where $b$ and $b^{gt}$ represent the center positions of the predicted bounding box and the ground truth (GT) bounding box, respectively, $\rho(\cdot)$ denotes the Euclidean distance between the two centers, and $c$ is the diagonal length of the smallest enclosing box covering both the predicted and ground truth bounding boxes. By adding the penalty term $\rho^{2}(b, b^{gt})/c^{2}$, DIoU explicitly optimizes the positional relationship of bounding boxes, leading to better localization accuracy, especially for small objects.
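The DIoU loss described above can be sketched in a few lines. This is a minimal illustration, assuming axis-aligned boxes in `(x1, y1, x2, y2)` format with positive area; the function name `diou_loss` and the box format are choices made for this example.

```python
def diou_loss(pred, gt):
    """DIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2).

    Assumes both boxes have positive width and height.
    """
    # Intersection over Union
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_g = (gt[2] - gt[0]) * (gt[3] - gt[1])
    iou = inter / (area_p + area_g - inter)

    # Squared Euclidean distance between the two box centers
    pcx, pcy = (pred[0] + pred[2]) / 2.0, (pred[1] + pred[3]) / 2.0
    gcx, gcy = (gt[0] + gt[2]) / 2.0, (gt[1] + gt[3]) / 2.0
    rho2 = (pcx - gcx) ** 2 + (pcy - gcy) ** 2

    # Squared diagonal of the smallest box enclosing both boxes
    ew = max(pred[2], gt[2]) - min(pred[0], gt[0])
    eh = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = ew ** 2 + eh ** 2

    return 1.0 - iou + rho2 / c2


overlapping = diou_loss((0, 0, 4, 4), (2, 2, 6, 6))
disjoint = diou_loss((0, 0, 2, 2), (4, 0, 6, 2))
print(overlapping, disjoint)
```

For the disjoint pair, the IoU is zero, yet the center-distance term still yields a loss that varies with how far apart the boxes are. This is the property that makes the penalty useful for small objects whose predicted boxes often miss the ground truth entirely.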

3.6.3. DFL (Distribution Focal Loss)

Distribution Focal Loss (DFL) [83] is used to encourage the predicted bounding box to quickly focus on the ground truth. Each feature map unit predicts its distance to the ground truth bounding box, and this distance is represented as a discrete probability distribution rather than a single value. The calculation formula is as follows:

$$L_{DFL} = -\frac{1}{N}\sum_{n=1}^{N}\left[\left(y_{i+1} - y\right)\log\left(S_{i}\right) + \left(y - y_{i}\right)\log\left(S_{i+1}\right)\right]$$

where $N$ represents the number of samples participating in the calculation, corresponding to the number of feature map units, and $y$ denotes the ground truth center coordinate. $y_{i}$ and $y_{i+1}$ are the two integers closest to $y$, where $y_{i}$ is the smaller integer and $y_{i+1}$ is the larger integer. $S_{i}$ and $S_{i+1}$ indicate the predicted probabilities of the feature map units closest to $y_{i}$ and $y_{i+1}$, respectively. The natural logarithm, denoted as “log”, measures the model’s confidence in the predicted values. The DFL loss function encourages the predicted bounding box to iteratively refine its distance toward the ground truth boundary by minimizing deviations. Its core objective is to use the fractional information of the ground truth center to optimize the predicted bounding box’s location. By leveraging distance-based penalties, DFL ensures more accurate boundary localization.
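To make the role of the two neighboring integers concrete, the sketch below evaluates the DFL term for a single target. It assumes the prediction is a discrete distribution over integer bins `0..n` and that the target lies strictly below the last bin; the function name and the clipping constant `eps` are hypothetical choices for this example.

```python
import math


def distribution_focal_loss(probs, y, eps=1e-12):
    """DFL for one continuous target y and a discrete distribution over bins.

    probs[k] is the predicted probability of integer bin k.
    Requires 0 <= y < len(probs) - 1 so that both neighbors exist.
    """
    if not 0 <= y < len(probs) - 1:
        raise ValueError("target must lie inside the bin range")
    y_left = int(math.floor(y))   # smaller neighboring integer
    y_right = y_left + 1          # larger neighboring integer
    w_left = y_right - y          # weight grows as y approaches y_left
    w_right = y - y_left          # weight grows as y approaches y_right
    return -(w_left * math.log(max(probs[y_left], eps))
             + w_right * math.log(max(probs[y_right], eps)))


target = 1.7
spread_out = distribution_focal_loss([0.1, 0.2, 0.6, 0.1], target)
well_placed = distribution_focal_loss([0.0, 0.3, 0.7, 0.0], target)
print(spread_out, well_placed)
```

The second distribution places mass 0.3 on bin 1 and 0.7 on bin 2, so its expected value equals the target 1.7, and it attains the lower loss of the two. This is how the fractional part of the target steers the predicted distribution.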

3.6.4. Total Loss Function

The total loss function is defined as:

$$L_{total} = L_{cls} + \lambda_{1} L_{reg} + \lambda_{2} L_{DFL}$$

where $\lambda_{1}$ and $\lambda_{2}$ are weighting parameters that balance the impacts of classification loss, regression loss, and DFL. A higher $\lambda_{1}$ leads to more emphasis on improving bounding box accuracy, especially for complex or small objects. A higher $\lambda_{2}$ enhances classification by leveraging distance-based refinements, effectively addressing imbalanced class distributions and focusing on challenging cases. By considering these losses simultaneously, the total loss combines the classification loss $L_{cls}$, regression loss $L_{reg}$, and DFL $L_{DFL}$ to ensure the model achieves precise classification while refining bounding box localization.
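The weighted combination can be written as a one-line helper. The names `w_reg` and `w_dfl` are hypothetical stand-ins for the two weighting parameters, and the numeric values below are arbitrary illustrations rather than settings reported in the paper.

```python
def total_loss(l_cls, l_reg, l_dfl, w_reg, w_dfl):
    """Classification loss plus weighted regression and DFL terms."""
    return l_cls + w_reg * l_reg + w_dfl * l_dfl


# Arbitrary example values: raising w_reg increases the share of the
# regression term in the total.
balanced = total_loss(0.5, 1.0, 2.0, w_reg=1.0, w_dfl=1.0)
box_heavy = total_loss(0.5, 1.0, 2.0, w_reg=1.4, w_dfl=1.0)
print(balanced, box_heavy)
```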

3.7. Algorithm Description

The specific workflow of our SFIDM is summarized in Algorithm 1. The training process of our algorithm consists of two main stages:

Base Class Training Stage: The model learns the target features of base categories by combining spatial and frequency-domain features. KL divergence is introduced to optimize bounding box selection, improving localization accuracy.

Few-Shot Fine-Tuning Stage: The model incorporates the feature reweighting module, leveraging support samples from novel categories. By combining spatial and frequency-domain features, the model performs detection tasks for novel categories. KL divergence is further used to optimize bounding box regression, and the loss function is adjusted to enhance performance on novel categories.
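Both stages rely on KL divergence between bounding boxes modeled as 2D Gaussian distributions. The sketch below shows one common way to compute it. It assumes each box `(cx, cy, w, h)` maps to a Gaussian with mean `(cx, cy)` and diagonal covariance `diag(w²/4, h²/4)`; this mapping and the function names are assumptions for illustration and may differ from the exact formulation in the DM module.

```python
import math


def box_to_gaussian(cx, cy, w, h):
    """Map a box to (mean, per-axis variances) of a 2D Gaussian (assumed convention)."""
    return (cx, cy), (w * w / 4.0, h * h / 4.0)


def kl_divergence(box_p, box_q):
    """KL(N_p || N_q) for two boxes modeled as axis-aligned 2D Gaussians."""
    (mpx, mpy), (vpx, vpy) = box_to_gaussian(*box_p)
    (mqx, mqy), (vqx, vqy) = box_to_gaussian(*box_q)
    trace = vpx / vqx + vpy / vqy                               # tr(Sigma_q^-1 Sigma_p)
    maha = (mqx - mpx) ** 2 / vqx + (mqy - mpy) ** 2 / vqy      # squared Mahalanobis distance
    log_det = math.log((vqx * vqy) / (vpx * vpy))               # ln(det Sigma_q / det Sigma_p)
    return 0.5 * (trace + maha - 2.0 + log_det)


same = kl_divergence((0, 0, 4, 4), (0, 0, 4, 4))
near = kl_divergence((0, 0, 4, 4), (10, 0, 4, 4))
far = kl_divergence((0, 0, 4, 4), (20, 0, 4, 4))
print(same, near, far)
```

The `near` and `far` pairs are both non-overlapping, so their IoU is zero in each case, yet the divergence still grows with the distance between the boxes. This is why a distribution-based measure can rank candidate boxes for small objects where IoU gives no signal.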

Table 2 describes the meaning of the variables involved in the training and testing process of our algorithm.

| Algorithm 1: SFIDM Algorithm | |
| --- | --- |
| 1: | Initialization: Set up the initial training dataset and the model. Define the KL divergence threshold for bounding box optimization. |
| 2: | Base Training Phase: for each iteration in the base training phase do (a) Input the training images into the backbone network to extract spatial features; (b) Extract frequency-domain features using the 2D-DFT for high-frequency and low-frequency components; (c) Train the model using the extracted spatial and frequency-domain features to classify base categories; (d) Optimize bounding box regression with KL divergence to refine boundary predictions; |
| 3: | Few-shot Fine-tuning Phase: for each support image and test image do (a) Extract initial features using the backbone network; (b) Generate reweighting vectors from support images to recalibrate features; (c) Fuse the reweighted features with the frequency-domain features; (d) Use the fused features for classification and bounding box prediction; |
| 4: | Testing Phase: for each test image do (a) Extract spatial and frequency-domain features without reweighting; (b) Predict bounding boxes and refine localization using KL divergence; (c) Evaluate performance metrics such as mAP; |
| 5: | Output: Final detection model with optimized performance for few-shot object detection in remote sensing images. |
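The frequency-domain step of the workflow, splitting an image into low- and high-frequency components with a 2D-DFT, can be illustrated as follows. This is a toy sketch in pure Python with a naive transform and a circular cutoff of hypothetical radius `radius`; a practical implementation would use an FFT routine such as `numpy.fft.fft2` or `torch.fft.fft2`, and the actual filtering used in the SFI module may differ.

```python
import cmath
import math


def dft2(grid, inverse=False):
    """Naive 2D DFT (or inverse DFT) of a rectangular list of lists; tiny inputs only."""
    rows, cols = len(grid), len(grid[0])
    sign = 1.0 if inverse else -1.0
    scale = 1.0 / (rows * cols) if inverse else 1.0
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for x in range(rows):
                for y in range(cols):
                    angle = 2.0 * math.pi * (u * x / rows + v * y / cols)
                    acc += grid[x][y] * cmath.exp(sign * 1j * angle)
            out[u][v] = acc * scale
    return out


def split_frequencies(image, radius):
    """Return (low, high) spatial-domain components whose sum equals the input image."""
    rows, cols = len(image), len(image[0])
    spectrum = dft2(image)
    low_spec = [[0j] * cols for _ in range(rows)]
    high_spec = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            # Wrap-around distance of frequency bin (u, v) from the DC term
            dist = math.hypot(min(u, rows - u), min(v, cols - v))
            if dist <= radius:
                low_spec[u][v] = spectrum[u][v]
            else:
                high_spec[u][v] = spectrum[u][v]
    low = [[z.real for z in row] for row in dft2(low_spec, inverse=True)]
    high = [[z.real for z in row] for row in dft2(high_spec, inverse=True)]
    return low, high


# A small bright square on a dark background
image = [[0, 0, 0, 0],
         [0, 9, 9, 0],
         [0, 9, 9, 0],
         [0, 0, 0, 0]]
low, high = split_frequencies(image, radius=1.0)
```

The low-frequency part carries the smooth overall brightness, while the high-frequency part carries edges and fine detail. Because the mask is symmetric around the DC term, both components are real-valued and add back to the original image.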

Table 2.

Description of used variables in algorithm workflow.

In summary, our algorithm starts by performing foundational learning on base category object detection tasks, followed by rapid adaptation to novel category detection with limited data through few-shot fine-tuning. Specifically, in the foundational learning stage, the model is trained on base category data and extracts the general features of the objects. Subsequently, in the few-shot fine-tuning stage, the model is quickly fine-tuned with a small amount of novel category data, further improving its detection capability on new categories. Note that the reweighting module is only used in the fine-tuning stage. In the test stage, the model uses only the backbone network to extract spatial features, combines them with the frequency-domain features extracted by the frequency-domain feature extraction module, and finally conducts detection and classification.

4. Experiments

4.1. Benchmark Datasets

The experiments were conducted using the NWPU VHR-10 dataset and the DIOR dataset. NWPU VHR-10 is a high-resolution remote sensing image dataset introduced in [1], specifically designed to advance research in object detection within remote sensing images. This dataset consists of 800 remote sensing images obtained from Google Earth and the ISPRS Vaihingen dataset. Among these, 150 images contain no target objects and are classified as “negative samples”, while the remaining 650 images contain at least one target object and are categorized as “positive samples”. The NWPU VHR-10 dataset covers 10 typical target categories: airplanes, baseball fields, basketball courts, bridges, tennis courts, athletic fields, harbors, ships, storage tanks, and vehicles. These categories represent common object types across diverse geographical and environmental scenarios, providing valuable materials for testing and improving object detection algorithms.

The DIOR dataset [19], on the other hand, is a larger-scale benchmark dataset for remote sensing object detection, designed to provide more challenging test scenarios. The dataset consists of 23,463 images obtained from Google Earth, encompassing 20 target categories with a total of 192,472 object instances. The categories include airplanes, airports, baseball fields, basketball courts, bridges, chimneys, dams, highway service areas, highway toll stations, harbors, golf courses, athletic fields, overpasses, ships, stadiums, storage tanks, tennis courts, train stations, vehicles, and wind turbines. The spatial resolution of the images ranges from 0.5 m to 30 m, and the image dimensions are 800 × 800 pixels. Due to its diverse geographical settings and significant variations in object sizes, this dataset comprehensively evaluates the robustness and generalization capabilities of object detection models.

4.2. Experimental Setup and Implementation Details

To comprehensively evaluate the performance of the proposed SFIDM model in few-shot object detection tasks, each dataset was divided into base and novel categories. All input images were cropped to square dimensions to meet the input requirements of the model. The DIOR dataset, where the original image size is 800 × 800, was kept unchanged as it already aligns with the required input dimensions, as suggested in [84]. During the cropping process, object instances with an overlap area of less than 70% relative to the original instances were excluded to reduce noise and ensure the accuracy and consistency of the training process. The cropped patches were then resized to the predefined scales for network training. The detection performance was evaluated using the mean Average Precision (mAP) metric under the PASCAL VOC2007 benchmark [85]. Specifically, predictions were considered true positives when the Intersection over Union (IoU) between detected and ground-truth bounding boxes exceeded a threshold of 0.5, balancing localization rigor and practical applicability. For each object category, the Average Precision (AP) was computed using the 11-point interpolation method: precision values were sampled at 11 equally spaced recall levels (0.0 to 1.0 in 0.1 increments), and the AP was derived as the mean of the maximum precision values at these recall points. The final mAP score, representing the model’s overall detection accuracy, was obtained by averaging AP values across all categories. This protocol ensures robustness against precision–recall curve fluctuations while maintaining consistency with widely adopted evaluation standards for object detection tasks. During inference, Non-Maximum Suppression (NMS) was applied with a confidence threshold of 0.05 and an IoU threshold of 0.5. Additionally, our method utilizes YOLOv3’s backbone network, detector, and locator as the foundational framework.
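The 11-point interpolated AP described above can be sketched as follows. The example assumes detections are already sorted by descending confidence and flagged as true or false positives (for instance, using the IoU threshold of 0.5); the function names and the toy detection list are illustrative only.

```python
def precision_recall(matches, num_gt):
    """Cumulative precision and recall along a ranked list of detections.

    matches : list of booleans, True where the detection is a true positive
    num_gt  : number of ground-truth objects of this category
    """
    precisions, recalls = [], []
    tp = 0
    for rank, is_tp in enumerate(matches, start=1):
        tp += 1 if is_tp else 0
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)
    return precisions, recalls


def average_precision_11pt(precisions, recalls):
    """PASCAL VOC2007-style AP: mean of max precision at recall >= 0.0, 0.1, ..., 1.0."""
    ap = 0.0
    for k in range(11):
        threshold = k / 10.0
        # Small tolerance guards against floating-point ties at the threshold
        candidates = [p for p, r in zip(precisions, recalls) if r >= threshold - 1e-12]
        ap += max(candidates) if candidates else 0.0
    return ap / 11.0


# Five ranked detections for a category with four ground-truth objects
matches = [True, True, False, True, False]
precisions, recalls = precision_recall(matches, num_gt=4)
ap = average_precision_11pt(precisions, recalls)
print(round(ap, 4))
```

The mAP is then the mean of these per-category AP values. In this toy case, recall never exceeds 0.75, so the three highest recall levels contribute zero precision.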

The proposed method was implemented using the PyTorch (1.12.1) framework. During the base training phase, the model was optimized for 20,000 iterations with an initial learning rate of 0.001, while in the fine-tuning phase, the initial learning rate remained at 0.001 and the optimization was performed over 10,000 iterations. The batch size, which represents the number of images processed in a single iteration, was set to 16 to achieve robust results while optimizing computational efficiency. The optimization process utilized the Adam optimizer, a stochastic gradient-based method. The experiments were conducted on a system equipped with an Intel i9-12900K processor (Intel, Santa Clara, CA, USA), an NVIDIA RTX 3090 GPU with 24 GB of memory (NVIDIA, Santa Clara, CA, USA), and 32 GB of RAM.

4.3. Experimental Results on the NWPU VHR-10 Dataset

For the NWPU VHR-10 dataset, three target categories, i.e., airplanes, baseball diamonds, and tennis courts, are selected as novel classes, while the remaining seven categories are designated as base classes. We chose these three classes because they are commonly adopted as reference standards for evaluating detection algorithms, as evidenced by [84,86,87,88]. We compare the performance of the proposed SFIDM model against several state-of-the-art few-shot object detection approaches. Specifically, the representative works are Meta R-CNN [89], a meta-learning-based approach; FsDetView [59], which leverages viewpoint consistency; FSFR [43], focusing on feature reweighting strategies; DAnA [86], a domain adaptation network; and DCFS [87], which integrates dense contrastive feature selection. We also include FSODM [84], a few-shot object detection method utilizing object modeling; MFDC [48], which applies multi-faceted distillation; FSCE [52], which incorporates contrastive proposal encoding; and MLII-FSOD [88], a method emphasizing multi-level information integration. These comparative approaches represent diverse strategies for tackling few-shot object detection, providing a comprehensive benchmark to evaluate the effectiveness of our proposed SFIDM model. It should be noted that the number of compared methods varies across cases, as we exclusively report results available in the literature. For instance, FSFR [43] and FSODM [84] are excluded from the 5-shot case because their original publications only provided 3-shot and 10-shot experimental results.

The quantitative results in Table 3 demonstrate the improvements achieved by the proposed SFIDM model over existing state-of-the-art (SOTA) methods in few-shot object detection across all shot settings. Specifically, (1) in the 3-shot case, SFIDM achieves noticeable gains over competing methods, particularly excelling in complex categories such as baseball diamond. This indicates the model’s ability to effectively integrate multi-scale features and capture fine-grained details in a few-shot setting. (2) In the 5-shot case, SFIDM outperforms the previous best-performing method by more than 5% in mAP. This improvement is further reflected in its balanced performance across diverse novel classes, showcasing its robustness and versatility. (3) In the 10-shot case, SFIDM achieves an additional improvement of over 3% in mAP compared to the next-best method. This demonstrates the model’s scalability to higher-shot settings. Across all scenarios, SFIDM consistently delivers better results. By integrating frequency-domain information with spatial features, it can handle objects of varying sizes and appearances effectively.

Table 3.

Experimental results (mAP50) on the NWPU VHR-10 test set for each novel class under the 3-, 5-, and 10-shot settings. Results in bold denote the best performance. Note that our results are averaged over multiple runs.

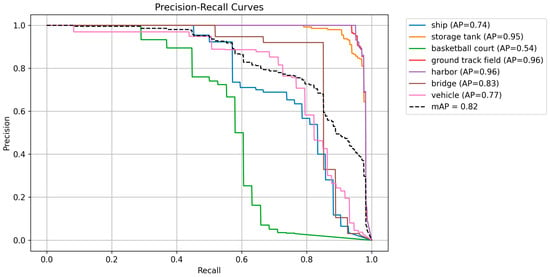

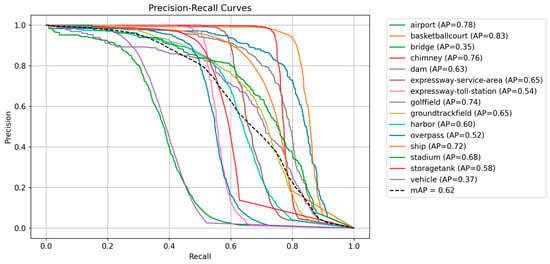

Table 4 presents the detection results for each base class in the NWPU VHR-10 dataset, while Figure 3 illustrates the PR curve of our method on the same dataset. The results demonstrate the effectiveness of the proposed SFIDM in few-shot object detection on the base classes. It achieves the highest mean Average Precision (mAP) of 0.82, outperforming YOLOv3 (0.77), FSFR (0.76), and FSODM (0.78). Specifically, SFIDM excels in challenging categories such as storage tank and harbor, achieving significant improvements of 15% and 10%, respectively, compared to FSODM. This highlights SFIDM’s ability to effectively capture features in categories with complex structures and varying object scales. In other categories, such as ground track field and bridge, SFIDM also demonstrates competitive performance, achieving the highest precision of 0.97 and 0.83, respectively. For simpler categories like ship and vehicle, SFIDM maintains comparable performance with YOLOv3 and FSODM, reflecting its consistency and robustness across different object types.

Table 4.

Detection results (mAP50) for each base class in the NWPU VHR-10 dataset.

Figure 3.

PR curve of our method on the NWPU VHR-10 dataset.

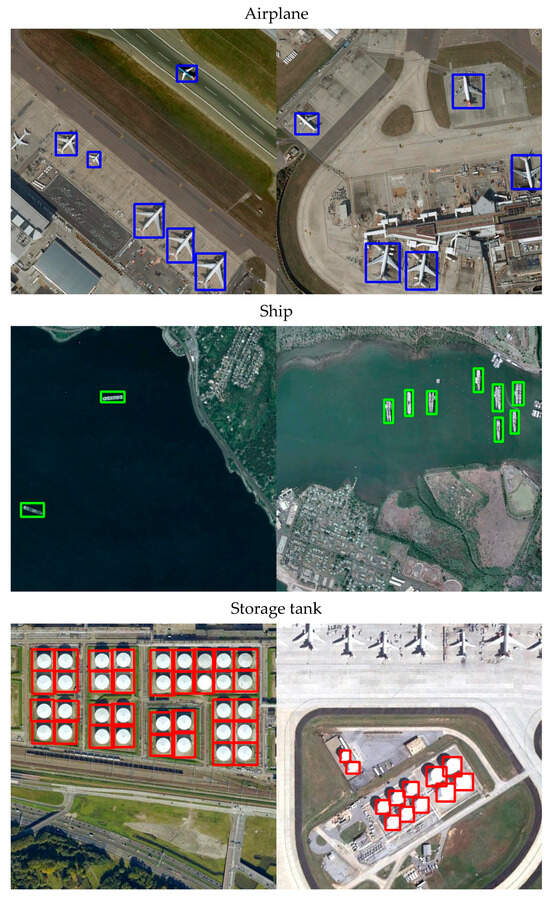

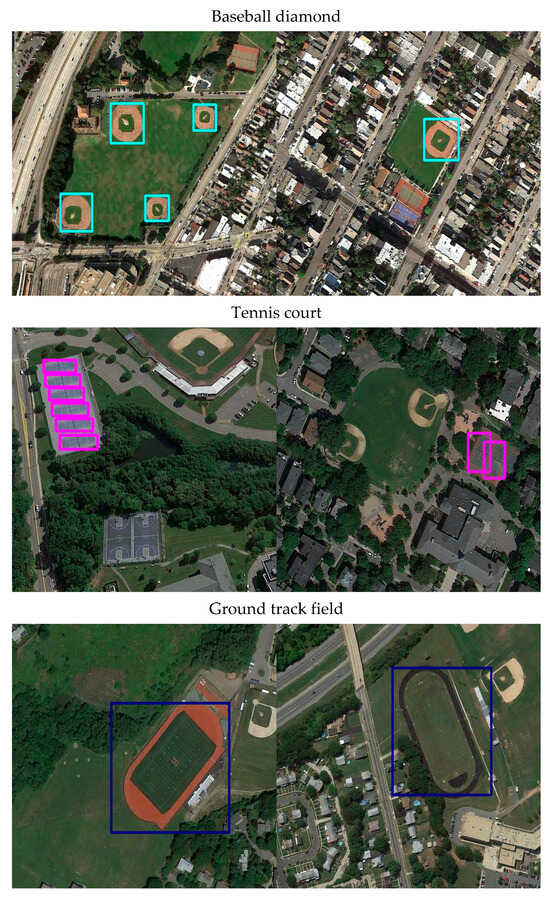

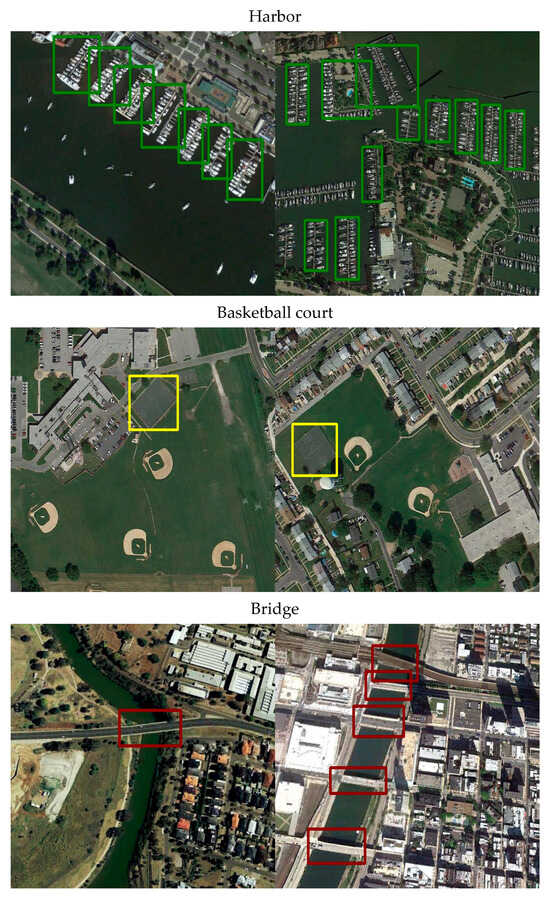

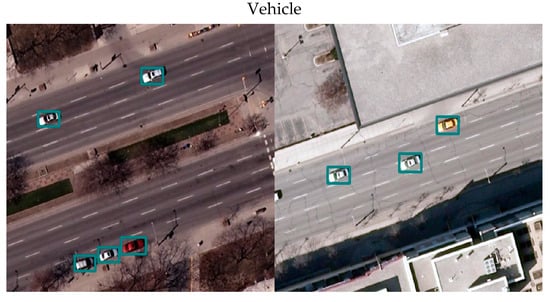

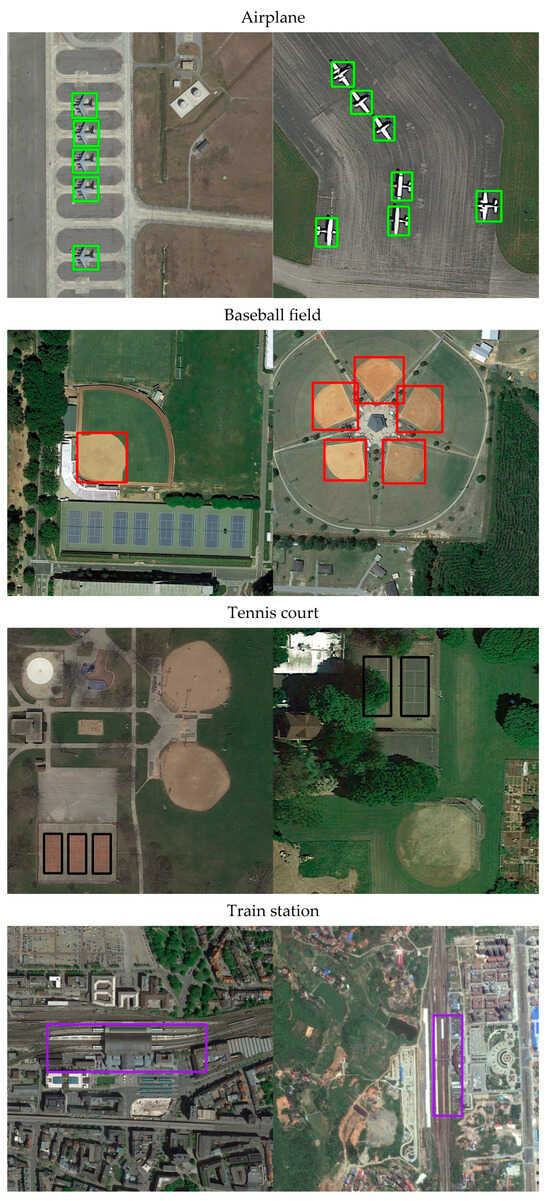

The detection performance of the proposed SFIDM, as illustrated in Figure 4, Figure 5, Figure 6 and Figure 7, demonstrates its capability across various target categories and scenarios. The results show that SFIDM successfully detects objects of different scales, shapes, and categories such as airplanes, ships, storage tanks, and bridges. Bounding boxes of different colors represent different categories. Even in complex scenarios like densely populated port areas or urban regions with high object variability, the model maintains precise detection with minimal false positives. For challenging categories such as baseball fields and tennis courts, the model accurately identifies objects even when they are small or partially occluded. Additionally, for large structures such as bridges and ground track fields, SFIDM effectively captures the spatial extent of the objects, demonstrating strong generalization capabilities.

Figure 4.

Detection results of our method on NWPU VHR-10 dataset (Examples from Class 1–3).

Figure 5.

Detection results of our method on NWPU VHR-10 dataset (Examples from Class 4–6).

Figure 6.

Detection results of our method on NWPU VHR-10 dataset (Examples from Class 7–9).

Figure 7.

Detection results of our method on NWPU VHR-10 dataset (Examples from Class 10).

4.4. Experimental Results on the DIOR Dataset

In the DIOR dataset, we designate five categories—airplane, baseball field, tennis court, train station, and windmill—as novel classes, while treating the remaining 15 categories as base classes. As in Section 4.3, we chose these five classes here because they are widely adopted for evaluating detection algorithms on this dataset. This partitioning enables a focused assessment of the model’s ability to generalize across known and novel categories. The results, presented in Table 5 and Table 6, along with Figure 8, highlight the effectiveness of the proposed SFIDM model in addressing this challenging scenario. It should be noted that the number of compared methods varies across cases, as we exclusively report results available in the literature. For instance, FSFR [43] is included only in the 10-shot case because the original paper provided only 10-shot experimental results.

Table 5.

Experimental results (mAP50) on the DIOR test set for each novel class under the 3-, 5-, 10-, and 20-shot settings. Results in bold denote the best performance. Note that our results are averaged over multiple runs.

Table 6.

Detection results (mAP50) for each base class in the DIOR dataset.

Figure 8.

PR curve of our method on the DIOR dataset.

Table 5 verifies that SFIDM has significant performance improvements over other state-of-the-art methods across all settings. Particularly in the challenging 3-shot and 5-shot cases, SFIDM exhibits remarkable generalization capabilities, consistently outperforming methods such as Meta R-CNN, FSCE, and MLII-FSOD, with mAP improvements exceeding 10%. As the number of training samples increases, the advantages of SFIDM become more pronounced. In the 5-shot setting, SFIDM achieves substantial improvements across multiple categories, particularly in complex scenarios such as “tennis court” and “train station”. Similarly, in the 10-shot and 20-shot settings, SFIDM maintains its superiority, achieving notable performance gains over methods that leverage contrastive learning, feature reweighting, or domain adaptation. These consistent improvements demonstrate SFIDM’s ability to fully exploit spatial-frequency interactions and distribution-matching mechanisms to deliver robust few-shot object detection performance.

Table 6 shows that SFIDM outperforms FSODM, FSFR, and YOLOv3 across most base categories in the DIOR dataset. It achieves notable improvements in challenging categories like “airport”, “dam” and “ship” while also performing well in complex environments such as “bridge”, “harbor” and “stadium”. In some categories like “basketball court” and “expressway service area”, SFIDM matches the best-performing methods while achieving a better overall mAP50. Figure 8 presents the PR curve of our method, while Figure 9 and Figure 10 illustrate the detection results of the proposed SFIDM model on various categories from the DIOR dataset. These include a diverse range of object types such as airplanes, baseball fields, tennis courts, train stations, windmills, chimneys, dams and expressway service areas. Bounding boxes of different colors represent different categories. The visualizations demonstrate the model’s ability to detect objects across different scales, densities, and complexities, highlighting its effectiveness in real-world remote sensing scenarios.

Figure 9.

Detection results of our method on DIOR dataset (Examples from Class 1–4).

Figure 10.

Detection results of our method on DIOR dataset (Examples from Class 5–8).

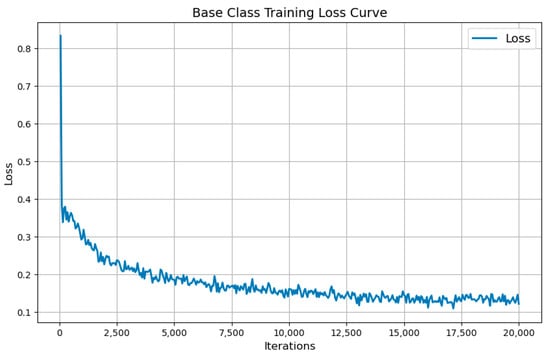

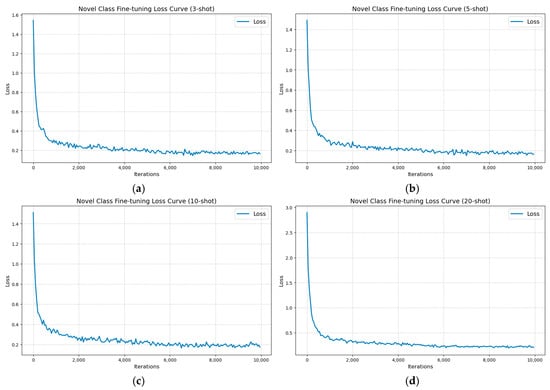

4.5. Convergence Analysis

To validate the effectiveness of the model during the base class training and few-shot fine-tuning stages, we analyze its convergence behavior. As shown in the loss curves in Figure 11 and Figure 12, the model exhibits good convergence in both stages on the DIOR dataset. During the base class training phase, the loss value is initially high but gradually decreases and stabilizes as the number of iterations increases. This indicates that the model effectively learns meaningful features, and the training process is stable. In the fine-tuning phase, the loss decreases significantly faster, demonstrating that the fine-tuning process leverages the pre-trained features to quickly adapt to new few-shot tasks. The loss eventually stabilizes at a low level, indicating good convergence without significant oscillations or overfitting. Overall, the loss curves in both stages clearly highlight the model’s capability for effective learning and stable convergence.

Figure 11.

The loss of our method for base class training on the DIOR dataset.

Figure 12.

The loss of our method for novel class training on the DIOR dataset. The loss curves for (a) 3-shot, (b) 5-shot, (c) 10-shot, and (d) 20-shot scenarios show rapid initial decline and stabilization, demonstrating efficient task adaptation.

4.6. Parameter Sensitivity

SFIDM includes two hyperparameters: the weights of the regression loss and of the DFL in the total loss function (Section 3.6.4). In this section, we analyze the impact of these parameters on the model using a controlled variable approach. Each weight is set to 0.6, 0.8, 1.0, 1.2, and 1.4 in turn. Under each combination, the performance of SFIDM on the NWPU VHR-10 dataset is reported. Other experimental settings are consistent with Section 4.2.

The results in Table 7 show that SFIDM achieves the best object detection performance, with a mAP of 82.3%, at one specific combination of the two weights. This combination balances the contributions of the regression loss and the Distribution Focal Loss, enhancing detection accuracy. Extreme values of either weight, whether too high or too low, result in suboptimal performance, emphasizing the importance of careful hyperparameter tuning. Accordingly, the best-performing combination is selected as the final parameter setting. This analysis highlights the robustness of SFIDM and provides valuable insights into its optimal configuration for specific datasets.

Table 7.

Object detection results of our method on the NWPU VHR-10 dataset with different parameter combinations.

4.7. Ablation Study

To better validate the effectiveness of each module in the SFIDM model, an ablation study is conducted on the NWPU VHR-10 dataset. This involves removing or retaining specific modules in the SFIDM framework to assess their contributions. Based on different module combinations, experimental configurations are named M1, M2, etc., with parameter settings consistent with those used in Section 4.2.

The ablation experiment results are presented in Table 8, which highlights the contributions of individual components in the SFIDM model. When only low-frequency or only high-frequency feature extraction is performed, the model’s performance drops below 80%, indicating that the two are complementary in capturing critical image information. Integrating the distribution matching module with either low- or high-frequency features raises the performance above 80%, showing that this module enhances the model’s ability to handle sample diversity and scale differences. When all components are involved, the final model achieves the highest performance. These results indicate the potential of the proposed spatial-frequency interaction and distribution matching mechanisms in enhancing RSI object detection.

Table 8.

Results of SFIDM ablation experiment (√ indicates that the module is used).

4.8. Extended Experiment

In this experiment, we further validate the adaptability of the SFIDM network by replacing the YOLOv3 backbone with that of YOLOv8. As a representative efficient object detector in recent years, YOLOv8 is known for its strong feature extraction capabilities and lightweight design. Specifically, we employ the YOLOv8 backbone to extract spatial features and perform final detection, while the remaining modules are kept unchanged. We name this new version SFIDM-L, since it uses a larger backbone.

As presented in Table 9, which compares the performance of related few-shot methods on the DIOR dataset, SFIDM-L (based on YOLOv8s) outperforms the existing approaches in most cases. Notably, our model has fewer parameters and lower computational complexity, while maintaining competitive detection accuracy. Comparing base-class and novel-class detection, SFIDM-L shows significant improvements in novel class detection, achieving competitive or superior mAP compared to other methods, particularly in the 10-shot and 20-shot settings. However, as the number of novel samples increases, this improvement is accompanied by a slight decline in base class detection, with base mAP decreasing from 0.758 (5-shot) to 0.746 (20-shot). Consequently, while SFIDM-L achieves the highest total mAP in the 5-shot and 10-shot settings, it does not achieve the best total performance in the 20-shot setting. This is because, during novel class fine-tuning, base classes are only used as a support set. The focus is primarily on extracting features for the novel classes to train the classifier and detector, which results in a slight decline in base class performance. Although fine-tuning introduces a trade-off with a small decline in base class performance, it ensures significant improvements in novel class detection, which is more desirable for few-shot tasks.

Table 9.

Experimental results of few-shot detection methods on the DIOR dataset. The best result in each column is bolded.

4.9. Limitation

Generally, according to the above experiments, SFIDM-L demonstrates strong adaptability and effectiveness in novel class detection, with consistent improvements in total mAP as the number of novel samples increases. This highlights the model’s ability to extract meaningful features and optimize performance for novel categories, outperforming the standard SFIDM and most state-of-the-art methods in the 5-shot and 10-shot settings. However, the observed trade-off between novel and base class performance indicates a limitation. It can be found that the decline in base class mAP with increasing novel samples prevents SFIDM-L from achieving optimal total mAP in the 20-shot setting. While SFIDM-L excels in novel-class detection, how to refine the network to balance the base and novel category detection needs to be further studied.

5. Conclusions

This paper presents the SFIDM network, a novel FSOD framework that integrates spatial-frequency interaction and distribution matching to address challenges in remote sensing object detection. By incorporating the FF block, SFIDM separates and processes low-frequency and high-frequency information to enhance classification and localization, particularly for small objects. The inclusion of KL divergence further improves bounding box precision for handling both overlapping and non-overlapping objects. Additionally, a reweighting network fuses channel features from the backbone, generating discriminative features that boost detection performance. Experimental results demonstrate SFIDM’s significant improvements in few-shot and small-object detection tasks, outperforming traditional methods and showcasing its potential for practical remote sensing applications. In future work, other detectors and backbone networks can be adopted to further enhance the performance. We also hope to integrate the promising modules of our SFIDM with other state-of-the-art networks for RSI-related tasks, such as multimodal object detection, target tracking, and scene classification.

Author Contributions

Conceptualization, L.W. and D.L.; methodology, Y.W.; software, J.L.; validation, J.G.; formal analysis, R.L.; writing—original draft preparation, Y.W. and J.L.; investigation, Q.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the Key Research and Development Program of Shaanxi (Program No. 2024GX-YBXM-144) and the Natural Science Basic Research Program of Shaanxi (Program No. 2024JC-YBMS-499).

Data Availability Statement

The NWPU VHR-10 dataset and DIOR dataset can be found at https://gcheng-nwpu.github.io/#Datasets/, accessed on 10 August 2024.

Acknowledgments

The authors are grateful to the editors and reviewers for their constructive feedback and valuable suggestions, which significantly improved the quality of this manuscript. Special thanks to one of the anonymous reviewers for refining Figure 2 by personally creating an illustrative version, greatly enhancing the clarity of the FF block’s module diagram. We also acknowledge the High-Performance Computing Platform of Xidian University for providing essential computational resources.

Conflicts of Interest

Authors Yong Wang, Rui Liu and Qiusheng Cao were employed by the company The 27th Research Institute of China Electronics Technology Group Corporation. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

- Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.

- Cheng, G.; Lang, C.; Wu, M.; Xie, X.; Yao, X.; Han, J. Feature enhancement network for object detection in optical remote sensing images. J. Remote Sens. 2021, 2021, 9805389.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3520–3529.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883. [Google Scholar] [CrossRef]

- Hu, J.; Wei, Y.; Chen, W.; Zhi, X.; Zhang, W. CM-YOLO: Typical Object Detection Method in Remote Sensing Cloud and Mist Scene Images. Remote Sens. 2025, 17, 125. [Google Scholar] [CrossRef]

- Peng, Y.; Li, H.; Zhang, W.; Zhu, J.; Liu, L.; Zhai, G. Underwater Sonar Image Classification with Image Disentanglement Reconstruction and Zero-Shot Learning. Remote Sens. 2025, 17, 134. [Google Scholar] [CrossRef]

- Cheng, G.; Li, Q.; Wang, G.; Xie, X.; Min, L.; Han, J. SFRNet: Fine-grained oriented object recognition via separate feature refinement. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5610510. [Google Scholar] [CrossRef]

- Al Hinai, A.A.; Guida, R. Confidence-Aware Ship Classification Using Contour Features in SAR Images. Remote Sens. 2025, 17, 127. [Google Scholar] [CrossRef]

- Cheng, G.; Lai, P.; Gao, D.; Han, J. Class attention network for image recognition. Sci. China Inf. Sci. 2023, 66, 132105. [Google Scholar] [CrossRef]

- Luo, B.; Cao, H.; Cui, J.; Lv, X.; He, J.; Li, H.; Peng, C. SAR-PATT: A Physical Adversarial Attack for SAR Image Automatic Target Recognition. Remote Sens. 2025, 17, 21. [Google Scholar] [CrossRef]

- Kemker, R.; Salvaggio, C.; Kanan, C. Algorithms for semantic segmentation of multispectral remote sensing imagery using deep learning. ISPRS J. Photogramm. Remote Sens. 2018, 145, 60–77. [Google Scholar] [CrossRef]

- Jia, H.; Lang, C.; Oliva, D.; Song, W.; Peng, X. Dynamic Harris hawks optimization with mutation mechanism for satellite image segmentation. Remote Sens. 2019, 11, 1421. [Google Scholar] [CrossRef]

- Lai, P.; Cheng, G.; Zhang, M.; Ning, J.; Zheng, X.; Han, J. NCSiam: Reliable matching via neighborhood consensus for siamese-based object tracking. IEEE Trans. Image Process. 2023, 32, 6168–6182. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Liu, S.; Liu, H. Lightweight Multi-Scale Network for Segmentation of Riverbank Sand Mining Area in Satellite Images. Remote Sens. 2025, 17, 227.

- Lv, Z.; Huang, H.; Li, X.; Zhao, M.; Benediktsson, J.A.; Sun, W.; Falco, N. Land cover change detection with heterogeneous remote sensing images: Review, progress, and perspective. Proc. IEEE 2022, 110, 1976–1991.

- Gautam, D.; Mawardi, Z.; Elliott, L.; Loewensteiner, D.; Whiteside, T.; Brooks, S. Detection of Invasive Species (Siam Weed) Using Drone-Based Imaging and YOLO Deep Learning Model. Remote Sens. 2025, 17, 120.

- Chen, K.; Chen, J.; Xu, M.; Wu, M.; Zhang, C. DRAF-Net: Dual-Branch Residual-Guided Multi-View Attention Fusion Network for Station-Level Numerical Weather Prediction Correction. Remote Sens. 2025, 17, 206.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Xia, G.-S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark dataset for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

- Zhang, Y.; Zhang, B.; Wang, B. Few-Shot Object Detection with Self-Adaptive Global Similarity and Two-Way Foreground Stimulator in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7263–7276.

- Li, C.; Cheng, G.; Han, J. Boosting knowledge distillation via intra-class logit distribution smoothing. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 4190–4201.

- Cheng, X.; He, X.; Qiao, M.; Li, P.; Chang, P.; Zhang, T.; Guo, X.; Wang, J.; Tian, Z.; Zhou, G. Multi-view graph convolutional network with spectral component decompose for remote sensing images classification. IEEE Trans. Circuits Syst. Video Technol. 2022, 35, 3–18.

- Geng, J.; Song, S.; Jiang, W. Dual-path feature aware network for remote sensing image semantic segmentation. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 3674–3686.

- Lang, C.; Wang, J.; Cheng, G.; Tu, B.; Han, J. Progressive parsing and commonality distillation for few-shot remote sensing segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5613610.

- Xie, X.; Cheng, G.; Li, Q.; Miao, S.; Li, K.; Han, J. Fewer is more: Efficient object detection in large aerial images. Sci. China Inf. Sci. 2023, 67, 112106.

- Yan, C.; Chang, X.; Luo, M.; Liu, H.; Zhang, X.; Zheng, Q. Semantics-guided contrastive network for zero-shot object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 1530–1544.

- Chen, H.; Wang, Q.; Xie, K.; Lei, L.; Lin, M.G.; Lv, T.; Liu, Y.; Luo, J. SD-FSOD: Self-distillation paradigm via distribution calibration for few-shot object detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 5963–5976.

- Huang, J.; Cao, J.; Lin, L.; Zhang, D. IRA-FSOD: Instant-response and accurate few-shot object detector. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6912–6923.

- Li, L.; Yao, X.; Cheng, G.; Xu, M.; Han, J.; Han, J. Solo-to-Collaborative Dual-Attention Network for One-Shot Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607811.

- Li, X.; Zhang, L.; Chen, Y.P.; Tai, Y.W.; Tang, C.K. One-Shot Object Detection without Fine-Tuning. arXiv 2020, arXiv:2005.03819.

- Hsieh, T.-I.; Lo, Y.-C.; Chen, H.-T.; Liu, T.-L. One-shot object detection with co-attention and co-excitation. Proc. Conf. Adv. Neural Inform. Process. Syst. 2019, 32, 2725–2734.

- Zhao, Y.; Guo, X.; Lu, Y. Semantic-Aligned Fusion Transformer for One-Shot Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 7601–7611.

- Chen, D.J.; Hsieh, H.Y.; Liu, T.L. Adaptive Image Transformer for One-Shot Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 12247–12256.

- Michaelis, C.; Ustyuzhaninov, I.; Bethge, M.; Ecker, A.S. One-Shot Instance Segmentation. arXiv 2018, arXiv:1811.11507.

- Wang, H.; Liu, J.; Liu, Y.; Maji, S.; Sonke, J.J.; Gavves, E. Dynamic Transformer for Few-Shot Instance Segmentation. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 2969–2977.

- Nguyen, K.; Todorovic, S. FAPIS: A Few-Shot Anchor-Free Part-Based Instance Segmenter. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 11099–11108.

- Ganea, D.A.; Boom, B.; Poppe, R. Incremental Few-Shot Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 1185–1194.

- Wei, S.; Wei, X.; Ma, Z.; Dong, S.; Zhang, S.; Gong, Y. Few-Shot Online Anomaly Detection and Segmentation. Knowl.-Based Syst. 2024, 300, 112168.

- Li, A.; Danielczuk, M.; Goldberg, K. One-Shot Shape-Based Amodal-to-Modal Instance Segmentation. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1375–1382.

- Zhang, J.; Hong, Z.; Chen, X.; Li, Y. Few-Shot Object Detection for Remote Sensing Imagery Using Segmentation Assistance and Triplet Head. Remote Sens. 2024, 16, 3630.

- Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Statist. 1951, 22, 79–86.

- Kang, B.; Liu, Z.; Wang, X.; Yu, F.; Feng, J.; Darrell, T. Few-shot object detection via feature reweighting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8419–8428.

- Wu, X.; Sahoo, D.; Hoi, S. Meta-RCNN: Meta learning for few-shot object detection. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1679–1687.

- Han, G.; Huang, S.; Ma, J.; He, Y.; Chang, S.-F. Meta Faster R-CNN: Towards accurate few-shot object detection with attentive feature alignment. Proc. AAAI Conf. Artif. Intell. 2022, 36, 780–789.

- Qiao, L.; Zhao, Y.; Li, Z.; Qiu, X.; Wu, J.; Zhang, C. DeFRCN: Decoupled faster R-CNN for few-shot object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 8681–8690.

- Tang, Y.; Cao, Z.; Yang, Y.; Liu, J.; Yu, J. Semi-supervised few-shot object detection via adaptive pseudo labeling. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 2151–2165.

- Wu, S.; Pei, W.; Mei, D.; Chen, F.; Tian, J.; Lu, G. Multi-faceted distillation of base-novel commonality for few-shot object detection. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2022; pp. 578–594.

- Amoako, P.Y.O.; Cao, G.; Shi, B.; Yang, D.; Acka, B.B. Orthogonal Capsule Network with Meta-Reinforcement Learning for Small Sample Hyperspectral Image Classification. Remote Sens. 2025, 17, 215.

- Chen, H.; Wang, Y.; Wang, G.; Qiao, Y. LSTD: A low-shot transfer detector for object detection. Proc. AAAI Conf. Artif. Intell. 2018, 32, 2836–2843.

- Wang, X.; Huang, T.E.; Darrell, T.; Gonzalez, J.E.; Yu, F. Frustratingly simple few-shot object detection. arXiv 2020, arXiv:2003.06957.

- Sun, B.; Li, B.; Cai, S.; Yuan, Y.; Zhang, C. FSCE: Few-shot object detection via contrastive proposal encoding. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7352–7362.

- Lee, H.; Lee, M.; Kwak, N. Few-shot object detection by attending to per-sample-prototype. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 2445–2454.

- Zhang, B.; Li, X.; Ye, Y.; Huang, Z.; Zhang, L. Prototype completion with primitive knowledge for few-shot learning. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 3754–3762.

- Karlinsky, L.; Shtok, J.; Harary, S.; Marder, M.; Pankanti, S.; Bronstein, A.M. RepMet: Representative-based metric learning for classification and few-shot object detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5192–5201.

- Zhang, G.; Cui, K.; Wu, R.; Lu, S.; Tian, Y. PNPDet: Efficient few-shot detection without forgetting via plug-and-play sub-networks. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 3822–3831.

- Li, B.; Yang, B.; Liu, C.; Liu, F.; Ji, R.; Ye, Q. Beyond max-margin: Class margin equilibrium for few-shot object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7363–7372.

- Fan, Q.; Zhuo, W.; Tang, C.-K.; Tai, Y.-W. Few-shot object detection with attention-RPN and multi-relation detector. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 4012–4021.

- Xiao, Y.; Lepetit, V.; Marlet, R. Few-shot object detection and viewpoint estimation for objects in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3090–3106.

- Zhang, T.; Zhang, X.; Zhu, P.; Jia, X.; Tang, X.; Jiao, L. Generalized few-shot object detection in remote sensing images. ISPRS J. Photogramm. Remote Sens. 2023, 195, 353–364.

- Wang, B.; Ma, G.; Sui, H.; Zhang, Y.; Zhang, H.; Zhou, Y. Few-shot object detection in remote sensing imagery via fuse context dependencies and global features. Remote Sens. 2023, 15, 3462.

- Liu, S.; You, Y.; Su, H.; Meng, G.; Yang, W.; Liu, F. Few-shot object detection in remote sensing image interpretation: Opportunities and challenges. Remote Sens. 2022, 14, 4435.

- Gao, H.; Wu, S.; Wang, Y.; Kim, J.Y.; Xu, Y. FSOD4RSI: Few-Shot Object Detection for Remote Sensing Images via Features Aggregation and Scale Attention. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 4784–4796.

- Zhang, Z.; Hao, J.; Pan, C.; Ji, G. Oriented feature augmentation for few-shot object detection in remote sensing images. In Proceedings of the 2021 IEEE International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology (CEI), Fuzhou, China, 24–26 September 2021; pp. 359–366.

- Cheng, G.; Yan, B.; Shi, P.; Li, K.; Yao, X.; Guo, L.; Han, J. Prototype-CNN for few-shot object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5604610.

- Chen, J.; Qin, D.; Hou, D.; Zhang, J.; Deng, M.; Sun, G. Multi-scale object contrastive learning-derived few-shot object detection in VHR imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5635615.

- Huang, X.; He, B.; Tong, M.; Wang, D.; He, C. Few-shot object detection on remote sensing images via shared attention module and balanced fine-tuning strategy. Remote Sens. 2021, 13, 3816.

- Xiao, Z.; Qi, J.; Xue, W.; Zhong, P. Few-shot object detection with self-adaptive attention network for remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4854–4865.

- Liu, Y.; Pan, Z.; Yang, J.; Zhang, B.; Zhou, G.; Hu, Y.; Ye, Q. Few-shot object detection in remote sensing images via label-consistent classifier and gradual regression. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5612114.

- Li, J.; Tian, Y.; Xu, Y.; Hu, X.; Zhang, Z.; Wang, H.; Xiao, Y. MM-RCNN: Toward few-shot object detection in remote sensing images with meta memory. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5635114.

- Sun, X.; Wang, B.; Wang, Z.; Li, H.; Li, H.; Fu, K. Research progress on few-shot learning for remote sensing image interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2387–2402.

- Zhang, F.; Shi, Y.; Xiong, Z.; Zhu, X.X. Few-shot object detection in remote sensing: Lifting the curse of incompletely annotated novel objects. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5603514.

- Chen, Y.; Liu, S.; Li, Y.; Tian, L.; Chen, Q.; Li, J. Small Object Detection with Small Samples Using High-Resolution Remote Sensing Images. J. Phys. Conf. Ser. 2024, 2890, 012012.

- Zhou, L.; Wang, C.; Yang, S.; Huang, P. A Review of Small-Sample Target Detection Research. In Proceedings of the International Workshop of Advanced Manufacturing and Automation, Singapore, 10–12 October 2024; Springer Nature: Singapore; pp. 100–107.

- Peng, H.; Li, X. Multi-Scale Selection Pyramid Networks for Small-Sample Target Detection Algorithms. In Proceedings of the 2021 3rd International Conference on Machine Learning, Big Data and Business Intelligence (MLBDBI), Shanghai, China, 17–19 December 2021; IEEE: Piscataway, NJ, USA; pp. 359–364.

- Sun, F.; Jia, J.; Han, X.; Kuang, L.; Han, H. Small-Sample Target Detection Across Domains Based on Supervision and Distillation. Electronics 2024, 13, 4975.

- Zheng, Y.; Yan, J.; Meng, J.; Liang, M. A Small-Sample Target Detection Method of Side-Scan Sonar Based on CycleGAN and Improved YOLOv8. Appl. Sci. 2025, 15, 2396.