Advanced Insect Detection Network for UAV-Based Biodiversity Monitoring

Abstract

1. Introduction

- Through its refined architecture, the AIDN aims to enhance the sensitivity and specificity of insect detection, thereby reducing both false positives and missed detections.

- By automating the detection process, the AIDN allows for the monitoring of larger areas than is feasible with manual methods, offering a more comprehensive understanding of insect populations across diverse landscapes.

- The AIDN’s processing framework is designed to support real-time data analysis, providing immediate insights that are crucial for timely decision-making in pest management and ecological conservation.

- Improved monitoring accuracy supports the conservation of beneficial insect species and more effective control of pest populations, which in turn can raise agricultural productivity and reduce chemical pesticide use.

2. Materials and Methods

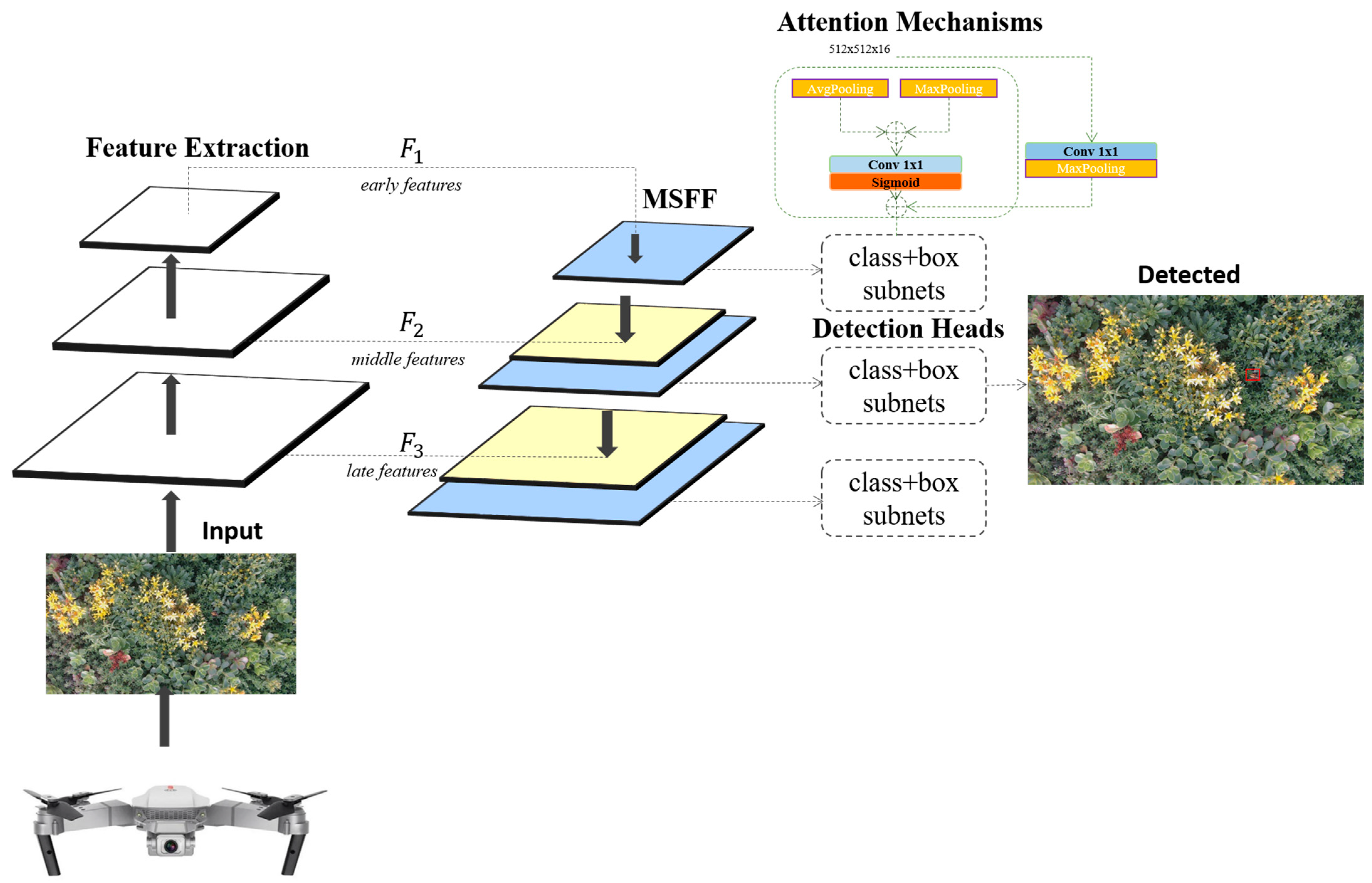

2.1. Multi-Scale Feature Fusion (MSFF) Module

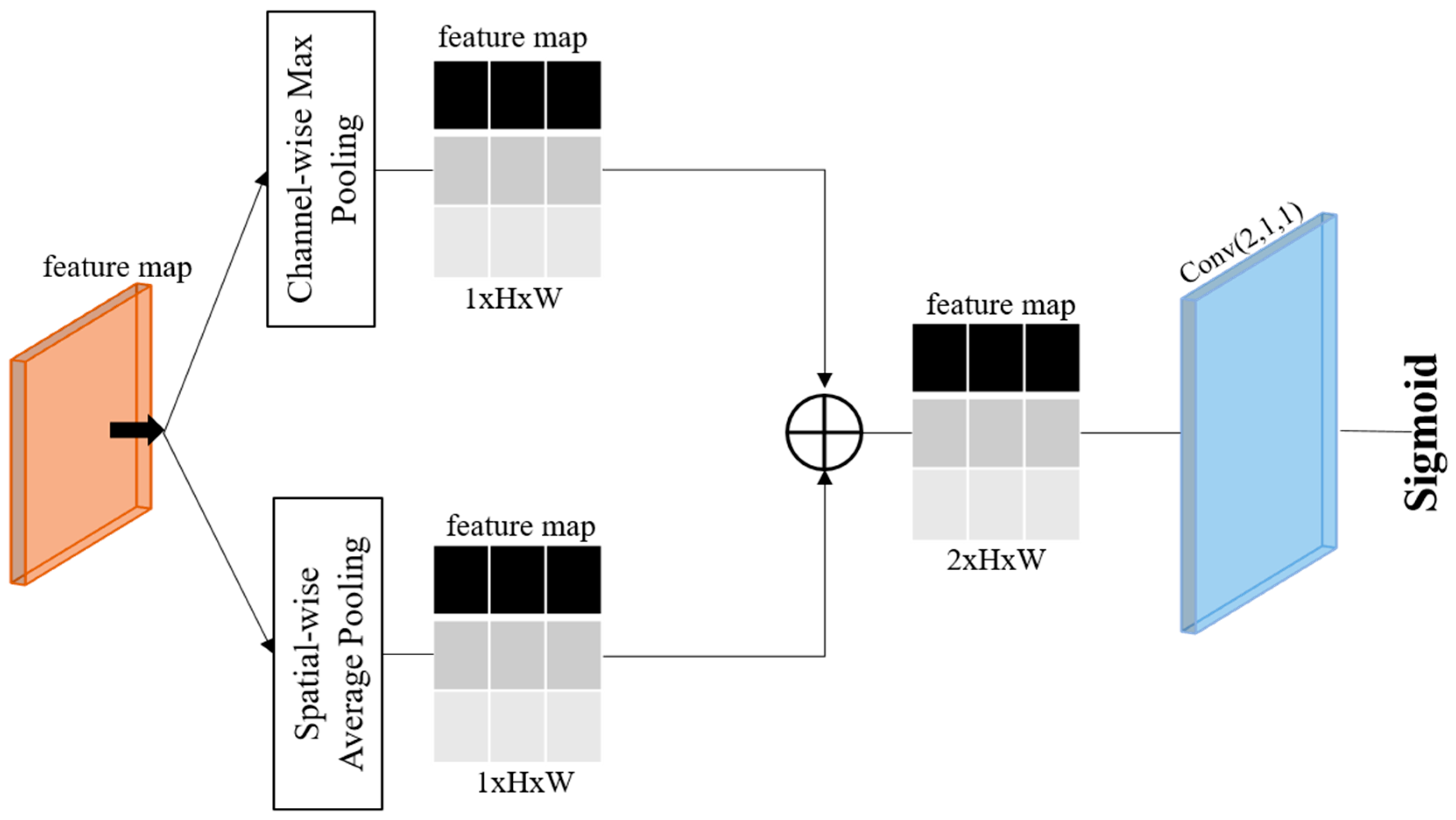

2.2. Attention Mechanisms

2.3. Custom Loss Function

3. Results

3.1. Implementation Details

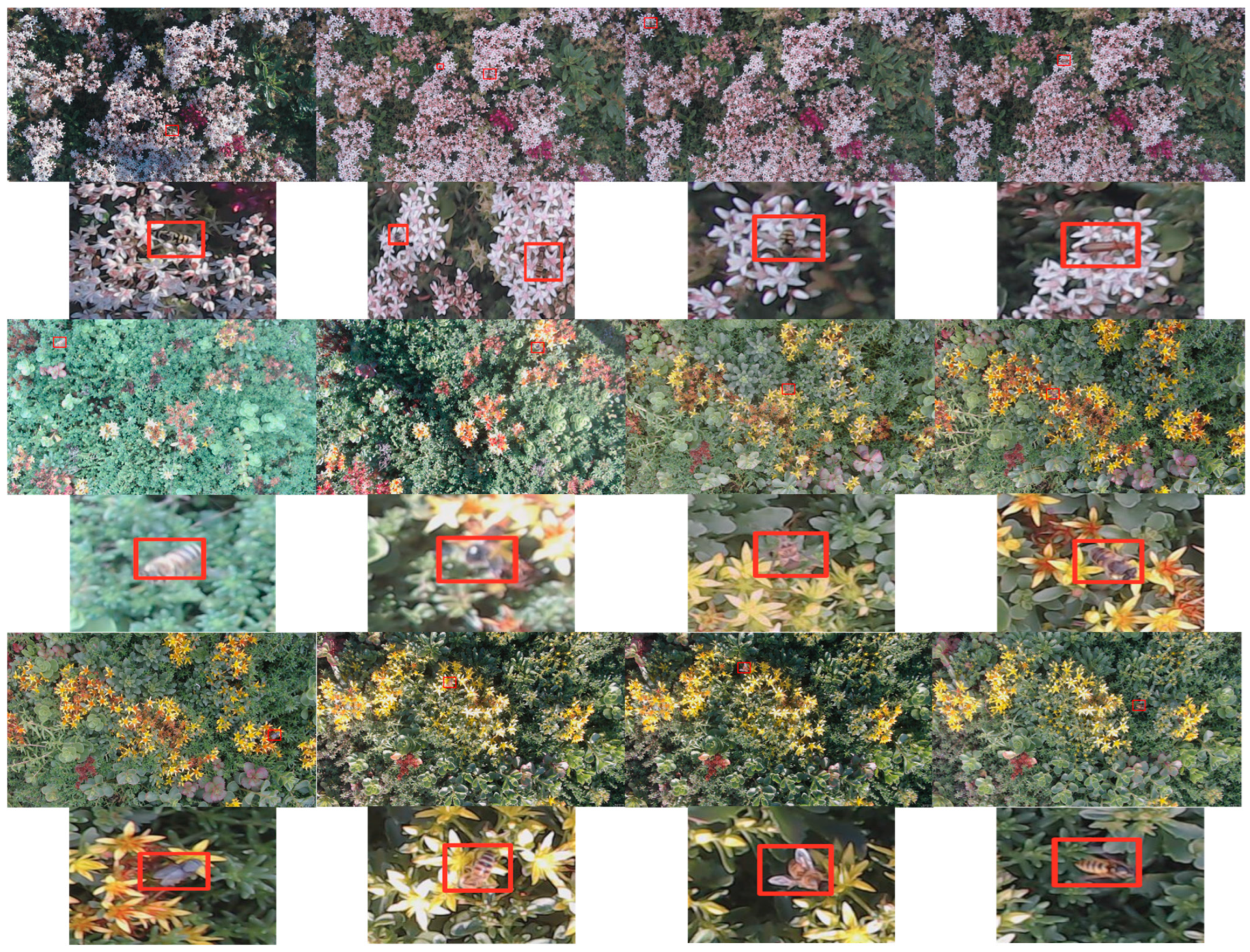

3.2. Dataset

3.3. Comparison with SOTA Models

3.4. Ablation Studies

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Sánchez Herrera, M.; Forero, D.; Calor, A.R.; Romero, G.Q.; Riyaz, M.; Callisto, M.; de Oliveira Roque, F.; Elme-Tumpay, A.; Khan, M.K.; Justino de Faria, A.P.; et al. Systematic challenges and opportunities in insect monitoring: A Global South perspective. Philos. Trans. R. Soc. B 2024, 379, 20230102.

- Rajabpour, A.; Yarahmadi, F. Monitoring and Population Density Estimation. In Decision System in Agricultural Pest Management; Springer Nature: Singapore, 2024; pp. 37–67.

- Keasar, T.; Yair, M.; Gottlieb, D.; Cabra-Leykin, L.; Keasar, C. STARdbi: A pipeline and database for insect monitoring based on automated image analysis. Ecol. Inform. 2024, 80, 102521.

- Kariyanna, B.; Sowjanya, M. Unravelling the use of artificial intelligence in management of insect pests. Smart Agric. Technol. 2024, 8, 100517.

- Duarte, A.; Borralho, N.; Cabral, P.; Caetano, M. Recent advances in forest insect pests and diseases monitoring using UAV-based data: A systematic review. Forests 2022, 13, 911.

- Betti Sorbelli, F.; Coró, F.; Das, S.K.; Palazzetti, L.; Pinotti, C.M. Drone-based Bug Detection in Orchards with Nets: A Novel Orienteering Approach. ACM Trans. Sens. Netw. 2024, 20, 1–28.

- Jain, A.; Cunha, F.; Bunsen, M.J.; Cañas, J.S.; Pasi, L.; Pinoy, N.; Helsing, F.; Russo, J.; Botham, M.; Sabourin, M.; et al. Insect identification in the wild: The ami dataset. arXiv 2024, arXiv:2406.12452.

- Safarov, F.; Khojamuratova, U.; Komoliddin, M.; Bolikulov, F.; Muksimova, S.; Cho, Y.I. MBGPIN: Multi-Branch Generative Prior Integration Network for Super-Resolution Satellite Imagery. Remote Sens. 2025, 17, 805.

- O’Connor, T.; García, O.G.; Cabral, V.; Isacch, J.P. Agroecological farmer perceptions and opinions towards pest management and biodiversity in the Argentine Pampa region. Agroecol. Sustain. Food Syst. 2025, 49, 182–203.

- Padhiary, M. The Convergence of Deep Learning, IoT, Sensors, and Farm Machinery in Agriculture. In Designing Sustainable Internet of Things Solutions for Smart Industries; IGI Global: Hershey, PA, USA, 2025; pp. 109–142.

- Vhatkar, K.N. An intellectual model of pest detection and classification using enhanced optimization-assisted single shot detector and graph attention network. Evol. Intell. 2025, 18, 3.

- Zhao, D. Cognitive process and information processing model based on deep learning algorithms. Neural Netw. 2025, 183, 106999.

- Santoso, C.B.; Singadji, M.; Purnama, D.G.; Abdel, S.; Kharismawardani, A. Enhancing Apple Leaf Disease Detection with Deep Learning: From Model Training to Android App Integration. J. Appl. Data Sci. 2025, 6, 377–390.

- Chen, H.; Wen, C.; Zhang, L.; Ma, Z.; Liu, T.; Wang, G.; Yu, H.; Yang, C.; Yuan, X.; Ren, J. Pest-PVT: A model for multi-class and dense pest detection and counting in field-scale environments. Comput. Electron. Agric. 2025, 230, 109864.

- Bhoi, S.K.; Jena, K.K.; Panda, S.K.; Long, H.V.; Kumar, R.; Subbulakshmi, P.; Jebreen, H.B. An Internet of Things assisted Unmanned Aerial Vehicle based artificial intelligence model for rice pest detection. Microprocess. Microsyst. 2021, 80, 103607.

- Berger, G.S.; Mendes, J.; Chellal, A.A.; Junior, L.B.; da Silva, Y.M.; Zorawski, M.; Pereira, A.I.; Pinto, M.F.; Castro, J.; Valente, A.; et al. A YOLO-based insect detection: Potential use of small multirotor unmanned aerial vehicles (UAVs) monitoring. In Proceedings of the International Conference on Optimization, Learning Algorithms and Applications, Ponta Delgada, Portugal, 27–29 September 2023; Springer Nature: Cham, Switzerland, 2023; pp. 3–17.

- Thakre, R.N.; Kunte, P.A.; Chavhan, N.; Dhule, C.; Agrawal, R. UAV Based System For Detection in Integrated Insect Management for Agriculture Using Deep Learning. In Proceedings of the 2023 2nd International Conference on Futuristic Technologies (INCOFT), Belagavi, India, 24–26 November 2023; IEEE: Karnataka, India, 2023; pp. 1–6.

- Casas, E.; Arbelo, M.; Moreno-Ruiz, J.A.; Hernández-Leal, P.A.; Reyes-Carlos, J.A. UAV-based disease detection in palm groves of Phoenix canariensis using machine learning and multispectral imagery. Remote Sens. 2023, 15, 3584.

- Godinez-Garrido, G.; Gonzalez-Islas, J.C.; Gonzalez-Rosas, A.; Flores, M.U.; Miranda-Gomez, J.M.; Gutierrez-Sanchez, M.D.J. Estimation of Damaged Regions by the Bark Beetle in a Mexican Forest Using UAV Images and Deep Learning. Sustainability 2024, 16, 10731.

- Pak, J.; Kim, B.; Ju, C.; You, S.H.; Son, H.I. UAV-Based Trilateration System for Localization and Tracking of Radio-Tagged Flying Insects: Development and Field Evaluation. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; IEEE: Detroit, MI, USA, 2023; pp. 1–8.

- Gokeda, V.; Yalavarthi, R. Deep Hybrid Model for Pest Detection: IoT-UAV-Based Smart Agriculture System. J. Phytopathol. 2024, 172, e13381.

- Qin, K.; Zhang, J.; Hu, Y. Identification of Insect Pests on Soybean Leaves Based on SP-YOLO. Agronomy 2024, 14, 1586.

- Bjerge, K.; Alison, J.; Dyrmann, M.; Frigaard, C.E.; Mann, H.M.; Høye, T.T. Accurate detection and identification of insects from camera trap images with deep learning. PLOS Sustain. Transform. 2023, 2, e0000051.

| Category | Specifications |
|---|---|
| Hardware Configuration | |
| CPU | Intel Xeon Processor E5-2640 v4 |
| GPU | NVIDIA Tesla P100 |
| RAM | 64 GB DDR4 |
| Software Environment | |
| Operating System | Ubuntu 18.04 LTS |
| Deep-learning Framework | TensorFlow 2.3 |
| Additional Libraries | NumPy 1.19, OpenCV 4.5 |
| Model Training Parameters | |
| Learning Rate | 0.001, decaying by 0.1 every 10 epochs |
| Batch Size | 32 |
| Optimizer | Adam |
| Epochs | 50 |
| Regularization | Dropout, rate = 0.5 |
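
The learning-rate entry in the table above can be read as a step schedule. The sketch below assumes that "decaying by 0.1 every 10 epochs" means the rate is multiplied by 0.1 at epochs 10, 20, 30, and 40; the source does not spell this out, and the function name `step_decay_lr` and the constant names are illustrative, not taken from the authors' code.

```python
# Hypothetical step-decay schedule. The reading of the table entry
# "0.001, decaying by 0.1 every 10 epochs" as a multiplicative step
# decay is an assumption, not something the source confirms.
INITIAL_LR = 1e-3    # initial learning rate from the table
DECAY_FACTOR = 0.1   # assumed multiplicative decay factor
DECAY_EVERY = 10     # epochs between decays, from the table
NUM_EPOCHS = 50      # total epochs from the table


def step_decay_lr(epoch: int) -> float:
    """Return the learning rate for a zero-based epoch index."""
    return INITIAL_LR * DECAY_FACTOR ** (epoch // DECAY_EVERY)


if __name__ == "__main__":
    # Print one line per 10-epoch block of the 50-epoch run.
    for start in range(0, NUM_EPOCHS, DECAY_EVERY):
        end = start + DECAY_EVERY - 1
        print(f"epochs {start:2d}-{end:2d}: lr = {step_decay_lr(start):.0e}")
```

Under this reading the rate falls from 1e-3 in the first ten epochs to 1e-7 in the last ten. A function of this shape could be handed to `tf.keras.callbacks.LearningRateScheduler` in TensorFlow 2.x, though whether the authors did so is not stated.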

| No. | Class Name | Training Set Size | Validation Set Size | Test Set Size |
|---|---|---|---|---|
| 1 | Coccinella septempunctata | 6344 | 299 | 396 |
| 2 | Apis mellifera | 6934 | 1663 | 2162 |
| 3 | Bombus lapidarius | 1551 | 250 | 269 |
| 4 | Bombus terrestris | 2955 | 240 | 268 |
| 5 | Eupeodes corollae | 3410 | 275 | 573 |
| 6 | Episyrphus balteatus | 1306 | 274 | 415 |
| 7 | Eristalis tenax | 286 | 27 | 31 |
| 8 | Aglais urticae | 286 | 27 | 31 |
| 9 | Vespula vulgaris | 956 | 201 | 217 |
| 10 | Bombus spp. (related to 3, 4) | - | - | 667 |
| 11 | Syrphidae (related to 5, 6, 7) | - | - | 1982 |
| 12 | Coccinellidae (related to 1) | - | - | 27 |
| 13 | Non-Bombus Anthophila (related to 2) | - | - | 271 |
| 14 | Rhopalocera (related to 8) | - | - | 51 |
| 15 | Non-Anthophila Hymenoptera (related to 9) | - | - | 285 |
| 16 | Non-Syrphidae Diptera | - | - | 421 |
| 17 | Non-Coccinellidae Coleoptera | - | - | 19 |
| 18 | Unclear insect | - | - | 489 |
| 19 | Other animal | - | - | 231 |
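
The class counts in the table above are strongly skewed, which a short calculation makes concrete. The sketch below copies the counts for classes 1 to 9 (the only classes with training data) and computes the split totals, the ratio between the largest and smallest training class, and inverse-frequency class weights. The weighting formula is a common heuristic shown for illustration only; the source does not say that the AIDN uses it, and all function names are hypothetical.

```python
# Counts copied from the dataset table, keyed by class number (1-9):
# (training, validation, test).
SPLITS = {
    1: (6344, 299, 396),
    2: (6934, 1663, 2162),
    3: (1551, 250, 269),
    4: (2955, 240, 268),
    5: (3410, 275, 573),
    6: (1306, 274, 415),
    7: (286, 27, 31),
    8: (286, 27, 31),
    9: (956, 201, 217),
}


def split_totals(splits):
    """Sum each column: (training, validation, test)."""
    return tuple(sum(column) for column in zip(*splits.values()))


def imbalance_ratio(splits):
    """Largest training class divided by the smallest one."""
    train = [counts[0] for counts in splits.values()]
    return max(train) / min(train)


def inverse_frequency_weights(splits):
    """Illustrative heuristic: weight_i = N / (K * n_i) over training counts."""
    train = {cls: counts[0] for cls, counts in splits.items()}
    total, num_classes = sum(train.values()), len(train)
    return {cls: total / (num_classes * n) for cls, n in train.items()}


if __name__ == "__main__":
    print("totals (train, val, test):", split_totals(SPLITS))
    print(f"imbalance ratio: {imbalance_ratio(SPLITS):.1f}")
    for cls, weight in inverse_frequency_weights(SPLITS).items():
        print(f"class {cls}: weight {weight:.2f}")
```

With these counts, the nine species classes contribute 24,028 training, 3256 validation, and 4362 test images, and the largest training class is roughly 24 times the size of the smallest.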

| Model | Precision | Recall | F1-Score | mAP |
|---|---|---|---|---|
| AIDN | 92% | 91% | 92% | 92% |
| Baseline models | | | | |
| YOLO v4 | 80% | 75% | 77% | 76% |
| YOLO v5 | 82% | 81% | 82% | 80% |
| YOLO v6 | 84% | 84% | 83% | 83% |
| YOLO v7 | 83% | 85% | 83% | 81% |
| YOLO v8 | 85% | 84% | 85% | 82% |
| YOLO v9 | 85% | 85% | 84% | 83% |
| YOLO v10 | 85% | 86% | 85% | 82% |
| YOLO v11 | 86% | 85% | 85% | 84% |
| SSD | 78% | 74% | 76% | 74% |
| Faster R-CNN | 82% | 80% | 81% | 79% |
| SOTA models | | | | |
| Ref. [6] | 79% | 77% | 77% | 79% |
| Ref. [15] | 81% | 81% | 82% | 81% |
| Ref. [16] | 88% | 87% | 87% | 85% |
| Ref. [17] | 87% | 87% | 88% | 82% |
| Ref. [18] | 79% | 75% | 77% | 77% |
| Ref. [19] | 89% | 87% | 87% | 87% |
| Ref. [20] | 78% | 77% | 79% | 78% |
| Ref. [21] | 89% | 88% | 88% | 87% |
| Ref. [22] | 85% | 85% | 86% | 82% |

| Model | Precision (%) | Recall (%) | F1-Score (%) | mAP (%) | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| AIDN | 92 | 91 | 92 | 92 | 15 | 30 |
| YOLO v4 | 80 | 75 | 77 | 76 | 20 | 25 |
| YOLO v5 | 82 | 81 | 82 | 80 | 22 | 28 |
| SSD | 78 | 74 | 76 | 74 | 18 | 22 |
| Faster R-CNN | 82 | 80 | 81 | 79 | 25 | 20 |
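
To make the accuracy and efficiency trade-off in the table above easier to read, the reported figures can be expressed relative to the AIDN. The sketch below is plain arithmetic on the table values; the function name `relative_to` and the three derived quantities are illustrative choices rather than metrics defined in the source.

```python
# (mAP %, GFLOPs, FPS) copied from the efficiency table.
ROWS = {
    "AIDN": (92, 15, 30),
    "YOLO v4": (76, 20, 25),
    "YOLO v5": (80, 22, 28),
    "SSD": (74, 18, 22),
    "Faster R-CNN": (79, 25, 20),
}


def relative_to(reference, rows):
    """Compare every other model against `reference` using the table values."""
    ref_map, ref_gflops, ref_fps = rows[reference]
    comparison = {}
    for name, (map_pct, gflops, fps) in rows.items():
        if name == reference:
            continue
        comparison[name] = {
            # Percentage points of mAP gained by the reference model.
            "map_gain_points": ref_map - map_pct,
            # Share of this model's GFLOPs that the reference model saves.
            "gflops_saving_pct": 100 * (gflops - ref_gflops) / gflops,
            # Ratio of the reference model's FPS to this model's FPS.
            "fps_speedup": ref_fps / fps,
        }
    return comparison


if __name__ == "__main__":
    for name, stats in relative_to("AIDN", ROWS).items():
        print(
            f"{name}: +{stats['map_gain_points']} mAP points, "
            f"{stats['gflops_saving_pct']:.1f}% fewer GFLOPs, "
            f"{stats['fps_speedup']:.2f}x FPS"
        )
```

On the reported numbers, the AIDN needs between roughly 17% (vs. SSD) and 40% (vs. Faster R-CNN) fewer GFLOPs than the listed baselines while reporting a higher mAP than each of them.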

| Experimental Setting | MSFF Module | Attention Mechanisms | Custom Loss Function | Precision (%) | Recall (%) | F1-Score (%) | mAP (%) |
|---|---|---|---|---|---|---|---|
| Baseline (Full Model: AIDN) | ✓ | ✓ | ✓ | 92 | 91 | 92 | 92 |
| Without MSFF Module | ✗ | ✓ | ✓ | 87 | 85 | 86 | 86 |
| Without Attention Mechanisms | ✓ | ✗ | ✓ | 89 | 88 | 88 | 88 |
| With Standard Loss Function | ✓ | ✓ | ✗ | 90 | 89 | 89 | 90 |
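
The ablation table above is easiest to interpret as the drop in each metric when one component is removed. The sketch below computes those drops from the reported values and ranks the components by mAP loss; the helper names are hypothetical. Because each row removes a single component, the drops describe individual contributions and are not expected to add up.

```python
# Metrics (%) copied from the ablation table.
FULL_MODEL = {"precision": 92, "recall": 91, "f1": 92, "map": 92}
ABLATIONS = {
    "without MSFF module": {"precision": 87, "recall": 85, "f1": 86, "map": 86},
    "without attention mechanisms": {"precision": 89, "recall": 88, "f1": 88, "map": 88},
    "with standard loss function": {"precision": 90, "recall": 89, "f1": 89, "map": 90},
}


def metric_drops(full, ablations):
    """Percentage-point drop of each metric relative to the full model."""
    return {
        setting: {metric: full[metric] - value for metric, value in metrics.items()}
        for setting, metrics in ablations.items()
    }


def rank_by_map_drop(drops):
    """Ablation settings ordered from largest to smallest mAP drop."""
    return sorted(drops, key=lambda setting: drops[setting]["map"], reverse=True)


if __name__ == "__main__":
    drops = metric_drops(FULL_MODEL, ABLATIONS)
    for setting in rank_by_map_drop(drops):
        print(f"{setting}: {drops[setting]}")
```

On the reported figures, removing the MSFF module costs the most (6 mAP points), followed by the attention mechanisms (4 points) and the custom loss function (2 points).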

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khujamatov, H.; Muksimova, S.; Abdullaev, M.; Cho, J.; Jeon, H.-S. Advanced Insect Detection Network for UAV-Based Biodiversity Monitoring. Remote Sens. 2025, 17, 962. https://doi.org/10.3390/rs17060962
