Abstract

Due to its unique geometric configuration, passive radar offers enhanced surveillance capabilities for low-altitude targets. Traditional passive radar signal processing typically relies on energy accumulation and Constant False Alarm Rate (CFAR) detection. However, insufficient accumulation gain or mismatched statistical models in complex electromagnetic environments can compromise detection performance. To address these challenges, this paper proposes an intelligent target detection method for passive radar. Specifically, a residual network is integrated with a Squeeze-and-Excitation (SE) module, which preserves the powerful feature extraction capabilities of the residual network while improving the model’s ability to adaptively adjust channel weights. This fusion effectively enhances the target detection process. Furthermore, based on the particle swarm algorithm, a gray wolf population search strategy and a multi-target iterative search mechanism are introduced to enable the rapid extraction of time-frequency difference parameters for multiple targets. Both simulation and field experiments demonstrate that the proposed method enables intelligent detection of low–slow–small targets in passive radar, ensuring efficient time-frequency parameter extraction while maintaining a high detection success rate.

1. Introduction

With the rapid advancement of unmanned aerial vehicle (UAV) technology, UAVs have been widely utilized in reconnaissance, surveillance, and aerial photography. However, the increasing frequency of unauthorized UAV flights highlights the urgent need for detection technologies specifically designed for low–slow–small targets. Passive radar (PR) is a radar system that uses non-cooperative ambient electromagnetic signals for the silent detection of targets in specific areas [1,2]. Compared to active radar, passive radar offers significant advantages [3,4,5], including strong anti-jamming capabilities, effectiveness against stealth targets, efficient spectrum utilization, and ease of networking and deployment. Given these advantages, passive radar shows significant potential for applications in military reconnaissance, civil aviation, and traffic monitoring [6,7].

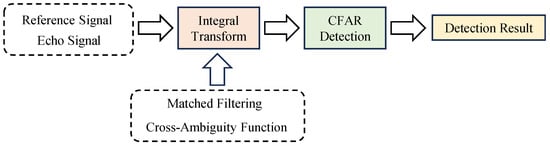

As a bistatic radar system, passive radar typically uses two channels for signal reception: the reference channel captures the direct signal from the radiation source, while the surveillance channel receives the target’s reflected echoes [8]. Current target detection methods often enhance SNR through pulse compression or cross-ambiguity accumulation [9], focusing the target’s energy for improved detection. A statistical model is then constructed to generate detection statistics [10,11] based on the principle of ‘energy detection’. The traditional radar target detection process is shown in Figure 1. Traditional detection methods rely on expansion of the available signal dimensions [12]. Examples include one-dimensional CFAR detection for time-domain radar sequences [13] and two-dimensional techniques such as range Doppler moving target detection, 2D CFAR for range azimuth, and space-time (spatial Doppler) processing. However, for the detection of extremely weak target echoes, insufficient accumulation time or complex target echoes causing model mismatches may degrade the detection performance of these methods [14,15]. In addition to the traditional ‘energy accumulation and target detection’ approaches, other techniques also enable effective target detection. In [16], sparse decomposition of direct and echo signals was used, eliminating the need for energy accumulation. In [17], the strengths of RNNs for time-series processing were demonstrated, using LSTM for radar signal prediction and anomaly detection, enabling target detection in cluttered sea environments. Thus, while energy accumulation enhances SNR and aids in target detection, it is not essential for effective detection.

Figure 1.

Traditional radar target detection process.

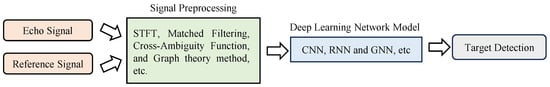

With the improvement of computer performance and the development of neural networks, deep learning has made significant progress in the intelligent information processing of radar data, particularly in addressing classification problems that traditional methods struggle to solve. Target detection is typically framed as a binary hypothesis testing problem [12], where the goal is to determine whether the received signal is a target echo or just noise. Deep learning’s powerful ability to model high-dimensional data enables comprehensive characterization of target echo features, such as RCS fluctuations, target shape, and Doppler frequency. This facilitates the extraction of critical features from target echoes, distinguishing them from background noise and enabling effective detection. The target detection process based on deep learning is shown in Figure 2. Several studies have demonstrated the potential of deep learning in this field. The combination of auto-encoders and convolutional neural networks enables the extraction of deeper feature information [18]. RNNs can capture the correlated features of potential sequences within the time-frequency distribution matrix [19]. By leveraging a dual-channel convolutional neural network to capture multi-dimensional features of targets and clutter in both time and frequency domains, robust target detection can be achieved [20]. In [21], a multi-frame RD spectrum was used, and a sliding window was applied to obtain observation vectors, with detection performed by an MLP detector. By modeling the target echo as a frequency-modulated signal and transforming it into a time-frequency image, GoogLeNet and LeNet can be applied for classification [22]. In [23], a complex-valued UNet network was used to extract features from both the amplitude and phase of the echo to achieve clutter suppression and target detection.
In [24], deep neural networks utilized the time-frequency features extracted from the echo cells to achieve detection. Based on the constructed pulse Doppler radar R-D image dataset, a CNN-based detection model was proposed that can accurately detect and locate UAV targets [25]. To address the problem of multi-scale and multi-scene SAR ship detection, a densely connected multi-scale neural network was designed within the Faster R-CNN framework [26]. To address the fact that the features in the range-Doppler map vary over time, 3D-CNN was applied to the 3D representation of time-varying features [27]. In [28], the spectrum of the echo sequence was used as the feature of graph nodes. The adjacency matrix of the graph was computed using the spatiotemporal and power information between the sequences. Classification of the graph nodes was then performed using a graph convolutional network. By using the Visibility Graph (VG) algorithm, the radar echo sequence was modeled as a visibility graph. The graph was then classified using a GCN to achieve detection of weak sea surface targets [29]. In [30], by processing the measured sea clutter data in the time domain, the Laplacian matrix of the graph was constructed, and its maximum eigenvalue was extracted as the detection feature, thereby achieving target detection. It can be observed that deep learning in radar target detection has primarily focused on two-dimensional image processing, where SNR enhancement is achieved through preprocessing techniques such as integral transforms. While these methods improve the target echo SNR before detection, they do not inherently enhance the detector’s capability. Consequently, the detection of low–slow–small targets remains a significant challenge.

Figure 2.

Deep learning-based target detection workflow.

The cross-ambiguity function (CAF) [4] is a classical tool for time-delay parameter estimation. However, for accurate estimation of time-frequency differences across various target types, longer detection ranges and faster target velocities expand the search space, significantly increasing the computational load of the CAF. Existing methods of calculating the CAF can be broadly categorized into two types. The first involves exhaustive search. The FFT-based cross-ambiguity function calculation method treats the echo signal and the conjugate of the reference signal, with applied time delay, as a whole and performs FFT processing to traverse the Doppler ambiguity function at specific time delays [31]. The cross-correlation FFT method can obtain the ambiguity function results corresponding to all time delays at the given Doppler frequency [32]. The time-frequency peak search method effectively reduces the search time but still incurs inevitable computational redundancy [33]. The second involves non-exhaustive search. Using a multi-population particle swarm optimization algorithm effectively reduces the number of frequency points to search but is prone to falling into local optima [34].

This paper proposes an intelligent detection method for Low, Slow, and Small (LSS) targets. First, deep feature extraction of the radar echo signals is performed using SE-ResNet34 [35]. The channel attention mechanism effectively captures dependencies between the network’s channels, enabling the detection of subtle changes in the radar echoes and accurately determining target presence. The proposed target detection method leverages the powerful feature extraction capability of deep networks to characterize the background environment and target features, effectively overcoming the statistical model mismatch of traditional digital signal processing. Moreover, this method directly uses the echo signal for network training, without the need for integration or transformation processing. Next, based on the PSO algorithm [34], a gray wolf upper-layer individual guidance and encircling search strategy is introduced, along with a multi-target search mechanism, facilitating the rapid estimation of time-frequency difference parameters for multiple targets while further reducing computational redundancy. Simulation and experimental data demonstrate that the proposed method enables intelligent detection of LSS targets and facilitates the rapid extraction of target time-frequency difference parameters.

2. Passive Radar Signal Model

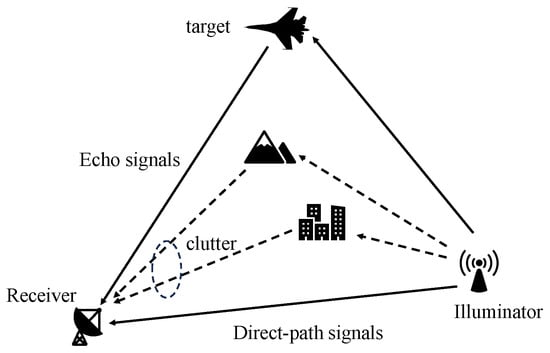

The passive radar system based on DTMB (Digital Terrestrial Multimedia Broadcasting) signals primarily consists of three components: the target, the receiving station, and the transmitting station [8]. The transmitting station is a terrestrial DTMB transmitter with a known position. The receiving station typically uses two channels for signal reception: a reference channel and a surveillance channel. Its structural diagram is shown in Figure 3.

Figure 3.

Geometric model of passive radar.

The reference channel is directed toward the transmitting station, primarily receiving the direct path signal as the reference signal $s_{\mathrm{ref}}(t)$, which can be expressed as

$$s_{\mathrm{ref}}(t) = A_{\mathrm{ref}}\, d(t) + n_{\mathrm{ref}}(t) \tag{1}$$

where $d(t)$ denotes the complex envelope of the DTMB signal, $A_{\mathrm{ref}}$ denotes the amplitude scaling factor after the DTMB signal propagates through space, and $n_{\mathrm{ref}}(t)$ represents complex Gaussian white noise. The surveillance channel is directed toward the target area, receiving the echo signal $s_{\mathrm{sur}}(t)$ scattered by the target, which can be expressed as

$$s_{\mathrm{sur}}(t) = \sum_{n=1}^{N} A_n\, d(t-\tau_n)\, e^{j 2\pi f_{d,n} t} + \sum_{m=1}^{M} c_m\, d(t-\tau_{c,m}) + n_{\mathrm{sur}}(t) \tag{2}$$

where $A_n$ represents the amplitude scaling factor of the DTMB signal after scattering and propagation through space by the n-th target, $\tau_n$ denotes the time delay of the echo from the n-th target, $f_{d,n}$ represents the Doppler frequency of the n-th target, $c_m$ represents the complex amplitude of the m-th received multipath clutter, M represents the number of multipath clutters, $\tau_{c,m}$ represents the time delay of each multipath clutter, N is the number of targets, and $n_{\mathrm{sur}}(t)$ denotes complex Gaussian white noise. By using the ECA clutter suppression algorithm [36], the clutter can be removed, and the echo signal can be expressed as

$$s_{\mathrm{sur}}(t) = \sum_{n=1}^{N} A_n\, d(t-\tau_n)\, e^{j 2\pi f_{d,n} t} + n_{\mathrm{sur}}(t) \tag{3}$$

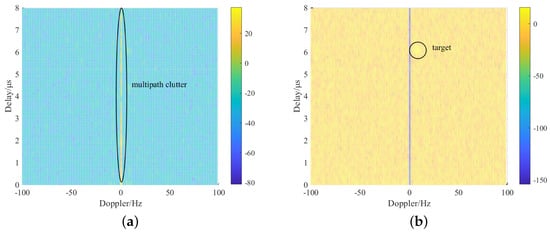

As shown in Figure 4, before clutter suppression, the energy of multipath clutter is significantly stronger than that of the target echo, completely masking the target and making detection impossible. However, after applying the ECA algorithm for clutter suppression, the target becomes more prominent.

Figure 4.

Multipath clutter suppression effect. (a) Before multipath clutter suppression; (b) After multipath clutter suppression.

The cross-ambiguity function is calculated using the received direct path signal $s_{\mathrm{ref}}(t)$ and the echo signal $s_{\mathrm{sur}}(t)$ and is defined as follows:

$$\chi(\tau, f_d) = \int_{0}^{T} s_{\mathrm{sur}}(t)\, s_{\mathrm{ref}}^{*}(t-\tau)\, e^{-j 2\pi f_d t}\, dt \tag{4}$$

where $\tau$ denotes the time delay, $f_d$ denotes the Doppler frequency, $T$ is the coherent integration time, and $(\cdot)^{*}$ represents the complex conjugation operation.

In the ideal case (without noise), the time delay ($\hat{\tau}$) and Doppler frequency ($\hat{f}_d$) of the echo signal relative to the reference signal correspond to the position of the maximum value in the range-Doppler matrix. This indicates that the time delay and Doppler frequency of the target echo can be expressed as follows:

$$(\hat{\tau}, \hat{f}_d) = \arg\max_{\tau,\, f_d} \left| \chi(\tau, f_d) \right| \tag{5}$$

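In modern radar signal processing, radar signals are typically stored in a discrete digital format. The discrete form of Equation (4) is given by

$$\chi[l, k] = \sum_{n=0}^{N_s-1} s_{\mathrm{sur}}[n]\, s_{\mathrm{ref}}^{*}[n-l]\, e^{-j 2\pi k n / N_s} \tag{6}$$

where $s_{\mathrm{sur}}[n]$ and $s_{\mathrm{ref}}[n]$ are the sampled echo and reference signals, $l = 0, 1, \ldots, L-1$ indexes the time-delay units, $L$ represents the number of time-delay units, $k = 0, 1, \ldots, K-1$ indexes the Doppler frequency units, $K$ represents the number of Doppler frequency units, and $N_s$ represents the number of sampling points within one coherent integration interval.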
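The discrete cross-ambiguity function and its peak search can be illustrated with a minimal NumPy sketch. It is a direct (exhaustive) evaluation, not the FFT-accelerated variants of [31,32]. The function name `cross_ambiguity`, the noise-like stand-in for the DTMB waveform, and all numerical settings (sampling rate, delay, Doppler, amplitudes) are assumptions chosen only for illustration.

```python
import numpy as np

def cross_ambiguity(ref, sur, max_delay, doppler_hz, fs):
    """Return |CAF| with shape (len(doppler_hz), max_delay + 1)."""
    n = np.arange(ref.size)
    caf = np.empty((len(doppler_hz), max_delay + 1))
    for i, fd in enumerate(doppler_hz):
        # Remove the candidate Doppler shift from the surveillance signal.
        comp = sur * np.exp(-2j * np.pi * fd * n / fs)
        for l in range(max_delay + 1):
            # Correlate with the reference delayed by l samples;
            # np.vdot conjugates its first argument.
            caf[i, l] = np.abs(np.vdot(ref[: ref.size - l], comp[l:]))
    return caf

# Illustrative values only (not the paper's settings).
rng = np.random.default_rng(0)
fs, num = 10_000.0, 4_000
true_delay, true_doppler = 7, 100.0

# Noise-like stand-in for the reference (direct path) waveform.
ref = (rng.standard_normal(num) + 1j * rng.standard_normal(num)) / np.sqrt(2)
n = np.arange(num)

# Echo: delayed, Doppler-shifted copy of the reference plus receiver noise.
echo = np.zeros(num, dtype=complex)
echo[true_delay:] = ref[: num - true_delay]
echo = echo * np.exp(2j * np.pi * true_doppler * n / fs)
noise = (rng.standard_normal(num) + 1j * rng.standard_normal(num)) / np.sqrt(2)
sur = 0.5 * echo + noise

doppler_hz = np.arange(-200.0, 201.0, 25.0)
caf = cross_ambiguity(ref, sur, max_delay=20, doppler_hz=doppler_hz, fs=fs)

# The peak location gives the delay and Doppler estimates.
k_hat, l_hat = np.unravel_index(np.argmax(caf), caf.shape)
est_doppler, est_delay = doppler_hz[k_hat], int(l_hat)
print(est_delay, est_doppler)
```

The double loop makes the cost of an exhaustive search explicit: every additional Doppler bin requires a full pass over all delays, which is the redundancy that non-exhaustive search strategies try to avoid.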

3. Intelligent Detection Method for Low–Slow–Small Targets

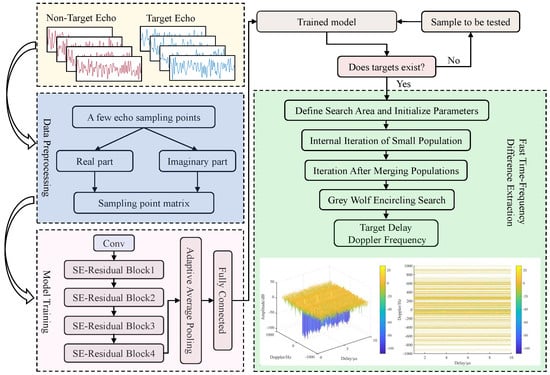

As shown in Figure 5, this paper proposes a deep learning-based method for intelligent detection of low–slow–small targets in passive radar, along with a fast multi-target time-frequency difference extraction method. These two methods are sequential: first, target detection determines if the echo contains a target, providing a basis for parameter extraction; then, the time-delay and Doppler frequency information of the targets is extracted using the multi-target method. The workflow is described as follows:

Figure 5.

Flowchart of the proposed method.

First, a small number of samples from the received radar echo signal are extracted for the creation of training and testing datasets. Since the radar signal is a complex sequence in practice, its real and imaginary parts are extracted, and the one-dimensional signal is transformed into a two-dimensional form to meet the network input dimension requirements, serving as two input channels for the neural network.

Next, the network is trained using the training set. The SE module’s channel attention mechanism and the residual module’s strong feature extraction capabilities are utilized to perform the ‘binary classification’ task for target detection.

Then, the trained network model is used for testing. If a target is detected, the process moves to the fast time-frequency difference extraction step; if no target is detected, a new sample is tested, and the process is repeated.

Finally, the fast extraction of target time-frequency differences is performed. By adding upper-layer individual guidance and an encircling search strategy, the rapid computation of the ambiguity function is achieved. Additionally, a multi-target search mechanism is incorporated, enabling the proposed algorithm to perform a multi-target search.
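The input preparation in the first step of the workflow above can be sketched as follows. The function name `echo_to_network_input` and the 32 × 32 layout are hypothetical, since the actual signal length and reshaping used in this paper are not reproduced here. The sketch only shows the idea of splitting a complex sequence into real and imaginary channels and folding each into a two-dimensional array.

```python
import numpy as np

def echo_to_network_input(echo, height, width):
    """Map a complex 1-D echo to a real-valued array of shape (2, height, width)."""
    if echo.size != height * width:
        raise ValueError("echo length must equal height * width")
    real = echo.real.reshape(height, width)   # channel 0: real part
    imag = echo.imag.reshape(height, width)   # channel 1: imaginary part
    return np.stack([real, imag]).astype(np.float32)

# Hypothetical sample of 1024 complex points folded into a 32 x 32 grid.
rng = np.random.default_rng(0)
echo = rng.standard_normal(1024) + 1j * rng.standard_normal(1024)
x = echo_to_network_input(echo, 32, 32)
print(x.shape)
```

Row-major reshaping keeps neighboring time samples adjacent within a row, which is one reasonable choice; other foldings are possible and may suit a given network better.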

3.1. Target Detection Model Based on Channel Attention Mechanism

3.1.1. Squeeze-and-Excitation Blocks

It can be seen from the signal model in Section 2 that when a target is present, the radar echo contains features such as the target’s motion information and RCS scattering variations, which are significantly different from the noise signal alone. In traditional convolutional neural networks, convolutional layers typically process the spatial information of input feature maps independently, while channel information is handled separately within each convolutional channel. Squeeze-and-excitation networks, in contrast, adaptively recalibrate channels, enabling the network to more effectively capture inter-channel dependencies [35].

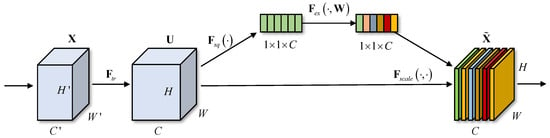

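As shown in Figure 6, the key to this network lies in performing the squeeze and excitation operations. First, the network applies global average pooling to the feature map of each channel, and its mathematical formulation is expressed as follows:

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j) \tag{7}$$

where $u_c(i, j)$ is the value of the feature map of channel $c$ at spatial position $(i, j)$ and $z_c$ is the squeezed output for that channel.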

Figure 6.

A squeeze-and-excitation block.

This operation ensures that each channel produces a single value, aggregating the global information of each channel and capturing its global feature. Suppose the input feature map is a tensor of size H × W × C, where H and W are the spatial dimensions and C is the number of channels. By performing global average pooling, a vector of size 1 × 1 × C is obtained. In radar echoes, signals that contain targets usually exhibit specific features, such as time delay and Doppler frequency. These features can be represented through the aggregation of global information. This is particularly important for the detection of low, slow, and small targets in radar echoes, as these targets may exhibit weak and localized signal characteristics.

Next, fully connected layers are employed to learn the weight relationships between channels. The excitation operation is performed on the compressed vector to adaptively generate the final weight for each channel. The mathematical formulation of this process is expressed as follows:

$$\mathbf{s} = \sigma\left( \mathbf{W}_2\, \delta\left( \mathbf{W}_1 \mathbf{z} \right) \right) \tag{8}$$

where $\mathbf{W}_1$ and $\mathbf{W}_2$ are the weight matrices of the fully connected layers, $\delta$ is the ReLU activation function [35], $\sigma$ is the sigmoid activation function, and $\mathbf{z}$ is the output from the squeeze operation. The result, $\mathbf{s}$, is the final adaptive weight vector for each channel. In radar signal processing, certain channels may contain key target features (such as time delay and Doppler frequency), while others may only represent noise. Through training, the SE module can automatically identify and enhance the channels relevant to target detection while suppressing noise or irrelevant channels.

Finally, the feature map of each channel is multiplied by its corresponding weight, which ‘amplifies’ the important channels and ‘suppresses’ the less important ones. Mathematically, this can be expressed as

$$\tilde{\mathbf{x}}_c = s_c \cdot \mathbf{u}_c \tag{9}$$

where $\mathbf{u}_c$ is the original feature map for channel c and $s_c$ is the scaling factor for that channel.
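The squeeze, excitation, and scaling operations described above can be sketched as a NumPy forward pass. This is a simplified illustration rather than the network used in this paper: biases are omitted, the weights are random instead of trained, and the channel count and reduction ratio (8 and 4) are hypothetical. The ReLU between the two fully connected layers follows the original SE design [35].

```python
import numpy as np

def se_block(u, w1, w2):
    """Apply squeeze-and-excitation to a feature map u of shape (C, H, W)."""
    z = u.mean(axis=(1, 2))                    # squeeze: global average pooling
    hidden = np.maximum(w1 @ z, 0.0)           # first FC layer + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # second FC layer + sigmoid
    return u * s[:, None, None], s             # scale each channel by its weight

rng = np.random.default_rng(0)
channels, reduction = 8, 4                     # hypothetical sizes
u = rng.standard_normal((channels, 16, 16))
w1 = rng.standard_normal((channels // reduction, channels))
w2 = rng.standard_normal((channels, channels // reduction))

recalibrated, s = se_block(u, w1, w2)
print(recalibrated.shape, s.round(3))
```

Because the sigmoid output lies strictly between 0 and 1, each channel is attenuated by a learned amount rather than switched on or off, which is what allows the block to emphasize informative channels smoothly.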

In conclusion, the SE module helps the network better focus on key features in small target signals. Low, slow, and small targets are typically small and produce weak echo signals. By enhancing critical channels, the SE module improves the network’s ability to perceive these signals and reduces interference from irrelevant information.

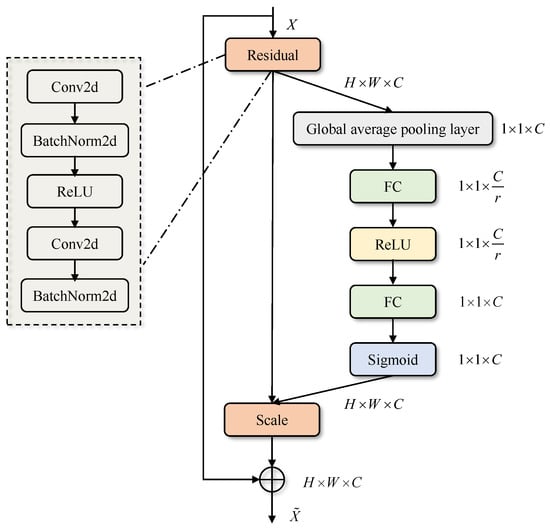

3.1.2. A Brief Introduction to SE-ResNet

SE-ResNet [35] is a variant of the traditional ResNet [37] that incorporates the squeeze-and-excitation mechanism to enhance channel-wise feature recalibration. The SE-residual block is shown in Figure 7.

Figure 7.

SE-residual block.

The residual module effectively mitigates the vanishing gradient problem that occurs with increasing model depth, ensuring performance improvement as the number of layers increases [37]. In this paper, ResNet34 is combined with a squeeze-and-excitation block.

In various image classification tasks, the combination of the SE block and ResNet has been shown to significantly enhance the performance of the ResNet model. The SE block enables the ResNet model to selectively focus on the most important features, allowing the network to learn more discriminative data representations, thereby improving the model’s prediction accuracy. The specific network structure parameters are presented in Table 1.

Table 1.

SE-ResNet34 parameters.

3.1.3. Fast Multi-Target Time-Frequency Difference Extraction Method

This paper presents an efficient method for the rapid extraction of multi-target time-frequency differences in passive radar. By integrating GWO [38] with PSO [34] and incorporating a multi-target iterative search mechanism, the proposed approach enables the accurate and efficient extraction of time delays and Doppler frequency shifts for multiple targets.

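If the process of searching for the target’s time-frequency difference is compared to the hunting process of a wolf pack, the position of the prey is analogous to the target’s Doppler frequency, while each individual wolf’s position represents the current Doppler frequency value during the search. Following the standard GWO formulation [38], the distance between the target’s Doppler frequency and the current Doppler frequency value during this process is given by (10), and the Doppler frequency update formula is provided in (11).

$$D = \left| C \cdot f_p(t) - f(t) \right| \tag{10}$$

$$f(t+1) = f_p(t) - A \cdot D \tag{11}$$

where $f_p(t)$ represents the target’s Doppler frequency (the prey position); $f(t)$ represents the current Doppler frequency value during the search; $t$ denotes the number of iterations; $f(t+1)$ indicates the Doppler frequency value after $t+1$ iterations; D is the distance between the target’s Doppler frequency and the current Doppler frequency; and $A$ and $C$ are coefficient factors, which are calculated as follows:

$$A = 2a \cdot r_1 - a \tag{12}$$

$$C = 2 r_2 \tag{13}$$

where $r_1$ and $r_2$ are random numbers in the range of $[0, 1]$ and $a$ is the convergence factor, which, at iteration $t$, is defined as follows:

$$a = 2 - \frac{2t}{t_{\max}} \tag{14}$$

where $t_{\max}$ is the maximum number of iterations set by the algorithm.

During each iteration, the algorithm updates $\alpha$, $\beta$, and $\delta$ (the three best-ranked wolves) while retaining their position information (current Doppler frequency), ensuring that each iteration is guided by superior individuals. The specific formulas for position updates during this phase are expressed as follows:

$$D_\alpha = \left| C_1 \cdot X_\alpha - X \right|, \quad D_\beta = \left| C_2 \cdot X_\beta - X \right|, \quad D_\delta = \left| C_3 \cdot X_\delta - X \right| \tag{15}$$

$$X_1 = X_\alpha - A_1 \cdot D_\alpha, \quad X_2 = X_\beta - A_2 \cdot D_\beta, \quad X_3 = X_\delta - A_3 \cdot D_\delta \tag{16}$$

$$X(t+1) = \frac{X_1 + X_2 + X_3}{3} \tag{17}$$

where $D_\alpha$, $D_\beta$, and $D_\delta$ represent the distances between $\alpha$, $\beta$, and $\delta$ and other individuals; $C_1$, $C_2$, and $C_3$ are random vectors; $A_1$, $A_2$, and $A_3$ are coefficient factors computed as in (12); $X_\alpha$, $X_\beta$, and $X_\delta$ denote the position of $\alpha$, $\beta$, and $\delta$, respectively; and $X$ represents the position of $\omega$.

Formula (16) defines the step length and direction of $\omega$ moving towards $\alpha$, $\beta$, and $\delta$, while Formula (17) defines the updated position of $\omega$.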
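The leader-guided position update described above can be sketched for a one-dimensional Doppler search. This follows the standard gray wolf optimizer with a linearly decreasing convergence factor, which is an assumption here and not necessarily the exact variant used in this paper. The names `gwo_step` and `toy_fitness`, the search bounds, and the population size are hypothetical. In practice the fitness would be the ambiguity function peak at each candidate Doppler frequency.

```python
import numpy as np

def gwo_step(freqs, fitness, t, t_max, rng, bounds=(-1000.0, 1000.0)):
    """One gray-wolf update of candidate Doppler frequencies (1-D positions)."""
    # Alpha, beta, delta: the three candidates with the largest fitness.
    leaders = freqs[np.argsort(fitness)[::-1][:3]]
    a = 2.0 - 2.0 * t / t_max                  # convergence factor, 2 -> 0
    candidates = []
    for leader in leaders:
        r1 = rng.random(freqs.size)
        r2 = rng.random(freqs.size)
        A = 2.0 * a * r1 - a                   # step coefficient
        C = 2.0 * r2                           # random weighting of the leader
        D = np.abs(C * leader - freqs)         # distance to this leader
        candidates.append(leader - A * D)      # move relative to this leader
    # New position: average of the three leader-guided moves, kept in range.
    return np.clip(np.mean(candidates, axis=0), *bounds)

def toy_fitness(f):
    # Hypothetical stand-in for the CAF peak magnitude, maximal at 145 Hz.
    return -np.abs(f - 145.0)

rng = np.random.default_rng(0)
freqs = rng.uniform(-1000.0, 1000.0, size=20)
t_max = 30
for t in range(t_max):
    freqs = gwo_step(freqs, toy_fitness(freqs), t, t_max, rng)
best = freqs[np.argmax(toy_fitness(freqs))]
print(best)
```

As the convergence factor shrinks, the coefficient A shrinks with it, so the population moves from wide exploration toward contraction around the three leaders. When the factor reaches zero every wolf lands exactly on the mean of the leader positions.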

The particle velocity and position update formulas proposed in [34] are expressed as (18) and (19), respectively:

Based on (18), the velocity update formula for the method proposed in this paper is derived as follows:

The velocity update formula exhibits the following characteristics: it retains the contraction factor () to accelerate the algorithm’s convergence speed, preserves the guiding mechanism of individual experience () and the optimal particle () from the original algorithm, and incorporates the upper-ranking wolf guidance strategy and encircling search strategy ().

To enhance the multi-target search capability of the algorithm, this paper introduces an iterative peak search mechanism. Specifically, by establishing a historical particle set and an optimal particle set to store searched particles and peaks, the algorithm temporarily excludes previously searched target peaks to avoid interference in subsequent iterations. The specific steps are shown in Algorithm 1.

Algorithm 1. Fast multi-target time-frequency difference extraction method for passive radar
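The idea of temporarily excluding already-found peaks can be illustrated with a deliberately simplified sketch. It is not Algorithm 1: the swarm search is replaced by a plain `argmax` over a precomputed Doppler profile, and the guard width, grid spacing, and peak shapes are hypothetical. Only the two target Doppler frequencies (145 Hz and −305 Hz) are taken from the two-target experiment in Section 4.2.

```python
import numpy as np

def iterative_peak_search(profile, freqs, num_targets, guard_hz):
    """Find peaks one at a time, masking a guard band around each found peak."""
    work = profile.astype(float).copy()
    found = []
    for _ in range(num_targets):
        idx = int(np.argmax(work))              # strongest remaining peak
        found.append(float(freqs[idx]))
        # Exclude the neighborhood of this peak from later iterations.
        work[np.abs(freqs - freqs[idx]) <= guard_hz] = -np.inf
    return found

# Synthetic Doppler profile with two bumps (illustrative shapes only).
freqs = np.arange(-1000, 1001, 5)
profile = (1.0 * np.exp(-((freqs - 145) ** 2) / (2 * 10.0 ** 2))
           + 0.8 * np.exp(-((freqs + 305) ** 2) / (2 * 10.0 ** 2)))

found = iterative_peak_search(profile, freqs, num_targets=2, guard_hz=50)
print(found)
```

Without the masking step, a second search would simply return a bin next to the strongest peak, which is why a single-peak optimizer cannot report multiple targets on its own.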

3.1.4. Computational Complexity Analysis

As shown in Table 2, is the number of Doppler frequency bins in the RD spectrum obtained by the cross-correlation FFT method. represents the number of algorithm iterations, N is the signal length, Q is the population size, and is the repetition factor for multiple particles updating to the same Doppler frequency. M is the number of targets, is the number of iterations for the small population, is the size of the small population, is the number of iterations for the gray wolf population, and is the size of the gray wolf population.

Table 2.

Comparison of the number of complex multiplications.

Since, in practical situations, the Doppler frequency range of the targets is unknown, the value of K is set as large as possible to ensure that all targets can be searched. Therefore, based on the algorithm’s principles, we have < and > . As a result, the method proposed in this paper reduces the computational complexity compared to the cross-correlation FFT method. However, it increases computational complexity compared to the method proposed in reference [34] due to the added iterative search mechanism, which ensures the multi-target search capability of the proposed method.

4. Experimental Results

4.1. Performance of SE-ResNet34 in Passive Radar Target Detection

4.1.1. Dataset Construction

The original data used in this experiment is obtained from field tests. A low, slow, and small target detection scenario based on passive radar is constructed, utilizing the Beigaofeng TV tower in Hangzhou as the signal source. The signal type is DTMB, with a bandwidth of 7.56 MHz and a carrier frequency of 530 MHz. The receiving station is situated in farmland in Jiaxing, where a large antenna array is employed to capture both the reference signal and the target echoes. The receiver’s sampling rate is 25 MHz. To control the SNR of the target echo, based on the passive radar echo signal model, time delay and Doppler frequency shifts are applied to the direct signal to simulate the target echoes.
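The echo synthesis described above (delaying and Doppler-shifting the direct signal, then setting the SNR) can be sketched as follows. The function name `simulate_echo` is hypothetical, a noise-like sequence stands in for the recorded DTMB direct signal, and the delay is rounded to whole samples, which is a simplification. The 25 MHz sampling rate comes from this section, and the 18 μs, 145 Hz, and −15 dB values are borrowed from the two-target example in Section 4.2.

```python
import numpy as np

def simulate_echo(direct, delay_s, doppler_hz, fs, snr_db, rng):
    """Delay and Doppler-shift the direct signal, then add noise at a set SNR."""
    n = np.arange(direct.size)
    shift = int(round(delay_s * fs))            # delay in whole samples
    delayed = np.zeros(direct.size, dtype=complex)
    delayed[shift:] = direct[: direct.size - shift]
    target = delayed * np.exp(2j * np.pi * doppler_hz * n / fs)

    # Scale complex white noise so that signal power / noise power = SNR.
    signal_power = np.mean(np.abs(target) ** 2)
    noise_power = signal_power / 10 ** (snr_db / 10)
    noise = np.sqrt(noise_power / 2) * (
        rng.standard_normal(n.size) + 1j * rng.standard_normal(n.size)
    )
    return target + noise, target, noise

rng = np.random.default_rng(1)
fs = 25e6                                       # receiver sampling rate
# Stand-in for the recorded direct path signal (assumption).
direct = (rng.standard_normal(50_000) + 1j * rng.standard_normal(50_000)) / np.sqrt(2)

echo, target, noise = simulate_echo(direct, 18e-6, 145.0, fs, -15.0, rng)
measured_snr_db = 10 * np.log10(
    np.mean(np.abs(target) ** 2) / np.mean(np.abs(noise) ** 2)
)
print(round(measured_snr_db, 2))
```

A noise-only sample is obtained by keeping only the `noise` term, and multi-target samples by summing several `target` terms before adding noise.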

Based on the constructed passive radar signal model, 60,000 training samples are generated. The signal length is set to , with half of the samples labeled as $H_0$ (containing only noise) and the other half as $H_1$ (containing both target and noise). The expected outputs are 0 for $H_0$ samples and 1 for $H_1$ samples. In constructing the training set for $H_1$ samples, the following assumptions are made: The number of targets is randomly generated within the range of . Each target’s initial time delay is randomly generated within the range of μs, and each target’s Doppler frequency is randomly generated within the range of . The SNR is set to one of the following values: . For each SNR value, 5000 data samples are generated, resulting in a total of 30,000 $H_1$ samples. Next, Test Set 1 is created with 18,000 samples, ensuring that the class distribution in this test set is consistent with that of the training set.

To evaluate the generalization ability of the model, Test Set 2 is constructed. The number of targets is randomly generated within the range of . Each target’s initial time delay and Doppler frequency are generated in the same manner as described earlier. The echo SNR values are set to range from 0 dB to −20 dB, with each SNR value generating 1500 $H_0$ samples and 1500 $H_1$ samples. Therefore, for each SNR value, a total of 3000 samples are generated.

4.1.2. Evaluation Metrics

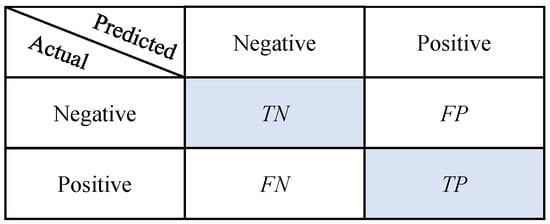

In handling binary classification problems, the test data are divided into two categories: positive and negative samples. As shown in Figure 8, TP (True Positive) refers to correctly classified positive samples, while FP (False Positive) refers to negative samples incorrectly classified as positive. TN (True Negative) represents correctly classified negative samples, and FN (False Negative) refers to positive samples incorrectly classified as negative.

Figure 8.

Confusion matrix.

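The formula for accuracy is defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{21}$$

The formula for the detection rate (also called recall) is defined as follows:

$$P_d = \frac{TP}{TP + FN} \tag{22}$$

The formula for the false alarm rate is defined as follows:

$$P_{fa} = \frac{FP}{FP + TN} \tag{23}$$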
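The three metrics above can be computed directly from confusion-matrix counts, as in the following sketch. The function name `detection_metrics` and the example counts are hypothetical and are not results from this paper.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute accuracy, detection rate (recall), and false alarm rate."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "detection_rate": tp / (tp + fn),      # fraction of targets detected
        "false_alarm_rate": fp / (fp + tn),    # fraction of noise flagged
    }

# Hypothetical counts: 100 target samples and 100 noise-only samples.
metrics = detection_metrics(tp=90, fp=5, tn=95, fn=10)
print(metrics)
```

Reporting the detection rate and false alarm rate alongside accuracy matters because accuracy alone cannot show whether errors come from missed targets or from false alarms.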

4.1.3. Network Effectiveness Analysis

During the training process, the backpropagation algorithm is employed to minimize the cross-entropy loss function. The hyperparameters are set as follows: the batch size is 64, the model is trained for 500 epochs, the learning rate is initialized at 0.001, and the Adam optimizer is utilized for optimization.

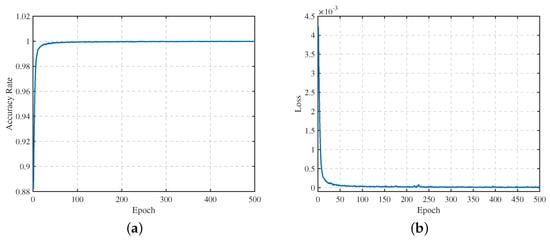

As shown in Figure 9, the network’s loss curve decreases rapidly during the first ten epochs. As the number of iterations increases, the loss gradually approaches zero and stabilizes. Concurrently, the model’s accuracy rises sharply, reaching approximately 99.5% within the first ten epochs. After 50 epochs of training, the accuracy on the training set approaches 100%, and it remains stable at this level throughout the remaining training process.

Figure 9.

The curves of training accuracy and loss. (a) Training accuracy variation; (b) training loss variation.

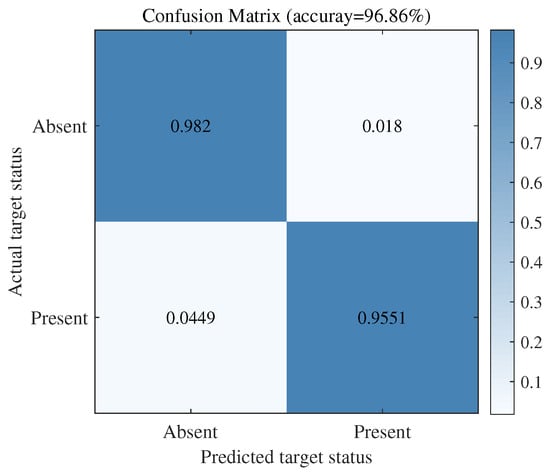

The performance of the network on Test Set 1 is shown in Figure 10. As illustrated, in the binary classification task discussed in this paper, when the target is absent, the probability of the network correctly predicting the result is 98.2%, while the probability of incorrect prediction is 1.8%; when the target is present, the probability of the network correctly predicting the result is 95.51%, while the probability of incorrect prediction is 4.49%. These results indicate that SE-ResNet34 demonstrates significant effectiveness and feasibility in passive radar target detection.
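As a rough consistency check, the two per-class rates can be combined into an overall accuracy. The worked step below assumes that Test Set 1 contains equal numbers of target-present and target-absent samples, in line with the balanced training set. Here $P_d$ is the detection rate and $P_{fa}$ the false alarm rate, so $1 - P_{fa}$ is the 98.2% correct-rejection rate.

```latex
\mathrm{Accuracy}
  = \frac{TP + TN}{TP + TN + FP + FN}
  = \frac{P_d + (1 - P_{fa})}{2}
  = \frac{0.9551 + 0.982}{2}
  \approx 0.969
```

Under that assumption, the confusion matrix corresponds to an overall accuracy of roughly 96.9%, with a false alarm rate of 1.8%.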

Figure 10.

The confusion matrix for Test Set 1.

4.1.4. Experiment on the Relationship Between Accuracy and SNR

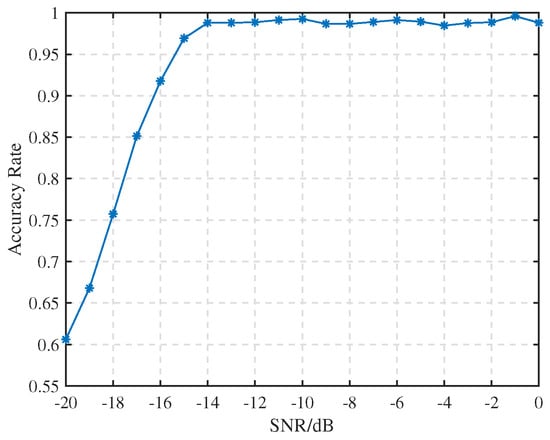

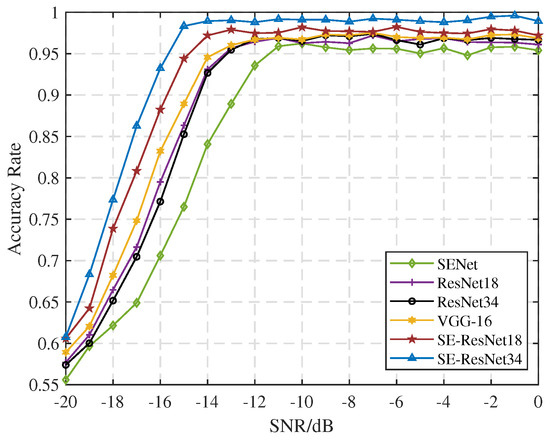

To further evaluate the feasibility and generalization ability of the network in the target detection task, Test Set 2 was used to assess how the accuracy of the network model varies under different SNR conditions. The experimental results are shown in Figure 11.

Figure 11.

Accuracy variation under different SNRs.

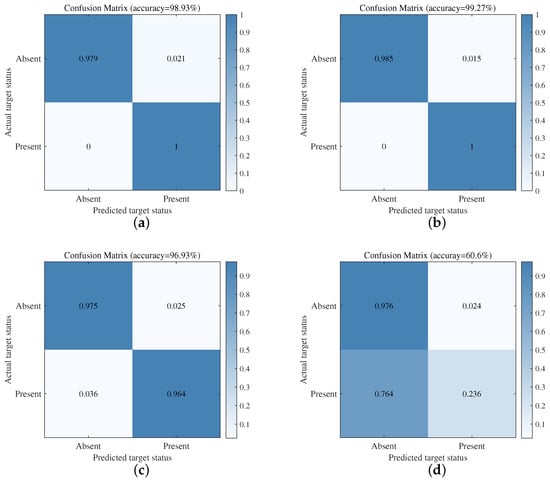

As shown in Figure 12, at the relatively high SNRs the network correctly predicts 100% of the samples in which the target is present; when the target is absent, only a small number of samples are misclassified, with a probability of approximately 1% to 2%. The overall accuracy is approximately 99%.

Figure 12.

The confusion matrix under different SNRs. (a) SNR = −5 dB; (b) SNR = −10 dB; (c) SNR = −15 dB; (d) SNR = −20 dB.

However, as the echo SNR decreases, the network’s probability of detecting positive samples also decreases. This trend is particularly noticeable when the SNR is very low, as the echo signal becomes increasingly dominated by noise. For example, when the SNR is −20 dB and the target is present, the probability of the network making a correct prediction is only 23.6%. Under these conditions, it becomes challenging for the network to effectively distinguish between the actual target features and the noise. As a result, detection accuracy significantly drops when the echo SNR is low.

4.1.5. Comparison with Other Deep Learning Networks

To demonstrate the superiority of the SE-ResNet34 model, this paper uses SE-ResNet18, ResNet34, ResNet18, VGG-16 [39], and SENet as benchmark models for comparison. All models are trained using the same set of parameters, including learning rate, optimizer, and others. The test sets used for the comparison experiments are Test Set 1 and Test Set 2.

Table 3 presents the computational costs of different deep learning networks. After adding the SE module, the increase in both the parameter count and computational cost of the residual networks is minimal: the SE module improves the model’s feature representation ability while keeping the parameter count under control. Comparing SE-ResNet18 with SE-ResNet34 shows that the number of parameters grows significantly as the network depth increases. VGG-16 has more total parameters than SE-ResNet, but its accuracy is lower. When computational resources permit, it is acceptable to trade computational resources for improved detection accuracy.

Table 3.

Computational costs of different methods.

From the comparison results in Table 4, it can be observed that SE-ResNet34 achieves the best performance in terms of accuracy, detection rate, and false alarm rate. However, due to its larger number of parameters, the average training time is longer, although still within an acceptable range. Overall, SE-ResNet34 shows outstanding performance in passive radar target detection.

Table 4.

Performance comparison of different models.

As shown in Figure 13, as the SNR decreases, the accuracy of all networks declines. Among them, SE-ResNet34 maintains the highest accuracy across a broad range of SNR values. SE-ResNet18 exhibits slightly lower accuracy due to its shallower architecture compared to SE-ResNet34. However, the increase in the number of parameters in SE-ResNet34, resulting from its deeper structure, does not significantly affect its performance. In contrast, ResNet18 and ResNet34 lack a channel attention mechanism, and SENet lacks a residual structure, which limits their ability to effectively extract deep features.

Figure 13.

Target detection accuracy under different SNRs.

4.2. Experimental Study on the Rapid Extraction of Time-Frequency Differences

After performing target detection on the test signal using the SE-ResNet34 network model, rapid time-frequency difference estimation is applied to signals identified as containing a target. A series of experiments is conducted to validate the effectiveness and efficiency of the proposed method.

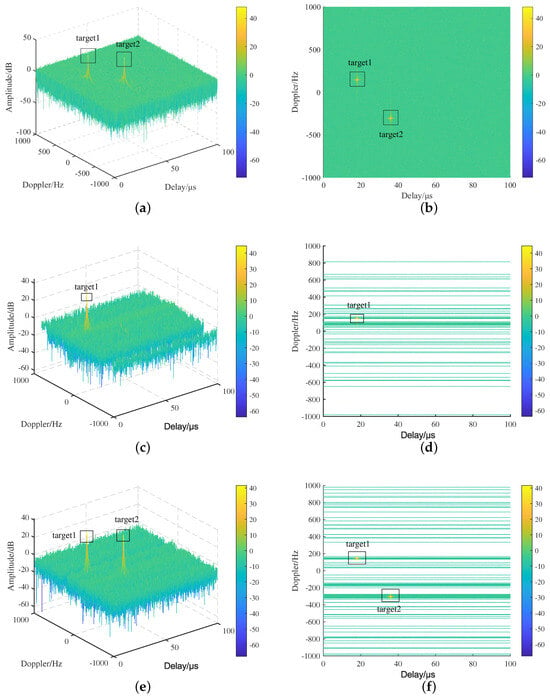

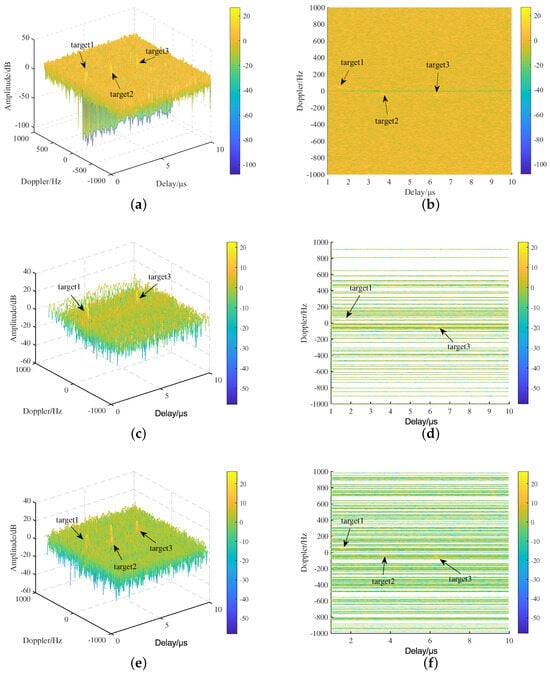

Assume the echo contains two targets with SNRs of −15 dB and −14 dB, respectively. Target 1 has a time delay of 18 μs and a Doppler frequency of 145 Hz, while target 2 has a time delay of 36 μs and a Doppler frequency of −305 Hz. The accumulation time is 0.5 s. Because no prior information about the targets is available, a wide-range search over time delay and Doppler frequency is necessary when the cross-ambiguity function is used for time-frequency difference estimation. The time-delay search range is set to [0, 100] μs, and the Doppler frequency search range is set to [−1000, 1000] Hz, with a total of 1000 frequency points.
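To make the exhaustive search concrete, the following sketch evaluates the cross-ambiguity function with one FFT-based cross-correlation per Doppler hypothesis. It is a simplified illustration and not the authors' implementation. The reference signal is white complex noise, the delay is applied circularly, and the sampling rate (1 MHz), the 20 ms accumulation time, and the 5 Hz Doppler step are all hypothetical values chosen to keep the run short.

```python
import numpy as np


def simulate_echo(ref, fs, targets, rng):
    """Delayed, Doppler-shifted copies of `ref` plus unit-power complex noise.

    targets: iterable of (delay_samples, doppler_hz, snr_db).
    The delay is circular (np.roll), which is adequate for a sketch.
    """
    n = len(ref)
    t = np.arange(n) / fs
    echo = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
    for delay, fd, snr_db in targets:
        amp = 10 ** (snr_db / 20)
        echo = echo + amp * np.roll(ref, delay) * np.exp(2j * np.pi * fd * t)
    return echo


def cross_ambiguity(ref, echo, fs, doppler_grid, max_delay_samples):
    """|CAF| on a (Doppler, delay) grid, one FFT cross-correlation per row."""
    n = len(ref)
    t = np.arange(n) / fs
    ref_spec_conj = np.conj(np.fft.fft(ref))
    caf = np.empty((len(doppler_grid), max_delay_samples + 1))
    for i, fd in enumerate(doppler_grid):
        # Remove the hypothesised Doppler shift from the echo.
        compensated = echo * np.exp(-2j * np.pi * fd * t)
        # Circular cross-correlation: corr[k] = sum_m compensated[m + k] * conj(ref[m]).
        corr = np.fft.ifft(np.fft.fft(compensated) * ref_spec_conj)
        caf[i] = np.abs(corr[: max_delay_samples + 1])
    return caf


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs, n = 1e6, 20000  # hypothetical: 1 MHz sampling, 20 ms accumulation
    ref = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
    # Two targets as in the text: (18 us, 145 Hz, -15 dB) and (36 us, -305 Hz, -14 dB).
    echo = simulate_echo(ref, fs, [(18, 145.0, -15.0), (36, -305.0, -14.0)], rng)
    grid = np.arange(-1000.0, 1001.0, 5.0)  # hypothetical 5 Hz Doppler step
    caf = cross_ambiguity(ref, echo, fs, grid, max_delay_samples=100)
    i, k = np.unravel_index(np.argmax(caf), caf.shape)
    print(f"strongest peak: delay {k / fs * 1e6:.0f} us, Doppler {grid[i]:.0f} Hz")
```

The cost of this baseline is proportional to the number of Doppler hypotheses, since every row requires a full forward and inverse FFT. That is why the number of frequency points is used as the measure of computational load in the comparisons that follow.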

Figure 14b,d,f display the corresponding accumulation results in the time-delay/Doppler plane, where each line represents the total ambiguity function results within the time-delay search range at a specific frequency point, and the color bar is in dB. The numbers of frequency points computed in these cases are 1000, 59, and 92, respectively. When two targets are present, both the cross-correlation FFT method and the proposed method detect all targets, and the computational complexity of the proposed method is approximately 1/10 that of the cross-correlation FFT method. The method proposed in [34] has the lowest computational complexity but cannot detect multiple targets, so it misses a target. The proposed method provides the best detection performance while maintaining an acceptable computational load.

Figure 14.

Time-frequency difference estimation results. (a) Cross-correlation FFT method; (b) top view of cross-correlation FFT; (c) method proposed in [34]; (d) top view of the method proposed in [34]; (e) the proposed method; (f) top view of the proposed method.

A Monte Carlo experiment was conducted on the ambiguity function calculation process, with each experiment repeated 100 times. Statistics were performed on the average number of frequency points, average computation time, and multi-target search success rate to provide a comprehensive measure of the proposed algorithm’s performance. The experimental results are shown in Table 5. The definition of the multi-target search success rate is expressed as follows:

Table 5.

Comparison of different methods for two targets.

As shown in Table 5, although the method proposed in [34] has the lowest computation time, it is unable to perform multi-target searches and fails to detect all targets. In contrast, the method proposed in this paper accurately detects all targets, with a computational cost approximately 1/8 of that of the cross-correlation FFT method. The proposed method significantly reduces the number of frequency search points while maintaining detection accuracy.

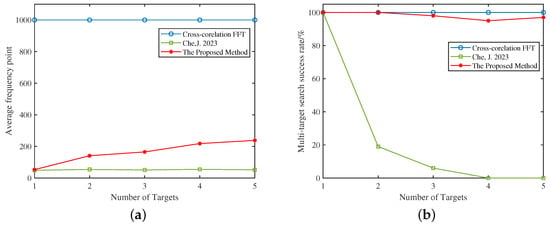

Next, the number of targets is varied, with the parameters for each target specified in Table 6. Monte Carlo experiments are performed for each target using the previously described search process, generating the search results for different methods with varying target quantities.

Table 6.

Target parameter settings.

As shown in Table 7, after varying the number of targets, the method proposed in [34] has the fewest frequency points and the shortest computation time but lacks multi-target detection capability, making it unsuitable for practical use. The proposed method achieves nearly 100% multi-target search success and significantly reduces the number of frequency points compared to the traditional method, and the increased computation time is acceptable.

Table 7.

Impact of the number of targets on algorithm performance.

As shown in Figure 15, as the number of targets increases, the proposed method’s frequency-point count rises but remains lower than that of the cross-correlation FFT method. The method proposed in [34] rapidly loses multi-target search success as the number of targets increases, failing to detect all targets. In conclusion, the proposed method maintains strong multi-target detection capability while significantly reducing computation.

Figure 15.

Performance of the cross-correlation FFT method, the method in [34], and the proposed method with varying target numbers. (a) Variation in average frequency points; (b) variation in search success rate.

4.3. Experiment with Measured Data

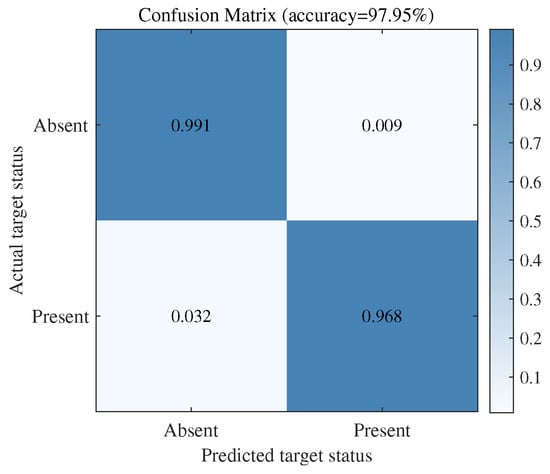

The field test scenario follows the description in Section 4.1, where DJI drones are flown at low altitude to serve as the targets for detection. First, the received echoes are processed using DBF at the receiver to enhance the target echo energy. Then, clutter suppression is applied to minimize the impact of environmental clutter. Finally, the processed echoes are used to construct the measured dataset. The training set consists of 3000 samples with targets and 3000 samples without targets. The test set includes 1000 samples with targets and 1000 samples without targets. Each sample consists of 1444 sampling points.

The data are preprocessed and fed into the network model for target detection. The confusion matrix of the test results, shown in Figure 16, validates the effectiveness of the network model. When the target is absent, the network predicts correctly with a probability of 99.1% and incorrectly with a probability of 0.9%; when the target is present, it predicts correctly with a probability of 96.8% and incorrectly with a probability of 3.2%.

Figure 16.

Confusion matrix for measured data.
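The rates above translate directly into the usual detection metrics. The sketch below assumes that the reported percentages correspond to whole-sample counts on the 1000 target-present and 1000 target-absent test samples (968/32 and 991/9). These counts are derived from the text rather than read from Figure 16, and the function name is hypothetical.

```python
def detection_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Standard binary-detection metrics from confusion-matrix counts."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "detection_rate": tp / (tp + fn),     # fraction of present targets detected
        "false_alarm_rate": fp / (fp + tn),   # fraction of empty samples flagged
        "precision": tp / (tp + fp),
    }


# Counts implied by 96.8% / 3.2% and 99.1% / 0.9% on 1000 + 1000 samples (derived).
metrics = detection_metrics(tp=968, fn=32, fp=9, tn=991)

if __name__ == "__main__":
    for name, value in metrics.items():
        print(f"{name}: {value:.4f}")
```

With these counts the overall accuracy on the measured test set is (968 + 991) / 2000 = 97.95%.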

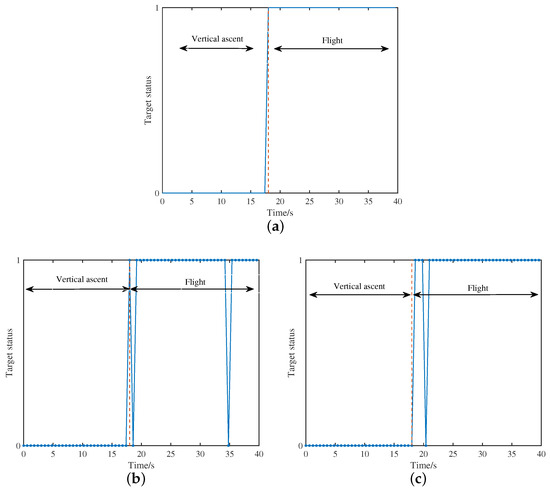

Now, the drones perform predefined maneuvers to evaluate the model’s effectiveness. The total data collection time is set to 40 s, with the drones ascending vertically from 0 to 18 s, followed by radial motion toward the receiver array from 18 to 40 s. The theoretical target status and detection results are shown in Figure 17, assuming the drones are in the ‘target present’ state during radial motion.

Figure 17.

Target status detection results. (a) Theoretical target status; (b) result of the proposed method; (c) results of the traditional method.

As shown in Figure 17, the detection results of the deep learning-based target detection network are close to the theoretical scenario. The fluctuation around 18 s occurs because the drones transition from vertical ascent to radial motion. The traditional passive radar method, which applies coherent accumulation followed by CFAR detection, also detects the target successfully. However, its overall computation time is too long to meet real-time requirements.
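For readers unfamiliar with the baseline, the sketch below shows a generic one-dimensional cell-averaging CFAR (CA-CFAR) detector applied to square-law detected samples. It is a textbook variant offered as an illustration; the CFAR variant and window sizes used in the paper's traditional pipeline are not specified in this section, so `num_train`, `num_guard`, and `pfa` are hypothetical values.

```python
import numpy as np


def ca_cfar(power, num_train, num_guard, pfa):
    """Cell-averaging CFAR on a 1-D array of square-law detected samples.

    num_train: training cells on each side of the cell under test.
    num_guard: guard cells on each side, excluded from the noise estimate.
    Cells too close to the edges to have a full window are left undetected.
    """
    power = np.asarray(power, dtype=float)
    n = len(power)
    n_cells = 2 * num_train
    # Threshold factor for exponentially distributed noise power.
    alpha = n_cells * (pfa ** (-1.0 / n_cells) - 1.0)
    half = num_train + num_guard
    detections = np.zeros(n, dtype=bool)
    for i in range(half, n - half):
        leading = power[i - half : i - num_guard]
        lagging = power[i + num_guard + 1 : i + half + 1]
        noise_level = (leading.sum() + lagging.sum()) / n_cells
        detections[i] = power[i] > alpha * noise_level
    return detections


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    power = rng.exponential(1.0, 200)  # unit-mean noise power
    power[100] = 100.0                 # one strong target cell
    hits = ca_cfar(power, num_train=16, num_guard=2, pfa=1e-4)
    print("detected cells:", np.flatnonzero(hits))
```

The threshold adapts to the local noise estimate, which keeps the false alarm rate constant only when the noise statistics match the assumed model. This is the mismatch risk that motivates the learned detector.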

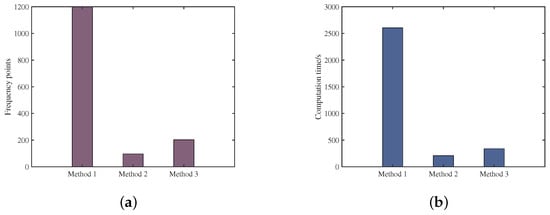

The time required by the proposed method includes the duration for testing with the trained model (T1) and the time for performing time-frequency difference extraction (T2). Since T1 is negligible compared to T2, only T2 is considered here. The time required by the traditional passive radar target detection method is mainly concentrated in the coherent accumulation step, i.e., the cross-correlation FFT algorithm described in this paper. A comparison of the time taken using the measured data is shown in Figure 18.

Figure 18.

Detection speed comparison. (a) Comparison of search frequency points; (b) comparison of computation time.

The cross-correlation accumulation time is 0.6 s, with a delay search range of μs and a Doppler search range of . The environment includes three drones as the detection targets. As shown in Figure 18, Method 1 is the cross-correlation FFT algorithm, Method 2 is the method proposed in [34], and Method 3 is the time-frequency difference extraction algorithm proposed in this paper. The three methods use 1200, 97, and 203 frequency points, and their computation times are 2605.76 s, 210.61 s, and 338.98 s, respectively. As shown in Figure 19, the cross-correlation FFT method detects all three targets but with significant computational redundancy, while the method proposed in [34] detects only two targets. The proposed method detects all three targets while significantly reducing computation, making it suitable for practical applications.

Figure 19.

Time-frequency difference estimation result. (a) Cross-correlation FFT method; (b) top view of cross-correlation FFT; (c) method proposed in [34]; (d) top view of the method proposed in [34]; (e) the proposed method; (f) top view of the proposed method.
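The savings on the measured data can be quantified from the figures quoted above. The short calculation below uses only the reported frequency-point counts and computation times; the dictionary layout and function name are illustrative.

```python
# (frequency points, computation time in seconds) as reported for the measured data.
measured = {
    "cross-correlation FFT": (1200, 2605.76),
    "method in [34]": (97, 210.61),
    "proposed": (203, 338.98),
}


def reduction_factors(results, baseline="cross-correlation FFT"):
    """Return how many times fewer points and less time each method needs than the baseline."""
    base_points, base_time = results[baseline]
    return {
        name: (base_points / points, base_time / seconds)
        for name, (points, seconds) in results.items()
    }


if __name__ == "__main__":
    for name, (points_x, time_x) in reduction_factors(measured).items():
        print(f"{name}: {points_x:.2f}x fewer points, {time_x:.2f}x less time")
```

Relative to the cross-correlation FFT method, the proposed method evaluates about 5.9 times fewer frequency points and runs about 7.7 times faster, while the method in [34] is about 12.4 times faster but misses one of the three targets.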

5. Discussion

In real-world applications, the proposed system may encounter several limitations that could impact its performance. Some of the most common factors include the following:

First, adverse weather conditions such as heavy rain, snow, or fog can significantly degrade the performance of radar and other sensing systems. Radar signals may be heavily attenuated by precipitation, resulting in reduced detection capabilities.

Next, due to its bistatic configuration, the passive radar system imposes strict terrain requirements. Obstructions such as mountains and tall buildings can block the echo signal and even the direct signal. Additionally, during long-range detection, the Earth’s curvature may hinder the reception of reference signals.

Finally, in practical applications, environmental parameters evolve over time. As a result, the features extracted by the network may no longer be applicable to the current conditions. Therefore, periodic data collection and network retraining are necessary to maintain optimal performance.

6. Conclusions

This paper proposes an intelligent target detection method based on passive radar. By incorporating a residual network architecture with a channel attention mechanism to construct SE-ResNet34, the proposed method effectively determines the presence or absence of targets in echo signals. The time-frequency differences of the targets are then efficiently extracted, enabling the successful completion of the passive radar target detection task. Simulation experiments demonstrate that the SE-ResNet34 model achieves a high target detection rate in the binary classification task. Furthermore, under low-SNR conditions, the accuracy of the proposed model surpasses that of the compared models. The simulation experiments also indicate that the proposed time-frequency difference extraction method is capable of multi-target time-frequency difference search, achieving a success rate above 95%, while significantly reducing the number of search frequency points. Field tests confirm that the proposed method performs robustly in real-world environments, highlighting its significant potential for engineering applications.

Although SE-ResNet34 maintains high accuracy, it also requires considerable computational resources. Developing a lightweight target detection network will be a key focus of future research. Moreover, a deeper analysis of the model’s generalization to larger datasets from real-world field experiments will be an important direction for further investigation. The proposed rapid time-frequency difference extraction method still exhibits computational redundancy relative to the fastest method compared in this paper. Further reducing the number of search frequency points and improving search efficiency will be important areas for future work. In addition, the practical performance of this method as the number of targets increases needs to be explored further.

Author Contributions

Conceptualization, C.W.; methodology, T.C.; software, T.C.; formal analysis, T.C. and Z.R.; data curation, H.Z.; writing—original draft preparation, T.C.; visualization, T.C. and Y.Y.; writing—review and editing, T.C. and C.W.; project administration, F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data that support this study are available from author H.Z. upon reasonable request.

Acknowledgments

Supported by the National Key Laboratory on Blind Signal Processing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Kuschel, H.; Cristallini, D.; Olsen, K.E. Tutorial: Passive radar tutorial. IEEE Aerosp. Electron. Syst. Mag. 2019, 34, 2–19.

2. Wan, X.; Yi, J.; Zhan, W.; Xie, D.; Shu, K.; Song, J.; Cheng, F.; Rao, Y.; Gong, Z.; Ke, H. Research Progress and Development Trend of the Multi-Illuminator-based Passive Radar. J. Radars 2020, 9, 939–958.

3. Colone, F.; Martelli, T.; Bongioanni, C.; Pastina, D.; Lombardo, P. WiFi-based PCL for monitoring private airfields. IEEE Aerosp. Electron. Syst. Mag. 2017, 32, 22–29.

4. Palmer, J.E.; Harms, H.A.; Searle, S.J.; Davis, L. DVB-T Passive Radar Signal Processing. IEEE Trans. Signal Process. 2012, 61, 2116–2126.

5. Wang, Q.; Du, P.; Yang, J.; Wang, G.; Lei, J.; Hou, C. Transferred deep learning based waveform recognition for cognitive passive radar. Signal Process. 2019, 155, 259–267.

6. Wan, X. An Overview on Development of Passive Radar Based on the Low Frequency Band Digital Broadcasting and TV Signals. J. Radars 2012, 1, 109–123.

7. Zhao, Y.; Hu, D.; Zhao, Y.; Liu, Z. Moving target localization for multistatic passive radar using delay, Doppler and Doppler rate measurements. J. Syst. Eng. Electron. 2020, 31, 939–949.

8. Pine, K.C.; Pine, S.; Cheney, M. The Geometry of Far-Field Passive Source Localization With TDOA and FDOA. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 3782–3790.

9. Huang, P.; Liao, G.; Yang, Z.; Xia, X.G.; Ma, J.T.; Ma, J. Long-Time Coherent Integration for Weak Maneuvering Target Detection and High-Order Motion Parameter Estimation Based on Keystone Transform. IEEE Trans. Signal Process. 2016, 64, 4013–4026.

10. Conte, E.; Lops, M.; Ricci, G. Asymptotically optimum radar detection in compound-Gaussian clutter. IEEE Trans. Aerosp. Electron. Syst. 1995, 31, 617–625.

11. Zhou, W.; Xie, J.; Zhang, B.; Li, G. Maximum Likelihood Detector in Gamma-Distributed Sea Clutter. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1705–1709.

12. Chen, X.; He, X.; Deng, Z.; Guan, J.; Du, X.; Xue, W.; Su, N.; Wang, J. Radar Intelligent Processing Technology and Application for Weak Target. J. Radars 2024, 13, 501.

13. Steenson, B.O. Detection Performance of a Mean-Level Threshold. IEEE Trans. Aerosp. Electron. Syst. 1968, AES-4, 529–534.

14. Chen, X.; Guan, J.; He, Y. Applications and Prospect of Micro-motion Theory in the Detection of Sea Surface Target. J. Radars 2013, 2, 123.

15. Liu, J.; Li, C.; Nie, Y.; Cui, G.; Wang, Y.; Xu, R. A target detection method based on deep learning. Radar Sci. Technol. 2020, 18, 667–671.

16. Herman, M.A.; Strohmer, T. High-Resolution Radar via Compressed Sensing. IEEE Trans. Signal Process. 2009, 57, 2275–2284.

17. Su, N.; Chen, X.; Guan, J.; Huang, Y.; Liu, N. One-dimensional Sequence Signal Detection Method for Marine Target Based on Deep Learning. J. Signal Process. 2020, 36, 1987–1997.

18. Kong, Y.; Feng, D.; Zhang, J. Radar HRRP Target Recognition Based on Composite Deep Networks. In Proceedings of the 2022 International Applied Computational Electromagnetics Society Symposium (ACES-China), Xuzhou, China, 9–12 December 2022; pp. 1–5.

19. Tang, T.; Wang, C.; Gao, M. Radar Target Recognition Based on Micro-Doppler Signatures Using Recurrent Neural Network. In Proceedings of the 2021 IEEE 4th International Conference on Electronics Technology (ICET), Chengdu, China, 7–10 May 2021; pp. 189–194.

20. Chen, X.; Su, N.; Huang, Y.; Guan, J. False-Alarm-Controllable Radar Detection for Marine Target Based on Multi Features Fusion via CNNs. IEEE Sens. J. 2021, 21, 9099–9111.

21. Wang, L.; Tang, J.; Liao, Q. A Study on Radar Target Detection Based on Deep Neural Networks. IEEE Sens. Lett. 2019, 3, 7000504.

22. Chen, X.; Su, N.; Guan, J.; Mou, X.; Xue, Y. Integrated Processing of Radar Detection and Classification for Moving Target via Time-frequency Graph and CNN Learning. In Proceedings of the 2019 URSI Asia-Pacific Radio Science Conference (AP-RASC), New Delhi, India, 9–15 March 2019; pp. 1–4.

23. Wang, Y.; Zhao, W.; Wang, X.; Chen, J.; Li, H.; Cui, G. Nonhomogeneous sea clutter suppression using complex-valued U-Net model. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4027705.

24. Qu, Q.; Wang, Y.L.; Liu, W.; Li, B. A false alarm controllable detection method based on CNN for sea-surface small targets. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4025705.

25. Wang, C.; Tian, J.; Cao, J.; Wang, X. Deep learning-based UAV detection in pulse-Doppler radar. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5105612.

26. Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A densely connected end-to-end neural network for multiscale and multiscene SAR ship detection. IEEE Access 2018, 6, 20881–20892.

27. Kim, Y.; Alnujaim, I.; You, S.; Jeong, B.J. Human detection with range-Doppler signatures using 3D convolutional neural networks. In Proceedings of the IGARSS 2020-2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2440–2442.

28. Su, N.; Chen, X.; Guan, J.; Huang, Y. Maritime target detection based on radar graph data and graph convolutional network. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4019705.

29. Chen, S.; Feng, C.; Huang, Y.; Chen, X.; Li, F. Small target detection in X-band sea clutter using the visibility graph. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5115011.

30. Yan, K.; Bai, Y.; Wu, H.C.; Zhang, X. Robust target detection within sea clutter based on graphs. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7093–7103.

31. Tolimieri, R.; Winograd, S. Computing the ambiguity surface. IEEE Trans. Acoust. Speech Signal Process. 1985, 33, 1239–1245.

32. Li, J.; He, Y.; Song, J. The algorithms and performance analysis of cross ambiguity function. In Proceedings of the 2009 IET International Radar Conference, Guilin, China, 20–22 April 2009; pp. 1–4.

33. Wang, Z.; Yan, T. Fast Time Delay Estimation Algorithm Using Ambiguity Function. Radio Commun. Technol. 2015, 41, 52–55.

34. Che, J.; Wang, C.; Jia, Y.; Ren, Z.; Liu, C.; Zhou, F. Fast algorithm for intelligent optimization of the cross ambiguity function of passive radar. J. Xidian Univ. 2023, 50, 21–33.

35. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

36. Colone, F.; O’Hagan, D.; Lombardo, P.; Baker, C. A multistage processing algorithm for disturbance removal and target detection in passive bistatic radar. IEEE Trans. Aerosp. Electron. Syst. 2009, 45, 698–722.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

38. Faris, H.; Aljarah, I.; Al-Betar, M.; Mirjalili, S. Grey wolf optimizer: A review of recent variants and applications. Neural Comput. Appl. 2018, 30, 413–435.

39. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).