Multi-Temporal and Multi-Resolution RGB UAV Surveys for Cost-Efficient Tree Species Mapping in an Afforestation Project

Abstract

1. Introduction

2. Materials and Methods

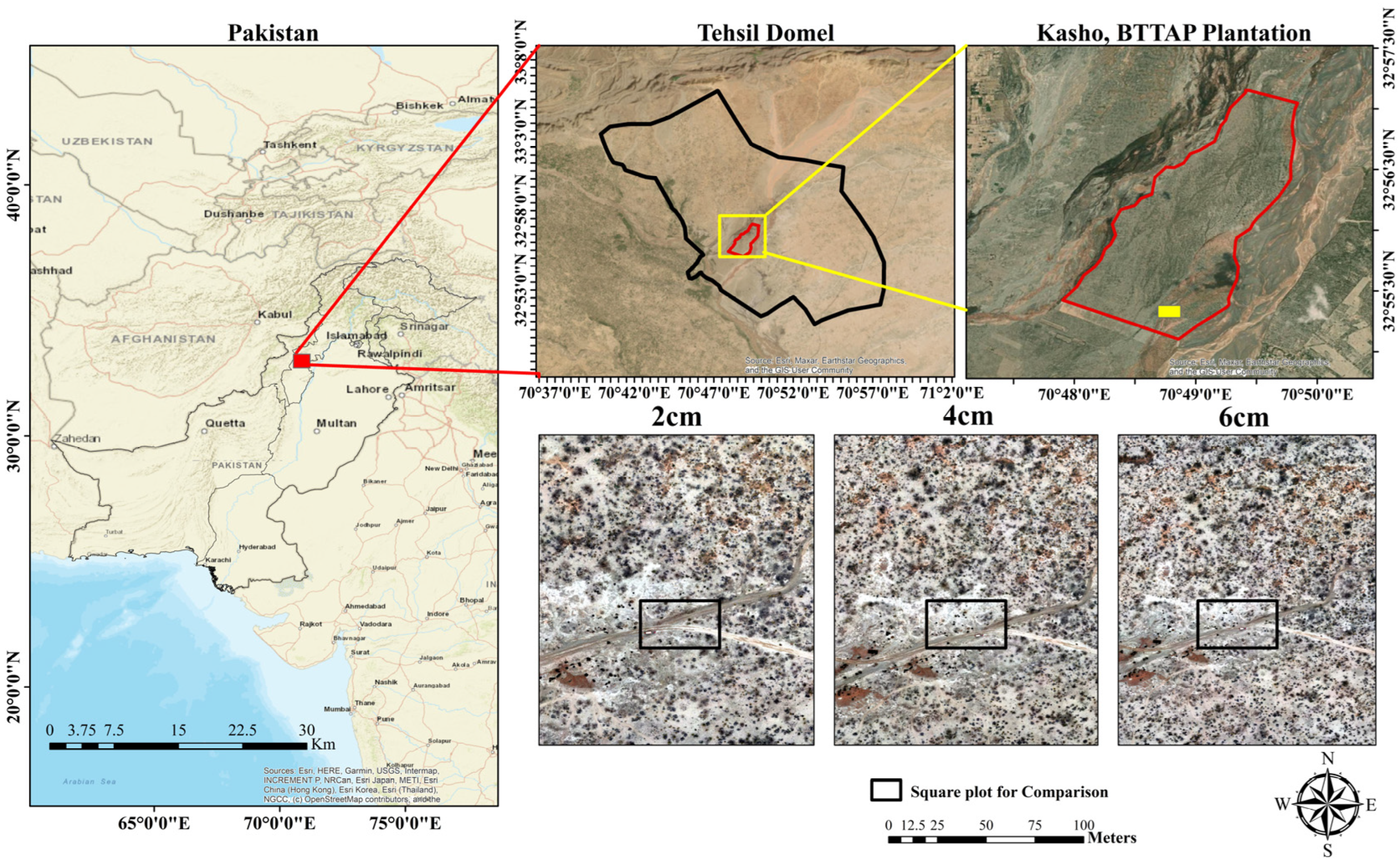

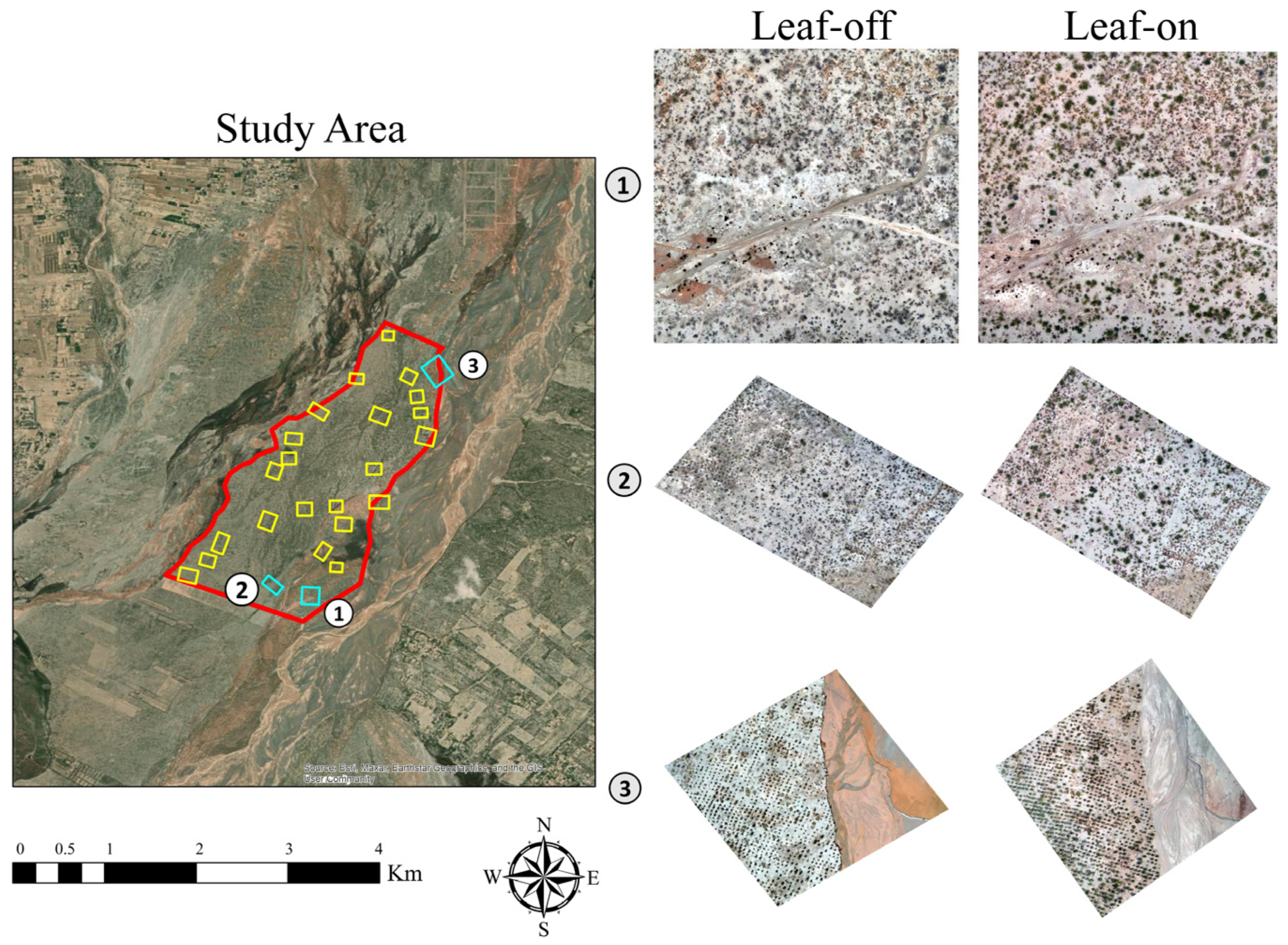

2.1. Study Area

2.2. Data Collection

Leaf-Off and Leaf-On Data Collection

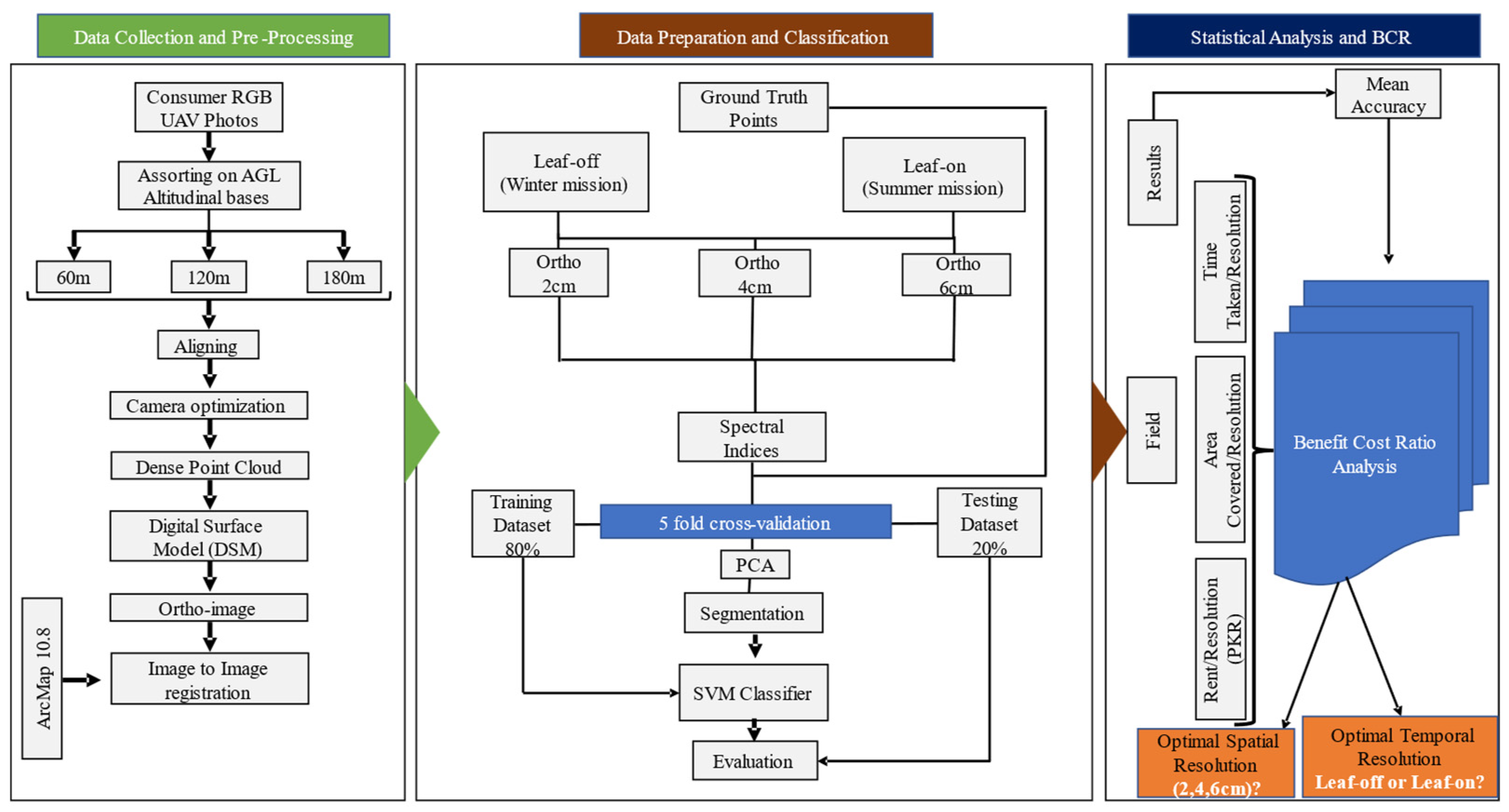

2.3. Method

2.3.1. Orthoimage Generation

2.3.2. Feature Preparation and Principal Component Analysis (PCA) for Effective Model Training

- Kernel: RBF;

- C: 1.0 (the default value in Scikit-learn, representing the penalty parameter for misclassification);

- Gamma: ‘scale’ (the default value in Scikit-learn, which resolves to 1/(n_features * X.var()), where n_features = 3 (RGB) + 1 (PCA) = 4 and X.var() is the variance computed over all values of the training feature matrix X, not of a single variable).

- W: the weight vector, which defines the orientation of the separating hyperplane in the feature space;

- b: the bias term, which shifts the hyperplane relative to the origin;

- Xi: the feature vector of the i-th sample, combining the RGB orthoimage bands and the PCA component used as classifier input;

- Yi: the class label of the i-th sample.
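The hyperparameters and symbols above can be checked directly against Scikit-learn. The minimal sketch below fits an `SVC` with the listed settings on a hypothetical 4-feature matrix (three columns standing in for R, G and B, one for a PCA component; the data and labels are invented), computes what `gamma='scale'` resolves to, and rebuilds the decision function by hand. With an RBF kernel the weight vector W is never formed explicitly: W·φ(x) + b is evaluated in the dual as Σ αᵢ yᵢ K(xᵢ, x) + b, and Scikit-learn exposes αᵢ yᵢ as `dual_coef_` and b as `intercept_`. This illustrates the formulation only; it is not the authors' training script.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.svm import SVC

rng = np.random.default_rng(42)

# Hypothetical feature matrix: columns 0-2 stand in for R, G, B and
# column 3 for a single PCA component, so n_features = 4.
X_train = rng.random((200, 4))
# Hypothetical binary labels (Yi), invented purely for illustration.
y_train = (X_train[:, 1] + X_train[:, 3] > 1.0).astype(int)
X_test = rng.random((20, 4))

# Settings as listed above: RBF kernel, C = 1.0, gamma = 'scale'.
clf = SVC(kernel="rbf", C=1.0, gamma="scale").fit(X_train, y_train)

# gamma='scale' resolves to 1 / (n_features * X.var()), where X.var()
# is taken over every entry of the training matrix, not one column.
gamma = 1.0 / (X_train.shape[1] * X_train.var())

# Dual form of W . phi(x) + b: sum_i alpha_i * y_i * K(x_i, x) + b.
# dual_coef_ holds alpha_i * y_i for each support vector, intercept_ holds b.
K = rbf_kernel(clf.support_vectors_, X_test, gamma=gamma)
manual_scores = (clf.dual_coef_ @ K + clf.intercept_).ravel()

print(np.allclose(manual_scores, clf.decision_function(X_test)))
```

Agreement between the hand-built scores and `decision_function` confirms both the gamma formula and the dual form of the hyperplane.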

2.3.3. Training and Validation

2.3.4. Entropy Analysis for Information Gain and Loss Assessment

- H(X) represents the entropy of a patch;

- p_i is the probability of occurrence of pixel value i within that patch.
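A minimal sketch of the patch entropy H(X) = −Σ p_i log2(p_i) in plain Python follows. Two choices here are assumptions rather than details from the text: the logarithm is taken in base 2 (so an 8-bit patch carries at most 8 bits of entropy), and windows are non-overlapping unless a smaller `step` is passed (`step=1` gives a fully sliding window). `patch_entropy` and `moving_window_entropy` are hypothetical helper names.

```python
import math
from collections import Counter


def patch_entropy(pixels):
    """Shannon entropy H(X) = -sum(p_i * log2(p_i)) of a sequence of pixel values."""
    counts = Counter(pixels)
    n = len(pixels)
    # p_i = count of pixel value i / number of pixels in the patch
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def moving_window_entropy(image, window, step=None):
    """Entropy of each window x window block of a 2-D list of pixel values.

    step defaults to window (non-overlapping blocks); step=1 slides pixel by pixel.
    """
    step = step or window
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(0, rows - window + 1, step):
        out.append([
            patch_entropy([image[i][j]
                           for i in range(r, r + window)
                           for j in range(c, c + window)])
            for c in range(0, cols - window + 1, step)
        ])
    return out


img = [[0, 0, 1, 1],
       [0, 0, 1, 1],
       [2, 3, 5, 5],
       [4, 5, 5, 5]]
print(moving_window_entropy(img, 2))
```

A uniform block has zero entropy, while the block holding four distinct values reaches log2(4) = 2 bits. The per-window values can then be summarized (min, max, mean, SD) for each orthoimage.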

3. Results

3.1. Impact of Resolution and Seasonal Conditions on Classification Accuracy and Variability

3.2. Resolution Impact on Area Coverage and Time Efficiency

3.3. Entropy and Information Gain/Loss Across Spatial and Temporal Resolutions

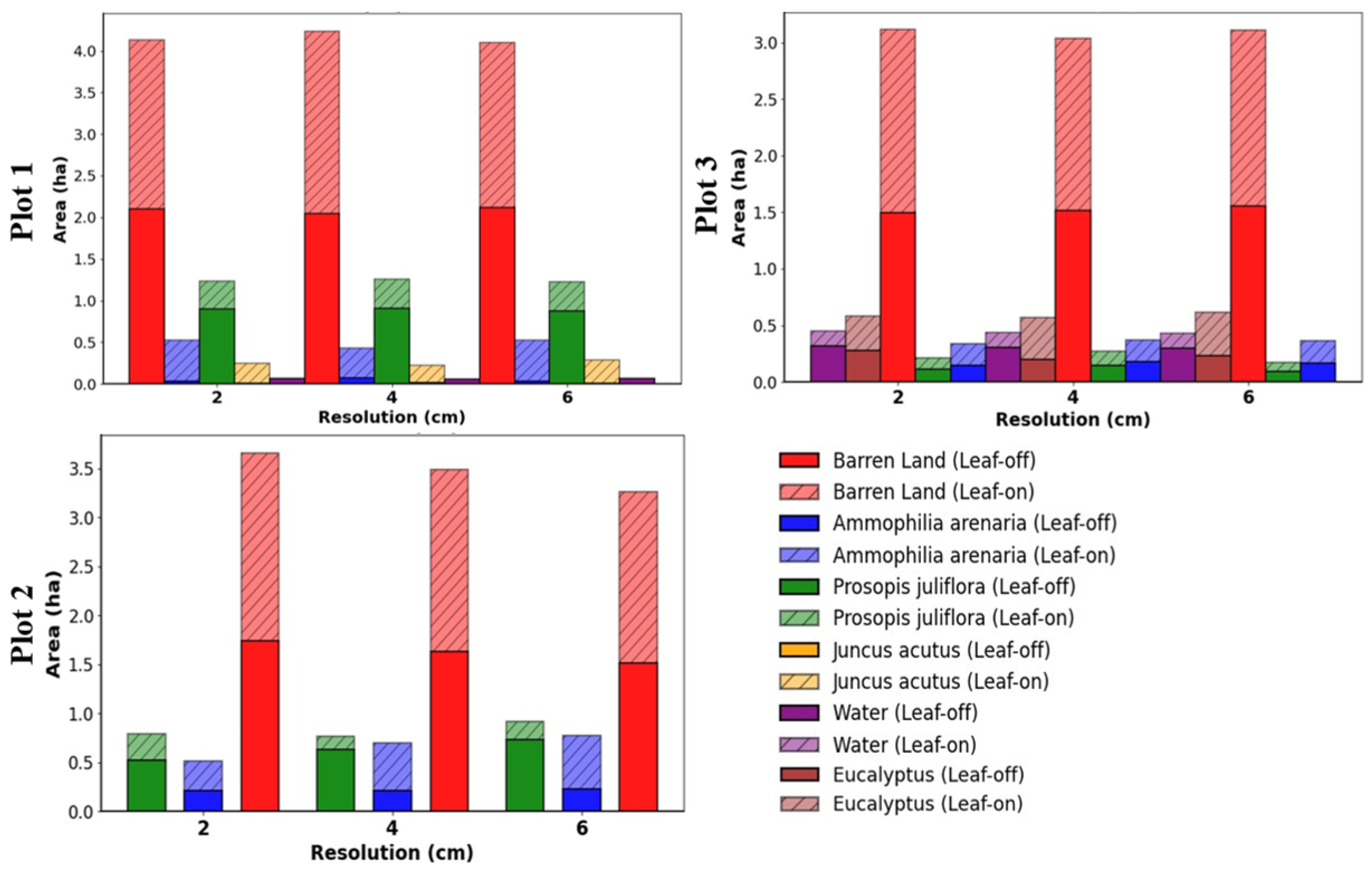

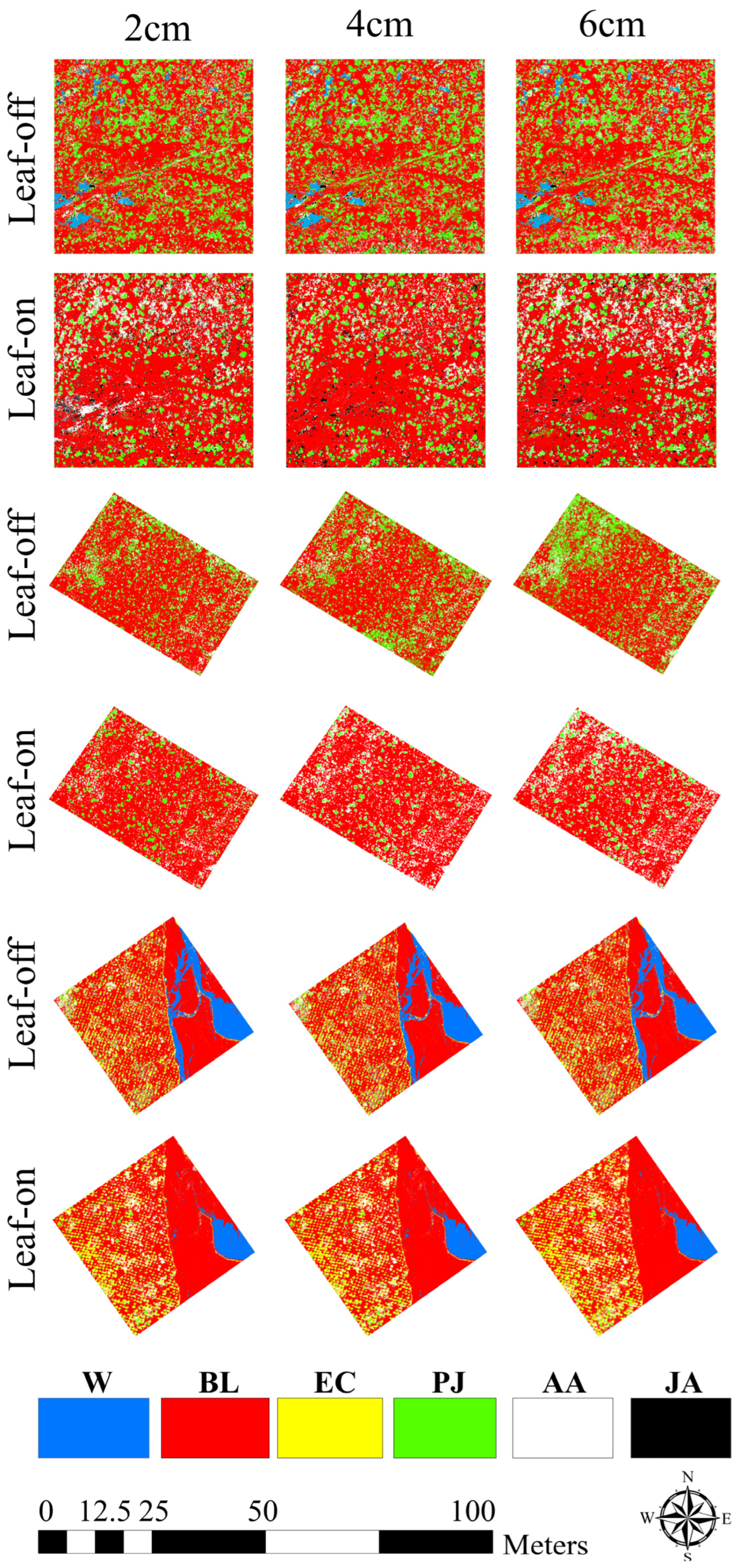

3.4. Spatial Distribution of Vegetation Classes and Area Coverage at Each Resolution

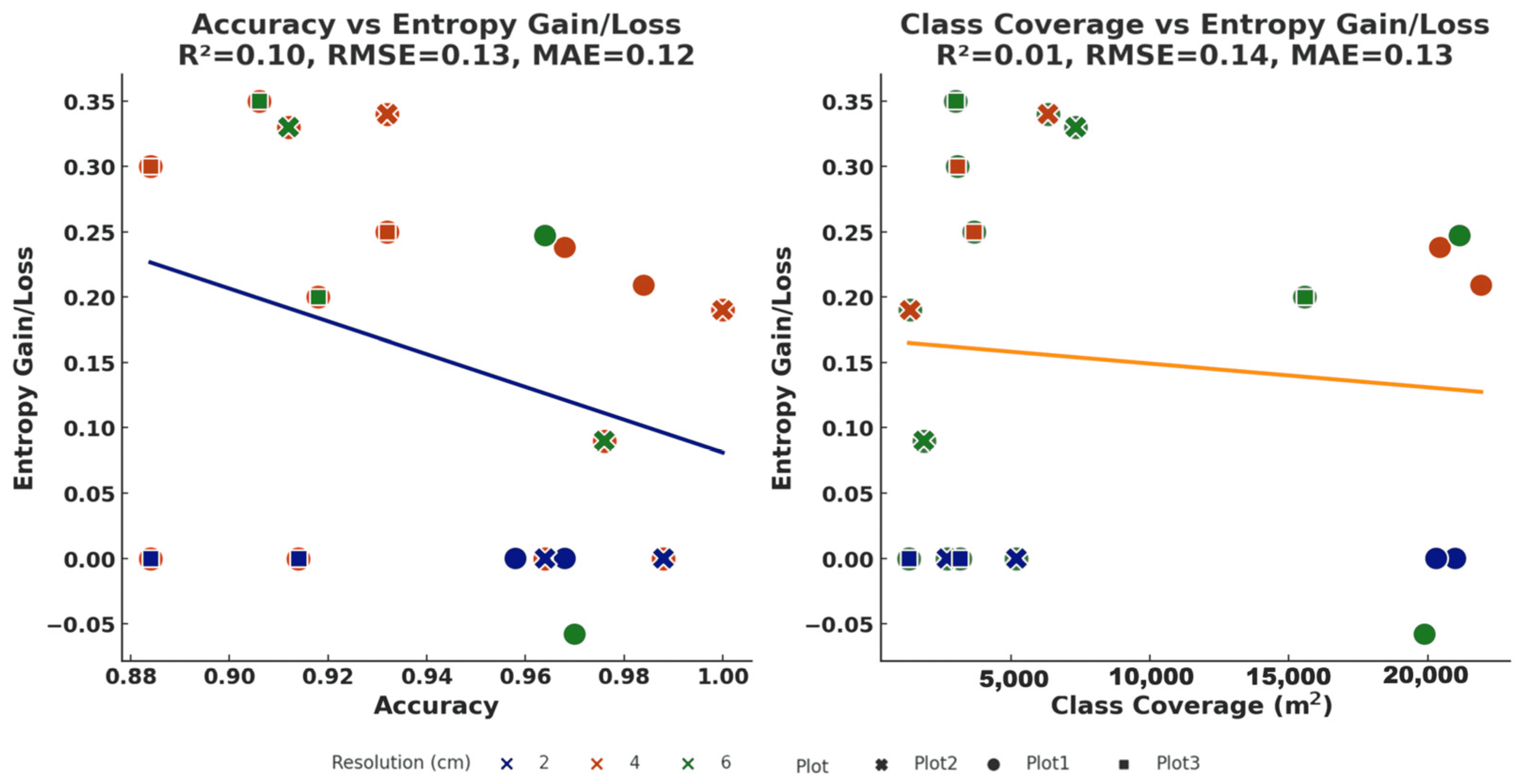

3.5. Resolution and Seasonal Effects on Information Dynamics, Accuracy, and Vegetation Coverage

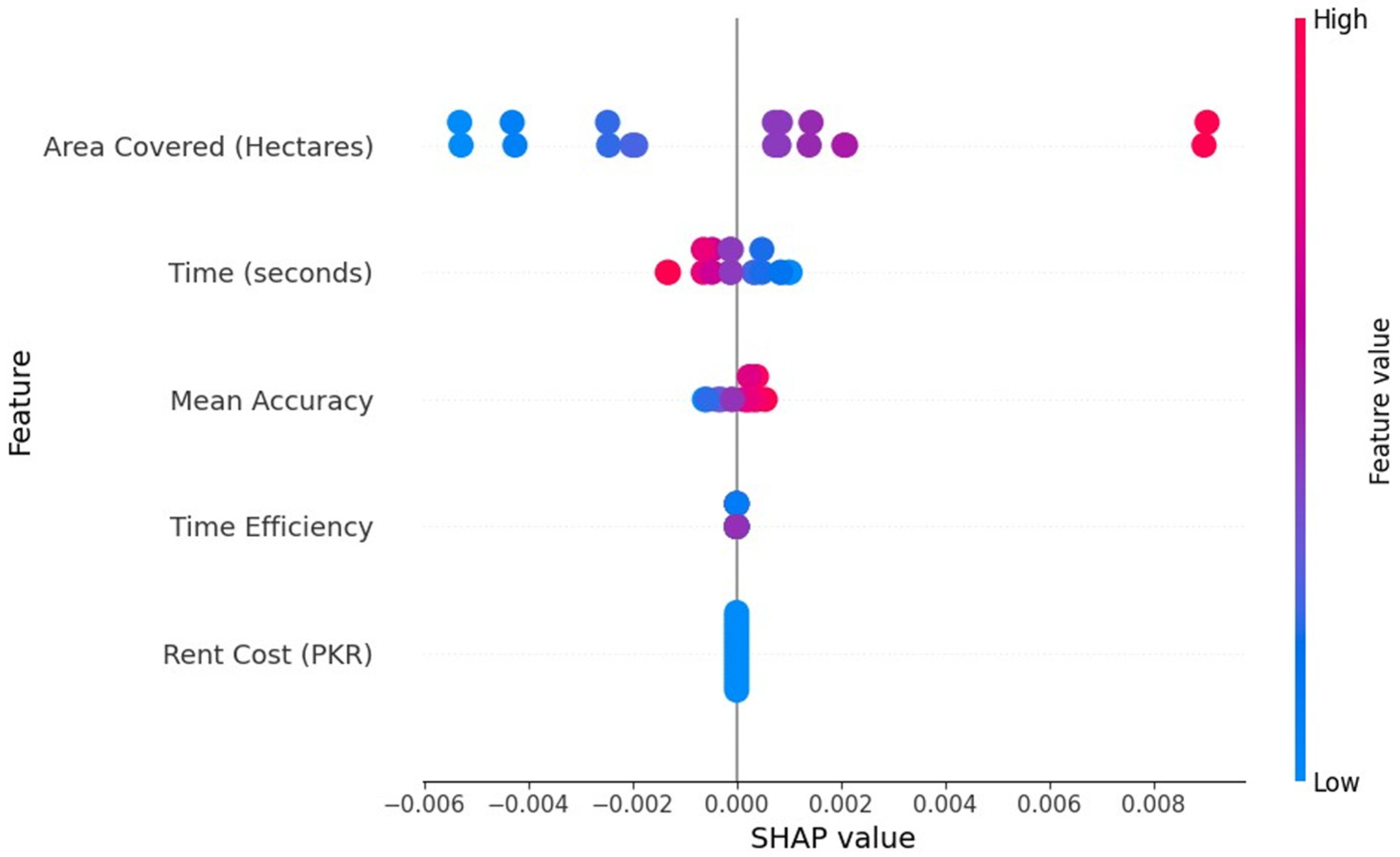

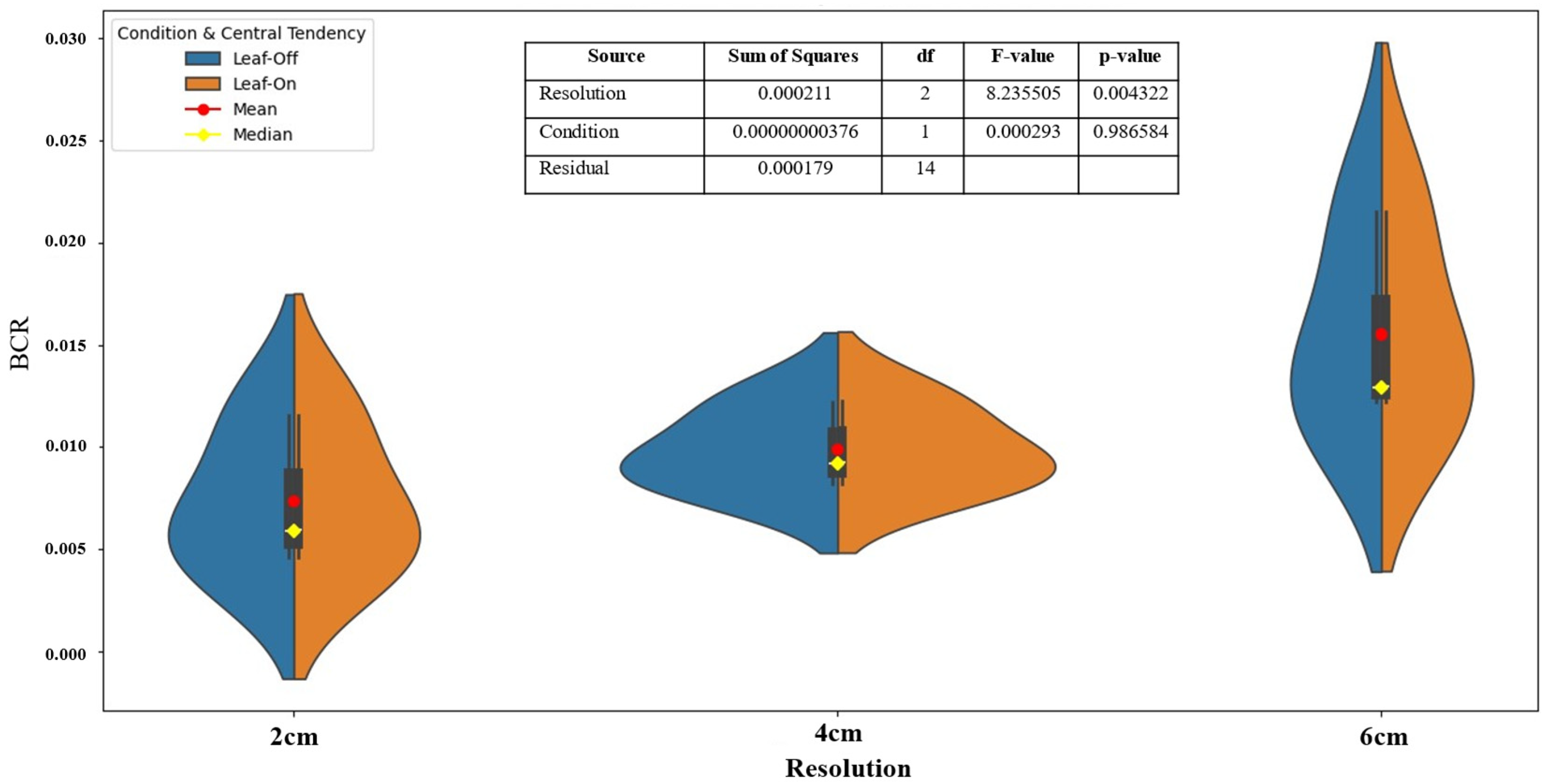

3.6. Optimizing Resolution and Mission Selection for Benefit–Cost Ratio Efficiency

4. Discussion

4.1. Optimal Resolution for UAV-Based Vegetation Mapping

4.2. Cost-Efficiency and Trade-Offs Between Accuracy and Resolution

4.3. Temporal Effects on Classification Accuracy

4.4. Practical Implications for Large-Scale Vegetation Monitoring

4.5. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Food and Agriculture Organization of the United Nations. The State of the World’s Forests 2018. Forest Pathways to Sustainable Development. 2018. Available online: http://www.fao.org/3/ca0188en/ca0188en.pdf (accessed on 13 November 2024).

2. Gorte, R.W. Carbon Sequestration in Forests; DIANE Publishing: Darby, PA, USA, 2009.

3. Burrell, A.L.; Evans, J.P.; De Kauwe, M.G. Anthropogenic climate change has driven over 5 million km2 of drylands towards desertification. Nat. Commun. 2020, 11, 3853.

4. Bristol-Alagbariya, E.T. UN Convention to Combat Desertification as an International Environmental Regulatory Framework for Protecting and Restoring the World’s Land towards a Safer, More Just and Sustainable Future. Int. J. Energy Environ. Res. 2023, 11, 1–32.

5. Intergovernmental Panel on Climate Change. IPCC Special Report on Climate Change, Desertification, Land Degradation, Sustainable Land Management, Food Security, and Greenhouse Gas Fluxes in Terrestrial Ecosystems. In Climate Change and Land; Cambridge University Press: Cambridge, UK, 2022.

6. Aziz, T. Changes in land use and ecosystem services values in Pakistan, 1950–2050. Environ. Dev. 2021, 37, 100576.

7. Kamal, A.; Yingjie, M.; Ali, A. Significance of billion tree tsunami afforestation project and legal developments in forest sector of Pakistan. Int. J. Law Soc. 2019, 1, 20.

8. Rehman, J.U.; Alam, S.; Khalil, S.; Hussain, M.; Iqbal, M.; Khan, K.A.; Sabir, M.; Akhtar, A.; Raza, G.; Hussain, A.; et al. Major threats and habitat use status of Demoiselle crane (Anthropoides virgo), in district Bannu, Pakistan. Braz. J. Biol. 2021, 82, e242636.

9. Çalişkan, S.; Boydak, M. Afforestation of arid and semiarid ecosystems in Turkey. Turk. J. Agric. For. 2017, 41, 317–330.

10. Muñoz-Pizza, D.M.; Villada-Canela, M.; Rivera-Castañeda, P.; Reyna-Carranza, M.A.; Osornio-Vargas, A.; Martínez-Cruz, A.L. Stated benefits from air quality improvement through urban afforestation in an arid city—A contingent valuation in Mexicali, Baja California, Mexico. Urban For. Urban Green. 2020, 55, 126854.

11. Che’Ya, N.N.; Dunwoody, E.; Gupta, M. Assessment of weed classification using hyperspectral reflectance and optimal multispectral UAV imagery. Agronomy 2021, 11, 1435.

12. Qiao, Y.; Jiang, Y.; Zhang, C. Contribution of karst ecological restoration engineering to vegetation greening in southwest China during recent decade. Ecol. Indic. 2021, 121, 107081.

13. Hu, J.; Peng, J.; Zhou, Y.; Xu, D.; Zhao, R.; Jiang, Q.; Fu, T.; Wang, F.; Shi, Z. Quantitative estimation of soil salinity using UAV-borne hyperspectral and satellite multispectral images. Remote Sens. 2019, 11, 736.

14. Lu, Y.; Xue, Z.; Xia, G.-S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

15. Zhang, Y.; Migliavacca, M.; Penuelas, J.; Ju, W. Advances in hyperspectral remote sensing of vegetation traits and functions. Remote Sens. Environ. 2021, 252, 112121.

16. Dalponte, M.; Bruzzone, L.; Gianelle, D. Fusion of hyperspectral and LIDAR remote sensing data for classification of complex forest areas. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1416–1427.

17. Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62.

18. Brede, B.; Calders, K.; Lau, A.; Raumonen, P.; Bartholomeus, H.M.; Herold, M.; Kooistra, L. Non-destructive tree volume estimation through quantitative structure modelling: Comparing UAV laser scanning with terrestrial LIDAR. Remote Sens. Environ. 2019, 233, 111355.

19. Chen, X.; Sun, Y.; Qin, X.; Cai, J.; Cai, M.; Hou, X.; Yang, K.; Zhang, H. Assessing the potential of UAV for large-scale fractional vegetation cover mapping with satellite data and machine learning. Remote Sens. 2024, 16, 3587.

20. Deval, K.; Joshi, P.K. Vegetation type and land cover mapping in a semi-arid heterogeneous forested wetland of India: Comparing image classification algorithms. Environ. Dev. Sustain. 2022, 24, 3947–3966.

21. Ullah, S.U.; Zeb, M.; Ahmad, A.; Ullah, S.; Khan, F.; Islam, A. Monitoring the Billion Trees Afforestation Project in Khyber Pakhtunkhwa, Pakistan through remote sensing. Acadlore Trans. Geosci. 2024, 3, 89–97.

22. Haq, I.U.; Mehmood, Z.; Khan, G.A.; Kainat, B.; Ahmed, B.; Shah, J.; Sami, A.; Nazar, M.S.; Xu, J.; Xiang, H. Modeling the effect of climatic conditions and topography on malaria incidence using Poisson regression: A retrospective study in Bannu, Khyber Pakhtunkhwa, Pakistan. Front. Microbiol. 2024, 14, 1303087.

23. Ullah, N. Nasib Ullah. Master’s Thesis, University of Science and Technology Bannu, Bannu, Pakistan, 2021.

24. Kawashima, S. An algorithm for estimating chlorophyll content in leaves using a video camera. Ann. Bot. 1998, 81, 49–54.

25. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

26. Ilniyaz, O.; Du, Q.; Shen, H.; He, W.; Feng, L.; Azadi, H.; Kurban, A.; Chen, X. Leaf area index estimation of pergola-trained vineyards in arid regions using classical and deep learning methods based on UAV-based RGB images. Comput. Electron. Agric. 2023, 207, 107723.

27. Lesani, F.S.; Fotouhi Ghazvini, F.; Amirkhani, H. Smart home resident identification based on behavioral patterns using ambient sensors. Pers. Ubiquitous Comput. 2021, 25, 151–162.

28. Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. Math. Phys. Eng. Sci. 2016, 374, 20150202.

29. Shlens, J. A tutorial on principal component analysis. arXiv 2014, arXiv:1404.1100.

30. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70.

31. Ahmad, I.S.; Reid, J.F. Evaluation of colour representations for maize images. J. Agric. Eng. Res. 1996, 63, 185–195.

32. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

33. Feng, Q.; Yang, J.; Liu, Y.; Ou, C.; Zhu, D.; Niu, B.; Liu, J.; Li, B. Multi-temporal unmanned aerial vehicle remote sensing for vegetable mapping using an attention-based recurrent convolutional neural network. Remote Sens. 2020, 12, 1668.

34. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

35. Stoy, P.C.; Williams, M.; Spadavecchia, L.; Bell, R.A.; Prieto-Blanco, A.; Evans, J.G.; van Wijk, M.T. Using information theory to determine optimum pixel size and shape for ecological studies: Aggregating land surface characteristics in Arctic ecosystems. Ecosystems 2009, 12, 574–589.

36. Altieri, L.; Cocchi, D. Entropy Measures for Environmental Data: Description, Sampling and Inference for Data with Dependence Structures; Springer Nature Singapore: Singapore, 2024.

37. Turner, W.; Spector, S.; Gardiner, N.; Fladeland, M.; Sterling, E.; Steininger, M. Remote sensing for biodiversity science and conservation. Trends Ecol. Evol. 2003, 18, 306–314.

38. Dandois, J.P.; Ellis, E.C. Remote sensing of vegetation structure using computer vision. Remote Sens. 2010, 2, 1157–1176.

39. Sun, Z.; Wang, X.; Wang, Z.; Yang, L.; Xie, Y.; Huang, Y. UAVs as remote sensing platforms in plant ecology: Review of applications and challenges. J. Plant Ecol. 2021, 14, 1003–1023.

40. Sahana, M. Conservation, Management and Monitoring of Forest Resources in India; Springer International Publishing AG: Cham, Switzerland, 2022.

41. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

42. Shang, C.; Treitz, P.; Caspersen, J.; Jones, T. Estimation of forest structural and compositional variables using ALS data and multi-seasonal satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 360–371.

43. Tampubolon, W.; Reinhardt, W. UAV data processing for large scale topographical mapping. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL–5, 565–572.

44. Zhou, X.; Wang, H.; Chen, C.; Nagy, G.; Jancso, T.; Huang, H. Detection of growth change of young forest based on UAV RGB images at single-tree level. Forests 2023, 14, 141.

| Index | Formula | Reference |
|---|---|---|
| Red chromatic coordinate (RCC) | R/(R + G + B) | [24] |
| Green chromatic coordinate (GCC) | G/(R + G + B) | [24] |
| Blue chromatic coordinate (BCC) | B/(R + G + B) | [24] |
| Normalized difference index (NDI) | (RCC − GCC)/(RCC + GCC + 0.01) | [25] |
| Green leaf index (GLI) | (2 * G − R − B)/(2 * G + R + B) | [30] |
| Kawashima index (IKAW) | (R − B)/(R + B) | [24] |
| Mean of RGB bands (MRGB) | (R + G + B)/3 | [31] |
| Excess green vegetation index (EXG) | 2 * GCC − RCC − BCC | [25] |
| Visible atmospherically resistant index (VARI) | (G − R)/(G + R − B) | [32] |
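The indices in this table can be computed per pixel and then compressed into the single PCA component that, according to the Gamma entry in Section 2.3.2 (n_features = 3 (RGB) + 1 (PCA)), joins the three RGB bands as the fourth classifier feature. The sketch below assumes PCA is run on the standardized index stack and only the first component is kept; that pipeline, the zero-denominator guard (`safe_div` returns 0) and the helper names are assumptions, not the authors' code. GLI and EXG follow their standard published forms, (2G − R − B)/(2G + R + B) from Louhaichi et al. [30] and 2·GCC − RCC − BCC from Woebbecke et al. [25].

```python
import numpy as np


def safe_div(num, den):
    """Element-wise num / den, returning 0 wherever den == 0 (assumed guard)."""
    out = np.zeros_like(num, dtype=np.float64)
    np.divide(num, den, out=out, where=den != 0)
    return out


def rgb_indices(rgb):
    """Return an (H, W, 9) stack of the RGB indices for an (H, W, 3) image."""
    r, g, b = (rgb[..., k].astype(np.float64) for k in range(3))
    total = r + g + b
    rcc, gcc, bcc = safe_div(r, total), safe_div(g, total), safe_div(b, total)
    ndi = (rcc - gcc) / (rcc + gcc + 0.01)
    gli = safe_div(2 * g - r - b, 2 * g + r + b)
    ikaw = safe_div(r - b, r + b)
    mrgb = (r + g + b) / 3
    exg = 2 * gcc - rcc - bcc
    vari = safe_div(g - r, g + r - b)
    return np.stack([rcc, gcc, bcc, ndi, gli, ikaw, mrgb, exg, vari], axis=-1)


def first_principal_component(features):
    """Project standardized per-pixel features onto their first principal axis."""
    flat = features.reshape(-1, features.shape[-1])
    std = flat.std(axis=0)
    z = (flat - flat.mean(axis=0)) / np.where(std == 0, 1.0, std)
    _, _, vt = np.linalg.svd(z, full_matrices=False)  # rows of vt = principal axes
    return (z @ vt[0]).reshape(features.shape[:-1])


rng = np.random.default_rng(0)
rgb = rng.integers(1, 256, size=(8, 8, 3)).astype(np.float64)  # hypothetical tile
indices = rgb_indices(rgb)
pc1 = first_principal_component(indices)
features = np.concatenate([rgb, pc1[..., None]], axis=-1)  # R, G, B, PC1
print(features.shape)  # (8, 8, 4)
```

Standardizing first keeps MRGB, which spans 0 to 255, from dominating the unit-scale indices. The sign of a principal component is arbitrary, but flipping one feature's sign leaves Euclidean distances, and therefore an RBF-kernel SVM, unchanged.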

| Plot ID | EC | AA | PJ | JA | BL | W | Total |
|---|---|---|---|---|---|---|---|
| 1 | × | 50 | 50 | 50 | 50 | 50 | 250 |
| 2 | × | 30 | 30 | × | 30 | × | 90 |
| 3 | 40 | 40 | 40 | × | 40 | 40 | 200 |
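Reading each cell of this table as the number of labelled sample points for a class in a plot (× meaning the class is absent) is an interpretation, not something the table states. Under that reading, the sketch below checks the Total column and performs a per-class (stratified) hold-out split so that every class keeps the same validation share. The 30% validation fraction, the seed and `stratified_split` are hypothetical choices for illustration only.

```python
import random

# Per-class counts read from the table; None marks "×" (class absent in the plot).
PLOT_COUNTS = {
    1: {"EC": None, "AA": 50, "PJ": 50, "JA": 50, "BL": 50, "W": 50},
    2: {"EC": None, "AA": 30, "PJ": 30, "JA": None, "BL": 30, "W": None},
    3: {"EC": 40, "AA": 40, "PJ": 40, "JA": None, "BL": 40, "W": 40},
}


def stratified_split(counts, val_fraction=0.3, seed=0):
    """Hold out the same fraction of every present class for validation."""
    rng = random.Random(seed)
    train, val = [], []
    for cls, n in counts.items():
        if n is None:
            continue  # class not sampled in this plot
        samples = [(cls, i) for i in range(n)]
        rng.shuffle(samples)
        n_val = round(n * val_fraction)
        val.extend(samples[:n_val])
        train.extend(samples[n_val:])
    return train, val


for plot, counts in PLOT_COUNTS.items():
    total = sum(n for n in counts.values() if n is not None)
    train, val = stratified_split(counts)
    print(f"Plot {plot}: total={total} train={len(train)} val={len(val)}")
```

Repeating the split with different seeds is one way to obtain the several accuracy values that a mean, SD and range can be computed from.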

| Resolution (cm) | Mission | Mean Accuracy | SD | Range | CV (%) | Plot Number |
|---|---|---|---|---|---|---|
| 2 | Leaf-off | 0.958 | 0.007 | 0.020 | 0.781 | Plot 1 |
| 4 | Leaf-off | 0.968 | 0.032 | 0.080 | 3.357 | Plot 1 |
| 6 | Leaf-off | 0.964 | 0.015 | 0.040 | 1.553 | Plot 1 |
| 2 | Leaf-on | 0.968 | 0.032 | 0.070 | 3.293 | Plot 1 |
| 4 | Leaf-on | 0.984 | 0.015 | 0.040 | 1.521 | Plot 1 |
| 6 | Leaf-on | 0.970 | 0.020 | 0.050 | 2.062 | Plot 1 |
| 2 | Leaf-off | 0.964 | 0.029 | 0.060 | 3.049 | Plot 2 |
| 4 | Leaf-off | 0.932 | 0.041 | 0.110 | 4.366 | Plot 2 |
| 6 | Leaf-off | 0.912 | 0.044 | 0.110 | 4.825 | Plot 2 |
| 2 | Leaf-on | 0.988 | 0.024 | 0.060 | 2.429 | Plot 2 |
| 4 | Leaf-on | 1.000 | 0.000 | 0.000 | 0.000 | Plot 2 |
| 6 | Leaf-on | 0.976 | 0.029 | 0.060 | 3.012 | Plot 2 |
| 2 | Leaf-off | 0.884 | 0.036 | 0.100 | 4.085 | Plot 3 |
| 4 | Leaf-off | 0.884 | 0.041 | 0.100 | 4.614 | Plot 3 |
| 6 | Leaf-off | 0.906 | 0.023 | 0.060 | 2.574 | Plot 3 |
| 2 | Leaf-on | 0.914 | 0.037 | 0.100 | 4.070 | Plot 3 |
| 4 | Leaf-on | 0.932 | 0.041 | 0.110 | 4.366 | Plot 3 |
| 6 | Leaf-on | 0.918 | 0.012 | 0.030 | 1.270 | Plot 3 |
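The summary columns of this table can be derived from the overall accuracies of repeated classification runs. The reported CV values agree with CV (%) = 100 × SD / mean up to the rounding of SD (for example, 100 × 0.044 / 0.912 ≈ 4.82 for Plot 2 at 6 cm, leaf-off). The sketch below assumes a population standard deviation and uses five invented accuracy values; neither the number of runs nor the per-run accuracies are given in the text, and `summarize_accuracies` is a hypothetical helper.

```python
import statistics


def summarize_accuracies(accuracies):
    """Mean, SD, range and CV (%) of overall accuracy over repeated runs."""
    mean = statistics.fmean(accuracies)
    sd = statistics.pstdev(accuracies)  # population SD: an assumption
    spread = max(accuracies) - min(accuracies)
    cv = 100.0 * sd / mean if mean else float("nan")
    return mean, sd, spread, cv


# Hypothetical overall accuracies from five repeated runs (invented values).
runs = [1.0, 1.0, 1.0, 1.0, 0.94]
mean, sd, spread, cv = summarize_accuracies(runs)
print(f"mean={mean:.3f} SD={sd:.3f} range={spread:.3f} CV={cv:.3f}%")
```

Swapping `statistics.pstdev` for `statistics.stdev` gives the sample SD instead; with only a handful of runs the two can differ noticeably, so the choice should be stated alongside the table.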

Plot 1

| Resolution (cm) | Moving Window | Mission | Min | Max | Mean | SD | Entropy Gain/Loss |
|---|---|---|---|---|---|---|---|
| 2 | 25 | Leaf-off | 6.308 | 7.537 | 6.653 | 0.384 | Base |
| 4 | 25 | Leaf-off | 6.232 | 7.511 | 6.891 | 0.478 | 0.238 |
| 6 | 25 | Leaf-off | 6.352 | 7.464 | 7.138 | 0.347 | 0.247 |
| 2 | 25 | Leaf-on | 6.175 | 7.622 | 6.818 | 0.468 | Base |
| 4 | 25 | Leaf-on | 6.234 | 7.621 | 7.027 | 0.462 | 0.209 |
| 6 | 25 | Leaf-on | 6.370 | 7.566 | 6.970 | 0.398 | −0.057 |

Plot 2

| Resolution (cm) | Moving Window | Mission | Min | Max | Mean | SD | Entropy Gain/Loss |
|---|---|---|---|---|---|---|---|
| 2 | 42 | Leaf-off | 2.766 | 3.238 | 3.055 | 0.131 | Base |
| 4 | 42 | Leaf-off | 2.611 | 3.232 | 3.002 | 0.164 | −0.053 |
| 6 | 42 | Leaf-off | 2.352 | 3.779 | 3.014 | 0.281 | 0.012 |
| 2 | 42 | Leaf-on | 2.756 | 3.428 | 3.120 | 0.174 | Base |
| 4 | 42 | Leaf-on | 2.588 | 4.006 | 3.034 | 0.235 | −0.086 |
| 6 | 42 | Leaf-on | 2.513 | 4.009 | 3.158 | 0.404 | 0.124 |

Plot 3

| Resolution (cm) | Moving Window | Mission | Min | Max | Mean | SD | Entropy Gain/Loss |
|---|---|---|---|---|---|---|---|
| 2 | 34 | Leaf-off | 1.390 | 3.855 | 3.168 | 0.651 | Base |
| 4 | 34 | Leaf-off | 1.302 | 3.824 | 3.127 | 0.651 | −0.041 |
| 6 | 34 | Leaf-off | 1.276 | 3.825 | 3.126 | 0.633 | −0.001 |
| 2 | 34 | Leaf-on | 1.881 | 3.606 | 3.001 | 0.511 | Base |
| 4 | 34 | Leaf-on | 1.927 | 3.457 | 2.898 | 0.472 | −0.103 |
| 6 | 34 | Leaf-on | 2.163 | 3.422 | 2.941 | 0.371 | 0.043 |
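Every Entropy Gain/Loss value in the three entropy tables equals the change in mean entropy from the next finer resolution (4 cm minus 2 cm, then 6 cm minus 4 cm), not the change from the 2 cm base; for Plot 1 leaf-off, 7.138 − 6.891 = 0.247, whereas 7.138 − 6.653 would give 0.485. The sketch below recomputes the column from the reported means as a consistency check; `entropy_change` is a hypothetical helper, and the means are copied from the tables.

```python
# Mean entropy per resolution (cm), copied from the three entropy tables.
MEAN_ENTROPY = {
    ("Plot 1", "Leaf-off"): {2: 6.653, 4: 6.891, 6: 7.138},
    ("Plot 1", "Leaf-on"): {2: 6.818, 4: 7.027, 6: 6.970},
    ("Plot 2", "Leaf-off"): {2: 3.055, 4: 3.002, 6: 3.014},
    ("Plot 2", "Leaf-on"): {2: 3.120, 4: 3.034, 6: 3.158},
    ("Plot 3", "Leaf-off"): {2: 3.168, 4: 3.127, 6: 3.126},
    ("Plot 3", "Leaf-on"): {2: 3.001, 4: 2.898, 6: 2.941},
}


def entropy_change(means):
    """Map each resolution to its change in mean entropy vs. the next finer one."""
    resolutions = sorted(means)
    changes = {resolutions[0]: None}  # the finest resolution is the base
    for finer, coarser in zip(resolutions, resolutions[1:]):
        changes[coarser] = round(means[coarser] - means[finer], 3)
    return changes


for key, means in MEAN_ENTROPY.items():
    print(key, entropy_change(means))
```

Positive values mark an information gain when moving to the coarser resolution and negative values a loss, relative to the preceding (finer) survey.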

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ullah, S.; Ilniyaz, O.; Eziz, A.; Ullah, S.; Fidelis, G.D.; Kiran, M.; Azadi, H.; Ahmed, T.; Elfleet, M.S.; Kurban, A. Multi-Temporal and Multi-Resolution RGB UAV Surveys for Cost-Efficient Tree Species Mapping in an Afforestation Project. Remote Sens. 2025, 17, 949. https://doi.org/10.3390/rs17060949

Ullah S, Ilniyaz O, Eziz A, Ullah S, Fidelis GD, Kiran M, Azadi H, Ahmed T, Elfleet MS, Kurban A. Multi-Temporal and Multi-Resolution RGB UAV Surveys for Cost-Efficient Tree Species Mapping in an Afforestation Project. Remote Sensing. 2025; 17(6):949. https://doi.org/10.3390/rs17060949

Chicago/Turabian Style: Ullah, Saif, Osman Ilniyaz, Anwar Eziz, Sami Ullah, Gift Donu Fidelis, Madeeha Kiran, Hossein Azadi, Toqeer Ahmed, Mohammed S. Elfleet, and Alishir Kurban. 2025. "Multi-Temporal and Multi-Resolution RGB UAV Surveys for Cost-Efficient Tree Species Mapping in an Afforestation Project" Remote Sensing 17, no. 6: 949. https://doi.org/10.3390/rs17060949

APA Style: Ullah, S., Ilniyaz, O., Eziz, A., Ullah, S., Fidelis, G. D., Kiran, M., Azadi, H., Ahmed, T., Elfleet, M. S., & Kurban, A. (2025). Multi-Temporal and Multi-Resolution RGB UAV Surveys for Cost-Efficient Tree Species Mapping in an Afforestation Project. Remote Sensing, 17(6), 949. https://doi.org/10.3390/rs17060949