Unsupervised Rural Flood Mapping from Bi-Temporal Sentinel-1 Images Using an Improved Wavelet-Fusion Flood-Change Index (IWFCI) and an Uncertainty-Sensitive Markov Random Field (USMRF) Model

Abstract

1. Introduction

- A novel fused flood change index (CI), namely the IWFCI, is introduced in this study: the mean-ratio CI is modified and then fused with the log-ratio CI and the flood image to capture flood-related changes in rural areas more accurately.

- A Gaussian-like uncertainty penalty term, computed from the gray values of the CI, is constructed and incorporated into the Markov random field (MRF) to reduce model errors in uncertain areas where the flood and non-flood classes overlap.

2. Materials and Methods

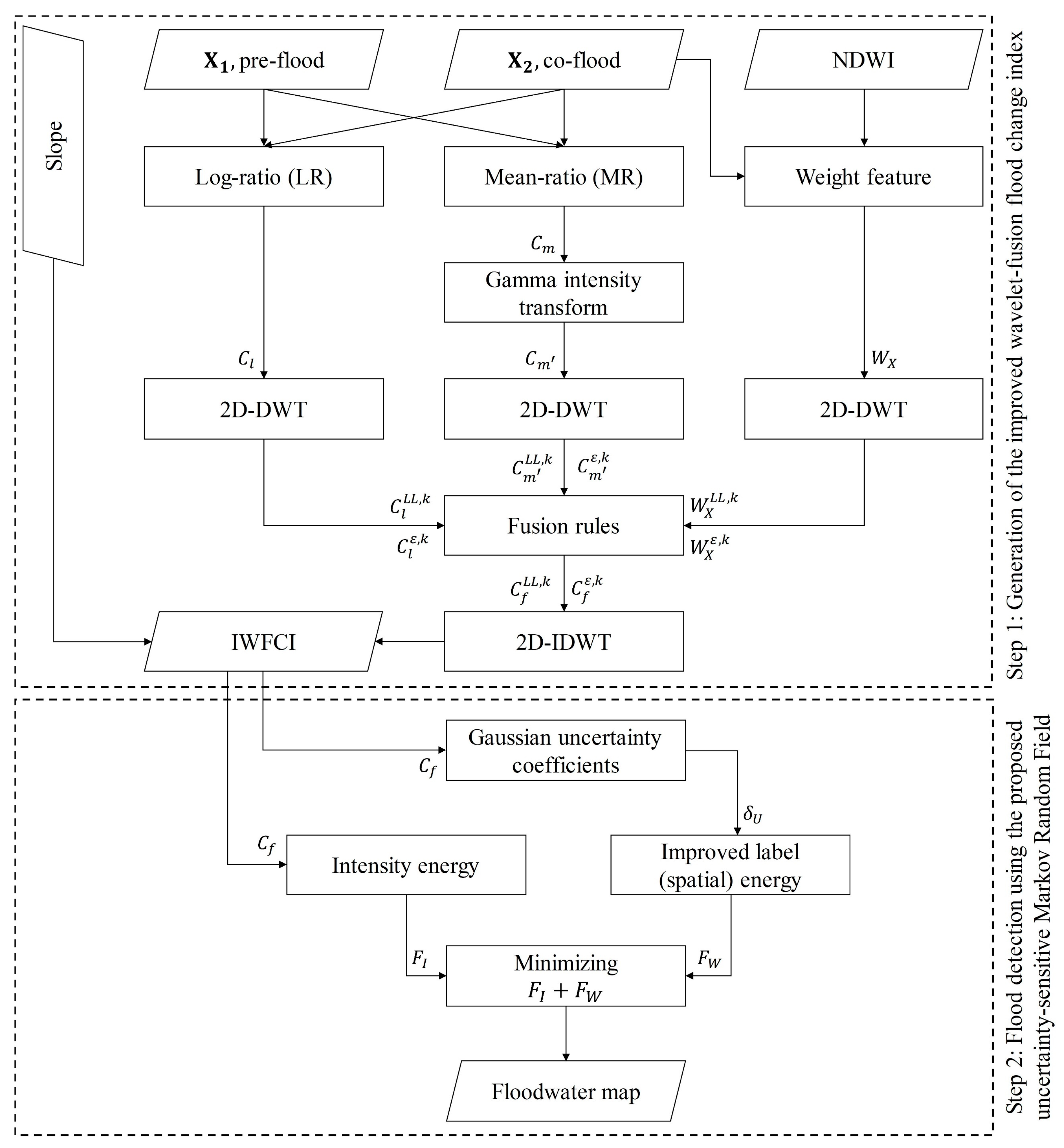

2.1. Proposed Unsupervised Floodwater Detection Approach

2.1.1. Improved Wavelet-Fusion Flood Change Index
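As summarized in the contribution list, IWFCI fuses a modified mean-ratio CI with the log-ratio CI and the flood image in the wavelet domain. The modification and the exact fusion rules are not reproduced in this section, so the following Python sketch is only a hedged illustration of the general idea under stated assumptions: it uses the classical log-ratio and unmodified mean-ratio indices, a hand-written averaging Haar transform with K decomposition levels (`levels`), and a common generic rule (mean of the approximation coefficients, maximum-magnitude detail coefficients). The flood-image input is omitted, and the synthetic scene and all function names are hypothetical.

```python
import numpy as np


def box_mean(img, size=3):
    """Local mean over a size x size window (edge-padded borders)."""
    pad = size // 2
    p = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += p[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (size * size)


def log_ratio_ci(pre, post):
    """Classical log-ratio change index |log(1 + post) - log(1 + pre)|."""
    return np.abs(np.log1p(post) - np.log1p(pre))


def mean_ratio_ci(pre, post, size=3, eps=1e-12):
    """Classical (unmodified) mean-ratio index 1 - min(m1/m2, m2/m1)."""
    m1, m2 = box_mean(pre, size), box_mean(post, size)
    return 1.0 - np.minimum(m1 / (m2 + eps), m2 / (m1 + eps))


def normalize(x):
    """Min-max scale to [0, 1]; a constant array maps to zeros."""
    span = x.max() - x.min()
    return (x - x.min()) / span if span > 0 else np.zeros(x.shape)


def haar2(x):
    """One level of an averaging 2-D Haar DWT (both sides must be even)."""
    a, d = (x[0::2] + x[1::2]) / 2, (x[0::2] - x[1::2]) / 2
    ll, h = (a[:, 0::2] + a[:, 1::2]) / 2, (a[:, 0::2] - a[:, 1::2]) / 2
    v, dd = (d[:, 0::2] + d[:, 1::2]) / 2, (d[:, 0::2] - d[:, 1::2]) / 2
    return ll, (h, v, dd)


def ihaar2(ll, details):
    """Exact inverse of haar2."""
    h, v, dd = details
    a = np.empty((ll.shape[0], 2 * ll.shape[1]))
    d = np.empty_like(a)
    a[:, 0::2], a[:, 1::2] = ll + h, ll - h
    d[:, 0::2], d[:, 1::2] = v + dd, v - dd
    x = np.empty((2 * a.shape[0], a.shape[1]))
    x[0::2], x[1::2] = a + d, a - d
    return x


def wavelet_fuse(images, levels=1):
    """Mean of approximations, max-|coefficient| details; sides divisible by 2**levels."""
    decs = []
    for img in images:
        x, dets = np.asarray(img, dtype=float), []
        for _ in range(levels):
            x, det = haar2(x)
            dets.append(det)
        decs.append((x, dets))
    fused = np.mean([ll for ll, _ in decs], axis=0)
    for lvl in reversed(range(levels)):          # coarsest level first
        bands = []
        for b in range(3):
            stack = np.stack([d[lvl][b] for _, d in decs])
            pick = np.argmax(np.abs(stack), axis=0)[None]
            bands.append(np.take_along_axis(stack, pick, axis=0)[0])
        fused = ihaar2(fused, tuple(bands))
    return fused


rng = np.random.default_rng(0)
pre = rng.gamma(4.0, 0.05, size=(64, 64))        # synthetic speckled backscatter
post = pre.copy()
post[16:48, 16:48] *= 0.1                        # simulated open-water darkening
ci = wavelet_fuse([normalize(log_ratio_ci(pre, post)),
                   normalize(mean_ratio_ci(pre, post))], levels=2)
```

Averaging the approximation bands retains the overall change level of both indices, while the maximum-magnitude rule keeps whichever index shows a sharper flood boundary; increasing `levels` (K) makes the averaged component coarser.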

2.1.2. Floodwater Detection Using the Uncertainty-Sensitive MRF (USMRF)

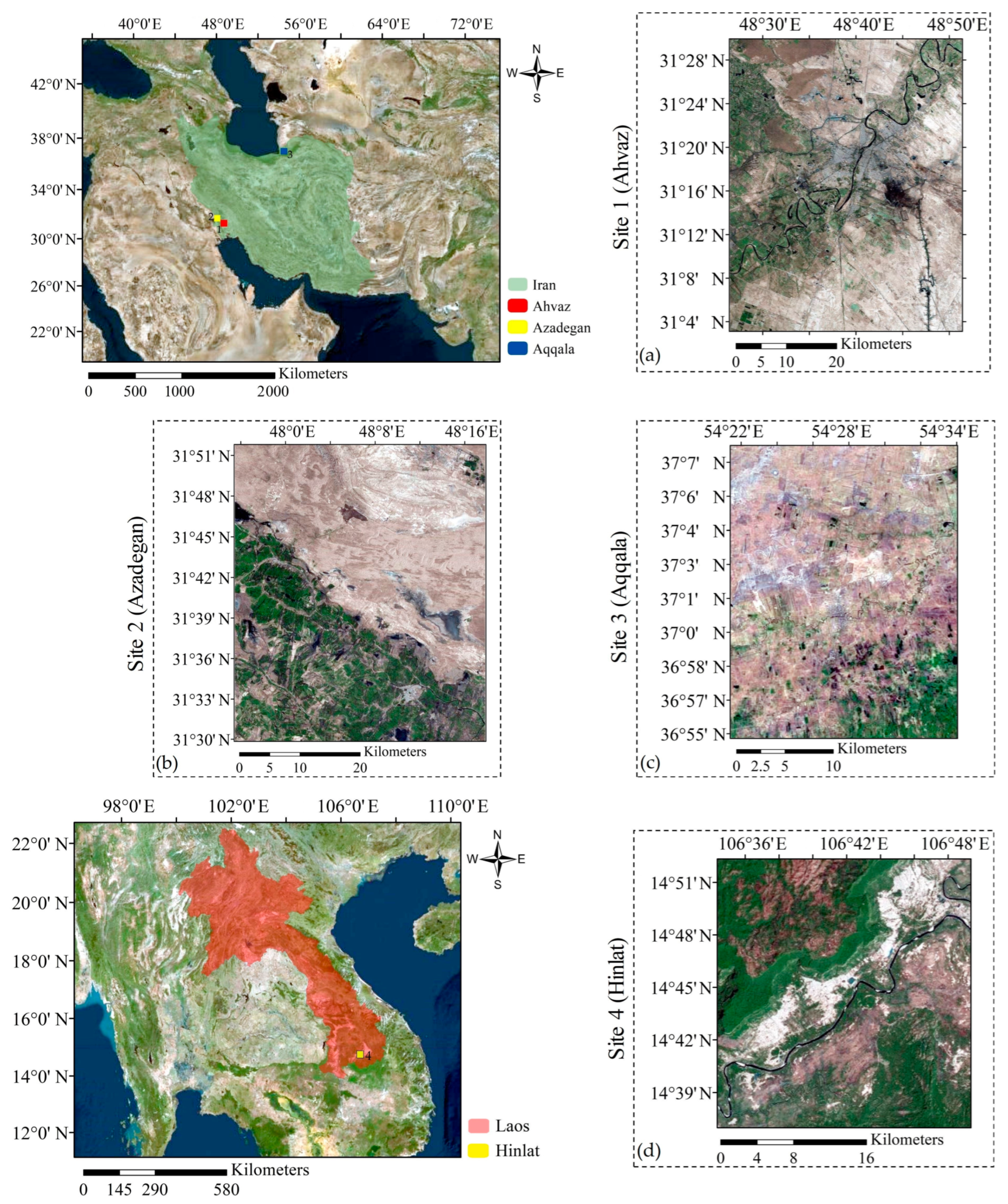

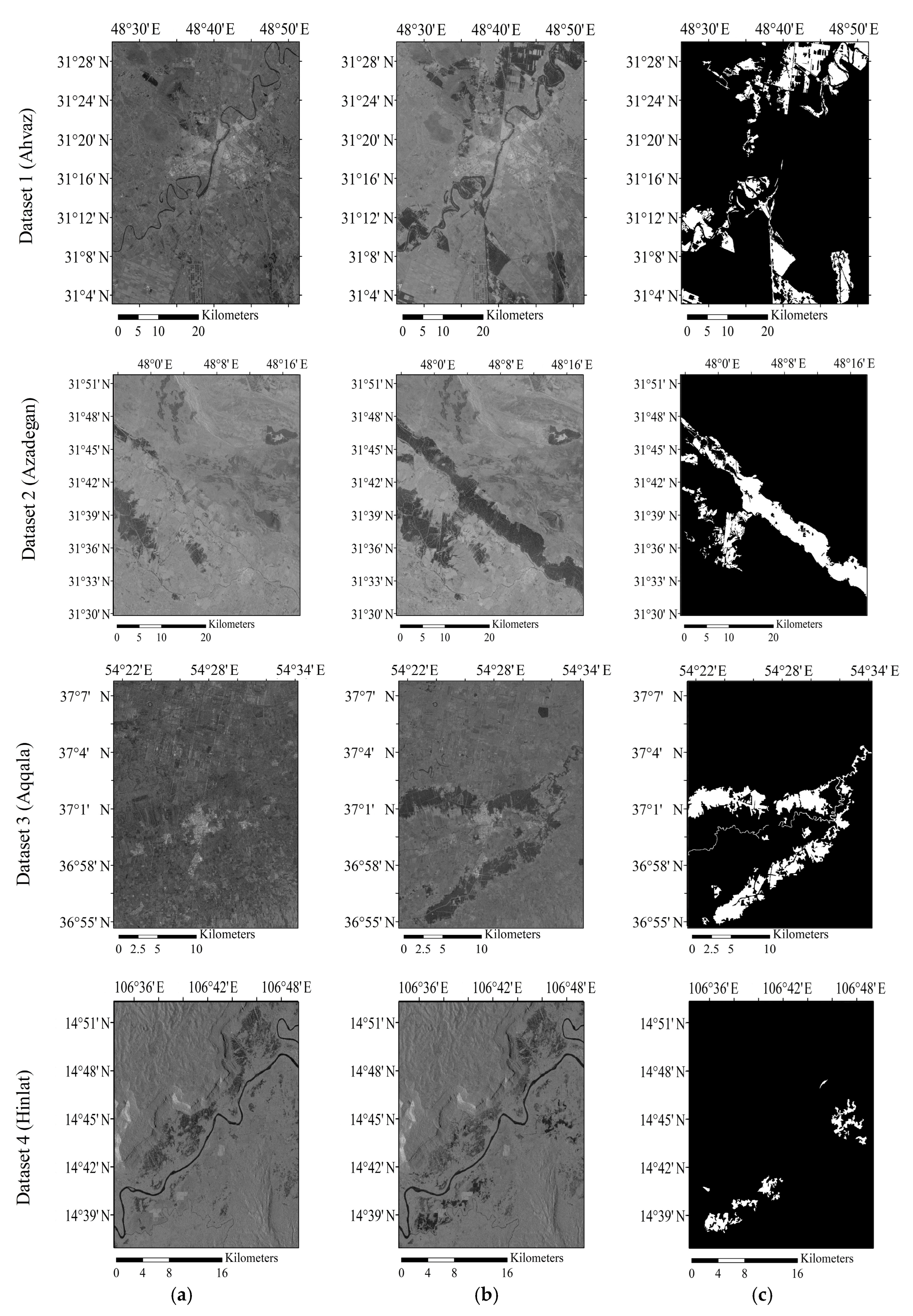
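The uncertainty-sensitive MRF adds a Gaussian-like penalty, computed from the CI gray values, to a conventional MRF. The published energy function is not given in this section, so the following sketch is a hypothetical illustration only. It assumes that the uncertainty peaks at an Otsu threshold T of the CI, u(x) = exp(-(x - T)^2 / (2σ^2)), and that it scales the spatial term as β(1 + u), so that pixels with ambiguous CI values lean more on their 4-neighbors. The data term is a Gaussian class likelihood, and the energy is minimized with checkerboard ICM (iterated conditional modes) updates; the default σ, the synthetic CI, and all function names are assumptions, not the USMRF formulation.

```python
import numpy as np


def otsu_threshold(x, bins=256):
    """Otsu threshold: bin edge maximizing the between-class variance."""
    hist, edges = np.histogram(x, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)           # pixels in bins 0..k
    w1 = w0[-1] - w0                             # pixels in bins above k
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    return edges[np.argmax(w0 * w1 * (mu0 - mu1) ** 2) + 1]


def uncertainty(x, t, sigma):
    """Hypothetical Gaussian-like uncertainty: 1 at the threshold, ~0 far from it."""
    return np.exp(-((x - t) ** 2) / (2 * sigma ** 2))


def disagreement(labels, k):
    """Count of 4-neighbors whose label differs from k (edge-padded borders)."""
    p = np.pad(labels, 1, mode="edge")
    nbrs = (p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, :-2], p[1:-1, 2:])
    return sum((n != k).astype(int) for n in nbrs)


def class_energy(ci, labels, k):
    """Gaussian negative log-likelihood of every pixel under class k."""
    vals = ci[labels == k]
    mu, sd = vals.mean(), vals.std() + 1e-6
    return (ci - mu) ** 2 / (2 * sd ** 2) + np.log(sd)


def usmrf_sketch(ci, beta=1.0, sigma=None, iters=5):
    """ICM on a binary MRF whose spatial weight grows with CI uncertainty."""
    t = otsu_threshold(ci)
    labels = (ci > t).astype(int)                # 1 = flood change, 0 = other
    sigma = ci.std() / 2 if sigma is None else sigma  # hypothetical default
    u = uncertainty(ci, t, sigma)
    rows, cols = np.indices(ci.shape)
    parity = (rows + cols) % 2                   # checkerboard coding sets
    for _ in range(iters):
        if labels.min() == labels.max():         # a class vanished; stop
            break
        data = [class_energy(ci, labels, k) for k in (0, 1)]
        for p in (0, 1):                         # same-parity pixels are not neighbors
            e = [data[k] + beta * (1 + u) * disagreement(labels, k) for k in (0, 1)]
            labels = np.where(parity == p, (e[1] < e[0]).astype(int), labels)
    return labels, u, t


rng = np.random.default_rng(1)
truth = np.zeros((40, 40), dtype=int)
truth[10:30, 10:30] = 1                          # synthetic flooded patch
ci = np.clip(0.2 + 0.6 * truth + rng.normal(0, 0.2, truth.shape), 0, 1)
init = (ci > otsu_threshold(ci)).astype(int)
labels, u, t = usmrf_sketch(ci, beta=1.0)
print("threshold-only error:", (init != truth).mean())
print("MRF error:", (labels != truth).mean())
```

Pixels far from T get u ≈ 0 and are labeled mainly by the data term, whereas pixels near T receive up to twice the smoothing weight, which is one simple way to concentrate regularization on inter-class uncertain areas. Here `beta` is the spatial-weight parameter, analogous to (but not necessarily identical with) the β examined in Section 3.1.2.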

2.2. Study Areas and Datasets

2.3. Performance Evaluation Metrics

3. Results

3.1. Parameter Setting

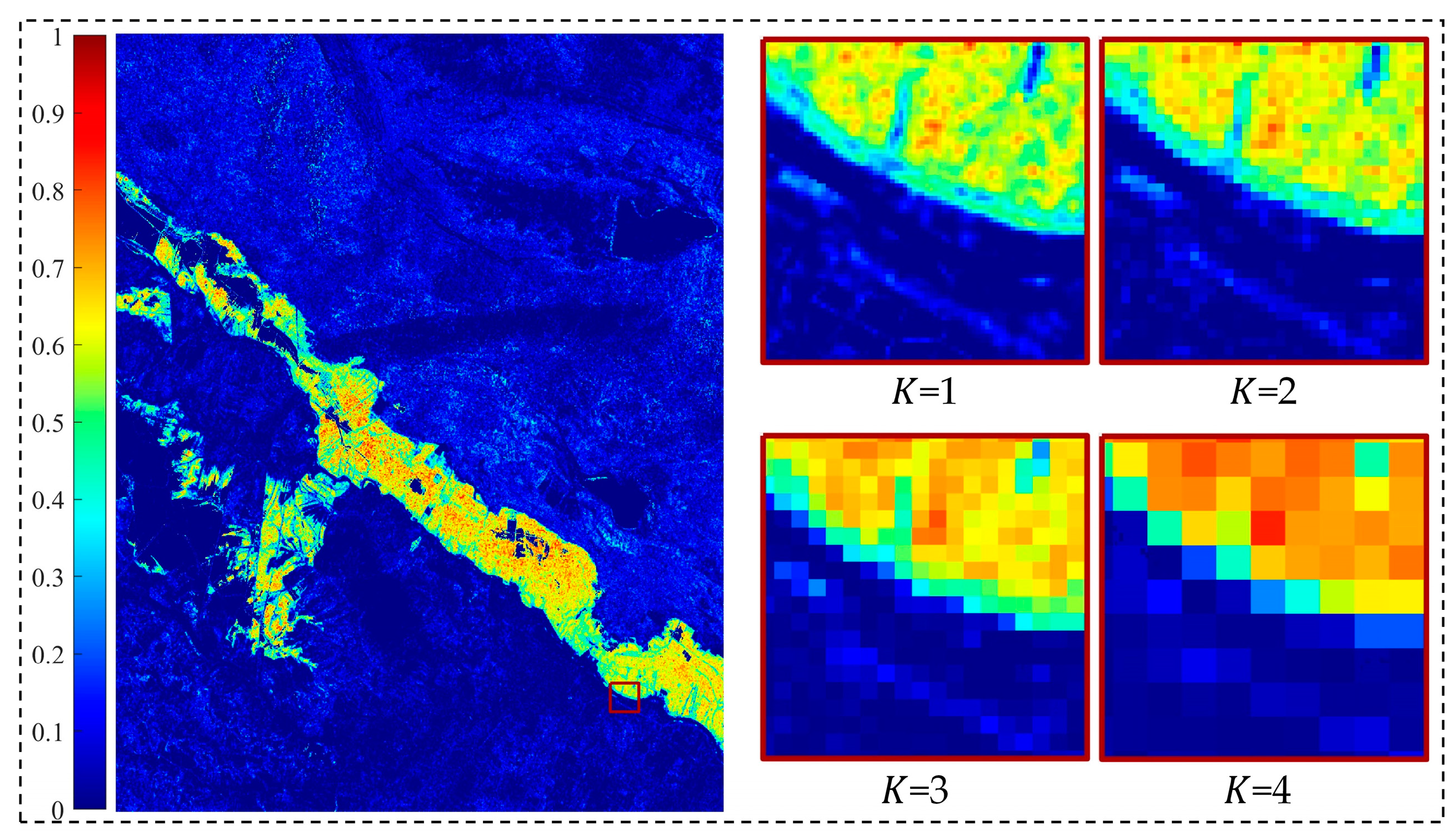

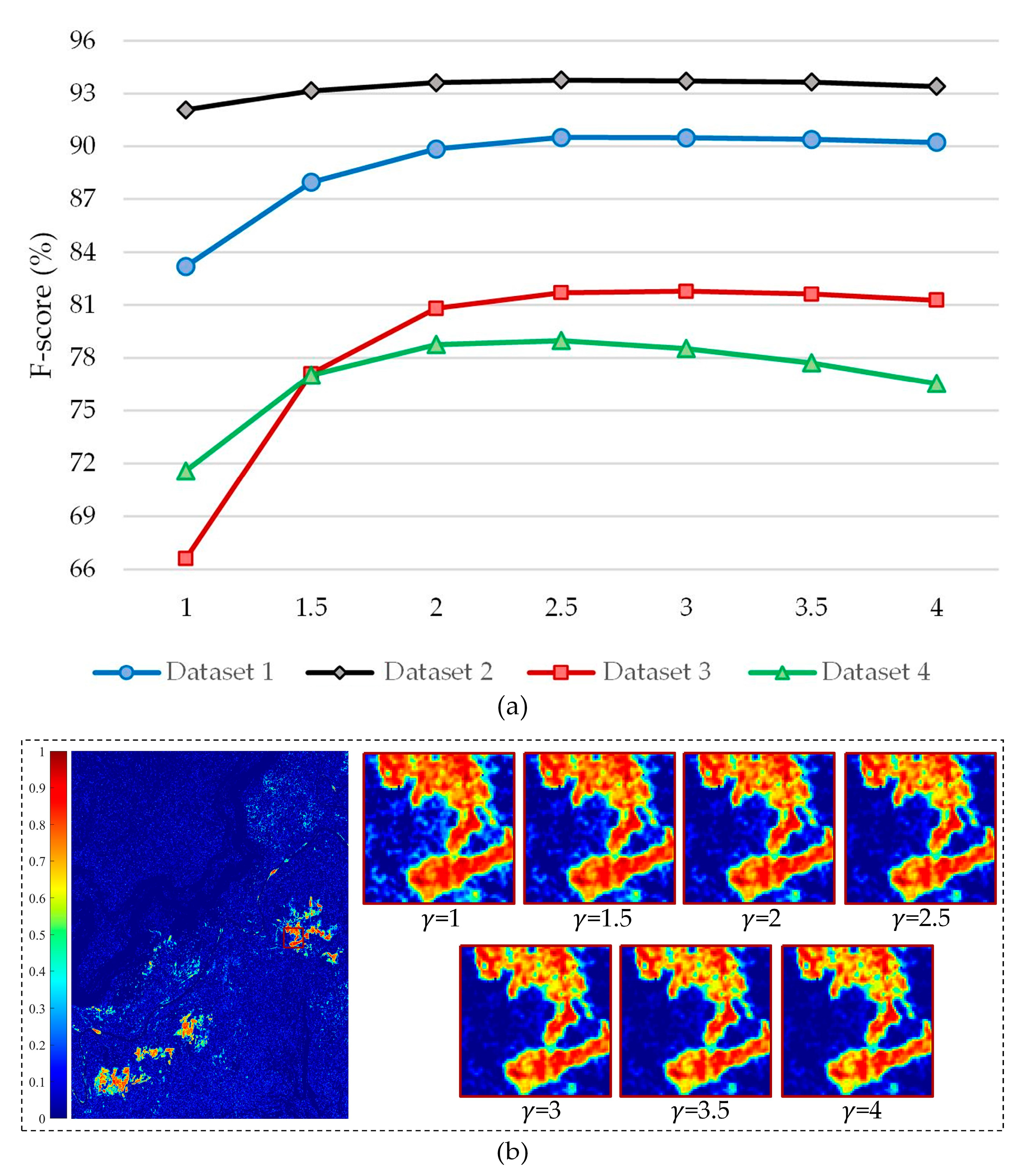

3.1.1. Level of Decomposition (K) and Intensity Transform () Parameters in IWFCI

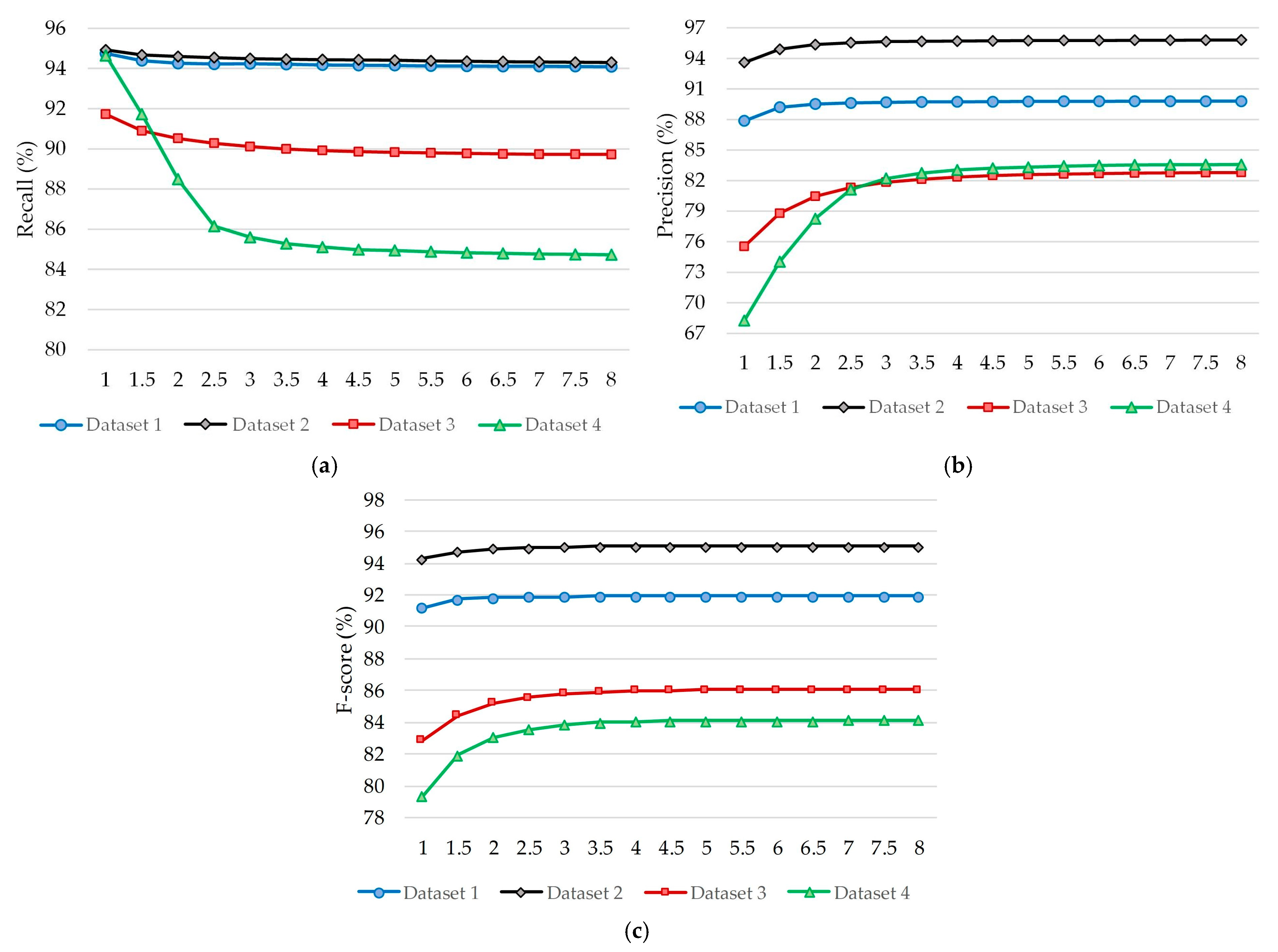

3.1.2. β Parameter in the USMRF Model

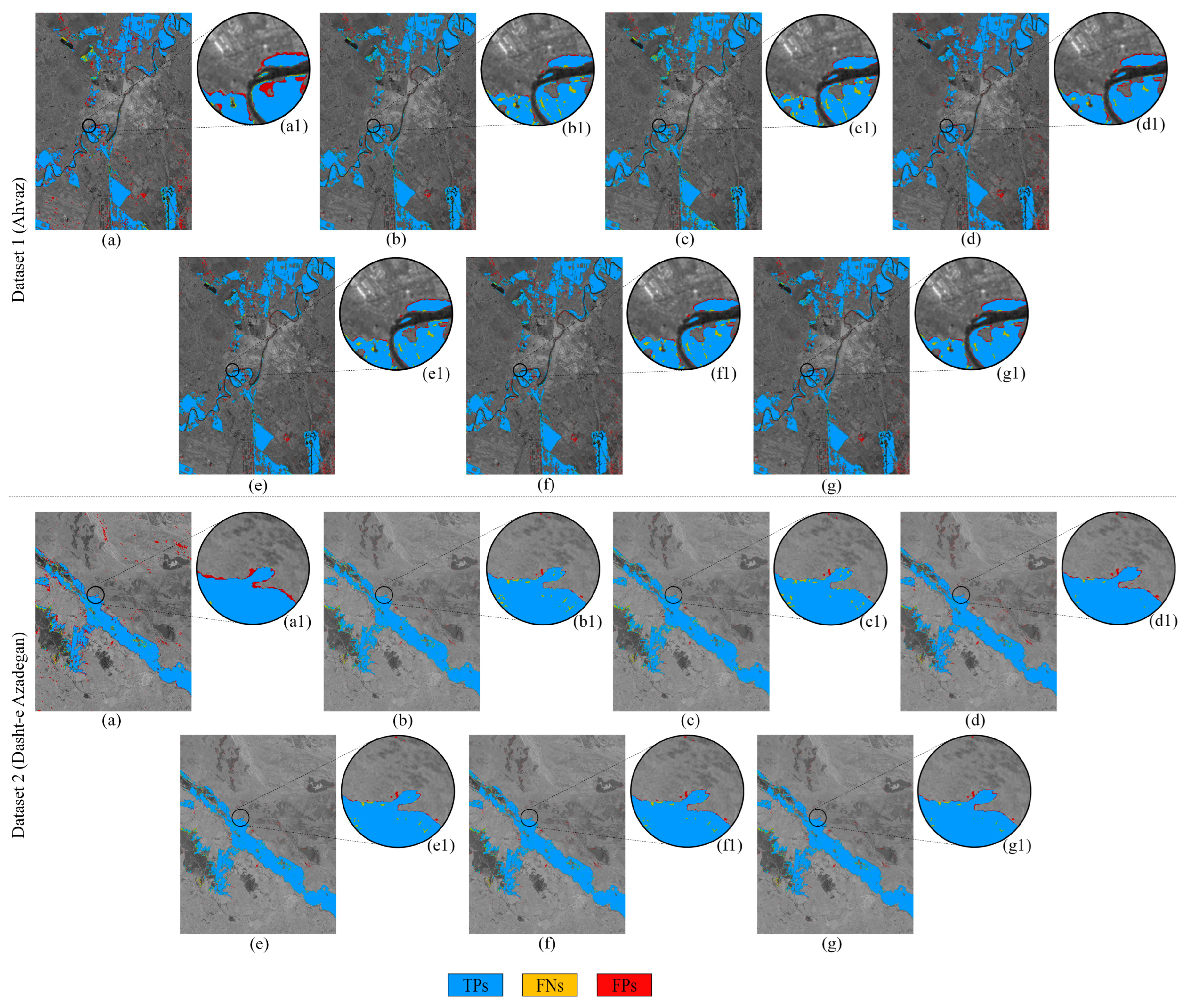

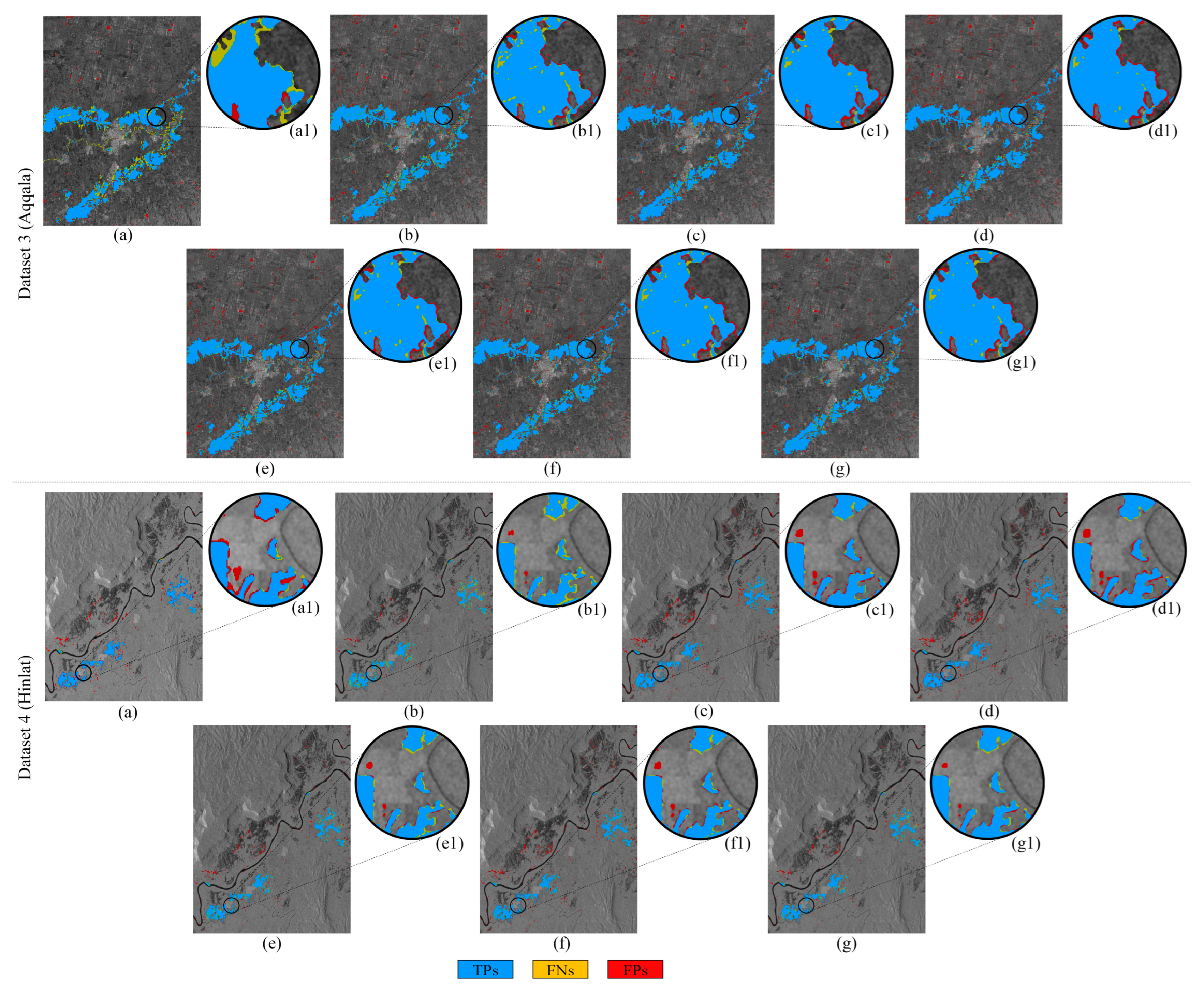

3.2. Evaluating the Proposed Flood Change Index

3.3. Assessment of the Proposed USMRF Model

4. Discussion

4.1. Performance of the Proposed IWFCI in Reflecting Flood Changes

4.2. Performance of the Proposed USMRF Model in Generating Flood Maps

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Area | Data | Temporal Status | Acquisition Time (YYYY-MM-DD) | Pass Direction | Image Size (Pixels) | Spatial Coverage (km²) |
|---|---|---|---|---|---|---|
| Site 1 (Ahvaz, Iran) | S2 | Pre-flood | 2019-03-17 | N/A | 5011 × 3582 | 1794.940 |
| | S1 | Pre-flood | 2019-03-25 | Ascending | | |
| | S1 | Co-flood | 2019-04-12 | Ascending | | |
| Site 2 (Azadegan, Iran) | S2 | Pre-flood | 2019-03-12 and 2019-03-17 | N/A | 4104 × 3196 | 1311.638 |
| | S1 | Pre-flood | 2019-03-25 | Ascending | | |
| | S1 | Co-flood | 2019-04-12 | Ascending | | |
| Site 3 (Aqqala, Iran) | S2 | Pre-flood | 2019-03-16 | N/A | 2396 × 1800 | 431.280 |
| | S1 | Pre-flood | 2019-03-11 and 2019-03-18 | Descending | | |
| | S1 | Co-flood | 2019-03-23 and 2019-03-30 | Descending | | |
| Site 4 (Hinlat, Laos) | S2 | Pre-flood | 2018-03-12 | N/A | 2851 × 2151 | 801.383 |
| | S1 | Pre-flood | 2018-07-13 | Ascending | | |
| | S1 | Co-flood | 2018-07-25 | Ascending | | |

| Ground truth (rows) / Flood map (columns) | Flood | Non-flood |
|---|---|---|
| Flood | TP | FN |
| Non-flood | FP | TN |

| Dataset | Method | TP | TN | FN | Recall (%) | FP | Precision (%) | F-score (%) | IoU (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 3D-CNN | 2,221,084 | 15,079,349 | 119,092 | 94.91 | 529,877 | 80.74 | 87.25 | 77.39 |
| | PCAkmeans | 2,141,079 | 15,412,566 | 199,097 | 91.49 | 196,660 | 91.59 | 91.54 | 84.4 |
| | DBT | 2,082,436 | 15,467,227 | 257,740 | 88.99 | 141,999 | 93.62 | 91.24 | 83.90 |
| | MRF | 2,232,011 | 15,264,592 | 108,165 | 95.38 | 344,633 | 86.62 | 90.79 | 83.13 |
| | LUMRF | 2,187,602 | 15,357,184 | 152,574 | 93.48 | 252,042 | 89.67 | 91.53 | 84.39 |
| | IFBT | 2,180,172 | 15,369,232 | 160,004 | 93.16 | 239,994 | 90.08 | 91.6 | 84.5 |
| | USMRF | 2,203,538 | 15,357,668 | 136,638 | 94.16 | 251,558 | 89.75 | 91.9 | 85.02 |
| 2 | 3D-CNN | 1,341,068 | 11,417,982 | 72,261 | 94.89 | 285,073 | 82.47 | 88.24 | 78.96 |
| | PCAkmeans | 1,301,193 | 11,659,802 | 112,136 | 92.07 | 43,253 | 96.78 | 94.37 | 89.33 |
| | DBT | 1,300,286 | 11,659,131 | 113,043 | 92 | 43,924 | 96.73 | 94.31 | 89.23 |
| | MRF | 1,345,528 | 11,604,990 | 67,801 | 95.2 | 98,065 | 93.21 | 94.19 | 89.03 |
| | LUMRF | 1,323,469 | 11,646,163 | 89,860 | 93.64 | 56,892 | 95.88 | 94.75 | 90.02 |
| | IFBT | 1,323,903 | 11,644,165 | 89,426 | 93.67 | 58,890 | 95.74 | 94.7 | 89.93 |
| | USMRF | 1,334,619 | 11,643,410 | 78,710 | 94.43 | 59,645 | 95.72 | 95.07 | 90.61 |
| 3 | 3D-CNN | 360,786 | 3,832,015 | 74,524 | 82.88 | 45,475 | 88.81 | 85.74 | 75.04 |
| | PCAkmeans | 374,824 | 3,801,063 | 60,486 | 86.11 | 76,427 | 83.06 | 84.56 | 73.25 |
| | DBT | 395,208 | 3,773,452 | 40,102 | 90.79 | 104,038 | 79.16 | 84.58 | 73.28 |
| | MRF | 397,815 | 3,766,626 | 37,495 | 91.39 | 110,864 | 78.21 | 84.28 | 72.84 |
| | LUMRF | 385,125 | 3,798,500 | 50,185 | 88.47 | 78,990 | 82.98 | 85.64 | 74.88 |
| | IFBT | 388,261 | 3,782,382 | 47,049 | 89.19 | 95,108 | 80.32 | 84.53 | 73.2 |
| | USMRF | 391,188 | 3,794,491 | 44,122 | 89.86 | 82,999 | 82.5 | 86.02 | 75.47 |
| 4 | 3D-CNN | 131,535 | 5,947,141 | 8,764 | 93.75 | 45,061 | 74.48 | 83.01 | 70.96 |
| | PCAkmeans | 106,204 | 5,973,760 | 34,095 | 75.7 | 18,442 | 85.2 | 80.17 | 66.9 |
| | DBT | 126,754 | 5,938,155 | 13,545 | 90.35 | 54,047 | 70.11 | 78.95 | 63.59 |
| | MRF | 132,797 | 5,931,000 | 7,502 | 94.65 | 61,202 | 68.45 | 79.45 | 65.9 |
| | LUMRF | 117,069 | 5,964,784 | 23,230 | 83.44 | 27,418 | 81.02 | 82.22 | 69.8 |
| | IFBT | 123,441 | 5,947,058 | 16,858 | 87.98 | 45,144 | 73.22 | 79.93 | 66.57 |
| | USMRF | 119,282 | 5,968,100 | 21,017 | 85.02 | 24,102 | 83.19 | 84.1 | 72.56 |
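The Recall, Precision, F-score (Fs) and IoU columns can be recomputed from the TP, FP and FN counts of the flood class using the standard definitions; TN does not enter these four scores. The following minimal Python sketch assumes those standard formulas, with the F-score taken as the harmonic mean of precision and recall. Applied to the USMRF counts for Dataset 1, it reproduces the reported percentages. The function name is illustrative.

```python
def flood_metrics(tp, fp, fn):
    """Recall, precision, F-score and IoU (in %) of the flood class.

    TN is not needed for these four scores.
    """
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f_score = 2 * tp / (2 * tp + fp + fn)   # equals 2PR / (P + R)
    iou = tp / (tp + fp + fn)
    scores = {"recall": recall, "precision": precision,
              "f_score": f_score, "iou": iou}
    return {name: round(100 * value, 2) for name, value in scores.items()}


# Dataset 1, USMRF row of the accuracy table
print(flood_metrics(tp=2_203_538, fp=251_558, fn=136_638))
# {'recall': 94.16, 'precision': 89.75, 'f_score': 91.9, 'iou': 85.02}
```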

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mohsenifar, A.; Mohammadzadeh, A.; Jamali, S. Unsupervised Rural Flood Mapping from Bi-Temporal Sentinel-1 Images Using an Improved Wavelet-Fusion Flood-Change Index (IWFCI) and an Uncertainty-Sensitive Markov Random Field (USMRF) Model. Remote Sens. 2025, 17, 1024. https://doi.org/10.3390/rs17061024
