1. Introduction

With the development of computer vision, multi-sensor fusion, and related technologies, UAVs carrying a wide variety of sensors or tools are widely used in surveillance and rescue, surveying and mapping, military operations, and other fields because of their low cost, reliability, and efficiency [1,2,3]. The ground target localization tracking and motion state estimation capabilities of vision sensor-equipped UAV systems are crucial during mission execution [4,5]. We aim to use UAV aerial images, laser-measured distance, UAV position, and attitude information to accurately locate and track ground-moving targets and estimate their motion states. This task can be divided into two steps: the first identifies target objects within the field of view using computer vision techniques, and the second locates and tracks the recognized object and estimates its motion state [6,7]. The second step requires the pixel coordinates of the ground target detected in the UAV aerial image as a priori information.

Target recognition tasks can be categorized into large-target and small-target recognition based on the size of the target object or its proximity to the vision sensor. In real-world ground target localization tracking, targets at ranges of hundreds of meters present only a few appearance features, which makes aerial small-target recognition challenging [8,9]. Since the YOLO family of models was proposed in 2016, it has become a mainstream method for target recognition due to its low latency and high detection accuracy [10,11,12,13]. ReDet and S2A-Net have become the most popular rotating target detection models in aerial image detection in recent years [14,15]. However, in our scenario, the exact rotation direction of the target is not required as a priori information, and with limited computing power, redundant rotation detection reduces the algorithm’s efficiency. For fixed-wing UAV high-altitude reconnaissance scenarios, Zhang et al. [16] proposed HSP-YOLOv8, an algorithm for detecting small targets in UAV aerial photography. The engineered and improved HSP-YOLOv8 runs on a single-board Raspberry Pi 5.

The relative height or relative distance between the UAV and the ground target is necessary for ground target localization and tracking. Lightweight UAS typically use accurate digital elevation models (DEMs) to obtain the height of the UAV relative to a ground target [17,18]. Alternatively, the relative distance between the UAV and the ground target is estimated from a known target size combined with imaging principles [19,20]. Zhang et al. [21] proposed a method to estimate the relative height between the UAV and the target without a priori information, but it is applicable only when the ground target is stationary. The farther the UAV is from the ground target, the more difficult it is to estimate the relative distance and relative height, and the accuracy of localization and tracking of long-distance targets decreases dramatically [22]. An alternative solution uses distributed predictive visual servo control of UAV clusters [23] to acquire ground target images from multiple angles simultaneously and then applies multi-view geometry to the 3D localization tracking of ground-moving targets [24]. However, this approach introduces new problems: higher cost and scale, and difficulties in cooperative control and data synchronization [25].

When using UAV high-altitude reconnaissance for ground target localization tracking and motion state estimation, a typical mission scenario is that the UAV flies around the ground target at a high altitude. When the ground target appears in the field of view, the electro-optical stabilization and tracking platform (EOSTP) is controlled to lock onto and track the ground target, keeping it in the center of the field of view [26], and a laser ranging sensor measures the relative distance between the UAV and the target [27,28]. In complex outdoor environments, small angular deviations of the visual sensors caused by the motion of the optoelectronic payload platform, vibration, and similar disturbances can amplify the final localization error [29]. An attitude angle compensation approach has been used to improve the accuracy of ground target localization tracking, but it does not take the irregular motion of the optoelectronic payload platform into account [30]. A dynamic target localization tracking and motion state estimation algorithm based on extended Kalman filtering is proposed in [31]. It combines the real-time estimation of the ground-motion target position and motion state with a trajectory smoothing algorithm to optimize the tracking trajectory, but the accuracy improvement is marginal.

In this study, we develop a framework for high-precision localization tracking and motion state estimation of ground-motion targets using high-altitude reconnaissance by UAVs; the constructed system is shown in Figure 1. The main contributions of this paper are as follows:

A new framework for the geolocalization of ground-moving targets based on monocular vision and laser range sensors is proposed. The laser ranging sensor continuously measures the distance to the center point of the field of view, so it does not need to be aligned with the ground target; keeping the ground target within the field of view of the UAV is sufficient for high-precision geolocalization tracking. Note that the closer the ground target is to the center of the field of view, the higher the positioning accuracy.

A layered filtering algorithm is proposed, in which the first layer estimates the motion state of the optoelectronic load and the second layer estimates the motion state of the ground target. The first-layer filter partially compensates for the error of the AHRS data, which may exceed one degree and would otherwise seriously degrade the accuracy with which the UAV tracks a dynamic ground target [32]. The second-layer filter is constrained by the laser ranging value, which greatly improves the estimation accuracy of the ground target motion state.

2. Ground Dynamic Target Geolocalization Model

2.1. Relevant Research Base

In our previous related work [33], we derived in detail the transformation between the geographic coordinate system and the spatial Cartesian coordinate system. In this paper, the position of the UAV in the spatial Cartesian coordinate system is obtained from GPS. From laser ranging, the ground point corresponding to the image center can be expressed in the camera coordinate system using the distance to the center of the field of view measured by the laser ranging equipment of the optronic pod. This ground point is then transformed from the camera coordinate system to the spatial Cartesian coordinate system using the UAV attitude and the photoelectric load angles.

In this transformation, the UAV navigational yaw angle is φ, the UAV navigational pitch angle is γ, and the UAV navigational roll angle is θ, and the attitude is built from rotation matrices about the X-axis, Y-axis, and Z-axis. For the photoelectric load, α is the rotation azimuth and β is the pitch angle. The coordinates of the ground point corresponding to the center of the image in the spatial Cartesian coordinate system follow from this transformation.

According to (2), the coordinates of the ground point corresponding to the center of the field of view in the spatial Cartesian coordinate system can be obtained and then converted to the Earth geographic coordinate system, which yields the latitude, longitude, and altitude of that ground point.
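As an illustration of the transformation chain described above, the following Python sketch maps a camera-frame point to the spatial Cartesian frame and locates the ground point at the center of the field of view. Everything beyond what the text states is an assumption made for this sketch: the camera optical axis is taken as the camera Z-axis (so the laser-ranged point is (0, 0, L) in the camera frame), the UAV attitude is composed as Rz(φ)·Ry(γ)·Rx(θ), the photoelectric load rotation as Rz(α)·Ry(β), and all function names are hypothetical. The rotation order and sign conventions actually used in the paper may differ.

```python
import numpy as np

def rot_x(a):
    """Rotation matrix about the X-axis by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_y(a):
    """Rotation matrix about the Y-axis by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rot_z(a):
    """Rotation matrix about the Z-axis by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def camera_to_cartesian(p_cam, uav_pos, yaw, pitch, roll, azimuth, load_pitch):
    """Map a camera-frame point to the spatial Cartesian frame.

    Assumed convention (not confirmed by the source): UAV attitude is
    Rz(yaw) @ Ry(pitch) @ Rx(roll); the load adds Rz(azimuth) @ Ry(load_pitch).
    """
    r_body = rot_z(yaw) @ rot_y(pitch) @ rot_x(roll)
    r_load = rot_z(azimuth) @ rot_y(load_pitch)
    p_cam = np.asarray(p_cam, dtype=float)
    return np.asarray(uav_pos, dtype=float) + r_body @ r_load @ p_cam

def fov_center_cartesian(laser_range, uav_pos, yaw, pitch, roll, azimuth, load_pitch):
    """Ground point at the image center: (0, 0, L) along the assumed optical axis."""
    return camera_to_cartesian([0.0, 0.0, laser_range], uav_pos,
                               yaw, pitch, roll, azimuth, load_pitch)

# Example: arbitrary attitude and load angles with a 4000 m laser range.
uav = np.array([100.0, 200.0, 3000.0])
center = fov_center_cartesian(4000.0, uav, 0.3, -0.1, 0.05, 1.0, -0.7)
```

Because rotations preserve length, the distance between the UAV and the computed point equals the laser range for any attitude, which is a convenient sanity check on an implementation of this kind.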

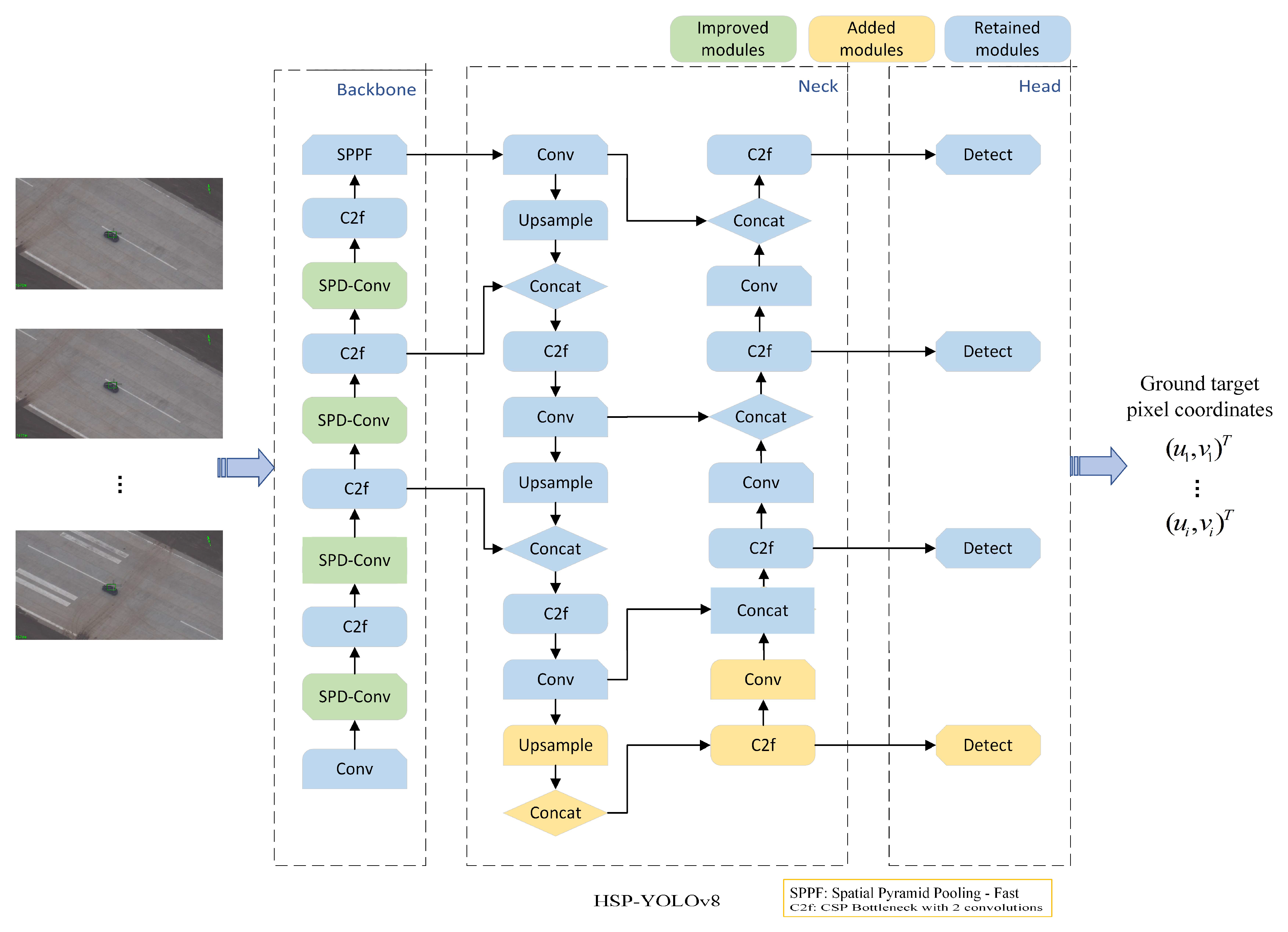

Our goal is to geolocalize and track targets within the field of view, which also relies on our previous related research [16]. We added an extra tiny prediction head and a Space-to-Depth Convolution (SPD-Conv) module to the YOLOv8 algorithm, together with a post-processing algorithm better suited to small-target recognition. In the fixed-wing UAV high-altitude reconnaissance scenario, this specially designed network runs on a single-board Raspberry Pi 5 at 5 FPS, which is consistent with the laser ranging measurement frequency. The input and output of the algorithm and the network structure are shown in Figure 2. In this paper, we assume that the target pixel location in the UAV vision image is available, and we do not discuss the case where it is unavailable due to image quality.

2.2. Geolocalization of Any Point in an Image

The geolocation of an arbitrary point in an image differs from the geolocation of the center of the image. Because of the lack of physical distance measured by laser ranging at the target point, it is impossible to directly obtain the coordinates of the target point in the camera coordinate system. Therefore, it is necessary to calculate the coordinates of the ground position at the target point in the camera coordinate system, and then the coordinates of the target point in the geographic coordinate system can be obtained after coordinate conversion.

In the camera coordinate system, any point m in the image is described by its two-dimensional pixel coordinates, and it has a corresponding point in the physical plane, namely the ground point imaged at m. The geometric relationship between the coordinates of this ground point in the camera coordinate system and the pixel coordinates of point m depends on the following parameters: the focal length of the camera mounted on the drone, the pixel coordinates of the center point of the image, and the horizontal and vertical physical lengths represented by each camera pixel.
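The relationship between pixel coordinates and camera-frame coordinates can be illustrated with the standard pinhole model. The sketch below is a minimal example under that assumption; the symbol names (`f`, `u0`, `v0`, `dx`, `dy`, `z_c`), the function names, and the example intrinsics are chosen here for illustration and are not taken from the paper.

```python
def pixel_to_camera(u, v, z_c, f, u0, v0, dx, dy):
    """Back-project pixel (u, v) to camera-frame coordinates at depth z_c.

    f      : focal length (m)
    u0, v0 : pixel coordinates of the image center
    dx, dy : physical width and height of one pixel (m)
    """
    x_c = (u - u0) * dx * z_c / f
    y_c = (v - v0) * dy * z_c / f
    return x_c, y_c, z_c

def camera_to_pixel(x_c, y_c, z_c, f, u0, v0, dx, dy):
    """Project a camera-frame point onto the image (inverse of the above)."""
    u = f * x_c / (dx * z_c) + u0
    v = f * y_c / (dy * z_c) + v0
    return u, v

# Hypothetical intrinsics: 50 mm lens, 5 micrometer pixels, 1920x1080 image.
intrinsics = dict(f=0.05, u0=960.0, v0=540.0, dx=5e-6, dy=5e-6)
point = pixel_to_camera(1060.0, 540.0, 4000.0, **intrinsics)
pixel = camera_to_pixel(*point, **intrinsics)
```

The back-projection needs the depth `z_c` as an input, which is why the depth of an off-center target has to be derived separately when the laser only ranges the image center.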

The geometric relationship of imaging during UAV high-altitude reconnaissance is shown in

Figure 3. The plane

indicates the ground taken during aerial photography.

represents the plane that is imaged inside the camera when the camera is imaging. In plane

,

is the center of the camera’s field of view, and the length of

is the focal length

.

denotes the horizontal axis of the

-plane.

represents the vertical axis of the plane

. Point

is the corresponding point of point

the corresponding point of the projection onto the ground. In plane

,

is the intersection of the centerline of the camera’s field of view and the plane

.

is the projection of the

-axis through

in the plane

.

is the mapping of the

-axis in the plane

.

is the point under the projection of

in the plane

.

denotes the camera coordinate system.

represents a system of right-angled coordinates in space.

The angle between

and

-axis is noted as

λ. The angle between

and

is noted as

μ. The angle between

and

-axis is noted as

ω. Vector

is the vector in the camera coordinate system

. The unit vector of the

-axis of the coordinate system

is

.

is represented in the coordinate system

as

, so

.

is the vector in the camera coordinate system

.

is represented in the coordinate system

as

.

, so the angles

λ,

μ,

ω can be expressed as

Let

, then

,

, and the

-axis coordinates of point

in the camera coordinate system are

Substituting this value into (3), the remaining camera-frame coordinates of the target point can be calculated.

With the coordinates of the target point in the camera coordinate system known, its coordinates in the spatial Cartesian coordinate system can be calculated according to (2).

These coordinates are then converted to the geographic coordinate system, which completes the geolocalization of any point in the image.
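One way to make the depth computation concrete is a ray-plane intersection. This is a swapped-in formulation rather than the angle-based derivation with λ, μ, and ω used in this section, and it rests on assumptions stated here: the ground near the target is a horizontal plane passing through the laser-ranged point at the image center, the Z-axis of the spatial Cartesian frame is vertical, the camera optical axis is the camera Z-axis, and `r_wc` is the rotation from the camera frame to the Cartesian frame. All names and example values are hypothetical.

```python
import numpy as np

def locate_pixel_on_ground(u, v, f, u0, v0, dx, dy, r_wc, uav_pos, laser_range):
    """Geolocate the ground point seen at pixel (u, v) in the Cartesian frame."""
    # Viewing ray through the pixel, scaled so its camera Z component is 1.
    ray_cam = np.array([(u - u0) * dx / f, (v - v0) * dy / f, 1.0])
    # Vertical direction of the Cartesian frame, expressed in the camera frame.
    up_cam = r_wc.T @ np.array([0.0, 0.0, 1.0])
    # Laser-ranged ground point at the image center (assumed optical axis = Z).
    center_cam = np.array([0.0, 0.0, laser_range])
    denom = up_cam @ ray_cam
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the ground plane")
    # depth solves up_cam . (depth * ray_cam - center_cam) = 0; because
    # ray_cam[2] == 1, it is also the target's camera Z coordinate.
    depth = (up_cam @ center_cam) / denom
    p_cam = depth * ray_cam
    return np.asarray(uav_pos, dtype=float) + r_wc @ p_cam

# Example: camera looking straight down from 1000 m (camera Z = -Cartesian Z).
r_down = np.diag([1.0, -1.0, -1.0])
target = locate_pixel_on_ground(
    u=1060.0, v=540.0, f=0.01, u0=960.0, v0=540.0, dx=1e-5, dy=1e-5,
    r_wc=r_down, uav_pos=[0.0, 0.0, 1000.0], laser_range=1000.0)
center = locate_pixel_on_ground(
    u=960.0, v=540.0, f=0.01, u0=960.0, v0=540.0, dx=1e-5, dy=1e-5,
    r_wc=r_down, uav_pos=[0.0, 0.0, 1000.0], laser_range=1000.0)
```

In this example the target pixel lies 100 pixels from the image center, and the located point has the same height as the laser-ranged center point, as the flat-ground assumption requires.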

3. Ground Dynamic Target Tracking Estimation Model

In practical applications, the rotational azimuth and pitch angle of the photoelectric load reported by the photoelectric imaging module deviate from their real values. Combined with other measurement errors, this produces a large error in the calculated ground target position, which jumps between successive time steps. Such results do not match the real movement trajectory and are unsuitable for practical use. It is therefore necessary to establish a motion-tracking model and design a suitable filtering-tracking algorithm that suppresses the influence of the various types of noise on the system, so as to obtain more accurate and stable target-tracking results.

The system is described by a typical discrete-time nonlinear state-space model and state-observation model. In this model, a nonlinear state function propagates the state vector from one moment to the next, and a nonlinear observation function maps the state vector to the observation vector at the same moment. The process noise and the measurement noise are both zero-mean Gaussian.
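For reference, a model of this kind is commonly written as below. The symbols ($x_k$, $z_k$, $f$, $h$, $w_k$, $v_k$, $Q_k$, $R_k$) are conventional choices assumed here and may differ from the notation of the original equations.

```latex
\begin{aligned}
x_k &= f(x_{k-1}) + w_{k-1}, & w_{k-1} &\sim \mathcal{N}(0,\, Q_{k-1}), \\
z_k &= h(x_k) + v_k,         & v_k     &\sim \mathcal{N}(0,\, R_k).
\end{aligned}
```

Here $x_k$ and $z_k$ are the state and observation vectors at moment $k$, $f$ and $h$ are the state and observation functions, and $Q$ and $R$ are the covariances of the process and measurement noise.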

3.1. Optical Load Equation of Motion

For the motion of the photoelectric load carried by the UAV, the equation of motion is constructed in state-transition form. The real-time state of the photoelectric load at each moment is represented by a vector containing its angle values and rotation speeds in the rotational and pitching directions, and the state transfer matrix is written for this state vector. The effects of drone vibration, wind, and other disturbances are modeled as process noise. For the convenience of model solving, this noise is assumed to approximately follow a Gaussian distribution.
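A common choice for such a state transfer matrix is a constant-angular-rate model. The sketch below assumes that model and the state ordering [azimuth, azimuth rate, pitch, pitch rate]; the paper's actual matrix and ordering are not recoverable from the text here, the function names are hypothetical, and the 0.2 s step simply reflects the 5 Hz update rate mentioned earlier.

```python
import numpy as np

def load_transition(dt):
    """Constant-angular-rate transition matrix for the assumed state
    [azimuth, azimuth_rate, pitch, pitch_rate]."""
    block = np.array([[1.0, dt], [0.0, 1.0]])
    # Block-diagonal matrix: one 2x2 block per rotation direction.
    return np.kron(np.eye(2), block)

def propagate_load(state, dt):
    """Predict the load state one step ahead (noise-free part only)."""
    return load_transition(dt) @ np.asarray(state, dtype=float)

# Example: 5 Hz updates, angles in rad and rates in rad/s.
predicted = propagate_load([1.0, 0.1, -0.5, 0.02], dt=0.2)
```

Each angle advances by its rate multiplied by the time step, while the rates themselves are left unchanged; unmodeled disturbances are absorbed by the process noise.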

3.2. Dynamic Target Equations of Motion

For the motion trajectory tracking of a ground-motion target, the motion equation of the target is constructed in the same state-transition form. The real-time state of a ground-moving target at each moment is represented by a vector containing the target coordinates and velocities along the three axes of the reference coordinate system, and the state transfer matrix is written for this state vector. The effects of ground conditions, wind, and other factors in the environment of the ground-moving target are modeled as process noise. For the convenience of model solving, this noise is assumed to approximately follow a Gaussian distribution.
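For the ground target, the same idea can be sketched with a constant-velocity model plus a process noise covariance. Both are assumptions for illustration: the state ordering [x, y, z, vx, vy, vz], the discrete white-noise-acceleration form of Q, and the parameter `accel_std` are not taken from the paper.

```python
import numpy as np

def target_model(dt, accel_std):
    """Return (F, Q) for an assumed constant-velocity target model.

    State ordering: [x, y, z, vx, vy, vz].
    Q uses the discrete white-noise-acceleration form with standard
    deviation accel_std (m/s^2) on each axis.
    """
    f_block = np.array([[1.0, dt], [0.0, 1.0]])
    q_block = accel_std ** 2 * np.array([[dt ** 4 / 4, dt ** 3 / 2],
                                         [dt ** 3 / 2, dt ** 2]])
    eye3 = np.eye(3)
    # kron(block, I3) applies the same position/velocity coupling to each axis.
    return np.kron(f_block, eye3), np.kron(q_block, eye3)

# Example: 5 Hz updates, target moving at 10 m/s along x at height 5 m.
f_mat, q_mat = target_model(dt=0.2, accel_std=1.0)
next_state = f_mat @ np.array([0.0, 0.0, 5.0, 10.0, 0.0, 0.0])
```

A larger `accel_std` lets the filter follow accelerating or turning targets more quickly at the cost of noisier estimates, which matters for the variable-acceleration motions used in the experiments.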

3.3. Constructing the Observation Equation

For the photoelectric load, the observation at each moment is the measured value obtained by the system through the sensor and consists of the rotational azimuth and the pitch angle, in SI units. Because this result is affected by sensor noise and deviates from the true value, it is modeled with a measurement noise term. For the convenience of model solving, the measurement noise is assumed to approximately follow a Gaussian distribution.

For dynamic targets on the ground, the laser ranging sensor provides the distance between the UAV and the ground point corresponding to the center of the field of view. This distance can be written in terms of the coordinates of the UAV’s location and the coordinates of the ground point corresponding to the center of the field of view at the same moment. The observation for the ground target consists of the initial calculation of the ground target position by the system at each moment through (7) and the measured value of the laser ranging sensor, all in SI units. The measured values are affected by sensor noise and deviate from the true values, which is modeled with measurement noise. For the convenience of model solving, this noise is considered to follow a Gaussian distribution.
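The laser range term can be sketched as a Euclidean distance together with its gradient, which is the row that a range measurement contributes to an observation Jacobian. The function names are hypothetical, and how the paper combines this term with the position observations is not recoverable from the text here.

```python
import numpy as np

def laser_range(uav_pos, ground_pos):
    """Euclidean distance between the UAV and a ground point (m)."""
    diff = np.asarray(ground_pos, dtype=float) - np.asarray(uav_pos, dtype=float)
    return float(np.linalg.norm(diff))

def laser_range_gradient(uav_pos, ground_pos):
    """Partial derivatives of the range with respect to the ground point
    coordinates: the unit vector pointing from the UAV to the ground point."""
    diff = np.asarray(ground_pos, dtype=float) - np.asarray(uav_pos, dtype=float)
    return diff / np.linalg.norm(diff)

# Example: UAV at 3000 m height, ground point 4000 m away horizontally.
rng = laser_range([0.0, 0.0, 3000.0], [4000.0, 0.0, 0.0])
grad = laser_range_gradient([0.0, 0.0, 3000.0], [4000.0, 0.0, 0.0])
```

Because the range is nonlinear in the ground point coordinates, a filter that uses it needs this linearization at each step.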

3.4. Algorithm Iteration Process

The iterative process of the algorithm in this paper consists of two steps: Propagation and Update. In the Propagation step, the estimate of the target state and the corresponding estimation error covariance at the previous moment are used to predict the target state and the corresponding prediction error covariance at the current moment.

In the Update step, the predicted values are first used to compute the Jacobian matrix, which is then used to compute the Kalman gain. The predicted value is next corrected using the measured value provided by the platform to obtain an estimate of the target state at the current moment. Finally, the error covariance matrix of this estimate is calculated.

This completes the estimation of the ground target state at the current moment. Whenever the measurements at the next moment are obtained, the ground target state estimation at that moment can be performed in the same way. Proceeding recursively in this manner achieves real-time estimation of the target state.
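The two steps can be sketched as a textbook extended Kalman filter iteration. This is a generic illustration, not the paper's exact formulation: it assumes a linear motion model given by a state transfer matrix, a possibly nonlinear observation function with a user-supplied Jacobian, and hypothetical function and variable names. The toy example uses a one-dimensional position and velocity state.

```python
import numpy as np

def ekf_propagate(x_est, p_est, f_mat, q_mat):
    """Propagation step: predict the state and its error covariance."""
    x_pred = f_mat @ x_est
    p_pred = f_mat @ p_est @ f_mat.T + q_mat
    return x_pred, p_pred

def ekf_update(x_pred, p_pred, z, h_func, h_jacobian, r_mat):
    """Update step: Jacobian, Kalman gain, corrected state, covariance."""
    h_mat = h_jacobian(x_pred)
    s_mat = h_mat @ p_pred @ h_mat.T + r_mat           # innovation covariance
    gain = p_pred @ h_mat.T @ np.linalg.inv(s_mat)     # Kalman gain
    x_new = x_pred + gain @ (z - h_func(x_pred))
    p_new = (np.eye(len(x_pred)) - gain @ h_mat) @ p_pred
    return x_new, p_new

# Toy example: state [position, velocity], position-only measurement.
f_mat = np.array([[1.0, 1.0], [0.0, 1.0]])
x_pred, p_pred = ekf_propagate(np.array([0.0, 1.0]), np.eye(2),
                               f_mat, np.zeros((2, 2)))
x_new, p_new = ekf_update(
    x_pred, p_pred, z=np.array([2.0]),
    h_func=lambda x: x[:1],
    h_jacobian=lambda x: np.array([[1.0, 0.0]]),
    r_mat=np.array([[1.0]]))
```

In a layered arrangement, a filter of this form would run first on the load angles, and its updated angles would then feed the position calculation observed by a second filter of the same form for the target.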

Such an iterative approach fully utilizes the available historical observations to predict the current state of the ground target. Compared with estimating the ground target state directly from observations, our hierarchical filtering algorithm uses more a priori information: it first estimates the current motion state of the optoelectronic load and then uses the updated angular data of the optoelectronic load in estimating the current state of the ground target. This yields more accurate and stable ground target positioning, tracking, and motion state estimation.

4. Results and Discussion

In the previous two sections, we introduced a hierarchical filtering algorithm for the high-precision geolocalization tracking and motion state estimation of dynamic ground targets observed by UAVs. In this section, we evaluate the performance of our proposed algorithm, HPLTMSE, and compare it with the EKF, VOLTS [31], and MGG [30] algorithms. We set up three separate experiments for verification. The ground target moves in a different motion mode in each experiment, and we use an ASN-216 fixed-wing UAV to conduct aerial following reconnaissance of the target and collect real-time UAV visual images, UAV position, UAV attitude, optoelectronic load attitude, and laser ranging values. The experiment site, shown in Figure 4, is located at the geographical coordinates (108°51′22″E, 37°45′26″N), at an airport in the northern region of Shaanxi Province. A GPS receiver mounted on the ground target provides real-time position and velocity information of the ground target, which serves as the ground truth for algorithm comparison.

The experiments simulate three scenarios for military applications. Experiment A simulates the tracking and positioning of a transport vehicle with high-speed maneuverability in variable-acceleration linear motion. Experiment B simulates the tracking and positioning of a transport vehicle with high-speed maneuverability in variable-acceleration turn-back motion. Experiment C simulates the tracking and positioning of an armored vehicle with slightly weaker maneuverability; during the mission, the armored vehicle changes its maneuvering state by being towed by the transport vehicle, so there are two variable-acceleration motions. The algorithm was developed and tested on a PC but ultimately runs on a single-board Raspberry Pi 5. The errors of each sensor of the drone platform are shown in Table 1.

4.1. Experiment A

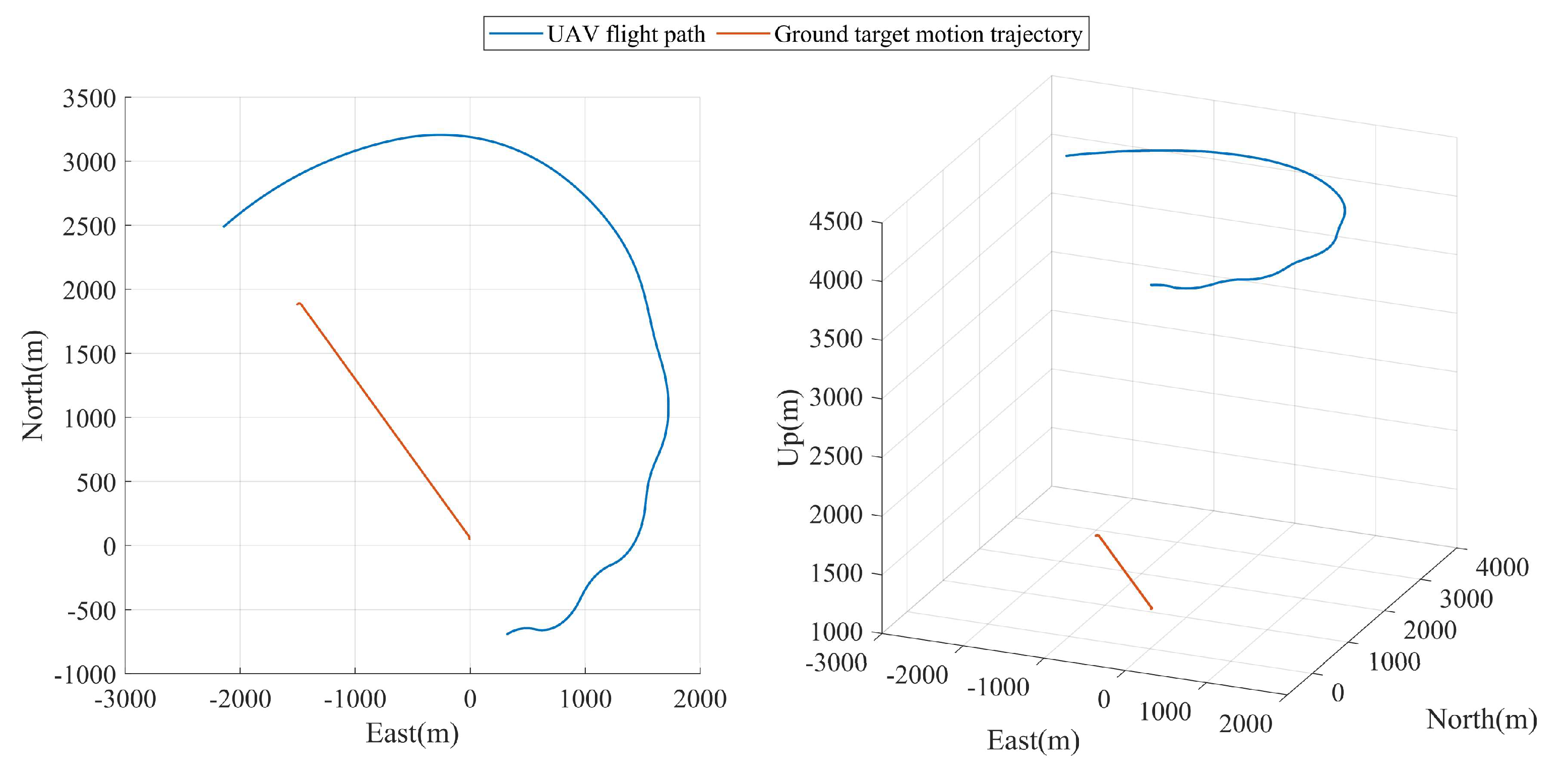

In experiment A, the ground-motion target moved along a closed section of road in straight-line, variable-speed motion, accelerating from about 20 km/h to about 70 km/h and then decelerating to about 20 km/h. At the same time, the UAV circled the ground target at distances ranging from 3850 m to 4550 m and collected the required data. The UAV flight trajectory and ground target movement trajectory are shown in Figure 5. The laser measurement distance is shown in Figure 6. To compare and verify the performance of the HPLTMSE algorithm proposed in this paper, the EKF, VOLTS, and MGG algorithms are also applied to the first set of collected UAV time-series data for the geolocalization tracking and motion state estimation of the ground-motion target.

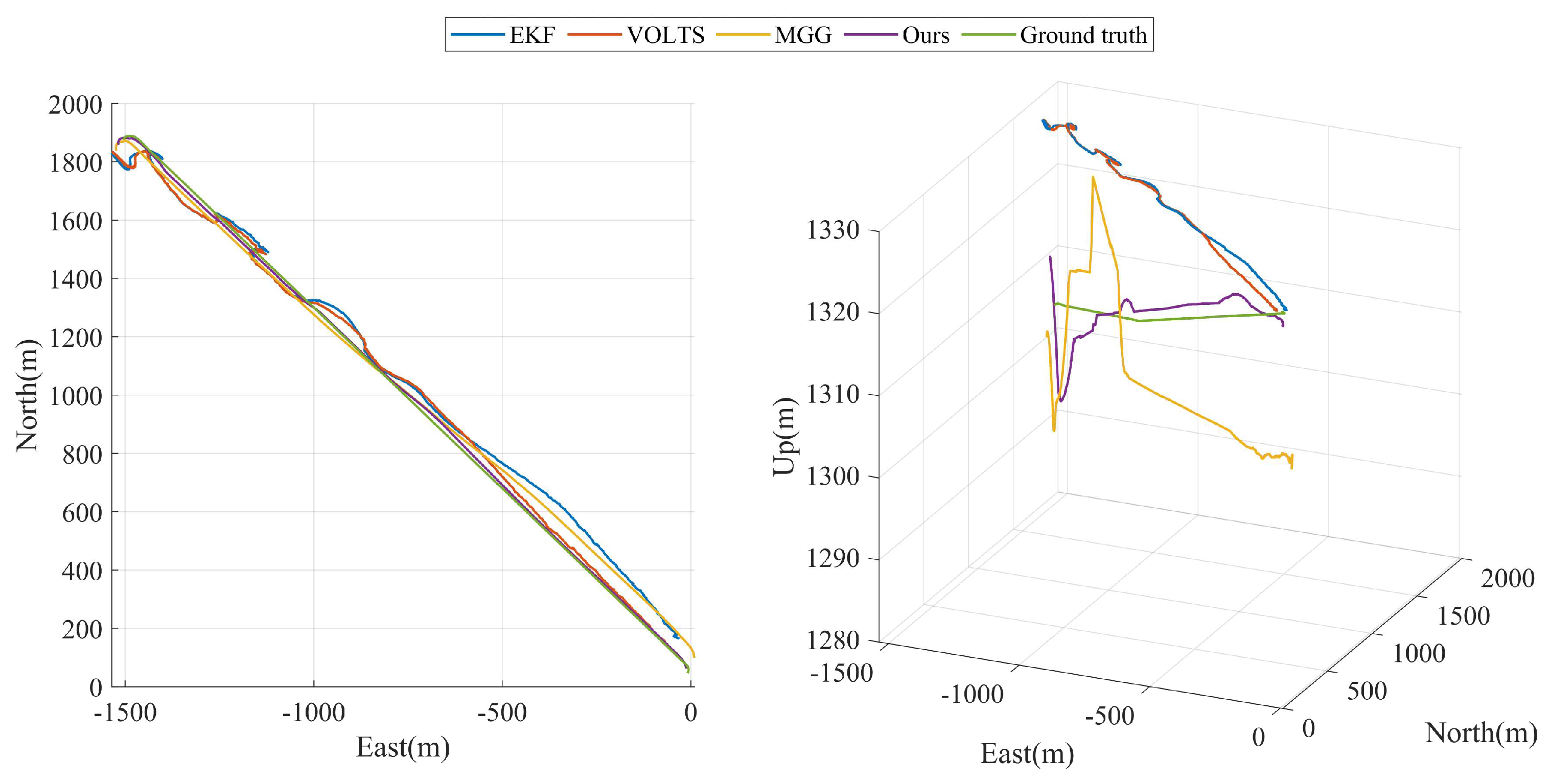

The geolocalization tracking results of the four algorithms for ground-motion targets in experiment A are shown in Figure 7. Among the two-dimensional trajectories in the horizontal plane output by the four algorithms, HPLTMSE most closely matches the real motion trajectory of the ground target, MGG is slightly worse, and VOLTS and EKF are the least accurate. This is because VOLTS is essentially an EKF with a superimposed trajectory smoothing algorithm, and the EKF is effective only for Gaussian-distributed noise and is not robust to non-Gaussian noise. As a result, when the sensor measurements contain outliers that deviate from the expected values, the estimates given by the system jump sharply and are not stable enough. In comparison, MGG is based on UAV visual images, and HPLTMSE is based on hierarchical filtering and laser-measured distance constraints; both enable the geolocalization of ground targets and smoother trajectory tracking as long as the ground target is not lost from the UAV’s field of view. The accuracy of the HPLTMSE algorithm in estimating ground target height is significantly improved by the laser measurement distance constraints.

The results of the ground target motion state estimation for experiment A are shown in Figure 8. The purple curve is closest to the green curve when the motion speed of the ground target changes, i.e., the HPLTMSE algorithm responds fastest to changes in the motion state of the ground target among the four algorithms. The VOLTS and EKF algorithms show large fluctuations and distortions in ground target motion state estimation because of their poor geolocalization and tracking results.

The error metrics (RMSE) for each of the four algorithms in experiment A are shown in Table 2. Benefiting from the laser measurement distance constraints, the HPLTMSE algorithm significantly improves the estimation accuracy of the ground target height, with a height estimation RMSE of 3.34 m. The overall RMSE of our algorithm for ground target geolocalization and tracking is 22.26 m, and the RMSE of the estimated ground target velocity is 1.13 m/s. Compared with the other algorithms, our algorithm reduces the ground target geolocalization and tracking error by at least 14.95 m and the velocity estimation error by at least 1.03 m/s.
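For readers who want to reproduce metrics of this kind, the sketch below shows one way to compute RMSE against GPS ground truth. It assumes time-aligned samples and takes the overall position error as the three-dimensional Euclidean distance; the paper does not state how its overall RMSE is defined, so that choice, the function names, and the sample values are illustrative only.

```python
import math

def rmse(estimates, truths):
    """Root-mean-square error of a scalar series (e.g., height or speed)."""
    if len(estimates) != len(truths) or not estimates:
        raise ValueError("series must be non-empty and of equal length")
    squared = [(e - t) ** 2 for e, t in zip(estimates, truths)]
    return math.sqrt(sum(squared) / len(squared))

def position_rmse(est_xyz, true_xyz):
    """RMSE of the 3D Euclidean position error (an assumed definition)."""
    if len(est_xyz) != len(true_xyz) or not est_xyz:
        raise ValueError("series must be non-empty and of equal length")
    squared = [sum((a - b) ** 2 for a, b in zip(e, t))
               for e, t in zip(est_xyz, true_xyz)]
    return math.sqrt(sum(squared) / len(squared))

# Illustrative values only, not data from the experiments.
height_error = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
position_error = position_rmse([(3.0, 4.0, 0.0)], [(0.0, 0.0, 0.0)])
```

Reporting the height RMSE separately from the overall position RMSE, as the tables do, makes the effect of the laser range constraint visible on its own.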

4.2. Experiment B

In experiment B, the ground-motion target made a round trip along a closed road, decelerating from about 70 km/h to about 20 km/h and then accelerating to about 70 km/h again. At the same time, the UAV gradually approached the ground target, from a distance of 4650 m to a distance of 3900 m, to reconnoiter it and collect the required data. The UAV flight trajectory and ground target movement trajectory are shown in Figure 9.

The laser measurement distance is shown in Figure 10. To compare and verify the performance of the HPLTMSE algorithm proposed in this paper, the EKF, VOLTS, and MGG algorithms are also applied to the second set of collected UAV time-series data for the geolocalization tracking and motion state estimation of the ground-motion target.

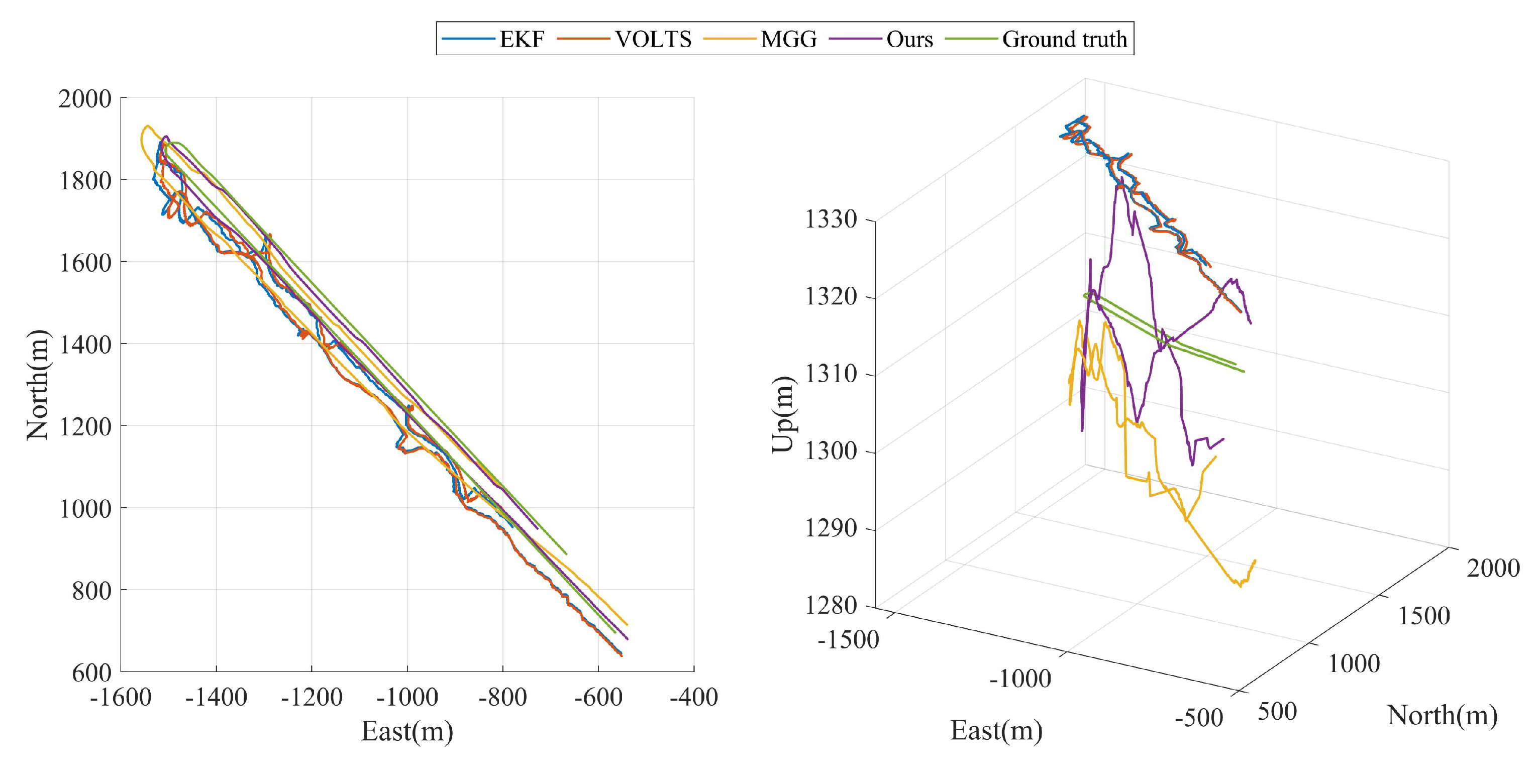

The geolocalization tracking results of the four algorithms for ground-motion targets in experiment B are shown in Figure 11 and are consistent with the results of experiment A. Among the two-dimensional trajectories in the horizontal plane output by the four algorithms, HPLTMSE best fits the real motion trajectory of the ground target, MGG is slightly worse, and VOLTS and EKF are the least accurate. Notably, the MGG algorithm has a larger tracking trajectory error when the ground target reverses direction, because the UAV’s visual image changes substantially and image alignment becomes more difficult. As can be seen in Figure 11, the accuracy of the HPLTMSE algorithm in estimating the ground target height is significantly better than that of the other algorithms because of the laser measurement distance constraint.

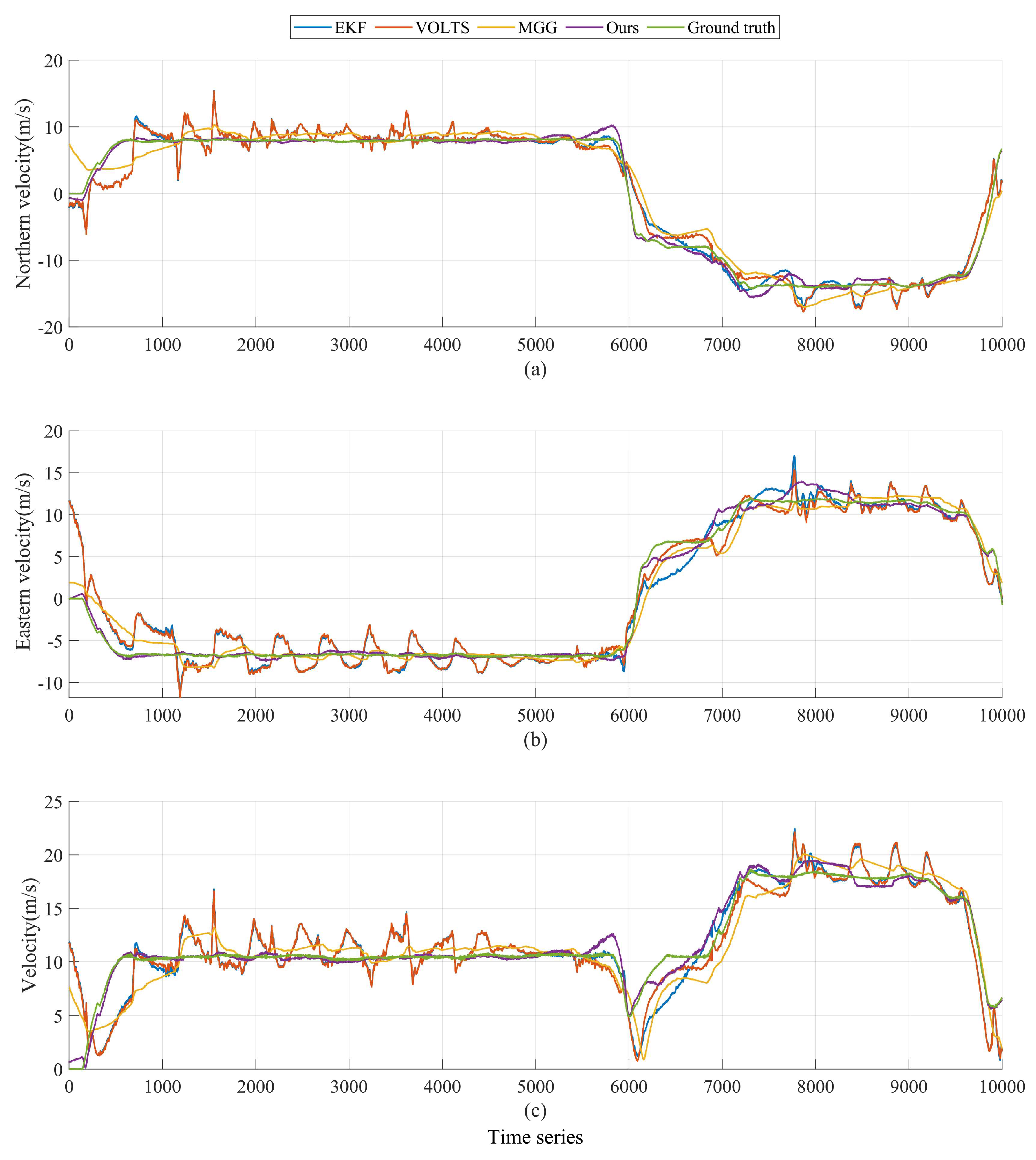

The results of the ground target motion state estimation for experiment B are shown in Figure 12. The purple curve is closest to the green curve when the ground target’s motion speed changes, i.e., the HPLTMSE algorithm responds fastest to changes in the motion state of the ground target among the four algorithms. The VOLTS and EKF algorithms show large fluctuations and distortions in ground target motion state estimation because of their poor geolocalization and tracking results.

The error metrics (RMSE) for each of the four algorithms in experiment B are shown in Table 3. Benefiting from the laser measurement distance constraint, the HPLTMSE algorithm significantly improves the estimation accuracy of the ground target height, with a height estimation RMSE of 8.42 m. The overall RMSE of our algorithm for ground target geolocalization and tracking is 25.31 m, and the RMSE of the estimated ground target velocity is 1.39 m/s. Compared with the other algorithms, our algorithm reduces the ground target geolocalization and tracking error by at least 16.07 m and the velocity estimation error by at least 1.06 m/s.

4.3. Experiment C

In experiment C, the ground-motion target completed one out-and-back lap along a section of closed road, starting from rest and moving at about 35 km/h for the first half of the motion and about 65 km/h for the second half. At the same time, the UAV gradually approached the ground target, from a distance of 5300 m to 3600 m, and then switched to an encircling flight to reconnoiter the ground target and collect the required data. The UAV flight trajectory and ground target movement trajectory are shown in Figure 13. The laser measurement distance is shown in Figure 14. To compare and verify the performance of the HPLTMSE algorithm proposed in this paper, the EKF, VOLTS, and MGG algorithms are also applied to the third set of collected UAV time-series data for the geolocalization tracking and motion state estimation of the ground-motion target.

The geolocalization tracking results of the four algorithms for ground-motion targets in experiment C are shown in Figure 15, and the results of ground target motion state estimation are shown in Figure 16. Consistent with the results of experiments A and B, among the tracking trajectories output by the four algorithms, HPLTMSE most closely matches the real trajectory of the ground target, MGG is slightly worse, and VOLTS and EKF are the least accurate. Among the four algorithms, HPLTMSE also estimates the ground target’s motion state most accurately, and it performs best when the ground target’s motion state is stable.

The error metrics (RMSE) for each of the four algorithms in experiment C are shown in Table 4. Benefiting from the laser measurement distance constraint, the HPLTMSE algorithm significantly improves the estimation accuracy of the ground target height, with a height estimation RMSE of 5.84 m. The overall RMSE of our algorithm for ground target geolocalization and tracking is 26.99 m, and the RMSE of the estimated ground target velocity is 1.08 m/s. Compared with the other algorithms, our algorithm reduces the ground target geolocalization and tracking error by at least 7.93 m and the velocity estimation error by at least 0.83 m/s.

5. Conclusions

In this work, we propose a framework for the geolocalization tracking and motion state estimation of ground-motion targets based on monocular vision and laser ranging sensors. Starting from the pixel position of ground targets already obtained in UAV aerial images, we derive a general formula for the geolocalization of ground targets in the field of view when the laser is not pointed directly at the target. We designed a hierarchical filtering algorithm that estimates the motion states of the optoelectronic load and the ground target separately. The second layer uses the optoelectronic load angle estimates from the first layer, with the laser-measured distance as a constraint, to estimate the ground target motion state. The experimental results in Section 4 show that our algorithm improves the ground target localization tracking accuracy by at least 7.5 m, improves the ground target velocity estimation accuracy by at least 0.8 m/s, and, in particular, keeps the altitude error of the ground target geolocalization within 10 m. High-precision ground-motion target localization tracking and motion state estimation under high-altitude reconnaissance by fixed-wing UAVs are thus realized.

In a military scenario, using the upper limit of the geolocation error confidence interval (90% confidence level) as the radius of fire coverage, strikes against tracked ground targets can ensure that the target has a 95% probability of being hit. Higher geolocation accuracy then saves substantial weapon resources. Our study therefore has significant practical application value.

However, the accuracy of ground target localization tracking and motion state estimation that we achieve relies on the accuracy of laser ranging. To keep the drone itself hidden, the laser ranging sensor cannot measure continuously. Realizing robust geolocalization of ground targets under intermittent laser ranging measurements will be our future research direction.