MtAD-Net: Multi-Threshold Adaptive Decision Net for Unsupervised Synthetic Aperture Radar Ship Instance Segmentation

Abstract

1. Introduction

1. We propose a new network model, the Multi-threshold Adaptive Decision Network (MtAD-Net). Unlike existing methods, our approach uses the CFAR algorithm to analyze the sea clutter around the ship at several false alarm rates, obtains the corresponding thresholds, and fuses them through an adaptive decision. This process enables accurate localization of the ship boundary and segmentation into continuous regions.

2. We design a pixel-level contrast loss function (PLC-Loss) that reduces the distance between pixels of the same category and increases the distance between pixels of different categories, so that the model converges quickly under unsupervised conditions.

3. Experimental results show that unsupervised instance segmentation of SAR ship images is a challenging task and that previous unsupervised segmentation methods cannot provide precise results. Our method achieves state-of-the-art (SOTA) results on five metrics [30]: Pixel Accuracy (PA), Mean Intersection over Union (MIoU), Frequency Weighted Intersection over Union (FWIoU), Kappa coefficient (K), and F1-Score (F1).

2. Related Work

2.1. Unsupervised Deep Learning Segmentation Method for Optical Images

2.2. Unsupervised Deep Learning Segmentation Method for SAR Images

3. Method

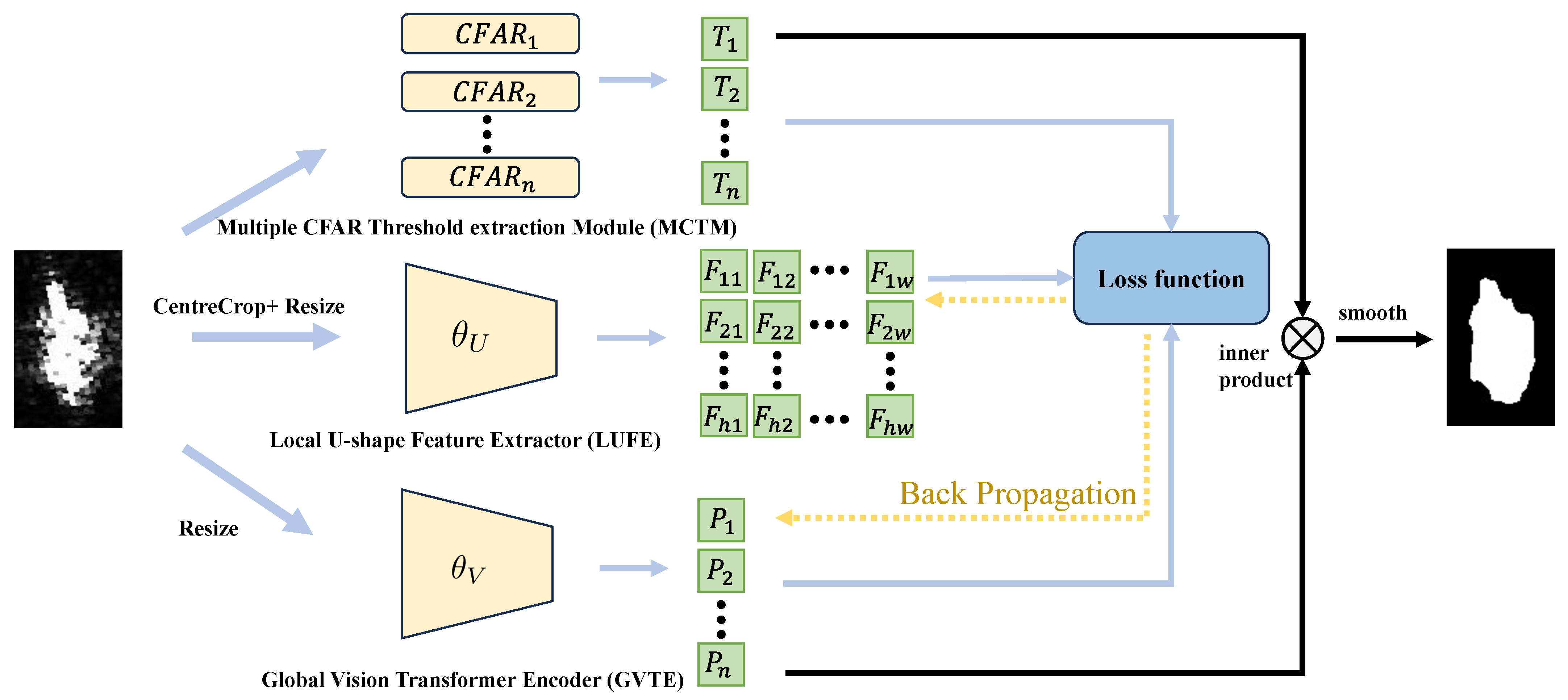

3.1. Overall Architecture

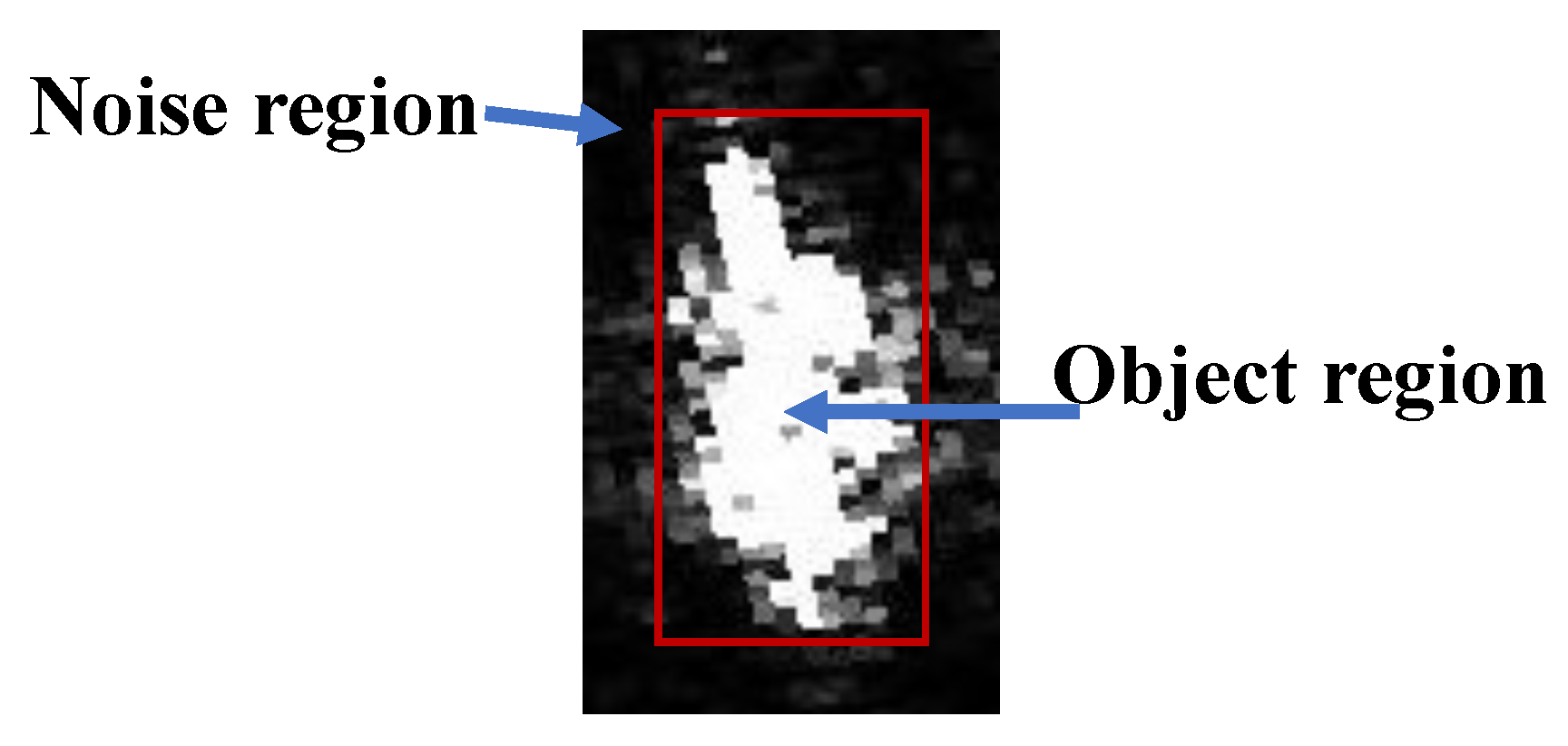

3.2. Multiple CFAR Threshold-Extraction Module

| Algorithm 1 Get Noise Region. |

| Input: |

| Output: Noise region |

| 1: |

| 2: |

| 3: |

| 4: |

| 5: |

| 6: |

| 7: return Noise region |

| Algorithm 2 Get Threshold T by . |

| Input: |

| Output: T |

| 1: |

| 2: for each do |

| 3: |

| 4: |

| 5: while do |

| 6: if then |

| 7: |

| 8: break |

| 9: end if |

| 10: |

| 11: |

| 12: end while |

| 13: end for |

| 14: |

| 15: return T |
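The step contents of Algorithm 2 are not available here, so the following is only a minimal sketch of one common way to obtain a CFAR-style threshold per false alarm rate: take the empirical histogram of the clutter pixels and walk down from the brightest level until the tail fraction would exceed the requested rate. The function name `cfar_thresholds`, the 8-bit amplitude range (`levels=256`), and the toy clutter array are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def cfar_thresholds(clutter, false_alarm_rates, levels=256):
    """Return one amplitude threshold per false alarm rate.

    Hypothetical helper: `clutter` is an integer array of sea-clutter
    pixels with values in [0, levels).
    """
    hist = np.bincount(np.asarray(clutter).ravel(), minlength=levels)
    total = hist.sum()
    thresholds = []
    for pfa in false_alarm_rates:
        tail = 0  # number of clutter pixels strictly brighter than t
        t = levels - 1
        while t > 0:
            # Stop once including level t would push the tail past pfa.
            if tail + hist[t] > pfa * total:
                break
            tail += hist[t]
            t -= 1
        thresholds.append(t)
    return thresholds


# Toy clutter: exactly one pixel at each level 0..99.
clutter = np.arange(100, dtype=np.uint8)
print(cfar_thresholds(clutter, [0.005, 0.105, 0.505]))  # prints [99, 89, 49]
```

In this sketch a larger false alarm rate yields a lower threshold, the same ordering as in the threshold tables reported later in the paper.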

3.3. Local U-Shape Feature Extractor

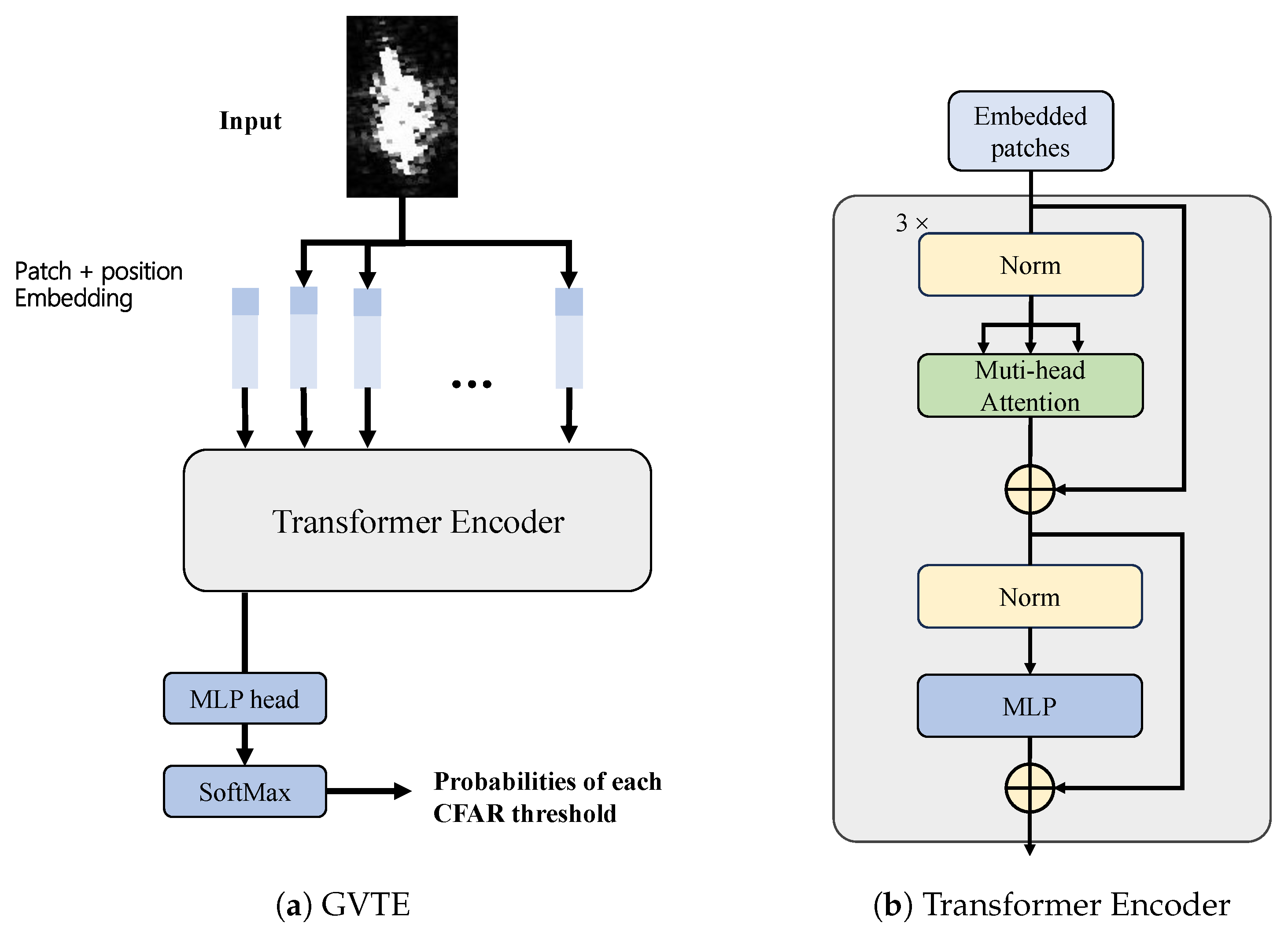

3.4. Global Vision Transformer Encoder

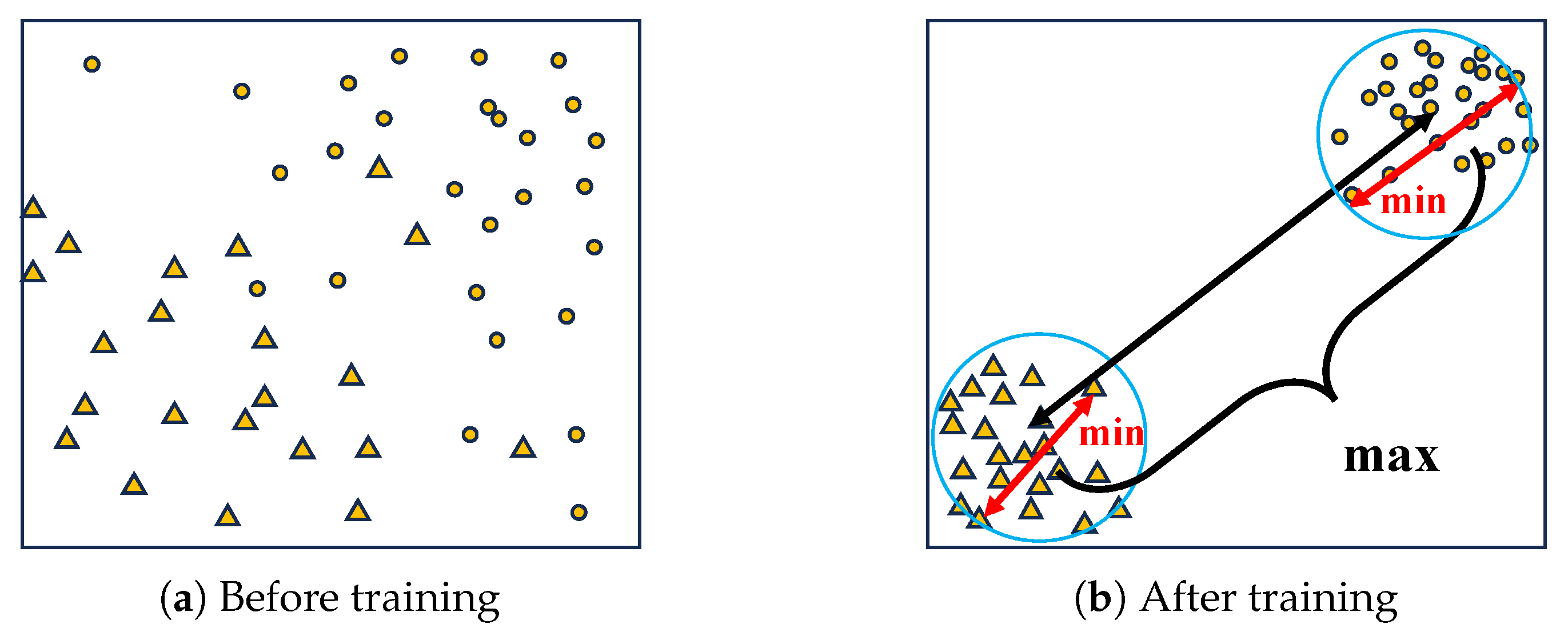

3.5. Pixel-Level Contrast Loss Function

3.6. Label Smoothing Module

| Algorithm 3 Remove large pepper-noise regions in the ship area |

| Input: |

| Output: I |

| 1: |

| 2: |

| 3: if then |

| 4: |

| 5: else if |

| 6: |

| 7: else |

| 8: |

| 9: end if |

| 10: |

| 11: return I |

4. Experiment

4.1. Dataset

4.2. Evaluation Metrics
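The five metrics named in the Introduction (PA, Kappa, MIoU, FWIoU, and F1) can all be derived from a confusion matrix. The sketch below uses the standard textbook definitions for a binary ship/background mask. The function name `segmentation_metrics` is hypothetical, and reporting F1 for the ship class (label 1) is an assumption, since the exact definitions used in the paper are not reproduced here. It also assumes every class appears at least once in the prediction or ground truth, otherwise a division by zero occurs.

```python
import numpy as np


def segmentation_metrics(pred, truth, num_classes=2):
    """Compute PA, Kappa, MIoU, FWIoU and foreground F1 from label maps."""
    pred = np.asarray(pred).ravel()
    truth = np.asarray(truth).ravel()
    # Confusion matrix: rows index ground truth, columns index prediction.
    cm = np.bincount(truth * num_classes + pred,
                     minlength=num_classes ** 2)
    cm = cm.reshape(num_classes, num_classes).astype(float)

    total = cm.sum()
    tp = np.diag(cm)           # correctly labelled pixels per class
    n_truth = cm.sum(axis=1)   # ground-truth pixels per class
    n_pred = cm.sum(axis=0)    # predicted pixels per class

    iou = tp / (n_truth + n_pred - tp)
    pa = tp.sum() / total
    # Chance agreement term used by Cohen's kappa.
    pe = (n_truth * n_pred).sum() / total ** 2
    return {
        "PA": pa,
        "Kappa": (pa - pe) / (1.0 - pe),
        "MIoU": iou.mean(),
        "FWIoU": ((n_truth / total) * iou).sum(),
        "F1": 2.0 * tp[1] / (n_truth[1] + n_pred[1]),  # ship class only
    }


truth = [0, 0, 1, 1]
pred = [0, 1, 1, 1]
print(segmentation_metrics(pred, truth))
```

For the toy masks above, one background pixel is mislabelled as ship, which gives PA = 0.75, Kappa = 0.5, and F1 = 0.8.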

4.3. Implementation Details

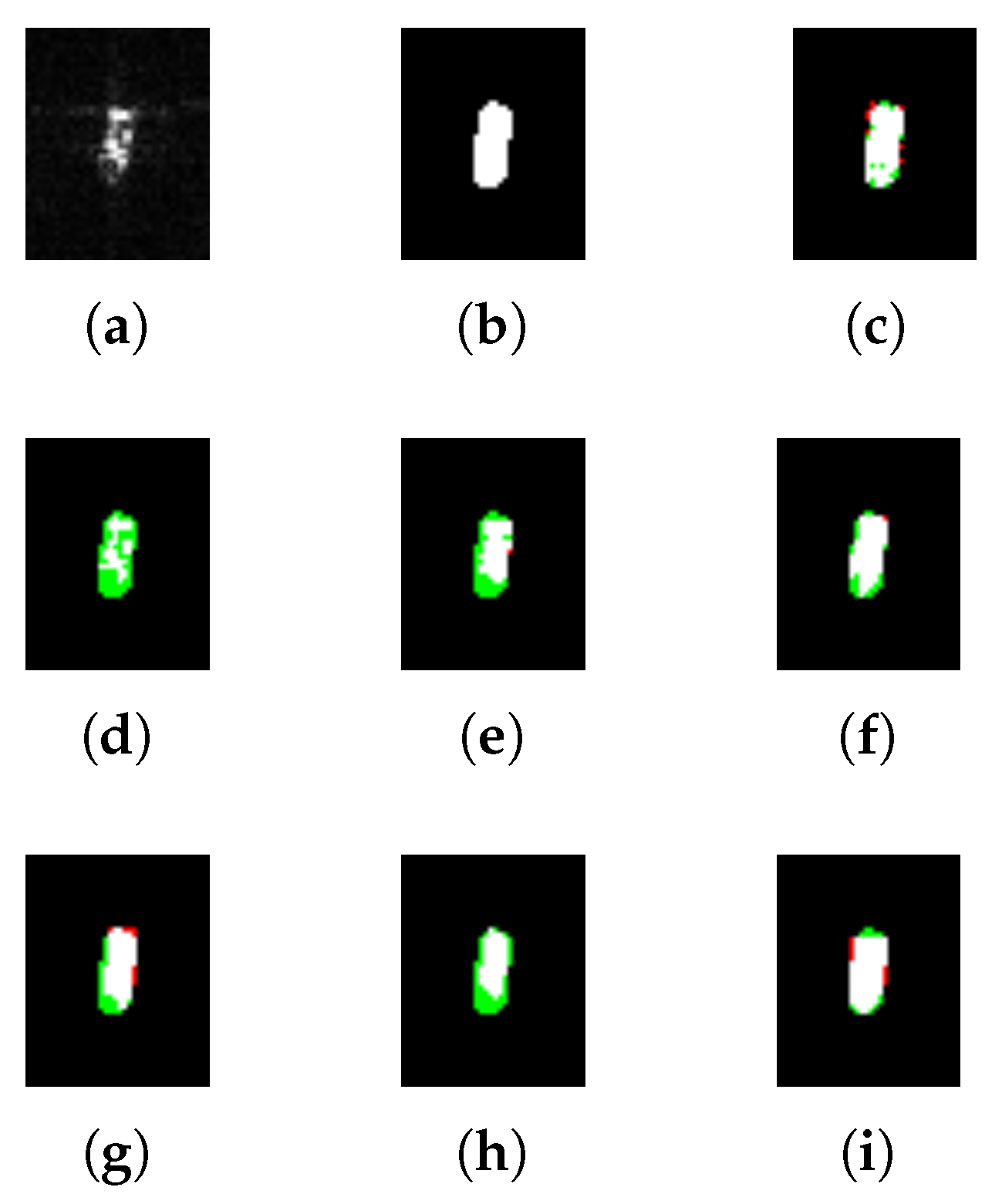

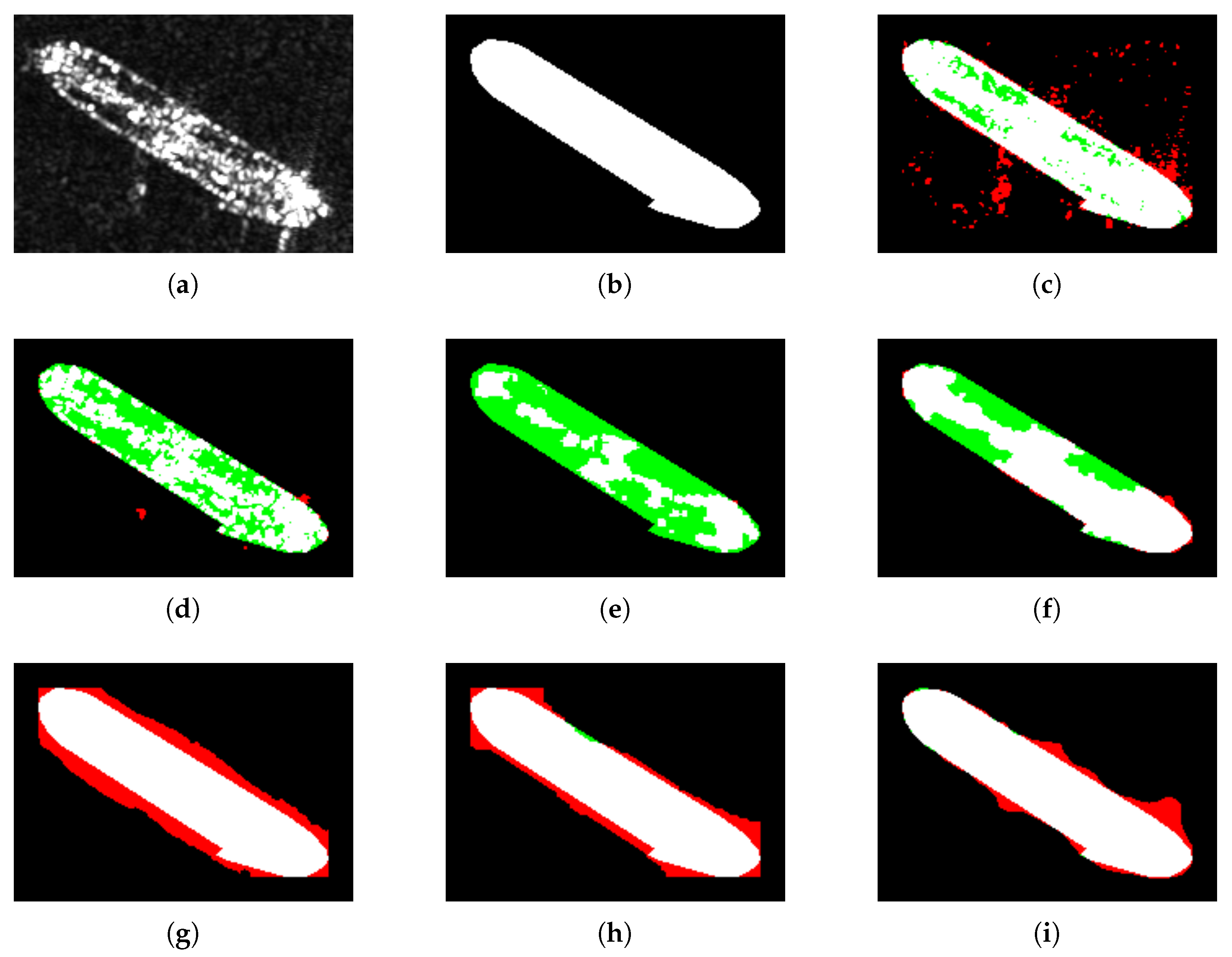

4.4. Comparison with Representative Methods

4.5. Ablation Study

4.5.1. Ablation Study on Removing the Percentage of Pixel Values in RemoveLargeValue()

4.5.2. Ablation Study on the Impact of Different Fa Vectors

4.5.3. Effectiveness of Different Components

- MtAD-Net without MCTM: We removed the MCTM from the proposed MtAD-Net and replaced the threshold vector provided by the MCTM with a constant set of thresholds. For a fair comparison, we retrained this variant on our datasets.

- MtAD-Net without LUFE: We replaced the LUFE module in the proposed MtAD-Net with SegNet [45] with the same number of layers. For a fair comparison, we retrained this variant on our datasets.

- MtAD-Net without GVTE: We replaced the GVTE module in the proposed MtAD-Net with ResNet. For a fair comparison, we retrained this variant on our datasets.

- MtAD-Net without PLC-Loss: For a fair comparison, we retrained MtAD-Net using the InfoNCE loss in place of the PLC-Loss.

- MtAD-Net without Label Smoothing: We removed the label smoothing module from MtAD-Net and retrained this variant on our datasets.

4.6. Analysis of in MtAD-Net

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhao, M.; Zhang, X.; Kaup, A. Multitask learning for SAR ship detection with Gaussian-mask joint segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5214516.
2. Cheng, P.; Toutin, T. RADARSAT-2 data. GeoInformatics 2010, 13, 22.
3. Pitz, W.; Miller, D. The TerraSAR-X satellite. IEEE Trans. Geosci. Remote Sens. 2010, 48, 615–622.
4. Torres, R.; Navas-Traver, I.; Bibby, D.; Lokas, S.; Snoeij, P.; Rommen, B.; Osborne, S.; Ceba-Vega, F.; Potin, P.; Geudtner, D. Sentinel-1 SAR system and mission. In Proceedings of the 2017 IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017; pp. 1582–1585.
5. Zhao, L.; Zhang, Q.; Li, Y.; Qi, Y.; Yuan, X.; Liu, J.; Li, H. China’s Gaofen-3 satellite system and its application and prospect. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11019–11028.
6. Ao, W.; Xu, F.; Li, Y.; Wang, H. Detection and discrimination of ship targets in complex background from spaceborne ALOS-2 SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 536–550.
7. Li, J.; Chen, J.; Cheng, P.; Yu, Z.; Yu, L.; Chi, C. A survey on deep-learning-based real-time SAR ship detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3218–3247.
8. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis. Remote Sens. 2021, 13, 3690.
9. Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.
10. Yousefi, J. Image Binarization Using Otsu Thresholding Algorithm; University of Guelph: Guelph, ON, Canada, 2011; Volume 10.
11. Roy, P.; Dutta, S.; Dey, N.; Dey, G.; Chakraborty, S.; Ray, R. Adaptive thresholding: A comparative study. In Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari, India, 10–11 July 2014; pp. 1182–1186.
12. Raju, P.D.R.; Neelima, G. Image segmentation by using histogram thresholding. Int. J. Comput. Sci. Eng. Technol. 2012, 2, 776–779.
13. Rohling, H. Radar CFAR thresholding in clutter and multiple target situations. IEEE Trans. Aerosp. Electron. Syst. 1983, AES-19, 608–621.
14. Pohle, R.; Toennies, K.D. Segmentation of medical images using adaptive region growing. In Proceedings of the Medical Imaging 2001: Image Processing, SPIE, San Diego, CA, USA, 17–22 February 2001; Volume 4322, pp. 1337–1346.
15. Ning, J.; Zhang, L.; Zhang, D.; Wu, C. Interactive image segmentation by maximal similarity based region merging. Pattern Recognit. 2010, 43, 445–456.
16. Bieniek, A.; Moga, A. An efficient watershed algorithm based on connected components. Pattern Recognit. 2000, 33, 907–916.
17. Wang, C. Research of image segmentation algorithm based on wavelet transform. In Proceedings of the 2015 IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 10–11 October 2015; pp. 156–160.
18. Coleman, G.B.; Andrews, H.C. Image segmentation by clustering. Proc. IEEE 1979, 67, 773–785.
19. Xia, X.; Kulis, B. W-Net: A deep model for fully unsupervised image segmentation. arXiv 2017, arXiv:1711.08506.
20. Ji, X.; Henriques, J.F.; Vedaldi, A. Invariant information clustering for unsupervised image classification and segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9865–9874.
21. Kim, W.; Kanezaki, A.; Tanaka, M. Unsupervised learning of image segmentation based on differentiable feature clustering. IEEE Trans. Image Process. 2020, 29, 8055–8068.
22. Zhou, L.; Wei, W. DIC: Deep image clustering for unsupervised image segmentation. IEEE Access 2020, 8, 34481–34491.
23. Cho, J.H.; Mall, U.; Bala, K.; Hariharan, B. PiCIE: Unsupervised semantic segmentation using invariance and equivariance in clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16794–16804.
24. Abdal, R.; Zhu, P.; Mitra, N.J.; Wonka, P. Labels4Free: Unsupervised segmentation using StyleGAN. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13970–13979.
25. Wang, B.; Wang, S.; Yuan, C.; Wu, Z.; Li, B.; Hu, W.; Xiong, J. Learnable pixel clustering via structure and semantic dual constraints for unsupervised image segmentation. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 1041–1045.
26. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.
27. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27.
28. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.
29. Dosovitskiy, A. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
30. Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention network for semantic segmentation of fine-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.
31. Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8110–8119.
32. Wang, X.; Zhou, J.; Fan, J. IDUDL: Incremental double unsupervised deep learning model for marine aquaculture SAR images segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.
33. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
34. Cheng, X.; Zhu, C.; Yuan, L.; Zhao, S. Cross-modal domain adaptive instance segmentation in SAR images via instance-aware adaptation. In Proceedings of the Chinese Conference on Image and Graphics Technologies, Beijing, China, 17–19 August 2023; Springer Singapore: Singapore, 2023; pp. 413–424.
35. Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.
36. Mao, A.; Mohri, M.; Zhong, Y. Cross-entropy loss functions: Theoretical analysis and applications. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 23803–23828.
37. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.
38. Wu, K.; Otoo, E.; Shoshani, A. Optimizing connected component labeling algorithms. In Proceedings of the Medical Imaging 2005: Image Processing, SPIE, San Diego, CA, USA, 13–17 February 2005; Volume 5747, pp. 1965–1976.
39. Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. A SAR dataset of ship detection for deep learning under complex backgrounds. Remote Sens. 2019, 11, 765.
40. Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A deep learning dataset dedicated to small ship detection from large-scale Sentinel-1 SAR images. Remote Sens. 2020, 12, 2997.
41. Kingma, D.P. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
42. Imambi, S.; Prakash, K.B.; Kanagachidambaresan, G. PyTorch. In Programming with TensorFlow: Solution for Edge Computing Applications; Springer: Cham, Switzerland, 2021; pp. 87–104.
43. Hsu, H.; Lachenbruch, P.A. Paired t Test. Wiley StatsRef: Statistics Reference Online; Wiley: Hoboken, NJ, USA, 2014.
44. Lachenbruch, P.A. McNemar Test. Wiley StatsRef: Statistics Reference Online; Wiley: Hoboken, NJ, USA, 2014.
45. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

| Method Description | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| CFAR | 0.948 | 0.788 | 0.820 | 0.906 | 0.820 |

| OTSU | 0.933 | 0.631 | 0.720 | 0.874 | 0.665 |

| KIM | 0.909 | 0.565 | 0.676 | 0.839 | 0.608 |

| PiCIE | 0.936 | 0.683 | 0.749 | 0.883 | 0.719 |

| CDA-SAR | 0.952 | 0.762 | 0.802 | 0.910 | 0.789 |

| IDUDL | 0.952 | 0.734 | 0.792 | 0.910 | 0.760 |

| Ours | 0.963 | 0.846 | 0.865 | 0.932 | 0.868 |

| Method Description | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| CFAR | 0.941 | 0.737 | 0.784 | 0.901 | 0.771 |

| OTSU | 0.933 | 0.564 | 0.681 | 0.874 | 0.596 |

| KIM | 0.919 | 0.537 | 0.668 | 0.859 | 0.574 |

| PiCIE | 0.945 | 0.706 | 0.773 | 0.903 | 0.735 |

| CDA-SAR | 0.946 | 0.765 | 0.803 | 0.909 | 0.797 |

| IDUDL | 0.959 | 0.757 | 0.809 | 0.925 | 0.779 |

| Ours | 0.963 | 0.830 | 0.853 | 0.935 | 0.851 |

| Removal Setting | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| no removal | 0.948 | 0.742 | 0.792 | 0.902 | 0.769 |

| remove 1% | 0.956 | 0.794 | 0.827 | 0.918 | 0.819 |

| remove 2% | 0.960 | 0.816 | 0.842 | 0.924 | 0.839 |

| remove 5% (selected) | 0.963 | 0.846 | 0.865 | 0.932 | 0.868 |

| remove 10% | 0.960 | 0.841 | 0.860 | 0.932 | 0.867 |

| remove 20% | 0.952 | 0.827 | 0.849 | 0.918 | 0.857 |

| Removal Setting | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| no removal | 0.953 | 0.718 | 0.786 | 0.913 | 0.740 |

| remove 1% | 0.962 | 0.789 | 0.829 | 0.930 | 0.818 |

| remove 2% | 0.962 | 0.822 | 0.847 | 0.933 | 0.844 |

| remove 5% (selected) | 0.963 | 0.830 | 0.853 | 0.935 | 0.851 |

| remove 10% | 0.956 | 0.814 | 0.840 | 0.926 | 0.839 |

| remove 20% | 0.941 | 0.771 | 0.807 | 0.904 | 0.805 |

| False Alarm Rate Vector Configuration | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| , Length = 3 | 0.961 | 0.822 | 0.848 | 0.926 | 0.844 |

| , Length = 8 | 0.965 | 0.848 | 0.867 | 0.935 | 0.869 |

| | 0.962 | 0.844 | 0.863 | 0.930 | 0.867 |

| , Length = 5 | 0.963 | 0.840 | 0.860 | 0.931 | 0.862 |

| , Length = 5 | 0.960 | 0.834 | 0.856 | 0.927 | 0.858 |

| , Length = 5 | 0.961 | 0.836 | 0.858 | 0.930 | 0.860 |

| , Length = 5 (selected) | 0.963 | 0.846 | 0.865 | 0.932 | 0.868 |

| False Alarm Rate Vector Configuration | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| , Length = 3 | 0.961 | 0.796 | 0.833 | 0.931 | 0.817 |

| , Length = 8 | 0.963 | 0.827 | 0.851 | 0.935 | 0.849 |

| | 0.959 | 0.825 | 0.849 | 0.930 | 0.849 |

| , Length = 5 | 0.961 | 0.828 | 0.851 | 0.933 | 0.851 |

| , Length = 5 | 0.954 | 0.812 | 0.838 | 0.923 | 0.839 |

| , Length = 5 | 0.957 | 0.820 | 0.845 | 0.927 | 0.845 |

| , Length = 5 (selected) | 0.963 | 0.830 | 0.853 | 0.935 | 0.851 |

| Model | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| MtAD-Net without MCTM | 0.950 | 0.779 | 0.824 | 0.909 | 0.802 |

| MtAD-Net without LUFE | 0.957 | 0.823 | 0.850 | 0.922 | 0.846 |

| MtAD-Net without GVTE | 0.957 | 0.810 | 0.842 | 0.921 | 0.831 |

| MtAD-Net without PLC-Loss | 0.958 | 0.784 | 0.826 | 0.923 | 0.808 |

| MtAD-Net without Label Smoothing | 0.954 | 0.806 | 0.833 | 0.916 | 0.835 |

| MtAD-Net | 0.963 | 0.846 | 0.865 | 0.932 | 0.868 |

| Model | PA | Kappa | MIoU | FWIoU | F1 |

|---|---|---|---|---|---|

| MtAD-Net without MCTM | 0.960 | 0.773 | 0.822 | 0.927 | 0.792 |

| MtAD-Net without LUFE | 0.962 | 0.805 | 0.839 | 0.932 | 0.826 |

| MtAD-Net without GVTE | 0.960 | 0.806 | 0.837 | 0.929 | 0.829 |

| MtAD-Net without PLC-Loss | 0.951 | 0.768 | 0.811 | 0.915 | 0.794 |

| MtAD-Net without Label Smoothing | 0.952 | 0.783 | 0.816 | 0.917 | 0.811 |

| MtAD-Net | 0.963 | 0.830 | 0.853 | 0.935 | 0.851 |

| False Alarm Rate | CFAR Threshold | Probability of Each Threshold | Probability-Weighted Threshold |

|---|---|---|---|

| 0.005 | 37 | 0.382 | |

| 0.01 | 36 | 0.192 | |

| 0.1 | 24 | 0.161 | 26.77 |

| 0.25 | 12 | 0.133 | |

| 0.5 | 2 | 0.132 | |

| False Alarm Rate | CFAR Threshold | Probability of Each Threshold | Probability-Weighted Threshold |

|---|---|---|---|

| 0.005 | 20 | 0.636 | |

| 0.01 | 20 | 0.351 | |

| 0.1 | 14 | 0.007 | 19.89 |

| 0.25 | 11 | 0.002 | |

| 0.5 | 7 | 0.004 | |

| False Alarm Rate | CFAR Threshold | Probability of Each Threshold | Probability-Weighted Threshold |

|---|---|---|---|

| 0.005 | 80 | 0.002 | |

| 0.01 | 76 | 0.002 | |

| 0.1 | 48 | 0.507 | 41.25 |

| 0.25 | 34 | 0.487 | |

| 0.5 | 23 | 0.002 | |

| False Alarm Rate | CFAR Threshold | Probability of Each Threshold | Probability-Weighted Threshold |

|---|---|---|---|

| 0.005 | 43 | 0.069 | |

| 0.01 | 42 | 0.083 | |

| 0.1 | 32 | 0.707 | 32.45 |

| 0.25 | 25 | 0.119 | |

| 0.5 | 18 | 0.022 | |
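In each of the four tables above, the single value in the last column coincides, to two decimals, with the sum of the five CFAR thresholds weighted by their probabilities. The short check below reproduces that arithmetic from the tabulated numbers. The function name `fused_threshold` is hypothetical, and reading the last column as a probability-weighted sum is an inference from the numbers rather than a formula quoted from the paper.

```python
def fused_threshold(thresholds, probabilities):
    """Probability-weighted sum of the per-rate CFAR thresholds."""
    return sum(t * p for t, p in zip(thresholds, probabilities))


# (CFAR thresholds, probabilities, tabulated last-column value), one entry
# per table, in the order the tables appear.
tables = [
    ([37, 36, 24, 12, 2], [0.382, 0.192, 0.161, 0.133, 0.132], 26.77),
    ([20, 20, 14, 11, 7], [0.636, 0.351, 0.007, 0.002, 0.004], 19.89),
    ([80, 76, 48, 34, 23], [0.002, 0.002, 0.507, 0.487, 0.002], 41.25),
    ([43, 42, 32, 25, 18], [0.069, 0.083, 0.707, 0.119, 0.022], 32.45),
]

for thresholds, probabilities, reported in tables:
    fused = fused_threshold(thresholds, probabilities)
    print(f"computed {fused:.3f}, reported {reported:.2f}")
```

The tabulated probabilities in each table sum to 1, so the weighted sum always lies between the smallest and largest candidate threshold.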


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xue, J.; Yin, J.; Yang, J. MtAD-Net: Multi-Threshold Adaptive Decision Net for Unsupervised Synthetic Aperture Radar Ship Instance Segmentation. Remote Sens. 2025, 17, 593. https://doi.org/10.3390/rs17040593
