Modelling LiDAR-Based Vegetation Geometry for Computational Fluid Dynamics Heat Transfer Models

Abstract

1. Introduction

2. Materials and Methods

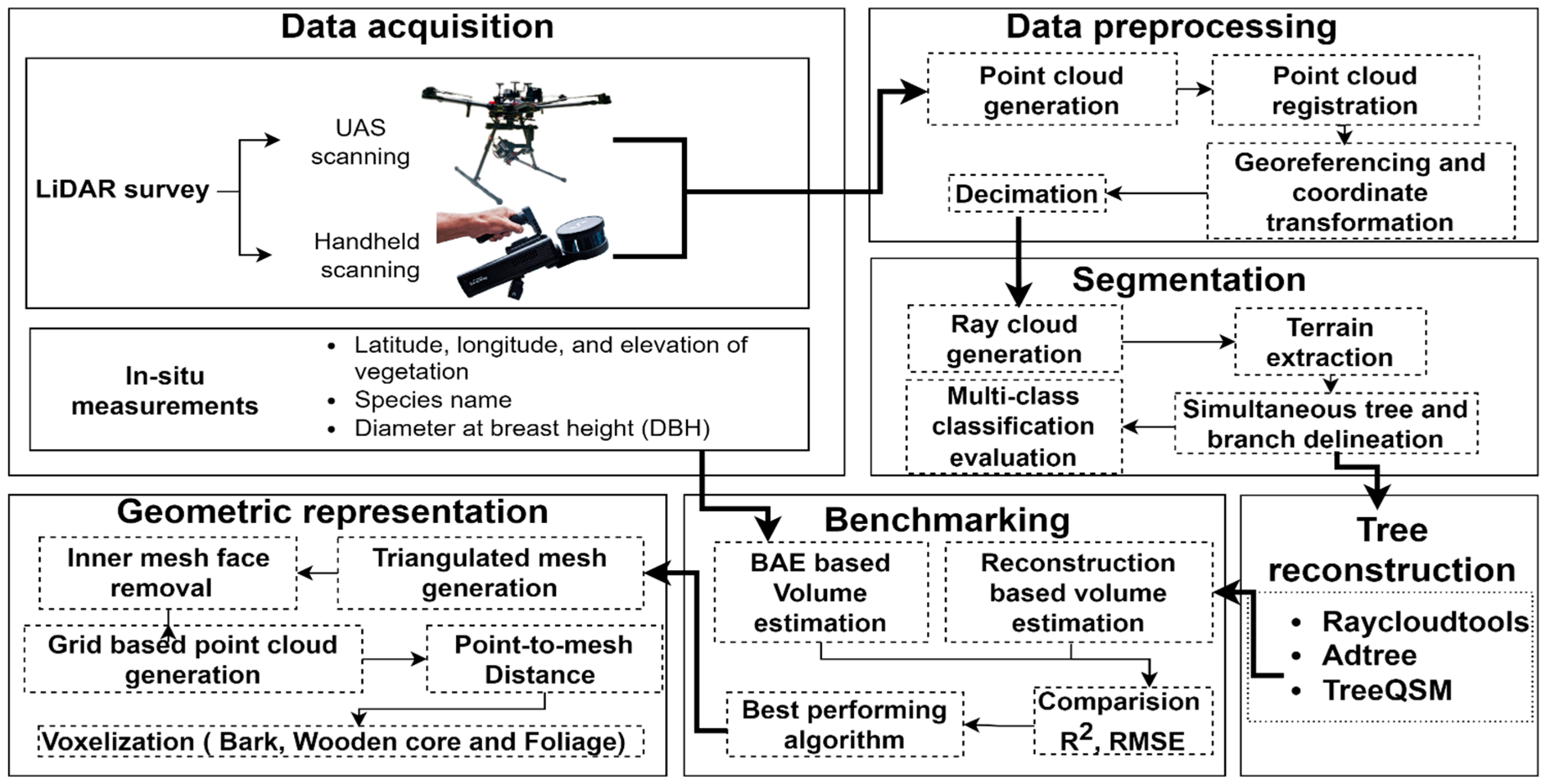

2.1. Process Pipeline

2.2. Study Site

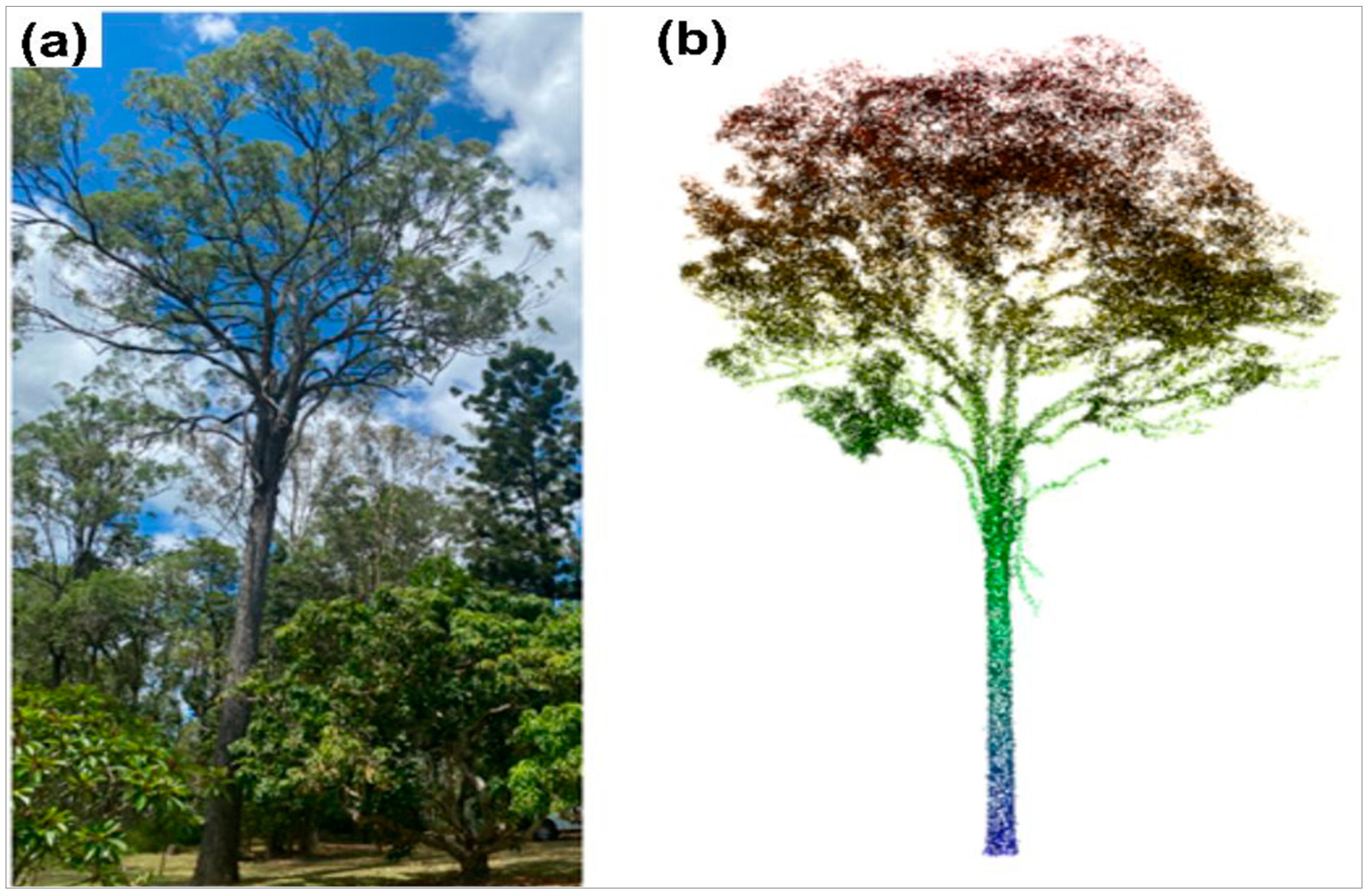

2.3. LiDAR Data Acquisition

2.4. Field Measurements and Ground-Based Observations

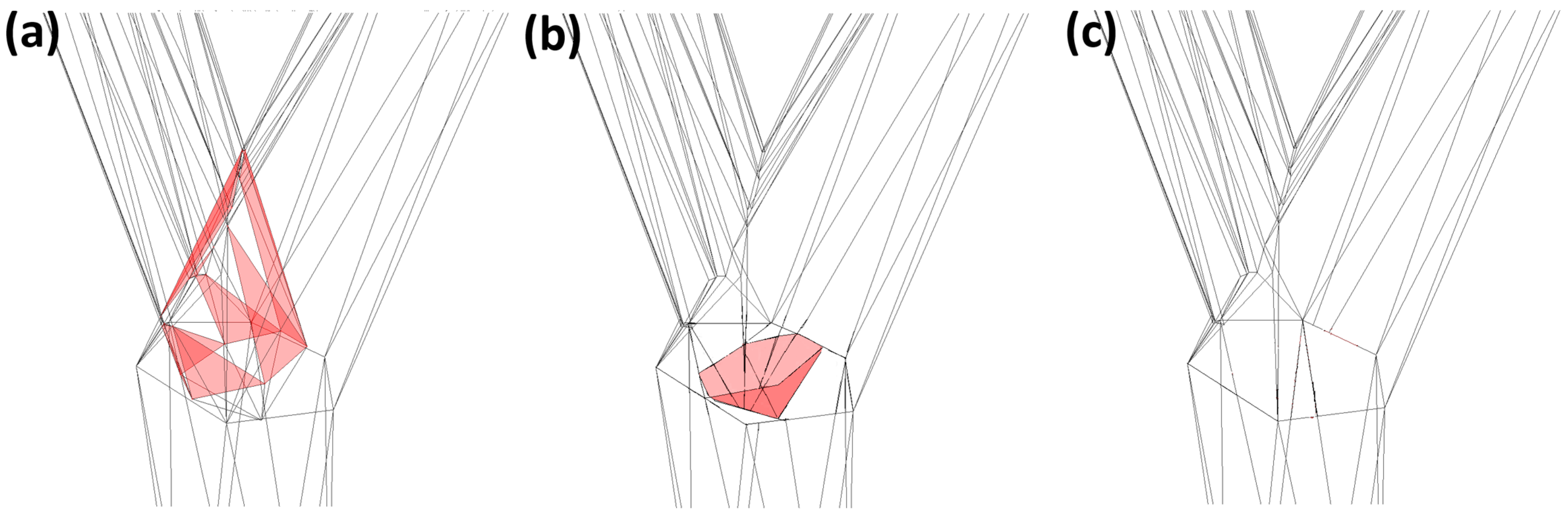

2.5. Tree Segmentation with Raycloudtools

2.6. Evaluation of Raycloudtools Segmentation

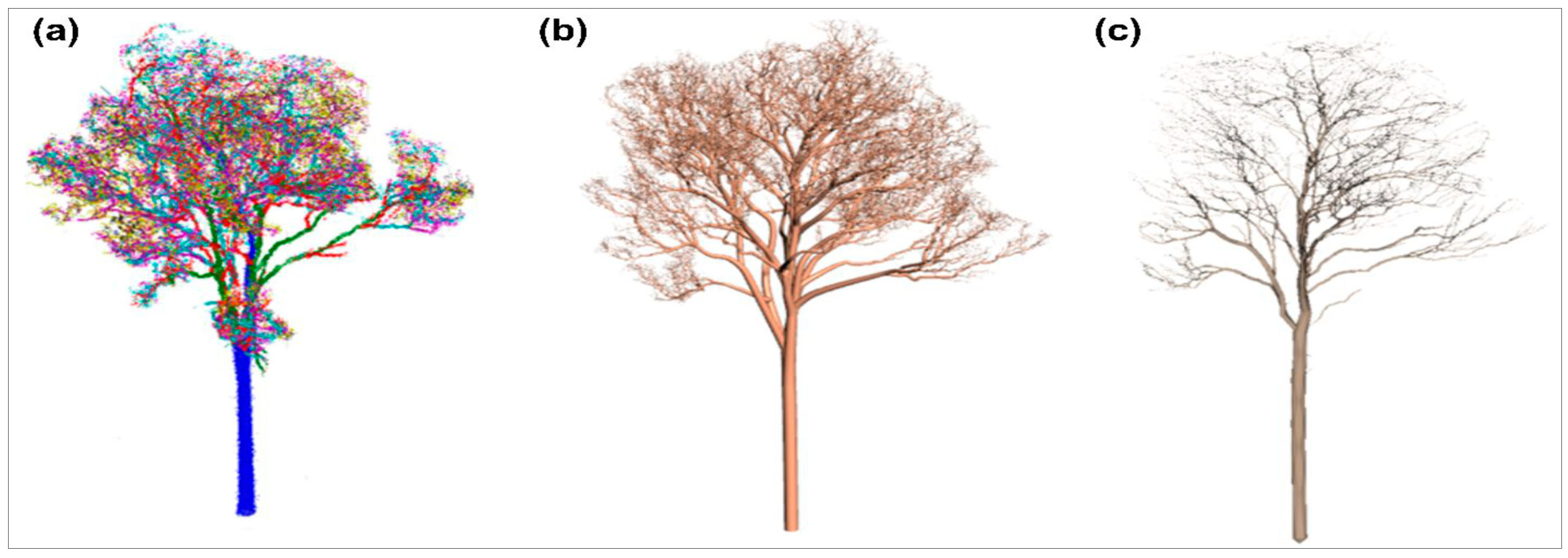

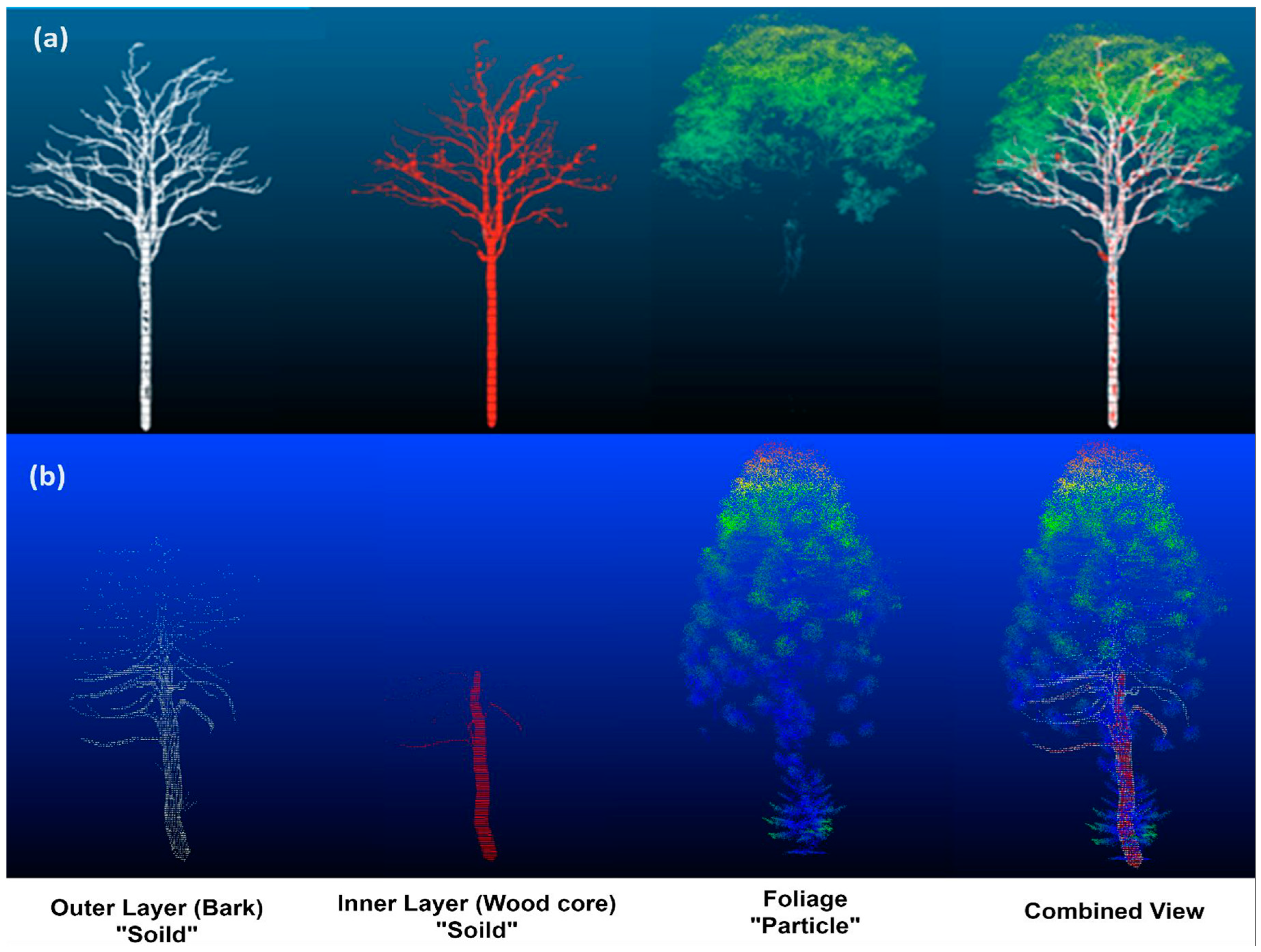

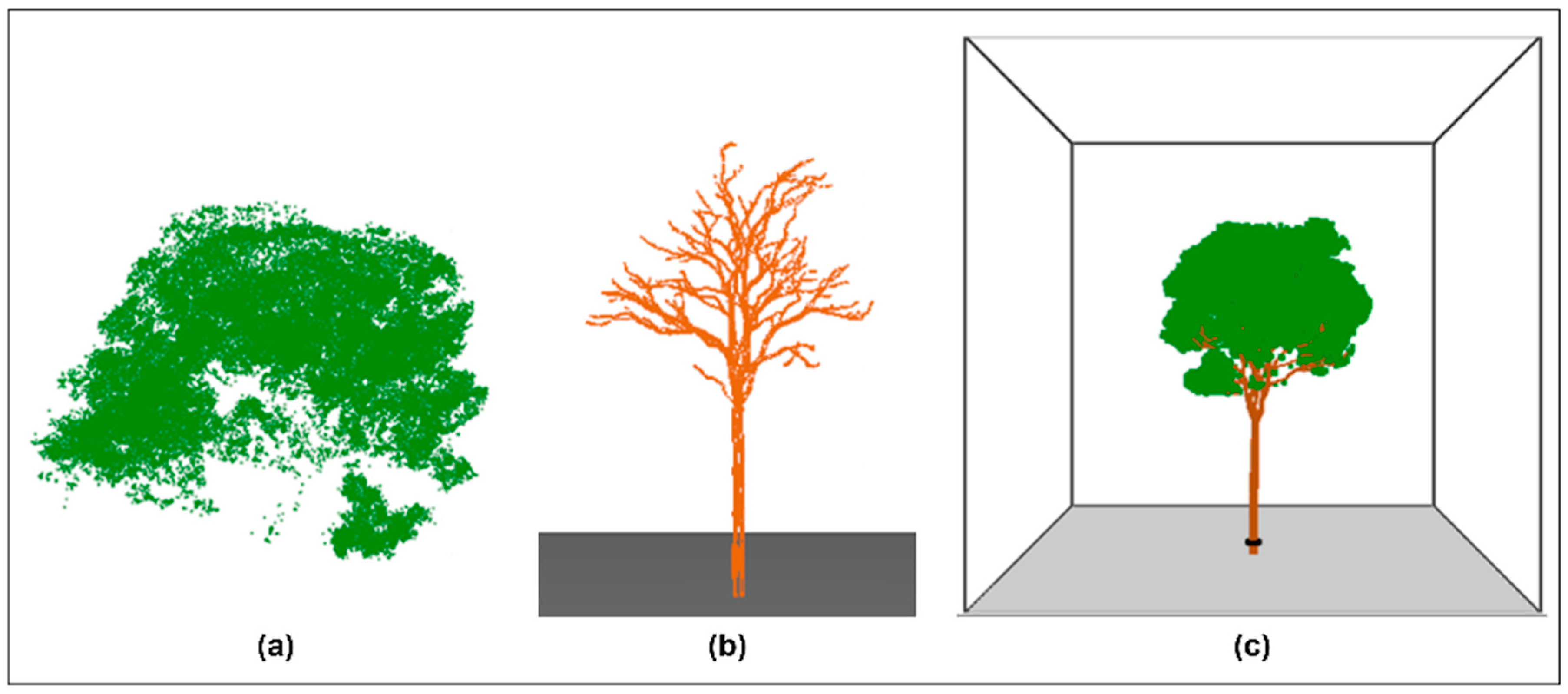

2.7. Tree Reconstruction

2.8. Reconstructed Branch Volume Comparison

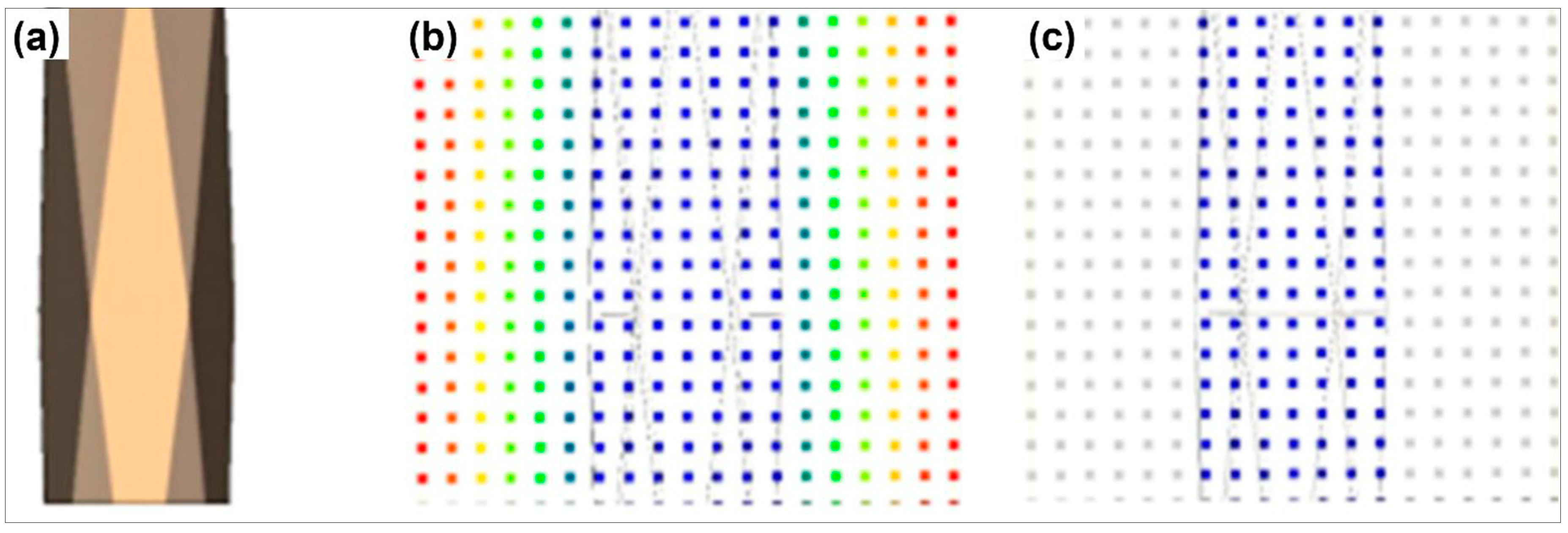

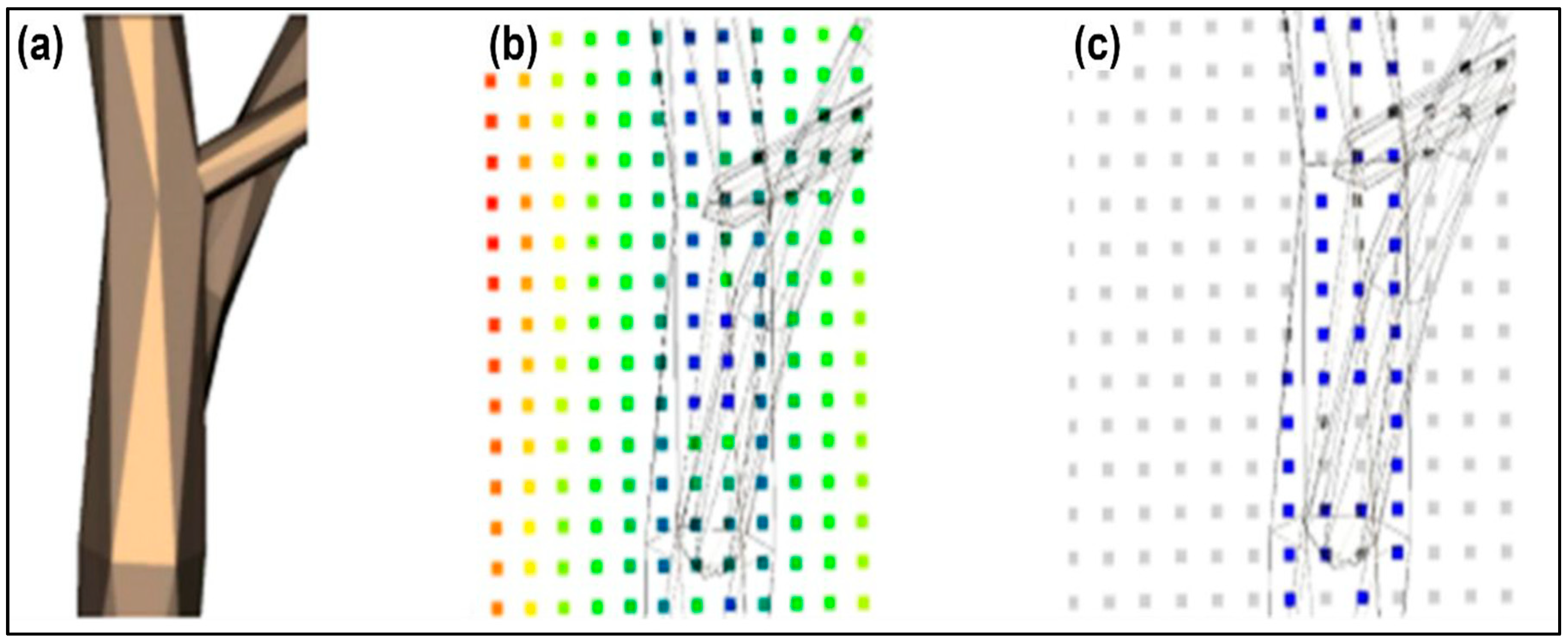

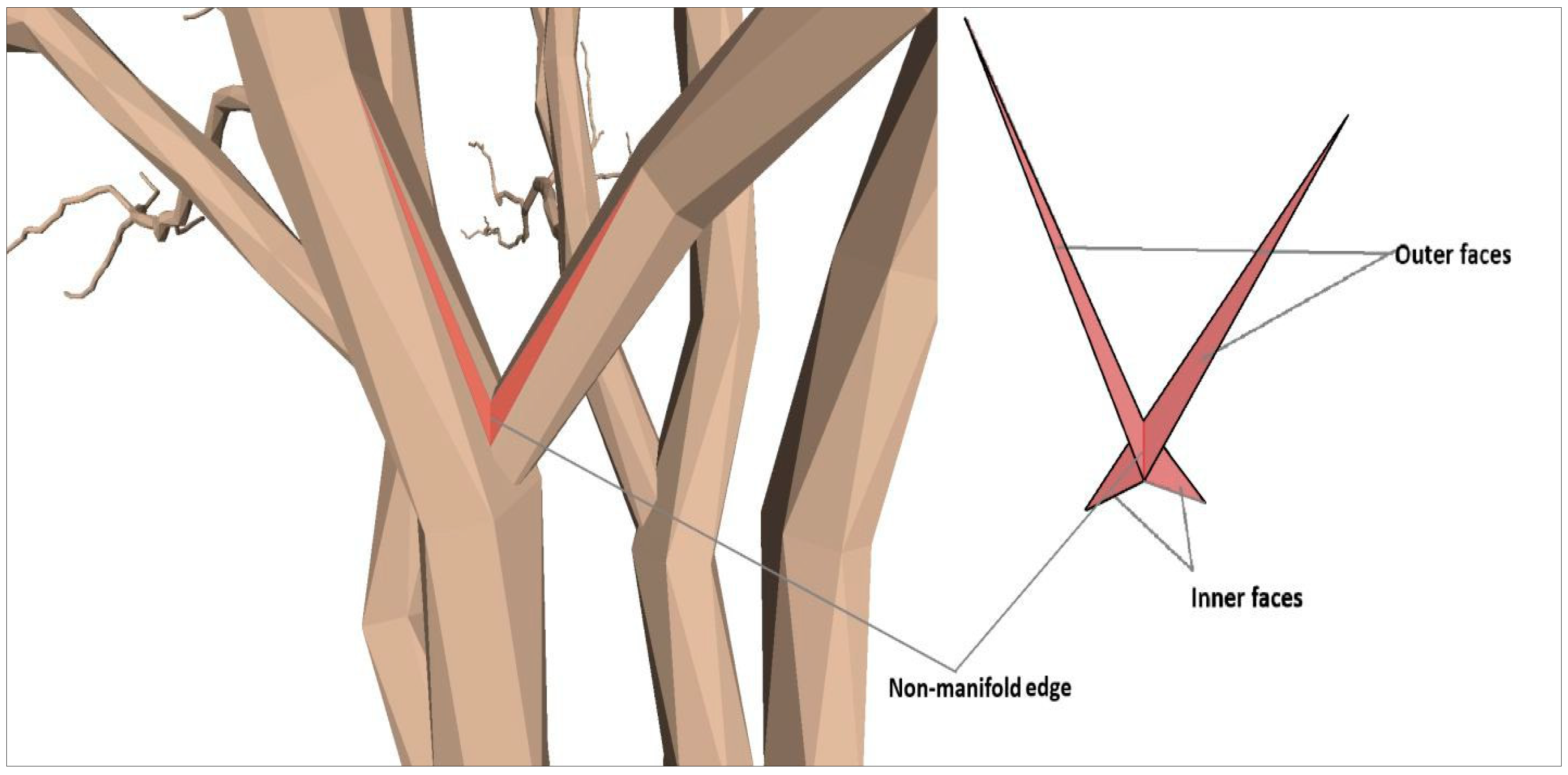

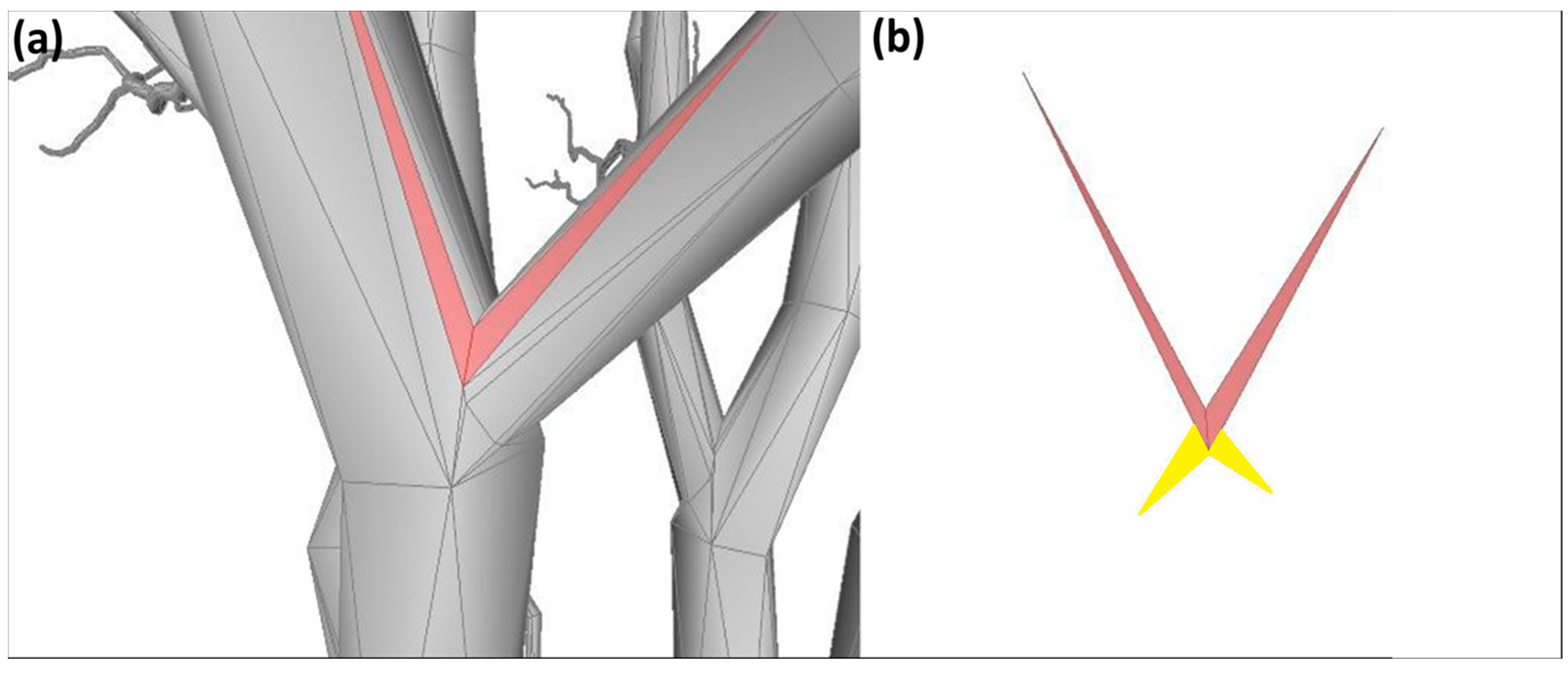

2.9. Geometric Representation of Vegetation

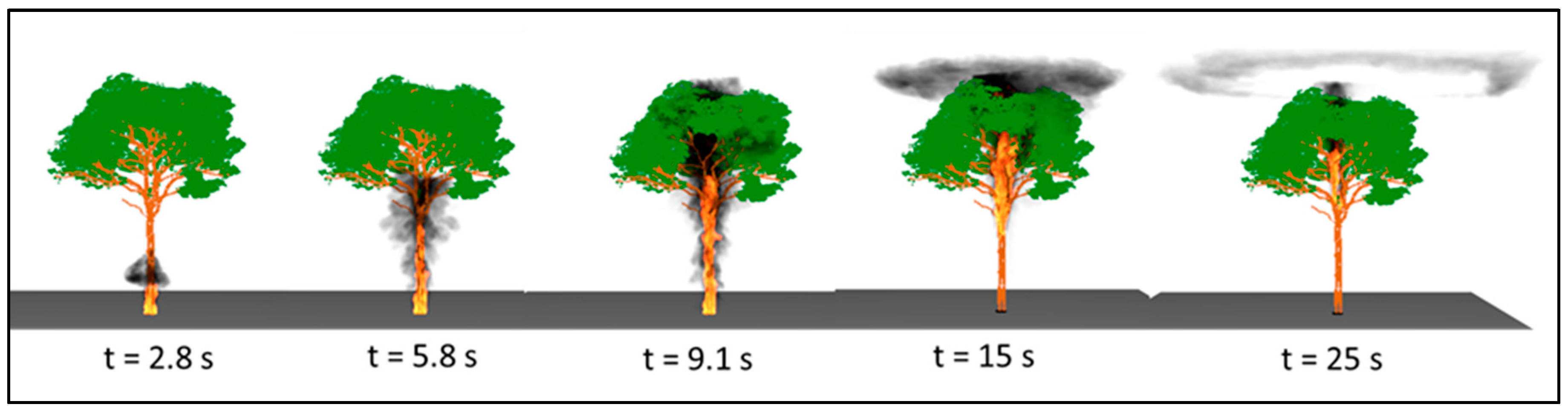

2.10. Proof of Concept

3. Results

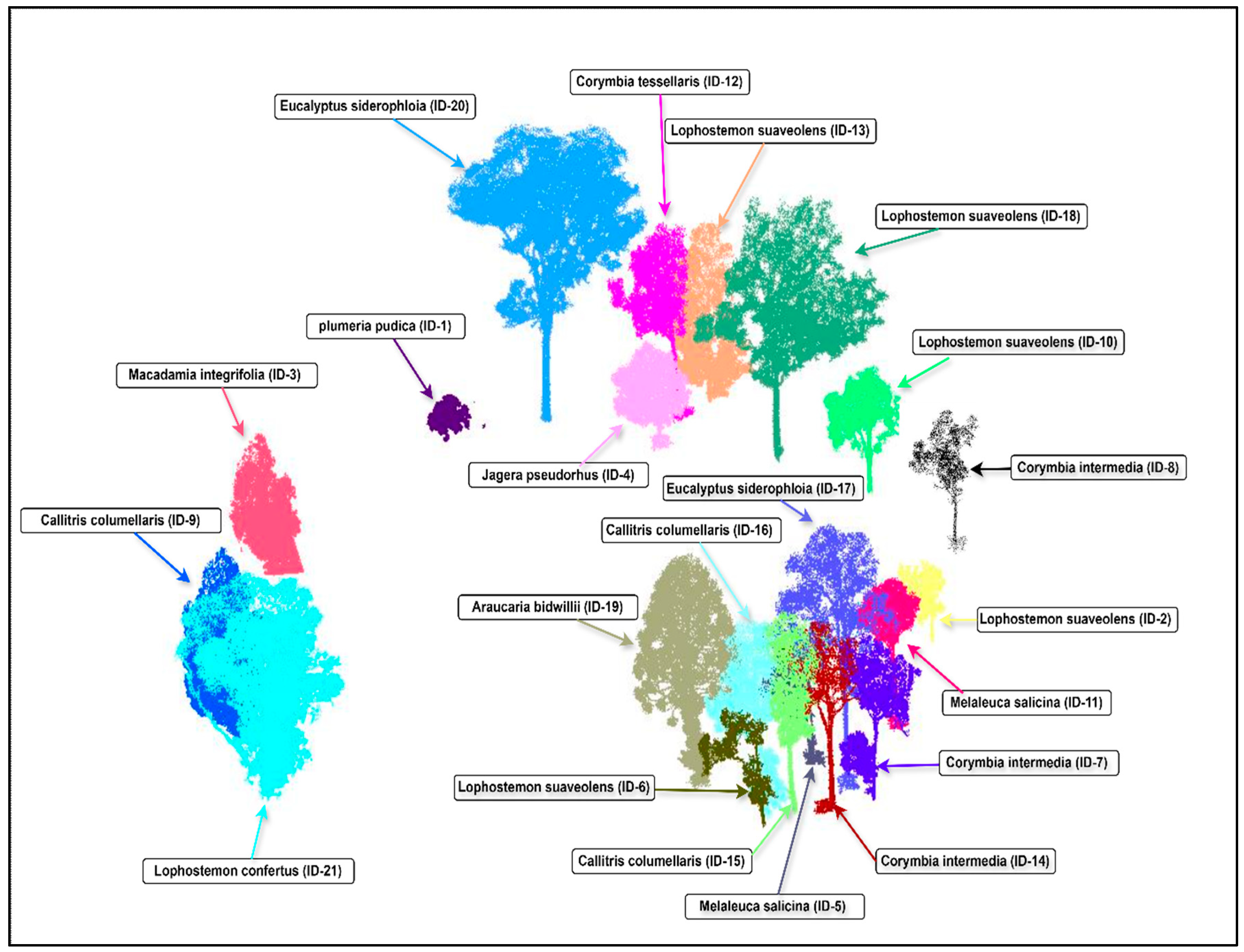

3.1. Tree Segmentation

3.2. Comparison of Field Measurements and Reconstructed Tree DBH

3.3. Reconstructed Branch Volume Comparison

3.4. Geometric Representation of Vegetation

3.5. Classification of Fuel Components

3.6. Fire Simulation of Eucalyptus siderophloia

4. Discussion

5. Current Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Name | DBH (cm) | Height (m) |
|---|---|---|---|
| 1 | Plumeria pudica | 6.4 | 3.3 |
| 2 | Lophostemon suaveolens | 13.0 | 8.0 |
| 3 | Macadamia integrifolia | 14.6 | 12.6 |
| 4 | Jagera pseudorhus | 18.0 | 9.6 |
| 5 | Melaleuca salicina | 20.8 | 11.0 |
| 6 | Lophostemon suaveolens | 20.0 | 12.6 |
| 7 | Corymbia intermedia | 21.0 | 16.5 |
| 8 | Corymbia intermedia | 22.6 | 14.6 |
| 9 | Callitris columellaris | 20.0 | 18.5 |
| 10 | Lophostemon suaveolens | 48.0 | 25.8 |
| 11 | Melaleuca salicina | 25.2 | 13.8 |
| 12 | Corymbia tessellaris | 22.1 | 19.6 |
| 13 | Lophostemon suaveolens | 26.9 | 18.3 |
| 14 | Corymbia intermedia | 34.6 | 20.3 |
| 15 | Callitris columellaris | 35.0 | 21.0 |
| 16 | Callitris columellaris | 37.6 | 20.1 |
| 17 | Eucalyptus siderophloia | 34.8 | 25.7 |
| 18 | Corymbia tessellaris | 48.0 | 25.9 |
| 19 | Araucaria bidwillii | 55.4 | 22.4 |
| 20 | Eucalyptus siderophloia | 58.0 | 29.2 |
| 21 | Lophostemon confertus | 84.0 | 21.6 |
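The field DBH values above are later compared with DBH taken from the reconstructed trees. One common way to estimate DBH from a stem point cloud is to cut a thin horizontal slice at breast height and fit a circle to it. The sketch below assumes breast height is 1.3 m above ground, a slice half-width of 5 cm, and an algebraic (Kåsa) least-squares circle fit; these choices and all function names are hypothetical and are not claimed to be the procedure used by AdTree, TreeQSM or Raycloudtools.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def breast_height_slice(points: Sequence[Point3], height: float = 1.3,
                        half_width: float = 0.05) -> List[Tuple[float, float]]:
    """Keep (x, y) of points whose z (assumed: metres above ground) is near breast height."""
    return [(x, y) for x, y, z in points if abs(z - height) <= half_width]


def _det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the 3x3 system a @ u = b with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("degenerate slice: too few or collinear points")
    out = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        out.append(_det3(m) / d)
    return out


def kasa_circle_fit(xy: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Least-squares fit of x^2 + y^2 + D*x + E*y + F = 0; returns (cx, cy, radius)."""
    n = float(len(xy))
    sx = sum(x for x, _ in xy)
    sy = sum(y for _, y in xy)
    sxx = sum(x * x for x, _ in xy)
    syy = sum(y * y for _, y in xy)
    sxy = sum(x * y for x, y in xy)
    z = [x * x + y * y for x, y in xy]
    sxz = sum(x * zi for (x, _), zi in zip(xy, z))
    syz = sum(y * zi for (_, y), zi in zip(xy, z))
    # Normal equations A^T A u = -A^T z, where each row of A is [x, y, 1].
    d_, e_, f_ = _solve3([[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]],
                         [-sxz, -syz, -sum(z)])
    cx, cy = -d_ / 2.0, -e_ / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f_)


def dbh_cm(points: Sequence[Point3]) -> float:
    """DBH in centimetres from stem points given in metres."""
    _, _, r = kasa_circle_fit(breast_height_slice(points))
    return 2.0 * r * 100.0
```

The algebraic fit is closed-form and fast, but it is sensitive to partial arcs and bark noise; a geometric (iterative) circle fit or a cylinder fit is often preferred when only one side of the stem is scanned.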

| Metrics | Equation | Remarks |
|---|---|---|
| Accuracy | | Accuracy of a classification increases with the number of correctly segmented points that belong to a particular class |
| Precision | | Precision decreases with the number of incorrectly segmented points that are included in a particular class |
| Recall | | Recall decreases with the number of points that should be assigned to a particular class but are not |
| F1-score | | F1-score is the harmonic mean of precision and recall |
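The equations in the metrics table did not survive extraction. The sketch below assumes the standard point-level definitions for one segmented tree: precision = TP/(TP+FP), recall = TP/(TP+FN), F1 = 2PR/(P+R) and accuracy = (TP+TN)/(TP+TN+FP+FN). The accuracy form in particular is an assumption, and the sketch also assumes predicted segments have already been matched to reference trees so that both use the same labels.

```python
from typing import Dict, Hashable, Sequence


def point_metrics(reference: Sequence[Hashable], predicted: Sequence[Hashable],
                  target: Hashable) -> Dict[str, float]:
    """Point-level accuracy, precision, recall and F1 for one segment label.

    reference[i] and predicted[i] are the true and assigned labels of point i.
    """
    if len(reference) != len(predicted):
        raise ValueError("label sequences must have equal length")
    tp = fp = fn = tn = 0
    for ref, pred in zip(reference, predicted):
        if pred == target and ref == target:
            tp += 1  # correctly segmented point of the target tree
        elif pred == target:
            fp += 1  # wrongly included in the target tree
        elif ref == target:
            fn += 1  # belongs to the target tree but was missed
        else:
            tn += 1  # correctly left out of the target tree
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / total if total else 0.0,
            "precision": precision, "recall": recall, "f1": f1}
```

Because F1 is a harmonic mean, a segment that grabs most of a neighbouring crown (high recall, low precision) is penalised more than an arithmetic mean would suggest.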

| Algorithm | Skeleton Generation | Trunk Fitting | Branch Fitting |
|---|---|---|---|
| AdTree | Minimum spanning tree (MST) using Dijkstra’s shortest path algorithm | Cylinder fitting | Allometric models |
| TreeQSM | Region growing method with segmented point cloud (cover sets) | Cylinder fitting | |
| Raycloudtools | Disjoint acyclic graph using Dijkstra’s shortest path algorithm from root nodes | Cylinder fitting | Allometric models |
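AdTree and Raycloudtools both derive their skeletons from Dijkstra shortest paths over a graph of nearby points. As a minimal sketch of that idea only (not either tool's implementation), the code below builds a radius-based neighbour graph with Euclidean edge weights, roots it at the lowest point, and returns each point's parent in the shortest-path tree. The single root, the radius value and the function names are assumptions; real tools add ground-root detection, graph pruning and segment grouping on top.

```python
import heapq
import math
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def neighbour_graph(points: Sequence[Point3], radius: float) -> List[List[Tuple[int, float]]]:
    """Connect every pair of points closer than radius (O(n^2), adequate for a sketch)."""
    adj: List[List[Tuple[int, float]]] = [[] for _ in points]
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            d = math.dist(points[i], points[j])
            if d <= radius:
                adj[i].append((j, d))
                adj[j].append((i, d))
    return adj


def shortest_path_tree(points: Sequence[Point3],
                       radius: float) -> Tuple[List[Optional[int]], List[float]]:
    """Dijkstra from the lowest point; returns each point's parent and path length to the root."""
    root = min(range(len(points)), key=lambda i: points[i][2])
    adj = neighbour_graph(points, radius)
    dist = [math.inf] * len(points)
    parent: List[Optional[int]] = [None] * len(points)
    dist[root] = 0.0
    heap = [(0.0, root)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry, a shorter path was already found
        for v, w in adj[u]:
            if d + w < dist[v]:
                dist[v] = d + w
                parent[v] = u
                heapq.heappush(heap, (dist[v], v))
    return parent, dist
```

Points that cannot be reached within the radius keep an infinite distance and no parent, which is one simple way such graphs separate stray returns or neighbouring trees from the rooted structure.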

| Reconstruction Algorithm | RMSE | R² |
|---|---|---|
| AdTree | 55.50 | 0.73 |
| TreeQSM | 17.58 | 0.89 |
| Raycloudtools | 13.54 | 0.95 |
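RMSE and R² of the kind reported above can be computed from paired field and reconstructed values as follows. This is a sketch that assumes R² is the coefficient of determination 1 − SS_res/SS_tot with the field measurements as reference; the section does not state whether the reported R² instead comes from a fitted regression line or a squared Pearson correlation, and those can give different numbers.

```python
import math
from typing import Sequence


def rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error between paired observations and predictions."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    return math.sqrt(sum((p - o) ** 2 for o, p in zip(observed, predicted)) / len(observed))


def r_squared(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination 1 - SS_res / SS_tot, with observed values as reference."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot
```

Under this definition R² can fall below zero when predictions are worse than simply using the mean, whereas a squared correlation cannot, so it is worth stating which form a comparison uses.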

| Species | Common Name/s | Number in Field Sample | Wood Density (g·cm⁻³) | Stem-Specific Density (g·cm⁻³) |
|---|---|---|---|---|
| Araucaria bidwillii | Bunya pine | 1 | 0.39–0.46 (mean 0.42) | 0.42 |
| Callitris columellaris | Sandy/white cypress pine | 3 | 0.58 | * |
| Corymbia intermedia | Pink bloodwood | 3 | * | 0.8 |
| Corymbia tessellaris | Moreton Bay ash | 2 | 0.90–0.93 (mean 0.92) | 0.91 |
| Eucalyptus siderophloia | Grey ironbark | 2 | 0.95 | 0.95 |
| Jagera pseudorhus | Foambark | 1 | 0.68 | 0.68 |
| Lophostemon confertus | Brush box | 1 | 0.72–0.76 (mean 0.75) | 0.75 |
| Lophostemon suaveolens | Swamp box | 4 | 0.55–0.76 (mean 0.69) | 0.73 |
| Macadamia integrifolia | Macadamia nut | 1 | * | * |
| Melaleuca salicina (Callistemon salignus) | Willow/white-flowering bottle brush | 2 | 0.84 | 0.84 |
| Plumeria pudica | Frangipani | 1 | * | * |
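Densities like those in the table are what allow a reconstructed woody volume to be turned into a fuel mass, via m = ρV, with 1 g·cm⁻³ = 1000 kg·m⁻³. The sketch below is a hedged illustration of that conversion only: it uses the stem-specific density where listed, falls back to the wood density column for Callitris columellaris (an assumption), omits species with no value, and the function name and example volume are hypothetical. If the listed values are basic densities, the result approximates oven-dry mass.

```python
from typing import Dict, Optional

# Densities in g/cm^3 copied from the table above. Stem-specific density is used
# where listed; Callitris columellaris falls back to its wood density (assumption).
# Macadamia integrifolia and Plumeria pudica have no value and are omitted.
DENSITY_G_CM3: Dict[str, float] = {
    "Araucaria bidwillii": 0.42,
    "Callitris columellaris": 0.58,
    "Corymbia intermedia": 0.8,
    "Corymbia tessellaris": 0.91,
    "Eucalyptus siderophloia": 0.95,
    "Jagera pseudorhus": 0.68,
    "Lophostemon confertus": 0.75,
    "Lophostemon suaveolens": 0.73,
    "Melaleuca salicina": 0.84,
}

G_CM3_TO_KG_M3 = 1000.0  # 1 g/cm^3 equals 1000 kg/m^3


def woody_mass_kg(species: str, volume_m3: float) -> Optional[float]:
    """Woody mass m = rho * V in kg for a volume in m^3; None if no density is listed."""
    density = DENSITY_G_CM3.get(species)
    if density is None:
        return None
    return density * G_CM3_TO_KG_M3 * volume_m3
```

For a hypothetical 1.5 m³ of reconstructed Eucalyptus siderophloia wood, this gives about 1425 kg; returning `None` for missing species keeps gaps in the density data visible instead of silently substituting a default.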

| Reconstruction Algorithm | AdTree | TreeQSM | Raycloudtools |
|---|---|---|---|
| R² | 0.525 | 0.908 | 0.972 |
| RMSE (m³) | 500.94 | 20.30 | 0.85 |
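All three reconstruction algorithms represent the trunk with fitted cylinders, and quantitative structure models generally express woody volume as the sum of cylinder volumes, V = Σ π rᵢ² lᵢ. The sketch below shows that summation under the assumption that each cylinder is available as a radius and length in metres with a flag marking trunk segments; the `Cylinder` record and its `is_trunk` flag are hypothetical and do not correspond to any tool's output format.

```python
import math
from typing import Iterable, NamedTuple


class Cylinder(NamedTuple):
    radius_m: float
    length_m: float
    is_trunk: bool = False  # hypothetical flag separating stem from branch cylinders


def cylinder_volume_m3(c: Cylinder) -> float:
    """Volume of one fitted cylinder, pi * r^2 * l."""
    return math.pi * c.radius_m ** 2 * c.length_m


def branch_volume_m3(cylinders: Iterable[Cylinder], include_trunk: bool = False) -> float:
    """Sum cylinder volumes, excluding trunk segments unless include_trunk is True."""
    return sum(cylinder_volume_m3(c) for c in cylinders
               if include_trunk or not c.is_trunk)
```

Because volume scales with r², small radius overestimates on thin, noisily scanned branches can inflate totals considerably, which is one reason reconstructed branch volumes can diverge widely between algorithms.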

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Keerthinathan, P.; Winsen, M.; Krishnakumar, T.; Ariyanayagam, A.; Hamilton, G.; Gonzalez, F. Modelling LiDAR-Based Vegetation Geometry for Computational Fluid Dynamics Heat Transfer Models. Remote Sens. 2025, 17, 552. https://doi.org/10.3390/rs17030552


Chicago/Turabian Style: Keerthinathan, Pirunthan, Megan Winsen, Thaniroshan Krishnakumar, Anthony Ariyanayagam, Grant Hamilton, and Felipe Gonzalez. 2025. "Modelling LiDAR-Based Vegetation Geometry for Computational Fluid Dynamics Heat Transfer Models" Remote Sensing 17, no. 3: 552. https://doi.org/10.3390/rs17030552
