Highlights

What are the main findings?

- This review systematically analyzed methods for fine-grained remote sensing image interpretation at different levels (pixel-level, object-level, and scene-level) and discussed the challenges these methods face, such as the lack of a unified definition of fine granularity, heavy reliance on computer vision methods despite domain gaps, limited cross-domain generalization, and open-world recognition.

- Current fine-grained interpretation datasets cover typical objects and scenarios but suffer from deficiencies such as high intra-class similarity, limited modality diversity, geographic imbalance, and high annotation costs; future datasets are expected to move toward multi-modal integration, global coverage, and temporal dynamics.

What is the implication of the main finding?

- The summarized methodological framework and directions for dataset optimization provide a clear technical reference for subsequent research, helping to address key challenges such as small inter-class differences and poor cross-domain generalization in fine-grained interpretation.

- Optimizing datasets will help remove one of the main bottlenecks and promote the application of fine-grained interpretation technology in environmental monitoring, agriculture, urban planning, and other fields.

Abstract

This article presents a systematic review of the fine-grained interpretation of remote sensing images, examining its background, current status, datasets, methodology, and future trends, with the aim of providing a comprehensive reference framework for research in this field. For fine-grained interpretation datasets, we introduce representative datasets and analyze their key characteristics, such as the number of categories, sample size, and resolution, as well as their role as research benchmarks. For methodology, we classify the core methods according to the level of interpretation and systematically summarize the deep learning methods, models, and architectures used for fine-grained remote sensing image interpretation at the pixel level (classification and segmentation), the object level (detection), and the scene level (recognition). The review concludes that although deep learning has driven substantial advances in accuracy and applicability, fine-grained interpretation remains inherently challenging because of the difficulty of distinguishing highly similar categories, domain shift across sensors, and high annotation costs. We also outline future directions, emphasizing the need to improve generalization, further support open-world recognition, and adapt to complex real-world scenarios. This review aims to promote the application of fine-grained interpretation technology for remote sensing images across a broader range of fields.

1. Introduction

The rapid advancement of satellite imaging technology and the proliferation of advanced remote sensing platforms have ushered in a new era for Earth observation. Modern sensors capture imagery with higher spatial resolution, more spectral bands, and more frequent revisits, producing rich data that go well beyond coarse land-cover maps. However, interpretation methods for these data commonly remain at a coarse granularity, that is, they assign broad semantic labels such as “urban,” “forest,” or “water” to pixels or regions. As demand grows for more precise, context-sensitive, and operationally useful products, “fine-grained remote sensing interpretation” has emerged as an indispensable research direction.

In the remote sensing community, the term fine-grained interpretation has been used with different connotations across semantic levels of analysis. Unlike the computer vision field, where “fine-grained” typically refers to discriminating sub-categories within an object class (e.g., species of birds or types of vehicles), remote sensing imagery embodies a hierarchical spatial–semantic structure, where fine-grainedness manifests differently at the pixel, object, and scene levels.

Pixel-level fine-grainedness primarily relates to spectral and radiometric resolution. It concerns the discrimination of subtle spectral variations within mixed or adjacent pixels—for instance, distinguishing different wetland types [1] or tree species [2]. Here, fine-grained interpretation is more about spectral granularity and sub-pixel information modeling.

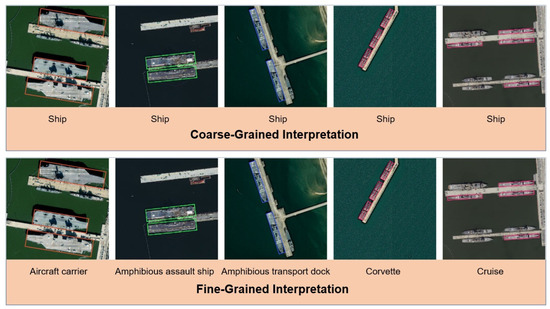

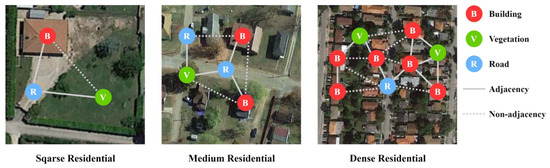

Object-level fine-grainedness parallels the computer vision notion of part-based or subclass recognition. It focuses on identifying specific structures or components within a broader class (see Figure 1), such as distinguishing different types of buildings or ships [3,4]. Here, the “fine-grainedness” lies in structural and morphological variability rather than subtle spectral differences.

Scene-level fine-grainedness extends beyond local patterns to the semantic composition and contextual relationships within complex landscapes. It captures nuanced differences between semantically similar environments—e.g., distinguishing residential neighborhoods from mixed industrial–commercial zones [5], or subtle patterns of urban sprawl [6]. At this level, fine-grained interpretation involves contextual semantics and hierarchical scene understanding.

Yet despite many advances, there is no universally accepted formal definition of fine-grained remote sensing interpretation. Different authors emphasize subclass discrimination, structural detail, or semantic subdivision, which blurs the conceptual boundaries. For clarity, we propose that fine-grained remote sensing interpretation (FRSI) refers to the family of remote sensing image interpretation tasks that aim to achieve “higher semantic resolution and discriminative capability” than conventional coarse-level labeling. In essence, FRSI seeks “multi-dimensional finer granularity” in the spectral, semantic, spatial, or structural dimension, and produces interpretable attributions or reasoning along these dimensions.

In recent years, several surveys and reviews have addressed “fine-grained” analysis. For instance, “Fine-Grained Image Analysis With Deep Learning: A Survey” [7] focuses on fine-grained image analysis (FGIA) in computer vision, emphasizing the identification of subcategories within the same major category (such as bird species or car models). “Fine-Grained Image Recognition Methods and Their Applications in Remote Sensing Images: A Review” [8] carries this topic over to remote sensing, but its scope remains close to object detection as understood in computer vision. Our review does not fully follow the perspectives on fine granularity presented in these existing reviews; instead, we give more consideration to the semantics and contextual meanings of fine-grained interpretation at different levels.

Figure 1.

Examples of fine-grained objects (ships [9]) in remote sensing images. Top row: coarse-grained interpretation. Bottom row: fine-grained interpretation.

The contributions of this survey are threefold: (1) Unlike previous reviews on fine-grained interpretation, we examine fine-grained interpretation from the perspective of the different semantic levels (pixel, object, and scene) of remote sensing image interpretation and summarize the existing methods at each level. (2) We trace the evolution of remote sensing interpretation and systematically summarize the development trends and challenges of fine-grained interpretation in remote sensing. (3) We identify open issues and future directions, such as cross-domain adaptation, annotation efficiency, interpretability, and open-world generalization.

2. The Datasets for Fine-Grained Interpretation

2.1. Current Status of the Dataset

Remote sensing datasets play an essential role in research on fine-grained remote sensing image interpretation. Current fine-grained interpretation datasets can be roughly divided into three categories: pixel-level, object-level, and scene-level. In selecting datasets, we focus on task types directly related to fine-grained interpretation and try to cover all three levels.

Pixel-level datasets are mostly used for land-cover classification and change detection, for example TREE [10], Belgium Data [11], and FUSU [12]. Their data are mostly acquired by airborne or ground-based systems (such as the LiCHy hyperspectral system), and they emphasize subtle spectral distinctions, such as the spectral differences among land-cover types. Object-level datasets are mainly constructed for individual targets such as ships, aircraft, and buildings, for example HRSC2016 [13], FGSCR-42 [14], ShipRSImageNet [15], and MFBFS [16]. They usually consist of high-resolution (0.1–6 m) images with a large number of categories and emphasize subtle differences between similar categories (such as ship or aircraft models). Their data sources mainly include Google Earth, WorldView, and the GaoFen satellite series. Scene-level datasets have the widest coverage and are used for remote sensing scene classification and retrieval, for example AID [17], NWPU-RESISC45 [18], PatternNet [19], MLRSNet [20], Million-AID [21], and MEET [22]. They span a wide resolution range (0.06–153 m) and contain many samples (from tens of thousands to millions of images), drawn mainly from Google Earth, Bing Maps, Sentinel, and OpenStreetMap. Table 1 summarizes common datasets for fine-grained remote sensing image interpretation.

Table 1.

Summary of datasets for fine-grained remote sensing image interpretation. “M” denotes multimodal features; “T” denotes temporal features.

Overall, the existing datasets have basically covered typical fine-grained objects and scenarios such as ships, aircraft, buildings, vegetation, and land use/cover, providing important support for related research.

2.2. Existing Deficiencies of Datasets

Despite substantial progress in fine-grained remote sensing interpretation, current datasets face fundamental limitations that, viewed through the theory of machine learning and deep learning, hinder model generalization and real-world applicability.

High Intra-Class Similarity. (1) Imbalanced Feature Space and Blurred Decision Boundaries: From the perspective of statistical learning theory, fine-grained classification suffers from a skewed balance between intra-class and inter-class variance. Categories differ only in subtle local, spectral, or textural details, so their inter-class distances in the feature space are small. Meanwhile, intra-class samples are scattered by changes in imaging angle, atmospheric interference, and target state (e.g., vegetation phenology), so intra-class variance often exceeds inter-class variance. This violates the assumption of compact classes that are well separated from one another, and noise masks the discriminative features. For CNNs and Transformers, the low signal-to-noise ratio of fine-grained features yields weak and noisy gradients, so training fails to encode stable discriminative representations. (2) Bias–Variance Imbalance and Generalization Failures: High intra-class similarity disrupts the bias–variance tradeoff: increasing model complexity to capture subtle differences reduces bias but amplifies sensitivity to intra-class variations, which leads to overfitting. In terms of generalization error decomposition, this overfitting stems from learning “non-essential variations”: datasets lack systematic coverage of multi-dimensional factors (e.g., imaging geometry and meteorological conditions), leaving representations vulnerable to environmental perturbations and out-of-distribution failures. In addition, metric learning methods (e.g., triplet loss) are hard to optimize, because the minimal gaps between sample pairs prevent the formation of a discriminative metric space.
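The variance imbalance in (1) can be made concrete with the ratio of between-class to within-class scatter, a trace-based variant of the Fisher criterion. The sketch below uses synthetic Gaussian features (an assumption for illustration, not data from any dataset in Table 1) to contrast a fine-grained case, where the class means nearly coincide, with a coarse case where they are far apart.

```python
import numpy as np

def scatter_ratio(features: np.ndarray, labels: np.ndarray) -> tuple:
    """Return (within-class scatter, between-class scatter, between/within ratio).

    Scatter is measured as the trace of each scatter matrix: the summed squared
    distance of samples to their class mean (within), and of class means to the
    global mean weighted by class size (between).
    """
    global_mean = features.mean(axis=0)
    within, between = 0.0, 0.0
    for c in np.unique(labels):
        class_feats = features[labels == c]
        class_mean = class_feats.mean(axis=0)
        within += float(((class_feats - class_mean) ** 2).sum())
        between += len(class_feats) * float(((class_mean - global_mean) ** 2).sum())
    return within, between, between / within

# Synthetic 8-D features for two classes with unit per-sample spread
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 200)
fine = np.vstack([rng.normal(0.0, 1.0, (200, 8)), rng.normal(0.2, 1.0, (200, 8))])    # close means
coarse = np.vstack([rng.normal(0.0, 1.0, (200, 8)), rng.normal(5.0, 1.0, (200, 8))])  # distant means
print(scatter_ratio(fine, y)[2] < 1.0 < scatter_ratio(coarse, y)[2])  # True
```

A ratio below 1 means the samples spread more within each class than the class centres spread apart, which is the situation the text describes for fine-grained categories.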

Limited Modality Diversity. (1) Information Bottleneck of Single Modalities: From an information-theoretic perspective, single-modality data (e.g., optical imagery) have an inherent ceiling on the information they carry. Optical data capture spectral and spatial features but, owing to band and physical constraints, lack information on 3D geometry, material composition, and dielectric properties, which leads to incomplete representations. This conflicts with deep learning’s need for data that cover the full distribution (“distribution integrity”), creating an information bottleneck that caps performance. Single modalities also lack robustness: optical data are sensitive to cloud cover and illumination changes, while SAR suffers from speckle noise. Multi-modal data can offset such interference because the noise in different modalities is largely complementary; datasets without multiple modalities forgo this advantage. (2) Cross-Modal Heterogeneity and Alignment Deficits: Modality heterogeneity is the core challenge of fusion. Remote sensing modalities (2D optical grids, 3D LiDAR point clouds, SAR coherence features) differ in dimensionality, scale, and physics, and must be aligned to a unified semantic space. Effective alignment depends on large-scale paired samples, but current datasets lack such data, leaving fusion algorithms in a state of “data–theory decoupling.” Advanced methods (e.g., modal embedding) cannot be validated, so researchers fall back on simple concatenation, which achieves only “weak complementarity.”
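The “simple concatenation” baseline mentioned above can be sketched as follows. It assumes optical and SAR rasters that are already co-registered on a common pixel grid, which is exactly the kind of paired data the text notes is scarce; the band counts, synthetic values, and per-channel z-score normalization are illustrative choices, not taken from any cited method.

```python
import numpy as np

def standardize(x: np.ndarray) -> np.ndarray:
    """Per-channel z-score so reflectance and backscatter values share a comparable range."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    std = x.std(axis=(0, 1), keepdims=True) + 1e-8
    return (x - mean) / std

def concat_fusion(optical: np.ndarray, sar: np.ndarray) -> np.ndarray:
    """Early (feature-level) fusion by stacking channels.

    optical: (H, W, C_opt) array; sar: (H, W, C_sar) array on the same grid.
    """
    if optical.shape[:2] != sar.shape[:2]:
        raise ValueError("modalities must be co-registered on the same H x W grid")
    return np.concatenate([standardize(optical), standardize(sar)], axis=-1)

rng = np.random.default_rng(1)
optical = rng.uniform(0.0, 0.6, (64, 64, 4))   # synthetic 4-band reflectance
sar = rng.normal(-12.0, 3.0, (64, 64, 2))      # synthetic two-polarization backscatter in dB
fused = concat_fusion(optical, sar)
print(fused.shape)  # (64, 64, 6)
```

Concatenation treats the modalities as interchangeable channels and learns no explicit cross-modal alignment, which is why it offers only the weak complementarity described in the text.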

Geographic Imbalance. (1) Domain Shift and Violated IID Assumption: From the perspective of domain adaptation theory, geographic imbalance causes severe domain shift. Training domains (China, the U.S., Europe) and test domains (Africa, South America) differ in distribution because of climate, terrain, and human activity, which manifests as covariate shift and concept shift. This violates the independent and identically distributed (IID) assumption: when test domains deviate from the training distribution, the learned representations and decision functions fail. Insufficient domain coverage means that models cannot adapt to the feature complexity of unseen regions, leading to irreducible generalization errors. (2) Barriers to Domain-Invariant Feature Learning: Ideal representations should be domain-invariant, encoding the intrinsic attributes of ground objects. From the perspective of meta-learning theory, learning such representations requires diverse cross-domain data to separate out domain-specific noise. However, geographic imbalance creates a “data sparsity trap”: over-represented samples from a single domain dominate learning with domain-specific features (e.g., regional roof styles), preventing cross-domain generalization. This triggers “negative transfer,” in which domain-specific features interfere with classification in the test domain and can push error rates even above those of random guessing.
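One common way to quantify the covariate shift described above is the maximum mean discrepancy (MMD) between feature samples drawn from two regions. The sketch below is a biased estimator with a Gaussian kernel; the kernel parameter `gamma` and the synthetic “source” and “target” features are assumptions for illustration, not a protocol from the cited literature.

```python
import numpy as np

def rbf_kernel(a: np.ndarray, b: np.ndarray, gamma: float) -> np.ndarray:
    """Gaussian kernel matrix k(a_i, b_j) = exp(-gamma * ||a_i - b_j||^2)."""
    sq_dist = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq_dist)

def mmd2(x: np.ndarray, y: np.ndarray, gamma: float = 0.5) -> float:
    """Biased estimate of squared MMD between samples x (n, d) and y (m, d)."""
    return float(rbf_kernel(x, x, gamma).mean()
                 + rbf_kernel(y, y, gamma).mean()
                 - 2.0 * rbf_kernel(x, y, gamma).mean())

rng = np.random.default_rng(2)
source = rng.normal(0.0, 1.0, (200, 4))          # features from a well-covered region
target_same = rng.normal(0.0, 1.0, (200, 4))     # unseen region, same distribution
target_shifted = rng.normal(1.5, 1.0, (200, 4))  # unseen region, shifted distribution
print(mmd2(source, target_same) < mmd2(source, target_shifted))  # True
```

A larger MMD signals a larger gap between the training and test feature distributions; domain adaptation methods often minimize a term of this kind, although the cited works may use other discrepancy measures.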

Annotation Bottlenecks. (1) Scarce Strong Supervision and Data Inefficiency: Fine-grained annotation requires expert pixel- or object-level labels, which are information-dense but costly, limiting dataset scale and quality. Deep learning models (especially those with many parameters) rely on sufficient data; below a “critical threshold” of training samples, gradient-based training cannot learn reliable representations, and models suffer from the curse of dimensionality and underfitting. Weak supervision alternatives (coarse labels, pseudo-labels) have their own flaws: coarse labels provide weak gradient signals for fine categories, while pseudo-labels are noisy, which exacerbates overfitting. (2) Dataset Saturation and Generalization Risks: Benchmark saturation reflects a divergence between empirical risk and generalization risk. As algorithms iterate, training error drops to near zero, but test error stagnates or rises, consistent with the “overfitting limit.” When model complexity exceeds data capacity, models learn “benchmark-specific biases” (annotation errors, imaging noise) instead of intrinsic features. Saturated datasets deviate from real-world distributions, leading to sharp performance drops in practice and to “pseudo-positive progress” driven by adaptation to these biases.
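The pseudo-label route mentioned above is often implemented by keeping only predictions whose confidence exceeds a threshold, trading coverage for lower label noise. A minimal sketch; the 0.9 threshold and the probability values are hypothetical.

```python
import numpy as np

def select_pseudo_labels(probs: np.ndarray, threshold: float = 0.9):
    """Return indices and labels of unlabeled samples whose top class
    probability is at least `threshold`.

    probs: (N, K) predicted class probabilities for N unlabeled samples.
    """
    confidence = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    keep = np.flatnonzero(confidence >= threshold)
    return keep, labels[keep]

probs = np.array([[0.95, 0.05],
                  [0.60, 0.40],   # ambiguous, e.g. two similar tree species
                  [0.02, 0.98]])
idx, pseudo = select_pseudo_labels(probs)
print(idx.tolist(), pseudo.tolist())  # [0, 2] [0, 1]
```

A high threshold reduces noise but tends to discard exactly the ambiguous fine-grained samples, so the retained pseudo-labels can be biased toward the easier categories.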

2.3. Future Outlook of Fine-Grained Datasets

Future dataset development for fine-grained remote sensing interpretation is expected to follow several important directions:

1. Multi-modal integration. Most existing benchmarks are dominated by optical imagery, which captures rich spectral and spatial details but is often limited by weather, lighting, and occlusion. To address these challenges, constructing datasets that integrate optical, SAR, LiDAR, and hyperspectral modalities will be critical. SAR can penetrate clouds and provide structural backscatter features, LiDAR captures accurate 3D geometry and elevation information, and hyperspectral imaging offers detailed spectral signatures for material identification. By combining these complementary data sources, future datasets will enable models to recognize fine-grained categories even under challenging conditions (e.g., distinguishing tree species in dense canopies or identifying military targets under camouflage). Multi-modal benchmarks will also foster the development of fusion-based algorithms that better reflect real-world operational requirements.

2. Global coverage and domain diversity. Current datasets are geographically imbalanced, with most samples collected from regions such as China, the United States, and parts of Europe. This geographic bias restricts the generalization ability of models to unseen domains. Expanding datasets to cover diverse climates, cultures, and ecosystems—for instance, tropical rainforests in South America, arid deserts in Africa, or island regions in Oceania—will help mitigate domain bias. In addition, datasets should incorporate varying socio-economic environments (urban, rural, coastal, industrial) to ensure broader representativeness. Such global and cross-domain coverage will make fine-grained datasets more reliable for worldwide applications such as biodiversity monitoring, agricultural assessment, and disaster response.

3. Temporal and dynamic monitoring. Most existing benchmarks are static snapshots, which limits their use for monitoring changes over time. However, many fine-grained tasks are inherently dynamic, such as crop phenology, urban expansion, forest succession, and water resource fluctuation. Incorporating time-series data will allow researchers to capture temporal evolution and model long-term trends. For example, crop species might be indistinguishable at a single time point but reveal distinct spectral or structural patterns when tracked across multiple growth stages. Similarly, urban construction stages or seasonal flooding patterns can only be fully captured in temporal datasets. Building fine-grained time-series benchmarks will thus support more realistic monitoring and predictive modeling tasks.

4. Efficient annotation strategies. The creation of fine-grained datasets is constrained by the costly and time-consuming nature of expert annotations, especially when subtle distinctions (e.g., between aircraft variants or tree species) require domain expertise. To reduce labeling costs, future work should explore weakly supervised learning (using coarse labels or incomplete annotations), self-supervised learning (leveraging large-scale unlabeled imagery), and crowdsourcing platforms that engage non-experts under expert validation. Additionally, incorporating knowledge graphs and generative augmentation can help generate pseudo-labels or synthetic samples to expand datasets efficiently. These strategies will make it feasible to construct large-scale fine-grained benchmarks in a scalable and sustainable way.

5. Open-world and zero-shot benchmarks. In real-world applications, remote sensing systems often encounter novel classes that were not present in the training data. However, most current datasets assume closed-world settings, where the label space is fixed. Future benchmarks should explicitly support open-world recognition and zero-shot learning, where models can detect and reason about unseen categories by leveraging semantic embeddings, textual descriptions, or external knowledge bases. Initiatives such as OpenEarthSensing [30] exemplify this trend, providing benchmarks that require models to generalize to novel classes and handle uncertain environments. Such benchmarks will be vital for practical deployments in tasks like disaster monitoring, where emergent phenomena (e.g., new building types or unusual environmental events) cannot be predefined.
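A simple baseline for the open-world setting described above is to reject a prediction as “unknown” when the classifier’s maximum softmax probability falls below a threshold. The sketch assumes a closed-set classifier that outputs logits; the threshold value is hypothetical, and benchmarks such as OpenEarthSensing may define their own evaluation protocols.

```python
import numpy as np

def open_set_predict(logits: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Return the predicted class index per sample, or -1 ("unknown") when the
    maximum softmax probability is below `threshold`."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(shifted) / np.exp(shifted).sum(axis=1, keepdims=True)
    preds = probs.argmax(axis=1)
    preds[probs.max(axis=1) < threshold] = -1
    return preds

logits = np.array([[4.0, 0.0, 0.0],    # confident: a known class
                   [0.1, 0.0, 0.05]])  # near-uniform: possibly a novel class
print(open_set_predict(logits).tolist())  # [0, -1]
```

This only flags unfamiliar inputs; reasoning about what the novel class is, as in zero-shot learning, additionally requires semantic embeddings or external knowledge.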

In summary, fine-grained datasets at the pixel, object, and scene levels have substantially advanced research in remote sensing interpretation, enriching both the scale and complexity of available benchmarks. Nevertheless, limitations such as high intra-class similarity, modality constraints, geographic imbalance, and annotation costs continue to hinder broader applicability. The future of fine-grained dataset construction will rely on multi-modality, global-scale diversity, temporal dynamics, efficient labeling strategies, and open-world settings, enabling more generalizable, intelligent, and application-ready solutions for remote sensing interpretation.

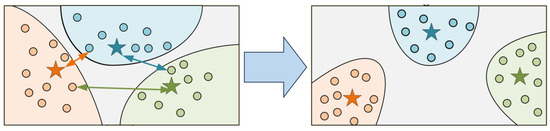

3. Methodology Taxonomy

Remote sensing image interpretation refers to the comprehensive technical process of analyzing, identifying, and interpreting the spectral, spatial, textural, and temporal characteristics of objects or phenomena in remote sensing images. Essentially, it serves as a “bridge” between remote sensing data and practical Earth observation applications. According to the granularity and objectives of information extraction, it can be divided into three core levels, pixel-level, object-level, and scene-level, as shown in Figure 2. The levels are interrelated, yet each has a clearly distinct role, and fine-grained interpretation extends each level in depth to meet the demand for “subclass distinction.”

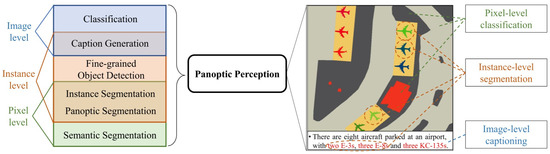

Figure 2.

Examples of fine-grained interpretation at different levels [38]. That work proposed a new “panoptic perception” task, which reflects a similar conception of image interpretation at different levels. Its instance level corresponds to the object level in this paper.

Pixel-level interpretation, as the foundation of remote sensing interpretation, focuses on the semantic attribution of individual or local pixels. Its core tasks include pixel-level classification (e.g., distinguishing basic ground objects such as farmland, water bodies, and buildings) and semantic segmentation (delineating pixel-level boundaries of ground objects). Traditional methods rely on spectral features (e.g., the low near-infrared reflectance of water bodies) or simple texture features, which are suitable for macro ground object classification in medium- and low-resolution images (e.g., large-scale land use classification). With the development of high-resolution remote sensing technology, pixel-level interpretation has gradually advanced toward “fine-grained attribute distinction.” For example, it can distinguish different crop varieties in hyperspectral images and identify building roof materials in high-resolution optical images. This demand for “subclass segmentation under basic ground objects” has become the prototype of fine-grained interpretation at the pixel level.
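As an illustration of the traditional spectral-feature approach, water is often separated with McFeeters’ Normalized Difference Water Index, NDWI = (Green - NIR) / (Green + NIR), which is positive where near-infrared reflectance is low. The reflectance values below are synthetic, and the zero threshold is a common default rather than a universal rule.

```python
import numpy as np

def ndwi(green: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """McFeeters' NDWI; a small epsilon avoids division by zero."""
    return (green - nir) / (green + nir + 1e-8)

# Synthetic 2 x 2 surface reflectance tiles
green = np.array([[0.08, 0.10], [0.06, 0.09]])
nir = np.array([[0.02, 0.40], [0.01, 0.35]])
water_mask = ndwi(green, nir) > 0.0
print(water_mask.tolist())  # [[True, False], [True, False]]
```

A single index like this separates broad classes well but offers little leverage for subclasses with near-identical spectra, which motivates the fine-grained methods reviewed below.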

Object-level interpretation centers on “discrete ground object targets” and requires both spatial localization of targets (e.g., bounding box annotation) and category judgment. Typical applications include ship detection, aircraft recognition, and building extraction. Traditional object-level interpretation focuses on “presence/absence” and “broad category distinction” (e.g., distinguishing “ships” from “aircraft”). However, practical scenarios often require more refined target classification: for instance, ships need to be distinguished into “frigates” and “destroyers,” aircraft into “passenger planes” and “military transport planes,” and buildings into “historic protected buildings” and “ordinary residential buildings.” This type of “subclass identification under the same broad category” has driven object-level interpretation toward fine-grained development, which needs to overcome the technical challenge of “feature confusion between highly similar targets” (e.g., the similar outlines of different ship models).

Scene-level interpretation takes the “entire image scene” as the analysis unit. By integrating pixels, targets, and contextual information, it judges the overall semantics of the scene (e.g., “airport,” “port,” “urban residential area”) and supports regional-scale applications (e.g., urban functional zone division, disaster scene assessment). Traditional scene-level interpretation focuses on “broad scene category distinction” (e.g., distinguishing “forests” from “cities”). However, refined applications require more detailed scene subclass division: for example, “urban residential areas” need to be subdivided into “high-density high-rise communities” and “low-density villa areas,” “wetlands” into “swamp wetlands” and “tidal flat wetlands,” and “airports” into “military-civilian joint-use airports” and “civil airports.” This “functional/morphological subclass identification under broad scene categories” has become the core demand for fine-grained scene-level interpretation.

Before the deep learning era, fine-grained interpretation of remote sensing images largely relied on handcrafted features designed to capture spectral, textural, structural, and spatial nuances within and across land-cover categories. These approaches formed the foundation of modern semantic interpretation and achieved notable success in scenarios with subtle intra-class variability and limited labeled data. At the pixel level, fine-grainedness was primarily expressed through spectral and radiometric indicators: researchers developed feature-based models to discriminate minute spectral differences in vegetation, soil, or water conditions, for example using spectral indices and texture descriptors to characterize vegetation stress or sub-pixel composition [39,40]. At the object level, the focus shifted toward structural and geometric variability within object categories such as ships, buildings, or aircraft. Before convolutional models emerged, these tasks relied on manually designed descriptors, including the Histogram of Oriented Gradients (HOG) [41], Local Binary Patterns (LBPs) [42], and Gabor filters. At the scene level, fine-grained interpretation aimed to distinguish semantically similar environments, such as residential, industrial, and commercial zones, by integrating global appearance and spatial context; Bag-of-Visual-Words (BoVW) [43] and Spatial Pyramid Matching (SPM) models [44] became particularly influential in remote sensing scene classification.
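To make the handcrafted descriptors concrete, the sketch below computes the basic 3 x 3 Local Binary Pattern code and its 256-bin histogram, a typical texture feature of that era. It is a plain NumPy illustration of the original LBP operator, not the rotation-invariant or uniform variants, and not code from [42].

```python
import numpy as np

# Neighbour offsets (dy, dx), clockwise from the top-left pixel; bit i <-> offset i
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_3x3(img: np.ndarray) -> np.ndarray:
    """Basic LBP code for every interior pixel of a 2-D grayscale image."""
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros(center.shape, dtype=np.int32)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre
        codes += (neighbour >= center).astype(np.int32) << bit
    return codes

def lbp_histogram(img: np.ndarray) -> np.ndarray:
    """Normalized 256-bin histogram of LBP codes, usable as a texture feature vector."""
    hist = np.bincount(lbp_3x3(img).ravel(), minlength=256).astype(float)
    return hist / hist.sum()

patch = np.array([[9, 0, 0],
                  [0, 5, 0],
                  [0, 0, 0]])
print(lbp_3x3(patch).tolist())  # [[1]]
```

Histograms computed over image patches would then be fed to a conventional classifier such as an SVM.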

In summary, traditional handcrafted-feature-based approaches laid the conceptual and technical groundwork for modern fine-grained remote sensing interpretation. They introduced the notions of spectral granularity, structural detail, and contextual hierarchy—ideas later absorbed and generalized by deep learning frameworks. While limited in scalability and robustness, these methods provided interpretability and physical insight that remain valuable for hybrid and explainable AI paradigms.

Fine-grained interpretation is not a new paradigm independent of the three levels, but a technical deepening centered on the goal of “subclass distinction” based on each level. Its core value lies in breaking the bottleneck of semantic ambiguity of ground objects in the same broad category. In the following sections, a comprehensive and in-depth review of the methodologies for these three levels of fine-grained interpretation will be presented.

3.1. Fine-Grained Pixel-Level Classification or Segmentation

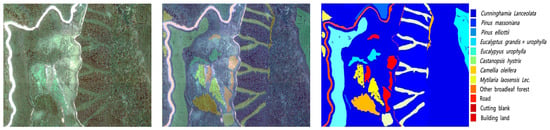

Fine-grained remote sensing interpretation at the pixel level mainly comprises pixel-level classification and semantic segmentation of remote sensing images. In general, pixel-level classification is more commonly studied for hyperspectral images, whereas semantic segmentation is more common for high-resolution multispectral images. In recent years, spatial–spectral joint classification has become popular. Many classification methods also exploit inter-pixel correlation and feature consistency, so the boundary between segmentation and classification has gradually blurred. For both classification and segmentation, the main challenge of pixel-level fine-grained interpretation is the similarity of the spectral characteristics of subclass pixels within a broad category. Some subclasses are almost indistinguishable in the spectral dimension and can be separated only by features such as spatial texture or temporal consistency.

An example is shown in Figure 3 from the GSFF dataset [10], which has 12 land-cover classes, 9 of which are forest vegetation categories. These nine vegetation types are very difficult to distinguish because their spectra are all very similar, which makes separating them a typical fine-grained classification problem. Moreover, such fine-grained pixel-level classification based on similar spectra is uncommon in natural image research such as ImageNet. This is one of the significant differences between fine-grained research in remote sensing and in traditional computer vision.

Figure 3.

Examples of fine-grained pixel-level classification of remote sensing images [2,10].
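How similar two spectra are can be quantified with the spectral angle, a classic measure in hyperspectral analysis that ignores overall brightness. The sketch below uses made-up five-band spectra, not values from GSFF; two vegetation-like spectra yield a small angle, while vegetation and water yield a large one.

```python
import numpy as np

def spectral_angle(a: np.ndarray, b: np.ndarray) -> float:
    """Spectral angle in radians between two spectra; 0 means identical shape."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

# Made-up 5-band reflectance spectra (blue, green, red, NIR, SWIR)
veg_a = np.array([0.04, 0.08, 0.05, 0.45, 0.30])
veg_b = np.array([0.05, 0.09, 0.05, 0.42, 0.29])
water = np.array([0.06, 0.05, 0.03, 0.01, 0.005])
print(spectral_angle(veg_a, veg_b) < 0.1 < spectral_angle(veg_a, water))  # True
```

Because a brightness-scaled copy of a spectrum has an angle of zero, classes whose spectra differ mainly in brightness or by small band-wise shifts remain hard to separate by spectral shape alone.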

Common methods for fine-grained classification or segmentation of pixel-level remote sensing images include novel data representation methods, methods that model coarse–fine category relationships, multi-source data fusion methods, and advanced annotation optimization methods.

3.1.1. Novel Data Representation

This category of methods moves beyond the limitations of traditional, purely spectral features through innovative feature extraction mechanisms, enabling more accurate capture of the key information required for fine-grained classification (such as morphological structures, subtle texture differences, and edge textures).

Spatial–Spectral Joint Representation. Spatial–spectral joint representation is one of the most popular approaches to pixel-level fine-grained classification. For example, CASST [45] establishes long-range mappings between spectral sequences (inter-band dependencies) and spatial features (neighboring-pixel correlations) through a dual-branch Transformer with cross-attention; GRetNet [46] introduces Gaussian multi-head attention to dynamically calibrate the saliency of spectral–spatial features, enhancing the discriminability of fine-grained differences (e.g., spectral peak shifts in closely related tree species); CenterFormer [47] focuses on the spatial–spectral features of target pixels through a central pixel enhancement mechanism to reduce background interference; E2TNet [48] designs an efficient multi-granularity fusion module to balance the global correlations of coarse- and fine-grained spatial–spectral features; and FGSCNN [49] fuses high-level semantic features with fine-grained spatial details (e.g., edge textures) through an encoder–decoder architecture. Some studies go beyond jointly extracting spatial–spectral features and introduce additional spatial cues such as gradients. For example, [50] proposes G2C-Conv3D, which combines traditional convolution and gradient-centralized convolution through weighting to capture both pixel intensity semantics and gradient changes; the added gradient information improves the model’s sensitivity to subtle structures such as edges and textures. Overall, spatial–spectral joint representations move beyond independent modeling of spectral and spatial features, capturing their intrinsic correlations through mechanisms such as attention and Transformers to improve classification accuracy in complex scenes with fine-grained classes.
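The cross-attention step used by dual-branch spatial–spectral models such as CASST can be illustrated with a single-head, scaled dot-product sketch in NumPy. This is a generic illustration, not the CASST implementation: the token counts, embedding sizes, and the random projection matrices standing in for learned weights are all assumptions.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, context, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    queries: (Nq, D) tokens from one branch (e.g. spectral band-group tokens)
    context: (Nk, D) tokens from the other branch (e.g. neighbouring-pixel tokens)
    w_q, w_k, w_v: (D, Dh) projection matrices
    """
    q, k, v = queries @ w_q, context @ w_k, context @ w_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)  # (Nq, Nk), rows sum to 1
    return weights @ v                                          # (Nq, Dh)

rng = np.random.default_rng(3)
D, Dh = 16, 8
spectral_tokens = rng.normal(size=(10, D))   # hypothetical: 10 band groups of the centre pixel
spatial_tokens = rng.normal(size=(9, D))     # hypothetical: 3 x 3 neighbourhood pixels
w_q, w_k, w_v = (rng.normal(size=(D, Dh)) / np.sqrt(D) for _ in range(3))
out = cross_attention(spectral_tokens, spatial_tokens, w_q, w_k, w_v)
print(out.shape)  # (10, 8)
```

Each spectral token ends up as a weighted mix of spatial-branch values, which is how one branch can condition on the other.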

Morphological Representation. Unlike spatial–spectral joint representation, some studies explore feature extraction methods that differ fundamentally from convolution or transform operations to address fine-grained classification. In [51], the authors propose SLA-NET, which combines morphological operators (erosion and dilation) with trainable structuring elements to extract fine morphological features (e.g., contours and compactness) of tree crowns; [2] designs a dual-concentrated network (DNMF) that separates spectral and spatial information before fusing morphological features, enhancing the robustness of tree species classification; and morphFormer [52] models the interaction between the structure and shape of trees and minerals through spectral–spatial morphological convolution and attention mechanisms. These methods focus on the geometric morphology of objects (e.g., crown shape and texture distribution), compensating for the difficulty traditional convolution has in capturing non-Euclidean features.
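Erosion and dilation, the operators SLA-NET builds on, can be sketched with fixed flat square structuring elements; stacking openings and closings at several sizes gives a classic morphological profile. Unlike SLA-NET, the structuring elements here are not trainable, and the sizes are arbitrary illustrative choices.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img: np.ndarray, size: int) -> np.ndarray:
    """All size x size neighbourhoods, edge-padded so the output matches the input shape."""
    padded = np.pad(img, size // 2, mode="edge")
    return sliding_window_view(padded, (size, size))  # (H, W, size, size)

def dilate(img, size=3):
    return _windows(img, size).max(axis=(-2, -1))

def erode(img, size=3):
    return _windows(img, size).min(axis=(-2, -1))

def morphological_profile(img, sizes=(3, 5, 7)):
    """Stack an opening and a closing for each (odd) structuring-element size."""
    layers = []
    for s in sizes:
        layers.append(dilate(erode(img, s), s))   # opening: removes bright details smaller than s
        layers.append(erode(dilate(img, s), s))   # closing: fills dark gaps smaller than s
    return np.stack(layers, axis=0)

img = np.zeros((7, 7))
img[3, 3] = 1.0                                   # a single bright pixel, e.g. an isolated crown top
profile = morphological_profile(img)
print(profile.shape)  # (6, 7, 7)
```

The per-pixel vector across the profile layers describes how each location responds to shape filters of increasing size, a geometric cue that plain spectral features do not carry.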

Edge and Area Representation. These methods focus on edge continuity and regional integrity and address the fragmented classification maps that traditional methods produce. PatchOut [53] adopts a Transformer–CNN hybrid architecture and a feature reconstruction module to retain large-scale regional features while restoring edge details, which enables patch-free fine land-cover classification. SSUN-CRF [54] combines a spectral–spatial unified network with a fully connected conditional random field to smooth the edges of classification results and enhance regional consistency. The edge feature enhancement framework (EDFEM+ESM) [55] improves the segmentation accuracy of mineral edges through multi-level feature fusion and edge supervision.

Novel data representations have two main advantages. (1) Strong fine-grained feature capture: these representation mechanisms capture key information such as morphology, spatial–spectral correlations, gradient changes, and edge textures, which significantly improves the discriminability of closely related categories (e.g., tree species and minerals). (2) Flexible model adaptability: modular designs (e.g., morphological and attention modules) can be embedded into mainstream CNN and Transformer architectures to meet diverse scene requirements. Their main limitation is high model complexity. Modules such as multi-scale fusion and morphological transformation increase parameter counts and computational load, which places strict demands on training data volume and computing hardware.

3.1.2. Modeling Relationships Between Coarse and Fine Classes

This category of methods reduces the reliance of fine-grained tasks on annotated data by modeling the hierarchical relationship between coarse-grained categories (e.g., “vegetation”) and fine-grained categories (e.g., “oak” and “poplar”), using prior knowledge of coarse categories to guide fine category classification.

Several typical methods follow this approach. Ref. [56] combines a GAN with DenseNet for semi-supervised fine-grained classification: the generator learns coarse category distributions, and the discriminator distinguishes fine category differences. The coarse-to-fine joint distribution alignment framework [57] first matches cross-domain coarse category distributions and then calibrates fine category feature differences through a coupled VAE and adversarial learning. CSSD [58] maps patch-level coarse-grained information to pixel-level fine category classification through central spectral self-distillation, which addresses the "granularity mismatch" problem. The CPDIC [59] framework aligns cross-domain coarse and fine category distributions using a calibrated prototype loss to enhance domain adaptability. The fine-grained multi-scale network [60] uses superpixel post-processing to iteratively refine fine category boundaries, starting from coarse classification results. CFSSL [61] performs coarse classification with a small number of labels and then uses high-confidence pseudo-labels to guide the fine-grained classification of small categories.
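One generic way to let coarse labels guide fine classification is to sum fine-class probabilities into their parent classes and add a coarse-level loss term. The Python sketch below illustrates this idea. It is not the method of any specific reference above. The class hierarchy (`FINE_TO_COARSE`) and the weight `coarse_weight` are hypothetical.

```python
import math

# Hypothetical two-level hierarchy (fine class -> coarse class) for illustration.
FINE_CLASSES = ["oak", "poplar", "asphalt", "concrete"]
FINE_TO_COARSE = {
    "oak": "vegetation",
    "poplar": "vegetation",
    "asphalt": "impervious",
    "concrete": "impervious",
}


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def coarse_probabilities(fine_probs):
    """Marginalize fine-class probabilities into coarse-class probabilities."""
    coarse = {}
    for name, p in zip(FINE_CLASSES, fine_probs):
        parent = FINE_TO_COARSE[name]
        coarse[parent] = coarse.get(parent, 0.0) + p
    return coarse


def hierarchical_loss(logits, fine_label, coarse_weight=0.5):
    """Fine cross-entropy plus a weighted coarse cross-entropy on the marginals."""
    probs = softmax(logits)
    fine_ce = -math.log(probs[FINE_CLASSES.index(fine_label)])
    coarse_ce = -math.log(coarse_probabilities(probs)[FINE_TO_COARSE[fine_label]])
    return fine_ce + coarse_weight * coarse_ce


# The true class is "oak". Confusing it with "poplar" (same parent) costs less
# than confusing it with "asphalt" (different parent).
near_miss = hierarchical_loss([0.0, 2.0, 0.0, 0.0], "oak")
far_miss = hierarchical_loss([0.0, 0.0, 2.0, 0.0], "oak")
```

Because the coarse term penalizes errors that cross a parent boundary, shared knowledge at the "vegetation" level still provides a training signal when fine labels are scarce.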

The advantages of modeling relationships between coarse and fine classes are as follows: (1) High data efficiency: By reusing coarse category knowledge (e.g., spectral commonalities of “vegetation”), the demand for annotated samples for fine-grained categories (e.g., specific tree species) is reduced, making it particularly suitable for few-shot scenarios. (2) Strong generalization ability: Hierarchical modeling mitigates the interference of intra-fine-category variations (e.g., different growth stages of the same tree species) on classification, improving the model’s adaptability to scene changes. The limitations are as follows: (1) Risk of hierarchical bias: Unreasonable definition of hierarchical relationships between coarse and fine categories (e.g., incorrectly classifying “shrubs” as a subclass of “arbor”) can lead to systematic bias in fine-grained classification. (2) Limited cross-domain adaptability: In scenes with severe spectral variation (e.g., vegetation in different seasons), differences in feature distribution between coarse and fine categories may disrupt hierarchical relationships, reducing classification accuracy.

3.1.3. Multi-Source Data Integration

The core of this category of methods is to break through the information dimensional limitations of single-source data by fusing complementary data sources (e.g., hyperspectral and LiDAR, remote sensing and crowdsourced data), thereby improving the robustness and accuracy of fine-grained classification.

The two most common approaches to fine-grained classification based on data fusion are fusing hyperspectral data with LiDAR data and fusing hyperspectral data with geographic information data. In [62], the authors propose a coarse-to-fine high-order network that fuses the spectral features of hyperspectral data with the 3D structural information of LiDAR and captures multi-dimensional land-cover attributes through hierarchical modeling. In [63], a multi-scale, multi-directional feature extraction network integrates spectral–spatial–height features from hyperspectral and LiDAR data to enhance category discriminability in complex scenes. In [64], Sentinel-1 radar images (capturing the microwave scattering characteristics of flooded areas) are combined with OpenStreetMap crowdsourced data (providing semantic labels of urban functional zones) to improve the accuracy of fine-grained urban flood detection.

Multi-source data integration methods have two main advantages. (1) Information complementarity: multi-source data provide multi-dimensional spectral, spatial, structural, and semantic information, which compensates for the limited discriminability of single-source data in complex scenes (e.g., vegetated areas and heterogeneous urban areas). (2) Broad applicability: these methods apply to diverse scenarios such as forests, cities, and hydrology. They are especially strong at distinguishing fine-grained subcategories, such as different tree species or flood-submerged buildings and roads. Their main limitation is data heterogeneity. Data sources differ in spatial resolution (e.g., 10 m for hyperspectral vs. 1 m for LiDAR), coordinate systems, and noise levels, so they require complex registration and preprocessing, which makes the methods harder to implement.
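The resolution-alignment step can be sketched in a few lines. The Python example below upsamples a coarse raster to the finer grid by an integer factor with nearest-neighbor resampling and then concatenates each pixel's spectrum with a LiDAR-derived height. This is a deliberately simplified sketch. It assumes both rasters are already co-registered in the same coordinate system with aligned origins. Real pipelines also need reprojection and usually a more careful resampling method. The pixel values are hypothetical.

```python
def upsample_nearest(grid, factor):
    """Nearest-neighbor upsampling of a 2D raster by an integer factor."""
    rows, cols = len(grid), len(grid[0])
    return [
        [grid[i // factor][j // factor] for j in range(cols * factor)]
        for i in range(rows * factor)
    ]


def fuse_pixelwise(hsi, height):
    """Concatenate each pixel's spectrum with its LiDAR-derived height.

    Assumes both rasters are co-registered in the same coordinate system
    with identical origin and pixel size.
    """
    if len(hsi) != len(height) or len(hsi[0]) != len(height[0]):
        raise ValueError("rasters must share the same grid after resampling")
    return [
        [list(spectrum) + [h] for spectrum, h in zip(hsi_row, height_row)]
        for hsi_row, height_row in zip(hsi, height)
    ]


# Hypothetical 1 x 1 hyperspectral pixel (3 bands) at 10 m, resampled to a 10 x 10 grid at 1 m.
hsi_10m = [[[0.12, 0.34, 0.56]]]
hsi_1m = upsample_nearest(hsi_10m, 10)
lidar_1m = [[float(i + j) for j in range(10)] for i in range(10)]
fused = fuse_pixelwise(hsi_1m, lidar_1m)
```

Nearest-neighbor resampling copies each coarse spectrum into every fine cell it covers, so the fused features inherit the blockiness of the coarser source. This is one reason the heterogeneity noted above is a real limitation.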

3.1.4. Advanced Data Annotation Strategies

This category of methods focuses on reducing the reliance of fine-grained classification on large-scale accurately annotated data, optimizing annotation efficiency through strategies such as few-shot learning and semi-supervised annotation, and addressing the practical pain points of “high annotation cost and scarce samples”.

The most common approach is to introduce active learning [65] or incremental learning into fine-grained remote sensing image classification. The LPILC [66] algorithm, based on linear programming, enables incremental learning with only a small number of new-category samples and without access to the original category data, which suits dynamically updated classification needs. CSSD [58] uses central spectral self-distillation and takes the model’s own predictions as pseudo-labels to reduce dependence on manual annotation. CFSSL [61] screens high-confidence pseudo-labels through "breaking-ties" (BT) sampling to reduce the impact of noisy labels on the model.
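The BT criterion scores each sample by the gap between its two most probable classes. The Python sketch below shows one way to use this criterion for pseudo-label screening, treating samples with a large gap as high-confidence. The threshold `min_margin` and the probability values are hypothetical, and CFSSL's exact selection rule may differ. In classical active learning, the same criterion is used in the opposite direction: the samples with the smallest gap are sent to annotators.

```python
def breaking_ties_margin(probs):
    """Gap between the two largest class probabilities (the BT criterion)."""
    top1, top2 = sorted(probs, reverse=True)[:2]
    return top1 - top2


def select_pseudo_labels(prob_rows, min_margin):
    """Keep (index, predicted class) for samples whose BT margin is large.

    A small margin means the top two classes are nearly tied, so the
    prediction is ambiguous and is not used as a pseudo-label.
    """
    selected = []
    for idx, probs in enumerate(prob_rows):
        if breaking_ties_margin(probs) >= min_margin:
            predicted = max(range(len(probs)), key=probs.__getitem__)
            selected.append((idx, predicted))
    return selected


# Hypothetical softmax outputs for three unlabeled pixels.
predictions = [[0.90, 0.05, 0.05], [0.40, 0.35, 0.25], [0.10, 0.20, 0.70]]
pseudo = select_pseudo_labels(predictions, min_margin=0.3)
```

The second pixel is dropped because its two leading classes are nearly tied.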

The main advantage of advanced data annotation strategies is a significantly reduced annotation cost. These strategies reduce manual annotation, which makes them particularly suitable for data that require expert knowledge to annotate, such as hyperspectral imagery. Their limitation is that few-shot and incremental learning depend heavily on the robustness of the pre-trained model. If the initial model is biased (e.g., it tends to misclassify certain categories), that bias will persistently affect the classification of new categories.

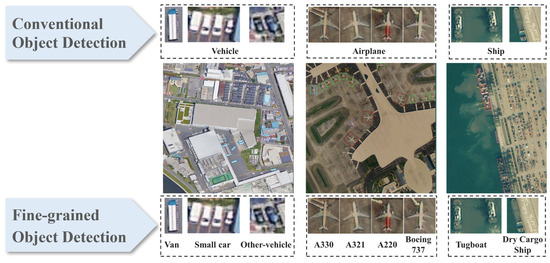

3.2. Fine-Grained Object-Level Detection

In remote sensing, fine-grained object detection refers to the task of identifying major target categories such as vehicles, airplanes, and ships and also distinguishing their finer subcategories. As illustrated in Figure 4, conventional object detection recognizes only broad categories such as Vehicle, Airplane, or Ship. In contrast, fine-grained object detection further differentiates vehicles into Van, Small Car, and Other Vehicle; airplanes into A330, A321, A220, and Boeing 737; and ships into Tugboat and Dry Cargo Ship, among others. This enables more precise and detailed recognition and classification of targets in remote sensing imagery.

Figure 4.

Comparison between ordinary remote sensing target detection and fine-grained remote sensing target detection [67].

Object detection, a core task in computer vision, aims to localize and classify objects in images. It has evolved into two dominant paradigms, two-stage detectors and one-stage detectors, each with distinct architectural designs and trade-offs between accuracy and speed. Most object detection methods in remote sensing are derived from these two families of computer vision methods. The following subsections review how two-stage and one-stage methods have been improved for fine-grained object detection.

3.2.1. Two-Stage Detectors

Two-stage methods separate object detection into two sequential steps: (1) generating region proposals (potential object locations) and (2) classifying these proposals and refining their bounding boxes. This modular design typically achieves higher accuracy at the cost of greater computational complexity.

R-CNN (Region-based Convolutional Neural Networks) [68] introduced the first two-stage framework. It uses selective search to generate region proposals, extracts features for each proposal with a CNN, and applies SVMs for classification. Despite its pioneering nature, R-CNN is inefficient because the CNN runs separately on every proposal, which leads to heavily redundant computation. Fast R-CNN [69] addressed this by sharing convolutional features across proposals, using a RoI (Region of Interest) pooling layer to produce fixed-size features, and integrating classification and regression into a single network. Faster R-CNN [70] replaced selective search with a Region Proposal Network (RPN), a fully convolutional network that predicts proposals directly from feature maps. This made two-stage detection end-to-end trainable and significantly faster. Mask R-CNN [71] extended Faster R-CNN by adding a branch for instance segmentation, which demonstrated the flexibility of two-stage architectures for tasks beyond detection. Cascade R-CNN [72] improved bounding box regression by iteratively refining proposals with increasing IoU thresholds. This addressed the mismatch between the IoU threshold used in training and the quality of proposals seen at inference in standard two-stage methods.
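The IoU-threshold mechanism behind Cascade R-CNN can be illustrated with a short sketch. The Python code below computes IoU for axis-aligned boxes and counts how many proposals each stage would treat as foreground under the thresholds 0.5, 0.6, and 0.7 used in the Cascade R-CNN paper. As a simplification, the proposals stay fixed here. In the real method each stage re-regresses the boxes, which raises their IoU enough to keep supplying positives at stricter thresholds. The box coordinates are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_proposals(proposals, gt_boxes, iou_threshold):
    """Mark a proposal as foreground if it overlaps some ground truth enough."""
    return [
        max((iou(p, g) for g in gt_boxes), default=0.0) >= iou_threshold
        for p in proposals
    ]


# Stage-wise thresholds used by Cascade R-CNN.
CASCADE_THRESHOLDS = (0.5, 0.6, 0.7)

gt = [(0, 0, 10, 10)]
proposals = [(0, 0, 10, 10), (0, 0, 10, 7), (0, 0, 10, 6), (0, 0, 10, 5)]
positives_per_stage = [sum(label_proposals(proposals, gt, t)) for t in CASCADE_THRESHOLDS]
```

With fixed proposals, the stricter stages see fewer positives. This shows why the cascade must improve box quality between stages rather than just raise the threshold.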

R-CNN-based methods use a two-stage framework to address the core challenge of distinguishing highly similar targets (e.g., aircraft subtypes and ship models) in remote sensing images. In fine-grained object detection research, two-stage architectures are more widely used than one-stage ones. A two-stage detector can be decomposed into the following components:

- a feature extraction backbone (Backbone) with a feature pyramid network (FPN);
- a region proposal network (RPN) that generates candidate regions;
- a region of interest alignment module (RoIAlign) for precise feature mapping;
- task-specific heads for object classification (Cls), bounding box regression (Reg), and optional mask prediction (Mask Branch).

Table 2 summarizes how fine-grained object detection methods improve these components, and the following paragraphs analyze these improvements in detail.

Table 2.

Summary of the improvements of fine-grained object detection on different components.

Contrastive Learning. This subcategory focuses on optimizing the feature space of highly similar targets through inter-sample contrast to amplify inter-class differences and reduce intra-class variations. Its core logic is to construct positive/negative sample pairs and use contrastive loss to guide the model in learning discriminative features, which is particularly effective for scenarios where visual similarity leads to feature confusion.
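A common form of this idea uses class prototypes (feature centers) as contrastive keys. The prototype of the true class serves as the positive, and all other prototypes serve as negatives. The Python sketch below shows that generic InfoNCE-style formulation with an exponential-moving-average prototype update. It is an illustration under stated assumptions, not the exact ProtoCL or APCL loss described below. The temperature, momentum, and 2-D features are hypothetical.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def prototype_contrastive_loss(feature, label, prototypes, temperature=0.1):
    """InfoNCE-style loss with class prototypes as the positive/negative keys.

    Minimizing the loss pulls the feature toward its own class center and
    pushes it away from the other centers.
    """
    logits = {c: cosine(feature, p) / temperature for c, p in prototypes.items()}
    m = max(logits.values())
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return log_sum_exp - logits[label]


def update_prototype(prototype, feature, momentum=0.9):
    """Exponential moving average update of a class center."""
    return [momentum * p + (1 - momentum) * f for p, f in zip(prototype, feature)]


# Hypothetical 2-D class centers for two visually similar ship subtypes.
prototypes = {"frigate": [1.0, 0.1], "destroyer": [0.9, 0.4]}
feature = [1.0, 0.05]
loss_if_frigate = prototype_contrastive_loss(feature, "frigate", prototypes)
loss_if_destroyer = prototype_contrastive_loss(feature, "destroyer", prototypes)
```

A lower temperature sharpens the distribution over prototypes and penalizes near-confusions between similar subtypes more strongly.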

Existing contrastive learning studies mainly address the following problems. To address insufficient feature discrimination caused by long-tailed distributions, ref. [67] proposed PCLDet. PCLDet builds a category prototype library that stores the feature centers of targets (e.g., ships and aircraft) and introduces a Prototypical Contrastive Loss (ProtoCL) to maximize inter-class distances while minimizing intra-class distances. A Class-Balanced Sampler (CBS) further balances the sample distribution so that rare subtypes receive sufficient attention. For intra-class diversity in fine-grained aircraft detection, ref. [78] designed an Instance Switching-Based Contrastive Learning method. Its Contrastive Learning Module (CLM) uses an InfoNCE+ loss to widen the feature gap between aircraft subtypes (e.g., passenger aircraft models). Its Refined Instance Switching (ReIS) module mitigates class imbalance and iteratively optimizes the features of discriminative regions (e.g., wings and engines). For oriented, highly similar targets (e.g., ships), ref. [85] combined Oriented R-CNN (ORCNN) with Adaptive Prototypical Contrastive Learning (APCL), as shown in Figure 5. Its Spatial-Aligned FPN (SAFPN) resolves the spatial misalignment of the traditional FPN and supplies high-quality features for contrastive learning. This significantly improves the separability of ship subtype features (e.g., frigates vs. destroyers) on datasets such as FGSD and ShipRSImageNet. For unknown ship detection, ref. [86] proposed a method that uses a Class-Balanced Proposal Sampler (CBPS) to balance sample learning and a fine-grained memory bank-based Contrastive Learning (FGCL) strategy to separate known from unknown ships. Its Uncertainty-Aware Unknown Learner (UAUL) module reduces prediction uncertainty, which mitigates the misclassification of unknown but highly similar ships (e.g., new military ships).

Figure 5.

Prototypical contrastive learning [85].

Knowledge Distillation. This subcategory aims to balance detection accuracy and model efficiency by transferring fine-grained knowledge from complex “teacher models” to lightweight “student models.” It has expanded from traditional multi-model distillation to self-distillation, enabling knowledge reuse within a single model and adapting to scenarios such as lightweight deployment and few-shot learning.
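The basic mechanism that the methods below build on is response-based distillation. The student matches the teacher's temperature-softened class distribution through a KL-divergence term scaled by T², blended with the usual hard-label loss. The Python sketch below shows this classic formulation. It does not reproduce the multi-teacher, prototypical, or self-distillation schemes discussed next. In self-distillation, for example, the "teacher logits" would come from another branch of the same model. The temperature and `alpha` are hypothetical defaults.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [v / temperature for v in logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """T^2-scaled KL(teacher || student) on temperature-softened distributions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0
    )
    return temperature ** 2 * kl


def student_objective(student_logits, teacher_logits, label, alpha=0.5, temperature=4.0):
    """Blend the hard-label cross-entropy with the soft distillation term."""
    ce = -math.log(softmax(student_logits)[label])
    return alpha * ce + (1 - alpha) * distillation_loss(student_logits, teacher_logits, temperature)
```

The softened teacher distribution carries information about which wrong subtypes are "close". This relative similarity structure is what makes distillation useful for fine-grained categories.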

Knowledge distillation for fine-grained detection has evolved in three directions:

(1) Multi-teacher distillation for balancing accuracy and efficiency. Ref. [77] used Oriented R-CNN as a first teacher to locate vehicles and ships and the Coarse-to-Fine Object Recognition Network (CF-ORNet) as a second teacher for fine-grained recognition. By distilling knowledge from both teachers into a student model and combining this with filter grafting, the model achieves high accuracy on high-resolution remote sensing images at reduced computational cost.

(2) Decoupled distillation for lightweight underwater detection. Ref. [87] proposed the Prototypical Contrastive Distillation (PCD) framework, which uses R-CNN as the teacher model and transfers fine-grained knowledge of underwater targets (e.g., submersibles) via prototypical contrastive learning. The decoupled distillation mechanism lets the student model focus on discriminative features, and the contrastive loss enhances semantic structural attributes. Together, these improve the robustness of lightweight models in underwater environments.

(3) Self-distillation for few-shot scenarios. Ref. [88] proposed Decoupled Self-Distillation for fine-grained few-shot detection. The model's "high-confidence branch" acts as an implicit teacher, and its "low-confidence branch" acts as a student that receives knowledge of rare, highly similar subtypes (e.g., rare aircraft models). Combined with progressive prototype calibration, this method addresses the insufficient knowledge transfer caused by limited data in few-shot scenarios.

Hierarchical Feature Optimization and Highly Similar Feature Mining (HFOSFM). This subcategory follows the logic of “from low-level feature purification to high-level feature fusion” to iteratively improve feature quality, with the ultimate goal of mining subtle discriminative features of highly similar targets. Low-level optimization focuses on eliminating noise (e.g., background interference, posture misalignment), while high-level optimization emphasizes integrating semantic information to enhance feature completeness.

Key innovations across these HFOSFM studies include the following: For low-level noise filtering and high-level feature matching, ref. [79] proposed PETDet, which uses the Quality-Oriented Proposal Network (QOPN) to generate high-quality oriented proposals (low-level purification) and the Bilinear Channel Fusion Network (BCFN) to extract independent discriminative features for proposals (high-level refinement). Adaptive Recognition Loss (ARL) further guides the R-CNN head to focus on high-quality proposals, solving the mismatch between proposals and features for highly similar targets. For multi-domain feature fusion and semantic association construction, ref. [76] proposed DIMA, which synchronously learns image and frequency-domain features via the Frequency-Aware Representation Supplement (FARS) mechanism (low-level detail enhancement) and builds coarse-fine feature relationships using the Hierarchical Classification Paradigm (HCP) (high-level semantic integration). This approach effectively amplifies structural differences between highly similar samples (e.g., ships of different tonnages). For oriented targets (e.g., rotating ships), ref. [80] proposed SFRNet, which uses the Spatial-Channel Transformer (SC-Former) to correct feature misalignment caused by posture variations (low-level spatial interaction) and the Oriented Transformer (OR-Former) to encode rotation angles (high-level semantic supplementation). This ensures that local differences (e.g., wing angles of tilted aircraft) are fully captured.
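Bilinear fusion of channel features is a standard way to capture pairwise channel interactions for fine-grained recognition. The Python sketch below shows generic bilinear pooling in the style of bilinear CNNs: it averages the outer product over spatial locations and then applies a signed square root and L2 normalization. This is an assumption-labeled illustration of the general operator, not the exact design of PETDet's BCFN, which the text does not detail. The feature values are hypothetical.

```python
import math


def bilinear_pool(feat_a, feat_b):
    """Average outer product of two feature sets, then signed sqrt and L2 norm.

    feat_a: L locations x C_a channels; feat_b: L locations x C_b channels.
    Returns a flat vector of length C_a * C_b.
    """
    n_loc = len(feat_a)
    ca, cb = len(feat_a[0]), len(feat_b[0])
    pooled = [0.0] * (ca * cb)
    for xa, xb in zip(feat_a, feat_b):
        for i in range(ca):
            for j in range(cb):
                pooled[i * cb + j] += xa[i] * xb[j] / n_loc
    signed = [math.copysign(math.sqrt(abs(v)), v) for v in pooled]
    norm = math.sqrt(sum(v * v for v in signed)) or 1.0  # avoid division by zero
    return [v / norm for v in signed]


# Hypothetical features of one proposal at 3 spatial locations.
feats_a = [[1.0, 2.0], [0.5, -1.0], [0.0, 3.0]]
feats_b = [[1.0, 0.0, 2.0], [2.0, 1.0, 0.0], [0.0, 1.0, 1.0]]
descriptor = bilinear_pool(feats_a, feats_b)
```

The descriptor grows as C_a × C_b. For this reason, practical detectors usually reduce channels before fusion or use compact approximations.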

Category Relationship Modeling and Similarity Measurement Optimization (CRMSMO). This subcategory explicitly models intrinsic relationships between categories (e.g., hierarchical, structural, or functional relationships) to optimize similarity measurement logic, addressing the issue where traditional methods fail to distinguish highly similar targets due to over-reliance on visual features.

Representative CRMSMO studies include the following. For semantic decoupling and anchor matching optimization, ref. [82] proposed a fine-grained ship detection method that decouples classification and regression features with a polarized feature-focusing module and selects high-quality anchors via adaptive harmony anchor labeling. By better matching anchors to category features, it improves the localization accuracy of highly similar ships. For hierarchical relationship constraints and feature distance expansion, ref. [83] proposed HMS-Net. HMS-Net reinforces features at different semantic levels (e.g., ship contours vs. local components) and uses a hierarchical relationship constraint loss to model the semantic hierarchy of ship subtypes (e.g., destroyer models), which explicitly expands the feature distance between highly similar subcategories. For invariant structural feature extraction via graph modeling, ref. [84] proposed Invariant Structure Representation. Its Graph Focusing Process (GFP) module uses graph convolution to extract invariant structural features (e.g., cross-shaped aircraft and rectangular vehicles). Its Graph Aggregation Network (GAN) updates node weights to strengthen structural feature expression, which enables the model to distinguish visually similar targets by their inherent structural relationships. For shape-aware modeling of targets with large aspect ratios, ref. [75] addressed the high similarity and large aspect ratios of ships in high-resolution satellite images. It introduced a Shape-Aware Feature Learning module to alleviate feature alignment bias and a Shape-Aware Instance Switching module to balance the category distribution, which ensures sufficient learning of rare ship subtypes (e.g., special operation ships).
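To show how graph convolution can encode structural relationships between object parts, the Python sketch below implements one layer of the generic GCN propagation rule ReLU(D^-1/2 (A + I) D^-1/2 X W). It is not the GFP or Graph Aggregation Network of [84]. The part graph of a cross-shaped aircraft, the node features, and the weight matrix are all hypothetical.

```python
import math


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adjacency, features, weight):
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adjacency)
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    degree = [sum(row) for row in a_hat]
    a_norm = [
        [a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]
    out = matmul(matmul(a_norm, features), weight)
    return [[max(0.0, v) for v in row] for row in out]


# Hypothetical part graph of a cross-shaped aircraft: node 0 (fuselage center)
# is linked to the nose, left wing, right wing, and tail (nodes 1-4).
adjacency = [[0, 1, 1, 1, 1]] + [[1 if j == 0 else 0 for j in range(5)] for _ in range(4)]
node_features = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [0.0, 1.0], [0.5, 0.5]]
identity = [[1.0, 0.0], [0.0, 1.0]]
part_embeddings = gcn_layer(adjacency, node_features, identity)
```

Each part's embedding mixes its own features with those of connected parts. The two wings therefore receive identical embeddings here, reflecting their symmetric position in the structure.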

Multi-Source Feature Fusion and Context Utilization. This subcategory fuses multi-modal data (e.g., RGB, multispectral, and LiDAR) and leverages contextual relationships to compensate for the discriminative information lost to visual similarity. It is particularly effective when single-modal features are insufficient to distinguish highly similar targets (e.g., street tree subtypes). For example, [74] proposed a multisource region attention network that fuses RGB, multispectral, and LiDAR data. Its multisource region attention module weights the features of highly similar street tree subtypes and uses multi-modal differences (e.g., spectral reflectance and elevation) to fill the information gap left by visual similarity. This significantly improves the fine-grained classification accuracy of street trees in remote sensing imagery. For few-shot aircraft detection with cross-modal knowledge guidance, ref. [89] proposed TEMO. TEMO introduces text descriptions of aircraft and fuses text and visual features through a cross-modal assembly module. Within the two-stage R-CNN framework, this reduces confusion between new categories and similar known aircraft, which enables fine-grained recognition in few-shot scenarios.

3.2.2. One-Stage Detectors

Single-stage detectors (such as the YOLO series) do not separate candidate region generation from subsequent classification. Instead, they perform category prediction and bounding box regression directly on the feature map. This end-to-end structure significantly reduces model complexity and inference latency, which enables real-time detection. Early single-stage methods generally lagged behind two-stage detectors in accuracy. However, YOLO and its successors have since made substantial gains through network structure optimization, improved loss functions, and feature enhancement modules.

YOLO (You Only Look Once) [90] pioneered one-stage detection by treating object detection as a regression problem. It divides the image into a grid, and each grid cell predicts bounding boxes and class probabilities, which enables real-time performance. SSD (Single Shot MultiBox Detector) [91] introduced multi-scale feature maps to detect objects of varying sizes and placed default bounding boxes (anchors) on different layers to improve small-object detection. RetinaNet [92] addressed the foreground–background class imbalance in one-stage detectors with Focal Loss, a modified cross-entropy loss that down-weights easy, well-classified examples (mostly background). This largely closed the accuracy gap with two-stage methods. YOLOv3 [93] enhanced the original YOLO with multi-scale prediction, a more efficient backbone (Darknet-53), and improved class prediction, balancing speed and accuracy. EfficientDet [94] optimized both accuracy and efficiency through compound scaling (jointly scaling depth, width, and resolution) and a weighted bi-directional feature pyramid network (BiFPN). It achieved state-of-the-art results on COCO at the time of publication. YOLOv7 [95] introduced an extended efficient layer aggregation network (E-ELAN), compound model scaling, and a "trainable bag-of-freebies" (e.g., planned re-parameterized convolution and coarse-to-fine lead-guided label assignment). It outperformed previous YOLO variants and other real-time detectors in speed–accuracy trade-offs.
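The Focal Loss mentioned above multiplies the binary cross-entropy by a modulating factor (1 − p_t)^γ and a class weight α_t. As a result, easy background anchors contribute almost nothing to the total loss. The Python sketch below implements the per-anchor binary form. The defaults α = 0.25 and γ = 2 are the values reported for RetinaNet. The probabilities in the usage lines are hypothetical.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one anchor.

    p: predicted foreground probability; y: 1 for foreground, 0 for background.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p, y):
    """Plain binary cross-entropy for comparison."""
    return -math.log(p if y == 1 else 1.0 - p)


# An easy background anchor (correctly scored 0.1) vs. a hard foreground anchor (also 0.1).
easy_ratio = focal_loss(0.1, 0) / cross_entropy(0.1, 0)
hard_ratio = focal_loss(0.1, 1) / cross_entropy(0.1, 1)
```

Relative to cross-entropy, the easy negative is scaled down far more than the hard positive. This lets the abundant background anchors stop dominating the gradient.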

YOLO-based methods can be structurally decomposed into a Backbone, a Neck, and a Head. Table 3 summarizes how existing fine-grained object detection approaches improve each of these components, as well as the input stage.

These methods can also be broadly categorized into four groups: data and input augmentation-driven, attention and feature fusion-driven, discriminative learning and task design-driven, and optimization and post-processing-driven. Each direction addresses different technical aspects, yet they share the common goal of enhancing the ability to distinguish visually similar targets and to improve the detection of small objects in complex remote sensing scenes.

Data and Input Augmentation-Driven Methods. This category focuses on enriching input data and sample representation to alleviate the challenges of limited training samples and class imbalance in remote sensing. For instance, the improved YOLOv7-Tiny [96] applies multi-scale and rotation augmentation to increase input sample diversity. Lightweight FE-YOLO [97] preprocesses input data to highlight the fine-grained features of small targets. YOLOv8 (G-HG) [98] adjusts input feature resolution to match multi-scale remote sensing targets. YOLO-RS [99] adopts context-aware input sampling to focus on crop fine-grained regions. YOLOX-DW [100] applies adaptive sampling to balance the distribution of fine-grained classes in the input data. Moreover, DETet [101] and MFL [102] explore image degradation recovery and super-resolution enhancement, which offer new ways to restore fine details in low-quality remote sensing images. These studies show that input-level improvements enhance robustness and also provide a stronger foundation for fine-grained discrimination.
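Rotation and multi-scale augmentation for detection must transform the box labels together with the image. The Python sketch below shows this for a 90° counter-clockwise rotation and a uniform rescale. It uses hypothetical helper names and is not the augmentation pipeline of [96]. Arbitrary angles and oriented boxes need a rotated-box representation, which is not covered here.

```python
def rotate90_ccw(image):
    """Rotate an H x W grid 90 degrees counter-clockwise (result is W x H)."""
    return [list(row) for row in zip(*image)][::-1]


def rotate_box90_ccw(box, width):
    """Map an axis-aligned box (x1, y1, x2, y2) through the same rotation.

    x is the column axis and y the row axis; width is the original image width.
    A point (x, y) moves to (y, width - x).
    """
    x1, y1, x2, y2 = box
    return (y1, width - x2, y2, width - x1)


def scale_box(box, factor):
    """Rescale box coordinates when the image is resized by `factor`."""
    return tuple(c * factor for c in box)


image = [[1, 2, 3], [4, 5, 6]]  # 2 rows x 3 columns
rotated = rotate90_ccw(image)
box_on_3 = (2, 0, 3, 1)         # covers the pixel with value 3
rotated_box = rotate_box90_ccw(box_on_3, width=3)
```

After rotation, the transformed box still covers the pixel with value 3, now in the top-left corner. Checking this kind of label consistency is a useful sanity test for any augmentation pipeline.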

Attention and Feature Fusion-Driven Methods. Methods in this category emphasize enhancing discriminative feature representations by leveraging attention mechanisms and multi-scale fusion. For example, FGA-YOLO [103] and SR-YOLO [104] combine global multi-scale modules, bidirectional FPNs, and super-resolution convolutions to strengthen fine-grained representation of aircraft and UAV targets. WDFA-YOLOX [105] and YOLOv5+CAM [106] address SAR feature loss and wide-area vehicle detection through wavelet-based compensation and attention mechanisms. IF-YOLO [107] and FiFoNet [108] improve feature pyramid and fusion strategies to preserve small-object features and suppress background noise. These works demonstrate that precise feature modeling under complex backgrounds and scale variations is crucial for fine-grained detection.
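Bidirectional FPNs typically combine several input feature maps with learnable weights. As one concrete example, EfficientDet's BiFPN uses "fast normalized fusion": the weights are clipped at zero and divided by their sum plus a small ε (0.0001). The Python sketch below applies that rule to flattened feature vectors. The cited YOLO variants may use different fusion rules, and the feature values are hypothetical.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """Weighted fusion of same-shaped feature vectors (EfficientDet-style).

    Each learnable weight is clipped at zero (ReLU), and the result is
    divided by the sum of the clipped weights plus eps.
    """
    w = [max(0.0, x) for x in weights]
    total = sum(w) + eps
    size = len(inputs[0])
    return [sum(wi * feat[k] for wi, feat in zip(w, inputs)) / total for k in range(size)]


top_down = [1.0, 2.0]  # hypothetical upsampled coarser-level features
lateral = [3.0, 4.0]   # hypothetical same-level backbone features
balanced = fast_normalized_fusion([top_down, lateral], [1.0, 1.0])
lateral_only = fast_normalized_fusion([top_down, lateral], [-5.0, 1.0])
```

A negative learned weight effectively switches an input off. This lets the network learn which scale matters for a given fine-grained target.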

Table 3.

Summary of one-stage methods (YOLO) for fine-grained object detection.


| Method | Input Stage | Backbone | Neck | Head | Purpose | Reference |
|---|---|---|---|---|---|---|
| FGA-YOLO | × | ✓ | ✓ | ✓ | Aggregate multi-layer features to enhance multi-scale information; Extract key discriminative features to improve fine-grained recognition; Alleviate imbalance between easy/hard samples via EMA Slide Loss | [103] |
| SR-YOLO | × | ✓ | ✓ | ✓ | Extract small-target fine-grained features via SR-Conv module; Enhance small-target feature fusion with bidirectional FPN; Improve detection accuracy via Normalized Wasserstein Distance Loss | [104] |
| IF-YOLO | × | ✓ | ✓ | × | Preserve small-target intrinsic features via IPFA module; Suppress conflicting information with CSFM; Fuse multi-scale features via FGAFPN | [107] |
| WDFA-YOLOX | × | ✓ | ✓ | ✓ | Compensate SAR fine-grained feature loss via WSPP module; Enhance small-ship features with GLFAE; Improve bounding-box regression via Chebyshev distance-GIoU Loss | [105] |
| Related-YOLO | × | ✓ | ✓ | × | Model ship component geometric relationships via relational attention; Adapt to rotated ships with deformable convolution; Optimize anchors via hierarchical clustering | [109] |
| YOLOv5+CAM | × | ✓ | ✓ | ✓ | Capture key regions via CAM attention module; Fuse multi-scale features with CAM-FPN; Enhance training via coarse-grained judgment + background supervision | [106] |
| FiFoNet | × | ✓ | ✓ | × | Capture global–local context via GLCC module; Select valid multi-scale features to block redundant information; Improve small-target detection in UAV images | [108] |
| FD-YOLOv8 | × | ✓ | ✓ | × | Preserve aircraft local details via local feature module; Enhance local–global interaction via focus modulation; Improve fine-grained accuracy in complex backgrounds | [110] |
| YOLOX (GTDet) | × | ✓ | ✓ | ✓ | Adapt to oriented targets via GCOTA label assignment; Improve angle prediction via DLAAH; Enhance localization via anchor-free detection | [111] |
| DEDet | × | ✓ | ✓ | × | Restore nighttime details via FPP module; Filter background interference via progressive filtering; Improve nighttime UAV target detection | [101] |
| MFL | × | ✓ | ✓ | × | Realize SR-OD mutual feedback via MFL closed-loop; Focus on ROI details via FROI module; Narrow target feature differences via MSOI | [102] |
| InterMamba | × | ✓ | ✓ | ✓ | Capture long-range dependencies via VMamba backbone; Fuse multi-scale features via cross-VSSM; Optimize dense detection via UIL loss | [112] |
| Improved YOLOv7-Tiny | ✓ | ✓ | × | × | Construct diverse remote sensing aircraft dataset; Apply multi-scale/rotation augmentation to enrich input samples | [96] |
| Lightweight FE-YOLO | ✓ | ✓ | ✓ | × | Preprocess input data to highlight small-target fine-grained features; Reduce input noise interference via similarity-based channel screening; Optimize input feature distribution for remote sensing scenarios | [97] |
| YOLOv8 (G-HG) | ✓ | ✓ | ✓ | × | Adjust input feature resolution to match multi-scale remote sensing targets; Retain fine-grained details in input via redundant feature map sampling; Optimize input data utilization for complex background scenarios | [98] |
| YOLO-RS | ✓ | ✓ | ✓ | ✓ | Adopt context-aware input sampling to focus on crop fine-grained regions; Balance input class distribution via AC mix module | [99] |
| YOLOX-DW | ✓ | ✓ | × | × | Apply adaptive sampling to balance fine-grained class distribution in input; Optimize input sample selection to avoid rare class underrepresentation | [100] |

Note: ✓ = The method involves improvements in this YOLO stage; × = The method does not involve improvements in this YOLO stage.

Discriminative Learning and Task Design-Driven Methods. This research line emphasizes introducing additional discriminative constraints or multi-task mechanisms to improve the separation of visually similar categories. FD-YOLOv8 [110] captures subtle differences in aircraft through local detail modules and focused modulation mechanisms. Related-YOLO [109] leverages relational attention, hierarchical clustering, and deformable convolutions to model structural relations between ship components. GTDet [111] enhances classification–regression consistency for oriented objects using optimal transport-based label assignment and decoupled angle prediction. MFL [102] builds a closed-loop between detection and super-resolution, guiding degraded images to recover discriminative details. Overall, these methods contribute discriminative signals by focusing on local part modeling, relational learning, and multi-task integration.

Optimization and Post-Processing-Driven Methods. This category centers on loss function design and post-processing optimization, improving adaptation to fine-grained targets during both training and inference. WDFA-YOLOX [105] and SR-YOLO [104] introduce novel regression losses (Chebyshev distance-IoU and normalized Wasserstein distance) to improve bounding box localization for small objects. GTDet [111] applies optimal transport-based assignment to address the scarcity of positive samples for oriented objects with large aspect ratios. SA-YOLO [113] dynamically adjusts class weights with adaptive loss functions to mitigate bias from data imbalance. DETet [101] employs iterative filtering during post-processing to suppress noise and false positives in night-time UAV imagery. These strategies demonstrate that careful optimization and post-processing not only stabilize training but also ensure the preservation of small and fine-grained targets during inference.
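The normalized Wasserstein distance used by SR-YOLO is useful for small objects because it stays informative when boxes do not overlap, whereas IoU drops to zero. The method models each box (cx, cy, w, h) as a 2D Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4). Between two such Gaussians, the squared 2-Wasserstein distance reduces to a Euclidean distance, which is then mapped through exp(−W/C). The Python sketch below follows that formulation. The constant C is dataset-dependent, and the value used here is only a placeholder.

```python
import math


def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance between two boxes (cx, cy, w, h).

    Boxes are treated as Gaussians N((cx, cy), diag(w^2/4, h^2/4)).
    c is a dataset-dependent constant (placeholder value here).
    """
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    w2_squared = (
        (cxa - cxb) ** 2 + (cya - cyb) ** 2
        + ((wa - wb) / 2) ** 2 + ((ha - hb) / 2) ** 2
    )
    return math.exp(-math.sqrt(w2_squared) / c)


def nwd_loss(pred, target, c=12.8):
    """Regression loss that decreases as the two Gaussians get closer."""
    return 1.0 - nwd(pred, target, c)


# Two 4 x 4 boxes 6 px apart do not overlap (IoU = 0) but still get a graded NWD.
target = (10.0, 10.0, 4.0, 4.0)
near = nwd(target, (12.0, 10.0, 4.0, 4.0))
far = nwd(target, (16.0, 10.0, 4.0, 4.0))
```

Because NWD decreases smoothly with center offset, a slightly misplaced small-object prediction still receives a useful gradient instead of a flat zero-overlap penalty.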

Overall, YOLO-based fine-grained detection research in remote sensing has established a comprehensive improvement pathway spanning input augmentation, feature modeling, discriminative learning, and optimization strategies. Data and input enhancements improve baseline robustness, attention and feature fusion strengthen discriminative representations, discriminative learning and task design introduce novel supervision signals, and optimization and post-processing ensure stability and reliability across stages. Future research is expected to integrate these directions further, for example by combining input augmentation with discriminative learning, or by unifying feature modeling and optimization strategies in an end-to-end framework, to improve fine-grained detection performance in remote sensing more comprehensively.

3.2.3. Other Methods for Fine-Grained Object Detection

Beyond YOLO- and R-CNN-based methods, existing studies on fine-grained object detection in remote sensing can be broadly categorized into four classes: methods based on Transformer/DETR, classification/recognition networks, customized approaches for special modalities or scenarios, and graph-based or structural feature modeling methods. These approaches address challenges such as category ambiguity, feature indistinctness, and complex scene conditions from different perspectives, including global feature modeling, fine-grained feature optimization, environment-specific adaptation, and structural information exploitation.

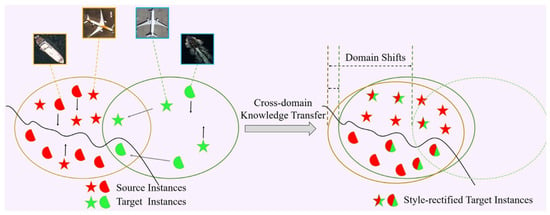

Transformer/DETR-Based Methods. Transformer and DETR-based methods leverage self-attention mechanisms to model global feature dependencies, enabling the capture of fine-grained target relationships across the entire image and supporting end-to-end detection. Typical studies include the following: FSDA-DETR [114] (Figure 6) employs cross-domain style alignment and category-aware feature calibration to achieve effective adaptation in optical-SAR cross-domain few-shot scenarios; GMODet [115] integrates region-aware and semantic–spatial progressive interaction modules within the DETR framework to capture spatio-temporal correlations of ground-moving targets, enabling efficient detection in large-scale remote sensing images; InterMamba [112] combines cross-visual selective scanning with global attention and user interaction feedback to optimize detection and annotation in dense scenes, enhancing discriminability in crowded environments.

Figure 6.

FSDA-DETR [114]. Domain shift is observed between the source and target domains when the given target-domain data is scarce.
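
The global dependency modeling that the Transformer/DETR methods above rely on comes from self-attention. A minimal single-head sketch in plain Python, assuming the feature map has already been flattened into N tokens; the projection matrices are placeholders, not weights or modules from FSDA-DETR, GMODet, or InterMamba.

```python
import math

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    e = [math.exp(x - m) for x in row]
    s = sum(e)
    return [x / s for x in e]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    tokens: N x d matrix, one row per flattened feature-map position.
    Each output row is a weighted mix of all value rows, so a position can draw
    on evidence anywhere in the image, not only inside a local convolution window.
    """
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(w_k[0]))
    k_t = [list(col) for col in zip(*k)]
    weights = [softmax([s / scale for s in row]) for row in matmul(q, k_t)]
    return matmul(weights, v), weights

# Four tokens with 2-D features; identity projections keep the example readable.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
eye = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(tokens, eye, eye, eye)
```

The attention matrix is N x N, which is why global modeling becomes expensive on large remote sensing scenes and motivates region-aware or scanning-based alternatives.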

CNN-Based Feature Interaction and Classification (Non-YOLO/RCNN). This category focuses primarily on fine-grained object classification or recognition. Most of these methods contain no explicit detection pipeline (no YOLO/RCNN structure and no localization module) and instead rely on feature optimization, data augmentation, and feature purification to improve category discriminability; a few, such as the Context-Aware method [116], add a lightweight localization module on top of classification. Representative works include [117], which combines CNN features with natural language attributes for zero-shot recognition; ref. [118], which uses region-aware instance modeling and adversarial generation to mitigate inter-class similarity; EFM-Net [119], which leverages feature purification and data augmentation to enhance fine-grained characteristics; ref. [120], which integrates weak and strong features to iteratively optimize discriminative regions in low-resolution images; ref. [121], which proposes a coarse-to-fine hierarchical framework for urban village classification; and ref. [122], which uses feature decoupling and pyramid transformer encoding to distinguish visually similar targets in UAV videos. Overall, these methods emphasize enhancing classification capability when features are limited or ambiguous.
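
To illustrate how CNN features can be combined with attribute descriptions for zero-shot recognition, as in [117], the sketch below uses a generic compatibility scheme, assuming a linear map (in practice learned on seen classes; here a placeholder identity matrix) that projects the visual feature into attribute space, after which the class with the most similar attribute vector wins. The attribute names, class names, and matrix are hypothetical, and [117]'s actual model may differ.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def project(feature, w):
    """Linear map from visual-feature space to attribute space (w: d_attr x d_feat)."""
    return [sum(wi * fi for wi, fi in zip(row, feature)) for row in w]

def zero_shot_predict(feature, w, class_attributes):
    """Return the class whose attribute vector best matches the projected feature.

    class_attributes may contain classes never seen while w was trained; only
    their attribute description is needed, which is what makes this zero-shot.
    """
    emb = project(feature, w)
    return max(class_attributes, key=lambda c: cosine(emb, class_attributes[c]))

# Hypothetical attributes: [swept_wing, four_engines, t_tail]
class_attributes = {
    "aircraft_type_A": [1.0, 1.0, 0.0],  # seen during training
    "aircraft_type_B": [1.0, 0.0, 1.0],  # seen during training
    "aircraft_type_C": [0.0, 0.0, 1.0],  # unseen: described only by attributes
}
w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # placeholder for a learned map
feature = [0.1, 0.0, 0.9]  # stand-in for a CNN embedding of one image chip
print(zero_shot_predict(feature, w, class_attributes))  # aircraft_type_C
```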

Customized Methods for Special Modalities or Scenarios. These methods target fine-grained object detection under specific modalities (e.g., thermal infrared, underwater) or challenging scenarios (e.g., low-light, nighttime), optimizing feature extraction and localization through specialized modules. Typical studies include the following: U-MATIR [123] constructs a multi-angle thermal infrared dataset and leverages heterogeneous label spaces with hybrid-view cascade modules to enable efficient detection of thermal infrared targets; DEDet [101] employs pixel-level exposure correction and background noise filtering to improve feature quality and detection performance in low-light UAV imagery; the PCD method [87] uses prototype contrastive learning and decoupled distillation to transfer discriminative features into a lightweight model for underwater fine-grained targets, improving overall detection performance.
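
The prototype contrastive part of PCD can be illustrated with a generic InfoNCE-style loss over class prototypes. This is a minimal sketch, assuming prototypes are L2-normalized class-mean embeddings and using an arbitrary temperature `tau`; it does not cover the decoupled distillation component, and PCD's actual formulation may differ.

```python
import math

def l2_normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)

def class_prototypes(features, labels):
    """Prototype of each class = L2-normalised mean of its features."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        for i, x in enumerate(f):
            acc[i] += x
        counts[y] = counts.get(y, 0) + 1
    return {y: l2_normalize([x / counts[y] for x in s]) for y, s in sums.items()}

def prototype_contrastive_loss(features, labels, prototypes, tau=0.1):
    """Cross-entropy over cosine similarities / tau: pulls each feature toward its
    own class prototype and pushes it away from the other class prototypes."""
    classes = sorted(prototypes)
    total = 0.0
    for f, y in zip(features, labels):
        z = l2_normalize(f)
        logits = [sum(a * b for a, b in zip(z, prototypes[c])) / tau for c in classes]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[classes.index(y)]
    return total / len(features)

# Hypothetical 2-D embeddings for two visually similar underwater target classes.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [0, 0, 1, 1]
protos = class_prototypes(features, labels)
print(prototype_contrastive_loss(features, labels, protos))  # small: features sit near their prototypes
```

Comparing samples with class prototypes rather than with every other sample keeps the number of negatives equal to the number of classes, which is convenient when only a few examples per fine-grained class are available.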

Graph-Based or Structural Feature Modeling Methods. Graph-based methods model structural relationships among target components, reinforcing classification and localization through structural consistency. Typical studies include the following: GFA-Net [84] employs a graph-focused aggregation network to model structural features and node relations, achieving precise detection of structurally deformed targets; ref. [124] integrates geospatial priors with frequency-domain analysis to infer the distribution and class relationships of aircraft in large-scale SAR images, enabling efficient localization.
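
GFA-Net's graph-focused aggregation is not detailed here; as a stand-in, the sketch below uses a standard graph-convolution step (symmetric normalized adjacency with self-loops, as in Kipf and Welling's GCN) to show how component nodes such as fuselage, wings, and tail can exchange features along structural edges. The part graph, node features, and weights are hypothetical.

```python
import math

def normalized_adjacency(adj):
    """D^-1/2 (A + I) D^-1/2: add self-loops, then normalise by node degree."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def gcn_layer(node_feats, adj, weight):
    """One message-passing step: aggregate neighbour features, project, apply ReLU."""
    a_norm = normalized_adjacency(adj)
    n, d_in, d_out = len(node_feats), len(weight), len(weight[0])
    agg = [[sum(a_norm[i][k] * node_feats[k][f] for k in range(n)) for f in range(d_in)]
           for i in range(n)]
    return [[max(0.0, sum(agg[i][f] * weight[f][o] for f in range(d_in))) for o in range(d_out)]
            for i in range(n)]

# Hypothetical aircraft part graph: 0 fuselage, 1 left wing, 2 right wing, 3 tail.
adj = [
    [0, 1, 1, 1],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
]
node_feats = [[1.0, 0.2], [0.3, 0.8], [0.3, 0.7], [0.5, 0.5]]  # e.g. pooled part features
weight = [[1.0, 0.0], [0.0, 1.0]]  # placeholder for learned weights
updated = gcn_layer(node_feats, adj, weight)
```

After one step each wing node carries information from the fuselage, so a deformed or partly occluded wing can still be interpreted consistently with the rest of the structure.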

Overall, the three categories of fine-grained object detection methods form a complementary technical system targeting the core challenge of “high inter-class similarity”: R-CNN-based methods achieve high precision mainly through specialized techniques (contrastive learning, knowledge distillation, hierarchical feature optimization) and suit complex settings such as few-shot learning and unknown categories; YOLO-based methods prioritize efficiency through multi-scale fusion and attention mechanisms, making them well suited to real-time applications (UAV, SAR); and other methods go beyond these conventional frameworks to handle special scenarios (cross-domain, nighttime, zero-shot), complementing the two mainstream families.

3.3. Fine-Grained Scene-Level Recognition

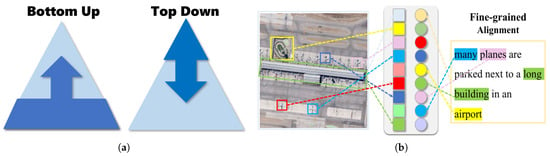

Fine-grained scene-level recognition plays an increasingly important role in remote sensing applications, where distinguishing subtle differences between visually similar scenes is particularly challenging. Many studies on remote sensing scene understanding borrow methods and models from computer vision, where image scene understanding generally follows two fundamental paradigms: bottom-up and top-down.

Bottom-up methods start from pixels and low-level features, progressively extracting texture, shape, and spectral information and then aggregating it into high-level semantics through deep neural networks (Figure 7a). Their advantages are that they are data-driven, scale well to large imagery, and learn features automatically with good transferability. However, they often lack high-level semantic constraints, which makes them vulnerable to intra-class variability and complex backgrounds and can limit their semantic interpretability.

Figure 7.

Paradigms and example of scene-level recognition. (a) Bottom-up vs. top-down. (b) Example of scene-level recognition (bottom-up) [125].

Top-down methods, in contrast, begin with task objectives or prior knowledge, using geographic knowledge graphs, ontologies, or semantic rules to guide and constrain the interpretation of low-level features (Figure 7a). These approaches offer semantic clarity and interpretability and align more closely with human cognition. Their limitations, however, include dependence on high-quality prior knowledge, high construction costs, and limited scalability in large-scale automated tasks.
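
As a toy illustration of how prior knowledge can constrain bottom-up evidence, the sketch below takes scene scores from some bottom-up classifier and suppresses scenes whose required objects, per a hand-written rule table, were not detected. The scene names, rules, and hard-masking policy are assumptions for illustration only; practical top-down systems typically rely on richer ontologies or knowledge graphs and softer constraints.

```python
def apply_scene_rules(scene_scores, detected_objects, required_objects):
    """Constrain bottom-up scene scores with top-down rules.

    scene_scores     : {scene: score from a bottom-up classifier}
    detected_objects : set of object labels found in the image
    required_objects : {scene: set of objects the scene must contain}
    Scenes whose required objects are missing get score 0; the rest are renormalised.
    """
    kept = {
        scene: (score if required_objects.get(scene, set()) <= detected_objects else 0.0)
        for scene, score in scene_scores.items()
    }
    total = sum(kept.values())
    if total == 0.0:
        return dict(scene_scores)  # no scene is consistent with the rules: fall back
    return {scene: score / total for scene, score in kept.items()}

# Hypothetical fine-grained port scenes that look alike from above.
scores = {"commercial_port": 0.45, "fishing_port": 0.40, "marina": 0.15}
rules = {
    "commercial_port": {"container_crane"},
    "fishing_port": {"fishing_boat"},
    "marina": {"yacht"},
}
print(apply_scene_rules(scores, {"fishing_boat", "breakwater"}, rules))
# {'commercial_port': 0.0, 'fishing_port': 1.0, 'marina': 0.0}
```

The example shows both sides of the trade-off described above: the rule overturns a wrong bottom-up ranking, but only because someone wrote and maintained the rule table.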

In terms of research trends, bottom-up methods dominate the current literature. In fine-grained remote sensing scene understanding in particular, most studies rely on multi-scale feature modeling, attention mechanisms, convolutional neural networks, and Transformer architectures to capture subtle inter-class differences through hierarchical abstraction. These approaches are well-suited to large-scale data-driven training and have therefore become the mainstream. By contrast, top-down methods are mainly explored in knowledge-based scene parsing, cross-modal alignment, and zero-shot learning, and remain relatively limited in number, though they show promise for enhancing semantic interpretability and cross-domain generalization.

In summary, fine-grained remote sensing scene understanding is currently driven predominantly by bottom-up feature learning, while top-down methods remain at an exploratory stage. This paper reviews two scene-level tasks, scene classification and image retrieval; most of the reviewed works build on bottom-up fine-grained image recognition or understanding.

3.3.1. Scene Classification

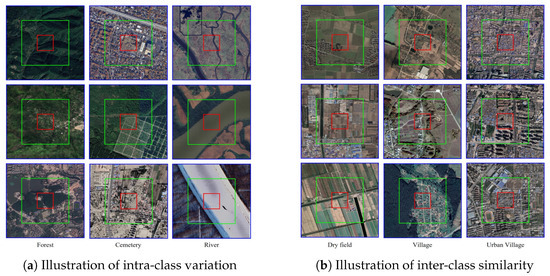

The core challenges of fine-grained remote sensing image scene classification converge on four dimensions: feature confusion caused by “large intra-class variation and high inter-class similarity” (Figure 8), data constraints from “high annotation costs and scarce samples”, an imbalance in modeling “local details and global semantics”, and domain shift across “sensors and regions”. According to their technical objectives and methodological logic, existing studies can be grouped into four core classes plus one category of miscellaneous work.

Figure 8.

Illustration of intra-class variation and inter-class similarity in scene-level recognition in MEET [22].
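
The “large intra-class variation and high inter-class similarity” problem can be made concrete with a simple diagnostic that is not part of the reviewed methods: compare the average distance between class centroids with the average spread of samples around their own centroid in some feature space. A minimal sketch, assuming Euclidean distances and toy 2-D features; ratios near or below 1 indicate the kind of overlap typical of fine-grained scene categories.

```python
import math

def centroid(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def separability_ratio(features_by_class):
    """Mean distance between class centroids divided by mean distance of samples
    to their own class centroid. Higher = easier; near or below 1 = heavy overlap."""
    cents = {c: centroid(v) for c, v in features_by_class.items()}
    intra = [distance(x, cents[c]) for c, v in features_by_class.items() for x in v]
    names = list(cents)
    inter = [distance(cents[a], cents[b]) for i, a in enumerate(names) for b in names[i + 1:]]
    return (sum(inter) / len(inter)) / (sum(intra) / len(intra))

# Hypothetical features: a coarse pair of scenes vs. a fine-grained pair.
coarse = {"forest": [[0.0, 0.0], [0.0, 2.0]], "water": [[10.0, 0.0], [10.0, 2.0]]}
fine = {"dense_residential": [[0.0, 0.0], [0.0, 2.0]],
        "medium_residential": [[1.0, 0.0], [1.0, 2.0]]}
print(separability_ratio(coarse), separability_ratio(fine))  # 10.0 1.0
```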

Multi-Granularity Feature Modeling. This category is one of the core approaches to resolving the tension between intra-class variation and inter-class similarity, and it represents the fundamental technical direction for fine-grained classification. Its core logic is to mine features along multiple dimensions (e.g., “local–global”, “low–high resolution”, “high–low frequency”) to capture subtle discriminative information between subclasses, thereby addressing the “large intra-class variation and high inter-class similarity” of remote sensing scenes. The technique has evolved from single-granularity enhancement to multi-granularity collaborative decoupling and can be divided into two branches.

(1) Multi-Level Feature Fusion and Semantic Collaboration. This branch strengthens the transmission and discriminability of fine-grained semantics through feature interaction across different network levels. Typical studies include the following: Ref. [126] proposed the MGML-FENet framework, whose Channel-Separate Feature Generator (CS-FG) extracts multi-granularity local features (e.g., building edge textures, crop ridge structures) at different network levels. Ref. [127] proposed MGSN, which introduces a bidirectional mechanism in which coarse-grained features guide fine-grained learning; its MGSL module learns global scene structures and local details simultaneously. Ref. [128] proposed the MG-CAP framework, which generates multi-granularity features via progressive image cropping and uses Gaussian covariance matrices, in place of traditional CNN features, to capture higher-order feature correlations.

(2) Frequency/Scale Decoupling and Enhancement. Targeting the property of remote sensing images that high-frequency details can be separated from low-frequency structures, this branch strengthens the independence and discriminability of fine-grained features through frequency decomposition or multi-scale modeling. Typical studies include the following: Ref. [129] proposed MF2CNet, which extracts and decouples high- and low-frequency features in parallel (high frequency for fine-grained details such as road markings, low frequency for global structures such as road orientation). Ref. [130] designed a Multi-Granularity Decoupling Network (MGDNet) for fine-grained feature learning under class-imbalanced scenarios, using region-level supervision to guide the network toward subclass differences. Ref. [131] proposed ECA-MSDWNet, which integrates multi-scale feature extraction with incremental learning: the Efficient Channel Attention module focuses on key fine-grained features, while multi-scale depthwise convolution reduces computational cost.
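
MF2CNet's exact decomposition is not described here; the sketch below only shows the general idea of high/low-frequency decoupling, assuming a simple box-filter low-pass (learned filters, pooling, wavelets, or Fourier masks are common alternatives). The low-frequency part keeps smooth layout, and the residual high-frequency part keeps thin, sharp details such as a one-pixel road marking.

```python
def box_blur(img, radius=1):
    """Low-pass filter: mean over a (2r+1) x (2r+1) window, truncated at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [img[y][x]
                      for y in range(max(0, i - radius), min(h, i + radius + 1))
                      for x in range(max(0, j - radius), min(w, j + radius + 1))]
            out[i][j] = sum(window) / len(window)
    return out

def frequency_split(img, radius=1):
    """Decompose an image (or one feature channel) into low- and high-frequency parts.

    low  = smoothed version (global structure, e.g. road orientation)
    high = residual img - low (fine detail, e.g. thin road markings)
    By construction low + high reproduces the input.
    """
    low = box_blur(img, radius)
    high = [[img[i][j] - low[i][j] for j in range(len(img[0]))] for i in range(len(img))]
    return low, high

# A single bright pixel on a dark background, standing in for a small marking.
img = [[0.0] * 5 for _ in range(5)]
img[2][2] = 9.0
low, high = frequency_split(img)
print(low[2][2], high[2][2])  # 1.0 8.0
```

Most of the bright pixel's energy lands in the high-frequency branch, which is why separate processing of the two branches can protect small discriminative details from being averaged away.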